Abstract

There are some inherent limitations to the performance of support vector regression (SVR): (i) the loss function parameter, penalty parameter, and kernel function parameter usually cannot be determined accurately; (ii) the training data sometimes cannot be fully utilized; and (iii) the local accuracy in the vicinity of training points still needs to be improved. To further enhance the performance of SVR, this paper proposes a novel model modification method for SVR based on radial basis functions. The core idea of the method is to start with an initial SVR and modify it in a subsequent stage to extract as much information as possible from the existing training data; the second stage does not require new points. Four types of modified support vector regression (MSVR), namely MSVg, MSVm, MSVi, and MSVc, are constructed by using four different forms of basis functions. This paper evaluates the performances of SVR, MSVg, MSVm, MSVi, and MSVc on six popular benchmark problems and a practical engineering problem, the design of a typical turbine disk for an air-breathing engine. The results show that all four types of MSVR perform better than SVR, and that MSVc performs best.
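To make the two-stage idea concrete, the sketch below shows one simple way a fitted model can be modified using only its existing training points: interpolate the first-stage residuals with radial basis functions and add that correction to the first-stage prediction. This additive residual form, the Gaussian basis, the `shape` parameter, and all function names are assumptions for illustration only; it is not the paper's MSVR formulation, and a crude constant predictor stands in for a trained SVR.

```python
import numpy as np

def gaussian_basis(r, shape):
    # Gaussian radial basis phi(r) = exp(-(shape * r)^2); "shape" is a hypothetical tuning parameter
    return np.exp(-(shape * r) ** 2)

def pairwise_distances(A, B):
    # Euclidean distances between every row of A and every row of B
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)

def add_rbf_correction(base_model, X, y, shape=1.0):
    """Illustrative second stage: interpolate the base model's residuals at the
    existing training points with Gaussian RBFs. No new samples are required."""
    X = np.asarray(X, dtype=float)
    residuals = np.asarray(y, dtype=float) - base_model(X)
    # The Gaussian RBF matrix is positive definite for distinct points, so the system is solvable
    weights = np.linalg.solve(gaussian_basis(pairwise_distances(X, X), shape), residuals)

    def modified_model(X_new):
        X_new = np.atleast_2d(np.asarray(X_new, dtype=float))
        return base_model(X_new) + gaussian_basis(pairwise_distances(X_new, X), shape) @ weights

    return modified_model

# Usage with a deliberately crude stand-in for a trained SVR (hypothetical)
X = np.linspace(0.0, 1.0, 6).reshape(-1, 1)
y = ((6 * X - 2) ** 2 * np.sin(12 * X - 4)).ravel()  # Forrester function samples
stand_in_svr = lambda Z: np.full(len(Z), y.mean())
model = add_rbf_correction(stand_in_svr, X, y, shape=3.0)
print(np.max(np.abs(model(X) - y)))  # residual at the training points, numerically zero
```

Swapping `gaussian_basis` for another radial function changes the correction away from the training points; the paper's four variants are built from four different basis-function forms, which this sketch does not attempt to reproduce.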


References

Acar E, Rais-Rohani M (2009) Ensemble of metamodels with optimized weight factors. Struct Multidiscip Optim 37(3):279–294. https://doi.org/10.1007/s00158-008-0230-y

Acar E (2010) Various approaches for constructing an ensemble of metamodels using local measures. Struct Multidiscip Optim 42(6):879–896. https://doi.org/10.1007/s00158-010-0520-z

Aich U, Banerjee S (2014) Modeling of EDM responses by support vector machine regression with parameters selected by particle swarm optimization. Appl Math Modell 38(11):2800–2818

Basak D, Pal S, Patranabis DC (2007) Support vector regression. Neural Inf Process Lett Rev 11(10):203–224

Cevik A, Kurtoğlu AE, Bilgehan M, Gulsan ME, Albegmprli HM (2015) Support vector machines in structural engineering: a review. J Civ Eng Manag 21(3):261–281

Chang CC, Lin CJ (2011) LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol 2(3):1–27. https://doi.org/10.1145/1961189.1961199

Chapelle O, Vapnik V, Bousquet O, Mukherjee S (2002) Choosing multiple parameters for support vector machines. Mach Learn 46(1–3):131–159

Chen R, Liang CY, Hong WC, Gu DX (2015) Forecasting holiday daily tourist flow based on seasonal support vector regression with adaptive genetic algorithm. Appl Soft Comput 26:435–443. https://doi.org/10.1016/j.asoc.2014.10.022

Cheng K, Lu Z (2018) Adaptive sparse polynomial chaos expansions for global sensitivity analysis based on support vector regression. Comput Struct 194:86–96

Clarke SM, Griebsch JH, Simpson TW (2005) Analysis of support vector regression for approximation of complex engineering analyses. J Mech Des 127(6):1077–1087. https://doi.org/10.1115/1.1897403

Deka PC et al (2014) Support vector machine applications in the field of hydrology: a review. Appl Soft Comput 19:372–386

Gu J, Zhu M, Jiang L (2011) Housing price forecasting based on genetic algorithm and support vector machine. Expert Syst Appl 38(4):3383–3386

Hsu CW, Chang CC, Lin CJ (2003) A practical guide to support vector classification. Technical Report, Department of Computer Science, National Taiwan University

Huang CL, Wang CJ (2006) A GA-based feature selection and parameters optimization for support vector machines. Expert Syst Appl 31(2):231–240

Huang J, Bo Y, Wang H (2011a) Electromechanical equipment state forecasting based on genetic algorithm – support vector regression. Expert Syst Appl 38(7):8399–8402. https://doi.org/10.1016/j.eswa.2011.01.033

Huang Z, Wang C, Chen J, Tian H (2011b) Optimal design of aeroengine turbine disc based on kriging surrogate models. Comput Struct 89(1–2):27–37. https://doi.org/10.1016/j.compstruc.2010.07.010

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidiscip Optim 23(1):1–13. https://doi.org/10.1007/s00158-001-0160-4

Kromanis R, Kripakaran P (2014) Predicting thermal response of bridges using regression models derived from measurement histories. Comput Struct 136(3):64–77

LaValle SM, Branicky MS, Lindemann SR (2004) On the relationship between classical grid search and probabilistic roadmaps. Int J Rob Res 23(7–8):673–692

Lin SW, Ying KC, Chen SC, Lee ZJ (2008) Particle swarm optimization for parameter determination and feature selection of support vector machines. Expert Syst Appl 35(4):1817–1824

Lu S, Li L (2011) Twin-web structure optimization design for heavy duty turbine disk based for aero-engine. J Propuls Technol 32(5):631–636

Luo X, Ding H, Zhang L (2012) Forecasting of national expressway network scale based on support vector regression with adaptive genetic algorithm. In: International Conference of Logistics Engineering and Management, pp 434–441

Mehmani A, Chowdhury S, Meinrenken C, Messac A (2018) Concurrent surrogate model selection (cosmos): optimizing model type, kernel function, and hyper-parameters. Struct Multidiscip Optim 57(3):1093–1114. https://doi.org/10.1007/s00158-017-1797-y

Min JH, Lee YC (2005) Bankruptcy prediction using support vector machine with optimal choice of kernel function parameters. Expert Syst Appl 28(4):603–614. https://doi.org/10.1016/j.eswa.2004.12.008

Mountrakis G, Im J, Ogole C (2011) Support vector machines in remote sensing: a review. ISPRS J Photogramm Remote Sens 66(3):247–259

Pal M, Deswal S (2011) Support vector regression based shear strength modelling of deep beams. Comput Struct 89(13–14):1430–1439

Pan F, Zhu P, Zhang Y (2010) Metamodel-based lightweight design of B-pillar with TWB structure via support vector regression. Comput Struct 88(1–2):36–44

Rojo-Álvarez JL, Martínez-Ramón M, Muñoz-Marí J, Camps-Valls G (2013) A unified SVM framework for signal estimation. Digital Signal Process

Rojo-Álvarez JL, Martínez-Ramón M, Muñoz-Marí J, Camps-Valls G (2014) A unified SVM framework for signal estimation. Digital Signal Process 26:1–20

Salcedo-Sanz S, Rojo-Álvarez JL, Martínez-Ramón M, Camps-Valls G (2014) Support vector machines in engineering: an overview. Wiley Interdiscip Rev: Data Min Knowl Discov 4(3):234–267

Sanchez E, Pintos S, Queipo NV (2008) Toward an optimal ensemble of kernel-based approximations with engineering applications. Struct Multidiscip Optim 36(3):247–261. https://doi.org/10.1007/s00158-007-0159-6

Viana FAC, Simpson TW, Balabanov V, Toropov V (2014) Special section on multidisciplinary design optimization: metamodeling in multidisciplinary design optimization: How far have we really come? AIAA J 52(4):670–690. https://doi.org/10.2514/1.j052375

Xiang H, Li Y, Liao H, Li C (2017) An adaptive surrogate model based on support vector regression and its application to the optimization of railway wind barriers. Struct Multidiscip Optim 55(2):701–713. https://doi.org/10.1007/s00158-016-1528-9

Yan C, Shen X, Guo F (2018a) An improved support vector regression using least squares method. Struct Multidiscip Optim 57(6):2431–2445. https://doi.org/10.1007/s00158-017-1871-5

Yan C, Shen X, Guo F (2018b) Novel two-stage method for low-order polynomial model. Math Probl Eng 2018:1–13. https://doi.org/10.1155/2018/8156390

Zhou X, Jiang T (2016) Metamodel selection based on stepwise regression. Struct Multidiscip Optim 54(3):641–657. https://doi.org/10.1007/s00158-016-1442-1


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: Mehmet Polat Saka

Replication of results

For the convenience of other researchers, the training data and test data of the studied turbine disk will be attached to this paper.


Appendices

Appendix 1. Support vector regression

The general form of SVR can be written as

$$ \hat{y}(\textbf{x})=\boldsymbol{\omega}^{\mathrm{T}}\psi(\textbf{x})+b $$

where x denotes the vector of input variables, \(\hat {y}(\textbf {x})\) denotes the approximate response, ψ(x) denotes the nonlinear feature mapping function, ω denotes the weight vector, and b denotes the bias.

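To construct the model, the following optimization problem is solved:

$$ \begin{aligned} \min_{\boldsymbol{\omega},b,\xi^{+},\xi^{-}}\quad & \frac{1}{2}\left\|\boldsymbol{\omega}\right\|^{2}+C\sum_{i=1}^{m}\left(\xi^{+}_{i}+\xi^{-}_{i}\right)\\ \text{s.t.}\quad & y_{i}-\boldsymbol{\omega}^{\mathrm{T}}\psi(\textbf{x}_{i})-b\le\epsilon+\xi^{+}_{i}\\ & \boldsymbol{\omega}^{\mathrm{T}}\psi(\textbf{x}_{i})+b-y_{i}\le\epsilon+\xi^{-}_{i}\\ & \xi^{+}_{i},\ \xi^{-}_{i}\ge 0,\quad i=1,\ldots,m \end{aligned} $$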

where \((\textbf{x}_{i}, y_{i})\) (i = 1, …, m) denotes the training data subset (or the entire training dataset), \(\epsilon\) denotes the width of the \(\epsilon\)-insensitive loss function, C denotes the penalty parameter, and \(\xi ^{+}_{i}\) and \(\xi ^{-}_{i}\) denote the slack variables.
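The role of the slack variables can be checked numerically: for a fixed model, the smallest feasible slacks are the amounts by which each residual exceeds the tube half-width \(\epsilon\). The sketch below, a minimal illustration assuming the identity feature map ψ(x) = x, evaluates those slacks and the primal objective; the function names and the sample numbers are hypothetical.

```python
def slack_variables(y_true, y_pred, eps):
    """Smallest slacks satisfying y - f <= eps + xi_plus and f - y <= eps + xi_minus."""
    xi_plus = [max(0.0, yt - yp - eps) for yt, yp in zip(y_true, y_pred)]
    xi_minus = [max(0.0, yp - yt - eps) for yt, yp in zip(y_true, y_pred)]
    return xi_plus, xi_minus

def primal_objective(w, b, X, y, eps, C):
    """0.5 * ||w||^2 + C * sum(xi_plus + xi_minus), assuming psi(x) = x (linear SVR)."""
    preds = [sum(wk * xk for wk, xk in zip(w, x)) + b for x in X]
    xi_plus, xi_minus = slack_variables(y, preds, eps)
    return 0.5 * sum(wk * wk for wk in w) + C * (sum(xi_plus) + sum(xi_minus))

# Hypothetical data: only the second point lies outside the eps-tube around f(x) = x
X = [[0.0], [1.0], [2.0]]
y = [0.0, 1.5, 1.9]
print(slack_variables(y, [0.0, 1.0, 2.0], eps=0.2))
print(primal_objective([1.0], 0.0, X, y, eps=0.2, C=10.0))
```

Residuals inside the tube cost nothing, which is why \(\epsilon\) and C jointly control how closely the SVR follows the training data.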

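The Lagrange dual form of the above model is expressed as

$$ \begin{aligned} \max_{\alpha^{+},\alpha^{-}}\quad & -\frac{1}{2}\sum_{i=1}^{m}\sum_{j=1}^{m}\left(\alpha^{+}_{i}-\alpha^{-}_{i}\right)\left(\alpha^{+}_{j}-\alpha^{-}_{j}\right)k\langle\textbf{x}_{i},\textbf{x}_{j}\rangle-\epsilon\sum_{i=1}^{m}\left(\alpha^{+}_{i}+\alpha^{-}_{i}\right)+\sum_{i=1}^{m}y_{i}\left(\alpha^{+}_{i}-\alpha^{-}_{i}\right)\\ \text{s.t.}\quad & \sum_{i=1}^{m}\left(\alpha^{+}_{i}-\alpha^{-}_{i}\right)=0\\ & 0\le\alpha^{+}_{i},\ \alpha^{-}_{i}\le C,\quad i=1,\ldots,m \end{aligned} $$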

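where \(\alpha ^{+}_{i}\) and \(\alpha ^{-}_{i}\) denote the Lagrange multipliers, and \(k\langle\textbf{x}_{i},\textbf{x}_{j}\rangle=\psi(\textbf{x}_{i})^{\mathrm{T}}\psi(\textbf{x}_{j})\) is a kernel function, which has to be continuous, symmetric, and positive definite. The Gaussian kernel, one of the most popular kernel functions, is used in this paper:

$$ k\langle\textbf{x}_{i},\textbf{x}_{j}\rangle=\exp\left(-\gamma\left\|\textbf{x}_{i}-\textbf{x}_{j}\right\|^{2}\right) $$

where γ > 0 is the kernel function parameter.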
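The symmetry and positive definiteness required of the kernel can be checked on a small sample. The sketch below assumes the common parameterization \(\exp(-\gamma\|\textbf{x}_{i}-\textbf{x}_{j}\|^{2})\) (the same one LIBSVM uses for its RBF kernel); the random points, the value of γ, and the function name are illustrative choices.

```python
import numpy as np

def gaussian_kernel_matrix(X, gamma):
    """K[i, j] = exp(-gamma * ||x_i - x_j||^2) for the rows x_i of X."""
    X = np.asarray(X, dtype=float)
    sq_norms = np.sum(X ** 2, axis=1)
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, evaluated for all pairs at once
    sq_dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    # Clip tiny negative values caused by floating-point cancellation
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(8, 3))  # 8 distinct points in [0, 1]^3
K = gaussian_kernel_matrix(X, gamma=2.0)
print(np.allclose(K, K.T), np.linalg.eigvalsh(K).min() > 0)
```

For distinct points the Gaussian kernel matrix is strictly positive definite, so the smallest eigenvalue is positive; very large γ pushes K toward the identity and very small γ toward a nearly singular all-ones matrix.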

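According to the Karush–Kuhn–Tucker (KKT) conditions, the SVR model is finally obtained as

$$ \hat{y}(\textbf{x})=\sum_{i=1}^{m}\left(\alpha^{+}_{i}-\alpha^{-}_{i}\right)k\langle\textbf{x}_{i},\textbf{x}\rangle+b $$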
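The kernel expansion \(\hat{y}(\textbf{x})=\sum_{i}(\alpha^{+}_{i}-\alpha^{-}_{i})k\langle\textbf{x}_{i},\textbf{x}\rangle+b\) follows from the stationarity condition \(\boldsymbol{\omega}=\sum_{i}(\alpha^{+}_{i}-\alpha^{-}_{i})\psi(\textbf{x}_{i})\). The sketch below checks this equivalence with a linear kernel, where ψ(x) = x and ω can be formed explicitly. The multipliers are hypothetical values chosen to satisfy the equality constraint and \(\alpha^{+}_{i}\alpha^{-}_{i}=0\); they are not the solution of a dual problem.

```python
import numpy as np

def svr_predict(X_train, alpha_plus, alpha_minus, b, X_new, kernel):
    """y_hat(x) = sum_i (alpha_plus_i - alpha_minus_i) * k(x_i, x) + b."""
    coef = np.asarray(alpha_plus, float) - np.asarray(alpha_minus, float)
    K = kernel(np.asarray(X_new, float), np.asarray(X_train, float))  # shape (n_new, m)
    return K @ coef + b

def linear_kernel(A, B):
    # k(a, b) = a^T b, i.e. psi is the identity map
    return A @ B.T

# Hypothetical multipliers: sum(alpha_plus - alpha_minus) = 0, never both nonzero for one i
X_train = np.array([[0.0, 1.0], [1.0, 0.5], [2.0, -1.0]])
alpha_plus = np.array([0.0, 0.2, 0.3])
alpha_minus = np.array([0.5, 0.0, 0.0])
b = 0.1

# Explicit weight vector w = sum_i (alpha_plus_i - alpha_minus_i) * x_i
w = (alpha_plus - alpha_minus) @ X_train
X_new = np.array([[0.5, 0.5], [1.5, 2.0]])
print(svr_predict(X_train, alpha_plus, alpha_minus, b, X_new, linear_kernel))
print(X_new @ w + b)  # same values via w^T x + b
```

With a Gaussian kernel ψ is infinite-dimensional, so only the kernel form of the prediction is computable; only training points with nonzero multipliers (the support vectors) contribute to the sum.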

The efficient package LIBSVM developed by Chang and Lin (2011) is used to construct the SVR model in this paper.

Appendix 2. Analytical functions

(1) 1-variable Forrester function

$$ f(x)=(6x-2)^{2}\sin (12x-4) \tag{19} $$

where x ∈ [0, 1].

-

(2)

2-variable Goldstein Price function

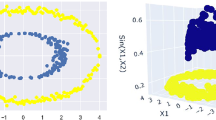

$$ \begin{array}{llll} f(\textbf{x})=\left[1+(x_{1}+x_{2}+ 1)^{2}\times\right.\\ \left.(19-14x_{1}+ 3{x_{1}^{2}}-14x_{2}+ 6x_{1}x_{2}+ 3{x_{2}^{2}})\right]\times\\ \left[30+(2x_{1}-3x_{2})^{2}\times\right.\\ \left.(18-32x_{1}+ 12{x_{1}^{2}}+ 48x_{2}-36x_{1}x_{2}+ 27{x_{2}^{2}})\right] \end{array} $$(20)where x1 ∈ [− 2, 2], and x2 ∈ [− 2, 2].

(3) 3-variable Perm function

$$ f(\textbf{x})=\sum_{i=1}^{3}\left(\sum_{j=1}^{3}(j+2)\left(x_{j}^{i}-\frac{1}{j^{i}}\right)\right)^{2} \tag{21} $$

where xj ∈ [0, 1] for all j = 1, 2, 3.

(4) 4-variable Hartmann function

$$ f(\textbf{x})=-\sum_{i=1}^{4} c_{i}\exp\left[-\sum_{j=1}^{4} a_{ij}\left(x_{j}-p_{ij}\right)^{2}\right] \tag{22} $$

where xj ∈ [0, 1] for all j = 1, 2, 3, 4, \(\textbf{c}=\left[1.0\;\;1.2\;\;3.0\;\;3.2\right]^{\mathrm{T}}\), and A = [aij] and P = [pij] are expressed as follows.

$$ \textbf{A}=\begin{bmatrix} 10 & 3.0 & 17 & 3.5\\ 0.05 & 10 & 17 & 0.1\\ 3.0 & 3.5 & 1.7 & 10\\ 17 & 8.0 & 0.05 & 10 \end{bmatrix},\qquad \textbf{P}=\begin{bmatrix} 0.1312 & 0.1696 & 0.5569 & 0.124\\ 0.2329 & 0.4135 & 0.8307 & 0.3736\\ 0.2348 & 0.1451 & 0.3522 & 0.2883\\ 0.4047 & 0.8828 & 0.8732 & 0.5743 \end{bmatrix} $$

(5) 5-variable Zakharov function

$$ f(\textbf{x})=\sum_{i=1}^{5} x_{i}^{2}+\left(\sum_{i=1}^{5} 0.5\,i\,x_{i}\right)^{2}+\left(\sum_{i=1}^{5} 0.5\,i\,x_{i}\right)^{4} \tag{23} $$

where xi ∈ [−5, 10] for all i = 1, …, 5.

(6) 6-variable Power Sum function

$$ f(\textbf{x})=\sum_{i=1}^{6}\left[\left(-\sum_{j=1}^{6} x_{j}^{i}\right)-13\right]^{2} \tag{24} $$

where xj ∈ [0, 4] for all j = 1, …, 6.
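For reproducing the benchmark study, the six analytical functions can be transcribed directly into code. The Python sketch below follows Eqs. (19)–(24) as printed, including the constant 2 in the Perm function, the 4 × 4 Hartmann matrices, and the leading minus sign inside the Power Sum function; the function names are hypothetical. Known reference values provide quick checks: the Goldstein–Price function equals 3 at (0, −1), the Perm function as written vanishes at xj = 1/j, and the Zakharov function is zero at the origin.

```python
import math

def forrester(x):
    # Eq. (19), x in [0, 1]
    return (6 * x - 2) ** 2 * math.sin(12 * x - 4)

def goldstein_price(x1, x2):
    # Eq. (20), x1, x2 in [-2, 2]
    a = 1 + (x1 + x2 + 1) ** 2 * (19 - 14 * x1 + 3 * x1 ** 2 - 14 * x2 + 6 * x1 * x2 + 3 * x2 ** 2)
    b = 30 + (2 * x1 - 3 * x2) ** 2 * (18 - 32 * x1 + 12 * x1 ** 2 + 48 * x2 - 36 * x1 * x2 + 27 * x2 ** 2)
    return a * b

def perm(x):
    # Eq. (21) with the constant 2 as printed, x_j in [0, 1]
    d = len(x)
    return sum(sum((j + 2) * (x[j - 1] ** i - 1 / j ** i) for j in range(1, d + 1)) ** 2
               for i in range(1, d + 1))

# Eq. (22) coefficients
C_H = [1.0, 1.2, 3.0, 3.2]
A_H = [[10, 3.0, 17, 3.5], [0.05, 10, 17, 0.1], [3.0, 3.5, 1.7, 10], [17, 8.0, 0.05, 10]]
P_H = [[0.1312, 0.1696, 0.5569, 0.124], [0.2329, 0.4135, 0.8307, 0.3736],
       [0.2348, 0.1451, 0.3522, 0.2883], [0.4047, 0.8828, 0.8732, 0.5743]]

def hartmann4(x):
    # Eq. (22), x_j in [0, 1]
    return -sum(C_H[i] * math.exp(-sum(A_H[i][j] * (x[j] - P_H[i][j]) ** 2 for j in range(4)))
                for i in range(4))

def zakharov(x):
    # Eq. (23), x_i in [-5, 10]
    s = sum(0.5 * i * xi for i, xi in enumerate(x, start=1))
    return sum(xi ** 2 for xi in x) + s ** 2 + s ** 4

def power_sum(x):
    # Eq. (24) exactly as printed, x_j in [0, 4]
    d = len(x)
    return sum(((-sum(xj ** i for xj in x)) - 13) ** 2 for i in range(1, d + 1))

print(goldstein_price(0.0, -1.0), perm([1.0, 0.5, 1 / 3]), zakharov([0.0] * 5))
```

Sampling these functions over their stated domains, for example with a Latin hypercube design, yields training and test sets of the kind used to compare SVR and the MSVR variants.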


About this article

Cite this article

Yan, C., Shen, X., Guo, F. et al. A novel model modification method for support vector regression based on radial basis functions. Struct Multidisc Optim 60, 983–997 (2019). https://doi.org/10.1007/s00158-019-02251-5


DOI: https://doi.org/10.1007/s00158-019-02251-5