Abstract

Music is a ubiquitous stimulus known to influence human affect, cognition, and behavior. In the context of eating behavior, music has been associated with food choice, intake and, more recently, taste perception. In the latter case, the literature has reported consistent patterns of association between auditory and gustatory attributes, suggesting that individuals reliably recognize taste attributes in musical stimuli. This study presents subjective norms for a new set of 100 instrumental music stimuli, including basic taste correspondences (sweetness, bitterness, saltiness, sourness), emotions (joy, anger, sadness, fear, surprise), familiarity, valence, and arousal. This stimulus set was evaluated by 329 individuals (83.3% women; Mage = 28.12, SD = 12.14), online (n = 246) and in the lab (n = 83). Each participant evaluated a random subsample of 25 soundtracks and responded to self-report measures of mood and taste preferences, as well as the Goldsmiths Musical Sophistication Index (Gold-MSI). Each soundtrack was evaluated by 68 to 97 participants (Mdn = 83), and descriptive results (means, standard deviations, and confidence intervals) are available as supplemental material at osf.io/2cqa5. Significant correlations between taste correspondences and emotional/affective dimensions were observed (e.g., between sweetness ratings and pleasant emotions). Sex, age, musical sophistication, and basic taste preferences showed few associations, of small to medium size, with the stimulus evaluations. Overall, these results suggest that the new Taste & Affect Music Database is a relevant resource for research and intervention with musical stimuli in the context of crossmodal taste perception and other affective, cognitive, and behavioral domains.

Introduction

Music is everywhere. It has been part of the human experience since ancient times (Zatorre & Salimpoor, 2013) and has acquired a ubiquitous presence in most daily activities, from shopping to driving, working, or even eating. Accordingly, musical stimuli have attracted researchers' interest, given their potential impact on how people feel, think, and behave. Considering the need for validated musical stimulus sets in research, the current study aimed to obtain subjective norms for a new set of 100 instrumental soundtracks. The soundtracks were evaluated for basic taste correspondences (e.g., sweetness, sourness), discrete emotions (e.g., joy, anger), and affective dimensions (e.g., valence, arousal).

Interest in the implications of music listening is not new. For instance, since the introduction of the car radio in the 1950s, researchers, manufacturers, and even insurance companies have been concerned with how music affects people’s psychological state and driving performance (Millet et al., 2019; van der Zwaag et al., 2012). Similarly, the effects of music on daily activities, such as working (Landay & Harms, 2019; Rastipisheh et al., 2019; Shih et al., 2012), shopping (Biswas et al., 2019; Hynes & Manson, 2016; Knöferle et al., 2017; Michel et al., 2017; Yi & Kang, 2019), or exercising (Hutchinson et al., 2018; Moss et al., 2018; Terry et al., 2020) have been the focus of extensive empirical interest (see also Kämpfe et al., 2011). In most developed countries, music is also part of what is, perhaps, one of the most critical and recurring human activities: eating. Currently, many meal contexts, such as restaurants, cafés, or food courts, feature background music as an important part of their atmosphere (a popular example is the coffeehouse chain Starbucks, whose background music has been a key element of the customer experience and of the brand’s identity; see Starbucks, 2014, 2015).

Previous research has documented how the presence of music may shape consumers’ behavior, including meal duration (Stroebele & de Castro, 2006), drinking and eating rates (Mathiesen et al., 2020; McElrea & Standing, 1992; Roballey et al., 1985), or meal enjoyment (Novak et al., 2010). More recently, researchers have suggested that music not only affects behavior toward food but also how we perceive it (see Spence et al., 2019a; Spence et al., 2019b). A growing body of evidence shows that sound, in general, and music, in particular, may enhance (or dampen) the perceived sensory properties of foods and drinks. While ambient sounds and noise have been shown to alter taste perception to a significant extent (Bravo-Moncayo et al., 2020; Woods et al., 2011; Yan & Dando, 2015), particular attention has been paid to music, given its ability to convey different taste-related associations. Indeed, as Kontukoski et al. (2015) noted, taste and music may be described using similar terms. Adjectives such as “sweet”, “light”, or “soft” seem to refer to common subjective experiences elicited by either foods or sounds.

In previous studies, manipulating specific musical parameters or acoustic properties has produced different basic taste associations. For example, Mesz et al. (2011) found that trained musicians consistently manipulated specific musical parameters (such as pitch, loudness, or articulation) to convey meanings associated with basic tastes. Specifically, when asked to improvise on the word “bitter”, musicians produced improvisations that were more legato and lower pitched, whereas the word “sweet” resulted in slower, softer improvisations. When these same improvisations were presented to non-musicians, listeners decoded the musicians’ intentions with above-chance accuracy.

Other studies have found similar consistencies in sound–taste mappings, particularly with pitch. Overall, high-pitched sounds were more frequently associated with sweet and/or sour tastes (Crisinel et al., 2012; Crisinel & Spence, 2009, 2010a, 2010b; Knöferle et al., 2015; Reinoso-Carvalho, Wang, de Causmaecker, et al., 2016; Velasco et al., 2014; Wang et al., 2016), whereas low-pitched sounds were more frequently associated with bitter tastes (Crisinel et al., 2012; Crisinel & Spence, 2009, 2010b; Knöferle et al., 2015; Qi et al., 2020; Reinoso-Carvalho, Wang, de Causmaecker, et al., 2016; Velasco et al., 2014; Watson & Gunther, 2017). Associations between basic tastes and musical instruments have also been documented, for example, between sweetness and piano or bitterness and brass (Crisinel & Spence, 2010b). Knöferle et al. (2015) also found systematic associations between basic tastes and several sonic properties such as roughness, sharpness, discontinuity, and consonance.

The growing understanding of sound–taste associations has allowed researchers and sound designers to create customized soundtracks to modulate taste perception (Wang et al., 2015). For example, Crisinel et al. (2012) developed a low-pitched brass soundtrack (“bitter”) and a high-pitched piano soundtrack (“sweet”) and found that participants rated a bittersweet cinder toffee as tasting significantly more bitter when listening to the bitter soundtrack than when listening to the sweet one (see also Höchenberger & Ohla, 2019). Subsequent research extended the evidence on the modulatory potential of customized music to the perception of different foods and beverages, including chocolate (Reinoso-Carvalho et al., 2015; Reinoso-Carvalho et al., 2017), juices (Wang & Spence, 2016, 2018), and beers (Reinoso-Carvalho, Velasco, van Ee, et al., 2016; Reinoso-Carvalho, Wang, Van Ee, & Spence, 2016).

Other studies have also tested the effects of familiar music, such as rock and pop songs or classical pieces, on taste (De Luca et al., 2018; Hauck & Hecht, 2019; Kantono et al., 2019; Kantono, Hamid, Shepherd, Yoo, Carr, & Grazioli, 2016; Reinoso-Carvalho et al., 2019; Reinoso-Carvalho, Velasco, et al., 2016; Spence et al., 2013; Stafford et al., 2012; Wang et al., 2019; Wang & Spence, 2015). The fact that most music has the potential to elicit not only implicit taste associations but also (and, perhaps, most notably) emotions raises questions about the role of affective variables in the crossmodal associations between audition and taste (Crisinel & Spence, 2012). Indeed, participants tend to match tastes that are commonly thought to be pleasant (e.g., sweetness) with sounds that are also deemed pleasant (e.g., piano), and vice versa (Crisinel & Spence, 2010b). Previous studies (Kantono et al., 2019; Kantono, Hamid, Shepherd, Yoo, Grazioli, & Carr, 2016) also found that listening to self-selected, liked music increased the salience of the sweetness attributes of gelati, whereas disliked music seemed to enhance their bitterness.

The existing literature seems consistent with the view that emotions mediate sound–taste correspondences and contribute to the multisensory experience with foods. However, the role of discrete emotions and affective variables in this context remains insufficiently explored (Reinoso-Carvalho et al., 2019; Reinoso-Carvalho, Gunn, Horst, & Spence, 2020; Wang et al., 2016). Moreover, this domain of inquiry seems to lack a systematic and integrated assessment of auditory stimuli. Despite some efforts in replicating previous findings (Crisinel & Spence, 2010b; Höchenberger & Ohla, 2019; Rudmin & Cappelli, 1983; Watson & Gunther, 2017), the existing empirical studies are based on a great diversity of auditory stimuli, often created independently (i.e., for each new study) and rarely assessed together, in a similar setting and based on equivalent assessment parameters.

Wang et al. (2015) contributed one of the first integrative efforts by simultaneously testing 24 soundtracks previously created for scientific research or artistic performances. The soundtracks were selected for their ability to elicit crossmodal associations with specific tastes or to shape the perception of taste in foods and drinks. The results suggested that participants were able to decode the basic taste associated with each soundtrack with above-chance accuracy, and those associations were partly mediated by pleasantness and arousal dimensions. In Wang et al.'s (2015) study, only customized soundtracks (i.e., music composed to elicit taste correspondences) were tested. However, a large body of evidence suggests that crossmodal effects may also be found for music not intended to modulate taste perception (De Luca et al., 2018; Hauck & Hecht, 2019; Kantono, Hamid, Shepherd, Yoo, Carr, & Grazioli, 2016; Kantono, Hamid, Shepherd, Yoo, Grazioli, & Carr, 2016; Kantono et al., 2019; Reinoso-Carvalho et al., 2019; Reinoso-Carvalho, Velasco, et al., 2016; Spence et al., 2013; Stafford et al., 2012; Wang et al., 2019; Wang & Spence, 2015). More importantly, these studies used musical stimuli spanning different moods and genres (e.g., pop, classical, or jazz), which may be closer to what one would expect to hear in a real-world eating environment (e.g., a restaurant) than the more abstract and homogeneous soundtracks that were specifically created to mimic basic tastes (Wang et al., 2015). This point is particularly relevant for practitioners interested in designing multisensory eating experiences, for which familiarity and pleasantness could be important determinants of customer satisfaction.
In the present study, we sought to extend the existing pool of stimulus materials by obtaining subjective ratings for a comprehensive set of instrumental musical stimuli that could be useful both for laboratory experiments and real-world environments (i.e., music that one could expect to hear in a restaurant). Thus, stimuli were selected to span different musical genres, as well as different “moods”. We also intended to overcome the lack of integration of emotional and affective variables in crossmodal research by concurrently testing taste correspondences, discrete emotions (e.g., joy, anger), and affective dimensions (e.g., valence, arousal).

Method

Participants

A sample of 329 respondents (83.3% women; Mage = 28.12, SD = 12.14) volunteered to participate in this study. Participants were recruited via e-mail, social media (Facebook, WhatsApp, and LinkedIn), internal university channels, and online press. Undergraduate students made up 71% of the sample, and 29% were employed. Most participants (93.9%) were native Portuguese, and all reported normal hearing. Only 6.5% of participants had current or past professional experience in music-related activities. Data were collected online (n = 246) and in the laboratory (n = 83).

Based on previous norming studies with auditory stimuli, a minimum of 50 evaluations per stimulus was considered adequate (Belfi & Kacirek, 2021; Imbir & Golab, 2017; Souza et al., 2020). The number of ratings per stimulus ranged from 68 to 97 (Mdn = 83) when considering the entire sample (55 to 69 for the online sample alone).

Development of the stimulus set

The stimulus set is composed of 100 royalty-free soundtracks retrieved from https://www.epidemicsound.com. All files were obtained from the music catalog, which spans over 35,000 tracks, 160 genres (e.g., jazz, pop, small emotions), and 34 “moods” (e.g., sentimental, mysterious, relaxing).

The stimuli were searched through the moods directory with the aim of covering different quadrants of the affective space. For this purpose, we used Russell's (1980) circumplex model of affect to define four search categories based on the possible combinations of arousal (high vs. low) and valence (positive vs. negative). For instance, the high arousal/positive valence quadrant was composed of soundtracks that were tagged in the moods directory as “happy”, “euphoric”, or “funny”, whereas the low arousal/negative valence quadrant included soundtracks from collections such as “sad”, “dark” or “sentimental”. The stimuli were chosen to include different genres and musical instruments. All were instrumental only to control for the potential influence of lyrics (e.g., Brattico et al., 2011; Mori & Iwanaga, 2014).

From an affective standpoint, music styles may differ in their affective charge or in the type of emotions they elicit. Previous studies have provided affective norms for music of specific genres or families of genres, such as Western classical music (Lepping et al., 2016), film soundtracks (Eerola & Vuoskoski, 2011; Vieillard et al., 2008), or Latin music (e.g., tango, pagode; dos Santos & Silla, 2015). Considering the crossmodal associations between tastes and musical parameters, such as pitch, loudness, articulation, or even instrument type (e.g., Mesz et al., 2012), we also extended the search across various categories of the “genre” directory.

All soundtracks used in this study were original and not marketed directly to the general public, and were therefore expected to be new and unfamiliar to most participants. In that regard, this stimulus set complements previous validation studies, which tested highly popular, familiar music. Examples include Belfi and Kacirek's (2021) Famous Melodies Stimulus Set (composed of highly popular, familiar Western songs, such as the “Star Wars theme”, “Jingle Bells”, or the “Happy Birthday” tune), or Song et al.'s (2016) and Imbir and Golab's (2017) datasets, primarily composed of chart-topping Western hits (e.g., “November Rain” by Guns N’ Roses, “Ultraviolence” by Lana Del Rey). The latter dataset also includes jazz and classical music.

The stimulus set presented in the current study comprises 100 soundtracks, with 25 items per valence/arousal quadrant. All files were trimmed to a standardized duration of 30 s with a 2.5 s fade-out.
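The trimming step can be sketched as follows, assuming each file has already been decoded into a NumPy array of samples (mono, or samples × channels). The text specifies only the 30 s duration and the 2.5 s fade-out, so the linear gain ramp is an assumption, and `trim_with_fade` is a hypothetical helper rather than the tool actually used to prepare the stimuli.

```python
import numpy as np

def trim_with_fade(signal: np.ndarray, sample_rate: int,
                   duration_s: float = 30.0, fade_s: float = 2.5) -> np.ndarray:
    """Keep the first duration_s seconds and fade out over the last fade_s seconds.

    Hypothetical helper: the linear fade shape is an assumption, not the authors' setting.
    """
    n_keep = int(round(duration_s * sample_rate))
    # Copy as float so the gain ramp can be applied without touching the input.
    clip = np.array(signal[:n_keep], dtype=np.float64, copy=True)
    n_fade = min(int(round(fade_s * sample_rate)), len(clip))
    # Gain decreases linearly from 1.0 to 0.0 across the fade window.
    ramp = np.linspace(1.0, 0.0, n_fade)
    if clip.ndim > 1:
        ramp = ramp[:, np.newaxis]  # broadcast the same gain over every channel
    clip[len(clip) - n_fade:] *= ramp
    return clip

# Toy usage: 40 s of constant signal at a (hypothetical) 1 kHz sample rate.
sr = 1000
mono = trim_with_fade(np.ones(40 * sr), sr)
stereo = trim_with_fade(np.ones((40 * sr, 2)), sr)
```

A real 44.1 kHz file works the same way; only `sample_rate` changes.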

Procedure and measures

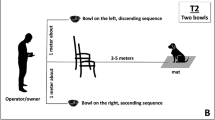

This study was approved by the Ethics Committee of Iscte-Instituto Universitário de Lisboa. The survey was programmed using Qualtrics. Participants in the online data collection sample were instructed to use headphones and choose a quiet place for participation. The laboratory data collection took place in soundproof booths equipped with similar desktop computers and headphones. The materials and procedures for both samples were the same.

Before starting the survey, irrespective of the data collection method, participants were asked to confirm that they did not suffer from any permanent or transient hearing impairment at the time of the study that could be detrimental to their performance. The informed consent provided information on the general goals of the study and stated compliance with the applicable norms of ethical conduct in research. Specifically, individuals were informed that all collected data would be treated anonymously (i.e., no information would be asked that allowed personal identification) and that their participation was voluntary (i.e., they could abandon the study at any point).

After agreeing to the terms of the informed consent and confirming that they had no hearing impairments, participants answered sociodemographic questions about sex, age, nationality, and occupation. Afterward, participants provided subjective ratings for 25 stimuli, randomly selected from the pool of 100 stimuli. Finally, mood and individual differences in basic taste preferences and in musical skills and behaviors were assessed using self-report scales.

Subjective ratings

Participants were told to listen attentively to each sound clip in its entirety and rate it on several attributes (i.e., basic tastes, emotions, valence, arousal, and familiarity). For the basic taste correspondences, participants were given two examples of foods stereotypically associated with each taste (i.e., lemon and vinegar for sourness, coffee and Brussels sprouts for bitterness, potato chips and salt for saltiness, and honey and sugar for sweetness). The examples were provided to reduce ambiguity and avoid confusion between tastes, particularly between sourness and bitterness (O’Mahony et al., 1979).

To avoid fatigue, each participant assessed only a random subset of 25 soundtracks. Each stimulus was presented alone on a blank screen. After 30 s, a forward button appeared on the screen, allowing participants to rate the soundtrack on 14 attributes (for instructions and scale anchors, see Table 1). A forced-choice item was presented for basic tastes, in which participants had to report whether they considered the soundtrack to be sweet, bitter, salty, or sour (options presented in random order). Although umami is commonly considered the fifth basic taste, Western individuals are usually less capable of discriminating it (Cecchini et al., 2019; Sinesio et al., 2009). For this reason, and in line with previous research testing the crossmodal associations between audition and taste, we retained only the other four basic tastes (e.g., Wang et al., 2015).
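In the study itself, randomization was handled by Qualtrics. A minimal, hypothetical re-implementation of the same logic (25 distinct soundtracks drawn without replacement from the 100, plus a freshly shuffled order of the four taste options on every trial) might look like this; `build_session`, `STIMULUS_IDS`, and `TASTE_OPTIONS` are illustrative names, not part of the original survey.

```python
import random

STIMULUS_IDS = list(range(1, 101))                 # soundtracks numbered 1..100
TASTE_OPTIONS = ["sweet", "bitter", "salty", "sour"]

def build_session(rng: random.Random, n_items: int = 25) -> list[tuple[int, list[str]]]:
    """Return (soundtrack_id, taste_option_order) pairs for one participant."""
    # sample() draws without replacement and returns the items in random order,
    # so it fixes both the subset and its presentation order.
    chosen = rng.sample(STIMULUS_IDS, n_items)
    session = []
    for stim in chosen:
        options = TASTE_OPTIONS[:]                 # copy so the master list stays intact
        rng.shuffle(options)                       # new option order on every trial
        session.append((stim, options))
    return session

session = build_session(random.Random(1))
```

Because each participant's subset is drawn independently under this scheme, the number of ratings per soundtrack varies across stimuli rather than being fixed.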

The affective connotations of the auditory stimuli were assessed from both a dimensional and a categorical perspective (Lindquist et al., 2013). Psychological constructionist models of emotion postulate the existence of a core affective system underlying emotional experience. Core affect is characterized as fluctuations in organisms’ neurophysiological and somatovisceral states in response to current events, varying in valence and arousal (Barrett, 2009, 2011). These two axes have been documented as essential across different dimensional models of affect (see Yik et al., 1999) and are relevant subjective descriptors in several databases of auditory stimuli, including natural and environmental sounds (Bradley & Lang, 2007; Fan et al., 2017; Hocking et al., 2013; Yang et al., 2018), vocalizations (Belin et al., 2008; Lassalle et al., 2019; Parsons et al., 2014), audio stories (Bertels et al., 2014), and music (Belfi & Kacirek, 2021; Imbir & Golab, 2017; Lepping et al., 2016; Song et al., 2016; Vieillard et al., 2008). In line with previous research (Ali & Peynircioğlu, 2010; Schubert, 2007; Zentner et al., 2008), we distinguished perceived (P) from felt (F) affective dimensions, asking participants to rate valence and arousal in two ways. First, these dimensions were rated as attributes of the stimulus (“This soundtrack is…”, i.e., perceived), and second, they were used to describe the subjective emotional experience (“This soundtrack makes me feel…”, i.e., felt). In both cases, valence and arousal items were answered using seven-point rating scales.

Advocates of discrete theories of emotion contend that emotional categories like “joy”, “anger”, or “fear” elicit distinct patterns of change in cognition, judgment, experience, behavior, and physiology (Lench et al., 2011). Most theoretical accounts adhere to a functionalist perspective, in which emotions are seen as evolutionary adaptive responses, among which basic emotions represent the most primitive and universal forms of emotional expression (Ekman & Cordaro, 2011; Tracy & Randles, 2011). Although no broad consensus exists about the number and kind of emotions considered “basic”, some emotions seem to be present in most theoretical models (Ortony & Turner, 1990; Tracy & Randles, 2011). In this study, we included five discrete emotions commonly described in the literature (joy, sadness, anger, fear, and surprise) that have been previously studied in the context of music (Eerola & Vuoskoski, 2011; Juslin, 2013; Mohn et al., 2011) and sound associations (Yang et al., 2018). Individuals were asked to rate each soundtrack for each of the five emotions, using a seven-point rating scale ranging from 1 (not at all) to 7 (very much).

One relevant factor when considering the relationship between music and emotions is familiarity. Repeated exposure may positively or negatively influence enjoyment depending on factors such as the valence of the stimulus (Witvliet & Vrana, 2007) or whether exposure is focused or incidental (Schellenberg et al., 2008; Szpunar et al., 2004). Notably, familiarity effects seem to occur even when participants have no explicit memory of the musical stimulus (van den Bosch et al., 2013). Popular music, in particular, may become personally meaningful due to associations with people, places, and past events (Krumhansl, 2002). Schulkind et al. (1999) found that older individuals preferred and showed more favorable emotional responses to songs from their youth, whereas younger individuals favored contemporary music. Although familiar music is generally more likely to evoke autobiographical memories, Janata et al. (2007) found that some participants may still report some degree of autobiographical association in response to unfamiliar stimuli. Even though the stimuli in the present study were likely to be unknown to participants, we expected that differences in familiarity could still emerge from individuals’ ability to make implicit associations with personally meaningful events. In this study, we asked participants to rate how familiar each soundtrack was, using a scale ranging from 1 (very unfamiliar) to 7 (very familiar).

Mood

After the stimulus rating task, participants completed a brief mood self-report scale as a post-experimental control measure. We used six pairs of bipolar adjectives (e.g., positive-negative) based on Garcia-Marques (2004). Participants answered each item using a seven-point scale.

Preference for basic tastes

There are several ways to assess basic taste preferences. While many studies use taste testing (of aqueous solutions, odorants, or real foods) to assess preferences (Keskitalo, Knaapila, et al., 2007a; Keskitalo, Tuorila, et al., 2007b; for a review, see Drewnowski, 1997), these methods are logistically complex and challenging to adapt for online studies. One common alternative is self-report questionnaires, generally based on hedonic ratings of food items presented verbally (Kaminski et al., 2000) or visually (Jilani et al., 2019). For instance, Meier et al. (2012) asked participants to rate their liking of foods belonging to five taste/flavor groups, using a six-point scale ranging from dislike strongly to like strongly. In the present study, we asked participants to rate their overall liking of each taste (“please indicate how much you enjoy the following tastes”), using two examples for each taste group, based on the list of food items used in Meier et al.’s (2012) study. To avoid ambiguity, the same examples were provided here and in the basic taste association task. Participants indicated their preference using a seven-point scale (from I don’t like it at all to I like it very much).

Musical skills and behaviors

People relate to music to different degrees. Individual differences in involvement and engagement with music and musical activities have been described under many guises, such as musicality, musical intelligence, or musical talent (Baker et al., 2020). Müllensiefen et al. (2014a, 2014b) proposed the overarching concept of musical sophistication to describe different degrees of musical skills or behaviors that allow individuals to respond flexibly and effectively to different musical situations. This continuous, multidimensional conception of musical sophistication was psychometrically operationalized by the Goldsmiths Musical Sophistication Index (Gold-MSI; Müllensiefen et al., 2014a, 2014b), a self-report inventory of skilled musical behaviors for musicians and non-musicians. The Gold-MSI comprises one general sophistication index and five subscales covering active musical engagement behaviors (Active Engagement), self-assessed cognitive musical ability (Perceptual Abilities), the extent of musical training and practice (Musical Training), activities and skills particularly related to singing (Singing Abilities), and emotional responses to music (Emotions). The Gold-MSI was validated for the Portuguese population by Lima et al. (2020). The European Portuguese version of the scale (Gold-MSI-P) replicates the original factor structure and presents appropriate psychometric properties, including good internal consistency (α = .82 to .91) and test–retest reliability (r = .84 to .94). A confirmatory factor analysis indicated an acceptable fit between the model and the observed data, χ²(627) = 1615.56, p < .001, CFI = .86, TLI = .84, RMSEA = .06, SRMR = .06, in line with the indices obtained with previous versions of the scale for other nationalities.

Results

The complete normative data for the 100 stimuli on the subjective dimensions of taste, emotions, valence, arousal, and familiarity are provided as supplemental material (see Supplementary File 1 at osf.io/2cqa5). In the following section, we provide the results of a) the preliminary analyses (e.g., outlier detection; impact of data collection method on ratings); b) the subjective rating norms for each dimension; c) the associations between evaluative dimensions; and d) the associations between subjective ratings and individual differences in sex, age, basic taste preferences, and musical sophistication.

Preliminary analysis

Since only completed surveys were retained, no missing data were observed. Ratings lying more than 2.5 standard deviations above or below the mean rating of each stimulus were considered outliers (1.24% of ratings). Moreover, there was no indication of systematic or random responding (e.g., consistent use of a single scale point). Therefore, no participants were excluded.
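The ±2.5 SD screening rule, applied separately to each stimulus, can be sketched as below. Using the sample standard deviation (n − 1 denominator) is an assumption, since the text does not specify it, and `flag_outliers` is a hypothetical helper that only flags ratings; it does not decide what happens to them.

```python
from statistics import mean, stdev

def flag_outliers(ratings_by_stimulus: dict[str, list[float]],
                  k: float = 2.5) -> dict[str, list[bool]]:
    """Flag ratings more than k standard deviations from their stimulus mean."""
    flags = {}
    for stim, ratings in ratings_by_stimulus.items():
        m = mean(ratings)
        sd = stdev(ratings)  # sample SD (n - 1); an assumption
        flags[stim] = [abs(r - m) > k * sd for r in ratings]
    return flags

# Toy data: 20 ratings of 4 and a single rating of 1 for one stimulus.
flags = flag_outliers({"s1": [4.0] * 20 + [1.0]})
share_flagged = sum(sum(f) for f in flags.values()) / sum(len(f) for f in flags.values())
```

Summing the flags across all stimuli gives the overall outlier share, the quantity reported above as 1.24%.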

To test the consistency of participants’ ratings on each dimension, we compared two randomly selected halves of the total sample (n1 = 165; n2 = 164; for a similar procedure, see Garrido et al., 2017; Prada et al., 2016). No significant differences between the subsamples emerged (all p > .193). Additionally, we compared the ratings provided in the laboratory with those of an equally sized random subsample (n = 83) of the online data, balanced for age and gender. We did not observe significant differences according to the data collection method for any of the taste correspondences or evaluative dimensions (all p > .071). Therefore, all subsequent analyses were conducted using the total sample. The comparison between ratings of online and laboratory participants is provided in Supplementary File 2.
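The text does not name the test used for these comparisons. As a hedged sketch, the random split and a Welch two-sample t statistic (an assumed choice of test) could be computed as follows; a p-value would then come from a t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`. Both helper names are hypothetical.

```python
import random
from statistics import mean, variance

def random_halves(participant_ids, rng: random.Random):
    """Shuffle participants and split them into two halves (329 -> 165 + 164)."""
    ids = list(participant_ids)
    rng.shuffle(ids)
    cut = (len(ids) + 1) // 2
    return ids[:cut], ids[cut:]

def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for two independent samples with unequal variances."""
    va = variance(a) / len(a)   # squared standard error of the mean of a
    vb = variance(b) / len(b)   # squared standard error of the mean of b
    return (mean(a) - mean(b)) / (va + vb) ** 0.5

half1, half2 = random_halves(range(329), random.Random(0))
```

In the norming data, `welch_t` would be applied per rating dimension to the ratings supplied by `half1` versus `half2`.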

Subjective rating norms

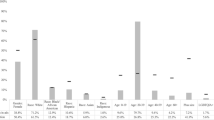

To define subjective rating norms, the data were coded and analyzed by soundtrack. The frequencies, means, standard deviations, and confidence intervals on each dimension for each soundtrack are provided as supplemental material (see Supplementary File 1). Based on these results, we further categorized the soundtracks as low, moderate, or high on each dimension (for a similar procedure, see Garrido & Prada, 2017; Prada et al., 2016; Rodrigues et al., 2018; Souza et al., 2021). Specifically, a soundtrack was considered moderate on a given dimension if its confidence interval included the rating scale’s midpoint. If the upper bound of the interval was below the midpoint, the stimulus was considered low on that dimension, and if the lower bound was above the midpoint, the stimulus was considered high. The frequencies of low, moderate, and high stimuli are presented in Fig. 1.
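The categorization rule above can be sketched for a single stimulus and dimension. The midpoint of 4 follows from the seven-point scales; the normal-approximation 95% interval (mean ± 1.96 × SE) is an assumption, since the text does not say how the intervals were computed, and a t-based interval would be marginally wider. `categorize` is a hypothetical helper.

```python
from statistics import mean, stdev

def categorize(ratings: list[float], midpoint: float = 4.0, z: float = 1.96) -> str:
    """Label one stimulus as low/moderate/high on one dimension from its CI."""
    m = mean(ratings)
    se = stdev(ratings) / len(ratings) ** 0.5   # standard error of the mean
    lower, upper = m - z * se, m + z * se       # assumed normal-approximation 95% CI
    if upper < midpoint:
        return "low"        # entire interval below the midpoint
    if lower > midpoint:
        return "high"       # entire interval above the midpoint
    return "moderate"       # interval includes the midpoint
```

Counting the labels across the 100 soundtracks, dimension by dimension, yields the frequencies summarized in Fig. 1.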

Nearly half of the stimuli were rated as moderately familiar (n = 49), and most were perceived as highly pleasant (n = 55), with a fairly even distribution across perceived arousal levels. Most soundtracks elicited moderately or highly pleasant states (n = 22 and n = 59, respectively), and nearly half elicited low felt arousal (n = 49). Most soundtracks were rated low in unpleasant discrete emotions such as anger (n = 88), fear (n = 82), and sadness (n = 75). More than half were also rated low in joy (n = 62) and surprise (n = 73). The intersection between the levels of the ten rating dimensions is presented in Table 2. As can be seen, very few soundtracks were rated high in two discrete emotions simultaneously: apart from fear and surprise, no pair of discrete emotions overlapped at the high level. Felt and perceived affective dimensions (valence and arousal) were also consistent with each other; specifically, no item was rated low on a perceived dimension and high on the corresponding felt dimension.
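Assuming each soundtrack's low/moderate/high labels are stored per dimension, the kind of overlap summarized in Table 2 (soundtracks rated high on two dimensions at once) could be tallied as below. The data layout and `high_overlaps` are hypothetical.

```python
from itertools import combinations

def high_overlaps(categories: dict[str, dict[str, str]]) -> dict[tuple[str, str], int]:
    """Count soundtracks labelled 'high' on both dimensions of every dimension pair.

    categories maps soundtrack -> {dimension: 'low' | 'moderate' | 'high'}.
    """
    dims = sorted({d for levels in categories.values() for d in levels})
    overlaps = {}
    for a, b in combinations(dims, 2):
        overlaps[(a, b)] = sum(
            1 for levels in categories.values()
            if levels.get(a) == "high" and levels.get(b) == "high"
        )
    return overlaps

# Toy example with two soundtracks and three emotion dimensions.
toy = {
    "s1": {"fear": "high", "surprise": "high", "joy": "low"},
    "s2": {"fear": "high", "surprise": "moderate", "joy": "low"},
}
overlaps = high_overlaps(toy)
```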

For basic taste correspondences, we calculated the proportion of participants choosing each basic taste for each soundtrack. For each of the four tastes, some soundtracks were chosen at rates above chance level (i.e., 25%). The number of soundtracks above the 25% and 50% levels, as well as the range and mean proportions, are presented in Table 3. Overall, the mean proportion of taste correspondences across the 100 stimuli was highest for sweetness (32.5%), whereas bitterness, saltiness, and sourness presented more similar means (ranging from 20.1% to 24.2%). The highest proportion of correspondences with any taste was observed for sweetness (soundtrack 69, “Fruit of Lore”), corresponding to 80.5% of participants’ choices. The highest proportion of bitterness correspondences was observed for soundtrack 26 (“Intentional Evil”), with 64.4% of choices, whereas for sourness, the largest agreement was found for soundtrack 42 (“Animal Kingdom”), with 59.8%. The highest proportion of saltiness correspondences was observed for soundtrack 93 (“La Festa in Cucina”), with an agreement rate of 50.6%.

More than half of the soundtracks (n = 58) were associated with sweetness by at least 25% of participants, and 26 of these soundtracks were evaluated as sweet by more than 50% of respondents. Bitterness was associated with 43 soundtracks by at least 25% of participants, of which 11 were evaluated as bitter by more than half of the sample. Sourness was selected by more than 25% of respondents in 28 soundtracks. However, only three of these were evaluated as sour by more than half of the sample. Similarly, saltiness was associated with 38 soundtracks by more than 25% of participants. However, only one soundtrack had salty taste correspondences marginally above the 50% cut-off.
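The choice proportions and the 25% and 50% benchmarks used above can be sketched as follows. Treating "above chance" as a proportion strictly greater than 25% is an assumption, and `taste_proportions` and `summarize` are hypothetical helpers; no formal significance test is implied.

```python
from collections import Counter

TASTES = ("sweet", "bitter", "salty", "sour")
CHANCE = 1 / len(TASTES)   # 0.25 with four forced-choice options

def taste_proportions(choices: list[str]) -> dict[str, float]:
    """Proportion of participants choosing each taste for one soundtrack."""
    counts = Counter(choices)
    return {t: counts[t] / len(choices) for t in TASTES}

def summarize(choices_by_stimulus: dict[str, list[str]]) -> dict[str, dict[str, int]]:
    """For each taste, count soundtracks chosen above chance and above 50%."""
    summary = {t: {"above_chance": 0, "above_half": 0} for t in TASTES}
    for choices in choices_by_stimulus.values():
        for t, p in taste_proportions(choices).items():
            if p > CHANCE:
                summary[t]["above_chance"] += 1
            if p > 0.5:
                summary[t]["above_half"] += 1
    return summary

toy = {"a": ["sweet"] * 3 + ["bitter"], "b": ["sour", "sour", "bitter", "salty"]}
props_a = taste_proportions(toy["a"])
summary = summarize(toy)
```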

Overall, these results suggest that participants agreed more readily on sweetness than on the other basic tastes (see Table 3). Moreover, while some soundtracks led to a convergence of responses toward a single taste, others elicited more than one taste association. For instance, soundtrack 40 (“Liquid Core”) was matched equally often with bitter and sour tastes (41% each), whereas soundtrack 5 (“Not Ready to Go”) presented a bittersweet pattern of associations, with 41.2% sweet and 43.5% bitter correspondences.

Associations between evaluative dimensions

The correlations (Pearson’s r) between evaluative dimensions and the corresponding effect sizes (Cohen’s d) are presented in Table 4. To test the associations between the quantitative rating dimensions and the choice of basic tastes, four new variables were computed based on each participant’s frequency of basic taste correspondences. For instance, if a given participant categorized four of their 25 soundtracks as “sweet”, a score of four was assigned to the sweet taste variable. The same procedure was employed for bitterness, saltiness, and sourness. The associations between the four taste variables were negative and significant (all p < .004).
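
The per-participant taste variables can be built by simple counting, as sketched below under the assumption that each rated soundtrack received exactly one taste choice (consistent with the choice proportions reported earlier). Because a participant's four counts then always sum to the number of soundtracks rated (25), a higher count for one taste necessarily leaves fewer choices for the others; the negative correlations among the four variables are therefore partly expected by construction. Labels and input format are hypothetical.

```python
from collections import Counter

TASTES = ("sweet", "bitter", "salty", "sour")  # hypothetical labels


def taste_frequencies(choices):
    """Count how many of one participant's soundtracks were assigned to each taste."""
    counts = Counter(choices)
    return {t: counts[t] for t in TASTES}  # Counter yields 0 for unseen tastes


# A participant who labeled 4 of 25 soundtracks as sweet gets sweet = 4.
choices = ["sweet"] * 4 + ["bitter"] * 10 + ["salty"] * 6 + ["sour"] * 5
freqs = taste_frequencies(choices)
print(freqs)  # {'sweet': 4, 'bitter': 10, 'salty': 6, 'sour': 5}
print(sum(freqs.values()))  # 25: the four scores always add up to the number rated
```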

Several significant correlations were also found between taste and affective variables. For instance, sweetness ratings were positively correlated with joy (r = .29, d = 0.61) and negatively correlated with sadness (r = –.12, d = 0.24), fear (r = –.20, d = 0.41), and anger (r = –.21, d = 0.43). Sweetness was also positively correlated with both perceived (r = .39, d = 0.85) and felt valence (r = .31, d = 0.65), and negatively correlated with felt arousal (r = –.35, d = 0.75).
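
The d values reported alongside each correlation agree with the common conversion d = 2r / √(1 − r²); for instance, r = .29 gives d ≈ 0.61 and r = .39 gives d ≈ 0.85. Assuming this is the conversion used (the text does not name it), the sketch below reproduces several of the values above. Note that d is reported as a magnitude, so negative correlations map to positive d in the text.

```python
import math


def r_to_d(r):
    """Convert a Pearson correlation r to Cohen's d with d = 2r / sqrt(1 - r^2)."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2 * r / math.sqrt(1 - r ** 2)


# Reproduces values reported above (d is reported as a magnitude).
for r in (0.29, -0.12, 0.39, -0.35):
    print(r, round(abs(r_to_d(r)), 2))  # 0.61, 0.24, 0.85, 0.75
```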

Bitterness ratings were positively correlated with sadness (r = .15, d = 0.30), fear (r = .26, d = 0.54), and anger (r = .27, d = 0.56), and negatively correlated with joy (r = –.12, d = 0.24). Bitterness was also negatively correlated with both perceived and felt valence (both p < .001) and positively correlated with felt arousal (r = .23, d = 0.47).

Sourness ratings were negatively correlated with joy (r = –.15, d = 0.30) and perceived valence (r = –.12, d = 0.24). A significant positive correlation with felt arousal (r = .14, d = 0.28) was also observed. Saltiness ratings were only weakly related to the affective variables, the exception being a small negative correlation with perceived valence (r = –.11, d = 0.22).

All discrete emotions were interrelated, and most of these variables were also significantly associated with the valence and arousal dimensions. For instance, joy ratings were positively correlated with both valence dimensions and with perceived arousal (all p < .001), and negatively correlated with felt arousal (r = –.28, d = 0.58). Anger and fear were significantly correlated with both arousal dimensions and inversely correlated with both valence dimensions (all p < .001). Sadness presented a similar pattern, but no association with perceived valence was observed. Surprise ratings were associated with both arousal dimensions (both p < .050) but not with valence.

Associations between subjective ratings and individual differences

When comparing the ratings on the ten emotional/affective dimensions and the four taste correspondences between men and women with independent-samples t tests, no significant differences were observed, except for surprise ratings. Men provided higher mean ratings (M = 5.36, SD = 0.95) than women (M = 3.20, SD = 0.98), t(325) = 2.43, p = .016, d = 2.24 (see Note 1). Descriptive statistics (means and standard deviations) for the two groups and the mean difference test results are provided in Supplementary File 3.

Pearson’s correlations between the evaluative dimensions and age indicate a tendency for older participants to provide higher sadness (r = .25, d = 0.52) and perceived arousal ratings (r = .23, d = 0.47), and to make more saltiness (r = .14, d = 0.28) and sourness correspondences (r = .13, d = 0.26). Conversely, age was negatively associated with bitterness correspondences (r = –.17, d = 0.35). No other significant associations were observed.

The associations between subjective ratings and individual differences in self-report measures were explored in two ways. First, the correlations between preferences for basic tastes and soundtrack–taste correspondences were analyzed. Second, the associations between taste correspondences, subjective ratings, and the different dimensions of musical skills and behaviors assessed by the Gold-MSI were explored.

Overall, associations between preferences for basic tastes and soundtrack–taste correspondences were scarce: preferring foods dominated by a given basic taste (e.g., sweet-tasting foods, such as honey or sugar) was generally not associated with higher identification rates for that same taste in the auditory stimuli. There were, however, a few exceptions among bitter- and sour-likers. Participants who reported greater liking for sour foods tended to provide higher sourness ratings (r = .11, d = 0.22) and lower saltiness ratings (r = –.11, d = 0.22). Liking of bitter-tasting foods was associated with higher sourness ratings (r = .13, d = 0.26) and lower saltiness ratings (r = –.14, d = 0.28).

Individual differences in musical skills and behaviors seemed to have a small impact on the subjective evaluations of the soundtracks across the taste correspondences and affective dimensions. Overall, higher scores on the Musical Sophistication Index had small but significant associations with perceived valence ratings (r = .11, d = 0.22) and bitterness correspondences (r = .11, d = 0.22). Higher Active Engagement subscale scores were associated with higher perceived (r = .15, d = 0.30) and felt valence ratings (r = .12, d = 0.24), as well as with more sweetness correspondences (r = .15, d = 0.30). The Musical Training subscale was inversely related to sourness correspondences (r = –.12, d = 0.24), and the Singing Abilities subscale was negatively correlated with bitterness correspondences (r = –.16, d = 0.32). The Emotions subscale was not associated with any of the emotional/affective scales; however, it showed a weak positive correlation with familiarity ratings (r = .12, d = 0.24) and a weak negative correlation with sourness correspondences (r = –.11, d = 0.22).

To further understand whether musical sophistication may contribute to more reliable decoding of taste–sound correspondences, we compared the response consistency of individuals with high and low scores on the full musical sophistication index. The two groups comprised individuals above (n = 155) or below (n = 174) the median score. Agreement was estimated with Krippendorff’s alpha (Hayes & Krippendorff, 2007). The results indicated low agreement in both groups (both α < .667, the lowest acceptable value for tentative conclusions suggested by Krippendorff, 2004), with the low-sophistication group presenting lower agreement (α = .145) than the high-sophistication group (α = .181).
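
The agreement analysis can be sketched as follows. This is a minimal implementation of Krippendorff's alpha for nominal data (the taste choices), assuming each soundtrack is a unit and each participant who rated it contributes one value; soundtracks rated by fewer than two group members are skipped because they yield no pairable values. It computes α = 1 − (n − 1) · Σ_u D_u / (n² − Σ_c n_c²), where D_u is the number of mismatching ordered value pairs in unit u divided by (m_u − 1) and n_c is the number of pairable values in category c. The median split assigns ties at the median to the low group, which is an assumption (the text reports only the group sizes). Function names and the input format are hypothetical.

```python
from collections import Counter
from statistics import median


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data, allowing missing ratings.

    `units` is a list of lists: the values assigned to each unit (here, the
    tastes chosen for one soundtrack by the participants who rated it).
    Units with fewer than two values are not pairable and are skipped.
    """
    disagreement = 0.0  # sum over units of mismatching ordered pairs / (m_u - 1)
    totals = Counter()  # n_c: number of pairable values in each category
    for values in units:
        m = len(values)
        if m < 2:
            continue
        counts = Counter(values)
        mismatched_pairs = m * m - sum(c * c for c in counts.values())
        disagreement += mismatched_pairs / (m - 1)
        totals.update(counts)
    n = sum(totals.values())
    expected = n * n - sum(c * c for c in totals.values())
    if expected == 0:
        raise ValueError("alpha is undefined when fewer than two categories occur")
    return 1 - (n - 1) * disagreement / expected


def median_split(scores):
    """Split participant IDs into (high, low) sets around the median score.

    Ties at the median go to the low group (an assumption).
    """
    cut = median(scores.values())
    high = {pid for pid, score in scores.items() if score > cut}
    return high, set(scores) - high


def units_for_group(responses, group):
    """Per-soundtrack lists of taste choices made by members of `group`.

    `responses` holds (participant_id, soundtrack_id, taste) triples
    (hypothetical format).
    """
    by_track = {}
    for pid, track, taste in responses:
        if pid in group:
            by_track.setdefault(track, []).append(taste)
    return list(by_track.values())


# Toy check: three soundtracks, each rated by two participants.
alpha = krippendorff_alpha_nominal([["sweet", "sweet"], ["sweet", "bitter"], ["bitter", "bitter"]])
print(round(alpha, 3))  # 0.444
```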

Discussion

This article presents the first normative study with the Taste & Affect Music Database, which includes 100 instrumental soundtracks spanning different moods and genres. These soundtracks were evaluated for four basic taste correspondences and ten affective dimensions, including discrete emotions, familiarity, as well as perceived and felt affective dimensions (Valence and Arousal). The subjective norms data and research materials are available as supplemental material.

Notwithstanding the importance of music for several research domains, finding and selecting the most appropriate musical stimuli may prove an important methodological challenge. Several datasets of sounds (e.g., Bradley & Lang, 2007; Fan et al., 2017; Hocking et al., 2013) and music (e.g., Belfi & Kacirek, 2021; dos Santos & Silla, 2015; Eerola & Vuoskoski, 2011; Imbir & Golab, 2017; Lepping et al., 2016; Song et al., 2016; Vieillard et al., 2008) have been developed for specific stimuli categories (e.g., everyday sounds, classical music, or famous melodies) and subjective dimensions (e.g., discrete emotions, affective dimensions). However, norming studies in the auditory domain are still scarce compared to other sensory modalities, such as visual stimuli (Gerdes et al., 2014; Yang et al., 2018).

In the present study, we sought to obtain subjective norms for basic taste associations based on the literature on crossmodal taste perception. Research in this field has found interesting regularities in how individuals match tastes, flavors, or aromas with sound attributes. Moreover, this literature has shown that audition may play a role in modulating how foods and beverages are perceived (Spence et al., 2019a). As flavor refers to a panoply of combinations of gustatory and olfactory attributes, which are more commonly product-specific (i.e., the flavor lexicon may vary greatly between food categories or even between different products within the same food category; Suwonsichon, 2019), here we focused on the broader basic taste categories, namely, sweetness, bitterness, sourness, and saltiness. This stimulus set adds to the research in multisensory taste perception by testing a large set of musical stimuli regarding not only taste correspondences but also emotional and affective variables, whose relevance for the multisensory tasting experience is becoming increasingly recognized (e.g., Reinoso-Carvalho, Gunn, Horst, & Spence, 2020). Providing subjective norms for large stimulus sets also helps overcome the technical obstacles associated with stimulus development, offering a less costly and less time-consuming alternative to producing new stimuli for each experiment and allowing for greater comparability and replicability between studies (Lepping et al., 2016; Shafiro & Gygi, 2004).

Basic taste correspondences and emotional and affective dimensions

The results presented here indicate that individuals were able to associate tastes and sounds in a reliable way, even though the soundtracks were not produced to elicit taste associations, unlike the stimuli used in previous studies in the field (e.g., Wang et al., 2015). Sweetness was the most easily perceived taste, as shown by the higher mean choice proportion for this taste category, as well as the higher number of soundtracks with agreement levels above 25% and 50%. Although taste-matching accuracy rates vary among studies, easier recognition of sweetness in music excerpts has been reported previously (Knöferle et al., 2015; Wang et al., 2015; although see also Guetta & Loui, 2017; Mesz et al., 2011). These studies hypothesize that sweetness may be more readily attributed to music because of the metaphorical associations between certain sounds and the sweet attribute (at least in Western cultures), but also because people generally prefer the sweet taste and may therefore heuristically associate sweetness with sounds that are also pleasant.

Some of the soundtracks in this database showed clear patterns of association with a single taste, making them suitable for “sonic seasoning” experiments aiming at enhancing specific taste attributes in foods and drinks. Other soundtracks conveyed a combination of more than one taste, which may provide more adequate pairings for foods and drinks with more complex flavor matrices. For instance, for bittersweet foods, the effect of a highly sweet soundtrack that is low on bitterness may be different from that of a highly sweet soundtrack that is also bitter (Crisinel et al., 2012; Höchenberger & Ohla, 2019). Hence, understanding the configuration of taste correspondences may assist in better tailoring the choice of soundtracks and avoiding possible confounds between taste attributes.

Across the 100 stimuli, various patterns of taste and emotional/affective associations can also be found. The strong interrelation between taste and affect has been a thorny issue in crossmodal research, as it is often difficult to disentangle basic taste properties (e.g., sweetness) from emotional attributes (e.g., positive valence; Wang et al., 2015). Likewise, studies focusing on the modulatory effects of music varying in emotional content may benefit from knowing the extent to which the selected music pieces also communicate gustatory attributes (e.g., Kantono, Hamid, Shepherd, Yoo, Grazioli, et al., 2016). Despite the noticeable correlations between emotional/affective dimensions and basic taste correspondences in this dataset, the subjective rating norms presented here indicate that it is possible to select stimuli that evoke basic taste correspondences while controlling for relevant emotional/affective variables, and vice versa. This may help researchers avoid puzzling situations, such as when a positively valenced stimulus is perceived as sweeter than a stimulus crafted to evoke crossmodal correspondences (e.g., Reinoso-Carvalho, Gunn, Molina, et al., 2020).
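
As an illustration of selecting stimuli on one dimension while holding another roughly constant, the sketch below filters a norms table for soundtracks with strong sweetness correspondences whose perceived valence stays within a chosen band. The column names, the valence band, and the scale midpoint of 4 are hypothetical; the norms available as supplemental material may use different variable names and scales.

```python
def select_stimuli(norms, taste, min_prop, valence_key, valence_range):
    """Return norm rows with strong `taste` correspondences and valence in range.

    `norms` is a list of dicts, one per soundtrack; all column names are
    hypothetical and should be mapped onto the actual norms file.
    """
    low, high = valence_range
    picked = [
        row for row in norms
        if row[taste] >= min_prop and low <= row[valence_key] <= high
    ]
    # Strongest taste correspondence first.
    return sorted(picked, key=lambda row: row[taste], reverse=True)


norms = [
    {"id": "A", "sweet": 0.70, "valence_perceived": 6.1},
    {"id": "B", "sweet": 0.55, "valence_perceived": 4.2},
    {"id": "C", "sweet": 0.20, "valence_perceived": 4.0},
]
# Sweet soundtracks whose perceived valence stays near a hypothetical midpoint of 4.
print([row["id"] for row in select_stimuli(norms, "sweet", 0.5, "valence_perceived", (3.5, 4.5))])
# ['B']
```

Swapping the roles of the two filters (e.g., a valence band with an upper limit on a taste proportion) gives the reverse selection.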

Despite the growing awareness regarding the relationship between emotion and taste perception, these variables have seldom been tested together in a systematic way (Kantono et al., 2019; Reinoso-Carvalho, Gunn, Horst, & Spence, 2020; Xu et al., 2019). One of the goals of the present study was to examine how affective and taste perception dimensions relate when evaluated concurrently. From that perspective, several results should be highlighted. For instance, sweet taste ratings were positively associated with positive valence dimensions and the pleasant discrete emotion of joy. Conversely, bitter taste ratings were significantly associated with unpleasant affective dimensions and the emotions of anger, sadness, and fear. Previous studies found similar links between sweetness and positive valence, as well as between bitterness and negative valence (Wang et al., 2015, 2020). One possible explanation for this relationship stems from the implicit associations between tastes and hedonic outcomes. Evolutionary accounts suggest that sweetness may be innately preferred due to its presence in foods rich in carbohydrates, whereas bitterness may spark hardwired aversive reactions based on its role in signaling toxicity in foods (Beauchamp, 2016; Ventura & Mennella, 2011). These associations are also culturally disseminated through bodily metaphors linking sweetness to pleasant or nurturing affect (e.g., “love is sweet”) and bitterness and sourness to aversive emotional states (e.g., “tasting sour grapes” or “bitter with jealousy”; Chan et al., 2013). These results also seem to align with an emotion-mediation hypothesis, which posits that shared emotional connotations may help explain the links between stimuli in different sensory modalities (Aryani et al., 2020; Spence, 2020; Walker et al., 2012).
For instance, the crossmodal associations between music and colors are thought to reflect a common underlying emotional interrelation, with strong correlations between the emotional associations of music pieces and those of the colors that participants chose to match each music (Palmer et al., 2013, 2016). Similar mediation explanations have also been put forward to explain associations with other sensory modalities, such as music-odor (Levitan et al., 2015) or sound-texture associations (Spence et al., 2016).

Individual differences

Overall, individual differences, such as sex, age, taste preferences, or musical sophistication, had a small impact on taste correspondences and subjective ratings. For example, men seemed to provide higher surprise ratings. Older individuals provided higher sadness and perceived arousal ratings and made more frequent correspondences with sourness and saltiness. On the other hand, younger individuals made more frequent correspondences with bitterness. Although these differences are generally small in magnitude, future research and interventions with these stimuli should, nevertheless, consider the sociodemographic characteristics of their samples. When looking at the associations between preferences for basic tastes and correspondences for that same taste, a significant association was found only for the liking of sour-tasting foods. According to these results, preferences for the other tastes (sweetness, bitterness, and saltiness) were less consequential to the identification rates of each corresponding taste.

In this sample, only a small percentage of individuals reported having current or previous involvement with musical activities. However, quantitative differences in terms of musical sophistication (as assessed by the Gold-MSI) seemed to have a small impact on the way participants assessed the musical stimuli. Some significant associations between subscales of the Gold-MSI and the subjective ratings were observed, although no clear pattern emerged from these comparisons. One could expect higher ratings in felt affective dimensions, given that feeling moved by music is one attribute of highly sophisticated individuals (Müllensiefen et al., 2014a, 2014b). Similarly, one could expect higher consistency in sound–taste correspondences among musically sophisticated individuals. For instance, music experts are expected to have richer mental representations of audio-related information and are likely to access a broader range of music-related associations (Hauck & Hecht, 2019; Mesz et al., 2011; Talamini et al., 2022). One often-cited example involving a taste attribute is the musical term “dolce” (Italian for “sweet”), which refers to a soft, tender way of playing an instrument. The assumption that musical ability or expertise may facilitate the understanding of sound–taste mappings is also reflected in past experiments, where expert musicians have been asked to create musical improvisations to mimic basic tastes (Mesz et al., 2011) or to curate music pieces to be crossmodally congruent with wines (Spence et al., 2013).

The current findings seem to suggest that the ability to recognize affective and emotional dimensions in music is not simply a reflection of musical sophistication. Likewise, previous studies (e.g., Song et al., 2016) have reported a lack of association between subscales of the MSI and emotion ratings of musical excerpts. Notably, in the present study, we did not examine musical expertise per se, but rather a broad range of individual differences in musical behavior in a sample of the general population. When examining the consistency of sound–taste mappings among high and low scorers on the musical sophistication index, there was a tendency towards higher agreement in the high-sophistication group. However, both groups presented overall low agreement levels. Whether larger differences in agreement would emerge from a comparison of expert and non-expert groups remains an open question.

Limitations and future directions

The subjective rating norms seem to indicate a fair distribution of stimuli across most dimensions. A few exceptions were found for neutral to negative emotions, such as anger, fear, sadness, and surprise, for which few items elicited ratings in the higher range (that is, items whose lower bound of the confidence interval was above the midpoint of the rating scale). One possible explanation for this result is the differentiation between “felt” and “perceived” emotion. Although individuals may identify the dysphoric emotions conveyed in a music piece (perceived emotion), they could be less likely to report feeling angry or fearful in response to that same music (felt emotion). Anger, for instance, is usually felt in response to interpersonal situations of boundary invasion, violation of rights, being hurt, or frustration of a person’s wants and needs (Greenberg, 2002). Therefore, a strong anger reaction is unlikely to occur in response to an aesthetic stimulus such as music. Although some degree of contagion may occur between the anger conveyed by the music and the perceiver’s emotions, that relationship is not perfect (Schubert, 2013; Song et al., 2016). In fact, felt and perceived emotions may differ so sharply as to seem contradictory. For instance, listening to sad music may evoke a pleasant emotion in the listener due to its aesthetic appeal or the experience of feeling moved by the song (Eerola et al., 2016; Vuoskoski & Eerola, 2017). Sachs et al. (2015) argue that the sadness portrayed in music may be pleasurable when it is perceived as non-threatening and aesthetically pleasing, and/or when it offers psychological benefits, such as mood regulation or empathic reflection.
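
The high-range criterion can be expressed compactly. The sketch below, a hypothetical illustration, computes each item's mean rating and a 95% confidence interval and keeps items whose lower bound exceeds the scale midpoint. It uses a normal approximation (z ≈ 1.96) for simplicity; a t-based interval, which the normative analyses may have used, would be marginally wider at the 68 to 97 ratings per soundtrack collected in this study. The midpoint value passed in is an assumption, since the rating scale is not restated in this section.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_ci(ratings, level=0.95):
    """Return (mean, lower, upper) using a normal-approximation CI."""
    m = mean(ratings)
    se = stdev(ratings) / sqrt(len(ratings))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return m, m - z * se, m + z * se


def high_range_items(item_ratings, midpoint):
    """Items whose CI lower bound lies above the rating-scale midpoint."""
    return [item for item, ratings in item_ratings.items() if mean_ci(ratings)[1] > midpoint]


# Hypothetical ratings on a scale whose midpoint is assumed to be 4.
print(high_range_items({"a": [6, 7] * 10, "b": [3, 4, 5] * 10}, midpoint=4))  # ['a']
```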

Another issue regarding the emotional connotations of musical stimuli is that subjective ratings are based on between-subjects comparisons. Beyond their nomothetic conceptual connotations, stimuli are likely to evoke idiographic associations based on past experiences and individual memories, which could lead different individuals to attach different meanings to the same music piece. One example of the implications of adopting a nomothetic versus an idiographic approach can be found in the literature on self-selected musical stimuli. It has been previously shown that self-selected music may differ from experimenter-selected music in several ways. For instance, one study found that self-selected sad music seemed to trigger more complex and intense emotional expressions and stronger feelings of sadness and nostalgia (Weth et al., 2015). Salimpoor et al. (2009) also found evidence that self-selected music could allow for greater emotional contagion between perceived and felt emotion, whereas with experimenter-selected music, felt and perceived emotion were less associated.

Cultural variability in the assessment of auditory stimuli should also be taken into account when using the Taste & Affect Music Database. First, differences in the perception of musical attributes could lead to different interpretations of musical excerpts (Stevens, 2012). For instance, one study with Tunisian and French participants found that individuals synchronize differently with familiar and unfamiliar music (Drake & El Heni, 2003). When asked to tap their fingers in time with the tempo of the musical excerpts, participants did so at a slower pace when listening to music from their own culture than when listening to foreign music. Second, decoding the affective attributes of the stimuli could also be subject to cultural influence. Cultural proximity seems to allow for more accurate emotion recognition in musical stimuli, with participants from the same culture as the stimuli outperforming participants from other cultures (Argstatter, 2016; Laukka et al., 2013). However, there seems to be some degree of commonality in the way musical attributes express emotion across cultures. The accuracy of emotion identification in musical stimuli appears to be somewhat comparable to that of movement, facial, or verbal emotion expression (Argstatter, 2016; Fritz et al., 2009; Juslin & Laukka, 2003; Sievers et al., 2013). Some sonic attributes seem to facilitate cross-cultural recognition of affective connotations, at least for the most prototypical emotions. Joy, for instance, could be identified from fast tempo and melodic simplicity, whereas anger is usually attributed to louder volume and more complex melodies (Balkwill et al., 2004).

When it comes to the crossmodal correspondences between audition and taste, cross-cultural comparisons are still scarce. Since most of the existing evidence relies on research with Western samples, a recent study sought to test the “sonic seasoning” effect on the chocolate tasting experience of Asian and Latin-American participants (Reinoso-Carvalho, Gunn, Molina, et al., 2020). Although similar results were observed for these two groups of participants, the authors noted that the effects of the crossmodally corresponding music stimuli were less pronounced for Asian and Latin-American participants than previous research with Western participants would suggest. In Knöferle et al. (2015), both American and Indian participants were able to decode the basic tastes intended by the composers of the music pieces with above-chance accuracy. However, American participants seemed to perform “better” overall (that is, they were more likely to identify as sweet a music piece composed to convey sweetness).

Ngo et al. (2013) found that tasting sour juices elicited more frequent associations with low pitch and sharper speech sounds (e.g., “kiki”), while juices low in sourness were more strongly associated with high-pitched sounds and rounded speech sounds (e.g., “bouba”). This pattern of associations was observed for both Colombian and English individuals, regardless of their familiarity with the juices in question. In Peng-Li et al.'s (2020) study, Chinese and Danish individuals spent more time fixating on pictures of sweet (vs. salty) foods when listening to a “sweet” soundtrack and more time fixating on salty foods when listening to a “salty” soundtrack, regardless of culture. When asked to choose the food they would rather eat at that moment, Chinese participants chose more sweet foods in the sweet music condition (vs. no music), whereas that difference was only marginal for Danish participants. For salty foods, the opposite pattern emerged: Danish participants, but not Chinese participants, chose more salty foods in the salty music condition than in the no-music condition. Overall, findings on the universality of crossmodal correspondences across different sensory modalities have been mixed (Levitan et al., 2015), and more research is needed in the case of sound–taste correspondences. In particular, if culture-specific metaphors influence crossmodal associations, research should perhaps extend beyond broad comparisons (such as Western vs. non-Western countries) to investigate which culturally situated meanings drive sound–taste pairings.

Another question that may interest researchers is whether it is equally valid to collect data with musical stimuli online and in the laboratory. Given the growing use of the Internet in everyday life, online data collection is also becoming increasingly popular among researchers (Bohannon, 2016; Denissen et al., 2010; Palan & Schitter, 2018). In recent years, several validation studies have been conducted through web-based surveys, including stimuli in various sensory modalities, such as sounds (e.g., Belfi & Kacirek, 2021; Lassalle et al., 2019), images (e.g., Ma et al., 2020; Prada et al., 2017, 2018), and videos (e.g., Ack Baraly et al., 2020; O’Reilly et al., 2016). However, some limitations should be taken into account. In particular, the lower control over environmental conditions means that stimulus presentation may be less standardized than in laboratory settings. In the case of auditory stimuli, factors such as the properties of the playback equipment, sound presentation volume, or background noise are likely to vary across participants. In this study, we collected data both online and in the laboratory. The full comparison of the two data collection methods is provided as supplemental material (Supplementary File 2). As these results show, when the subjective ratings of participants in the lab were compared with those of a comparable sample of online respondents (balanced for gender and age), no significant differences were observed. Thus, for this stimulus set, both taste correspondences and emotional/affective ratings seem to be consistent across data collection contexts.
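
The text does not describe how the balanced online comparison sample was drawn. One common option, shown here only as a hypothetical sketch and not as the authors' procedure, is greedy one-to-one matching: each lab participant is paired with the unmatched online respondent of the same gender whose age is closest. The tuple format and function name are assumptions.

```python
def match_online_to_lab(lab, online):
    """Greedy 1:1 matching: exact gender, nearest age, without replacement.

    `lab` and `online` are lists of (participant_id, gender, age) tuples
    (hypothetical format). Lab participants are processed from youngest to
    oldest; those with no remaining same-gender candidate are left unmatched.
    """
    available = list(online)
    matches = {}
    for pid, gender, age in sorted(lab, key=lambda p: p[2]):
        candidates = [o for o in available if o[1] == gender]
        if not candidates:
            continue
        best = min(candidates, key=lambda o: abs(o[2] - age))
        matches[pid] = best[0]
        available.remove(best)  # each online respondent is used at most once
    return matches


lab = [("L1", "F", 20), ("L2", "M", 30)]
online = [("O1", "F", 22), ("O2", "F", 40), ("O3", "M", 29), ("O4", "M", 50)]
print(match_online_to_lab(lab, online))  # {'L1': 'O1', 'L2': 'O3'}
```

Greedy matching is simple but order-dependent; optimal matching would minimize the total age difference across all pairs at the cost of more computation.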

Final remarks

In this study, the soundtracks of the new Taste & Affect Music Database were shown to adequately convey different taste associations and emotional/affective connotations. To the best of our knowledge, this is the first large-scale database to support crossmodal research between audition and taste, and the results encourage its application across different experimental and intervention settings, such as in cognitive (e.g., learning, decision making), affective (e.g., mood regulation), or behavioral (e.g., eating, buying behavior) domains. In particular, the subjective norms across valence and arousal dimensions, as well as discrete emotions, are in line with previous validations of musical stimuli, thus complementing and extending the existing datasets.

As research on sound–taste associations grows, more attention is being paid to the application of a multisensory framework to modulating taste perception and changing eating habits in real-world settings. Recent evidence suggests that emotion-laden music and soundtracks evoking taste associations may shape taste perception and create more pleasant tasting experiences (e.g., Reinoso-Carvalho, Gunn, Horst, & Spence, 2020; Reinoso-Carvalho, Gunn, Molina, et al., 2020). These insights may be applied by brands interested in enhancing customers’ experiences, but also by those interested in promoting healthier eating, for instance, by enhancing perceived sweetness or saltiness in foods and drinks with reduced sugar and salt contents (Biswas & Szocs, 2019; Thomas-Danguin et al., 2019).

Notes

When comparing the mean ratings between men (n = 53) and a random subsample of 53 women, the difference in surprise ratings remains significant. Moreover, small differences were also observed in sadness ratings (p = .035) and sweetness correspondences (p = .049), with higher means being provided by men.

References

Ack Baraly, K. T., Muyingo, L., Beaudoin, C., Karami, S., Langevin, M., & Davidson, P. S. R. (2020). Database of Emotional Videos from Ottawa (DEVO). Collabra: Psychology, 6(1), 1–26. https://doi.org/10.1525/collabra.180

Ali, S. O., & Peynircioğlu, Z. F. (2010). Intensity of emotions conveyed and elicited by familiar and unfamiliar music. Music Perception, 27(3), 177–182. https://doi.org/10.1525/MP.2010.27.3.177

Argstatter, H. (2016). Perception of basic emotions in music: Culture-specific or multicultural? Psychology of Music, 44(4), 674–690. https://doi.org/10.1177/0305735615589214

Aryani, A., Isbilen, E. S., & Christiansen, M. H. (2020). Affective arousal links sound to meaning. Psychological Science, 31(8), 978–986. https://doi.org/10.1177/0956797620927967

Baker, D. J., Ventura, J., Calamia, M., Shanahan, D., & Elliott, E. M. (2020). Examining musical sophistication: A replication and theoretical commentary on the Goldsmiths Musical Sophistication Index. Musicae Scientiae, 24(4), 411–429. https://doi.org/10.1177/1029864918811879

Balkwill, L. L., Thompson, W. F., & Matsunaga, R. (2004). Recognition of emotion in Japanese, Western, and Hindustani music by Japanese listeners. Japanese Psychological Research, 46(4), 337–349. https://doi.org/10.1111/j.1468-5584.2004.00265.x

Barrett, L. F. (2009). Variety is the spice of life: A psychological construction approach to understanding variability in emotion. Cognition & Emotion, 23(7), 1284–1306. https://doi.org/10.1080/02699930902985894

Barrett, L. F. (2011). Constructing emotion. Psychological Topics, 20(3), 359–380.

Beauchamp, G. K. (2016). Why do we like sweet taste: A bitter tale? Physiology and Behavior, 164, 432–437. https://doi.org/10.1016/j.physbeh.2016.05.007

Belfi, A. M., & Kacirek, K. (2021). The famous melodies stimulus set. Behavior Research Methods, 34–48. https://doi.org/10.3758/s13428-020-01411-6

Belin, P., Fillion-Bilodeau, S., & Gosselin, F. (2008). The Montreal Affective Voices: A validated set of nonverbal affect bursts for research on auditory affective processing. Behavior Research Methods, 40(2), 531–539. https://doi.org/10.3758/BRM.40.2.531

Bertels, J., Deliens, G., Peigneux, P., & Destrebecqz, A. (2014). The Brussels Mood Inductive Audio Stories (MIAS) database. Behavior Research Methods, 46(4), 1098–1107. https://doi.org/10.3758/s13428-014-0445-3

Biswas, D., & Szocs, C. (2019). The smell of healthy choices: Cross-modal sensory compensation effects of ambient scent on food purchases. Journal of Marketing Research, 56(1), 123–141. https://doi.org/10.1177/0022243718820585

Biswas, D., Lund, K., & Szocs, C. (2019). Sounds like a healthy retail atmospheric strategy: Effects of ambient music and background noise on food sales. Journal of the Academy of Marketing Science, 47, 37–55. https://doi.org/10.1007/s11747-018-0583-8

Bohannon, J. (2016). Mechanical Turk upends social sciences. Science, 352(6291), 1263–1264. https://doi.org/10.1126/science.352.6291.1263

Bradley, M. M., & Lang, P. J. (2007). The International Affective Digitized Sounds: Affective ratings of sounds and instruction manual (Technical Report No. B-3). University of Florida, NIMH Center for the Study of Emotion and Attention.

Brattico, E., Alluri, V., Bogert, B., Jacobsen, T., Vartiainen, N., Nieminen, S., & Tervaniemi, M. (2011). A functional MRI study of happy and sad emotions in music with and without lyrics. Frontiers in Psychology, 2, 1–16. https://doi.org/10.3389/fpsyg.2011.00308

Bravo-Moncayo, L., Reinoso-Carvalho, F., & Velasco, C. (2020). The effects of noise control in coffee tasting experiences. Food Quality and Preference, 86, 104020. https://doi.org/10.1016/j.foodqual.2020.104020

Cecchini, M. P., Knaapila, A., Hoffmann, E., Boschi, F., Hummel, T., & Iannilli, E. (2019). A cross-cultural survey of umami familiarity in European countries. Food Quality and Preference, 74, 172–178. https://doi.org/10.1016/j.foodqual.2019.01.017

Chan, K. Q., Tong, E. M. W., Tan, D. H., & Koh, A. H. Q. (2013). What do love and jealousy taste like? Emotion, 13(6), 1142–1149. https://doi.org/10.1037/a0033758

Crisinel, A.-S., & Spence, C. (2009). Implicit association between basic tastes and pitch. Neuroscience Letters, 464(1), 39–42. https://doi.org/10.1016/j.neulet.2009.08.016

Crisinel, A.-S., & Spence, C. (2010a). A sweet sound? Food names reveal implicit associations between taste and pitch. Perception, 39(3), 417–425. https://doi.org/10.1068/p6574

Crisinel, A.-S., & Spence, C. (2010b). As bitter as a trombone: Synesthetic correspondences in nonsynesthetes between tastes/flavors and musical notes. Attention, Perception & Psychophysics, 72(7), 1994–2002. https://doi.org/10.3758/APP

Crisinel, A.-S., & Spence, C. (2012). The impact of pleasantness ratings on crossmodal associations between food samples and musical notes. Food Quality and Preference, 24(1), 136–140. https://doi.org/10.1016/j.foodqual.2011.10.007

Crisinel, A.-S., Cosser, S., King, S., Jones, R., Petrie, J., & Spence, C. (2012). A bittersweet symphony: Systematically modulating the taste of food by changing the sonic properties of the soundtrack playing in the background. Food Quality and Preference, 24(1), 201–204. https://doi.org/10.1016/j.foodqual.2011.08.009

De Luca, M., Campo, R., & Lee, R. (2018). Mozart or pop music? Effects of background music on wine consumers. International Journal of Wine Business Research. https://doi.org/10.1108/IJWBR-01-2018-0001

Denissen, J. J. A., Neumann, L., & Van Zalk, M. (2010). How the Internet is changing the implementation of traditional research methods, people’s daily lives, and the way in which developmental scientists conduct research. International Journal of Behavioral Development, 34(6), 564–575. https://doi.org/10.1177/0165025410383746

dos Santos, C. L., & Silla, C. N. (2015). The Latin Music Mood Database. EURASIP Journal on Audio, Speech, and Music Processing, 2015(1). https://doi.org/10.1186/s13636-015-0065-6

Drake, C., & El Heni, J. B. (2003). Synchronizing with music: Intercultural differences. Annals of the New York Academy of Sciences, 999, 429–437. https://doi.org/10.1196/annals.1284.053

Drewnowski, A. (1997). Taste preferences and food intake. Annual Review of Nutrition, 17, 237–253. https://doi.org/10.1146/annurev.nutr.17.1.237

Eerola, T., & Vuoskoski, J. K. (2011). A comparison of the discrete and dimensional models of emotion in music. Psychology of Music, 39(1), 18–49. https://doi.org/10.1177/0305735610362821

Eerola, T., Vuoskoski, J. K., & Kautiainen, H. (2016). Being moved by unfamiliar sad music is associated with high empathy. Frontiers in Psychology, 7, 1176. https://doi.org/10.3389/fpsyg.2016.01176

Ekman, P., & Cordaro, D. (2011). What is meant by calling emotions basic. Emotion Review, 3(4), 364–370. https://doi.org/10.1177/1754073911410740

Fan, J., Thorogood, M., & Pasquier, P. (2017, October). Emo-soundscapes: A dataset for soundscape emotion recognition. In 7th International Conference on Affective Computing and Intelligent Interaction (ACII), 196–201. IEEE. https://doi.org/10.1109/ACII.2017.8273600

Fritz, T., Jentschke, S., Gosselin, N., Sammler, D., Peretz, I., Turner, R., Friederici, A. D., & Koelsch, S. (2009). Universal recognition of three basic emotions in music. Current Biology, 19(7), 573–576. https://doi.org/10.1016/j.cub.2009.02.058

Garcia-Marques, T. (2004). Mensuração variável “Estado de Espírito” na população Portuguesa [Measuring the “mood” variable in the Portuguese population]. Laboratório de Psicologia, 2(1), 77–94.

Garrido, M. V., & Prada, M. (2017). KDEF-PT: Valence, emotional intensity, familiarity and attractiveness ratings of angry, neutral, and happy faces. Frontiers in Psychology, 8, 2181. https://doi.org/10.3389/fpsyg.2017.02181

Garrido, M. V., Lopes, D., Prada, M., Rodrigues, D., Jerónimo, R., & Mourão, R. P. (2017). The many faces of a face: Comparing stills and videos of facial expressions in eight dimensions (SAVE database). Behavior Research Methods, 49(4), 1343–1360. https://doi.org/10.3758/s13428-016-0790-5

Gerdes, A. B. M., Wieser, M. J., & Alpers, G. W. (2014). Emotional pictures and sounds: A review of multimodal interactions of emotion cues in multiple domains. Frontiers in Psychology, 5, 1–13. https://doi.org/10.3389/fpsyg.2014.01351

Greenberg, L. S. (2002). Emotion-focused therapy: Coaching clients to work through their feelings. American Psychological Association.

Guetta, R., & Loui, P. (2017). When music is salty: The crossmodal associations between sound and taste. PLoS ONE, 12(3), 1–14. https://doi.org/10.1371/journal.pone.0173366

Hauck, P., & Hecht, H. (2019). Having a drink with Tchaikovsky: The crossmodal influence of background music on the taste of beverages. Multisensory Research, 32(1), 1–24. https://doi.org/10.1163/22134808-20181321

Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77–89. https://doi.org/10.1080/19312450709336664

Höchenberger, R., & Ohla, K. (2019). A bittersweet symphony: Evidence for taste–sound correspondences without effects on taste quality-specific perception. Journal of Neuroscience Research, 97(3), 267–275. https://doi.org/10.1002/jnr.24308

Hocking, J., Dzafic, I., Kazovsky, M., & Copland, D. A. (2013). NESSTI: Norms for Environmental Sound Stimuli. PLoS ONE, 8(9), e73382. https://doi.org/10.1371/journal.pone.0073382

Hutchinson, J. C., Jones, L., Vitti, S. N., Moore, A., Dalton, P. C., & O’Neil, B. J. (2018). The influence of self-selected music on affect-regulated exercise intensity and remembered pleasure during treadmill running. Sport, Exercise, and Performance Psychology, 7(1), 80–92. https://doi.org/10.1037/spy0000115

Hynes, N., & Manson, S. (2016). The sound of silence: Why music in supermarkets is just a distraction. Journal of Retailing and Consumer Services, 28, 171–178. https://doi.org/10.1016/j.jretconser.2015.10.001

Imbir, K., & Golab, M. (2017). Affective reactions to music: Norms for 120 excerpts of modern and classical music. Psychology of Music, 45(3), 432–449. https://doi.org/10.1177/0305735616671587

Janata, P., Tomic, S. T., & Rakowski, S. K. (2007). Characterisation of music-evoked autobiographical memories. Memory, 15(8), 845–860. https://doi.org/10.1080/09658210701734593

Jilani, H., Pohlabeln, H., De Henauw, S., Eiben, G., Hunsberger, M., Molnar, D., Moreno, L. A., Pala, V., Russo, P., Solea, A., Veidebaum, T., Ahrens, W., & Hebestreit, A. (2019). Relative validity of a food and beverage preference questionnaire to characterize taste phenotypes in children adolescents and adults. Nutrients, 11, 1453. https://doi.org/10.3390/nu11071453

Juslin, P. N. (2013). What does music express? Basic emotions and beyond. Frontiers in Psychology, 4, 1–14. https://doi.org/10.3389/fpsyg.2013.00596

Juslin, P. N., & Laukka, P. (2003). Communication of emotions in vocal expression and music performance: Different channels, same code? Psychological Bulletin, 129(5), 770–814. https://doi.org/10.1037/0033-2909.129.5.770

Kaminski, L. C., Henderson, S. A., & Drewnowski, A. (2000). Young women’s food preferences and taste responsiveness to 6-n-propylthiouracil (PROP). Physiology and Behavior, 68, 691–697. https://doi.org/10.1016/S0031-9384(99)00240-1

Kämpfe, J., Sedlmeier, P., & Renkewitz, F. (2011). The impact of background music on adult listeners: A meta-analysis. Psychology of Music, 39(4), 424–448. https://doi.org/10.1177/0305735610376261

Kantono, K., Hamid, N., Shepherd, D., Yoo, M. J. Y., Carr, B. T., & Grazioli, G. (2016). The effect of background music on food pleasantness ratings. Psychology of Music, 44(5), 1111–1125. https://doi.org/10.1177/0305735615613149

Kantono, K., Hamid, N., Shepherd, D., Yoo, M. J. Y., Grazioli, G., & Carr, B. T. (2016). Listening to music can influence hedonic and sensory perceptions of gelati. Appetite, 100, 244–255. https://doi.org/10.1016/j.appet.2016.02.143

Kantono, K., Hamid, N., Shepherd, D., Lin, Y. H. T., Skiredj, S., & Carr, B. T. (2019). Emotional and electrophysiological measures correlate to flavour perception in the presence of music. Physiology and Behavior, 199, 154–164. https://doi.org/10.1016/j.physbeh.2018.11.012

Keskitalo, K., Knaapila, A., Kallela, M., Palotie, A., Wessman, M., Sammalisto, S., Peltonen, L., Tuorila, H., & Perola, M. (2007a). Sweet taste preferences are partly genetically determined: Identification of a trait locus on chromosome 16. American Journal of Clinical Nutrition, 86, 55–63. https://doi.org/10.1093/ajcn/86.1.55

Keskitalo, K., Tuorila, H., Spector, T. D., Cherkas, L. F., Knaapila, A., Silventoinen, K., & Perola, M. (2007b). Same genetic components underlie different measures of sweet taste preference. American Journal of Clinical Nutrition, 86, 1663–1669. https://doi.org/10.1093/ajcn/86.5.1663

Knöferle, K. M., Woods, A., Käppler, F., & Spence, C. (2015). That sounds sweet: Using cross-modal correspondences to communicate gustatory attributes. Psychology and Marketing, 32(1), 107–120. https://doi.org/10.1002/mar.20766

Knöferle, K. M., Paus, V. C., & Vossen, A. (2017). An upbeat crowd: Fast in-store music alleviates negative effects of high social density on customers’ spending. Journal of Retailing, 93(4), 541–549. https://doi.org/10.1016/j.jretai.2017.06.004

Kontukoski, M., Luomala, H., Mesz, B., Sigman, M., Trevisan, M., Rotola-Pukkila, M., & Hopia, A. I. (2015). Sweet and sour: Music and taste associations. Nutrition & Food Science, 45(3), 357–376. https://doi.org/10.1108/NFS-01-2015-0005

Krippendorff, K. (2004). Content analysis: An introduction to its methodology (2nd ed.). SAGE Publications.

Krumhansl, C. L. (2002). Music: A link between cognition and emotion. Current Directions in Psychological Science, 11(2), 45–50. https://doi.org/10.1111/1467-8721.00165

Landay, K., & Harms, P. D. (2019). Whistle while you work? A review of the effects of music in the workplace. Human Resource Management Review, 29(3), 371–385. https://doi.org/10.1016/j.hrmr.2018.06.003

Lassalle, A., Pigat, D., O’Reilly, H., Berggren, S., Fridenson-Hayo, S., Tal, S., Elfström, S., Råde, A., Golan, O., Bölte, S., Baron-Cohen, S., & Lundqvist, D. (2019). The EU-Emotion Voice Database. Behavior Research Methods, 51, 493–506. https://doi.org/10.3758/s13428-018-1048-1

Laukka, P., Eerola, T., Thingujam, N. S., Yamasaki, T., & Beller, G. (2013). Universal and culture-specific factors in the recognition and performance of musical affect expressions. Emotion, 13(3), 434–449. https://doi.org/10.1037/a0031388

Lench, H. C., Flores, S. A., & Bench, S. W. (2011). Discrete emotions predict changes in cognition, judgment, experience, behavior, and physiology: A meta-analysis of experimental emotion elicitations. Psychological Bulletin, 137(5), 834–855. https://doi.org/10.1037/a0024244

Lepping, R. J., Atchley, R. A., & Savage, C. R. (2016). Development of a validated emotionally provocative musical stimulus set for research. Psychology of Music, 44(5), 1012–1028. https://doi.org/10.1177/0305735615604509

Levitan, C. A., Charney, S. A., Schloss, K. B., & Palmer, S. E. (2015). The smell of jazz: Crossmodal correspondences between music, odor, and emotion. CogSci, 1, 1326–1331.

Lima, C. F., Correia, A. I., Müllensiefen, D., & Castro, S. L. (2020). Goldsmiths Musical Sophistication Index (Gold-MSI): Portuguese version and associations with socio-demographic factors, personality and music preferences. Psychology of Music, 48(3), 376–388. https://doi.org/10.1177/0305735618801997

Lindquist, K. A., Siegel, E. H., Quigley, K. S., & Barrett, L. F. (2013). The hundred-year emotion war: Are emotions natural kinds or psychological constructions? Comment on Lench, Flores, and Bench (2011). Psychological Bulletin, 139(1), 255–263. https://doi.org/10.1037/a0029038

Ma, D. S., Kantner, J., & Wittenbrink, B. (2020). Chicago Face Database: Multiracial expansion. Behavior Research Methods. https://doi.org/10.3758/s13428-020-01482-5

Mathiesen, S. L., Mielby, L. A., Byrne, D. V., & Wang, Q. J. (2020). Music to eat by: A systematic investigation of the relative importance of tempo and articulation on eating time. Appetite, 155, 1–10. https://doi.org/10.1016/j.appet.2020.104801

McElrea, H., & Standing, L. (1992). Fast music causes fast drinking. Perceptual and Motor Skills, 75(2), 362. https://doi.org/10.2466/pms.1992.75.2.362

Meier, B. P., Moeller, S. K., Riemer-Peltz, M., & Robinson, M. D. (2012). Sweet taste preferences and experiences predict prosocial inferences, personalities, and behaviors. Journal of Personality and Social Psychology, 102(1), 163–174. https://doi.org/10.1037/a0025253

Mesz, B., Trevisan, M. A., & Sigman, M. (2011). The taste of music. Perception, 40(2), 209–219. https://doi.org/10.1068/p6801

Mesz, B., Sigman, M., & Trevisan, M. A. (2012). A composition algorithm based on crossmodal taste-music correspondences. Frontiers in Human Neuroscience, 6. https://doi.org/10.3389/fnhum.2012.00071

Michel, A., Baumann, C., & Gayer, L. (2017). Thank you for the music – or not? The effects of in-store music in service settings. Journal of Retailing and Consumer Services, 36, 21–32. https://doi.org/10.1016/j.jretconser.2016.12.008

Millet, B., Ahn, S., & Chattah, J. (2019). The impact of music on vehicular performance: A meta-analysis. Transportation Research Part F: Traffic Psychology and Behaviour, 60, 743–760. https://doi.org/10.1016/j.trf.2018.10.007

Mohn, C., Argstatter, H., & Wilker, F. W. (2011). Perception of six basic emotions in music. Psychology of Music, 39(4), 503–517. https://doi.org/10.1177/0305735610378183

Mori, K., & Iwanaga, M. (2014). Pleasure generated by sadness: Effect of sad lyrics on the emotions induced by happy music. Psychology of Music, 42(5), 643–652. https://doi.org/10.1177/0305735613483667