Abstract

After obtaining a sample of published, peer-reviewed articles from journals with high and low impact factors in social, cognitive, neuro-, developmental, and clinical psychology, we used a priori equations recently derived by Trafimow (Educational and Psychological Measurement, 77, 831–854, 2017; Trafimow & MacDonald in Educational and Psychological Measurement, 77, 204–219, 2017) to compute the articles’ median levels of precision. Our findings indicate that developmental research performs best with respect to precision, whereas cognitive research performs the worst; however, none of the psychology subfields excelled. In addition, we found important differences in precision between journals in the upper versus lower echelons with respect to impact factors in cognitive, neuro-, and clinical psychology, whereas the difference was dramatically attenuated for social and developmental psychology. Implications are discussed.

The importance of precision in the history of science is difficult to overestimate. As a famous example in chemistry, Lavoisier’s advances in the precision of measurement helped overturn the phlogiston theory that had dominated for approximately a century (see Asimov, 1965, and Trafimow & Rice, 2009, for accessible descriptions). Advances in measurement precision have also enabled important developments in physics, genetics, astronomy, and many other fields. Psychology has benefited, too. For example, advances in the measurement of psychological constructs, including ways to increase measurement reliability (thereby also increasing measurement validity), have importantly influenced many areas in psychology. There also have been advances in psychological measurement pertaining directly to validity, rather than being mediated through reliability. It is not too dramatic, for example, to credit measurement advances by Fishbein (e.g., Fishbein & Ajzen, 1975) for rescuing social psychology from the crisis of a lack of attitude–behavior correlations approximately half a century ago (Wicker, 1969). Yet, measurement precision is not the only kind of precision with which psychologists need to be concerned.

In addition to measurement precision, there also is the issue of sampling precision (Trafimow, 2018b). That is, under the usual assumptions of random and independent sampling from a population, we might ask: How well do our summary statistics—such as the sample means that psychology researchers typically compute—represent the populations they allegedly estimate? As with measurement precision, the importance of sampling precision with respect to means in the history of science is difficult to overestimate. For example, Stigler (1986) provided a compelling description of how astronomers learned to take multiple readings from their telescopes because they came to realize that the mean of many readings is superior to having only one reading. The astronomy case is particularly interesting, because it may have been the first time that scientists consciously took advantage of the ability of increased sample sizes to increase the precision of means. Porter’s (1986) excellent review reveals how, particularly through the work of Quetelet (1796–1874) in the 19th century, scientists have increasingly appreciated the relevance of the law of errors to their research.

A well-known and important implication of the law of errors is that as sample sizes increase, sampling precision also increases. In turn, as sampling precision increases, so does the probability of replication (Trafimow, 2018a). In the present environment, where there is much concern about replication in the sciences, especially in psychology (Open Science Collaboration, 2015), the importance of sampling precision should be particularly salient. In addition, as sampling precision increases, sample statistics more accurately reflect the corresponding population parameters they are used to estimate. Thus, our focus here is on sampling precision in psychology. To put our concern in the form of questions: Does psychology research have adequate sampling precision? Does sampling precision vary across areas in psychology? And does sampling precision vary depending on whether articles are sampled from journals in the upper or lower echelon with respect to impact factor?

The a priori procedure

To answer the foregoing questions, we used Trafimow’s a priori procedure (Trafimow, 2017, 2018a; Trafimow & MacDonald, 2017; Trafimow, Wang, & Wang, 2018a, 2018b), which allows researchers to estimate sampling precision regardless of the results of the study. Because this procedure is relatively new, and consequently less familiar than other procedures, this section is devoted to explaining it.

On the basis of the assumption that researchers wish to be confident that their sample statistics are close to their corresponding population parameters, Trafimow (2017) suggested that researchers could ask two questions pertaining to the sample size needed for researchers to be confident of being close:

- How close is close?
- How confident is confident?

The issue is for researchers to decide how close they want their sample statistics to be to their corresponding population parameters and what probability (confidence) they wish to have of being that close. Although the a priori procedure is not limited to means or to normal distributions (Trafimow, Wang, & Wang, 2018a, 2018b), since researchers typically use means and assume normal distributions when performing inferential statistics pertaining to means, the present research followed suit.

Trafimow (2017) provided an accessible derivation of Eq. 1 below, where the necessary sample size n to meet specifications is a function of the fraction, f, of a standard deviation within which the researcher wishes the sample mean to be of the population mean, as well as the z-score ZC that corresponds to the degree of confidence desired of being within the prescribed distance.Footnote 1

\( n={\left(\frac{Z_C}{f}\right)}^2 \) (1)

For example, suppose the researcher wishes to have what Trafimow (2018a) characterized as “excellent” precision, under 95% confidence, that the sample mean to be obtained would be within one-tenth of a standard deviation of the population mean. The z-score that corresponds to 95% confidence is 1.96, so 1.96 and .1 can be instantiated into Eq. 1 for ZC and f, respectively: \( n={\left(\frac{1.96}{.1}\right)}^2=384.16 \). Rounding upward to the nearest whole number, then, implies that the researcher would need to collect 385 participants to meet the specifications for closeness and confidence. Alternatively, Eq. 1 can be used in a posteriori fashion to estimate the precision of a study that has already been run. For example, suppose that the researcher collected 100 participants and wished to estimate precision, using the typical 95% standard for confidence. In that case, the precision could be computed as follows: \( f=\frac{1.96}{\sqrt{100}}=.196 \).
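As a minimal sketch of how Eq. 1 can be used in both directions, the Python snippet below computes the sample size needed for a desired precision and, conversely, the precision implied by a sample size already collected. The function names `z_for_confidence`, `required_n`, and `precision` are illustrative and do not come from the original articles; the z-score is taken from the standard library's normal distribution (about 1.96 for 95% confidence) rather than typed in by hand.

```python
import math
from statistics import NormalDist


def z_for_confidence(confidence: float = 0.95) -> float:
    """Two-sided z-score Z_C for the desired confidence level."""
    return NormalDist().inv_cdf((1.0 + confidence) / 2.0)


def required_n(f: float, confidence: float = 0.95) -> int:
    """A priori use of Eq. 1: smallest whole n that achieves precision f."""
    return math.ceil((z_for_confidence(confidence) / f) ** 2)


def precision(n: int, confidence: float = 0.95) -> float:
    """A posteriori use of Eq. 1: precision f implied by sample size n."""
    return z_for_confidence(confidence) / math.sqrt(n)


print(required_n(0.1))            # sample size for f = .1 at 95% confidence
print(round(precision(100), 3))   # precision for n = 100 at 95% confidence
```

With the default 95% confidence, the two calls correspond to the 385 participants and the precision of .196 in the examples above.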

Before continuing, it is useful to draw a contrast between the a priori procedure and other frequentist methods for using intervals. Typically, the standard deviation is an important factor that influences frequentist intervals, where the sample standard deviation is used to estimate the population standard deviation, thereby enabling interval computations to proceed. In a priori equations, however, the standard deviation cancels out, so there is no need to estimate it with the sample data (see Trafimow, 2017, for a proof). Rather, the interval is expressed in terms of standard deviation units, that is, in fractions of a standard deviation. An advantage of using standard deviation units is that the standard deviation does not need to be known or estimated, which allows the calculations to be made before any data are acquired. Another advantage is that studies with very different standard deviations nevertheless can be compared or contrasted in an a posteriori fashion, in standard deviation units, as will be accomplished here. (A possible disadvantage is that there is no mechanism for including prior knowledge in the calculations, though this is unimportant for the present purposes.) We emphasize that according to the a priori procedure, precision is a function of the study procedure rather than of how the data turn out.

Trafimow and MacDonald (2017) expanded the a priori procedure to apply to the means of as many groups as the researcher wishes, that is, k groups. Assuming equal sample sizes per condition, which is convenient for the present purposes, since not all researchers specify exactly the number of participants in each condition, Trafimow and MacDonald derived Eq. 2, which relates to Eq. 1 but works for k groups, as opposed to only one group.Footnote 2

\( n={\left(\frac{\Phi^{-1}\left(\frac{\sqrt[k]{p\left(k\ \mathrm{means}\right)}+1}{2}\right)}{f}\right)}^2 \) (2)

In Eq. 2, p(k means) refers to the probability that the means in all groups are within the desired distances of their population means, and Φ refers to the cumulative distribution function of the normal distribution.
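One way to see where the k-th root in Eq. 2 comes from is sketched below. The sketch assumes that the k groups are sampled independently, so that the joint probability is the product of the per-group probabilities, and that each group has n participants. It is an illustration, not a reproduction of the published derivation. For a single group, the probability that the sample mean falls within f standard deviations of the population mean is \( 2\Phi\left(f\sqrt{n}\right)-1 \), so:

\[
\begin{aligned}
p\left(k\ \mathrm{means}\right) &= {\left[2\Phi\left(f\sqrt{n}\right)-1\right]}^k \\
\Phi\left(f\sqrt{n}\right) &= \frac{\sqrt[k]{p\left(k\ \mathrm{means}\right)}+1}{2} \\
n &= {\left(\frac{\Phi^{-1}\left(\frac{\sqrt[k]{p\left(k\ \mathrm{means}\right)}+1}{2}\right)}{f}\right)}^2
\end{aligned}
\]

Setting k = 1 and p = .95 gives \( \Phi^{-1}(.975)=1.96 \), which recovers Eq. 1.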

How the a priori procedure differs from traditional power analysis

Others have analyzed published articles from the point of view of power analysis (e.g., Fraley & Vazire, 2014), and the proposed contribution may seem similar. But this is not so, because the a priori procedure differs strongly from power analysis, as the present section will clarify.

To see the differences, it is useful to commence by considering that power analysis and the a priori procedure have very different goals. The goal of power analysis is to find the sample size necessary to have a good chance of obtaining a statistically significant p value, whereas the goal of the a priori procedure is to find the sample size necessary to be confident that one’s sample statistics are close to the population parameters of interest. To see the difference qualitatively, imagine that the expected effect size is extremely large, or near 0. If the expected effect size is extremely large, a power analysis would indicate that only a small sample size is needed in order to have a good chance of obtaining a statistically significant p value. In contrast, if the expected effect size is near 0, a power analysis would indicate that a huge sample size would be needed to have a good chance of obtaining a statistically significant p value. In opposition to this contrast, the a priori procedure does not care what the expected effect size is. What matters is obtaining sample statistics (e.g., sample means) that are close to the corresponding population parameters (e.g., population means). Thus, according to the a priori procedure, the necessary sample size has no dependence whatsoever on the expected effect size.

The foregoing paragraph shows that power analysis is sensitive to the expected effect size, whereas the a priori procedure is not. Another qualitative difference is that the a priori procedure is sensitive to the desired closeness of the sample means to the population means, whereas power analysis is not, though we hasten to add that power analysis is sensitive to the threshold for statistical significance (e.g., .05, .01, etc.), whereas the a priori procedure is not. This last occurs because the a priori procedure does not imply the performance of an eventual significance test.

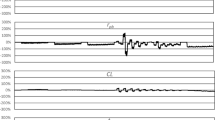

The strong qualitative differences imply strong quantitative differences, too. Imagine a study concerning a single mean, based on a sample size of 50, where the expected effect size varies between .1 and .7, keeping the threshold for statistical significance at the usual .05 level (with confidence for the a priori procedure at the usual .95 level). How does the power vary as the expected effect size varies? Figure 1 illustrates the dramatic effect that the expected effect size (along the horizontal axis) has on power (along the vertical axis); the power increases from .109 to .999 as the effect size increases from .1 to .7.Footnote 3 Note that the precision in this scenario, according to the a priori procedure, always equals \( \frac{1.96}{\sqrt{50}}=.28 \), no matter the expected effect size.

Another way to see a quantitative difference between the two procedures is to consider the sample size needed to reach arbitrary levels of precision. Continuing with the simple case of a single mean, imagine that the desired level of precision varies from .1 to .7 (remember that a smaller number implies better precision). As Fig. 2 illustrates, the necessary sample size decreases from 384.16 to 7.84 along the vertical axis as the desired level of precision becomes increasingly poor, from .1 to .7 along the horizontal axis. Note that the required sample size for any desired level of power in this scenario remains the same, no matter the desired level of precision.
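The two contrasts above can be reproduced numerically with a short sketch. It assumes a two-sided, one-sample z-test (a normal approximation) for the power calculation; the article does not state which power formula produced Fig. 1, but this approximation gives values that round to the reported .109 and .999. The function names are illustrative.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power of a two-sided one-sample z-test (normal approximation)."""
    z_crit = STD_NORMAL.inv_cdf(1.0 - alpha / 2.0)
    shift = effect_size * math.sqrt(n)
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


def precision(n: int, confidence: float = 0.95) -> float:
    """A posteriori precision f from Eq. 1."""
    return STD_NORMAL.inv_cdf((1.0 + confidence) / 2.0) / math.sqrt(n)


def required_n(f: float, confidence: float = 0.95) -> float:
    """Unrounded sample size from Eq. 1 for a desired precision f."""
    return (STD_NORMAL.inv_cdf((1.0 + confidence) / 2.0) / f) ** 2


values = [round(0.1 * i, 1) for i in range(1, 8)]  # .1, .2, ..., .7

# First contrast: power changes with the expected effect size; precision does not.
for d in values:
    print(f"d={d}: power={z_test_power(d, 50):.3f}, precision={precision(50):.2f}")

# Second contrast: the required n changes with the desired precision only.
for f in values:
    print(f"f={f}: n={required_n(f):.2f}")
```

Because the sketch uses the unrounded z-score (about 1.95996) rather than 1.96, the first value in the second loop comes out marginally below the 384.16 quoted above.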

Yet another way to see a difference between the two procedures is to consider the role played by the standard deviation. In the case of power analysis, keeping the mean (in a one-sample experiment) or the difference in means (in a two-sample experiment) constant, the standard deviation has a strong effect on power. As the standard deviation increases, the effect size decreases, so power likewise decreases. In contrast, because precision is defined in standard deviation units in a priori calculations, increasing the standard deviation does not influence precision.Footnote 4 To avoid overkill, we do not provide a figure.

For a dramatic conclusion to this section, imagine a one-sample experiment in which Researcher A can afford to recruit 13 participants. Fortunately, this researcher expects an effect size of .8, and this is barely sufficient for 80% power (the exact power level is 82.2%). According to the received view, this would be satisfactory. In contrast, Researcher B uses the a priori procedure to calculate that the precision is .54, which is much worse than Trafimow’s (2018a) criterion of .4 for “poor precision.” Thus, where Researcher A is satisfied with the experiment because it meets the traditional power requirement, Researcher B is not, because she knows that the precision with which the sample mean estimates the corresponding population mean is terrible. Thus, although power analysis works well for improving null hypothesis significance tests, it is not useful for ensuring that sample means are close to the corresponding population means they are used to estimate. The a priori procedure is necessary for accomplishing that goal.
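The two researchers' numbers can be checked by hand. The power line below assumes a two-sided z-test approximation at the .05 level, ignoring the negligible lower tail. This is an assumption of the sketch, since the article does not state the power formula, but it reproduces the reported 82.2%. The precision line is Eq. 1 solved for f.

\[
\begin{aligned}
\mathrm{power} &\approx \Phi\left(.8\sqrt{13}-1.96\right)=\Phi\left(.924\right)\approx .822 \\
f &= \frac{1.96}{\sqrt{13}}\approx .54
\end{aligned}
\]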

Present goal

If we use the standard 95% probability for the remainder of this article, Eq. 2 reduces to the following: \( f=\left(\frac{\Phi^{-1}\left(\frac{\sqrt[k]{.95}+1}{2}\right)}{\sqrt{n}}\right) \). In other words, if one knows the number of groups in an experiment and the total sample size and is willing to divide the total sample size by the number of groups in order to approximate n, that person can estimate the precision under the stricture of 95% confidence. Or, if there are multiple experiments, one can include all the groups across all the experiments for k, to arrive at an article-wise estimate of precision. This is the practice followed here.Footnote 5
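A minimal sketch of the article-wise calculation described above, assuming each experiment is summarized by its total sample size and its number of groups. The data layout (a list of `(total_n, groups)` pairs) and the function name `article_precision` are hypothetical. Pooling the sample sizes across experiments before dividing by k is one reading of the description, not a confirmed detail of the procedure used here.

```python
import math
from statistics import NormalDist


def article_precision(experiments, confidence=0.95):
    """Article-wise precision f, approximating equal n per group.

    experiments: iterable of (total_n, groups) pairs, one per experiment.
    """
    experiments = list(experiments)
    total_n = sum(n for n, _ in experiments)
    k = sum(groups for _, groups in experiments)  # all groups in the article
    n_per_group = total_n / k                     # approximate n per group
    # Per-group cumulative probability needed so that all k means are
    # jointly within f standard deviations with the stated confidence.
    per_group = (confidence ** (1.0 / k) + 1.0) / 2.0
    return NormalDist().inv_cdf(per_group) / math.sqrt(n_per_group)


# Hypothetical article: two experiments with 2 and 3 groups, respectively.
print(round(article_precision([(80, 2), (150, 3)]), 2))
```

With a single group the function reduces to Eq. 1. Adding groups at a fixed total sample size worsens (increases) f, both because n per group shrinks and because the joint confidence requirement tightens.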

Using the a priori procedure in a posteriori fashion provided the opportunity to investigate the conditions under which psychology experiments in the literature have been performed. Specifically, we randomly chose articles in upper- and lower-tier journals, in social, cognitive, neuro-, developmental, and clinical psychology, to investigate their article-wise levels of sampling precision and to address our questions.

Method

In each subfield of psychology, we selected three journals in what might be considered relatively upper or lower tiers. We used the scientific journal ranking, or impact factor, for the year 2015 according to Thomson Reuters’ annual Journal Citation Reports. Thirty journals were represented (e.g., Top Social, Bottom Social, etc.); see Table 1.Footnote 6 The journal articles were selected using a random-digit table (Dowdy, Wearden, & Chilko, 2004).Footnote 7 Selected articles had to meet the additional criteria of using exclusively between-participants designs, reporting group means, and being published in 2015. The number of studies, groups per study, sample size per study, and total sample size across studies were obtained for each article.

Results

Because the distributions of precision values were skewed, it made more sense to use median than mean precision values.Footnote 8 Table 2 and Fig. 3 illustrate the differences in median precision, for the upper- and lower-echelon journals with respect to the impact factors of the journals in different subfields of psychology. Table 2 also gives the precision standard deviations. Developmental and social psychology did relatively well in comparison to cognitive and neuropsychology, with cognitive psychology exhibiting particularly extreme imprecision in lower-echelon journals. Clinical psychology was mixed in terms of precision, because upper-echelon clinical psychology journals exhibited precision near that of social and developmental psychology, whereas lower-echelon clinical psychology journals exhibited imprecision in the ballpark of cognitive and neuropsychology. Finally, there is an interesting effect whereby lower-echelon neuropsychology journal articles exhibited precision superior to those in the upper echelon.

Discussion

Figure 3 illustrates the median precision levels of the different areas in psychology; but how should these be evaluated, in both relative and absolute terms? In relative terms, the data are reasonably clear. Developmental and social psychology perform relatively well; cognitive and neuropsychology perform relatively poorly; and clinical psychology performs relatively well or poorly, depending on whether researchers consider articles in upper- or lower-echelon journals, respectively.

In absolute terms, however, it is not even clear that developmental and social psychology perform all that well. The median precision levels were .23 and .29, respectively. If we take .1 or less as “excellent” precision (Trafimow, 2018a), even developmental and social psychology are quite far from the goal. Of course, such designations are arbitrary, and perhaps should not be taken too seriously, but our best guess is that few researchers would be happy with median precision levels exceeding .2. And with median precision levels in cognitive and neuropsychology exceeding .4 in upper-echelon journals, there is much room for improvement.

Why should we care about sampling precision?

An obvious way to avoid having what some might consider to be the negative implications of Table 2 and Fig. 3 would be to question the importance of sampling precision for psychology research. After all, the argument might commence, researchers are interested in testing empirical hypotheses, in the interest of confirming or disconfirming the theories from which they are derived. Consequently, researchers do not, and should not, care about the sampling precision of the means they obtain. Rather, they should care about whether the hypothesized differences between means are statistically significant or not, in the interest of determining whether the hypothesized effect is “there” or “not there.”

This objection can be addressed in several ways. The first way is mathematical. That is, under the assumption of normality and assuming equal sample sizes for two groups, it works out that more participants are needed in order to achieve the same level of precision for a difference between means than for particular means.Footnote 9 Thus, if one sees Table 2 and Fig. 3 as pessimistic, the pessimism remains even if there is a switch to a focus on differences between means.
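A brief sketch of why this holds, under assumptions added here for illustration: the two groups are independent, they share a common standard deviation σ, each has n participants, and precision is again expressed as a fraction f of σ. The variance of a difference between two sample means is the sum of the two variances, so:

\[
\begin{aligned}
\operatorname{Var}\left(\bar{X}_1-\bar{X}_2\right) &= \frac{\sigma^2}{n}+\frac{\sigma^2}{n}=\frac{2\sigma^2}{n} \\
f\sigma &= Z_C\,\sigma\sqrt{\frac{2}{n}}\quad\Longrightarrow\quad n=2{\left(\frac{Z_C}{f}\right)}^2
\end{aligned}
\]

Under these assumptions, each group needs twice the sample size that Eq. 1 gives for a single mean. For f = .1 at 95% confidence, that is 2 × 384.16 = 768.32, or 769 participants per group. The derivation in the footnote may be framed differently.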

Although the mathematical argument is sufficient, this seems a good opportunity to address what can be considered a poor philosophy that underlies the objection. First, from a statistical perspective, an argument can be made that researchers make a huge mistake by dichotomous thinking in terms of whether an effect “is there” or “is not there.” It is unlikely, in the extreme, that the population effect size is exactly zero. After all, with an infinitude of possible values, it is extremely unlikely that many studies would adhere exactly to any single effect size value. Thus, it is tantamount to certainty that there is an effect, which renders the question of whether there is an effect or not moot. Rather, the important issue concerns the size of the effect. Is the effect size and direction consonant or dissonant with a theory, does the effect size suggest or fail to suggest effective applications, does the effect size support or not support the validity of new methods, etc.?Footnote 10

More generally, from the philosophy of science there is the issue of the importance of establishing the facts. There are many different philosophies of science, emphasizing verification (e.g., Hempel, 1965), falsification (e.g., Popper, 1959), abduction (e.g., Haig, 2014), and many other concerns. These different philosophies assert that there are different ideal orders of consideration of what the facts are and of theory development, as well as different sorts of relations between facts and theories. But all of these philosophies have in common that, at some point in the scientific process, it is necessary to determine what the facts are. Admitting, then, that under most respectable philosophical perspectives the facts matter at some point, researchers ought to care about the facts! And if the facts matter, it seems difficult to avoid the implication that knowing the facts as precisely as possible matters, too, as exemplified by Lavoisier’s disconfirmation of phlogiston theory, with which the present article commenced. Although it is arguable whether researchers should depend as much on means as they do (Speelman & McGann, 2016; Trafimow et al., 2018), given that dependence on means, the desirability of obtaining means with the best possible sampling precision should be clear to all. Consequently, the implications of Table 2 and Fig. 3 retain their full force.

The connection between precision and replicability

Although the present work pertains to precision rather than replicability, there may be a strong connection between them, depending on how one conceptualizes replicability. The typical way—we might even say, the received way—that researchers consider a successful replication is if an experiment results in a statistically significant finding upon both iterations. Using this conceptualization, it should be obvious that, all else being equal, the more participants the researcher has in both iterations, the greater the probability of a successful replication. In addition, the greater the population effect size, the greater the replicability. A problem with the received view, however, is that the null hypothesis significance testing procedure has come under fire in the last several years (see Hubbard, 2016, and Ziliak & McCloskey, 2016, for reviews), and it was widely criticized at the 2017 American Statistical Association Symposium on Statistical Inference. Aside from statistical issues, though, consider what might be considered the Michelson and Morley problem. Michelson and Morley (1887) performed an experiment that disconfirmed the existence of the luminiferous ether.Footnote 11 The theoretical effect size was zero (though they did obtain a small sample effect size), and physicists consider the experiment to be highly replicable. According to the received view, where replicability depends importantly on having a large population effect size, the Michelson and Morley experiment would have to be considered not to be very replicable.

Trafimow (2018a) suggested a more creative alternative that solves the Michelson and Morley problem, featuring the goal of calculating the probability of obtaining sample statistics within desired distances of the corresponding population parameters in both iterations of an experiment. This procedure distinguishes between replicability in an idealized universe, where we imagine it is possible to duplicate conditions exactly so that the only differences between iterations are due to randomness, and replicability in the real universe, where it is impossible to duplicate conditions exactly and there are systematic as well as random differences between the two iterations. Trafimow (2018a) showed that the probability of replication in the idealized universe places an upper bound on the probability of replication in the real universe. Moreover, by using a priori equations, it is possible to calculate the probability of replication in the idealized universe, even before performing the original experiment. Trafimow (2018a) showed that it is possible to algebraically rearrange a priori equations to give the probability of obtaining sample statistics within desired distances of the corresponding population parameters at the sample size the researcher plans to collect. Once this probability has been obtained, squaring to take both iterations into account renders the probability of replication in the idealized universe. The probability of replication in the real universe must be less than that figure, thereby implying that if the calculated figure is already a poor number (and it usually is), the true figure must be even worse.
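The calculation described above can be sketched in a few lines. The rearrangement used here follows directly from Eqs. 1 and 2 solved for the probability, and it assumes normality, independent groups, and the same n per group in both iterations. It is an illustrative reading of the description, not a reproduction of the equations in Trafimow (2018a), and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def prob_within(n: int, f: float, k: int = 1) -> float:
    """Probability that all k sample means fall within f standard
    deviations of their population means, with n participants per group."""
    one_group = 2.0 * NormalDist().cdf(f * math.sqrt(n)) - 1.0
    return one_group ** k


def idealized_replication(n: int, f: float, k: int = 1) -> float:
    """Probability of being that close in both of two identical iterations."""
    return prob_within(n, f, k) ** 2


# Single mean, desired closeness f = .1, at several sample sizes.
for n in (25, 100, 385):
    print(n, round(idealized_replication(n, 0.1), 3))
```

Even at the 385 participants that give 95% confidence for a single mean at f = .1, the squared probability is only about .90. At smaller sample sizes it falls off quickly, which illustrates why the idealized figure is described above as usually poor.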

Four interesting features are worth emphasizing when using a priori equations to calculate the probability of replication in the idealized universe. First, analogous to precision in the present article, replication is a function of the procedure rather than of the data. Second, precision and replicability are intimately connected. Third, just as precision does not depend on the population effect size, neither does probability of replication, which, we reiterate, solves the Michelson and Morley problem. Finally, it is quite possible for the received view of replicability and the Trafimow view to result in opposite conclusions. To see this last conclusion, suppose there is a huge population effect size in two iterations of an experiment, though the sample sizes are only moderate. Given the huge population effect size, according to the received view of replicability, there is quite a good chance of obtaining statistically significant findings in both iterations, and hence impressive replicability. In contrast, the probability of replication according to a priori equations is poor in the idealized universe, and even worse in the real one, and there is little reason to believe that the obtained sample statistics are close to their corresponding population parameters in both iterations. In contrast, suppose that the population effect size is very small for two iterations of an experiment, but the sample sizes are very large in both cases. In that case, the experimenter can be confident that the sample statistics are close to their corresponding population parameters in both iterations, so replicability is impressive, though the small population effect size renders replicability poor according to the received view.

Arguments not being made

Given the predilections of psychologists, misinterpretations of the foregoing argument seem likely. The present section is an attempt to prevent misinterpretations.

The most obvious misinterpretation is that developmental and social psychology are “better” than cognitive and neuropsychology. On the contrary, although sampling precision is important, it is only one of many criteria that can be used to evaluate psychology subfields. Other criteria include the explanatory breadth of theories, the validity of auxiliary assumptions, practical applications, and so on. There is no implication here that some psychology subfields are better than others.

Another misinterpretation is that in areas such as developmental and social psychology, where upper- and lower-echelon journal articles do not differ much with respect to sampling precision, there is no reason to distinguish different echelons of journals. In fact, there are many ways to evaluate journals. In addition to impact factors, there are acceptance rates, submission rates, reading rates, and so on. There is no reason for anyone to use the present article as a reason to argue that it is wrong to distinguish different echelons of journals in developmental and social psychology. However, that the present article should not be used in this way is not a reason to support distinguishing different echelons of journals, either. An intermediate position might be that there are different journal echelons in different areas of psychology, but the extent of the differences may be less than is typically assumed. The present authors are not committed to any position on this issue. Having said that, however, it is noteworthy that in social, cognitive, developmental, and clinical psychology, the standard deviation of precision is larger for lower- than for upper-echelon journals (see Table 2). Perhaps one difference between journal echelons in some areas of psychology pertains to variability in precision.

A third misinterpretation is that because the present focus was on between-participants designs, the present authors believe these are superior to within-participants designs. No such implication is intended, and we focused on between-participants designs for other reasons. The main reason is that it was easier to find between-participants designs across areas, and across different echelons of journals. Another reason is that the analyses are more mathematically straightforward for between-participants than for within-participants designs, and it made sense to keep the first article of this type as simple as possible. Nevertheless, there are important reasons to favor either between-participants or within-participants designs, depending on the researcher’s goals, manipulations, and other considerations (see, e.g., Smith & Little, 2018; Trafimow & Rice, 2008).

Because some areas of psychology use within-participants designs more frequently than other areas, it could be argued that the present focus on between-participants designs might underrepresent such areas. For example, cognitive psychology experiments employ within-participants designs more frequently than do social psychology experiments. This is a limitation. Future work analogous to the present work is being planned to analyze articles featuring within-participants analyses.

Should researchers increase sample sizes?

The equations render obvious that for psychology researchers to increase sampling precision, they will need to increase their sample sizes substantially. It is possible to take more than one perspective on this. One view would be that psychology researchers should use larger sample sizes and thereby obtain greater sampling precision. An alternative view might be that increased sample sizes would come at the cost of researchers being able to perform fewer experiments, and it is better to have more experiments even at the cost of decreased sampling precision. A third view might be that many experiments in psychology are flawed in multiple ways, so an advantage of fewer experiments might be that researchers would think them through more rigorously. Thus, according to this third perspective, increased sampling precision could come at little cost within rigorous experiments, even if the cost is increased for nonrigorous experiments.

Although this is not an argument that is going to be settled here, three straightforward points can be made. First, researchers who favor the first or third point of view also should favor increasing sample sizes in order to increase sampling precision. Second, researchers who favor many small experiments should be up front about admitting that the sample means cannot be trusted as accurate estimates of their corresponding population means. This issue can be extended to effect sizes; that is, effect sizes based on sample means with low sampling precision cannot be trusted as accurate estimates of the corresponding population effect sizes.

The third point to be made is that, if psychology moves in the direction of multiple small experiments, a few issues—both positive and negative—would stem from the decision. On the negative side, most “replications” nevertheless differ from each other with respect to the populations sampled from, dates, locations, and so on. Thus, an argument can be made that using multiple small experiments rather than a single large experiment risks multiple confounding across experiments. On the positive side, a counterargument could be that what can be considered “multiple confounding” alternatively can be considered “increasing generalizability.” Our expectation is that the issue of a few large studies versus many small studies invokes important conceptual and philosophical issues that require a separate article, or many such articles. A careful examination of the relevant conceptual and philosophical issues by future researchers would be a positive consequence of the present work concerning the sampling precision of different psychology subfields.

Conclusion

The present work commenced with a general point about the importance of precision in science: There is no substitute for knowing the facts as precisely as possible. The present work focused on one type of precision—that is, sampling precision. However, there are other types of precision. Trafimow (2018b) detailed two other types of precision that matter in the behavioral sciences. One of these is measurement precision, and the other is the precision of homogeneity. Measurement precision refers to a characteristic of the measuring instrument: As randomness decreases, measurement precision increases. Regarding the precision of homogeneity, as participants are more homogeneous, it is increasingly easy to observe differences between the groups in different conditions, so there is greater precision of homogeneity.

We bring up measurement precision and precision of homogeneity to emphasize that sampling precision is only one piece of the larger precision mosaic. Given the importance of precision in the history of science, investigations of different types of precision are desirable. It might well be that psychology does better or worse with respect to other types of precision than with respect to sampling precision. In addition, the relative ordering of the performance of psychology subfields might differ for different types of precision. Thus, although the present investigation concerning the sampling precision of psychology subfields is a good start, the findings raise questions about other types of precision that can only be addressed by future research.

Notes

Although Trafimow (2017) assumed a normal distribution, he also showed that Eq. 1 is robust even to severe violations of the normality assumption. In addition, Trafimow et al. (2018b) provided equations for using the a priori procedure with skewed distributions and with statistics other than means and standard deviations.

Trafimow and MacDonald (2017) also provided equations that work for unequal sample sizes, as well as different levels of precision for the means in different conditions, but these complexities are not necessary for the present purposes.

The calculator at https://www.dssresearch.com/KnowledgeCenter/toolkitcalculators/statisticalpowercalculators.aspx was used for all power calculations.

That is, the standard deviation does not influence sampling precision, which is the present topic. But the standard deviation is certainly relevant to measurement precision and the precision of homogeneity, because large standard deviations may imply that at least one of these is problematic.

To the extent that the sample sizes are not equal for the different conditions in an experiment, or for the different conditions across experiments, the estimates are optimistic and can be considered as boundaries indicating the best that can be said about the precision of the research. Thus, assuming equal sample sizes can be considered a simplifying assumption.

Some impact factors have changed dramatically. For example, the impact factor for Basic and Applied Social Psychology was 3.4 in 2017, which is a dramatic increase from the 2015 value. More generally, it is quite debatable whether impact factors index journal quality, but in deference to conventions, as well as the lack of a better index of journal quality, we went ahead and used impact factors in this way.

Researchers wishing to reproduce our findings can do so in three ways. First, all articles used are listed in the References section. Second, anyone desiring access to the Excel spreadsheet we used can email the authors. Third, we provide an Open Science Framework link to the data file: https://osf.io/ms4zg/.

Also, because the skewness was in the direction of poorer precision, medians provided more optimistic precision locations than did the means.

This effect is caused, in part, by the necessity of using the t distribution rather than the z distribution.

There may be times when an effect is so small that it can be considered unimportant. But we urge the reader to remember that the null hypothesis significance testing procedure does not test importance. In addition, if one wishes to replicate a near-zero effect, the null hypothesis significance testing procedure is completely inappropriate, though a case can be made for Bayesian procedures in this context (e.g., Morey & Rouder, 2011).

Albert Michelson received a Nobel Prize in 1907. It also is interesting to note that, as Carver (1993) showed, because the experiment used such a large sample size, had Michelson and Morley used modern null hypothesis significance testing procedures, they would have obtained a statistically significant effect, thereby supporting rather than disconfirming the existence of the luminiferous ether. The potential effects on physics in this counterfactual scenario can be considered devastating (Trafimow & Rice, 2009).

References

(* Denotes studies collected in the sample for the analysis in this article.)

*Abeyta, A. A., Routledge, C., & Juhl, J. (2015). Looking back to move forward: Nostalgia as a psychological resource for promoting relationship goals and overcoming relationship challenges. Journal of Personality and Social Psychology, 109, 1029–1044. https://doi.org/10.1037/pspi0000036

*Ahn, H. M., Kim, S. A., Hwang, I. J., Jeong, J. W., Kim, H. T., Hamann, S., & Kim, S. H. (2015). The effect of cognitive reappraisal on long-term emotional experience and emotional memory. Journal of Neuropsychology, 9(1), 64–76. https://doi.org/10.1111/jnp.12035

*Akinwumi, M. O., & Bello, T. O. (2015). Relative effectiveness of learning-cycle model and inquiry-teaching approaches in improving students’ learning outcomes in physics. Journal of Education and Human Development, 4(3), 169–180. https://doi.org/10.15640/jehd.v4n3a18

*Alhazmi, A. (2015). Student satisfaction among learners: Illustration by Jazan University students. Journal of Education and Human Development, 4(2(1)), 205–212. https://doi.org/10.15640/jehd.v4n2_1a20

*Allen, D. N., Bello, D. T., & Thaler, N. S. (2015). Neurocognitive predictors of performance-based functional capacity in bipolar disorder. Journal of Neuropsychology, 9, 159–171. https://doi.org/10.1111/jnp.12042

*Andersson, S., Egeland, J., Sundseth, Ø. Ø., & Schanke, A. (2015). Types or modes of malingering? A confirmatory factor analysis of performance and symptom validity tests. Applied Neuropsychology: Adult, 22, 215–226. https://doi.org/10.1080/23279095.2014.910212

*Andreychik, M. R., & Migliaccio, N. (2015). Empathizing with others’ pain versus empathizing with others’ joy: Examining the separability of positive and negative empathy and their relation to different types of social behaviors and social emotions. Basic and Applied Social Psychology, 37, 274–291. https://doi.org/10.1080/01973533.2015.1071256

Asimov, I. (1965). A short history of chemistry: An introduction to the ideas and concepts of chemistry. Garden City: Anchor Books. ISBN 13: 9780385036733

*Avent, J. R., Cashwell, C. S., & Brown-Jeffy, S. (2015). African American pastors on mental health, coping, and help seeking. Counseling and Values, 60, 32–47. https://doi.org/10.1002/j.2161-007X.2015.00059.x

*Babel, M., & McGuire, G. (2015). Perceptual fluency and judgments of vocal aesthetics and stereotypicality. Cognitive Science, 39, 766–787. https://doi.org/10.1111/cogs.12179

*Bakhsh, K., Hussain, S., & Mohsin, M. N. (2015). Personality and leadership effectiveness. Journal of Education and Human Development, 4(2(1)), 139–142. https://doi.org/10.15640/jehd.v4n2_1a14

*Banta Lavenex, P., Boujon, V., Ndarugendamwo, A., & Lavenex, P. (2015). Human short-term spatial memory: Precision predicts capacity. Cognitive Psychology, 77, 1–19. https://doi.org/10.1016/j.cogpsych.2015.02.001

*Barabanschikov, V. A. (2015). Gaze dynamics in the recognition of facial expressions of emotion. Perception, 44, 1007–1019. https://doi.org/10.1177/0301006615594942

*Barnhofer, T., Crane, C., Brennan, K., Duggan, D. S., Crane, R. S., Eames, C., … Williams, J. G. (2015). Mindfulness-based cognitive therapy (MBCT) reduces the association between depressive symptoms and suicidal cognitions in patients with a history of suicidal depression. Journal of Consulting and Clinical Psychology, 83, 1013–1020. https://doi.org/10.1037/ccp0000027

*Beauchaine, T. P., Neuhaus, E., Gatzke-Kopp, L. M., Reid, M. J., Chipman, J., Brekke, A., … Webster-Stratton, C. (2015). Electrodermal responding predicts responses to, and may be altered by, preschool intervention for ADHD. Journal of Consulting and Clinical Psychology, 83, 293–303. https://doi.org/10.1037/a0038405

*Beevers, C. G., Clasen, P. C., Enock, P. M., & Schnyer, D. M. (2015). Attention bias modification for major depressive disorder: Effects on attention bias, resting state connectivity, and symptom change. Journal of Abnormal Psychology, 124, 463–475. https://doi.org/10.1037/abn0000049

*Bell, H., Jacobson, L., Zeligman, M., Fox, J., & Hundley, G. (2015). The role of religious coping and resilience in individuals with dissociative identity disorder. Counseling and Values, 60, 151–163. https://doi.org/10.1002/cvj.12011

*Belmi, P., Barragan, R. C., Neale, M. A., & Cohen, G. L. (2015). Threats to social identity can trigger social deviance. Personality and Social Psychology Bulletin, 41, 467–484. https://doi.org/10.1177/0146167215569493

*Benner, A. D., & Wang, Y. (2015). Adolescent substance use: The role of demographic marginalization and socioemotional distress. Developmental Psychology, 51, 1086–1097. https://doi.org/10.1037/dev0000026

*Bernecker, K., & Job, V. (2015). Beliefs about willpower are related to therapy adherence and psychological adjustment in patients with type 2 diabetes. Basic and Applied Social Psychology, 37, 188–195. https://doi.org/10.1080/01973533.2015.1049348

*Bertrams, A., Baumeister, R. F., Englert, C., & Furley, P. (2015). Ego depletion in color priming research: Self-control strength moderates the detrimental effect of red on cognitive test performance. Personality and Social Psychology Bulletin, 41, 311–322. https://doi.org/10.1177/0146167214564968

*Blackwell, S. E., Browning, M., Mathews, A., Pictet, A., Welch, J., Davies, J., … Holmes, E. A. (2015). Positive imagery-based cognitive bias modification as a web-based treatment tool for depressed adults: A randomized controlled trial. Clinical Psychological Science, 3, 91–111. https://doi.org/10.1177/2167702614560746

*Bodell, L. P., & Keel, P. K. (2015). Weight suppression in bulimia nervosa: Associations with biology and behavior. Journal of Abnormal Psychology, 124, 994–1002. https://doi.org/10.1037/abn0000077

*Borg, C., Emond, F. C., Colson, D., Laurent, B., & Michael, G. A. (2015). Attentional focus on subjective interoceptive experience in patients with fibromyalgia. Brain and Cognition, 101, 35–43. https://doi.org/10.1016/j.bandc.2015.10.002

*Borsari, B., Apodaca, T. R., Jackson, K. M., Mastroleo, N. R., Magill, M., Barnett, N. P., & Carey, K. B. (2015). In-session processes of brief motivational interventions in two trials with mandated college students. Journal of Consulting and Clinical Psychology, 83, 56–67. https://doi.org/10.1037/a0037635

*Bradshaw, C. A., Freegard, G., & Reed, P. (2015). Human performance on random ratio and random interval schedules, performance awareness and verbal instructions. Learning & Behavior, 43, 272–288. https://doi.org/10.3758/s13420-015-0178-x

*Brandone, A. C., Gelman, S. A., & Hedglen, J. (2015). Children’s developing intuitions about the truth conditions and implications of novel generics versus quantified statements. Cognitive Science, 39, 711–738. https://doi.org/10.1111/cogs.12176

*Brentari, D., Renzo, A. D., Keane, J., & Volterra, V. (2015). Cognitive, cultural, and linguistic sources of a handshape distinction expressing agentivity. Topics in Cognitive Science, 7, 95–123. https://doi.org/10.1111/tops.12123

*Broemmel, A. D., Moran, M. J., & Wooten, D. A. (2015). The impact of animated books on the vocabulary and language development of preschool-aged children in two school settings. Early Childhood Research and Practice, 17(1). Retrieved from http://ecrp.uiuc.edu/v17n1/broemmel.html

*Brown, C. B. (2015). Cyber bullying among students with serious emotional and specific learning disabilities. Journal of Education and Human Development, 4(2(1)), 50–56. https://doi.org/10.15640/jehd.v4n2_1a4

*Campione-Barr, N., Lindell, A. K., Giron, S. E., Killoren, S. E., & Greer, K. B. (2015). Domain differentiated disclosure to mothers and siblings and associations with sibling relationship quality and youth emotional adjustment. Developmental Psychology, 51, 1278–1291. https://doi.org/10.1037/dev0000036

*Capellini, V. L. M. F., das Neves, A. J., Fonseca, K. A., & Remoli, T. C. (2015). Curriculum adaptations: What do participants of continuing education program say about it? Journal of Education and Human Development, 4(1), 51–63. https://doi.org/10.15640/jehd.v4n1a7

*Carnaghi, A., Silveri, M. C., & Rumiati, R. I. (2015). On the relationship between semantic knowledge and prejudice about social groups in patients with dementia. Cognitive and Behavioral Neurology, 28, 71–79. https://doi.org/10.1097/WNN.0000000000000059

*Carr, D. C., King, K., & Matz-Costa, C. (2015). Parent–teacher association, soup kitchen, church, or the local civic club? Life stage indicators of volunteer domain. International Journal of Aging and Human Development, 80, 293–315. https://doi.org/10.1177/0091415015603608

Carver, R. P. (1993). The case against statistical significance testing, revisited. Journal of Experimental Education, 61, 287–292. https://doi.org/10.1080/00220973.1993.10806591

*Cascio, C. N., Konrath, S. H., & Falk, E. B. (2015). Narcissists’ social pain seen only in the brain. Social Cognitive and Affective Neuroscience, 10, 335–341. https://doi.org/10.1093/scan/nsu072

*Chane, S., & Adamek, M. E. (2015). “Death is better than misery”: Elders’ accounts of abuse and neglect in Ethiopia. International Journal of Aging and Human Development, 82, 54–78. https://doi.org/10.1177/0091415015624226

*Chen, Q., & Mirman, D. (2015). Interaction between phonological and semantic representations: Time matters. Cognitive Science, 39, 538–558. https://doi.org/10.1111/cogs.12156

*Cheraghi, F., Kadivar, P., Ardelt, M., Asgari, A., & Farzad, V. (2015). Gender as a moderator of the relation between age cohort and three-dimensional wisdom in Iranian culture. International Journal of Aging and Human Development, 81, 3–26. https://doi.org/10.1177/0091415015616394

*Christman, S. D., Prichard, E. C., & Corser, R. (2015). Factor analysis of the Edinburgh Handedness Inventory: Inconsistent handedness yields a two-factor solution. Brain and Cognition, 98, 82–86. https://doi.org/10.1016/j.bandc.2015.06.005

*Christopher, M. E., Hulslander, J., Byrne, B., Samuelsson, S., Keenan, J. M., Pennington, B., & Olson, R. K. (2015). Genetic and environmental etiologies of the longitudinal relations between prereading skills and reading. Child Development, 86, 342–361. https://doi.org/10.1111/cdev.12295

*Chronis-Tuscano, A., Rubin, K. H., O’Brien, K. A., Coplan, R. J., Thomas, S. R., Dougherty, L. R., … Wimsatt, M. (2015). Preliminary evaluation of a multimodal early intervention program for behaviorally inhibited preschoolers. Journal of Consulting and Clinical Psychology, 83, 534–540. https://doi.org/10.1037/a0039043

*Chuderski, A., & Andrelczyk, K. (2015). From neural oscillations to reasoning ability: Simulating the effect of the theta-to-gamma cycle length ratio on individual scores in a figural analogy test. Cognitive Psychology, 76, 78–102. https://doi.org/10.1016/j.cogpsych.2015.01.001

*Corbett, F., Jefferies, E., Burns, A., & Lambon Ralph, M. A. (2015). Deregulated semantic cognition contributes to object-use deficits in Alzheimer’s disease: A comparison with semantic aphasia and semantic dementia. Journal of Neuropsychology, 9, 219–241. https://doi.org/10.1111/jnp.12047

*Costarelli, S. (2015). The pros and cons of ingroup ambivalence: The moderating roles of attitudinal basis and individual differences in ingroup attachment and glorification. Current Research in Social Psychology, 23, 4:26–37. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp_23_4.pdf

*Coyle, C. T., & Rue, V. M. (2015). A thematic analysis of men’s experience with a partner’s elective abortion. Counseling and Values, 60, 138–150. https://doi.org/10.1002/cvj.12010

*Crane, C. A., Eckhardt, C. I., & Schlauch, R. C. (2015). Motivational enhancement mitigates the effects of problematic alcohol use on treatment compliance among partner violent offenders: Results of a randomized clinical trial. Journal of Consulting and Clinical Psychology, 83, 689–695. https://doi.org/10.1037/a0039345

*Crawford, M., & Freeman, B. J. (2015). The development and factor structure of the parishioner perspectives of homosexuals within religious organizations instrument (PPHRO): A pilot study. Journal of Education and Human Development, 4(3), 135–141. https://doi.org/10.15640/jehd.v4n3a14

*Crowell, S. E., Butner, J. E., Wiltshire, T. J., Munion, A. K., Yaptangco, M., & Beauchaine, T. P. (2017). Evaluating emotional and biological sensitivity to maternal behavior among self-injuring and depressed adolescent girls using nonlinear dynamics. Clinical Psychological Science, 5, 272–285. https://doi.org/10.1177/2167702617692861

*Cruz-Ortega, L. G., Gutierrez, D., & Waite, D. (2015). Religious orientation and ethnic identity as predictors of religious coping among bereaved individuals. Counseling and Values, 60, 67–83. https://doi.org/10.1002/j.2161-007X.2015.00061.x

*Culver, N. C., Vervliet, B., & Craske, M. G. (2015). Compound extinction: Using the Rescorla–Wagner model to maximize exposure therapy effects for anxiety disorders. Clinical Psychological Science, 3, 335–348. https://doi.org/10.1177/2167702614542103

*Davelaar, E. J. (2015). Semantic search in the Remote Associates Test. Topics in Cognitive Science, 7, 494–512. https://doi.org/10.1111/tops.12146

*Davey, C., Heard, R., & Lennings, C. (2015). Development of the Arabic versions of the Impact of Events Scale–Revised and the Posttraumatic Growth Inventory to assess trauma and growth in Middle Eastern refugees in Australia. Clinical Psychologist, 19, 131–139. https://doi.org/10.1111/cp.12043

*Davis, A., Williams, R. N., Gupta, A. S., Finch, W. H., & Randolph, C. (2015). Evaluating neurocognitive deficits in patients with multiple sclerosis via a brief neuropsychological approach. Applied Neuropsychology: Adult, 22, 381–387. https://doi.org/10.1080/23279095.2014.949717

*Dawel, A., Palermo, R., O’Kearney, R., Irons, J., & McKone, E. (2015). Fearful faces drive gaze-cueing and threat bias effects in children on the lookout for danger. Developmental Science, 18, 219–231. https://doi.org/10.1111/desc.12203

*Dawson, D., & Akhurst, J. (2015). “I wouldn’t dream of ending with a client in the way he did to me”: An exploration of supervisees’ experiences of an unplanned ending to the supervisory relationship. Counselling and Psychotherapy Research, 15, 21–30. https://doi.org/10.1080/14733145.2013.845235

*de la Fuente, J., Casasanto, D., Román, A., & Santiago, J. (2015). Can culture influence body-specific associations between space and valence? Cognitive Science, 39, 821–832. https://doi.org/10.1111/cogs.12177

*de Vos, C. (2015). The Kata Kolok pointing system: Morphemization and syntactic integration. Topics in Cognitive Science, 7, 150–168. https://doi.org/10.1111/tops.12124

*Delaney, M. F., & White, K. M. (2015). Predicting people’s intention to donate their body to medical science and research. Journal of Social Psychology, 155, 221–237. https://doi.org/10.1080/00224545.2014.998962

*Demopoulos, C., Arroyo, M. S., Dunn, W., Strominger, Z., Sherr, E. H., & Marco, E. (2015). Individuals with agenesis of the corpus callosum show sensory processing differences as measured by the sensory profile. Neuropsychology, 29, 751–758. https://doi.org/10.1037/neu0000165

*Dillon, M. R., & Spelke, E. S. (2015). Core geometry in perspective. Developmental Science, 18, 894–908. https://doi.org/10.1111/desc.12266

*Dockree, P. M., Brennan, S., O’Sullivan, M., Robertson, I. H., & O’Connell, R. G. (2015). Characterising neural signatures of successful aging: Electrophysiological correlates of preserved episodic memory in older age. Brain and Cognition, 97, 40–50. https://doi.org/10.1016/j.bandc.2015.04.002

Dowdy, S. M., Wearden, S., & Chilko, D. M. (2004). Statistics for research (3rd ed.). New York: Wiley.

*Doyle, K. L., Weber, E., Morgan, E. E., Loft, S., Cushman, C., Villalobos, J., … Woods, S. P. (2015). Habitual prospective memory in HIV disease. Neuropsychology, 29, 909–918. https://doi.org/10.1037/neu0000180

*Draper, A., Jude, L., Jackson, G. M., & Jackson, S. R. (2015). Motor excitability during movement preparation in Tourette syndrome. Journal of Neuropsychology, 9, 33–44. https://doi.org/10.1111/jnp.12033

*Eavers, E. R., Berry, M. A., & Rodriguez, D. N. (2015). The effects of counterfactual thinking on college students’ intentions to quit smoking cigarettes. Current Research in Social Psychology, 23, 8:66–77. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp_23_8.pdf

*Ekanem, E. E. (2015). Time management abilities of administrators for skill improvement needs of teachers in secondary schools in Calabar, Nigeria. Journal of Education and Human Development, 4(3), 143–149. https://doi.org/10.15640/jehd.v4n3a15

*Etherton, J. (2015). WAIS-IV Verbal Comprehension Index and Perceptual Reasoning index performance is unaffected by cold-pressor pain induction. Applied Neuropsychology: Adult, 22, 54–60. https://doi.org/10.1080/23279095.2013.838166

*Evans, J., Olm, C., McCluskey, L., Elman, L., Boller, A., Moran, E., … Grossman, M. (2015). Impaired cognitive flexibility in amyotrophic lateral sclerosis. Cognitive and Behavioral Neurology, 28, 17–26. https://doi.org/10.1097/WNN.0000000000000049

*Everling, K. M., Delello, J. A., Dykes, F., Neel, J. L., & Hansen, B. (2015). The impact of field experiences on pre-service teachers’ decisions regarding special education certification. Journal of Education and Human Development, 4(1), 65–77. https://doi.org/10.15640/jehd.v4n1a8

*Ezpeleta, L., & Granero, R. (2015). Executive functions in preschoolers with ADHD, ODD, and comorbid ADHD–ODD: Evidence from ecological and performance-based measures. Journal of Neuropsychology, 9, 258–270. https://doi.org/10.1111/jnp.12049

*Fairbairn, C. E., Sayette, M. A., Aalen, O. O., & Frigessi, A. (2015). Alcohol and emotional contagion: An examination of the spreading of smiles in male and female drinking groups. Clinical Psychological Science, 3, 686–701. https://doi.org/10.1177/2167702614548892

*Fakra, E., Jouve, E., Guillaume, F., Azorin, J., & Blin, O. (2015). Relation between facial affect recognition and configural face processing in antipsychotic-free schizophrenia. Neuropsychology, 29, 197–204. https://doi.org/10.1037/neu0000136

*Falck-Ytter, T., Carlström, C., & Johansson, M. (2015). Eye contact modulates cognitive processing differently in children with autism. Child Development, 86, 37–47. https://doi.org/10.1111/cdev.12273

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention and behavior: An introduction to theory and research. Reading: Addison-Wesley.

*Fitzgerald, C. J., & Lueke, A. (2015). Being generous to look good: Perceived stigma increases prosocial behavior in smokers. Current Research in Social Psychology, 23, 1:1–8. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp23_1.pdf

*Flanders, C. E. (2015). Bisexual health: A daily diary analysis of stress and anxiety. Basic and Applied Social Psychology, 37, 319–335. https://doi.org/10.1080/01973533.2015.1079202

*Fleming, A., & Rucas, K. (2015). Welcoming a paradigm shift in occupational therapy: Symptom validity measures and cognitive assessment. Applied Neuropsychology: Adult, 22, 23–31. https://doi.org/10.1080/23279095.2013.822873

*Foland-Ross, L. C., Gilbert, B. L., Joormann, J., & Gotlib, I. H. (2015). Neural markers of familial risk for depression: An investigation of cortical thickness abnormalities in healthy adolescent daughters of mothers with recurrent depression. Journal of Abnormal Psychology, 124, 476–485. https://doi.org/10.1037/abn0000050

Fraley, R. C., & Vazire, S. (2014). The N-Pact factor: Evaluating the quality of empirical journals with respect to sample size and statistical power. PLoS ONE, 9, e109019. https://doi.org/10.1371/journal.pone.0109019

*Fredman, S. J., Baucom, D. H., Boeding, S. E., & Miklowitz, D. J. (2015). Relatives’ emotional involvement moderates the effects of family therapy for bipolar disorder. Journal of Consulting and Clinical Psychology, 83, 81–91. https://doi.org/10.1037/a0037713

*Freeman, S. M., Clewett, D. V., Bennett, C. M., Kiehl, K. A., Gazzaniga, M. S., & Miller, M. B. (2015). The posteromedial region of the default mode network shows attenuated task-induced deactivation in psychopathic prisoners. Neuropsychology, 29, 493–500. https://doi.org/10.1037/neu0000118

*Frick, A., & Newcombe, N. S. (2015). Young children’s perception of diagrammatic representations. Spatial Cognition and Computation, 15, 227–245. https://doi.org/10.1080/13875868.2015.1046988

*Friedman, O., & Turri, J. (2015). Is probabilistic evidence a source of knowledge? Cognitive Science, 39, 1062–1080. https://doi.org/10.1111/cogs.12182

Fruth, J. D., & Huber, M. J. (2015). Teaching prevention: The impact of a universal preventive intervention on teacher candidates. Journal of Education and Human Development, 4(1), 245–254. https://doi.org/10.15640/jehd.v4n1a22

*Galati, A., & Avraamides, M. N. (2015). Social and representational cues jointly influence spatial perspective-taking. Cognitive Science, 39, 739–765. https://doi.org/10.1111/cogs.12173

*Gámez, P. B., & Lesaux, N. K. (2015). Early-adolescents’ reading comprehension and the stability of the middle school classroom-language environment. Developmental Psychology, 51, 447–458. https://doi.org/10.1037/a0038868

*Gavett, B. E., Vudy, V., Jeffrey, M., John, S. E., Gurnani, A. S., & Adams, J. W. (2015). The δ latent dementia phenotype in the uniform data set: Cross-validation and extension. Neuropsychology, 29, 344–352. https://doi.org/10.1037/neu0000128

*Gebauer, J. E., Sedikides, C., Wagner, J., Bleidorn, W., Rentfrow, P. J., Potter, J., & Gosling, S. D. (2015). Cultural norm fulfillment, interpersonal belonging, or getting ahead? A large-scale cross-cultural test of three perspectives on the function of self-esteem. Journal of Personality and Social Psychology, 109, 526–548. https://doi.org/10.1037/pspp0000052

*Georgiadou, L., Willis, A., & Canavan, S. (2015). “An outfield at a cricket game”: Integrating support provisions in counsellor education. Counselling and Psychotherapy Research, 15, 289–297. https://doi.org/10.1002/capr.12039

*Gerken, L., Dawson, C., Chatila, R., & Tenenbaum, J. (2015). Surprise! Infants consider possible bases of generalization for a single input example. Developmental Science, 18, 80–89. https://doi.org/10.1111/desc.12183

*Germeroth, L. J., Wray, J. M., & Tiffany, S. T. (2015). Response time to craving-item ratings as an implicit measure of craving-related processes. Clinical Psychological Science, 3, 530–544. https://doi.org/10.1177/2167702614542847

*Gershman, S. J., & Hartley, C. A. (2015). Individual differences in learning predict the return of fear. Learning & Behavior, 43, 243–250. https://doi.org/10.3758/s13420-015-0176-z

*Gibbs, Z., Lee, S., & Kulkarni, J. (2015). The unique symptom profile of perimenopausal depression. Clinical Psychologist, 19, 76–84. https://doi.org/10.1111/cp.12035

*Gillard, K., & Cramer, K. M. (2015). “Yes, I decide you will receive your choice”: Effects of authoritative agreement on perceptions of control. Current Research in Social Psychology, 23, 2:9–17. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp_23_2.pdf

*Gonzalez, M. Z., Beckes, L., Chango, J., Allen, J. P., & Coan, J. A. (2015). Adolescent neighborhood quality predicts adult dACC response to social exclusion. Social Cognitive and Affective Neuroscience, 10, 921–928. https://doi.org/10.1093/scan/nsu137

*Gonzalez, V. M., & Dulin, P. L. (2015). Comparison of a smartphone app for alcohol use disorders with an Internet-based intervention plus bibliotherapy: A pilot study. Journal of Consulting and Clinical Psychology, 83, 335–345. https://doi.org/10.1037/a0038620

*Gonzalez-Gomez, N., & Nazzi, T. (2015). Constraints on statistical computations at 10 months of age: The use of phonological features. Developmental Science, 18, 864–876. https://doi.org/10.1111/desc.12279

*Goodvin, S. B., Bakken, L., Mustafa, M. B., Jaradat, M., Eubank, H., Rathbun, S. E., … Stucky, J. (2015). A study of external stakeholders’ perspectives of a Midwestern community college. Journal of Education and Human Development, 4(4), 17–25. https://doi.org/10.15640/jehd.v4n4a3

*Gordillo, F., & Mestas, L. (2015). What we know about people shapes the inferences we make about their personalities. Current Research in Social Psychology, 23, 5:38–45. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp_23_5.pdf

*Gordon, R. L., Shivers, C. M., Wieland, E. A., Kotz, S. A., Yoder, P. J., & McAuley, J. D. (2015). Musical rhythm discrimination explains individual differences in grammar skills in children. Developmental Science, 18, 635–644. https://doi.org/10.1111/desc.12230

*Grabell, A. S., Olson, S. L., Miller, A. L., Kessler, D. A., Felt, B., Kaciroti, N., … Tardif, T. (2015). The impact of culture on physiological processes of emotion regulation: A comparison of US and Chinese preschoolers. Developmental Science, 18, 420–435. https://doi.org/10.1111/desc.12227

*Granovskiy, B., Gold, J. M., Sumpter, D. T., & Goldstone, R. L. (2015). Integration of social information by human groups. Topics in Cognitive Science, 7, 469–493. https://doi.org/10.1111/tops.12150

*Griebling, S., Elgas, P., & Konerman, R. (2015). “Trees and things that live in trees”: Three children with special needs experience the project approach. Early Childhood Research and Practice, 17(1). Retrieved from http://ecrp.uiuc.edu/v17n1/griebling.html

*Grilli, M. D., & Verfaellie, M. (2015). Supporting the self-concept with memory: Insight from amnesia. Social Cognitive and Affective Neuroscience, 10, 1684–1692. https://doi.org/10.1093/scan/nsv056

*Gromet, D. M., Hartson, K. A., & Sherman, D. K. (2015). The politics of luck: Political ideology and the perceived relationship between luck and success. Journal of Experimental Social Psychology, 59, 40–46. https://doi.org/10.1016/j.jesp.2015.03.002

*Gur, S., Beveridge, C., & Slattery Walker, L. (2015). The moderating effect of socio-emotional factors on the relationship between status and influence in status characteristics theory. Current Research in Social Psychology, 23, 6:46–55. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp_23_6.pdf

Haig, B. D. (2014). Investigating the psychological world: Scientific method in the behavioral sciences. Cambridge: MIT Press. ISBN: 978-0262027366

*Halkjelsvik, T., & Rise, J. (2015). Persistence motives in irrational decisions to complete a boring task. Personality and Social Psychology Bulletin, 41, 90–102. https://doi.org/10.1177/0146167214557008

*Hamburg, M. E., & Pronk, T. M. (2015). Believe you can and you will: The belief in high self-control decreases interest in attractive alternatives. Journal of Experimental Social Psychology, 56, 30–35. https://doi.org/10.1016/j.jesp.2014.08.009

*Harding, J. F. (2015). Increases in maternal education and low-income children’s cognitive and behavioral outcomes. Developmental Psychology, 51, 583–599. https://doi.org/10.1037/a0038920

*Harkness, K. L., Bagby, R. M., Stewart, J. G., Larocque, C. L., Mazurka, R., Strauss, J. S., … Kennedy, J. L. (2015). Childhood emotional and sexual maltreatment moderate the relation of the serotonin transporter gene to stress generation. Journal of Abnormal Psychology, 124, 275–287. https://doi.org/10.1037/abn0000034

*Harris, E., McNamara, P., & Durso, R. (2015a). Novelty seeking in patients with right- versus left-onset Parkinson disease. Cognitive and Behavioral Neurology, 28, 11–16. https://doi.org/10.1097/WNN.0000000000000047

*Harris, M. A., Gruenenfelder-Steiger, A. E., Ferrer, E., Donnellan, M. B., Allemand, M., Fend, H., … Trzesniewski, K. H. (2015b). Do parents foster self-esteem? Testing the prospective impact of parent closeness on adolescent self-esteem. Child Development, 86, 995–1013. https://doi.org/10.1111/cdev.12356

*Hart, E. P., Dumas, E. M., van Zwet, E. W., van der Hiele, K., Jurgens, C. K., Middelkoop, H. M., … Roos, R. C. (2015). Longitudinal pilot-study of sustained attention to response task and P300 in manifest and pre-manifest Huntington’s disease. Journal of Neuropsychology, 9, 10–20. https://doi.org/10.1111/jnp.12031

*Harwood, J. (2015). Intergroup contact, prejudicial attitudes, and policy preferences: The case of the U.S. Military’s “Don’t Ask, Don’t Tell” policy. Journal of Social Psychology, 155, 57–69. https://doi.org/10.1080/00224545.2014.959886

*Heimler, B., van Zoest, W., Baruffaldi, F., Donk, M., Rinaldi, P., Caselli, M. C., & Pavani, F. (2015). Finding the balance between capture and control: Oculomotor selection in early deaf adults. Brain and Cognition, 96, 12–27. https://doi.org/10.1016/j.bandc.2015.03.001

Hempel, C. G. (1965). Aspects of scientific explanation and other essays in the philosophy of science. New York: Free Press. ISBN: 978-0029143407

*Henniger, N. E., & Harris, C. R. (2015). Envy across adulthood: The what and the who. Basic and Applied Social Psychology, 37, 303–318. https://doi.org/10.1080/01973533.2015.1088440

*Hentges, R. F., Davies, P. T., & Cicchetti, D. (2015). Temperament and interparental conflict: The role of negative emotionality in predicting child behavioral problems. Child Development, 86, 1333–1350. https://doi.org/10.1111/cdev.12389

*Hernandez, A.-L., Redersdorff, S., & Martinot, D. (2015). Which judgement do women expect from a female observer when they claim to be a victim of sexism? Current Research in Social Psychology, 22, 13:39–47. Retrieved from https://uiowa.edu/crisp/sites/uiowa.edu.crisp/files/crisp22_13.pdf

*Herrmann, E., Misch, A., Hernandez-Lloreda, V., & Tomasello, M. (2015). Uniquely human self-control begins at school age. Developmental Science, 18, 979–993. https://doi.org/10.1111/desc.12272

*Hill, S. C., Snell, A. F., & Sterns, H. L. (2015a). Career influences in bridge employment among retired police officers. International Journal of Aging and Human Development, 81, 101–119. https://doi.org/10.1177/0091415015614947

*Hill, S. E., Prokosch, M. L., & DelPriore, D. J. (2015b). The impact of perceived disease threat on women’s desire for novel dating and sexual partners: Is variety the best medicine? Journal of Personality and Social Psychology, 109, 244–261. https://doi.org/10.1037/pspi0000024

*Holmes, C. A., Nardi, D., Newcombe, N. S., & Weisberg, S. M. (2015). Children’s use of slope to guide navigation: Sex differences relate to spontaneous slope perception. Spatial Cognition and Computation, 15, 170–185. https://doi.org/10.1080/13875868.2015.1015131

Hubbard, R. (2016). Corrupt research: The case for reconceptualizing empirical management and social science. Los Angeles: Sage. ISBN: 978-1506305356

*Incerti, C. C., Argento, O., Pisani, V., Mannu, R., Magistrale, G., Battista, G. D., … Nocentini, U. (2015). A preliminary investigation of abnormal personality traits in MS using the MCMI-III. Applied Neuropsychology: Adult, 22, 452–458. https://doi.org/10.1080/23279095.2014.979489

*Ito, T. A., Friedman, N. P., Bartholow, B. D., Correll, J., Loersch, C., Altamirano, L. J., & Miyake, A. (2015). Toward a comprehensive understanding of executive cognitive function in implicit racial bias. Journal of Personality and Social Psychology, 108, 187–218. https://doi.org/10.1037/a0038557

*Jaspar, M., Dideberg, V., Bours, V., Maquet, P., & Collette, F. (2015). Modulating effect of COMT Val158Met polymorphism on interference resolution during a working memory task. Brain and Cognition, 95, 7–18. https://doi.org/10.1016/j.bandc.2015.01.013

*Jeffery, M. K., & Tweed, A. E. (2015). Clinician self-disclosure or clinician self-concealment? Lesbian, gay and bisexual mental health practitioners’ experiences of disclosure in therapeutic relationships. Counselling and Psychotherapy Research, 15, 41–49. https://doi.org/10.1080/14733145.2013.871307

*Jellett, R., Wood, C. E., Giallo, R., & Seymour, M. (2015). Family functioning and behaviour problems in children with Autism Spectrum Disorders: The mediating role of parent mental health. Clinical Psychologist, 19, 39–48. https://doi.org/10.1111/cp.12047

*Jeppsen, B., Pössel, P., Black, S. W., Bjerg, A., & Wooldridge, D. (2015). Closeness and control: Exploring the relationship between prayer and mental health. Counseling and Values, 60, 164–185. https://doi.org/10.1002/cvj.12012

*Jiang, J., Zhang, Y., Ke, Y., Hawk, S. T., & Qiu, H. (2015). Can’t buy me friendship? Peer rejection and adolescent materialism: Implicit self-esteem as a mediator. Journal of Experimental Social Psychology, 58, 48–55. https://doi.org/10.1016/j.jesp.2015.01.001

*Jonas, C. N., & Hibbard, P. B. (2015). Migraine in synesthetes and nonsynesthetes: A prevalence study. Perception, 44, 1179–1202. https://doi.org/10.1177/0301006615599905

*Jones, A. L., & Kramer, R. S. (2015). Facial cosmetics have little effect on attractiveness judgments compared with identity. Perception, 44, 79–86. https://doi.org/10.1068/p7904

*Jung, J., Jackson, S. R., Nam, K., Hollis, C., & Jackson, G. M. (2015). Enhanced saccadic control in young people with Tourette syndrome despite slowed pro-saccades. Journal of Neuropsychology, 9, 172–183. https://doi.org/10.1111/jnp.12044

*Kaiser, F. G., & Byrka, K. (2015). The Campbell paradigm as a conceptual alternative to the expectation of hypocrisy in contemporary attitude research. Journal of Social Psychology, 155, 12–29. https://doi.org/10.1080/00224545.2014.959884

*Kanaya, S., Fujisaki, W., Nishida, S., Furukawa, S., & Yokosawa, K. (2015). Effects of frequency separation and diotic/dichotic presentations on the alternation frequency limits in audition derived from a temporal phase discrimination task. Perception, 44, 198–214. https://doi.org/10.1068/p7753

*Kang, S. K., Galinsky, A. D., Kray, L. J., & Shirako, A. (2015a). Power affects performance when the pressure is on: Evidence for low-power threat and high-power lift. Personality and Social Psychology Bulletin, 41, 726–735. https://doi.org/10.1177/0146167215577365

*Kang, S., Tversky, B., & Black, J. B. (2015b). Coordinating gesture, word, and diagram: Explanations for experts and novices. Spatial Cognition and Computation, 15, 1–26. https://doi.org/10.1080/13875868.2014.958837

*Kárpáti, J., Donauer, N., Somogyi, E., & Kónya, A. (2015). Working memory integration processes in benign childhood epilepsy with centrotemporal spikes. Cognitive and Behavioral Neurology, 28, 207–214. https://doi.org/10.1097/WNN.0000000000000075

*Kattner, F. (2015). Transfer of absolute and relative predictiveness in human contingency learning. Learning & Behavior, 43, 32–43. https://doi.org/10.3758/s13420-014-0159-5

*Kim, K., & Lee, M. (2015). Depressive symptoms of older adults living alone: The role of community characteristics. International Journal of Aging and Human Development, 80, 248–263. https://doi.org/10.1177/0091415015590315

*Kim, S. H., Yoon, H., Kim, H., & Hamann, S. (2015). Individual differences in sensitivity to reward and punishment and neural activity during reward and avoidance learning. Social Cognitive and Affective Neuroscience, 10, 1219–1227. https://doi.org/10.1093/scan/nsv007

*Kim, Y. (2015). Language and cognitive predictors of text comprehension: Evidence from multivariate analysis. Child Development, 86, 128–144. https://doi.org/10.1111/cdev.12293

*Kleinman, D., Runnqvist, E., & Ferreira, V. S. (2015). Single-word predictions of upcoming language during comprehension: Evidence from the cumulative semantic interference task. Cognitive Psychology, 79, 68–101. https://doi.org/10.1016/j.cogpsych.2015.04.001

*Koster, D. P., Higginson, C. I., MacDougall, E. E., Wheelock, V. L., & Sigvardt, K. A. (2015). Subjective cognitive complaints in Parkinson disease without dementia: A preliminary study. Applied Neuropsychology: Adult, 22, 287–292. https://doi.org/10.1080/23279095.2014.925902

*Kunst, J. R., Thomsen, L., Sam, D. L., & Berry, J. W. (2015). “We are in this together”: Common group identity predicts majority members’ active acculturation efforts to integrate immigrants. Personality and Social Psychology Bulletin, 41, 1438–1453. https://doi.org/10.1177/0146167215599349

*Kurth, F., MacKenzie-Graham, A., Toga, A. W., & Luders, E. (2015). Shifting brain asymmetry: The link between meditation and structural lateralization. Social Cognitive and Affective Neuroscience, 10, 55–61. https://doi.org/10.1093/scan/nsu029

*Lange, J., & Crusius, J. (2015). Dispositional envy revisited: Unraveling the motivational dynamics of benign and malicious envy. Personality and Social Psychology Bulletin, 41, 284–294. https://doi.org/10.1177/0146167214564959

*Laurent, S. M., Clark, B. M., & Schweitzer, K. A. (2015a). Why side-effect outcomes do not affect intuitions about intentional actions: Properly shifting the focus from intentional outcomes back to intentional actions. Journal of Personality and Social Psychology, 108, 18–36. https://doi.org/10.1037/pspa0000011

*Laurent, S. M., Nuñez, N. L., & Schweitzer, K. A. (2015b). The influence of desire and knowledge on perception of each other and related mental states, and different mechanisms for blame. Journal of Experimental Social Psychology, 60, 27–38. https://doi.org/10.1016/j.jesp.2015.04.009

*Lebois, L. M., Wilson-Mendenhall, C. D., & Barsalou, L. W. (2015). Are automatic conceptual cores the gold standard of semantic processing? The context-dependence of spatial meaning in grounded congruency effects. Cognitive Science, 39, 1764–1801. https://doi.org/10.1111/cogs.12174

*Leerkes, E. M., Supple, A. J., O’Brien, M., Calkins, S. D., Haltigan, J. D., Wong, M. S., & Fortuna, K. (2015). Antecedents of maternal sensitivity during distressing tasks: Integrating attachment, social information processing, and psychobiological perspectives. Child Development, 86, 94–111. https://doi.org/10.1111/cdev.12288

*Leman, P. J. (2015). How do groups work? Age differences in performance and the social outcomes of peer collaboration. Cognitive Science, 39, 804–820. https://doi.org/10.1111/cogs.12172

*Lemay, E. J., Lin, J. L., & Muir, H. J. (2015). Daily affective and behavioral forecasts in romantic relationships: Seeing tomorrow through the lens of today. Personality and Social Psychology Bulletin, 41, 1005–1019. https://doi.org/10.1177/0146167215588756

*Leutgeb, V., Sarlo, M., Schöngassner, F., & Schienle, A. (2015). Out of sight, but still in mind: Electrocortical correlates of attentional capture in spider phobia as revealed by a “dot probe” paradigm. Brain and Cognition, 93, 26–34. https://doi.org/10.1016/j.bandc.2014.11.005

*Lev, M., Gilaie-Dotan, S., Gotthilf-Nezri, D., Yehezkel, O., Brooks, J. L., Perry, A., … Polat, U. (2015). Training-induced recovery of low-level vision followed by mid-level perceptual improvements in developmental object and face agnosia. Developmental Science, 18, 50–64. https://doi.org/10.1111/desc.12178

*Levitt, D. H., Farry, T. J., & Mazzarella, J. R. (2015). Counselor ethical reasoning: Decision-making practice versus theory. Counseling and Values, 60, 84–99. https://doi.org/10.1002/j.2161-007X.2015.00062.x

*Leyva, D., Weiland, C., Barata, M., Yoshikawa, H., Snow, C., Treviño, E., & Rolla, A. (2015). Teacher–child interactions in Chile and their associations with prekindergarten outcomes. Child Development, 86, 781–799. https://doi.org/10.1111/cdev.12342

*Li, J., Chen, Y., & Huang, X. (2015). Materialism moderates the effect of accounting for time on prosocial behaviors. Journal of Social Psychology, 155, 576–589. https://doi.org/10.1080/00224545.2015.1024192

*Limberg, D., Ohrt, J. H., Barden, S. M., Ha, Y., Hundley, G., & Wood, A. W. (2015). A cross-cultural exploration of perceptions of altruism of counselors-in-training. Counseling and Values, 60, 201–217. https://doi.org/10.1002/cvj.12014

*Liu, K., Jiang, Q., Li, L., Li, B., Yang, Z., Qian, S., … Sun, G. (2015). Impact of elevated core body temperature on attention networks. Cognitive and Behavioral Neurology, 28, 198–206. https://doi.org/10.1097/WNN.0000000000000078

*Liu, P., & Luhmann, C. C. (2015). Evidence for online processing during causal learning. Learning & Behavior, 43, 1–11. https://doi.org/10.3758/s13420-014-0156-8

*Locke, R. L., Miller, A. L., Seifer, R., & Heinze, J. E. (2015). Context-inappropriate anger, emotion knowledge deficits, and negative social experiences in preschool. Developmental Psychology, 51, 1450–1463. https://doi.org/10.1037/a0039528