Abstract

Current eye movement data analysis methods rely on defining areas of interest (AOIs). Due to the fact that AOIs are created and modified manually, variances in their size, shape, and location are unavoidable. These variances affect not only the consistency of the AOI definitions, but also the validity of the eye movement analyses based on the AOIs. To reduce the variances in AOI creation and modification and achieve a procedure to process eye movement data with high precision and efficiency, we propose a template-based eye movement data analysis method. Using a linear transformation algorithm, this method registers the eye movement data from each individual stimulus to a template. Thus, users only need to create one set of AOIs for the template in order to analyze eye movement data, rather than creating a unique set of AOIs for all individual stimuli. This change greatly reduces the error caused by the variance from manually created AOIs and boosts the efficiency of the data analysis. Furthermore, this method can help researchers prepare eye movement data for some advanced analysis approaches, such as iMap. We have developed software (iTemplate) with a graphic user interface to make this analysis method available to researchers.

Modern eye movement tracking techniques are widely used in cognitive science studies. By analyzing eye movements, researchers are able to understand the spatial and temporal characteristics of perceptual and cognitive processes (e.g., Blais, Jack, Scheepers, Fiset, & Caldara, 2008; Henderson, 2011; Liu et al., 2011). Deviations from typical eye movement patterns have been found to be associated with deficits in cognitive functions, which serves a unique function in understanding the ontology and development of some mental deficits, such as autism spectrum disorder (e.g., Dundas, Best, Minshew, & Strauss, 2012a; Dundas, Gastgeb, & Strauss, 2012b; Yi et al., 2013). The popularity of using eye-tracking techniques has engendered the development of eye movement analysis methods (e.g., Caldara & Miellet, 2011; Ellis, Xiao, Lee, & Oakes, 2017; Lao, Miellet, Pernet, Sokhn, & Caldara, 2017; Xiao et al., 2015). Most of these analysis approaches rely on computerized scripts that offer not only processing efficiency, but more importantly, standardized procedures to interpret eye movement data. However, in contrast to the computerized automated analysis procedures, area of interest (AOI) analysis, which is the fundamental procedure of these higher-level analyses, still relies on manual operations. This manual process is not only slow but also leaves the consistency of analyses unchecked. For example, AOIs vary in shape, size, and location across studies, which, to some extent, hinders the generalization of eye movement patterns across studies. Here we propose a template-based eye movement data analysis approach, which offers much higher efficiency and consistency of analysis than do traditional AOI-based analysis approaches. We have also created software with a graphic user interface to implement this analysis approach.

The current eye-tracking data analysis approach

Most current eye movement data analyses rely on AOIs to help interpret the eye movements. AOI analysis usually requires researchers to define several areas related to stimuli that vary with the research’s purposes. For example, in studies using face images as stimuli, researchers are usually interested in the fixations on several facial features, such as eyes, nose, and mouth. Therefore they create AOIs for the eyes, nose, and mouth and calculate statistics related to the fixations that fall in each AOI, such as the fixation duration, number of fixations, and average fixation duration for each AOI. These eye movement statistics are crucial to understanding the underlying perceptual and cognitive mechanisms. In other words, the creation of AOIs serves as the foundation for interpreting eye movement data.
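The AOI statistics described above can be illustrated with a minimal sketch. It assumes rectangular AOIs and fixations given as (x, y, duration) tuples; the AOI names, coordinates, and function names are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical rectangular AOIs: name -> (x_min, y_min, x_max, y_max), in pixels.
AOIS = {
    "eyes": (100, 120, 300, 180),
    "nose": (170, 180, 230, 260),
    "mouth": (150, 270, 250, 320),
}


def aoi_of(x, y, aois=AOIS):
    """Return the name of the first AOI that contains (x, y), or None."""
    for name, (x0, y0, x1, y1) in aois.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return None


def summarize(fixations, aois=AOIS):
    """Compute total duration, fixation count, and mean duration per AOI.

    fixations: iterable of (x, y, duration_ms) tuples.
    AOIs that receive no fixation are omitted from the result.
    """
    total = defaultdict(float)
    count = defaultdict(int)
    for x, y, duration in fixations:
        name = aoi_of(x, y, aois)
        if name is not None:
            total[name] += duration
            count[name] += 1
    return {
        name: {
            "total_duration": total[name],
            "n_fixations": count[name],
            "mean_duration": total[name] / count[name],
        }
        for name in count
    }


# Illustrative fixations; the last one falls outside every AOI.
fixations = [(150, 150, 200), (250, 160, 300), (200, 200, 250), (10, 10, 100)]
stats = summarize(fixations)
```

Because every statistic depends on which AOI a fixation falls in, any change in the AOI boundaries changes the resulting numbers, which is why the AOI definitions matter so much.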

The creation of AOIs, however, can be problematic. This is due mainly to the fact that AOIs are created manually, largely on the basis of one’s subjective judgments. For example, to create an AOI for the eyes in face stimuli, one has to define the AOI in terms of its shape, size, and location. These decisions are mostly made subjectively, which leads to variances among different face stimuli and different researchers. The eye AOIs can be two oval regions, one for each eye (e.g., Ellis et al., 2017); a rectangular region covering the two eyes and the area between them (e.g., Lewkowicz & Hansen-Tift, 2012); or polygonal regions that include both the eyes and eyebrows (e.g., Liu et al., 2011). These differences in the definition of AOIs in terms of size, shape, and location result in differences in determining whether or not a given fixation is included in a given AOI. Moreover, these variances in AOIs exist not only among different stimuli used in a single study, but also among different studies, which affects the validity of cross-study comparisons. Finally, these variances between AOIs affect the consistency of analyzing and interpreting eye movements.

Moreover, the variances caused by manually created AOIs are difficult to estimate or to avoid. On the one hand, this is true because the images used as experimental stimuli are heterogeneous in nature. Take face images, for example: Faces differ in size, shape, and the spatial configuration of facial features (e.g., eyes, nose, and mouth), which leads to the AOIs on different faces differing accordingly. On the other hand, this issue is also caused by human errors in creating or modifying AOIs. Although one can reduce variances in AOIs by controlling the AOIs’ shapes, sizes, and locations across stimuli and studies, these efforts usually are time-consuming and also likely to introduce more errors.

In sum, AOI-based analyses are susceptible to variances in creating the AOIs, which are difficult to measure or control. This issue affects not only the validity of the eye movement patterns described within a single study, but also comparisons among the findings of multiple studies. Furthermore, the reliance on AOIs to interpret eye movement data prevents the examination of eye movements at a micro-level, such as the investigation of the spatial distribution of eye movements at a pixel level (Caldara & Miellet, 2011; Lao et al., 2017).

A template-based eye movement data analysis approach

To address the issue of manually created AOIs in the traditional analysis approach, we propose a template-based analysis method. Instead of relying on a unique set of AOIs to analyze the eye movements on each individual stimulus, this method requires only one common set of standard AOIs for analyzing the eye movement data recorded from multiple visual stimuli, which greatly reduces the variances among multiple sets of AOIs. To this end, we used linear transformation algorithms to register the fixations for each individual image to a template space. This registration process is based on the spatial relations between fixations and the stimulus images. For example, a fixation at the tip of the nose in a single face image before transformation will be transformed to the nose tip of the template face image. In other words, the transformation retains the correspondence between gaze locations and visual features. After the transformation, the eye movements originally recorded from different stimuli are registered to the template. Thereby, this methodology enables the possibility of using only one set of standard AOIs on the template to summarize eye movements originally from various stimuli.

One of the critical technical challenges is how to transform the eye movement data for each individual stimulus to the template. To solve this problem, we capitalized on the fact that eyes’ on-screen positions recorded by eyetrackers share the same spatial referencing system with the visual stimulus (i.e., the image) presented on the screen. Specifically, the positions of both the eyes and the visual stimuli use the top left corner of the screen as the reference point (i.e., x = 0, y = 0). Thereby, any given eye position can be conceptualized as a pixel in relation to the visual stimuli. Thus, the problem of transforming eye movement data to match the template reduces to the problem of transforming each individual stimulus to the template, which is a typical image registration problem and can be solved by various geometric algorithms.

To register images to the template, we chose the linear geometric transformation method. We chose this method because the linear geometric transformation preserves the spatial relation of the original image after transformation. The linear transformation is based on several pairs of control points placed at corresponding locations in the original image (i.e., the image to be transformed) and the template image. For example, in face image transformation, a control point would be placed on the inner corner of the left eye in the original image. A corresponding control point would be placed on the inner corner of the left eye in the template face image. This control point based linear transformation attempts to register the locations of all control points in the original image to the locations of those in the template image. This registration process is done by manipulating three parameters: translation (i.e., the amount of change in the x and y directions), scale (i.e., the amount of resize), and rotation (i.e., the amount of rotation). Ideally, with these three parameters one could perfectly transform every pixel of an original image to the template image. As such, one could transfer eye movement data to the template with the same transformation parameters.
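To make the three parameters concrete, the following sketch estimates a single translation, scale, and rotation from pairs of control points by least squares and then applies them to a fixation. It is an illustration under stated assumptions, not the iTemplate implementation: the complex-number formulation, the function names, and the example coordinates are all choices made here for brevity.

```python
import cmath
import math


def fit_similarity(src, dst):
    """Least-squares translation + scale + rotation mapping src onto dst.

    Points are (x, y) tuples. The transform is written in complex form as
    z' = a * z + b, where abs(a) is the scale, the phase of a is the
    rotation, and b is the translation.
    """
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    n = len(zs)
    z_mean = sum(zs) / n
    w_mean = sum(ws) / n
    numerator = sum((z - z_mean).conjugate() * (w - w_mean) for z, w in zip(zs, ws))
    denominator = sum(abs(z - z_mean) ** 2 for z in zs)
    a = numerator / denominator
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(params, point):
    """Apply the fitted transform to one (x, y) point, e.g., a fixation."""
    a, b = params
    w = a * complex(*point) + b
    return (w.real, w.imag)


# Illustrative control points: dst is src scaled by 2, rotated by 90 degrees,
# and shifted by (10, 5).
src = [(0, 0), (1, 0), (0, 1)]
dst = [(10, 5), (10, 7), (8, 5)]

params = fit_similarity(src, dst)
scale = abs(params[0])
rotation_deg = math.degrees(cmath.phase(params[0]))
registered = apply_similarity(params, (0.5, 0.5))  # a fixation in the original image
```

In this constructed example the control points are related by an exact similarity transform, so the fit is perfect. With real face landmarks, the same fit would generally leave a residual at each control point.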

However, with one set of transformation parameters (i.e., translation, scale, and rotation) it is usually impossible to achieve a perfect transformation in which all control points in the original and template images match perfectly. Instead, the transformation usually results in a state of mismatch between the pairs of control points. This is because different control points require different transformation parameters in order to achieve perfect registration. Therefore, one cannot transform eye movement data perfectly using only one set of transformation parameters.

To solve this problem and achieve a precise registration, we used multiple sets of transformation parameters. Each set of parameters was used to register a specific region of the image. Each of these regions was defined by connecting three control points, which formed triangular regions. To do so, we segmented each image into several triangular subregions, which was done by connecting the control points by means of the two-dimensional Delaunay triangulation method (Fig. 1, top panel). The same triangular segmentation was then applied to the template image. As a result, each triangular region of the original image corresponded to that of the template image. The reason to use triangular regions is that linear transformation can achieve perfect registration within a polygon region with three edges (i.e., a triangular region). We applied the linear transformation to each triangular region separately, which generated a unique set of parameters for the region. To register any given eye movement data (e.g., fixations) on an original image, one just needed to determine which triangular region the fixation fell within and to apply the transformation parameters of that region to the fixation coordinates. In this way, on the basis of the control points, multiple sets of transformation parameters would be generated for each stimulus image, which could be used to accurately transfer the eye positions to the template.
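The per-triangle registration can be sketched as follows. This is a minimal illustration, not the iTemplate code: it assumes the triangles are already available as triples of control-point indices (for example, from a Delaunay triangulation routine such as `scipy.spatial.Delaunay`), and it expresses the per-triangle linear map through barycentric coordinates, which gives the same result as the unique affine map that sends the three vertices of a triangle to their template counterparts. All names and coordinates are hypothetical.

```python
def barycentric(p, tri):
    """Barycentric coordinates of point p relative to triangle tri.

    tri is a sequence of three (x, y) vertices.
    """
    (x1, y1), (x2, y2), (x3, y3) = tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    l1 = ((y2 - y3) * (p[0] - x3) + (x3 - x2) * (p[1] - y3)) / det
    l2 = ((y3 - y1) * (p[0] - x3) + (x1 - x3) * (p[1] - y3)) / det
    return l1, l2, 1.0 - l1 - l2


def register_point(p, src_pts, dst_pts, triangles, eps=1e-9):
    """Map p from the original image to the template space.

    src_pts / dst_pts: control points in the original and template images.
    triangles: triples of indices into those lists.
    Returns None when p lies outside every triangle.
    """
    for i, j, k in triangles:
        l1, l2, l3 = barycentric(p, (src_pts[i], src_pts[j], src_pts[k]))
        if min(l1, l2, l3) >= -eps:  # p is inside or on the edge of this triangle
            x = l1 * dst_pts[i][0] + l2 * dst_pts[j][0] + l3 * dst_pts[k][0]
            y = l1 * dst_pts[i][1] + l2 * dst_pts[j][1] + l3 * dst_pts[k][1]
            return (x, y)
    return None


# Illustrative control points and a two-triangle segmentation of a square.
src_pts = [(0, 0), (10, 0), (10, 10), (0, 10)]
dst_pts = [(0, 0), (20, 0), (24, 12), (0, 10)]
triangles = [(0, 1, 2), (0, 2, 3)]

fix_a = register_point((5, 2.5), src_pts, dst_pts, triangles)  # first triangle
fix_b = register_point((2, 8), src_pts, dst_pts, triangles)    # second triangle
fix_c = register_point((50, 50), src_pts, dst_pts, triangles)  # outside both
```

Every control point maps exactly onto its template counterpart under this scheme, which is the property that a single set of parameters cannot guarantee.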

Fig. 1 Schematic of the template-based analysis procedure. The top panel illustrates the calculation of transformation parameters for each triangular region (e.g., the green area), which are based on the control points (i.e., the red dots). By applying transformation parameters to the original images, one can register them to the template (the middle panel), which can be used to verify the transformation parameters. As is shown in the bottom panels, by applying the transformation parameters to fixations (i.e., the blue dots on the face images), one can transform and register the fixations to the template.

Advantages of the template-based eye movement analysis approach

This template-based approach can substantially improve the precision and efficiency of existing eye movement analysis methods.

A single set of AOIs

Given the fact that all eye movement data are transformed and registered to a single template, one needs only one set of AOIs on the template to categorize all eye movement data originally recorded from the different images. This would greatly reduce the variances that emanate from multiple sets of manually created AOIs for each of the original images.

Easy-to-modify AOIs

The single set of AOIs also makes modification of the AOIs much easier than is possible with traditional methods. This is because users need to change only one set of AOIs on the template image, rather than changing every set of AOIs for each original image according to the traditional method. Any change made on the single set of AOIs then applies to all the eye movement data. Because researchers thus need to work on only one set of AOIs, the possibility of human error in manually modifying the AOIs is substantially reduced.

Time saving

Because only one set of AOIs is needed to process all eye movement data at once, it is not surprising that users can save a great amount of time when creating or modifying AOIs. Moreover, because AOI creation and modification are perhaps the only procedures that require manual operation in analyzing eye movement data, the template-based analysis approach would enable a fully computer-script-based analysis pipeline for eye movement data processing. This means that researchers would also be able to see the outcomes of their AOI creations and modifications almost instantly.

In addition to facilitating the efficiency and accuracy of an analysis, the template-based method also engenders several novel eye movement analysis approaches.

Fine-grained eye movement analysis

As Caldara and his colleagues have indicated (Caldara & Miellet, 2011; Lao et al., 2017), traditional AOI-based eye movement analysis ignores eye movement data that are outside the predefined areas of interest. They proposed instead analyzing eye movement data at the pixel, rather than the AOI, level, so as to have a fine-grained understanding of looking patterns and the underlying mechanisms. However, the fact that visual stimuli such as faces and natural scenes are naturally heterogeneous makes it difficult to align the eye movement data for different stimuli (e.g., images of different faces and scenes) at the pixel level. This difficulty has practically hindered the efforts to use this advanced analysis method (i.e., using the iMap analysis tool). The template-based analysis method that we propose here specifically solves this issue by registering all eye movement data to a standardized template space. This would allow one to apply fine-grained analysis directly to the transformed eye movement data, without considering morphological differences among the original images.

Comparison of eye movement patterns among studies

Currently, comparisons between the eye movement findings of different studies have been made under the assumption that the AOIs used in the different studies are defined in similar ways. However, the variance in the definitions of AOIs among studies is actually relatively large, therefore affecting the comparison outcomes. Using the present template-based analysis method, it is possible to compare the eye movement patterns among studies in detail. This is because any eye movement data from different studies can easily be registered to a common template space. Thereby, the eye movement patterns from different studies can be “standardized” and analyzed by means of AOIs that are identically defined.

The iTemplate software

To implement this template-based analysis method and make it friendly to use for researchers, we have created software with a graphic user interface (GUI). This software is named iTemplate, which is primarily designed to process the fixation data from visual perception studies. Here we use eye movement data from a face processing study as an example to demonstrate the functions of iTemplate, mainly because face images are widely used in investigating visual perception and its development. Moreover, faces are heterogeneous but they share the same geometrical structure across various categories, such as species, races, and genders—for instance, two symmetrical eyes are above the nose, and the nose is above the mouth. This shared general spatial structure among faces makes such stimuli ideal candidates for using linear transformation to register any individual face to a face template. It should be noted that although iTemplate is ideal for studies using face stimuli, it includes a set of customization options, which allows it to be used to analyze eye movement data in studies with other types of stimuli, such as visual scenes and objects.

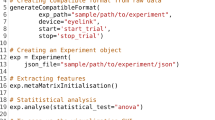

iTemplate is built using the Matlab platform by taking advantage of its image-processing algorithms. This program includes several major processing modules, for project management, landmark creation, transformation matrix calculation, eye movement data registration, AOI creation, and data export. These modules allow users to easily implement the template-based eye movement data-processing method in their data analysis practices. We introduce the functions of these modules in the following sections by following a typical procedure of visual fixation analysis.

Data preparation

iTemplate requires two types of data input: eye movement data and the face stimulus images. The eye movement data should be prepared in a spreadsheet format (e.g., .csv, .xls, or .xlsx). In the spreadsheet, each row represents an eye movement event (e.g., a fixation or an eye-tracking sample). As is shown in Table 1, the spreadsheet should include the following information in separate columns: participant ID, stimulus name, x-coordinate, y-coordinate, and duration. In particular, the “Stimulus name” column should list the names of the stimuli that correspond to each eye movement event. It should be noted that users need to include the full file names of stimulus images (e.g., “face_1.jpg”), rather than simply the base name of an image (e.g., “face_1”).
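As an illustration of this layout, the sketch below parses a small .csv sample and checks the two requirements stated above: the five columns are present, and stimulus names carry a file extension. The header spellings (`ParticipantID`, `StimulusName`, `X`, `Y`, `Duration`) and the function name are assumptions made for this example; the exact headers that iTemplate expects should be taken from Table 1 and the example data distributed with the software.

```python
import csv
import io
import os

# Hypothetical header names standing in for the five required columns.
REQUIRED = ["ParticipantID", "StimulusName", "X", "Y", "Duration"]

SAMPLE = """ParticipantID,StimulusName,X,Y,Duration
P01,face_1.jpg,512,384,220
P01,face_2.jpg,498,402,180
"""


def load_fixations(text):
    """Parse CSV text into a list of row dicts and validate its layout."""
    reader = csv.DictReader(io.StringIO(text))
    missing = [c for c in REQUIRED if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    rows = []
    for line_no, row in enumerate(reader, start=2):
        # The stimulus name must be a full file name such as "face_1.jpg".
        if not os.path.splitext(row["StimulusName"])[1]:
            raise ValueError(f"line {line_no}: stimulus name needs a file extension")
        for key in ("X", "Y", "Duration"):
            row[key] = float(row[key])
        rows.append(row)
    return rows


rows = load_fixations(SAMPLE)
```

Additional columns are passed through untouched by this sketch, in keeping with the option to store other information alongside the required fields.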

The coordinates in eye movement data should match the imported images, which is the default setting in most modern eyetrackers. It is recommended that the x- and y-coordinate values be based on the top left corner of the images imported to iTemplate. Accordingly, the stimulus images for iTemplate should be full-size screenshots of a stimulus during on-screen presentation. In addition to this required information, researchers are welcome to include any other information to this spreadsheet in separate columns.

Project management

The Project Management window is the first user interface that users see after starting iTemplate (Fig. 2). It provides some basic functions to manage research projects, such as to create a project or to open or delete an existing project.

To create a project, one needs to click the “New” button (Fig. 2). Then iTemplate asks users to import the stimulus images and their corresponding eye movement data files. After the images and eye movement data are successfully imported, iTemplate will examine whether the names of the imported images match the stimulus names in the eye movement data file. If all of the imported data pass this examination, the “Start” button will become active. When the user clicks the “Start” button, a new project will be created and stored in the “~/MATLAB/Eye Tracking Projects” folder, and the main window of iTemplate will appear.
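The kind of consistency check described here can be sketched in a few lines. The exact rule that iTemplate applies is not specified in the text, so the version below is only an assumption: it compares the set of image file names with the set of stimulus names in the data and reports any that do not match. The function and key names are hypothetical.

```python
def check_stimulus_names(image_files, data_stimulus_names):
    """Compare imported image names with stimulus names found in the data.

    Returns the names referenced in the data without a matching image,
    and the images that no eye movement event refers to.
    """
    images = set(image_files)
    referenced = set(data_stimulus_names)
    return {
        "missing_images": sorted(referenced - images),
        "unused_images": sorted(images - referenced),
    }


report = check_stimulus_names(
    ["face_1.jpg", "face_2.jpg", "face_3.jpg"],
    ["face_1.jpg", "face_2.jpg", "face_2.jpg", "face_4.jpg"],
)
all_matched = not report["missing_images"] and not report["unused_images"]
```

Running a check of this kind before import makes it easier to spot a stimulus column that lists base names instead of full file names.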

To open an existing project, one needs to select the project from the project list and then click the “Open” button. Opening a project leads to the main window of iTemplate.

Main iTemplate window

In the main window of iTemplate, users can see previews of the original face images and transformed images, if the transformation has been performed (Fig. 3). There are two types of operations users can do in this window: image processing and fixation processing. The image-processing panel focuses on calculating the transformation parameters and comprises functions such as landmark placement, transformation, template face selection, and resetting the template face. The fixation-processing panel offers functions to transform the fixations, process the AOIs, and export the processed data. Users are expected to start an analysis by placing landmarks.

Place face landmarks

Users can start placing landmarks by clicking the “Draw Landmarks” button in the main window (Fig. 3). The purpose of placing landmarks is to determine the locations of the control points for transformation. Users need to identify several points to indicate the major features of a face, such as the eyes, nose, mouth, and face contours.

In the opened Draw Landmarks window, the face template is displayed with the default landmarks on the right side. The landmarks on the face template serve as guidelines for locations of the landmarks on a face stimulus, which is displayed on the left side (Fig. 4). The landmarks on the face image and those on the face template correspond to each other, which is indicated by the number label next to each landmark. Users can drag any landmark with the mouse to locate that landmark on a face stimulus. Each landmark is supposed to be placed on the same face feature that is illustrated by its corresponding landmark on the template face.

In the default landmark layout, there are 39 landmarks that users need to place on each face image. To facilitate this process, we have designed an algorithm to estimate the positions of more than 75% of the landmarks on the basis of only nine landmarks. To achieve this semi-automatic process, we separate landmark placement into two steps: Users need first to place the nine reference landmarks on a face image according to the positions on the face template (Fig. 5, Procedures 1 & 2) and then to click the “Save” button to save these reference landmarks. iTemplate then estimates the rest of the landmarks on the face image. Once the estimated landmarks appear on the face, users only need to make some minor adjustments to the positions of the landmarks (Fig. 5, Procedures 3 & 4). It should be noted that the estimated landmarks will not necessarily land on the perfect spot on the corresponding image features. Thus, users will need to adjust the positions of the landmarks. To avoid the possibility that users will mistake the estimated landmarks as the correct ones, we purposely designed the “Save” function to be activated only if users have adjusted the locations of the estimated landmarks. If users do not change any of the estimated landmarks, the “Save” button will remain inactive. We have designed several image tools (e.g., zoom in and out) to help users place the landmarks. By clicking the “Save” button again, one can save the landmarks of a given face image.

Fig. 5 Schematic of a typical procedure of placing landmarks: Users (1) move the nine reference landmarks to their corresponding locations and (2) save the reference landmarks. This causes the software to (3) automatically generate other landmarks based on the nine reference landmarks. Users can then (4) move the auto-generated landmarks to their corresponding locations.

Customize landmark layout

In addition to the default landmark layout, iTemplate provides the ability to customize the landmark layouts, so that users can change the number of landmarks and their positions. In general, the number of landmarks determines the precision of the registration: The more landmarks are placed, the more precise the registration can be.

This customization allows users to modify the landmark layout according to the nature of the stimuli (e.g., the faces of different species). Moreover, it offers the ability to adjust the landmarks to reflect specific research purposes. For example, the landmarks in the default layout are placed on major facial features (e.g., eyes, eyebrows, nose, and mouth), given the fact that these features are the ones mostly attended to. If a study focuses on the perception of age-related facial information and the researchers wish to examine how wrinkles on the forehead are attended, it would be worthwhile to include some landmarks on the wrinkles so as to achieve precise registration of a particular region of interest. Moreover, the customization function provides the ability to use iTemplate for studies with other visual stimuli (e.g., visual scenes and objects). Users can customize the landmark layout to reflect the specific visual features of any visual stimuli.

Calculating the transformation matrices

After all the landmarks are placed on each face image, users are able to calculate the transformation parameters for each face stimulus, which are stored in matrices. These transformation matrices represent the specific sets of parameters that each image needs in order to be registered to the template. When the “Transform” button is clicked, a progress bar will appear to indicate the transformation progress, with a notification to indicate completion of the transformation.

Although transformation matrices are used to register eye movement data, being able to see how these matrices work to transform the original face images provides a straightforward method to verify the transformation. This is because the fixations and image pixels share the same reference system; therefore, the same transformation matrices for fixations can also transform each pixel of the original images to the template. Correct transformation would register a given face image to the face template, with the major facial features and face contours matched (see Fig. 1, middle panels). On the contrary, any mistake in transformation would result in a mismatch between the transformed face and the face template. Thus, users can use the match to verify the quality of the transformation. If users find any mismatch, they are encouraged to double-check the landmark placement in the “Draw Landmarks” window. To this end, after the transformation, users can see the transformed version of each face image in the right-side image of the main window.

In addition to using the default face template image, iTemplate offers the flexibility to choose any face image in the current project as the template face. To do so, users need only select a face image in the stimulus list, then click the “Set as Template Face” button. This will reset all existing transformation matrices; therefore, users would then need to redo the transformations by clicking the “Transform” button after assigning a new face as the template. The ability to choose any face as the template not only increases the software’s flexibility but, more importantly, allows users to prepare their eye movement data for analysis that is based on a specific face image. For example, some AOIs are created on the basis of a particular face.

Fixation registration

Once the transformation matrices are calculated and verified, users can move on to transform the fixations from different stimuli to the template, which is initiated by clicking the “Fixation Transform” button. After the transformation, users can choose where to save the transformed data. The transformation calculates transformed coordinates for each fixation, which will be added to the end of the imported data spreadsheet in two new columns: “Xtf” and “Ytf.”
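The shape of the exported data can be illustrated with a short sketch that appends the two new columns to each row. Only the column names “Xtf” and “Ytf” come from the description above. The other header names, the per-stimulus transform functions (simple placeholders here rather than real registration parameters), and the choice to leave the new cells empty when a fixation cannot be mapped are assumptions made for this example.

```python
import csv
import io


def add_transformed_columns(rows, transforms):
    """Append Xtf and Ytf to each row.

    rows: list of dicts with 'StimulusName', 'X', and 'Y' keys.
    transforms: stimulus name -> callable (x, y) -> (x', y') or None.
    """
    out = []
    for row in rows:
        new_row = dict(row)  # keep the original columns in their original order
        result = transforms[row["StimulusName"]](float(row["X"]), float(row["Y"]))
        # Assumption: leave the cells empty if the fixation cannot be mapped.
        new_row["Xtf"], new_row["Ytf"] = result if result is not None else ("", "")
        out.append(new_row)
    return out


def to_csv(rows):
    """Serialize rows to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {"ParticipantID": "P01", "StimulusName": "face_1.jpg", "X": "100", "Y": "200", "Duration": "220"},
    {"ParticipantID": "P01", "StimulusName": "face_2.jpg", "X": "5", "Y": "5", "Duration": "180"},
]
# Placeholder transforms standing in for the per-stimulus registration.
transforms = {
    "face_1.jpg": lambda x, y: (x * 0.5 + 10, y * 0.5 + 20),
    "face_2.jpg": lambda x, y: None,  # e.g., a fixation outside the registered region
}

registered = add_transformed_columns(rows, transforms)
csv_text = to_csv(registered)
```

Keeping the original coordinates next to the transformed ones lets users compare the two and rerun the registration without reimporting the raw data.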

Users can import new data to any existing project by using the “Import Fixation” menu at the top left corner to import new data files. The newly imported data will replace the existing data and be saved to the project’s folder within the “~/MATLAB/Eye Tracking Projects” folder.

Previews of eye movement data

A successful transformation of eye movement data activates previews of the eye movement patterns. As is shown in Fig. 6, three eye movement data preview modes are provided: (1) Fixations mode, in which the fixations on each face will be displayed as dots on the original and the transformed images; (2) HeatMap mode, in which the fixations on each single face stimulus are presented in a heat-map format by using colors to indicate fixation durations; and (3) HeatMap - All Stimuli mode, in which all fixations across all face stimuli are presented on the transformed face. Users can choose the type of fixation preview from the drop-down menu at the top right corner.
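A duration-weighted map of the kind shown in the HeatMap modes can be approximated by accumulating fixation durations into a coarse grid. The sketch below is a simplified assumption about how such a map could be built. It omits the spatial smoothing and color mapping that a rendered heat map would normally include, and the cell size and names are illustrative.

```python
def duration_map(fixations, width, height, cell=10):
    """Sum fixation durations into a grid of cell x cell pixel bins.

    fixations: iterable of (x, y, duration) tuples in image pixel coordinates.
    Returns a list of rows, indexed as grid[row][col]. Fixations outside the
    image bounds are ignored.
    """
    n_cols = (width + cell - 1) // cell
    n_rows = (height + cell - 1) // cell
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    for x, y, duration in fixations:
        if 0 <= x < width and 0 <= y < height:
            grid[int(y) // cell][int(x) // cell] += duration
    return grid


# Illustrative fixations on a 40 x 20 pixel image; the last one is off-image.
fixations = [(5, 5, 100), (7, 3, 50), (25, 15, 200), (500, 5, 10)]
grid = duration_map(fixations, width=40, height=20, cell=10)
```

Feeding the transformed coordinates from all stimuli into one such grid corresponds to the aggregated view, whereas using the fixations of a single stimulus corresponds to the per-stimulus view.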

AOI-based analyses

As introduced earlier, most studies have relied on AOIs to interpret eye movement data (Fig. 7). iTemplate includes a tool to create and edit AOIs and summarize eye movement data. Users can access the AOI analysis tool by clicking the “AOI Analysis” button in the main window. Similar to most of the existing AOI analysis tools, in the AOI analysis window, users can create AOIs of three shapes: ellipses, rectangles, and polygons. Users can specify the location, size, and shape of the AOIs by dragging the AOIs with a mouse. Because all the eye movement data are registered to the face template, users only need to create or modify one set of AOIs for all eye movement data. Once the AOI creation or editing is complete, iTemplate will process the eye movement data on the basis of the AOIs and label the fixations/eye gazes with AOI names in the exported data file.
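Labeling fixations with AOI names amounts to a point-in-shape test for each of the three AOI shapes. The following sketch shows one way to write those tests. It is not taken from iTemplate: the AOI definitions, the first-match rule for overlapping AOIs, and the even-odd ray-casting test for polygons are assumptions chosen for illustration.

```python
def in_rectangle(p, x0, y0, x1, y1):
    """True if p lies inside the axis-aligned rectangle."""
    return x0 <= p[0] <= x1 and y0 <= p[1] <= y1


def in_ellipse(p, cx, cy, rx, ry):
    """True if p lies inside the axis-aligned ellipse centered at (cx, cy)."""
    return ((p[0] - cx) / rx) ** 2 + ((p[1] - cy) / ry) ** 2 <= 1.0


def in_polygon(p, vertices):
    """Even-odd ray casting: count edge crossings of a ray going right from p."""
    x, y = p
    inside = False
    n = len(vertices)
    for i in range(n):
        xa, ya = vertices[i]
        xb, yb = vertices[(i + 1) % n]
        if (ya > y) != (yb > y):  # this edge straddles the horizontal line at y
            x_cross = xa + (y - ya) * (xb - xa) / (yb - ya)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical AOIs defined once, on the template image.
AOIS = [
    ("left_eye", lambda p: in_ellipse(p, 120, 150, 30, 15)),
    ("nose", lambda p: in_rectangle(p, 170, 180, 230, 260)),
    ("mouth", lambda p: in_polygon(p, [(150, 280), (250, 280), (230, 320), (170, 320)])),
]


def label_fixation(p, aois=AOIS):
    """Return the name of the first AOI containing p, or None."""
    for name, contains in aois:
        if contains(p):
            return name
    return None


labels = [label_fixation(p) for p in [(125, 155), (200, 200), (200, 300), (10, 10)]]
```

Because the fixations have already been registered to the template, one list of AOIs like this can label the data from every stimulus.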

iMap analysis

iMap is a novel data-driven eye movement data analysis approach (Caldara & Miellet, 2011; Lao et al., 2017). Instead of focusing on the eye movement data at the regional level (i.e., the AOI approach), iMap examines eye movement patterns at the pixel level. The pixel-level analysis offers the capability to reveal eye movement patterns at a much broader and finer scale, which is not limited to the regions of interest.

To generate reliable cross-stimulus comparisons at the pixel level, one needs to ensure that the corresponding pixels (e.g., a pixel at the 100th row and 100th column) of different images represent similar image features (e.g., the outer corner of the left eye). However, given the fact that faces are morphologically heterogeneous in nature, this correspondence at the pixel level is difficult to achieve using the original, nonedited face images. Consequently, this issue has generally kept researchers from using iMap to analyze eye movement data.

The registration of fixations and face images in iTemplate provides a solution to the practical issue in using iMap. Due to the fact that all fixations are registered to a template face space, the pixel correspondence issue is largely addressed by the registration process. As is shown in Fig. 8, the registration results in quite different aggregated eye movement patterns than do the nontransformed fixation data. This example indicates that iTemplate benefits the use of iMap by offering higher-level and more accurate fixation coordinates. To this end, we created an export function to convert the registered fixation data for iMap analysis. Users can import the output data files directly into the iMap 4 application. It should be noted that the benefit of using iTemplate is not limited to iMap analysis; our software can provide strong support for any spatial analysis methods existing or to be developed in the future.

Fig. 8 Illustration of the fixation patterns (the colored regions) aggregated from different face stimuli. The pattern on the left is from the original fixation data, and the right one is from the same fixation data after template-based transformation. The same fixation data exhibit different patterns, suggesting that the template-based transformation can greatly reduce errors in aggregating cross-stimulus eye movement data at a fine-grained level.

System requirements

iTemplate has been tested in, and is supported by, MATLAB 2013b and later versions on both Mac and Windows operating systems. Compatibility with Linux systems has yet to be tested.

Availability

The iTemplate software, example images, and eye movement data can be found at GitHub (https://github.com/DrXinPsych/iTemplate) and the MATLAB File Exchange (https://www.mathworks.com/matlabcentral/fileexchange/65097-itemplate).

Installation and update

Installation requires only double-clicking the “iTemplate.mlappinstall” installation file. Once iTemplate is installed in MATLAB, one can open it by clicking the iTemplate icon in the Apps tab of MATLAB. Alternatively, users can download all of the iTemplate files from GitHub and use iTemplate by running the iTemplate.m file in MATLAB.

To update iTemplate, users can install the latest “iTemplate.mlappinstall” file or replace the existing files with the updated ones from GitHub.

Conclusion

We have proposed a template-based eye movement analysis approach. By registering eye movement data to a template, this approach greatly reduces the errors common in current AOI analyses. It also reduces the reliance on manual operations and promotes the standardization of eye movement data analysis procedures. Furthermore, it allows researchers to use fine-grained analysis methods more readily and opens up possibilities to compare eye movement data across studies. To implement this analysis method, we have developed iTemplate, which offers a user-friendly graphic interface to process eye movement data. We believe that the template-based analysis approach and iTemplate software will facilitate eye movement data analysis practices and advance our understanding of eye movements and their underlying cognitive and neural mechanisms.

Author note

This research was supported by grants from the Natural Sciences and Engineering Research Council of Canada and the National Institutes of Health (R01 HD046526).

References

Blais, C., Jack, R. E., Scheepers, C., Fiset, D., & Caldara, R. (2008). Culture shapes how we look at faces. PLoS ONE, 3, e3022. doi:https://doi.org/10.1371/journal.pone.0003022

Caldara, R., & Miellet, S. (2011). iMap: A novel method for statistical fixation mapping of eye movement data. Behavior Research Methods, 43, 864–878. doi:https://doi.org/10.3758/s13428-011-0092-x

Dundas, E. M., Best, C. A., Minshew, N. J., & Strauss, M. S. (2012a). A lack of left visual field bias when individuals with autism process faces. Journal of Autism and Developmental Disorders, 42, 1104–1111. doi:https://doi.org/10.1007/s10803-011-1354-2

Dundas, E. M., Gastgeb, H., & Strauss, M. S. (2012b). Left visual field biases when infants process faces: A comparison of infants at high- and low-risk for autism spectrum disorder. Journal of Autism and Developmental Disorders, 42, 2659–2668. doi:https://doi.org/10.1007/s10803-012-1523-y

Ellis, A. E., Xiao, N. G., Lee, K., & Oakes, L. M. (2017). Scanning of own- versus other-race faces in infants from racially diverse or homogenous communities. Developmental Psychobiology, 59, 613–627. doi:https://doi.org/10.1002/dev.21527

Henderson, J. M. (2011). Eye movements and scene perception. In S. P. Liversedge, I. D. Gilchrist, & S. Everling (Eds.), The Oxford handbook of eye movements (pp. 593–606). Oxford, UK: Oxford University Press.

Lao, J., Miellet, S. B., Pernet, C., Sokhn, N., & Caldara, R. (2017). iMap4: An open source toolbox for the statistical fixation mapping of eye movement data with linear mixed modeling. Behavior Research Methods, 49, 559–575. doi:https://doi.org/10.3758/s13428-016-0737-x

Lewkowicz, D. J., & Hansen-Tift, A. M. (2012). Infants deploy selective attention to the mouth of a talking face when learning speech. Proceedings of the National Academy of Sciences, 109, 1431–1436. doi:https://doi.org/10.1073/pnas.1114783109

Liu, S., Quinn, P. C., Wheeler, A., Xiao, N. G., Ge, L., & Lee, K. (2011). Similarity and difference in the processing of same- and other-race faces as revealed by eye tracking in 4- to 9-month-olds. Journal of Experimental Child Psychology, 108, 180–189. doi:https://doi.org/10.1016/j.jecp.2010.06.008

Xiao, N. G., Quinn, P. C., Liu, S., Ge, L., Pascalis, O., & Lee, K. (2015). Eye tracking reveals a crucial role for facial motion in recognition of faces by infants. Developmental Psychology, 51, 744–757. doi:https://doi.org/10.1037/dev0000019

Yi, L., Fan, Y., Quinn, P. C., Feng, C., Huang, D., Li, J., . . . Lee, K. (2013). Abnormality in face scanning by children with autism spectrum disorder is limited to the eye region: Evidence from multi-method analyses of eye tracking data. Journal of Vision, 13(10), 5. doi:https://doi.org/10.1167/13.10.5

Cite this article

Xiao, N.G., Lee, K. iTemplate: A template-based eye movement data analysis approach. Behav Res 50, 2388–2398 (2018). https://doi.org/10.3758/s13428-018-1015-x

DOI: https://doi.org/10.3758/s13428-018-1015-x