Abstract

Semantic feature production norms provide many quantitative measures of feature and concept variables that are needed to resolve several debates about the nature of the organization, both normal and pathological, of semantic memory. Although norms exist for several languages, no norms have yet been published in Spanish. This article presents a new set of norms for 400 living and nonliving concepts, collected from 810 Spanish-speaking participants. The norms consist of empirical collections of the features that participants used to describe the concepts. Four files were created: a concept–feature file, a concept–concept matrix, a feature–feature matrix, and a file of significantly correlated features. We expect these norms to be useful for researchers in experimental psychology, neuropsychology, and psycholinguistics.


Numerous theories have sought to describe the features that form an essential part of semantic representations. From the earliest semantic-feature models, such as the one proposed by Smith, Shoben, and Rips (1974), to recent models of semantic representation, both feature-based (Cree & McRae, 2003; Taylor, Moss, & Tyler, 2007; Vigliocco, Vinson, Lewis, & Garrett, 2004) and distributional (Baroni, Murphy, Barbu, & Poesio, 2010; Griffiths, Steyvers, & Tenenbaum, 2007; Jones & Mewhort, 2007), many theories have studied the properties and organization of the semantic features that constitute concepts. One fruitful way to study these issues is by collecting semantic feature production norms. According to Buchanan, Holmes, Teasley, and Hutchison (2013), researchers’ need for such norms is growing.

Semantic feature production norms consist of empirical collections of the features that people use to describe concepts. The data are obtained with a property generation task (also called the feature-listing task), in which participants are asked to list the features that best describe each of a set of concepts. This task and the resulting norms are relevant to diverse areas of psychology and have been used for decades to address theoretical and practical problems, but only recently have some norms become publicly available. To date, no norms have been published in Spanish. The aim of the present article is therefore to describe a large set of semantic feature production norms in Spanish, collected for 400 living and nonliving thing concepts. First, we briefly explain the importance of the norms and their main uses. Then, we describe the norms available in other languages. Finally, we offer a detailed description of our norms: the methods we used to record the features, the variables we considered, and the statistical analyses we performed.

Before addressing these topics, it is important to note that we do not consider the lists of features gathered in the norms to be the conceptual representations themselves. Overlooking this distinction would raise a series of problems. For instance, some features that are important for certain concepts are rarely, if ever, listed by participants in the property generation task (e.g., the feature <has lungs> was never listed in our norms for any concept in the living things domain). The number and kinds of features that participants produce are influenced by the instructions they receive, the time available to them, the salience of the different features, whether those features can be verbalized, and so forth. Therefore, it would be wrong to assume that the features gathered in the norms exhaust all the relevant information involved in the representation of different concepts. For a detailed analysis of the problems that arise from assuming that the norms are a verbatim record of conceptual representations, see Rogers et al. (2004). We thus agree with McRae, Cree, Seidenberg, and McNorgan (2005) that the norms do not provide an exact record of conceptual representations. Nonetheless, the information they provide is valid, because participants systematically draw on those representations when they generate semantic features. Like these researchers, we also agree with Barsalou’s proposal concerning how semantic features are produced in a property generation task: “participants construct a holistic simulation of the target category (e.g., a particular chair), and then interpret this simulation using property and relation simulators (e.g., property simulators for seat, back and legs). Feature listing simply reflects one of many possible temporary abstractions that can be constructed online to interpret a particular member” (Barsalou, 2003, p. 1184).

Importance of feature norms

The elaboration of semantic feature production norms is important for several reasons. In this section, we consider some of the theoretical, experimental, and practical issues that can be addressed by means of the norms.

Regarding theoretical matters, the norms provide many quantitative and qualitative measures of feature variables that are needed to resolve the debates about the nature of the organization, both normal and pathological, of semantic memory. For example, according to some proposals (Farah & McClelland, 1991; Warrington & Shallice, 1984), feature type is the crucial factor that differentiates concepts in the living things domain from those in the nonliving things domain, and it would therefore be the key factor in the organization of semantic memory. Sensory features would be more important in the representation of the first kind of concepts, and functional features in the representation of the second. Moreover, this systematic difference has been used to account for the patterns of impairment that characterize some category-specific semantic deficits (Warrington & Shallice, 1984). Other researchers argue that feature type is not the only important factor in the organization and eventual degradation of semantic memory, and that other variables (such as feature distinctiveness and feature correlations) are relevant as well. Semantic feature production norms are very useful for assessing these competing claims. This is shown, for example, by Cree and McRae (2003), who studied the factors that determine the pattern of semantic categories observed in category-specific semantic deficits and concluded that, besides knowledge type, other variables such as feature informativeness, concept confusability, visual complexity, familiarity, and name frequency also play a role. Moreover, Zannino, Perri, Pasqualetti, Caltagirone, and Carlesimo (2006) observed that the data regarding category composition vary depending on whether concept-dependent or concept-independent correlation measures are used. They also showed that feature dominance plays an important role in determining which feature type is relevant for the living or the nonliving domain.

The debate about the representational code (or codes) of conceptual knowledge is another interesting theoretical issue that can be addressed with feature norms. This is illustrated by a study by Wu and Barsalou (2009), although these researchers worked with a property generation (feature listing) task, the type of task we used to collect the norms, rather than with the norms themselves.

Another interesting study aimed at elucidating the relationship between different models of conceptual representation was carried out by Riordan and Jones (2011), who compared and integrated distributional and feature-based models of semantic memory.

Semantic feature production norms have also been used to construct experimental stimuli, because they provide a large number of variables on which stimuli can be selected. For example, researchers may need to select features with certain properties, such as high distinctiveness or relevance, to study their influence on phenomena such as semantic interference (e.g., Vieth, McMahon, & de Zubicaray, 2014). They may also need to select concepts with specific characteristics, such as a high number of features (semantic richness), to analyze their effect on certain cognitive processes (e.g., Grondin, Lupker, & McRae, 2009; Pexman, Hargreaves, Siakaluk, Bodner, & Pope, 2008; Yap, Lim, & Pexman, 2015). Moreover, the norms are useful for measuring semantic similarity between concepts in order to study semantic effects (Montefinese, Zannino, & Ambrosini, 2015; Vigliocco, Vinson, Damian, & Levelt, 2002; Vigliocco et al., 2004). For these and many other purposes, semantic feature production norms are the most suitable database from which to obtain the relevant information.

The norms can also have a practical application in the design of tools adapted to local needs, such as psychometric instruments for clinical diagnosis and intervention. One example is the use of semantic similarity values (Moldovan, Ferré, Demestre, & Sánchez-Casas, 2014) as a measure of how strongly the pairs of concepts selected as response options in matching-to-sample tasks are related. Another example is the selection of semantic features with high production frequency as semantic cues in naming tests (see, e.g., Manoiloff et al., 2013).

Existing norms

Semantic feature production norms have been collected by other researchers, especially in English. Perhaps the earliest feature-listing studies were carried out by Rosch and Mervis (1975), Ashcraft (1978), and Hampton (1979). More recent work has been done by Wu and Barsalou (2009), Devlin, Gonnerman, Andersen, and Seidenberg (1998), and Moss, Tyler, and Devlin (2002). All of these databases were built from English speakers’ responses and covered concrete objects.

In spite of the relevance of those databases, none of them was made publicly available. The first norms to be published were those collected by McRae and colleagues (McRae et al., 2005). These norms include 541 living and nonliving thing concepts and provide a large number of variables that characterize the concepts and their features. They were collected from English-speaking participants and include information regarding individual concepts, features in general, and features for each concept, as well as information about similarities between concepts.

Among the other publicly available norms, it is worth mentioning those collected by Vinson and Vigliocco (2008), who made an interesting contribution because their norms include not only concrete nouns referring to objects but also nouns and verbs referring to events. This information sheds light on the differences in the representation of these grammatical categories. Recently, Buchanan et al. (2013) developed a searchable web portal that includes these norms and McRae et al.’s (2005) norms, as well as other normative data, allowing researchers to create experimental stimuli easily.

Another interesting collection of normative data was developed by De Deyne et al. (2008) for the Dutch population. These norms provide useful data not only on semantic features but also on the relation between exemplars and categories, as well as on other psycholinguistic variables related to the concepts (such as age of acquisition, familiarity, and imageability).

Other new norms have also been published recently. For example, Devereux, Tyler, Geertzen, and Randall (2014) collected data for 638 concepts, thus extending the set of concepts selected by McRae and colleagues, and included features produced by at least two participants (in McRae et al.’s, 2005, norms, by contrast, a feature had to be produced by at least five participants to be included). Other recent norms were collected by Lenci et al. (2013), who tested both blind and sighted participants. These norms comprise nouns and verbs and offer an interesting comparison between the two groups. Moreover, Lebani, Bondielli, and Lenci (2015) conducted a normative study to characterize the semantic content of thematic roles for a group of Italian verbs.

In recent years, norms have also appeared in other languages: Italian (Kremer & Baroni, 2011; Lenci et al., 2013; Montefinese, Ambrosini, Fairfield, & Mammarella, 2013), German (Kremer & Baroni, 2011), and Dutch (Ruts et al., 2004). However, as far as we know, the feature production norms presented here are the first for Spanish speakers. Although previous work has been done on linguistic corpora with Spanish and Argentinean populations (Peraita & Grasso, 2010) and on psycholinguistic variables involved in lexico-semantic processing (Izura, Hernández-Muñoz, & Ellis, 2005), these are the first Spanish norms for semantic feature production.

It is worth noting that, among human communities that share the same basic cultural foundations, studies suggest no remarkable cross-linguistic differences in the features that participants use to characterize concepts (Kremer & Baroni, 2011). However, some linguistic and cultural factors do affect feature production, and these differences deserve to be reported. For instance, in McRae et al.’s (2005) norms the feature <polka> was produced by many participants for the concept accordion, but the feature <tango> was never mentioned; conversely, in our norms <tango> shows a high production frequency for accordion, while <polka> was never listed. These and other peculiarities are captured by developing local norms.

The Spanish norms

Participants

Our sample consisted of 810 undergraduate students (73% women) at the National University of Mar del Plata, Argentina. Their ages ranged from 20 to 40 years (mean = 24 years). All participants gave their informed consent to take part in this research, and all were native speakers of Argentinean Spanish.

Stimuli

The concepts were taken from the database built by Cycowicz, Friedman, Rothstein, and Snodgrass (1997). The following criteria were used to select them: (a) these concepts and the corresponding pictures are frequently used in psychological experiments and tests of semantic memory, (b) the concepts are included in the expanded norms for experimental pictures for the Argentinean Spanish-speaking population (Manoiloff, Artstein, Canavoso, Fernández, & Segui, 2010), and (c) most of them (229 concepts) are included in McRae et al.’s norms. Each concept corresponds to a single noun in Argentinean Spanish. For polysemous terms, a key was added to clarify the target meaning.

The norms include 400 concepts belonging to 22 semantic categories from the living and nonliving things domains. The categories and the number of exemplars in each (in parentheses) are as follows. Living things domain (129): animals (93), vegetables (12), fruits (15), and plants (9). Nonliving things domain (227): accessories (19), weapons (4), tools (33), constructions (17), house parts (10), clothing (17), utensils (29), furniture (14), vehicles (17), devices (13), objects (27; see Footnote 1), containers (16), and toys (11). Salient exceptions (44): food (6), musical instruments (14), body parts (19), and nature (5). Food, musical instruments, and body parts are exceptional cases because, according to the literature on category-specific deficits, they behave neither as nonliving nor as living things (see Mahon & Caramazza, 2009). We also treated the category nature as a salient exception because it includes concepts such as cloud and moon that are nonliving but, at the same time, are not manufactured by humans. The concepts were also chosen to span a wide range of familiarity values, although a minimum level of familiarity was required so that participants could provide useful information.

Data collection

The norms were collected over a period of 3 years at the National University of Mar del Plata (Argentina). Concepts were distributed in groups of 15 across different spreadsheets so that the categories were homogeneously represented. Each participant listed features for only one set of concepts. Care was taken to avoid including similar concepts, that is, concepts belonging to the same semantic subcategory, in the same spreadsheet. For example, cat and ant appear together in a spreadsheet, but ant and spider do not, because both are not only animals but also arthropods (the same phylum).

Participants were asked to list the features that describe the things to which the presented words referred. They were given 15 blank lines per concept on which to write the features. They were instructed to list different types of features, such as those concerning internal parts and physical properties (appearance, sound, smell, or touch). They were also encouraged to think about where, when, and for what purpose the object in question is used, and to consider the category to which it belongs. Two examples were provided, one for each domain. The instructions are presented in Appendix A. Each concept was described by 30 participants. Participants were not given a time limit; they took approximately 20–30 min to complete the task.

Recording

An individual file was created for each spreadsheet, with one page assigned to each concept. Because a very large number of participants contributed to the norms, their spontaneous answers expressed the same features in a wide variety of ways. For instance, to characterize the concept sun, some participants wrote “yellow,” whereas others wrote “is yellow” or “its color is yellow.” The variety of spontaneous responses is even wider in Spanish than in English, because Spanish adjectives are inflected for gender (feminine or masculine) and number (singular or plural) in agreement with the noun they modify. To deal with this variability, extensive work was needed to ensure that features conveying the same meaning were recorded identically, both within and across concepts. It was equally important to ensure that features with different meanings were recorded with different labels. These recording procedures constituted a process we call unification. This treatment of the data involves adjusting most of the features produced by participants, but it must be carried out without altering their original content. There are at least two important reasons to unify the features. First, the norms are intended to capture regularities in the production of semantic features, so the wide variety of spontaneous formulations must be reduced; otherwise, the vast information provided by the norms would be useless and impossible to analyze. Second, unification is necessary to compute many feature variables correctly. On the one hand, variables such as production frequency (i.e., the number of participants who wrote a certain feature for a specific concept) would be miscalculated if features were not unified within each concept. On the other hand, variables such as distinctiveness (which depends on the number of concepts in which a feature is listed) would be miscalculated if features were not unified across all concepts. For these reasons, we followed the recording criteria proposed by McRae and colleagues (2005), but we had to add new criteria to carry out this process successfully. The most important criteria we used are reported below (see Footnote 2):

All features consisting of adjectives were written in the singular masculine form, regardless of the number and gender of the corresponding concept.

Quantifiers (e.g., “generally” or “usually”) were eliminated, because the information provided by these words is expressed by the production frequency of the feature.

To identify features that referred to a subtype of a concept, we used the expression <can be> (e.g., for the concept apple, <can be red> and <can be green> were used; see Footnote 3).

Features consisting of a numeral quantifier preceding a noun, such as “has four legs,” were split into two separate features: <has four legs> and <has legs>. This decision was taken because such features contain two pieces of information, and we intended to preserve both.

Disjunctive features (such as “is red or black” in the case of ant) were also divided (in this example, into <is red> and <is black>). However, if a feature conveyed a conjunction (such as “is black and yellow,” in the case of the concept bee), it was not divided.

In some cases, words were added to the features. For example, an indefinite article (“a” or “an”) was added to features that referred to superordinate categories (e.g., “animal” was transformed into <an animal>), and the expression “used for” was added to features that referred to a function (e.g., “to carry things” was transformed into <used for carrying things>).

Every feature consisting of a verb was conjugated in the present indicative (e.g., “roar” was transformed into <roars> for the concept lion).

The word “has” was added to every feature that referred to the possession of a certain part or object, and it replaced any synonym (such as “possesses”) and any other word conveying a similar meaning (such as “with”). For example, for the concept lion, the features “possesses a mane,” “with a mane,” and “mane” were all replaced by <has a mane>.
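To make the effect of unification concrete, the following Python sketch shows how collapsing surface variants onto one label changes production frequency. It is only an illustration under stated assumptions: the norms were unified by hand, whereas here a small hypothetical lookup table (`CANONICAL`) stands in for that manual work, and the helper names `unify` and `production_frequencies` are invented for the example.

```python
from collections import Counter

# Hypothetical variant table mapping raw responses to canonical labels.
# In the norms this mapping was applied by hand using the criteria above;
# the entries here are only the examples mentioned in the text.
CANONICAL = {
    "yellow": "<is yellow>",
    "is yellow": "<is yellow>",
    "its color is yellow": "<is yellow>",
    "possesses a mane": "<has a mane>",
    "with a mane": "<has a mane>",
    "mane": "<has a mane>",
    "roar": "<roars>",
}


def unify(raw_feature: str) -> str:
    """Map a raw response to its canonical label; unknown responses are bracketed as-is."""
    key = raw_feature.strip().lower()
    return CANONICAL.get(key, f"<{key}>")


def production_frequencies(responses_per_participant):
    """Count how many participants produced each unified feature for one concept.

    A set is used per participant, so two raw responses that unify to the same
    label (e.g. "mane" and "with a mane") are counted once for that participant.
    """
    counts = Counter()
    for responses in responses_per_participant:
        counts.update({unify(r) for r in responses})
    return counts


# Usage: three participants describing "sun" in different words.
sun = production_frequencies([["yellow"], ["is yellow"], ["Its color is yellow", "hot"]])
print(sun["<is yellow>"])  # 3
```

Without unification, each of the three formulations of “is yellow” would receive a production frequency of 1 instead of a combined frequency of 3.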

Measures and statistics

In the following paragraphs, the measures contained in the norms and the statistics calculated from them will be described.

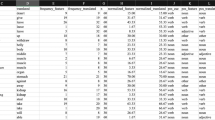

The total number of features produced by participants was 21,630. However, following McRae and colleagues (2005), we only included features produced by at least five participants (see Footnote 4). Features with production frequencies below 5 were discarded because we considered that they did not represent the knowledge that the community has about the concepts in question. Consequently, only 3,064 features were kept, 766 of which are distinctive. The mean number of features produced by each participant was 5.82 (SD = 2.25; range = 1–17).
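The inclusion criterion amounts to a simple filter on production frequency. The sketch below assumes a hypothetical in-memory layout, a dictionary mapping each concept to a dictionary of feature production frequencies; the function names and the toy counts are invented for illustration and are not taken from the norms.

```python
MIN_PF = 5  # inclusion threshold: feature produced by at least five participants


def filter_norms(norms, min_pf=MIN_PF):
    """Keep only concept-feature pairs whose production frequency reaches min_pf.

    norms: {concept: {feature: production_frequency}} (hypothetical layout).
    """
    return {
        concept: {f: pf for f, pf in feats.items() if pf >= min_pf}
        for concept, feats in norms.items()
    }


def count_pairs(norms):
    """Number of concept-feature pairs in a norms dictionary."""
    return sum(len(feats) for feats in norms.values())


# Toy data (invented counts, not taken from the norms).
raw = {
    "sun": {"<is yellow>": 24, "<is hot>": 19, "<is a star>": 4},
    "lion": {"<has a mane>": 22, "<roars>": 5, "<is brave>": 2},
}
kept = filter_norms(raw)
print(count_pairs(raw), "->", count_pairs(kept))  # 6 -> 4
```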

Four files were created (see Footnote 5): a concept–feature file, a concept–concept matrix, a feature–feature matrix, and a file of significantly correlated features. Each of these files is described below.

File 1. concept–feature

The first and second columns of this file contain the concept name in Spanish and English; the next two columns contain the semantic-category name in both languages; then the feature name is shown in both languages. The following column corresponds to feature type, coded according to the scheme proposed by Wu and Barsalou (2009), in an updated version sent to us personally by the last author. These researchers considered five major categories: taxonomic categories (C), situation properties (S), entity properties (E), introspective properties (I), and miscellaneous (M). These categories are represented in the column named WB_Label by the capital letters indicated above. In the present norms, 520 features were coded as taxonomic categories, 1,064 as situation properties, 1,383 as entity properties, and 97 as introspective properties. Wu and Barsalou (2009) also proposed a more detailed feature type classification, which is expressed in lowercase after the hyphen (see Appendix B for the complete coding scheme). Some features conveyed information related to more than one classification category; in general, we nevertheless assigned just one category, following McRae and colleagues’ (2005) criterion. There were, however, some exceptions: features referring to quantities (such as <has two wings>) were coded as E-quant + the corresponding feature category (in this example, E-excomp), and those including negations (such as <cannot fly>) were coded as I-neg + the corresponding feature category (in this example, E-beh). Finally, four columns give the number of features of each of Wu and Barsalou’s (2009) major types within the concept in question. (The miscellaneous category was excluded because no feature in our norms belongs to it.)

The next columns contain production frequency (Prod_Freq), that is, the number of participants who wrote the feature for the concept in question; ranked production frequency (Rank_PF), that is, the rank of the feature’s production frequency relative to the other features of the same concept; total production frequency (Sum_PF), the sum of the production frequencies of the feature across all concepts in which it appears; and CPF, the number of concepts in which the feature appears. Two measures related to CPF, which reflect whether or not a feature is shared among concepts, are also included: a binary qualitative variable (Disting) that indicates whether the feature is distinguishing (i.e., whether it appears in only one or two concepts rather than in more than two), and Distinctiveness, a continuous quantitative measure that locates the feature on a range from truly distinguishing to highly shared features (Devlin et al., 1998; Garrard, Lambon Ralph, Hodges, & Patterson, 2001). Following McRae and colleagues (2005), distinctiveness was calculated as the inverse of the number of concepts in which the feature appears in the norms (i.e., 1/CPF), considering all the concepts in the norms rather than only those in a particular semantic category. Cue validity, calculated as the production frequency of the feature divided by the sum of its production frequencies across all the concepts in which it appears (i.e., Prod_Freq/Sum_PF), was also added. Note that, unlike McRae and colleagues, we included taxonomic features in this last calculation. The reason is that cue validity does not involve other features (as is the case, for example, with intercorrelational strength), so taxonomic features are not mixed with other kinds of features. We thus provide researchers with information about taxonomic features that may be of interest without interfering with the measures computed for the other features.
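All the measures in this paragraph can be derived from production frequencies alone. The following sketch computes them for every concept–feature pair, assuming a hypothetical `{concept: {feature: production_frequency}}` layout already filtered to PF ≥ 5. The output keys mirror the column names described above, but the function `feature_statistics` is an illustration, not the procedure used to build the files. Ties in Rank_PF are broken here by input order, which is an assumption, since the text does not say how tied frequencies were ranked.

```python
from collections import defaultdict


def feature_statistics(norms):
    """Per concept-feature measures: PF, rank, Sum_PF, CPF, Disting, distinctiveness, cue validity.

    norms: {concept: {feature: production_frequency}} (hypothetical layout).
    """
    sum_pf = defaultdict(int)  # Sum_PF: total PF of a feature across concepts
    cpf = defaultdict(int)     # CPF: number of concepts listing the feature
    for feats in norms.values():
        for feature, pf in feats.items():
            sum_pf[feature] += pf
            cpf[feature] += 1

    rows = {}
    for concept, feats in norms.items():
        # Rank_PF: 1 = most frequently produced feature within this concept
        # (sorted() is stable, so ties keep their input order).
        ordered = sorted(feats, key=feats.get, reverse=True)
        for rank, feature in enumerate(ordered, start=1):
            pf = feats[feature]
            rows[(concept, feature)] = {
                "Prod_Freq": pf,
                "Rank_PF": rank,
                "Sum_PF": sum_pf[feature],
                "CPF": cpf[feature],
                "Disting": cpf[feature] <= 2,          # listed in one or two concepts
                "Distinctiveness": 1 / cpf[feature],   # 1 / CPF
                "Cue_Validity": pf / sum_pf[feature],  # Prod_Freq / Sum_PF
            }
    return rows


# Toy data (invented counts).
norms = {
    "bee": {"<is black and yellow>": 20, "<flies>": 18, "<an insect>": 15},
    "fly": {"<flies>": 25, "<an insect>": 20},
    "eagle": {"<flies>": 27},
}
row = feature_statistics(norms)[("bee", "<flies>")]
print(row["Sum_PF"], row["CPF"], row["Disting"])  # 70 3 False
```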

We also included another feature property that, like those mentioned above, has been shown to influence different cognitive processes: relevance (Rel) (Marques, Cappa, & Sartori, 2011; Sartori & Lombardi, 2004; Sartori, Lombardi, & Mattiuzzi, 2005; Sartori, Gnoato, Mariani, Prioni, & Lombardi, 2007). This variable is closely related to the distinguishing/nondistinguishing variable, distinctiveness, and cue validity, because all four are measures of feature informativeness. Despite this close relationship, which explains the high correlations among these variables, their importance differs across kinds of tasks (as shown by Sartori et al., 2005), which is why we decided to include all of them in the Spanish norms. Relevance integrates two components: a local one, which may be interpreted as production frequency or dominance and expresses the importance of a feature for a particular concept, and a global one, which may be interpreted as distinctiveness and expresses the extent to which the feature contributes to the meaning of the other concepts. To calculate relevance, we used the equation employed by Sartori et al. (2007):

$$k_{ij} = l_{ij} \, g_j = x_{ij} \log\left(\frac{I}{I_j}\right)$$

In this equation, $k_{ij}$ is the relevance of feature $j$ for concept $i$, $l_{ij}$ is the local component of relevance, and $g_j$ is its global component. $l_{ij}$, which is equivalent to $x_{ij}$, is the production frequency (see Footnote 6) of feature $j$ for concept $i$. $g_j$ is equivalent to $\log(I/I_j)$, where $I$ is the total number of concepts in the database in question and $I_j$ is the number of concepts in that database in which feature $j$ occurs.
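Relevance, as defined here, multiplies the local component (the production frequency of feature j in concept i) by the global component log(I/I_j). The sketch below implements that product over a hypothetical `{concept: {feature: production_frequency}}` dictionary; the function name `relevance` is invented. The text does not state the base of the logarithm, so the natural log is assumed; a different base would only rescale all values by a constant.

```python
import math


def relevance(norms):
    """Relevance k_ij = l_ij * g_j for every concept-feature pair.

    l_ij: production frequency of feature j in concept i (x_ij)
    g_j:  log(I / I_j), with I = number of concepts and I_j = concepts listing j
    Natural log is an assumption; the base is not stated in the text.
    """
    n_concepts = len(norms)  # I
    concepts_with = {}       # I_j for each feature
    for feats in norms.values():
        for feature in feats:
            concepts_with[feature] = concepts_with.get(feature, 0) + 1
    return {
        (concept, feature): pf * math.log(n_concepts / concepts_with[feature])
        for concept, feats in norms.items()
        for feature, pf in feats.items()
    }


# Toy data (invented counts).
norms = {
    "bee": {"<is black and yellow>": 20, "<flies>": 18, "<an insect>": 15},
    "fly": {"<flies>": 25, "<an insect>": 20},
    "eagle": {"<flies>": 27},
}
rel = relevance(norms)
print(round(rel[("bee", "<is black and yellow>")], 3))  # 21.972
print(rel[("eagle", "<flies>")])                          # 0.0
```

A feature listed for every concept in the database gets a global component of log(1) = 0, so its relevance is zero however often it is produced.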

Regarding these last four variables, it is essential to bear in mind that, because the norms include only a limited number of concepts, they cannot reflect with complete accuracy how distinctive the features actually are: a feature may also characterize concepts that were not included in the norms. To address this limitation, Devereux and colleagues (2014) included related concepts for each of the categories in their norms, so as to avoid having just one concept within certain semantic categories (e.g., they included at least two kinds of flowers). However, this approach does not fully solve the problem, because the norms still constitute a limited sample of the universe of existing concepts. Moreover, the criteria they used to define a “related concept” are not clear. A different approach to this problem was presented by Sartori et al. (2005), who focused on relevance in particular. They pointed out that this variable may be strongly influenced by the total number of concepts in a normative database. To investigate this influence, they compared the relevance values of various features computed in sets of different sizes (50, 100, 150, and 254 concepts). Their results indicated that relevance values calculated from the smaller samples predicted with high accuracy those obtained with the full 254-concept database. Although these findings show that relevance values are stable from sample to sample, the original problem remains unresolved: the available databases do not exhaust the vastness of our conceptual knowledge.

Some physical characteristics of the feature and concept names were also included: feature length including spaces between words (Feat_Lenght_Including_Spaces), and the number of letters (Length_Letters), phonemes (Length_Phonemes), and syllables (Length_Syllables) of each concept name. Another concept variable reported is familiarity (Familiarity), taken from the Argentinean psycholinguistic norms (Manoiloff et al., 2010).

Other variables refer to the concept’s feature composition. These include the number of features used to define the concept, counting every feature produced, even those produced by just one person (Total_Feat), and the number of features produced by at least five people, both including taxonomic features (5_Feat_Tax) and excluding them (5_Feat_No_Tax). We include these variants because they have been used as measures of semantic richness, but authors do not always agree on the criteria for deciding which features to count (e.g., Pexman, Lupker, & Hino, 2002; Pexman et al., 2008; Yap, Pexman, Wellsby, Hargreaves, & Huff, 2012). Some authors exclude taxonomic features because they consider that these features convey a different type of information from the rest. We therefore give interested readers all three options.
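The three richness variants differ only in which features are counted. The sketch below assumes a hypothetical input of unfiltered production frequencies for one concept and a set of features coded as taxonomic (WB label C). It also assumes that Total_Feat counts distinct features rather than response tokens, which is our reading of the description above; the function name `richness_measures` is invented.

```python
def richness_measures(raw_feats, taxonomic, min_pf=5):
    """Three semantic-richness counts for one concept.

    raw_feats: {feature: production_frequency}, including features produced once.
    taxonomic: features coded as taxonomic categories (WB label C).
    """
    kept = [f for f, pf in raw_feats.items() if pf >= min_pf]
    return {
        "Total_Feat": len(raw_feats),  # every distinct feature produced
        "5_Feat_Tax": len(kept),       # PF >= 5, taxonomic features included
        "5_Feat_No_Tax": sum(1 for f in kept if f not in taxonomic),
    }


# Toy data (invented counts).
lion = {"<an animal>": 25, "<has a mane>": 22, "<roars>": 5, "<is brave>": 2}
print(richness_measures(lion, taxonomic={"<an animal>"}))
# {'Total_Feat': 4, '5_Feat_Tax': 3, '5_Feat_No_Tax': 2}
```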

In this file, we also included four variables derived from the feature–feature matrix. The first is the intercorrelational strength (Intercor_Strength_No_Tax) of the concept’s features, which is the strength with which a target feature (e.g., <is golden>) is correlated with the rest of the features of a given concept (e.g., bell). It is calculated by adding the shared variances (i.e., r²) of the target feature with the other features of the same concept. For this calculation, we considered a significance level of p ≤ .05, which corresponds to |r| > .164 (i.e., 2.7% of shared variance; Sheskin, 2007). The second is intercorrelational density (Density_No_Tax), which is the sum of r² for the concept’s significantly correlated features and measures the degree to which a concept’s features are intercorrelated. Whereas intercorrelational strength is a feature variable, intercorrelational density is a concept variable. Both variables were calculated excluding taxonomic features. We made this decision because we considered that other kinds of features have an asymmetrical relation with taxonomic features and are included directly in the definition of the taxonomic category itself. For example, saying that something is <an_animal> includes the idea that it can have hair (<has_hair>) or legs (<has_legs>).
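A minimal sketch of the two measures, assuming feature-pair correlations are available in a dictionary keyed by unordered pairs and that taxonomic features have already been removed from the concept's list. It uses the |r| > .164 cutoff from the text and sums proportions (r²) rather than percentages; the helper names, the "bell" features, and the correlation values are hypothetical.

```python
from itertools import combinations

R_CRIT = 0.164  # |r| cutoff reported in the text (about 2.7% shared variance)


def r_between(r, a, b):
    """Correlation between features a and b; 0.0 if the pair is not listed."""
    return r.get(frozenset((a, b)), 0.0)


def intercorrelational_strength(target, features, r):
    """Sum of r**2 between `target` and each other feature of the same concept,
    counting only significant correlations (|r| > R_CRIT)."""
    total = 0.0
    for other in features:
        if other != target:
            value = r_between(r, target, other)
            if abs(value) > R_CRIT:
                total += value ** 2
    return total


def intercorrelational_density(features, r):
    """Sum of r**2 over every significantly correlated pair of the concept's
    features, each pair counted once."""
    return sum(r_between(r, a, b) ** 2
               for a, b in combinations(features, 2)
               if abs(r_between(r, a, b)) > R_CRIT)


# Hypothetical non-taxonomic features of "bell" and invented correlations
bell = ["is_golden", "is_made_of_metal", "makes_noise", "rings"]
r = {
    frozenset(("is_golden", "is_made_of_metal")): 0.30,
    frozenset(("makes_noise", "rings")): 0.50,
    frozenset(("is_golden", "rings")): 0.10,  # below the cutoff, ignored
}
print(intercorrelational_strength("is_golden", bell, r))
print(intercorrelational_density(bell, r))
```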

The last two variables are the number of significantly correlated feature pairs in concepts excluding taxonomic features (Num_Correl_Pairs_No_Tax) and the percentage of significantly correlated feature pairs excluding taxonomic features as well (%_Correl_Pairs_No_Tax).
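Both pair counts can be sketched with the same hypothetical data structure (correlations keyed by unordered feature pairs, taxonomic features already excluded). Taking all k(k − 1)/2 pairs of the concept's features as the denominator of the percentage is an assumption of this sketch, as are the names and values.

```python
from itertools import combinations

R_CRIT = 0.164  # |r| cutoff reported in the text


def correlated_pair_stats(features, r):
    """Return (number, percentage) of significantly correlated feature pairs.

    The percentage uses all k*(k-1)/2 pairs of the concept's non-taxonomic
    features as its denominator (an assumption of this sketch).
    """
    pairs = list(combinations(features, 2))
    n_sig = sum(1 for a, b in pairs
                if abs(r.get(frozenset((a, b)), 0.0)) > R_CRIT)
    pct = 100.0 * n_sig / len(pairs) if pairs else 0.0
    return n_sig, pct


# Hypothetical features of "bell" and invented correlations
bell = ["is_golden", "is_made_of_metal", "makes_noise", "rings"]
r = {
    frozenset(("is_golden", "is_made_of_metal")): 0.30,
    frozenset(("makes_noise", "rings")): 0.50,
    frozenset(("is_golden", "rings")): 0.10,
}
print(correlated_pair_stats(bell, r))
```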

Other potentially relevant concept variables regarding their feature composition were included. To calculate some of these variables, taxonomic features were excluded: number of distinguishing features (Num_Disting_Feats_No_Tax), percentage of distinguishing features (Disting_Feats_%_No_Tax), mean distinctiveness (Mean_Distinct_No_Tax), and mean cue validity (Mean_CV_No_Tax).
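These four variables can be sketched from a concept–feature table of production frequencies. The sketch assumes McRae et al.'s (2005) definitions, since the definitions used here are not restated in this section: distinctiveness is 1 divided by the number of concepts listing the feature, a feature is distinguishing if it is listed for at most two concepts, and cue validity is the feature's production frequency for the concept divided by its summed production frequency across all concepts. The miniature database is hypothetical.

```python
def concept_feature_stats(concept, norms, taxonomic=frozenset()):
    """Distinctiveness-related variables for one concept.

    norms     -- {concept: {feature: production_frequency}} for the whole database
    taxonomic -- features to exclude (the _No_Tax variants)
    """
    n_concepts = {}  # number of concepts listing each feature
    pf_total = {}    # production frequency of each feature summed over concepts
    for feats in norms.values():
        for f, pf in feats.items():
            n_concepts[f] = n_concepts.get(f, 0) + 1
            pf_total[f] = pf_total.get(f, 0) + pf

    feats = {f: pf for f, pf in norms[concept].items() if f not in taxonomic}
    if not feats:
        raise ValueError(f"{concept!r} has no non-taxonomic features")
    k = len(feats)
    n_disting = sum(1 for f in feats if n_concepts[f] <= 2)
    return {
        "Num_Disting_Feats_No_Tax": n_disting,
        "Disting_Feats_%_No_Tax": 100.0 * n_disting / k,
        "Mean_Distinct_No_Tax": sum(1.0 / n_concepts[f] for f in feats) / k,
        "Mean_CV_No_Tax": sum(pf / pf_total[f] for f, pf in feats.items()) / k,
    }


# Hypothetical miniature database
norms = {
    "bell": {"rings": 20, "is_made_of_metal": 10, "is_an_object": 8},
    "knife": {"cuts": 27, "is_made_of_metal": 15, "is_an_object": 6},
    "anchor": {"is_heavy": 12, "is_made_of_metal": 14},
    "trumpet": {"is_made_of_metal": 9, "makes_noise": 11},
}
print(concept_feature_stats("bell", norms, taxonomic={"is_an_object"}))
```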

Another concept variable, derived from the concept–concept matrix, is mean correlation (Mean_Corr). This variable is similar to the notion of normalized degree centrality (Freeman, 1979), under which concepts are treated as vectors of features and the proximity between two concepts results from the number of features they share. These relations can then be represented as a two-dimensional semantic network. The normalized degree centrality of a concept is then the ratio of its actual relations to its potential relations with the other concepts, expressed as a percentage.
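A minimal sketch of the relation between the two measures, assuming Mean_Corr is the average similarity of a concept with every other concept and that a network tie exists when similarity reaches a chosen cutoff (both are assumptions; the text does not give the exact formulas). The 4 × 4 matrix is hypothetical.

```python
def mean_correlation(sim, i):
    """Mean similarity of concept i with every other concept
    (assumed reading of Mean_Corr)."""
    n = len(sim)
    return sum(sim[i][j] for j in range(n) if j != i) / (n - 1)


def normalized_degree(sim, i, cutoff):
    """Freeman-style normalized degree centrality as a percentage: concepts
    linked to i (similarity >= cutoff) out of the n - 1 possible links."""
    n = len(sim)
    ties = sum(1 for j in range(n) if j != i and sim[i][j] >= cutoff)
    return 100.0 * ties / (n - 1)


# Hypothetical 4 x 4 concept-concept similarity matrix
sim = [
    [1.0, 0.8, 0.5, 0.0],
    [0.8, 1.0, 0.6, 0.1],
    [0.5, 0.6, 1.0, 0.0],
    [0.0, 0.1, 0.0, 1.0],
]
print(mean_correlation(sim, 0), normalized_degree(sim, 0, cutoff=0.4))
```

Both functions divide by the n − 1 potential links; the mean correlation sums the link weights, whereas the normalized degree counts the links that pass the cutoff.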

File 2. concept–concept matrix

This matrix contains the 400 concepts and reflects the semantic distance between every pair of concepts according to their feature composition. Semantic distances were calculated as the correlation between concepts, using the geometric technique of comparing two vectors in n-dimensional Euclidean space by the (smallest) angle between them. Parallel vectors (a cosine equal to 1) represent maximum similarity, and orthogonal vectors (a cosine equal to 0) represent maximum difference; because feature vectors built from production data contain no negative values, the cosine ranges from 0 to 1. The cosine of that angle was computed in the usual way, as the ratio between the inner product of the two vectors (the sum of their componentwise products) and the product of their Euclidean norms. It is worth mentioning that the idea of measuring semantic distance by constructing vectors from the set of features that defines a concept was originally proposed by Kintsch (2001).
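The cosine computation described above can be sketched in a few lines. The sketch assumes each concept is a vector of production frequencies over a shared, fixed list of features; the feature list and the values for "bell" and "knife" are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two concept vectors: inner product divided
    by the product of the Euclidean norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine is undefined for an all-zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical vectors over the features
# [is_made_of_metal, rings, cuts, has_a_handle]
bell = [10, 20, 0, 2]
knife = [15, 0, 27, 8]
print(round(cosine(bell, knife), 3))
```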

From these calculations we generated a one-mode square matrix (Borgatti & Everett, 1997) of the semantic distances between pairs of concepts. To verify the validity of this matrix, we applied a hierarchical clustering method (Johnson, 1967) to identify emergent clusters, using Ucinet 6 (Borgatti, Everett, & Freeman, 2002). These clusters are depicted in Fig. 1. Note that this figure is intended only to illustrate the clustering; the full information on the correlations between concepts can be found in the concept–concept matrix.
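Johnson (1967) describes agglomerative schemes in which the two closest clusters are merged step by step. The sketch below implements the single-link (connectedness) variant on a similarity matrix; it is a plain-Python illustration with hypothetical data, not a reproduction of the Ucinet 6 procedure used in the article.

```python
def single_link_merges(sim, labels):
    """Agglomerative single-link clustering on similarities: at each step, merge
    the two clusters whose most similar members are most similar.
    Returns the merges as (cluster_a, cluster_b, similarity_level)."""
    index = {label: k for k, label in enumerate(labels)}
    clusters = [frozenset([label]) for label in labels]
    merges = []
    while len(clusters) > 1:
        best = None  # (similarity, position_x, position_y)
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                link = max(sim[index[a]][index[b]]
                           for a in clusters[x] for b in clusters[y])
                if best is None or link > best[0]:
                    best = (link, x, y)
        link, x, y = best
        merges.append((clusters[x], clusters[y], link))
        merged = clusters[x] | clusters[y]
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)]
        clusters.append(merged)
    return merges


# Hypothetical similarities among four concepts
labels = ["dog", "cat", "car", "bus"]
sim = [
    [1.0, 0.8, 0.1, 0.0],
    [0.8, 1.0, 0.05, 0.1],
    [0.1, 0.05, 1.0, 0.7],
    [0.0, 0.1, 0.7, 1.0],
]
for a, b, level in single_link_merges(sim, labels):
    print(sorted(a), sorted(b), level)
```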

As Fig. 1 shows, concepts that belong to the same semantic category are clustered together: the more features two concepts share, the closer they are. For example, animals, means of transport, clothing, and musical instruments form clear clusters, whereas concepts such as anchor or bird nest, which share few features with other concepts, are not linked to any of them. Note that this plot includes only correlations ≥ .4; the sizes of the clusters might vary slightly if this cutoff were changed.
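The effect of the plotting cutoff can be sketched by keeping only links at or above it and collecting connected groups of concepts; concepts with no surviving link, like anchor in Fig. 1, come out as singletons. The similarity values are hypothetical, and this is only an illustration of thresholding, not how Fig. 1 was drawn.

```python
def threshold_components(sim, labels, cutoff=0.4):
    """Connected components of the graph that keeps only links with
    similarity >= cutoff; isolated concepts come out as singletons."""
    n = len(labels)
    seen = set()
    components = []
    for start in range(n):
        if start in seen:
            continue
        stack = [start]
        seen.add(start)
        comp = []
        while stack:  # depth-first traversal of surviving links
            i = stack.pop()
            comp.append(labels[i])
            for j in range(n):
                if j != i and j not in seen and sim[i][j] >= cutoff:
                    seen.add(j)
                    stack.append(j)
        components.append(sorted(comp))
    return components


# Hypothetical similarities among five concepts
labels = ["dog", "cat", "car", "bus", "anchor"]
sim = [
    [1.0, 0.8, 0.1, 0.0, 0.0],
    [0.8, 1.0, 0.05, 0.1, 0.0],
    [0.1, 0.05, 1.0, 0.7, 0.2],
    [0.0, 0.1, 0.7, 1.0, 0.1],
    [0.0, 0.0, 0.2, 0.1, 1.0],
]
print(threshold_components(sim, labels, cutoff=0.4))
print(threshold_components(sim, labels, cutoff=0.1))
```

Lowering the cutoff from .4 to .1 in this toy matrix merges every concept into a single group, which is the kind of change in cluster size the text warns about.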

File 3. feature–feature matrix

To construct this matrix, following McRae et al.’s (2005) criterion, we included only features shared by at least three concepts, which avoids spurious correlations between features. Of the 1,315 features produced in total, 186 met this criterion. The final square matrix in which statistical co-occurrence was calculated contained 17,205 distinct feature pairs: the 186 × 186 cells, minus the 186 diagonal cells that pair each feature with itself, divided by 2 because the matrix is symmetric, that is, (186 × 186 − 186) / 2 = 17,205.
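The selection criterion and the pair count can be checked with a short sketch. The concept–feature dictionary is a hypothetical stand-in for the norms; only the 186-feature figure comes from the text.

```python
def shared_features(norms, min_concepts=3):
    """Features listed for at least `min_concepts` concepts."""
    counts = {}
    for feats in norms.values():
        for f in feats:
            counts[f] = counts.get(f, 0) + 1
    return sorted(f for f, c in counts.items() if c >= min_concepts)


def n_distinct_pairs(k):
    """Off-diagonal cells of a symmetric k x k matrix, each pair counted once."""
    return (k * k - k) // 2


# Hypothetical miniature norms: {concept: {feature: production_frequency}}
norms = {
    "bell": {"is_made_of_metal": 10, "rings": 20},
    "knife": {"is_made_of_metal": 15, "cuts": 27},
    "anchor": {"is_made_of_metal": 14, "is_heavy": 12},
    "trumpet": {"makes_noise": 11},
}
print(shared_features(norms))
print(n_distinct_pairs(186))  # 17205
```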

Correlations were calculated using the CORREL function of the Google Spreadsheets service. From the resulting square matrix, the coefficient of determination was calculated by squaring each correlation value and multiplying it by 100, which yielded a second square matrix.
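A spreadsheet CORREL function returns the Pearson correlation coefficient of two ranges. The sketch below computes the same quantity for two feature columns, assuming each column holds a feature's production frequency in every concept (0 where the feature was not listed), and then converts it to a percentage of shared variance. The column values are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def determination_percent(r):
    """Coefficient of determination as a percentage (r squared times 100)."""
    return 100.0 * r * r


# Hypothetical production frequencies of two features across five concepts
has_wings = [20, 18, 0, 0, 5]
has_feathers = [22, 15, 0, 1, 0]
r = pearson(has_wings, has_feathers)
print(round(r, 3), round(determination_percent(r), 1))
```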

From this second matrix, we extracted some of the previously mentioned variables: features’ intercorrelational strength (Intercor_Strength_No_Tax), concepts’ intercorrelational density (Density_No_Tax), the number of significantly correlated feature pairs (Num_Correl_Pairs_No_Tax), and the percentage of significantly correlated feature pairs (%_Correl_Pairs_No_Tax).

File 4. significantly correlated feature pairs

This file includes the r² value of each significantly correlated pair of features, excluding taxonomic features.
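The rows of such a file can be sketched as below, assuming correlations are keyed by unordered feature pairs and using the |r| > .164 cutoff from the text. The function name, feature names, and values are hypothetical, and the real file's column layout may differ.

```python
from itertools import combinations

R_CRIT = 0.164  # |r| cutoff reported in the text


def significant_pairs(r, features, taxonomic=frozenset()):
    """Rows (feature_a, feature_b, r**2) for pairs with |r| > R_CRIT,
    skipping taxonomic features. `r` maps frozenset pairs to correlations."""
    kept = [f for f in features if f not in taxonomic]
    rows = []
    for a, b in combinations(kept, 2):
        value = r.get(frozenset((a, b)))
        if value is not None and abs(value) > R_CRIT:
            rows.append((a, b, value ** 2))
    return rows


# Hypothetical features and correlations
features = ["has_wings", "has_feathers", "flies", "is_a_bird"]
r = {
    frozenset(("has_wings", "has_feathers")): 0.90,
    frozenset(("has_wings", "flies")): 0.60,
    frozenset(("has_feathers", "flies")): 0.10,
    frozenset(("has_wings", "is_a_bird")): 0.95,  # dropped: taxonomic
}
for row in significant_pairs(r, features, taxonomic={"is_a_bird"}):
    print(row)
```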

Summary and future directions

Semantic feature production norms are crucial for the construction and empirical testing of theories about semantic memory and conceptual knowledge. Their importance has been shown over the years in numerous investigations, through which several problems in these areas have been addressed.

In this article, we have presented the first semantic feature production norms in Spanish. These norms cover a large set of concepts and include many of the most relevant feature and concept measures reported in previous norms. We expect this information to be very useful for researchers in the fields of experimental psychology, neuropsychology, and psycholinguistics.

Because feature norms have mostly been developed with adult populations, it would be very useful to investigate semantic feature norms for other age groups. We are currently collecting norms for elderly people and children, in order to explore developmental similarities and differences.

Another interesting line of research that makes use of existing semantic feature norms consists of comparing the semantic composition of concepts across languages. Kremer and Baroni (2011) carried out such a study, comparing Italian, German, and English. It is now also possible to include Spanish in subsequent comparisons, and we are currently exploring this topic (Lamas et al., 2012; Vorano et al., 2014).

Norms can also be used to identify and describe the features that constitute the core meaning of a concept, as well as the features that are more peripheral. This information is relevant because, as many authors have shown (e.g., Montefinese, Ambrosini, Fairfield, & Mammarella, 2013; Sartori & Lombardi, 2004), not every feature is equally important to the representation of different concepts. We are currently developing a line of research on this topic. Furthermore, we are also calculating a new measure called “relative weight,” which is very similar to accessibility (proposed by Montefinese et al., 2013).

To sum up, the existence of semantic feature production norms allows us to construct theoretical models, test hypotheses, generate experimental stimuli, and create clinical tests. The great relevance of each of these purposes is what has motivated the construction of the various existing norms, including the present Spanish semantic feature production norms.

Notes

This category refers to the nonliving-thing concepts that were not included in any other category of that domain. Cree and McRae (2003) used the term “miscellaneous nonliving-things category” for this type of concept, but we chose the term “object” because it conveys more information about the concepts it includes.

A much more extensive list of unification criteria can be requested from the authors.

The reader will note that the expression “can be” (puede ser in Spanish) was only used in the Spanish version of the features. In the English version, that expression was replaced by “E.g.,” because this is what McRae and colleagues (2005) used.

Zannino et al. (2006) made an interesting comment about the exclusion of many features due to the application of this production frequency cutoff.

These four files contain concepts and/or features in both Spanish and English. However, it is important to take into account that the English version has been published merely to facilitate communication with English readers. Therefore, the information that those files contain is not itself meant to be used as English normative data.

It is important to note that to calculate relevance we used raw production frequencies instead of dominance (the latter feature variable is calculated as the ratio between the production frequency of a feature and the number of participants who were presented with the concept). We used raw production frequencies because in our norms every concept was presented to 30 participants, so the two measures differ only by a constant factor. Sartori et al. (2007) also used raw production frequencies, although they used the terms “production frequency” and “dominance” as synonyms. By contrast, some authors (such as Montefinese et al., 2013) have used dominance to calculate relevance because they presented their concepts to varying numbers of participants.
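The equivalence can be checked with a minimal sketch, again assuming the Sartori and Lombardi (2004) form of relevance (local weight times the log of total concepts over concepts listing the feature). The feature counts below are hypothetical; only the 30 participants per concept and the 400 concepts come from the article.

```python
import math

N_PARTICIPANTS = 30  # every concept was presented to 30 participants


def dominance(pf, n_participants=N_PARTICIPANTS):
    """Proportion of participants given the concept who listed the feature."""
    return pf / n_participants


def relevance(local_weight, n_concepts_total, n_concepts_with_feature):
    """Assumed Sartori & Lombardi form: local weight x log(N / n_f)."""
    return local_weight * math.log(n_concepts_total / n_concepts_with_feature)


pf = 18  # hypothetical production frequency
with_pf = relevance(pf, 400, 12)
with_dom = relevance(dominance(pf), 400, 12)
print(with_pf / with_dom)  # the constant number of participants, up to rounding
```

With a fixed number of participants the two versions rank features identically; they diverge only when concepts are shown to different numbers of people.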

References

Ashcraft, M. H. (1978). Property norms for typical and atypical items from 17 categories: A description and discussion. Memory & Cognition, 6, 227–232. doi:10.3758/BF03197450

Baroni, M., Murphy, B., Barbu, E., & Poesio, M. (2010). Strudel: A corpus-based semantic model based on properties and types. Cognitive Science, 34, 222–254.

Barsalou, L. W. (2003). Abstraction in perceptual symbol systems. Philosophical Transactions of the Royal Society B, 358, 1177–1187.

Borgatti, S. P., & Everett, M. G. (1997). Network analysis of 2-mode data. Social Networks, 19, 243–269.

Borgatti, S. P., Everett, M. G., & Freeman, L. C. (2002). Ucinet for Windows: Software for social network analysis. Harvard, MA: Analytic Technologies.

Buchanan, E. M., Holmes, J. L., Teasley, M. L., & Hutchison, K. A. (2013). English semantic word-pair norms and a searchable Web portal for experimental stimulus creation. Behavior Research Methods, 45, 746–757. doi:10.3758/s13428-012-0284-z

Cree, G. S., & McRae, K. (2003). Analyzing the factors underlying the structure and computation of the meaning of chipmunk, cherry, chisel, cheese, and cello (and many other such concrete nouns). Journal of Experimental Psychology: General, 132, 163–201. doi:10.1037/0096-3445.132.2.163

Cycowicz, Y. M., Friedman, D., Rothstein, M., & Snodgrass, J. G. (1997). Picture naming by young children: Norms for name agreement, familiarity, and visual complexity. Journal of Experimental Child Psychology, 65, 171–237. doi:10.1006/jecp.1996.2356

De Deyne, S., Verheyen, S., Ameel, E., Vanpaemel, W., Dry, M. J., Voorspoels, W., & Storms, G. (2008). Exemplar by feature applicability matrices and other Dutch normative data for semantic concepts. Behavior Research Methods, 40, 1030–1048. doi:10.3758/BRM.40.4.1030

Devereux, B. J., Tyler, L. K., Geertzen, J., & Randall, B. (2014). The Centre for Speech, Language and the Brain (CSLB) concept property norms. Behavior Research Methods, 46, 1119–1127. doi:10.3758/s13428-013-0420-4

Devlin, J. T., Gonnerman, L. M., Andersen, E. S., & Seidenberg, M. S. (1998). Category-specific semantic deficits in focal and widespread brain damage: A computational account. Journal of Cognitive Neuroscience, 10, 77–94.

Farah, M. J., & McClelland, J. L. (1991). A computational model of semantic memory impairment: Modality specificity and emergent category specificity. Journal of Experimental Psychology: General, 120, 339–357. doi:10.1037/0096-3445.120.4.339

Freeman, L. C. (1979). Centrality in social networks: Conceptual clarification. Social Networks, 1, 215–239.

Garrard, P., Lambon Ralph, M. A., Hodges, J. R., & Patterson, K. (2001). Prototypicality, distinctiveness, and intercorrelation: Analyses of the semantic attributes of living and nonliving concepts. Cognitive Neuropsychology, 18, 125–174.

Griffiths, T. L., Steyvers, M., & Tenenbaum, J. B. (2007). Topics in semantic representation. Psychological Review, 114, 211–244. doi:10.1037/0033-295X.114.2.211

Grondin, R., Lupker, S. J., & McRae, K. (2009). Shared features dominate semantic richness effects for concrete concepts. Journal of Memory and Language, 60, 1–19. doi:10.1016/j.jml.2008.09.001

Hampton, J. A. (1979). Polymorphous concepts in semantic memory. Journal of Verbal Learning and Verbal Behavior, 18, 441–461.

Izura, C., Hernández-Muñoz, N., & Ellis, A. (2005). Category norms for 500 Spanish words in five semantic categories. Behavior Research Methods, 37, 385–397.

Johnson, S. (1967). Hierarchical clustering schemes. Psychometrika, 32, 241–253.

Jones, M. N., & Mewhort, D. J. K. (2007). Representing word meaning and order information in a composite holographic lexicon. Psychological Review, 114, 1–37. doi:10.1037/0033-295X.114.1.1

Kintsch, W. (2001). Predication. Cognitive Science, 25, 173–202. doi:10.1207/s15516709cog2502_1

Kremer, G., & Baroni, M. (2011). A set of semantic norms for German and Italian. Behavior Research Methods, 43, 97–109. doi:10.3758/s13428-010-0028-x

Lamas, V., Vivas, J., & Vorano, A. (2012). Comparación de atributos semánticos entre diferentes lenguas. Poster session presented at the IV Congreso Internacional de Investigación y Práctica Profesional en Psicología, Buenos Aires, Argentina. Available at www.aacademica.org/000-072/193.pdf

Lebani, G. E., Bondielli, A., & Lenci, A. (2015). You are what you do: An empirical characterization of the semantic content of the thematic roles for a group of Italian verbs. Journal of Cognitive Science, 16, 399–428.

Lenci, A., Baroni, M., Cazzolli, G., & Marotta, G. (2013). BLIND: A set of semantic feature norms from the congenitally blind. Behavior Research Methods, 45, 1218–1233.

Mahon, B. Z., & Caramazza, A. (2009). Concepts and categories: A cognitive neuropsychological perspective. Annual Review of Psychology, 60, 27–51. doi:10.1146/annurev.psych.60.110707.163532

Manoiloff, L., Artstein, M., Canavoso, M., Fernández, L., & Segui, J. (2010). Expanded norms for 400 experimental pictures in an Argentinean Spanish speaking population. Behavior Research Methods, 42, 452–460. doi:10.3758/BRM.42.2.452

Manoiloff, L., Fernández, L., Del Boca, M. L., Andreini, C., Fuentes, M., Vivas, L., & Segui, J. (2013). Desarrollo del test argentino psicolingüístico de denominación de imágenes (PADPI). Article presented at the XXXIV Congreso Interamericano de Psicología, Brasilia, Brazil.

Marques, J. F., Cappa, S., & Sartori, G. (2011). Naming from definition, semantic relevance and feature type: The effects of aging and Alzheimer’s disease. Neuropsychology, 25, 105–113.

McRae, K., Cree, G. S., Seidenberg, M. S., & McNorgan, C. (2005). Semantic feature production norms for a large set of living and nonliving things. Behavior Research Methods, 37, 547–559. doi:10.3758/BF03192726

Moldovan, C., Ferré, P., Demestre, J., & Sánchez-Casas, R. (2014). Semantic similarity: Normative ratings for 185 Spanish noun triplets. Behavior Research Methods, 47, 788–799.

Montefinese, M., Ambrosini, E., Fairfield, B., & Mammarella, N. (2013). Semantic memory: A feature-based analysis and new norms for Italian. Behavior Research Methods, 45, 440–461. doi:10.3758/s13428-012-0263-4

Montefinese, M., Ambrosini, E., Fairfield, B., & Mammarella, N. (2014). Semantic significance: A new measure of feature salience. Memory & Cognition, 42, 355–369. doi:10.3758/s13421-013-0365-y

Montefinese, M., Zannino, G. D., & Ambrosini, E. (2015). Semantic similarity between old and new items produces false alarms in recognition memory. Psychological Research, 79, 785–794. doi:10.1007/s00426-014-0615-z

Moss, H. E., Tyler, L. K., & Devlin, J. T. (2002). The emergence of category-specific deficits in a distributed semantic system. In E. M. E. Forde & G. W. Humphreys (Eds.), Category-specificity in brain and mind (pp. 115–146). Hove: Psychology Press.

Peraita, H., & Grasso, L. (2010). Corpus lingüístico de definiciones de categorías semánticas de sujetos ancianos sanos y con la enfermedad de Alzheimer: Una investigación transcultural hispano-argentina. Ianua. Revista Philologica Romanica, 10, 203–221.

Pexman, P. M., Hargreaves, I. S., Siakaluk, P. D., Bodner, G. E., & Pope, J. (2008). There are many ways to be rich: Effects of three measures of semantic richness on visual word recognition. Psychonomic Bulletin & Review, 15, 161–167. doi:10.3758/PBR.15.1.161

Pexman, P. M., Lupker, S. J., & Hino, Y. (2002). The impact of feedback semantics in visual word recognition: Number-of-features effects in lexical decision and naming tasks. Psychonomic Bulletin & Review, 9, 542–549.

Riordan, B., & Jones, M. N. (2011). Redundancy in perceptual and linguistic experience: Comparing feature-based and distributional models of semantic representation. Topics in Cognitive Science, 3, 303–345. doi:10.1111/j.1756-8765.2010.01111.x

Rogers, T. T., Garrard, P., McClelland, J. L., Lambon Ralph, M. A., Bozeat, S., Hodges, J. R., & Patterson, K. (2004). Structure and deterioration of semantic memory: A neuropsychological and computational investigation. Psychological Review, 111, 205–235.

Rosch, E., & Mervis, C. B. (1975). Family resemblances: Studies in the internal structure of categories. Cognitive Psychology, 7, 573–605. doi:10.1016/0010-0285(75)90024-9

Ruts, W., De Deyne, S., Ameel, E., Vanpaemel, W., Verbeemen, T., & Storms, G. (2004). Dutch norm data for 13 semantic categories and 338 exemplars. Behavior Research Methods, Instruments, & Computers, 36, 506–515. doi:10.3758/BF03195597

Sartori, G., Gnoato, F., Mariani, I., Prioni, S., & Lombardi, L. (2007). Semantic relevance, domain specificity and the sensory/functional theory of category-specificity. Neuropsychologia, 45, 966–976.

Sartori, G., & Lombardi, L. (2004). Semantic relevance and semantic disorders. Journal of Cognitive Neuroscience, 16, 439–452. doi:10.1162/089892904322926773

Sartori, G., Lombardi, L., & Mattiuzzi, L. (2005). Semantic relevance best predicts normal and abnormal name retrieval. Neuropsychologia, 43, 754–770. doi:10.1016/j.neuropsychologia.2004.08.001

Sheskin, D. J. (2007). Handbook of parametric and nonparametric statistical procedures (4th ed.). Boca Raton: Chapman & Hall/CRC.

Smith, E. E., Shoben, E. J., & Rips, L. J. (1974). Structure and process in semantic memory: A feature model for semantic decisions. Psychological Review, 81, 214–241.

Taylor, K. I., Moss, H. E., & Tyler, L. K. (2007). The conceptual structure account: A cognitive model of semantic memory and its neural instantiation. In J. Hart & M. Kraut (Eds.), The neural basis of semantic memory (pp. 265–301). Cambridge: Cambridge University Press.

Vieth, H. E., McMahon, K. L., & de Zubicaray, G. I. (2014). The roles of shared versus distinctive conceptual features in lexical access. Frontiers in Psychology, 16, 1014.

Vigliocco, G., Vinson, D. P., Damian, M. F., & Levelt, W. (2002). Semantic distance effects on object and action naming. Cognition, 85, B61–B69. doi:10.1016/S0010-0277(02)00107-5

Vigliocco, G., Vinson, D. P., Lewis, W., & Garrett, M. F. (2004). Representing the meanings of object and action words: The featural and unitary semantic space hypothesis. Cognitive Psychology, 48, 422–488. doi:10.1016/j.cogpsych.2003.09.001

Vinson, D. P., & Vigliocco, G. (2008). Semantic feature production norms for a large set of objects and events. Behavior Research Methods, 40, 183–190. doi:10.3758/BRM.40.1.183

Vorano, A., Zapico, G., Corda, L., Vivas, J., & Vivas, L. (2014). Comparación de atributos semánticos entre castellano rioplatense e inglés. Poster session presented at the VI Congreso Marplatense de Psicología, Mar del Plata.

Warrington, E. K., & Shallice, T. (1984). Category specific semantic impairments. Brain, 107, 829–853. doi:10.1093/brain/107.3.829

Wu, L.-L., & Barsalou, L. W. (2009). Perceptual simulation in conceptual combination: Evidence from property generation. Acta Psychologica, 132, 173–189. doi:10.1016/j.actpsy.2009.02.002

Yap, M. J., Lim, G. Y., & Pexman, P. M. (2015). Semantic richness effects in lexical decision: The role of feedback. Memory & Cognition, 43, 1148–1167.

Yap, M. J., Pexman, P. M., Wellsby, M., Hargreaves, I. S., & Huff, M. J. (2012). An abundance of riches: Cross-task comparisons of semantic richness effects in visual word recognition. Frontiers in Human Neuroscience, 6(72), 1–10. doi:10.3389/fnhum.2012.00072

Zannino, G. D., Perri, R., Pasqualetti, P., Caltagirone, C., & Carlesimo, G. A. (2006). Analysis of the semantic representations of living and nonliving concepts: A normative study. Cognitive Neuropsychology, 23, 515–540. doi:10.1080/02643290542000067

Author note

This research was funded by a grant given by the National University of Mar del Plata (Argentina), Project Codes 15/H178 and 15/H209. We thank every student from the National University of Mar del Plata who collaborated with this research. We are also very grateful to Francisco Lizarralde, who contributed to our calculation of the concept–concept matrix; Nicolás Kruk and Cecilia Lagorio, who contributed to our calculation of the feature–feature matrix and its derived variables; and Andrea Menegotto and Sofia Romanelli, for their collaboration with calculating the linguistic variables.


Appendices

Appendix A: Instructions for participants

This experiment is part of an investigation into how people give meaning to the words they read. On the next page is a series of words. Please list all the features that come to mind to describe the concept to which each word refers. You can write down different kinds of characteristics: physical properties, internal parts, appearance, and sound, smell, or touch. You can think about what it is used for, where and when it is used, and the category to which it belongs. Here are two examples of the kinds of features that people give:

| Knife | Swallow |
| Cuts | Is a bird |
| Is dangerous | Is an animal |
| Found in kitchens | Flies |
| Is a weapon | Emigrates |
| Is a utensil | Lays eggs |
| Cutlery | Has wings |
| | Has a beak |
| | Poetry |
| | Has feathers |
| | Lives in balconies |
| | Lives in the water |
| | Spring |

Appendix B

Taxonomic Categories (C) A category in the taxonomy to which a concept belongs.

Synonym (C-syn) A synonym of a concept (e.g., car–AUTOMOBILE; cat–FELINE).

Ontological category (C-ont) A category for a basic kind of thing in existence, including thing, substance, object, human, animal, plant, location, time, activity, event, action, state, thought, emotion (e.g., cat–ANIMAL; computer–OBJECT).

Superordinate (C-super) A category one level above a concept in a taxonomy (e.g., car–VEHICLE; apple–FRUIT).

Coordinate (C-coord) Another category in the superordinate category to which a concept belongs (e.g., apple–ORANGE; oak–ELM).

Subordinate (C-subord) A category one level below the target concept in a taxonomy (e.g., chair–ROCKING CHAIR; frog–TREE FROG).

Individual (C-indiv) A specific instance of a concept (e.g., car–MY CAR; house–MY PARENTS’ HOUSE).

Situation Properties (S) A property of a situation, where a situation typically includes one or more agents, at some place and time, engaging in an event, with one or more entities in various semantic roles (e.g., picnic, conversation, vacation, meal).

Person (S-person) An individual person or multiple people in a situation (e.g., toy–CHILDREN; car–PASSENGER; furniture–PERSON).

Living thing (S-living) A living thing in a situation that is not a person, including other animals and plants (e.g., sofa–CAT; park–GRASS).

Object (S-object) An inanimate object in a situation, except buildings (e.g., watermelon–on a PLATE; cat–scratch SOFA).

Social organization (S-socorg) A social institution, a business, or a group of people or animals in a situation (e.g., freedom–GOVERNMENT; radio–K-MART; picnic–FAMILY; dog–PACK).

Social artifact (S-socart) A relatively abstract entity—sometimes partially physical (book) and sometimes completely conceptual (verb)—created in the context of socio-cultural institutions (e.g., farm–a book (about), a movie (about); invention–a group project; to carpet–a verb).

Building (S-build) A building in a situation (e.g., book–LIBRARY; candle–CHURCH).

Location (S-loc) A place in a situation in which an entity can be found, or in which people engage in an event or activity (e.g., car–IN A PARK; buy–IN PARIS).

Spatial relation (S-spat) A spatial relation between two or more things in a situation (e.g., watermelon–the ants crawled ACROSS the picnic table; vacation–we slept BY the fire).

Time (S-time) A time period associated with a situation or with one of its properties (e.g., picnic–FOURTH OF JULY; sled–DURING THE WINTER). When an event is used as a time (e.g., muffin–BREAKFAST), code the event as S-event.

Action (S-action) An action (not introspective) that an agent (human or non-human) performs intentionally in a situation (e.g., shirt–WEAR; apple–EAT). When the action is chronic and/or characteristic of the entity, use E-beh.

Event (S-event) A stand-alone event or activity in a situation in which the action is not foregrounded but is on a relatively equal par with the setting, agents, entities, and so forth (e.g., watermelon–PICNIC; car–TRIP; church–WEDDING). Use S-action when the action is foregrounded (e.g., code church–MARRY as S-action but church–WEDDING as S-event).

Manner (S-manner) The manner in which an action or event is performed in a situation (e.g., watermelon–SLOPPY eating; car–FASTER than walking). Typically the modification of an action in terms of its quantity, duration, style, and so forth. Code the action itself as S-action, S-event, or E-beh.

Function (S-func) A typical goal or role that an entity serves for an agent in a situation by virtue of its physical properties with respect to relevant actions (e.g., car–TRANSPORTATION; clothing–PROTECTION).

Physical state (S-physt) A physical state of a situation or any of its components, except entities, whose states are coded with E-sys, and social organizations, whose states are coded with S-socst (e.g., mountains–DAMP; highway–CONGESTED).

Social state (S-socst) A state of a social organization in a situation (e.g., family–COOPERATIVE; people–FREE).

Quantity (S-quant) A numerosity, frequency, intensity, or typicality of a situation or any of its properties, except of an entity, whose quantitative aspects are coded with E-quant (e.g., vacation–lasted for EIGHT days; car–a LONG drive).

Entity Properties (E) Properties of a concrete entity, either animate or inanimate. Besides being a single self-contained object, an entity can be a coherent collection of objects (e.g., forest).

External component (E-excomp) A three-dimensional component of an entity that, at least to some extent, normally resides on its surface (e.g., car–HEADLIGHT; tree–LEAVES).

Internal component (E-incomp) A three-dimensional component of an entity that normally resides completely inside the closed surface of the entity (e.g., apple–SEEDS; jacket–LINING).

External surface property (E-exsurf) An external property of an entity that is not a component, and that is perceived on or beyond the entity’s surface, including shape, color, pattern, texture, touch, smell, taste, sound, and so forth (e.g., watermelon–OVAL; apple–RED).

Internal surface property (E-insurf) An internal property of an entity that is not a component, that is not normally perceived on the entity’s exterior surface, and that is only perceived when the entity’s interior surface is exposed; includes color, pattern, texture, size, touch, smell, taste, and so forth (e.g., apple–WHITE, watermelon–JUICY).

Substance/material (E-mat) The material or substance of which something is made (e.g., floor–WOOD; shirt–CLOTH).

Spatial relation (E-spat) A spatial relation between two or more properties within an entity, or between an entity and one of its properties (e.g., car–window ABOVE door; watermelon–green OUTSIDE).

Systemic property (E-sys) A global systemic property of an entity or its parts, including states, conditions, abilities, traits, and so forth (e.g., cat–ALIVE; dolphin–INTELLIGENT; car–FAST).

Larger whole (E-whole) A whole to which an entity belongs (e.g., window–HOUSE; apple–TREE).

Entity behavior (E-beh) A chronic behavior of an entity that is characteristic of its nature, and that is described as a characteristic property of the entity, not as a specific intentional action in a situation (e.g., tree–BLOWS IN THE WIND; bird–FLIES; person–EATS).

Abstract entity property (E-abstr) An abstract property of the target entity not dependent on a particular situation (e.g., teacher–DEMOCRAT; transplanted Californian–BUDDHIST).

Quantity (E-quant) A numerosity, frequency, size, intensity, or typicality of an entity or its properties (e.g., jacket–an ARTICLE of clothing; cat–FOUR legs; tree–LOTS of leaves; apple–COMMON fruit; watermelon–USUALLY green; apple–VERY red).

Introspective Properties (I) A property of a subject’s mental state as he or she views a situation, or a property of a character’s mental state in a situation.

Affect/emotion (I-emot) An affective or emotional state toward the situation or one of its components by either the subject or a participant (e.g., magic–a sense of EXCITEMENT; vacation–I was HAPPY; smashed car–ANGER).

Evaluation (I-eval) A positive or negative evaluation of a situation or one of its components by either the subject or a participant (e.g., apples–I LIKE them; vacation–I wrote a STUPID paper). Typically more about the situation or component than about the perceiver, often attributing a trait to it (e.g., BEAUTIFUL, COMMON). Use I-emot when the focus is more on the perceiver and on a traditional emotional state.

Representational state (I-rep) A relatively static or stable representational state in the mind of a situational participant, including beliefs, goals, desires, ideas, perceptions, and so forth (e.g., smashed car–believed it was not working; tree–wanted to cut it down; tree–I had a good VIEW of a bird in it).

Cognitive operation (I-cogop) An online operation or process on a cognitive state, including retrieval, comparison, learning, and so forth (e.g., watermelon–I REMEMBER a picnic; rolled grass–LOOKS LIKE a burrito; car–I LEARNED how to drive).

Contingency (I-contin) A contingency between two or more aspects of a situation, including: conditionals and causals, such as if, enable, cause, because, becomes, underlies, depends, requires, and so forth; correlations such as correlated, uncorrelated, negatively correlated, and so forth; others including possession and means (e.g., car–REQUIRES gas; tree–has leaves DEPENDING ON the type of tree; vacation–FREE FROM work; magic–I was excited BECAUSE I got to see the magician perform; car–MY car).

Negation (I-neg) An explicit mention of the absence of something, with absence requiring a mental state that represents the opposite (e.g., car–NO air conditioning, apple–NOT an orange).

Quantity (I-quant) A numerosity, frequency, intensity or typicality of an introspection or one of its properties (e.g., truth–a SET of beliefs; buy–I was VERY angry at the saleswoman; magic–I was QUITE baffled by the magician).

Miscellaneous (M) Information in a protocol not of theoretical interest.

Cue (M-cue) The cue concept given to the subject (e.g., car, apple).

Hesitation (M-hesit) A non-word utterance, or an incomplete utterance (e.g., um, uh, ah).

Repetition (M-repit) Repetition of an item already coded. These primarily refer to repetitions at the conceptual level. Thus, two repetitions of the same word may not be repetitions, and two different words could be repetitions. Also, when a different instance of the same concept is mentioned, these are not counted as repetitions.

Meta-comment (M-meta) A meta-comment having to do with the generation task that is not part of the conceptual content (e.g., house–THEY CAN TAKE SO MANY FORMS; transplanted Californian–IT IS HARD TO IMAGINE THIS).


About this article

Cite this article

Vivas, J., Vivas, L., Comesaña, A. et al. Spanish semantic feature production norms for 400 concrete concepts. Behav Res 49, 1095–1106 (2017). https://doi.org/10.3758/s13428-016-0777-2


DOI: https://doi.org/10.3758/s13428-016-0777-2