Abstract

A state-of-the-art data analysis procedure is presented to conduct hierarchical Bayesian inference and hypothesis testing on delay discounting data. The delay discounting task is a key experimental paradigm used across a wide range of disciplines, including economics, cognitive science, and neuroscience, all of which seek to understand how humans or animals trade off the immediacy versus the magnitude of a reward. Bayesian estimation allows rich inferences to be drawn, along with measures of confidence, from limited and noisy behavioural data. Hierarchical modelling allows more precise inferences to be made, making the most efficient use of data that can be expensive or difficult to obtain. The proposed probabilistic generative model describes how participants compare the present subjective value of reward choices on a trial-to-trial basis, and estimates participant- and group-level parameters. We infer discount rate as a function of reward size, allowing the magnitude effect to be measured. Demonstrations are provided to show how this analysis approach can aid hypothesis testing. The analysis is demonstrated on data from the popular 27-item monetary choice questionnaire (Kirby, Psychonomic Bulletin & Review, 16(3), 457–462, 2009), but the software will accept data from a range of protocols, including adaptive procedures. The software is made freely available to researchers.

Introduction

Appropriately trading off the immediacy versus the magnitude of a reward is a fundamental aspect of decision making across many domains. Would you like 1 marshmallow now, or 2 in 15 minutes? Should you spend your wages on a holiday now, or contribute to a larger pension in a few decades’ time? Should society consume fossil fuels now or maintain the biosphere in the long run? Learning how people discount future rewards is crucial across many fields of study, so that we can understand, predict, and nudge people’s decisions.

Psychologists attempt to survey the behavioural phenomena and propose cognitive mechanisms (Mischel et al. 1972; Green et al. 1994; Weatherly and Weatherly 2014). Neuroscientists study the neural mechanisms (Cohen et al. 2004; Kable and Glimcher 2007; Kalenscher and Pennartz 2008; Peters and Büchel 2011). Theorists attempt to explain why discounting behaviour might arise in the first place (Kurth-Nelson et al. 2012; Killeen 2009; Fawcett et al. 2012; Stevens and Stephens 2010; Cui 2011; Sozou 1998). Economists attempt to understand microeconomic decision making, with a focus on violations of rationality (Frederick et al. 2002). And policy theorists study how groups with different time preferences could come to a collective decision over issues such as climate change (Millner and Heal 2014). Therefore, making accurate and rich inferences about how people discount future rewards is important in a wide variety of domains.

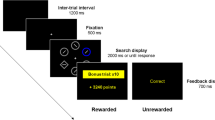

The delay discounting (or inter-temporal choice) task is one way in which researchers study this trade-off between the immediacy and magnitude of a reward (see Fig. 1). The task consists of participants repeatedly answering questions of the form “Would you prefer £A now, or £B in D days?” Most people would choose an immediate reward of £100 now over £101 in 1 year, but as the value of the delayed reward increases, there will be a point at which the delayed reward becomes preferable. Behaviour in delay discounting tasks depends upon how a participant discounts future rewards, and this is often measured in the form of a discount function describing how the present subjective value of a reward decreases as its delivery is delayed. A more general form of the delay discounting question has also been explored: “Would you prefer £A in \(D_A\) days, or £B in \(D_B\) days?”

Fig. 1. Estimating a discount function from the data of a single hypothetical participant in the delay discounting task. A total of 28 questions were asked (points; filled = chose delayed, unfilled = chose immediate reward), with 2 possible response errors. The normalised discount function \(\frac {A}{B}\) defines the indifference point, where the subjective value of a larger future reward B is equal to a smaller immediate reward A. It is estimated by finding the indifference point where preferences reverse from immediate to delayed. Which of the 3 functions shown is the correct one, and how can we infer this from the data?

Many studies of temporal discounting use a common workflow (see Fig. 2). After collecting delay discounting data, best-guess discount rates are estimated for a number of participants. Hypothesis tests can then be carried out to probe for relationships between the discount rates and other participant variables of interest, such as age, reward magnitude, fMRI activity in a region of interest, or experimental vs. control condition. There are a number of challenges to drawing robust research conclusions about discounting behaviour, which are outlined in the next section. The present work seeks to solve, or at least partially address, these using a Bayesian approach, either by producing better best-guess discount rates to be used in subsequent hypothesis tests, or through combined parameter estimation and hypothesis testing. The former approach is more general, but we lose knowledge of how uncertain we are about participants’ discount rates. The latter approach retains this information and so may lead to more robust conclusions, but may require the model to be extended to deal with particular research contexts.

Fig. 2. The role of the present work (dashed boxes) in delay discounting research. A traditional research workflow may involve estimating discount rates based upon delay discounting data. Best-guess discount rates are then combined with other participant variables to form a dataset suitable for hypothesis tests. The present work can aid this traditional workflow through improved parameter estimation. It also allows for combined parameter estimation and hypothesis testing. This could afford more robust research conclusions because participant-level uncertainty can propagate to the group level, which is often where hypothesis tests are targeted.

Challenges

The first challenge is that inferences about a participant’s discounting behaviour are based upon a limited amount of data. For example, given the data points from the hypothetical participant in Fig. 1, we will have a degree of uncertainty over the discount function; each of the 3 discount functions shown is about equally consistent with the data. With more data points we could better infer the discount function, but this comes at a cost. Data cost is most acute in experiments paying real monetary rewards, but even if the rewards are hypothetical, testing time is still a limiting factor, particularly when testing special populations.

Second, participants occasionally make response errors, so some of the data do not accurately represent subjective preferences. Figure 1 shows two likely response errors: should we let these bias our estimates of a participant’s discount rate, or should we dismiss them as response errors? How can we incorporate this uncertainty when inferring a participant’s discount rate?

Third, previous research has established that participants’ intertemporal preferences are influenced by many factors such as age (Green et al. 1994), income (Green et al. 1996), and the magnitude of rewards on offer (Kirby and Maraković 1996; Johnson and Bickel 2002). Can we achieve better parameter estimates and more robust hypothesis tests by incorporating prior knowledge that discount rates may vary as a function of covariates of interest?

Solutions

The challenges set out above involve uncertainty, prior knowledge, and inference, so it is natural to seek solutions in the form of Bayesian analysis methods. The overall aim of this work is to allow more robust research conclusions to be drawn through improved analysis of delay discounting data. The approach outlined below is implemented in Matlab code, which is available to download. The software is simple to use (see Section “Using the software”), comes with installation and usage instructions, and fits within the research workflow described in Fig. 2. By addressing the challenges set out above, this work makes a number of contributions to the analysis of delay discounting data:

1. I formulated a novel Bayesian probabilistic generative model of behaviour in the delay discounting task (Section “The model”). This allows us to specify prior knowledge about latent variables driving discounting behaviour, update this knowledge rationally in the light of new empirical data, specify our level of confidence (or lack thereof) in our beliefs, and conduct Bayesian hypothesis testing. The proposed model allows hierarchical Bayesian inference (also known as multi-level modelling) to be conducted at the trial, participant, and group levels. This means, for example, that relationships between participant covariates (such as age) and discount rates can affect inferences made at the participant level. The model is flexible enough to analyse data obtained from a variety of delay discounting protocols where the question is of the form “Would you prefer £A in \(D_A\) days, or £B in \(D_B\) days?” A rich set of inferences results from using this model, as demonstrated in Section “An example” with a dataset of 15 participants who completed the widely used 27-item monetary choice questionnaire (Kirby 2009).

2. I explicitly account for measurement error, that is, erroneous responses given by the participant in the delay discounting task that do not truly reflect their discounting preferences. This is done with a psychometric function which describes how participants’ responses are probabilistically related to the present subjective values of the sooner and later rewards (Section “Choosing between options”). This approach is common in visual psychophysics, and I propose it is useful to incorporate it into what is essentially financial psychophysics. This allows us to distinguish between a participant’s baseline response error rate and errors that may arise from their imprecise comparison between the present subjective values of A and B.

3. I utilise prior knowledge of factors that influence discount rates in two ways. Firstly, the structure of the model incorporates our knowledge that participants’ discount rates decrease as reward magnitude increases (the magnitude effect, see Section “Calculating present subjective value”). So rather than estimating a single discount rate, we estimate how the discount rate varies as a function of reward magnitude. Secondly, Appendix B discusses how to extend the model to consider linear relationships between participant covariates (such as age) and group-level parameters.

The model

The probabilistic generative model

A probabilistic generative model was created to describe the putative causal processes which give rise to observed participant response data, given a set of monetary choices (see Fig. 3). Readers are directed to Lee and Wagenmakers (2014) for a thorough and approachable introduction to the methods used here.

Fig. 3. A graphical model of the delay discounting task. Shaded nodes correspond to observed data; unshaded nodes are latent variables. Double-bordered nodes are deterministic quantities. Circles represent continuous variables, and the square node represents a discrete variable. \(P\) is the number of participants, and \(T_p\) the total number of trials available for participant \(p\). Throughout this paper, normal distributions are parameterised by mean and standard deviation.

Calculating present subjective value

On each trial, participants make a comparison between the sooner smaller reward (£A) and the later larger reward (£B); see the next section. However, participants do not compare these quantities directly, but rather the present subjective value of each reward. That is, the present subjective value of a reward, \(V_{\text{reward}}\), is equal to the actual reward multiplied by a discount factor. We assume the simple 1-parameter hyperbolic discount function (Mazur 1987),

\[ V_{\text{reward}} = \text{reward} \cdot \frac{1}{1 + k \cdot \text{delay}} \qquad (1) \]

Note that in the special case where the sooner reward is delivered immediately (\(D_A = 0\)), \(V_A = A\). No strong claim is made that Equation 1 is a true description of how people discount future rewards (e.g. Luhmann, 2013); it was chosen because of its ubiquitous use in the literature (but also see Section “Choice of the 1-parameter discount function”). However, the magnitude effect shows that the discount rate k varies as a function of the magnitude of the delayed reward (see Fig. 4), so the actual function used was

\[ V_{\text{reward}} = \text{reward} \cdot \frac{1}{1 + f(\text{reward}) \cdot \text{delay}} \qquad (2) \]

where \(f(\text{reward})\) describes the magnitude effect. Based upon Figure 8 of Johnson and Bickel (2002), we assume \(\log(k) = m \log(\text{reward}) + c\), where m and c are the slope and intercept of a line describing how the log discount rate decreases linearly as log reward magnitude increases (also see Appendix A). Therefore

\[ k = f(\text{reward}) = \exp\big(m \log(\text{reward}) + c\big) \qquad (3) \]

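and the resulting present subjective value is

\[ V_{\text{reward}} = \text{reward} \cdot \frac{1}{1 + \exp\big(m \log(\text{reward}) + c\big) \cdot \text{delay}} \qquad (4) \]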

While the magnitude effect is not a new idea, Equations 2 – 4 constitute, as far as the author is aware, a novel proposal to specify the discount factor as a function of both delay and reward magnitude (see Fig. 4).
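The calculation described by Equations 1–4 can be sketched in Python. This is not the author's code (the software is written in Matlab with JAGS); the function names and the parameter values in the usage lines are hypothetical. The sketch sets k from each reward's own magnitude, following the generic form of Equation 4; for the Kirby questionnaire \(D_A = 0\), so \(V_A = A\) whichever magnitude is used for the sooner reward.

```python
import math


def discount_rate(reward: float, m: float, c: float) -> float:
    """Magnitude effect: log(k) = m * log(reward) + c, so k = exp(m * log(reward) + c)."""
    if reward <= 0:
        raise ValueError("reward must be positive to take its logarithm")
    return math.exp(m * math.log(reward) + c)


def present_subjective_value(reward: float, delay: float, m: float, c: float) -> float:
    """Hyperbolic discounting V = reward / (1 + k * delay), with k set by reward magnitude."""
    k = discount_rate(reward, m, c)
    return reward / (1.0 + k * delay)


# Hypothetical parameters: a negative slope m gives larger rewards a lower discount rate.
m, c = -0.25, -4.0
for reward in (10.0, 100.0):
    k = discount_rate(reward, m, c)
    v = present_subjective_value(reward, 30.0, m, c)  # value of the reward delayed 30 days
    print(reward, round(k, 5), round(v, 2))
```

With a negative slope m the larger reward receives the smaller discount rate, which is the magnitude effect that the discount surfaces in Fig. 4 depict.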

Fig. 4. Modelling the magnitude effect with a discount surface. Shown are two examples (rows) with different slope and intercept parameters (m, c). These parameters describe the magnitude effect (a, c), that is, how the discount rate is related to delayed reward magnitude. This function in turn describes a range of discount functions (b, d), which together form a discount surface.

Choosing between options

Intuitively, a participant will prefer the option with the higher present subjective value, so a simple decision rule would be to choose the delayed reward if \(V_B - V_A > 0\), or the immediate reward otherwise. Participants’ responses may not be so clear-cut, however, so we model the participant’s probability of choosing the delayed reward using a psychometric function (see Fig. 5). This relates the difference between the present subjective values of the delayed and immediate rewards (\(V_B - V_A\)) to the probability of choosing the delayed reward,

\[ P(\text{choose delayed}) = \epsilon + (1 - 2\epsilon)\, \Phi\!\left(\frac{V_B - V_A}{\alpha}\right) \qquad (5) \]

where \(\Phi(\cdot)\) is the standard cumulative normal distribution. The parameter α defines the ‘acuity’ of the comparison between options (Fig. 5). The mechanisms responsible for this uncertainty are not explored further here, but if α = 0, the psychometric function would be a step function and participants would always choose the reward with the highest present subjective value (Fig. 5a). As α increases, however, there is more error in the comparison between \(V_A\) and \(V_B\). Comparison acuity α takes on positive values, and we define a simple normal prior distribution (truncated at zero) with uninformative uniform priors over the mean \(\mu_\alpha\) and standard deviation \(\sigma_\alpha\). The psychometric function also incorporates the fact that participants make response errors at some unknown rate ε (Fig. 5c, d), regardless of the proximity of the present subjective values of the rewards.
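A minimal Python sketch of the psychometric function follows, assuming it takes the form \(P = \epsilon + (1 - 2\epsilon)\,\Phi\big((V_B - V_A)/\alpha\big)\), which matches the behaviour described above: a step function when α = 0, and response errors at rate ε that do not depend on the value difference. The function name is hypothetical; the standard normal CDF comes from Python's `statistics.NormalDist`.

```python
from statistics import NormalDist

_STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def p_choose_delayed(v_delayed: float, v_immediate: float, alpha: float, epsilon: float) -> float:
    """P(choose delayed) = epsilon + (1 - 2*epsilon) * Phi((V_B - V_A) / alpha)."""
    if alpha < 0 or not 0.0 <= epsilon < 0.5:
        raise ValueError("need alpha >= 0 and 0 <= epsilon < 0.5")
    diff = v_delayed - v_immediate
    if alpha == 0:
        # Limiting case: a step function (an exact tie is split evenly).
        core = 1.0 if diff > 0 else 0.0 if diff < 0 else 0.5
    else:
        core = _STANDARD_NORMAL.cdf(diff / alpha)
    return epsilon + (1.0 - 2.0 * epsilon) * core


# Hypothetical participants: precise (alpha = 1) vs imprecise (alpha = 10) comparison.
print(p_choose_delayed(52.0, 50.0, 1.0, 0.0))
print(p_choose_delayed(52.0, 50.0, 10.0, 0.0))
```

Because ε sets a floor and 1 − ε a ceiling, even a very large value difference leaves probability ε of choosing the other option; keeping ε below 0.5 keeps the curve increasing in \(V_B - V_A\).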

Fig. 5. Example psychometric functions which relate the difference between \(V_B\) and \(V_A\) to the probability of choosing the delayed reward. Panels a and c represent participants with precise comparison of present subjective values; panels b and d show examples where this comparison is imprecise. Panels a and b represent a participant who does not make systematic response errors, in contrast to a systematically ‘clumsy’ responder in panels c and d.

Responses are modelled as Bernoulli trials (a biased coin flip) where P(choose delayed) is the bias, and a value of R = 1 means the delayed choice (B) was preferred.
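The Bernoulli observation model can be sketched as follows, assuming the per-trial probabilities have already been computed (for example, from a psychometric function). Both function names are hypothetical. The log-likelihood is the quantity that MCMC inference combines with the priors, although JAGS evaluates it internally from the model specification rather than through code like this.

```python
import math
import random
from typing import Sequence


def simulate_responses(probs: Sequence[float], rng: random.Random) -> list[int]:
    """Draw R = 1 (chose the delayed reward) with probability p on each trial."""
    return [1 if rng.random() < p else 0 for p in probs]


def bernoulli_log_likelihood(responses: Sequence[int], probs: Sequence[float]) -> float:
    """Sum over trials of log P(R_t | p_t) for Bernoulli responses."""
    if len(responses) != len(probs):
        raise ValueError("responses and probs must have the same length")
    total = 0.0
    for r, p in zip(responses, probs):
        if r not in (0, 1):
            raise ValueError("responses must be 0 or 1")
        total += math.log(p) if r == 1 else math.log1p(-p)
    return total


# Hypothetical per-trial probabilities of choosing the delayed reward.
probs = [0.9, 0.2, 0.6]
responses = simulate_responses(probs, random.Random(1))
print(responses, bernoulli_log_likelihood(responses, probs))
```

As long as ε > 0, every probability lies strictly between 0 and 1, so no single trial can drive the log-likelihood to minus infinity; this is one practical consequence of modelling response errors explicitly. Simulating responses from known parameters in this way is also a common check that a model can recover them.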

Hierarchical modelling

Figure 3 shows that we model at the trial, participant, and group levels. Interested readers are referred to Lee (2011) for an introduction to hierarchical Bayesian modelling. The model presented here is useful when analysing data from participants assumed to be drawn from a single group level population.

Each participant has 4 parameters: those governing their magnitude effect (slope \(m_p\) and intercept \(c_p\)), comparison acuity \(\alpha_p\), and error rate \(\epsilon_p\). Participant-level magnitude effect parameters were assumed to be normally distributed. Comparison acuity parameters were also assumed to be normally distributed but were constrained to take on positive values. The error rate parameter was assumed to be Beta distributed, but values above 0.5 were not allowed, as these would flip the psychometric function, implying that participants prefer the smaller of the present subjective values.

Rather than making inferences about participants in isolation, it is appealing to be able to infer something about the population that these participants are drawn from. The hierarchical modelling approach allows us to do this by modelling participants (and their 4 parameters per person) as being drawn from a group level distribution (see outer nodes in Fig. 3). The actual priors therefore are placed upon these group-level parameters. The group level magnitude effect slope and intercept priors were based upon estimates from previous literature (see Fig. 3 and Appendix A). Uninformative priors were chosen for the group level comparison acuity parameters. The group level error rate priors represented a mild belief that the error rate was 1 % (for ω) and an uninformative prior was placed over the concentration parameter K.
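The hierarchical structure can be illustrated by drawing one participant's 4 parameters from a single set of group-level values. This is a Python sketch, not the author's JAGS model: the `GroupLevel` container and the function names are hypothetical, truncation is done by simple rejection sampling, and the Beta distribution is assumed to use the mode (ω) and concentration (κ) parameterisation described by Kruschke (2015), which requires κ > 2.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class GroupLevel:
    """Hypothetical container for one draw of the group-level parameters."""
    mu_m: float
    sigma_m: float
    mu_c: float
    sigma_c: float
    mu_alpha: float
    sigma_alpha: float
    omega: float  # mode of the error-rate Beta distribution
    kappa: float  # concentration of the error-rate Beta distribution (> 2)


def beta_shapes_from_mode(omega: float, kappa: float) -> tuple[float, float]:
    """Convert mode/concentration to Beta shape parameters (a, b)."""
    if not 0.0 < omega < 1.0 or kappa <= 2.0:
        raise ValueError("need 0 < omega < 1 and kappa > 2")
    return omega * (kappa - 2.0) + 1.0, (1.0 - omega) * (kappa - 2.0) + 1.0


def draw_truncated(draw: Callable[[], float], accept: Callable[[float], bool],
                   max_tries: int = 100_000) -> float:
    """Rejection sampling: redraw until the value falls in the allowed region."""
    for _ in range(max_tries):
        x = draw()
        if accept(x):
            return x
    raise RuntimeError("rejection sampling failed; truncation region too unlikely")


def sample_participant(g: GroupLevel, rng: random.Random) -> dict[str, float]:
    a, b = beta_shapes_from_mode(g.omega, g.kappa)
    return {
        "m": rng.gauss(g.mu_m, g.sigma_m),
        "c": rng.gauss(g.mu_c, g.sigma_c),
        # Comparison acuity: normal truncated to positive values.
        "alpha": draw_truncated(lambda: rng.gauss(g.mu_alpha, g.sigma_alpha), lambda x: x > 0.0),
        # Error rate: Beta truncated below 0.5 so the psychometric function cannot flip.
        "epsilon": draw_truncated(lambda: rng.betavariate(a, b), lambda x: x < 0.5),
    }


# Hypothetical group-level values (mild belief in a 1 % error rate via omega = 0.01).
group = GroupLevel(-0.25, 0.1, -4.0, 0.5, 2.0, 1.0, 0.01, 50.0)
print(sample_participant(group, random.Random(42)))
```

Rejection sampling is adequate here because the allowed regions (α > 0, ε < 0.5) usually hold most of the probability mass; it would become slow if, for instance, the group mean acuity were far below zero.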

Our knowledge of the population from which the participants were drawn (the group level) can be summarised simply by generating posterior predictive distributions for our 4 parameters (m, c, α, ε), which we label \(G^m, G^c, G^\alpha, G^\epsilon\), respectively. These can also be thought of as representing our prior over the parameters of an untested participant randomly selected from the population, given the currently observed participant dataset.
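A posterior predictive distribution such as \(G^m\) can be generated by taking each joint MCMC sample of the group-level mean and standard deviation and drawing one new participant-level value from the corresponding normal distribution. The sketch below assumes the samples are available as paired Python lists; the function name and the sample values are hypothetical.

```python
import random
from typing import Sequence


def posterior_predictive_normal(mu_samples: Sequence[float], sigma_samples: Sequence[float],
                                rng: random.Random) -> list[float]:
    """One predictive draw for each joint MCMC sample of (mu, sigma)."""
    if len(mu_samples) != len(sigma_samples):
        raise ValueError("mu and sigma samples must be paired")
    return [rng.gauss(mu, sigma) for mu, sigma in zip(mu_samples, sigma_samples)]


# Hypothetical MCMC samples of the group-level slope mean and standard deviation.
mu_m = [-0.26, -0.24, -0.25]
sigma_m = [0.10, 0.12, 0.09]
print(posterior_predictive_normal(mu_m, sigma_m, random.Random(0)))
```

For \(G^\alpha\) and \(G^\epsilon\) the same idea applies, but each draw would also need the truncation used at the participant level.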

Using the software

Figure 2 shows the broader context in which this analysis software can be used. While the model presented above may look complex, practical use of the software is straightforward, consisting of a few steps:

1. Collect experimental data and save it in a tab-delimited text file with columns representing \(A\), \(D_A\), \(B\), \(D_B\), and \(R\), with each row representing a trial. Example datasets are provided.

2. Start Matlab and navigate to the folder containing the downloaded analysis software.

3. An analysis session can be run with a few simple commands, outlined in full in the software documentation. Example analysis scripts are provided. Functions are provided to export publication-quality figures, such as those shown in Section “An example”, and best-guess (point estimate) parameters for later use in hypothesis testing.
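Before running an analysis, it can help to validate the data file from step 1. The Python sketch below assumes a header row naming the columns `A`, `DA`, `B`, `DB`, and `R`; those column names, the `Trial` type, and the example rows are illustrative assumptions, so check the example datasets supplied with the software for the exact layout it expects.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    A: float   # sooner reward
    DA: float  # delay to the sooner reward (days)
    B: float   # later reward
    DB: float  # delay to the later reward (days)
    R: int     # 1 = chose the delayed reward B, 0 = chose A


def read_trials(text: str) -> list[Trial]:
    """Parse tab-delimited trial data with an (assumed) header row A, DA, B, DB, R."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    trials = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        r = int(row["R"])
        if r not in (0, 1):
            raise ValueError(f"line {line_no}: R must be 0 or 1, got {r}")
        trials.append(Trial(float(row["A"]), float(row["DA"]),
                            float(row["B"]), float(row["DB"]), r))
    return trials


# Illustrative rows only, not data from this study.
example = "A\tDA\tB\tDB\tR\n54\t0\t55\t117\t0\n15\t0\t35\t13\t1\n"
for trial in read_trials(example):
    print(trial)
```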

One complication, however, is ensuring that the prior distributions over magnitude effect parameters are appropriate for the particular research context (see Appendix A). Full installation and usage instructions are provided online, where the software is freely available to download.

Inference using MCMC

The analysis code is written in Matlab. The JAGS package was used to conduct Markov chain Monte Carlo (MCMC) sampling-based inference (Plummer 2003). By default, the posterior distributions are estimated with a total of 100,000 MCMC samples over 2 chains, excluding the first 1,000 samples of each chain, which are discarded as burn-in. Convergence upon the true posterior distributions was checked by visual inspection of the MCMC chains, and by the \(\hat {R}\) statistic being within 0.001 of 1 for all estimated parameters.
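For readers unfamiliar with \(\hat{R}\), the sketch below computes the classic Gelman-Rubin potential scale reduction factor from equal-length chains. It compares the variance between chain means with the average within-chain variance, and approaches 1 when the chains agree. This is a textbook (non-split) version written in Python; the exact variant computed by the Matlab software is not stated here, and the function name is hypothetical.

```python
import random
import statistics
from typing import Sequence


def gelman_rubin_rhat(chains: Sequence[Sequence[float]]) -> float:
    """Classic (non-split) potential scale reduction factor for equal-length chains."""
    m = len(chains)
    n = len(chains[0]) if m else 0
    if m < 2 or n < 2 or any(len(ch) != n for ch in chains):
        raise ValueError("need at least 2 chains of equal length >= 2")
    chain_means = [statistics.fmean(ch) for ch in chains]
    w = statistics.fmean(statistics.variance(ch) for ch in chains)  # mean within-chain variance
    if w == 0:
        raise ValueError("within-chain variance is zero")
    b = n * statistics.variance(chain_means)  # between-chain variance
    var_hat = (n - 1) / n * w + b / n         # pooled estimate of the posterior variance
    return (var_hat / w) ** 0.5


# Two hypothetical chains sampling the same distribution should give R-hat close to 1.
rng = random.Random(0)
chains = [[rng.gauss(0.0, 1.0) for _ in range(5000)] for _ in range(2)]
print(gelman_rubin_rhat(chains))
```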

An example

Delay discounting dataset

The analysis software was applied to delay discounting data from 15 participants who completed the 27-item monetary choice questionnaire (Kirby, 2009), denominated in pounds sterling rather than U.S. dollars. This questionnaire does not include a front-end delay, so the sooner smaller reward was to be delivered immediately (\(D_A = 0\)). No particular research question was posed; the example is provided to demonstrate the nature of the inferences drawn and how to use the software.

Summarising our inferences about delay discounting behaviour

Figure 6 shows a summary of our inferences about each parameter. Not only do we obtain a single most likely parameter value (posterior mode; points) but we also obtain a distribution of belief over each parameter which expresses our confidence (or lack thereof) in these estimates. This is summarised by plotting 95 % credible intervals which are different from, and superior to, confidence intervals (Morey et al. 2015).
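A 95 % highest density interval (HDI), as reported in the figures below, can be computed from MCMC samples by sorting them and finding the narrowest window that contains 95 % of the samples. The Python function below is a minimal sketch of that approach (the function name is hypothetical); it assumes a unimodal posterior, since a single window cannot represent a multimodal one.

```python
import math
import random
from typing import Sequence


def hdi(samples: Sequence[float], mass: float = 0.95) -> tuple[float, float]:
    """Narrowest interval containing `mass` of the samples (assumes a unimodal posterior)."""
    if not 0.0 < mass < 1.0:
        raise ValueError("mass must be in (0, 1)")
    xs = sorted(samples)
    n = len(xs)
    k = math.ceil(mass * n)  # number of samples inside the interval
    if k < 2:
        raise ValueError("too few samples for the requested mass")
    widths = [xs[i + k - 1] - xs[i] for i in range(n - k + 1)]
    best = min(range(len(widths)), key=widths.__getitem__)
    return xs[best], xs[best + k - 1]


# Hypothetical skewed "posterior" samples.
rng = random.Random(0)
draws = [rng.expovariate(1.0) for _ in range(20000)]
print(hdi(draws))
```

For a skewed posterior such as these exponential draws, the HDI starts near zero, whereas an equal-tailed interval would exclude the densest region just above zero.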

This particular dataset shows little between-participant variance for all parameters. The parameter distributions for each participant are broadly similar to the group-level parameter distributions. This could be because participants display similar discounting behaviour, or because the data are insufficient to draw more precise conclusions about each participant. This should not be surprising: we have only 27 questions per participant, and the rewards cover a limited range of magnitudes (£11–£85), so a priori we should not expect the data to tightly constrain our estimates of the magnitude effect.

Rich forms of analysis

However, we are able to present much richer forms of analysis. Figure 7 shows the group-level inferences (top row) and participant-level analyses of participants 1–3 (subsequent rows). The posterior distributions of the error rate and comparison acuity parameters are plotted as a bivariate density plot (column 1). These parameters determine the psychometric function, which is plotted along with 95 % credible regions in column 2. The magnitude effect parameters (m, c) are also shown as a bivariate density plot (column 3), and the resulting magnitude effect function is visualised in column 4, again with 95 % credible regions. The MAP estimates of m and c were used to visualise the discount surface (column 5). Participant-level plots also show raw response data. These are absent at the group level because hierarchical inference does not simply pool all the participant-level data; there are no group-level data, only latent parameters describing the group’s properties.

Fig. 7. An example of hierarchical Bayesian inference conducted on delay discounting data. The top row shows group-level inferences; subsequent rows correspond to 3 of the 15 participants. Shaded regions represent the 95 % credible intervals. Inset text presents the posterior mode, with 95 % highest density intervals in parentheses.

Visualising the psychometric function (column 2) is informative. A perfect financial decision maker would have a psychometric function that was a step function, meaning they would always choose the higher of the immediate reward \(V_A = A\) and the present subjective value of the delayed reward \(V_B\). Inspecting the response data and discount surfaces (column 5), we can see that, with rare exceptions, the discount surface successfully separates immediate and delayed choices.

The bivariate density plots of the magnitude effect parameters (column 3) demonstrate that our uncertainty about these parameters is anti-correlated, which is expected for slope and intercept parameters. This knowledge was not available from the univariate summary in Fig. 6.
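Some intuition for this anti-correlation comes from ordinary least-squares line fitting, used here only as an analogy rather than as a description of the model’s posterior. For \(y = m x + c\) fitted to inputs \(x_1, \dots, x_n\) with independent noise of variance \(\sigma^2\), the slope estimate \(\hat m\) is uncorrelated with the mean response \(\bar y\), and \(\hat c = \bar y - \hat m \bar x\), so

\[ \operatorname{Cov}(\hat m, \hat c) = \operatorname{Cov}\!\left(\hat m,\ \bar y - \hat m \bar x\right) = -\bar x \operatorname{Var}(\hat m) = -\frac{\bar x\, \sigma^2}{\sum_{i=1}^{n} (x_i - \bar x)^2} \]

Here \(x = \log(\text{reward})\), and rewards between £11 and £85 give \(\bar x > 0\), so the covariance is negative: a steeper (more negative) slope must be offset by a higher intercept to fit the same choices.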

When the posterior over these parameters is used to generate a magnitude effect plot (column 4), we can see that our certainty is highest around the reward magnitudes in the monetary choice questionnaire, between £11 and £85. We rightly have less confidence in the group and participant discount rates at much lower or higher reward magnitudes.

Hypothesis tests

In order to demonstrate how the parameter estimation can contribute to research conclusions, we test whether there is evidence that participants exhibit a magnitude effect, more specifically that the slope of the magnitude effect at the group level is less than zero.

Traditional workflow

Using the traditional workflow (see Fig. 2), point estimates (posterior modes) of \(m_p\) were analysed using JASP statistical software (Love et al. 2015). A Bayesian one-sample t-test (Rouder et al. 2009) was conducted to evaluate whether the population mean μ was less than 0: \(\mathcal {H}_{0}: \mu = 0, \mathcal {H}_{1}: \mu < 0\). The resulting log Bayes Factor was \(\log(BF_{10}) = 43.2\), meaning that, under the scale of Jeffreys (1961), we have decisive evidence for \(\mathcal {H}_{1}\), that there is a population-level magnitude effect.

Fully Bayesian methods

However, we can achieve more robust research conclusions by using the more advanced workflow (see Fig. 2). By exporting point estimates of the slope of the magnitude effect for each participant, \(m_p\), we lose all knowledge of how certain or uncertain we were in those estimates. A fully Bayesian approach can be taken by drawing research conclusions directly from posterior distributions, in this case that of the group-level magnitude effect slope \(G^m\).

There are two approaches that could be taken. The first is Bayesian hypothesis testing, which results in a Bayes Factor summarising the evidence for or against competing hypotheses. The second is parameter estimation, where the focus is, for example, on estimating an effect size or the value of a parameter. Rather than one being more correct than the other, they achieve different goals (Kruschke 2011). There is a lively debate over which of these methods will be most scientifically useful (e.g. Morey et al., 2014; Kruschke and Liddell, 2015; Wagenmakers et al., 2015), so I demonstrate both methods applied to the question: is the magnitude effect slope less than zero at the group level?

Under the Bayesian hypothesis testing approach, we can define our hypotheses as \(\mathcal {H}_{0}: G^{m}=0\) and \(\mathcal {H}_{1}: G^{m}<0\). The Savage-Dickey method was used to calculate a Bayes Factor (see Wagenmakers et al., 2010; Lee and Wagenmakers, 2014); because the hypothesis was directional, positive MCMC samples of the prior and posterior distributions were removed. This resulted in \(BF_{10} \approx 545\) (see Fig. 8a), meaning that again we have decisive evidence that the slope of the magnitude effect at the group level is less than 0 (Jeffreys 1961).
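The directional Savage-Dickey calculation can be sketched with normal distributions standing in for the prior and posterior over \(G^m\). The software works from MCMC samples instead; the normal forms, function names, and numbers here are hypothetical. Discarding the positive half of each distribution and renormalising gives its density at \(G^m = 0\) under the directional hypothesis, and the Bayes Factor in favour of \(\mathcal{H}_1\) is the prior density divided by the posterior density at that point.

```python
from statistics import NormalDist


def density_at_zero_given_negative(dist: NormalDist) -> float:
    """Density at 0 of `dist` after discarding its positive half and renormalising."""
    mass_below_zero = dist.cdf(0.0)
    if mass_below_zero == 0.0:
        raise ValueError("distribution has no mass below zero")
    return dist.pdf(0.0) / mass_below_zero


def savage_dickey_bf10(prior: NormalDist, posterior: NormalDist) -> float:
    """BF10 for H1: G^m < 0 against H0: G^m = 0 (prior over posterior density at 0)."""
    return density_at_zero_given_negative(prior) / density_at_zero_given_negative(posterior)


# Hypothetical prior and posterior over G^m, not the values from this analysis.
prior = NormalDist(mu=-0.25, sigma=0.5)
posterior = NormalDist(mu=-0.3, sigma=0.06)
print(savage_dickey_bf10(prior, posterior))
```

With samples rather than closed-form densities, the two densities at zero have to be estimated (for example with a kernel density estimate), and such estimates are sensitive to the boundary at zero created by discarding positive samples.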

Fig. 8. Both a hypothesis test (left) and an estimation approach (right) indicate that the slope of the magnitude effect at the group level is less than zero. For the Bayes Factor, the lighter and darker grey distributions represent the prior and posterior distributions (respectively) of belief over \(G^m\) under the directional hypothesis \(\mathcal {H}_{1}\). The Bayes Factor is the ratio of the prior to the posterior probability density at \(G^m = 0\) (points). For the estimation approach (right), light and dark distributions represent the prior and posterior over \(G^m\), and the bar shows the 95 % credible interval of the posterior.

Under the parameter estimation approach, however, we simply examine our posterior over the relevant latent variable \(G^m\) (see Fig. 8b). The 95 % credible region lies far from the value of interest, \(G^m = 0\), so it is reasonable to believe that the slope of the magnitude effect at the group level is less than zero.

Extending the model

The default analysis strategy is to estimate best-guess parameters and conduct hypothesis testing alongside other participant data in an alternative software package (see Fig. 2 and Section “Traditional workflow”). In some research contexts the ‘fully Bayesian’ approach can be taken directly, as in the above section, but in many others this will require extending the model. Readers are referred to Kruschke (2015) for a thorough overview of Bayesian approaches to general linear modelling, which can be added to account for relationships between latent parameters and observed participant covariates. Appendix B outlines two examples of how to do this: when participants are members of 1 of G groups (a discrete, between-participant variable), and when a group-level parameter varies as a function of a covariate.

Discussion

Choice of the 1-parameter discount function

The aim of this paper is to introduce Bayesian estimation and hypothesis testing for delay discounting tasks. It is open to debate whether discounted value or discounted utility is the best way to frame discounting behaviour, and we do not know the ‘correct’ discount function. The 1-parameter hyperbolic discount function (Equation 2) was used for a number of reasons. First, even though it is unlikely to be a complete description of how people discount future rewards (e.g. Luhmann, 2013), the 1-parameter hyperbolic model is simple and provides a good account of discounting in humans (McKerchar et al. 2009) and non-human animals (Freeman et al. 2009). Second, a comparison of 4 prominent models shows clear superiority for the 1-parameter hyperbolic over a 1-parameter exponential model, but there is no clear rationale for favouring one of the more complex discount functions (McKerchar et al. 2009; Doyle 2013).

Comparison to existing analysis approaches

A common way to analyse data from delay discounting tasks is to fit a curve to indifference points as a function of delay (Robles and Vargas 2008; Reynolds and Schiffbauer 2004; McKerchar et al. 2009; Whelan and McHugh 2009). The benefit of this approach is its simplicity. However, it does not easily allow the number of trials to influence the certainty we have about the estimates made. Bootstrap procedures may be used, but they do not have the same intuitive meaning as Bayesian posterior distributions or credible intervals. A straightforward extension to estimating the magnitude effect (by independently estimating discount rates for different reward magnitudes) would also be limited without some form of hierarchical treatment. Curve-fitting approaches also place emphasis upon the parametric form of the discount function rather than the processes underlying behaviour. The approach presented here does rely upon a parametric form of the discount rate, but the explicit modelling of choices on a trial-to-trial basis means that this model can be extended in future work to explain causal processes responsible for discounting behaviour (see Vincent, 2015, for a perceptual decision making example).

An advantage of the present model is that it utilises all trial data from each participant. This, in combination with Bayesian inference methods, allows the confidence in parameters to be influenced by the number of trials and participants. Wileyto et al. (2004) present an analysis approach which also utilises data from every trial and was also applied to the 27-item questionnaire. While that approach is better than fitting parameters to indifference points, it did not model the magnitude effect, did not include hierarchical estimation, and did not use Bayesian inference.

Advantages of this approach

While the modelling details presented in this paper are more complex than existing approaches, it is likely that a net benefit is conferred to researchers. Firstly, the complexities of the model are not as relevant as the ease of using the software (see Section “Using the software” and online usage instructions). Secondly, the Bayesian approach taken here provides a range of non-trivial advantages.

First, the full joint posterior distribution over parameters is calculated which represents our degree of belief in these parameters having observed the data. Section “Rich forms of analysis” showed how this can provide greater understanding of our data and of our uncertainty in latent variables putatively underlying the participant’s behaviour.

Second, hierarchical Bayesian estimation simultaneously estimates trial-level responses, and participant- and group-level parameters. This has numerous benefits, most notably when it comes to providing additional prior knowledge (see Appendix B) and incorporating participant level uncertainty into hypothesis testing.

Third, the widely used and easily interpreted 1-parameter hyperbolic discount function is used to not only estimate a participant’s discount rate, but how that varies as a function of reward magnitude (the magnitude effect). The model is extendable to investigate alternative, or even multiple, discount functions.
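The sketch below illustrates how a single discount function can capture the magnitude effect. The hyperbolic discount rate is allowed to depend on reward size through \(\log(k) = m \log(|R|) + c\), which is the linear relationship between log discount rate and log reward magnitude assumed by the model. The parameter values are illustrative only. The use of natural logarithms and the function names are assumptions of this sketch.

```python
import math

def discount_rate(reward, m, c):
    """Discount rate for a given reward under log(k) = m*log(|reward|) + c.
    Natural logarithms are an assumption of this sketch."""
    return math.exp(m * math.log(abs(reward)) + c)

def present_value(reward, delay, m, c):
    """1-parameter hyperbolic discounting, V = R / (1 + k*D), with k set by |R|."""
    return reward / (1.0 + discount_rate(reward, m, c) * delay)

M, C = -0.243, -2.0  # illustrative values only, not estimates from the paper
for reward in (10, 100, 1000):
    frac = present_value(reward, 30, M, C) / reward
    print(f"reward {reward:>5}: k = {discount_rate(reward, M, C):.4f}, "
          f"V/R after 30 days = {frac:.3f}")
```

With a negative slope m, larger rewards receive smaller discount rates, so a larger share of their value survives the same delay.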

Fourth, the psychometric function incorporates measurement error (participant response errors). Effects of baseline error rates and comparison acuity can be separated, which could be informative when comparing theoretical explanations of discounting behaviour. It could also provide a quantitative justification for excluding participants with a high error rate from a larger dataset. However, this is optional: group-level inferences are less likely to be affected by such individuals, because the error rate parameter can account for inconsistent responding rather than distorting the more critical parameters related to discounting behaviour.
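The sketch below shows a psychometric function with these two properties. It assumes a cumulative-normal form with two parameters. A lapse (baseline error) rate ε bounds choice probabilities to [ε, 1 − ε]. An acuity parameter α controls how steeply choices depend on the difference in present subjective value. This exact functional form, the symbol names and the hypothetical trials are assumptions of the sketch, not a transcription of the model in Fig. 3. The log-likelihood sums over every individual trial. A non-zero delay for the sooner option covers front-end-delay designs.

```python
import math

def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def p_choose_b(v_a, v_b, epsilon, alpha):
    """P(choose option B). The lapse rate epsilon bounds this to [epsilon, 1 - epsilon];
    alpha sets how sharply choice depends on the value difference V_B - V_A."""
    return epsilon + (1.0 - 2.0 * epsilon) * normal_cdf((v_b - v_a) / alpha)

def trial_log_likelihood(trials, k, epsilon, alpha):
    """Sum of Bernoulli log-probabilities over trials given as
    (A, delay_A, B, delay_B, chose_B); delay_A > 0 allows front-end delays."""
    total = 0.0
    for a, delay_a, b, delay_b, chose_b in trials:
        v_a = a / (1.0 + k * delay_a)  # hyperbolic present value of option A
        v_b = b / (1.0 + k * delay_b)  # hyperbolic present value of option B
        p = p_choose_b(v_a, v_b, epsilon, alpha)
        total += math.log(p if chose_b else 1.0 - p)
    return total

# Hypothetical trials, not data from the paper.
trials = [
    (20, 0, 55, 7, True),
    (50, 0, 55, 90, False),
    (30, 0, 80, 30, True),
    (60, 0, 62, 180, True),  # inconsistent with a moderate discount rate
]
print(trial_log_likelihood(trials, k=0.01, epsilon=0.01, alpha=2.0))
```

Because ε > 0, a single response that contradicts the participant's apparent discount rate (the last hypothetical trial) costs at most log(ε). It does not drive the likelihood to zero. This is why occasional inconsistent responding tends to be absorbed by the error rate instead of distorting k.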

Fifth, the estimation procedure is flexible enough to utilise data obtained from a variety of different delay discounting protocols such as: studies with or without a front-end delay before the sooner reward, the Kirby 27-item test, fixed immediate reward protocol, fixed delayed reward protocol, and adaptive protocols.

Conclusion

The probabilistic model (Fig. 3) underlying the data analysis approach described here, and the use of Bayesian inference, offers a number of advantages over traditional approaches to both estimating discount rates and subsequent hypothesis tests.

The proposed analysis can fit into traditional research workflows (see Fig. 2) by exporting best-guess parameter estimates, which have been derived with all the advantages of the Bayesian approach and the particular model proposed. This route is the most flexible, allowing hypothesis tests in a wide range of research contexts. However, the approach also allows for a more fully Bayesian form of hypothesis testing, in which uncertainty at the participant level propagates to the group level, where hypothesis tests are typically focussed. Examples have also been provided to show how this latter approach can be extended to more complex research questions (see Appendix B).

In conclusion, using this hierarchical Bayesian data analysis approach with the freely available software allows researchers from multiple disciplines to draw more robust research conclusions by making the best use of their prior knowledge and new delay discounting data.

Notes

This class of discounting model assumes it is the value of the reward itself that is discounted. Models where it is the utility of a reward that is discounted may offer a broader explanation of discounting behaviour (Killeen 2009).

References

Cohen, J. D., McClure, S. M., Laibson, D. I., & Loewenstein, G. (2004). Separate neural systems value immediate and delayed monetary rewards. Science, 306(5695), 503–507.

Cui, X. (2011). Hyperbolic discounting emerges from the scalar property of interval timing. Frontiers in Integrative Neuroscience, 5, 24.

Doyle, J. R. (2013). Survey of time preference, delay discounting models. Judgment and Decision Making, 8(2), 116–135.

Fawcett, T. W., McNamara, J. M., & Houston, A. I. (2012). When is it adaptive to be patient? A general framework for evaluating delayed rewards. Behavioural Processes, 89(2), 128–136.

Frederick, S., Loewenstein, G., & O’Donoghue, T. (2002). Time discounting and time preference: A critical review. Journal of Economic Literature, 40, 351–401.

Freeman, K. B., Green, L., Myerson, J., & Woolverton, W. L. (2009). Delay discounting of saccharin in rhesus monkeys. Behavioural Processes, 82(2), 214–218.

Green, L., Fry, A. F., & Myerson, J. (1994). Discounting of delayed rewards: A life-span comparison. Psychological Science, 5(1), 33–36.

Green, L., Myerson, J., Lichtman, D., Rosen, S., & Fry, A. (1996). Temporal discounting in choice between delayed rewards: the role of age and income. Psychology and Aging, 11(1), 79–84.

Jeffreys, H. (1961). Theory of Probability (3rd ed.). Oxford: Oxford University Press.

Johnson, M. W., & Bickel, W. K. (2002). Within-subject comparison of real and hypothetical money rewards in delay discounting. Journal of the Experimental Analysis of Behavior, 77(2), 129–146.

Kable, J. W., & Glimcher, P. W. (2007). The neural correlates of subjective value during intertemporal choice. Nature Neuroscience, 10(12), 1625–1633.

Kalenscher, T., & Pennartz, C. M. A. (2008). Is a bird in the hand worth two in the future? The neuroeconomics of intertemporal decision-making. Progress in Neurobiology, 84(3), 284–315.

Killeen, P. R. (2009). An additive-utility model of delay discounting. Psychological Review, 116(3), 602–619.

Kirby, K. N. (2009). One-year temporal stability of delay-discount rates. Psychonomic Bulletin & Review, 16(3), 457–462.

Kirby, K. N., & Maraković, N. N. (1996). Delay-discounting probabilistic rewards: Rates decrease as amounts increase. Psychonomic Bulletin & Review, 3(1), 100–104.

Kruschke, J. K. (2011). Bayesian Assessment of Null Values Via Parameter Estimation and Model Comparison. Perspectives on Psychological Science, 6(3), 299–312.

Kruschke, J. K. (2015). Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan (2nd ed.). Academic Press.

Kruschke, J. K., & Liddell, T. M. (2015). The Bayesian New Statistics: Two historical trends converge (pp. 1–21).

Kurth-Nelson, Z., Bickel, W. K., & Redish, A. D. (2012). A theoretical account of cognitive effects in delay discounting. European Journal of Neuroscience, 35(7), 1052–1064.

Lee, M. D. (2011). How cognitive modeling can benefit from hierarchical Bayesian models. Journal of Mathematical Psychology, 55(1), 1–7.

Lee, M. D., & Wagenmakers, E.-J. (2014). Bayesian cognitive modeling: A practical course. Cambridge: Cambridge University Press.

Love, J., Selker, R., Verhagen, J., Smira, M., Wild, A., Marsman, M., et al. (2015). JASP (version 0.6.6). http://www.jasp-stats.org.

Luhmann, C. C. (2013). Discounting of delayed rewards is not hyperbolic. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(4), 1274–1279.

Mazur, J. E. (1987). An adjusting procedure for studying delayed reinforcement. In Commons, M. L., Mazur, J. E., Nevin, J. A., & Rachlin, H. (Eds.) Quantitative Analyses of Behavior (pp. 55–73). Hillsdale, NJ.

McKerchar, T. L., Green, L., Myerson, J., Pickford, T. S., Hill, J. C., & Stout, S. C. (2009). A comparison of four models of delay discounting in humans. Behavioural Processes, 81(2), 256–259.

Millner, A., & Heal, G. (2014). Resolving Intertemporal Conflicts: Economics vs Politics. Technical Report 196. Cambridge, MA: National Bureau of Economic Research.

Mischel, W., Ebbesen, E. B., & Zeiss, A. R. (1972). Cognitive and attentional mechanisms in delay of gratification. Journal of Personality and Social Psychology, 21(2), 204–218.

Morey, R. D., Hoekstra, R., Rouder, J. N., Lee, M. D., & Wagenmakers, E.-J. (2015). The Fallacy of Placing Confidence in Confidence Intervals. Psychonomic Bulletin & Review, 1–21.

Morey, R. D., Rouder, J. N., Verhagen, J., & Wagenmakers, E.-J. (2014). Why hypothesis tests are essential for psychological science: a comment on Cumming (2014). Psychological Science, 25(6), 1289–1290.

Peters, J., & Büchel, C. (2011). The neural mechanisms of inter-temporal decision-making: understanding variability. Trends in Cognitive Sciences, 15(5), 227–239.

Plummer, M. (2003). JAGS: A program for analysis of Bayesian graphical models using Gibbs sampling. In Proceedings of the 3rd International Workshop on Distributed Statistical Computing (DSC 2003) (pp. 20–22).

Reynolds, B., & Schiffbauer, R. (2004). Measuring state changes in human delay discounting: an experiential discounting task. Behavioural Processes, 67(3), 343–356.

Robles, E., & Vargas, P. A. (2008). Parameters of delay discounting assessment: Number of trials, effort, and sequential effects. Behavioural Processes, 78(2), 285–290.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237.

Sozou, P. D. (1998). On hyperbolic discounting and uncertain hazard rates. Proceedings of the Royal Society of London Series B-Biological Sciences, 265(1409), 2015–2020.

Stevens, J. R., & Stephens, D. W. (2010). The adaptive nature of impulsivity. In Madden, G. J., & Bickel, W. K. (Eds.) Impulsivity: The Behavioral and Neurological Science of Discounting. Washington DC: American Psychological Association.

Vincent, B. T. (2015). A tutorial on Bayesian models of perception. Journal of Mathematical Psychology, 1–34.

Wagenmakers, E.-J., Lodewyckx, T., Kuriyal, H., & Grasman, R. (2010). Bayesian hypothesis testing for psychologists: A tutorial on the Savage–Dickey method. Cognitive Psychology, 60(3), 158–189.

Wagenmakers, E.-J., Verhagen, J., Ly, A., Matzke, D., Steingroever, H., Rouder, J. N., & Morey, R. D. (2015). The Need for Bayesian Hypothesis Testing in Psychological Science (pp. 1–29).

Weatherly, J. N. (2014). On several factors that control rates of discounting. Behavioural Processes, 104, 84–90.

Whelan, R., & McHugh, L. A. (2009). Temporal discounting of hypothetical monetary rewards by adolescents, adults, and older adults. The Psychological Record, 59, 247–258.

Wileyto, E. P., Audrain-McGovern, J., Epstein, L. H., & Lerman, C. (2004). Using logistic regression to estimate delay-discounting functions. Behavior Research Methods, Instruments, & Computers, 36(1), 41–51.

Acknowledgments

The author thanks Rebecca Morris and Vaidas Staugaitis for help with collecting data.

Additional information

The analysis code is freely downloadable from https://github.com/drbenvincent/delay-discounting-analysis.

Appendix

A Priors for m and c

In the proposed model, each person’s log discount rate is assumed to be linearly related to log reward magnitude. This is described by participant-level slope \(m_{p}\) and intercept \(c_{p}\) parameters, which are assumed to be drawn from a group-level distribution. We need to define prior distributions over the group-level parameters \(\mu^{m}, \sigma^{m}, \mu^{c}, \sigma^{c}\).
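The linear relationship for participant p can be written out as below, with \(|R|\) denoting reward magnitude and b the base of the logarithm. The second line is a short derivation that is not stated in the text above. It shows that the intercept \(c_p\) cancels when discount rates for two reward sizes are compared, so the slope \(m_p\) alone sets how k scales with reward size. This holds for any logarithm base, as long as the same base is used on both sides.

```latex
\begin{align}
\log_b k_p &= m_p \log_b |R| + c_p \\
\frac{k_p(R_2)}{k_p(R_1)}
  &= \frac{b^{\,m_p \log_b |R_2| + c_p}}{b^{\,m_p \log_b |R_1| + c_p}}
   = b^{\,m_p\left(\log_b |R_2| - \log_b |R_1|\right)}
   = \left(\frac{|R_2|}{|R_1|}\right)^{m_p}
\end{align}
```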

In some research situations it may be best to define only weakly informative priors, while in others we may wish to fully incorporate prior knowledge from previous studies; the specification of priors should therefore be seen as situation dependent. However, as a general guide, I outline an approach to defining prior knowledge based on previous empirical data. As more studies are conducted, this approach can be used to update our prior knowledge to help inform inference in future studies.

A.1 Priors based on previous research

The subsequent analysis used empirical data on the magnitude effect, reporting discount rates log(k) as a function of reward magnitude log(reward). Data were obtained from 19 previous studies using hypothetical monetary rewards, summarised in Figure 8 of Johnson and Bickel (2002). Data points were extracted, resulting in a dataset with 3 columns: log(reward), log(k), and study number. The data were analysed using a Bayesian hierarchical linear regression: a slope and intercept were estimated for each study, and these were assumed to be samples from a multi-study-level distribution. The analysis resulted in the following priors (note the parameters are mean and standard deviation; see Fig. 9):

Establishing priors for the magnitude effect parameters. Data from Figure 8 of Johnson and Bickel (2002) are shown (points, top) along with the mean group-level magnitude effect (thick black line) and 1000 samples from the group-level distributions of \(G^{m}\) and \(G^{c}\) (transparent lines). Each of these is described by the parameters \(\mu^{m}, \sigma^{m}, \mu^{c}, \sigma^{c}\) (bottom plots), which are estimated from the empirical data. Text annotations describe parametric fits to the MCMC samples.

The most important points here are that the mean slope of the magnitude effect (in log-log space) is −0.243, and that the standard deviation of the intercept, \(\sigma^{c}\), is quite high at 1.085 (see Fig. 9). This latter point reflects the large between-study variation in discount rates for any given reward magnitude.
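The short calculation below puts the mean slope of −0.243 on a more intuitive scale. It assumes that log(k) is linear in log(reward), with the same logarithm base on both axes. Under that assumption, scaling the reward by a factor r multiplies k by \(r^{m}\), whatever the intercept. The calculation applies this to the reported mean slope. It ignores between-study variation and uncertainty in the slope, so it is only a rough reading of the point estimate.

```python
MEAN_SLOPE = -0.243  # mean group-level slope of the magnitude effect (log-log space)

def k_multiplier(reward_ratio, slope=MEAN_SLOPE):
    """Factor by which k changes when reward magnitude is multiplied by reward_ratio.
    Follows from log(k) = slope * log(|R|) + c; the intercept c cancels."""
    return reward_ratio ** slope

for ratio in (2, 10, 100):
    print(f"{ratio:>3}x larger reward -> k multiplied by {k_multiplier(ratio):.3f}")
```

On this reading, doubling the reward reduces the discount rate by roughly 15%, and a tenfold increase reduces it by roughly 43%.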

A.2 Priors used in the present study

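While the priors above might be reasonable, there are problems with using them directly in the present approach. The analysis above treats the individual studies and the multi-study level in Johnson and Bickel (2002) as equivalent to participants and the group (study) level in the present approach. Because each study’s data summarise many participants, variation between studies is likely to be smaller than variation between individual participants, so the priors above are probably overconfident.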

The priors governing the slope (\(\mu^{m}\) and \(\sigma^{m}\)) from above were used as the basis for those used to analyse the data in the paper, but their standard deviations were multiplied by 10 to reflect our increased uncertainty. The prior distribution of \(G^{m}\) is shown in Fig. 8b.

The priors governing the intercept (\(\mu^{c}\) and \(\sigma^{c}\)) were changed to be largely uninformative. The rationale is that the previous studies used rewards denominated in US dollars, whereas the present delay discounting experiment was denominated in pounds sterling. A conservative set of largely uninformative priors was used for the group-level intercept parameters:

A.3 Uninformative priors

In other research contexts it may be appropriate not to specify any prior knowledge about the slope of the magnitude effect. This can be achieved by using the negligibly informative intercept priors from the previous section, together with largely uninformative priors for the slope:

B Extending the models for specific research situations

The traditional workflow of obtaining point estimates of participant parameters (such as m and c) and subjecting these to hypothesis tests was shown in Fig. 2 and Section “Traditional workflow”. While that workflow is very flexible, it has two small disadvantages. Firstly, when best-guess parameter estimates are used, we lose our representation of the uncertainty in those estimates, so it would be appealing to use a ‘fully Bayesian’ approach, such as that used in Section “Fully Bayesian methods”. Secondly, there is scope to make even better parameter estimates by incorporating further prior knowledge. For example, if we know (or suspect) that discount rates vary as a function of a participant covariate (such as age, or fMRI activation of a particular brain region), then we could incorporate this into the structure of the model.
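The first disadvantage can be illustrated with a small simulation. Each hypothetical participant's parameter is represented by a set of posterior samples. Here these are simply drawn from normal distributions, standing in for MCMC output. Collapsing each posterior to a point estimate before comparing two groups yields one number. Computing the group difference once per posterior sample instead keeps the participant-level uncertainty and yields a distribution for the difference. This sketches the general idea under those assumptions; it is not the hypothesis-testing procedure of Section “Fully Bayesian methods”.

```python
import random
import statistics

random.seed(1)
N_SAMPLES = 4000

def fake_posterior(centre, spread):
    """Hypothetical posterior samples for one participant's parameter (e.g. m_p)."""
    return [random.gauss(centre, spread) for _ in range(N_SAMPLES)]

# Hypothetical participants as (posterior centre, posterior SD); not real data.
group_a = [fake_posterior(c, s) for c, s in [(-0.30, 0.10), (-0.25, 0.20), (-0.20, 0.05)]]
group_b = [fake_posterior(c, s) for c, s in [(-0.10, 0.15), (-0.20, 0.25), (-0.15, 0.10)]]

# Traditional workflow: collapse each posterior to a point estimate first.
point_a = [statistics.fmean(s) for s in group_a]
point_b = [statistics.fmean(s) for s in group_b]
point_diff = statistics.fmean(point_a) - statistics.fmean(point_b)

# Propagating uncertainty: compute the group difference once per posterior sample.
diff_samples = sorted(
    statistics.fmean(p[i] for p in group_a) - statistics.fmean(p[i] for p in group_b)
    for i in range(N_SAMPLES)
)
lo = diff_samples[int(0.025 * N_SAMPLES)]
hi = diff_samples[int(0.975 * N_SAMPLES)]
p_less = sum(d < 0 for d in diff_samples) / N_SAMPLES

print(f"point estimate of difference: {point_diff:.3f}")
print(f"95% interval from samples: [{lo:.3f}, {hi:.3f}], P(diff < 0) = {p_less:.2f}")
```

The mean of the sample-wise differences equals the point-estimate difference, because the mean is linear. The point-estimate route therefore keeps the central estimate but discards the spread. With real MCMC output, sample i across participants should come from the same joint draw, which the indexing by i mimics here. The independent normal draws are a simplifying assumption.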

The range of possible research contexts is very wide. In one situation we may have a single continuous participant covariate (such as age); in others, the relationship between a covariate and the discounting parameters could be linear or non-linear, or the covariates could be discrete (such as sex, or control vs. experimental condition). As such, it is not possible to create a single probabilistic model to capture all of these research situations, hence the recommended traditional workflow in Fig. 2 and Section “Traditional workflow”. Below I use two examples to demonstrate the approach researchers could use to customise the model for their specific research context. The extensive examples of Bayesian general linear models in Kruschke (2015) are a recommended resource for further customisation.

B.1 Participants belong to 1 of G groups

The model presented in Fig. 3 assumes participants are drawn from a single group. However, we can imagine research questions where each participant belongs to 1 of G groups. Group is a discrete between-participant variable, so this model would be appropriate for variables such as sex, age category, or treatment level.

One straightforward analysis approach would be to use the model presented in the paper and simply analyse data from different groups separately. However, we could also extend the model to assert that each participant is drawn from 1 of G groups, and that this group membership is known. In this case, the model presented in Fig. 3 can be extended by simply increasing the number of group-level parameters (adding a plate around all the group-level magnitude effect parameters \(G^{m}, \mu^{m}, \sigma^{m}, G^{c}, \mu^{c}, \sigma^{c}\)). We would now have multiple instances of these latent variables, one for each group (\({G_{g}^{m}}, {\mu _{g}^{m}}, {\sigma _{g}^{m}}, {G_{g}^{c}}, {\mu _{g}^{c}}, {\sigma _{g}^{c}}\)), where \(g = 1, \ldots, G\). We would also update the participant-level distributions to be \(c_{p} \sim \text {Normal}(\mu ^{c}_{g_{p}}, \sigma _{g_{p}}^{c})\) and \(m_{p} \sim \text {Normal}(\mu ^{m}_{g_{p}}, \sigma _{g_{p}}^{m})\), where \(g_{p}\) is the group that participant p belongs to. Hypothesis testing would then consist of testing for differences in the group-level slope and intercept distributions (\({G_{g}^{m}} \sim \text {Normal}({\mu _{g}^{m}}, {\sigma _{g}^{m}})\), \({G_{g}^{c}} \sim \text {Normal}({\mu _{g}^{c}}, {\sigma _{g}^{c}})\)).
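A forward simulation of this extended model may help make the notation concrete. The sketch draws each participant's \(m_p\) and \(c_p\) from the distributions of their known group \(g_p\). All numerical values are hypothetical, and groups are indexed from 0 rather than 1. It only simulates data from the model; estimating the parameters would still require an MCMC sampler such as JAGS (Plummer 2003).

```python
import random
import statistics

random.seed(0)

# Hypothetical group-level parameters for G = 2 groups (index g = 0, 1).
MU_M, SIGMA_M = [-0.25, -0.15], [0.05, 0.05]
MU_C, SIGMA_C = [-1.0, 0.0], [0.5, 0.5]

def simulate_participants(group_of):
    """For each participant p with known group g_p, draw
    m_p ~ Normal(mu^m_{g_p}, sigma^m_{g_p}) and c_p ~ Normal(mu^c_{g_p}, sigma^c_{g_p})."""
    return [
        (g, random.gauss(MU_M[g], SIGMA_M[g]), random.gauss(MU_C[g], SIGMA_C[g]))
        for g in group_of
    ]

group_of = [0] * 2000 + [1] * 2000  # known group membership g_p
participants = simulate_participants(group_of)

for g in (0, 1):
    m_vals = [m for grp, m, _ in participants if grp == g]
    c_vals = [c for grp, _, c in participants if grp == g]
    print(f"group {g}: mean m = {statistics.fmean(m_vals):.3f}, "
          f"mean c = {statistics.fmean(c_vals):.3f}")
```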

B.2 Where group-level parameters are linearly related to a covariate

If we suspected that discount rates vary linearly as a function of some covariate, then we could update the model using the following approach. A model could be proposed where the mean of the intercept distribution, rather than being a single group-level value \(\mu^{c}\), is a linear function of a participant-level covariate. So we could specify that \(c_{p} \sim \text{Normal}(\beta_{0} + \beta_{1} x_{p}, \beta_{2})\), where \(x_{p}\) is the participant’s covariate value (e.g. age), \(\beta_{0}\) and \(\beta_{1}\) represent the intercept and slope of a linear relationship between \(x_{p}\) and the mean intercept, and \(\beta_{2}\) is the standard deviation of participants about this trend line. Of course, similar linear relationships between the covariate and other group-level parameters could be proposed.
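A forward simulation of this covariate model is sketched below, with hypothetical values for \(\beta_0, \beta_1, \beta_2\) and an age-like covariate. As a quick sanity check that the simulated intercepts follow the intended trend, it fits an ordinary least-squares line to the simulated \((x_p, c_p)\) pairs. That check is a plain regression chosen for brevity, not the hierarchical Bayesian estimation the model would actually use.

```python
import random
import statistics

random.seed(2)

# Hypothetical values: trend-line intercept, slope, and spread of participants around it.
BETA0, BETA1, BETA2 = -4.0, 0.02, 0.5

def simulate_intercepts(covariates):
    """Draw c_p ~ Normal(BETA0 + BETA1 * x_p, BETA2) for each covariate value x_p."""
    return [random.gauss(BETA0 + BETA1 * x, BETA2) for x in covariates]

def ols_line(x, y):
    """Ordinary least-squares intercept and slope of y regressed on x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

ages = [random.uniform(18, 80) for _ in range(5000)]  # hypothetical covariate x_p
c_p = simulate_intercepts(ages)
b0_hat, b1_hat = ols_line(ages, c_p)
print(f"recovered intercept {b0_hat:.2f}, slope {b1_hat:.4f}")
```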


Cite this article

Vincent, B.T. Hierarchical Bayesian estimation and hypothesis testing for delay discounting tasks. Behav Res 48, 1608–1620 (2016). https://doi.org/10.3758/s13428-015-0672-2
