Abstract

We report four experiments in which subjects engaged in either problem-solving practice or example study. First, subjects studied an example problem. Subjects in the example study condition then studied two more analogous problems, whereas subjects in the problem-solving practice conditions solved two such problems, each followed by correct-answer feedback. In Experiment 1, subjects returned 1 week later and completed a posttest on an analogous problem; in Experiments 2–4, subjects completed this posttest immediately after the learning phase. Additionally, Experiment 3 included a control condition, wherein subjects solved these same problems, but did not receive feedback. Experiments 3 and 4 also included a mixed study condition, wherein subjects studied two examples and then solved one with feedback during the learning phase. Across four experiments, we found that the training conditions (i.e., problem-solving practice, mixed, and example study) performed equally well on the posttest. Moreover, subjects in the training conditions outperformed control subjects on the posttest, indicating that the null findings were due to the training conditions learning and transferring their knowledge equally well. After the posttest in Experiment 4, subjects were asked to solve repeated problems from the learning phase. Subjects in the problem-solving practice and mixed study conditions performed better on repeated problems than subjects in the example study condition, indicating that they better learned the solution strategies for these problems than subjects in the example study condition. Nevertheless, this benefit was insufficient to produce differential transfer of learning among the training conditions on the posttest.

Introduction

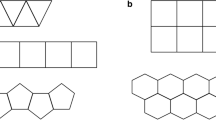

One of the most robust findings in cognitive psychology is that the practice of retrieving information leads to better learning than re-studying it (Bjork & Bjork, 1992; Carpenter, 2009, 2011, 2012; Carrier & Pashler, 1992; Dunlosky et al., 2013; Roediger & Butler, 2011). However, much of this work has involved assessments of memory performance. Recent work has attempted to extend the benefits of retrieval practice to tasks that involve problem-solving transfer, which requires more than memorization. Consider the solution strategy to the problem in Fig. 1A. Although applying this solution strategy to analogous problems (see Fig. 1B–D) involves memorizing the solution strategy, it also involves learning to recognize when and how to use it (Corral et al., 2021). If either of these latter two processes fails, even if a solution strategy is memorized, the learner will not be able to transfer it to new situations (Gick & Holyoak, 1987).

Accordingly, learners often fail to apply solutions from previous problems to novel, analogous scenarios that differ superficially (e.g., Butler, 2010; Gick & Holyoak, 1980, 1983). However, learners can solve such problems when they are reminded to think about how a novel problem relates to previous examples. These findings suggest that learners can successfully acquire and apply to-be-learned solution strategies but struggle to recognize when to use them (formally known as the inert knowledge problem; Whitehead, 1929). Thus, for retrieval practice to aid problem-solving transfer, it must help learners (a) acquire the corresponding solution strategy and (b) recognize when to apply it.

Present theories on the benefits of retrieval practice focus on how retrieval strengthens memory of the information that is retrieved, but do not directly explain how retrieval aids the transfer of learning (Carpenter et al., 2022; Pan & Rickard, 2018). One possibility is that when learners attempt to solve a problem, it allows them the opportunity to retrieve and apply the solution strategy. This opportunity is not afforded to learners when they study worked examples, as worked examples already contain the solution strategy and its application. Based on the voluminous literature on retrieval practice, problem-solving practice should therefore produce better learning and memory of to-be-learned solution strategies, leading to superior problem solving.

It is also possible that problem-solving practice enhances memory of previous problems, which might help learners recognize the similarity among old problems and novel analogs. Critically, this recognition can help learners figure out when to use the correct solution strategy.

These hypotheses offer a mechanistic account of how retrieval practice (via problem-solving practice) can facilitate problem-solving transfer. Nevertheless, these ideas have not been empirically examined and are presently open questions.

On the other hand, research on the worked example effect has found evidence against the benefits of retrieval practice on problem-solving tasks, as subjects who study worked examples often outperform subjects who engage in problem-solving practice (Cooper & Sweller, 1987; Sweller & Cooper, 1985; Van Gog & Kester, 2012; Van Gog et al., 2011). However, these studies often do not provide feedback with problem-solving practice. Feedback is critical for learning (Benassi et al., 2014) and is necessary for complex concept acquisition (Corral & Carpenter, 2020). Thus, not presenting feedback during problem-solving practice might undermine learning. In such cases, it is unclear whether the results are due to the advantage of studying worked examples over problem-solving practice, or if those results are driven by differences in feedback presentation.

Recent studies have applied retrieval practice to problem solving by having subjects read a problem scenario and retrieve its surface details (instead of solving the problem; Hostetter et al., 2018; Peterson & Wissman, 2018). Although this approach has not yielded a clear benefit of retrieval over study, retrieving a problem’s surface features can lead to forming incorrect representations, which can inhibit learning and transfer (Anderson, 1993; Corral & Jones, 2014; Holyoak & Koh, 1987; Ross, 1987, 1989; Sweller et al., 1983).

In the present paper, we explore the effects of retrieval practice and example study on problem-solving transfer through a design that addresses the aforementioned limitations. We report four experiments that compare problem-solving practice and example study. If problem-solving practice enhances memory for a solution strategy and when to apply it, then problem-solving practice should lead to the best performance on a posttest involving application of the solution strategy to a new problem.

Experiment 1

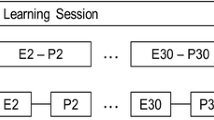

Subjects were presented four analogous problems from Catrambone and Holyoak (1989). First, subjects were asked to study a single problem scenario with the solution strategy. Subjects in the example condition were then presented two more example problems to study, whereas subjects in the problem-solving practice condition were asked to solve two problems, each followed by correct-answer feedback. Subjects returned 1 week later and completed a posttest that consisted of a novel problem.

Methods

Subjects

One hundred thirty-eight undergraduate students from Iowa State University (ISU) participated in this experiment for course credit in an introductory psychology course. Approximately 67% of students who attend ISU are 21 years of age or younger, approximately 43% identify as female, and approximately 75% are White.

Thirty-one subjects did not return for the second part of the experiment (i.e., the posttest) and were not included in any of the analyses. All reported analyses were thus based on the remaining 106 subjects: (a) problem-solving practice (n = 54) and (b) example study (n = 52).

Experiments 1–3 were approved by the Institutional Review Board at ISU. The sample sizes for Experiments 1–3 were approximated based on an a priori power analysis with 80% power to detect a medium effect size (f = .25; α = .05).
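For orientation, the effect size metric in the power analysis can be related to more familiar quantities. The block below is a sketch using the standard textbook definitions of Cohen's f and the noncentrality parameter of the F test; the symbols σ_m (standard deviation of the group means), σ (within-group standard deviation), N (total sample size), and λ are not taken from the source, and the software and settings behind the reported power analysis are not stated there.

```latex
% Cohen's f and the noncentrality parameter of the F test
% (standard definitions; an assumption about the metric used, not the authors' derivation)
f = \frac{\sigma_{m}}{\sigma}, \qquad \lambda = f^{2} N

% Special case: two equal-sized groups with standardized mean difference d
f = \frac{d}{2} \quad\Rightarrow\quad f = .25 \;\Leftrightarrow\; d = .50
```

Under these definitions, the medium effect of f = .25 targeted here corresponds to a half standard deviation difference between two groups.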

Design and materials

Subjects were randomly assigned to one of two conditions: (a) problem-solving practice and (b) example study. All instructions and materials were presented on a computer monitor on a white background at the center of the screen. Subjects entered all responses using a computer keyboard and mouse.

The materials consisted of four analogous problems (see Fig. 1), which were taken from Catrambone and Holyoak (1989; also see Gick & Holyoak, 1980, 1983). These problems consisted of Duncker’s (1945) radiation problem and three analogs. These problems all differ superficially but share the same structure, wherein there are enough resources/forces in the problem scenario to reach the problem’s goal state, but to do so, those resources/forces must be fully concentrated on a given target, and there is an obstruction that prevents this from occurring. Due to their shared structure, these problems can be solved by using the same solution strategy, wherein the resources/forces in the problem scenario are divided along alternate paths and then converge simultaneously on the specified target (i.e., a divide and converge strategy).

The correct response for each problem was broken down into three components: (a) dividing the resources/forces in the problem, (b) sending resources/forces down different paths that surround the target location, and (c) the resources/forces converging on the target location simultaneously (for a similar approach to scoring these types of problems, see Snoddy & Kurtz, 2021). Responses were scored on a scale of 0–1. A response was scored as fully correct if all three of these solution components were included. Partial credit was awarded for responses that included one (1/3 partial credit) or two (2/3 partial credit) of these solution components. Posttest responses were scored blindly to condition; responses from the learning phase from subjects in the problem-solving practice condition were scored blindly to the order that the problems were presented. This same scoring scheme was used for Experiments 2–4.
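The scoring rule described above can be sketched in a few lines of Python. This is an illustration only: the component labels and the function name are hypothetical, and it assumes a human rater has already decided which components a response contains.

```python
from fractions import Fraction

# The three solution components described in the text. The short labels are
# hypothetical names chosen for this sketch, not the authors' coding scheme.
COMPONENTS = ("divide", "separate_paths", "converge")


def score_response(components_present):
    """Return a 0-1 score: one third of a point per distinct component found."""
    found = set(components_present) & set(COMPONENTS)
    return Fraction(len(found), len(COMPONENTS))


if __name__ == "__main__":
    # A response that divides the forces and has them converge, but never
    # mentions sending them along different paths, earns 2/3 partial credit.
    print(score_response(["divide", "converge"]))
```

Using exact fractions keeps the partial-credit values (1/3, 2/3) free of floating-point rounding when scores are later averaged.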

Procedure

This experiment had two parts: The learning phase occurred in the first part and the posttest occurred in the second part, 1 week later. During the learning phase, all subjects were presented with three of the four problems (one at a time). At the beginning of the learning phase, all subjects were presented with one of these problems along with the corresponding solution (as shown in Fig. 1) and were asked to carefully read and study them.

Subjects in the example study condition were then shown two more of these example problems (one at a time), along with their corresponding solutions. Subjects in the problem-solving practice condition, after being shown the first example problem, were asked to solve two problems (one at a time). These subjects were asked to type their solution into a textbox, which was presented directly beneath the scenario. After responding, these subjects were presented with correct-answer feedback (as shown in Fig. 1), wherein they were shown the solution to the problem and were asked to carefully study the problem and its solution. Subjects in both conditions were therefore presented identical materials and feedback. Thus, the only difference between these conditions was whether subjects were asked to solve the problem prior to being shown its solution.

All aspects of the experiment (i.e., example and feedback study, problem solving) were self-paced. To move to the next problem, a prompt and continue button were presented near the right side of the bottom of the screen, which notified subjects that they could move on when they were ready by clicking on this button. For each problem that subjects were asked to solve (i.e., problem-solving practice and posttest problems), they were required to type a response into the textbox before they were allowed to see the correct solution (applicable for problem-solving practice problems) or move on (applicable for posttest problems).

After completing the learning phase, all subjects were thanked for their participation and were presented with a prompt reminding them to return 1 week later for the second part of the experiment. Table 1 shows mean completion times on the learning phase partitioned by training condition for each experiment. Subjects took approximately 8 min to complete the learning phase (M = 8.03 min).

Upon returning 1 week later, all subjects were given a posttest in which they were asked to solve one problem. For all subjects, the posttest problem that they were presented was the problem that was withheld during the learning phase. The order in which the problems were presented was randomized for each subject but was constrained by pseudo counterbalancing, wherein the same presentation orders were used in each condition equally (see Notes). Thus, the order in which the problem scenarios were presented was identical across training conditions. Table 2 shows mean posttest performance for each problem scenario (collapsing across training conditions).
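One way to read the pseudo counterbalancing described above is that a set of random presentation orders is drawn once and then reused in every condition. The sketch below illustrates that reading only; the problem labels, condition names, function names, and seed are placeholders, and the authors' actual procedure may have differed.

```python
import random

PROBLEMS = ["A", "B", "C", "D"]  # placeholder labels for the four analogs
CONDITIONS = ["problem_solving_practice", "example_study"]


def make_orders(n_orders, seed=0):
    """Draw random presentation orders of all four problems."""
    rng = random.Random(seed)
    return [rng.sample(PROBLEMS, k=len(PROBLEMS)) for _ in range(n_orders)]


def build_schedule(n_orders, seed=0):
    """Reuse the same list of orders in every condition (assumed design)."""
    orders = make_orders(n_orders, seed)
    schedule = []
    for condition in CONDITIONS:
        for order in orders:
            schedule.append({
                "condition": condition,
                "learning": order[:3],  # first problem is the studied example
                "posttest": order[3],   # the problem withheld during learning
            })
    return schedule


if __name__ == "__main__":
    for row in build_schedule(n_orders=3):
        print(row)
```

Because both conditions draw from the same list, any effect of a particular problem order is spread equally across conditions rather than confounded with training type.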

Results and discussion

Table 3 shows mean performance for each problem that was solved in the learning phase and the posttest across each experiment. Table 4 shows mean performance on the posttest partitioned by condition in each experiment. Because time spent on the learning phase was allowed to vary across conditions, it was included as a covariate in all experiments.

An analysis of covariance (ANCOVA; with time on the learning phase as a covariate) revealed no reliable posttest differences between conditions (see top row of Table 4), F(1,103) = 0.028, p = .868, MSE = .119, ηp² = .000. Furthermore, a Bayesian version of this ANCOVA found support for the null hypothesis (BF = .209), as these results were 4.80 times more likely to occur under the null model. Thus, when the problem-solving and example study conditions were carefully controlled and only differed on whether subjects attempted to solve the problems, subjects in both conditions produced similar levels of transfer on the posttest.
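The "times more likely under the null" figure is the reciprocal of the reported Bayes factor, assuming the reported BF expresses evidence for the alternative relative to the null (often written BF10). The snippet below is a minimal sketch of that conversion; the function name is hypothetical.

```python
def bf01_from_bf10(bf10):
    """Invert a Bayes factor so that it expresses evidence for the null."""
    if bf10 <= 0:
        raise ValueError("A Bayes factor must be positive.")
    return 1.0 / bf10


if __name__ == "__main__":
    reported_bf10 = 0.209  # Experiment 1 value as printed (already rounded)
    print(round(bf01_from_bf10(reported_bf10), 2))
```

Inverting the rounded value gives roughly 4.78 rather than the reported 4.80; a gap of this size is what one would expect if the reported ratio was computed from the unrounded Bayes factor.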

Experiment 2

In Experiment 1, the posttest occurred 1 week after the learning phase. One possibility is that differences in learning exist between the training conditions, but that information was forgotten over the delay, which obscured differences in posttest performance. Thus, we conducted a second experiment, identical to Experiment 1, except that the posttest was immediate.

Methods

Subjects and procedure

One hundred fifteen undergraduate students from ISU participated in this experiment for course credit in an introductory psychology course. Aside from replacing the delayed posttest with an immediate posttest, the design, materials, and procedures were identical to Experiment 1. Subjects took approximately 11 min to complete the learning phase (M = 10.62 min; see second row of Table 1). After completing the learning phase, all subjects were notified that they would be shown one more scenario and would be required to solve it. Subjects were then given an immediate posttest, which consisted of a novel problem scenario.

Results and discussion

An ANCOVA (with learning phase time as a covariate) revealed no reliable posttest differences between conditions, F(1,112) = 3.570, p = .062, MSE = .117, ηp² = .031. A follow-up Bayesian version of this ANCOVA found support for the null hypothesis (BF = .278), as the results were 3.60 times more likely to occur under the null model. These findings replicate the results from Experiment 1 and demonstrate that when the training conditions are carefully controlled, both produce similar levels of transfer on an immediate posttest.

Experiment 3

One possibility is that the results from Experiments 1 and 2 were due to floor effects, wherein subjects failed to learn enough during the learning phase to solve novel, analogous problems. Another possibility is that for problem-solving practice to be effective, learners must have enough knowledge about the problem type before engaging in problem solving (see Corral et al., 2020). Subjects in the problem-solving practice condition were only presented one example to study, which may have been insufficient for them to learn enough about the problem type to benefit from problem-solving practice.

Thus, in addition to the problem-solving practice and example study conditions, Experiment 3 included a control condition, wherein subjects attempted to solve each of the four problems, but were not shown any examples nor provided feedback. This condition provides a baseline measure of how well subjects can solve these problems without any training, and thus allows for a direct assessment of whether the training conditions produce sufficient learning to facilitate the transfer of knowledge to analogous, novel scenarios. Furthermore, to allow subjects greater opportunity to acquire the necessary knowledge about the to-be-learned problem type before engaging in problem-solving practice, a mixed study condition was included, in which subjects studied two examples before attempting to solve a problem.

If learning occurs during training, subjects who receive training should outperform control subjects on the posttest. Furthermore, if subjects require more than one example to benefit from problem-solving practice, then perhaps studying a second example before engaging in problem solving is beneficial. If so, subjects in the mixed study condition should perform best on the posttest.

Methods

Subjects

Two hundred twenty-eight undergraduate students from ISU participated in this experiment for course credit in an introductory psychology course.

Design, materials, and procedure

Subjects were randomly assigned to one of four conditions: (a) mixed study (n = 58), (b) problem-solving practice (n = 56), (c) example study (n = 58), and (d) control (n = 56). The example study and problem-solving practice conditions were identical to those in Experiments 1 and 2.

In the mixed study condition, after subjects were presented with the first example for study, subjects were shown a second example (as in the example study condition). Next, these subjects were presented with a third problem and were asked to solve it. After entering a response, these subjects were shown the correct solution and were asked to study it (as in the problem-solving practice condition). Subjects took approximately 7 min to complete the learning phase (M = 7.45 min; see third row of Table 1).

In the control condition, subjects were asked to solve four problems (one at a time); no feedback was presented on any of these problems. All other procedures and materials were identical to Experiment 2.

Results and discussion

Control versus training conditions

For problems the control subjects completed, a repeated-measures ANOVA revealed no differences in performance between the first (M = .208, SE = .039), second (M = .220, SE = .044), third (M = .226, SE = .040), and fourth problems (M = .232, SE = .046), F(3,165) = 0.057, p = .982, MSE = 0.102, ηp² = .001. For this reason, control subjects’ mean performance on these problems was compared to training subjects’ posttest performance.

An ANOVA revealed that subjects in the training conditions performed better on the posttest than control subjects, F(3,224) = 6.380, p < .001, MSE = 0.110, ηp² = .079; this outcome occurred for each of the training conditions (all ps < .007 and all ds > 0.611; see unadjusted means in Table 4). Thus, subjects in each training condition were able to learn and comprehend the problem solutions well enough to transfer this knowledge to novel, analogous problems.

Training conditions

An ANCOVA (with time on the learning phase as a covariate) revealed no posttest differences among the training conditions, F(2,168) = 1.952, p = .145, MSE = 0.123, ηp² = .023 (see third row of Table 4). Moreover, a Bayesian version of this ANCOVA found support for the null hypothesis (BF = .297), as the results were 3.37 times more likely to occur under the null model. Given that subjects in the training conditions demonstrated better problem solving than control subjects, these findings indicate that each of the training conditions facilitates learning and transfer, but does so to a comparable degree.
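As a quick check on the reported degrees of freedom, the error term of a one-way ANCOVA loses one degree of freedom per group and one per covariate. The worked line below applies that standard rule to the three training conditions (n = 58, 56, and 58); the symbols N, k, and c are introduced here for illustration and do not appear in the source.

```latex
% N = total subjects in the training conditions, k = number of groups,
% c = number of covariates (here, learning phase time)
df_{\text{error}} = N - k - c = (58 + 56 + 58) - 3 - 1 = 172 - 4 = 168
```

This matches the denominator degrees of freedom in the reported F(2,168).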

Experiment 4

Experiments 1–3 show that subjects who engage in problem-solving practice do not transfer solutions to new problems better than example study subjects. Better memory of the content that learners practice retrieving is arguably the primary mechanism through which retrieval practice benefits learning (Butler et al., 2017; Pan & Rickard, 2018). Thus, if subjects who engage in problem-solving practice do not have better memory of the problem’s solution strategy than example study subjects, it would highlight a critical limitation in using retrieval practice to improve problem solving. Experiments 1–3 do not reveal whether problem-solving practice fails to benefit memory for the problem solution, application of that solution to a new problem, or both.

We therefore conducted a fourth experiment, which was similar to Experiment 3, but did not include a control condition. After the posttest, subjects were asked to solve the problems they were presented with during the learning phase (i.e., repeated problems). If problem-solving practice benefits memory of solution strategies, subjects in the problem-solving practice and mixed study conditions should outperform example study subjects on repeated problems.

Methods

Subjects

Three hundred forty-one undergraduate students from Syracuse University participated in this experiment for course credit in an introductory psychology course. This experiment was approved by the Institutional Review Board at Syracuse University. The experiment site was changed from the previous experiments because the first author changed institutions, which afforded the opportunity to increase our external validity and extend the findings from the previous experiments to a different and more diverse population. Approximately 61% of students who attend Syracuse University are 21 years of age or younger, 54% identify as female, and approximately 56% are White.

To decrease the chance of committing a Type II error, the present experiment adopted a more conservative a priori power analysis than Experiments 1–3. We based the sample size for Experiment 4 on an a priori power analysis with at least 90% power to detect a small-medium effect size (f = .20; α = .05).

Design, materials, and procedure

Subjects were randomly assigned to one of three conditions: (a) example study (n = 116), (b) problem-solving practice (n = 109), and (c) mixed study (n = 116). Apart from not including a control condition, through the posttest, the design and procedure in Experiment 4 were identical to those of Experiment 3. Subjects took approximately 10 min to complete the learning phase (M = 10.34 min; see fourth row of Table 1).

After the posttest, all subjects were asked to solve the same problem scenarios that they were presented with during the learning phase. The order in which these problems were presented was randomized for each subject. No feedback was presented on repeated problems.

Lastly, to get a better sense of subjects’ knowledge about the problems, we asked them two supplementary questions: (a) whether they recognized any connection or commonality among the problem scenarios, and if so, what it was (structural similarity question), and (b) whether they used a common rule or solution for solving the problems, and if so, what it was (solution similarity question). For each of these questions, subjects were asked to type their response into a textbox, which was located directly beneath the question.

Scoring of similarity questions

Both structural and solution similarity questions were scored on a scale of 0–4. As noted earlier, the problem scenarios consist of the same relational structure, such that there are (a) sufficient resources/forces to reach the goal state, but (b) doing so requires concentrating all available resources on a given point, and (c) there is an obstacle that prevents this from happening. Accordingly, these problems can be solved using the same solution strategy, as the (a) resources/forces in the scenario must be partitioned and (b) sent along different routes and then (c) converge simultaneously on the target. Thus, there are three shared structural components in the problem scenarios and three corresponding shared components in the solution strategy.

Structural similarity questions. For the structural similarity questions, subjects received a 0 if they reported not recognizing any commonalities among the problem scenarios; subjects received a 1 if they reported noticing a commonality, but did not note any of the problems’ structural or solution components, a 2 if they noted one of the problems’ structural or solution components, a 3 if they noted two of the problems’ structural or solution components, and a 4 if they noted three of the problems’ structural or solution components.

Solution similarity questions. For the solution similarity questions, subjects received a 0 if they reported not using any similar or common solution to solve the problems; subjects received a 1 if they reported using a common or similar solution, but did not note any of the problems’ solution components, a 2 if they noted one of the problems’ solution components, a 3 if they noted two of the problems’ solution components, and a 4 if they noted three of the problems’ solution components. Table 5 includes mean scores on both similarity questions partitioned by training condition.
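Both similarity rubrics follow the same 0–4 pattern: no reported commonality scores 0, and a reported commonality scores 1 plus the number of components noted. The sketch below encodes that pattern under the assumption that a rater has already judged whether a commonality was reported and counted the relevant components (from zero to three); the function and parameter names are hypothetical.

```python
def similarity_score(reported_commonality, n_components_noted):
    """Score a similarity response on the 0-4 scale described in the text."""
    if not 0 <= n_components_noted <= 3:
        raise ValueError("n_components_noted must be between 0 and 3.")
    if not reported_commonality:
        # Subject reported no commonality (or no common solution) at all.
        return 0
    # 1 point for reporting a commonality, plus 1 per component noted.
    return 1 + n_components_noted


if __name__ == "__main__":
    print(similarity_score(True, 2))  # commonality reported, two components noted
```

Which components count toward the total differs by question (structural or solution components for the structural question, solution components only for the solution question), so that judgment stays with the rater rather than in the function.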

Results and discussion

Figure 2 shows mean performance on the posttest and repeated problems for each condition. To examine performance differences among the training conditions, we conducted a mixed ANCOVA, with training condition as a between-subjects factor (mixed study vs. problem-solving practice vs. example study), learning phase time as a covariate, and test phase as a within-subjects factor (posttest vs. repeated problems). A reliable interaction was observed between the training conditions and the test phase, F(2,337) = 5.29, p = .005, MSE = 0.056, ηp² = .030, such that differences in performance among conditions depended on test phase.

Fig. 2. Mean performance and standard errors of the mean on the posttest and repeated problems for each condition in Experiment 4

Specifically, no performance differences were observed on the posttest, F(2,337) = 0.476, p = .622, MSE = 0.128, ηp² = .003. Additionally, a follow-up Bayesian ANCOVA (as in Experiment 3) revealed very strong evidence for the null hypothesis (BF = .014), as the posttest results were 73.39 times more likely to occur under the null model.

However, performance differences did emerge on repeated problems, F(2,337) = 3.36, p = .036, MSE = 0.093, ηp² = .020, as subjects in the mixed study and problem-solving practice conditions performed better on repeated problems than subjects in the example study condition (both ps < .050).

Thus, subjects who engaged in problem solving during learning (i.e., mixed study and problem-solving practice conditions) were better able to retrieve and apply the solutions to those same problems later than subjects in the example study condition. Nevertheless, this benefit did not lead to better transfer on the posttest.

General discussion

Across four experiments (also see the combined analysis in the Appendix), subjects in the training conditions performed comparably on the posttest. Experiment 3 suggests that this finding is due to the training conditions producing similar levels of transfer, as subjects in all of the training conditions outperformed control subjects on the posttest. This finding indicates that the training conditions facilitated the transfer of learning (otherwise posttest differences between the training and control conditions should not have emerged). Further support for this conclusion comes from subjects in the problem-solving practice and mixed study conditions generally performing better on novel problems as the study progressed (see Table 3). Thus, the null findings reported among the training conditions were the result of these subjects being able to transfer what they learned to a comparable degree.

Furthermore, Experiment 4 showed that subjects who engaged in problem-solving practice performed better than subjects who engaged in example study on repeated problems. This outcome can be thought of as a type of testing effect, as subjects who had the opportunity to practice retrieving solution strategies during training were better able to recall those solutions than subjects who studied examples. This result is similar to recent data showing that problem-solving practice benefits subsequent performance on identical problems (Yeo & Fazio, 2019). Critically, however, this superior memory was not enough to produce differential posttest transfer among the training conditions.

These results are in line with recent work suggesting that memory of to-be-learned material is necessary, but insufficient for the transfer of learning (Butler et al., 2017). That is, memory alone does not facilitate transfer unless learners recognize the relevance of the learned information in the current situation.

Indeed, in Experiment 4, although problem-solving practice and mixed study subjects had the best memory for the solution strategies, the similarity questions revealed that there were no condition differences in subjects’ recognizing the relevance of these solution strategies across different problem scenarios (see Table 5). These findings might explain why transfer more often occurs under conditions where learners are provided with hints about the relevance of learned information to a current situation (Barnett & Ceci, 2002; Butler, 2010; Gick & Holyoak, 1983), as learners often do not recognize that their prior knowledge is applicable.

Thus, even when learners have superior knowledge of a problem’s solution strategy, this knowledge is not enough to facilitate the transfer of learning. This takeaway highlights that other aspects of problem solving might be particularly important for facilitating transfer (e.g., recognizing a problem type’s structure; see Corral & Kurtz, 2023). Accordingly, work in mathematics suggests that students do not struggle to learn a problem’s solution strategy or how to use it, but rather to recognize when to apply it (Mayer, 1998). Therefore, although retrieval practice can aid learners’ knowledge of solution strategies, our findings suggest that additional training is needed to facilitate learners’ application of that knowledge to new situations.

These findings offer important new insights into the mechanistic relationship between retrieval practice and the transfer of learning, and how the former impacts the latter. Retrieval practice seems to provide a greater benefit than studying to the learning and memory component that is involved in the transfer of learning. However, one limitation is that this benefit might be restricted to the information that learners attempt to retrieve (e.g., solution strategies). Indeed, when compared to example study, retrieval practice does not appear to better improve the recognition component of knowledge transfer.

Critically, the recognition component of transfer seems to give rise to the inert knowledge problem (Snoddy & Kurtz, 2021), and has been posited to be the most central component in the successful transfer of learning (Corral & Kurtz, 2023). It is thus particularly noteworthy that retrieval (via problem-solving practice) does not appear to improve the recognition of when to apply a corresponding solution strategy any more than studying examples does. One possibility is that for problem-solving practice to produce a greater benefit to transfer than example study, it must better aid learners’ recognition of when to apply the corresponding solution strategy.

The present results differ from the multitude of studies showing advantages of retrieval practice over restudy (for reviews, see Agarwal et al., 2021; Pan & Rickard, 2018). It is worth noting, however, that most studies on retrieval practice are based on tasks involving recall of information from memory (an advantage for which we also find evidence in Experiment 4); tasks involving transfer of learning – and in particular transfer of a solution strategy to novel scenarios – have not been thoroughly explored with retrieval practice (see Carpenter et al., 2020).

The current results thus contribute critical new data showing that retrieval practice benefits memory, but not necessarily transfer. These findings point to potential boundary conditions and limitations to the benefits that retrieval practice provides. They also highlight a distinction between memory and the transfer of learning that theoreticians often overlook. Indeed, theories on retrieval practice primarily focus on how retrieval engages mechanisms that strengthen memory of the information that is retrieved (Carpenter et al., 2022; Pan & Rickard, 2018). However, these theories do not directly explain how this enhanced memory might facilitate the transfer of learning, nor (and perhaps more importantly) how it might aid learners in recognizing when to apply their corresponding knowledge. The present findings thus call for theoreticians to consider more careful and nuanced hypotheses on the relationship between retrieval practice and the transfer of learning.

Data Availability

Our data are not publicly available, but will be made available to interested parties upon request to the authors.

Notes

It is important to note that studies that compare problem-solving practice to example study (e.g., Cooper & Sweller, 1987; Sweller & Cooper, 1985; Van Gog & Kester, 2012; Van Gog et al., 2011; also see Hostetter et al., 2018; Peterson & Wissman, 2018) typically do not randomize the presentation order of the problems, which may introduce order effects that differentially interact with the training conditions. Randomizing the presentation order of the problems thus affords a more rigorous form of experimental control.

A main effect of experiment was observed, F(3, 723) = 4.07, p = .007, MSE = 0.127, ηp² = .017, but no interaction occurred between training condition and experiment, F(4, 723) = 1.19, p = .314, MSE = 0.127, ηp² = .007.

References

Agarwal, P. K., Nunes, L. D., & Blunt, J. R. (2021). Retrieval practice consistently benefits student learning: A systematic review of applied research in schools and classrooms. Educational Psychology Review, 33, 1409–1453.

Anderson, J. R. (1993). Problem solving and learning. American Psychologist, 48, 35–44. https://doi.org/10.1037/0003-066X.48.1.35

Barnett, S. M., & Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychological Bulletin, 128, 612–637. https://doi.org/10.1037/0033-2909.128.4.612

Benassi, V., Overson, C., & Hakala, C. (Eds.). (2014). Applying science of learning in education: Infusing psychological science into the curriculum. American Psychological Association.

Bjork, R. A., & Bjork, E. L. (1992). A new theory of disuse and an old theory of stimulus fluctuation. In A. F. Healy, S. M. Kosslyn, & R. M. Shiffrin (Eds.), Essays in honor of William K. Estes, Vol. 1. From learning theory to connectionist theory; Vol. 2. From learning processes to cognitive processes (pp. 35–67). Lawrence Erlbaum Associates Inc.

Butler, A. C. (2010). Repeated testing produces superior transfer of learning relative to repeated studying. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 1118–1133. https://doi.org/10.1037/a0019902

Butler, A. C., Black-Maier, A. C., Raley, N. D., & Marsh, E. J. (2017). Retrieving and applying knowledge to different examples promotes transfer of learning. Journal of Experimental Psychology: Applied, 23, 433–446.

Carrier, M., & Pashler, H. (1992). The influence of retrieval on retention. Memory & Cognition, 20, 632–642. https://doi.org/10.3758/bf03202713

Carpenter, S. K. (2009). Cue strength as a moderator of the testing effect: The benefits of elaborative retrieval. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 1563–1569.

Carpenter, S. K. (2011). Semantic information activated during retrieval contributes to later retention: Support for the mediator effectiveness hypothesis of the testing effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1547–1552.

Carpenter, S. K. (2012). Testing enhances the transfer of learning. Current Directions in Psychological Science, 21, 279–283.

Carpenter, S. K., Endres, T., & Hui, L. (2020). Students’ use of retrieval in self-regulated learning: Implications for monitoring and regulating effortful learning experiences. Educational Psychology Review, 32, 1029–1054. https://doi.org/10.1007/s10648-020-09562-w

Carpenter, S. K., Pan, S. C., & Butler, A. C. (2022). The science of effective learning with spacing and retrieval practice. Nature Reviews Psychology, 1, 496–511.

Catrambone, R., & Holyoak, K. J. (1989). Overcoming contextual limitations on problem solving transfer. Journal of Experimental Psychology: Learning, Memory, and Cognition, 15, 1147–1156.

Corral, D., & Carpenter, S. K. (2020). Facilitating transfer through incorrect examples and explanatory feedback. Quarterly Journal of Experimental Psychology, 73, 1340–1359.

Corral, D., & Jones, M. (2014). The effects of relational structure on analogical learning. Cognition, 132, 280–300.

Corral, D., & Kurtz, K. (2023). Improving the transfer of learning in education through category learning. Manuscript submitted for publication.

Corral, D., Quilici, J. L., & Rutchick, A. M. (2020). The effects of early schema acquisition on mathematical problem solving. Psychological Research, 84, 1495–1506.

Corral, D., Carpenter, S. K., & Clingan-Siverly, S. (2021). The effects of delayed versus immediate explanatory feedback on complex concept learning. Quarterly Journal of Experimental Psychology, 74, 786–799.

Cooper, G., & Sweller, J. (1987). Effects of schema acquisition and rule automation on mathematical problem-solving transfer. Journal of Educational Psychology, 79, 347–362.

Duncker, K. (1945). On problem-solving (L. S. Lees, Trans.). Psychological Monographs, 58, i–113. https://doi.org/10.1037/h0093599

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14, 4–58.

Hostetter, A. B., Penix, E. A., Norman, M. Z., Batsell, W. R., & Carr, T. H. (2018). The role of retrieval practice in memory and analogical problem-solving. Quarterly Journal of Experimental Psychology, 72, 858–871. https://doi.org/10.1177/1747021818771928

Gick, M. L., & Holyoak, K. J. (1980). Analogical problem solving. Cognitive Psychology, 12, 306–355.

Gick, M. L., & Holyoak, K. J. (1983). Schema induction and analogical transfer. Cognitive Psychology, 15, 1–38. https://doi.org/10.1016/0010-0285(83)90002-6

Gick, M. L., & Holyoak, K. J. (1987). The cognitive basis of knowledge transfer. In S. M. Cormier & J. D. Hagman (Eds.), Transfer of learning: Contemporary research and applications (pp. 9–46). Academic Press.

Holyoak, K. J., & Koh, K. (1987). Surface and structural similarity in analogical transfer. Memory & Cognition, 15, 332–340. https://doi.org/10.3758/BF03197035

Mayer, R. (1998). Cognitive, metacognitive, and motivational aspects of problem solving. Instructional Science, 26, 49–63. https://doi.org/10.1023/a:1003088013286

Pan, S. C., & Rickard, T. C. (2018). Transfer of test-enhanced learning: Meta-analytic review and synthesis. Psychological Bulletin, 144, 710–756.

Peterson, D. J., & Wissman, K. T. (2018). The testing effect and analogical problem-solving. Memory, 26, 1460–1466.

Roediger, H. L., & Butler, A. C. (2011). The critical role of retrieval practice in long-term retention. Trends in Cognitive Sciences, 15, 20–27.

Ross, B. H. (1987). This is like that: The use of earlier problems and the separation of similarity effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 13, 629–639. https://doi.org/10.1037/0278-7393.13.4.629

Ross, B. H. (1989). Distinguishing types of superficial similarities: Different effects on the access and use of earlier problems. Journal of Experimental Psychology: Learning, Memory, and Cognition, 15, 456–468. https://doi.org/10.1037/0278-7393.15.3.456

Snoddy, S., & Kurtz, K. J. (2021). Preventing inert knowledge: Category status promotes spontaneous structure-based retrieval of prior knowledge. Journal of Experimental Psychology: Learning, Memory, and Cognition, 47, 571–607. https://doi.org/10.1037/xlm0000974

Sweller, J., & Cooper, G. (1985). The use of worked examples as a substitute for problem solving in learning algebra. Cognition and Instruction, 2, 59–89.

Sweller, J., Mawer, R., & Ward, M. (1983). Development of expertise in mathematical problem solving. Journal of Experimental Psychology: General, 112, 639–661.

Whitehead, A. N. (1929). The aims of education and other essays. Macmillan.

Van Gog, T., & Kester, L. (2012). A test of the testing effect: Acquiring problem-solving skills from worked examples. Cognitive Science, 36, 1–10.

Van Gog, T., Kester, L., & Paas, F. (2011). Effects of worked examples, example-problem, and problem-example pairs on novices’ learning. Contemporary Educational Psychology, 36, 212–218.

Yeo, D. J., & Fazio, L. K. (2019). The optimal learning strategy depends on learning goals and processes: Retrieval practice versus worked examples. Journal of Educational Psychology, 111, 73–90. https://doi.org/10.1037/edu0000268

Acknowledgements

This material is based upon work supported by the James S. McDonnell Foundation 21st Century Science Initiative in Understanding Human Cognition, Collaborative Grant No. 220020483. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the McDonnell Foundation.

Portions of this work were presented at the 60th Annual Meeting of The Psychonomic Society, Montreal, Canada.

Additional information

Open practices statements

None of the experiments were preregistered and all of the data are available upon request.

Appendix

Combined analyses (Experiments 1–4)

To further increase confidence in our conclusions, we conducted a set of supplementary analyses, in which we pooled the data from Experiments 1–4 into a single dataset. In total, the pooled dataset comprised 734 subjects: (a) mixed study (n = 174), (b) problem-solving practice (n = 277), and (c) example study (n = 283).

We conducted a 3 × 4 ANCOVA with training condition (example study vs. problem-solving practice vs. mixed study) and experiment (Experiment 1 vs. Experiment 2 vs. Experiment 3 vs. Experiment 4) as between-subject factors, time on the learning phase as a covariate, and posttest performance as the dependent measure. This analysis revealed no differences in performance among the training conditions on the posttest (see Table 4 for condition means), F(2, 723) = 1.16, p = .315, MSE = 0.127, ηp² = .003 (Footnote 4). Moreover, a Bayesian version of this ANCOVA found strong support for the null hypothesis (BF = .041), as these findings were 24.10 times more likely to occur under the null model. These findings therefore confirm the patterns from the four experiments and suggest that the three training conditions produce similar amounts of learning and transfer during problem solving.
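As a reader-side consistency check, the reported degrees of freedom and effect sizes can be recomputed from the values given in this appendix and in the Notes. The sketch below is illustrative only and is not the authors' analysis code. The function names are hypothetical, and the count of 10 occupied design cells is an assumption inferred from the abstract, which states that the mixed study condition appeared only in Experiments 3 and 4. The conversion from F to partial eta squared is the standard identity F × df_effect / (F × df_effect + df_error).

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def ancova_error_df(n_subjects, n_cells, n_covariates):
    """Error df for a between-subjects ANCOVA: N minus occupied cells minus covariates."""
    return n_subjects - n_cells - n_covariates


# Condition sample sizes as reported in the appendix.
n_total = 174 + 277 + 283

# ASSUMPTION (inferred from the abstract, not stated in the appendix):
# example study and problem-solving practice ran in all four experiments,
# mixed study only in Experiments 3 and 4, giving 2 * 4 + 2 occupied cells.
occupied_cells = 2 * 4 + 2

# One covariate: time on the learning phase.
df_error = ancova_error_df(n_total, occupied_cells, n_covariates=1)

# With empty cells, the interaction df is the number of occupied cells
# minus 1, minus the main-effect df of condition (2) and experiment (3).
df_interaction = occupied_cells - 1 - 2 - 3

# F ratios as reported in the appendix and in the Notes.
eta_condition = partial_eta_squared(1.16, 2, df_error)
eta_experiment = partial_eta_squared(4.07, 3, df_error)
eta_interaction = partial_eta_squared(1.19, df_interaction, df_error)

# A Bayes factor for the alternative over the null is inverted to express
# how much more likely the data are under the null model.
bf_null = 1 / 0.041

print(n_total, df_error, df_interaction)
print(round(eta_condition, 3), round(eta_experiment, 3), round(eta_interaction, 3))
print(round(bf_null, 2))
```

Under these assumptions, the recomputed error and interaction degrees of freedom match the reported F(2, 723) and F(4, 723), and the rounded effect sizes match the reported values. Inverting the rounded Bayes factor of .041 gives a figure slightly above the reported 24.10, which would be expected if the authors inverted the unrounded Bayes factor.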

About this article

Cite this article

Corral, D., Carpenter, S.K. & St. Hilaire, K.J. The effects of retrieval versus study on analogical problem solving. Psychon Bull Rev 30, 1954–1965 (2023). https://doi.org/10.3758/s13423-023-02268-4

DOI: https://doi.org/10.3758/s13423-023-02268-4