Abstract

Working memory capacity is an important psychological construct, and many real-world phenomena are strongly associated with individual differences in working memory functioning. Although working memory and attention are intertwined, several studies have recently shown that individual differences in the general ability to control attention are more strongly predictive of human behavior than working memory capacity. In this review, we argue that researchers would therefore generally be better served by studying the role of attention control rather than memory-based abilities in explaining real-world behavior and performance in humans. The review begins with a discussion of relevant literature on the nature and measurement of both working memory capacity and attention control, including recent developments in the study of individual differences in attention control. We then selectively review existing literature on the role of both working memory and attention in various applied settings and explain, in each case, why a switch in emphasis to attention control is warranted. Topics covered include psychological testing, cognitive training, education, sports, police decision-making, human factors, and disorders within clinical psychology. The review concludes with general recommendations and best practices for researchers interested in conducting studies of individual differences in attention control.

A robust finding from the cognitive psychology literature is that the amount of information humans can temporarily hold in consciousness at any given time is severely limited (e.g., Atkinson & Shiffrin, 1968; Broadbent, 1958; Cowan, 2001; Jahanshahi et al., 2008; Lachman et al., 1979; Miller, 1956; Shannon & Weaver, 1949). Miller et al. (1960) coined the term working memory to distinguish between the passive holding of information (short-term memory) versus memory involved in planning and carrying out behavior in the service of ongoing mental activity. The concept of working memory was therefore developed to refer to the more controlled and adaptive aspects of information processing. More formally, working memory can be defined as a limited capacity system that allows the temporary storage, manipulation, and maintenance of information in performing complex cognitive tasks (cf. Baddeley, 2000) and can be conceived as a description of how short-term memory is used while under cognitive load.

Baddeley and Hitch (1974; also see Baddeley, 1992) later developed the tripartite model of working memory, which heavily influenced subsequent research on working memory and human cognition more broadly. In this model, working memory consists of two storage systems—the phonological loop for verbal information and visuospatial sketchpad for visual/spatial information—and the central executive. The central executive is a flexible system that is responsible for the processing and manipulation of information, such as integrating information from the phonological loop and visuospatial sketchpad and connecting short-term memory to long-term memory (Baddeley, 2000). The central executive is also particularly important for managing the flow of information in situations of high cognitive load or demand in which capacity limits are exceeded and selective attention is required (e.g., Fukuda et al., 2016; Unsworth et al., 2004).

Working memory capacity

Definition and assessment

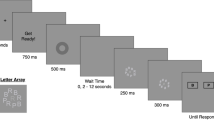

The functional capability of one’s working memory system is known as working memory capacity and is often indexed in terms of the number of units of information an individual can hold in primary memory at a given time (often referred to as span) while under cognitive load. Working memory capacity tasks are similar to simple span measures of short-term memory, in which a series of to-be-remembered stimuli are presented followed by immediate recall, but working memory measures must necessarily involve additional cognitive demand (Table 1; see Conway et al., 2005; Oberauer, 2005). The purpose of the additional demand is to prevent the respondent from rehearsing to-be-remembered information, thereby requiring them to maintain and manage the memoranda in a manner requiring more controlled processing, thus engaging the central executive. In complex span tasks (Fig. 1), this additional demand is in the form of a secondary processing (distractor) task based on the storage plus processing working memory hypothesis laid out by Baddeley and Hitch (1974). But the additional demand can come in other forms. For example, in working memory updating tasks, cognitive load is imposed via the requirement to continuously add new information into primary memory at the expense of previously relevant, but now irrelevant, information (see Fig. 2 for two examples of updating tasks).

Complex span tasks. In each task, the storage and processing components are independent. After 2–9 trials of storage + processing are presented, the examinee is asked to recall the memoranda in the order in which they were presented. Performance is often scored using the partial span score, which is simply the total number of items recalled in the correct position (see Conway et al., 2005). Pictures are to scale
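As a rough illustration of the partial span score described above, the following Python sketch counts items recalled in their correct serial position and sums across trials. The function names, the use of `None` to mark an omitted response, and the example letters are assumptions made for illustration and are not taken from any published task code.

```python
from typing import Optional, Sequence, Tuple

Trial = Tuple[Sequence[str], Sequence[Optional[str]]]


def partial_span_score(presented: Sequence[str],
                       recalled: Sequence[Optional[str]]) -> int:
    """Count items recalled in the same serial position as presented.

    `None` marks a position the examinee left blank (an assumed convention).
    """
    return sum(
        1
        for shown, given in zip(presented, recalled)
        if given is not None and shown == given
    )


def task_partial_score(trials: Sequence[Trial]) -> int:
    """Sum the partial span score over all trials of a task."""
    return sum(partial_span_score(shown, given) for shown, given in trials)


# Hypothetical data: three trials of set sizes 3, 4, and 5.
example_trials = [
    (["F", "K", "P"], ["F", "K", "P"]),                         # 3 correct
    (["Q", "J", "T", "L"], ["Q", "T", "J", "L"]),               # 2 correct
    (["H", "S", "N", "R", "Y"], ["H", None, "N", "R", None]),   # 3 correct
]
print(task_partial_score(example_trials))  # prints 8
```

Note that an item recalled in the wrong position earns no credit under this scheme, even if it was part of the presented set.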

Working memory updating tasks. a In running span, to-be-remembered stimuli are presented sequentially, and the respondent is asked to recall the last n of them in order. Here, n = 3 and the correct response is “6, 1, 3.” b In this example of mental counters, the respondent begins each trial by imagining the number “555.” Then, a series of cues quickly flash above or below lines that correspond to each counter, indicating that the respondent should update the original number by adding (flash above line) or subtracting (flash below line) 1 from the appropriate counter. In this example trial of Set Size 3, the correct response is “554.” Presentation times in updating tasks are generally quick to prevent rehearsal. Not to scale
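The correct responses in the two updating tasks can be derived mechanically, as the following Python sketch shows. The stimulus stream and the cue sequence are hypothetical values chosen to reproduce the answers quoted in the caption ("6, 1, 3" and "554"); the actual stimuli in the figure are not given in the text.

```python
from typing import List, Sequence, Tuple


def running_span_target(stream: Sequence[int], n: int) -> List[int]:
    """Return the last n items of the stream, in presentation order."""
    if n <= 0 or n > len(stream):
        raise ValueError("n must be between 1 and the stream length")
    return list(stream[-n:])


def mental_counters(start: Sequence[int],
                    cues: Sequence[Tuple[int, int]]) -> List[int]:
    """Apply a series of cues to a set of counters.

    Each cue is (counter_index, step), where step is +1 for a flash above
    the line and -1 for a flash below it.
    """
    counters = list(start)
    for index, step in cues:
        if step not in (1, -1):
            raise ValueError("step must be +1 or -1")
        counters[index] += step
    return counters


# Hypothetical stream whose last three items match the caption's answer.
print(running_span_target([4, 9, 2, 6, 1, 3], n=3))  # prints [6, 1, 3]

# Hypothetical cue sequence: first counter up, third down, first down.
print(mental_counters([5, 5, 5], [(0, 1), (2, -1), (0, -1)]))  # prints [5, 5, 4]
```

In both tasks the respondent must discard information that is no longer relevant (earlier list items, or earlier counter values), which is the source of the cognitive load.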

Psychological importance

Working memory capacity has become an important construct in cognitive psychology as it has been repeatedly shown that it is a broad and domain general ability (e.g., Kane et al., 2004) that correlates with a wide range of cognitive abilities and real-world behaviors, often above other predictors. Further, many psychopathologies and situational phenomena are marked by deficits in working memory capacity, and it is often suggested that higher levels of working memory capacity provide protective effects. The list of abilities and phenomena associated with working memory capacity is long but includes language and reading comprehension (Daneman & Carpenter, 1980; Daneman & Merikle, 1996), quantitative ability (Turner & Engle, 1989), acquisition of native language (Gathercole & Baddeley, 1989) and second language (Wen, 2015), following directions (Engle et al., 1991), reasoning ability and fluid intelligence (Engle et al., 1999; Kyllonen & Christal, 1990), attention control (Draheim et al., 2021; Shipstead et al., 2015) and everyday attention failures (Unsworth et al., 2012), long-term memory (McCabe, 2008), rejection of false memories (Leding, 2012), accuracy of eye-witness testimony (Jaschinski & Wentura, 2002), prospective memory (Brewer et al., 2010), multitasking (Redick et al., 2019), task switching (Draheim et al., 2016), emotion regulation (Schmeichel et al., 2008), performance after interruptions (Foroughi, Werner, et al., 2016c; Westbrook et al., 2018), performance during extreme sleep deprivation (Lopez et al., 2012), anxiety (Moran, 2016), depression (Nikolin et al., 2021), stress (K. Klein & Boals, 2001), schizophrenia (Forbes et al., 2009), posttraumatic stress disorder (Shaw et al., 2009), Alzheimer’s (Rosen et al., 2002), stereotype threat (Schmader & Johns, 2003), and alcoholism (Finn et al., 2002).

To provide some context for the popularity of assessing working memory capacity, our lab website has links to several versions of the complex span tasks for download. They have been downloaded thousands of times and independently translated into more than 15 languages despite the tasks requiring access to proprietary software (E-Prime) and an expensive license. According to Google Scholar, as of August 22, 2021, the methodological review and guide to measuring working memory capacity (Conway et al., 2005) has been cited more than 3,000 times, and the article that introduced the computerized version of the operation span task (Unsworth et al., 2005) has more than 2,000 citations. Further, the first two papers showing that complex span performance correlates with reading comprehension and scores on the Scholastic Aptitude Test combine for more than 12,000 citations (Daneman & Carpenter, 1980; Turner & Engle, 1989). Clearly, there is great interest in discovering and explaining associations between working memory and behavior.

Connecting working memory capacity, fluid intelligence, and attention control

One of the most notable features of working memory capacity is its substantial correlation with fluid intelligence, which is the ability to reason in novel situations (Engle et al., 1999). The precise magnitude of this relationship has been the subject of debate, but the two constructs typically share at least half of their variance at the latent level (see Kane et al., 2005; Oberauer et al., 2005). The relationship is sometimes considered to be causal in that individuals with higher levels of working memory capacity can better store and maintain representations that allow for generating and testing of hypotheses in fluid intelligence tasks (e.g., Chuderski et al., 2012; Kali, 2007; Kyllonen & Christal, 1990; Shah & Miyake, 1996; Unsworth et al., 2014; Verguts & De Boeck, 2002). But Engle (2002) proposed that attentional mechanisms largely account for individual differences in both working memory capacity and fluid intelligence, and therefore attentional mechanisms are the primary and causal reason for the relationship between the two constructs (see Barrett et al., 2004; Engle, 2002; Engle et al., 1999; Heitz et al., 2005, 2006; Shipstead et al., 2016; Unsworth & Engle, 2005; also see Burgoyne et al., 2019; Wiley et al., 2011). This executive attention view of individual differences in working memory capacity is notably compatible with K. Kovacs and Conway’s (2016) recent and novel account of intelligence called process overlap theory; for example, Conway et al. (2021) stated, “A central claim [of process overlap theory] is that domain-general executive attention processes play a critical role in intelligence, acting as a central bottleneck on task performance and a constraint on development of domain-specific cognitive abilities” (p. 2).

To map the executive attention theory of individual differences in working memory capacity to the Baddeley and Hitch (1974) model, the most important aspect of working memory is the central executive component and not the storage systems. Initial evidence of this claim came largely from extreme-groups studies showing that individuals who perform poorly on working memory capacity tasks also perform worse on attention-demanding tasks that have minimal memory demands. For example, individuals with a larger working memory capacity are better able to avoid looking at a flashing distractor in their peripheral vision to catch a target on the opposite side of the screen (Unsworth et al., 2004), quicker to narrow the size of their visual lens to only include stimuli in a target area of space (Heitz & Engle, 2007), better able to ignore their name in a dichotic listening task when it appears in the to-be-ignored channel (Conway et al., 2001), better at filtering distracting color words in Stroop tasks (Kane et al., 2001), and are less likely to experience attentional lapses (McVay & Kane, 2009).

In a recent elaboration on the executive attention view, Shipstead et al. (2016) proposed that maintenance and disengagement represent core top-down attentional mechanisms through which working memory capacity relates to fluid intelligence (Fig. 3a). According to this hypothesis, maintenance and disengagement are both necessary in performing working memory capacity and fluid intelligence tasks, but measures of working memory and fluid intelligence place differential demands on one or the other (Fig. 3b). Working memory tasks place more demand on maintenance, and fluid intelligence tasks place more emphasis on disengagement (see also Mashburn et al., 2020). While the mechanisms of maintenance and disengagement are in opposition to one another, they work in tandem to facilitate goal-directed behavior. As such, the strong relationship between working memory capacity and fluid intelligence can be explained by their common reliance on a top-down executive attention system, which regulates both maintenance and disengagement. This top-down executive attention system is how attention control is implemented, which we define as the general ability to engage in goal-directed behavior via (1) maintaining goal-relevant behavior and information, particularly in the face of distraction and interference, and (2) filtering or otherwise blocking irrelevant and inappropriate information and behavior. Therefore, what distinguishes working memory capacity from attention control is that the former places more emphasis on specifically maintaining information in primary memory, whereas attention control refers to how limited-capacity domain-general attention is applied to the management of goal-directed behavior, which may (or may not) involve the maintenance of multiple pieces of information (also see Martin, Mashburn, & Engle, 2020).

Maintenance/disengagement hypothesis. In the maintenance/disengagement hypothesis, top-down signals in the form of attention control organize maintenance and disengagement around a particular goal (a). The relative emphasis on maintenance and disengagement in carrying out these top-down goals relies largely on the nature and demands of the to-be-performed task (b). The pie charts show the hypothetical relative proportion of total performance variance attributable to specific processes. For example, working memory tasks will require more maintenance than disengagement, whereas fluid intelligence tasks will require more disengagement than maintenance. The mental counters task (illustrated in Fig. 2b) may correlate about as strongly with both fluid intelligence and working memory capacity measures because maintenance and disengagement are required to roughly the same degree. The percentages are for illustrative purposes only and should not be considered veridical

In the maintenance/disengagement hypothesis, attention control is the commonality between working memory capacity and fluid intelligence, and therefore the primary reason that these constructs (and perhaps all higher-order cognitive abilities) are related is their reliance on top-down executive attention (see Burgoyne & Engle, 2020; also see Conway et al., 2021; Rueda, 2018, for similar views). It should therefore be the case that, in most situations, attention control is a better indicator of one’s overall cognitive capability than working memory capacity and/or fluid intelligence alone. In other words, knowledge of an individual’s ability to control their attention should explain more variation in higher-order cognitive behaviors and performance than either working memory capacity or fluid intelligence.

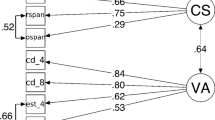

Indeed, several independent lines of research support the theoretical position that attention control underlies higher-order cognition and therefore working memory capacity’s broad predictive powers can be largely attributed to attentional factors rather than the maintenance of information specifically (e.g., Draheim et al., 2021; Fukuda et al., 2016; S. Gray et al., 2017; McCabe et al., 2010; McVay & Kane, 2012a; Rueda, 2018; Tsukahara et al., 2020). Some of these studies involve measuring various abilities and testing whether attention control can mediate (or account for) the relationships between other cognitive abilities at the latent level. Examples include Draheim et al. (2021), a large-scale correlational study of 396 students and community members in which the authors found that the strong relationship between working memory capacity and fluid intelligence was no longer statistically significant when accounting for the shared variance between the two constructs and attention control (Fig. 4). Similarly, Tsukahara et al. (2020) found in each of two independent datasets that the relationship between sensory discrimination ability and working memory capacity, and the relationship between sensory discrimination ability and fluid intelligence, were both completely accounted for by attention control. McVay and Kane (2012a) found that the relationship between working memory capacity and reading comprehension was no longer statistically significant after accounting for the shared variance with mind wandering and other attention control measures. And, finally, Frith et al. (2021) reported results suggesting that individual differences in attention control are the reason for the relationship between fluid intelligence and creativity. Partially based on these findings, we argue that attention control should demonstrate greater predictive power for cognitive behavior and real-world outcomes than working memory capacity or even fluid intelligence.

Attention control mediating the working memory capacity-fluid intelligence relationship. Structural equation model from Draheim et al. (2021) showing that the relationship between working memory capacity and fluid intelligence is not statistically significant when attention control is added as a mediator. The numbers on each path between the constructs can be likened to correlations between the latent (unobserved) abilities, with the path between working memory capacity and fluid intelligence representing the relationship between the two abilities that was left over after the contribution of attention control was partialled out. Each construct was measured with three tasks, which are shown here along with their respective factor loadings (likened to the correlation between each task and its corresponding construct). Note that this study included 10 total attention control tasks, and full mediation of the working memory capacity-fluid intelligence relationship was present with multiple combinations of accuracy-based attention control measures, but not in models involving reaction time-based attention control tasks. N = 396
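To make the idea of "partialling out" concrete, the sketch below computes a first-order partial correlation between two variables after removing what each shares with a third. This is only an observed-variable analogy: the study described above used structural equation modeling with latent factors, not this formula, and the correlation values in the example are invented for illustration rather than taken from Draheim et al. (2021).

```python
import math


def partial_correlation(r_xy: float, r_xz: float, r_yz: float) -> float:
    """Correlation between x and y after partialling out z.

    Uses the standard first-order partial correlation formula:
    (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2)).
    """
    denominator = math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))
    if denominator == 0.0:
        raise ValueError("z is perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denominator


# Hypothetical correlations (NOT values from any study):
r_wmc_gf = 0.60   # working memory capacity with fluid intelligence
r_wmc_ac = 0.80   # working memory capacity with attention control
r_gf_ac = 0.75    # fluid intelligence with attention control

residual = partial_correlation(r_wmc_gf, r_wmc_ac, r_gf_ac)
print(f"WMC-Gf correlation after removing attention control: {residual:.2f}")
```

With these made-up inputs the residual relationship is essentially zero, which is the pattern a full mediation would produce: once the third variable is accounted for, nothing is left over between the other two.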

Attention control or long-term memory?

Our argument is that individual differences in working memory capacity are primarily attentional in nature and that attention control mostly accounts for working memory capacity’s strong relationship with an array of cognitive behaviors and phenomena. But there are competing theories, and it is feasible that working memory capacity has a unique relationship to some aspects of cognitive behavior which cannot be fully accounted for by attention control (e.g., lower-level processing that depends more on short-term memory capacity than goal maintenance). Phenomena that may be driven more by individual differences in short-term and working memory capacity than by attention control include rate of encoding into long-term memory (Fukuda & Vogel, 2019), long-term associative learning (G. Jones & Macken, 2018), and creation of false memories (e.g., Peters et al., 2007). Some models and theories of working memory therefore place less emphasis on the role of attention (see Adams et al., 2018, for a review) and more on other processes, most notably long-term memory, which is often closely linked with working memory in many theoretical and descriptive models of working memory (e.g., Baddeley, 2000; Cantor & Engle, 1993; Cowan, 1988, 2008, 2017; Lewis-Peacock & Postle, 2008; Oberauer, 2002; Ruchkin et al., 2003). A well-known example is Cowan’s embedded process model, in which working memory is effectively information in long-term memory that is both activated and within the focus of attention (Cowan, 1988, 1999, 2017; also see Oberauer, 2002; Ruchkin et al., 2003). Baddeley (2000) also revised the Baddeley and Hitch (1974) multicomponent model of working memory by adding a fourth component, the episodic buffer, to incorporate the role of long-term memory in working memory (see Fig. 5).
Baddeley stated that the episodic buffer “comprises a limited capacity system that provides temporary storage of information held in a multimodal code, which is capable of binding information from the subsidiary systems, and from long-term memory, into a unitary episodic representation” (p. 417). Baddeley’s motivation for adding the episodic buffer was to account for more complex aspects of cognition in working memory and emphasize integration of the subsystems rather than their isolation. For example, several studies showed that individuals with short-term memory deficits also displayed long-term memory deficits, suggesting a stronger interaction between long-term memory and short-term memory than assumed under the original model (also see Burgess & Hitch, 2005).

Updated multicomponent model of working memory from Baddeley (2000). Baddeley updated the multicomponent model to include the episodic buffer, in large part to account for the contributions of long-term memory to working memory. It is noteworthy that, according to Baddeley, the episodic buffer is controlled by the central executive as are the original two storage systems (visuospatial sketchpad and phonological loop), and that the central executive is synonymous with attention control

Individual differences research supports the view that one particularly important aspect of working memory capacity is the ability to perform a controlled search of activated information contained in long-term memory (e.g., Mogle et al., 2008; Unsworth & Engle, 2005; Unsworth & Spillers, 2010). For example, Unsworth and Engle (2005) proposed a dual-component model which postulated that limitations in working memory (i.e., individual differences) arise due to individual differences in (1) the ability to actively maintain information in primary memory (involving both short-term memory capacity and attention control) and (2) the ability to search for and retrieve information from secondary memory. To elaborate on the second component, Unsworth and Engle assumed that capacity limitations result in lost and displaced items from the contents of primary memory, thus necessitating controlled search and retrieval from long-term (secondary) memory to recover them. Individual differences in working memory capacity therefore arise partially due to individual variation and limitations in the ability to perform this recovery, for example, differing ability to initially encode items in long-term memory or combat proactive interference. These sources of individual variation were argued to be at least partially independent from attention control, and in a test of this idea, Unsworth et al. (2014) found that a full mediation of the working memory capacity-fluid intelligence relationship was possible, but only when primary memory capacity, attention control, and secondary memory were all included in the model as mediators, and that these three factors each had an independent contribution to the working memory capacity-fluid intelligence relationship (see also Unsworth & Spillers, 2010).
They therefore concluded that individual differences in attention control, memory capacity, and long-term memory retrieval were jointly responsible for producing individual differences in working memory capacity and thus the likely mechanisms behind the strong criterion validity of working memory capacity measures.

While many researchers have linked working memory and long-term memory and shown that long-term memory processes are an important aspect of individual differences in working memory capacity, it is not clear whether the ability to search and recover information from secondary memory into working memory is independent or separable from the ability to control attention more generally. For example, in discussing the episodic buffer being added to the multicomponent model, Baddeley (2000) stated that the buffer was assumed to be controlled by the central executive, which he noted was “an attentional control system” (p. 418), and that attentional mechanisms were largely responsible for the binding and integration of information in the episodic buffer specifically and working memory more generally. This is largely consistent with our lab’s view and supported by our recent finding that attention control fully accounted for the working memory capacity-fluid intelligence relationship (Draheim et al., 2021). Further, we would argue that the most parsimonious explanation for why other studies (e.g., Unsworth et al., 2014; Unsworth & Spillers, 2010) have failed to find a full mediation with attention control alone lies in methodological considerations regarding how attention control is typically measured, which is the focus of the next section.

Assessing individual differences in attention control

Even though attention control has been identified as a central and important ability for human cognition (e.g., Broadbent, 1957; Engle, 2002; Norman & Shallice, 1986; Posner & Snyder, 2004), the role of working memory capacity has been studied and emphasized more often in explaining real-world behaviors, performance, phenomena, and outcomes. This is likely because the claim that attention control is the most predictive marker or driver of cognitive performance remains contentious, largely owing to how difficult it is for researchers to establish a strong and coherent factor of attention control and related mechanisms (Draheim et al., 2021; Friedman & Miyake, 2004, 2017; Hedge et al., 2018; Rey-Mermet et al., 2018; Rey-Mermet et al., 2019; Rouder et al., 2019). We have argued that this difficulty does not stem from theoretical or substantive reasons, but instead arises because most measures of attention control are psychometrically poor (see Draheim et al., 2019; Draheim et al., 2021). In this section, we discuss the challenges investigators face when trying to assess individual differences in attention control and describe some recent work aimed at addressing these challenges.

Challenges with measuring attention control

Perhaps the most widely used working memory capacity measures, complex span tasks, were theoretically motivated and specifically developed for individual differences research (Daneman & Carpenter, 1980; Kane et al., 2004; Turner & Engle, 1989). They work well for this purpose because between-subjects variance is sufficiently large and reliability is high, producing strong individual differences and therefore adequate statistical power to detect correlations of interest (see Cronbach, 1957, for the differences between the experimental and individual differences approach). Subsequent research on individual differences in working memory capacity was successful thanks to the availability of psychometrically strong and validated tasks, and it was soon found that working memory capacity was strongly associated with intelligence and myriad other abilities. On the other hand, investigators studying individual differences in attention control were not afforded the same luxury. Studies of attention control often failed to show shared variance among tasks designed to measure inhibitory processing (e.g., Earles et al., 1997; Friedman & Miyake, 2004; Kramer et al., 1994). Because of the continued failed attempts by researchers to establish a coherent factor using attention tasks, some researchers have questioned whether the same inhibitory and attentional mechanisms are involved in performing different tasks (e.g., Hedge et al., in press; Rouder et al., 2019; Rouder & Haaf, 2019). Most notably, Rey-Mermet et al. (2018; Rey-Mermet et al., 2019) found little evidence of a common cross-task attentional ability and argued that it was time for researchers to simply stop thinking about “inhibition” as a unitary concept. In other words, they concluded that a general ability to control attention does not exist.

At present, the issues of measurement of attention and whether attention control is a psychometric construct are topics of debate among researchers. This is further complicated in that studies of attention control often operationalize it in terms of “inhibition,” and it is not entirely clear if what is labeled as inhibition is equivalent to what we and others call attention control (i.e., jingle-jangle fallacies; Kelley, 1927; also see Conway et al., 2021, for terminology confusion in this area). On the surface, it would appear so because (a) many of the same tasks are traditionally used to measure these abilities (such as Stroop, flanker, antisaccade; see Fig. 6), (b) inhibition appears to be a common way to conceptualize broad attention control (e.g., Rey-Mermet et al., 2018; von Bastian et al., 2020), and (c) some authors seem to use attention and inhibition interchangeably (e.g., Friedman & Miyake, 2004). On the other hand, it may be that what is commonly called inhibition is a specific and much narrower ability than attention control (cf. Draheim et al., 2021; Friedman & Miyake, 2017). To facilitate discussion, throughout this article we assume that what is called inhibition is similar enough to our view of attention control, namely because the same tasks are being used to measure an underlying ability, and inhibition is often considered one of the most important functions or facets of the more general attention control. But it should be noted that the term inhibition does appear to be overused in the literature, and likely reflects a wide variety of measurement tasks and mechanisms (see Bjork, 1989). We also argue strongly that attention control is much broader than inhibition. For example, one aspect of attention control that may not be encompassed by a narrower conceptualization of inhibition is the ability to maintain current task goals and avoid attentional lapses.
Such lapses can be internally driven via intrusive and task-unrelated thoughts which disrupt task performance (known as mind wandering). Research suggests that individual differences in mind wandering are distinct from, but correlated with, susceptibility to external distraction (e.g., Unsworth & McMillan, 2014) and correlated with both working memory capacity and fluid intelligence (Kane & McVay, 2012; McVay & Kane, 2012a; Unsworth & McMillan, 2014). Mind wandering researchers often emphasize that attentional lapses in the form of intrusive and task-unrelated thoughts are sustained attention failures that result in extremely slow reaction times and therefore poor performance (known as the worst performance rule, see Löffler et al., 2021; McVay & Kane, 2012b; Welhaf et al., 2020). Another aspect of attentional lapses may be more on the micro level. For example, when performing an antisaccade task (see Fig. 6a), the respondent has a very brief window to execute the appropriate saccade away from the distractor and toward the target on the other side of the screen. Any attentional lapse, even for a fraction of a second, can have a deleterious effect on performance if said lapse occurs at a critical part of the trial (i.e., just before or as the distractor appears). We hypothesize that part of the reason for our finding from Draheim et al. (2021) that attention control fully mediated the working memory capacity-fluid intelligence relationship was that our attention control battery involved measures which heavily tapped the ability to avoid not only macro-level attentional lapses but also micro-level ones, thereby allowing higher-ability participants to apply intensive attentional resources to the trials as needed.

Fig. 6. Attention control tasks. (a) In this version of the antisaccade task (Hutchison, 2007), the respondent is asked to start each trial by looking at the center of the screen. A distractor appears on one side of the screen and then a target letter (Q or O) appears for only 100 ms on the other side. The respondent is asked to identify the letter. Accuracy rate is the dependent variable for this version, although some versions are scored using reaction time, difference scores (in reaction time or accuracy, using prosaccade trials as baseline), or eye-tracking with no behavioral response. For added effect, the distractor may blink several times while on screen. (b) In the color Stroop task, a color word is presented, and the respondent is asked to indicate the color of the ink in which the word is printed. On congruent trials, the word and ink color match. On incongruent trials, they do not. The dependent variable is usually the difference in reaction time between incongruent and congruent (baseline) trials, although accuracy differences are sometimes used. (c) In the arrow flanker task, five arrows are presented, and the respondent is asked to indicate which direction the central arrow is pointing. On congruent trials, the central arrow and flanking arrows point in the same direction, whereas on incongruent trials the flanking arrows point in the opposite direction. As with Stroop tasks, the dependent variable is usually the difference in reaction time between incongruent and congruent trials, although accuracy differences may be used. Not to scale.

The reliability paradox

Despite the disagreements and ambiguities over the nature of attention control or inhibition, there is a growing consensus among researchers that commonly used measures to assess these constructs (i.e., Stroop and flanker) typically reflect very little shared attention-related variance. Specifically, the reliability of attention control tasks is often inadequate, intercorrelations are weak, a unitary factor is difficult to establish in latent analyses, and performance does not correlate as strongly as expected with other cognitive indicators (see Hedge et al., 2018; Hedge et al., in press; Paap & Sawi, 2016; Rey-Mermet et al., 2018; Rey-Mermet et al., 2019; Rouder et al., 2019; Rouder & Haaf, 2019; von Bastian et al., 2020).

At the core of this problem is that individual differences in attention have historically been assessed using paradigms born out of the experimental approach, the epitome of which is the Stroop task (Stroop, 1935; Fig. 6b). In the basic version of this task, the test-taker is asked to resist the automaticity of reading a color word to instead name the color of ink in which the word is printed. For example, they see “RED” in blue ink and are asked to respond by pressing a key corresponding to the response “BLUE.” One of the most robust and almost universal findings from this paradigm is known as the Stroop effect, which is that responses are slower and more error-prone when the color word is incongruent with the color of ink it is printed in (e.g., “RED” in blue ink), as opposed to when the two are congruent (e.g., “RED” in red ink). The Stroop task, and similar measures such as flanker (Fig. 6c; Eriksen & Eriksen, 1974) and Simon (Simon & Wolf, 1963; collectively known as conflict or interference tasks), have a rich history within the experimental literature and are successful in experimental research (see Footnote 1) for the same reason that makes them poorly suited to individual differences research: the minimization of between-subjects variance (see Cronbach, 1957; Draheim et al., 2019; Hedge et al., 2018). The typical finding that tasks which exhibit strong and robust experimental effects fail to produce strong and reliable individual differences was dubbed “the reliability paradox” by Hedge et al. (2018), and there are several reasons for this phenomenon. We have argued that the primary reasons why popular experimental tasks are typically poorly suited to correlational research are the use of difference scores and reaction times to assess task performance in many widely used measures of attention, as well as the failure of researchers to account for speed–accuracy interactions (see Draheim et al., 2019, for an extensive review and analysis of this problem).

Difference scores

The logic of using difference scores follows the Donders (1969) subtraction methodology, in which the difference is taken between two related but different variables to separate out cognitive processes (or, sometimes, to assess change over time), with one variable serving as the baseline or control. This method has been criticized for not isolating processes of interest as well as believed (e.g., Sternberg, 1969; Verhaeghen & De Meersman, 1998). Further, while difference scores can maximize statistical power when comparing performance at the group level, making them particularly useful in experimental designs (Overall & Woodward, 1975; but see Mashburn et al., 2020), they are clearly suboptimal for individual differences pursuits (see Cronbach & Furby, 1970; Draheim et al., 2016; Draheim et al., 2019; J. R. Edwards, 2001; Hedge et al., 2018; Lord, 1956; Paap & Sawi, 2016). This is because subtraction effectively removes the (generally strong) correlation between the two component scores, which, by nature, is reliable variance, thereby increasing the overall proportion of unreliable variance in the resulting difference score. The extent to which reliability is lost depends primarily on (1) the reliability of the component scores, and (2) how strongly the component scores are correlated. Critically, the stronger the correlation of the component scores, the lower the reliability of the resulting difference. With attention tasks in which the different trial types (conditions) are highly similar, correlations are generally quite strong and effect sizes are rather small (generally around 50 ms; see Rouder et al., 2019), and therefore using difference scores is a clear problem. For example, von Bastian et al. (2020) reviewed 76 individual differences studies and found an average reported reliability of just .63 for attention tasks when the dependent variable was in the form of a difference or contrast.
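The dependence of difference-score reliability on these two quantities can be made concrete with the classical test theory expression for the reliability of a difference, shown here in its simplified form that assumes the two component scores have equal variances. The sketch below is illustrative only: the function name and all input values are hypothetical and are not taken from the studies cited above.

```python
def difference_score_reliability(rel_x: float, rel_y: float, r_xy: float) -> float:
    """Reliability of the difference D = X - Y under classical test theory.

    Assumes X and Y have equal variances (a simplifying assumption).
    rel_x, rel_y: reliabilities of the two component scores.
    r_xy: observed correlation between the two component scores.
    """
    if not -1.0 <= r_xy < 1.0:
        raise ValueError("r_xy must be in [-1, 1)")
    return ((rel_x + rel_y) / 2.0 - r_xy) / (1.0 - r_xy)


# Hypothetical values: both components are highly reliable (.90),
# and only the correlation between them differs.
weakly_related = difference_score_reliability(0.90, 0.90, 0.50)
strongly_related = difference_score_reliability(0.90, 0.90, 0.80)
print(round(weakly_related, 2), round(strongly_related, 2))
```

With these illustrative inputs, raising the component correlation from .50 to .80 lowers the reliability of the difference from .80 to .50, even though each component is itself measured with a reliability of .90.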

Reaction time and the relationship between speed and accuracy

Another factor that we argue contributes to problems with attention measures is the use of reaction time, specifically regarding individual differences in speed–accuracy emphasis (see Draheim et al., 2018; Heitz, 2014; Luce, 1986; Wickelgren, 1977). Individuals naturally differ on the extent to which they prioritize speed and accuracy (e.g., Forstmann et al., 2011; Starns & Ratcliff, 2010), and diffusion modeling studies from Hedge and colleagues have shown that individual differences in speed–accuracy emphasis are correlated across attention tasks even in young adults (Hedge et al., 2019; Hedge et al., in press). At issue is that most attention measures are scored not only using difference scores, but difference scores in reaction time, as is the case with conflict tasks in which the dependent variable is usually the difference in reaction time between incongruent and congruent trial performance. This is problematic in that the underlying processes reflected by reaction times have been shown to be multiply determined and not process pure (e.g., Hedge et al., 2019; Miller & Ulrich, 2013; Verhaeghen & De Meersman, 1998). Further, accuracy rates are often completely ignored and unaccounted for in these measures, meaning that respondents with the same general ability to control their attention will score differently on, say, a Stroop or flanker task if they have differences in baseline speed–accuracy emphasis and/or if they adjust their speed–accuracy emphasis during the task. Scores will also be affected by the extent to which any speed–accuracy relationships and interactions are systematically related to cognitive ability, for example if higher ability individuals slow down on a task after making an error to minimize the chances of committing subsequent errors (e.g., Draheim et al., 2016). To that end, Hedge et al. 
(in press) argued that performance in the most popular class of attention tasks (conflict tasks such as Stroop, flanker, and Simon) is not a reflection of attention-specific mechanisms but is instead contaminated with variance attributable to processing speed and response cautiousness. As such, research using measures of attention control to answer questions about individual differences has stagnated relative to research on working memory capacity due to a lack of reliable and valid measures of attention control.

To summarize, we believe that applied research has emphasized the role and importance of working memory capacity more so than attention control for two primary reasons. The first is simply inertia; early research on individual differences in working memory capacity was successful in establishing it as a broad and domain-general construct and other researchers were quick to expand this work when it was shown that working memory capacity correlated substantially with intelligence. Second, the availability of several psychometrically sound and accuracy-based measures of working memory capacity facilitated this surge of research. In contrast, assessing individual differences in attention control has not been so straightforward. Researchers interested in individual differences in attention control adopted established paradigms from the experimental literature, which proved to be poorly suited for correlational research for a variety of reasons. In our estimation, the lack of psychometrically strong attention control measures has undoubtedly stunted theoretical advancements in this area and likely set research back decades, just as Friedman and Miyake (2004) predicted could happen if improved measures were not developed. However, the problems are being addressed, and the possibility exists that assessing individual differences in attention will soon be as streamlined as assessing differences in other notable abilities such as working memory capacity and fluid intelligence.

Overcoming the challenges

Despite the controversy over measurement of attention control, we argue that there are reasons to be optimistic. One reason is that a pair of psychometrically sound attention control tasks has existed for some time. The first, the antisaccade task, is an instructionally simple yet highly difficult task that requires the respondent to look away from a flashing distractor on one side of the screen to instead catch a target on the opposite side before it is masked (see Hutchison, 2007; Fig. 6a). If the respondent looks in the direction of the distracting stimulus for even a moment, they will be unable to identify the target. This design is effective because animals are evolutionarily wired to look at something flashing in their environment, as this suggests movement which could indicate the presence of either danger or food (e.g., Howard & Holcombe, 2010). Therefore, the respondent must override or otherwise inhibit this strong, evolutionarily ingrained tendency to look toward the distractor, a quintessential example of a situation in which the control of attention is required. Another paradigm, visual arrays, is a change detection task in which stimuli are very briefly flashed on the screen and then reappear after a short delay (Fig. 7). In a typical visual arrays task, one of the stimuli changes in some manner from the first display to the second on half the trials, and the respondent’s job is to judge whether something has changed. Performance on visual arrays is usually transformed into a capacity (k) score, which is an estimate of how many items the individual can hold in primary memory (see Cowan et al., 2005).

Fig. 7. Visual arrays task. In this version of the task (see Shipstead et al., 2014), an array of rectangles briefly appears, disappears, and then reappears with one rectangle probed with a white dot. The respondent is asked to indicate whether this probed rectangle changed orientation from the initial display. Accuracy performance is typically converted into a capacity (k) score to estimate how many items the respondent can hold in primary memory. The trial shown is Set Size 3, and so 100% accuracy on a series of such trials would produce a k score of 3, whereas 50% (chance) performance would produce a k score of 0. (a) No distractors present (nonselective visual arrays). (b) Respondent is cued to attend to only a subset of the to-be-presented stimuli, and distractors are presented with the targets (selective visual arrays). Not to scale.
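A minimal sketch of how a capacity score can be computed for a single-probe task like this one, assuming the commonly used formula k = N × (hit rate + correct rejection rate − 1), where N is the set size. The choice of formula and the function name are assumptions made for illustration; the exact scoring used in any particular study may differ.

```python
def capacity_k(set_size: int, hit_rate: float, correct_rejection_rate: float) -> float:
    """Estimate capacity (k) for a single-probe change detection task.

    hit_rate: proportion of change trials correctly reported as changed.
    correct_rejection_rate: proportion of no-change trials correctly
        reported as unchanged.
    """
    return set_size * (hit_rate + correct_rejection_rate - 1.0)


print(capacity_k(3, 1.0, 1.0))  # perfect accuracy at Set Size 3
print(capacity_k(3, 0.5, 0.5))  # chance performance at Set Size 3
```

Under this assumed formula, the two calls reproduce the cases described in the caption: perfect accuracy at Set Size 3 yields a k of 3, and chance performance yields a k of 0.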

Psychometrically speaking, the appeal of the antisaccade and visual arrays tasks is that they are entirely accuracy-based measures that do not involve difference scores for measuring performance. Because speeded responding is not required, the aforementioned issues with reaction time are avoided (see Draheim et al., 2019; Draheim et al., 2021). Chiefly, individual differences in speed–accuracy emphasis and processing speed should be minimally impactful on the overall accuracy score. These desirable characteristics are shared with many successful measures of working memory capacity and fluid intelligence, and thus the reliability and criterion validity of antisaccade and visual arrays approach those of measures such as complex span and matrix reasoning tasks (see Draheim et al., 2021). Unfortunately, many versions of antisaccade are employed: some variants involve reaction time and/or difference scores, and characteristics of the task (namely, visual angle and presentation timings) need to be properly tuned to produce sufficient individual variation. As such, antisaccade tasks only sometimes display high reliability, strong inter-correlations and factor loadings, and strong correlations with other cognitive measures (see Appendix B of Rey-Mermet et al., 2018; also see Hutton & Ettinger, 2006, for a review of antisaccade). As for visual arrays, this paradigm is often thought to be a measure of visual working memory capacity, which is evident in that performance is usually transformed into capacity (k) scores (Cowan et al., 2005). 
This classification is sensible given the task involves holding target stimuli in primary memory, but a growing body of research supports our contention that individual differences in performance of some types of visual arrays tasks are due more to attentional factors than memory (e.g., Balaban et al., 2019; Cusack et al., 2009; Draheim et al., 2021; Fukuda et al., 2016; Fukuda & Vogel, 2011; Martin et al., 2021; Shipstead & Engle, 2013; Souza & Oberauer, 2015; Vogel et al., 2005; Wheeler & Treisman, 2002). Critically, visual arrays tasks can be broken down into two categories, selective and nonselective (see Fig. 7). Nonselective visual arrays do not involve distractors and therefore place no demand on filtering irrelevant stimuli. On the other hand, selective versions of visual arrays include distractors, and generally a prompt before each trial indicates which subset of the to-be-presented stimuli should be selected and which subset should be ignored. For example, the respondent might see “BLUE” just before the presentation of the first array consisting of both red and blue stimuli, which means they should only attend to the blue items and ignore the red ones. While the case can be made that individual differences in nonselective visual arrays performance are jointly attributable to working memory and attentional mechanisms, what is clearer is that individual differences in selective visual arrays are primarily attentional in nature (cf. Martin et al., 2021). 
Several studies have shown that the additional filtering demand produces large individual differences attributable to selective attention, as performance is greatly reduced for individuals who do not attend to the selection cue (also possibly due to mind wandering or lack of ability to sustain attention) and/or are unable to properly select the target stimuli and ignore/filter the irrelevant stimuli (see Draheim et al., 2021; Draheim & Engle, 2021; Fukuda et al., 2016; Martin et al., 2021; Vogel et al., 2005). Additionally, Fukuda and Vogel (2009, 2011) found that individual differences in selective visual arrays performance were in part due to individual differences in the ability to recover from attentional capture. Fukuda and Vogel (2011) noted the similarities of resisting attentional capture in visual arrays with the demand to override prepotent eye movements toward distractors in the antisaccade, and we would argue both tasks place a strong demand on avoiding micro-level attentional lapses to intensely focus attention at a critical point in each trial. Supporting this, we recently found in Draheim et al. (2021) that antisaccade and selective visual arrays performance correlated strongly (r = .45), loaded onto an attention factor in the .60–.70 range, and had statistically equivalent correlations to working memory capacity and fluid intelligence composite scores. These were very strong effect sizes for two very different tasks, and much larger than the typical correlations of around r = .10–.20 and factor loadings below .40 for Stroop and flanker tasks (e.g., Friedman & Miyake, 2004; Rey-Mermet et al., 2018; Rouder et al., 2019; Rouder & Haaf, 2019).

Another reason for optimism is recent and ongoing developments to create and validate new measures of attention control. In Draheim et al. (2021) we argued that developing new and modified tasks was a straightforward way to tackle the challenges in assessing attention control (cf. Friedman & Miyake, 2004). We reasoned that avoiding difference scores and either controlling for accuracy (using adaptive procedures) or pushing all performance variance into accuracy (by making reaction time irrelevant) would increase the chances of the task having psychometric properties on par with measures of working memory capacity and fluid intelligence. We administered a combination of ten existing, new, and modified attention tasks. Included among these were modified versions of the Stroop and flanker tasks that involved adaptive response deadlines or presentation times and were designed to assess how quickly one could respond or how brief the presentation of the target stimulus could be at the same level of accuracy for each participant. We also included an accuracy analog of the psychomotor vigilance task, a reaction time-based sustained attention task which asks the respondent to press a key as soon as a timer on the screen begins counting up from zero. While we found that the two strongest attention measures (according to the criteria we outlined in the paper; see Footnote 2) were the pure accuracy versions of antisaccade and selective visual arrays tasks, the accuracy analog of the psychomotor vigilance task was just behind them, and the three modified Stroop and flanker tasks were also clear improvements over their reaction time and difference score counterparts. To quantify the relative improvements, performance in the antisaccade and visual arrays tasks each had about five times as much reliable and predictive variance as the traditional Stroop and flanker tasks, and the three modified Stroop and flanker tasks had around three times as much. 
By using these more reliable measures of attention control, we found that attention control fully mediated the relationship between working memory capacity and fluid intelligence at the latent level (Fig. 4). Importantly, we only found this full mediation when the attention control factor was composed of tasks with accuracy-based dependent variables that did not involve difference scores (such as antisaccade, selective visual arrays, adaptive Stroop and flanker tasks, and a sustained attention measure). If traditional reaction time measures (psychomotor vigilance, Stroop, and flanker) were included, full mediation did not occur, just as it did not occur for Unsworth and Spillers (2010) and Unsworth et al. (2014), in whose studies four of the seven attention control tasks involved difference scores. We therefore argue that the discrepancy in the results between our study and the studies of Unsworth and colleagues was due to how attention control was assessed. One caveat is that the full mediation of the working memory capacity-fluid intelligence relationship by attention control found in Draheim et al. (2021) was novel, and so it is yet to be established that this finding can be replicated, ideally across labs and with different populations and diverse tasks for the relevant constructs.

The central claim of this article is that these recent developments in the understanding (e.g., Shipstead et al., 2016; Tsukahara et al., 2020) and measurement (e.g., Draheim et al., 2021; Martin et al., 2021) of attention control provide a solid foundation from which to argue that attention control is more important than working memory capacity for explaining higher-order cognitive performance, both in and outside the laboratory (see also Burgoyne & Engle, 2020; Mashburn et al., 2020). In the following sections, we outline several areas of research in which working memory has been identified as an important predictor of real-world outcomes. For each, we provide explicit reasoning for why we think attention control better explains these phenomena.

Review of working memory and attention in the real world

In the following review of specific areas of applied research, we encourage the reader to keep in mind that the quality and nature of measurement may vary substantially across studies. Researchers often employ different tasks to measure the same construct, use the same tasks but give a different label to the underlying ability, use only one task but frame their findings as if they had measured a construct, administer too few trials to too few participants (see Rouder & Haaf, 2019), do not have a representative sample, and so on. In the following review we will generally grant that constructs have been assessed with some validity so as not to distract from the overall argument, but on occasion it will be necessary for us to mention methodological considerations to properly evaluate a study and the authors’ conclusions.

A recurring theme throughout this literature review is that working memory and attention are intertwined (Engle, 2002) and therefore difficult to disentangle. It is commonplace for researchers to hypothesize that behaviors are driven by attentional mechanisms but use working memory tasks (e.g., operation span) to index “executive attention” or as a proxy for attentional mechanisms. This practice is understandable given that many theories of working memory involve attention as a central component (see Baddeley, 1992; Engle, 2002). But because attention tasks with minimal storage demands (e.g., measures of attention control) are often less reliable and predictive than, say, complex span tasks, researchers who used working memory tasks as a proxy for attention were more likely to find significant results than researchers who instead used the traditional, and flawed, measures of attention control. This is relevant throughout this review, and we encourage the reader to keep in mind that individual differences studies of attention control and inhibition have historically relied on psychometrically poor measures. Correlations involving these tasks are therefore expected to be highly attenuated, and conclusions drawn by investigators using these measures may minimize the role of attention control in favor of other abilities (often working memory capacity and/or intelligence; see Footnote 3).
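The attenuation described here follows from classical test theory, under which the expected observed correlation between two measures equals the true correlation multiplied by the square root of the product of their reliabilities (Spearman's attenuation formula). The sketch below uses hypothetical values throughout: a true correlation of .60 with an outcome measured at a reliability of .85, and two attention measures with reliabilities of .63 (matching the average reported earlier for difference-score attention tasks) and .90 (an assumed value for a well-tuned accuracy-based task).

```python
import math


def expected_observed_r(true_r: float, rel_x: float, rel_y: float) -> float:
    """Expected observed correlation given the true correlation and reliabilities."""
    return true_r * math.sqrt(rel_x * rel_y)


# All inputs are illustrative assumptions, not estimates from any study.
low_reliability = expected_observed_r(0.60, 0.63, 0.85)
high_reliability = expected_observed_r(0.60, 0.90, 0.85)
print(round(low_reliability, 2), round(high_reliability, 2))
```

The same underlying relationship appears weaker when the attention measure is less reliable, which is why studies relying on such measures may understate the contribution of attention control.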

Education, learning, and child development

Working memory is a powerful explanatory tool for children’s learning, classroom performance, and overall academic achievement, as illustrated by the opening lines of Chapter 5 in Dehn (2008):

“Working memory capacity is more highly related to . . . learning, both short-term and long-term, than is any other cognitive factor” –P. Kyllonen

Educational and psychological research on working memory (e.g., Gathercole et al., 2006; Swanson et al., 1990) over the past 20 years has repeatedly affirmed the hypothesis that working memory processes underlie individual differences in learning ability. Working memory is required whenever anything must be learned. (p. 92)

Indeed, there is a solid basis for these claims and some research has shown that working memory capacity is an even better predictor of early academic achievement than psychometric intelligence (e.g., Alloway & Alloway, 2010; Cockcroft, 2015). The formation of new concepts and accumulation of information involves the manipulation of information and eventual storage into long-term memory, which requires information passing through working memory. Working memory has thus historically been viewed as a portal to long-term memory, particularly in early stages of development when learning is most important due to low levels of knowledge and automatized skills (Cowan, 2014; Forsberg et al., 2021). An individual with low working memory capacity will struggle to combine capacity, speed, knowledge, and strategies necessary for problem solving, inference making, and learning of complex skills and concepts (Alloway, 2006; Cowan, 2014; Halford et al., 1998; Reid, 2009). It is therefore not surprising that working memory capacity predicts an array of behaviors important for learning and classroom performance, such as reading comprehension (Daneman & Carpenter, 1980), reasoning ability (Kyllonen & Christal, 1990), direction following (Engle et al., 1991), long-term memory retrieval (Brewer & Unsworth, 2012), and language acquisition (Gathercole & Baddeley, 1989). To quantify these relationships, according to the Woodcock-Johnson III Technical Manual (McGrew & Woodcock, 2001), working memory capacity has an aggregate correlation over r = .50 with eleven specified achievement clusters for children and adolescents in the domains of reading, writing, comprehension, reasoning, and mathematics.

Working memory is therefore often implicated as a cause of learning disabilities, and students with low working memory capacity generally perform poorly in classroom settings (e.g., Cowan, 2014; Gathercole et al., 2006; Sabol & Pianta, 2012). Reduced working memory capacity is viewed as a causal source of the co-occurrence of inattentive behavior and working memory problems (Diamond, 2005; Gathercole et al., 2008) and may mediate the (negative) relationship between trait anxiety and academic performance (Owens et al., 2008). Some researchers argue that working memory limitations are the primary cause of learning disabilities (see Dehn, 2008), whereas another argument is that both working memory capacity and fluid intelligence contribute to learning disabilities because they work together to support problem solving in facilitation of learning and educational achievement (Cockcroft, 2015; Cowan, 2014). If we interpret this argument through the lens of the maintenance/disengagement framework (Shipstead et al., 2016), then it would be expected that attention control is the primary driving force behind learning and, by extension, scholastic achievement.

It is often hinted or implied that scholastic achievement and learning difficulties have an attentional origin. Working memory is sometimes fractionated into different components, such as visuospatial working memory, verbal working memory, and executive working memory (Dehn, 2008; see Footnote 4). Executive working memory refers to the central executive system of the Baddeley and Hitch (1974) model and is synonymous with executive attention/attention control. It has been argued that executive working memory is by far the best predictor of learning ability. For example, Dehn (2008) stated, “Research has consistently found students with specific learning difficulties to be most deficient in the executive processing components of working memory (Swanson et al., 1990)” (p. 96) and “Executive-loaded working memory tasks provide the best discrimination between children with and without learning disabilities (Henry, 2001)” (p. 96). Supporting these assertions, Gathercole and Pickering (2000) tested 6- and 7-year-old children and reported that scores on their central executive subtest predicted performance on arithmetic, vocabulary, and literacy a year later above and beyond scores on the phonological or visuospatial subtests.

There is also extensive and diverse research more explicitly outlining the role of attention in learning and academic achievement. For example, it has been shown that higher levels of anxiety result in worse academic performance, and working memory capacity is thought to mediate this relationship (Owens et al., 2008). But another hypothesis is that anxiety produces specific attentional deficits, such as the propensity for a student high in anxiety to divide attention, devoting attentional resources to task-irrelevant thoughts and behaviors (see Beilock, 2007). A systematic review by Polderman et al. (2010) found that attentional factors were strong correlates of academic achievement even after controlling for intelligence, socioeconomic status, and comorbid disorders. Similarly, Steinmayr et al. (2010) found that scores on a sustained attention test moderated the relationship between intelligence and grades in high school students, and that error rates on this sustained attention test predicted overall school performance above and beyond intelligence. In a longitudinal study, Rhoades et al. (2011) reported that kindergarteners’ attention level was a strong mediator of the relationship between their preschool emotional knowledge and their first-grade academic achievement, even after accounting for socioeconomic status and verbal skills. Bull and Espy (2006) reported that inhibitory control substantially predicted mathematical performance in preschoolers even when age, verbal intelligence, and maternal education were factored out, and inhibition also accounted for 12% of variability in mathematical skills in preschoolers above and beyond working memory capacity. Finally, St Clair-Thompson and Gathercole (2006) administered a battery of executive functioning tasks to middle school-aged children and found that working memory capacity and inhibition each uniquely predicted various measures of scholastic achievement and attainment in mathematics, science, and English.

Other scholars have argued that reading comprehension is heavily dependent on attention, specifically the ability to discard previously relevant but now irrelevant information (e.g., Carretti et al., 2009; De Beni & Palladino, 2000; Savage et al., 2006). Similarly, difficulties in mathematics and problem solving may arise less from working memory factors and more from limitations in the ability to filter or otherwise block irrelevant information (e.g., Passolunghi et al., 1999; Passolunghi & Siegel, 2001). In a review on the role of working memory in learning disabilities, Swanson and Siegel (2011) stated that individuals with learning disabilities often have severe attentional deficits in the form of general inhibitory functioning, sustained attention, selective attention, divided attention, and switching attention. Their argument was that, relative to age-matched controls, individuals with learning disabilities struggle to allocate attentional resources on high demand tasks, struggle to maintain information in the face of attention-capturing events or general distraction, are more likely to report irrelevant nontarget words in a recall task, and are worse at selectively attending to the relevant features of primary and secondary tasks when put into a divided-attention situation. Finally, Fenesi et al. (2015) argued that researchers interested in education and learning often too strongly emphasize the short-term storage aspects of the Baddeley and Hitch (1974) multicomponent model. They instead suggested an increased focus on the role of attention control (based on the executive attention view of individual differences in working memory; Engle, 2002) and long-term memory (based on the embedded process model; Cowan, 1988, 1999) in education research; the former is precisely what we also argue here. One example provided by Fenesi et al. 
is that individuals with attention problems (such as attention-deficit/hyperactivity disorder; ADHD) have difficulties with reading comprehension, which can be misinterpreted as a working memory issue and therefore misdiagnosed as dyslexia, resulting in parents and teachers attempting to correct a suspected reading problem when the underlying issue is instead attentional in nature. Another example they offered was in the domain of mathematical performance, in which individuals with worse attention control are more drawn to superficially relevant, garden-pathing, and distracting aspects of the problem, particularly in word problems, hence affecting their ability to stay on track and solve the problem at hand. Highlighting relevant parts of a mathematical problem was shown to help students focus their attention and improve mathematical performance in those with attentional problems (Kercood & Grskovic, 2009).

Still, work in the areas of academic learning and achievement generally examines the role of working memory and storage-based deficits in learning difficulties and poor academic performance, with less emphasis on the more fundamental deficits in attentional abilities (unless the deficits are severe enough to be considered pathological, such as with ADHD). Potential remediations are therefore designed to target working memory more so than attentional deficits. These include working memory training, teaching of mnemonic and other memory strategies, reducing the working memory demands of classroom activities and assignments, and providing regular positive feedback (see Alloway, 2006; Cockcroft, 2015; Cowan, 2014; St Clair-Thompson et al., 2010). While targeted approaches such as memory-related strategy training and reducing the working memory load of material may be helpful, attention-based remediations would be expected to have more generalizable benefits. Attention deficits, such as those in individuals with ADHD, are more generalized because they manifest as a global problem with maintaining goal-directed thoughts, information, and behaviors as well as blocking inappropriate and/or now-irrelevant ones, whereas working memory deficits may involve more specific issues with maintaining, processing, and/or storing information. Because we argue that attention control is the basis for both working memory capacity and fluid intelligence, researchers, educators, and parents may find more success if they focus on the underlying attentional deficits in students. Attention-specific interventions are more likely to produce broad and further-reaching benefits for the child.

Cognitive training

The repeated demonstration that working memory capacity is correlated with a host of other cognitive behaviors has resulted in widespread testing of the hypothesis that training or otherwise boosting one’s working memory capacity ought to result in long-term improvements to general cognitive and intellectual functioning. The idea of cognitive training is certainly not new and was argued to be ineffective over a century ago (e.g., James, 1890; Woodworth & Thorndike, 1901), but working memory gave researchers a new realm in which to test it, operating under the assumption that working memory capacity is a causal source of individual differences in other domains such as reading comprehension, mathematical skills, language ability, and fluid intelligence (cf. Melby-Lervåg et al., 2016). The holy grail of working memory training is to establish far-transfer to measures of intelligence, where far-transfer refers to training-induced improvement on untrained and novel tasks of a different ability. Some studies purported to find just that (e.g., Jaeggi et al., 2008; Klingberg et al., 2002), resulting in a flurry of scientific interest and funding devoted to working memory training as well as commercial brain training products that purport to improve cognitive functioning by way of working memory training.

Unfortunately, systematic reviews and meta-analyses have consistently shown a lack of evidence for far-transfer to intelligence after training neurotypical individuals (Dougherty et al., 2016; Melby-Lervåg et al., 2016; Melby-Lervåg & Hulme, 2013; Schwaighofer et al., 2015; Shipstead et al., 2010; Shipstead et al., 2012a, b; Soveri et al., 2017). It is often observed that the relatively few studies that report far-transfer to intelligence have severe methodological limitations (see Dougherty et al., 2016; T. L. Harrison et al., 2015; Melby-Lervåg et al., 2016; Rodas & Greene, 2021; Shipstead et al., 2010; Shipstead et al., 2012a, b; Simons et al., 2016), and that any training-induced improvements are typically short-lived and limited to the tasks that were directly trained or to highly similar tasks (e.g., Melby-Lervåg & Hulme, 2013; Soveri et al., 2017). In other words, the most robust finding is that people get better on the tasks they practice but not much else. As a result, researchers are highly skeptical that working memory training or brain training products can improve general cognitive functioning (see Simons et al., 2016). For example, Stojanoski et al. (2021) surveyed more than 8,000 individuals regarding their use of brain training programs and then administered a battery of cognitive tasks to each. They found no relationship between any of those cognitive measures and participant engagement (use and duration) in brain training, even for the most committed brain trainers and those who fully expected it to work. Despite the overwhelming evidence against working memory training, it still receives a good deal of interest from researchers, and companies offering “brain training” programs continue to be highly successful.

Why working memory training does not work

A central challenge for the working memory training hypothesis is that, for training to be effective, it must first be shown that working memory capacity can indeed be improved through training, sometimes referred to as moderate or intermediate transfer (T. L. Harrison et al., 2015; von Bastian et al., 2013). That is, effortful and intensive practice and/or training on a subset of working memory tasks should lead to robust and lasting improvements in performance on another subset of untrained working memory tasks, and improvements must not be due to the application of highly specific strategies common across the two subsets of tasks (cf. Shipstead et al., 2012a, b). There is little reason to believe that the broad ability of working memory capacity can be improved after training, and evidence for moderate transfer is accordingly sporadic and inconsistent among the relatively few studies that properly assess it: some report strong moderate transfer (e.g., Holmes et al., 2009), some report moderate transfer for a subset of tasks but not others (e.g., T. L. Harrison, Shipstead, et al., 2013b), and many report no moderate transfer (see Shipstead et al., 2012a, b; Simons et al., 2016).

Another challenge is that, even if moderate transfer could readily be achieved, it must also be the case that working memory has a causal influence on other cognitive abilities such as fluid intelligence. As noted by Shipstead et al. (2016), it was not clear why working memory capacity and fluid intelligence were so strongly related, yet the underlying assumption that working memory capacity determined fluid intelligence was rarely questioned (cf. Burgoyne et al., 2019; T. L. Harrison et al., 2015). As such, some scholars have been critical of the hypothesis that a more efficient working memory system causes higher performance on fluid intelligence tasks due to the ability to maintain more information in the form of partial solutions, hypotheses, and subgoals (e.g., Burgoyne et al., 2019; Cusack et al., 2009; Unsworth & Engle, 2005; Wiley et al., 2011). Alternatives therefore need to be considered. As discussed in the introduction, the theoretical framework within which we are operating holds that limitations in both working memory capacity and fluid intelligence are primarily due to individual differences in attention control.

Training narrower abilities and skills

While reviews have consistently found that working memory training is ineffective, it should be possible to teach and train specific strategies, which could result in desirable outcomes to the extent that those strategies are shared across different tasks and cognitive domains (see Bailey et al., 2008, for a discussion of the strategy affordance hypothesis). Ideally, individuals would also be able to modify and improve their strategies and apply them to novel situations, further increasing the benefit of strategy training. Several studies support this notion (e.g., Dunning & Holmes, 2014; Paas, 1992; Turley-Ames & Whitfield, 2003; Uttal et al., 2013; also see Ceci & Papierno, 2005), including some from our lab. In a training study that, as expected, found no evidence of far-transfer, Foster et al. (2017) noted that there was evidence that spatial abilities were improved after training for individuals of higher ability, as variance in spatial ability increased after twenty days of training. This finding is consistent with Uttal et al.’s (2013) meta-analysis of 217 studies showing that the average effect size of spatial training was just shy of half a standard deviation and that training-induced transfer to untrained spatial tasks is routinely reported. In a study aimed at assessing the role of proactive interference in working memory training and transfer, Redick et al. (2019) reported strategy-specific benefits on transfer in tasks specifically involving letter stimuli. In another training study, T. L. Harrison, Shipstead, et al. (2013b) found that individuals who trained on tasks involving retrieval from secondary (long-term) memory performed better than controls on both complex and simple span tasks. They offered two possible explanations: either working memory training improved a component of working memory (such as secondary memory), or participants developed strategies that were applicable to some tasks but not others, thus resulting in sporadic training effects. In a nontraining study of strategy discovery and implementation in immediate free recall, T. L. Harrison, Hertzog, and Engle (2013a) reported two interesting findings. First, most participants were able to implement a particular organizational recall strategy after being informed of it. Second, the relationship between working memory capacity and memory recall was stronger after participants were informed about the organizational strategy than when no specific strategy instructions or information were given. This study further supported the viability of strategy-specific training and showed that such training may be particularly useful for higher ability individuals who are able to successfully execute said strategy and generalize it to other tasks (see also Ceci & Papierno, 2005).

In most of the above examples of strategy training, the goal was to train individuals on strategies specifically related to either general memory capacity (short term and long term) or modal information (verbal or visuospatial). This sort of memory training should still be viewed with skepticism, as it has not been established that training effects will transfer to other areas or produce real-world significance (e.g., Stieff & Uttal, 2015). This is summed up quite well in a pessimistic review of memory strategy training in children by Bjorklund et al. (1997), who argued that training was often successful in teaching children a strategy, but that children rarely showed any measurable benefit of said training. We instead propose that training strategies of an attentional nature has a higher chance of succeeding than training strategies related to memory capacity or modalities, as the prospect of chunking or mnemonic training resulting in widespread cognitive improvements remains dubious. There are a handful of studies showing benefits of training and interventions that are targeted more towards attention and self-regulation than capacity, but this area has received less attention from researchers who study individual differences in executive functioning. We think this area warrants further research, particularly given theoretical and methodological advances in the realm of individual differences in attention.

The potential for attention-based training