Abstract

The underlying processes and mechanisms supporting the recognition of visually and auditorily presented words have received considerable attention in the literature. To a lesser extent, the interplay between visual and spoken lexical representations has also been investigated using cross-modal lexical processing paradigms, yielding evidence that auditorily presented words influence visual word recognition, and vice versa. The present study extends this work by examining and comparing the relative sizes of cross-modal repetition (cat–CAT) and semantic (dog–CAT) priming in auditory lexical decision, using heavily masked, briefly presented visual primes and a common set of auditory targets. Even when conscious awareness of the prime was minimized, reliable cross-modal repetition and semantic priming were observed. More critically, repetition priming was stronger than semantic priming, consistent with the idea that multiple pathways connect the two modalities. Implications of the findings for the bimodal interactive activation model (Grainger & Ferrand, 1994) are discussed.

A substantial amount of research has considered the processes that support the recognition of visually and auditorily presented words (see Dahan & Magnuson, 2006, and Balota, Yap, & Cortese, 2006, for reviews on auditory and visual word recognition, respectively). There is also increasing evidence from cross-modal studies that auditorily presented words can influence visual word recognition, and vice versa. The present study addresses a number of important empirical and theoretical gaps in the literature by comparing the effects of masked cross-modal repetition and semantic priming.

Repetition and semantic priming in visual and auditory word recognition

Repetition and semantic priming, respectively, refer to facilitated recognition of a target word (e.g., YOURS) when it is preceded by an identical (e.g., yours) or semantically/associatively related (e.g., mine) prime. Robust effects of repetition and semantic priming have been reported in both the visual (Forster & Davis, 1984) and auditory (Radeau, Besson, Fonteneau, & Castro, 1998) modalities. More intriguingly, these effects persist even when conscious, strategic processing of primes is minimized through the use of briefly presented, heavily masked primes (Forster, 1998). For example, masked repetition- and semantic-priming effects have been observed in both visual (Holcomb & Grainger, 2006) and auditory (Kouider & Dupoux, 2005) word recognition.

However, the foregoing work on masked repetition and semantic priming has been within-modal in nature. That is, visual targets are preceded by visual primes, whereas auditory targets are preceded by auditory primes. This limits what researchers can infer about the possible interplay between the two modalities during the recognition of a word. There is increasing evidence that orthographic characteristics influence phonological processing and that phonological characteristics influence orthographic processing. Specifically, spoken word recognition is facilitated when prime–target pairs share both orthography and phonology in the initial syllable (e.g., mess–MESSAGE), but not when the overlap is limited to either orthography (e.g., legislate–LEG) or phonology (e.g., definite–DEAF) (Slowiaczek, Soltano, Wieting, & Bishop, 2003). Similarly, lexical decisions (i.e., the classification of letter strings as words or nonwords) to visually presented targets (e.g., BRAIN) are facilitated by pseudohomophone primes (i.e., nonwords that are homophonous with real words; e.g., brane), relative to orthographic control primes (e.g., broin) (Lukatela & Turvey, 1994).

Cross-modal repetition and semantic priming

The evidence described above reveals how information from a nonpresented modality can influence word processing in some other modality. To more directly explore the nature of the potential interactions between representations from different modalities, researchers have also used cross-modal priming paradigms. For example, in a cross-modal priming task, participants might see visual primes paired with auditory targets or auditory primes paired with visual targets. Cross-modal studies that have used masked repetition primes (e.g., yours–YOURS) have yielded mixed findings. For example, Kouider and Dupoux (2001), who were the first to explore the effects of masked visual primes on auditory targets in lexical decision, found that cross-modal repetition priming was reliable only at longer prime durations (67 ms) that allowed the primes to be consciously processed. In contrast, within-modal priming, using both visual primes and targets, was reliable with prime exposure durations as short as 33 or 50 ms. Kouider and Dupoux (2001) concluded that a modality-independent central executive, which serves to integrate information from the two modalities, operates only under conscious conditions.

Kouider and Dupoux’s (2001) findings are inconsistent with a later cross-modal repetition-priming study by Grainger, Diependaele, Spinelli, Ferrand, and Farioli (2003). Specifically, Grainger et al. (2003) were able to obtain masked cross-modal repetition-priming effects at a prime duration of 53 ms, using random letter strings that served as more effective backward masks than the more typically used hash marks or ampersands. More recently, some event-related potential studies have provided support for both unmasked (Holcomb, Anderson, & Grainger, 2005) and masked (Kiyonaga, Grainger, Midgley, & Holcomb, 2007) cross-modal repetition priming.

The bimodal interactive activation model (BIAM), the dominant theoretical framework for modeling early, automatic interactions between the visual and auditory modalities (Grainger et al., 2003; Grainger & Ferrand, 1994), provides a unified explanation for the foregoing masked repetition-priming effects (see Fig. 1). In this model, orthographic (O) and phonological (P) units are represented at three levels (featural, sublexical, and lexical). Importantly, the interface between orthography and phonology is reflected both by direct connections between O-words and P-words and by indirect connections mediated by the sublexical interface (O↔P). The two panels represent the time courses of feedforward activation in the network after a visual prime has been presented; note that the amount of activation is largely (but not exclusively) determined by prime exposure duration.

Hypothesized states of activation in the bimodal interactive activation model (Grainger & Holcomb, 2010) following prime exposure durations of 33 ms (left) and 50 ms (right). The darkness of the lines reflects the extent of activation flow, and lowercase letters are used to label each processing stage. Dotted lines refer to activation flow that is irrelevant to the present study. V = visual; O = orthographic; P = phonological; A = auditory. From “Neural Constraints on a Functional Architecture for Word Recognition,” by J. Grainger and P. J. Holcomb, in P. L. Cornelissen, P. C. Hansen, M. L. Kringelbach, and K. Pugh (Eds.), The Neural Basis of Reading (p. 24), 2010, New York, NY: Oxford University Press. Copyright 2010 by Oxford University Press. Adapted with permission. Source: Oxford University Press

When a masked visual prime is presented for 33 ms (left panel), orthographic lexical representations (O-words) are activated (pathway “a–b”), but not phonological lexical representations (P-words; pathway “a–b–c”); this yields within-modality repetition priming. However, when the masked prime duration is extended to 50 ms or longer (right panel), pathway “a–b–c” becomes active, allowing the masked visual repetition prime to activate its auditory target; this yields cross-modal repetition priming.

There has been even less work on cross-modal semantic priming (e.g., mine–YOURS). An early study by Anderson and Holcomb (1995) revealed strong priming when unmasked visual primes preceded auditory targets; this priming was reliable across a range of stimulus onset asynchronies (SOAs; 0, 200, and 800 ms). Priming effects were also obtained with auditory primes and visual targets. However, to our knowledge, no study to date has explored masked cross-modal semantic priming when visual primes and auditory targets are used. Similarly, no study has directly compared the relative magnitudes of masked cross-modal repetition- and semantic-priming effects for a common set of targets.

The present study

To recapitulate, a number of issues have not been well addressed in the literature. First, there have been relatively few masked cross-modal priming studies, and the effects observed have been modest in size and sometimes contradictory. Second, cross-modal studies have typically focused on repetition priming. The handful of exceptions include Anderson and Holcomb’s (1995) study of unmasked cross-modal semantic priming and a study by Dell’Acqua and Grainger (1999) with masked picture primes and visual word targets (see Chapnik Smith, Meiran, & Besner, 2000, for a replication with unmasked picture primes). In short, whether it is possible to obtain reliable unconscious cross-modal semantic priming effects remains an open empirical question, when masked visual word primes and auditory word targets are used.

In the present study, using a common set of auditory targets, we investigated the influence of masked cross-modal repetition and semantic priming on spoken word recognition performance, when masked visual words served as the primes and the prime durations were set at 33 ms (Exp. 1) and 50 ms (Exp. 2). In addition to ascertaining the reliability of unconscious cross-modal semantic priming, the present results will also help provide insights into the interplay between orthography, phonology, and semantics over time, which could then be used to provide additional constraints for the BIAM. To provide finer-grained insights into the mechanisms underlying cross-modal priming, the data will also be represented via quantile plots, to explore the influence of masked cross-modal priming on different portions of the response time (RT) distribution (Balota & Yap, 2011). Using visually presented primes and targets, Gomez, Perea, and Ratcliff (2013) reported that masked repetition and semantic priming were reflected by a shift in the entire RT distribution, consistent with the idea that masked primes provide a head start to the stimulus-encoding process (Forster, Mohan, & Hector, 2003).

Method

Participants

In total, 207 students from the National University of Singapore (NUS) took part in the experiment (99 in Exp. 1 and 108 in Exp. 2). Participants were awarded course credit or S$5 for their participation. All participants were native speakers of English and had normal or corrected-to-normal vision and hearing.

Materials

The 156 auditory word targets were drawn from a previous study examining individual differences in repetition and semantic priming (Tan & Yap, 2016). Each target was paired with a semantic or repetition prime, or their respective unrelated controls (see Table 1); across participants, stimuli were counterbalanced across the four different prime conditions (see Table 2 for the prime and target properties). The semantic primes in this study were selected to yield symmetric prime–target pairs, which possessed similar forward (i.e., prime-to-target) and backward (i.e., target-to-prime) association strengths (Nelson, McEvoy, & Schreiber, 2004). Unrelated primes were created by re-pairing the primes and targets. An additional 156 nonwords were created via the Wuggy program (Keuleers & Brysbaert, 2010), an automated nonword generator that ensures that words and nonwords are matched on number of letters, number of syllables, and orthographic neighborhood size. These nonwords were paired with 156 primes that were matched to the primes for the target words on frequency, letter length, syllabic length, morphemic length, orthographic similarity, and phonological similarity.

A linguistically trained female native speaker of Singapore English was then recruited to record the 156 auditory tokens in 16-bit mono, 44.1-kHz wav files. The files were normalized to 70 dB, to ensure that the tokens were matched on root-mean-square amplitude. The tokens were presented to 30 participants (who did not take part in the main experiment) to verify that they met threshold for intelligibility. Tokens that did not achieve at least 80% correct identifications were re-recorded and tested, and all tokens used in the experiment were associated with at least a 70% correct identification rate (M = 94.9%, SD = 7.65%).
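The amplitude-matching step can be illustrated with a minimal sketch. It assumes that the level is computed as 20·log10(RMS / reference) with a reference of 2e-5, which is a common convention but is not stated in the text; the function names and the two synthetic tones are hypothetical and stand in for the recorded tokens.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_db(samples, reference=2e-5):
    """Level in dB relative to `reference` (assumed convention)."""
    return 20.0 * math.log10(rms(samples) / reference)

def scale_to_level(samples, target_db=70.0, reference=2e-5):
    """Apply one gain factor so that the token's RMS level equals target_db."""
    target_rms = reference * 10 ** (target_db / 20.0)
    gain = target_rms / rms(samples)
    return [s * gain for s in samples]

# Two synthetic 100-ms tones at 44.1 kHz with very different amplitudes
# (hypothetical stand-ins for recorded tokens).
quiet = [0.01 * math.sin(2 * math.pi * 440 * n / 44100) for n in range(4410)]
loud = [0.20 * math.sin(2 * math.pi * 220 * n / 44100) for n in range(4410)]

matched = [scale_to_level(token) for token in (quiet, loud)]
levels = [level_db(token) for token in matched]
```

After scaling, both tokens have the same root-mean-square amplitude even though their original amplitudes differed by a factor of 20.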

Procedure

Participants were tested in individual sound-attenuated cubicles. Prior to the auditory lexical decision task (LDT), participants worked on a computer-based task that assessed their spelling and vocabulary knowledge. For spelling, 88 letter strings had to be classified as correctly or incorrectly spelled via a button press (Andrews & Hersch, 2010), and vocabulary knowledge was assessed by the 40-item vocabulary subscale of the Shipley Institute of Living Scale (Shipley, 1940).

Turning to the LDT, participants were instructed to decide whether the auditorily presented token formed a word or nonword by pressing the right and left shift keys, respectively. Each trial comprised the following events: (1) a forward mask (###########) presented for 500 ms; (2) the uppercase prime, for either 33 ms (Exp. 1) or 50 ms (Exp. 2); (3) a backward mask of random letters (length-matched to the prime); and (4) the auditorily presented target (see Fig. 2 for the trial structure). The backward mask remained on the screen until the auditory token’s offset. Participants were encouraged to respond to the auditory target as quickly and accurately as possible; RTs were recorded from the onset of the auditory token. Twenty practice trials were administered before the experimental trials began, and the order of trials was randomized anew for each participant. In all, each participant went through 156 word-target trials (39 semantically related, 39 semantically unrelated, 39 repetition related, and 39 repetition unrelated) and 156 nonword-target trials (78 repetition related and 78 repetition unrelated). Each target was counterbalanced across prime type and relatedness, yielding a total of four counterbalancing versions. Additionally, no target was repeated within an experiment. At the end of the experiment, a verbal prime visibility check was carried out, to check whether the participants could identify the masked visual primes, and if they did, whether they were aware of the relationship between prime and target.

Results

On the basis of the prime visibility check, data from three and 12 of the participants, respectively, were excluded from Experiments 1 and 2, because these participants claimed that they could identify more than five of the primes during the experimental session. Our analyses were consequently based on the remaining 192 participants (96 in each experiment). In Experiment 1, the mean spelling and vocabulary scores were 85% (SD = 11%) and 76% (SD = 8%), respectively; in Experiment 2, the mean spelling and vocabulary scores were 81% (SD = 15%) and 76% (SD = 10%), respectively. Errors (8%) were first removed. Following this, RTs faster than 200 ms or slower than 3,000 ms were removed, and the remaining latencies that were not within three standard deviations of each participant’s mean were excluded. These criteria eliminated an additional 1.47% of responses. Table 3 presents descriptive statistics for the different experimental conditions.
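The trimming sequence described above can be sketched as follows. This is a minimal illustration, not the authors' script: the trial format (dictionaries with `participant`, `rt`, and `correct` keys) and the function name are hypothetical, and the sketch assumes that each participant's mean and standard deviation are computed after the absolute cutoffs have been applied.

```python
from statistics import mean, stdev

def trim_rts(trials, low=200, high=3000, sd_cutoff=3.0):
    """Return the trials that survive the three trimming steps."""
    # Step 1: remove error trials.
    kept = [t for t in trials if t["correct"]]
    # Step 2: remove RTs faster than `low` or slower than `high` (ms).
    kept = [t for t in kept if low <= t["rt"] <= high]
    # Step 3: remove RTs beyond `sd_cutoff` SDs of each participant's mean.
    by_participant = {}
    for t in kept:
        by_participant.setdefault(t["participant"], []).append(t)
    result = []
    for rows in by_participant.values():
        rts = [t["rt"] for t in rows]
        if len(rts) < 2:  # SD is undefined for a single trial
            result.extend(rows)
            continue
        m, sd = mean(rts), stdev(rts)
        result.extend(t for t in rows if abs(t["rt"] - m) <= sd_cutoff * sd)
    return result

# Hypothetical data for one participant: 19 typical RTs plus four trials
# that should each be removed by one of the steps.
trials = [{"participant": "p1", "rt": 690 + i, "correct": True} for i in range(19)]
trials += [
    {"participant": "p1", "rt": 700, "correct": False},   # error
    {"participant": "p1", "rt": 150, "correct": True},    # faster than 200 ms
    {"participant": "p1", "rt": 3500, "correct": True},   # slower than 3,000 ms
    {"participant": "p1", "rt": 2500, "correct": True},   # more than 3 SDs from the mean
]
cleaned = trim_rts(trials)
```

Note that with very few trials per participant no observation can lie more than three standard deviations from the mean, so the third step only has an effect when each participant contributes a reasonable number of trials.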

Next we analyzed our data using linear mixed-effects (LME) models (Baayen, Davidson, & Bates, 2008) in R (R Core Team, 2014). Given the low error rates and the multiple sources of lexical decision error, the present analyses focus on RT data. The raw RT data were fitted using the lme4 package (Bates, Mächler, Bolker, & Walker, 2015), and p values for fixed effects were derived from the lmerTest package (Kuznetsova, Brockhoff, & Christensen, 2016). Because the raw RT data are typically skewed in cognitive tasks, some researchers have suggested using reciprocally transformed RTs (– 1/RT; Masson & Kliegl, 2013) to normalize the residuals (see the Notes section). However, reciprocal transformations have been shown to systematically alter the joint effects of two variables by producing more underadditive patterns (Balota, Aschenbrenner, & Yap, 2013). It has also been documented that violating the normality-of-residuals assumption has virtually no impact on the estimation of regression slopes (Gelman & Hill, 2007).

The main effects of relatedness (related vs. unrelated), prime type (semantic vs. repetition), the Relatedness × Prime Type interaction, and token duration were treated as fixed effects. Tables 4 and 5, respectively, present the effects for Experiments 1 and 2. Effect coding was used for the categorical factors, whereby related and unrelated trials were, respectively, coded as – .5 and .5, whereas repetition and semantic trials were, respectively, coded as – .5 and .5; by-participant and by-target random slopes for relatedness were also included.
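To make the effect coding concrete, the sketch below shows how the fixed-effect coefficients map onto cell means in a balanced 2 × 2 design. It is an illustration only: it ignores token duration and the random effects, and the cell means are hypothetical values chosen to give priming effects of 23 ms (repetition) and 11 ms (semantic), the magnitudes reported for Experiment 2. Because the coded columns are orthogonal in a balanced design, each least-squares coefficient reduces to Σxy / Σx².

```python
# Effect codes as described in the text.
REL = {"related": -0.5, "unrelated": 0.5}
TYPE = {"repetition": -0.5, "semantic": 0.5}

# Hypothetical cell means in ms (not taken from the article's tables).
cell_means = {
    ("related", "repetition"): 900.0,
    ("unrelated", "repetition"): 923.0,
    ("related", "semantic"): 915.0,
    ("unrelated", "semantic"): 926.0,
}

def effect_coded_coefficients(means):
    """Least-squares coefficients for RT ~ rel + type + rel:type on cell means."""
    def slope(code):
        # Orthogonal columns: coefficient = sum(x * y) / sum(x * x).
        xs = [code(r, t) for (r, t) in means]
        ys = list(means.values())
        return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

    return {
        "intercept": sum(means.values()) / len(means),
        "relatedness": slope(lambda r, t: REL[r]),
        "prime_type": slope(lambda r, t: TYPE[t]),
        "interaction": slope(lambda r, t: REL[r] * TYPE[t]),
    }

coefs = effect_coded_coefficients(cell_means)
# relatedness -> 17.0: the priming effect averaged over prime types
# interaction -> -12.0: semantic priming (11) minus repetition priming (23)
```

With this coding, the relatedness coefficient is the priming effect averaged across prime types, and the interaction coefficient is the difference between the semantic- and repetition-priming effects, so a negative interaction coefficient indicates larger repetition priming.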

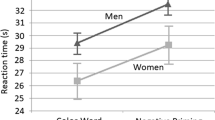

In Experiment 1, in which visual primes were presented for 33 ms, only the fixed effect of duration was significant (see Table 4): Participants responded more slowly when tokens with longer durations were presented. In Experiment 2, in which the prime duration was extended to 50 ms, the fixed effects of relatedness, prime type, and duration were all statistically significant, ps < .01 (see Table 5). RTs were faster when targets were preceded by a related prime, when the primes were repetition primes, and when the token durations were shorter. Importantly, the Relatedness × Prime Type interaction was also significant, p = .040. Specifically, the masked repetition-priming effect (M = 23 ms, p < .001) was larger than the masked semantic-priming effect (M = 11 ms, p = .015).

The quantile plots for Experiments 1 and 2 are presented in Figs. 3 and 4, respectively. To generate these, each participant’s RTs were first rank-ordered (from fastest to slowest) as a function of condition, followed by computing the priming effects for different quantiles (e.g., .15, .25, and .35). The bottommost panel in each figure represents the magnitudes of repetition and semantic priming across quantiles. Interestingly, the reliable masked-priming effects in Experiment 2 were reflected by distributional shifting (i.e., the priming was of comparable magnitude across quantiles), consistent with Gomez et al. (2013).
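The quantile computation can be sketched as follows. The data layout, the function names, the linear-interpolation rule, and the exact set of quantiles (.15 to .85 in steps of .10) are assumptions made for illustration; the text specifies only that RTs were rank-ordered within condition and that priming effects were computed at each quantile. The demonstration data are hypothetical and constructed as a pure 20-ms shift.

```python
def quantile(sorted_values, q):
    """Linear-interpolation quantile of an already sorted list (0 <= q <= 1)."""
    pos = q * (len(sorted_values) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] + frac * (sorted_values[hi] - sorted_values[lo])

def priming_by_quantile(rts, quantiles=(0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85)):
    """Mean (unrelated - related) RT difference at each quantile.

    rts maps participant -> {"related": [...], "unrelated": [...]}.
    Quantiles are computed per participant and condition, then averaged.
    """
    effects = []
    for q in quantiles:
        diffs = [
            quantile(sorted(d["unrelated"]), q) - quantile(sorted(d["related"]), q)
            for d in rts.values()
        ]
        effects.append(sum(diffs) / len(diffs))
    return effects

# Hypothetical data: the unrelated condition is the related condition
# shifted by 20 ms, i.e., a pure distributional shift.
related_p1 = [520, 560, 600, 640, 700, 780, 900, 1100]
related_p2 = [480, 530, 590, 650, 720, 810, 950]
rts = {
    "p1": {"related": related_p1, "unrelated": [x + 20 for x in related_p1]},
    "p2": {"related": related_p2, "unrelated": [x + 20 for x in related_p2]},
}
effects = priming_by_quantile(rts)
```

A pure shift yields the same priming effect at every quantile, which is the pattern described as distributional shifting; an effect that grew across quantiles would instead indicate increased skew in the unrelated condition.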

Discussion

In the present study we examined and compared cross-modal repetition- and semantic-priming effects in an auditory lexical decision task with masked visual primes. The study generated a number of noteworthy findings that are easily summarized. First, both masked cross-modal repetition- and semantic-priming effects were statistically unreliable when the prime duration was 33 ms, but they were significant when the prime duration was extended to 50 ms. Second, the priming effects in Experiment 2 were mediated by RT distributional shifting, extending to cross-modal priming Gomez et al.’s (2013) observations, which were based on within-modal masked priming. This pattern further reinforces the idea that masked priming reflects a relatively modular head-start mechanism (Forster et al., 2003). Most importantly, a significant interaction was found between prime type and relatedness, wherein cross-modal priming was stronger when repetition, as compared to semantic, primes were used.

Time course of masked cross-modal repetition and semantic priming

Consistent with earlier work (e.g., Kouider & Dupoux, 2001; Grainger et al., 2003), the present study provides additional evidence that with a prime exposure of 33 ms, masked cross-modal priming (both repetition and semantic) is absent. Importantly, it replicates and extends previous studies by showing that when the prime exposure is lengthened to 50 ms, masked cross-modal repetition and semantic priming can be reliably observed, with repetition priming (23 ms) being approximately twice as large as semantic priming (11 ms). To our knowledge, this is the first empirical demonstration of unconscious cross-modal semantic priming in the literature when visual primes and auditory targets are used. As we discussed in the introduction, the BIAM can successfully explain why cross-modal repetition priming requires a longer prime exposure than within-modal repetition priming. As can be seen in Fig. 1, pathway “a–b–c,” which is required for cross-modal repetition priming, is active only when the masked prime duration is extended to 50 ms or longer. However, the framework described in Fig. 1 does not contain semantic units, and is therefore silent on semantic-priming effects.

To accommodate the present findings, we turn to the embellished BIAM (see Fig. 5) described in Grainger and Holcomb (2010); in this modified model, semantic representations (S-units) are connected to whole-word orthographic and phonological units. With a 33-ms prime exposure, we found no evidence for cross-modal repetition or semantic priming, which contrasts with empirical demonstrations of within-modal masked repetition (Kouider & Dupoux, 2001) and semantic (Reimer, Lorsbach, & Bleakney, 2008) priming at this SOA (see also Tan & Yap, 2016, for similar findings with 40-ms primes). This is reflected by Fig. 5 (left panel): Pathways “a–b” and “a–b–i” are active, which, respectively, support within-modal repetition and semantic priming. More notably, the present study has revealed that when the prime duration was lengthened to 50 ms, small but significant effects of masked cross-modal semantic priming could now be observed. Although masked cross-modal semantic priming is not precisely novel (see, e.g., Dell’Acqua & Grainger, 1999), this is the first study, to our knowledge, to report semantic priming with masked visual word primes and auditory word targets. This indicates that at a prime duration of 50 ms (see Fig. 5, right panel), both pathways “a–b–c” and “a–b–i–j” are active. Masked cross-modal semantic priming (cat–DOG) is mediated by the “a–b–i–j” pathway, while masked cross-modal repetition priming (cat–CAT) is jointly mediated by “a–b–c” and “a–b–i–j.”

Hypothesized states of activation in the bimodal interactive activation model (Grainger & Holcomb, 2010) following prime exposure durations of 33 ms (left) and 50 ms (right). The darkness of the lines reflects the extent of activation flow, and lowercase letters are used to label each processing stage. Dotted lines refer to activation flow that is irrelevant to the present study. V = visual; O = orthographic; P = phonological; S = semantic; A = auditory. From “Neural Constraints on a Functional Architecture for Word Recognition,” by J. Grainger and P. J. Holcomb, in P. L. Cornelissen, P. C. Hansen, M. L. Kringelbach, and K. Pugh (Eds.), The Neural Basis of Reading (p. 6), 2010, New York, NY: Oxford University Press. Copyright 2010 by Oxford University Press. Adapted with permission. Source: Oxford University Press

It is also worth highlighting that we observed an interaction between prime type and relatedness with a prime duration of 50 ms. Specifically, masked cross-modal repetition-priming effects were approximately twice as large as the semantic-priming effects. This finding follows naturally from the BIAM, which contains multiple pathways (both semantic and nonsemantic) for connecting orthographic and phonological representations. Specifically, repetition priming is stronger than semantic priming because the former is mediated by both the “a–b–c” and “a–b–i–j” pathways, whereas the latter is exclusively mediated by “a–b–i–j.”
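The pathway account can be summarized with a small bookkeeping sketch. This is a qualitative illustration only, not a simulation of the BIAM: it simply records which pathways the text describes as active at each prime duration and which pathways support each type of priming. The dictionary and function names are hypothetical, and pathway labels use plain hyphens.

```python
# Pathways described as active at each prime duration (ms).
ACTIVE_PATHWAYS = {
    33: {"a-b", "a-b-i"},
    50: {"a-b", "a-b-i", "a-b-c", "a-b-i-j"},
}

# Pathways described as supporting each priming effect.
SUPPORTING_PATHWAYS = {
    "within-modal repetition": {"a-b"},
    "within-modal semantic": {"a-b-i"},
    "cross-modal repetition": {"a-b-c", "a-b-i-j"},
    "cross-modal semantic": {"a-b-i-j"},
}

def active_support(effect, duration_ms):
    """Supporting pathways for `effect` that are active at `duration_ms`."""
    return sorted(SUPPORTING_PATHWAYS[effect] & ACTIVE_PATHWAYS[duration_ms])

# Number of active supporting pathways per effect and duration.
summary = {
    (effect, duration): len(active_support(effect, duration))
    for effect in SUPPORTING_PATHWAYS
    for duration in ACTIVE_PATHWAYS
}
```

In this tabulation, neither cross-modal effect has an active supporting pathway at 33 ms, whereas at 50 ms cross-modal repetition priming is supported by two pathways and cross-modal semantic priming by one, mirroring the ordering of the observed effects.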

The present results add to the broad range of cross-modal priming effects across the time course that can be accommodated by the BIAM framework (Grainger & Holcomb, 2010). Although this result is beyond the scope of the present article, the model also provides a principled explanation for why masked pseudohomophone priming (e.g., using brane to prime /breɪn/) requires longer prime durations (i.e., 67 ms), whether the priming occurs within or across modalities. Pseudohomophone priming necessarily implicates the orthographic-to-phonological (O→P) interface, which is required in order to map sublexical orthographic units onto sounds. The pathways involving the O→P interface (“a–d–e–f” or “a–d–e–g”) are only active at relatively long (supraliminal) prime exposure durations.

Finally, we should note that our findings diverge from those of Dell’Acqua and Grainger (1999), who reported masked repetition- and semantic-priming effects of comparable magnitudes when picture primes and visual word targets were used. Why are there presemantic pathways between orthographic and phonological representations, but not between orthographic and pictorial representations? One could argue that there is a more fundamental link between speech and writing than between pictures and writing. In the evolution of language, speech came first, and writing was specifically invented to capture and archive speech, rather than to capture pictures or label objects in the world. Related to this, the mapping between the written and spoken forms of English words is generally systematic, with some exceptions; this sort of quasiregular relationship is absent between pictures and words. This suggests that the present findings may not generalize to an orthographically deep writing system like Chinese, in which the spelling–sound mapping is far more arbitrary.

Future directions and conclusion

One obvious limitation of the present work is that our findings are based on masked visual primes and auditory targets, similar to much of the extant empirical literature. In principle, the symmetry of the BIAM architecture predicts that our findings ought to generalize to when masked auditory primes and visual targets are used. Although it is methodologically more challenging to mask auditory than to mask visual stimuli, Kouider and Dupoux (2005) showed that this could be done by masking spoken primes within a stream of speech-like sounds.

The present study explored the interplay between orthographic and phonological representations and revealed unconscious priming between the two modalities, when both repetition and, to a lesser extent, semantic primes were used. Importantly, our findings also provide compelling support for multiple semantic and nonsemantic pathways between orthographic and phonological representations, consistent with the BIAM (Grainger & Holcomb, 2010).

Notes

We also conducted parallel analyses with reciprocally transformed RTs and obtained the same pattern of results.

References

Anderson, J. E., & Holcomb, P. J. (1995). Auditory and visual semantic priming using different stimulus onset asynchronies: An event-related brain potential study. Psychophysiology, 32, 177–190.

Andrews, S., & Hersch, J. (2010). Lexical precision in skilled readers: Individual differences in masked neighbor priming. Journal of Experimental Psychology: General, 139, 299–318.

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412. https://doi.org/10.1016/j.jml.2007.12.005

Balota, D. A., Aschenbrenner, A., & Yap, M. J. (2013). Additive effects of word frequency and stimulus quality: The influence of trial history and data transformations. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 1563–1571.

Balota, D. A., & Yap, M. J. (2011). Moving beyond the mean in studies of mental chronometry: The power of response time distributional analyses. Current Directions in Psychological Science, 20, 160–166. https://doi.org/10.1177/0963721411408885

Balota, D. A., Yap, M. J., & Cortese, M. J. (2006). Visual word recognition: The journey from features to meaning (a travel update). In M. Traxler & M. A. Gernsbacher (Eds.), Handbook of psycholinguistics (2nd ed., pp. 285–375). Amsterdam, The Netherlands: Elsevier/Academic Press.

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67, 1–48. https://doi.org/10.18637/jss.v067.i01

Chapnik Smith, M., Meiran, N., & Besner, D. (2000). On the interaction between linguistic and pictorial systems in the absence of semantic mediation: Evidence from a priming paradigm. Memory & Cognition, 28, 204–213.

Dahan, D., & Magnuson, J. S. (2006). Spoken word recognition. In M. Traxler & M. A. Gernsbacher (Eds.), Handbook of psycholinguistics (2nd ed., pp. 249–283). Amsterdam, The Netherlands: Elsevier/Academic Press.

Dell’Acqua, R., & Grainger, J. (1999). Unconscious semantic priming from pictures. Cognition, 73, B1–B15. https://doi.org/10.1016/S0010-0277(99)00049-9

Forster, K. I. (1998). The pros and cons of masked priming. Journal of Psycholinguistic Research, 27, 203–233. https://doi.org/10.1023/A:1023202116609

Forster, K. I., & Davis, C. (1984). Repetition priming and frequency attenuation in lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 680–698. https://doi.org/10.1037/0278-7393.10.4.680

Forster, K. I., Mohan, K., & Hector, J. (2003). The mechanics of masked priming. In S. Kinoshita & S. J. Lupker (Eds.), Masked priming: The state of the art (pp. 2–21). Hove, UK: Psychology Press.

Gelman, A., & Hill, J. (2007). Data analysis using regression and multilevel/hierarchical models. New York, NY: Cambridge University Press.

Gomez, P., Perea, M., & Ratcliff, R. (2013). A diffusion model account of masked versus unmasked priming: Are they qualitatively different? Journal of Experimental Psychology: Human Perception and Performance, 39, 1731–1740.

Grainger, J., Diependaele, K., Spinelli, E., Ferrand, L., & Farioli, F. (2003). Masked repetition and phonological priming within and across modalities. Journal of Experimental Psychology: Learning, Memory, and Cognition, 29, 1256–1269. https://doi.org/10.1037/0278-7393.29.6.1256

Grainger, J., & Ferrand, L. (1994). Phonology and orthography in visual word recognition: Effects of masked homophone primes. Journal of Memory and Language, 33, 218–233.

Grainger, J., & Holcomb, P. J. (2010). Neural constraints on a functional architecture for word recognition. In P. L. Cornelissen, P. C. Hansen, M. L. Kringelbach, & K. Pugh (Eds.), The neural basis of reading (pp. 3–33). New York, NY: Oxford University Press.

Holcomb, P. J., Anderson, J., & Grainger, J. (2005). An electrophysiological investigation of cross-modal repetition priming. Psychophysiology, 42, 493–507.

Holcomb, P. J., & Grainger, J. (2006). On the time course of visual word recognition: An event-related potential investigation using masked repetition priming. Journal of Cognitive Neuroscience, 18, 1631–1643. https://doi.org/10.1162/jocn.2006.18.10.1631

Keuleers, E., & Brysbaert, M. (2010). Wuggy: A multilingual pseudoword generator. Behavior Research Methods, 42, 627–633. https://doi.org/10.3758/BRM.42.3.627

Kiyonaga, K., Grainger, J., Midgley, K., & Holcomb, P. J. (2007). Masked cross-modal repetition priming: An event-related potential investigation. Language and Cognitive Processes, 22, 337–376. https://doi.org/10.1080/01690960600652471

Kouider, S., & Dupoux, E. (2001). A functional disconnection between spoken and visual word recognition: Evidence from unconscious priming. Cognition, 82, B35–B49.

Kouider, S., & Dupoux, E. (2005). Subliminal speech priming. Psychological Science, 16, 617–625. https://doi.org/10.1111/j.1467-9280.2005.01584.x

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2016). lmerTest: Tests in linear mixed effect models (R Package Version 2.0-30) [Software]. Retrieved from https://cran.r-project.org/web/packages/lmerTest/index.html

Lukatela, G., & Turvey, M. T. (1994). Visual lexical access is initially phonological: I. Evidence from associative priming by words, homophones, and pseudohomophones. Journal of Experimental Psychology: General, 123, 107–128. https://doi.org/10.1037/0096-3445.123.2.107

Masson, M. E. J., & Kliegl, R. (2013). Modulation of additive and interactive effects in lexical decision by trial history. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 898–914.

Nelson, D. L., McEvoy, C. L., & Schreiber, T. A. (2004). The University of South Florida free association, rhyme, and word fragment norms. Behavior Research Methods, Instruments, & Computers, 36, 402–407. https://doi.org/10.3758/BF03195588

R Core Team. (2014). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from www.R-project.org

Radeau, M., Besson, M., Fonteneau, E., & Castro, S. L. (1998). Semantic, repetition, and rime priming between spoken words: Behavioral and electrophysiological evidence. Biological Psychology, 48, 183–204.

Reimer, J. F., Lorsbach, T. C., & Bleakney, D. M. (2008). Automatic semantic feedback during visual word recognition. Memory & Cognition, 36, 641–658. https://doi.org/10.3758/MC.36.3.641

Shipley, W. C. (1940). A self-administering scale for measuring intellectual impairment and deterioration. Journal of Psychology: Interdisciplinary and Applied, 9, 371–377. https://doi.org/10.1080/00223980.1940.9917704

Slowiaczek, L. M., Soltano, E. G., Wieting, S. J., & Bishop, K. L. (2003). An investigation of phonology and orthography in spoken-word recognition. Quarterly Journal of Experimental Psychology, 56A, 233–262.

Tan, L. C., & Yap, M. J. (2016). Are individual differences in masked repetition and semantic priming reliable? Visual Cognition, 24, 182–200.

Author note

This work was supported in part by a Heads and Deanery Research Support Scheme (HDRSS) grant (R-581-000-176-101) to M.J.Y. Portions of this research were carried out as an undergraduate honors thesis by K.Y.T.C. under the direction of M.J.Y. and W.D.G. We thank Aaron Lim for his help with data collection, and an anonymous reviewer for comments on an earlier draft.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Chng, K.Y.T., Yap, M.J. & Goh, W.D. Cross-modal masked repetition and semantic priming in auditory lexical decision. Psychon Bull Rev 26, 599–608 (2019). https://doi.org/10.3758/s13423-018-1540-8

DOI: https://doi.org/10.3758/s13423-018-1540-8