Abstract

Sound symbolism refers to an association between phonemes and stimuli containing particular perceptual and/or semantic elements (e.g., objects of a certain size or shape). Some of the best-known examples include the mil/mal effect (Sapir, Journal of Experimental Psychology, 12, 225–239, 1929) and the maluma/takete effect (Köhler, 1929). Interest in this topic has been on the rise within psychology, and studies have demonstrated that sound symbolic effects are relevant for many facets of cognition, including language, action, memory, and categorization. Sound symbolism also provides a mechanism by which words’ forms can have nonarbitrary, iconic relationships with their meanings. Although various proposals have been put forth for how phonetic features (both acoustic and articulatory) come to be associated with stimuli, there is as yet no generally agreed-upon explanation. We review five proposals: statistical co-occurrence between phonetic features and associated stimuli in the environment; a shared property among phonetic features and stimuli; neural factors; species-general, evolved associations; and patterns extracted from language. We identify a number of outstanding questions that need to be addressed on this topic and suggest next steps for the field.

Sound symbolism

In this review, we use the term sound symbolism to refer to an association between phonemes and particular perceptual and/or semantic elements (e.g., large size, rounded contours).Footnote 1 These associations arise from some quality of the phonemes themselves (e.g., their acoustic and/or articulatory features), and not because of the words in which they appear. Thus, we exclude associations deriving from patterns of phoneme use in language (i.e., conventional sound symbolism; Hinton, Nichols, & Ohala, 1994) from our definition. We also exclude associations deriving from direct imitations of sound (i.e., imitative sound symbolism; Hinton et al., 1994).Footnote 2 We exclude these associations because, like Hinton et al., we think they are distinct categories and that they do not necessarily share underlying mechanisms with the phenomenon we seek to explain. As illustrated in Table 1, the definition of sound symbolism that we offer here is similar to a number of other definitions for the phenomenon that can be found in the literature.

The term association is somewhat difficult to characterize in this context; broadly, it refers to the sense that the phonemes in question seem related to, or to naturally go along with, stimuli possessing the associated elements or features (e.g., objects of a certain size or shape). Sound symbolic associations emerge behaviorally in reports that nonwords containing certain phonemes are especially good labels for particular targets (e.g., Maurer, Pathman, & Mondloch, 2006; Nielsen & Rendall, 2011). They may also emerge on implicit tasks, such that congruent phoneme-stimuli pairings are responded to differently than incongruent pairings (e.g., Hung, Styles, & Hsieh, 2017; Ohtake & Haryu, 2013; Westbury, 2005).

These sound symbolic associations have important implications for our understanding of language. While the arbitrariness of language has long been considered one of its defining features (e.g., Hockett, 1963), sound symbolism offers one way for nonarbitrariness to play a role. It does this through congruencies between the sound symbolic associations of a word’s phonemes and the word’s meaning. An example of this could be when a word denoting something small contains phonemes that are sound symbolically associated with smallness (i.e., an instance of indirect iconicity, discussed later). These congruencies can have effects on language learning (e.g., Asano et al., 2015; Imai, Kita, Nagumo, & Okada, 2008; Perry, Perlman, & Lupyan, 2015; for a review, see Imai & Kita, 2014) and processing (e.g., Kanero, Imai, Okuda, Okada, & Matsuda, 2014; Lockwood & Tuomainen, 2015; Sučević, Savić, Popović, Styles, & Ković, 2015). Moreover, sound symbolic associations have also been shown to impact cognition more broadly, including effects on action (Parise & Pavani, 2011; Rabaglia, Maglio, Krehm, Seok, & Trope, 2016; Vainio, Schulman, Tiippana, & Vainio, 2013; Vainio, Tiainen, Tiippana, Rantala, & Vainio, 2016), memory (Lockwood, Hagoort, & Dingemanse, 2016; Nygaard, Cook, & Namy, 2009; Preziosi & Coane, 2017), and categorization (Ković, Plunkett, & Westermann, 2010; Lupyan & Casasanto, 2015; for a recent review of sound symbolism effects, see Lockwood & Dingemanse, 2015).

Interest in sound symbolism within psychology is on the rise. Ramachandran and Hubbard’s (2001) article, which rekindled interest in the phenomenon,Footnote 3 was one of only 28 published on sound symbolism and/or the closely related topic of iconicity (discussed later) in that year. For comparison, a total of 193 articles were published on sound symbolism and/or iconicity in 2016 (see Fig. 1). However, despite growing interest in the phenomenon, one topic that has largely been neglected is the mechanism underlying these associations, that is, why certain phonemes come to be associated with particular perceptual and/or semantic features. While there are a number of proposals, there is a scarcity of experimental work focused on adjudicating between them. One potential reason for this is that the mechanisms have yet to be thoroughly described and evaluated in a single work (though see Deroy & Auvray, 2013; Fischer-Jørgensen, 1978; French, 1977; Johansson & Zlatev, 2013; Masuda, 2007; Nuckolls, 1999; Shinohara & Kawahara, 2010); that is the aim of the present article. We begin by describing two well-known instances of sound symbolism to serve as reference points. Then, as an illustration of this topic’s importance, the role of sound symbolism in language is reviewed. Next, we review the features of phonemes that may be involved in associations, and then explore the proposed mechanisms by which these features come to be associated with particular kinds of stimuli. Finally, we identify the outstanding issues that need to be addressed on this topic and suggest potential next steps for the field.

Size and shape symbolism

The two most well-known sound symbolic effects are typically traced to a pair of works from 1929 (though there are relevant earlier observations; e.g., Jespersen, 1922; von der Gabelentz, 1891; for a review, see Jakobson & Waugh, 1979). One of these is the mil/mal effect (Sapir, 1929), referring to an association between high and front vowels (see Table 2), and small objects; and low and back vowels, and large objects (Newman, 1933; Sapir, 1929). That is, when individuals are asked to pair nonwords such as mil and mal with a small and a large shape, most will pair mil with the small shape and mal with the large shape. Beyond a number of such explicit demonstrations (e.g., Thompson & Estes, 2011), the effect has also been shown to emerge implicitly. Participants are faster to respond on an implicit association task (IAT) if mil/small shapes and mal/large shapes share response buttons compared to when the pairing is reversed (Parise & Spence, 2012). In addition, participants are faster to classify a shape’s size if a sound-symbolically-congruent (vs. incongruent) vowel is simultaneously presented auditorily (Ohtake & Haryu, 2013). The effect has been demonstrated across speakers of different languages (e.g., Shinohara & Kawahara, 2010) and at different points in the life span (e.g., in the looking times of 4-month-old infants; Peña, Mehler, & Nespor, 2011).

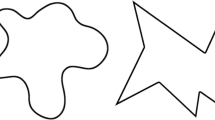

Another well-studied sound symbolic association is the maluma/takete effect (Köhler, 1929), referring to an association between certain phonemes and either round or sharp shapes. More recently, this has often been called the bouba/kiki effect, referring to the stimuli used by Ramachandran and Hubbard (2001) in their demonstration of the effect. In general, voiceless stop consonants (i.e., /p/, /t/, and /k/)Footnote 4 and unrounded front vowels (e.g., /i/ as in heed) seem to be associated with sharp shapes; while sonorant consonants (e.g., /l/, /m/, and /n/), the voiced bilabial stop consonant /b/, and rounded back vowels (e.g., /u/ as in who’d), are associated with round shapes (D’Onofrio, 2013; Nielsen & Rendall, 2011; Ozturk, Krehm, & Vouloumanos, 2013; cf. Fort, Martin, & Peperkamp, 2014). As with the mil/mal effect, the maluma/takete effect has been repeatedly demonstrated using explicit matching tasks (e.g., Maurer et al., 2006; Nielsen & Rendall, 2011; Sidhu & Pexman, 2016). It also emerges on implicit tasks such as the IAT (Parise & Spence, 2012) and on lexical decision tasks, such that nonwords are responded to faster when presented inside of congruent (vs. incongruent) shape frames (e.g., a sharp nonword inside of a jagged vs. curvy frame; Westbury, 2005; cf. Sučević et al., 2015). It has been demonstrated in speakers of a number of different languages (e.g., Bremner et al., 2013; Davis, 1961; cf. Rogers & Ross, 1975) and in the looking times of 4-month-old infants (Ozturk et al., 2013; cf. Fort, Weiß, Martin, & Peperkamp, 2013; Pejovic & Molnar, 2016).

Arbitrariness and nonarbitrariness

Sound symbolism is relevant to our understanding of the fundamental nature of spoken language, in particular, to the relationship between the form of a word (i.e., its articulation, phonology, and/or orthography) and its meaning. One possibility is that this relationship is arbitrary, with no special connection between form and meaning (e.g., Hockett, 1963).Footnote 5 Hockett (1963) described this lack of special connection as the absence of a “physical or geometrical resemblance between [form and meaning]” (p. 8). However, this seems to only contrast arbitrariness with iconicity (see below). A more general way of characterizing this lack of a special connection is that aspects of a word’s form cannot be used as cues to its meaning (Dingemanse, Blasi, Lupyan, Christiansen, & Monaghan, 2015). As an illustration, it would be difficult to derive the meaning of the word fun from aspects of its form.Footnote 6 An important related concept is conventionality, the notion that words only mean what they do because a group of language users have agreed upon a definition.

It is also possible for the relationship between form and meaning to be nonarbitrary, either through systematicity or iconicity (Dingemanse et al., 2015). Systematicity refers to broad statistical relationships among groups of words belonging to the same semantic or syntactic categories. For instance, Farmer, Christiansen, and Monaghan (2006) showed that English nouns tend to be more phonologically similar to other nouns than to verbs (and vice versa for verbs). Similarly, Reilly and Kean (2007) demonstrated that there are general differences in the forms of concrete and abstract English nouns. Importantly, systematicity does not involve relationships between words’ forms and their specific meanings but broad relationships between groups of words and linguistic categories (Dingemanse et al., 2015). For instance, the nouns member, prison, and student are systematic in that they have a stress on their initial syllable (as do most disyllabic nouns; Sereno, 1986). This is a nonarbitrary property in that it is possible to derive grammatical category from word form. However, initial syllable stress is not related to these words’ specific meanings in any particular way. While systematicity tends to occur on a large scale within a language, specific patterns of systematicity vary from language to language (Dingemanse et al., 2015).

The other way that language can be nonarbitrary is through iconicity: a resemblance between form and meaning.Footnote 7 Instead of a holistic resemblance, this often emerges as a structural, resemblance-based mapping between aspects of a word’s form and aspects of its meaning (Emmorey, 2014; Meir, Padden, Aronoff, & Sandler, 2013; Taub, 2001). For instance, consider the example used by Emmorey (2014), of the hand sign for bird in American Sign Language, in Fig. 2. Notice that specific features of the form map on to specific features of the meaning (e.g., the presence of a protrusion at the mouth, the ability of that protrusion to open vertically). Because only certain aspects of meaning are included in the mapping, there are elements of the concept bird that are not represented (e.g., its wings). An example of this in spoken language is onomatopoeia: words that sound like their referent. Take for instance the word ding, whose abrupt onset and fading offset map onto these features in the sound of a bell (Taub, 2001; see Assaneo, Nichols, & Trevisan, 2011). The preceding examples could be considered instances of direct iconicity, in which form maps directly onto meaning via resemblance (Masuda, 2007). This mapping is of course constrained by the form’s modality; spoken language affords direct mapping onto meanings related to (or involving) sound, while signed languages are able to directly map onto spatial and kinesthetic meanings (Meir et al., 2013; Perniss, Thompson, & Vigliocco, 2010).

Fig. 2 The sign for bird in American Sign Language. Notice that specific aspects of the word’s form map onto specific aspects of its meaning (e.g., the presence of a protrusion at the mouth and the ability of that protrusion to open vertically). Note. From “ASLU,” by W. Vicars, 2015 (http://lifeprint.com/index.htm). Copyright 1997 by Lifeprint Institute. Reprinted with permission.

It is also possible for language to display indirect iconicity in which it is the forms’ associations that map onto meaning (Masuda, 2007). This was put elegantly by Von Humboldt (1836) as cases in which sounds “produce for the ear an impression similar to that of the object upon the soul” (p. 73). In indirect iconicity it is the impression of the sound that maps onto meaning as opposed to the sound itself.Footnote 8 Consider for instance the word teeny (/tini/). Because its meaning is not related to sound, its phonemes cannot map onto meaning directly. However, as mentioned, the high-front vowel phoneme /i/ is sound symbolically associated with smallness. Thus, this phoneme maps onto smallness indirectly, by way of its sound symbolic association, allowing teeny to be indirectly iconic. This is the relevance of sound symbolism to language: it provides one mechanism by which words can be nonarbitrarily associated with their meanings.

The preceding examples of iconicity would be considered instances of imagic iconicity: a relationship between a single form and meaning (Peirce, 1974). However, some have proposed that sound symbolism plays a role in diagrammatic iconicity: cases in which the relationship between two forms resembles the relationship between their two meanings. Imagic and diagrammatic iconicity are sometimes referred to as absolute and relative iconicity, respectively (e.g., Dingemanse et al., 2015). Diagrammatic iconicity is often seen in ideophones, a class of words that depict various sensory meanings (beyond sounds) through iconicity (see Dingemanse, 2012). For instance, the Japanese ideophones goro and koro mean a heavy and a light object rolling, respectively. Note that goro begins with a voiced consonant while koro begins with a voiceless consonant; voiced (voiceless) consonants are associated with heaviness (lightness; Saji, Akita, Imai, Kantartzis, & Kita, 2013). Thus, the relationship between the sound symbolic properties of each word (i.e., one being sound symbolically heavier than the other) reflects the relationship between their meanings. At the moment it is unclear whether sound symbolism primarily contributes to indirect imagic iconicity or requires the comparison inherent in diagrammatic iconicity (e.g., Gamkrelidze, 1974). In Figure 6, in the Appendix, we propose a taxonomy of iconicity that is an attempt to synthesize the various distinctions that have been made in the literature.

There is a good deal of work demonstrating that iconicity is present in the lexicons of spoken languages.Footnote 9 The clearest example of this is the widespread existence of ideophones. Although they are rare in Indo-European languages, they are common in many others, including sub-Saharan African languages, Australian Aboriginal languages, Japanese, Korean, Southeast Asian languages, South American indigenous languages, and Balto-Finnic languages (Perniss et al., 2010). Additionally, speaking to the psychological reality of ideophones, studies have shown that there are both behavioral (e.g., Imai et al., 2008; Lockwood, Dingemanse, & Hagoort, 2016) and neural differences (e.g., Kanero et al., 2014; Lockwood et al., 2016; Lockwood & Tuomainen, 2015) in the learning and processing of ideophones as compared to nonideophonic words (or ideophones paired with incorrect meanings).

There is also evidence that iconicity plays a role in the lexicon beyond ideophones. For instance, Ultan (1978) found that among languages that use vowel ablauting to denote diminutive concepts, most do so with high-front vowels. This is an example of indirect iconicity, occurring via high-front vowels’ sound symbolic associations with smallness. In addition, Blasi, Wichmann, Hammarström, Stadler and Christiansen (2016) compared the forms of 100 basic terms across 4,298 languages and found, in addition to other patterns, that words for the concept small tended to include the high-front vowel /i/. Cross-linguistic studies have also reported evidence of indirect iconicity in, among other things, proximity terms (e.g., Johansson & Zlatev, 2013; Tanz, 1971), singular versus plural markers (Ultan, 1978), and animal names (Berlin, 1994). Additionally, the ability of individuals to guess the meanings of foreign antonyms at an above chance rate (e.g., Bankieris & Simner, 2015; Brown, Black, & Horowitz, 1955; Klank, Huang, & Johnson, 1971) has been attributed to indirect iconicity.

Taking an even broader view of language, Perry et al. (2015) and Winter et al. (2017) conducted large-scale norming studies in which 3,001 English words were rated on a scale with 0 indicating arbitrariness and 5 indicating iconicity. Many words had an average rating significantly greater than zero, indicating that this sample of words was not entirely arbitrary. Moreover, the iconicity of words in this sample is related to age of acquisition (Perry et al., 2015), frequency, sensory experience (Winter et al., 2017), and semantic neighborhood density (Sidhu & Pexman, 2017). Thus, instead of being a linguistic curiosity, iconicity appears to be a general property of language that behaves in a predictable manner, even in a less obviously iconic language such as English.

Of course, the existence of systematicity and iconicity does not discount the premise that arbitrariness is a fundamental property of language. As put by Nuckolls (1999), “throughout the exhaustive dissections and criticisms of the principle of arbitrariness, there has never been a serious suggestion that it be totally abandoned” (p. 246). Instead, arbitrariness, systematicity, and iconicity are seen as three coexisting aspects of language (Dingemanse et al., 2015). In fact, there is a growing appreciation that words do not fall wholly into the categories arbitrary and nonarbitrary but rather that individual words can contain both arbitrary and nonarbitrary elements (e.g., Dingemanse et al., 2015; Perniss et al., 2010; Waugh, 1992). For instance, consider the word hiccups. It is a noun with a stressed first syllable (a systematic property); it also imitates aspects of its meaning (an iconic property). However, without knowing its definition, one would not be able to fully grasp its meaning based solely on its form (an arbitrary property). It seems that each of these properties contributes to language in varying proportions; each also provides unique benefits to language. That is, systematicity facilitates the learning of linguistic categories (e.g., Cassidy & Kelly, 1991; Fitneva, Christiansen, & Monaghan, 2009; Monaghan, Christiansen, & Fitneva, 2011). Iconicity makes communication more direct and vivid (Lockwood & Dingemanse, 2015), and can facilitate language learning (e.g., Imai et al., 2008; for a review, see Imai & Kita, 2014). Lastly, decoupling form and meaning (i.e., arbitrariness) allows language to denote potentially limitless concepts (Lockwood & Dingemanse, 2015) and avoids confusion among similar meanings with similar forms (e.g., Gasser, 2004; Monaghan et al., 2011).

Phonetic features involved in sound symbolism

Before turning to a discussion of how sound symbolic associations between phonemes and particular stimuli arise, it is important to make clear that in the present review we conceptualize these associations as arising from associations between specific phonetic features Footnote 10 and particular perceptual and/or semantic features. For instance, the association between high-front vowels and smallness (i.e., the mil/mal effect) is seen as arising from an association between some component acoustic or articulatory feature of high-front vowels, and smallness. Phonemes are multidimensional bundles of acoustic and articulatory features, any or all of which may afford an association with particular stimuli (e.g., Tsur, 2006). Indeed, Jakobson and Waugh (1979) opine that “most objections to the search for the inner significance of speech sounds arose because the latter were not dissected into their ultimate constituents” (p. 182). Thus, the first step is to delineate these various features of vowel and consonant phonemes that may be involved in associations.

Vowels are phonemes produced by changing the shape and size of the vocal tract through moving the tongue’s position in the mouth and opening the jaw. This is done without obstructing the airflow—without having the articulators (e.g., tongue, lips) come together. The three main articulatory features that determine vowels’ identity are: height (proximity of the tongue body to the roof of the mouth), frontness (proximity of the highest point of the tongue to the front of the mouth; see Fig. 3) and lip rounding.Footnote 11 Vowels are described acoustically in terms of their formants: bands of high acoustic energy at particular frequencies. Tongue and jaw position serve to change the configuration of the vocal tract and affect which frequencies will resonate most strongly. The lowest of these formants (i.e., fundamental frequency) corresponds with the pitch of a vowel; it tends to be higher for high vowels, potentially because the tongue’s height “pulls on the larynx, and thus increases the tension of the vocal cords” (Ohala & Eukel, 1987, p. 207), increasing pitch. The next three formants determine the identity of a vowel. The frequency of the first formant (F1) is negatively correlated with height; the frequency of the second formant (F2) is positively correlated with frontness. These relationships are due to changes in the volume of resonating cavities in the vocal tract when the articulators are in different positions. In addition, lip rounding lowers the frequency of all formants above the first (in particular, the third). The distance between these formants (i.e., formant dispersion) is also important. For instance, front vowels are characterized by larger formant dispersion (between F1 and F2) than back vowels.
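
The relationships described above between articulation and formants can be made concrete with a small sketch. The formant values below are rough, illustrative figures of the order typically reported for adult male speakers; they are assumptions for this example and are not data from the present review. The names VOWELS and formant_dispersion are likewise hypothetical.

```python
# Illustrative (assumed) formant values in Hz for three vowels.
# They only serve to show the direction of the relationships in the text:
# higher vowels have a lower F1, fronter vowels have a higher F2.
VOWELS = {
    "i": {"height": "high", "front": True, "F1": 270, "F2": 2290},   # as in "heed"
    "u": {"height": "high", "front": False, "F1": 300, "F2": 870},   # as in "who'd"
    "a": {"height": "low", "front": False, "F1": 730, "F2": 1090},   # low back vowel
}


def formant_dispersion(vowel):
    """Return the F2 - F1 distance in Hz for one vowel entry."""
    return vowel["F2"] - vowel["F1"]


def describe(symbol):
    """Build a one-line summary of a vowel's first two formants."""
    v = VOWELS[symbol]
    return (
        f"/{symbol}/: F1={v['F1']} Hz, F2={v['F2']} Hz, "
        f"F2-F1={formant_dispersion(v)} Hz"
    )


if __name__ == "__main__":
    for symbol in VOWELS:
        print(describe(symbol))
```

Under these assumed values, the front vowel /i/ shows a much larger F2–F1 dispersion than the back vowels, and the high vowels show a lower F1 than the low vowel, which is the pattern the paragraph describes.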

In articulating consonants, the airstream is obstructed in some way; consonants are defined based on the manner of this obstruction and the place where it occurs (Ladefoged & Johnson, 2010). Broadly speaking, consonants’ manner of articulation can be divided into obstruents (produced with a severe obstruction of airflow) and sonorants (produced without complete stoppage of, or turbulence in, the airflow; Reetz & Jongman, 2009). Obstruents include stops (in which airflow is entirely blocked and then released in a burst), fricatives (in which airflow is made turbulent by bringing two articulators together), and affricates (a combination of the two). Sonorants include nasals (in which airflow proceeds through the nasal cavity) and approximants (in which airflow is affected by bringing two articulators together, though not enough to create turbulence). In the case of obstruent consonants, they can also be distinguished by whether the vocal folds are brought close together enough to vibrate (i.e., voiced consonants) or not (i.e., voiceless consonants); sonorant consonants are typically voiced. Place of articulation refers to the location at which the airflow is affected, and especially relevant categories include bilabials (in which the lips are brought together), alveolars (in which the tongue tip is brought to the alveolar ridge), and velars (in which the back of the tongue is brought to the soft palate).

As with vowels, each of these articulatory features of consonants has acoustic consequences. Stops involve a period of silence (potentially with voicing) followed by a burst of sound as they are released (potentially with aspiration). Fricatives cause turbulent noise in higher frequencies; nasals involve formants similar to vowels, though much fainter, while approximants have stronger formant structures.

Consonants and vowels also affect one another through coarticulation. That is, very few words involve a single phoneme. The gestures involved in producing sequences of phonemes are quick and result in adjacent sounds influencing the articulation of one another. For instance, vowels can affect consonants’ formant transitions (an acoustic cue to the place of articulation). In addition, a vowel’s pitch can be affected by the consonant that precedes it (e.g., higher when preceded by a voiceless obstruent; Kingston & Diehl, 1994).

Mechanisms for associations between phonetic and semantic features

Next we turn to the main topic of this review: how these phonetic features come to be associated with particular kinds of stimuli. This discussion will draw heavily from the literature on crossmodal correspondences, which, broadly speaking, can be defined as “the mapping that observers expect to exist between two or more features or dimensions from different sensory modalities (such as lightness and loudness), that induce congruency effects in performance and often, but not always, also a phenomenological experience of similarity between such features” (Parise & Spence, 2013, p. 792; also reviewed in Parise, 2016; Spence, 2011). For instance, individuals more readily associate bright objects with high-pitched sounds than with low-pitched sounds (Marks, 1974), and are faster to respond to objects if their brightness is congruent with a simultaneously presented tone (Marks, 1987). Our grouping of proposed explanations owes much to Spence’s (2011) grouping of proposed mechanisms for such crossmodal correspondences.

As noted by Parise (2016), the term crossmodal correspondence has been used to refer to associations between simple unidimensional stimuli, consisting of a single basic feature (e.g., pitch of pure tones, brightness of light patches) as well as associations between more perceptually complex, multidimensional stimuli, composed of multiple features from different modalities (e.g., linguistic stimuli, which contain multiple acoustic and articulatory features). If one considers crossmodal correspondences to encompass all associations between stimuli in different modalities, then sound symbolic associations would certainly fall into this category (as in Parise & Spence, 2012; Spence, 2011). However, associations involving either simple or complex stimuli could potentially be distinct phenomena (see Parise, 2016). Thus, in the following review, we use the term crossmodal correspondence only to refer to associations between basic perceptual dimensions (e.g., brightness and pitch), which make up the majority of the term’s usage (Parise, 2016). This draws a distinction between sound symbolic associations and crossmodal correspondences. Because phonemes are multidimensional stimuli, sound symbolism would be considered a distinct, though related, phenomenon from crossmodal correspondences. Thus, while mechanisms invoked to explain crossmodal correspondences can be informative, we must be cautious when extending them to sound symbolic associations.

In the following sections we group proposed explanations for sound symbolic associations into themes; note that although we think this grouping is helpful, there may be instances in which a given explanation could fit under multiple themes. Additionally, while we have included the themes that we feel best represent the existing literature, we acknowledge the possibility that other mechanisms may exist.

Mechanism 1: Statistical co-occurrence

One mechanism proposed to explain associations between sensory dimensions is the reliability with which they co-occur in the environment (see Spence, 2011). That is, experiencing particular stimuli co-occurring in the world may lead to an internalization of these probabilities. This typically involves stimuli from a particular end of Dimension A tending to co-occur with stimuli from a particular end of Dimension B. One way of framing this is through the modification of Bayesian coupling priors, that is, the beliefs one has about the joint distribution of two sensory dimensions based on prior experience (Ernst, 2007).

Statistical co-occurrence has been proposed to explain the crossmodal correspondence between high (low) pitch and small (large) size (e.g., Gallace & Spence, 2006), due to the fact that smaller (larger) things tend to resonate at higher (lower) frequencies (see Spence, 2011). Another example is the association between high (low) auditory volume and large (small) size (e.g., Smith & Sera, 1992), which may arise from the fact that larger entities tend to emit louder sounds (see Spence, 2011). The plausibility of this mechanism has been demonstrated experimentally, by artificially creating co-occurrences between stimuli. Ernst (2007) presented participants with stimuli that systematically covaried in stiffness and brightness (e.g., for some participants, stiff objects were always bright). After several hours of exposure, participants demonstrated a crossmodal correspondence between these previously unrelated dimensions. Further evidence comes from a neuroimaging study that showed that after presenting participants with co-occurring audiovisual stimuli, the presentation of stimuli in one modality was associated with activity in both auditory and visual regions (Zangenehpour & Zatorre, 2010).
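
The logic of learning an association from co-occurrence can be illustrated with a toy simulation. This is only a sketch under stated assumptions: it is not the procedure or model used by Ernst (2007), the function names simulate_exposure and pearson are hypothetical, and the noise level and trial counts are arbitrary choices. The correlation between the two simulated dimensions stands in, very loosely, for how strongly an observer could come to expect them to go together.

```python
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_exposure(n_trials, coupled, seed=0, noise=0.2):
    """Generate paired (stiffness, brightness) values on arbitrary scales.

    If coupled is True, brightness tracks stiffness plus Gaussian noise
    (an assumed, artificial covariation). Otherwise the two dimensions
    are drawn independently.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    stiffness, brightness = [], []
    for _ in range(n_trials):
        s = rng.uniform(0.0, 1.0)
        if coupled:
            b = s + rng.gauss(0.0, noise)
        else:
            b = rng.uniform(0.0, 1.0)
        stiffness.append(s)
        brightness.append(b)
    return stiffness, brightness


if __name__ == "__main__":
    for coupled in (False, True):
        s, b = simulate_exposure(2000, coupled)
        print(f"coupled={coupled}: r = {pearson(s, b):.2f}")
```

In the uncoupled condition the estimated correlation stays near zero, whereas in the coupled condition it is strongly positive, which is the kind of environmental regularity an observer could internalize as a coupling prior.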

This mechanism has been used to explain several sound symbolic associations. In these proposals, some component feature of the phonemes is claimed to co-occur with related stimuli in the environment. The most obvious application is to the mil/mal effect (see Spence, 2011). As mentioned, small (large) things tend to resonate at a high (low) frequency. Thus, front vowels may be associated with smaller objects because of front vowels’ higher frequency F2. Similarly, the association between high vowels and smaller objects may be due to high vowels’ higher pitch (Ohala & Eukel, 1987).Footnote 12 A similar explanation has also been applied to the association between front (back) vowels and short (long) distances (Johansson & Zlatev, 2013; Rabaglia et al., 2016; Tanz, 1971). Johansson and Zlatev (2013) noted that lower frequencies are able to travel longer distances and are therefore more likely to be heard from far away. Thus, we often experience more distant entities co-occurring with lower frequency sounds; this could potentially contribute to the association between back vowels (which have a lower F2) and long distance.
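
The claim that smaller things tend to resonate at higher frequencies can be illustrated with an idealized case. The sketch below uses the standard lowest resonance of a uniform tube closed at one end, f = c / (4L); treating an everyday object as such a tube is a simplifying assumption made only for illustration, and the function name quarter_wave_resonance_hz is hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for air at about 20 degrees C


def quarter_wave_resonance_hz(length_m):
    """Lowest resonance of an idealized uniform tube closed at one end."""
    if length_m <= 0:
        raise ValueError("length_m must be positive")
    return SPEED_OF_SOUND_M_PER_S / (4.0 * length_m)


if __name__ == "__main__":
    # Shorter (smaller) tubes resonate at higher frequencies.
    for length in (0.05, 0.10, 0.20, 0.40):
        print(f"{length:.2f} m -> {quarter_wave_resonance_hz(length):.0f} Hz")
```

Halving the length of the tube doubles its lowest resonant frequency, which is one simple physical reason why size and frequency could co-occur reliably in the environment.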

The mechanism of statistical co-occurrence has also been applied to internally experienced co-occurrences. For instance, Rummer, Schweppe, Schlegelmilch, and Grice (2014; also see Zajonc, Murphy, & Inglehart, 1989) proposed that some phonemes might develop associations with particular emotions due to an overlap between the muscles used for articulation and those used for emotional expression. Previous research suggested that simply adopting the facial posture of an emotion can facilitate experience of that emotion (i.e., the facial feedback hypothesis; Strack, Martin, & Stepper, 1988). Rummer et al. (2014) noted that articulating an /i/ involves contracting the zygomaticus major muscle which is also involved in smiling; conversely, articulating an /o/ (as in the German hohe) involves contracting the orbicularis oris muscle, which blocks smiling. They proposed that over time, the increased positive affect felt while articulating /i/ (due to facial feedback) will lead to that phoneme becoming associated with positive affect. Indeed, they showed that participants found cartoons funnier while articulating an /i/ as opposed to an /o/. However, they did not directly examine facial feedback as a mechanism. In addition, the validity of the facial feedback hypothesis has recently been called into question by failures to replicate Strack et al.’s original finding (Wagenmakers et al., 2016). Nevertheless, the notion that co-occurrences of phonemes and internal sensations can lead to sound symbolic associations is a possibility that invites further evaluation.

One final statistical co-occurrence account is worth mentioning, despite the fact that it is not presented as an account of sound symbolism. Gordon and Heath (1998) reviewed findings that several vowel shifts (systematic changes in how vowels are articulated in a population) seem to be moderated by gender, with females leading raising and fronting changes and males leading lowering and backing changes. The term raising, for instance, refers to a given vowel being articulated with the tongue in a higher position than previously. They theorized that the different vocal tracts of women and men (contributing to women naturally having larger F2–F1 dispersion) might create an association between females and high-front vowel space (which has larger F2–F1 dispersion) and males and low-back vowel space. Females and males might then be drawn to gender stereotypical vowel space, leading to gender moderated vowel changes.Footnote 13 Although the authors do not mention it, there is some evidence of a sound symbolic association between high-front vowels (low-back vowels) and femininity (masculinity; Greenberg & Jenkins, 1966; Tarte, 1982; Wu, Klink, & Guo, 2013; cf. Sidhu & Pexman, 2015). One might speculate that the natural co-occurrence between sex and formant dispersion contributes to this association.

There is a good deal of work that needs to be done to demonstrate that statistical co-occurrence is a viable mechanism for sound symbolism. The experimental evidence demonstrating that it can indeed create crossmodal correspondences (e.g., Ernst, 2007) makes it a promising mechanism. However, this evidence has been provided in the context of simple sensory dimensions; what remains to be seen is if such correspondences can then contribute to sound symbolic associations. That is, can a co-occurrence-based association between a component feature of a phoneme and certain stimuli create a sound symbolic association for that phoneme as a whole? One way to examine this question would be to present participants with isolated phonetic components (e.g., high vs. low frequencies) co-occurring with perceptual features (e.g., rough vs. smooth textures). Experimenters could then examine if this co-occurrence led to a sound symbolic association between phonemes containing said phonetic components (e.g., phonemes with a high vs. low frequency F2) and targets containing said perceptual feature (e.g., rough vs. smooth textures). Another approach would be to interfere with existing associations by presenting stimuli that contradict them (e.g., large objects making high-pitched noises) and then examining the effect on sound symbolic associations.

An important feature of this mechanism is that it requires experience, and thus assumes that at least some sound symbolic associations are not innate (though, as will be discussed later, there are theories regarding evolved innate sensitivities to, and/or predispositions to acquire associations based on, certain statistical co-occurrences). As such, we might not expect associations that depend on statistical co-occurrences to be present from birth. Although Peña et al. (2011) found evidence for the mil/mal effect in four-month-old infants, it is possible that even these very young infants had already begun to gather statistical information about the environment (see Kirkham, Slemmer, & Johnson, 2002). Testing infants at an even younger age could allow us to investigate if less exposure to statistical co-occurrences results in a weaker sound symbolism effect (or the absence of an effect altogether). Of course, any differences between younger and older infants could simply be attributable to differences in cognitive development. Thus, another approach could be to test infants of the same age for associations based on co-occurrences that they are more or less likely to have experienced. For instance, young infants may have more experience of certain frequencies co-occurring with different sizes than with different distances; the effects of these differences in experience could be tested. Also, we would only expect associations of this kind to be universal if they are based on a universal co-occurrence. While natural co-occurrences reflecting physical laws (e.g., between pitch and size) may be relatively universal, it might be possible to find others that vary by location. For instance, some have speculated that advertising can create statistical co-occurrences that are relatively local, and that these potentially contribute to cultural variations in some crossmodal correspondences (e.g., Bremner et al., 2013). It could be informative to examine instances in which populations differ in culturally based statistical co-occurrences, and to compare their demonstrated associations. As mentioned by Wan et al. (2014), one might also consider effects of geographical differences (e.g., in landscape or vegetation) on statistical co-occurrences.

Mechanism 2: Shared properties

Another broad class of accounts includes proposals that phonemes and associated stimuli may share certain properties, despite being in different modalities. Again, these properties in phonemes would likely derive from one or more of their component features. Individuals may then form associations based on these shared properties. These explanations can be divided into those involving low-level properties (i.e., perceptual) and those involving high-level properties (i.e., conceptual, affective, or linguistic).

Low-level properties

Some perceptual features may be experienced in multiple modalities. For instance, one can experience size in both visual and tactile modalities. One way of explaining sound symbolic associations is to suggest that they involve an experience of the same perceptual feature in both phonemes and associated stimuli. For instance, Sapir (1929; see also Jesperson, 1922) theorized that participants might have associated high vowels with small shapes in part because for high vowels the oral cavity is smaller during articulation. Thus, both phonemes and shapes had the property of smallness. Similarly, Johansson and Zlatev (2013) proposed this as one potential explanation for the association between high-front vowels and small distances. Many have also pointed out that the vowels associated with roundness (i.e., /u/ and /oʊ/, as in hoed) involve a rounded articulation (e.g., French, 1977; Ikegami & Zlatev, 2007; also suggested in Ramachandran & Hubbard, 2001). Note that these accounts involve some amount of abstraction or other mechanism by which features can be united across modalities, and do not necessarily imply that phonemes and stimuli possess identical perceptual features. Nevertheless, they do imply a certain amount of imitation between phonemes and associated features.

Others have proposed similar, though less direct, accounts. For instance, Saji et al. (2013) theorized that the association between voiced (voiceless) consonants and slow (fast) actions has to do with the shared property of duration. That is, in voiced consonants, the vocal cords vibrate prior to stop release, and thus for a longer time than in voiceless consonants. This longer duration might unite them with slow movements, which take a longer time to complete. Ramachandran and Hubbard (2001) also speculated that the maluma/takete effect might owe to an abruptness, or “sharp inflection” (p. 19) in both voiceless stops and sharp shapes. Indeed, voiceless stops involve a complete absence followed by an abrupt burst of sound; similarly, the outlines of sharp shapes involve abrupt changes in direction.

One final proposal is that a phoneme may be associated with body parts highlighted in its articulation (originally suggested by Greenberg, 1978). This account stands out from those discussed elsewhere in this review in that associations purported to derive from it have not been demonstrated experimentally but rather inferred from comparisons across languages. For instance, Urban (2011) found that across a sample of languages, words for nose and lips were more likely to contain nasals and labial stops, respectively, than a set of control words. In addition, Blasi et al. (2016) found that words for tongue tended to include the phoneme /l/ (for which the airstream proceeds around the sides of the tongue), while words for nose tended to include the nasal /n/. Importantly, the patterns documented by Blasi et al. did not seem to be a result of shared etymologies or areal dispersion; thus, the authors speculated that they could potentially have derived from sound symbolic associations (or a related phenomenon). If the association between phonemes and body parts that these findings seem to hint at exists, it would be much more direct and limited than other associations discussed in this review. Future behavioral studies might examine if, beyond these quasi-imitative relationships, phonemes are also associated with stimuli that are related to the relevant body part (e.g., nasals and objects with salient odors).Footnote 14 Such associations could ostensibly derive from the shared property of a salient body part.

High-level properties

Others have proposed that the shared properties that produce sound symbolism are more conceptual in nature. For instance, L. Walker, Walker, and Francis (2012) suggested that crossmodal correspondences might emerge due to shared connotative meaning (i.e., what the stimuli suggest, imply, or evoke) among stimuli. Note that this is distinct from what the stimuli denote (i.e., what they directly represent). That is, a bright object denotes visual brightness, but this is distinct from a connotation of brightness, which can apply across modalities. For example, tastes and melodies can seem “bright.”

When we consider the fact that these suprasensory properties can be shared by stimuli across modalities, it becomes apparent that shared connotations might explain a wide variety of observed crossmodal correspondences. As an example, consider that high-pitched tones have the connotations of being brighter, sharper, and faster than low-pitched tones (L. Walker et al., 2012). These connotations of high-pitched tones might explain the association between high pitches and small stimuli (which also share these connotations). Moreover, P. Walker and Walker (2012; see also Karwoski, Odbert, & Osgood, 1942) proposed that there are a set of aligned connotations, such that a stimulus possessing one of them will also tend to possess the others. For instance, stimuli with the connotation of brightness will also tend to have connotations of sharpness, smallness, and quickness (L. Walker et al., 2012).

This framework may extend to sound symbolic associations (see P. Walker, 2016). That is, some sound symbolic associations might arise due to phonemes and stimuli sharing connotations. The connotations of phonemes would derive from the connotations of their component features. For instance, high-front vowels, which are high in frequency, have the same connotations as high frequency pure tones (e.g., brighter, sharper, faster). This might explain their association with small stimuli, which, as reviewed above, also share these connotations. In a test of this proposal, French (1977) hypothesized, and then investigated, a sound symbolic association between high-front vowels and coldness, based on a similarity in connotation between coldness and smallness. Indeed, his participants reported that nonwords containing the vowel /i/ were the “coldest” while those containing /ɑ/ (as in hawed) were the “warmest.”

Similar explanations have also been applied to shape sound symbolism. Bozzi and Flores D’Arcais (1967) asked participants to rate compatibility between nonwords and shapes, and also to rate both kinds of stimuli on semantic differential scales (i.e., Likert scales anchored by polar adjectives, used to measure connotations). They found that compatible nonwords and shapes tended to have similar connotations (e.g., sharp nonwords and shapes were both rated as being fast, tense, and rough). Gallace, Boschin, and Spence (2011) made a similar proposal to explain their finding that round and sharp nonwords were differentially associated with certain tastes. They found that these associations were predicted by similar ratings of nonwords and tastes on connotative dimensions such as tenseness or activity.

A limitation of this account is that it raises the question of how phoneme features come to be associated with their connotations. There are also several conceptual clarifications required. For instance, in cases of several shared connotations, is one primary in creating the association? In addition, there is a need to clarify the distinction between a given phoneme’s connotations and its sound symbolic associations. That is, when participants rate a given vowel as belonging to the “small” end of a large/small semantic differential scale, does that describe a connotation, an associated perceptual (i.e., denotative) feature, or both? Should connotations themselves be considered instances of sound symbolism? The exact connotative dimensions involved also require further elaboration. Much of Walker’s work focuses on a core set of connotations including light/heavy, sharp/blunt, quick/slow, bright/dark, and small/large (e.g., P. Walker & Walker, 2012). Others have focused on connotations that comprise the three factors of connotative meaning discovered by Osgood, Suci, and Tannenbaum (1957), namely, evaluation (e.g., good/bad), potency (e.g., strong/weak), and activity (e.g., active/passive; e.g., Miron, 1961; Tarte, 1982).

It has also been proposed that some crossmodal correspondences arise via transitivity (e.g., Deroy, Crisinel, & Spence, 2013). That is, if there exists an association between Dimensions A-B, and B-C, this might create an association between Dimensions A-C. French (1977) made a similar suggestion for sound symbolic associations. He theorized that phonemes are only directly related with a small number of stimulus dimensions, and that these mediate relationships with other stimulus dimensions. For instance, high-front vowels may be directly associated with smallness, which mediates a relationship between high-front vowels and other properties related to smallness (e.g., thinness, lightness, quickness). A clarification to make going forward is whether these mediated effects involve relationships among denotative (as in Deroy et al., 2013) or connotative dimensions, or both (see Fig. 4).

Fig. 4 Different ways in which denotative and/or connotative dimensions may result in mediated relationships. (a) The sort of mediation discussed by Deroy et al. (2013), in which the perceptual dimension of smallness might mediate a relationship between high/front vowels and the perceptual dimension of quickness via transitivity. (b) A relationship based on P. Walker and Walker (2012; which might be considered a mediated one) in which the connotation of smallness might mediate a relationship between high/front vowels and the perceptual dimension of quickness. (c) An example of mediation involving both denotative and connotative dimensions, in which high/front vowels are associated with the perceptual dimension of quickness because of the phonemes’ association with the perceptual dimension of smallness, and that dimension’s association with the perceptual dimension of quickness (via a shared connotation).

A related proposal is that stimuli may be associated by virtue of having the same impression on a person. That is, instead of being united through a shared conceptual property, stimuli may be associated because they have a similar effect on a person’s level of arousal or affect (Spence, 2011). Indeed there is some evidence of hedonic value (Velasco, Woods, Deroy, & Spence, 2015) and associated mood (Cowles, 1935) underlying crossmodal correspondences. This account has not yet been examined in the context of sound symbolism. However, as is discussed elsewhere in this review, there has been some work proposing a link between phonemes and particular affective states (e.g., Nielsen & Rendall, 2011, 2013; Rummer et al., 2014).

Lastly, some have theorized that crossmodal correspondences arise when the two dimensions share the same labels (e.g., Martino & Marks, 1999). For instance, the correspondence between pitch and elevation may derive from the use of the labels high and low for both. Evidence for this has come from the fact that speakers of languages using different labels for pitch (e.g., high/low in Dutch; thin/thick in Farsi) show different crossmodal correspondences (e.g., height and pitch in Dutch speakers; height and thickness in Farsi speakers; Dolscheid, Shayan, Majid, & Casasanto, 2013). Although this has not yet been proposed for sound symbolic associations, there are some relevant observations. For instance, front and back vowels are sometimes referred to as bright and dark vowels, respectively (e.g., Anderson, 1985). This corresponds to the visual stimuli with which either group of phonemes is associated (Newman, 1933). However, this example is only intended to serve as an illustration; at the moment, the relevance of this account to sound symbolism is purely speculative. In addition, a question related to this general explanation is one of directionality: do shared linguistic labels create associations, or vice versa, or both? Dolscheid et al. (2013) demonstrated that teaching Dutch speakers to refer to pitch in terms of thickness led to effects that resembled those of Farsi speakers. Speaking to the converse, Marks (2013) discussed the notion that crossmodal correspondences might contribute to the creation of linguistic metaphors, and the use of a term from one sensory modality to describe sensations in another (see also Shayan, Ozturk, & Sicoli, 2011).

An important step in testing theories based on shared properties will be demonstrating the involvement of the hypothesized shared properties (see Table 3 for a summary of properties). With regard to conceptual properties, a potential starting point could be to examine ratings on semantic differential scales for phonemes and associated stimuli, to test whether they indeed share connotations. The next step could be to verify activation of the shared conceptual properties, potentially by examining if they become more accessible following sound symbolic matching. For affect-based associations, one could examine whether phonemes and associated stimuli elicit comparable changes in a person’s self-reported mood. Deroy et al. (2013) theorized that mediated relationships (involving denotative dimensions) should be weaker than direct ones. This provides a potential way of detecting such relationships. In addition, several of these theories may depend on developmental milestones (e.g., the acquisition of language) and thus make different predictions for sound symbolic effects when individuals are tested before and after these milestones are reached. Lastly, associations based on shared properties would be expected to vary cross-culturally to the extent that stimuli differ in their associated properties across cultures.
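As a minimal sketch of the first step suggested above, the following Python snippet compares connotative profiles, assuming that each nonword and each target stimulus has a mean rating on the same set of semantic differential scales. The ratings, the stimulus names, and the `profile_distance` and `closest_match` helpers are hypothetical and serve only to illustrate the logic; they are not data or methods from any study cited here.

```python
# Hypothetical mean ratings on 7-point semantic differential scales
# (1 = first pole, 7 = second pole). All values are invented for illustration.
SCALES = ["small/large", "sharp/blunt", "quick/slow", "bright/dark", "light/heavy"]

RATINGS = {
    "nonword_with_i": [2.1, 2.5, 2.3, 2.0, 2.4],
    "nonword_with_a": [5.6, 5.0, 5.2, 5.4, 5.5],
    "small_shape": [1.8, 3.0, 2.6, 2.7, 2.2],
    "large_shape": [6.2, 4.6, 5.1, 4.9, 5.9],
}


def profile_distance(a, b):
    """Mean absolute difference between two rating profiles (0 = identical)."""
    if len(a) != len(b):
        raise ValueError("profiles must be rated on the same scales")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def closest_match(item, candidates):
    """Return the candidate whose connotative profile is nearest to the item's."""
    return min(
        candidates,
        key=lambda c: profile_distance(RATINGS[item], RATINGS[c]),
    )


if __name__ == "__main__":
    for nonword in ("nonword_with_i", "nonword_with_a"):
        match = closest_match(nonword, ["small_shape", "large_shape"])
        print(nonword, "->", match)
```

On a shared-connotation account, sound symbolically matched pairs should yield smaller profile distances than mismatched pairs. Mean absolute difference is only one possible choice here; other similarity measures (e.g., correlations across scales) could be substituted.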

An outstanding question that is important for these accounts is whether participants only recognize shared properties and form associations when asked to do so during a task. For instance, when asked to rate the similarity between nonwords and tastes, participants might very well consider properties that the two have in common. However, this does not mean that such associations exist outside of that task context. One could address this issue by examining whether associations are detectable using implicit measures (e.g., priming) that do not force participants to consider the relationships between stimuli in an overt way. Indeed, P. Walker and Walker (2012) demonstrated that a crossmodal correspondence based on connotation could affect responses on an implicit task.

Mechanism 3: Neural factors

The third mechanism includes proposals that sound symbolic associations arise because of structural properties of the brain, or the ways in which information is processed in the brain. To be clear, this is not to imply that other mechanisms do not rely on neural factors. The difference here is that the following theories propose neural factors to be the proximal causes of the associations.

A theory described in the crossmodal correspondence literature suggests that there may be a common neural coding mechanism for stimulus magnitude, regardless of modality. For instance, Stevens (1957) noted that increases in stimulus intensity result in higher neuronal firing rates. In a similar vein, Walsh (2003) proposed that a system in the inferior parietal cortex is responsible for coding magnitude, again across modalities. Thus, for stimulus dimensions that can be quantified in terms of more or less (e.g., more or less loud, more or less bright), this common neural coding mechanism may lead to an association between the “more” and the “less” ends of each dimension (see Spence, 2011). For instance, the correspondence between high (low) volume and bright (dim) objects (Marks, 1987) may have to do with the fact that they are both high (low) in magnitude (see Spence, 2011). So far this has not been extended to sound symbolic associations. However, it may be a viable mechanism when involving phonetic features that can be characterized in terms of magnitude.Footnote 15

Another relevant theory is based on a hypothesized relationship between the brain regions associated with grasping and with articulation. Some have proposed that the articulatory system originated from a neural system responsible for grasping food with the hands and opening the mouth to receive it, resulting in a link between articulation and grasping (see Gentilucci & Corballis, 2006). Vainio et al. (2013) demonstrated that participants were faster to make a precision grip (i.e., thumb and forefinger) while articulating the phonemes /t/ or /i/, and faster to make a power grip (i.e., whole hand) while articulating the phonemes /k/ or /ɑ/. Note that the articulation of each set of phonemes reflects the performance of either kind of grip.Footnote 16 Vainio et al. theorized that the mil/mal effect might emerge from these associations (see also Gentilucci & Campione, 2011). For instance, seeing a small shape may elicit the simulation of a precision grip (Tucker & Ellis, 2001), which would then also activate a representation of the phoneme /i/’s articulation. It should be noted, however, that a follow-up study by this group found participants were no faster to articulate an /i/ (/ɑ/) in response to a small vs. large (large vs. small) target (Vainio et al., 2016).Footnote 17 Thus, there is still a need for more direct evidence of the proposed links.

An ideal way to examine these neural theories would be to use neuroimaging. For instance, it would be informative to test for activation in the hypothesized magnitude-coding region when processing phonemes and related stimuli. Likewise, testing for activation in motor regions associated with articulation, in response to graspable objects, could also provide insight into articulation/grasping as a neural mechanism. There is recent evidence for the converse relationship: increased activity in motor regions associated with performing a precision or power grip, while articulating /ti/ or /kɑ/, respectively (Komeilipoor, Tiainen, Tiippana, Vainio, & Vainio, 2016). These mechanisms should be largely universal, and thus the neural accounts predict that sound symbolic associations should not be modulated by culture.

Mechanism 4: Species-general associations

Some have explained sound symbolism as based on species-general, inherited associations. While other mechanisms may involve evolved processes, the following theories propose that the associations themselves (as opposed to the processes leading to those associations) are a result of evolution.

One of the most widely cited explanations for the mil/mal effect is Ohala’s (1994) frequency code theory. This is based on the observation that many nonhuman species use low-pitched vocalizations when attempting to appear threatening, and high-pitched vocalizations when attempting to appear submissive or nonthreatening (Morton, 1977). Ohala proposes that these vocalizations appeal to, and are indicative of, an innate cross-species association between high (low) pitches and small (large) vocalizers (viz. the frequency code). Thus, when an animal wants to appear threatening, it uses a low-pitched vocalization in order to give off an impression of largeness. Ohala theorizes that humans’ association between frequency (e.g., in vowels’ fundamental frequency and F2) and size is due to this same frequency code. At a fundamental level, this explanation is based on co-occurrence (i.e., between pitch and size); however, it is argued that sensitivity to this co-occurrence has become innate. As evidence for this innateness, Ohala points to the fact that male voices lower at puberty: precisely when they will need to use aggressive displays (i.e., low-pitched vocalizations) to compete for a mate. He argues that such an elaborate anatomical evolution would only have been worthwhile if it appealed to an innate predisposition in listeners. Nevertheless, Ohala concedes that the frequency code may require some postnatal experience of relevant environmental stimuli to be fully developed. Thus, one might regard the frequency code hypothesis as an innate predisposition to develop an association, rather than as an innate association per se.

It is important to note that while many studies have found a relationship between fundamental frequency and body size in several species (e.g., Bowling et al., 2017; Charlton & Reby, 2016; Gingras, Boeckle, Herbst, & Fitch, 2013; Hauser, 1993; Wallschläger, 1980), others have not (e.g., Patel, Mulder, & Cardoso, 2010; Rendall, Kollias, Ney, & Lloyd, 2005; Sullivan, 1984). As noted by Bowling et al. (2017), a relevant factor seems to be the range in body sizes studied, with more equivocal effects when studying the relationships within a given category than across categories (e.g., within a species vs. across species; cf. Davies & Halliday, 1978; Evans, Neave, & Wakelin, 2006). In response to these equivocal findings, Fitch (1997) presented results from research with rhesus macaques, demonstrating that formant dispersion may be a better indicator of body size than fundamental frequency. It is beyond the scope of this review to adjudicate between these two cues. However, to the extent that formant dispersion is a more reliable cue to size than fundamental frequency, the frequency code hypothesis may require reframing. It is relevant to note that the mil/mal effect can be characterized in terms of formant dispersion, which is larger for front vowels than back vowels, and decreases from high-front vowels to low-front vowels.Footnote 18
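To make this characterization concrete, the sketch below computes a simplified dispersion measure, F2 minus F1, for a handful of vowels. Two assumptions should be noted. First, the formant values are rounded, illustrative figures of the kind typically reported for adult male speakers of American English, not measurements from the studies cited here. Second, dispersion is reduced to the difference between the first two formants, whereas Fitch's (1997) measure is based on the spacing across a larger set of formants. The `dispersion` helper is hypothetical.

```python
# Approximate first and second formant frequencies in Hz.
# Illustrative values only; actual formants vary by speaker and dialect.
FORMANTS_HZ = {
    "i": (270, 2290),  # high-front
    "ɛ": (530, 1840),  # mid-front
    "æ": (660, 1720),  # low-front
    "u": (300, 870),   # high-back
    "ɑ": (730, 1090),  # low-back
}


def dispersion(vowel):
    """Simplified formant dispersion: F2 minus F1, in Hz."""
    f1, f2 = FORMANTS_HZ[vowel]
    return f2 - f1


if __name__ == "__main__":
    # List vowels from largest to smallest dispersion.
    for vowel in sorted(FORMANTS_HZ, key=dispersion, reverse=True):
        print(f"/{vowel}/: F2 - F1 = {dispersion(vowel)} Hz")
```

With these illustrative values, the front vowels have larger dispersions than the back vowels, and dispersion shrinks from /i/ to /æ/, mirroring the pattern described above.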

In a similar vein, Nielsen and Rendall (2011, 2013) note that many nonhuman species use harsh punctuated sounds in situations of hostility and high arousal; and smoother, more harmonic sounds in situations of positive affiliation and low arousal.Footnote 19 Notably, the meanings of these calls do not need to be learned by conspecifics, suggesting an innate sensitivity to their meanings (Owren & Rendall, 2001). There is also evidence of this in humans: infants use harsh (smooth) sounds in situations of distress (contentment); adults use harsh and punctuated voicing patterns in periods of high stress (Rendall, 2003). Nielsen and Rendall theorize that the evolved semantic-affective associates of these two types of sounds may extend to phonemes with similar acoustic properties: namely obstruents and sonorants. For instance, swear words (which can be considered threatening stimuli) contain a relatively large proportion of obstruents (Van Lancker & Cummings, 1999). This could contribute to the maluma/takete effect, and to associations between stop phonemes (sonorant phonemes) and sharp (round) shapes. Such an account would depend on sharp shapes seeming more dangerous than round shapes, and indeed there is some speculation in this regard (Bar & Neta, 2006).

A potential limitation of the claims regarding evolved and/or innate traits is the challenge of generating testable hypotheses from these accounts. One approach would be to examine whether the relevant associations are present universally, and from a very young age. While there is evidence for sensitivity to the mil/mal effect (Peña et al., 2011) and the maluma/takete effect (Ozturk et al., 2013) in four-month-old infants, it is notable that two other studies have failed to find evidence of infant sensitivity to the maluma/takete effect at that age (Fort et al., 2014; Pejovic & Molnar, 2016). In addition, one might debate whether observing an effect at four months of age is sufficient to infer its innateness. Thus, the evidence for innateness is not overwhelming at present. At least one crossmodal correspondence has been demonstrated in infants between 20 and 30 days old (Lewkowicz & Turkewitz, 1980), and it would be informative for future studies to examine sensitivity to sound symbolism at a similar age. Another approach could be a comparative one, examining if non-humans demonstrate sound symbolism. Ludwig, Adachi, and Matsuzawa (2011) reported a crossmodal correspondence between pitch and brightness in chimpanzees, suggesting that such an investigation might be worthwhile.

Mechanism 5: Language patterns

One final group of theories proposes that sound symbolic associations emerge due to patterns in language. This is, of course, related to the first mechanism discussed (i.e., statistical co-occurrence); the important distinction is that, as opposed to observing co-occurrences in the environment, the theories to be discussed propose sound symbolic associations might derive from co-occurrences between phonological and semantic features in language. An example of this would be associations derived from phonesthemes: phoneme clusters that tend to occur in words with similar meanings (e.g., gl- in words relating to light, such as glint, glisten, glow; see Bergen, 2004). After repeated exposure, individuals might come to associate /gl/ with brightness, for instance. Indeed there is evidence of individuals using their knowledge of phonesthemes when asked to generate novel words (e.g., using the onset gl- when asked to create a nonword related to brightness; Magnus, 2000). Bolinger (1950) even suggested that phonesthemes may “attract” the meanings of semantically unrelated words that contain the relevant phoneme clusters, leading to semantic shifts towards the phonesthemic meaning.

Such proposals are typically presented as an explanation for a distinct subset of sound symbolism, and not as an explanation for sound symbolism as a whole (e.g., Hinton et al., 1994). Indeed, our operational definition would consider associations arising in this manner to be a separate phenomenon altogether. Nevertheless, some have proposed that language patterns can explain all of sound symbolism (e.g., Taylor, 1963). This kind of proposal has, however, not been supported by large-scale corpus analyses. For instance, a study by Monaghan, Mattock, and Walker (2012) did not find overwhelming evidence that certain phonemes tend to occur in words with meanings related to roundness or sharpness. This would seem to suggest that the maluma/takete effect cannot be explained by language patterns. We described some other instances of indirect iconicity in the lexicon earlier in this paper, but the fact that many of these instances emerge across large samples of languages leads to the conclusion that they are the result of sound symbolism as opposed to the cause of it (e.g., Blasi et al., 2016; Wichmann, Holman, & Brown, 2010).

There is, however, support for a weaker version of this claim, namely, that language patterns modify and constrain sound symbolism. For instance, Imai and Kita (2014) proposed that young infants are sensitive to a wide variety of sound symbolic associations, but that associations not supported by the phonology of an infant’s language, or inventory of sound symbolic words, tend to fade away as the infant develops. This proposal is supported by evidence of a greater sensitivity to foreign sound symbolic words in children as compared to adults (Kantartzis, 2011). There is also evidence of a language’s phonology moderating sound symbolic associations for speakers of that language. A basic example of this is the finding that individuals perceptually assimilate phonemes that do not appear in their language into ones that do (e.g., Tyler, Best, Faber, & Levitt, 2014; see Best, 1995). Sapir (1929) theorized that this may have been the reason English-speaking participants did not rate nonwords containing /e/ (as in the French été) as being as small as expected. Because this phoneme does not appear in English, participants may have projected onto it the qualities of the diphthong /eɪ/ (as in hay), which begins lower than /e/ for many speakers (Ladefoged & Johnson, 2010). Another example comes from a study by Saji et al. (2013), who found that high-back vowels were associated with slowness by Japanese speakers but with quickness by English speakers. They theorized that this was because this vowel is rounded in English but not in Japanese. Lastly, there is recent evidence that the distributional properties of phonemes in a given language can impact their tendency to show sound symbolic associations for speakers of that language (i.e., less frequent phonemes may be more likely to have sound symbolic associations; Westbury, Hollis, Sidhu, & Pexman, 2017).

Contextual factors

One final topic that deserves mention is the role of various contextual factors in sound symbolism. As in the weaker version of the language patterns theory outlined above, contextual factors likely moderate the expression of sound symbolic associations rather than create them. For instance, some have theorized that forced-choice tasks may lead participants to become aware of shared properties among stimuli that they would not have considered otherwise (e.g., Bentley & Varon, 1933; French, 1977). In addition, some authors have speculated that pairing sounds with congruent meanings in real language may serve to highlight potential associations (e.g., Waugh, 1993). Dingemanse et al. (2016) point out that in some cases it is necessary to know the definition of a word in order to appreciate the sound symbolic association between its phonemes and meaning. That is, would one appreciate the sound symbolism of goro without knowing that its definition related to heaviness? Tsur (2006) characterizes sound symbolic associations as “meaning potentials” (p. 917) that can be actualized by associating phonemes with meanings in language. As noted by Werner and Kaplan (1963), sounds demonstrate plurisignificance, in that they are able to be associated with multiple different dimensions. Tsur suggests that the semantic context in which words appear might highlight some potential associations over others. Lastly, prosody has been theorized to direct individuals towards particular sound symbolic associations (Dingemanse et al., 2016).

Another potential factor to consider is cultural variation in conceptualizations of the relationship between sound and meaning. Nuckolls (1999) reviewed case studies of a number of societies in which language sounds are seen as intimately related to the external world. For instance, the Navajo view air as a source of life, and manipulating that air in the service of creating linguistic sound as one way of making “contact with the ultimate source of life” (Reichard, 1944, 1950; Witherspoon, 1977, p. 61). As another example, different states of water (e.g., swirling, splashing) represent important landmarks for the Kaluli people of Papua New Guinea (Feld, 1982). Their language contains a number of ideophones that depict these different states of water, representing a fascinating interplay of linguistic sound and geography. This interplay is exemplified in their poetry, which depicts waterways in both sound and structure. Indeed, some have speculated that variations in ideophone usage may result from cultural variation in cognitive styles (e.g., Werner & Kaplan, 1963). One wonders if cultural factors may moderate the expression of sound symbolic associations.

Outstanding issues and future directions

We have outlined five mechanisms that have been proposed to explain sound symbolic associations: the features of the phonemes co-occurring with stimuli in the environment, shared properties among phoneme features and stimuli, overlapping neural processes, associations created by evolution, and patterns extracted from language. There are a number of outstanding issues in the literature, and it is to these that we now turn our attention.

Phonetic features

So far in this review we have been equivocal on whether sound symbolism involves acoustic or articulatory features. In fact, there is no need to attribute the phenomenon to one or the other; most theorists allow for both to potentially play a role (e.g., Newman, 1933; Nuckolls, 1999; Ramachandran & Hubbard, 2001; Sapir, 1929; Shinohara & Kawahara, 2010; Westermann, 1927). This is commensurate with the notion of phonemes as bundles of acoustic and articulatory features, either/both of which can be associated with targets in sound symbolism (e.g., Tsur, 2006).Footnote 20 Indeed, there is evidence of both playing a role. For instance, Tarte’s (1982) research comparing vowels to pure tones showed that vowels are associated with some stimuli in a way that would be expected if pairings were based on vowels’ component frequencies. Eberhardt’s (1940) discovery of sound symbolism in profoundly deaf individuals suggested that articulatory features in isolation can contribute to sound symbolism (though admittedly in a specific population; cf. Johnson, Suzuki, & Olds, 1964).

While it seems reasonable to assume that both articulatory and acoustic features can play a role in sound symbolism, a potential topic for future research could be examining their relative contributions to particular effects. It may be that some associations are more dependent on articulatory features while others are more dependent on acoustic features. Of course, because acoustic and articulatory features are often inextricably linked (i.e., changes in articulation often result in changes in acoustics), this is an extremely difficult question to address. Even presenting linguistic stimuli visually for silent reading, or auditorily for passive listening, would not be sufficient to isolate acoustic features, as studies have shown that these can both lead to covert articulations (e.g., Fadiga, Craighero, Buccino, & Rizzolatti, 2002; Watkins, Strafella, & Paus, 2003). Moreover, as pointed out by Eberhardt (1940), even acoustic features such as frequency can have tactile properties (i.e., felt vibrations). Nevertheless, because mechanisms of association are often based on particular features (e.g., the statistical co-occurrence of acoustic frequency and size), pinpointing the features involved could help adjudicate between potential mechanisms’ roles in a certain effect. Future research might examine this by manipulating the component frequencies of vowels while maintaining their identity, or interfering with covert articulations, and then observing the effect on specific associations. In addition, beyond simply comparing the relative weighting of acoustic and articulatory features as a whole, it will be important to also consider the relative weighting among various acoustic features and articulatory features.

A related question is how individuals navigate the various associations afforded by phonemes’ bundle of features. For instance, what leads individuals to weigh certain phoneme features more heavily than others? Recall that the phoneme /u/ is associated with largeness (Newman, 1933). This seems to suggest that individuals place more emphasis on the association afforded by this phoneme’s features as a back vowel (i.e., largeness) than as a high vowel (i.e., smallness).Footnote 21 The matter is further complicated by the possibility that a given feature can afford associations with different ends of the same dimension. As an illustration, consider Diffloth’s (1994) observation that the Bahnar language contains associations between high vowels and largeness (which contrasts with the typical mil/mal effect). Diffloth theorized that this resulted from a focus on the amount of space that the tongue takes up in the vocal tract (larger for high vowels), as opposed to the amount of space left empty (smaller for high vowels). Thus, this articulatory feature might potentially afford different (and conflicting) associations. Of course, this raises the question of why certain potential associations are more commonly observed than others (Nuckolls, 1999). Understanding what leads to the formation of certain associations out of the myriad of possibilities is an important topic for future research. This includes not only associations on an individual level, but also the crystallization of these associations in a given lexicon (in cases of indirect iconicity).

Lastly, it is worth briefly considering the role of visual features in sound symbolic effects. One example is the letters used to code for phonemes; for instance, visual features are sometimes presented as an important contributor to the maluma/takete effect (see Cuskley, Simner, & Kirby, 2015). In fact, Cuskley et al. (2015) showed that the visual roundness/sharpness of letters was a stronger predictor of nonword-shape pairing than was consonant voicing. However, given that sound symbolic effects emerge in a culture without a writing system (Bremner et al., 2013), in preliterate infants (Ozturk et al., 2013; Peña et al., 2011; cf. Fort et al., 2013; Pejovic & Molnar, 2016), with learned neutral orthographies (Hung et al., 2017), and are not affected by direct manipulations of font (Sidhu, Pexman, & Saint-Aubin, 2016), it seems probable that orthography is, at the very least, not the sole contributor to these effects. Nevertheless, the contribution of orthography relative to those of acoustics and articulation is still an open question. If orthographic features were found to play a large role in sound symbolism, it might weaken the claims of some theories that rest on phonological and/or articulatory features (e.g., the frequency code hypothesis, double grasp neurons). Associations based in orthography would likely be due to shared low-level perceptual features among letters and associated stimuli (though for potential roles of connotation, see Koriat & Levy, 1977; P. Walker, 2016). In addition, some articulatory features have very strong visual cues (e.g., lip rounding). It remains to be seen if it is possible to separate these features from the tactile properties of articulation.

Relationship with crossmodal correspondences

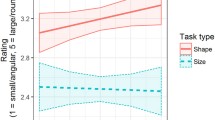

An open question is the extent to which discoveries regarding crossmodal correspondences can be applied to sound symbolism. In particular, one might wonder how well mechanisms of association for crossmodal correspondences can translate to sound symbolic associations. The issue is that while crossmodal correspondences involve simple, unidimensional stimuli (e.g., pure tones), linguistic stimuli are by their very nature more perceptually complex and multidimensional. Thus, while it might be tempting (and potentially correct) to explain sound symbolic associations as arising from a crossmodal correspondence of a phoneme’s component feature (e.g., between pitch and size; see Fig. 5), the fact that the feature is embedded in a multidimensional stimulus will necessarily complicate matters (see Parise, 2016). As an illustration of these complexities, D’Onofrio (2013) found that the influence of voicing in the maluma/takete effect was moderated by place of articulation. Nevertheless, there is evidence that the two classes of effects are related. For instance, when Parise and Spence (2012) studied the mil/mal and maluma/takete effects using an IAT, they also examined crossmodal correspondences (e.g., between pitch and size). All of these effects were found to have similar effect sizes, which was interpreted as being indicative of a common mechanism.

Fig. 5 The relationship between sound symbolic associations and crossmodal correspondences (as the terms are used in this review). Sound symbolic associations are between a phoneme as a whole (including all of its component multidimensional features) and a particular stimulus dimension. Crossmodal correspondences are between simple stimulus dimensions (e.g., brightness or pitch). Crossmodal correspondences may exist between the component features of a phoneme and a particular dimension (illustrated by the example crossmodal correspondence between phoneme pitch and size), and could potentially contribute to a sound symbolic association of that phoneme.