Abstract

The distinctiveness effect refers to the finding that items that stand out from other items in a learning set are more likely to be remembered later. Traditionally, distinctiveness has been defined based on item features; specifically, an item is deemed to be distinctive if its features are different from the features of other to-be-learned items. We propose that distinctiveness can be redefined based on context change—distinctive items are those with features that deviate from the others in the current temporal context, a recency-weighted running average of experience—and that this context change modulates learning. We test this account with two novel experiments and introduce a formal mathematical model that instantiates our proposed theory. In the experiments, participants studied lists of words, with each word appearing on one of two background colors. Within each list, each color was used for 50% of the words, but the sequence of the colors was controlled so that runs of the same color for that list were common in Experiment 1 and common, rare, or random in Experiment 2. In both experiments, participants’ source memory for background color was enhanced for items where the color changed, especially if the change occurred after a stable run without color changes. Conversely, source memory was not significantly better for nonchanges after runs of alternating colors with each item. This pattern is inconsistent with theories of learning based on prediction error, but is consistent with our context-change account.

Distinctiveness is one of the most widely studied concepts in episodic memory (Wallace, 1965; Hunt, 1995; Hunt, 2006; Schmidt, 1991; Schmidt, 2008), yet the nature of distinctiveness and the cognitive processes that underlie it remain elusive. As both Hunt (1995, 2006) and Schmidt (1991, 2008) point out, distinctiveness is a psychological construct wherein some information stands out from other information, typically due to differences in perceptual or semantic features. The differences thereby render the distinctive information more memorable than it would otherwise be. Many distinctiveness effects have been reported in the literature, ranging from the classic isolation effect (von Restorff, 1933) to the recently discovered production effect (Icht, Mama, & Algom, 2014; MacLeod, Gopie, Hourihan, Neary, & Ozubko, 2010). Although the nature of these effects and the theoretical explanations for them have been reviewed extensively in other work (Hunt, 2006; Schmidt, 2008), key questions remain unanswered. In this paper, we examine two of these questions: What makes an item distinctive? And why are distinctive items remembered better?

Two main challenges have to be overcome to answer these questions. First, a wide variety of manipulations have been shown to lead to distinctiveness effects. These include varying perceptual features such as font, color, or size (e.g., von Restorff, 1933); varying semantic features and categories (e.g., Geraci & Rajaram, 2004); using different orienting/encoding tasks (e.g., difference judgment vs. similarity judgment; Hunt & Smith, 1996); and even manipulating the affective valence of the stimuli (Schmidt & Saari, 2007). Second, it is inherently difficult to separate the effects of encoding and retrieval processes (Schmidt, 1985, 2008). This difficulty arises because any behavioral test of memory must include, by default, information from the retrieval process. Distinctiveness effects have been measured almost exclusively using explicit-memory tests such as free recall or item recognition, where the influence of retrieval processes could contaminate the measurement of encoding effects (Hunt, 2006). Although implicit memory tasks could arguably provide a better measure of the effect of distinctiveness on encoding, the few studies that have examined distinctiveness effects in implicit tasks have yielded mixed results (Geraci & Rajaram, 2004; Oker & Versace, 2010; Smith & Hunt, 2000).

These challenges become more obvious when we break the big questions down. To determine what makes an item distinctive, it is necessary to first identify the characteristics of an item that differentiate it from others and then to specify how information is represented in memory such that an item can be distinctive from other items. As we discuss below, most prior attempts to understand distinctiveness effects suffer from either a limited definition of distinctiveness or an underspecification of how items are represented in memory. To determine why distinctive items are remembered better, it is necessary to separate the effects of encoding and retrieval processes. This can be done by specifying mechanisms for how the representation of the item is encoded into memory and how items are retrieved, as is done in many computational theories, or by careful selection of experimental tasks to isolate the influence of one of the processes while minimizing the influence of the other.

Current theoretical frameworks

Given the challenges to explaining distinctiveness effects, it is not surprising that a number of theoretical approaches have been advanced. These fall into three general categories, each of which tends to focus on selected types of distinctiveness effects, and each of which has limitations, as we outline below.

Computational models have been used extensively to explain feature-based distinctiveness effects. These models include the SIMPLE model (Brown, Neath, & Chater, 2007), the SAC model (Park, Arndt, & Reder, 2006), global-matching models such as MINERVA II and CHARM (Hintzman, 1984; Metcalfe, 1990; Oker & Versace, 2014), and a modification of the activation-buffer model (Elhalal, Davelaar, & Usher, 2014; Davelaar, 2013). The general approach in these models is to represent items as vectors of features that may be abstract or may map directly to the perceptual or semantic elements that differ between items, and then to mathematically or algorithmically specify encoding and retrieval processes. Critically, retrieval processes in these models work by comparing the similarity of a retrieval cue with other items in memory.

A major advantage of the computational approach is that it allows the effects of encoding and retrieval processes to be clearly separated; however, this has not always led to clarity when comparing different models. For example, the SIMPLE model (Brown et al., 2007), the SAC model (Park et al., 2006), and MINERVA II (Hintzman, 1984) all explain feature-based distinctiveness effects as being due to reductions in interference during the retrieval process that are driven by the fact that distinctive items are represented differently in memory than are other items. Specifically, to capture distinctiveness arising from the production effect, Jamieson, Mewhort, and Hockley (2016) provided an implementation of MINERVA II that favored spoken word recognition by means of more encoded features than nonspoken words in both mixed and pure lists. By contrast, modifications of the activation-buffer model (Elhalal et al., 2014; Davelaar, 2013) explain these effects as being due to a boost in encoding for items whose features (e.g., color, size, font, etc.) stand out from the other items on the list.

The second set of theoretical approaches focuses on effects that arise due to some particular task during learning and are generally explained using verbal theories such as transfer appropriate processing (Morris, Bransford, & Franks, 1977) or the source monitoring framework (Johnson, Hashtroudi, & Lindsay, 1993). According to these theories, distinctiveness is defined based on different cognitive processes that participants might employ during the encoding task. Which cognitive processes are used while encoding each specific item is affected by orienting tasks as well as by differences in the nature of the stimuli. The processing task used during learning is encoded along with the item itself and can later be used as part of the memory cue during retrieval. This allows distinctiveness effects to arise during retrieval due to two mechanisms. First, the specificity of the encoding task allows a cue at retrieval to be more available to contact a target in memory. Second, the distinctive trace provides a more effective cue–target representation (Hunt & Mitchell, 1978).

In contrast to the feature-based computational models, these process-based verbal theories may offer a more expansive definition of distinctiveness that is more in line with the principles laid out by Hunt (2006) and could potentially explain a wider range of distinctiveness effects; however, because they do not specify the actual processes that are used during encoding or retrieval, they may not allow for a clear picture of the loci of the effects (Schmidt, 1985). Specifically, any given distinctiveness effect could arguably be due to differences in encoding processes, enhanced retrieval processes, or some combination of these. For example, it could be argued that embedding a word in the middle of a list of digits would lead to deeper processing of that word, thus making the encoding process the locus of the effect. On the other hand, these theories are also compatible with retrieval-based explanations, which posit that items with distinctive representations comprise a different, and much smaller, search set than the common items. This gives the distinctive item two advantages during a memory retrieval attempt. Because it is associated with different features/processes than the common items, the distinctive item can be accessed through different cue sets; and because there are fewer competitors associated with the cues, the item will not be as subject to interference during retrieval (Hunt & McDaniel, 1993; Capaldi & Neath, 1995; Anderson & Neely, 1996).

The third theoretical framework for distinctiveness effects builds on old ideas about the role of “surprise” during encoding (Green, 1958a, 1958b), but adds a new twist based on theories of the role of prediction in neurological and cognitive processing. For convenience, we will refer to this as the “prediction-error framework.” In this framework, the characteristics (perceptual features, semantic features, type of processing, or emotionality) that are shared by the common items in a study list form a context for that list, and the cognitive system learns to expect that those characteristics will be the same for all the list items (Gati & Ben-Shakhar, 1990). Items that do not match the context lead to “surprise” (Green, 1958a, 1958b) or an expectation violation (Donchin, 1981; Fabiani & Donchin, 1995). Consequently, attention is focused on the item that is different (Swartz, Pronko, & Engstrand, 1958), sensory processing is enhanced (Rao & Ballard, 1999; Friston, 2012), and the item’s memory strength is increased relative to the common items, making it “distinct” (Karis, Fabiani, & Donchin, 1984; Hirshman, Whelley, & Palij, 1989).

Predictive coding theory falls within this prediction-error framework. In predictive coding, sensory input is sent through a feedback loop that simultaneously monitors incoming information and makes inferences about future events based on past information (Friston & Kiebel, 2009; Mumford, 1992; Rao & Ballard, 1999). Thus, the brain is seen as an information-processing instrument that integrates top-down expectations and bottom-up stimulus information occurring across multiple sensory levels and pathways (Bubic, von Cramon, & Schubotz, 2010). Under this predictive coding account, any event or experience would be distinctive if it was poorly predicted and hence gave rise to a large prediction error, thus putting a large emphasis on a notion of expectation that might be divorced from the features of the items themselves.

A major advantage of the prediction-error framework is that it makes unique testable predictions in situations that are not covered by the existing feature-based computational models or by the process-based verbal theories. Specifically, because distinctiveness is based on the context of a list, not the individual items or cognitive processes, the theory not only predicts that distinctiveness effects should be seen whenever a single item stands out from the context of the list (e.g., the classic isolation effect of von Restorff, 1933), but it also predicts that any change from the list context or structure should lead to enhanced encoding and thus a distinctiveness effect, even if the items themselves are balanced throughout the list.

The balanced-features design used by Green (1958a) provides a way to test this hypothesis. Unlike in a standard isolation paradigm, where there are frequent items with common features and rare items with different features (von Restorff, 1933), in a balanced-features design, features are distributed equally across the list items. For example, in contrast to von Restorff’s classic isolation experiment, where a study list consisted of one letter trigram in a list with nine number trigrams, Green (Experiment 2) created three different lists using the same set of six letter bigrams and six two-digit numbers by arranging the stimuli in specific temporal sequences. All the lists used the same critical item—the bigram CZ—placed in Serial Position 4, but the items that surrounded that item varied according to the structure of the list. Because the features are balanced across the list items, no items stand out on a list level during encoding due to distinct features. Instead, the temporal sequencing of the item features is used to create “different” items (Green, 1958a).

In Green's (1958a) control condition, the stimuli alternated systematically between letters and numbers for the entire list. In the change condition, the first three and last three list items were numbers, and the remaining items were letter bigrams. Thus, there was a run of three numbers prior to the critical item, and the critical item was the first item in a new run of letter bigrams. In the isolation condition, the numbers were placed in Serial Positions 1–3 and 5–7 so that the critical item was by itself in what was otherwise a long run of numbers. Each subject was presented a single list and given a 1-minute free-recall test. An examination of the serial position curves from Green shows precisely the pattern that would be predicted by a prediction-error account: There was a boost in memory for the critical item at Serial Position 4 in both the change and the isolation conditions but not in the control condition.

The context-change account

Here, we propose an alternative to a prediction-error account of distinctiveness, which is instead based on a computational model of perception and memory, the temporal context model (TCM; Howard & Kahana, 2002; Sederberg, Howard, & Kahana, 2008; Polyn, Norman, & Kahana, 2009a). The TCM is an association model that explicitly represents the perceptual, semantic, affective, and mental features of items, the context in which those items are experienced, and the associations between items and context. As in previous context models in the TCM framework, context is defined as a recency-weighted running average of experience.

Based on the mechanisms in our proposed model, which we define in detail below, an item can be considered to be distinctive, and hence to modulate the strength of learning, if the item causes the prevailing internal representation of temporal context to change. The context for each list item is established by the items that precede it. A stable context can be established by having a run of stimuli that share a critical feature, and an item can be defined as “different” if it does not share this feature. Within the model, this deviation from a stable temporal context gives rise to a larger change in the context representation, and enhanced perceptual processing of the item causing the change, which results in a temporary increase in learning rate. Thus, an item becomes distinctive if it causes a large change in the temporal context, regardless of the relationship of that item to the list as a whole, sometimes called the list context (Fabiani & Donchin, 1995; Geraci & Manzano, 2010; Park et al., 2006; Rangel-Gomez & Meeter, 2013).
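The core idea can be illustrated with a minimal sketch. This is not the formal model fitted later in the paper: it assumes one-hot color features, a simple exponential moving average as the recency-weighted context, a hypothetical weight `beta`, and Euclidean distance as the measure of context change. All names are illustrative.

```python
import math

def context_changes(features, beta=0.5):
    """Return the size of the context change caused by each item.

    Assumption (not the paper's equations): context is an exponential
    moving average of item feature vectors,
        c_t = (1 - beta) * c_{t-1} + beta * f_t,
    starting from a vector of zeros.
    """
    context = [0.0] * len(features[0])
    changes = []
    for f in features:
        new_context = [(1 - beta) * c + beta * x for c, x in zip(context, f)]
        changes.append(math.dist(context, new_context))
        context = new_context
    return changes

BLUE, GREEN = (1.0, 0.0), (0.0, 1.0)
stable_then_change = [BLUE] * 5 + [GREEN]   # color change after a stable run
alternating = [BLUE, GREEN] * 3             # color changes on every item

change_after_run = context_changes(stable_then_change)[-1]
change_when_alternating = context_changes(alternating)[-1]
nonchange_in_run = context_changes(stable_then_change)[4]
```

Under these assumptions, the same green item shifts the context more when it follows a stable blue run than when the colors have been alternating, and an item that continues a run shifts it least.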

We explain the implications of our account in more detail in the General Discussion, but for the moment we note that our context-change definition of distinctiveness has several major advantages over the standard feature-based and process-based definitions. First, it provides a clear theoretical statement about what it means to say that an item is distinctive. Second, because it is based in a computational model in which the encoding and retrieval processes are specified mathematically, a context-change definition allows for a clearer theoretical understanding of the roles these processes may play in the distinctiveness effect. Finally, it allows for a more expansive understanding of distinctiveness and the ways in which it may influence perception and memory in various tasks in and outside the lab.

We highlight this expansiveness through two novel experiments that show encoding-based distinctiveness effects using an operational definition of “different” that is based on temporal context rather than the features of the items and the list as a whole. To focus on encoding-based processes that affect the subsequent memorability of individual items in a study list, these experiments employ a balanced-features design inspired by early isolation experiments (Siegel, 1943; Green, 1958a), with the addition of a source memory task (Johnson et al., 1993), in which subjects were tested on their memory for the background color of the studied words. The balanced-features design allows us to address which mechanisms could give rise to any observed item-based distinctiveness effects during the initial processing of the list, even in the absence of a traditional item-level isolation. The addition of a source monitoring task to this design reduces the likelihood that any observed distinctiveness effects are due to processes that operate during retrieval by ensuring that the search sets for the “different” items and the control items are essentially equivalent. In contrast to free-recall tests, there is a clearly defined search set that is the same for both context-change and nonchange items. Furthermore, because the source features were balanced during study, there are no systematic differences in the fan size between the item types, ruling out the most common retrieval-based explanation for any observed distinctiveness effects (Park et al., 2006).

The purpose of our inquiry was to examine item-level distinctiveness effects of context change within the balanced-features design. Although the balanced-features design has been used extensively in other domains (Icht et al., 2014), it has only been used in a few studies of distinctiveness effects (Siegel, 1943; Green, 1958a; Saltzman & Carterette, 1959; Erickson, 1963; Deutsch & Sternlicht, 1967) and, to our knowledge, has never before been used to examine item-level effects or with a source monitoring task. Thus, we start with a simplified conceptual replication of the second experiment reported by Green for Experiment 1 and then expand the experimental design for Experiment 2 to further test differential predictions of the prediction-error and context-change accounts of distinctiveness. Finally, we present a formal mathematical model that instantiates our proposed context-based distinctiveness theory, which we then fit to the data from Experiment 2.

Experiment 1

The design for Experiment 1 is a conceptual replication of the change condition from Green (1958a, Experiment 2). As discussed above, Green used a set of six letter bigrams and six two-digit numbers to create balanced lists in which a critical item—the bigram CZ, placed in Serial Position 4—could be considered an ordinary item, a change item, or an isolate, depending on the items that surrounded it. In Green’s change condition, the first three and last three list items were numbers, and the remaining items were letter bigrams. Thus, there was a run of three numbers before the critical item, and the critical item was the first item in a new run of letter bigrams. To maximize the memorability of the stimuli, we used unrelated words rather than letters and numbers as the to-be-learned items and manipulated the color of the background on which they were presented, with half of the words for any list being presented with a green background and half with a blue background. Thus, the variable of interest is context change, operationally defined as the change in the sequence of background colors (e.g., a switch from a run of items on a blue background to a run of items on a green background, or vice versa). We also use longer lists, examine memory for multiple change items on each list, and present multiple lists to each subject using a within-subjects design, all to gain more power to identify item-level distinctiveness effects. Finally, we use a source memory test rather than a free-recall test to measure memory for the change and nonchange items to reduce the effect of retrieval dynamics on the observed encoding-related distinctiveness effects. We hypothesize that source memory for a color feature will be better for items that are presented when a context change occurs.

Method

Participants

Eighty-eight volunteers (ages 18–29 years), recruited from introductory psychology classes at The Ohio State University, participated in Experiment 1. All participants gave written informed consent and were given partial course credit for participating. The experiment was approved by the OSU Institutional Review Board.

Materials and design

Stimuli consisted of a pool of 1,039 four-letter to seven-letter words selected from the University of South Florida Free Association Norms (Nelson, McEvoy, & Schreiber, 2004). For each subject, 384 words were randomly drawn from the pool and randomly assigned to 12 study lists with 32 words on each list. All tasks were performed on a computer keyboard, and all stimuli were presented on a 17-inch monitor. Stimulus selection and presentation were controlled using a custom script written using the PyEPL experimental library (Geller, Schleifer, Sederberg, Jacobs, & Kahana, 2007). During the study phase, each word was displayed in black font over a filled rectangle. Half of the words were presented with a blue background (RGB: 0, 170, 255), and half with a green background (RGB: 50, 205, 50). Luminance of the background colors was equated using software settings.

The sequence of the colors was arranged to form runs of words with the same background color (see Fig. 1). Run length was determined by random sampling from a uniform distribution of lengths of three to eight items. The independent variable was the event type for each word. Words that were presented on a different background color than the previous word were identified as change events; words that were presented on the same background color as the previous word were identified as nonchange events.
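A minimal sketch of how such a list could be built and scored is given below. It is not the original PyEPL script: the function names are hypothetical, and the rejection-sampling step used to obtain a balanced 32-item list is an assumption, since the text does not say how balance was enforced.

```python
import random

def label_events(colors):
    """Label each item 'change' or 'nonchange' relative to the previous item.

    The first item has no predecessor, so it is labeled None.
    """
    labels = [None]
    for prev, cur in zip(colors, colors[1:]):
        labels.append("change" if cur != prev else "nonchange")
    return labels

def make_low_change_list(n_items=32, min_run=3, max_run=8, rng=random):
    """Rejection-sample a balanced sequence of same-color runs.

    Run lengths are drawn uniformly from min_run..max_run; a candidate is
    kept only if it has exactly n_items items, half of them blue.
    """
    while True:
        colors, color = [], rng.choice(["blue", "green"])
        while len(colors) < n_items:
            colors += [color] * rng.randint(min_run, max_run)
            color = "green" if color == "blue" else "blue"
        if len(colors) == n_items and colors.count("blue") == n_items // 2:
            return colors

study_colors = make_low_change_list(rng=random.Random(0))
event_types = label_events(study_colors)
```

Each change event in `event_types` marks the first item of a new run; every other item after the first is a nonchange event.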

Fig. 1. Examples of the three list structures used in Experiments 1 (low change only) and 2. All three structures used balanced lists, with the same number of items on a blue background as on a green background. In the low-change lists, study trials were ordered to form a series of long runs with the same background color. In the high-change lists, background colors usually alternated on each item presentation, but there were occasional runs of two items with the same background color. In the random-change lists, the background colors were in a true random order. (Color figure online)

Procedure

Each experimental session took approximately 50–55 minutes. All tasks were completed in blocks, consisting of a study phase, a distractor task, and a source memory test (see Fig. 2). A practice block preceded the 12 self-initiated experimental blocks. Each block began with a crosshair at the center of a white screen indicating the beginning of a study phase. Study items were then presented at a rate of 1,800 ms per item and were separated by a blank white screen during a 600–900-ms jittered interstimulus interval (ISI). During the study phase, participants performed a simple color-detection task using key presses to indicate the background color on which the study words were displayed. Participants were instructed to respond as quickly and as accurately as possible, to avoid thinking about previous words on the list, and to study the word for a later memory test.

The study phase was followed immediately by a 30-second distractor phase. Simple equations were presented in the form A ± B ± C = D, where A, B, and C were randomly chosen positive integers from the set 1–9, and D was either a correct or incorrect solution to the arithmetic problem. Participants indicated whether each equation was correct via key press. Participants had 4 seconds per equation to respond, with feedback, until time expired.
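For concreteness, a distractor problem of this form could be generated as sketched below. The text does not say how incorrect values of D were chosen or how often they appeared, so the small nonzero offset and the 50% rate are assumptions, and `make_equation` is a hypothetical name.

```python
import random

def make_equation(rng=random):
    """Return (equation_text, is_correct) for a problem A ± B ± C = D."""
    a, b, c = (rng.randint(1, 9) for _ in range(3))
    op1, op2 = rng.choice("+-"), rng.choice("+-")
    true_value = a + (b if op1 == "+" else -b) + (c if op2 == "+" else -c)
    # Assumption: half of the problems show the correct answer.
    is_correct = rng.random() < 0.5
    # Assumption: incorrect answers are off by a small nonzero amount.
    shown = true_value if is_correct else true_value + rng.choice([-2, -1, 1, 2])
    return f"{a} {op1} {b} {op2} {c} = {shown}", is_correct

equation, answer_is_correct = make_equation(random.Random(1))
```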

For the source memory test, the most recently presented study list was randomized, and each of the words was presented in black font on a neutral white background for 1,800 ms with a 160 ms ISI. During this time period, participants indicated which background color they remembered for that item by a key press.

Data quality control

Before conducting our planned analyses, we established a data control protocol to filter out participants who failed to follow instructions and items that could introduce confounds. Participants who failed to follow instructions were identified using a binomial distribution test to examine performance on the math distractor task. Data from 16 individuals whose performance was at or below chance were removed before analyzing source memory. Memory performance for the remaining 72 participants was evaluated across subjects by means of a paired-samples t test to compare source memory performance for the change versus the nonchange events. To absorb the typical effects of primacy and recency in memory for lists, two buffer items at the beginning and end of each list were excluded from analysis.
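The screening steps can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used here: the test is taken to be a one-sided exact binomial test against a chance level of 0.5, the 0.05 threshold is hypothetical, and the buffer rule is read as two items at each end of the list.

```python
from math import comb

def binomial_p_above_chance(n_correct, n_trials, chance=0.5):
    """One-sided exact binomial p-value: P(X >= n_correct) under chance."""
    return sum(
        comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )

def performs_above_chance(n_correct, n_trials, alpha=0.05):
    """Assumed inclusion rule: keep a participant only if p < alpha."""
    return binomial_p_above_chance(n_correct, n_trials) < alpha

def drop_buffers(items, n_buffer=2):
    """Remove n_buffer items from each end of a study list (assumed reading)."""
    return items[n_buffer:len(items) - n_buffer]

analyzed_positions = drop_buffers(list(range(32)))
```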

Results and discussion

Because our 12-block design differs from previous distinctiveness work (e.g., Green, 1958a), we performed a 2 × 12 repeated-measures ANOVA, using event type and block as factors. There was neither a main effect of block, F(11,781) = 2.30, MSE = 2.51, p = .134, nor was there an interaction between event type and block, F(11,781) = 0.91, MSE = 0.018, p = .764. As shown in Fig. 3, there was a significant effect of event type, t(71) = 4.76, p < .001, Cohen’s d = 0.27, such that source memory was more accurate for the change events (M = 0.67, SEM = 0.016) than for the nonchange events (M = 0.63, SEM = 0.015). Because the change items were at different serial positions in every list, we were not able to examine the change items using a serial position analysis, as in Green (1958a); however, we were able to perform a similar analysis based on the relative serial position of each item to the context-change event. As Fig. 3 shows, source memory was more accurate for the items at the context-change event (Lag 0) than for the adjacent items where there was no context change. Adjacent items prior to the change event appear to form an approximate baseline, whereas items after the event seem to decay down to baseline as a new run of similar features unfolds. These results replicate Green’s (1958a) results showing that distinctiveness effects can be based on the temporal structure of a list, even when none of the stimuli have distinct features on a list level, and extend this finding into the domain of source memory. They are also consistent with predictions from both the prediction-error account and our context-change account.

Fig. 3. Mean proportion of correct source memory responses as a function of event type and serial position relative to the change items in Experiment 1. Change events are items with a background color different from the previous item. Nonchange events are items with a background color the same as the previous item. Lags −3 to −1 are the last few items in a stable run with the same background color. The context change item at Lag 0 starts a new run with a different background color, and the next three nonchange items within this run are at Lags 1–3. Error bars indicate ±95% within-subjects confidence intervals (Loftus & Masson, 1994).
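A lag-based analysis of this kind could be computed as in the sketch below. The function name and data layout are hypothetical, and the rule that an item only counts at a given lag if it lies in the same run as the items between it and the change is an assumption about how lags were assigned.

```python
from collections import defaultdict

def accuracy_by_lag(colors, correct, max_lag=3):
    """Mean source accuracy at each lag relative to every change item.

    colors:  background color of each studied item, in study order
    correct: 1 if the source judgment for that item was correct, else 0
    """
    change_positions = [
        i for i in range(1, len(colors)) if colors[i] != colors[i - 1]
    ]
    hits = defaultdict(list)
    for pos in change_positions:
        for lag in range(-max_lag, max_lag + 1):
            i = pos + lag
            if not 0 <= i < len(colors):
                continue
            # Negative lags must stay inside the old run, and nonnegative
            # lags inside the new run, so the spanned items share one color.
            lo, hi = (i, pos) if lag < 0 else (pos, i + 1)
            if len(set(colors[lo:hi])) == 1:
                hits[lag].append(correct[i])
    return {lag: sum(v) / len(v) for lag, v in sorted(hits.items())}

example = accuracy_by_lag(list("BBBGGGG"), [1, 0, 1, 1, 0, 1, 0])
```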

Experiment 2

Having established that a context-change event gives rise to enhanced source memory, we next modified the experimental design to test competing predictions from the prediction-error account and our context-change theory and to identify boundary conditions for this distinctiveness effect. In Experiment 2, we used the list structure from Experiment 1 and added two new kinds of lists to create a list structure factor to explore the extent to which the context-change distinctiveness effect depends on the establishment of stable contexts (see Fig. 1). We call the list structure from Experiment 1 a low-change list because it has stable runs with only a few feature changes during the course of the list. The high-change lists are on the opposite side of the spectrum. In these lists, the background colors routinely alternate, with a few exceptions. Thus, there are stable runs of changing items giving rise to many feature changes in the list sequence. Finally, the random-change lists represent a middle ground where the sequence of background colors is completely random. In this condition, stable runs of nonchanging items can occur, but they may not be as common as in the low-change lists. Importantly, each of these list structures maintains the balanced design, so that the only difference between the types of lists is in the sequence of the background colors.

Based on the results from Experiment 1 and from Green (1958a), we expected that source memory for the change items in the low-change lists would be enhanced relative to the nonchange items. This finding would further support our context-change account but would not help differentiate it from the prediction-error account because the rare changes would induce both a large context change relative to the recent past and also a large prediction error.

Predictions for the high-change condition provide a key test case for the prediction-error account and our proposed context-change theory. In our search of the literature, we were not able to find any previous studies that have examined potential item-based effects in lists where feature change is the rule rather than the exception. Nevertheless, this is an important case to consider. Whereas the results from the low-change condition in Experiment 1 could be accounted for by either a context change or a prediction-error mechanism, the two mechanisms produce diverging predictions for the high-change lists. Under a prediction-error framework, participants are assumed to create a mental representation of the structure of the high-change lists as consisting of a relatively regular sequence of color changes using either an online process in which a representation is actively built in working memory (Gati & Ben-Shakhar, 1990; Schubotz, 2007) or by retrieval of similar types of high-change scenarios from long-term memory (Bar, 2007). This mental representation leads to a prediction on each trial that the background features will change. Thus, the nonchange events would give rise to the largest prediction errors that could, in turn, elicit a distinctiveness effect. The context-change account, on the other hand, would suggest that a nonchange event would give rise to minimal context change relative to the recent past and would therefore give rise to no item-level distinctiveness effect and potentially a drop in subsequent source memory relative to the change events.
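The diverging predictions can be illustrated with a toy comparison. Both functions are assumptions made for illustration and are not taken from either account's formal literature: prediction error is modeled with a delta-rule estimate of the probability of a color change, and context is a scalar recency-weighted average of color coded as 0 or 1. The learning rate and `beta` values are hypothetical.

```python
def prediction_errors(colors, learning_rate=0.3):
    """Surprise about each transition under a delta-rule estimate of P(change)."""
    p_change, errors = 0.5, [None]  # the first item has no transition
    for prev, cur in zip(colors, colors[1:]):
        changed = 1.0 if cur != prev else 0.0
        errors.append(abs(changed - p_change))
        p_change += learning_rate * (changed - p_change)
    return errors

def context_changes(colors, beta=0.5):
    """Absolute shift of a scalar recency-weighted average of color (0 or 1)."""
    context, shifts = 0.5, []
    for color in colors:
        new_context = (1 - beta) * context + beta * color
        shifts.append(abs(new_context - context))
        context = new_context
    return shifts

# High-change list: colors alternate except for one repeat at index 6.
high_change = [0, 1, 0, 1, 0, 1, 1, 0, 1, 0]
pe = prediction_errors(high_change)
cc = context_changes(high_change)
```

In this toy list the nonchange item at index 6 produces the largest prediction error but a smaller context shift than the change items on either side of it, which is the dissociation the high-change condition is designed to test.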

Finally, although the random change condition serves primarily as a control condition, it also provides an opportunity to test theoretical predictions. Under the prediction-error account, because changes and nonchanges are randomly distributed through the list, there is no systematic basis for making predictions about deviations from the list context. Thus, prediction errors should be distributed randomly through the list. By contrast, the context-change account predicts that local changes in the temporal context (i.e., changes from randomly generated stable runs) should lead to enhanced source memory for subsequent items after a large context change, regardless of where it is in the sequence. These results would provide additional support for our hypothesis that distinctiveness is based on changes in temporal context rather than prediction errors.

Method

Participants

One hundred and three volunteers (ages 18–26 years), recruited from introductory psychology classes at The Ohio State University, participated in Experiment 2. All participants gave informed written consent and were given partial course credit. The experiment was approved by the OSU Institutional Review Board.

Materials and design

Experiment 2 used the same stimuli and design as in Experiment 1, with the following exceptions. First, the lists were shortened to 30 words each. Second, the design incorporated three list types instead of just one, thereby creating a 3 (list structure: low change, high change, or random change) × 2 (event type: change or nonchange) within-subjects design. Examples of the list structures are shown in Fig. 1. The low-change lists contained six runs of three to six items with the same background color, with the first item in a run serving as a change event and the other items in each run being nonchange events. The high-change lists also contained six runs of three to six items, but with alternating background colors interrupted by five nonchange events. The random-change lists contained an equal number of items for each background color arranged in random order. Although each random list contained both change and nonchange events, there were varying numbers of these events in each list. Similarly, the random-change lists had varying numbers of runs and run lengths of the same color. List types were presented in random order for each participant.
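As an illustration of the list structures described above, the following minimal sketch builds a low-change list and labels each item as a change or nonchange event. It is not the authors' stimulus-generation procedure: the function names, the 0/1 color coding, and the rejection-sampling approach are assumptions introduced here for illustration only.

```python
import random

def low_change_list(n_items=30, n_runs=6, min_run=3, max_run=6, rng=None):
    """Build a color sequence (0/1) from runs of the same color.

    Run lengths are resampled until each color covers half of the list
    (rejection sampling; an assumed, not documented, procedure).
    """
    rng = rng or random.Random()
    while True:
        runs = [rng.randint(min_run, max_run) for _ in range(n_runs)]
        first_color_total = sum(runs[0::2])
        second_color_total = sum(runs[1::2])
        if first_color_total == second_color_total == n_items // 2:
            break
    first = rng.choice([0, 1])
    colors = []
    for k, length in enumerate(runs):
        colors.extend([(first + k) % 2] * length)  # alternate color per run
    return colors

def label_events(colors):
    """Label each item relative to the previous item.

    The first item has no predecessor and is labeled None.
    """
    labels = [None]
    for prev, cur in zip(colors, colors[1:]):
        labels.append("change" if cur != prev else "nonchange")
    return labels
```

With six runs, such a list contains five change events (the first item of every run after the first), which parallels the five nonchange events that interrupt the alternating sequence in the high-change lists.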

Procedure

All procedures were the same as in Experiment 1, except that we changed the duration of study and test trials to 2,300 ms each, with an ISI of 200 ms.

Data quality control

We used an a priori data quality control protocol similar to the one used in Experiment 1. Three buffer items from the beginning and end of each list were removed from analysis in order to avoid confounds from primacy and recency effects. Participants who failed to follow instructions were identified using a binomial distribution test for at-chance or below-chance performance on the math distractor test (N = 13) and on the random-change lists (N = 13). We chose to be conservative and only report results using data from the participants (N = 73) who we could be reasonably sure performed the experimental tasks as instructed; however, supplementary analyses including the participants who were identified by at-chance source memory performance on the random lists showed the same overall pattern of results, including the main effect of list structure and the effect of change in the t test for the low-change lists (see below).
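The exclusion rule can be illustrated with an exact one-sided binomial test against chance. This is a sketch under stated assumptions: the function names, the chance level of 0.5, the alpha of .05, and the trial counts in the usage note are hypothetical, because the text does not report these details.

```python
from math import comb

def binom_p_above_chance(n_correct, n_trials, chance=0.5):
    """Exact one-sided p value: P(X >= n_correct) for X ~ Binomial(n_trials, chance)."""
    return sum(
        comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )

def at_or_below_chance(n_correct, n_trials, alpha=0.05, chance=0.5):
    """Flag a participant whose accuracy is not reliably above chance (assumed criterion)."""
    return binom_p_above_chance(n_correct, n_trials, chance) >= alpha
```

For example, under these assumptions a hypothetical participant with 60 of 100 correct would be retained, whereas one with 52 of 100 correct would be flagged as performing at chance.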

Power

Statistical power analysis was performed for sample-size estimation, based on data from Experiment 1 (N = 72), comparing change with nonchange events. With an alpha of 0.05 and power = 0.80, the projected sample size needed for an effect size of d = 0.27 is approximately N = 86 for a within-groups comparison. Although attrition left our final sample slightly smaller, the final sample size of N = 73 with an effect size of d = 0.27 yields power = 0.739.
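The arithmetic behind such a power analysis can be sketched with a normal approximation. The sketch assumes a one-tailed test, which the text does not state, and it replaces the noncentral t distribution used by standard power software with a normal distribution, so its output should fall near, but not exactly on, the reported values. The function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

_z = NormalDist()  # standard normal distribution

def approx_power(d, n, alpha=0.05):
    """Approximate power of a one-tailed paired comparison (normal approximation)."""
    return _z.cdf(d * sqrt(n) - _z.inv_cdf(1 - alpha))

def approx_sample_size(d, power=0.80, alpha=0.05):
    """Smallest n reaching the target power under the same approximation."""
    return ceil(((_z.inv_cdf(1 - alpha) + _z.inv_cdf(power)) / d) ** 2)
```

Calling `approx_power(0.27, 73)` and `approx_sample_size(0.27)` gives figures in the neighborhood of the power and sample size reported above; an exact calculation based on the t distribution would require slightly more participants.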

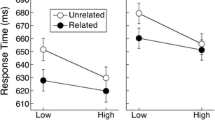

Results and discussion

Participants’ source memory performance is shown in Fig. 4. Overall, participants’ source memory judgments were moderately accurate (M = 0.69, SEM = 0.012). We again first calculated an ANOVA for the block factor, this time using event type, list structure, and block as factors. There was no significant main effect of block, F(11, 792) = 1.01, MSE = 0.58, p = .318, nor did the block factor interact with event type, F(11, 792) = 2.29, MSE = 1.32, p = .135, or with list structure, F(11, 792) = 1.34, MSE = 0.77, p = .268. A 3 × 2 repeated-measures ANOVA, using event type and list structure as factors, revealed a main effect of list structure, F(2, 144) = 5.33, MSE = 2.18, p = .006, ηp² = 0.069, such that source memory was more accurate for the low-change (M = 0.70, SEM = 0.013) and random-change (M = 0.70, SEM = 0.013) lists than for the high-change lists (M = 0.68, SEM = 0.016). There was no significant main effect of event type, F(1, 72) = 1.43, MSE = 0.47, p > .10, ηp² = 0.020, nor was the interaction between list structure and event type significant, F(2, 144) = 1.96, MSE = 0.40, p > .10, ηp² = 0.026. As predicted, a planned comparison of event type within the low-change lists showed that source memory was more accurate for the change events (M = 0.73, SEM = 0.017) than for the nonchange events (M = 0.69, SEM = 0.014), t(72) = 3.43, p = .001, Cohen’s d = 0.31. This replicates the result from Experiment 1, further demonstrating that context change can give rise to a distinctiveness effect, even when none of the stimuli can stand out due to unique features. The effect of event type was not statistically significant in either the high-change lists, t(72) = 1.86, p = .067, or the random-change lists, t(72) = 0.68, p = .499. As we discuss in more detail below, although this is a null effect, the high-change lists showed no boost for the nonchange items; if anything, there was a trend toward better source memory for change items than for nonchange items. This pattern provides some evidence against a prediction-error account and for our context-change theory of distinctiveness.

Fig. 4 Source memory performance as a function of list structure and event type for Experiment 2. Change events are items whose background color was different from the previous item. Nonchange events are items whose background color was the same as the previous item. Error bars indicate ±95% within-subjects confidence intervals

The effects of context change can be seen most clearly by examining source memory as a function of the serial position relative to a change item. If, as we suggest, the change item induces a context change that then leads to more effective memory encoding, then there should be a discontinuity between the last few items in a stable run and the first few items in a new run. As Fig. 5 clearly shows, this is precisely the pattern seen in the low-change lists when one run of the same background color ends and another begins. Although this plot shows the same pattern of results that are consistently seen in the isolation paradigm (von Restorff, 1933), note that the item is not sandwiched between other items with different features, as is typical in the isolation paradigm. Instead, it represents a change from one context to another.

Fig. 5 Mean correct source memory in the low-change and random-change lists as a function of serial position relative to the change item in Experiment 2. Lags −3 to −1 are the last few items in a stable run of items with the same background color. The context change item at Lag 0 has a different background color and starts a new run, and the next three items within this run are at Lags 1–3. Error bars indicate ±95% confidence intervals

We can also examine this effect in the random-change lists. For these lists, the sequence of the background colors was completely random, so runs might be as short as a single item or as long as half the list. In our lists, the mean run length was 2.85 items, and run length varied from one item to a maximum of 11 items in a row with the same background color. Across all subjects, there were a total of 470 runs of four or more items. As can be seen in Fig. 5, source memory for change events that follow a stable run (M = 0.75, SEM = 0.018) is improved relative to the last few events from that run (M = 0.66, SEM = 0.021), t(68) = 4.67, p < .001, Cohen’s d = 0.51, even though the change item might or might not start a new run.

Finally, we conducted a paired-samples t test for the change events in random lists that followed a stable run versus the change events that did not. The difference was significant, t(72) = 3.88, p < .001, Cohen’s d = 0.14, with change events following a stable run remembered better (M = 0.75, SEM = 0.018) than those not following a stable run (M = 0.69, SEM = 0.017). This result further supports our theory that distinctiveness results from change relative to the current temporal context, which would be greater following a stable run of nonchange events.
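The classification of change events by their preceding history, and the lag-based summary around them, can be sketched as follows. This is an illustrative reading of the analysis rather than the authors' code: the function names are hypothetical, and the criterion of at least three preceding nonchange events (a run of four or more items) is an assumption about how a stable run was defined.

```python
def change_events_by_history(colors, min_stable=3):
    """Split change positions into those that follow a stable run and the rest.

    A change at position i counts as following a stable run when the
    min_stable items immediately before it were all nonchange events.
    """
    after_stable, other = [], []
    nonchanges = 0  # consecutive nonchange events ending at the previous item
    for i in range(1, len(colors)):
        if colors[i] != colors[i - 1]:
            (after_stable if nonchanges >= min_stable else other).append(i)
            nonchanges = 0
        else:
            nonchanges += 1
    return after_stable, other

def accuracy_by_lag(colors, correct, lags=range(-3, 4), min_stable=3):
    """Mean source accuracy at each lag relative to changes that follow a stable run."""
    after_stable, _ = change_events_by_history(colors, min_stable)
    result = {}
    for lag in lags:
        vals = [correct[i + lag] for i in after_stable if 0 <= i + lag < len(correct)]
        result[lag] = sum(vals) / len(vals) if vals else None
    return result
```

Here `colors` is the sequence of background colors for one list and `correct` is a same-length sequence of 0/1 source memory outcomes. Buffer items would still need to be excluded before averaging, as in the behavioral analyses.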

Model and simulations

To make explicit how our context-change account fares in light of the full set of Experiment 2 results, we implemented a generative process model within the TCM framework (Howard & Kahana, 2002; Sederberg et al., 2008; Polyn et al., 2009a). In TCM, items are bound to temporal context, which is a recency-weighted running average of experience. Items are represented as vectors of features, f, as is the internal representation of context, t. To model this task, we represent each word as an orthogonal unit vector, with the additional activation of one of two features representing the background color of the item presentation. While modeling this task required the addition of features for background color, our framework could flexibly include features for any aspect of experience, including task, item type (e.g., modality), semantic associations, or other experimental manipulations. Context updates with the following equation:

where ρ is the drift rate governing the rate of change due to each item presentation, r is a scalar measure of context change, t_{i−1} is the state of context prior to the item presentation, and t_i is the state of context after presentation of item f_i. The context vector is initialized with a single active orthogonal feature prior to each study list and is normalized to unit length following each item presentation. Larger values of ρ give rise to faster changes in context, such that the old context decays away quickly and is replaced with the features of the new item f_i, whereas smaller values near zero give rise to small changes in context due to each item presentation. The measure of context change is captured by the scalar r, which is calculated based on the overlap between an item and the previous state of context:

As the overlap between the new item and context goes to zero, the measure of context change goes to one. Thus, if an item overlaps with the current context (i.e., the item’s background color matches the active colors in the context vector), the context-change scalar will be small and context will drift less, whereas if the overlap between the new item and the context is small, it will cause the context to change more, up to the full value of ρ.
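As a hedged illustration, one form of the context update and the change measure that is consistent with the verbal definitions above is given here. It is an assumed reconstruction, not necessarily the exact published expressions; in particular, defining r as one minus the dot product is an assumption.

```latex
\mathbf{t}_i =
\frac{(1 - \rho r)\,\mathbf{t}_{i-1} + \rho r\,\mathbf{f}_i}
     {\left\lVert (1 - \rho r)\,\mathbf{t}_{i-1} + \rho r\,\mathbf{f}_i \right\rVert},
\qquad
r = 1 - \mathbf{f}_i \cdot \mathbf{t}_{i-1}
```

Under this form, r = 1 when the item shares no active features with context, so the effective drift is the full value of ρ, and r shrinks toward zero as the item's background color comes to dominate the context vector.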

Learning involves binding the internal context representation to the presented item via a standard Hebbian outer product stored in the matrix M, which is initialized to all zeros at the start of each list:

where α is the learning rate, T is the transpose operator, and r is the same measure of context change from above. Therefore, the less an item overlaps with the current context, the more context updates to be like that item, and the more that item is bound to the newly updated context.
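One form of the learning rule that matches this description, again offered as an assumed reconstruction rather than the exact published expression, is a Hebbian outer product between the item and the newly updated context, scaled by both the learning rate and the context-change measure:

```latex
\mathbf{M} \leftarrow \mathbf{M} + \alpha\, r\, \mathbf{f}_i\, \mathbf{t}_i^{T}
```

Because r multiplies the increment, an item that barely changes context (r near zero) is only weakly bound, whereas an item that changes context fully (r = 1) is bound with the full learning rate α.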

After the presentation of an entire list to the model, source memory is tested by probing the memory matrix with an item vector with only a word active (i.e., without the color it was paired with at encoding) to reinstate the context bound to that item:

where f_w is the word vector without the color, and t_w' is the reinstated context. This reinstated context is then used to retrieve the strengths of the color associated with the probe word:

where f_w' is the retrieved item vector, which contains both word and color feature activations, into which we index to pull out the strength of the retrieved color features, s_blue and s_green. To make the source memory decision, we put these strengths into a standard softmax decision rule, with the strength for the correct color in the numerator and the strengths for both colors in the denominator:

where τ is a temperature parameter governing how the strengths of other items affect source memory performance.

In all, the model has three parameters (ρ, α, and τ), which govern the three main processes in the model: contextual drift, learning, and retrieval, respectively. The key feature distinguishing the present model from prior work is that the contextual-drift and learning processes are modulated by the context-change scalar r, which is calculated based on the overlap between the presented item and the prevailing context when that item is presented. If a new item does not match the current context, it causes a larger contextual drift (pushing the prior context out and taking its place) and a boost in learning rate.
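The three processes can be sketched end to end in a few lines of code. This is a minimal illustration under stated assumptions, not the authors' implementation: the definition of r as one minus the dot product, the order of the two retrieval steps, the softmax that divides strengths by τ, the function name, and the default parameter values (chosen for illustration, not fitted) are all assumptions.

```python
import math

def simulate_list(colors, rho=0.8, alpha=1.0, tau=0.25):
    """Simulate one study list of 0/1 background colors.

    Returns (r_values, p_correct): the context-change measure for each
    item at study and the probability of a correct source judgment at test.
    """
    n = len(colors)
    dim = n + 3                        # n word units, 2 color units, 1 start unit
    color_idx = (n, n + 1)
    t = [0.0] * dim
    t[n + 2] = 1.0                     # context starts as one active feature
    M = [[0.0] * dim for _ in range(dim)]  # rows: item features, cols: context
    r_values = []

    for i, c in enumerate(colors):
        f = [0.0] * dim
        f[i] = 1.0                     # orthogonal word unit
        f[color_idx[c]] = 1.0          # background color unit
        overlap = sum(a * b for a, b in zip(f, t))
        r = 1.0 - overlap              # assumed form of the change measure
        drift = rho * r                # change-modulated contextual drift
        t = [(1.0 - drift) * a + drift * b for a, b in zip(t, f)]
        norm = math.sqrt(sum(a * a for a in t))
        t = [a / norm for a in t]      # renormalize to unit length
        for row in range(dim):         # Hebbian binding, scaled by alpha * r
            if f[row]:
                for col in range(dim):
                    M[row][col] += alpha * r * f[row] * t[col]
        r_values.append(r)

    p_correct = []
    for i, c in enumerate(colors):
        t_w = M[i][:]                  # M^T f_w with only word unit i active
        strengths = [
            sum(M[k][col] * t_w[col] for col in range(dim)) for k in color_idx
        ]                              # color entries of M t_w'
        exps = [math.exp(s / tau) for s in strengths]
        p_correct.append(exps[c] / sum(exps))
    return r_values, p_correct
```

Running `simulate_list([0, 0, 0, 0, 1, 1, 1, 1])` shows the qualitative behavior the account relies on: the item at the color change yields a much larger r than the last item of the preceding run, and its probability of a correct source judgment is correspondingly higher.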

To fit the model to the data from Experiment 2, we employed a Bayesian approach with differential evolution Markov chain Monte Carlo (DE-MCMC; Ter Braak, 2006; Turner, Sederberg, Brown, & Steyvers, 2013) with 50 chains fit to each participant separately. We used uninformative uniform priors for the three parameters: ρ ~ U(0, 1), α ~ U(0, 10), and τ ~ U(0, 10). For each parameter proposal, we calculated the likelihood of the data by simulating the model for each list and multiplying the probabilities of each observed response (excluding the buffer items, as in the behavioral analyses) generated via the source memory decision equation provided above. After 250 burn-in iterations, we ran 750 additional iterations in sample mode to ensure that stable parameter posteriors were obtained for each participant. Across all subjects, the mean and standard deviation of the maximum a posteriori (MAP) estimates from the final 500 iterations were ρ = 0.79 ± 0.17, α = 0.42 ± 0.53, and τ = 4.06 ± 2.38. Figure 6 illustrates the mean best-fitting model performance overlaid on the actual behavioral data. Note that our three-parameter model provides solid qualitative and quantitative fits to all measures, including the overall change versus nonchange source memory performance in each condition, as well as the lag plots conditional on color changes. Interestingly, a similar model where the trial-level measure of context change only modulated the learning rate, and not the contextual-drift rate, provided a significantly worse fit to the data as determined by pairwise comparison of Bayesian predictive information criterion (BPIC; Ando, 2007) values across participants. A Bayesian paired t test resulted in a Bayes factor of 77.5, indicating that it is more than 77 times more likely that the full model produced better (lower) BPIC values than the alternative, in which context change does not modulate the contextual-drift rate. Although this could be due to constraints from building a model within the TCM framework, which prescribes binding items to context, it is nonetheless a potentially important finding that speaks to the interaction of perceptual processing and memory formation. If the features of experience do not match the current context, we push out the current context, replace that context with the new features of experience, and bind the new item to this new context. This process could generate distinctive event boundaries that act as anchor points in our experience (Reynolds, Zacks, & Braver, 2007; Swallow, Zacks, & Abrams, 2009).
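The likelihood computation described above (multiplying the probabilities of the observed responses) is usually carried out in log space for numerical stability. The sketch below shows that step together with the uniform priors; it does not implement the DE-MCMC sampler itself, and the function names and the small floor value `eps` are assumptions introduced here.

```python
import math

def log_likelihood(p_correct, responses_correct, eps=1e-12):
    """Sum of log probabilities of the observed source judgments.

    p_correct[i] is the model's probability of a correct judgment for item i;
    responses_correct[i] is True when the participant's judgment was correct.
    """
    total = 0.0
    for p, was_correct in zip(p_correct, responses_correct):
        p_obs = p if was_correct else 1.0 - p
        total += math.log(max(p_obs, eps))  # floor avoids log(0)
    return total

def log_prior(rho, alpha, tau):
    """Unnormalized log of the uniform priors: rho in (0, 1), alpha and tau in (0, 10)."""
    inside = 0.0 < rho < 1.0 and 0.0 < alpha < 10.0 and 0.0 < tau < 10.0
    return 0.0 if inside else -math.inf
```

A sampler would evaluate `log_prior(...) + log_likelihood(...)` for each proposal, with buffer items removed from both input sequences beforehand.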

Fig. 6 Model estimates with behavioral data for Experiment 2. Red circles indicate best fits generated from the maximum a posteriori (MAP) estimates from the model for each participant. Lags −3 to −1 are the average of the sequence of items before context change items. Lag 0 represents the context change items for a particular list type. Lags 1–3 are the items immediately following the context change items. (a) Source memory performance as a function of list structure and event type for Experiment 2. (b) Mean correct source memory in the random-change lists as a function of serial position relative to the change items following runs of at least three nonchange items. (c) Mean correct source memory for change items following runs of at least three nonchange items in the low-change lists as a function of serial position relative to the change items. (d) Mean correct source memory in the high-change lists as a function of serial position relative to the change item. (Color figure online)

General discussion

This study explored what makes items distinctive and, consequently, better remembered. We employed a balanced-features design in combination with a source memory test to isolate encoding-related memory effects. The primary finding, replicated across two experiments, was a boost in source memory performance for items presented with a feature change relative to a stable prevailing context (i.e., items that cause a context change are distinctive). Importantly, in the high-change lists with frequently occurring changes, we did not observe a memory boost for the rare nonchange events. We argue that this finding provides evidence that conflicts with a strict prediction-error account of item-level distinctiveness. Such a strict prediction-error account posits that memory should be improved for these poorly predicted nonchange events because they violate the prediction of change (e.g., Marvin & Shohamy, 2016; den Ouden, Kok, & De Lange, 2012; Frank, Woroch, & Curran, 2005). In fact, while not significant, we observed a trend of better source memory for change events for the high-change condition, which is in the opposite direction of an effect that would be generated by a prediction violation process. One limitation of our study is that we do not have additional empirical evidence to demonstrate that participants were predicting an alternating pattern of color changes in the high-change lists; thus, we lack explicit proof that nonchange events would elicit a prediction error. Nevertheless, we interpret our combined results to support the hypothesis that distinctiveness is due to relative context change in the features of experience and not merely due to errors in predicting those features. In support of our theory, we provided an explicit computational model that was able to capture the full pattern of results with only three parameters.

We do not dispute that distinctiveness can arise from changes in a global list structure, as has been long established by Green (1958a). However, we extend the explanation of distinctiveness by showing that the effect is not driven simply by deviations in global list structure, but by deviations from the item-level sequence of the past few events leading up to a context change. Context-change-based modulation of subsequent memory has been observed in other paradigms, as well. In a task with a similar study structure to our low-change condition, with sequences of objects encoded on colored backgrounds that change after a random run of the same color, Davachi and colleagues have demonstrated modulations in temporal order memory, judgments of recency, and memory for when an item occurred (DuBrow & Davachi, 2013; Heusser, Poeppel, Ezzyat, & Davachi, 2016; Sols, DuBrow, Davachi, & Fuentemilla, 2017). Other studies have demonstrated item-specific memory improvements. For example, Polyn, Norman, and Kahana (2009b) found a transient memory boost (in this case, a midlist primacy effect in free recall) when the encoding task changed from a pleasantness judgment to a size judgment during the study list. Similarly, Davelaar (2013) showed that primacy and midlist primacy effects in free recall can be captured by a model of distinctiveness based on a novelty signal that increases context change before binding novel items to that context representation. Although this model does not provide a mechanism for generating a novelty signal, a follow-up study added a novelty-detection mechanism based on prediction error, providing simulations of the von Restorff effect (Elhalal et al., 2014). Our proposed model shares some similarities with those of Davelaar and colleagues with regard to the enhanced context update and binding processes; however, we differ on whether the modulation of these processes that gives rise to distinctiveness arises from prediction error or context change.

Thus, our work extends previous experimental findings into the source memory domain and provides a context-change, as opposed to prediction-error, account of distinctiveness. To our knowledge, the change-based distinctiveness effect observed in our study cannot be captured by any of the canonical context-based memory models on which our model is based (e.g., Anderson & Bower, 1972; Mensink & Raaijmakers, 1989; Howard & Kahana, 2002; Sederberg et al., 2008; Polyn et al., 2009a) without some additional mechanism to modulate learning rate based on context change. However, item-level modulation of learning rate does have precedent in other domains. For example, Nassar et al. (2012) have demonstrated how a participant’s learning rate can transiently increase when a new input induces a significant update in their underlying beliefs about the likelihood that a change just occurred (in this case, that a target number they would have to predict would now be drawn from a new distribution; see also Nassar, Wilson, Heasly, & Gold, 2010; McGuire, Nassar, Gold, & Kable, 2014). Although this change in belief state is driven by prediction error in their model, the subsequent change in learning rate could provide the mechanism underlying the change-based memory boost observed in our data. This situation is demonstrated nicely in our low-change lists, in which relatively consistent features and the low likelihood of a change point render the actual change points more salient and distinctive. Conversely, multiple change points in a sequence of events reflect instability, and will generate greater relative uncertainty about the underlying structure of the environment. Greater uncertainty in the context of frequent change points drives down overall learning rate during those sequences (Nassar et al., 2010). This reduction in learning rate could provide an alternate explanation for the overall lower performance and lack of distinctiveness effect in our high-change lists.

One question that remains is what mechanism underlies the relative increase in learning rate for items inducing a large context change. One strong candidate is an attention-based mechanism. For example, the effect we observed is very similar to the attentional boost effect (ABE) shown by Swallow and Jiang (2010, 2013). Using scene stimuli embedded with rare target response cues (white squares) to which participants were instructed to respond, and more frequent distractor cues (black squares) to which participants were instructed not to respond, Swallow and Jiang found that scenes paired with target cues were better recognized later relative to scenes paired with the common distractor cues. This main ABE has been demonstrated to arise for perceptually and semantically isolated stimuli (Smith & Mulligan, 2018), though it was not found to transfer to irrelevant background features of the experience, such as the font or color of words (Mulligan, Smith, & Spataro, 2015). The overlap with these studies suggests that the item presentations inducing a large relative context change engage attention, thereby increasing item processing and subsequent memory.

Another question is whether the observed pattern of results would be different if our participants had been explicitly instructed to form an association between each word and its background color, as in a deeper levels-of-processing or transfer-appropriate-processing task (Craik & Lockhart, 1972; Morris et al., 1977). It is possible that a levels-of-processing task manipulation could affect the encoding or context update processes, and, thus, modulate the magnitude of the distinctiveness effects. Although an interaction between different encoding tasks and the distinctiveness effect would certainly be interesting, given that we employed a source memory task, we believe there would be little effect of providing an alternative encoding instruction because participants already know that they will be tested on the association between the color and the word. However, it remains critical that we still understand what features of experience are salient enough to cause a context-based distinctiveness effect.

Importantly, we were able to uncover an item-level distinctiveness effect based on memory for changing contextual features without the need to embed unique response targets into the stimuli and without explicit encoding instructions other than to remember the stimuli presented for a subsequent memory test. This memory boost is accomplished in our model by a combination of a transient increase in contextual drift rate, which serves to push out the previous context and replace it with the new item information, and a corresponding increase in item-to-context binding.

Conclusion

The purpose of this study was to identify cognitive processes that contribute to what makes an experience distinctive and, hence, better remembered. Our results provide new insight into how distinctiveness is driven by feature-level context change relative to recent experience, as opposed to a prediction-error-based mechanism. Future work will include a formal comparison of computational theories of the distinctiveness effects driven by context change presented in this study, as well as exploring their psychophysiological and neural substrates.

References

Anderson, J. R., & Bower, G. H. (1972). Recognition and retrieval processes in free recall. Psychological Review, 79(2), 97–123. https://doi.org/10.1037/h0033773

Anderson, M. C., & Neely, J. H. (1996). Interference and inhibition in memory retrieval. In E. L. Bjork & R. A. Bjork (Eds.), Memory (pp. 237–313). San Diego, CA: Academic Press. Retrieved from https://www.sciencedirect.com/science/article/pii/B9780121025700500100

Ando, T. (2007). Bayesian predictive information criterion for the evaluation of hierarchical Bayesian and empirical Bayes models. Biometrika, 94(2), 443–458. https://doi.org/10.1093/biomet/asm017

Bar, M. (2007). The proactive brain: Using analogies and associations to generate predictions. Trends in Cognitive Sciences, 11, 280–289. https://doi.org/10.1016/j.tics.2007.05.005

Brown, G. D. A., Neath, I., & Chater, N. (2007). A temporal ratio model of memory. Psychological Review, 114(3), 539–576. https://doi.org/10.1037/0033-295X.114.3.539

Bubic, A., von Cramon, D. Y., & Schubotz, R. I. (2010). Prediction, cognition and the brain. Frontiers in Human Neuroscience, 4. https://doi.org/10.3389/fnhum.2010.00025

Capaldi, E. J., & Neath, I. (1995). Remembering and forgetting as context discrimination. Learning & Memory, 2(3/4), 107–132.

Craik, F. I. M., & Lockhart, R. S. (1972). Levels of processing: A framework for memory research. Journal of Verbal Learning and Verbal Behavior, 11(6), 671–684. https://doi.org/10.1016/S0022-5371(72)80001-X

Davelaar, E. J. (2013). A novelty-induced change in episodic (NICE) context account of primacy effects in free recall. Psychology, 4(9), 695–703.

den Ouden, H. E., Kok, P., & de Lange, F. P. (2012). How prediction errors shape perception, attention, and motivation. Frontiers in Psychology, 3. https://doi.org/10.3389/fpsyg.2012.00548

Deutsch, M. R., & Sternlicht, M. (1967). The role of “surprise” in the von Restorff effect. The Journal of General Psychology, 76(2), 151–159. https://doi.org/10.1080/00221309.1967.9710384

Donchin, E. (1981). Surprise!… Surprise? Psychophysiology, 18(5), 493–513. https://doi.org/10.1111/j.1469-8986.1981.tb01815.x

DuBrow, S., & Davachi, L. (2013). The influence of context boundaries on memory for the sequential order of events. Journal of Experimental Psychology: General, 142(4), 1277–1286. https://doi.org/10.1037/a0034024

Elhalal, A., Davelaar, E. J., & Usher, M. (2014). The role of the frontal cortex in memory: An investigation of the von Restorff effect. Frontiers in Human Neuroscience, 8, 410. https://doi.org/10.3389/fnhum.2014.00410

Erickson, R. L. (1963). Relational isolation as a means of producing the von Restorff effect in paired-associate learning. Journal of Experimental Psychology, 66(2), 111–119. https://doi.org/10.1037/h0039791

Fabiani, M., & Donchin, E. (1995). Encoding processes and memory organization: A model of the von Restorff effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(1), 224–240.

Frank, M. J., Woroch, B. S., & Curran, T. (2005). Error-related negativity predicts reinforcement learning and conflict biases. Neuron, 47(4), 495–501. https://doi.org/10.1016/j.neuron.2005.06.020

Friston, K. (2012). Prediction, perception and agency. International Journal of Psychophysiology, 83(2), 248–252. https://doi.org/10.1016/j.ijpsycho.2011.11.014

Friston, K., & Kiebel, S. (2009). Predictive coding under the free-energy principle. Philosophical Transactions of the Royal Society, B: Biological Sciences, 364(1521), 1211–1221. https://doi.org/10.1098/rstb.2008.0300

Gati, I., & Ben-Shakhar, G. (1990). Novelty and significance in orientation and habituation: A feature-matching approach. Journal of Experimental Psychology: General, 119(3), 251–263.

Geller, A. S., Schleifer, I. K., Sederberg, P. B., Jacobs, J., & Kahana, M. J. (2007). PyEPL: A cross-platform experiment-programming library. Behavior Research Methods, 39(4), 950–958. https://doi.org/10.3758/BF03192990

Geraci, L., & Manzano, I. (2010). Distinctive items are salient during encoding: Delayed judgements of learning predict the isolation effect. The Quarterly Journal of Experimental Psychology, 63(1), 50–64. https://doi.org/10.1080/17470210902790161

Geraci, L., & Rajaram, S. (2004). The distinctiveness effect in the absence of conscious recollection: Evidence from conceptual priming. Journal of Memory and Language, 51(2), 217–230.

Green, R. T. (1958a). Surprise, isolation, and structural change as factors affecting recall of a temporal series. British Journal of Psychology, 49(1), 21–30. https://doi.org/10.1111/j.2044-8295.1958.tb00633.x

Green, R. T. (1958b). The attention-getting value of structural change. British Journal of Psychology, 49(4), 311–314.

Heusser, A. C., Poeppel, D., Ezzyat, Y., & Davachi, L. (2016). Episodic sequence memory is supported by a theta-gamma phase code. Nature Neuroscience, 19(10), 1374–1380. https://doi.org/10.1038/nn.4374

Hintzman, D. L. (1984). MINERVA 2: A simulation model of human memory. Behavior Research Methods, Instruments, & Computers, 16(2), 96–101. https://doi.org/10.3758/BF03202365

Hirshman, E., Whelley, M. M., & Palij, M. (1989). An investigation of paradoxical memory effects. Journal of Memory and Language, 28(5), 594–609. https://doi.org/10.1016/0749-596X(89)90015-6

Howard, M. W., & Kahana, M. J. (2002). A distributed representation of temporal context. Journal of Mathematical Psychology, 46(3), 269–299. https://doi.org/10.1006/jmps.2001.1388

Hunt, R. R. (1995). The subtlety of distinctiveness: What von Restorff really did. Psychonomic Bulletin & Review, 2(1), 105–112. https://doi.org/10.3758/BF03214414

Hunt, R. R. (2006). The concept of distinctiveness in memory research. In R. R. Hunt & J. B. Worthen (Eds.), Distinctiveness and memory (pp. 2–25). Oxford, UK: Oxford University Press.

Hunt, R. R., & McDaniel, M. A. (1993). The enigma of organization and distinctiveness. Journal of Memory and Language, 32(4), 421–445. https://doi.org/10.1006/jmla.1993.1023

Hunt, R. R., & Mitchell, D. (1978). Specificity in nonsense orienting tasks and distinctive memory traces. Journal of Experimental Psychology: Human Learning and Memory, 4(2), 121–135. https://doi.org/10.1037/0278-7393.4.2.121

Hunt, R. R., & Smith, R. E. (1996). Accessing the particular from the general: The power of distinctiveness in the context of organization. Memory & Cognition, 24(2), 217–225.

Icht, M., Mama, Y., & Algom, D. (2014). The production effect in memory: Multiple species of distinctiveness. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.00886

Jamieson, R. K., Mewhort, D. J. K., & Hockley, W. E. (2016). A computational account of the production effect: Still playing twenty questions with nature. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, 70(2), 154–164. https://doi.org/10.1037/cep0000081

Johnson, M. K., Hashtroudi, S., & Lindsay, D. S. (1993). Source monitoring. Psychological Bulletin, 114(1), 3–28. https://doi.org/10.1037/0033-2909.114.1.3

Karis, D., Fabiani, M., & Donchin, E. (1984). “P300” and memory: Individual differences in the von Restorff effect. Cognitive Psychology, 16(2), 177–216. https://doi.org/10.1016/0010-0285(84)90007-0

Loftus, G. R., & Masson, M. E. J. (1994). Using confidence intervals in within-subjects designs. Psychonomic Bulletin & Review, 1(4), 476–490. https://doi.org/10.3758/BF03210951

MacLeod, C. M., Gopie, N., Hourihan, K. L., Neary, K. R., & Ozubko, J. D. (2010). The production effect: Delineation of a phenomenon. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 671–685. https://doi.org/10.1037/a0018785

Marvin, C. B., & Shohamy, D. (2016). Curiosity and reward: Valence predicts choice and information prediction errors enhance learning. Journal of Experimental Psychology: General, 145(3), 266–272. https://doi.org/10.1037/xge0000140

McGuire, J. T., Nassar, M. R., Gold, J. I., & Kable, J. W. (2014). Functionally dissociable influences on learning rate in a dynamic environment. Neuron, 84(4), 870–881. https://doi.org/10.1016/j.neuron.2014.10.013

Mensink, G.-J. M., & Raaijmakers, J. G. W. (1989). A model for contextual fluctuation. Journal of Mathematical Psychology, 33(2), 172–186. https://doi.org/10.1016/0022-2496(89)90029-1

Metcalfe, J. (1990). Composite holographic associative recall model (CHARM) and blended memories in eyewitness testimony. Journal of Experimental Psychology: General, 119(2), 145–160.

Morris, C. D., Bransford, J. D., & Franks, J. J. (1977). Levels of processing versus transfer appropriate processing. Journal of Verbal Learning and Verbal Behavior, 16(5), 519–533. https://doi.org/10.1016/S0022-5371(77)80016-9

Mulligan, N. W., Smith, S. A., & Spataro, P. (2015). The attentional boost effect and context memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. https://doi.org/10.1037/xlm0000183

Mumford, D. (1992). On the computational architecture of the neocortex. Biological Cybernetics, 66(3), 241–251. https://doi.org/10.1007/BF00198477

Nassar, M. R., Rumsey, K. M., Wilson, R. C., Parikh, K., Heasly, B., & Gold, J. I. (2012). Rational regulation of learning dynamics by pupil-linked arousal systems. Nature Neuroscience, 15(7), 1040–1046. https://doi.org/10.1038/nn.3130

Nassar, M. R., Wilson, R. C., Heasly, B., & Gold, J. I. (2010). An approximately Bayesian delta-rule model explains the dynamics of belief updating in a changing environment. The Journal of Neuroscience, 30(37), 12366–12378. https://doi.org/10.1523/JNEUROSCI.0822-10.2010

Nelson, D. L., McEvoy, C. L., & Schreiber, T. A. (2004). The University of South Florida Free Association, Rhyme, and Word Fragment Norms. Behavior Research Methods, Instruments, & Computers, 36(3), 402–407. https://doi.org/10.3758/BF03195588

Oker, A., & Versace, R. (2010). Distinctiveness effect due to contextual information in a categorization task. Current Psychology Letters. Behaviour, Brain & Cognition, 26(1). Retrieved from http://cpl.revues.org/4975

Oker, A., & Versace, R. (2014). Non-abstractive global-matching models: A framework for investigating the distinctiveness effect on explicit and implicit memory. Psychologie Française, 59(3), 231–246. https://doi.org/10.1016/j.psfr.2014.04.001

Park, H., Arndt, J., & Reder, L. M. (2006). A contextual interference account of distinctiveness effects in recognition. Memory & Cognition, 34(4), 743–751.

Polyn, S. M., Norman, K. A., & Kahana, M. J. (2009a). A context maintenance and retrieval model of organizational processes in free recall. Psychological Review, 116, 129–156.

Polyn, S. M., Norman, K. A., & Kahana, M. J. (2009b). Task context and organization in free recall. Neuropsychologia, 47(11), 2158–2163. https://doi.org/10.1016/j.neuropsychologia.2009.02.013

Rangel-Gomez, M., & Meeter, M. (2013). Electrophysiological analysis of the role of novelty in the von Restorff effect. Brain and Behavior, 3(2), 159–170. https://doi.org/10.1002/brb3.112

Rao, R. P. N., & Ballard, D. H. (1999). Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience, 2(1), 79–87. https://doi.org/10.1038/4580

Reynolds, J. R., Zacks, J. M., & Braver, T. S. (2007). A computational model of event segmentation from perceptual prediction. Cognitive Science, 31(4), 613–643. https://doi.org/10.1080/15326900701399913

Saltzman, I. J., & Carterette, T. S. (1959). Incidental and intentional learning of isolated and crowded items. The American Journal of Psychology, 72, 230–235. https://doi.org/10.2307/1419367

Schmidt, S. R. (1985). Encoding and retrieval processes in the memory for conceptually distinctive events. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11(3), 565–578.

Schmidt, S. R. (1991). Can we have a distinctive theory of memory? Memory & Cognition, 19(6), 523–542. https://doi.org/10.3758/BF03197149

Schmidt, S. R. (2008). Distinctiveness and memory: A theoretical and empirical review. In J. H. Byrne (Ed.), Learning and memory: A comprehensive reference (pp. 125–144). Oxford, UK: Academic Press. Retrieved from http://www.sciencedirect.com/science/article/pii/B9780123705099001431

Schmidt, S. R., & Saari, B. (2007). The emotional memory effect: Differential processing or item distinctiveness? Memory & Cognition, 35(8), 1905–1916. https://doi.org/10.3758/BF03192924

Schubotz, R. I. (2007). Prediction of external events with our motor system: Towards a new framework. Trends in Cognitive Sciences, 11, 211–218. https://doi.org/10.1016/j.tics.2007.02.006

Sederberg, P. B., Howard, M. W., & Kahana, M. J. (2008). A context-based theory of recency and contiguity in free recall. Psychological Review, 115(4), 893–912. https://doi.org/10.1037/a0013396

Siegel, P. S. (1943). Structure effects within a memory series. Journal of Experimental Psychology, 33(4), 311.

Smith, A. S., & Mulligan, N. W. (2018). Distinctiveness and the attentional boost effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 44(9), 1464–1473. https://doi.org/10.1037/xlm0000531

Smith, R. E., & Hunt, R. R. (2000). The influence of distinctive processing on retrieval-induced forgetting. Memory & Cognition, 28(4), 503–508. https://doi.org/10.3758/BF03201240

Sols, I., DuBrow, S., Davachi, L., & Fuentemilla, L. (2017). Event boundaries trigger rapid memory reinstatement of the prior events to promote their representation in long-term memory. Current Biology, 27(22), 3499–3504. https://doi.org/10.1016/j.cub.2017.09.057

Swallow, K. M., & Jiang, Y. V. (2010). The attentional boost effect: Transient increases in attention to one task enhance performance in a second task. Cognition, 115(1), 118–132. https://doi.org/10.1016/j.cognition.2009.12.003

Swallow, K. M., & Jiang, Y. V. (2013). Attentional load and attentional boost: A review of data and theory. Frontiers in Psychology, 4. https://doi.org/10.3389/fpsyg.2013.00274

Swallow, K. M., Zacks, J. M., & Abrams, R. A. (2009). Event boundaries in perception affect memory encoding and updating. Journal of Experimental Psychology: General, 138(2), 236. https://doi.org/10.1037/a0015631

Swartz, P., Pronko, N. H., & Engstrand, R. D. (1958). An extension of Green’s inquiry into surprise as a factor in the von Restorff effect. Psychological Reports, 4, 431–432. https://doi.org/10.2466/pr0.1958.4.h.431

Ter Braak, C. J. F. (2006). A Markov chain Monte Carlo version of the genetic algorithm differential evolution: Easy Bayesian computing for real parameter spaces. Statistics and Computing, 16(3), 239–249. https://doi.org/10.1007/s11222-006-8769-1

Turner, B. M., Sederberg, P. B., Brown, S. D., & Steyvers, M. (2013). A method for efficiently sampling from distributions with correlated dimensions. Psychological Methods, 18(3), 368–384. https://doi.org/10.1037/a0032222

von Restorff, H. (1933). Über die Wirkung von Bereichsbildungen im Spurenfeld. Psychologische Forschung, 18(1), 299–342. https://doi.org/10.1007/BF02409636

Wallace, W. P. (1965). Review of the historical, empirical, and theoretical status of the von Restorff phenomenon. Psychological Bulletin, 63(6), 410–424. https://doi.org/10.1037/h0022001

Cite this article

Siefke, B.M., Smith, T.A. & Sederberg, P.B. A context-change account of temporal distinctiveness. Mem Cogn 47, 1158–1172 (2019). https://doi.org/10.3758/s13421-019-00925-5

DOI: https://doi.org/10.3758/s13421-019-00925-5