Abstract

A core assumption underlying competitive-network models of word recognition is that in order for a word to be recognized, the representations of competing orthographically similar words must be inhibited. This inhibitory mechanism is revealed in the masked-priming lexical-decision task (LDT) when responses to orthographically similar word prime–target pairs are slower than responses to orthographically different word prime–target pairs (i.e., inhibitory priming). In English, however, behavioral evidence for inhibitory priming has been mixed. In the present study, we used pupil size, a physiological correlate of cognitive effort never before used in the masked-priming LDT, to replicate and extend behavioral demonstrations of inhibitory effects (i.e., Nakayama, Sears, & Lupker, Journal of Experimental Psychology: Human Perception and Performance, 34, 1236–1260, 2008, Exp. 1). Previous research has suggested that pupil size is a reliable indicator of cognitive load, making it a promising index of lexical inhibition. Our pupillometric data replicated and extended previous behavioral findings, in that inhibition was obtained for orthographically similar word prime–target pairs. However, our response time data provided only a partial replication of Nakayama et al. (2008). These results provide converging lines of evidence that inhibition operates in word recognition and that pupillometry is a useful addition to word recognition researchers’ toolbox.


A viable model of visual word recognition should specify not only what internal representations subserve lexical access, but also how these representations interact with one another. According to competitive-network models of visual word recognition (e.g., the dual-route cascaded model [Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001]; the self-organising lexical acquisition and recognition model [Davis, 1999]; the spatial-coding model [Davis, 2010]; the multiple-read-out model [Grainger & Jacobs, 1996]; and the interactive-activation model [McClelland & Rumelhart, 1981]), similar orthographic representations modulate word recognition through a complex process of facilitative and inhibitory interactions (Davis, 2003; Lupker & Davis, 2009). Orthographic similarity has traditionally been operationalized as words that differ by one letter while preserving total length and letter positions, that is, substitution neighbors (Coltheart et al., 1977). For example, presentation of the word blur activates the feature, letter, and lexical representations consistent with blur, while also partially activating lexical representations of orthographically similar words, such as blue. Activated lexical representations are typically thought to compete as a function of frequency via lateral inhibition, with higher-frequency words acting as stronger competitors because they exert stronger inhibition on their competitors. The word representation with the most activation “beats down” its orthographically similar neighbors, so that the winner’s activation reaches the recognition threshold and the word is recognized.

A paradigm widely used to examine this putative inhibitory mechanism is the masked-priming lexical-decision task (LDT; see Grainger, 2008, for a review). In this task, a briefly presented (~60-ms) prime in lowercase letters is preceded by a premask (####) and is followed by a word or nonword target in uppercase letters. The target generally remains on the screen until participants make a speeded word–nonword decision. Because the prime appears for such a short duration and is forward- and backward-masked, the masked-priming procedure purportedly taps nonstrategic/automatic lexical processing (but see Bodner & Masson, 1997, for an alternative account).

Within the framework of competitive-network models, performance in the masked-priming LDT is the result of facilitatory and inhibitory interactions (Lupker & Davis, 2009). The logic is that presentation of the prime leads to the activation of the prime itself, if it has a lexical entry, as well as of lexical representations that are orthographically similar to the prime. This preactivation influences response times (RTs) to the target. If the target is orthographically similar to the prime, its representation is preactivated, allowing the target a head start toward selection. If there are no strong competitors, the preactivated target will reach the recognition threshold faster than it would have otherwise. By contrast, if one or more items create strong competition for the target, the target’s representation is inhibited; in this situation, the target must overcome the inhibition in addition to attaining the activation level needed to meet the recognition threshold. In this way, the presence of competitors can delay target recognition, resulting in inhibitory priming (e.g., Lupker & Davis, 2009). Although word primes are more likely to produce strong competitors than nonword primes (in part because nonwords do not have their own lexical entries), inhibitory priming is not guaranteed with word primes.
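The competitive dynamics described above can be illustrated with a toy two-unit simulation, loosely in the spirit of interactive-activation models. This is our own sketch, not any published model: the update rule, the parameter values in the hypothetical `Params` class, the use of resting levels as a stand-in for word frequency, and the assumption that an unrelated prime leaves both units at rest are all illustrative choices, and "cycles" are arbitrary time steps rather than milliseconds. The sketch makes two properties concrete. With lateral inhibition switched off, a neighbor prime can only give the target a head start; with inhibition on, a more active (higher-frequency) competitor can only delay the target relative to a less active one. Whether the net result is facilitation or inhibition depends on the balance of these forces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Params:
    """Arbitrary illustrative settings (hypothetical, not fitted to any data)."""
    rate: float = 0.1         # step size per processing cycle
    decay: float = 1.0        # pull of each unit back toward its resting level
    inhibition: float = 0.3   # lateral inhibition between the two word units
    threshold: float = 0.5    # target counts as recognized at this activation
    prime_cycles: int = 4     # brief (masked) prime duration, in cycles
    max_cycles: int = 1000    # guard against a target that never wins
    floor: float = -0.2
    ceiling: float = 1.0


def step(a_t, a_n, in_t, in_n, rest_t, rest_n, p):
    """One synchronous update of the target (t) and neighbor (n) word units."""
    # Each unit receives bottom-up input, inhibition from the other unit's
    # positive activation, and decay toward its frequency-based resting level.
    net_t = in_t - p.inhibition * max(a_n, 0.0) - p.decay * (a_t - rest_t)
    net_n = in_n - p.inhibition * max(a_t, 0.0) - p.decay * (a_n - rest_n)
    a_t = min(p.ceiling, max(p.floor, a_t + p.rate * net_t))
    a_n = min(p.ceiling, max(p.floor, a_n + p.rate * net_n))
    return a_t, a_n


def cycles_to_recognize(neighbor_prime, rest_t=-0.1, rest_n=0.0, overlap=0.75, p=None):
    """Cycles after target onset until the target unit reaches threshold.

    neighbor_prime=True: the prime is the target's substitution neighbor, so it
    drives the neighbor unit fully and the target unit by `overlap` (3 of 4
    letters shared). neighbor_prime=False: an unrelated prime, assumed here to
    leave both units at rest. A higher resting level stands in for higher frequency.
    """
    p = p or Params()
    a_t, a_n = rest_t, rest_n
    in_t, in_n = (overlap, 1.0) if neighbor_prime else (0.0, 0.0)
    for _ in range(p.prime_cycles):
        a_t, a_n = step(a_t, a_n, in_t, in_n, rest_t, rest_n, p)
    # Target phase: the target fully drives its own unit and partially its neighbor.
    for cycle in range(1, p.max_cycles + 1):
        a_t, a_n = step(a_t, a_n, 1.0, overlap, rest_t, rest_n, p)
        if a_t >= p.threshold:
            return cycle
    raise RuntimeError("target never reached threshold")


if __name__ == "__main__":
    for label, rest_n in (("higher-frequency neighbor", 0.0), ("lower-frequency neighbor", -0.1)):
        related = cycles_to_recognize(True, rest_n=rest_n)
        unrelated = cycles_to_recognize(False, rest_n=rest_n)
        print(f"{label}: neighbor prime {related} cycles, unrelated prime {unrelated} cycles")
```

Running the script prints the number of cycles the target needs under the default settings; varying `inhibition`, `overlap`, or the resting levels shows how the balance between head start and competition shifts.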

The behavioral evidence of inhibitory priming has been mixed. In English, the first demonstrations of inhibitory priming with substitution neighbors were provided by Forster, Davis, Schoknecht, and Carter (1987, Exp. 5) and Davis and Lupker (2006); however, inhibitory priming has been difficult to replicate, calling into question the veracity of an inhibitory mechanism operating in visual word recognition. For instance, Forster (1987) and Forster and Veres (1998), using seven- to eight-letter words, found either facilitation or null effects for similar word primes rather than the expected inhibitory effect (see Footnote 1). Furthermore, Zimmerman and Gomez (2012, Exp. 1), using Davis and Lupker’s (2006) stimuli, failed to obtain inhibitory priming in the standard masked-priming paradigm; the expected effect emerged only when long (64-ms) and short (48-ms) primes were intermixed within the same block, suggesting that attention may moderate inhibitory priming in this paradigm. Nevertheless, other investigators have obtained inhibitory priming with different English-language stimulus sets, provided that the stimuli are short (four to five letters) and have dense neighborhoods (e.g., Morris & Still, 2012; Nakayama, Sears, & Lupker, 2008). In sum, inhibitory priming is not always found when it is expected. Although competitive-network models can accommodate both the presence and the absence of behavioral inhibitory priming, it seems that inhibitory priming should be reliably obtained when strong competitors are used. Therefore, given the key role that lexical inhibition is assumed to play in word recognition and in competitive-network models, researchers should continue working to resolve these disparate findings. One avenue for further investigation is to use an alternative dependent measure.

Pupillometry

Most studies to date have assessed lexical competition in the masked-priming LDT using response latencies, which provide a summative index of processing from perception until the behavioral response (cf. Massol, Grainger, Dufau, & Holcomb, 2010). Given the somewhat elusive nature of inhibitory priming when this standard behavioral measure is used, the present study examines a physiological measure not yet used in the masked-priming paradigm: pupillometry (i.e., the measurement of pupil diameter). Experimental psychologists have used pupillometry as an indirect measure of psychological processing for over half a century, and there has recently been a resurgence of interest in its use (see Laeng, Sirois, & Gredebäck, 2012, for a review). Although the pupillary response is most commonly modulated by low-level processes (e.g., the pupillary light reflex and accommodation), it is also affected by phasic, task-specific cognitive processing (e.g., cognitive effort; Beatty & Lucero-Wagoner, 2000; Goldwater, 1972). The pupillary response evoked by cognitive effort has been used to examine many higher-level mental processes, including word recognition (e.g., Kuchinke, Võ, Hofmann, & Jacobs, 2007; Papesh & Goldinger, 2012).

The increases in pupil size as a function of cognitive effort are generally no more than 0.5 mm, with the pupillary response occurring in as little as 200 ms following stimulus onset and terminating when processing is completed—the pupillary response typically reaches its peak between 500 and 1,000 ms after a behavioral response (Beatty & Lucero-Wagoner, 2000). This small, fast, and involuntary increase in pupil diameter due to phasic (effortful) processing is called the task-evoked pupillary response (TEPR; Beatty, 1982). In the domain of word recognition, one factor thought to modulate the ease of lexical access is word frequency. In behavioral tasks, high-frequency words engender shorter latencies (and fewer errors) than low-frequency words—called the word frequency effect (see Monsell, 1991, for a review). The typical explanation for this phenomenon is that high-frequency words are more entrenched in memory (i.e., have more robust mental representations), and thus are easier to process than low-frequency words. Bolstering this claim, Kuchinke et al. (2007) examined the TEPRs of high- and low-frequency words varying in valence, in a single-presentation LDT. A pupillary word frequency effect was obtained whereby low-frequency words engendered larger peak pupil dilations than did high-frequency words. Similarly, Papesh and Goldinger (2012) found that low-frequency words produced larger TEPRs than high-frequency words in a modified delayed-naming task. The aforementioned studies thus provide support for the utility of pupillometry in examining the cognitive effort involved in word recognition.

Given the productivity of traditional behavioral measures such as RTs and error rates, and the growing body of event-related potential (ERP) and fMRI studies, one might wonder what pupillometric data could add to word recognition research. Generally speaking, we see pupillometry as a relatively low-cost physiological measure that can be obtained from most commercial eyetrackers, and it has some advantages over RTs and error rates. One advantage is that data can be collected continuously, allowing for a more fine-grained temporal analysis of the effects occurring throughout a task (see Duñabeitia & Costa, 2014; Papesh & Goldinger, 2012). Furthermore, collecting pupillometric data does not require overt responses. This is especially useful when overt responses cannot be obtained, for example, from preverbal infants (e.g., Hochmann & Papeo, 2014) or from neurological patients with language impairments (e.g., Laeng et al., 2012). It is also useful when overt responses could contaminate another source of data (e.g., ERP and skin-conductance responses). In these respects, pupil size may provide a more sensitive index of the underlying psychological mechanisms.

Papesh and Goldinger (2012) have provided a good example of how pupillometric data can be informative beyond RTs and error rates. They used a modified delayed-naming task in which, after a target word was presented, the participant had to wait 250 to 2,000 ms until a tone was played before making a response. The tone dictated what response the participant should give. On 80 % of the trials, participants simply named the target; the other 20 % were catch trials, on which participants were signaled to say blah instead of naming the target word. The purpose of the study was to examine the effects of word frequency on speech planning. RTs, error rates, and pupillary data were recorded. On standard naming trials, a frequency effect was observed in both the RTs and the pupil data (larger TEPRs for low-frequency than for high-frequency words). By contrast, on catch trials, frequency effects were observed only in the pupillary data. Because an RT frequency effect on catch trials had been found before (e.g., Goldinger, Azuma, Abramson, & Jain, 1997), Papesh and Goldinger’s RT results might have been interpreted as a conceptual failure to replicate. However, the presence of a pupillary frequency effect on those catch trials suggests that, in Papesh and Goldinger’s experiment, the RT data lacked sensitivity or were obscured in some way by the delayed-naming task. The pupil data therefore provided a secondary measure for examining processing during the catch trials. For the present purposes, however, the advantages of collecting pupillary data go beyond increased sensitivity to frequency effects: Pupillometry can measure processes that RTs cannot. Specifically, pupil data can be collected before, during, and after a response has been made.
By design, the RT is recorded at the moment the response occurs and is, therefore, seldom able to capture effects that occur after the response (see Footnote 2). In their experiment, Papesh and Goldinger found that pupillary frequency effects continued after task completion; these data suggest that low-frequency words demand cognitive resources for an extended period of time (Papesh & Goldinger, 2012, p. 762).

As Papesh and Goldinger (2012) illustrate, TEPRs can provide data that RTs cannot. For the purpose of the present experiment, TEPRs have another advantage, in that they seem to be a more selective measure of later-stage processing. For example, Kuchinke et al. (2007) used an LDT to examine the effects of frequency and emotional valence on word recognition. The RT data revealed a frequency effect as well as an effect of emotional valence, with faster responses to positive and negative than to neutral words. These main effects were qualified by an interaction whereby valence effects were larger for low-frequency words. The pupillary data also revealed a frequency effect, but no statistically significant effect of valence and no interaction. Kuchinke et al. posited that this dissociation arose because valence affects a very early stage of processing to which the pupil is not sensitive. RTs, at least in the LDT, index both early and late processes (e.g., Balota & Chumbley, 1984; Balota & Spieler, 1999). The pupillary response, on the other hand, may be sensitive to a decisional stage associated with difficulty in lexical selection.

To date, the use of pupillometry in word recognition research has been sparse. Nevertheless, pupillometry appears to be a promising tool for word recognition researchers, particularly those interested in inhibitory processes. Evidence from a variety of experiments suggests that inhibition results from processing difficulty at the lexical stage. For example, using the standard masked-priming task, Zimmerman and Gomez (2012, Exp. 1) examined the distribution of RTs for similar prime–target pairs and observed that the magnitude of the inhibitory effect was greatest at later quantiles. Because the inhibitory effect was concentrated at later quantiles, a decisional component is strongly implicated in the effect. Similarly, examination of participants’ eye movements during normal reading has revealed that inhibitory processes might reflect processing difficulty manifested as misperception of the target word as one of its orthographic neighbors. That is, words with higher-frequency neighbors tend to produce longer total reading times on the target word, more spillover, and more regressions back to the target word (e.g., Perea & Pollatsek, 1998; Slattery, 2009; but cf. Sears et al., 2006). Finally, examining ERPs, Massol et al. (2010) found that the N400 component (a negative-going deflection that peaks around 400 ms after target onset and indexes processing difficulty) is sensitive to lexical inhibition, whereas an earlier component associated with orthographic overlap between the prime and target (i.e., the N250) is not.

Given that the TEPR appears to index this later, decisional stage of processing, pupillometry might prove to be a fruitful method for examining inhibitory processes in word recognition. Previous research has already established that the pupil is sensitive to word frequency, but is it also sensitive to orthographic similarity? In this article, we examine the pupil’s sensitivity to the inhibitory effects purported to arise from orthographically similar words in the masked-priming LDT. We hypothesize that targets preceded by orthographically similar word primes will be more difficult to process because of inhibitory processes associated with selecting the appropriate lexical candidate. This selection difficulty should result in longer RTs and larger pupil sizes on trials with orthographically similar word prime–target pairs than in an orthographically dissimilar control condition.

The present study

In the experiment that follows, we utilized pupillometry, in addition to recording behavioral responses (RTs and error rates), to examine inhibitory priming in the masked-priming LDT. Our rationale for collecting RTs and pupil size was simple: We wanted to be able to compare the sensitivities of the two measures to inhibitory priming. Given the equivocal RT findings in the literature, the addition of pupillometry also allowed us to have a second dependent measure to assess inhibitory priming. Finally, this experiment would provide the first examination of the sensitivity of pupillometry to inhibitory processes in the masked-priming LDT.

Classic substitution neighbors were used to examine inhibitory priming; these stimuli were a logical choice, given that they are the stimuli most often used to examine such inhibitory effects. We adopted a within-item design, which manipulated primes and held targets constant across the similar and different conditions. The stimuli used herein were adopted from Nakayama et al. (2008, Exp. 1), who manipulated relative word frequency and orthographic similarity between the prime and target.

Although the main objectives of this experiment were to replicate the inhibitory neighbor effect and to examine pupil sensitivity to inhibition resulting from orthographic similarity, relative word frequency was also manipulated. If word frequency influences how effectively a prime competes with the target for recognition, then inhibitory priming should depend on the relative prime–target frequency. On the basis of the assumptions governing competitive-network models, low-frequency targets preceded by high-frequency neighbor primes should be more likely to show inhibitory priming than high-frequency targets preceded by low-frequency neighbor primes (e.g., Segui & Grainger, 1990). The rationale is that because high-frequency primes are at a higher level of baseline activation than low-frequency primes, they act as stronger competitors. One should then observe an interaction between frequency and prime similarity. In English, at least, the observation of this interaction appears to be moderated by prime neighborhood size: Primes with dense neighborhoods should elicit inhibitory priming regardless of the target’s own frequency (Nakayama et al., 2008). Because the interaction between orthographic similarity and relative prime–target frequency is a theoretically important aspect of inhibitory priming, we manipulated relative prime–target frequency while recording pupil size and RTs concomitantly. Can pupillometry provide a more nuanced examination of lexical inhibition than RTs alone? We examine this question herein.

Method

Participants

Seventy-six Iowa State University undergraduates participated for course credit (see Footnote 3). All participants were native speakers of English and had corrected-to-normal vision.

Stimuli

Forty substitution-neighbor word pairs were adopted from Nakayama et al. (2008, Exp. 1; see Footnote 4). All of their word stimuli were four letters in length and had dense neighborhoods (M = 9.8). Furthermore, each member of a word pair served as either a prime or a target, depending on the condition. Each of the word pairs consisted of a high-frequency word (Kučera & Francis, 1967, mean frequency = 529.2) and a low-frequency word (Kučera & Francis, 1967, mean frequency = 14.6). In their substitution-neighbor conditions, if a high-frequency word was designated as the target, its low-frequency neighbor served as the prime, and vice versa. In their different conditions, high-frequency word targets were paired with orthographically different (all letters different) primes of lower normative frequency (Kučera & Francis, 1967, mean frequency = 14.5) but similar neighborhood size (M = 9.9); similarly, low-frequency targets were paired with orthographically different primes of higher normative frequency (Kučera & Francis, 1967, mean frequency = 535.1) but similar neighborhood size (M = 7.8). This resulted in four prime–target conditions: a high-frequency neighbor prime–low-frequency target condition; a high-frequency different prime–low-frequency target condition; a low-frequency neighbor prime–high-frequency target condition; and a low-frequency different prime–high-frequency target condition. Four counterbalanced lists were created so that these stimuli could be presented in each of the conditions without repeating any items (as a prime or target) for an individual participant. For example, one list would contain cold–CORD (high-frequency neighbor prime–low-frequency target condition), another list rest–CORD (high-frequency different prime–low-frequency target condition), another list cord–COLD (low-frequency neighbor prime–high-frequency target condition), and the final list maze–COLD (low-frequency different prime–high-frequency target condition).
The English Lexicon Project database (Balota et al., 2007) was used to ensure that all word stimuli had high accuracy rates (96.9 % for low-frequency targets and 95.6 % for high-frequency targets).
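The four-list design above can be sketched as a Latin-square rotation, in which each item pair is assigned to one condition per list and rotates through all four conditions across lists. The rotation rule and the helper names (`latin_square_lists`, `make_trial`) are our assumptions; the text specifies only that four counterbalanced lists were created. The example pair reuses cold, cord, rest, and maze from the text.

```python
from collections import Counter

# Condition labels, indexed 0-3 in the order used by make_trial.
CONDITIONS = (
    "HF neighbor prime - LF target",
    "HF different prime - LF target",
    "LF neighbor prime - HF target",
    "LF different prime - HF target",
)


def make_trial(pair, condition):
    """Return (prime, TARGET) for one item pair under condition 0-3.

    pair = (hf_word, lf_word, hf_different_prime, lf_different_prime); the HF
    different prime precedes the LF target, and the LF different prime the HF target.
    """
    hf, lf, hf_diff, lf_diff = pair
    prime, target = (
        (hf, lf),       # HF neighbor prime - LF target
        (hf_diff, lf),  # HF different prime - LF target
        (lf, hf),       # LF neighbor prime - HF target
        (lf_diff, hf),  # LF different prime - HF target
    )[condition]
    # Primes are shown in lowercase and targets in uppercase.
    return prime.lower(), target.upper()


def latin_square_lists(n_items, n_conditions=len(CONDITIONS)):
    """Assign item i to condition (i + list) mod n_conditions in each list."""
    return [
        [(item, (item + lst) % n_conditions) for item in range(n_items)]
        for lst in range(n_conditions)
    ]


if __name__ == "__main__":
    lists = latin_square_lists(40)
    # Each list should contain every condition equally often (10 of 40 items).
    for number, assignment in enumerate(lists, start=1):
        counts = Counter(condition for _, condition in assignment)
        print(f"List {number}: {dict(sorted(counts.items()))}")
    example = ("cold", "cord", "rest", "maze")  # treated as item 0 in every list
    for number, assignment in enumerate(lists, start=1):
        _, condition = assignment[0]
        prime, target = make_trial(example, condition)
        print(f"List {number}: {prime}-{target} ({CONDITIONS[condition]})")
```

For the example pair, lists 1 through 4 yield cold–CORD, rest–CORD, cord–COLD, and maze–COLD, matching the example in the text; a real implementation would also need to check that no word recurs as a prime elsewhere in the same list.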

Forty nonword targets were selected in the same manner as the word targets. All nonword targets were four letters in length and had dense neighborhoods (M = 9.8). Each nonword target was paired with a neighbor word prime (Kučera & Francis, 1967, mean frequency = 22.1; mean neighborhood size = 10) and a different word prime (Kučera & Francis, 1967, mean frequency = 22.9; mean neighborhood size = 10). Two counterbalanced lists were created for the nonword targets.

Apparatus and procedure

Testing occurred individually under normal indoor lighting conditions. A chin and forehead rest maintained head position during the experiment. One computer presented the stimuli via E-Prime 2.0 software (Psychology Software Tools, Pittsburgh, PA), while another recorded the eyetracking data. Data were collected using a SensoMotoric Instruments (SMI; Teltow, Germany) RED 500 remote eyetracker, which recorded participants’ horizontal and vertical gaze coordinates and pupil diameters (in millimeters) at a sampling rate of 500 Hz. Data were sampled binocularly, but only data from the left eye were used. An E-Prime response box recorded participants’ RTs.

Stimuli were presented on a 22-in. Dell thin-film transistor monitor with a resolution of 1,680 × 1,050 pixels and a refresh rate of 60 Hz. Participants were seated 70 cm from the monitor. All stimuli appeared in the center of the screen in black print (Arial font, size 24) on a white background. Keeping the display constant in this way minimized the possibility that changes in pupil diameter could be attributed to luminance.

Before the experiment, participants were briefly familiarized with the eyetracker and the procedure. A 9-point calibration grid was used to ensure gaze accuracy during the experiment. The experiment began with the presentation of a fixation stimulus in the center of the screen for 1,500 ms. The trial then progressed as follows: (1) a forward mask was presented for 500 ms (####); (2) the prime in lowercase letters was presented for 40 ms; and (3) the target in uppercase letters was presented until response. Participants indicated, by pressing one of two buttons, whether the target was a word or a nonword. The next trial began after a 3,000-ms interval. This long intertrial interval attenuated the possibility of pupil dilation effects carrying over from the previous trial. The trial order was randomized for each participant. Prior to the experiment proper, participants engaged in ten practice trials. No stimuli used in the practice trials were utilized in the experiment proper.

Results

Data from participants with overall error rates greater than 30 % (n = 3) or with more than 6 % missing pupil data (n = 5) were excluded from further analysis. All excluded participants were replaced with additional participants in order to maintain complete counterbalancing across all experimental lists. Therefore, the analyses described herein include data from 76 participants.

A widely used analytic technique in psycholinguistics is to aggregate trial-level data at the subject (F1) and item (F2) levels and to conduct separate analyses of variance (ANOVAs), so that both sources of variance (subjects and items) are treated as random, rather than fixed, factors. Obtaining reliable effects in both analyses meets the F1 × F2 criterion (Raaijmakers, Schrijnemakers, & Gremmen, 1999). An alternative analytic technique gaining traction within the psycholinguistic community is linear mixed modeling (LMM; sometimes called hierarchical linear modeling or multilevel modeling). Mixed models offer several advantages over the traditional F1 and F2 tests: LMMs can model subject and item random effects simultaneously, are highly flexible (both categorical and continuous predictors can be used), and are purported to be more robust and powerful than ANOVA (Hoffman & Rovine, 2007; Locker, Hoffman, & Bovaird, 2007). For our primary analyses, we fit separate LMMs, with subjects and items as crossed random effects, to the unaggregated trial-level correct RTs and baseline-corrected pupil diameters, using PROC MIXED in SAS Version 9.3. For error rates, we used generalized linear mixed models fit with PROC GLIMMIX in SAS Version 9.3. Degrees of freedom were estimated using the Satterthwaite approximation. Before the RT and baseline-corrected pupil diameter analyses, all incorrect responses (5 %) and RTs shorter than 200 ms or longer than 2,000 ms (2 %) were excluded. All analyses were performed on untransformed data (log and reciprocal [–1/RT] transformations produced similar results). As is most often the case in masked priming, the data from words and nonwords were analyzed separately.
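The participant- and trial-level screening rules described above can be expressed as simple filters. This is a minimal sketch with hypothetical names (`Trial`, `keep_participant`, `trim_trials`, `rt_transforms`); the thresholds (30 % errors, 6 % missing pupil data, 200 to 2,000 ms) come from the text, and values exactly at a threshold are retained, following its "greater than" and "less than" wording.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    subject: str
    item: str
    rt_ms: float
    correct: bool


def keep_participant(error_rate, missing_pupil_rate, max_error=0.30, max_missing=0.06):
    """Exclude participants with error rates above 30 % or missing pupil data above 6 %."""
    return error_rate <= max_error and missing_pupil_rate <= max_missing


def trim_trials(trials, min_rt=200, max_rt=2000):
    """Keep correct responses with min_rt <= RT <= max_rt (in ms)."""
    return [t for t in trials if t.correct and min_rt <= t.rt_ms <= max_rt]


def rt_transforms(rt_ms):
    """Untransformed RT plus the log and -1/RT alternatives mentioned in the text."""
    return {"raw": rt_ms, "log": math.log(rt_ms), "neg_reciprocal": -1.0 / rt_ms}


if __name__ == "__main__":
    trials = [
        Trial("s01", "item1", 652.0, True),
        Trial("s01", "item2", 150.0, True),    # too fast
        Trial("s01", "item3", 2400.0, True),   # too slow
        Trial("s01", "item4", 701.0, False),   # error
    ]
    print([t.item for t in trim_trials(trials)])  # ['item1']
    print(keep_participant(error_rate=0.05, missing_pupil_rate=0.02))  # True
```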

Behavioral data

Word targets

To determine the appropriate random-effects structure, we began with a maximal model (Barr, Levy, Scheepers, & Tily, 2013) including the fixed factors frequency (high vs. low) and similarity (similar vs. different) and their interaction, as well as by-subject and by-item random intercepts and slopes. The model was progressively simplified by removing each random effect if the more complex model did not fit the data better. For the response latency analysis, the final model included fixed effects of frequency and similarity and their interaction; by-subject random intercepts and random slopes for frequency, similarity, and their interaction; and by-item random intercepts.
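The final RT model can be written out in conventional mixed-model notation roughly as follows. This is a sketch, not the authors' specification: the symbols, the coding of target frequency $F_i$ and prime similarity $S_{si}$, and the unstructured by-subject covariance matrix $\boldsymbol{\Sigma}_u$ are our assumptions, since the text does not report them, and SAS's default parameterization of class variables need not match this coding. Here $s$ indexes subjects and $i$ indexes target items.

```latex
\mathrm{RT}_{si} = \beta_0 + \beta_1 F_i + \beta_2 S_{si} + \beta_3 F_i S_{si}
  + u_{0s} + u_{1s} F_i + u_{2s} S_{si} + u_{3s} F_i S_{si}
  + w_{0i} + \varepsilon_{si},
\qquad
(u_{0s}, u_{1s}, u_{2s}, u_{3s})^{\top} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma}_u), \quad
w_{0i} \sim \mathcal{N}(0, \sigma_w^2), \quad
\varepsilon_{si} \sim \mathcal{N}(0, \sigma^2)
```

In this notation, $\beta_3$ carries the frequency × similarity interaction, and dropping the $u_{3s}$ term and replacing the normal outcome with a logit-linked binomial one gives the analogous form of the accuracy model.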

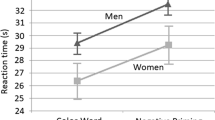

Mean RTs and error rates are shown in Table 1. The main effect of frequency was significant, with longer response latencies for low-frequency than for high-frequency targets, F(1, 71.7) = 32.02, p < .0001. We also found a significant effect of similarity, with similar prime–target pairs producing longer latencies than different prime–target pairs, F(1, 146) = 4.33, p = .04. The interaction between frequency and similarity was significant, F(1, 146) = 3.84, p = .05. Simple-effects analyses indicated an inhibitory effect for low-frequency targets, t(2017.6) = 2.87, p = .004, but not for high-frequency targets, t(1971) = 0.08, p = .94.

The accuracy data were analyzed with a generalized linear mixed model using a binomial distribution. The final model included the fixed effects of frequency and similarity and their interaction, by-subject random intercepts and random slopes for frequency and similarity, and by-item random intercepts.

Frequency was the only factor that affected accuracy, F(1, 94.49) = 8.76, p = .004. High-frequency targets were responded to more accurately (had fewer errors) than low-frequency targets. No other effects were significant (both Fs < 0.05).

Nonword targets

RTs and accuracy for nonword targets were examined with the same modeling approach used above. For the analysis of response latencies, the final model included the fixed effect of similarity, along with by-subject random intercepts and slopes and by-item random intercepts.

We found no significant difference between similar and different prime–nonword target pairs, F(1, 444.13) = 0.44, p = .51. The error analysis did not reveal any difference between the similar and different conditions, F(1, 3078) = 3.07, p = .08.

Pupil data

When an artifact (e.g., a blink) or any other interruption appears in the data stream during pupil monitoring, the SMI RED 500 automatically fills in the pupil diameter with the last valid pupil value. In some instances, however, data were missing (i.e., because of uneven trial lengths); these missing data were linearly interpolated. The missing pupil data were not systematically distributed, and none of the participants whose data were retained had more than 6 % missing data. The average pupil diameter during the 200 ms prior to prime onset served as the baseline for each trial. To calculate the baseline-corrected peak pupil diameter, we subtracted this baseline from the maximum pupil diameter recorded between prime onset and the end of the trial. All pupil analyses were performed on the trial-level baseline-corrected peak pupil diameters.
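The interpolation, baseline correction, and peak measure described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline: a trace is a list of left-eye pupil diameters in millimeters sampled at 500 Hz, `None` marks samples still missing after the tracker's own last-valid-value fill, and gaps at the very start or end of a trace (which cannot be interpolated) are filled with the nearest valid sample. At 500 Hz, the 200-ms baseline spans 100 samples. The function names are hypothetical.

```python
import bisect

SAMPLE_RATE_HZ = 500   # eyetracker sampling rate reported in the Method
BASELINE_MS = 200      # baseline window immediately before prime onset


def interpolate_missing(trace):
    """Fill None samples by linear interpolation between the nearest valid samples.

    Leading or trailing gaps take the nearest valid value (an assumption).
    """
    valid = [i for i, v in enumerate(trace) if v is not None]
    if not valid:
        raise ValueError("trace has no valid samples")
    filled = list(trace)
    for i, v in enumerate(trace):
        if v is not None:
            continue
        k = bisect.bisect_left(valid, i)   # position of the first valid index after i
        if k == 0:
            filled[i] = trace[valid[0]]
        elif k == len(valid):
            filled[i] = trace[valid[-1]]
        else:
            left, right = valid[k - 1], valid[k]
            frac = (i - left) / (right - left)
            filled[i] = trace[left] + frac * (trace[right] - trace[left])
    return filled


def baseline_corrected_peak(trace, prime_onset, rate_hz=SAMPLE_RATE_HZ, baseline_ms=BASELINE_MS):
    """Maximum diameter from prime onset to trial end, minus the mean pre-prime baseline."""
    n_base = int(baseline_ms * rate_hz / 1000)   # 100 samples at 500 Hz
    if prime_onset < n_base:
        raise ValueError("not enough samples before prime onset for a baseline")
    filled = interpolate_missing(trace)
    baseline = sum(filled[prime_onset - n_base:prime_onset]) / n_base
    return max(filled[prime_onset:]) - baseline
```

For example, a trace that sits at 3.0 mm for the 100 baseline samples and then rises to a maximum of 3.8 mm yields a baseline-corrected peak of about 0.8 mm.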

Word targets

The final model used to analyze the baseline-corrected peak pupil diameter included the fixed effects of frequency and similarity and their interaction, with by-subject random intercepts and random slopes for frequency.

The pupillary analysis indicated that low-frequency words had a greater peak pupil diameter (0.92 mmd) than high-frequency words (0.87 mmd), F(1, 75.01) = 6.28, p = .01. Furthermore, similar prime–target pairs had a greater peak pupil diameter (0.93 mmd) than different pairs (0.87 mmd), F(1, 74.7) = 4.30, p = .04. The interaction between frequency and similarity was not significant, F(1, 2734) = 0.04, p = .85; the pupillary inhibitory effects did not differ reliably between high- and low-frequency targets (0.06 and 0.05 mmd, respectively).

Because this experiment is the first to apply pupillometry to the masked-priming LDT, we believe it will be valuable to examine further how pupil diameter changes over time. Figure 1 presents the continuous average baseline-corrected pupillary waveforms obtained in this experiment. These data differ from those analyzed above, in that the continuous graph represents the average pupil dilation in each condition at each point in time. The pupillary data associated with similar and different prime–target pairs for low-frequency targets appear in the top panel, and pupillary data for the high-frequency targets appear in the lower panel. The two panels show that the average pupil diameters parallel the results of our peak pupil diameter analyses: Low-frequency targets were associated with greater average pupil diameters than high-frequency targets, and similar prime–target word pairs were associated with numerically greater average pupil diameters than different prime–target word pairs (low-frequency similar M = 0.14 mmd, low-frequency different M = 0.11 mmd, high-frequency similar M = 0.10 mmd, high-frequency different M = 0.09 mmd). For both low- and high-frequency targets, the similar condition produced an extended period of increased pupil diameter in comparison to the different condition; this difference became stable approximately 1,500 ms after target onset. Although differences did seem to emerge at the start of the time course, we believe that this is a spurious effect. It is generally assumed that the effects of lexical processing on the TEPR do not emerge until 200–300 ms after stimulus onset, with the peak not being reached until 500–1,000 ms postresponse (Kuchinke et al., 2007; Papesh & Goldinger, 2012). Therefore, we would not expect any systematic effects to emerge within the first 300 ms.
Likewise, the RTs we obtained in this experiment led us to expect pupil dilations to peak 1,100–1,600 ms after target onset for high-frequency targets and 1,200–1,700 ms after target onset for low-frequency targets. Finally, it may seem odd that, for high-frequency targets, the different condition appears to have a higher average peak than the similar condition. Note, however, that the different condition peaks earlier and constricts more quickly than the similar condition. The protracted period of increased pupil dilation associated with the similar condition suggests that this condition is more difficult than the different condition. This interpretation seems warranted, given that the pupillary waveforms peak after the response is made.
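The baseline-corrected waveforms and peak measures discussed above can be sketched in a few lines. This is a minimal sketch, not the authors' processing pipeline: it assumes subtractive baseline correction against the mean of a pre-target window, and the window length, sampling rate, and trial values are hypothetical, because this section does not report them. Averaging corrected trials sample by sample gives a condition waveform of the kind plotted in Figure 1, whereas the peak function gives a single per-trial value of the kind analyzed above.

```python
from statistics import mean


def baseline_correct(trace, n_baseline):
    """Subtract the mean of the first n_baseline samples (assumed pre-target window)."""
    if not 0 < n_baseline <= len(trace):
        raise ValueError("baseline window must lie within the trace")
    baseline = mean(trace[:n_baseline])
    return [sample - baseline for sample in trace]


def peak_dilation(trace, n_baseline):
    """Peak baseline-corrected diameter after the baseline window, and its sample index."""
    corrected = baseline_correct(trace, n_baseline)
    post_onset = corrected[n_baseline:]
    if not post_onset:
        raise ValueError("no samples after the baseline window")
    peak = max(post_onset)
    return peak, n_baseline + post_onset.index(peak)


def average_waveform(traces):
    """Sample-by-sample mean across equal-length corrected trials (one condition's waveform)."""
    if len({len(t) for t in traces}) != 1:
        raise ValueError("trials must be non-empty and of equal length")
    return [mean(samples) for samples in zip(*traces)]


# Hypothetical trial: two baseline samples, then the task-evoked response (mm).
trial = [4.0, 4.0, 4.1, 4.5, 4.9, 4.7]
peak, idx = peak_dilation(trial, n_baseline=2)
print(round(peak, 2), idx)  # 0.9 4
```

Subtractive correction expresses each sample as change from the pre-target level, so peaks are comparable across trials that begin at different tonic pupil sizes; a divisive (percentage-change) baseline is a common alternative.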

Nonword targets

Baseline-corrected peak pupil diameters were examined with the same model used for the word targets. The final model included the fixed effect of similarity and random by-subject intercepts.
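The model described in words above can be sketched as a linear mixed model. This is an illustrative formulation under stated assumptions, not the authors' displayed equation: trials are indexed by i and subjects by j, and similarity is assumed to be dummy-coded (1 = similar, 0 = different). In the formula notation of R mixed-model packages such as lme4, this corresponds to `peak ~ similarity + (1 | subject)`; the t value reported below tests the similarity coefficient.

```latex
\text{Peak}_{ij} = \beta_0 + \beta_1\,\text{Similar}_{ij} + u_{0j} + \varepsilon_{ij},
\qquad u_{0j} \sim \mathcal{N}(0, \tau^2),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```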

No significant effect of similarity was apparent, t < 0.50.

Discussion

To date, the available literature regarding inhibitory priming using English stimuli has been mixed; studies have shown all possible effects (i.e., facilitatory, inhibitory, and null priming effects; see, e.g., Davis & Lupker, 2006; Forster, 1987; Forster & Veres, 1998; Morris & Still, 2012; Nakayama et al., 2008). In the present experiment, we assessed the inhibitory priming elicited by substitution neighbors in the masked-priming LDT using standard behavioral measures (i.e., RTs and error rates), as well as pupillometry—a measure known to index cognitive effort. By utilizing a physiological measure of cognitive effort, we hoped to provide another avenue for examining the inhibitory effects assumed in competitive-network models, as well as to provide evidence for the utility of pupillometry in the masked-priming task.

To summarize, the behavioral data partially replicated Nakayama et al. (2008, Exp. 1). We observed an interaction between target frequency and orthographic similarity. Specifically, an inhibitory RT effect was found for low-frequency targets preceded by high-frequency neighbor primes (40 ms), but not for high-frequency targets preceded by low-frequency neighbor primes (–1 ms). This result is consistent with assumptions about word frequency and lexical inhibition in competitive-network models. The pupillary results revealed a frequency effect (Kuchinke et al., 2007; Papesh & Goldinger, 2012) and, more importantly, a general inhibitory effect for targets preceded by orthographically similar primes. Interestingly, our analysis of peak pupil diameter revealed that the magnitudes of the pupillary inhibitory effect were similar for both low- and high-frequency targets. Therefore, the pupillary data provide a conceptual replication of Nakayama et al.’s Experiment 1 results.

Given these results, two important questions remain: Why did we find an interaction in the RT data between target frequency and orthographic similarity, when Nakayama et al. (2008, Exp. 1) did not, and why do our RT and pupillary data diverge? One potential reason for the discrepancy between our behavioral results and Nakayama et al.’s is a difference in prime stimulus onset asynchronies (SOAs): We used an SOA of 40 ms, whereas they used an SOA of 50 ms. Like Nakayama et al., Davis and Lupker (2006, Exp. 1) used a 57-ms prime SOA and also found no interaction between orthographic similarity and frequency, although the priming effects appeared numerically greater for low-frequency targets (see Footnote 5). Previous behavioral findings have indicated that inhibitory effects tend to increase with prime exposure duration (e.g., De Moor et al., 2007; Grainger, 1992). De Moor et al., for example, utilized an incremental-priming paradigm (Jacobs, Grainger, & Ferrand, 1995) to examine inhibitory priming at different prime SOAs and found that employing a prime duration of 57 ms instead of 43 ms increased the amount of inhibitory priming by 40 ms. One might tentatively assume, therefore, that our brief prime exposure duration was not sufficient for low-frequency neighbor primes to interfere with target processing.

Another tenable explanation for the difference between our study and Nakayama et al. (2008, Exp. 1) is the pace of our experiment. To ensure that pupils returned to baseline before the start of the next trial, a long interstimulus interval (i.e., 3,000 ms) was utilized. This resulted in slower responses, overall, than in Nakayama et al. (2008, Exp. 1). The slower responses may reflect a difference in participants’ attention to the prime, whereby our participants may have been slower to engage attention on most trials. When attention is not focused on the prime, masked-priming effects can be diminished (see, e.g., Naccache, Blandin, & Dehaene, 2002; but see also Zimmerman & Gomez, 2012). If our participants allocated less attention to prime processing, this would disadvantage processing more for low-frequency than for high-frequency primes. This could have had the undesired consequence of attenuating inhibitory priming for the low-frequency prime–high-frequency target pairs in our experiment.

Although the explanations above are possible, it is also important to consider how the pupillary data might inform our interpretation of the RT data. Pupillary changes in this experiment were thought to index the cognitive difficulty that arises when more than one competing lexical entry is activated. Because only one lexical entry can be selected, the other competitors must be inhibited. We saw evidence of these inhibitory effects for both high- and low-frequency neighbor targets, and the effects were similar in magnitude. If the pupillary data reflect lexical inhibition, then, it would be inaccurate to say that low-frequency neighbor primes were not interfering with target processing—the tentative explanation for the behavioral differences between our results and Nakayama et al.’s (2008, Exp. 1). Instead, it seems plausible that the inhibition in the low-frequency prime–high-frequency target conditions was simply not detectable in the RT data with our prime SOA. It is not uncommon for RT and pupillometric data to diverge (e.g., Kuchinke et al., 2007; Papesh & Goldinger, 2012). The present results bring forth the intriguing possibility that pupillometry might be more sensitive than RTs to inhibition operating in the masked-priming paradigm.

Although we did not manipulate the prime SOA in this experiment, the primary difference between our experiment and Nakayama et al.’s (2008) Experiment 1 was prime SOA. It also happens that the behavioral results differed such that our RTs appeared to be less sensitive to inhibition than those obtained by Nakayama et al. With this in mind, it is possible that the LDT is sensitive to prime SOA, whereas the pupil is less so (since the pupil data mirror the RTs of Nakayama et al., 2008). If, as Kuchinke et al. (2007) suggested, the pupil is not sensitive to early processes in the LDT, our results may indicate that prime SOA has an early impact on the LDT and on the subsequent RT data. Furthermore, those early effects could obscure the RT data such that lexical inhibition was more difficult to detect. A high-frequency word prime, for example, is already at a higher level of baseline activation; thus, a short prime SOA might not hinder its ability to be an effective competitor. Low-frequency words, however, are at a lower level of baseline activation, and thus a short SOA renders the low-frequency word prime inadequate to induce priming. Our hypothesis that the pupil is less sensitive to prime SOA than RTs could easily be tested by manipulating SOA in a similar masked-priming experiment.

Up to this point, we have simply referred to lexical inhibition in a relatively generic sense. A more detailed analysis may be warranted, though. Nakayama et al. (2008) suggested that prime neighborhood size, in addition to orthographic similarity and relative word frequency, is a critical factor in lexical inhibition. To examine this possibility, Nakayama et al. (Exp. 3) manipulated prime neighborhood size as well as relative prime–target frequency. When prime neighborhoods were large, similar amounts of inhibition were obtained in conditions with low-frequency and high-frequency primes. By contrast, when prime neighborhoods were sparse, inhibition was only obtained with high-frequency primes. These findings suggest that prime neighborhood size modulates the magnitude of inhibitory priming. Considering the role of prime neighborhood size in lexical inhibition and our pupillometric results, it is quite possible that our pupillary data reflect inhibition associated specifically with prime neighborhood size. This hypothesis could be tested by manipulating prime neighborhood size as Nakayama et al. did in their Experiment 3. In that test, it would also be important to manipulate relative prime–target frequency, to test the possibility that pupil dilation is simply insensitive to relative prime–target frequency.

Conclusion

Previous studies have shown that the size of the pupil is influenced by lexical factors such as word frequency (e.g., Kuchinke et al., 2007; Papesh & Goldinger, 2012). Herein, we have shown for the first time the sensitivity of the pupil to the cognitive effort associated with competitive interactions induced by orthographically similar word primes in the masked-priming LDT. Previous failures to replicate the inhibitory-priming effect have cast doubt on the existence of an inhibitory mechanism operating in visual word recognition. Our results (both behavioral and physiological) bolster this basic assumption that underlies competitive-network models. That is, when a word is preceded by an orthographically similar word prime, inhibitory processes occur that allow word identification to take place; this process occurs at a later, lexical stage of processing and requires mental effort. Furthermore, our results highlight the value of using both behavioral and physiological measures, such as pupillometry, to examine masked-priming effects. Our pupil and RT data did not perfectly converge; we believe this is because pupillometry may be more sensitive to inhibitory effects than are RTs. Because of this, it might be fruitful to use pupillometry to reexamine studies that have failed to find inhibitory priming between word primes and targets (e.g., Forster, 1987; Forster & Veres, 1998). In sum, researchers wanting to examine factors that might influence a later stage of processing should consider placing pupillometry into their methodological toolboxes. These results show that pupillometric data provide a viable avenue for investigating orthographic masked-priming effects.

Notes

1. There is some controversy, however, regarding the interpretation of these findings. It could be that greater letter overlap between the prime–target pairs serves to increase sublexical facilitation, which in turn obscures behavioral measures of inhibition (De Moor, Van der Herten, & Verguts, 2007).

2. Masson and Kliegl (2013) examined how trial history (i.e., how difficult or easy previous trials were) moderated the additivity and interactivity of word frequency and stimulus quality in semantic priming.

3. The number of participants is unusually high for a masked-priming experiment, but it is pertinent to note that Nakayama, Sears, and Lupker (2008, Exp. 1) also utilized a large sample size (n = 60). Moreover, when replicating a past finding, it is recommended that a larger sample size be utilized to ensure adequate power (e.g., Brandt et al., 2014).

4. On the basis of the stimulus list provided in their appendix, three words appear to have been reused across conditions in Nakayama et al. (2008, Exp. 1). Bark was listed as a neighbor prime for the high-frequency target back and as an unrelated prime for the high-frequency target like. Fork was listed as a low-frequency word target and as a similar neighbor for the nonword target fock. Finally, well was listed as a similar prime for the low-frequency word target bell and as a different prime for mace. In our design, this reuse of items would cause some primes to be presented more than once to the same participant. To avoid this, we replaced the repeated stimuli with words that possessed similar characteristics, to ensure that no primes or targets appeared more than once in each list. Torn replaced bark as the different word prime for the high-frequency word like; dock replaced fork as the similar neighbor prime for the nonword target fock; and bulk replaced well as the different prime for mace.

5. Davis and Lupker (2006) analyzed word and nonword primes together, so it is possible that they could have found an interaction if they had analyzed the word prime trials separately. However, Zimmerman and Gomez (2012) utilized Davis and Lupker’s word prime stimuli and found no evidence of an interaction.

References

Balota, D. A., & Chumbley, J. I. (1984). Are lexical decisions a good measure of lexical access? The role of word frequency in the neglected decision stage. Journal of Experimental Psychology: Human Perception and Performance, 10, 340–357. doi:10.1037/0096-1523.10.3.340

Balota, D. A., & Spieler, D. H. (1999). Word frequency, repetition, and lexicality effects in word recognition tasks: Beyond measures of central tendency. Journal of Experimental Psychology: General, 128, 32–55. doi:10.1037/0096-3445.128.1.32

Balota, D. A., Yap, M. J., Cortese, M. J., Hutchison, K. A., Kessler, B., Loftis, B., & Treiman, R. (2007). The English Lexicon Project. Behavior Research Methods, 39, 445–459. doi:10.3758/BF03193014

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68, 255–278. doi:10.1016/j.jml.2012.11.001

Beatty, J. (1982). Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychological Bulletin, 91, 276–292. doi:10.1037/0033-2909.91.2.276

Beatty, J., & Lucero-Wagoner, B. (2000). The pupillary system. In Handbook of psychophysiology (2nd ed., pp. 142–162). Cambridge, UK: Cambridge University Press.

Bodner, G. E., & Masson, M. E. J. (1997). Masked repetition priming of words and nonwords: Evidence for a nonlexical basis for priming. Journal of Memory and Language, 37, 268–293. doi:10.1006/jmla.1996.2507

Brandt, M. J., IJzerman, H., Dijksterhuis, A., Farach, F. J., Geller, J., Giner-Sorolla, R., & van ’t Veer, A. (2014). The replication recipe: What makes for a convincing replication? Journal of Experimental Social Psychology, 50, 217–224. doi:10.1016/j.jesp.2013.10.005

Coltheart, M., Davelaar, E., Jonasson, J. T., & Besner, D. (1977). Access to the internal lexicon. In S. Dornic (Ed.), Attention and performance VI (pp. 535–555). Hillsdale: Erlbaum.

Coltheart, M., Rastle, K., Perry, C., Langdon, R., & Ziegler, J. (2001). DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychological Review, 108, 204–256. doi:10.1037/0033-295X.108.1.204

Davis, C. J. (1999). The self-organising lexical acquisition and recognition (SOLAR) model of visual word recognition (University of New South Wales, Sydney, NSW, Australia). Dissertation Abstracts International, 62, 594.

Davis, C. J. (2003). Factors underlying masked priming effects in competitive network models of visual word recognition. In S. Kinoshita & S. J. Lupker (Eds.), Masked priming: The state of the art (pp. 121–170). Hove: Psychology Press.

Davis, C. J. (2010). The spatial coding model of visual word identification. Psychological Review, 117, 713–758. doi:10.1037/a0019738

Davis, C. J., & Lupker, S. J. (2006). Masked inhibitory priming in English: Evidence for lexical inhibition. Journal of Experimental Psychology: Human Perception and Performance, 32, 668–687. doi:10.1037/0096-1523.32.3.668

De Moor, W., Van der Herten, L., & Verguts, T. (2007). Is masked neighbor priming inhibitory? Evidence using the incremental priming technique. Experimental Psychology, 54, 113–119.

Duñabeitia, J. A., & Costa, A. (2014). Lying in a native and foreign language. Psychonomic Bulletin & Review, 22, 1124–1129. doi:10.3758/s13423-014-0781-4

Forster, K. I. (1987). Form-priming with masked primes: The best match hypothesis. In M. Coltheart (Ed.), Attention and performance XII (pp. 127–146). Hillsdale: Erlbaum.

Forster, K. I., Davis, C., Schoknecht, C., & Carter, R. (1987). Masked priming with graphemically related forms: Repetition or partial activation. Quarterly Journal of Experimental Psychology, 39A, 211–251. doi:10.1080/14640748708401785

Forster, K. I., & Veres, C. (1998). The prime lexicality effect: Form-priming as a function of prime awareness, lexical status, and discrimination difficulty. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 498–514. doi:10.1037/0278-7393.24.2.498

Goldinger, S. D., Azuma, T., Abramson, M., & Jain, P. (1997). Open wide and say “blah!”: Attentional dynamics of delayed naming. Journal of Memory and Language, 37, 190–216.

Goldwater, B. C. (1972). Psychological significance of pupillary movements. Psychological Bulletin, 77, 340–355.

Grainger, J. (1992). Orthographic neighborhoods and visual word recognition. In R. Frost & L. Katz (Eds.), Orthography, phonology, morphology, and meaning (pp. 131–146). Amsterdam: Elsevier Science. doi:10.1016/S0166-4115(08)62792-2

Grainger, J. (2008). Cracking the orthographic code: An introduction. Language and Cognitive Processes, 23, 1–35. doi:10.1080/01690960701578013

Grainger, J., & Jacobs, A. M. (1996). Orthographic processing in visual word recognition: A multiple read-out model. Psychological Review, 103, 518–565. doi:10.1037/0033-295X.103.3.518

Hochmann, J.-R., & Papeo, L. (2014). The invariance problem in infancy: A pupillometry study. Psychological Science, 25, 2038–2046.

Hoffman, L., & Rovine, M. J. (2007). Multilevel models for the experimental psychologist: Foundations and illustrative examples. Behavior Research Methods, 39, 101–117.

Jacobs, A. M., Grainger, J., & Ferrand, L. (1995). The incremental priming technique: A method for determining within-condition priming effects. Perception & Psychophysics, 57, 1101–1110. doi:10.3758/BF03208367

Kučera, H., & Francis, W. (1967). Computational analysis of present-day American English. Providence: Brown University Press.

Kuchinke, L., Võ, M. L.-H., Hofmann, M., & Jacobs, A. M. (2007). Pupillary responses during lexical decisions vary with word frequency but not emotional valence. International Journal of Psychophysiology, 65, 132–140. doi:10.1016/j.ijpsycho.2007.04.004

Laeng, B., Sirois, S., & Gredebäck, G. (2012). Pupillometry: A window to the preconscious? Perspectives on Psychological Science, 7, 18–27. doi:10.1177/1745691611427305

Locker, L., Hoffman, L., & Bovaird, J. A. (2007). On the use of multilevel modeling as an alternative to items analysis in psycholinguistic research. Behavior Research Methods, 39, 723–730. doi:10.3758/BF03192962

Lupker, S. J., & Davis, C. J. (2009). Sandwich priming: A method for overcoming the limitations of masked priming by reducing lexical competitor effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 618–639.

Massol, S., Grainger, J., Dufau, S., & Holcomb, P. (2010). Masked priming from orthographic neighbors: An ERP investigation. Journal of Experimental Psychology: Human Perception and Performance, 36, 162–174. doi:10.1037/a0017614

Masson, M. E. J., & Kliegl, R. (2013). Modulation of additive and interactive effects in lexical decision by trial history. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 898–914. doi:10.1037/a0029180

McClelland, J. L., & Rumelhart, D. E. (1981). An interactive activation model of context effects in letter perception: I. An account of basic findings. Psychological Review, 88, 375–407. doi:10.1037/0033-295X.88.5.375

Monsell, S. (1991). The nature and locus of word frequency effects in reading. In D. Besner & G. W. Humphreys (Eds.), Basic processes in reading: Visual word recognition (pp. 148–197). Hillsdale: Erlbaum.

Morris, A. L., & Still, M. L. (2012). Orthographic similarity: The case of “reversed anagrams.” Memory & Cognition, 40, 779–790. doi:10.3758/s13421-012-0183-7

Naccache, L., Blandin, E., & Dehaene, S. (2002). Unconscious masked priming depends on temporal attention. Psychological Science, 13, 416–424. doi:10.1111/1467-9280.00474

Nakayama, M., Sears, C. R., & Lupker, S. J. (2008). Masked priming with orthographic neighbors: A test of the lexical competition assumption. Journal of Experimental Psychology: Human Perception and Performance, 34, 1236–1260.

Papesh, M. H., & Goldinger, S. D. (2012). Pupil-BLAH-metry: Cognitive effort in speech planning reflected by pupil dilation. Attention, Perception, & Psychophysics, 74, 754–765. doi:10.3758/s13414-011-0263-y

Perea, M., & Pollatsek, A. (1998). The effects of neighborhood frequency in reading and lexical decision. Journal of Experimental Psychology: Human Perception and Performance, 24, 767–779. doi:10.1037/0096-1523.24.3.767

Raaijmakers, J. G. W., Schrijnemakers, J. M. C., & Gremmen, F. (1999). How to deal with “the language-as-fixed-effect fallacy”: Common misconceptions and alternative solutions. Journal of Memory and Language, 41, 416–426. doi:10.1006/jmla.1999.2650

Sears, C. R., Campbell, C. R., & Lupker, S. J. (2006). Is there a neighborhood frequency effect in English? Evidence from reading and lexical decision. Journal of Experimental Psychology: Human Perception and Performance, 32, 1040–1062. doi:10.1037/0096-1523.32.4.1040

Segui, J., & Grainger, J. (1990). Priming word recognition with orthographic neighbors: Effects of relative prime–target frequency. Journal of Experimental Psychology: Human Perception and Performance, 16, 65–76. doi:10.1037/0096-1523.16.1.65

Slattery, T. J. (2009). Word misperception, the neighbor frequency effect, and the role of sentence context: Evidence from eye movements. Journal of Experimental Psychology: Human Perception and Performance, 35, 1969–1975. doi:10.1037/a0016894

Zimmerman, R., & Gomez, P. (2012). Drawing attention to primes increases inhibitory word priming effects. Mental Lexicon, 7, 119–146. doi:10.1075/ML.7.2.01zim


Cite this article

Geller, J., Still, M.L. & Morris, A.L. Eyes wide open: Pupil size as a proxy for inhibition in the masked-priming paradigm. Mem Cogn 44, 554–564 (2016). https://doi.org/10.3758/s13421-015-0577-4


DOI: https://doi.org/10.3758/s13421-015-0577-4