Abstract

When people estimate their memory for to-be-learned material over multiple study–test trials, they tend to base their judgments of learning (JOLs) on their test performance for those materials on the previous trial. Their use of this information, known as the memory for past-test (MPT) heuristic, is believed to be responsible for improvements in the relative accuracy (resolution) of people’s JOLs across learning trials. Although participants seem to use past-test information as a major basis for their JOLs, little is known about how learners translate this information into a judgment of learning. To address this question, in two experiments, we examined whether participants factored past-test performance into their JOLs in either an explicit, theory-based way or an implicit way. To do so, we had one group of participants (learners) study paired associates, make JOLs, and take a test on two study–test trials. Other participants (observers) viewed learners’ protocols and made JOLs for the learners. Presumably, observers could only use theory-based information to make JOLs for the learners, which allowed us to estimate the contribution of explicit and implicit information to learners’ JOLs. Our analyses suggest that all participants factored simple past-test performance into their JOLs in an explicit, theory-based way but that this information made limited contributions to improvements in relative accuracy across trials. In contrast, learners also used other privileged, implicit information about their learning (to which observers had no access) to inform their judgments, which allowed them to achieve further improvements in relative accuracy across trials.

How well people’s judgments of learning (JOLs) discriminate learned from unlearned items (relative accuracy) is a central topic in metamemory research (Dunlosky & Metcalfe, 2009; Dunlosky, Serra, & Baker, 2007). On an initial study–test trial, people’s JOLs usually exhibit only modest relative accuracy (typically operationalized as a gamma correlation between participants’ JOLs and recall outcomes across items). This measure, however, improves on subsequent study–test trials, largely because people use their performance on the previous study–test trial as a major basis for their JOLs for the same items on the current trial (Ariel & Dunlosky, 2011; England & Serra, 2012; Finn & Metcalfe, 2007, 2008; King, Zechmeister, & Shaughnessy, 1980; Tauber & Rhodes, 2012; Vesonder & Voss, 1985). Researchers refer to people’s use of this information for JOLs as the memory for past test (MPT) heuristic. Although it seems clear that people use this information to inform their JOLs, it is unclear how people incorporate this information into their judgments. The present experiments examined whether people use MPT to make JOLs in an implicit way or an explicit, theory-based way.

Implicit versus explicit cue usage

Theories about how people make metamemory judgments have centered on the assertion that metacognitive judgments are inferential in nature (Schwartz, Benjamin, & Bjork, 1997). Inferences about a given cognitive process are informed by cues (information about the target cognition) that people consult to judge the status of that process (Koriat, 1997). One distinction related to the types of cues people use to make JOLs focuses on how people use cues. More specifically, people can use cues to make their judgments in an explicit, theory-based way (i.e., on the basis of their beliefs or naïve theories about how that cue is related to memory; Briñol, Petty, & Tormala, 2006; Mueller, Tauber, & Dunlosky, 2013; Serra & Dunlosky, 2010), or they can use cues in a more implicit, experience-based way (Koriat & Ackerman, 2010; Koriat, Nussinson, Bless, & Shaked, 2008). In the case of implicit cue usage, evidence suggests that people use cues on the basis of how those cues predict memory in the environment, presumably on the basis of their past experiences of their predictability (Gigerenzer & Goldstein, 1996; Koriat, 2008; Koriat & Ma’ayan, 2005; but see Serra & England, 2012).

Admittedly, it can be difficult to differentiate between implicit and explicit cognitive processes, or even to get researchers to agree on what constitutes an implicit or explicit process (Dienes & Perner, 1999; Shanks & St John, 1994). For the present purposes, we adopt the same distinction between implicit and explicit cue usage for metacognitive judgments that others have used (i.e., Koriat & Ackerman, 2010; Koriat et al., 2008). More specifically, Koriat and Ackerman defined explicit cue usage as “the deliberate application of theories and beliefs that yield an educated judgment” (p. 253) and implicit cue usage as “metacognitive feelings [formed] on the basis of heuristics that operate below full consciousness” (p. 253). In laboratory settings where participants make metacognitive judgments such as JOLs, the judgments themselves are clearly explicit and conscious; the question, instead, is whether people use a cue for JOLs such as past-test performance in either an explicit (deliberate and conscious) or an implicit (below full consciousness) way.

To date, the modal approach for examining whether people use a specific cue in either an implicit or an explicit way to inform their JOLs has involved comparing people’s (henceforth, learners) own JOLs with JOLs that another group of participants (henceforth, observers) made for imagined, hypothetical, or yoked learners. This method was introduced by Vesonder and Voss (1985) and has been used by numerous others since to answer a variety of questions (Brennan & Williams, 1995; Jameson, Nelson, Leonesio, & Narens, 1993; Koriat & Ackerman, 2010; Matvey, Dunlosky, & Guttentag, 2001; Undorf & Erdfelder, 2011, 2013). The rationale behind this method is that observers have no access to the learners’ privileged, implicit information. As such, observers can only make theory-based JOLs for learners. By comparing how JOLs for the two groups differ in magnitude, accuracy, or cue utilization, researchers can infer whether the learners use a given cue in either a theory-based or an implicit way to make JOLs.

For example, Matvey et al. (2001) examined how people use retrieval fluency as a cue for JOLs. They had learners generate responses to items and make JOLs. Yoked observers made JOLs for the learners on the basis of a variety of information that was provided to them (which the authors varied across conditions). Most central to their research question, when the observers had access to participants’ retrieval latency, they showed the same correlations between JOLs and retrieval latency as did the learners. Importantly, by providing one group of observers with the to-be-generated information already filled in during the observation task, Matvey et al. deprived these “pure observers” of the ability to attempt to generate their own responses. As such, these observers were limited to explicit information when making their judgments for the yoked learner and could not feasibly perform the generation task themselves and use their own experience to make judgments. Nevertheless, their judgments were correlated with learners’ generation latencies, just as the learners’ judgments were. This outcome suggested that people’s use of retrieval fluency to inform JOLs was based on an explicit inference about how it relates to memory performance.

The present experiments

The purpose of the present experiments was to determine whether people use MPT information to make JOLs in an implicit way or in an explicit (theory-based) way. This research question is actually quite similar to that of Vesonder and Voss (1985). They had learners and observers (dubbed “listeners,” rather than “observers,” because they received information about the learners’ performance aurally) make JOLs for the learners over multiple study trials. In that experiment, both groups made only moderate use of previous-trial performance to make JOLs on the current trial, but the two groups’ use of this information did not differ. As such, Vesonder and Voss concluded that both groups used this information explicitly. Although these results are informative, Vesonder and Voss’s participants made their JOLs on a binary (yes/no) scale. Such a scale denies participants the ability to make fine distinctions with their judgment magnitudes, and precludes more advanced analyses of the accuracy and cue usage of their JOLs. For this reason, we had participants make JOLs on a 0 %–100 % scale, as is more common in JOL research (Dunlosky & Metcalfe, 2009; Dunlosky et al., 2007).

Using a different method, Tauber and Rhodes (2012) recently compared older and younger adults’ use of the MPT heuristic and found that both groups’ JOLs made equal use of this heuristic. Because older adults show deficits in explicit memory, as compared with younger adults, they concluded that participants’ utilization of MPT for JOLs must involve at least some implicit processes (otherwise, older adults’ use of MPT would be impaired). Both groups, however, actually performed the memory task and, hence, also had implicit information available to them while making JOLs (in addition to past-test information). This information was likely correlated with past-test performance and could have aided them when making JOLs, even if utilized implicitly. Unfortunately, there was no way to separate the older and younger adults’ use of implicit or explicit information in Tauber and Rhodes’s study. Therefore, in the present experiment, we adopted a procedure based on Matvey et al.’s (2001) learner and pure observer groups to further examine how people use past-test performance as a cue for JOLs.

If participants use MPT in a mostly theory-based way (Vesonder & Voss, 1985), we would expect trial 2 JOLs for both learners and observers to be sensitive to past-test performance for items. If so, we would expect both groups’ trial 2 JOLs to correlate with the learners’ trial 1 recall performance and to demonstrate an increase in relative accuracy across trials (England & Serra, 2012; Finn & Metcalfe, 2007, 2008). If, however, participants use MPT in a mostly implicit way (Tauber & Rhodes, 2012), we would expect only the learners’ JOLs to show sensitivity to past-test performance on trial 2 and to increase in relative accuracy across trials.

Furthermore, the present method allows us to examine relative accuracy over study–test trials using analyses introduced by Ariel and Dunlosky (2011). These authors decomposed participants’ trial 2 relative accuracy into three subcomponents: (1) the sensitivity of JOLs to MPT, (2) the sensitivity of JOLs to new learning between trials 1 and 2, and (3) the sensitivity of JOLs to new forgetting between trials 1 and 2. They found that MPT information contributed greatly to increases in relative accuracy across study–test trials but that the sensitivity of JOLs to new learning and forgetting over trials also contributed to this increase. Learners (i.e., standard participants in most JOL studies) can likely incorporate MPT and other cues that predict new learning and new forgetting into their JOLs in either an implicit or an explicit way (Ariel & Dunlosky, 2011; Tauber & Rhodes, 2012). Although observers might be able to use explicit, theory-based information about learners’ past-test performance to make JOLs, they might not be able to infer learners’ new learning or forgetting over trials, because they will not have access to experiential cues that are diagnostic of each. Therefore, we would expect learners and observers to make comparable use of MPT to make trial 2 JOLs but would expect the observers to be unable to use privileged information about learners’ new learning or forgetting over trials. If so, we would expect the relative accuracy of the learners’ JOLs to exceed that of the observers’ JOLs.

Experiment 1

Method

Participants, materials, and design

One hundred undergraduates at Kent State University participated in exchange for course credit. The materials were 66 unrelated noun pairs (e.g., spinach–typhoon; corn–planet) from Serra, Dunlosky, and Hertzog (2008). The independent variable was whether a participant was a learner or an observer.

Procedure

Our assignment of participants to groups was quasirandom: some learners had to complete the procedure first to generate the data to which subsequent observers would be yoked. After we obtained some initial learner data, we were able to randomly assign subsequent participants to one of the two groups. For both groups, personal computers running a custom program presented the materials to participants and collected all of their responses.

Learners

Participants in the learner group first read instructions informing them of the memory task (i.e., that they would be studying several-dozen paired associates over two study–test trials) and JOL task. The computer program randomly selected 60 pairs to serve as each learner’s study list for the two study–test trials. The computer program then randomized the order of these pairs for each learner and presented them individually for a fixed duration of 7 s/pair. Learners made a JOL for each item immediately after studying it. They were presented with the first word in the pair and were asked, “How likely do you think you will be to type the second word of this pair when shown the first word approximately 10 minutes from now?” This JOL was made on a scale from 0 (0 % chance of recalling) to 100 (100 % chance of recalling). After learners studied all of the items, the program presented the pairs in a newly randomized order for recall. The instructions encouraged learners to type a response corresponding to each stimulus but allowed omissions. The program then repeated the entire procedure for a second study–test trial in which the learners again studied, judged, and tested over the same items (in newly randomized orders).
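
The list construction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `build_learner_schedule`, the placeholder pair pool, and the seed are hypothetical, and the sketch does not reproduce the custom program used in the experiment.

```python
import random

def build_learner_schedule(pair_pool, list_length=60, n_trials=2, seed=None):
    """Select a study list and randomize study and test orders per trial.

    Hypothetical helper: the same selected pairs are used on every trial,
    with a fresh random order for each study phase and each test phase.
    """
    rng = random.Random(seed)
    # Randomly select the learner's study list from the full pool.
    study_list = rng.sample(pair_pool, list_length)
    schedule = []
    for _ in range(n_trials):
        # rng.sample with the full length returns a shuffled copy.
        study_order = rng.sample(study_list, len(study_list))
        test_order = rng.sample(study_list, len(study_list))
        schedule.append({"study": study_order, "test": test_order})
    return schedule

# Placeholder pool of 66 cue-target pairs (not the actual noun pairs).
pool = [(f"cue{i}", f"target{i}") for i in range(66)]
schedule = build_learner_schedule(pool, seed=1)
```

An observer yoked to this learner would then view the pairs in the same stored study orders.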

Observers

We based the procedure for the observer group on that of the “pure observer” group in Matvey et al. (2001). Observers first read instructions informing them of the task (Table 7 in the Appendix presents these instructions in their entirety). The instructions differed from those of the learner group in that they explained that another participant (i.e., a learner) had already completed the task (i.e., they had already studied 60 paired associates for 7 s each over two study–test trials) and that the observer would be making JOLs about the likelihood that the learner recalled each item on the two tests. Importantly, the instructions indicated that observers were not to study the items themselves but to simply make predictions about the learner’s performance. The instructions also informed the observers that they would see the person’s responses during the recall phases (although this actually occurred only on trial 1). Observers saw each item for 7 s in the same order as their yoked learner did and made a JOL for the learner for each item immediately after seeing it. Specifically, observers were asked, “How likely do you think the original learner was to type the second word of this pair when shown the first word approximately 10 minutes after studying it?”

Importantly, much like the pure observer condition in Matvey et al. (2001), during the trial 1 recall phase, the observers saw each pair in full regardless of whether the learner recalled it correctly on trial 1. We did this to prevent the observers from attempting retrieval so they could not use their own retrieval outcome or retrieval fluency experience as the basis for their JOLs for the learner. For each item, observers also saw the learner’s response appear in full (if the learner entered a response) at the latency it took the learner to enter it. A “Next Item” icon then appeared on the screen, which the observers clicked to move to the next item. On trial 2, observers again made JOLs predicting the learners’ recall on that trial. The observers then completed a surprise trial 2 cued recall test to measure their incidental learning of items.

Results and discussion

Table 1 presents the mean recall, JOL, relative accuracy, MPT correlation, and retrieval fluency correlation for the two groups. Relative accuracy is the mean gamma correlation between participants’ JOLs and recall on a given trial (Dunlosky et al., 2007), and MPT is the mean gamma correlation between participants’ trial 2 JOLs and trial 1 recall (Finn & Metcalfe, 2007, 2008). The retrieval fluency correlations are the mean gamma correlation between the learners’ trial 1 retrieval fluency and trial 2 JOLs (Serra & Dunlosky, 2005). Mean JOL magnitude did not differ for the two groups and did not change across trials. Learners’ recall increased from trial 1 to trial 2, F(1, 49) = 258.4, MSE = 68.3, p < .001, $\eta_p^2$ = .8. Recall on trial 2 was higher for learners than for observers, F(1, 98) = 5.6, MSE = 570.7, p = .02, $\eta_p^2$ = .05.
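
As a rough illustration of the two gamma-based measures defined above, the following Python sketch computes a Goodman–Kruskal gamma for a single participant. The function name and the item-level data are hypothetical rather than taken from the experiments, and dyads tied on either variable are simply dropped, which is an assumption about the convention rather than a documented detail of the reported analyses.

```python
from itertools import combinations

def goodman_kruskal_gamma(jols, recalled):
    """Gamma between JOLs and recall outcomes (1 = recalled, 0 = not).

    Returns None when every dyad is tied, because gamma is then undefined.
    """
    concordant = discordant = 0
    for (j1, r1), (j2, r2) in combinations(zip(jols, recalled), 2):
        product = (j1 - j2) * (r1 - r2)
        if product > 0:
            concordant += 1      # higher JOL went with better recall
        elif product < 0:
            discordant += 1      # higher JOL went with worse recall
        # product == 0: tie on JOL or on recall, dyad is ignored
    total = concordant + discordant
    return None if total == 0 else (concordant - discordant) / total

# Hypothetical item-level data for one participant (six items).
trial2_jols = [80, 20, 60, 10, 90, 40]
trial2_recall = [1, 0, 1, 1, 1, 0]
trial1_recall = [1, 0, 0, 0, 1, 0]

# Relative accuracy: trial 2 JOLs against trial 2 recall.
relative_accuracy = goodman_kruskal_gamma(trial2_jols, trial2_recall)  # 0.5
# MPT correlation: trial 2 JOLs against trial 1 recall.
mpt_correlation = goodman_kruskal_gamma(trial2_jols, trial1_recall)    # 1.0
```

For an observer, the same function would be applied to the observer’s JOLs paired with the yoked learner’s recall outcomes.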

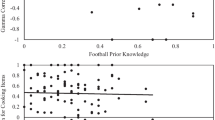

Relative-accuracy scores were higher for the learners (correlating the learners’ JOLs with their own recall) than for the observers (correlating the observers’ JOLs with the learners’ recall), F(1, 95) = 101.5, MSE = 0.1, p < .001, $\eta_p^2$ = .5, and relative accuracy improved across trials, F(1, 95) = 13.3, MSE = 0.1, p < .001, $\eta_p^2$ = .1. That said, the interaction between group and trial was significant, F(1, 95) = 7.4, MSE = 0.1, p = .008, $\eta_p^2$ = .07, since most of the improvement across trials was from the learner group. Accordingly, follow-up t-tests indicated that these relative accuracy scores improved across trials for the learners, t(48) = 4.6, p < .001, d = 0.9, but not for the observers. Furthermore, follow-up t-tests indicated that relative accuracy was higher for the learners than for the observers on both trial 1, t(98) = 5.2, p < .001, d = 1.1, and trial 2, t(98) = 8.8, p < .001, d = 1.8. MPT correlations were higher for the learners (correlating the learners’ trial 1 recall with their trial 2 JOLs) than for the observers (correlating the learners’ trial 1 recall with the observers’ trial 2 JOLs), F(1, 97) = 65.7, MSE = 0.1, p < .001, $\eta_p^2$ = .4. The means of both retrieval fluency correlations were small, and the difference in these means for the two groups was only marginally significant, F(1, 97) = 3.8, MSE = 0.04, p = .06, $\eta_p^2$ = .04. Therefore, neither group used this information as a major basis for their JOLs (but see Matvey et al., 2001; Serra & Dunlosky, 2005).

We also calculated relative accuracy correlations between the observers’ JOLs on trial 2 (made for the learners) and the observers’ own performance on the surprise trial 2 test (Table 1). Observers’ JOLs on trial 2 did not predict their own recall on the test significantly better than they predicted the learners’, t(46) = 1.7, p = .09, d = 0.8, although a trend was apparent.

Decomposing relative accuracy

Next, we examined the contribution of JOL sensitivity to (1) MPT, (2) new learning, and (3) new forgetting to the relative accuracy of JOLs by using a decomposition of this measure (for details, see Ariel & Dunlosky, 2011; Nelson, Narens, & Dunlosky, 2004). This decomposition involves computing trial 2 relative accuracy (gamma) for subsets of items conditionalized on the items’ trial 1 recall status. To examine the influence of MPT on JOL relative accuracy, we analyzed only dyads of items consisting of comparisons between items recalled on trial 1 and items not recalled on trial 1 ($\gamma_{RN}$). To evaluate whether the relative accuracy of JOLs on trial 2 was sensitive to new learning for items across trials, we computed gamma only for dyads of items that were not recalled on trial 1 by learners (referred to as $\gamma_{NN}$). Finally, to assess the influence of sensitivity to forgetting on trial 2, we computed gamma using only dyads of items that were recalled on trial 1 by learners ($\gamma_{RR}$). Given that the dyads used to compute $\gamma_{RN}$, $\gamma_{NN}$, and $\gamma_{RR}$ consist of all pairs used to compute overall relative accuracy, one can derive the value for overall relative accuracy using the following equation:

$$\gamma = P_{RN}\,\gamma_{RN} + P_{NN}\,\gamma_{NN} + P_{RR}\,\gamma_{RR} \tag{1}$$

The $P_{RN}$, $P_{NN}$, and $P_{RR}$ parameters reflect the proportion of dyads used to compute each respective gamma. Thus, the magnitude of the P parameter indicates the weighted contribution of the corresponding factor to overall relative accuracy, with low values reflecting limited influence and high values reflecting large influences (independently of the magnitude of that gamma).
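
The decomposition described above can be sketched in Python for a single participant. The sketch assumes that overall gamma equals the sum of the three subset gammas, each weighted by its P parameter, which follows when P is the share of untied dyads falling in each subset. The function name and the data are hypothetical and are not the values analyzed in the experiments.

```python
from itertools import combinations

def decompose_gamma(jols, recall_t1, recall_t2):
    """Split trial 2 gamma into RN, NN, and RR dyad subsets.

    jols      -- trial 2 JOLs, one per item
    recall_t1 -- trial 1 recall outcomes (1 = recalled, 0 = not)
    recall_t2 -- trial 2 recall outcomes (1 = recalled, 0 = not)
    Returns (overall_gamma, {subset: {"gamma": ..., "P": ...}}).
    """
    # [concordant, discordant] counts for each dyad type
    counts = {"RN": [0, 0], "NN": [0, 0], "RR": [0, 0]}
    items = zip(jols, recall_t1, recall_t2)
    for (j1, p1, r1), (j2, p2, r2) in combinations(items, 2):
        if p1 != p2:
            kind = "RN"   # one item recalled on trial 1, the other not
        elif p1 == 1:
            kind = "RR"   # both recalled on trial 1
        else:
            kind = "NN"   # neither recalled on trial 1
        product = (j1 - j2) * (r1 - r2)
        if product > 0:
            counts[kind][0] += 1
        elif product < 0:
            counts[kind][1] += 1
    total = sum(c + d for c, d in counts.values())
    parts = {}
    for kind, (c, d) in counts.items():
        n = c + d
        parts[kind] = {
            "gamma": (c - d) / n if n else None,  # undefined without untied dyads
            "P": n / total if total else None,
        }
    all_c = sum(c for c, _ in counts.values())
    all_d = sum(d for _, d in counts.values())
    overall = (all_c - all_d) / total if total else None
    return overall, parts

# Hypothetical data for one participant (seven items).
jols = [90, 65, 62, 70, 20, 60, 80]
recall_t1 = [1, 1, 0, 0, 0, 0, 1]
recall_t2 = [1, 0, 0, 1, 0, 1, 1]

overall, parts = decompose_gamma(jols, recall_t1, recall_t2)
weighted_sum = sum(
    p["P"] * p["gamma"] for p in parts.values() if p["gamma"] is not None
)
```

With these made-up values, half of the untied dyads are RN dyads, so $\gamma_{RN}$ carries the largest weight in the overall gamma, and `weighted_sum` matches `overall` up to floating-point error.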

We present means for the parameters in Eq. 1 based on the learners’ recall outcomes on the two trials in the left half of Table 2. Note that the proportion of dyads contributing to the computation of each gamma is functionally equivalent for both groups (i.e., $P_{RN}$, $P_{NN}$, $P_{RR}$). This indicates that differences in relative accuracy on trial 2 are due to differences in discriminative ability between subsets of items and not due to differences in the types or proportion of items contributing to their computation.

First, consider the potential contribution of MPT to trial 2 relative accuracy by examining $\gamma_{RN}$. For $\gamma_{RN}$, both learners’, t(48) = 29.07, p < .001, d = 8.4, and observers’, t(47) = 5.80, p < .001, d = 1.7, values differed significantly from zero. Thus, both groups’ JOLs on trial 2 discriminated between items previously recalled and items previously not recalled by learners. However, $\gamma_{RN}$ was higher for the learners (.83) than for the observers (.33), t(95) = 7.84, p < .001, d = 1.6. This discrimination factored into the magnitude of learners’ JOLs far more than it factored into that of observers’ JOLs, resulting in different overall gamma correlations.

Next, we examined $\gamma_{NN}$, which reflects the contribution of sensitivity to new learning to relative accuracy. Only the learners’ JOLs were sensitive to new learning on trial 2 (i.e., differed from zero), t(48) = 8.72, p < .001, d = 2.5, and accordingly $\gamma_{NN}$ was significantly greater for learners (.42) than for observers (.04), t(92) = 5.99, p < .001, d = 1.3. These data suggest that people’s JOLs tap idiosyncratic cues that predict new learning for items but that these cues, being based on privileged information such as encoding fluency (Ariel & Dunlosky, 2011; Tauber & Rhodes, 2012), are available only to the learners. Although $\gamma_{NN}$ was modest in magnitude even for the learners, it contributed to almost half of the overall relative accuracy values (proportion-wise) on trial 2 for both groups. Given that both the $\gamma_{RN}$ and $\gamma_{NN}$ values for the observers greatly trailed those for the learners, it is not surprising that the observers did not achieve the same overall level of relative accuracy as the learners (Table 1).

Inferential statistics were not conducted for $\gamma_{RR}$ because of the low value for $P_{RR}$ for both groups. The low $P_{RR}$ value indicates that a very small proportion of dyads contributed to the computation of $\gamma_{RR}$ (because so few items that learners correctly recalled on trial 1 were forgotten on trial 2). Thus, even if JOLs were sensitive to forgetting, JOL relative accuracy would not be greatly influenced by this sensitivity.

By performing the same decomposition using the observers’ trial 2 recall, we were able to further consider the sensitivity of participants’ JOLs to new learning and forgetting. The bottom-left quarter of Table 2 reveals that observers were not sensitive to learners’ new learning across trials. When we compared these $\gamma_{NN}$ and $\gamma_{RR}$ values to the values using the observers’ trial 2 recall (bottom-right quarter of Table 2), however, values were higher for $\gamma_{NN}$, t(45) = 2.37, p = .02, and for $\gamma_{RR}$, t(15) = 2.49, p = .03, d = 1.3. Therefore, much like learners, observers used privileged information about their own learning to inform their JOLs for the learners. This suggests that all participants factor new learning and forgetting across trials into their JOLs in an implicit way. Regardless, we conducted a second experiment to address some of the limitations of Experiment 1.

Experiment 2

The results of Experiment 1 revealed that learners and observers both use MPT when constructing JOLs. Given that observers did not engage in actual study or testing before making their trial 2 JOLs (and the learners’ prior recall was not their own prior recall), they apparently used MPT in a theory-based manner (Vesonder & Voss, 1985). Even so, MPT exerted a stronger influence on learners’ than on observers’ JOLs. One explanation for this difference is that observers may have had poorer memory for past-test performance than did learners. Learners’ memory for their own previous test performance is typically near ceiling (Finn & Metcalfe, 2008; Klee & Gardiner, 1976), but it is unknown how well people can remember the past-test performance of others. If observers’ memory for the test performance of learners is inaccurate, it would reduce our estimates of their use of MPT as a basis for the JOLs they made for learners.

In Experiment 2, we directly evaluated observers’ memory for learners’ recall by including a new group, observers with MPT tested. Immediately before making each JOL on trial 2, these observers reported whether the learner whose performance they were predicting correctly recalled that item on the previous test. This allowed us to determine how accurate their memory was for the learners’ previous-trial recall and also allowed us to conduct MPT analyses based on both the learners’ actual recall and these observers’ memory of that recall.

Given the possibility that observers might have impaired memory for the learners’ past-test recall, as compared with the learners themselves (who typically have near-perfect memory for past-test; Finn & Metcalfe, 2008), we also included a second new group, observers with MPT provided, to which we provided explicit information about the learners’ previous recall status for each item prior to their making each trial 2 JOL. This allowed us to equate accuracy of MPT information for these observers and the learners and to rule out an alternative explanation for the influence of MPT on observers’ JOLs. If observers’ use of MPT information is not purely theory based but is also based, in part, on their experience of retrieving learners’ past-test performance from trial 1, then the experience of recalling that event in an episodic way could provide cues that influence MPT use beyond just previous objective recall status. If observers still rely on recall status for items when they are not required to retrieve it themselves, it would definitively demonstrate that people use MPT in a theory-based manner.

It is also possible that we disadvantaged the observers in Experiment 1 by using study materials that did not differ greatly in normative difficulty across items. As a fairer test of observers’ ability to predict memory performance for the learners, in Experiment 2 we used study materials that varied in normative difficulty across items.

Method

Participants, materials, and design

One hundred eighty undergraduates at Texas Tech University participated for course credit. The materials were 120 Lithuanian–English word pairs (e.g., arbata–tea; plyta–brick) from Grimaldi, Pyc, and Rawson (2010), which involved greater variation in normative difficulty across items (at least for English speakers who do not know Lithuanian) than did the unrelated word pairs in Experiment 1. As in Experiment 1, the computer program randomly selected 60 of the 120 pairs for each learner. The independent variable was whether a participant was a learner, an observer, an observer for whom the learner’s MPT info was tested when making trial 2 JOLs, or an observer for whom the learner’s MPT info was provided when making trial 2 JOLs.

Procedure

Our assignment of individual participants to groups was quasirandom in that some participants in the learner group had to complete the procedure first to generate the data to which subsequent observers would be yoked. After we had obtained some initial learner data, however, we were able to randomly assign subsequent participants to one of the four groups.

Learners

The procedure for the learners was the same as that for the learners in Experiment 1, except for the change in the study materials.

Observers

The procedure for the observers was the same as that for the observers in Experiment 1, except for the change in the study materials and the fact that we did not provide any retrieval latency information during the trial 1 test. Instead, as the observers viewed the learners’ performance on the trial 1 test, the learner’s recall output for each pair appeared on the screen at the same moment as the pair itself. As in Experiment 1, we did not provide the observers with any feedback about learners’ recall accuracy. In between the trial 2 study and testing phases (and after we informed the observers of the surprise trial 2 test), we asked observers whether they had expected such a test; they clicked “YES” or “NO” on the screen to answer. Responses to this question did not affect the results, so we do not report them here. Instead, we discuss the implications of this measure in the “General discussion” section.

Observers with MPT tested

The procedure for the observers with MPT tested was the same as that for the observers in Experiment 2, except that we tested their memory for the learners’ past-test performance for each item on trial 2. After they viewed each item during the trial 2 study phase, the program asked them whether or not the learner recalled that item on the trial 1 test. Participants clicked either a “YES” or “NO” icon on the screen and then made their JOL.

Observers with MPT provided

The procedure for the observers with MPT provided was the same as that for the observers with MPT tested in Experiment 2, except that after they had viewed each item during the trial 2 study phase, the program indicated whether the learner recalled that item correctly on the trial 1 test. The participants clicked an “OK” icon to acknowledge the information and then made their JOL.

Results and discussion

Table 3 presents the mean recall, JOL, relative accuracy, and MPT correlation for each group. The overall JOL magnitude did not change across trials, and trial did not interact with group. The JOL magnitude, however, did differ by group, F(3, 176) = 3.2, MSE = 609.0, p = .02, $\eta_p^2$ = .05. Tukey HSD post hoc comparisons revealed that JOLs were significantly lower for the observers with MPT provided than for the standard observer group (p = .01, d = 0.7), but no other differences were significant. Learners’ recall increased from trial 1 to trial 2, F(1, 44) = 193.5, MSE = 60.9, p < .001, $\eta_p^2$ = .8. Recall differed between groups on trial 2, F(3, 176) = 22.8, MSE = 230.3, p < .001, $\eta_p^2$ = .3. Tukey HSD post hoc comparisons revealed that trial 2 recall was significantly higher for the learners, as compared with the three observer groups (ps < .001, ds > 1.1), but the recall of the three observer groups did not differ.

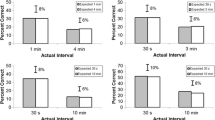

Overall relative accuracy scores (correlating all groups’ JOLs with the learners’ recall) differed by group, F(3, 166) = 20.5, MSE = 0.1, p < .001, $\eta_p^2$ = .3. Tukey HSD post hoc comparisons revealed that relative accuracy was significantly higher for the learners than for the three observer groups (ps < .001, ds > 0.8), but the relative accuracy of the three observer groups did not differ. Relative accuracy was marginally higher for observers with MPT provided than for observers with MPT tested (p = .06, d = 0.7). Overall, relative accuracy improved across trials, F(1, 166) = 29.4, MSE = 0.06, p < .001, $\eta_p^2$ = .2, but the interaction between group and trial was not significant. We conducted follow-up t-tests, as in Experiment 1, to examine whether the relative accuracy of the groups’ JOLs increased across trials. These analyses indicated that relative accuracy improved across trials for the learner group, t(41) = 4.5, p < .001, d = 1.4, for the observer group, t(42) = 2.7, p = .01, d = 0.8, and for the observer group with MPT provided, t(41) = 3.3, p = .002, d = 1.1, but not for the observer group with MPT tested.

As in Experiment 1, we also conducted focused comparisons of relative accuracy between groups. Relative accuracy on trial 1 differed for the four groups, F(3, 170) = 8.3, MSE = 0.1, p < .001, ηp² = .1. Tukey HSD post hoc comparisons revealed that trial 1 relative accuracy was significantly higher for the learners than for the three observer groups (ps < .01, ds > 0.7) but did not differ between the three observer groups. So, although the new materials might have provided more information about the normative difficulty of the items to the observers than did the materials in Experiment 1, learners’ relative accuracy was still better than that of observers on trial 1. Similarly, relative accuracy on trial 2 differed between groups, F(3, 171) = 18.9, MSE = 0.1, p < .001, ηp² = .2. Tukey HSD post hoc comparisons revealed that trial 2 relative accuracy was significantly higher for the learners than for the three observer groups (ps < .005, ds > 0.8). It was also significantly higher for the observer group with MPT provided than for the observer group with MPT tested (p = .002, d = 0.7). These results indicate that no observer group’s relative accuracy matched that of the learner group but that having MPT information provided on trial 2 did improve relative accuracy somewhat (Ariel & Dunlosky, 2011).

MPT correlations differed for the four groups, F(3, 167) = 10.7, MSE = 0.1, p < .001, ηp² = .2. Tukey HSD post hoc comparisons revealed that MPT correlations were significantly higher for the learners than for the observers and the observers with MPT tested (both ps < .001, ds > 1.1) but did not differ from those of the observers with MPT provided (although this difference might also have been significant had we had greater statistical power). Furthermore, MPT correlations were significantly higher for the observers with MPT provided than for the observers and the observers with MPT tested (ps < .05, ds > 0.5). In line with the relative accuracy results, these MPT results suggest that observers were unable to utilize MPT information to the same extent as the learners were, unless it was provided to them. But how well could the observer groups recall the learners’ past-test performance on their own?

The observer group for which we tested MPT on trial 2 correctly recalled 77 % of the learners’ past performance outcomes. This is a respectable score but does not match the near-perfect ability of learners to explicitly recall their own past-test performance (Finn & Metcalfe, 2008). As such, at least some of the impairment in observers’ use of MPT information could stem from their reduced memory for the learners’ past-test performance. To examine this, we calculated MPT correlations for the observer group with MPT tested on the basis of what they thought the learners’ past-test performance was. This yielded a mean MPT correlation of .83 (SD = .32). This value was significantly higher than the value calculated for this group using the learners’ actual past-test performance, t(42) = 5.1, p < .001, d = 1.6. Furthermore, this value was statistically equivalent to that of the learner group, t(84) = 1.0, p = .1, d = 0.2.
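The contrast between the two MPT correlations can be illustrated with a short sketch. It assumes that the MPT correlation is a gamma correlation between trial 1 recall outcomes and trial 2 JOLs, computed once from the learner’s actual outcomes and once from the outcomes the observer reported remembering. All data and variable names below are hypothetical.

```python
from itertools import combinations


def gamma(x, y):
    """Goodman-Kruskal gamma between two sequences; None if all pairs are tied."""
    signs = [(a1 - a2) * (b1 - b2)
             for (a1, b1), (a2, b2) in combinations(zip(x, y), 2)]
    c = sum(s > 0 for s in signs)   # concordant pairs
    d = sum(s < 0 for s in signs)   # discordant pairs
    return (c - d) / (c + d) if c + d else None


# Hypothetical protocol for one learner-observer pair (not real data).
actual_t1 = [1, 1, 0, 0, 1, 0]         # learner's actual trial 1 recall
believed_t1 = [1, 0, 0, 0, 1, 1]       # what the observer remembers of it
trial2_jols = [90, 30, 20, 10, 80, 70] # observer's trial 2 JOLs for the learner

memory_accuracy = sum(a == b for a, b in zip(actual_t1, believed_t1)) / len(actual_t1)
mpt_actual = gamma(actual_t1, trial2_jols)      # based on actual past-test outcomes
mpt_believed = gamma(believed_t1, trial2_jols)  # based on remembered outcomes
print(memory_accuracy, mpt_actual, mpt_believed)
```

In this toy case the observer follows the remembered outcomes perfectly, so the correlation based on remembered outcomes exceeds the one based on actual outcomes, which is the direction of the difference reported above.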

Although the finding that observers’ memory for past-test performance was inaccurate might at first seem to explain away the results of Experiment 1, consider the observer group to which we provided MPT information in Experiment 2. This group used MPT information to inform their JOLs for the learners to the same extent as the actual learners did, and yet their trial 2 relative accuracy did not match that of the learners. Therefore, MPT information alone, even when perfectly accurate, is not sufficient to produce the increases in relative accuracy typically observed across study–test trials (Ariel & Dunlosky, 2011).

We also calculated relative accuracy correlations between each observer group’s trial 2 JOLs (made for the learners) and that group’s own performance on the surprise trial 2 test (Table 3). In this case, relative accuracy differed across the four groups, F(3, 165) = 4.5, MSE = 0.1, p = .005, ηp² = .08. Tukey HSD post hoc comparisons revealed that relative accuracy differed only between the learners and the observers with MPT tested (p = .002, d = 0.8). This suggests that at least two of the observer groups (observers and observers with MPT provided) used information about their own incidental learning of the materials as part of the basis for their JOLs. This further supports our conclusion from Experiment 1 that people incorporate new learning (and new forgetting, when it occurs) across trials into their JOLs in a mostly implicit way. To further explore this, we again conducted decompositions of relative accuracy.

Decomposing relative accuracy

We used the same decomposition as in Experiment 1 to examine the contribution of JOL sensitivity to (1) MPT, (2) new learning, and (3) new forgetting to the relative accuracy of each group’s JOLs. We present means for the parameters in Eq. 1 based on the learners’ recall outcomes on the two trials in the left half of Table 4.
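As an illustration of the logic of this decomposition, the sketch below partitions item pairs (dyads) by their trial 1 outcomes and computes a gamma within each class. Eq. 1 is not reproduced in this section, so the sketch assumes the weighted-sum form γ = P_RN·γ_RN + P_NN·γ_NN + P_RR·γ_RR, with each P defined as the proportion of untied dyads falling in that class; the exact definitions in Ariel and Dunlosky (2011) may differ. The function name and the data are hypothetical.

```python
from itertools import combinations


def decompose_gamma(t1_recall, t2_jols, t2_recall):
    """Split trial 2 gamma by trial 1 dyad class.

    RN: one item recalled and one not recalled on trial 1 (sensitivity to MPT)
    NN: neither item recalled on trial 1 (sensitivity to new learning)
    RR: both items recalled on trial 1 (sensitivity to new forgetting)
    """
    counts = {"RN": [0, 0], "NN": [0, 0], "RR": [0, 0]}  # [concordant, discordant]
    items = list(zip(t1_recall, t2_jols, t2_recall))
    for (p1, j1, r1), (p2, j2, r2) in combinations(items, 2):
        sign = (j1 - j2) * (r1 - r2)
        if sign == 0:
            continue  # tied dyads do not contribute to gamma
        cls = "RN" if p1 != p2 else ("RR" if p1 == 1 else "NN")
        counts[cls][0 if sign > 0 else 1] += 1
    total = sum(c + d for c, d in counts.values())
    parts = {}
    for cls, (c, d) in counts.items():
        n = c + d
        parts[cls] = {
            "P": n / total if total else 0.0,       # assumed: share of untied dyads
            "gamma": (c - d) / n if n else None,    # undefined for an empty class
        }
    overall = sum(c - d for c, d in counts.values()) / total if total else None
    return overall, parts


# Hypothetical data for one participant (not from the experiments).
t1_recall = [1, 1, 0, 0, 0, 1]
t2_jols = [90, 70, 60, 20, 62, 65]
t2_recall = [1, 1, 1, 0, 0, 0]

overall, parts = decompose_gamma(t1_recall, t2_jols, t2_recall)
weighted_sum = sum(p["P"] * p["gamma"] for p in parts.values() if p["gamma"] is not None)
print(overall, parts, weighted_sum)
```

Under these assumptions the weighted sum reproduces the overall gamma exactly, which makes clear why a class with a very small P contributes little to relative accuracy regardless of the gamma within that class.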

First, consider the potential contribution of MPT to trial 2 relative accuracy by examining γ_RN. The mean value of γ_RN differed by group, F(3, 167) = 8.7, MSE = 0.1, p < .001, ηp² = .1. More specifically, Tukey HSD post hoc comparisons revealed that this value was higher for the learners than for the three observer groups (p < .001, d = 1.2 for the observers and observers with MPT tested; p < .05, d = 0.6 for the observers with MPT provided). The values for the three observer groups did not differ from each other. Therefore, this discrimination factored into the magnitude of learners’ JOLs far more than it factored into the magnitude of the observer groups’ JOLs (even for the observer group to which we provided accurate MPT information), resulting in different overall gamma correlations.

Next, we examined γ_NN, which reflects the contribution of sensitivity to new learning to relative accuracy. The mean value of γ_NN differed by group, F(3, 169) = 14.6, MSE = 0.1, p < .001, ηp² = .2. More specifically, Tukey HSD post hoc comparisons revealed that this value was higher for the learners than for any of the observer groups (ps < .001, ds > 0.9), but the values for the observer groups did not differ. As in Experiment 1, these data suggest that people’s JOLs tap idiosyncratic cues that predict new learning for items but that these cues, being based on privileged information such as encoding fluency, are available only to the learners.

Inferential statistics were not conducted for γ_RR because the low values for P_RR indicate that a very small proportion of dyads contributed to the computation of γ_RR (because so few items correctly recalled on trial 1 were forgotten on trial 2). Thus, even if JOLs were sensitive to forgetting, JOL relative accuracy would not be greatly influenced by this sensitivity.

By performing the same decomposition of relative accuracy using the observers’ trial 2 recall (right half of Table 4), we were able to further consider the sensitivity of participants’ JOLs to new learning. Setting the learners aside, the left half of Table 4 shows that the observer groups were not sensitive to learners’ new learning across trials (see γ_NN). However, when we computed γ_NN values using the observers’ own trial 2 recall (right half of Table 4), γ_NN differed significantly from zero for all three observer groups (ps < .005, ds > 1.0). Therefore, observers used privileged information about their own learning to inform their JOLs for the learners.

General discussion

The purpose of the present experiments was to examine improvements in the relative accuracy of JOLs across study–test trials. More specifically, we examined whether people factor MPT into their JOLs in either an implicit or an explicit way. To do so, we used an established method (i.e., the learner–observer method; Matvey et al., 2001) and a statistical decomposition of the relative accuracy measure (Ariel & Dunlosky, 2011). Importantly, our results resolve the conflicting results of earlier studies (Tauber & Rhodes, 2012; Vesonder & Voss, 1985).

Our results demonstrate that both learners and observers used learners’ test performance on trial 1 as a basis for JOLs for the learners on trial 2 but that the learners made greater use of this information than did the observers. Given that both groups used past-test information as a cue for trial 2 JOLs, and that observers could not use past-test information implicitly (i.e., on the basis of an experience of recalling or not recalling the items themselves on trial 1), it seems that people in general use this information in an explicit, theory-based way to make JOLs (Vesonder & Voss, 1985). Furthermore, when making trial 2 JOLs, learners used information about their own new learning across trials that was privileged to them and that likely stemmed from their experiences studying the materials (e.g., encoding fluency). Relatedly, observers consistently used privileged information about their own memory to make JOLs. This finding further supports our conclusion that people use information related to new learning across trials as a cue in an implicit way, since observers’ judgments for another person were biased by their own mental states (including their incidental learning). So, why do our results and conclusions differ from those of previous studies examining MPT?

First, Vesonder and Voss (1985) concluded that both learners and observers made explicit—and equal—use of past-test information to make JOLs across study trials. Our results further support their conclusion that people use past-test information in an explicit, theory-based way, but not their conclusion that learners and observers do so to an equivalent degree. Their results were likely limited by having their participants provide binary JOLs that did not allow them to make fine-grained discriminations between items. In contrast, our use of a 0 %–100 % likelihood scale made it clear that observers could indeed factor the learners’ past-test performance into their JOLs but that they could not make further discriminations based on new learning or new forgetting, as did the learners (see Tables 2 and 3). As such, the relative accuracy of the observers on trial 2 was lower than that of the learners.

Second, Tauber and Rhodes (2012) compared older and younger adults’ use of MPT and found that both groups made equal use of this heuristic to inform their JOLs. However, both of their groups actually performed the memory task and, hence, had implicit information available to them while making JOLs in addition to past-test information. Importantly, and as acknowledged by Tauber and Rhodes, our decomposition of relative accuracy makes it clear that past-test information is not the only factor that leads to increases in this measure across study–test trials (Ariel & Dunlosky, 2011). As such, their older adults likely used other cues besides MPT to make JOLs. In addition, the results of our observers demonstrate that, on its own, explicit past-test information is hardly sufficient to produce large increases in relative accuracy across trials (even when we provided perfectly accurate MPT information to a group of observers in Experiment 2). So, even if older adults truly have impaired episodic memory for their own past-test performance (which Tauber & Rhodes did not actually demonstrate), they may still be able to make highly accurate JOLs across study–test trials by relying on other implicit cues that are predictive of new learning and forgetting across trials.

Taken together, the present results further suggest that participants utilize a combination of cues in both explicit, theory-based ways (e.g., MPT) and privileged, implicit ways (e.g., factors related to new learning) to make JOLs across multiple study–test trials (Ariel & Dunlosky, 2011; Tauber & Rhodes, 2012). Importantly, it is the use of cues in implicit (i.e., mnemonic) ways that produces the greatest increases in relative accuracy across trials (Koriat, 1997). Although MPT is important for aiding the absolute accuracy of participants’ JOLs across trials (e.g., reducing underconfidence; see England & Serra, 2012), participants’ explicit use of past-test information to inform their JOLs seems to contribute little to the typical increases in the relative accuracy of JOLs across trials (e.g., observers with MPT provided in Experiment 2).

Potential limitations

Differences in JOL effort

One issue of concern with the present experiments is that observers might have put less thought or effort into making JOLs than did learners, which might explain why observers’ JOLs were, overall, less accurate than learners’ JOLs. To consider this, we report the mean amount of time (in seconds) that participants spent making JOLs in Table 5. Indeed, in Experiment 1, learners spent more time making JOLs than did observers on both trial 1 (p = .04, d = 0.4) and trial 2 (p = .002, d = 0.6); in Experiment 2, the groups did not differ on trial 1 (ps > .05), but learners spent more time making JOLs on trial 2 than did the other groups (ps < .005, ds > 0.6). There are, however, several potential reasons why learners might have spent more time making JOLs than the other groups.

First, it is possible that learners took longer than observers to make JOLs because learners engaged in more analytical thinking than did the observers. This idea, however, is inconsistent with our decomposition results, which showed that both groups used learners’ past-test performance as a cue in a presumably analytic way. A second possibility is that learners needed more time than did the observers to factor experiential information into their JOLs, given that we attempted to minimize experiential information for the observers. This possibility is better supported by our decomposition results than is the first possibility, but we nevertheless do not have enough information to conclude that this is why JOL response times differed. Third, given that learners’ study of the items was experimenter-controlled, it is also possible that learners spent more time making JOLs than did observers because learners used the JOL prompts as an opportunity for additional study time. This possibility seems likely given that we instructed the learners to encode the items and instructed observers not to encode them. Finally, it is also possible that observers began to consider their JOLs during the “study phase” of each item and then only needed time to enter their JOL value when given the prompt. In contrast, learners were presumably studying each item during the study phase and did not begin to form their JOLs until they reached the JOL prompt. This possibility would also produce the present pattern.

Deliberate encoding by observers

Despite our instructions to the contrary, it is possible that some observers might have chosen to purposely study the items, which could reduce the validity of our conclusions. If so, the observers’ recall should have been equal to—if not greater than—that of the learners, which did not obtain.

To evaluate this possibility further, we asked participants in the three observer groups in Experiment 2 whether or not they expected the surprise trial 2 test. Across the three observer groups, approximately 60 % of the participants reported that they did expect such a test, and approximately 40 % reported that they did not expect such a test. Although this outcome at first seems problematic, we suspect that many “yes” responses were the result of a hindsight or self-serving bias. To test this assertion, we analyzed the groups’ trial 2 recall performance (Table 6) on the basis of their self-report of whether or not they expected the test. The observer groups’ recall (Rows 2–4 of Table 6) did not differ on the basis of whether or not they reported expecting the test, F(1, 129) = 0.1, MSE = 187.5, p = .7, ηp² = .001. Furthermore, consider that the learners’ recall was significantly higher (all ps < .001, ds > 1.2) than that of the three subsets of observers who reported that they expected the test (left half of Table 6). These outcomes support our assertion that the observers did not try to learn the items themselves.

Broader implications of the present results

In addition to increasing our understanding of how people use the MPT heuristic to inform their JOLs, the present results also have implications for understanding how people use cues to inform their JOLs in general (Koriat, 1997, 2008). Consider that trial 1 relative accuracy differed for the groups in the present experiments, even when the study materials varied in normative difficulty across items (Experiment 2). Koriat (1997) reported that switching one participant’s JOLs with those of another participant (matched by item) produced equivalent relative accuracy on an initial study–test trial. This was taken as evidence that participants used static intrinsic information about the items to make their JOLs. If participants in our experiments used the same intrinsic or normative cues to make their JOLs, however, then relative accuracy should have been equivalent for the groups. Given that this did not obtain, learners presumably used at least some information about their experience studying the items (which observers had no access to) to make their JOLs, which is why relative accuracy differed by group in the present experiments (even on trial 1 and even in Experiment 2, when the items varied in normative difficulty). Our observers did not have access to comparable implicit information, because they apparently were not studying the items themselves. This possibility can help explain why JOLs demonstrated equivalent accuracy when switched across participants (Koriat, 1997) and why older adults could demonstrate relative accuracy comparable to that of younger adults (Tauber & Rhodes, 2012), yet our observers demonstrated impairments. Put differently, people’s utilization of implicit cues likely requires performing the task in order to experience the implicit cues themselves (Koriat & Ackerman, 2010).

Although people’s utilization of implicit cues is believed to be largely nonconscious and, therefore, does not require a person to hold an explicit theory to apply it (Koriat et al., 2008; Mueller et al., 2013), recent research has demonstrated that it is nevertheless possible to provide an explicit theory that can affect a person’s use of implicit information to make a judgment (including overriding past predictability of the cue). For example, Briñol et al. (2006) affected people’s use of retrieval fluency (a presumably implicit cue) by telling participants that experienced ease-of-retrieval was either positive or negative and—depending on the experimental condition—that the person experiencing it was either intelligent or unintelligent. Although people typically apply a heuristic indicating that ease-of-retrieval is associated with better memory (Matvey et al., 2001; Serra & Dunlosky, 2005) and continue to do so when explicitly told that this experience reflects intelligence, people quite readily applied a heuristic indicating that their experienced difficulty of retrieval was associated with better memory when told that this experience reflected intelligence (Briñol et al., 2006). Combined with other evidence that simple changes to the metacognitive situation, such as framing JOLs differently, can affect people’s cue utilization (Serra & England, 2012) and that people likely use explicit beliefs about how specific cues affect their memory to inform their JOLs (Mueller et al., 2013), future theories about how people select and use cues for metacognitive judgments may need to include a more nuanced discrimination between theory-based and implicit cue utilization.

References

Ariel, R., & Dunlosky, J. (2011). The sensitivity of judgment-of-learning resolution to past test performance, new learning, and forgetting. Memory & Cognition, 39, 171–184. doi:10.3758/s13421-010-0002-y

Brennan, S. E., & Williams, M. (1995). The feeling of another’s knowing: Prosody and filled pauses as cues to listeners about the metacognitive states of speakers. Journal of Memory and Language, 34, 383–398.

Briñol, P., Petty, R. E., & Tormala, Z. L. (2006). The malleable meaning of subjective ease. Psychological Science, 17, 200–206.

Dienes, Z., & Perner, J. (1999). A theory of implicit and explicit knowledge. Behavioral and Brain Sciences, 22, 735–808.

Dunlosky, J., & Metcalfe, J. (2009). Metacognition. Thousand Oaks, CA: Sage Publications, Inc.

Dunlosky, J., Serra, M. J., & Baker, J. M. C. (2007). Metamemory. In F. T. Durso, R. S. Nickerson, S. T. Dumas, S. Lewandowsky, & T. J. Perfect (Eds.), Handbook of applied cognition (2nd ed., pp. 137–161). Chichester, West Sussex, England: John Wiley & Sons, Ltd.

England, B. D., & Serra, M. J. (2012). The contribution of anchoring and past-test performance to the underconfidence-with-practice effect. Psychonomic Bulletin & Review, 19, 715–722. doi:10.3758/s13423-012-0237-7

Finn, B., & Metcalfe, J. (2007). The role of memory for past test in the underconfidence with practice effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 238–244. doi:10.1037/0278-7393.33.1.238

Finn, B., & Metcalfe, J. (2008). Judgments of learning are influenced by memory for past test. Journal of Memory and Language, 58, 19–34. doi:10.1016/j.jml.2007.03.006

Gigerenzer, G., & Goldstein, D. G. (1996). Reasoning the fast and frugal way: Models of bounded rationality. Psychological Review, 103, 650–669.

Grimaldi, P. J., Pyc, M. A., & Rawson, K. A. (2010). Normative multitrial recall performance, metacognitive judgments, and retrieval latencies for Lithuanian–English paired associates. Behavior Research Methods, 42, 634–642. doi:10.3758/BRM.42.3.634

Jameson, A., Nelson, T. O., Leonesio, R. J., & Narens, L. (1993). The feeling of another person’s knowing. Journal of Memory and Language, 32, 320–335.

King, J. F., Zechmeister, E. B., & Shaughnessy, J. J. (1980). Judgments of knowing: The influence of retrieval practice. American Journal of Psychology, 93, 329–343.

Klee, H., & Gardiner, J. M. (1976). Memory for remembered events: Contrasting recall and recognition. Journal of Verbal Learning and Verbal Behavior, 15, 471–478.

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126, 349–370.

Koriat, A. (2008). Easy comes, easy goes? The link between learning and remembering and its exploitation in metacognition. Memory & Cognition, 36, 416–428. doi:10.3758/MC.36.2.416

Koriat, A., & Ackerman, R. (2010). Metacognition and mindreading: Judgments of learning for self and other during self-paced study. Consciousness and Cognition, 19, 251–264. doi:10.1016/j.concog.2009.12.010

Koriat, A., & Ma’ayan, H. (2005). The effects of encoding fluency and retrieval fluency on judgments of learning. Journal of Memory and Language, 52, 478–492. doi:10.1016/j.jml.2005.01.001

Koriat, A., Nussinson, R., Bless, H., & Shaked, N. (2008). Information-based and experience-based metacognitive judgments: Evidence from subjective confidence. In J. Dunlosky & R. A. Bjork (Eds.), Handbook of memory and metamemory (pp. 117–134). Mahwah, NJ: Erlbaum.

Matvey, G., Dunlosky, J., & Guttentag, R. (2001). Fluency of retrieval at study affects judgments of learning (JOLs): An analytic or nonanalytic basis for JOLs? Memory & Cognition, 29, 222–232.

Mueller, M. L., Tauber, S. K., & Dunlosky, J. (2013). Contributions of beliefs and processing fluency to the effect of relatedness on judgments of learning. Psychonomic Bulletin & Review, 20, 378–384. doi:10.3758/s13423-012-0343-6

Nelson, T. O., Narens, L., & Dunlosky, J. (2004). A revised methodology for research on metamemory: Pre-judgment recall and monitoring (PRAM). Psychological Methods, 9, 53–69. doi:10.1037/1082-989X.9.1.53

Schwartz, B. L., Benjamin, A. S., & Bjork, R. A. (1997). The inferential and experiential basis of metamemory. Current Directions in Psychological Science, 6, 132–137.

Serra, M. J., & Dunlosky, J. (2005). Does retrieval fluency contribute to the underconfidence-with-practice effect? Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, 1258–1266. doi:10.1037/0278-7393.31.6.1258

Serra, M. J., & Dunlosky, J. (2010). Metacomprehension judgments reflect the belief that diagrams improve learning from text. Memory, 18, 698–711. doi:10.1080/09658211.2010.506441

Serra, M. J., Dunlosky, J., & Hertzog, C. (2008). Do older adults show less confidence in their monitoring of learning? Experimental Aging Research, 34, 379–391. doi:10.1080/03610730802271898

Serra, M. J., & England, B. D. (2012). Magnitude and accuracy differences between judgments of remembering and forgetting. Quarterly Journal of Experimental Psychology, 65, 2231–2257. doi:10.1080/17470218.2012.685081

Shanks, D. R., & St John, M. F. (1994). Characteristics of dissociable human learning systems. Behavioral and Brain Sciences, 17, 367–395.

Tauber, S. K., & Rhodes, M. G. (2012). Multiple bases for young and older adults’ judgments-of-learning (JOLs) in multitrial learning. Psychology and Aging, 27, 474–483. doi:10.1037/a0025246

Undorf, M., & Erdfelder, E. (2011). Judgments of learning reflect encoding fluency: Conclusive evidence for the ease-of-processing hypothesis. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 1264–1269. doi:10.1037/a0023719

Undorf, M., & Erdfelder, E. (2013, online version). Separation of encoding fluency and item difficulty effects on judgments of learning. Quarterly Journal of Experimental Psychology. doi:10.1037/a0023719

Vesonder, G. T., & Voss, J. F. (1985). On the ability to predict one’s own responses while learning. Journal of Memory and Language, 24, 363–376.

Cite this article

Serra, M.J., Ariel, R. People use the memory for past-test heuristic as an explicit cue for judgments of learning. Mem Cogn 42, 1260–1272 (2014). https://doi.org/10.3758/s13421-014-0431-0

DOI: https://doi.org/10.3758/s13421-014-0431-0