Abstract

Behavior reduced as a consequence of extinction or intervention can relapse. According to behavioral momentum theory, the extent to which behavior persists and relapses once it has been eliminated depends on the relative training reinforcement rate among discriminative stimuli. In addition, studies of context renewal reveal that relapse depends on the similarity between the training stimulus context and the test stimulus context following disruption by extinction. In the present experiments with pigeons, we arranged different reinforcement rates in the presence of distinct discriminative stimuli across components of a multiple schedule. Following extinction, we attempted to reinstate responding in the presence of those target components with response-independent food presentations. Importantly, we arranged the reinstating food presentations either within the target components or in separate components, either paired with extinction (Experiment 1) or reinforcement (Experiment 2) during baseline. Reinstatement increased with greater training reinforcement rates when the reinstating food presentations were arranged in the target components or in separate components paired with reinforcement during training. Reinstatement was smaller and was not systematically related to training reinforcement rates in the target components when the reinstating food presentations occurred in separate components paired with extinction. These findings suggest that relapse depends on the history of reinforcement associated with the discriminative stimuli in which the relapse-inducing event occurs.

From the three-term contingency (Skinner, 1969), it is possible to derive the factors affecting stable performance. The antecedent discriminative stimulus sets the occasion for responding and dictates which response in an organism’s repertoire may produce a given consequence, while the rate of reinforcement mediates the rate at which the response will be emitted. Whereas some research has focused solely on certain subportions of the three-term contingency, behavioral momentum theory asserts that both aspects of the contingency affect responding (Nevin & Grace, 2000). According to behavioral momentum theory, the rate of responding during stable baseline conditions is governed by the contingency between responding and reinforcement (i.e., the response-reinforcer relation). Thus, the rate of a response is a direct reflection of the rate of reinforcement that follows the behavior (e.g., Herrnstein, 1970). In addition, the relation between an antecedent discriminative stimulus and the rate of reinforcement obtained in the presence of the stimulus affects resistance to disruption, or the persistence, of the behavior (i.e., the stimulus-reinforcer relation; see Nevin, 2009; Nevin & Grace, 2000, for reviews).

A primary concern of behavioral momentum theory is deriving the set of conditions that lead to greater persistence independently from factors that influence response rates (Nevin, Tota, Torquato, & Shull, 1990). Podlesnik and Shahan (2009), for example, assessed the effect of response-reinforcer and stimulus-reinforcer relations established during training on response persistence in the presence of different discriminative stimuli. They arranged a multiple schedule of reinforcement with pigeons, in which two differentially signaled components alternated on a single key within sessions. In the presence of both components, responding was maintained by equal variable-interval (VI) 120-s schedules of reinforcement (Ferster & Skinner, 1957). However, in one of the components, response-independent reinforcer presentations were arranged according to a variable-time (VT) 180-s schedule. These response-independent reinforcer presentations enhanced the stimulus-reinforcer relation in that component during baseline by increasing the number of reinforcers presented in that component. However, the added reinforcers also degraded the response-reinforcer relation in that component, as revealed by lower rates of responding in that component (see also Nevin et al., 1990; Podlesnik & Shahan, 2009, 2010).

Once stable performance was reached, Podlesnik and Shahan (2009) disrupted responding in both components with extinction to assess persistence. Measures of behavioral persistence are obtained by assessing response rates during individual extinction sessions relative to baseline response rates. Although ongoing exposure to extinction eventually eliminated responding in both components, Podlesnik and Shahan observed that responding in the component with the added response-independent reinforcer presentations persisted for longer, as seen by a slower decrease in responding in relation to the other component. In other words, they found that resistance to extinction was greater in the presence of the component stimulus with the added reinforcer presentations, even though baseline response rates were lower. Thus, added reinforcers in the presence of one component stimulus had two separable effects: They weakened the response-reinforcer relation during baseline but strengthened the stimulus-reinforcer relation (see Nevin & Grace, 2000; Nevin & Shahan, 2011). Moreover, greater reinforcement rates produce greater resistance to disruption irrespective of whether the additional reinforcement is presented response independently, as in Podlesnik and Shahan (2009), dependent on the same response (e.g., Nevin, 1974; Nevin, Mandell, & Atak, 1983), or contingent upon a concurrently available response (e.g., Mauro & Mace, 1996; Nevin et al., 1990; Podlesnik, Bai, & Elliffe, 2012; Rau, Pickering, & McLean, 1996). These findings are generally robust and have been observed in a variety of animal species, ranging from fish to humans, as well as several response types and reinforcer manipulations (Ahearn, Clark, Gardenier, Chung, & Dube, 2003; Cohen, 1996; Grimes & Shull, 2001; Harper, 1999; Igaki & Sakagami, 2004; Mace et al., 1990; Shahan & Burke, 2004). 
However, exceptions to the finding that resistance to disruption is independent of baseline response rates have been observed (e.g., Lattal, 1989; Nevin, Grace, Holland, & McLean, 2001; Podlesnik, Jimenez-Gomez, Ward, & Shahan, 2006).

Another feature of persistent behavior is its tendency to return, or relapse. Once responding reached near-zero rates after several sessions of extinction, Podlesnik and Shahan (2009) reinstated extinguished responding by presenting two reinforcers in both components only at the beginning of sessions, either response dependent or independent. We will term the reinforcer presentations presented during tests for reinstatement reinstating reinforcers. Reinstatement of responding, also estimated by comparing response rates during tests for reinstatement to baseline response rates, was, like resistance to extinction, greater in the presence of the component stimulus associated with a higher rate of reinforcement during baseline. The same was true in separate experiments assessing different types of relapse preparations (i.e., resurgence, context renewal). Thus, their results suggest that both persistence and relapse are a function of baseline stimulus-reinforcer relations (see Podlesnik & DeLeon, 2015; Podlesnik & Shahan, 2010; Pritchard, Hoerger, & Mace, 2014, for reviews).

The amount of relapse that may follow extinction also depends on contextual factors across the three successive training, extinction, and relapse phases (see reviews by Bouton, 2004; Bouton & Swartzentruber, 1991). In these studies, context is defined as more global environmental features of the operant chamber (e.g., odor, pattern on walls, floor texture) as opposed to the more local discriminative stimuli used by Podlesnik and Shahan (2009; e.g., key color). For example, in an ABA context-renewal procedure, responding is conditioned in Context A and extinguished in a novel Context B. Following extinction in Context B, Context A is reintroduced, which produces relapse of the trained response. Renewal of responding also occurs in other forms, including the transition to an untrained and novel Context C in the final phase. Thus, simply ending extinction is sufficient to produce relapse. Despite observing both ABA and ABC renewal, the extent of the increase in responding typically is much stronger in ABA renewal (Bouton & Bolles, 1979; Harris, Jones, Bailey, & Westbrook, 2000; Havermans, Keuker, Lataster, & Jansen, 2005; Neumann, 2006; Üngör & Lachnit, 2006; Zironi, Burattini, Aicardi, & Janak, 2006; see review by Bouton, Winterbauer, & Todd, 2012). This implies that both the removal of the extinction context (Context B), as well as a return to the original training context (Context A), contribute to the renewal effect.

Greater renewal when returning to Context A than Context C implies an important role for the relation between context signaled by global stimuli and reinforcement in producing relapse. Such relations between context and reinforcement have also been shown to determine the amount of reinstatement (Baker, Steinwald, & Bouton, 1991; Bouton & Bolles, 1979; Bouton & King, 1983; Bouton & Peck, 1989; Schachtman, Brown, & Miller, 1985). Baker et al. (1991), for example, first trained rats to respond to a single lever for food reinforcers on a VI 64-s schedule in two contexts signaled by two distinct odors. Second, responding was extinguished in both contexts. Third, they differentially manipulated the relation between context and reinforcement – the lever was retracted and the rats received response-independent reinforcer presentations in one context and no presentations in the other context. Finally, in the following session, the lever was reintroduced, and presenting reinstating reinforcers in both contexts produced greater reinstatement in the context paired with response-independent reinforcers in the previous phase in the absence of the lever. These results suggest that global contexts with greater associations with reinforcement increase the effectiveness of the reinstating reinforcers to produce relapse in those contexts.

Both contextual factors (e.g., Baker et al., 1991) and training rates of reinforcement (e.g., Podlesnik & Shahan, 2009) influence the amount of relapse of extinguished operant behavior. The present experiments assessed the interaction of local contextual factors (i.e., alternating component stimuli) and training reinforcement rates on reinstatement. Across two experiments, pigeons were trained on multiple schedules of reinforcement during a baseline condition, as shown in Fig. 1. We arranged Target and Non-Target components. The Target components were the Rich and Lean components, in which we arranged higher and lower rates of response-dependent reinforcers, respectively. The Non-Target components were the Other 1 and Other 2 components, in which we arranged extinction (Experiment 1) or a moderate reinforcement rate compared to the Rich and Lean components (Experiment 2). Following baseline, we assessed extinction and then one of two types of reinstatement tests. Our primary questions were whether presenting the reinstating food reinforcers either in the Target components (Same-Context Reinstatement; SCR in Fig. 1) or Non-Target components (Different-Context Reinstatement; DCR in Fig. 1) would produce differential reinstatement (1) as a function of reinstatement type (SCR vs. DCR) and/or (2) as a function of the different baseline reinforcement rates in the Target components (Rich vs. Lean components).

An illustration of the multiple schedule of reinforcement in effect across conditions and experiments. Across all conditions and experiments, four components of a multiple schedule alternated within sessions. The schedule associated with each of the components is shown below the keys. The components associated with a VI 30-s and a VI 120-s schedule across experiments were termed the Target components. The other two components, which were associated with either extinction (Experiment 1) or VI 48 s (Experiment 2), were termed the Non-Target components. Baseline was followed by extinction (nonreinforcement in all components) and one type of reinstatement. These reinstatement tests, Same-Context Reinstatement (SCR) and Different-Context Reinstatement (DCR), differed with respect to the stimulus context in which the reinstating reinforcers (Rein) were presented

Experiment 1

In Experiment 1, we arranged nonreinforcement during baseline in the Non-Target components and assessed whether reinstatement between the Rich and Lean Target components was affected by the component stimulus in which the reinstating response-independent reinforcers were presented.

Method

Subjects

Six pigeons, numbered E1 to E6, with prior experience in operant-responding experiments were used. The pigeons’ prior experience consisted of a single experiment arranging a three-component multiple schedule of reinforcement with different discriminative stimuli from those used in the current experiment. The pigeons were housed individually in cages in a room containing other pigeons with a 12-hour light–dark cycle. When experimental sessions were scheduled, the pigeons were transported to a separate room, which contained four Med Associates pigeon chambers. Experimental sessions were run at approximately the same time each day starting at 8:00 a.m. Sessions were arranged once daily over 5 days each week. The weight of all pigeons was kept at 85% (±15 g) of free-feeding body weight by postsession supplementary feeding of mixed grain when necessary. Water and grit were always available in the pigeons’ home cages.

Apparatus

Experimental sessions took place in four sound- and light-proof Med Associates pigeon chambers. Each chamber measured 300 mm in height, 250 mm in width, and 295 mm in depth. The front, back, and left side walls of the chambers were constructed of clear Plexiglas, while the floor was constructed of metal rods that were placed 30 mm apart. The right side wall of each chamber contained the response panel. Each response panel was equipped with three plastic response keys that were 190 mm from the floor, 25 mm in diameter and 60 mm away from adjacent keys. All response keys could be lit white, red, green, blue, and orange, but only the center key was used. Responses to a lit key that exceeded approximately .1 N in force closed a micro switch behind the keys, produced a feedback click, and were registered for subsequent analyses.

Key pecks to the lit center key were occasionally followed by food delivery according to VI schedules. The hopper that contained the wheat reinforcer was located behind a magazine that was situated 40 mm above the floor on the response panel. The magazine was 50 mm high, 70 mm wide, and 50 mm deep. During reinforcement, the light located behind the magazine aperture was lit and the hopper was raised for 2 s, providing access to wheat. Key lights were extinguished during reinforcement. A PC running MED-PC software, located in an adjacent room, arranged and recorded all experimental events. Across conditions, we recorded the number of responses emitted in the presence of each component.

Procedure

The start of a session and the end of a component were marked by a 40-s period in blackout. At this time, all lights in the chambers were turned off with the exception of the house light, which was located on the left wall. At the end of the 40-s blackout, the center key was lit marking the start of a 60-s component and the availability of food at different rates. The order of components within sessions was selected randomly without replacement, and each component was equally likely to begin a session. Each component was presented nine times each session.
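The component ordering described above can be sketched as follows. This is a minimal illustration and not the authors' MED-PC program. It assumes that "randomly without replacement" means each successive block of four components is an independent random permutation, which the text does not state explicitly. The names `COMPONENTS`, `PRESENTATIONS_PER_SESSION`, and `session_order` are hypothetical.

```python
import random

# Component labels follow the paper; the constant names are illustrative.
COMPONENTS = ["Rich", "Lean", "Other 1", "Other 2"]
PRESENTATIONS_PER_SESSION = 9  # each component is presented nine times


def session_order(rng):
    """Build one session's component order from nine shuffled blocks.

    Assumption: sampling without replacement is applied within blocks of
    four, so every component appears once per block and any component is
    equally likely to start the session.
    """
    order = []
    for _ in range(PRESENTATIONS_PER_SESSION):
        block = COMPONENTS[:]  # copy so the master list is never mutated
        rng.shuffle(block)     # random permutation of the four components
        order.extend(block)
    return order


order = session_order(random.Random(0))
```

Under this assumption a session contains 36 component presentations, each preceded by the 40-s blackout.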

Baseline

The baseline reinforcement rates in the four components of the multiple schedule were differentially signaled by key-light colors. A white center key signaled a VI 30-s schedule (hereafter Rich component). A green key signaled a VI 120-s schedule (hereafter Lean component). The Rich and Lean components will be referred to as the Target components (see Fig. 1). The VI schedules in the Rich and Lean components were based on eight-interval progressions, as described by Fleshler and Hoffman (1962). Orange and blue keys were both associated with extinction and comprised the Other 1 and Other 2 components. These components will be referred to as the Non-Target components (see Fig. 1).
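For readers unfamiliar with such progressions, the sketch below generates eight intervals for each Target schedule. It assumes the commonly cited closed form of the progression, t_n = T[1 + ln N + (N − n) ln(N − n) − (N − n + 1) ln(N − n + 1)], with 0 · ln 0 taken as 0. The paper does not state which form or software routine was used, so the function name `fleshler_hoffman` and this formula are illustrative assumptions.

```python
import math


def fleshler_hoffman(mean_s, n=8):
    """Return n intervals (in seconds) whose arithmetic mean is mean_s.

    Assumption: the commonly cited closed form of the progression is
    used; the source only says the schedules were based on
    eight-interval progressions.
    """
    def xlogx(k):
        # Convention: 0 * ln(0) is treated as 0.
        return k * math.log(k) if k > 0 else 0.0

    return [
        mean_s * (1 + math.log(n) + xlogx(n - i) - xlogx(n - i + 1))
        for i in range(1, n + 1)
    ]


rich_intervals = fleshler_hoffman(30)    # VI 30 s (Rich component)
lean_intervals = fleshler_hoffman(120)   # VI 120 s (Lean component)
```

In a session, intervals would typically be sampled in random order from each list. The logarithmic terms telescope, so the mean of each list equals the nominal schedule value.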

Extinction

Once all pigeons received 15 sessions of baseline, an extinction condition was arranged in which no response-dependent reinforcers were available. Sessions of extinction were continued until the rate of responding in the Rich and Lean components decreased below 15% of baseline response rates (see Podlesnik & Shahan, 2009).

Reinstatement tests

Following extinction, one of two reinstatement tests was arranged (see Fig. 1). In one reinstatement test, three 2-s response-independent reinforcers were arranged for the first presentation of the Rich and Lean components of each session (see Podlesnik & Fleet, 2014). We termed this test Same-Context Reinstatement (SCR in Fig. 1) because the response-independent reinforcers were presented in the Target components (i.e., the Rich and Lean components). In the other reinstatement test, three 2-s response-independent reinforcers were arranged for the first presentation of the Other 1 and Other 2 components of each session (see Podlesnik & Fleet, 2014). We termed this test Different-Context Reinstatement (DCR in Fig. 1) because the response-independent reinforcers were presented in the Non-Target components (i.e., the Other 1 and Other 2 components). Regardless of the reinstatement condition in effect, the food presentations occurred at 5, 10, and 15 s into the relevant components and were the only reinforcers available in each reinstatement session.

The order in which the two types of reinstatement tests were conducted following extinction was counterbalanced across pigeons. Pigeons E1 to E3 received the Different-Context Reinstatement test first, whereas Pigeons E4 to E6 received the Same-Context Reinstatement test first. Once all pigeons had received four reinstatement sessions, we began another cycle of baseline, extinction, and reinstatement. Thus, all pigeon subjects were exposed to all of the experimental manipulations.

Data analyses

The total rates of responding in a component were the sum of responses recorded in that component divided by the duration of the component. Component length was exclusive of each 2-s reinforcer. Before conducting detailed analyses of the data, we assessed the stability of baseline response rates in the Rich and Lean components by running a version of Davison’s (1972) stability analysis (see also Miranda-Dukoski, Davison, & Elliffe, 2014). We considered response rates in the Rich and Lean components for any pigeon stable once the median rate of responding across a block of three sessions did not differ by more than 5% from the median rate of responding across the previous block of three sessions. According to this criterion, the individual-pigeon data were stable by the time extinction began, with response rates in the Rich component reaching stability after about 11 sessions of baseline. Response rates in the Lean component also reached stability before the start of extinction, typically after about 12 sessions of baseline. Hereafter, baseline response rates will be presented as the mean of total rates of responding in the Rich, Lean, and Non-Target components across the last five sessions of each baseline condition. Baseline response rates across the last five sessions of baseline will hereafter be termed mean baseline response rates.
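The stability criterion can be illustrated with a short sketch. It assumes that the two most recent, non-overlapping blocks of three sessions are compared and that the 5% difference is expressed relative to the previous block's median. The text does not specify either detail, and the function name `is_stable` and the example rates are hypothetical.

```python
from statistics import median


def is_stable(rates, block=3, tolerance=0.05):
    """Check the block-median stability criterion on per-session rates.

    Assumptions: blocks are non-overlapping, and the difference is
    scaled by the previous block's median.
    """
    if len(rates) < 2 * block:
        return False  # not enough sessions to form two blocks
    previous = median(rates[-2 * block:-block])
    current = median(rates[-block:])
    if previous == 0:
        return current == 0  # avoid dividing by zero
    return abs(current - previous) / previous <= tolerance


# Hypothetical response rates (responses per min), not data from the study.
stable_example = is_stable([60, 62, 61, 63, 60, 62])
unstable_example = is_stable([40, 50, 45, 60, 62, 61])
```

In the first example the block medians are 61 and 62, a difference of under 2%, so the criterion is met. In the second they are 45 and 61, so it is not.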

Performance during extinction and reinstatement tests was calculated as response rates during each extinction and reinstatement session as a proportion of mean baseline response rates (i.e., Extinction / Baseline). The effect of our reinstatement-test types on relapse was further analyzed by calculating differences between the log proportion of mean baseline response rates, averaged across all sessions of each reinstatement test for the Rich and Lean components, for example, log(RichSCR) – log(LeanSCR).
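The two measures can be sketched as follows. The example assumes that proportions are averaged across sessions before taking the logarithm and that logarithms are base 10. The text leaves both choices open. All function names and numbers below are hypothetical and are not data from the study.

```python
import math


def proportion_of_baseline(session_rate, mean_baseline_rate):
    """Session response rate as a proportion of mean baseline rate."""
    return session_rate / mean_baseline_rate


def log_difference(rich_props, lean_props):
    """log(Rich) - log(Lean) for session-averaged proportions of baseline.

    Assumptions: proportions are averaged first, then log-transformed,
    and the logarithm is base 10.
    """
    rich_mean = sum(rich_props) / len(rich_props)
    lean_mean = sum(lean_props) / len(lean_props)
    return math.log10(rich_mean) - math.log10(lean_mean)


# Hypothetical rates (responses per min) over four reinstatement sessions.
rich = [proportion_of_baseline(r, 80.0) for r in (20, 16, 12, 8)]
lean = [proportion_of_baseline(r, 40.0) for r in (4, 4, 2, 2)]
diff = log_difference(rich, lean)
```

A positive value indicates greater relative reinstatement in the Rich component, a negative value indicates greater relative reinstatement in the Lean component, and zero indicates no difference.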

Statistical analyses were conducted to assess the size of the effect of baseline rates of reinforcement on mean baseline response rates, performance during extinction, and performance during reinstatement tests for the Rich and Lean components. Statistical analyses in the current experiment were three-way repeated-measures ANOVAs. For the baseline data, a three-way repeated-measures ANOVA with component, session, and baseline number as factors was used. The factors for the three-way repeated-measures ANOVAs for the extinction and reinstatement-test data were component, session, and extinction number and component, session, and reinstatement-test type, respectively. All statistical analyses were conducted with an alpha-level criterion of .05.

Results and discussion

Figure 2 shows mean baseline response rates for individual subjects and the group mean. Table 1, which shows raw response rates across conditions, can be found in the appendix. Overall, response rates were consistently greater in the Rich component compared to the Lean component. A three-way repeated-measures ANOVA (component × baseline number × session number) confirmed this difference with a significant main effect of component, F(1, 5) = 24.21, p = .004. The main effect of baseline number was also significant, F(1, 5) = 8.59, p = .033. As shown in Fig. 2, mean baseline response rates increased across consecutive baseline conditions for four out of six pigeons. However, the main effect of session was nonsignificant, F(4, 20) = 2.24, p = .101, as were all possible interactions.

Baseline response rates per min (±SEM) averaged over the last five sessions of each baseline condition for all pigeons and the mean group data (M) for Experiment 1

Figure 2 also shows that mean baseline response rates in the Other 1 and Other 2 components were generally near zero across pigeons and the group mean data. Response rates in the Non-Target components continued to be near-zero values throughout the rest of the conditions (i.e., extinction and reinstatement), as shown in the appendix. There was an occasional increase in Non-Target-components response rates during tests for reinstatement, but the extent of the increase was not systematic across pigeons and tests for reinstatement.

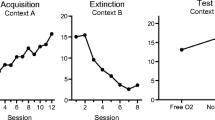

Figure 3 shows proportion of mean baseline response rates across all sessions of extinction and reinstatement for the Rich and Lean components. Same-Context Reinstatement and its immediately preceding extinction condition are shown first in Fig. 3, even though Pigeons E1 to E3 received this test for reinstatement second. Proportions of baseline response rates during extinction were generally greater in the Rich than in the Lean component. A three-way (component × baseline number × session) repeated-measures ANOVA supported the visible differences between Rich- and Lean-component proportions of baseline across sessions with a significant interaction for component × session, F(6, 30) = 3.62, p = .008.

Measures of proportion of mean baseline response rates (±SEM) across all sessions of extinction and reinstatement for Experiment 1 are shown. Illustrated across successive panels (starting from the left-most panel) is proportion of baseline response rates during extinction, Same-Context reinstatement (SCR), and Different-Context reinstatement (DCR)

Figure 3 also shows the amount of relapse by Same- and Different-Context Reinstatement, revealed as proportions of baseline response rates for the Rich and Lean components. Same-Context Reinstatement typically produced a greater overall increase in proportion of baseline response rates across both components. Additionally, during Same-Context Reinstatement, there was generally a differential effect of training reinforcement rates with Rich-component proportion of mean baseline response rates being greater than proportion of mean baseline response rates in the Lean component. During Different-Context Reinstatement, however, proportion of mean baseline response rates were generally nondifferential across Rich and Lean components. Thus, responding increased to a greater extent, overall, during Same-Context Reinstatement than Different-Context Reinstatement, and the extent of the increase in responding was generally only differential with respect to training reinforcer rates in Same-Context Reinstatement.

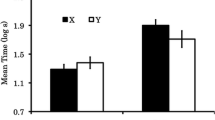

Figure 4 highlights differences in the effect of different reinstatement-test types as log differences between Rich and Lean component proportion of baseline response rates. For four out of six pigeons, presenting reinstating reinforcers in the Rich and Lean components (i.e., Same-Context Reinstatement; SCR) produced more pronounced differences between Rich and Lean proportion of mean baseline response rates than presenting reinstating reinforcers elsewhere (i.e., Different-Context Reinstatement; DCR). Moreover, this value was positive for five out of six pigeons with SCR but only for three out of six pigeons with DCR. A three-way (component × reinstatement type × session) repeated-measures ANOVA showed significant main effects of component, F(1, 5) = 19.44, p = .007, and reinstatement type, F(1, 5) = 25.29, p = .004, and a nonsignificant main effect of session, F(3, 15) = 2.13, p = .139. There were no significant interactions.

As a general conclusion, the results from Experiment 1 suggest that the component discriminative stimulus in which the reinstating reinforcers are presented during reinstatement has an effect on the amount of relapse that follows. When the reinstating reinforcers were arranged in the presence of component stimuli associated with a history of response-dependent reinforcers during baseline (i.e., Target components during SCR tests), relapse was generally both greater (see Baker et al., 1991) and differential as a function of baseline reinforcement rates across components (see Podlesnik & Shahan, 2009). By contrast, relapse was much smaller and nondifferential across Target components when the reinstating reinforcers were presented in the presence of different component stimuli (i.e., Non-Target components during DCR tests). These findings suggest that control by differential training reinforcement rates on reinstatement, as well as the overall level of reinstatement, is dependent on the component stimulus in which reinstating reinforcers are presented.

It is possible that reinstatement effects were, in part, a result of reconditioning due to there being a 5-s period between reinstating reinforcers in which a response could be coincidentally followed by a reinstating reinforcer. If reinstatement effects were confounded by the effect of adventitious reinforcement, we would expect response rates to be higher in the second of the components arranging reinstating reinforcers than in the first. In a final analysis, we compared the rate of responding before the delivery of the first reinstating reinforcers between the two components arranging reinforcers during tests for reinstatement. Thus, the dyads of components of interest were Rich–Lean and Lean–Rich during Same-Context Reinstatement, and Other 1–Other 2 and Other 2–Other 1 during Different-Context Reinstatement.

If adventitious reinforcement were a contributing factor in reinstatement effects, Fig. 5 would show higher rates of responding in the second component (gray bars) of each dyad across both Same- and Different-Context Reinstatement. Figure 5 shows that response rates during the second of the components were not consistently greater, and therefore the reinstatement effects cannot be attributed solely to reconditioning through adventitious reinforcement.

Response rates before the delivery of any reinstating reinforcer in the first (black bars) and second (gray bars) component arranging reinstating reinforcers across Same- and Different-Context Reinstatement in Experiment 1. Only dyads of components arranging reinstating reinforcers in the tests for reinstatement (i.e., the Rich and Lean components in Same-Context Reinstatement, and the Other 1 and Other 2 components in Different-Context Reinstatement) were examined

In sum, the joint effect of baseline rates of reinforcement and the component stimulus in which the reinstating reinforcers occurred, which was apparent in Figs. 3 and 4, suggests that the reinstating food presentations in the Target components (i.e., Same-Context Reinstatement) produced a discrimination that the original training contingencies of reinforcement had been reestablished (see Franks & Lattal, 1976). Because no reinforcement was arranged in the Non-Target components during baseline, the reinstating reinforcers in the Non-Target components (i.e., Different-Context Reinstatement) did not produce a discrimination that the original training contingencies had been reestablished. Thus, following Different-Context Reinstatement, reinstatement was generally small and nondifferential across the Rich and Lean components (see Figs. 3 and 4).

Experiment 2

If the reinstating reinforcers presented during both Same- and Different-Context Reinstatement were to occur in the presence of component stimuli associated with reinforcement during training, the amount of relapse observed during Same- and Different-Context Reinstatement might be similar. Thus, in Experiment 2, we investigated whether reinstatement in the Target components would be similar during Same- and Different-Context Reinstatement if the Non-Target components were associated with a baseline rate of reinforcement. During reinstatement, reinstating reinforcers in any component would occur in the presence of a component stimulus context associated with reinforcement during training. If a signaled return to the original training contingencies determines the amount of relapse that will follow, both Same- and Different-Context Reinstatement should produce similar amounts of relapse. Additionally, both types of reinstatements should produce differential relapse with respect to reinforcement rate in the Target components.

Method

Subjects, apparatus and procedure

Eleven pigeons, numbered V1 to V11, were used. The experimental chambers and housing conditions in Experiment 2 were the same as in Experiment 1.

Pretraining

The pigeons were experimentally naïve and were initially trained to eat from the food hopper by lighting the magazine and raising the hopper on a variable-time 180-s schedule. Food deliveries were 15 s in duration and decreased to 2 s across daily sessions. Once the pigeons were eating reliably, we trained key pecking using an autoshaping procedure (Brown & Jenkins, 1968), also on a variable-time 180-s schedule. When the schedule timed out, one of the response keys was lit white, red, or green for 15 s. If a response occurred within this time, food was made available immediately. If no response had occurred by the end of the 15-s period, food was delivered response independently. This procedure remained in effect until all pigeons pecked the response key on at least 95% of key-light illuminations. Once the pigeons reliably pecked during autoshaping, they were trained to respond to a four-component multiple schedule. The VI-schedule values were made progressively leaner until the baseline schedule values of VI 30 s, VI 120 s, VI 48 s, VI 48 s were reached (see Fig. 1). At this point, the experiment began.

Baseline, extinction and reinstatement tests

The same basic experimental procedure used in Experiment 1 was used in Experiment 2. The difference between Experiments 1 and 2 was that the components that were previously associated with extinction during baseline in Experiment 1, the Non-Target components, were associated with separate VI 48-s schedules in Experiment 2. The VI 48-s schedules arranged a reinforcement rate equal to the mean of the rates arranged in the Rich (VI 30 s) and Lean (VI 120 s) components. The two Non-Target components were differentially signaled by blue and red center-key illumination. Pigeons experienced baseline, extinction, and one type of reinstatement before repeating the cycle with the other type of reinstatement. The order in which individual pigeons were exposed to each of the reinstatement conditions was counterbalanced, with Pigeons V1 to V6 experiencing Different-Context Reinstatement first.
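The choice of VI 48 s can be checked with a short calculation. The sketch below assumes programmed (not obtained) reinforcement rates and an arithmetic mean of rates. The helper name `reinforcers_per_hour` is illustrative.

```python
def reinforcers_per_hour(vi_seconds):
    """Programmed reinforcement rate for a VI schedule with this mean."""
    return 3600.0 / vi_seconds


rich_rate = reinforcers_per_hour(30)    # VI 30 s
lean_rate = reinforcers_per_hour(120)   # VI 120 s

# Arithmetic mean of the two programmed rates.
mean_rate = (rich_rate + lean_rate) / 2

# Mean interreinforcer interval (s) that yields the averaged rate.
equivalent_vi = 3600.0 / mean_rate
```

The Rich and Lean schedules program 120 and 30 reinforcers per hour, respectively. Their mean is 75 per hour, which corresponds to one reinforcer every 48 s on average. Averaging the intervals themselves instead would give 75 s, so the equivalence holds for rates only.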

Results and discussion

All Experiment-2 data analyses and figures were calculated and plotted in the same way as in Experiment 1. Stability analyses were conducted before extensive analyses in the same way as reported in Experiment 1. Generally, baseline response rates in the Rich and Lean components reached stability following 12 sessions of baseline for all pigeons.

Figure 6 shows group-mean and individual-pigeon mean baseline response rates in each of the components averaged across the last five sessions of each consecutive baseline condition. The raw rates of responding can be found in Table 2 in the appendix. As in Experiment 1, response rates in the Rich component were greater than in the Lean component. This finding was supported by a three-way (component × reinstatement type × session) repeated-measures ANOVA, which revealed a significant main effect of component, F(1, 10) = 22.56, p = .001. The main effects of baseline number, F(1, 10) = 3.29, p = .100, and session, F(4, 40) = 0.74, p = .573, were nonsignificant, as were all interactions.

Baseline response rates per min (±SEM) averaged over the last five sessions of each baseline condition for all pigeons and the mean group data (M) for Experiment 2

Figure 7 shows the group-mean and individual-pigeon proportion of baseline response rates for all components across sessions of extinction and reinstatement. Generally, proportion of baseline was highest in the Rich component, closely followed by the Non-Target components and the Lean component. A three-way (component × baseline number × session) repeated-measures ANOVA was conducted on the extinction data. The test indicated significant interactions for component × baseline number, F(1, 10) = 10.48, p = .009, and component × session, F(5, 50) = 9.18, p < .001.

Measures of proportion of baseline response rates (±SEM) across all sessions of extinction and reinstatement for Experiment 2 are shown. Illustrated across panels (starting from the left-most panel) is proportion of baseline response rates during extinction, Same-Context Reinstatement (SCR), and Different-Context Reinstatement (DCR)

Figure 7 also shows that the effect of Same- and Different-Context Reinstatement on proportion of mean baseline response rates was similar. In both reinstatement tests, there was generally an overall increase in responding across all of the components. Additionally, during both reinstatement tests, there was an effect of training reinforcer rates as shown by greater increases in proportion of baseline response rates in the Rich than in the Non-Target and Lean components. Figure 8 clearly shows that reinstatement effects were not differential with respect to reinstatement-test type, as log differences between Rich and Lean component proportion of baseline response rates were above zero in both reinstatement tests for 10 out of 11 pigeons for SCR and all 11 pigeons for DCR.
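The two derived measures used here, proportion of baseline and the Rich-minus-Lean log difference, can be illustrated with a short Python sketch. It assumes base-10 logarithms (the base is not stated in this section, and the sign of the difference does not depend on it), uses hypothetical function names, and uses made-up response rates rather than data from the experiment.

```python
import math


def proportion_of_baseline(test_rate: float, baseline_rate: float) -> float:
    """Response rate in a test session divided by the mean baseline rate."""
    return test_rate / baseline_rate


def log_difference(rich_prop: float, lean_prop: float) -> float:
    """log10(Rich proportion) minus log10(Lean proportion).

    Values above zero indicate relatively greater responding in the Rich
    component. Undefined if either proportion is zero.
    """
    return math.log10(rich_prop) - math.log10(lean_prop)


# Hypothetical response rates (responses per min), for illustration only.
rich = proportion_of_baseline(test_rate=30.0, baseline_rate=100.0)
lean = proportion_of_baseline(test_rate=6.0, baseline_rate=60.0)
diff = log_difference(rich, lean)  # positive: Rich recovered relatively more
```

In this made-up case, the Rich component returns to a proportion of baseline three times that of the Lean component, so the log difference is positive.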

We assessed the overall effect of the reinstatement tests on Rich- and Lean-component proportion of baseline response rates using a three-way (component × reinstatement type × session) ANOVA. There were significant interactions for reinstatement type × component, F(1, 10) = 15.84, p = .003, and reinstatement type × session, F(1, 10) = 8.58, p = .015. As Fig. 7 shows, proportion of mean baseline response rates was generally greater in the Rich than in the Lean component. The effect of training reinforcer rates was further modulated by the number of sessions in the reinstatement tests, with differences between Rich- and Lean-component proportion of mean baseline response rates decreasing across sessions. Although both tests for reinstatement produced greater proportion of mean baseline response rates in the Rich than in the Lean component, the overall difference between the components was greater in Different-Context Reinstatement for 7 out of 11 pigeons (see Fig. 7). Thus, the findings from Experiment 2 suggest that when the Non-Target components produce response-dependent food presentations during baseline, the effect of reinstatement type is lessened compared to when these components are associated with extinction (Experiment 1).

Were the reinstatement effects reported for both tests due in part to reconditioning of responding that was coincidentally followed by a reinstating reinforcer? Figure 9 shows the rate of responding in the 5 s before the delivery of reinstating reinforcers in dyads of components arranging the reinforcers. Response rates before the delivery of a reinstating reinforcer were not consistently greater in the second component of each dyad (gray bars) relative to the first component (black bars). These findings suggest that reconditioning due to adventitious reinforcement did not contribute to the reinstatement effects shown in Fig. 7. Thus, the results shown in Figs. 7 and 9 suggest that stimulus–reinforcer relations established during baseline were the main factor in the present experiment’s reinstatement effects.
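The pre-reinforcer response-rate measure can be sketched as follows. This is a minimal Python illustration, not the analysis code used for Fig. 9: the function name and the event times are hypothetical, responses are pooled across all reinforcers in a component, and windows truncated by component onset or overlapping with one another are not handled.

```python
from typing import Sequence


def pre_reinforcer_rate(response_times: Sequence[float],
                        reinforcer_times: Sequence[float],
                        window_s: float = 5.0) -> float:
    """Responses per minute in the `window_s` seconds before each reinforcer.

    Counts responses with t - window_s <= time < t for every reinforcer
    time t, then divides by the total window time in minutes.
    """
    if not reinforcer_times:
        return 0.0
    count = 0
    for t in reinforcer_times:
        count += sum(1 for r in response_times if t - window_s <= r < t)
    total_window_min = len(reinforcer_times) * window_s / 60.0
    return count / total_window_min


# Hypothetical event times in seconds from component onset.
responses = [1.0, 7.5, 9.0, 18.0, 19.5]
reinforcers = [10.0, 20.0]
rate = pre_reinforcer_rate(responses, reinforcers)
```

In this example, two responses fall in each 5-s window, giving four responses in 10 s of window time, or roughly 24 responses per minute. Comparing this measure between the first and second component of each dyad is the kind of check the paragraph above describes.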

Response rates before the delivery of any reinstating reinforcer in the first (black bars) and second (gray bars) component arranging reinstating reinforcers across Same- and Different-Context Reinstatement in Experiment 2

General discussion

Across two experiments, we assessed the interaction between training reinforcement rate and the history of reinforcement associated with various component stimuli on relapse by reinstatement. The reinstatement tests differed with respect to the component stimulus in which the reinstating reinforcers were presented. Specifically, the Same-Context Reinstatement test presented the reinstating reinforcers within the Target (Rich and Lean) components (see Fig. 1). The Different-Context Reinstatement test presented the reinstating reinforcers outside the Target components (i.e., within the Non-Target components; see Fig. 1). Overall, resistance to extinction was typically greater in the Rich component than in the Lean component (see Figs. 3 and 7). Reinstatement also was generally greater in the Rich component than in the Lean component during all Same-Context Reinstatement tests. However, reinstatement was greater in the Rich component than in the Lean component during Different-Context Reinstatement only when reinforcement was presented during baseline in the Non-Target components in Experiment 2 (see Figs. 3 and 7). When the Non-Target components were associated with extinction in Experiment 1, reinstatement effects were small and not differential across the Rich and Lean components (see Fig. 3). Therefore, baseline reinforcement rate determines reinstatement effects, but only when the reinstating reinforcers occur in a component stimulus previously associated with reinforcement.

As found in earlier studies (e.g., Nevin et al., 1990; Podlesnik & Shahan, 2008, 2009), responding was more resistant to extinction in the Rich component than in the Lean component (see Figs. 3 and 7). When reinstatement was assessed by presenting the reinstating reinforcers within the Rich and Lean components, responding generally relapsed to a greater extent in the Rich component (see Figs. 3 and 7), also replicating previous findings (e.g., Podlesnik & Shahan, 2009). At first glance, the results of the present experiments suggest that the baseline stimulus–reinforcer relation determines relative resistance to extinction and reinstatement, consistent with behavioral momentum theory (Nevin & Shahan, 2011; Podlesnik & Shahan, 2010). However, the present experiments were not designed to separate the contributions of stimulus–reinforcer and response–reinforcer relations to resistance to extinction and reinstatement, because the Rich and Lean components differed in their rates of response-dependent reinforcement. Previous experiments arranging different response-dependent rates of reinforcement during baseline have also shown an effect of training reinforcer rates on resistance to extinction and relapse, similar to the effect shown when reinforcement is increased in the Rich relative to the Lean component by (1) adding response-independent reinforcers to one of two otherwise equal components, or (2) arranging reinforcers contingent upon an alternative response (Rau et al., 1996; Nevin, 1974; Nevin et al., 1983; Nevin et al., 1990; Mauro & Mace, 1996; Podlesnik, Bai, & Elliffe, 2012; Podlesnik & Shahan, 2009). Thus, the present findings and those of similar experiments attest to the range of conditions in which the effect of stimulus–reinforcer relations on resistance to extinction and relapse can be tested.

The main purpose of the present study was to assess whether reinstatement is affected by the component stimulus in which the reinstating reinforcers are presented. We found that the component stimulus in which the reinstating reinforcers occurred modulated reinstatement depending on whether reinforcement was presented during baseline in the Non-Target components across Experiments 1 and 2. In Experiment 1, no reinforcement was arranged in the Non-Target components during baseline. As a result, reinstatement was greater overall and a function of training reinforcement rates only during Same-Context Reinstatement (see Figs. 3 and 4). In addition, reinstatement was smaller and not differential with respect to training reinforcement rates during Different-Context Reinstatement. Conversely, Experiment 2 arranged reinforcement to be presented during baseline in the Non-Target components. We found both greater reinstatement overall as well as differential reinstatement during both the Same-Context and Different-Context Reinstatement tests compared to the results of Experiment 1 (see Figs. 7 and 8).

These findings are consistent with experiments assessing relapse in contexts defined by more global environmental features, such as Baker et al. (1991), who extinguished reinforced responding in rats across two distinct odor contexts. With levers retracted in both contexts, they then presented response-independent reinforcers in one context but none in the other context. When levers again became available in both contexts during a test for reinstatement, they found greater reinstatement effects in the context that previously included the response-independent reinforcer presentations. Therefore, the present findings and Baker et al. reveal that contexts more closely associated with reinforcement produce greater reinstatement effects, whether context is defined by global environmental features or local discriminative stimuli (see also Bouton & King, 1983, for similar findings with Pavlovian conditioning). The present findings further extend this idea by revealing differential control by training reinforcement rates only when the reinstating reinforcers are presented in contexts previously associated with reinforcement and not extinction.

Differences between the overall level of relapse during Different-Context Reinstatement found across Experiments 1 and 2 might in part be attributable to the extent to which the reinstating reinforcers are correlated with the reestablishment of the training reinforcement contingencies. Bouton and his collaborators suggest that reinstating reinforcers retrieve the context in which the response-reinforcer associations formed during training (Baker et al., 1991; Bouton, 2004; Bouton & Bolles, 1979; Bouton & King, 1983; Bouton & Peck, 1989; Bouton & Swartzentruber, 1991; Schachtman et al., 1985). Similarly, the reinstating reinforcers presented in the components with a history of reinforcement during baseline may be discriminative for, or signal, a return to the baseline contingencies whereby responding in the Rich component is reinforced at a higher rate (see Franks & Lattal, 1976, for a relevant discussion).

The occurrence of reinstating reinforcers in components with a history of reinforcement, which were the Rich and Lean components of the present Experiments 1 and 2 as well as in the Non-Target components in Experiment 2 (see Figs. 3 and 7), potentially would have signaled a return to training contingencies. However, the reinstating reinforcers were placed in a component stimulus with no prior history of reinforcement during Different-Context Reinstatement in Experiment 1. Therefore, the reinstating reinforcers in the presence of component stimuli associated with extinction were unlikely to signal a return to a prior baseline rate of reinforcement. Instead, these reinstating reinforcers might signal that although the extinction contingency is over, the current contingencies are largely discrepant from prior baseline reinforcer conditions. As a consequence, the reinstating reinforcers in the Non-Target components produced patterns of responding that were not similar to those obtained during the previous baseline condition in the Target components. Such discriminative control over responding has been implied in Pavlovian conditioning (see Rescorla, 1972, for a review), and, more recently, with local effects of reinforcement on operant choice behavior (e.g., Boutros, Elliffe, & Davison, 2011; Cowie, Davison, & Elliffe, 2011; Miranda-Dukoski et al., 2014).

The effect of correlations between training reinforcer rates and context found in the present experiments offers additional practical implications for clinicians wishing to determine the factors that lead to relapse following treatment. As suggested by Podlesnik and Shahan (2010), behavioral momentum theory predicts that the extent to which problem behavior (e.g., drug seeking or overeating) will relapse following treatment is related to the baseline stimulus–reinforcer relation (see also Preston et al., 1998). The present findings suggest that the likelihood of relapse is influenced not only by the history of reinforcement in a given context but also by the context in which the relapse-inducing event happens. If relapse-inducing events are contacted in a context with a long history of reinforced problem behavior, relapse likely will be greater and more pervasive than if the relapse-inducing event occurs in an unrelated, neutral, or less established context.

In conclusion, the present study found greater resistance to extinction and reinstatement within discriminative component stimuli signaling greater training reinforcement rates. These findings are consistent with the assertions of behavioral momentum theory (see Nevin & Shahan, 2011; Podlesnik & Shahan, 2010). Moreover, we found that the history of reinforcement associated with components in which the reinstating reinforcers occurred modulated the patterns of reinstatement. When arranging the reinstating reinforcers during separate discriminative component stimuli never paired with reinforcement during training (Experiment 1), reinstatement was unrelated to training reinforcement rates. When arranging the reinstating reinforcers during separate discriminative component stimuli paired with reinforcement during training (Experiment 2), reinstatement was greater with greater training reinforcement rates. Therefore, the present findings reveal an interaction between training reinforcement rates and the discriminative stimuli in which relapse-inducing events occur.

References

Ahearn, W. H., Clark, K. M., Gardenier, N. C., Chung, B. I., & Dube, W. V. (2003). Persistence of stereotyped behavior: Examining the effects of external reinforcers. Journal of Applied Behavior Analysis, 36, 439–448.

Baker, A. G., Steinwald, H., & Bouton, M. E. (1991). Contextual conditioning and the reinstatement of extinguished instrumental responding. Quarterly Journal of Experimental Psychology, 43B, 199–218.

Bouton, M. E. (2004). Context and behavioral processes in extinction. Learning & Memory, 11, 485–494.

Bouton, M. E., & Bolles, R. C. (1979). Role of conditioned contextual stimuli in reinstatement of extinguished fear. Journal of Experimental Psychology: Animal Behavior Processes, 5, 165–179.

Bouton, M. E., & King, D. A. (1983). Contextual control of the extinction of conditioned fear: Tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes, 9, 248–265.

Bouton, M. E., & Peck, C. A. (1989). Context effects on conditioning, extinction, and reinstatement in an appetitive conditioning preparation. Animal Learning & Behavior, 17, 188–198.

Bouton, M. E., & Swartzentruber, D. (1991). Sources of relapse after extinction in Pavlovian and instrumental learning. Clinical Psychology Review, 11, 123–140.

Bouton, M. E., Winterbauer, N. E., & Todd, T. P. (2012). Relapse processes after the extinction of instrumental learning: Renewal, resurgence and reacquisition. Behavioural Processes, 90, 130–141.

Boutros, N., Elliffe, D., & Davison, M. (2011). Examining the discriminative and strengthening effects of reinforcers in concurrent schedules. Journal of the Experimental Analysis of Behavior, 96, 227–324.

Brown, P. L., & Jenkins, H. M. (1968). Autoshaping of the pigeon’s key-peck. Journal of the Experimental Analysis of Behavior, 11, 1–8.

Cohen, S. L. (1996). Behavioral momentum of typing behavior in college students. Journal of Behavior Analysis & Therapy, 1, 36–51.

Cowie, S., Davison, M., & Elliffe, D. (2011). Reinforcement: Food signals the time and location of future food. Journal of the Experimental Analysis of Behavior, 96, 63–86.

Davison, M. (1972). Preference for mixed-interval versus fixed-interval schedules: Number of component intervals. Journal of the Experimental Analysis of Behavior, 17, 169–176.

Ferster, C. B., & Skinner, B. F. (1957). Schedules of reinforcement. New York: Appleton-Century-Crofts.

Fleshler, M., & Hoffman, H. S. (1962). A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior, 5, 529–530.

Franks, G. J., & Lattal, K. A. (1976). Antecedent reinforcement schedule training and operant response reinstatement in rats. Animal Learning & Behavior, 4, 374–378.

Grimes, J. A., & Shull, R. L. (2001). Response-independent milk delivery enhances persistence of pellet-reinforced lever pressing by rats. Journal of the Experimental Analysis of Behavior, 76, 179–194.

Harper, D. N. (1999). Drug-induced changes in responding are dependent on baseline stimulus-reinforcer contingencies. Psychobiology, 27, 95–104.

Harris, J. A., Jones, M. L., Bailey, G. K., & Westbrook, R. F. (2000). Contextual control over conditioned responding in an extinction paradigm. Journal of Experimental Psychology: Animal Behavior Processes, 26, 174–185.

Havermans, R. C., Keuker, J., Lataster, T., & Jansen, A. (2005). Contextual control of extinguished conditioned performance in humans. Learning and Motivation, 36, 1–19.

Herrnstein, R. J. (1970). On the law of effect. Journal of the Experimental Analysis of Behavior, 13, 243–266.

Igaki, T., & Sakagami, T. (2004). Resistance to change in goldfish. Behavioural Processes, 66, 139–152.

Lattal, K. A. (1989). Contingencies on response rate and resistance to change. Learning and Motivation, 20, 191–203.

Mace, F. C., Lalli, J. S., Shea, M. C., Lalli, E. P., West, B. J., Roberts, M., & Nevin, J. A. (1990). The momentum of human behavior in a natural setting. Journal of the Experimental Analysis of Behavior, 54, 163–172.

Mauro, B. C., & Mace, F. C. (1996). Differences in the effect of Pavlovian contingencies upon behavioral momentum using auditory versus visual stimuli. Journal of the Experimental Analysis of Behavior, 65, 389–399.

Miranda-Dukoski, L., Davison, M., & Elliffe, D. (2014). Choice, time and food: Continuous cyclical changes in food probability between reinforcers. Journal of the Experimental Analysis of Behavior, 101, 406–421.

Neumann, D. L. (2006). The effects of physical context changes and multiple extinction contexts on two forms of renewal in a conditioned suppression task with humans. Learning and Motivation, 37, 149–175.

Nevin, J. A. (1974). Response strength in multiple schedules. Journal of the Experimental Analysis of Behavior, 21, 389–408.

Nevin, J. A. (2009). Stimuli, reinforcers, and the persistence of behavior. The Behavior Analyst, 32, 285–291.

Nevin, J. A., & Grace, R. C. (2000). Behavioral momentum and the law of effect. Behavioral & Brain Sciences, 23, 73–130.

Nevin, J. A., & Shahan, T. A. (2011). Behavioral momentum: Equation and applications. Journal of Applied Behavior Analysis, 44, 877–895.

Nevin, J. A., Mandell, C., & Atak, J. R. (1983). The analysis of behavioral momentum. Journal of the Experimental Analysis of Behavior, 39, 49–59.

Nevin, J. A., Tota, M. E., Torquato, R. D., & Shull, R. L. (1990). Alternative reinforcement increases resistance to change: Pavlovian or operant contingencies? Journal of the Experimental Analysis of Behavior, 53, 359–379.

Nevin, J. A., Grace, R. C., Holland, S., & McLean, A. P. (2001). Variable‐ratio versus variable‐interval schedules: Response rate, resistance to change, and preference. Journal of the Experimental Analysis of Behavior, 76, 43–74.

Podlesnik, C. A., Bai, J. Y., & Elliffe, D. (2012). Resistance to extinction and relapse in combined stimulus contexts. Journal of the Experimental Analysis of Behavior, 98, 169–189.

Podlesnik, C. A., & DeLeon, I. G. (2015). Behavioral momentum theory: Understanding persistence and improving treatment. In F. D. DiGennaro Reed & D. D. Reed (Eds.), Bridging the gap between science and practice in autism service delivery. New York: Springer.

Podlesnik, C. A., & Fleet, J. D. (2014). Signaling added response-independent reinforcement to assess Pavlovian processes in resistance to change and relapse. Journal of the Experimental Analysis of Behavior, 102, 179–197.

Podlesnik, C. A., & Shahan, T. A. (2008). Response–reinforcer relations and resistance to change. Behavioural Processes, 77, 109–125.

Podlesnik, C. A., & Shahan, T. A. (2009). Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior, 37, 357–364.

Podlesnik, C. A., & Shahan, T. A. (2010). Extinction, relapse and behavioral momentum. Behavioural Processes, 84, 400–411.

Podlesnik, C. A., Jimenez-Gomez, C., Ward, R. D., & Shahan, T. A. (2006). Resistance to change of responding maintained by unsignaled delays to reinforcement: A response-bout analysis. Journal of the Experimental Analysis of Behavior, 85, 329–347.

Preston, K. L., Silverman, K., Higgins, S. T., Brooner, R. K., Montoya, I., Schuster, C. R., & Cone, E. J. (1998). Cocaine use early in treatment predicts outcome in a behavioral treatment program. Journal of Consulting and Clinical Psychology, 66, 691–696.

Pritchard, D., Hoerger, M., & Mace, F. C. (2014). Treatment relapse and behavioral momentum theory. Journal of Applied Behavior Analysis, 47, 814–833.

Rau, J. C., Pickering, L. D., & McLean, A. P. (1996). Resistance to change as a function of concurrent reinforcer magnitude. Behavioural Processes, 38, 253–264.

Rescorla, R. A. (1972). Informational variables in Pavlovian conditioning. In G. H. Bower (Ed.), The psychology of learning & motivation: Advances in research and theory (pp. 1–46). New York: Academic Press.

Schachtman, T. R., Brown, A. M., & Miller, R. R. (1985). Reinstatement-induced recovery of a taste-LiCl association following extinction. Animal Learning & Behavior, 13, 223–227.

Shahan, T. A., & Burke, K. A. (2004). Ethanol-maintained responding of rats is more resistant to change in a context with added non-drug reinforcement. Behavioral Pharmacology, 15, 279–285.

Skinner, B. F. (1969). Contingencies of reinforcement. East Norwalk: Appleton-Century-Crofts.

Üngör, M., & Lachnit, H. (2006). Contextual control in discrimination reversal learning. Journal of Experimental Psychology: Animal Behavior Processes, 32, 441–453.

Zironi, I., Burattini, C., Aicardi, G., & Janak, P. H. (2006). Context is a trigger for relapse to alcohol. Behavioural Brain Research, 167, 150–155.

Author Note

The experiments were conducted as part of an undergraduate laboratory project in the School of Psychology, University of Auckland. We thank the students involved in this course in 2011 and 2012 for their help conducting the experiments. We also thank the graduate teaching assistants involved in the same course (John Bai, Sarah Cowie, Denys Brand, and Stuart McGill) for their help in conducting the experiments, as well as Mike Owens for taking care of the pigeons. Reprints may be obtained from the first author via correspondence (School of Psychology, The University of Auckland, Private Bag 92019, Auckland, New Zealand) or from any author via e-mail (e-mails: ludmila.miranda-dukoski@auckland.ac.nz; josh.bensemann@auckland.ac.nz; cpodlesnik@fit.edu). The research was conducted under Approval TR901 of the University of Auckland Animal Ethics Committee.

Miranda-Dukoski, L., Bensemann, J. & Podlesnik, C.A. Training reinforcement rates, resistance to extinction, and the role of context in reinstatement. Learn Behav 44, 29–48 (2016). https://doi.org/10.3758/s13420-015-0188-8

DOI: https://doi.org/10.3758/s13420-015-0188-8