Abstract

In Conditions 1 and 3 of our Experiment 1, rats pressed levers for food in a two-component multiple schedule. The first component was concurrent variable-ratio (VR) 20 variable-interval (VI) 90 s, and the second was concurrent yoked VI (its reinforcement rate equaled that of the prior component’s VR) VI 90 s. In Condition 2, the VR was changed to tandem VR 20, differential reinforcement of low rates (DRL) 0.8 s. Local response rates were higher in the VR than in the yoked VI schedule, and this difference disappeared between tandem VR DRL and yoked VI. The relative time allocations to VR and yoked VI, as well as to tandem VR DRL and yoked VI, were approximately the same across conditions. In Experiment 2, rats chose in a single session between five different VI pairs, each lasting for 12 reinforcer presentations (variable-environment procedure). The across-schedule hourly reinforcement rates were 120 and 40, respectively, in Conditions 1–3 and 4–6. During Conditions 2 and 5, one lever’s VI was changed to tandem VI, DRL 2 s. High covariation between relative time allocations and relative reinforcer frequencies, as well as invariance in local response rates to the schedules, was evident in all conditions. In addition, the relative local response rates were biased toward the unchanged VI in Conditions 2 and 5. These results demonstrate two-process control of choice: Inter-response-time reinforcement controls the local response rate, and relative reinforcer frequency controls relative time allocations.


Herrnstein and Heyman (1979) exposed pigeons to concurrent variable-ratio (VR) variable-interval (VI) schedules. They recorded the number of responses and reinforcers to each schedule, as well as the cumulative time that pigeons allocated to responding to a given schedule. Two of their findings were of note. First, manipulations of schedule values that produced changes in the relative VI rate of reinforcement were closely followed by similar changes in the relative time allocations—an outcome termed “matching” (see Baum, 1974). Second, they found that the local response rate (responses to a schedule/time making consecutive responses to a schedule) was higher to the VR than to the VI schedule, a result often found when VR and VI schedules are presented individually (e.g., Peele, Casey, & Silberberg, 1984; Tanno & Sakagami, 2008).

Tanno, Silberberg, and Sakagami (2010) suggested that these two results might be due to the operation of separate processes. Preference, expressed in terms of relative time allocations, might be controlled by the relative frequency of reinforcement, just as Herrnstein and Heyman (1979) suggested. Local response rate, on the other hand, might be controlled by the relation between the duration of the interval between two successive responses to a schedule (the inter-response time, or IRT) and the probability of reinforcement. Support for the latter attribution of the control of response emission has come from studies such as Peele et al. (1984), which showed that (a) the functions relating IRT duration to reinforcer probability differ between VR and VI schedules, and (b) when these functions are equated, the response-rate difference between the schedules is eliminated.

Tanno et al. (2010) tested this two-process account of response emission. They showed that (a) a local VR–VI rate difference emerged when rats were exposed to a concurrent VR 30 VI 30-s schedule; (b) the rate difference decreased when the VR was replaced by a VI that provided the same rate of reinforcement that had been obtained from the previous VR; and (c) changing the replaced VI so that it reinforced the same IRTs previously obtained when that schedule had been VR caused the local VR–VI rate difference to emerge again. These results give credence to the idea that a two-process account of choice is in order, one that determines what portion of session time an animal will allocate to a schedule, and one that determines the rate of response emission to that chosen alternative.

Although Tanno et al. (2010) claimed to demonstrate that IRT reinforcement controls the local response rate within a choice procedure, confidence in their conclusion is diminished by procedural and empirical limitations of their work. One procedural limitation is that their demonstration of IRT control was asymmetric: Although they showed that imposing a VR schedule’s reinforced IRTs on a VI causes the VI schedule to appear VR-like in terms of local rates, they did not provide the complementary test of showing that imposing a VI schedule’s reinforced IRTs on a VR causes the VR to appear VI-like in terms of the local rates.

A second procedural limitation is that Tanno et al. (2010) made no assessment of how the relative frequency of reinforcement controls time allocation within the same experiment. In consequence, the interaction between the control of IRT reinforcement on local response rates and the control of the relative frequency of reinforcement on relative time allocation was not tested. In addition, their data had an important empirical limitation: Two of their six subjects failed to show an increase in the difference between response rates maintained by a VI schedule when VR-like response emission on that schedule was required to produce reinforcement.

The goal of the present work was to redress these inadequacies. To anticipate the conclusion that this work supports, Tanno et al. (2010) were correct in their claim that choice behavior is controlled by two processes, one based on the relative frequency of reinforcement and the other on the IRT reinforcement contingencies that each schedule imposes.

Experiment 1

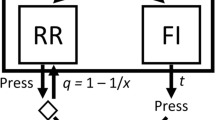

In the present experiment, we conducted the complementary test of IRT reinforcement made in Tanno et al.’s (2010) study: Instead of imposing VR-like reinforced IRTs on a VI schedule, VI-like reinforced IRTs would be required for reinforcement on a VR schedule. In addition, we wished to test for IRT-based control of local response rates in concurrent schedules in a design that was less susceptible to the performance variability seen by Tanno et al. In principle, within-session comparison by using a multiple schedule might produce more orderly outcomes than were seen in Tanno et al.’s between-condition comparisons.

Method

Subjects

Eight male Wistar rats, approximately 120 days old at the beginning of the experiment, served as the subjects. They were individually housed in hanging wire home cages with internal dimensions of 20 cm (length) by 20 cm (width) by 25 cm (height) in a temperature-controlled vivarium on a 12-h light/dark cycle, where they had continuous access to water and were fed after each session to maintain them at 85 % of their free-feeding weights. These rats had previously participated in a Pavlovian conditioning study, but were naïve to experimenter-arranged operant contingencies.

Apparatus

The experiment was conducted in four identical sound-attenuated enclosures, each of which contained an operant chamber with internal dimensions of 32 cm (length) by 24 cm (width) by 29.5 cm (height). The front and back walls were made of stainless steel. Except for these walls and the metal grid floor, all other surfaces were Plexiglas. On the front wall were two retractable levers (Med Associates, Inc., ENV-112B) that intruded 1.7 cm into the chamber when extended. The centers of the levers were 7.5 cm above the floor and 3.5 cm from their proximal side walls. A 2.8-W lamp that produced white light was centered 27 cm above the floor. A 2.5-cm diameter translucent circle that could be illuminated was located on the response panel above each lever. A 5 × 5 cm opening located equidistant between the two levers was available for food pellet delivery. A 45-mg food pellet served as the reinforcer (Bio-Serv, #F0021 dustless precision pellets, rodent purified diet). A ventilation fan masked extraneous sounds. All experimental events and data recording were computer controlled by MED-PC IV software and a PC-compatible interface (Med Associates, Inc.).

Procedure

Rats were initially trained by successive approximation to press the left or the right lever, with lever position counterbalanced across subjects. Once reliable lever-pressing was established, a pretraining session was conducted in which either the right or the left lever was randomly inserted at the beginning of a session or after each reinforcer except the last. The lever was withdrawn for 0.5 s after each reinforcer presentation. In the first session, a single response produced a food pellet. Then, over four additional sessions, the number of responses required to produce a pellet was increased to two, then four, then eight, and finally 16. Each session ended after 60 reinforcers.

Following this single-lever pretraining regimen, rats were exposed for five pretraining sessions to a two-component multiple schedule in which the “A” component was a concurrent VR 10 VI 90-s schedule, and the “B” component was a concurrent VI X-s VI 90-s schedule for which the value of X equaled .83 of the time that it had taken to earn reinforcement on the prior VR in Component A (yoked VI). This use of a multiplier was based on a pilot study in which we observed that, despite the yoking of the VI to the Component-A VR, the VI reinforcement rates produced were approximately 20 % below those of the comparison VR. This modification approximately equated the VR, yoked-VI reinforcement rates in the pilot study and was used in this study as well. VR-schedule values were defined by interrogating a probability gate after every response. The same device was used to define the VI 90-s inter-reinforcement intervals (IRIs) by interrogating the probability gate every 1 s with p = .0111.
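The probability-gate arrangement described above can be sketched as follows. This is a minimal illustration, not the MED-PC code used in the study. All function names are hypothetical, and two points are assumptions on our part: that the VR gate uses p = 1/ratio per response (the text states only the VI value, p = .0111 per 1-s interrogation), and that the "time that it had taken to earn reinforcement" is expressed as a mean time per reinforcer.

```python
import random

def gate(p, rng=random):
    """Interrogate a probability gate once; True means a reinforcer is set up."""
    return rng.random() < p

def vr_gate_probability(ratio):
    # Assumption: VR n is arranged as p = 1/n, checked after every response.
    return 1.0 / ratio

def vi_gate_probability(mean_interval_s, tick_s=1.0):
    # VI value as a per-interrogation probability; VI 90 s at 1-s ticks gives .0111.
    return tick_s / mean_interval_s

def yoked_vi_value(vr_time_per_reinforcer_s, multiplier=0.83):
    # Yoked VI in Component B: .83 of the time per reinforcer obtained on the prior VR.
    return multiplier * vr_time_per_reinforcer_s
```

For example, `vi_gate_probability(90)` returns 1/90, which rounds to the .0111 reported in the text.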

Each choice pretraining session began with a 5-min timeout, during which the chamber was dark and both levers were withdrawn. Following this interval, the levers were inserted into the chamber. Each session consisted of six strictly alternating 5-min components, beginning with Component A. The lever associated with VI 90 s in Component A was always associated with VI 90 s in Component B (fixed alternative), and the lever associated with VR 10 in Component A was also always associated with the yoked VI in Component B (variable alternative). The lever positions for the fixed and the variable alternatives were counterbalanced across subjects. Each component was cued by a houselight that was illuminated either continuously or according to a 0.5-s on/off cycle during the component. The cuing stimuli and component designations were counterbalanced across subjects. Components were separated by a 30-s interval, during which the houselight was extinguished and both levers were withdrawn. A changeover delay (see Herrnstein, 1961), which prevented reinforcer availability until at least 2 s had elapsed since a changeover response, was imposed on choices.
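The changeover-delay rule can be expressed as a single check. The sketch below is illustrative only; the function name is hypothetical, and the treatment of responses made before any changeover has occurred in a component (reinforcement allowed) is an assumption not stated in the text.

```python
def cod_allows_reinforcement(now_s, last_changeover_s, cod_s=2.0):
    """True once at least cod_s seconds have elapsed since the last changeover."""
    if last_changeover_s is None:
        # Assumption: with no changeover yet, the delay does not apply.
        return True
    return (now_s - last_changeover_s) >= cod_s
```

Under this rule, a response made 1 s after switching levers cannot be reinforced, whereas one made 2 s or more after the switch can.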

Following choice pretraining, the VR-10 schedule was changed to VR 20, and the first experimental condition began (Condition 1). After 13 sessions in this 50-session condition, the number of components for each session was reduced from six to four, to reduce the possibility of food satiation in the subjects. Then, in Condition 2, the VR 20 in Component A was changed to a tandem VR 20, differential reinforcement of low rate (DRL) 0.8-s schedule. In this schedule, reinforcement is delivered for the first IRT that exceeds 0.8 s after the response requirement of the VR schedule has been fulfilled. By the definition of a tandem schedule, no stimulus change accompanied completion of the VR 20. Upon completion of Condition 2, the procedure of Condition 1 was repeated as Condition 3 (A–B–A design). Conditions 2 and 3 lasted for 30 sessions each. With few exceptions, sessions were conducted once daily, 6 or 7 days per week.
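The tandem VR 20 DRL 0.8-s contingency can be made concrete with a small state machine. This is a sketch under stated assumptions rather than the program used in the study: the class name is hypothetical, the VR link is assumed to be a probability gate with p = 1/ratio, and the response that completes the VR link is assumed not to be eligible for reinforcement itself (only a later response ending an IRT longer than 0.8 s is).

```python
import random

class TandemVrDrl:
    """Tandem VR n DRL t: once the VR link is met, the first IRT > t is reinforced."""

    def __init__(self, ratio=20, drl_s=0.8, rng=None):
        self.p = 1.0 / ratio              # assumption: VR arranged by probability gate
        self.drl_s = drl_s
        self.rng = rng or random.Random()
        self.vr_met = False               # no stimulus change signals this state
        self.last_response_s = None

    def respond(self, t_s):
        """Register a response at time t_s (s); return True if it is reinforced."""
        irt = None if self.last_response_s is None else t_s - self.last_response_s
        self.last_response_s = t_s
        if self.vr_met:
            # DRL link: only an IRT exceeding drl_s produces the reinforcer.
            if irt is not None and irt > self.drl_s:
                self.vr_met = False
                return True
            return False
        # VR link: interrogate the gate after this response.
        if self.rng.random() < self.p:
            self.vr_met = True
        return False
```

With `ratio=1` the VR link is met by the first response, so a response 0.5 s later goes unreinforced and one following a 1.0-s pause is reinforced.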

Before Conditions 2 and 3, rats were given three sessions of training after eating 6 g of standard chow 1 h before the session. Because these manipulations were unrelated to the purposes of the present work, the results from these prefeeding sessions are not reported here.

Results

The top and middle panels of Fig. 1, respectively, present the local response rates (successive responses to a schedule/time to emit them) to the variable and fixed alternatives, and relative time allocation (time in the presence of the variable alternative/time in the presence of both alternatives) as a function of five-session blocks in the first (left panels), second (center panels), and third (right panels) conditions of Experiment 1. Visual inspection of the figure shows that (a) local response rates tended to be higher in the VR than in the yoked VI schedule in Conditions 1 and 3, and were approximately equal between the tandem VR DRL and yoked VI in Condition 2; (b) local response rates for the variable alternative were largely higher than those for the fixed alternative in Conditions 1 and 3; and (c) the between-component relative time allocations to the variable alternative were virtually identical across all conditions.

Fig. 1 Local response rates to a schedule (top panels), relative time allocations to the variable alternative (middle panels), and inter-changeover times (bottom panels; log scale) as a function of five-session blocks for each of the three conditions of Experiment 1. Vertical bars through the data points define the range of the standard errors of the means.
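The two dependent measures defined in the text above can be written out directly. The function names are hypothetical, and expressing the local rate per minute is an assumption about units.

```python
def local_response_rate(responses, time_responding_s):
    """Responses to a schedule divided by the time spent emitting them (per min)."""
    return 60.0 * responses / time_responding_s

def relative_time_allocation(time_variable_s, time_fixed_s):
    """Time on the variable alternative over time on both alternatives."""
    return time_variable_s / (time_variable_s + time_fixed_s)
```

A relative time allocation of .5 indicates indifference; values near .9 indicate a strong preference for the variable alternative.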

The outcomes of analyses of variance (ANOVAs) were generally consistent with the account above. For the local response rates (top panels of Fig. 1), a two-way ANOVA for the four types of Alternative-Component Pair (VR 20 and yoked VI as the variable alternative, and two VI 90 s as the fixed alternative) and five-session Block as within-subjects factors for Condition 1 revealed significant main effects of alternative-component pairs [F(3, 21) = 31.92, p < .001, $\eta_G^2$ = .50] and five-session blocks [F(9, 63) = 22.56, p < .001, $\eta_G^2$ = .29], as well as their interaction [F(27, 189) = 2.05, p < .01, $\eta_G^2$ = .06] (see Olejnik & Algina, 2003, for the effect size $\eta_G^2$). With regard to the last five-session block of Condition 1, the simple effect of alternative-component pair was significant, and a post-hoc multiple comparison test revealed the order of local response rates as VR 20 > yoked VI > VI 90 s paired with VR 20 = VI 90 s paired with yoked VI. Almost identical outcomes were obtained from the ANOVA for Condition 3’s data: The ANOVA revealed a main effect of schedule-component pairs [F(3, 21) = 27.05, p < .001, $\eta_G^2$ = .49], and a post-hoc multiple comparison test revealed the same order of local response rates.

The order of local response rates noted above was not found in Condition 2. An ANOVA for Condition 2 revealed significant main effects of alternative-component pairs [F(3, 21) = 5.06, p < .01, $\eta_G^2$ = .23] and session blocks [F(5, 35) = 13.17, p < .001, $\eta_G^2$ = .11], along with their interaction [F(15, 105) = 2.12, p < .05, $\eta_G^2$ = .03]. Although the simple effect of alternative-component pairs was significant in the last five-session block of Condition 2, a post-hoc multiple comparison test revealed no significant differences across the four alternative-component pairs.

For time allocation (middle panels of Fig. 1), a two-way ANOVA with Component (concurrent VR 20 VI 90 s and concurrent yoked VI VI 90 s) and five-session Block as within-subjects factors was conducted for each of the three conditions. As is apparent from the figure, the analysis revealed no significant effect of component in any of the three conditions.

The bottom panels of Fig. 1 show inter-changeover times (visit durations) as a function of five-session blocks in the first (left panel), second (center panel), and third (right panel) conditions of Experiment 1. A two-way ANOVA for the four types of Alternative-Component Pair (VR 20, yoked VI, VI 90 s paired with VR 20, and VI 90 s paired with yoked VI) and five-session Block as within-subjects factors revealed no significant difference between VR 20 and the yoked VI, or between the two VI 90-s schedules, in any condition.

Table 1 presents group outcomes of the other related measures. The results are expressed as the means, medians, and standard errors of the means during the last five sessions of each condition. Despite using a yoking procedure, the overall reinforcement rate was slightly higher in Component B than in Component A in Conditions 1 and 3 (first row in Table 1). A two-way ANOVA for Condition (1, 2, and 3) and Component (A and B) as within-subjects factors revealed significant main effects of both condition [F(2, 14) = 87.54, p < .001, $\eta_G^2$ = .84] and component [F(1, 7) = 27.89, p < .01, $\eta_G^2$ = .03], and their interaction [F(2, 14) = 5.33, p < .05, $\eta_G^2$ = .01]. A post-hoc test revealed a significant simple effect of components in Conditions 1 [F(1, 7) = 26.43, p < .01, $\eta_G^2$ = .04] and 3 [F(1, 7) = 14.36, p < .01, $\eta_G^2$ = .03].

The relative frequencies of reinforcement were almost equal in the two components in each condition (fifth row in Table 1). A two-way ANOVA for Conditions (1, 2, and 3) and Components (A and B) as within-subjects factors revealed no significant difference between the components in any condition.

The sixth row in Table 1 shows that the duration of reinforced IRT for the variable alternative in Component A was shorter than that in Component B in Conditions 1 and 3 (between VR 20 and yoked VI), and this difference disappeared in Condition 2 (between tandem VR 20 DRL 0.8 s and a yoked VI). Although a two-way ANOVA for Conditions (1, 2, and 3) and Components (A and B) as within-subjects factors revealed no significant main effect of components, its p value was .07 [F(1, 7) = 4.47, p = .07, $\eta_G^2$ = .12]. Additional paired t tests between Components A and B for each condition revealed significant differences in Conditions 1 [t(7) = –15.07, p < .001] and 3 [t(7) = –20.36, p < .001], but not in Condition 2 [t(7) = –0.65, p = .54].

The durations of the reinforced IRT for the fixed alternative were almost equal between components (VI 90 s paired with VR 20 and VI 90 s paired with yoked VI) in all conditions (seventh row in Table 1). A two-way ANOVA for Conditions (1, 2, and 3) and Components (A and B) as within-subjects factors revealed no significant difference between the components in any condition.

Discussion

As we noted earlier, Tanno et al. (2010) demonstrated that VR-like contingencies elevate VI rates; however, they did not demonstrate that VI-like contingencies reduce VR rates. To a degree, the results of Experiment 1 have redressed this problem. As was shown in Fig. 1, we observed local-rate differences between the VR and yoked VI in Condition 1, and the missing complementary test from Tanno et al.—the imposition of VI-like reinforced IRTs on a VR—produced the expected response-rate reductions to that schedule in Condition 2. During this change, the relative time allocations to VR and yoked VI in comparison with VI 90 s were virtually identical, and the changeover patterns were also identical. Table 1 showed that the relative frequencies of reinforcement and reinforced IRTs for the fixed alternative were almost constant across conditions; in Condition 2, only the reinforced IRT for the variable alternative was lengthened. These results support the two-process account offered by Tanno et al.

Despite this success, three other problems emerged from the present procedure. First, local response rates for the yoked VI were higher than for VI 90 s, at least for Conditions 1 and 3. This outcome is of some concern, because one might argue that the local response rate was not controlled by IRT reinforcement. In our view, this result was due to interaction between the multiple-schedule components. In the present experiment, the lever associated with VR 20 in Component A was also the lever for yoked VI in Component B in all subjects. Imperfect discriminative control of the component signals may have increased the local response rates in the yoked VI.

Second, a trend also apparent in Fig. 1 is that the difference in local response rates between the A and B components that define the variable alternative grows somewhat as a function of preference in all three conditions of the study. Figure 2 maps out this relationship. The correlation coefficients for Conditions 1, 2, and 3 are, respectively, .67, .92, and .86. These correlations are likely a byproduct of the change from VR 20 to tandem VR 20 DRL 0.8 s: Adding a DRL contingency to VR reduced the local response rates to that schedule; in turn, these decreased local rates decreased the VR relative reinforcement rate; and that decrease in relative reinforcement rate reduced choice for the VR. We acknowledge that the correlations shown in Fig. 2 jeopardize the claim that time allocation and local response rates were truly independent factors in this experiment.

Fig. 2 Differences in local response rates between variable-alternative Components A and B as a function of the relative time allocation to the variable alternative for all conditions in Experiment 1.

Finally, the preference range was restricted, with the relative time allocation often approximating .9. This outcome leaves unanswered what the relation is between preference and local rates at more intermediate relative response rates.

Of more interest for the present study, the method that would be used in Experiment 2 should generate time-based preference data that would vary around indifference. When coupled with the results of Experiment 1, in which large preferences obtained, this would permit a full delineation of the relationship between time allocation and local response rates in choice. Furthermore, we manipulated the presence versus absence of between-alternative differences in IRT reinforcement. By arranging the independent variation of relative time allocation and IRT reinforcement, it should be possible to resolve whether the dependency seen in Experiment 1 between local response rates and time allocation was causal or merely correlational.

Experiment 2

Tanno et al. (2010) did not vary the relative frequency of reinforcement. In consequence, they made no assessment of the interaction between time allocations that are controlled by the relative frequency of reinforcement and local response rates that are controlled by IRT reinforcement. The aim of this second experiment was to provide evidence of how changes in the between-schedule time allocation affect local response rates.

We used Davison and Baum’s (2000) variable-environment procedure. In their study, pigeons chose between a pair of dependent VI schedules until a specified number of reinforcers were obtained. Following a brief blackout, an identically sized reinforcer block began, this time providing reinforcement according to a different pair of VI schedules. Discriminative stimuli did not identify the different schedule pairs in effect in a given block. Generally, each session ended after a specified number of blocks were completed. Davison and Baum found that pigeons quickly discriminated the schedule pair in effect, so that by the end of, say, a ten-reinforcer block, the relative response rates covaried with, but fell short of equaling, the obtained relative frequency of reinforcement. Such an outcome is called “undermatching” (e.g., Baum, 1974; Myers & Myers, 1977). Of more interest, choice data from the variable-environment procedure generated a wide range of relative frequencies of reinforcement within a single condition. As such, it is a useful method for testing the effects of IRT reinforcement on choice performances consistent with matching relations.
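Undermatching is conventionally quantified with the generalized matching law (Baum, 1974), log(B1/B2) = a log(R1/R2) + log b, in which a sensitivity a below 1 indicates undermatching and b indicates bias. The sketch below fits that line by ordinary least squares. It is offered only as an illustration of the concept: the function name is hypothetical, the use of time ratios as the behavior measure is our choice, and the analyses reported in this article are based on relative measures rather than on this fit.

```python
import math

def matching_sensitivity(behavior_ratios, reinforcer_ratios):
    """Fit log10(B1/B2) = a*log10(R1/R2) + log10(b); return (a, log10_b)."""
    xs = [math.log10(r) for r in reinforcer_ratios]
    ys = [math.log10(b) for b in behavior_ratios]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx                     # sensitivity: a < 1 is undermatching
    return a, mean_y - a * mean_x     # intercept is the log of the bias b
```

As a check on the fit, synthetic ratios constructed as `r ** 0.6` for reinforcer ratios `r` (made-up values, not data from any study) return a sensitivity of 0.6 and a log bias of 0.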

In Condition 1 and its redetermination in Condition 3, we followed Davison and Baum (2000) in using only a single VI to assign reinforcement and allocated that assignment to one of two levers probabilistically (Stubbs & Pliskoff, 1969). With this arrangement, a wide range of obtained relative frequencies of reinforcement could be produced that should be largely unaffected by how an animal distributed its time in the presence of an alternative. Then, in Condition 2 of the study, the schedule assigning reinforcers to one alternative was not a VI, but a tandem VI DRL schedule. Conditions 4, 5, and 6 of this experiment were, respectively, identical to Conditions 1, 2, and 3, except that the values of the VI seeding reinforcer assignments differed.

On the basis of the findings of Davison and Baum (2000), we expected that when choice was between concurrent VI schedules (Conditions 1, 3, 4, and 6), the relative time allocated to each VI should covary with, but be less extreme than, the relative frequency of the reinforcers provided (i.e., undermatching), an outcome that should complement the large preferences seen in most of the data from the prior experiment. Furthermore, the addition of a DRL reinforcement contingency for VI responding in Conditions 2 and 5 of this experiment should result in lowering local response rates to that schedule. If, in fact, local response rates are independent of time allocation, this manipulation should result in lower local response rates to this schedule, yet have no influence on time allocation.

Method

Subjects and apparatus

Eight experimentally naïve male Wistar rats, approximately 100 days old at the beginning of the experiment, served as subjects. Their conditions of maintenance and the apparatus were the same as in Experiment 1.

Procedure

The rats were initially trained to press the left or the right lever by successive approximation. The lever positions were counterbalanced across subjects. After shaping was judged complete, the left or the right lever, selected at random, was inserted into the chamber. A single press delivered a food pellet, and then the lever was withdrawn. Following an interval of 0.5 s, a lever, again selected at random, was inserted and, when pressed, delivered another food pellet. This arrangement continued until the subject had obtained 30 pellets from each lever.

The experimental procedure was similar to that used by Davison and Baum (2000). Each session was composed of five 12-reinforcer blocks, each associated with one of the following left-lever/right-lever reinforcer ratios (11:1, 8:4, 6:6, 4:8, or 1:11). The ratio assigned to a particular block was selected randomly without replacement. Each block began with the illumination of the houselight and the insertion of both levers. All blocks save the last were followed by a 30-s interval during which the levers were retracted and the houselight was extinguished. Discriminative stimuli were not used to cue the reinforcer ratio in effect in a block. However, a change in reinforcer ratios was signaled by the blackout that preceded the beginning of a new block.

During each block, reinforcers were assigned by a single VI 30-s schedule that stopped operating once an assignment was made (Stubbs & Pliskoff, 1969). Subjects were introduced over sessions to the intermittency of reinforcement that this schedule provides by decreasing its reinforcement rate from 360/h (VI 10 s) to 120/h (VI 30 s). The 12 intervals composing the VI 30-s schedule in the main procedure of this experiment were determined by Fleshler and Hoffman’s (1962) progression. Once an interval was completed, a reinforcer was assigned randomly without replacement to the left or the right lever on the basis of the reinforcer ratio in effect in that block. As in Experiment 1, a 2-s changeover delay prevented changeovers from producing a food pellet.
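The two scheduling steps in this paragraph, generating the 12 intervals and assigning each reinforcer to a lever without replacement, can be sketched as follows. The interval formula is the constant-probability progression of Fleshler and Hoffman (1962) as it is commonly stated, with the convention that 0 ln 0 = 0. The function names are hypothetical, and the order in which the 12 intervals were presented is not stated in the text and is not modeled here.

```python
import math
import random

def fleshler_hoffman(mean_s, n):
    """Return n intervals (s) from the Fleshler-Hoffman progression with mean mean_s."""
    def xlogx(x):
        return x * math.log(x) if x > 0 else 0.0   # convention: 0 * ln(0) = 0
    return [mean_s * (1 + math.log(n) + xlogx(n - k) - xlogx(n - k + 1))
            for k in range(1, n + 1)]

def block_assignments(left, right, rng=random):
    """Shuffle a fixed pool of lever assignments (sampling without replacement)."""
    pool = ["L"] * left + ["R"] * right
    rng.shuffle(pool)
    return pool
```

Calling `fleshler_hoffman(30, 12)` yields 12 increasing intervals whose mean is 30 s, and `block_assignments(8, 4)` yields one possible ordering for the 8:4 block, always containing exactly eight left and four right assignments.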

The procedure described above defined the first and third conditions of this experiment. In the second condition, the schedule associated with one lever, chosen at random and counterbalanced across subjects, was changed from VI to tandem VI DRL 2 s (variable alternative). The schedule for the other alternative was VI in all conditions (fixed alternative). Conditions 4, 5, and 6, respectively, were identical to Conditions 1, 2, and 3, except that the single VI providing reinforcer assignments to the two levers was changed to VI 90 s.

Condition 1 continued until at least 40 sessions had been completed and individual-subject calculations of preference based on three successive three-session averages showed neither an upward nor a downward trend. The stability criterion for subsequent conditions was the same, except that the minimal criterion for condition completion was reduced to 20 sessions. All data analysis was based on the last nine sessions of a condition.
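One way to read this stability criterion is sketched below. The function name is hypothetical, and the interpretation of "trend" as a strictly monotonic ordering of the three successive three-session means is an assumption, since the text does not define it further.

```python
def is_stable(preferences, min_sessions=40):
    """preferences: one preference value per session, in session order."""
    if len(preferences) < max(min_sessions, 9):
        return False
    last9 = preferences[-9:]
    m1, m2, m3 = (sum(last9[i:i + 3]) / 3 for i in (0, 3, 6))
    upward = m1 < m2 < m3       # assumption: a trend means strictly monotonic means
    downward = m1 > m2 > m3
    return not (upward or downward)
```

For conditions after the first, the same check would be called with `min_sessions=20`.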

With few exceptions, sessions were conducted daily, five or six days per week. For three sessions after completing each condition, the subjects were fed prior to exposure to the next choice procedure of this experiment. Since the purpose of this manipulation was unrelated to the topic of this article, these results are not reported here.

Results

Figure 3 presents the relative time allocations (top row) and relative local response rates (second row) to the variable alternative as a function of successive reinforcers within a 12-reinforcer block during the first three conditions. Rows 3 and 4 in the figure, respectively, present these measures for the last three conditions of the study. We remind the reader that (a) for the top two rows of data, the VI schedule that seeded reinforcement was VI 30 s, whereas in the bottom two rows it was VI 90 s; and (b) the panel columns on the left and right of the figure are based on a choice between VI schedules, while the middle column is based on choice between a VI and a tandem VI DRL schedule.

Fig. 3 Relative time allocations (first row of panels) and relative local response rates (second row of panels) as a function of the serial order of reinforcers in a 12-reinforcer block for each of five different reinforcer ratios in Conditions 1–3 of Experiment 2. The third and fourth rows of panels are plotted on the same basis as rows one and two, respectively, except that they are based on Conditions 4–6. Vertical bars through the data points define the range of the standard errors of the means.

Four outcomes are apparent in Fig. 3. First, the preference data splay out as a function of serial order in a 12-reinforcer block, and do so in a way consistent with between-lever differences in reinforcement probabilities. When the reinforcer ratios are large (1:11 or 11:1), preference in terms of time shifts more in the direction of the richer schedule than when the ratios are smaller (4:8 or 8:4), and when ratios are smaller, they shift more than they do when the choice schedules provide the same reinforcement rate (6:6). Second, the preference levels and the apparent rate at which they splay with serial position in the reinforcer block seem unaffected by the change in reinforcement rate between Conditions 1–3 and 4–6 (see the first and third rows of panels). Third, unlike the preference data, the relative local response rates to the variable alternative did not change as a function of serial position in each 12-reinforcer block. And fourth, during Conditions 2 and 5, in which the variable alternative was changed from VI to tandem VI DRL, the relative local response rates diminished from the value of approximately .5 that was in evidence in the preceding and following conditions. Almost the same tendencies are evident in the representative individual-subject data shown in Fig. 4.

Fig. 4 Representative individual data (for Rats 2 and 7) showing relative time allocations and relative local response rates as a function of the serial order of reinforcers in a 12-reinforcer block for each of five different reinforcer ratios in Conditions 1–3 of Experiment 2.

Figure 5, which is derived from Fig. 3, presents the relative time allocations and relative local response rates to the variable alternative as a function of the relative frequency of reinforcement for Conditions 1, 2, and 3 (left panels) and Conditions 4, 5, and 6 (right panels). The top two panels show that relative time allocation was controlled by the relative frequency of reinforcement and was largely independent of whether the choice was between two VIs (Conditions 1, 3, 4, and 6) or between a VI and a tandem VI DRL (Conditions 2 and 5). For the top left panel, a two-way ANOVA for Condition and the Relative Frequencies of Reinforcement as within-subjects factors revealed a significant main effect of relative frequencies of reinforcement [F(4, 28) = 112.96, p < .001, $\eta_G^2$ = .68], but the effect of condition was not significant. Although a significant interaction was found [F(8, 56) = 4.60, p < .001, $\eta_G^2$ = .04], significant simple effects of conditions were found only at the 11:1 reinforcer ratio. For the top right panel, the same type of ANOVA showed a significant main effect of relative frequencies of reinforcement [F(4, 28) = 79.95, p < .001, $\eta_G^2$ = .50], but the effect of condition and the interaction were not significant.

Relative time allocated to the variable alternative (top two panels) and ratios of local response rates (bottom two panels) as a function of relative reinforcement frequency for Conditions 1–3 (left panels) and Conditions 4–6 (right panels) of Experiment 2. The data points are derived from Fig. 3. Vertical bars through the data points define the range of the standard errors of the means

Contrary to the top two panels, the bottom two panels of Fig. 5 show that the rate-reducing impact of the DRL contingency for the tandem VI DRL schedule (Conditions 2 and 5) decreased the relative local response rates to the variable alternative, and that this rate was independent of the relative frequency of reinforcement. For the bottom left panel, a two-way ANOVA for Condition and the Relative Frequencies of Reinforcement as within-subjects factors revealed a significant main effect of condition [F(2, 14) = 32.26, p < .001, $\eta_G^2$ = .53] and a significant interaction [F(8, 56) = 6.58, p < .001, $\eta_G^2$ = .02]. Significant simple effects of conditions were found at all levels of relative frequency of reinforcement, and a post-hoc multiple comparison test revealed the following order of relative local response rates at all levels of relative frequency of reinforcement: Condition 1 = Condition 3 > Condition 2. In contrast, significant simple effects of the relative frequency of reinforcement were found only in Condition 1. Almost the same outcomes were obtained from the ANOVA for the bottom right panel. The ANOVA revealed a significant main effect of condition [F(2, 14) = 23.20, p < .001, $\eta_G^2$ = .28] and a significant interaction [F(8, 56) = 2.72, p < .01, $\eta_G^2$ = .01]. Significant simple effects of condition were found at all levels of relative frequency of reinforcement, and a post-hoc multiple comparison test revealed the same order of relative local response rates noted above. Significant simple effects of the relative frequency of reinforcement were found only in Condition 5.

Figure 6 shows the relative local response rates and relative inter-changeover times (visit durations) for the variable alternative for each condition of Experiment 2 (see the Appendix for the absolute local response rates and inter-changeover times for each alternative). Consistent with the above analysis, the relative local response rates were lower in Conditions 2 and 5 than in the other conditions. Differences in reinforcer ratios across blocks had no detectable effects. In contrast, the relative inter-changeover times were almost equal for all conditions, and ordinal covariation was found between the reinforcer ratios and inter-changeover times.

Relative local response rates and relative inter-changeover times for the variable alternative for each condition of Experiment 2. Values in the legend show five different reinforcer ratios
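The measures plotted in Figs. 5 and 6 can be illustrated with a minimal sketch. The session totals below are hypothetical (they are not data from this study), and the "relative" measure is assumed to be the proportion attributable to the variable alternative; the function names are ours, introduced only for illustration.

```python
def relative(variable, fixed):
    """Proportion of a measure attributable to the variable alternative."""
    return variable / (variable + fixed)


def local_response_rate(responses, time_s):
    """Responses per minute of time spent responding on one alternative."""
    return 60.0 * responses / time_s


def mean_interchangeover_time(time_s, visits):
    """Mean visit duration (s): time on an alternative divided by visits to it."""
    return time_s / visits


# Hypothetical totals for one reinforcer-ratio block (not data from the study).
variable = {"responses": 300, "time_s": 400.0, "visits": 50}
fixed = {"responses": 450, "time_s": 600.0, "visits": 50}

rel_time = relative(variable["time_s"], fixed["time_s"])
rel_local = relative(
    local_response_rate(variable["responses"], variable["time_s"]),
    local_response_rate(fixed["responses"], fixed["time_s"]),
)
rel_visit = relative(
    mean_interchangeover_time(variable["time_s"], variable["visits"]),
    mean_interchangeover_time(fixed["time_s"], fixed["visits"]),
)

print(f"{rel_time:.2f} {rel_local:.2f} {rel_visit:.2f}")  # 0.40 0.50 0.40
```

In this invented example, time allocation and visit duration favor the fixed alternative while the relative local response rate stays at .5, which is the kind of dissociation between the two measures that the figures are meant to display.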

Table 2 shows the number of sessions and median durations of reinforced IRTs for the variable and fixed alternatives for all conditions of Experiment 2. As expected, the durations of the reinforced IRT for the variable alternative were longer than those for the fixed alternative in Conditions 2 and 5; they were also longer in Condition 6. A two-way ANOVA for Condition (from 1 to 6) and Alternative (variable and fixed) as within-subjects factors revealed significant main effects of condition [F(5, 35) = 19.20, p < .001, $\eta_G^2$ = .36] and alternatives [F(1, 7) = 19.27, p < .01, $\eta_G^2$ = .39], as well as a Condition × Alternative interaction [F(5, 35) = 27.28, p < .001, $\eta_G^2$ = .34]. A post-hoc test revealed significant simple effects of alternative at Conditions 2, 5, and 6.

Discussion

Tanno et al. (2010) did not manipulate relative reinforcement rate, and therefore did not demonstrate how changes in preference affect the controlling variables that they identified. In addition, the results of our Experiment 1 suggested a correlation between local response rates and relative time allocation (see Fig. 2) that jeopardized Tanno et al.’s claim that time allocation and local response rates are independent factors in determining concurrent performances. Experiment 2 was designed to remedy these problems.

The results obtained were clear-cut. As is shown in Figs. 3 and 5, there was orderly covariation between time allocation and the relative frequency of reinforcement, a result consistent with the idea that the latter variable controlled changes in time allocation. When this result is combined with the strong-preference data from Experiment 1, a complete mapping of the relation between preference expressed in time allocation and relative frequency of reinforcement is created. Taken together, these findings reinforce the view that across the complete range of possible choices, relative reinforcement frequency dictates relative time allocation (Baum & Rachlin, 1969; Brownstein & Pliskoff, 1968).

The results concerning local response rates seem equally convincing. In Figs. 3, 5, and 6, relative local response rates to the variable alternative are, to a first approximation, unchanged with variation in the relative frequency of reinforcement. What changes local rates is not the distribution of reinforcers between alternatives, but the reinforced-IRT contingency that a particular schedule imposes (Table 2).

When Tanno et al. (2010) introduced their idea that time preferences in choice are controlled by the relative frequency of reinforcement and local rates are controlled by IRT reinforcement, they argued for two levels of analysis: Time allocation was controlled at a molar level, because the relative frequency of reinforcement is based on session-wide totals of reinforcement to each alternative; local response rates to a schedule, on the other hand, were controlled at a molecular level, because their account literally attributes local rates to the relation between the probability of reinforcement and the time between the penultimate and reinforced responses.

An interesting dividend of the present use of the variable-environment procedure of Davison and Baum (2000) is that it calls this designation into question, because preference was not controlled by session-wide totals of between-alternative reinforcement. In the present study, we see in Fig. 3 that often after four or five reinforcers, shifts in preference have already emerged under some choice schedules, as was shown in the studies of Davison and Baum (2000; see also Baum & Davison, 2004, and Baum, 2010, for a review). This outcome raises the possibility that what controls preference is not relative session-wide rates of reinforcement, but instead local and recent between-schedule differences in reinforcement frequency. Indeed, it might be more accurate to say that both preference and local rates in choice are controlled at a local, rather than a session-wide, level.

Comparison of the left and right panels of Fig. 6 reveals that relative inter-changeover times for the variable alternative were unchanged across conditions, even though the local response rates decreased in Conditions 2 and 5. In addition, the results in the right panel of Fig. 6, which show the ordinal covariation between reinforcer ratio and relative inter-changeover times across conditions, suggest that IRT reinforcement might control within-schedule responding, and that the rate of reinforcement might control between-schedule responding (Shull, 2011).

General discussion

Summary of our experiments

Tanno et al. (2010) offered evidence that manipulating IRT reinforcement in concurrent schedules affects local response rates much more than it affects a molar preference measure such as relative time allocation. They interpreted this finding as showing that these two measures were separable, and that choice as an outcome was predicated on the operation of two processes. There were, however, a few problems with their data and methods. First, their test was asymmetric, in that IRT reinforcement was only manipulated on one choice schedule, and second, they attributed control in part to the relative frequency of reinforcement, even though they did not manipulate it in their study.

The purpose of Experiment 1 was to remedy the first problem with Tanno et al. (2010). In the first and third conditions, comparison between concurrent VR VI and concurrent yoked VI VI schedules showed higher local response rates in VR than in yoked VI, and the rate in VR decreased by adding a DRL contingency in the second condition (top part of Fig. 1). The relative time allocations to the VR and yoked VI were almost the same in all conditions, whereas their absolute levels between Condition 2 and Conditions 1 and 3 differed (bottom part of Fig. 1). These results support Tanno et al.’s claim that local response rates in choice situations are controlled by IRT reinforcement, and this control is independent of the control of relative time allocation by the relative frequency of reinforcement.

Unfortunately, a correlation appeared between changes in preference and changes in local rates (Fig. 2). We viewed this correlation as a byproduct of the following chain of events: (a) adding a DRL contingency to the VR schedule reduced its local response rate; (b) this, in turn, decreased the relative VR reinforcement rate; and (c) the decreased relative VR reinforcement rate reduced preference for the VR.
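The arithmetic behind steps (a) and (b) can be sketched as follows. This is only an illustration under stated assumptions: the local response rates and the time fraction are invented numbers, the VR is treated as delivering one reinforcer per 20 responses on average, and the VI 90-s schedule is assumed to deliver its scheduled 40 reinforcers per hour regardless of responding.

```python
VR_VALUE = 20                    # mean responses per reinforcer on VR 20
VI_REINFORCERS_PER_HOUR = 40.0   # VI 90 s, assumed to be collected as scheduled


def vr_reinforcers_per_hour(local_rate_per_min, time_fraction, vr_value=VR_VALUE):
    """Reinforcers earned per session hour on the VR alternative.

    local_rate_per_min: responses per minute while on the VR (hypothetical).
    time_fraction: proportion of session time spent on the VR (hypothetical).
    """
    responses_per_hour = local_rate_per_min * 60.0 * time_fraction
    return responses_per_hour / vr_value


def relative_vr_reinforcement(local_rate_per_min, time_fraction):
    """VR reinforcers as a proportion of all reinforcers obtained."""
    vr = vr_reinforcers_per_hour(local_rate_per_min, time_fraction)
    return vr / (vr + VI_REINFORCERS_PER_HOUR)


# Hypothetical local rates before and after a DRL contingency is added,
# holding time allocation constant at 80% of the session.
before = relative_vr_reinforcement(100.0, 0.8)
after = relative_vr_reinforcement(50.0, 0.8)
print(f"{before:.3f} {after:.3f}")  # 0.857 0.750
```

Because a ratio schedule pays in proportion to responding, halving the local rate halves the VR reinforcement rate even when time allocation is held fixed, so the relative VR reinforcement rate falls. Step (c) then follows if preference tracks relative reinforcement frequency.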

The two-process account advanced in this report is consistent with outcomes (a) and (c); however, the attendant decrease in relative VR reinforcement jeopardized the two-process account, and called for addressing whether the covariation between changes in preference and local rates was causal or merely correlational. In addition, Experiment 1 introduced another problem: Preferences for the variable alternative were uniformly high, thereby failing to define a full functional relationship between changes in relative time allocation and between-schedule local response rates.

The purpose of Experiment 2 was to remedy the second problem of Tanno et al. (2010), as well as these emergent problems in Experiment 1. Experiment 2 was based on the so-called “variable-environment” procedure of Davison and Baum (2000). This procedure complements the extreme-preference data of the prior experiment by populating its data set with more moderate preference levels. In addition, this time the local response rates were largely unchanged with shifts in preference, although imposition of a tandem schedule terminating in DRL did show the sensitivity of local response rates to IRT reinforcement. Finally, by virtue of its frequent, within-session changes in the relative frequencies of reinforcement, Experiment 2 showed that control by this variable is based not on subjects’ session-wide calculations of each schedule’s reinforcement frequency, but instead on local and recent changes in the probability of reinforcement.

By separately manipulating preference levels and local response rates, Experiments 1 and 2 join Tanno et al. (2010) in supporting the view that two processes control behavior in choice: one based on control by local differences in reinforcement frequency, and the other based on local differences in the IRTs that different choice schedules reinforce. We view these manipulations, so often used in pursuit of a functional analysis of behavior, as decomposing the behavior stream into its likely sources of control.

Relation to other single- and concurrent-schedule studies

This article reports that concurrent performances are controlled by two independent factors: (a) the relative frequency of reinforcement, which controls where time is allocated in choice, and (b) IRT reinforcement, which determines the local rate of response emission during a visitation to an alternative. The utility of the present work is that the joint operation of these factors is revealed in a single research design. However, individually the processes presented here can be identified in prior work. For example, the correspondence between relative time allocation and relative reinforcement frequency has been explicitly demonstrated by Brownstein and Pliskoff (1968) and Baum and Rachlin (1969). Tanno and Silberberg (2012) and Tanno et al. (2010) have shown in choice as well as individual schedules that IRT reinforcement defines between-schedule differences in local response rates. Finally, the independence of these two factors in determining choice behavior was shown by Herrnstein and Heyman (1979) in concurrent VR VI and by Bauman, Shull, and Brownstein (1975) in their demonstration of equivalence in time allocation to a VI schedule when the alternative source of reinforcement was either a VI or a variable-time schedule.

As was discussed in Tanno et al. (2010), if we accept Herrnstein’s (1970) notion that single schedules can be viewed as a choice situation between experimentally reinforced behavior and endogenously reinforced behavior, our conclusion is consistent with findings from Shull and colleagues (Shull, 2011; Shull, Gaynor, & Grimes, 2001; Shull & Grimes, 2003; Shull, Grimes, & Bennett, 2004) in single-schedule situations. They reported that responses (or IRTs) under single reinforcement schedules can be classified into bouts of responses or pauses, and that reinforcement frequency mainly affects the start of a bout (bout-initiation rate), whereas types of reinforcement schedule (VI and tandem VI–FR or tandem VI–VR) mainly affect the speed of responding within a bout (within-bout response rate). Because the reciprocal of the bout-initiation rate will be the time spent not responding, and that of the within-bout response rate will be the local response rate on the time spent in responding, Shull’s results can be interpreted as showing that the reinforcement rate controls time allocation and IRT reinforcement controls local response rate (see also Smith, McLean, Shull, Hughes, & Pitts, 2014).

Theoretical implications

The present study demonstrates that the IRT reinforcement contingencies imposed by different types of schedules control local response rate, whereas the frequency of reinforcement controls time allocation. These conclusions follow from the premise that changes in behavior that accompany changes in some dimension of reinforcement identify control of the former by the latter. Stated another way, our claims follow from the usual assumption among behavior analysts that establishing functional relations between performances and reinforcement identifies mechanisms of control. But this assumption raises an interesting question: Are there two separable loci of control in the mechanism of reinforcement?

Morse (1966) thought so. He distinguished between the shaping and strengthening effects of reinforcement. In this view, IRT reinforcement shapes a response unit defined by the IRT, and the frequency of that unit is strengthened by the frequency of reinforcement. This idea is repeatedly discussed in the literature on the matching law (e.g., de Villiers, 1977; Nevin, 1982). The present results can be viewed as evidence in support of this thesis (see also Shull, 2011).

That having been said, we recently proposed the so-called copyist model (Tanno & Silberberg, 2012), an account of schedule effects that succeeds in accommodating not only the VR–VI rate difference (e.g., Peele et al., 1984), but also matching in concurrent VI VI schedules, and matching with response bias toward the VR in concurrent VR VI schedules. We address this point because the copyist model explains behavior with only the shaping feature of reinforcement in operation (Shimp, 1976, 1984).

Its algorithm posits that animals remember the last 300 reinforced IRTs. These IRTs are weighted by an exponential function, so that IRTs closer to reinforcement contribute more to its mean value. This difference is what most clearly distinguishes this account from earlier IRT reinforcement accounts (Anger, 1956; Morse, 1966).

To emit a response, a single IRT is randomly drawn from memory, its probability of selection weighted so that each IRT in memory occupies the same portion of session time. That is, all other things being equal, the probability of sampling, say, a 2-s IRT is twice that of sampling a 4-s IRT. This rule is based on Shimp’s demonstrations of time-allocation matching in choice among different IRTs (e.g., Shimp, 1969, 1974; Shimp, Sabulsky, & Childers, 1990).

To respond, a single IRT is selected from an exponential distribution with a mean value equal to the sampled IRT from memory. If reinforcement is presented for the emitted IRT, it replaces the oldest reinforced IRT in memory. Whether reinforcement is presented or not, a new IRT duration is then selected for emission as the next simulated response.
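The single-schedule steps described above can be sketched in a few lines. This is a simplified illustration, not the published implementation of Tanno and Silberberg (2012): the decay parameter, the minimum IRT, the initial memory contents, and the `reinforce` callback are all hypothetical choices made here, and the exponential weighting is assumed to apply to the run of IRTs emitted since the last reinforcer, with the most recent IRT weighted most.

```python
import random
from collections import deque

MEMORY_SIZE = 300   # from the text: the last 300 reinforced IRTs are remembered
DECAY = 0.5         # hypothetical weighting parameter (not from the source)
MIN_IRT = 1e-3      # hypothetical floor (s) so that 1/IRT weights stay finite


def weighted_irt(irts, decay=DECAY):
    """Exponentially weighted mean of the IRTs since the last reinforcer.

    The IRT that ended in reinforcement gets weight 1; each earlier IRT
    is discounted by a further factor of `decay`.
    """
    n = len(irts)
    weights = [decay ** (n - 1 - i) for i in range(n)]
    return sum(w * x for w, x in zip(weights, irts)) / sum(weights)


def sample_from_memory(memory, rng):
    """Draw one stored IRT with probability proportional to 1/duration,
    so that each stored IRT occupies the same share of session time."""
    items = list(memory)
    weights = [1.0 / x for x in items]
    return rng.choices(items, weights=weights, k=1)[0]


def simulate(n_responses, reinforce, initial_memory, rng):
    """Emit n_responses IRTs; `reinforce(irt, rng)` is a hypothetical
    callback that returns True when the emitted IRT is reinforced."""
    memory = deque(initial_memory, maxlen=MEMORY_SIZE)
    since_reinforcer = []
    emitted = []
    for _ in range(n_responses):
        target = sample_from_memory(memory, rng)
        # Emitted IRT: exponential distribution whose mean is the sampled IRT.
        irt = max(rng.expovariate(1.0 / target), MIN_IRT)
        emitted.append(irt)
        since_reinforcer.append(irt)
        if reinforce(irt, rng):
            # A full deque drops its oldest entry when a new one is appended.
            memory.append(weighted_irt(since_reinforcer))
            since_reinforcer = []
    return emitted, memory
```

A ratio-like contingency could be passed as `lambda irt, rng: rng.random() < 1 / 20`, and a crude stand-in for an added DRL requirement as `lambda irt, rng: irt >= 0.8 and rng.random() < 1 / 20`; both are approximations chosen for brevity rather than faithful schedule implementations.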

To simulate concurrent performances, we added a “choice tag” to each IRT in memory. For example, if the last reinforcement was obtained from the left alternative, the duration of the exponentially weighted sequence of left IRTs that terminated in reinforcement was updated in memory. Thus, the copyist model applied to choice differs in two important ways from the prior IRT accounts offered by Anger (1956) and Morse (1966). First, as we noted earlier, the copyist model is unique in exponentially weighting the string of IRTs between successive reinforcers, and second, tagging the location of reinforced IRTs permits extending a single-schedule account to choice behavior.

The copyist model assumes that subjects simply reproduce reinforced IRTs. Thus, responding to schedules in the here and now is viewed as merely copying what was reinforced in the past. The algorithm in use assumes that subjects’ behavioral repertoire is composed of many behavioral units, with each unit shaped by IRT reinforcement. Because these IRTs are selected by their consequences, they have no value or strength. In its strongest form, the copyist model explains behavior without appeal to operant views of the behavior stream as measuring reinforcer value or strength. Instead, behavior is due to response–reinforcer contiguity and the IRTs that these contiguities select (Shimp, 1976, 1984). Although the present study demonstrated two sources of the control of choice, further studies will be needed to determine the actual reinforcement mechanisms for those relations: Are they due to two separable processes, as suggested by the present work, or to one, as suggested by the copyist model?

References

Anger, D. (1956). The dependence of interresponse times upon the relative reinforcement of different interresponse times. Journal of Experimental Psychology, 52, 145–161. doi:10.1037/h0041255

Baum, W. M. (1974). On two types of deviation from the matching law: Bias and undermatching. Journal of the Experimental Analysis of Behavior, 22, 231–242. doi:10.1901/jeab.1974.22-231

Baum, W. M. (2010). Dynamics of choice: A tutorial. Journal of the Experimental Analysis of Behavior, 94, 161–174. doi:10.1901/jeab.2010.94-161

Baum, W. M., & Davison, M. (2004). Choice in a variable environment: Visit patterns in the dynamics of choice. Journal of the Experimental Analysis of Behavior, 81, 85–127. doi:10.1901/jeab.2004.81-85

Baum, W. M., & Rachlin, H. C. (1969). Choice as time allocation. Journal of the Experimental Analysis of Behavior, 12, 861–874. doi:10.1901/jeab.1969.12-861

Bauman, R. A., Shull, R. L., & Brownstein, A. J. (1975). Time allocation on concurrent schedules with asymmetrical response requirements. Journal of the Experimental Analysis of Behavior, 24, 53–57. doi:10.1901/jeab.1975.24-53

Brownstein, A. J., & Pliskoff, S. S. (1968). Some effects of relative reinforcement rate and changeover delay in response-independent concurrent schedules of reinforcement. Journal of the Experimental Analysis of Behavior, 11, 683–688. doi:10.1901/jeab.1968.11-683

Davison, M., & Baum, W. M. (2000). Choice in a variable environment: Every reinforcer counts. Journal of the Experimental Analysis of Behavior, 74, 1–24. doi:10.1901/jeab.2000.74-1

de Villiers, P. A. (1977). Choice in concurrent schedules and a quantitative formulation of the law of effect. In W. K. Honig & J. E. R. Staddon (Eds.), Handbook of operant behavior (pp. 233–287). Englewood Cliffs, NJ: Prentice-Hall.

Fleshler, M., & Hoffman, H. S. (1962). A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior, 5, 529–530. doi:10.1901/jeab.1962.5-529

Herrnstein, R. J. (1961). Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior, 4, 267–272. doi:10.1901/jeab.1961.4-267

Herrnstein, R. J. (1970). On the law of effect. Journal of the Experimental Analysis of Behavior, 13, 243–266. doi:10.1901/jeab.1970.13-243

Herrnstein, R. J., & Heyman, G. M. (1979). Is matching compatible with reinforcement maximization on concurrent variable interval, variable ratio? Journal of the Experimental Analysis of Behavior, 31, 209–223. doi:10.1901/jeab.1979.31-209

Morse, W. H. (1966). Intermittent reinforcement. In W. K. Honig (Ed.), Operant behavior: Areas of research and application (pp. 52–108). New York, NY: Appleton-Century-Crofts.

Myers, D. L., & Myers, L. E. (1977). Undermatching: A reappraisal of performance on concurrent variable-interval schedules of reinforcement. Journal of the Experimental Analysis of Behavior, 27, 203–214. doi:10.1901/jeab.1977.27-203

Nevin, J. A. (1982). Some persistent issues in the study of matching and maximizing. In M. L. Commons & R. J. Herrnstein (Eds.), Quantitative analyses of behavior: Vol. 2. Matching and maximizing accounts (pp. 53–165). Cambridge, MA: Ballinger.

Olejnik, S., & Algina, J. (2003). Generalized eta and omega squared statistics: Measures of effect size for some common research designs. Psychological Methods, 8, 434–447. doi:10.1037/1082-989X.8.4.434

Peele, D. B., Casey, J., & Silberberg, A. (1984). Primacy of interresponse time reinforcement in accounting for rate differences under variable-ratio and variable-interval schedules. Journal of Experimental Psychology: Animal Behavior Processes, 10, 149–167. doi:10.1037/0097-7403.10.2.149

Shimp, C. P. (1969). The concurrent reinforcement of two interresponse times: The relative frequency of an interresponse time equals relative harmonic length. Journal of the Experimental Analysis of Behavior, 12, 403–411. doi:10.1901/jeab.1969.12-403

Shimp, C. P. (1974). Time allocation and response rate. Journal of the Experimental Analysis of Behavior, 21, 491–499. doi:10.1901/jeab.1974.21-491

Shimp, C. P. (1976). Organization in memory and behavior. Journal of the Experimental Analysis of Behavior, 26, 113–130. doi:10.1901/jeab.1976.26-113

Shimp, C. P. (1984). Cognition, behavior, and the experimental analysis of behavior. Journal of the Experimental Analysis of Behavior, 42, 407–420. doi:10.1901/jeab.1984.42-407

Shimp, C. P., Sabulsky, S. L., & Childers, L. J. (1990). Preference as a function of absolute response durations. Journal of Experimental Psychology: Animal Behavior Processes, 16, 288–297. doi:10.1037/0097-7403.16.3.288

Shull, R. L. (2011). Bouts, changeovers, and units of operant behavior. European Journal of Behavior Analysis, 12, 49–72.

Shull, R. L., Gaynor, S. T., & Grimes, J. A. (2001). Response rate viewed as engagement bouts: Effects of relative reinforcement and schedule type. Journal of the Experimental Analysis of Behavior, 75, 247–274. doi:10.1901/jeab.2001.75-247

Shull, R. L., & Grimes, J. A. (2003). Bouts of responding from variable-interval reinforcement of lever pressing by rats. Journal of the Experimental Analysis of Behavior, 80, 159–171. doi:10.1901/jeab.2003.80-159

Shull, R. L., Grimes, J. A., & Bennett, J. A. (2004). Bouts of responding: The relation between bout rate and the rate of variable-interval reinforcement. Journal of the Experimental Analysis of Behavior, 81, 65–83. doi:10.1901/jeab.2004.81-65

Smith, T. T., McLean, A. P., Shull, R. L., Hughes, C. E., & Pitts, R. C. (2014). Concurrent performance as bouts of behavior. Journal of the Experimental Analysis of Behavior, 102, 102–125. doi:10.1002/jeab.90

Stubbs, D. A., & Pliskoff, S. S. (1969). Concurrent responding with fixed relative rate of reinforcement. Journal of the Experimental Analysis of Behavior, 12, 887–895. doi:10.1901/jeab.1969.12-887

Tanno, T., & Sakagami, T. (2008). On the primacy of molecular processes in determining response rates under variable-ratio and variable-interval schedules. Journal of the Experimental Analysis of Behavior, 89, 5–14. doi:10.1901/jeab.2008.89-5

Tanno, T., & Silberberg, A. (2012). The copyist model of response emission. Psychonomic Bulletin & Review, 19, 759–778. doi:10.3758/s13423-012-0267-1

Tanno, T., Silberberg, A., & Sakagami, T. (2010). Concurrent VR VI schedules: Primacy of molar control of preference and molecular control of response rates. Learning & Behavior, 38, 382–393. doi:10.3758/LB.38.4.382

Author note

This work was supported by JSPS KAKENHI #00237309.

Appendix

Cite this article

Tanno, T., Silberberg, A. Inter-response-time reinforcement and relative reinforcer frequency control choice. Learn Behav 43, 54–71 (2015). https://doi.org/10.3758/s13420-014-0161-y

DOI: https://doi.org/10.3758/s13420-014-0161-y