Abstract

Previous animal-learning studies have shown that the effect of the predictive history of a cue on its associability depends on whether priority was given to the absolute or relative predictiveness of that cue. The present study tested this assumption in a human contingency-learning task. In both experiments, one group of participants was trained with predictive and nonpredictive cues that were presented according to an absolute-predictiveness principle (either continuously or partially reinforced cue configurations), whereas a second group was trained with co-occurring cues that differed in predictiveness (emphasizing the relative predictive validity of the cues). In both groups, later test discriminations were learned more readily if the discriminative cues had been predictive in the previous learning stage than if they had been nonpredictive. These results imply that both the absolute and relative predictiveness of a cue lead to positive transfer with regard to its associability. The data are discussed with respect to attentional models of associative learning.


Common to most models of associative learning is the assumption that learning depends on the extent of stimulus processing (e.g., the amount of attention paid to a stimulus). After Rescorla and Wagner (1972) focused on the processing of the unconditioned stimulus (i.e., its unexpectedness), most later theorists have assumed that learning will also depend on (and influence) the amount of attention paid to the cues (i.e., the conditioned stimuli; Kruschke, 2001; Mackintosh, 1975; Pearce & Hall, 1980). Whereas attention is typically assumed to increase the associability of a stimulus (in fact, the terms “attention” and “associability” are often used as synonyms; see, e.g., Haselgrove, Esber, Pearce, & Jones, 2010), these models make opposite predictions with regard to what determines the amount of attention paid to a cue, and thus how much is being learned about that stimulus. One such factor is the predictive power of a cue concerning an outcome (predictiveness), that is, the extent to which it actually enables the organism to reliably predict an outcome to occur. According to the Mackintosh (1975) model, assuming a positive transfer of predictiveness on associability, the amount of attention directed to a cue will be a function of how reliable that cue is as a predictor of an outcome, relative to other cues. Consequently, both the salience of the cue and the rate of learning are supposed to be higher for predictive than for nonpredictive cues. By contrast, Pearce and Hall (1980) assumed a negative transfer of predictiveness with regard to the associability of a cue, arguing that the most attention will be paid to cues that are associated with the highest prediction error. 
More precisely, enhanced processing of a stimulus in the case of an unexpected outcome is supposed to enable further learning and a reduction of prediction errors in the future, whereas the associability of well-known, reliable predictors is assumed to decrease with progressive learning (see also Pearce, Kaye, & Hall, 1982).
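The Pearce and Hall (1980) idea that associability tracks recent prediction error can be illustrated with a short simulation. This is only a minimal sketch under simplifying assumptions that are not taken from the text: a single cue, an outcome coded as 1 (present) or 0 (absent), an error-correcting update of associative strength, and hypothetical names and parameter values (`simulate_pearce_hall`, `learning_rate`, `alpha_start`). It is not the full model specification.

```python
def simulate_pearce_hall(outcomes, learning_rate=0.2, alpha_start=1.0):
    """Track associative strength (v) and associability (alpha) for one cue.

    Assumptions for illustration only: outcomes are coded 1 or 0, and v is
    updated by a simple error-correcting rule scaled by the current alpha.
    """
    v = 0.0
    alpha = alpha_start
    alphas = []
    for lam in outcomes:
        error = lam - v                     # prediction error on this trial
        v += learning_rate * alpha * error  # learning is scaled by alpha
        alpha = abs(error)                  # next alpha = current absolute error
        alphas.append(alpha)
    return v, alphas


def late_mean(values, n=10):
    """Mean of the last n values."""
    return sum(values[-n:]) / n


continuous = [1] * 100   # A+: outcome on every trial
partial = [1, 0] * 50    # X±: outcome on half of the trials (alternating)

_, alpha_continuous = simulate_pearce_hall(continuous)
_, alpha_partial = simulate_pearce_hall(partial)

# Associability late in training stays higher for the partially reinforced cue.
print(late_mean(alpha_partial) > late_mean(alpha_continuous))
```

Under these assumptions, associability declines as a continuously reinforced cue becomes a reliable predictor, whereas it remains elevated for a partially reinforced cue. This is the negative-transfer pattern described above.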

Empirical work has shown that the amount of stimulus processing can be modified by the learning history concerning the predictiveness of the stimulus. For instance, in line with the Mackintosh (1975) model, increases in the rate of learning have been demonstrated in both humans and animals for cues that had been experienced as being predictive of an outcome in a previous learning stage, as compared to cues that had been nonpredictive (e.g., Dopson, Esber, & Pearce, 2010; Le Pelley & McLaren, 2003; Le Pelley, Turnbull, Reimers, & Knipe, 2010). Moreover, eyetracking data have demonstrated that (previously) predictive cues capture more visual attention (presumably a more direct measure of associability than learning rate) than do nonpredictive cues (e.g., Le Pelley, Beesley, & Griffiths, 2011; Mitchell, Griffiths, Seetoo, & Lovibond, 2012; Wills, Lavric, Croft, & Hodgson, 2007). In opposition to these findings, and in line with the Pearce and Hall (1980) model, several animal-learning studies have reported either elevated (or sustained) learning rates for cues that had previously been followed by unpredictable outcomes—that is, cues that had been presented in a partial reinforcement schedule—as compared to continuously reinforced cues (e.g., Haselgrove et al., 2010; Kaye & Pearce, 1984; Wilson, Boumphrey, & Pearce, 1992). Moreover, data from human contingency-learning studies have also shown that poor predictors may capture more overt attention than do perfect predictors of an outcome (Austin & Duka, 2010; Hogarth, Dickinson, Austin, Brown, & Duka, 2008). A particularly intriguing study suggesting that uncertainty can also enhance the learning rate in humans has been reported by Griffiths, Johnson, and Mitchell (2011). 
With a standard human contingency-learning paradigm (the “allergist task”), the authors showed that a few “uncertainty-inducing” unpaired cue presentations (A−) after an initial learning stage with perfect contingencies (A+) can enhance the rate of learning for cue A in a subsequent learning stage, relative to a group without unpaired presentations.

In view of these findings, it seems that the associability of a cue depends on both the Mackintosh (1975) and Pearce and Hall (1980) mechanisms of stimulus processing. Various hybrid models of associative learning have been formulated (e.g., Esber & Haselgrove, 2011; George & Pearce, 2012; Le Pelley, 2004; Le Pelley, Haselgrove, & Esber, 2012; Pearce & Mackintosh, 2010) arguing that the resulting associability of a cue is a function of (a) a predictiveness-driven process of stimulus selection (Mackintosh) and (b) an uncertainty-driven learning process (Pearce–Hall). However, even with these hybrid models (which differ in detail), it is difficult to explain all of the increments and decrements of attention that have been reported as a function of predictiveness and uncertainty in the studies at hand (Pearce & Mackintosh, 2010). Further research will be needed to identify the factors that determine the extents to which associability is driven by the predictiveness of the cue and by the uncertainty of the outcome in a given learning situation. It has also been discussed whether the mechanisms that determine the associability of a cue differ between species, as well as between the types of discrimination problems used (see Haselgrove et al., 2010).

In particular, it has been argued that the associability mechanism depends on whether the procedure emphasizes the absolute predictiveness (i.e., the probability of being followed by an expected, well-predictable outcome) or the relative predictiveness (i.e., the extent to which one stimulus is a better predictor than another). In line with hybrid models, the degree of stimulus processing may actually be driven by interacting effects of absolute and relative predictiveness (e.g., Le Pelley, 2004). An absolute-predictiveness mechanism (Pearce–Hall) may be expected to dominate when the entire compound of cues is informative, whereas a relative-predictiveness mechanism (Mackintosh) should dominate when simultaneously presented cues differ in their predictiveness. In fact, most of the evidence for a Pearce–Hall mechanism of attention allocation has been obtained with procedures utilizing entire configurations of cues that were either predictive or nonpredictive of an outcome (e.g., Austin & Duka, 2010; Griffiths et al., 2011; Hogarth et al., 2008; Wilson et al., 1992). On the other hand, Mackintosh-like effects have typically been obtained with compounds of a predictive and a nonpredictive stimulus being presented on the same trials (e.g., Bonardi, Graham, Hall, & Mitchell, 2005; Dopson et al., 2010; Le Pelley & McLaren, 2003; Mitchell et al., 2012).

Haselgrove et al. (2010) reported a study that allowed for direct comparison of the effects of previously experienced absolute and relative predictiveness on the rate of discrimination learning in rats. Specifically, they found that prior predictiveness could either enhance or reduce the associability of a cue, depending on how the predictiveness of cues had been manipulated. In all their discrimination problems, A and B were consistently followed by a particular outcome (i.e., food was given in 100 % of the trials), whereas X and Y were followed by food on only 50 % of the trials. However, in the first two experiments, Haselgrove et al. manipulated the predictiveness between different learning trials (i.e., continuous vs. partial reinforcement: A+, B+, X±, Y±), whereas they contrasted predictive and nonpredictive cues within the same learning trials in the second two experiments (i.e., feature-neutral discrimination problems: AX+, BY+, X−, Y−). Thus, the first two experiments had been arranged similarly to many studies that had provided evidence for a Pearce–Hall mechanism (e.g., Kaye & Pearce, 1984; Wilson et al., 1992), whereas the second two experiments used a design that required within-compound discrimination, as had been the case in many studies reporting data in favor of a Mackintosh mechanism (e.g., Dopson et al., 2010; Le Pelley & McLaren, 2003). Haselgrove et al. found that, in the first two experiments, later test discriminations were learned faster if the relevant cues had been nonpredictive in the previous stage (AX+ vs. AY−), whereas the same test discriminations in the second two experiments were learned more readily if they were based on previously predictive cues (AY+ vs. BY−). 
This indicates that the transfer effect of prior learning on the associability of a cue may actually depend on whether the procedural characteristics of the learning task emphasize absolute or relative predictiveness (e.g., whether within-compound discriminations are required). That is, associability may increase with the prediction error (which was higher for X and Y) in the case of absolute predictiveness manipulations, whereas it may increase with the predictiveness of a cue (with A and B being most predictive) in the case of relative predictiveness manipulations.

Le Pelley et al. (2010), however, reported a human contingency-learning study that casts doubt on this assumption. In a series of four experiments, the authors trained participants with single-cue trials that varied in predictiveness (e.g., A+, B−, X±, Y±). Though this procedure is assumed to emphasize absolute over relative predictiveness, later discriminations about previously predictive cues were consistently learned more readily than discriminations about previously nonpredictive cues. Thus, in contrast to the animal-learning data reported by Haselgrove et al. (2010), these manipulations of absolute predictiveness in a human contingency-learning task did not increase the associability of cues that had been associated with unexpected outcomes. The authors argued that the absolute-predictiveness mechanism exerts the same influence on associability in humans as the relative-predictiveness mechanism—that is, an increase in associability for predictive cues (in line with Mackintosh, 1975).

It could thus be argued that there is a discrepancy between animal and human learning with regard to the effect of absolute predictiveness. Whereas many animal-learning studies showed that single-cue training produces negative transfer effects on associability (i.e., reduced associability for predictive cues; e.g., Hall & Pearce, 1979; Swartzentruber & Bouton, 1986), Le Pelley et al. (2010) found positive transfer effects with manipulations of absolute predictiveness in humans. On the other hand, some data from human contingency-learning studies are inconsistent with this picture, showing that cues followed by unexpected outcomes do sometimes enhance overt attention as well as the rate of learning, as compared to good predictors of outcomes (e.g., Austin & Duka, 2010; Griffiths et al., 2011; Hogarth et al., 2008), indicating negative transfer of absolute predictiveness. Moreover, recently there has been some indication that more direct measures of human attention (i.e., modulations of the attentional blink, in contrast to indirect measures like learning rate) could actually be differentially affected by absolute and relative predictiveness (see Glautier & Shih, 2014, and their discussion of Exp. 1). Last but not least, although many animal-learning studies have specifically looked at transfer effects of absolute predictiveness on learning-rate measures (typically reporting negative transfer), human contingency-learning studies on this issue have been scarce and less consistent (both negative and positive transfers have been reported; e.g., Griffiths et al., 2011; Le Pelley et al., 2010). Due to the theoretical importance of this issue (in particular with regard to hybrid models of associative learning; see, e.g., George & Pearce, 2012; Le Pelley, 2004), it appears crucial to replicate transfer effects (in particular for differences in absolute predictiveness) in a human contingency-learning paradigm.

The aim of the present study was to provide additional support for the claim that both absolute and relative predictiveness exert positive transfer effects on the subsequent rate of learning in humans (Le Pelley et al., 2010). Therefore, the experimental design used by Haselgrove et al. (2010) was adapted to a human contingency-learning paradigm. Going beyond Le Pelley et al. (2010), such a replication allows direct comparison of the transfer effects of absolute and relative predictiveness with the same type of compound discrimination problems at the test stage (single-cue test discriminations had been used by Le Pelley et al., 2010).

Specifically, at Stage 1, all participants were trained with some cues that were predictive and with others that were nonpredictive of an outcome. Predictiveness was manipulated either between different learning trials (Group Absolute; e.g., A+, B−, X±), or within trials and between the two elements of a compound (Group Relative; e.g., AX+, BX−). Subsequently, both groups learned the same compound discriminations at Stage 2, with the distinctive elements being either previously predictive (e.g., AX vs. BX) or nonpredictive cues (e.g., CV vs. CW). In line with the assumption that human contingency learning is subject to positive transfer effects irrespective of how prior predictiveness had been manipulated (Le Pelley et al., 2010), participants are expected to learn AX/BX test discriminations more readily than CV/CW discriminations regardless of whether (a) absolute or relative predictiveness had been manipulated at Stage 1, and (b) prior learning experience was based on single-cue or multiple-cue presentations.

Experiment 1

The design of Experiment 1 was developed in analogy to Haselgrove et al. (2010; see Table 1). Predictiveness was manipulated in a way that emphasized the absolute predictiveness of cues in Group Absolute (corresponding to Haselgrove et al.’s Exp. 1), whereas it was manipulated according to a relative-predictiveness principle in Group Relative (Haselgrove et al.’s Exp. 4). In Group Absolute, single cues were presented on each trial, with half of them (A, B, C, and D) being predictive of a particular outcome (either Outcome 1 or 2), and half of them (V, W, X, and Y) being followed by Outcomes 1 and 2 on 50 % of trials each. The same cues were either predictive or nonpredictive in Group Relative, but these participants learned to discriminate the predictiveness of cues that were presented simultaneously (giving priority to the relative predictiveness of cues). Stage 2 was identical for both groups. Several compounds consisting of a previously predictive and a previously nonpredictive cue were presented to the participants. In order to test for effects of learned predictiveness, the discriminations in Stage 2 were based either on previously predictive or nonpredictive cues.

Method

Participants

A total of 61 undergraduate students of psychology (44 female, 17 male) were recruited at the campus of Technische Universität Darmstadt. Their ages ranged from 18 to 42 years (M = 23.8, SD = 5.1). The participants were assigned randomly to either Group Absolute (n = 33) or Group Relative (n = 28). The individual test sessions took about 45 min, and the participants were compensated with course credits.

Apparatus and stimuli

Stimulus presentation and response registrations were programmed in Python using the PsychoPy package (Peirce, 2007). The stimuli and text instructions were presented on a 22-in. widescreen TFT monitor with a resolution of 1,680 × 1,050 pixels. The monitor was placed approximately 60 cm in front of the participants’ seat.

A set of eight cues was selected from a colored version of Snodgrass and Vanderwart’s picture database (Rossion & Pourtois, 2004), showing drawn images of everyday objects (a brush, a comb, an envelope, a jar, glasses, a key, a pipe, and a shoe). Two pictures of town signs (“Ludwigshafen” and “Mannheim”) and two male portraits (taken from Minear & Park, 2004) were used as the outcomes in Stages 1 and 2, respectively.

Procedure

At the beginning of the experimental session, each participant was asked to read an instruction sheet concerning Stage 1 (the learning task was adapted from Uengoer & Lachnit, 2012). The participants were asked to imagine working as a police officer trying to solve a series of crimes that took place either in “Ludwigshafen” or in “Mannheim.” The task in Stage 1 was to find out how reliably certain pieces of evidence indicated where the crime had happened. In each trial, either one or two cues (cf. Table 1) were presented on the screen. The eight cue images were assigned randomly to the particular types of cues in the experiment. The participants were asked to indicate where they assumed the shown objects had been found by clicking on a visual analogue scale ranging from “certainly Ludwigshafen” to “certainly Mannheim.” For each participant, the two locations (“Ludwigshafen” and “Mannheim”) were randomly chosen to be either outcome o1 or o2, respectively. A “certain” o1 prediction was coded as −100, and a “certain” o2 prediction was coded as +100 (the numbers were not shown to the participants). Immediately after the response, an image of the corresponding town sign was presented, informing the participants about the actual place where the crime had happened. The feedback remained on the screen for 1,500 ms, and the next trial started after a 1,000-ms intertrial interval. The participants were instructed to use the feedback in order to learn where the different objects had been found. Each cue configuration (see Table 1) was repeated 12 times (blocks), resulting in a total of 96 trials in Group Absolute and 144 trials in Group Relative. The order of the trials in Stage 1 was randomized within each block.
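The blocked randomization described above can be sketched as follows. This is an illustration rather than the original experiment script: the function name `build_trial_sequence`, the placeholder configuration labels, and the seed are assumptions, and only the counts (8 or 12 configurations, 12 blocks) are taken from the text.

```python
import random


def build_trial_sequence(configurations, n_blocks=12, seed=None):
    """Return a trial list in which every configuration occurs once per
    block, in a freshly shuffled order within each block."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_blocks):
        block = list(configurations)
        rng.shuffle(block)
        trials.extend(block)
    return trials


# Placeholder labels; the actual cue configurations are listed in Table 1.
group_absolute = [f"config_{i}" for i in range(1, 9)]    # 8 configurations
group_relative = [f"config_{i}" for i in range(1, 13)]   # 12 configurations

absolute_trials = build_trial_sequence(group_absolute, seed=1)
relative_trials = build_trial_sequence(group_relative, seed=1)

print(len(absolute_trials), len(relative_trials))  # 96 144
```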

Following Stage 1, the participants read another instruction sheet for Stage 2. They were told that some pieces of evidence would contain traces of DNA that can be attributed to two particular suspects. The learning task in Stage 2 was to find out which pieces of evidence can be associated reliably with one of the two suspects (called No. 02 and No. 07). In each trial, a compound of two cues was presented and the participants had to indicate whether they assumed the object to contain DNA of suspect No. 02 or No. 07. Stage 2 was identical for both groups. Each cue configuration in Stage 2 contained a cue that was predictive of outcome o3 or o4 (again, the two outcomes were randomly chosen to serve as either o3 or o4) and one that was nonpredictive of a particular outcome. The predictive cues had been either predictive (A and B) or nonpredictive (V and W) in Stage 1 (see Table 1). Again, responses were given by clicking on a visual analog scale (ranging from certainly No. 02 to certainly No. 07; to simplify the presentation of results, −100 refers to o3, and +100 to o4 in this article). Immediately after the response was given, feedback was presented on the screen for 1,500 ms, showing either the number “02” or “07” together with a picture of the corresponding face. The next trial started after a 1,000-ms interval. Since all cue configurations were repeated 12 times, Stage 2 contained a total of 96 trials. The order of trials was randomized within each block of Stage 2.

Results

For each participant, discrimination learning in Stage 1 was evaluated by comparing the predictive ratings in trials that were predictive of outcome o1 (trials containing cue A or C) with the predictive ratings in trials that were predictive of o2 (cues B or D). Most participants in both groups demonstrated smooth learning in Stage 1. On average, participants reached a discrimination score (= o2 minus o1 predictions, not including trials that contained only a nonpredictive cue V, W, X, or Y) of M = 108.5 (SD = 52.1) points on the prediction scale in the second half of Stage 1. However, for six participants in Group Absolute, as well as for three participants in Group Relative, the predictive ratings did not differ significantly between o1 and o2 trials (p > .05), and these data were excluded from further analyses of the Stage-1 and Stage-2 data (see Footnote 1).
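The Stage-1 discrimination score (o2 minus o1 predictions) could be computed along the following lines. This is a minimal sketch: the function name `discrimination_score` and the ratings are made up for illustration, and the statistical test behind the exclusion criterion is not specified in the text and is therefore not implemented.

```python
from statistics import mean


def discrimination_score(ratings, outcomes):
    """Mean rating on o2 trials minus mean rating on o1 trials.

    ratings: values between -100 (certainly o1) and +100 (certainly o2);
    outcomes: the scheduled outcome ("o1" or "o2") of each trial.
    Trials with only nonpredictive cues are assumed to be filtered out
    beforehand.
    """
    o1 = [r for r, o in zip(ratings, outcomes) if o == "o1"]
    o2 = [r for r, o in zip(ratings, outcomes) if o == "o2"]
    return mean(o2) - mean(o1)


# Hypothetical ratings from four predictive-cue trials
ratings = [-60, 70, -40, 50]
outcomes = ["o1", "o2", "o1", "o2"]
score = discrimination_score(ratings, outcomes)
print(score)  # 110
```

On this scale, scores can range from −200 to +200, with 0 indicating no discrimination between o1 and o2 trials.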

For the remaining n = 27 and n = 25 participants in Groups Absolute and Relative, respectively, the mean predictions in the second half of Stage 1 are illustrated in Fig. 1. A 2 (Group) × 2 (Outcome) mixed-factors analysis of variance (ANOVA) on the predictive ratings (averaged over Stage 1) revealed a significant main effect of outcome, F(1, 50) = 424.18, p < .001 (with mean predictive ratings of −48.00 and 41.45 for o1 and o2 trials, respectively), but no main effect of group, F(1, 50) < 1, and no interaction, F(1, 50) < 1, indicating that the extents of discrimination learning during Stage 1 did not differ between groups.

Fig. 1 Mean outcome prediction ratings (−100 = o1, +100 = o2) following the different types of cues in the second half of Stage 1 for the two groups in Experiment 1

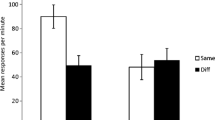

Figure 2 (upper panel) shows the mean predictive ratings for the different cue configurations in Stage 2. In order to evaluate whether the Stage-1 predictiveness of the cues influenced discrimination learning in Stage 2, discrimination scores were computed. To this end, the predictive ratings in trials containing cue B (or V) were subtracted from the predictive ratings in trials containing cue A (or W). In the lower panel of Fig. 2, the scores for discriminations that were based on previously predictive cues (A–B) are contrasted with the scores of discriminations based on previously nonpredictive cues (W–V).
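The per-trial Stage-2 discrimination scores (A–B and W–V) might be derived as in the sketch below. The data layout, the function name `stage2_scores`, the compound labels, and the ratings are hypothetical. Averaging over all trials of a block that contain the respective cue is likewise an assumption.

```python
from statistics import mean


def stage2_scores(trials, minuend_cue, subtrahend_cue):
    """Per-block difference between mean ratings on trials containing
    minuend_cue and mean ratings on trials containing subtrahend_cue.

    trials: list of (block, cues, rating) tuples, with cues given as a
    string such as "AX".
    """
    scores = []
    for block in sorted({b for b, _, _ in trials}):
        plus = [r for b, cues, r in trials if b == block and minuend_cue in cues]
        minus = [r for b, cues, r in trials if b == block and subtrahend_cue in cues]
        scores.append(mean(plus) - mean(minus))
    return scores


# Hypothetical ratings (-100 = o3, +100 = o4) from two blocks
trials = [
    (1, "AX", 10), (1, "BX", -10), (1, "CW", 5), (1, "CV", -5),
    (2, "AX", 60), (2, "BX", -50), (2, "CW", 30), (2, "CV", -20),
]

predictive = stage2_scores(trials, "A", "B")     # previously predictive cues
nonpredictive = stage2_scores(trials, "W", "V")  # previously nonpredictive cues
print(predictive, nonpredictive)  # [20, 110] [10, 50]
```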

Fig. 2 Mean predictive ratings (−100 = o3, +100 = o4; upper panel) and discrimination scores (lower panel) in Stage 2 as a function of the Stage-1 predictiveness of the relevant cues (filled symbols indicate previously predictive cues) in Experiment 1

These Stage-2 discrimination scores were further analyzed using a 12 (trial) × 2 (group) × 2 (Stage-1 predictiveness) mixed-factors ANOVA with repeated measurements on trial and predictiveness. In both groups, we found significant learning in Stage 2, as indicated by a main effect of trial, F(11, 550) = 44.67, p < .001. The absence of a main effect of group, F(1, 50) < 1, indicated no general learning advantage for either of the two groups. There was, however, a significant main effect of predictiveness, F(1, 50) = 5.31, p = .025, indicating that discriminations about previously predictive cues (M = 53.9) were learned better than discriminations about previously nonpredictive cues (M = 47.4). The predictiveness effect was particularly evident in earlier trials of Stage 2, as indicated by a significant Trial × Predictiveness interaction, F(11, 550) = 3.14, p = .001 (see Fig. 2). The Group × Predictiveness interaction, however, was not significant, F(1, 50) = 2.40, p = .13, suggesting that the impacts of Stage-1 predictiveness on Stage-2 learning did not differ between the two groups. There was no Group × Trial interaction, F(11, 550) < 1, and no three-way interaction, F(11, 550) = 1.38, p = .18. Additional ANOVAs revealed that Stage-1 predictiveness had a significant main effect on discrimination scores in Group Absolute, F(1, 26) = 6.14, p = .02, but not in Group Relative, F(1, 24) < 1. However, significant Trial × Predictiveness interactions emerged for both Group Absolute, F(11, 286) = 2.47, p = .01, and Group Relative, F(11, 264) = 2.07, p = .02, indicating that the Stage-1 predictiveness of cues did actually affect the initial rate of discrimination learning in Stage 2 in both groups (i.e., superior learning with previously predictive as compared to previously nonpredictive cues; see Fig. 2).
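The degrees of freedom reported above follow from the sample sizes and factor levels. The sketch below reproduces this arithmetic for a mixed design without sphericity correction (an assumption, since no correction is mentioned). The helper name `anova_dfs` and its parameters are hypothetical.

```python
def anova_dfs(n_participants, n_groups, effect_df, within_df=1):
    """Return (effect df, error df) for an effect in a mixed-factors ANOVA.

    effect_df: numerator degrees of freedom of the effect.
    within_df: product of (levels - 1) over the within-subject factors
               involved in the effect (1 if none are involved).
    Assumes uncorrected degrees of freedom.
    """
    error_df = within_df * (n_participants - n_groups)
    return effect_df, error_df


# Full analysis: 27 + 25 = 52 participants in 2 groups
print(anova_dfs(52, 2, effect_df=11, within_df=11))  # (11, 550): effects involving trial
print(anova_dfs(52, 2, effect_df=1))                 # (1, 50): group, predictiveness

# Separate analyses per group
print(anova_dfs(27, 1, effect_df=11, within_df=11))  # (11, 286): Group Absolute
print(anova_dfs(25, 1, effect_df=11, within_df=11))  # (11, 264): Group Relative
```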

Discussion

Experiment 1 demonstrated that discrimination problems that were based on previously predictive cues were learned more readily than discriminations that were based on previously nonpredictive cues. This kind of learned-predictiveness effect is in line with the assumption that the associability of a cue increases with its predictiveness (Mackintosh, 1975). The fact that Stage-1 predictiveness exerted similar effects on Stage-2 discrimination learning in both groups indicates that, in humans, the associability of good predictors is enhanced with both relative and absolute predictiveness mechanisms. In this respect, Experiment 1 successfully replicated Le Pelley et al.’s (2010) finding that pretraining with individual cues (which should emphasize absolute predictiveness) produces the same positive transfer effects as a pretraining stage that requires compound discrimination (emphasizing relative predictiveness). In contrast, Haselgrove et al. (2010, using an equivalent to the design for our Group Absolute) found that rats learned discrimination problems faster if the relevant cues had been partially reinforced in a pretraining stage (X± and Y±) than if they had been reinforced continuously (A+ and B+). These results indicated that a pretraining stage that was designed according to an absolute-predictiveness principle enhances the associability of poor predictors (in line with Pearce & Hall, 1980). It might be argued, thus, that there is a discrepancy between species concerning the transfer of associability.

Admittedly, as a consequence of our particular design, the participants in Group Relative were trained with twice as many presentations of each cue as were the participants in Group Absolute (i.e., each cue was presented both alone and as part of a compound in Group Relative). The resulting difference in the total duration of Stage 1 (96 trials in Group Absolute vs. 144 trials in Group Relative) could have caused unwanted differences between the two groups, for example, with regard to motivational factors or fatigue at the beginning of Stage 2. Although it is unclear how this might have reduced potential between-group differences in cue associability, a replication with a matched number of cue presentations seemed of value. Therefore, the durations of Stage 1 were matched to 96 trials for the two groups in Experiment 2.

Moreover, the participants in Group Relative (unlike those in Group Absolute) did actually learn to predict the outcomes in all types of trials during Stage 1 (see Fig. 1, right panel). That is, even though the cues V, W, X, and Y were nonpredictive by themselves, participants were able to predict the outcome by taking into account whether the cues were presented in isolation or as a compound. Therefore, the participants in Group Relative could have evaluated the absolute predictive value of the cues presented in a given trial, rather than (or in addition to) their relative predictiveness. The positive transfers observed in Experiment 1 thus might potentially represent effects of absolute predictiveness in both groups. It has been noted that most of the evidence in line with the Mackintosh (1975) model had derived from training phases with compounds, whereas studies in line with the Pearce and Hall (1980) model typically had presented isolated cues during the training (cf. Haselgrove et al., 2010). In Experiment 1, only isolated cues had been presented to Group Absolute in Stage 1, whereas both isolated cues and compound cues had been presented to Group Relative. By using a pretraining stage with only compound cues being presented to both groups in Experiment 2, it could be tested whether the observed positive transfer effects could be generalized to different training procedures (in analogy to Haselgrove et al., 2010, Exp. 2).

Experiment 2

The aim of Experiment 2 was to replicate the positive transfer effects for absolute and relative predictiveness with a slightly different pretraining procedure. Again, half of the cues in Stage 1 were predictive and half were nonpredictive of an outcome. Group Absolute was trained with compounds that were either predictive or nonpredictive of an outcome (i.e., either two predictive or two nonpredictive cues were presented together in the same trials), whereas Group Relative was trained with compounds consisting of cues that differed in predictiveness (cf. Table 2). This procedure was intended to make it more likely that each group would rely on the respective predictiveness principle. In particular, the omission of single-cue presentations was supposed to keep the participants in Group Relative from assessing the absolute predictive validity of individual cues, whereas Group Absolute had to rely on the absolute predictiveness of each compound. For both groups, the test discriminations at Stage 2 were based either on previously predictive or nonpredictive cues.

Method

Participants

Sixty volunteers (34 female, 26 male) participated in Experiment 2 without receiving compensation. Their ages ranged from 19 to 59 years (M = 27.7, SD = 10.9). The participants were assigned randomly to either Group Absolute (n = 30) or Group Relative (n = 30). The individual experimental sessions took about 45 min each.

Apparatus and stimuli

As in Experiment 1, the experimental routines were programmed in Python using PsychoPy (Peirce, 2007). The stimuli and text instructions were presented on a 42-in. widescreen TFT monitor with a resolution of 1,920 × 1,080 pixels. Participants were seated approximately 1.25 m in front of the screen.

The cues were eight images showing everyday objects (a banana, a bow tie, a button, a peg, glasses, a key, a pen, and a watch), which were selected from a colored version of Snodgrass and Vanderwart’s picture database (Rossion & Pourtois, 2004).

Procedure

At the beginning of the experiment, each participant read an instruction paper concerning Stage 1. The participants were asked to imagine that they would bring several talismans when writing exams. Their task was to figure out which talismans would actually help them to pass the exam, and which talismans would provoke failure. In each trial, two cues were presented on the screen for 2,500 ms (representing the talismans brought to the exam). Then the cues disappeared, and the participants had to predict whether they would pass or fail the exam by clicking with the mouse on a “pass” or “fail” button. Subsequently, they were asked to rate how certain they were of their prediction by clicking on a confidence scale ranging from 0 (totally uncertain) to 100 (totally certain). A text message (either “passed” or “failed”) was presented for 3,000 ms as feedback immediately after the confidence rating was given. The next trial started after an intertrial interval of 1,000 ms. Again, the participants were instructed to use the feedback to learn the cue–outcome relations. In order to have equal numbers of trials (96) in both groups, the four cue compounds in Group Absolute were repeated 24 times each, and the eight compounds in Group Relative were repeated 12 times each (see Table 2). The order of the trials was randomized in blocks.

After Stage 1, the participants read another instruction sheet for Stage 2. They were told that they would now be examining the usefulness of the talismans in a different context. That is, their task was to figure out which talisman would help their favorite soccer team to win a game, and which would make them lose. In each trial, a compound of two cues was presented for 2,500 ms, and the participants had to indicate subsequently whether they assumed that the soccer team would win or lose, by clicking on a respective button. Next, they were asked to give confidence ratings, using the same scale as in Stage 1. After the confidence ratings, a text message (“game won” or “game lost”) was presented for 3,000 ms as feedback, and the next trial started after an intertrial interval of 1,000 ms. Stage 2 was identical for both groups. Each cue configuration in Stage 2 contained a cue that was predictive of either outcome o3 or o4 (for half of the participants, o3 was “game won” and o4 was “game lost,” and for the other half of participants, the assignments were the other way around) and one that was nonpredictive of an outcome (see Table 2). Each cue configuration was repeated 12 times, resulting in a total of 96 trials. Trials were presented in a random order.

Results

Confidence-weighted prediction responses were calculated as the product of the outcome predictions (o1 and o3 were coded as −1; o2 and o4 were coded as +1) and the confidence ratings (0–100).
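As a minimal sketch of this scoring rule, the following function multiplies the outcome code by the confidence rating. The function name and the dictionary are illustrative only; the coding (−1 for o1 and o3, +1 for o2 and o4) and the 0–100 confidence range are taken from the text.

```python
# Outcome coding as described in the text.
OUTCOME_CODES = {"o1": -1, "o2": +1, "o3": -1, "o4": +1}


def confidence_weighted_response(predicted_outcome, confidence):
    """Return the signed, confidence-weighted prediction (range -100 to +100)."""
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must lie between 0 and 100")
    return OUTCOME_CODES[predicted_outcome] * confidence


# A confident o1 prediction yields a strongly negative score,
# a moderately confident o4 prediction a moderately positive one.
print(confidence_weighted_response("o1", 80))
print(confidence_weighted_response("o4", 55))
```

A response given with zero confidence contributes a score of 0 regardless of the predicted outcome.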

The same criterion as in Experiment 1 was used to assess the individual learning performance in Stage 1. The confidence-weighted prediction responses in the second half of Stage 1 did not differ significantly between trials of opposite outcomes for six participants in Group Absolute, as well as for four participants in Group Relative. The remaining participants’ predictions (n = 25 in Group Absolute, and n = 26 in Group Relative) demonstrated smooth Stage-1 learning in both groups. The average confidence-weighted predictions in the second half of Stage 1 are illustrated in Fig. 3. A 2 (Group) × 2 (Outcome) mixed-factors ANOVA on the confidence-weighted predictions (averaged over Stage 1) revealed a significant main effect of outcome, F(1, 49) = 348.34, p < .001 (with Ms = −55.86 and 61.12 for the predictions in o1 and o2 trials, respectively), but no main effect of group, F(1, 49) < 1, and no interaction, F(1, 49) = 2.35, p = .13, indicating that the asymptotes of learning during Stage 1 did not differ between groups.

Mean confidence-weighted predictions (= predictive response [–1 = o1 or +1 = o2] times confidence judgment [0 to 100]) following different types of compound cues in the second half of Stage 1 for the two groups in Experiment 2

The confidence-weighted prediction responses in Stage 2 are illustrated in Fig. 4 (upper panel). In order to examine the effect of Stage-1 predictiveness on the rate of learning in Stage 2, discrimination scores were computed by subtracting the prediction responses in AX (and CV) trials from those in BX (and CW) trials (see the lower panel of Fig. 4). A 2 (Group) × 2 (Stage-1 Predictiveness) × 12 (Trial) mixed-factors ANOVA on the confidence-weighted predictions in Stage 2 (with repeated measurements on the latter two factors) revealed a significant main effect of trial, F(11, 539) = 62.87, p < .001, indicating that discrimination performance increased across the learning stage. There was also a significant main effect of group, F(1, 49) = 9.49, p = .003, indicating that the overall discrimination scores were slightly higher in Group Relative (M = .75) than in Group Absolute (M = .59). The crucial main effect of predictiveness, F(1, 49) = 8.67, p = .005, further demonstrated that discriminations were learned faster if they were based on previously predictive cues (M = .73) rather than on previously nonpredictive cues (M = .61). Moreover, the absence of a Predictiveness × Group interaction, F(1, 49) < 1, indicated that the effects of Stage-1 predictiveness on Stage-2 learning did not differ between the two groups. Again, a significant Predictiveness × Trial interaction, F(11, 539) = 3.60, p < .001, suggested that the learned-predictiveness effect was more evident in the earlier trials of Stage 2. This was particularly true for Group Relative, as indicated by a significant three-way interaction, F(11, 539) = 2.69, p = .002 (see Fig. 4). The Group × Trial interaction was not significant, F(11, 539) = 1.40, p = .17.
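The subtraction that defines the discrimination scores can be sketched as follows. The function name and the example values are hypothetical and do not reproduce any data from the study. The sketch assumes that the responses to the two compounds of a pair are aligned by repetition (first AX trial with first BX trial, and so on) and leaves the result in raw rating units without any rescaling.

```python
def discrimination_scores(responses_subtracted, responses_reference):
    """Per-repetition difference between two compounds of a discrimination.

    For the AX/BX pair this is BX minus AX, following the description in
    the text; the same call applies to the CV/CW pair.
    """
    if len(responses_subtracted) != len(responses_reference):
        raise ValueError("both compounds need the same number of repetitions")
    return [ref - sub for sub, ref in zip(responses_subtracted, responses_reference)]


# Invented confidence-weighted responses for three repetitions.
ax = [10, -20, -60]
bx = [5, 40, 80]
print(discrimination_scores(ax, bx))  # [-5, 60, 140]
```

A score near zero means the two compounds are not yet discriminated; scores grow as the predictions for the two compounds diverge toward opposite outcomes.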

Mean confidence-weighted predictions (= outcome prediction [–1 = o1 or +1 = o2] times confidence judgment [0 to 100]; upper panel) and discrimination scores (lower panel) in Stage 2 as a function of the Stage-1 predictiveness of the relevant cues in Experiment 2 (filled symbols indicate previously predictive cues)

Additional within-group tests revealed a marginally significant main effect of predictiveness, F(1, 24) = 3.94, p < .06, but no Predictiveness × Trial interaction for Group Absolute, F(11, 264) < 1. In Group Relative, there was a significant main effect of predictiveness, F(1, 25) = 5.28, p = .03, as well as a significant Predictiveness × Trial interaction, F(11, 275) = 5.95, p < .001. These results indicated that Stage-1 predictiveness affected discrimination learning in both groups. However, the learned-predictiveness effect seems to persist throughout Stage 2 in Group Absolute, whereas it diminishes in the second half of Stage 2 for Group Relative (see Fig. 4).

Discussion

Experiment 2 successfully replicated the positive transfer effects of cue predictiveness with a different training stage using compounds. As in Experiment 1, the two groups of participants were trained with a procedure that emphasized either the absolute or the relative predictiveness of cues. Group Absolute could only learn the absolute predictive validity of cues (or compounds), whereas Group Relative was made to attend to the relative predictive validity of simultaneously presented cues. Both types of training, however, enhanced the associability of good relative to poor predictors of outcomes, as reflected in faster learning rates for discriminations that were based on the respective cues in Stage 2. These results support the assumption that both absolute and relative predictiveness exert positive transfer effects in human contingency learning (Le Pelley et al., 2010). Unlike in Experiment 1, the numbers of Stage-1 trials were identical for the two groups, thus making it less likely that major motivational differences could have existed between the groups at the beginning of Stage 2. Moreover, a pretraining stage with entire compounds that differed in absolute predictiveness was shown to produce the same positive transfer effects as a pretraining stage with isolated cues (see Exp. 1 and Le Pelley et al., 2010).

General discussion

Two experiments investigated the influence of learned predictiveness on the rate of learning in a second learning stage when the first learning stage had set priority to either absolute or relative predictiveness principles. Both experiments revealed that test discriminations were acquired faster if the relevant cues had also been predictive in Stage 1 than if they had been nonpredictive in Stage 1, irrespective of which type of predictiveness principle had been emphasized during Stage 1. Consistent with Le Pelley et al.’s (2010) results for positive transfer effects with single-cue training, this finding contradicts the assumption (Le Pelley, 2004) that a pretraining stage that emphasizes the absolute predictive validity of cues (in contrast to their relative predictiveness) would enhance the associability of nonpredictive more than of predictive cues. In line with the Mackintosh (1975) model, these data imply that there is a general processing advantage for reliable predictors of an outcome in human contingency learning. More precisely, the amount of attention paid to a cue seems to be enhanced by (a) the extent to which the cue is a better predictor than other cues (relative predictiveness), as well as by (b) the extent to which the cue is actually followed by a predictable outcome (absolute predictiveness). Technically, according to the Mackintosh (1975) model (as well as that of Kruschke, 2001), the associability of a cue is determined by the extent to which the cue is a better predictor than all other cues presented on the same trial. Thus, an additional formal mechanism is required to account for the updating of associability as a function of absolute predictiveness. Specifically, the associability of a cue is supposed to increase after the cue has been presented in trials with correct outcome predictions, and to decrease after incorrect outcome predictions (see Beesley & Le Pelley, 2010, p. 127, for a formal means to determine associability with differences in absolute predictiveness).
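The two updating principles described above can be contrasted in a rough sketch. The first function follows the qualitative Mackintosh (1975) rule, in which the associability of a cue rises when its own prediction error is smaller than that of the other cues on the trial and falls otherwise. The second function simply encodes the verbal description of the absolute-predictiveness mechanism; it is not the formal rule given by Beesley and Le Pelley (2010). The fixed step size, the bounds, and all names are assumptions made for illustration.

```python
def clip(value, low=0.05, high=1.0):
    """Keep associability within assumed bounds."""
    return max(low, min(high, value))


def update_relative(alphas, strengths, outcome, step=0.05):
    """Relative-predictiveness update for the cues present on one trial.

    alphas, strengths: dicts mapping cue name -> associability / associative
    strength. A cue gains associability if it predicts the outcome better
    than the remaining cues combined, and loses associability otherwise.
    """
    updated = {}
    for cue, alpha in alphas.items():
        others = [c for c in alphas if c != cue]
        if not others:  # a cue presented alone has no competitor
            updated[cue] = alpha
            continue
        own_error = abs(outcome - strengths[cue])
        other_error = abs(outcome - sum(strengths[c] for c in others))
        change = step if own_error < other_error else -step
        updated[cue] = clip(alpha + change)
    return updated


def update_absolute(alpha, prediction_correct, step=0.05):
    """Absolute-predictiveness update: up after a correct outcome prediction,
    down after an incorrect one (assumed fixed step)."""
    return clip(alpha + (step if prediction_correct else -step))


# Cue A predicts the outcome well, cue X poorly: A gains, X loses.
print(update_relative({"A": 0.5, "X": 0.5}, {"A": 0.8, "X": 0.1}, outcome=1.0))
```

The contrast is that `update_relative` needs a competing cue on the same trial, whereas `update_absolute` depends only on whether the outcome was predicted correctly, which is why it can also apply to single-cue or whole-compound training.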

It is particularly interesting to see that the associability of cues that had been followed by an unpredictable outcome in Stage 1 was lower (as indicated by a retardation of Stage-2 learning), even if the prior learning stage was designed to direct attention to absolute predictive validity. This finding was observed regardless of whether the absolute predictiveness of single cues (Exp. 1; replicating Le Pelley et al., 2010) or compound cues (Exp. 2) had been varied during Stage 1. Thus, the results of both experiments seem to be inconsistent with the theory proposed by Pearce and Hall (1980), and with what has been reported in previous animal-learning studies. Using an analogous experimental design with rats, Haselgrove et al. (2010, Exps. 1 and 2), for instance, found higher learning rates for Stage-2 discriminations that were based on partially reinforced cues from Stage 1 (i.e., AY/AX discriminations) than for discriminations that were based on continuously reinforced cues (i.e., AY/BY discriminations). These animal-learning results actually suggest that there is a negative transfer of predictiveness with regard to associability if the prior learning stage has set priority to the absolute predictive validity of cues. On the other hand, with a different learning schedule at Stage 1, implemented according to a relative-predictiveness principle, Haselgrove et al. (2010, Exps. 3 and 4) did observe positive transfer effects of predictiveness on the same test discrimination problems. In line with hybrid models of stimulus processing (e.g., Le Pelley, 2004), this dissociation suggests that the transfer effect of predictiveness in animal learning does actually depend on the procedural characteristics of the pretraining phase.

Taken together, the empirical evidence at hand suggests that both relative and absolute predictiveness produce a positive transfer of associability in humans, whereas the transfer of associability may differ between situations that set priority to absolute and relative predictiveness in nonhuman animals (Haselgrove et al., 2010). Given this discrepancy in transfer effects between human- and animal-learning studies, one might speculate that the mechanisms of stimulus processing differ between species (see Le Pelley et al., 2010). It is possible, of course, that factors other than the species per se (e.g., procedural differences) may account for this discrepancy. For instance, in animal studies, predictiveness is often manipulated in a between-subjects design (e.g., Wilson et al., 1992), whereas predictiveness has typically been manipulated within human participants. Manipulating absolute predictiveness in a within-subjects design could enable participants to compare the predictiveness of cues between different trials. In contrast to a between-subjects design, participants might be able to evaluate the relative predictiveness of cues rather than, or in addition to, their absolute predictiveness. However, it is rather unclear how such between-trial comparisons of predictiveness would affect associability according to the Mackintosh (1975) model (see Le Pelley et al., 2010). Additional animal research using a within-subjects manipulation of predictiveness might help to clarify whether such between-trial comparisons of predictiveness can account for the diverging results. On the other hand, the use of a between-subjects design in human contingency learning could be considered, but this would entail additional problems concerning the interpretation of results (e.g., due to motivational confounds; see Le Pelley et al., 2010).

Even though the present results support the idea that there is always positive transfer of predictiveness with regard to associability in human contingency learning (Le Pelley et al., 2010), some evidence still points toward negative transfer effects in human contingency learning (Griffiths et al., 2011). Specifically, Griffiths et al. found an increase in learning rate when they presented information that enhanced the uncertainty that an outcome would occur (i.e., a few additional unreinforced cue presentations, following an initial learning stage with continuous reinforcement), as compared to when they did not present uncertainty information. Identifying the exact reasons for this discrepancy is difficult, since Griffiths et al. used an experimental design that differed from that of the present study (and of others that have reported positive transfer effects; e.g., Le Pelley et al., 2010) in several ways. For instance, uncertainty had been learned throughout the training stage in the present experiments, whereas Griffiths et al. suddenly induced uncertainty after the training stage. This may have provoked a rapid orienting response that could have temporarily elevated the associability of the respective cues, as compared to the remaining more reliable cues. Thus, it may well be that an abrupt change in cue predictiveness will cause distinct short-term transfer effects on associability—that is, with reduced predictiveness causing an increase in associability (negative transfer). These effects may differ qualitatively from the gradually learned predictiveness effects (exerting positive transfer) that were found in the present study. Further research will certainly be required to identify the exact conditions that elicit positive and negative transfer effects in human contingency learning.

Nevertheless, the results of the present experiments add further support to the assumption that there is always a positive transfer of predictiveness (as acquired throughout a previous learning stage) with regard to associability in human contingency learning, regardless of whether the design of the pretraining stage directs attention to the relative or absolute predictive validity of the cues. In contrast to some evidence from animal learning (Haselgrove et al., 2010), these results are at odds with certain hybrid-model suggestions (e.g., Le Pelley, 2004) that have assumed opposite transfer effects for absolute and relative predictiveness. The present data fit very well with previous findings in human contingency learning showing that discriminations based on previously predictive cues are acquired faster than discriminations based on previously nonpredictive cues, (a) when predictiveness has been varied between the two elements of a compound (relative predictiveness; e.g., Le Pelley & McLaren, 2003), as well as (b) when predictiveness has been varied between different trials (absolute predictiveness; Le Pelley et al., 2010).

Notes

Moreover, the difference between the predictions in o1 and o2 trials was higher than 10 points on the rating scale in the second half of Stage 1 for all of the remaining participants (M = 57.7, SD = 23.2). A stricter criterion for successful learning (i.e., minimum discrimination scores of 20 points) did not change the overall pattern of results.

References

Austin, A. J., & Duka, T. (2010). Mechanisms of attention for appetitive and aversive outcomes in Pavlovian conditioning. Behavioural Brain Research, 213, 19–26.

Beesley, T., & Le Pelley, M. E. (2010). The effect of predictive history on the learning of sub-sequence contingencies. Quarterly Journal of Experimental Psychology, 63, 108–135.

Bonardi, C. H., Graham, S., Hall, G., & Mitchell, C. J. (2005). Acquired distinctiveness and equivalence in human discrimination learning: Evidence for an attentional process. Psychonomic Bulletin & Review, 12, 88–92. doi:10.3758/BF03196351

Dopson, J. C., Esber, G. R., & Pearce, J. M. (2010). Differences in the associability of relevant and irrelevant stimuli. Journal of Experimental Psychology: Animal Behavior Processes, 36, 258–267. doi:10.1037/a0016588

Esber, G. R., & Haselgrove, M. (2011). Reconciling the influence of predictiveness and uncertainty on stimulus salience: A model of attention in associative learning. Proceedings of the Royal Society B, 278, 2553–2561.

George, D. N., & Pearce, J. M. (2012). A configural theory of attention and associative learning. Learning & Behavior, 40, 241–254.

Glautier, S., & Shih, S.-L. (2014). Relative prediction error and protection from attentional blink in human associative learning. Quarterly Journal of Experimental Psychology. doi:10.1080/17470218.2014.943250

Griffiths, O., Johnson, A. M., & Mitchell, C. J. (2011). Negative transfer in human associative learning. Psychological Science, 22, 1198–1204.

Hall, G., & Pearce, J. M. (1979). Latent inhibition of a CS during CS–US pairings. Journal of Experimental Psychology: Animal Behavior Processes, 5, 31–42. doi:10.1037/0097-7403.5.1.31

Haselgrove, M., Esber, G. R., Pearce, J. M., & Jones, P. M. (2010). Two kinds of attention in Pavlovian conditioning: Evidence for a hybrid model of learning. Journal of Experimental Psychology: Animal Behavior Processes, 36, 456–470.

Hogarth, L., Dickinson, A., Austin, A. J., Brown, C., & Duka, T. (2008). Attention and expectation in human predictive learning: The role of uncertainty. Quarterly Journal of Experimental Psychology, 61, 1658–1668.

Kaye, H., & Pearce, J. M. (1984). The strength of the orienting response during Pavlovian conditioning. Journal of Experimental Psychology: Animal Behavior Processes, 10, 90–109.

Kruschke, J. K. (2001). Towards a unified model of attention in associative learning. Journal of Mathematical Psychology, 45, 812–863.

Le Pelley, M. E. (2004). The role of associative history in models of associative learning: A selective review and a hybrid model. Quarterly Journal of Experimental Psychology, 57B, 193–243. doi:10.1080/02724990344000141

Le Pelley, M. E., Beesley, T., & Griffiths, O. (2011). Overt attention and predictiveness in human contingency learning. Journal of Experimental Psychology: Animal Behavior Processes, 37, 220–229.

Le Pelley, M. E., Haselgrove, M., & Esber, G. R. (2012). Modeling attention in associative learning: Two processes or one? Learning & Behavior, 40, 292–304.

Le Pelley, M. E., & McLaren, I. (2003). Learned associability and associative change in human causal learning. Quarterly Journal of Experimental Psychology, 56B, 68–79.

Le Pelley, M. E., Turnbull, M. N., Reimers, S. J., & Knipe, R. L. (2010). Learned predictiveness effects following single-cue training in humans. Learning & Behavior, 38, 126–144.

Mackintosh, N. J. (1975). A theory of attention: Variations in the associability of stimuli with reinforcement. Psychological Review, 82, 276–298. doi:10.1037/h0076778

Minear, M., & Park, D. C. (2004). A lifespan database of adult facial stimuli. Behavior Research Methods, Instruments, & Computers, 36, 630–633. doi:10.3758/BF03206543

Mitchell, C. J., Griffiths, O., Seetoo, J., & Lovibond, P. F. (2012). Attentional mechanisms in learned predictiveness. Journal of Experimental Psychology: Animal Behavior Processes, 38, 191–202.

Pearce, J. M., & Hall, G. (1980). A model of Pavlovian learning: Variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychological Review, 87, 532–552. doi:10.1037/0033-295X.87.6.532

Pearce, J. M., Kaye, H., & Hall, G. (1982). Predictive accuracy and stimulus associability: Development of a model for Pavlovian conditioning. In M. L. Commons, R. J. Herrnstein, & A. R. Wagner (Eds.), Quantitative analyses of behavior (Vol. 3, pp. 241–256). Cambridge, MA: Ballinger.

Pearce, J. M., & Mackintosh, N. J. (2010). Two theories of attention: A review and a possible integration. In C. J. Mitchell & M. E. Le Pelley (Eds.), Attention and associative learning: From brain to behaviour (pp. 11–39). Oxford, UK: Oxford University Press.

Peirce, J. W. (2007). PsychoPy—Psychophysics software in Python. Journal of Neuroscience Methods, 162, 8–13. doi:10.1016/j.jneumeth.2006.11.017

Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning II: Current research and theory (pp. 64–99). New York, NY: Appleton-Century-Crofts.

Rossion, B., & Pourtois, G. (2004). Revisiting Snodgrass and Vanderwart’s object set: The role of surface detail in basic-level object recognition. Perception, 33, 217–236. doi:10.1068/p5117

Swartzentruber, D., & Bouton, M. E. (1986). Contextual control of negative transfer produced by prior CS-US pairings. Learning and Motivation, 17, 366–385.

Uengoer, M., & Lachnit, H. (2012). Modulation of attention in discrimination learning: The roles of stimulus relevance and stimulus–outcome correlation. Learning & Behavior, 40, 117–127.

Wills, A. J., Lavric, A., Croft, G. S., & Hodgson, T. L. (2007). Predictive learning, prediction errors, and attention: Evidence from event-related potentials and eye-tracking. Journal of Cognitive Neuroscience, 19, 843–854.

Wilson, P. N., Boumphrey, P., & Pearce, J. (1992). Restoration of the orienting response to a light by a change in its predictive accuracy. Quarterly Journal of Experimental Psychology, 44B, 17–36. doi:10.1080/02724999208250600

Author Note

I am indebted to my undergraduate student assistants Alisa Bläser, Ulrike Haßfurter, Vanessa Stark, Lea Mara Vorreiter, and Sebastian Warda for their help with recruiting the participants and collecting the data.

About this article

Cite this article

Kattner, F. Transfer of absolute and relative predictiveness in human contingency learning. Learn Behav 43, 32–43 (2015). https://doi.org/10.3758/s13420-014-0159-5

DOI: https://doi.org/10.3758/s13420-014-0159-5