Abstract

Is color a critical feature in emotional content extraction and involuntary attentional orienting toward affective stimuli? Here we used briefly presented emotional distractors to investigate the extent to which color information can influence the time course of attentional bias in early visual cortex. While participants performed a demanding visual foreground task, complex unpleasant and neutral background images were displayed in color or grayscale format for a short period of 133 ms and were immediately masked. Such a short presentation poses a challenge for visual processing. In the visual detection task, participants attended to flickering squares that elicited the steady-state visual evoked potential (SSVEP), allowing us to analyze the temporal dynamics of the competition for processing resources in early visual cortex. Concurrently, we measured the visual event-related potentials (ERPs) evoked by the unpleasant and neutral background scenes. The results showed (a) that the distraction effect was greater with color than with grayscale images and (b) that it lasted longer with colored unpleasant distractor images. Furthermore, classical and mass-univariate ERP analyses indicated that the enhancement of early negativities (N1–EPN) for emotional relative to neutral scenes was more pronounced when the scenes were presented in color than when they were presented in grayscale. Consistent with the neural data, unpleasant scenes were rated as more emotionally negative and received slightly higher arousal ratings when they were shown in color than when they were presented in grayscale. Taken together, these findings provide evidence for the modulatory role of picture color on a cascade of coordinated perceptual processes: by facilitating the higher-level extraction of emotional content, color influences the duration of the attentional bias to briefly presented affective scenes in lower-tier visual areas.


One of the outstanding questions in the field of attention and affective neuroscience concerns the search for visual features that constitute the “emotionality” of a visual scene and facilitate involuntary attentional orienting toward emotionally significant contents. In this regard, color as a global visual feature might be seen as one of the crucial factors in emotional perception, facilitating scene segregation and content extraction (Gegenfurtner & Rieger, 2000; Hansen & Gegenfurtner, 2009). In the context of affective processing, picture color may influence subjective feelings of pleasantness/unpleasantness and enhance the emotional intensity conveyed by a visual scene (Kaya & Epps, 2004; Suk & Irtel, 2010). Critically, color, among other perceptual stimulus characteristics (e.g., luminance, contrast), contributes to sensory-driven or “bottom-up” stimulus saliency, and thus can involuntarily attract visual attention toward relevant portions of a scene (Itti & Koch, 2000; Kuniecki, Pilarczyk, & Wichary, 2015). Similarly, preferential attentional orienting and efficient selection of sensory information for further processing can also be determined by the emotional or motivational relevance of the visual stimulus (for a review, see Carretié, 2014; Pourtois, Schettino, & Vuilleumier, 2013). However, the extent to which color information contributes to the involuntary capture of attention by emotionally charged visual scenes remains a matter of debate.

Previous event-related potential (ERP) research has consistently identified two electrophysiological markers sensitive to attentional orienting toward affectively laden visual material: the N1 and early posterior negativity (EPN) components, on the one hand, and the late positive potential (LPP), on the other hand (Sabatinelli, Keil, Frank, & Lang, 2013; Schupp et al., 2000; Schupp, Junghöfer, Weike, & Hamm, 2004). Viewing emotionally significant as compared to neutral images is associated with amplitude differences that begin around 120 ms and peak at a latency of 140–160 ms (N1), followed by a negative EPN deflection developing over occipito-temporal electrodes ~200–300 ms after picture onset (Junghöfer, Bradley, Elbert, & Lang, 2001; Schupp, Flaisch, Stockburger, & Junghöfer, 2006; Wiens & Syrjänen, 2013). These early negativities (Weinberg & Hajcak, 2010), or the N1–EPN complex, as we have previously referred to these two components (Bekhtereva, Craddock, & Müller, 2015; Bekhtereva & Müller, 2015; Schönwald & Müller, 2014), are seen as a neural signature of affective content extraction at early stages of processing, likely reflecting attentional orienting toward emotional material. By contrast, the LPP, a centro-parietal component occurring ~300–500 ms poststimulus, signifies the allocation of attentional resources for a more detailed evaluation of emotional content at a later stage of visual processing (Sabatinelli et al., 2013; Schupp et al., 2000). 
Despite the close link between attentional orienting toward emotionally significant stimuli and perceptually salient stimulus properties (Bradley, Hamby, Löw, & Lang, 2007; De Cesarei & Codispoti, 2011; Delplanque, N’diaye, Scherer, & Grandjean, 2007; Miskovic et al., 2015; Schettino, Keil, Porcu, & Müller, 2016), there is mixed evidence regarding whether picture color influences affective processing per se (Cano, Class, & Polich, 2009; Codispoti, De Cesarei, & Ferrari, 2011; Weymar, Löw, Melzig, & Hamm, 2009) and, if so, at which stage of visual processing its influence on affective content extraction is most pronounced. Although two previous studies by Weymar et al. (2009) and Codispoti et al. (2011) found no evidence that color influences the heightened visual cortical activity during affective picture viewing, Cano et al. (2009) reported emotional ERP modulations late in the processing stream (~300–500 ms) for color but not for grayscale pictures. The latter finding sparked a discussion on the contribution of color to the affective modulation of the LPP, suggesting a critical impact of image color on higher-order affective processing.

Previous findings with respect to the influence of picture color on affective processing may have been incomplete for the following reasons. First, confounding effects may have been produced by uncontrolled perceptual stimulus characteristics. Luminance (Miskovic et al., 2015; Schettino et al., 2016), contrast (Kauffmann, Chauvin, Guyader, & Peyrin, 2015), and the spatial frequency composition of a visual image (De Cesarei & Codispoti, 2011; Delplanque et al., 2007) have recently been reported to modulate behavioral and electrophysiological markers of emotional perception, and these properties were not fully controlled for in those studies. Second, the impact of image color on emotional processing has almost never been studied under visually demanding conditions, for example, with very brief image exposure durations. Neutral and affective images were often shown with long presentation times (1–6 s), and the short presentation (24 ms) used in one study by Codispoti et al. (2011) was not followed by a perceptual mask, thereby inevitably introducing confounding effects of visual persistence, that is, continued visual processing after stimulus offset (Codispoti et al., 2011; Codispoti, Mazzetti, & Bradley, 2009). Hence, it is not clear whether image color has an impact on emotional content processing under perceptually challenging conditions, when only a glimpse of a visual scene is available for perceptual processing: that is, when visual information is presented only briefly and is subsequently masked to prevent further elaborate processing. 
When only the gist of a visual scene can be recovered, color information may facilitate image content identification and modulate attentional orienting toward affective visual cues early in the processing stream, since color, as a global stimulus property, has been shown to be extracted at early stages of natural scene processing (Gegenfurtner, 2003; Goffaux et al., 2005) and to inform scene recognition of briefly shown images (Schyns & Oliva, 1994).

In recent years, we have run a number of studies investigating the time course of attentional shifts toward distracting, task-irrelevant emotional scenes in early visual cortex while subjects perform a demanding foreground attentional task (Bekhtereva et al., 2015; Hindi Attar, Andersen, & Müller, 2010; Hindi Attar & Müller, 2012; Müller, Andersen, & Hindi Attar, 2011; Müller, Andersen, & Keil, 2008; Schönwald & Müller, 2014). This allows us to examine to what extent, and how quickly, attention can be captured by visual cues conveying emotionally significant information. In our distraction paradigm, participants attend to randomly flickering squares while neutral or emotional images from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2008) unpredictably appear in the background. Participants are instructed to detect and respond to periods of coherent motion of the flickering squares while disregarding the background distractors. The flickering squares elicit a steady-state visual evoked potential (SSVEP), a continuous oscillatory neural response that is generated mainly in early visual areas (Di Russo et al., 2007; Müller et al., 2006) at the same temporal frequency as the driving flickering stimulus (Norcia, Appelbaum, Ales, Cottereau, & Rossion, 2015; Regan, 1989; Vialatte, Maurice, Dauwels, & Cichocki, 2010). Because the SSVEP continuously indexes neural activity related to processing of the flickering stimulus, and its amplitude has consistently been shown to increase when a stimulus is attended as compared to when it is ignored, the SSVEP is a powerful tool for directly measuring the allocation of attentional resources over time and, thus, the neural time course of visual resource competition between attention and emotional stimuli (Hindi Attar et al., 2010; Müller et al., 2011).

In our earlier studies, regardless of whether distracting IAPS images were shown for several seconds or as briefly as 133 ms in our distraction paradigm, we consistently found a significant decrease in SSVEP amplitude for emotional relative to neutral background scenes between ~400 and 1,000 ms after the onset of an IAPS picture (Hindi Attar et al., 2010; Müller et al., 2011; Schönwald & Müller, 2014). This reduction in SSVEP amplitude signified a greater withdrawal of visual processing resources from the flickering foreground task toward emotional as opposed to neutral content. Of particular relevance is our recent experiment with brief (i.e., 133 ms) presentations of neutral or emotional IAPS images (Müller et al., 2011). Such a short presentation allows for only one fixation but is nonetheless sufficient for emotional content extraction (Peyk, Schupp, Keil, Elbert, & Junghöfer, 2009; Smith, Löw, Bradley, & Lang, 2006) without further elaborative processing (Thorpe, Fize, & Marlot, 1996), and our results suggested that such a brief presentation triggers an attentional bias toward affective images that is not interrupted by masking the image.

In the present study, we used that design to investigate to what extent color plays a crucial role in emotional content extraction. In contrast to the previous study, which faded the IAPS images in to avoid an onset ERP in response to the background images, here we presented them with a direct onset after the phase-scrambled version of the image, so that the ERP could be elicited and analyzed concurrently with the SSVEP elicited by the foreground task. From a methodological viewpoint, brief presentation and masking accomplish two necessary goals. By masking a briefly shown emotional image, one can more accurately capture its immediate impact on affective picture processing, with less confounding by the extended attentional engagement inherent to long exposure times (Larson, Ruffalo, Nietert, & Davidson, 2005), while controlling the time available for processing the visual stimulus and reducing the effect of visual persistence (Codispoti et al., 2009; Keysers & Perrett, 2002). A major methodological advantage of the present study is that it concurrently measured the SSVEP elicited by the flickering squares and the visual ERPs in response to the image scenes, such as the N1–EPN complex and the LPP. By simultaneously assessing both neural measures, one can delineate the impact of the color of distracting emotional scenes on ongoing early visual processing as well as on correlates of higher-order affective content extraction (the N1–EPN or LPP components as neural indices of affective stimulus processing). If color plays a crucial role in emotional content extraction, we expected the distraction effect on SSVEP amplitude to be greater for color than for grayscale images. In addition, we can infer at which stage of visual processing (i.e., early, in the time range of the N1–EPN, or late, in the latency range of the LPP) color may uniquely contribute to the higher-order process of emotional content encoding in our distraction paradigm. 
If color plays a crucial role in early emotional content extraction, we expected a greater N1–EPN effect (i.e., a greater difference between neutral and emotional images) for colored background images.

Since emotional and neutral image categories may differ on a variety of low-level physical properties, we minimized such differences by matching the means and standard deviations of the luminance distributions between the two stimulus sets and controlled for potential spatial frequency confounds across experimental stimuli (see Stimuli section). Finally, we complemented classical parametric analysis of the ERP components (Keil et al., 2014) with nonparametric cluster-based statistics (Groppe, Urbach, & Kutas, 2011), in order to more accurately pinpoint the time range when the impact of picture color on the modulation of the ERP response to affectively laden scenes might be most pronounced.

Materials and method

Participants

Twenty-seven participants (18 female, 9 male) with a mean age of 25 years (standard deviation [SD] = 5.12) and normal or corrected-to-normal visual acuity participated in the experiment. Six participants were excluded, leaving 21 participants in the final sample: five did not elicit a reliable SSVEP response, and the data of one participant could not be properly saved due to technical problems. All participants received class credits or monetary compensation for their participation (€6 per hour) and gave written informed consent prior to experimental recordings. The experiment was conducted in compliance with the Code of Ethics of the World Medical Association (Declaration of Helsinki) and the guidelines of the local ethics committee (University of Leipzig) for electroencephalography (EEG) studies.

Stimuli

Task-relevant stimuli consisted of an array of 100 randomly distributed moving yellow squares (each 0.6° × 0.6° of visual angle), superimposed upon a neutral or unpleasant scene presented in grayscale or color. As a baseline measure and for masking, we used scrambled versions of these images. Images were scrambled with a Fourier transform in a three-step process: (a) the amplitude and phase spectra were obtained for each image; (b) the original phase spectrum of each picture was replaced with randomized phase components while the original amplitude spectrum was preserved; (c) each scrambled image was rebuilt with an inverse Fourier transform. This procedure produced images with the same low-level physical properties (spectral energy, luminance) as their intact originals, but with all semantic information disrupted.
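The three-step scrambling procedure can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation (their image processing was done in MATLAB). The sketch takes the random phases from the Fourier transform of a white-noise image, which keeps the reconstruction real-valued. It also assumes that color images are scrambled with the same random phase in every RGB channel. The function name `phase_scramble` is hypothetical.

```python
import numpy as np

def phase_scramble(img, seed=None):
    """Fourier phase-scramble a grayscale (H x W) or RGB (H x W x 3) image.

    Keeps each channel's amplitude spectrum and replaces its phase spectrum
    with the phase spectrum of a white-noise image. Because that noise phase is
    conjugate-symmetric, the inverse transform is real up to rounding error.
    Assumption: all color channels share the same random phase.
    """
    rng = np.random.default_rng(seed)
    img = np.asarray(img, dtype=float)
    noise_phase = np.angle(np.fft.fft2(rng.random(img.shape[:2])))
    channels = img[..., np.newaxis] if img.ndim == 2 else img
    out = np.empty_like(channels)
    for c in range(channels.shape[-1]):
        amplitude = np.abs(np.fft.fft2(channels[..., c]))   # step (a): amplitude spectrum
        spectrum = amplitude * np.exp(1j * noise_phase)     # step (b): random phase, same amplitude
        out[..., c] = np.real(np.fft.ifft2(spectrum))       # step (c): inverse transform
    return out[..., 0] if img.ndim == 2 else out
```

The amplitude spectrum is unchanged, including the zero-frequency term, so the scrambled image keeps the original mean and RMS contrast. Pixel values may fall slightly outside [0, 1], and clipping them for display would alter these statistics a little.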

A total of 45 unpleasant and 45 neutral picture scenes (Footnote 1) were selected from the IAPS (Lang et al., 2008) as well as from the Emotional Picture Set (EmoPicS; Wessa et al., 2010). The picture categories differed in normative ratings of valence [neutral: M = 5.49 (SD = 0.77); unpleasant: M = 2.17 (SD = 0.62)] and arousal [neutral: M = 3.73 (SD = 0.84); unpleasant: M = 6.24 (SD = 0.63)]. The pictures were presented in both color and grayscale versions and were resized to 300 × 400 pixels. Thus, the selected images comprised two valence categories (unpleasant vs. neutral) and two color formats (grayscale vs. color), resulting in four experimental conditions. To ensure similar low-level physical properties across image categories, the mean (representing global luminance) and standard deviation (representing root-mean-square [RMS] contrast) of the luminance distribution of every image were calculated on pixel intensities ranging from 0 to 1 (normalized RGB or grayscale values, with 0 = black and 1 = white). Subsequently, all images were adjusted (for MATLAB code, see Bekhtereva & Craddock, 2017) to have equal mean luminance (M = 0.43, SD = 0.02) and mean RMS contrast (M = 0.28, SD = 0.02). A 2 × 2 mixed-design analysis of variance (ANOVA), with Emotion (unpleasant/neutral) as a between-subjects factor for the two image sets and Color (grayscale/color) as a within-subjects factor, was performed on luminance and contrast separately. The results revealed no significant effect of emotion (mean difference = –0.0006, 95% CI = [–0.008, 0.006]; d = –0.034, 95% CI = [–0.45, 0.38]) or color (mean difference = –0.003, 95% CI = [–0.005, 0.00009]; d = –0.29, 95% CI = [–0.71, 0.13]), nor an Emotion × Color interaction (mean difference = –0.001, 95% CI = [–0.008, 0.005]; d = –0.07, 95% CI = [–0.49, 0.35]), on luminance (ps > .07). 
Similarly, we found no statistically significant effect of emotion (mean difference = –0.007, 95% CI = [–0.015, 0.002]; d = –0.34, 95% CI = [–0.76, 0.08]) or color (mean difference = –0.002, 95% CI = [–0.008, 0.003]; d = –0.14, 95% CI = [–0.56, 0.28]), nor any Emotion × Color interaction (mean difference = 0.009, 95% CI = [–0.001, 0.02]; d = 0.27, 95% CI = [–0.15, 0.69]), on RMS contrast (ps > .08). To additionally control for potential spatial frequency confounds across experimental stimuli, a discrete wavelet transform analysis was performed to ascertain that neither the color nor the grayscale images differed in spatial-frequency content between the unpleasant and neutral picture categories (Delplanque et al., 2007). To this end, each image was decomposed into eight frequency bands (300–150, 150–75, 75–38, 38–19, 19–9, 9–5, 5–2, and <2 cycles per image [c·image⁻¹]). Subsequently, the mean energy in each frequency band was submitted to a 2 (emotion: unpleasant vs. neutral images) × 8 (spatial frequency: 300–150, 150–75, 75–38, 38–19, 19–9, 9–5, 5–2, and <2 c·image⁻¹) mixed-design ANOVA, computed separately for the grayscale images and for each RGB color channel. The results demonstrated that, for both color and grayscale images, wavelet energy did not differ significantly between unpleasant and neutral images in any spatial frequency band (ps > .33 for the main effect of emotion and the Emotion × Spatial Frequency interaction). The results for all frequency bands are presented in Fig. 1.
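The band-wise energy analysis can be illustrated with a hand-written two-dimensional Haar wavelet transform in NumPy. This is a sketch under several assumptions. The source does not state which wavelet family was used, so the Haar wavelet is a stand-in. The image is cropped so that each side is divisible by 2**levels. The mapping of levels to the c·image⁻¹ bands listed above is only approximate: each level covers roughly one octave, with level 1 the finest. The name `haar_band_energy` is hypothetical.

```python
import numpy as np

def haar_band_energy(img, levels=7):
    """Mean squared coefficient per band of an orthonormal 2-D Haar transform.

    Returns levels + 1 values: detail energies from the finest level (highest
    spatial frequencies) to the coarsest, followed by the final approximation.
    """
    a = np.asarray(img, dtype=float)
    step = 2 ** levels
    # Assumption: crop so that every level sees even image dimensions.
    a = a[: a.shape[0] // step * step, : a.shape[1] // step * step]
    energies = []
    for _ in range(levels):
        tl, tr = a[0::2, 0::2], a[0::2, 1::2]
        bl, br = a[1::2, 0::2], a[1::2, 1::2]
        horiz = (tl + tr - bl - br) / 2.0
        vert = (tl - tr + bl - br) / 2.0
        diag = (tl - tr - bl + br) / 2.0
        details = np.concatenate([horiz.ravel(), vert.ravel(), diag.ravel()])
        energies.append(np.mean(details ** 2))
        a = (tl + tr + bl + br) / 2.0      # approximation passed to the next level
    energies.append(np.mean(a ** 2))       # residual low-frequency band
    return np.array(energies)
```

The function would be applied to each grayscale image and to each RGB channel separately. The resulting eight values per image (seven detail levels plus the final approximation) could then enter the 2 × 8 ANOVA described above.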

Given the nonsignificant p values obtained from the statistical testing of luminance, contrast, and spatial-frequency content between the experimental image categories, we additionally used Bayesian inference (Dienes, 2014; Gelman, Carlin, Stern, & Rubin, 2014) to estimate the degree of evidence in favor of an absence of differences in the low-level physical properties of the experimental images. We calculated the Bayes factor for each model of interest: the main effect of emotion, the main effect of color, both main effects, and both main effects plus the Emotion × Color interaction (i.e., the full model; Rouder, Morey, Verhagen, Swagman, & Wagenmakers, 2017). Jeffreys–Zellner–Siow (JZS) priors were used, with the default prior scale of the function anovaBF from the R package BayesFactor, version 0.9.12 (Morey, Rouder, & Jamil, 2015). Experimental images were included in all models as a random factor. The results for luminance, contrast, and spatial-frequency image content are reported in Tables 1 and 2. For luminance and RMS contrast, although some models yielded inconclusive Bayes factors of ~0.7 (i.e., the main effect of color for luminance and the main effect of emotion for contrast), overall the results demonstrated rather strong evidence in favor of the null hypothesis, with Bayes factors below 1/3 (Dienes, 2014). For spatial frequencies, when we directly compared the full model with the model containing the main effect of spatial frequency alone, the Bayes factor BF₂₄ showed that the observed mean energy in the red channel of the RGB spectrum was ~424 times more probable when spatial frequency was the only factor than when it interacted with emotion. For the green and blue channels and for the grayscale images, the likelihood ratios were even higher (~4,491, ~5,823, and ~3,111, respectively). 
Furthermore, the direct comparison between the model with the main effect of emotion and the model with the main effect of spatial frequency (BF₂₁) indicated that the mean energy was at least 1.64 × 10¹²⁸ times more likely when spatial frequency was the only factor than when emotion was the only factor. This can be interpreted as very strong evidence in support of the model with only the main effect of spatial frequency.
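The direct model comparisons above follow from a standard property of Bayes factors. When every model is evaluated against the same denominator model, as in the output of anovaBF, two models can be compared by dividing their Bayes factors. The model indices below are our reading of the text: M₁ is the emotion-only model, M₂ the spatial-frequency-only model, and M₄ the full model. M₀ denotes the common denominator model, which we assume here is the model containing only the random image factor.

$$
\mathrm{BF}_{ij} = \frac{p(D \mid M_i)}{p(D \mid M_j)} = \frac{\mathrm{BF}_{i0}}{\mathrm{BF}_{j0}}, \qquad \mathrm{BF}_{24} \approx 424 \ \text{(red channel)}, \qquad \mathrm{BF}_{21} \geq 1.64 \times 10^{128}
$$

Read this way, BF₂₄ ≈ 424 means that the red-channel energies are about 424 times more probable under the spatial-frequency-only model than under the full model. By the same logic, a Bayes factor of an effect model against the null model that falls below 1/3 (Dienes, 2014) counts as evidence for the null.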

Taken together, the results from both null hypothesis significance testing and Bayesian inference analyses converged on the conclusion that the luminance, RMS contrast, and spatial-frequency content likely did not differ between the unpleasant and neutral image categories.

Image resizing, conversion to a grayscale format, and luminance and contrast adjustments, as well as the discrete wavelet transform analysis, were done with the MATLAB image-processing toolbox (The Mathworks, Inc., Natick, Massachusetts).
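The luminance and contrast equalization can be sketched as a linear rescaling of pixel intensities to a target mean and standard deviation. This is an illustrative NumPy version under stated assumptions, not the MATLAB code referenced in the Stimuli section (Bekhtereva & Craddock, 2017). The statistics are computed over all values in the array, so for an RGB image the three channels are pooled, and out-of-range values are clipped to [0, 1]. The default targets reuse the values reported in the Stimuli section (mean luminance 0.43, RMS contrast 0.28). The function names are hypothetical.

```python
import numpy as np

def luminance_stats(img):
    """Mean luminance and RMS contrast (SD of intensities) of an image scaled to [0, 1]."""
    values = np.asarray(img, dtype=float)
    return values.mean(), values.std()

def match_luminance_contrast(img, target_mean=0.43, target_rms=0.28):
    """Linearly rescale intensities so that their mean and SD match the targets.

    Clipping to the displayable range [0, 1] can pull the final mean and SD
    slightly away from the targets for images with many extreme pixels.
    """
    values = np.asarray(img, dtype=float)
    mean, rms = luminance_stats(values)
    z = (values - mean) / rms                      # zero mean, unit SD
    return np.clip(z * target_rms + target_mean, 0.0, 1.0)
```

One way to confirm that an adjustment reached the reported image statistics is to recompute `luminance_stats` on the output, which should return values close to the targets when little clipping occurs.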

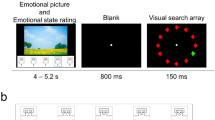

Experimental procedure

Stimuli were presented foveally against a black background on a 19-in. computer screen (resolution 800 × 600 pixels, 16-bit color mode, 60-Hz refresh rate) at a viewing distance of 80 cm. Each image subtended 12.2° × 8° of visual angle. A centrally presented yellow cross served as the fixation point throughout the experiment. Yellow squares were randomly distributed across the centrally presented pictures and flickered at 15 Hz (“on” for two frames and “off” for two frames) to elicit an SSVEP. As demonstrated in previous studies employing the same visual distraction paradigm (Bekhtereva et al., 2015; Schönwald & Müller, 2014), a stimulation frequency of 15 Hz permits the simultaneous assessment of the visual event-related potential (ERP) elicited by the presentation of a background scene and of the SSVEP elicited by the flickering squares.
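The timing arithmetic of the stimulation protocol can be checked with a few lines of Python: a 60-Hz refresh rate, two frames on and two frames off, and 68 cycles per trial. The constants come from the text. The function names are hypothetical, and the sketch does not reproduce the authors' presentation software.

```python
REFRESH_HZ = 60                 # monitor refresh rate
FRAMES_ON, FRAMES_OFF = 2, 2    # squares drawn for 2 frames, hidden for 2 frames

def flicker_frequency(refresh_hz=REFRESH_HZ, on=FRAMES_ON, off=FRAMES_OFF):
    """Flicker rate of an on/off cycle locked to the monitor refresh."""
    return refresh_hz / (on + off)

def flicker_frames(n_cycles, on=FRAMES_ON, off=FRAMES_OFF):
    """Per-frame visibility of the squares (True = drawn) for n_cycles of flicker."""
    return ([True] * on + [False] * off) * n_cycles

def duration_ms(n_frames, refresh_hz=REFRESH_HZ):
    """Duration of n_frames refresh frames in milliseconds."""
    return n_frames * 1000 / refresh_hz

frames = flicker_frames(68)     # one trial of 68 cycles
print(flicker_frequency(), len(frames), round(duration_ms(len(frames)), 1))
# 15.0 272 4533.3
```

Locking the flicker to whole refresh frames is what restricts the possible frequencies to integer divisors of the refresh rate, here 60 / 4 = 15 Hz.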

Each trial started with the onset of a scrambled version of a background scene presented together with the flickering yellow squares and lasted 4,533 ms, i.e., 68 cycles of 15-Hz stimulation (Fig. 2). After each trial, a blank screen with the fixation cross was presented for an interval that varied randomly between 1,150 and 1,650 ms. At a variable time point during each trial, the scrambled image changed to the intact version of the same image for a short period (see below), which was then masked by fading back to the scrambled version. This fade-out procedure was used to avoid an abrupt offset of the background picture, which might elicit a visual evoked potential that could interfere with both the SSVEP to the foreground task and the visual ERP to the onset of the intact picture. The fade-out proceeded as follows. A weighted average of the scrambled and intact pictures was shown over a sequence of four frames (one frame at the 60-Hz refresh rate lasted 16.67 ms), with the weight of the intact picture decreasing by 20% per frame (i.e., frame 1 = 80%, frame 2 = 60%, frame 3 = 40%, and frame 4 = 20%). The offset of the intact picture was defined as the time point at which its weight fell below 50% (i.e., when frame 3, with a weight of 40%, was displayed). Thus, the intact picture was shown at full (100%) weight for only ~100 ms and remained visible on the screen for 133 ms in total.
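The fade-out and the resulting 133-ms visible duration can be reproduced arithmetically. In this sketch, the number of full-weight frames (six, i.e., ~100 ms) is inferred from the text rather than stated there. Images are represented as flat lists of pixel values for simplicity, and all names are hypothetical.

```python
FRAME_MS = 1000 / 60                    # one refresh frame at 60 Hz
FADE_WEIGHTS = [0.8, 0.6, 0.4, 0.2]     # weight of the intact picture per fade frame
FULL_FRAMES = 6                         # assumption: ~100 ms at full weight = 6 frames

def blend(intact, scrambled, weight):
    """Per-pixel weighted average of the intact and scrambled images."""
    return [weight * i + (1 - weight) * s for i, s in zip(intact, scrambled)]

def visible_ms(full_frames=FULL_FRAMES, fade=FADE_WEIGHTS, cutoff=0.5):
    """Time until the defined offset: full-weight frames plus fade frames >= cutoff."""
    return (full_frames + sum(w >= cutoff for w in fade)) * FRAME_MS

print(round(FULL_FRAMES * FRAME_MS, 1), round(visible_ms(), 1))
# 100.0 133.3
```

Only the 80% and 60% fade frames fall above the 50% criterion, so the visible duration is 6 + 2 = 8 frames, or about 133 ms.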

Schematic illustration of the stimulus design. Each trial lasted for 4,533 ms and started with the presentation of a phase-scrambled version of a grayscale/color scene. After a variable time interval, the scrambled scene changed to its intact version for 133 ms and back again to its scrambled form (mask) in four steps. Participants attended to flickering and moving yellow squares superimposed upon the background images and were instructed to respond to coherent motion events of a subset of the squares (see the text). Note that this example of an unpleasant image was taken from the GAPED database (Dan-Glauser & Scherer, 2011)

To prevent anticipation of the image change, the change from the scrambled to the intact image occurred randomly in an early (200–1,000 ms, 13% of trials), middle (1,267–2,400 ms, 60% of trials), or late (2,467–4,333 ms, 27% of trials) time window after trial onset. Trials with early and late image changes served as “catch trials” and were excluded from further analysis (240 trials in total); these trials used a different set of images from those shown in the analyzed trials. Overall, the experiment consisted of 600 trials (ten experimental blocks of 60 trials each), of which 360 entered the final data analysis (90 per experimental condition). Each scene was presented twice throughout the experiment, and trial order was randomized such that no repetition of the same image category occurred within three consecutive trials.
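One way to generate change times matching these proportions is sketched below. It assumes that change times fell on whole 15-Hz cycles (multiples of 66.67 ms: 200 ms = 3 cycles, 1,267 ms = 19 cycles, 4,333 ms = 65 cycles). This is an inference from the window bounds, not a documented detail. The per-window trial counts are 13%, 60%, and 27% of 600. The names and the uniform sampling within each window are hypothetical.

```python
import random

CYCLE_MS = 1000 / 15
# Window bounds in whole 15-Hz cycles after trial onset (inclusive); an inferred assumption.
WINDOWS = {"early": (3, 15), "middle": (19, 36), "late": (37, 65)}
COUNTS = {"early": 78, "middle": 360, "late": 162}   # 13%, 60%, 27% of 600 trials

def schedule_changes(seed=None):
    """Return shuffled (window, change_time_ms) pairs for all 600 trials."""
    rng = random.Random(seed)
    trials = []
    for window, n_trials in COUNTS.items():
        first, last = WINDOWS[window]
        trials += [(window, rng.randint(first, last) * CYCLE_MS) for _ in range(n_trials)]
    rng.shuffle(trials)
    return trials

trials = schedule_changes(seed=1)
analyzed = [t for t in trials if t[0] == "middle"]   # early/late trials are catch trials
print(len(trials), len(analyzed), len(trials) - len(analyzed))
# 600 360 240
```

Because the catch trials are defined by window rather than by random draw, the analyzed set always contains exactly 360 trials, matching the 90 trials per condition reported above.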

In every refresh frame (i.e., every 16.67 ms), the yellow squares moved randomly (up, down, left, or right) by 0.04° of visual angle. Targets were defined as short intervals in which 30% of the squares moved coherently in one of the four cardinal directions for four cycles of the 15-Hz flicker (i.e., for 266.68 ms). Participants were instructed to detect these coherent motion targets as quickly and accurately as possible by pressing the space bar on the keyboard, and to disregard the background images as task-irrelevant. During each trial, between zero and four coherent-motion targets could be presented. Importantly, to allow analysis of the time course of the behavioral data, target events were uniformly distributed across each cycle of the 15-Hz flicker (i.e., 66.67 ms), such that over the entire experiment, three target events occurred in each 66.67-ms time window in every experimental condition. After each block (60 trials), participants received feedback about their behavioral performance (mean percentage of target hits and mean reaction time) and were allowed to take a break. All participants completed short training sessions (up to three blocks) before the experimental session, until they achieved a target detection rate of ~60%, after which the experimental recording started. A different set of images was used for the practice session.
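The square motion can be simulated frame by frame as follows. The step size, the four directions, the 30% coherence, and the four-cycle target duration come from the text. Two simplifying assumptions are made: the same subset of coherent squares is kept for the whole target, and screen-boundary handling is left out. All names are hypothetical.

```python
import random

STEP_DEG = 0.04                 # displacement per 60-Hz frame (from the text)
FRAMES_PER_CYCLE = 4            # 60-Hz refresh / 15-Hz flicker
DIRECTIONS = {"up": (0.0, 1.0), "down": (0.0, -1.0), "left": (-1.0, 0.0), "right": (1.0, 0.0)}

def step(positions, rng, coherent=frozenset(), coherent_dir=None):
    """Move every square by one step; squares in `coherent` share coherent_dir."""
    moved = []
    for k, (x, y) in enumerate(positions):
        name = coherent_dir if k in coherent else rng.choice(list(DIRECTIONS))
        dx, dy = DIRECTIONS[name]
        moved.append((x + STEP_DEG * dx, y + STEP_DEG * dy))
    return moved

def run_target(positions, rng, direction, coherence=0.3, n_cycles=4):
    """Simulate one coherent-motion target lasting n_cycles of the 15-Hz flicker."""
    n_coherent = round(coherence * len(positions))
    coherent = frozenset(rng.sample(range(len(positions)), n_coherent))
    for _ in range(n_cycles * FRAMES_PER_CYCLE):
        positions = step(positions, rng, coherent, direction)
    return positions, coherent
```

With 100 squares, 30 move together for 16 frames and are therefore displaced by 16 × 0.04° = 0.64° in the target direction. The other 70 perform a random walk.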

After the EEG experiment, participants viewed the images used in the study in randomized order and rated their affective valence and arousal on the 9-point Self-Assessment Manikin scale (SAM; Bradley & Lang, 1994).

EEG data recording and analysis

EEG was recorded from 64 Ag–AgCl scalp electrodes mounted in an elastic cap according to an extended version of the international 10–20 system, using a BioSemi ActiveTwo amplifier system (BioSemi; The Netherlands) at a sampling rate of 256 Hz; the data were band-pass filtered online between 0.016 and 100 Hz. Two additional electrodes served as the reference and ground (CMS “common mode sense” and DRL “driven right leg”; see www.biosemi.com/faq/cms&drl.htm). Lateral eye movements were monitored with a horizontal electrooculogram (EOG), and vertical eye movements and blinks were monitored with a bipolar montage positioned below and above the right eye (vertical EOG). Data preprocessing and analysis of the SSVEP and ERP data were performed offline with custom in-house scripts, using functions from the EEGLAB version 9.0.2.3b (Delorme & Makeig, 2004) and ERPLAB version 2.0.0.0 (http://erpinfo.org/erplab) toolboxes running under MATLAB (The Mathworks, Natick, MA).

SSVEP analysis

EEG epochs were extracted from 1,000 ms before to 2,000 ms after the onset of the intact image. Epochs contaminated with eye blinks or eye movements exceeding 2° of visual angle (about 20 μV) were rejected with in-house automated routines, and the rejections were verified by visual inspection. Subsequently, the SCADS algorithm (statistical correction of artifacts in dense array studies; Junghöfer, Elbert, Tucker, & Rockstroh, 2000) was applied to the remaining epochs. This procedure corrects for artifacts such as noisy electrodes, using a combination of channel approximation and epoch exclusion based on statistical parameters of the data. Epochs with more than ten contaminated electrodes within a particular area of the electrode montage were excluded from further analysis. On average, ~13% of all trials were rejected, with no differences between conditions. Data were then algebraically re-referenced to the average reference, linear trends were removed, and, as a final step, all epochs were averaged for each participant and condition.
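The final preprocessing steps (average reference, linear detrending, and averaging within a condition) can be sketched with NumPy. This sketch assumes an epoch array laid out as epochs × channels × time, and a boolean mask standing in for the outcome of artifact rejection. SCADS itself is not reproduced, and the function names are hypothetical.

```python
import numpy as np

def average_reference(epochs):
    """Re-reference to the mean over channels; epochs is (n_epochs, n_channels, n_times)."""
    return epochs - epochs.mean(axis=1, keepdims=True)

def remove_linear_trend(epochs):
    """Subtract a least-squares straight line from every channel of every epoch."""
    n_times = epochs.shape[-1]
    design = np.column_stack([np.arange(n_times), np.ones(n_times)])   # slope, intercept
    series = epochs.reshape(-1, n_times).T                              # (n_times, n_series)
    coef, *_ = np.linalg.lstsq(design, series, rcond=None)
    return (series - design @ coef).T.reshape(epochs.shape)

def condition_average(epochs, keep):
    """Average reference, detrend, then average the epochs retained after rejection."""
    clean = np.asarray(epochs, dtype=float)[np.asarray(keep, dtype=bool)]
    return remove_linear_trend(average_reference(clean)).mean(axis=0)
```

Because both re-referencing and detrending are linear operations, applying them before or after averaging gives the same condition average. The order in the sketch simply follows the text.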

SSVEP amplitudes were extracted from the averaged epochs by means of a Gabor filter (Gabor, 1946) centered at 15 Hz with a frequency resolution of ±1.5 Hz full width at half maximum, yielding a time resolution of ±147.1 ms (see also Schönwald & Müller, 2014, for more details on Gabor filters). To identify the electrodes with maximal SSVEP amplitudes for statistical analysis, isocontour voltage maps were computed from the grand averages across all participants, experimental conditions, and time points. As shown in Fig. 9b (see below), SSVEP amplitudes were maximal at eight parieto-occipital electrodes: PO3, PO7, O1, Oz, Iz, PO4, PO8, and O2. Taking into account individual variation in the topographical distribution of SSVEP amplitudes, for each participant the electrode with the greatest amplitude was selected from that cluster for further statistical analysis (the individual best-electrode approach). Statistical analyses used two-tailed paired t tests. Following our previous studies with a similar design, Type I error was controlled by requiring the point-wise threshold of p < .05 to be met at ten consecutive data points; thus, only time intervals with at least ten consecutive significant sampling points (p < .05) are reported (Andersen & Müller, 2010; Bekhtereva et al., 2015; Schönwald & Müller, 2014). To test the impact of color on the affective distraction effect, we directly compared grayscale and color images. For this purpose, we first tested for differences in the latencies of SSVEP amplitude reduction between the unpleasant and neutral conditions, separately for color and grayscale scenes. In the second step, we calculated difference waves (unpleasant minus neutral) for color and grayscale pictures and compared them with running paired t tests.
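The SSVEP quantification steps can be sketched with NumPy and SciPy: a complex Gabor kernel (a Gaussian-windowed complex sinusoid) at 15 Hz, the individual best-electrode choice, and running paired t tests with the ten-consecutive-samples criterion. The sketch also shows where the ±147.1-ms figure comes from. For a Gaussian window, the product of the temporal and spectral full widths at half maximum is 4 ln 2 / π ≈ 0.88, so a 3-Hz spectral FWHM gives a temporal FWHM of ~294 ms. The kernel length (±4 SD), the amplitude scaling, and all names are assumptions, not details of the authors' scripts.

```python
import numpy as np
from scipy import stats

FWHM_TO_SD = 2 * np.sqrt(2 * np.log(2))    # Gaussian FWHM = 2.3548 * SD

def gabor_sigma_t(fwhm_hz):
    """Temporal SD (s) of a Gaussian window whose spectral FWHM is fwhm_hz."""
    return 1 / (2 * np.pi * fwhm_hz / FWHM_TO_SD)

def time_half_width_ms(fwhm_hz):
    """Half of the temporal FWHM in ms (the '±' time resolution)."""
    return 1000 * FWHM_TO_SD * gabor_sigma_t(fwhm_hz) / 2

def gabor_amplitude(x, fs, freq=15.0, fwhm_hz=3.0):
    """Time-resolved amplitude of x at freq from a complex Gabor kernel."""
    sigma_t = gabor_sigma_t(fwhm_hz)
    half = int(np.ceil(4 * sigma_t * fs))          # kernel spans +/- 4 SD
    t = np.arange(-half, half + 1) / fs
    window = np.exp(-t**2 / (2 * sigma_t**2))
    kernel = window * np.exp(2j * np.pi * freq * t)
    # Scaling so that a sinusoid of amplitude A returns ~A away from the epoch edges.
    return 2 * np.abs(np.convolve(x, kernel, mode="same")) / window.sum()

def best_electrode(amplitudes):
    """Index of the channel (rows) with the largest mean amplitude over time."""
    return int(np.argmax(np.asarray(amplitudes).mean(axis=1)))

def significant_runs(p_values, alpha=0.05, min_len=10):
    """(start, stop) pairs of runs with at least min_len consecutive p < alpha."""
    sig = list(np.asarray(p_values, dtype=float) < alpha) + [False]  # NaN counts as n.s.
    runs, start = [], None
    for i, s in enumerate(sig):
        if s and start is None:
            start = i
        elif not s and start is not None:
            if i - start >= min_len:
                runs.append((start, i))
            start = None
    return runs

def running_paired_ttest(a, b, alpha=0.05, min_len=10):
    """Point-wise two-tailed paired t tests; a, b are (participants, time) arrays."""
    t, p = stats.ttest_rel(a, b, axis=0)
    return t, significant_runs(p, alpha, min_len)
```

For the direct color test, `a` and `b` would be the per-participant unpleasant-minus-neutral difference waves for color and grayscale scenes, respectively. At 256 Hz, ten consecutive samples correspond to about 39 ms.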

ERP analysis

Continuous EEG data were band-pass filtered (Kaiser-windowed FIR, 0.5–14 Hz; Widmann, 2006). Subsequently, epochs from 133 ms before to 1,600 ms after image onset were extracted from the continuous EEG data. The mean amplitude over the 133 ms before picture onset (i.e., two full cycles of 15-Hz stimulation) served as the baseline and was subtracted from each data point of the ERP epochs. Prior to artifact rejection, trials with horizontal eye movements >20 μV (about 2° of visual angle) were removed. After artifact detection with SCADS (Junghöfer et al., 2000), on average 3.33% of trials were rejected (i.e., on average 348 of 360 trials per participant were retained, N = 21), with no differences between conditions.
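Baseline correction over the two pre-onset 15-Hz cycles, together with a simple horizontal-EOG criterion, might look like the sketch below. The peak-to-peak definition of the 20-μV HEOG criterion is an assumption, because the text does not say how the deflection was measured. The band-pass filtering and SCADS steps are not reproduced, and the names are hypothetical.

```python
import numpy as np

def baseline_correct(epoch, onset_idx, fs=256, baseline_ms=133.0):
    """Subtract each channel's mean over the pre-onset baseline (channels x time)."""
    n_base = int(round(baseline_ms / 1000 * fs))   # 133 ms at 256 Hz -> 34 samples
    baseline = epoch[:, onset_idx - n_base:onset_idx].mean(axis=1, keepdims=True)
    return epoch - baseline

def heog_exceeds(heog, threshold_uv=20.0):
    """True if the horizontal EOG trace spans more than threshold_uv peak to peak."""
    return float(np.ptp(heog)) > threshold_uv
```

As a consistency check on the reported rejection rate, (360 - 348) / 360 ≈ 3.3% of trials.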

Based on visual inspection of the grand average ERPs, we identified the P1, the N1–EPN complex, and the LPP components elicited by the complex background images and analyzed them further. The following electrodes and time windows (relative to intact-image onset) were chosen for the statistical analysis:

- For the N1–EPN complex (see Fig. 5a in the Results), a time window from 170 to 400 ms was taken at a cluster of parieto-occipital sensors: I1, I2, O1, O2, PO3, PO4, PO7, PO8, P7, P8, P9, and P10, following the electrode selection of the similarly designed earlier studies by Schönwald and Müller (2014) and Bekhtereva et al. (2015).

- For the LPP component (Fig. 6a in the Results), a time window between 550 and 1,000 ms was selected from an array of centro-parietal electrodes: CP1, CPz, CP2, P1, Pz, and P2.

- For the P1 component, which appears in Fig. 5a as a positive deflection at around 120–170 ms, the 120–170-ms window was quantified at occipital electrodes O1 and O2, where the component was most pronounced.

For all ERP components, the mean amplitude was calculated across the respective electrodes and time windows for each condition. These values were subjected to a 2 × 2 repeated measures ANOVA comprising the factors Emotion (unpleasant vs. neutral) and Color (RGB vs. gray).
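Because both factors have only two levels, each F(1, 20) of this 2 × 2 repeated measures ANOVA equals the square of a paired t statistic computed on one per-participant contrast: the difference between marginal means for each main effect, and the difference of differences for the interaction. The sketch below (NumPy only; the array layout and function names are assumptions for illustration, and it omits p values and effect sizes) shows the mean-amplitude extraction and this contrast-based computation. Sphericity corrections do not apply here, because each effect has a single degree of freedom.

```python
import numpy as np


def mean_amplitude(erp, times_ms, channels, t_start_ms, t_end_ms):
    """Mean voltage over a channel subset and an inclusive time window.

    erp: array of shape (n_channels, n_samples) for one participant and condition.
    """
    times_ms = np.asarray(times_ms)
    win = np.flatnonzero((times_ms >= t_start_ms) & (times_ms <= t_end_ms))
    return erp[np.ix_(channels, win)].mean()


def rm_anova_2x2(cells):
    """F(1, n - 1) for Emotion, Color, and Emotion x Color (2 x 2 within-subjects).

    cells: shape (n_subjects, 2, 2), indexed [subject, emotion, color] with
    emotion 0 = neutral, 1 = unpleasant and color 0 = gray, 1 = RGB.
    """
    cells = np.asarray(cells, dtype=float)
    n = cells.shape[0]
    contrasts = {
        # Marginal-mean differences for the main effects.
        "emotion": cells[:, 1, :].mean(axis=1) - cells[:, 0, :].mean(axis=1),
        "color": cells[:, :, 1].mean(axis=1) - cells[:, :, 0].mean(axis=1),
        # (unpleasant - neutral) in color minus (unpleasant - neutral) in gray.
        "interaction": (cells[:, 1, 1] - cells[:, 0, 1]) - (cells[:, 1, 0] - cells[:, 0, 0]),
    }
    results = {}
    for name, c in contrasts.items():
        t = c.mean() / (c.std(ddof=1) / np.sqrt(n))
        results[name] = (t ** 2, 1, n - 1)   # (F, df_effect, df_error)
    return results
```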

Mass-univariate ERP analysis

To further examine ERP differences between the neutral and unpleasant valence categories for color and grayscale images, we conducted within-subjects cluster-based permutation tests based on a “maximum cluster-level mass” statistic, which is computed by grouping neighboring electrodes into clusters and deriving a value for each cluster from its constituents (Bullmore et al., 1999; Maris & Oostenveld, 2007). In contrast to the conventional approach to ERP analysis, in which an ANOVA is conducted on a limited number of point measures (i.e., mean amplitudes averaged over a specified time window and set of electrodes), this nonparametric technique overcomes three major limitations of conventional ERP analyses (Groppe et al., 2011). First, it is sensitive to unexpected effects that fall outside the a priori selected analysis windows. Second, it does not require a priori knowledge of the timing and location of an effect. Third, it makes it possible to delineate the spatial extent and the precise onset and offset of differences between the ERPs of interest, taking into account information across all channels and time points while, importantly, correcting for multiple comparisons.
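The logic of a cluster-mass permutation test can be sketched in Python for a single channel (time dimension only). This is a simplified illustration, not the Mass Univariate ERP Toolbox: the actual analysis also forms clusters across neighboring electrodes. Under the null hypothesis of no condition difference, the sign of each participant's difference wave is exchangeable, so random sign flips generate a null distribution of the maximum absolute cluster mass; comparing each observed cluster with that maximum controls the family-wise error rate. The cluster-forming threshold is a parameter; a value of about 2.845 corresponds to an uncorrected two-tailed p of .01 with df = 20.

```python
import numpy as np


def _t_values(diff):
    """Point-wise one-sample t values of within-subject differences (subjects x time)."""
    n = diff.shape[0]
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(n))


def _clusters(t_vals, t_thresh):
    """Contiguous same-sign runs with |t| > t_thresh as (start, end, mass = sum of t)."""
    clusters, start, cur_sign = [], None, 0
    for i, t in enumerate(t_vals):
        sign = int(np.sign(t)) if abs(t) > t_thresh else 0
        if start is not None and sign != cur_sign:       # current cluster ends
            clusters.append((start, i - 1, float(t_vals[start:i].sum())))
            start = None
        if sign != 0 and start is None:                   # a new cluster begins
            start, cur_sign = i, sign
    if start is not None:
        clusters.append((start, len(t_vals) - 1, float(t_vals[start:].sum())))
    return clusters


def cluster_permutation_test(diff, t_thresh, n_perm=1000, seed=0):
    """Sign-flip permutation test on the maximum absolute cluster mass.

    diff: within-subject difference waves, shape (n_subjects, n_timepoints).
    Returns each observed cluster as (start, end, mass, p).
    """
    rng = np.random.default_rng(seed)
    observed = _clusters(_t_values(diff), t_thresh)
    null_max = np.zeros(n_perm)
    for k in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        perm = _clusters(_t_values(diff * signs), t_thresh)
        null_max[k] = max((abs(m) for _, _, m in perm), default=0.0)
    return [(s, e, m, (np.sum(null_max >= abs(m)) + 1) / (n_perm + 1))
            for s, e, m in observed]
```

In practice the number of permutations would be set much higher (5,000 in the present analysis); smaller values only make an example run faster.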

An electrode’s spatial neighborhood was defined as all electrodes within approximately 5.4 cm, resulting in an average of 7.3 neighbors per channel. The distribution of the null hypothesis was estimated using 5,000 random within-subjects permutations of the data. Differences between conditions were considered significant if their t scores exceeded, in absolute value, the lowest significant t-score threshold: –2.8469 for the comparison between neutral and unpleasant color scenes (corresponding to an uncorrected p value of .01) and 2.8475 for the comparison between neutral and unpleasant grayscale scenes (corresponding to a testwise α = .009965). Finally, the “unpleasant–neutral” difference scores were compared between the color and grayscale conditions, and differences were deemed reliable if they exceeded the lowest significant t-score threshold of –2.8543 (corresponding to an uncorrected p value of .01 and a testwise α = .009803). All time points between 0 and 1,600 ms (410 time points at a 256-Hz sampling rate) at all 64 scalp electrodes were included in the analysis, resulting in a total of 26,240 comparisons, and the test was two-tailed. The cluster-based permutation tests were performed with the Mass Univariate ERP Toolbox (Groppe et al., 2011).
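The distance-based neighborhood definition can be expressed in a few lines. The sketch assumes 3-D electrode coordinates in centimeters and uses straight-line (Euclidean) distance; how the toolbox measures inter-electrode distance, and the montage coordinates themselves, are not given here, so the function name and inputs are hypothetical.

```python
import numpy as np


def electrode_neighbors(coords_cm, max_dist_cm=5.4):
    """For each electrode, the indices of all other electrodes within max_dist_cm.

    coords_cm: array-like of shape (n_electrodes, 3), assumed Cartesian positions in cm.
    """
    coords = np.asarray(coords_cm, dtype=float)
    # Pairwise Euclidean distances between all electrodes.
    dists = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    # An electrode is not its own neighbor.
    adjacent = (dists <= max_dist_cm) & ~np.eye(len(coords), dtype=bool)
    return [np.flatnonzero(row).tolist() for row in adjacent]
```

The size of the tested family follows directly from the design: 64 electrodes × 410 time points = 26,240 comparisons.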

Behavioral data and SAM ratings analyses

Correct button presses in a time window between 250 and 1,000 ms after target onset were counted as hits; later responses or no response were counted as misses. Target onsets were uniformly distributed over the time window from 800 ms before to 1,867 ms after the onset of an intact background image. Hit rates were calculated in time bins of 266.7 ms, each obtained by averaging across four short bins of 66.67 ms, so that every time bin matched the duration of the target event (266.7 ms; see also Bekhtereva et al., 2015; Hindi Attar et al., 2010; Schönwald & Müller, 2014). This resulted in ten time windows of 266.7 ms each. On the basis of the behavioral results of our previous studies using the same distraction paradigm (see above), we focused further statistical calculations on the time interval from 533 ms before to 1,334 ms after the picture change (two time bins before and five after). Each target was assigned to the time bin in which its onset occurred. A 2 × 2 × 7 repeated measures ANOVA with the within-subjects factors Emotion (unpleasant vs. neutral), Color (RGB vs. grayscale), and Time (Bins 1–7) was calculated. To further explore differences between the conditions within time bins, we conducted post hoc t tests with the Holm–Bonferroni correction for multiple comparisons. Mean arousal and valence SAM ratings for unpleasant and neutral scenes in both the color and the grayscale versions were subjected to a 2 × 2 repeated measures ANOVA with Emotion (neutral vs. unpleasant) and Color (gray vs. RGB) as within-subjects factors, followed by paired t tests.
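The hit criterion, the time binning, and the Holm–Bonferroni step-down correction can be sketched in Python as follows. The sketch assumes target onsets expressed relative to intact-image onset, one reaction time (or `None`) per target, and that presses earlier than 250 ms are also treated as non-hits, which the text does not state; the function names are illustrative. Each 266.7-ms bin is the mean of the hit rates in its four 66.67-ms sub-bins, as described above.

```python
import numpy as np

BIN_MS = 800.0 / 3.0        # 266.7-ms analysis bin (the target-event duration)
SUB_BIN_MS = BIN_MS / 4.0   # 66.67-ms sub-bin; four per analysis bin


def is_hit(rt_ms, lo_ms=250.0, hi_ms=1000.0):
    """True for a correct press 250-1,000 ms after target onset; None means no press.

    Assumption: presses earlier than lo_ms are also treated as non-hits.
    """
    return rt_ms is not None and lo_ms <= rt_ms <= hi_ms


def hit_rates_by_bin(onsets_ms, rts_ms, first_edge_ms=-800.0, n_bins=10):
    """Hit rate (%) per 266.7-ms bin, averaged over its four 66.67-ms sub-bins.

    onsets_ms: target onsets relative to intact-image onset (ms).
    rts_ms: reaction time in ms (or None) for each target.
    Bins without any target are returned as NaN.
    """
    onsets = np.asarray(onsets_ms, dtype=float)
    hits = np.array([is_hit(rt) for rt in rts_ms], dtype=float)
    edges = first_edge_ms + SUB_BIN_MS * np.arange(4 * n_bins + 1)
    sub_idx = np.digitize(onsets, edges) - 1   # sub-bin containing each onset
    sub_rates = np.full(4 * n_bins, np.nan)
    for b in range(4 * n_bins):
        in_bin = sub_idx == b
        if in_bin.any():
            sub_rates[b] = 100.0 * hits[in_bin].mean()
    # Average the available sub-bin rates within each analysis bin.
    grouped = sub_rates.reshape(n_bins, 4)
    valid = ~np.isnan(grouped)
    counts = valid.sum(axis=1)
    sums = np.where(valid, grouped, 0.0).sum(axis=1)
    rates = np.full(n_bins, np.nan)
    rates[counts > 0] = sums[counts > 0] / counts[counts > 0]
    return rates


def holm_adjust(pvals):
    """Holm-Bonferroni step-down adjusted p values (monotone, capped at 1)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, i in enumerate(np.argsort(p)):
        running_max = max(running_max, (m - rank) * p[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted
```

With `first_edge_ms=-800.0` and ten bins, the analyzed interval from 533 ms before to 1,334 ms after the picture change corresponds to zero-based bin indices 1 to 7.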

Statistical analyses

Statistical analyses were conducted using R version 3.0.2 (R Development Core Team, 2012) and the packages stats (version 3.3.2), ez (version 4.4-0; Lawrence, 2013), Rmisc (version 1.5), BayesFactor (version 0.9.12; Morey et al., 2015), and ggplot2 (version 2.21; Wickham, 2009). For repeated measures ANOVAs, the Huynh–Feldt correction was applied whenever Mauchly’s test indicated a violation of the sphericity assumption. Generalized eta-squared (ηG²) and Cohen’s d (d) with its 95% confidence interval are reported as measures of standardized effect size (Baguley, 2012; Bakeman, 2005; Olejnik & Algina, 2003). Additionally, unstandardized effect sizes and their 95% confidence intervals are reported for relevant comparisons.
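The error bars in the figures are described as Cousineau–Morey within-subject confidence intervals (Morey, 2008). A minimal NumPy sketch of that computation follows; it assumes a participants × conditions array and takes the critical t value as an argument to avoid a SciPy dependency (about 2.086 for a 95% interval with 21 participants). It is an independent illustration, not necessarily equivalent to the R tooling listed above.

```python
import numpy as np


def cousineau_morey_ci(data, t_crit):
    """Half-widths of within-subject CIs per condition.

    data: shape (n_subjects, n_conditions)
    t_crit: critical t for df = n_subjects - 1 (e.g., about 2.086 for 95%, n = 21)
    """
    data = np.asarray(data, dtype=float)
    n, j = data.shape
    # Cousineau normalization: remove each subject's mean, add back the grand mean.
    normed = data - data.mean(axis=1, keepdims=True) + data.mean()
    se = normed.std(axis=0, ddof=1) / np.sqrt(n)
    # Morey (2008) correction for the variance deflation caused by normalization.
    return t_crit * se * np.sqrt(j / (j - 1))
```

Because between-subject offsets are removed before the standard error is computed, participants who differ only by a constant shift contribute no width to the interval.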

Results

SAM ratings

For valence, the 2 (emotion) × 2 (color) repeated measures ANOVA showed significant main effects of emotion, F(1, 20) = 328.17, p < .001, ηG² = .88; mean difference = –3.47, CI 95% = [–3.87 –3.07]; d = –3.95, CI 95% = [–5.03 –2.88], with unpleasant picture scenes being rated as more negative than neutral scenes, and color, F(1, 20) = 12.45, p = .002, ηG² = .002; mean difference = 0.06, CI 95% = [0.026 0.1]; d = 0.77, CI 95% = [0.12 1.42], with gray images overall receiving somewhat higher (more pleasant) valence ratings than their color counterparts. There was also a significant Emotion × Color interaction, F(1, 20) = 14.9, p = .001, ηG² = .005; mean difference = 0.19, CI 95% = [0.09 0.29]; d = 0.84, CI 95% = [0.19 1.49]. Follow-up pairwise comparisons revealed that neutral images were rated similarly for valence regardless of whether they were presented in color or grayscale (p = .33; mean difference = 0.03, CI 95% = [–0.03 0.09]; d = 0.22, CI 95% = [–0.41 0.84]), whereas emotionally aversive color images were perceived as slightly more unpleasant (or less positive) than the same images presented in grayscale (p < .001; mean difference = –0.16, CI 95% = [–0.22 –0.09]; d = –1.19, CI 95% = [–1.87 –0.52]); see Table 3 and Fig. 3b.

SAM ratings. Averaged subjective arousal (a) and valence (b) ratings of intact scenes. Both valence and arousal ratings were influenced by the color information of an image: whereas neutral scenes had the same arousal and valence ratings, regardless of whether they were presented in color or grayscale format, emotionally aversive color images were judged as being slightly more arousing and more unpleasant than when the images were shown in grayscale. Each condition includes a scatterplot of the individual mean ratings (light and dark gray dots). Error bars represent 95% confidence intervals and correspond to the within-subjects variability after the between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008). Significant differences corresponding to p values <.001 and <.05 are marked with *** and *, respectively; n.s.: not significant

For arousal ratings, we also found significant main effects of emotion, F(1, 20) = 134.44, p < .001, ηG² = .69; mean difference = 3.64, CI 95% = [2.98 4.29]; d = 2.53, CI 95% = [1.69 3.37], and color, F(1, 20) = 5.61, p = .03, ηG² = .001; mean difference = 0.08, CI 95% = [0.009 0.15]; d = 0.52, CI 95% = [–0.12 1.15], with emotional scenes receiving higher arousal ratings overall than neutral scenes, and color images generally being rated as more arousing than the same images in grayscale. Furthermore, there was a significant Emotion × Color interaction, F(1, 20) = 5.04, p = .04, ηG² = .0009; mean difference = –0.15, CI 95% = [–0.29 –0.01]; d = –0.49, CI 95% = [–1.12 0.14]. Follow-up post hoc t tests showed that arousal ratings for neutral images did not differ between the color and grayscale formats (p = .94; mean difference = 0.003, CI 95% = [–0.08 0.09]; d = 0.02, CI 95% = [–0.61 0.64]), whereas affectively negative color images received slightly higher arousal ratings than the same images presented in grayscale (p = .017; mean difference = 0.15, CI 95% = [0.04 0.26]; d = 0.63, CI 95% = [0 1.28]); see Table 3 and Fig. 3a. Thus, both the valence and the arousal ratings demonstrate interactive effects of color information on the perception of emotionally aversive scenes.

Additionally, the results for averaged ratings in valence and arousal for all experimental pictures are depicted in Fig. 4. This figure suggests that amongst the emotionally unpleasant pictures, the ones depicting assault or attack scenes, mutilations, injuries, or dead bodies had higher arousal values and more negative valence ratings when they were presented in color than when they were shown in gray.

Averaged image ratings of valence (a) and arousal (b) for each of the 45 neutral and 45 unpleasant experimental images. Both the valence and arousal image ratings are influenced by color information. Moreover, when the images were colored, unpleasant pictures received more variable ratings than neutral ones. Note that the ratings are sorted in descending order from the left of the panels; the neutral images “Neutral Boy,” “Boys Playing,” “Guitar,” “Handicraft,” “Office,” “Waiter,” “Factory,” and “Shoeshop” were taken from the EmoPics database (Wessa et al., 2010)

ERP results

P1

The 2 × 2 repeated measures ANOVA on mean amplitude values revealed no significant main effect of emotion (mean difference = 0.03 μV, CI 95% = [–0.16 0.21]; d = 0.07, CI 95% = [–0.55 0.69], p = .75) or color (mean difference = –0.03 μV, CI 95% = [–0.32 0.27]; d = –0.04, CI 95% = [–0.66 0.58], p = .86) and no significant interaction (mean difference = 0.42 μV, CI 95% = [–0.09 0.93]; d = 0.37, CI 95% = [–0.26 1], p = .1). Furthermore, we calculated the Bayes factor for each model of interest: the main effect of emotion, the main effect of color, both main effects, and both main effects plus the Emotion × Color interaction (i.e., the full model). Participants were included in all models as a random factor. The results are reported in Table 4. Each model yielded a Bayes factor of less than 0.23, which qualifies as good evidence in favor of the null hypothesis (Dienes, 2014), confirming that the P1 was unlikely to have been modulated by emotional category, image color, or their interaction.

N1–EPN complex

Figure 5a depicts grand average ERPs elicited by task-irrelevant scenes, recorded from an occipito-parietal cluster of 12 electrodes. All relevant comparisons between condition means are visualized in Fig. 5b.

ERP waveforms and results of statistical analyses for the N1–EPN complex. (a) Grand average ERPs (N = 21) recorded from an array of 12 parieto-occipital electrodes. The gray box indicates the time window from which amplitude values were extracted for the N1–EPN (170–400 ms). Positive is plotted upward. (b) Mean amplitude values in microvolts for intact neutral and unpleasant images presented in color (right panel) and grayscale (left panel); each condition includes a scatterplot of the individual mean amplitudes (light and dark gray dots). When neutral and unpleasant scenes were presented in color, they elicited a larger N1–EPN than when neutral and aversive pictures were shown in grayscale. Error bars represent 95% CIs and correspond to the within-subjects variability after between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008). Significant differences corresponding to p values <.001 are marked with ***

The 2 × 2 repeated measures ANOVA on the mean amplitude values of unpleasant and neutral contents for color versus grayscale pictures revealed significant effects of emotion, F(1, 20) = 66.35, p < .001, ηG² = .03; mean difference = –0.88 μV, CI 95% = [–1.10 –0.65]; d = –1.78, CI 95% = [–2.51 –1.04], and color, F(1, 20) = 7.6, p = .01, ηG² = .004; mean difference = 0.33 μV, CI 95% = [0.08 0.57]; d = 0.6, CI 95% = [–0.04 1.24], accompanied by an Emotion × Color interaction, F(1, 20) = 4.49, p = .047, ηG² = .001; mean difference = 0.41 μV, CI 95% = [0.007 0.81]; d = 0.46, CI 95% = [–0.17 1.09]. Follow-up pairwise comparisons indicated that the difference in amplitude between unpleasant color and unpleasant gray images was not significant (mean difference = 0.12 μV, CI 95% = [–0.23 0.48]; d = 0.16, CI 95% = [–0.47 0.78], p = .47), whereas all other comparisons were significant (ps < .01). More specifically, differences between unpleasant and neutral pictures were more pronounced in color (mean difference = –1.08 μV, CI 95% = [–1.41 –0.75]; d = –1.5, CI 95% = [–2.21 –0.79], p < .001) than in grayscale (mean difference = –0.67 μV, CI 95% = [–0.94 –0.40]; d = –1.13, CI 95% = [–1.80 –0.46], p < .001) (see Table 5 and Fig. 5). Because the EPN is often presented as a difference wave (emotional minus neutral condition), we additionally performed a paired t test on the unpleasant-minus-neutral difference values in the N1–EPN time range. This test confirmed a more pronounced (i.e., more negative) N1–EPN for color (M = –1.07, SD = 0.71) than for grayscale (M = –0.67, SD = 0.59) scenes (mean difference = –0.41 μV, CI 95% = [–0.81 –0.007]; d = –0.46, CI 95% = [–1.09 0.17]), t(20) = –2.12, p = .047, indicating a greater difference between emotional and neutral color images than between the grayscale versions of the identical images.
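Because each factor has two levels, the interaction F test and the paired t test on the unpleasant-minus-neutral difference values are the same test: for a single-degree-of-freedom within-subjects effect, F(1, n − 1) = t(n − 1)². The reported values are consistent with this identity:

$$F(1, 20) = t(20)^2 = (-2.12)^2 \approx 4.49, \qquad p = .047 \text{ for both tests}$$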

LPP

Figure 6 displays grand average ERPs recorded from a centro-parietal cluster of six electrodes and visualizes comparisons between the condition means.

LPP waveforms and results of statistical analyses for the LPP component. (a) Grand average ERPs (N = 21) recorded from an array of six centro-parietal electrodes (see the Method section). The gray box indicates the time window from which amplitude values were extracted for the LPP (550–1,000 ms). Positive is plotted upward. (b) Mean amplitude values in microvolts for intact neutral and unpleasant images in color (right panel) and grayscale (left panel); each condition includes a scatterplot of the individual mean amplitudes (light and dark gray dots). There were no significant differences in LPP amplitude between unpleasant and neutral pictures in color or grayscale format. Error bars represent 95% CIs and correspond to the within-subjects variability after between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008). n.s.: not significant

The 2 × 2 repeated measures ANOVA on mean amplitude values revealed no significant main effects of emotion (mean difference = 0.13 μV, CI 95% = [–0.01 0.28]; d = 0.42, CI 95% = [–0.21 1.05], p = .067) or color (mean difference = –0.03 μV, CI 95% = [–0.17 0.11]; d = –0.09, CI 95% = [–0.71 0.54], p = .69) and no Emotion × Color interaction (mean difference = –0.08 μV, CI 95% = [–0.37 0.22]; d = –0.12, CI 95% = [–0.75 0.50], p = .58) (Fig. 6b). The results of a Bayesian analysis of the LPP amplitudes are reported in Table 6. The models with the main effect of color and with the Color × Emotion interaction, with Bayes factors of 0.24 and 0.09, respectively, indicated rather strong evidence in favor of the null hypothesis (i.e., neither color nor the interaction between color information and the emotional category of a briefly shown image contributed to reliable variations in LPP amplitude). However, the model with the main effect of emotion yielded an inconclusive Bayes factor of 1.16, providing neither reliable evidence for the null effect nor support for the alternative hypothesis (i.e., that emotion explains variations in LPP amplitude). In sum, the results regarding the influence of emotional content on LPP amplitude during brief presentation of color and grayscale images were inconclusive, providing reliable support neither for nor against the null hypothesis.

ERP results of the mass univariate analysis

A point-by-point cluster-based permutation test showed that neural activity elicited by unpleasant color pictures differed reliably from that elicited by neutral color scenes, beginning at approximately 180 ms and lasting until 375 ms after image onset. The maximum cluster-level mass analysis returned a very broadly distributed cluster that encompassed central, frontal, and occipito-parietal electrodes and reflected this differential emotion effect (Fig. 7a). Similarly, differences in neural activity between unpleasant and neutral grayscale scenes were most pronounced between 195 and 363 ms, and the maximum differences were localized across a broad cluster of central, frontal, and occipito-parietal sensors (see Fig. 7b). The scalp maps depicted in Fig. 7a and b resemble the topographical distribution of typical N1 and EPN components (Bekhtereva et al., 2015; Schettino et al., 2016), and the frontal and occipital electrode clusters showing significant differences between unpleasant and neutral conditions are likely to represent opposite ends of a dipole. Furthermore, we observed no statistically significant differences in any later time window, and time-point-by-time-point visual inspection of the topographical distribution of the unpleasant versus neutral differences in either the color or the grayscale condition did not reveal any sustained centro-parietal positivity typical of an LPP.

Results of mass-univariate ERP analysis: Difference waveforms of grand average ERPs (a: unpleasant–neutral color; b: unpleasant–neutral gray; c: unpleasant–neutral color minus unpleasant–neutral gray) from 64 electrodes are depicted. The light gray boxes indicate the time windows in which cluster-based permutation t tests identified differences between the conditions (depicted in white on the topographical maps on the right panel, and mainly localized at parieto-occipital electrodes)

Importantly, the cluster-based permutation test revealed a time window for the interactive effects of color information on neural activity elicited by aversive relative to neutral scenes. Specifically, the neural activity differences for the emotional effect (unpleasant vs. neutral) between color and grayscale scenes were focused within the time range of 175 to 285 ms after image onset at occipito-parietal sites (Fig. 7c), which are commonly found to be activated during enhanced processing of emotionally salient material (Schupp et al., 2006).

Taken together, these nonparametric permutation tests corroborated the results of the classical parametric ERP analysis (see above) and pinpointed more precisely the time course and the topographical localization of the interactive effects between color and affective image content.

SSVEP results

In line with what was previously observed with a brief presentation of emotional and neutral distractor images using the same paradigm (Müller et al., 2011), for color images in the present study the differential effect between unpleasant and neutral pictures began around 410 ms and lasted until ~835 ms (ps < .05). For grayscale images, the first time point with significantly greater SSVEP amplitude reduction for unpleasant than for neutral scenes occurred slightly earlier, at ~360 ms, and the effect was shorter-lived, ending at ~472 ms (ps < .05). The respective time courses of these effects are depicted in Fig. 8. These affective SSVEP modulations for color and grayscale scenes cannot be attributed to differences in the respective baselines (i.e., during the presentation of the scrambled version of the picture, before its change to the intact version), since running t tests revealed no differences in the time window preceding the onset of an intact picture (–1,000 to 0 ms).

Gabor-filtered SSVEP time courses: Grand averages of SSVEP amplitudes for color (a) and grayscale (b) images. Note that the first long vertical gray line, at time point zero, indicates the onset of the picture (change from the scrambled version of an image to the intact one). The second vertical line shows the offset of the intact picture. The black horizontal lines on the x-axis show the onsets of significant (ps < .05) SSVEP differential amplitude effects between unpleasant and neutral background images. The shaded areas represent 95% confidence intervals when between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008)

To further test the impact of color information on the duration of the SSVEP affective distraction effects with briefly presented scenes, we calculated difference amplitudes between emotional and neutral stimuli (unpleasant minus neutral) and compared them directly between the color and grayscale pictures. Figure 9 depicts the difference curves indicating the effects of emotion for color and grayscale images. A negative amplitude value signifies a greater SSVEP amplitude with a neutral background image at a particular time point. The direct comparison of these SSVEP difference curves revealed a significant difference that emerged only at ~550 ms and lasted until ~930 ms (ps < .05). Thus, although the SSVEP amplitude reduction for unpleasant versus neutral contents began at approximately the same latency for color and grayscale images (Fig. 9), it lasted significantly longer for color images than for grayscale images (black horizontal line on the x-axis in Fig. 9).

Difference curves for SSVEP amplitudes and the SSVEP topography. (a) Grand average SSVEP amplitude differences for unpleasant minus neutral gray pictures (black line) and unpleasant minus neutral color pictures (color line). The vertical line at time point zero indicates the onset of the picture (change from the scrambled version of an image to the intact one). The black horizontal line on the x-axis marks the significant (ps < .05) time window during which differences between the SSVEP emotional effects for color relative to grayscale images were most pronounced (from ~550 to ~930 ms). The shaded areas represent 95% confidence intervals when between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008). (b) Topographical distribution of grand average 15-Hz SSVEP amplitudes across all participants and conditions for the entire analysis epoch. The bold electrodes indicate the cluster of eight posterior electrodes from which the individual best electrode was chosen for the statistical analysis (see the main text for more details)

Target detection rates

Figure 10 depicts the time courses of target detection rates (i.e., hit rates) before and after picture change for trials with a change to unpleasant and neutral scenes presented in color or grayscale. Note that hits were calculated time-locked to the target onsets. Therefore, peaks around time point zero and for all other windows correspond to responses that, on average, occurred about 600 ms later.

Behavioral data: Time courses of target detection rates in percentages for seven consecutive time windows (two before and five after the image change), averaged across all participants when gray unpleasant (black solid line), gray neutral (black dotted line), color neutral (color dotted line), or color unpleasant (color solid line) pictures were presented in the background. The change from a scrambled to a concrete image for 133 ms occurred at the end of the time bin −267/0. Error bars represent 95% confidence intervals and correspond to the within-subjects variability when between-subjects variability was removed using the Cousineau–Morey method (Morey, 2008)

We found significant main effects of time [F(3.72, 74.4) = 17.91, p < .001, ηG² = .22] and color [F(1, 20) = 24.79, p < .001, ηG² = .02], a significant Time × Emotion interaction [F(4.92, 98.4) = 4.34, p = .001, ηG² = .02], and, most importantly, a significant three-way Color × Emotion × Time interaction [F(4.74, 94.8) = 2.43, p = .042, ηG² = .01], which suggested that hit rates differed with respect to color and emotional image content in a specific time window. For the sake of brevity, we will focus here only on the most crucial comparisons and effects that occurred in the time window of interest, that is, after the change from a scrambled to an intact image (see Table 7 for more detailed statistics for all of the comparisons, as well as unstandardized effect sizes and their 95% CIs provided where possible), based on the timing of the behavioral costs consistently obtained in our previous studies utilizing the same distraction paradigm (Bekhtereva et al., 2015; Hindi Attar et al., 2010; Müller et al., 2011).

Detection rates for coherent-motion events that started shortly before or during the change to a concrete image (−267 to 0 ms and 1 to 267 ms) dropped considerably, regardless of emotional valence (main effect of color; see Table 7). Most importantly, in the later time window, between 268 and 534 ms after image onset, hit rates differed between the color and grayscale scenes as a function of emotional content (significant Emotion × Color interaction; see Table 7 and Fig. 10). In particular, hit rates for unpleasant relative to neutral scenes were significantly reduced from 268 to 534 ms with color images (mean difference = –17.9%, CI 95% = [–26.02 –9.7]; d = –1, CI 95% = [–1.66 –0.33], p = .001), but not with grayscale images (mean difference = –4.37%, CI 95% = [–12.56 3.83]; d = –0.24, CI 95% = [–0.87 0.38], p = .29). Thus, the pronounced drop in hit rates for unpleasant relative to neutral intact images occurred only with pictures shown in color and lasted for ~270 ms. This accuracy reduction for negative relative to neutral color scenes was substantial, amounting to an ~18% drop on average.

Additionally, between 535 and 800 ms following the image onset, target detection accuracy was slightly decreased (by ~7%) with unpleasant grayscale relative to unpleasant color images (a significant Emotion × Color interaction; mean difference = –7.54%, CI 95% = [–12.17 –2.9]; d = –0.74, CI 95% = [–1.39 –0.1], p = .017), but no significant differences were observed between grayscale and color neutral scenes (mean difference = 0%, CI 95% = [–5.09 5.09]; d = 0, CI 95% = [–0.62 0.62]) or in the other comparisons (ps > .46).

Discussion

The purpose of the present study was to determine the extent to which color influences attention allocation toward task-irrelevant, briefly presented emotional scenes during a concurrent visual detection task. In our distraction paradigm, the short presentation of the background images posed a perceptual challenge that allowed us to study the role of color in facilitating scene identification by analyzing the time course of the SSVEP amplitudes elicited by the flickering squares that formed the foreground task. Relative to grayscale background images, color images produced not only a longer distraction effect (i.e., a greater SSVEP amplitude reduction for unpleasant images) but also a larger amplitude difference. Importantly, all images were immediately masked, preventing further postpresentation processing, since the mask overrides information stored in sensory memory. However, the short presentation was still sufficient for the initial categorization of image content (Larson et al., 2005; Thorpe et al., 1996) that triggers a shift of attentional resources away from the foreground stimuli toward the background images. This shifting process was not interrupted by the mask (Müller et al., 2011). We were also interested in establishing at which stage of visual processing color modulates the effects of emotional valence. To this end, we analyzed the P1, N1–EPN complex, and LPP elicited by the background images. Differences between unpleasant and neutral images emerged, for both color and grayscale images, only in the N1–EPN range, and these emotional effects were significantly greater for the color versions of the respective images.

Our present results replicated a number of findings from our previous research. First, similar to our previous experiment that presented color versions of background IAPS images for 133 ms (Müller et al., 2011), we found that SSVEP amplitude reductions were significantly greater with affectively laden than with neutral scenes for both color and grayscale images. Critically, our present findings revealed that picture color influenced the duration of the attentional bias in visual processing triggered by the brief presentation of emotional scenes. Although the onset latencies of the SSVEP affective biases to unpleasant scenes were similar for color and grayscale images, colored unpleasant scenes withdrew more attentional resources than neutral scenes did for a significantly longer period (~400 ms) than their grayscale counterparts, which produced only a short distraction effect (~100 ms). The emotional effect per se did not differ between color and grayscale images until ~550 ms, when the emotional effect for color images was fully pronounced and the emotional effect for gray images had already ended (see Figs. 8 and 9).

Second, the affective SSVEP bias for color and grayscale scenes was preceded by the N1–EPN modulation (Bekhtereva et al., 2015; Schönwald & Müller, 2014). Similar to the SSVEP distraction effect, the N1–EPN effect was significantly greater for colored than for grayscale images. Complementary nonparametric analyses further revealed that these differences in the emotional effect between color and grayscale scenes were reliably observed between ~175 and ~285 ms after picture onset at parieto-occipital electrode sites, consistent with the well-known topographical distribution of networks engaged in the visual processing of emotional content. Thus, given the latency of the effects on SSVEP amplitudes, it seems highly plausible that the biasing of attentional resources in early visual areas in favor of the background images relies on re-entrant mechanisms from higher cortical areas (Keil et al., 2009).

In line with the SSVEP and N1–EPN results, the affective evaluation of the scenes was also influenced by color, corroborating the view that some physical attributes of emotional stimuli are closely associated with their arousal and valence characteristics (Olofsson, Nordin, Sequeira, & Polich, 2008). Participants systematically judged unpleasant scenes presented in color as slightly more negative and more arousing than the grayscale versions of the same images, suggesting that color information may have contributed to the perceived emotional intensity of the distractors. Although these effects for unpleasant scenes were small in terms of the mean absolute differences between the color and grayscale conditions, namely 0.16 for valence and 0.15 for arousal ratings (on a 1 to 9 scale), they were systematic across participants and statistically significant. However, participants were allowed to view the images for as long as they wanted during the SAM rating session, and it is possible that the rating differences would be more pronounced if the images were presented for only 133 ms, as in the EEG experiment. On the other hand, our findings and their unstandardized effect sizes are consistent with work showing slightly higher arousal (mean difference = 0.37) and more unpleasant valence (mean difference = 0.14) ratings for chromatic relative to achromatic aversive scenes across varied on-screen presentation durations without visual masks (6,000 vs. 24 ms; see Codispoti et al., 2011).

The behavioral data also yielded interesting findings. Overall, consistent with our previous studies, we found the typical reduction in target detection accuracy after picture onset for both grayscale and color images, concurrent with the drop in SSVEP amplitudes. This drop did not differ between unpleasant and neutral images. In our previous study (Hindi Attar et al., 2010), we referred to this initial drop in both the behavioral and SSVEP measures as a semantic effect. On this account, the onset of a concrete object (image) produces a strong capture of attention (Yantis & Jonides, 1984), that is, a shift of attentional resources toward the concrete background image.

Importantly, for color background images only, hit rates decreased significantly more for unpleasant than for neutral scenes between ~270 and 530 ms after image onset. This behavioral emotional distraction effect is thus in line with the SSVEP attentional bias observed for unpleasant relative to neutral color scenes. The behavioral effect was shorter (~260 ms) than the SSVEP attentional bias (~400 ms). However, the temporal resolution of our behavioral measure was not high enough to expect an exact correspondence between the neural and behavioral time courses. By contrast, the short time window of differential SSVEP effects for grayscale images was not mirrored in behavioral performance, at least not statistically. Additionally, hit rates were slightly lower for unpleasant grayscale than for unpleasant color scenes between ~530 and 800 ms after image onset. Although statistically significant, this difference was small, at ~7 percentage points in the proportion of correct responses per bin. The difference in hit rates between unpleasant and neutral color scenes was substantially larger (~18 percentage points).

In the time range of the LPP (500 ms and later), we found no color effect. Moreover, contrary to previous studies using brief image exposure (Codispoti et al., 2011) and short exposure with visual masking (Codispoti et al., 2009), we found no conclusive evidence for or against an influence of emotional content on the neural response in this time range. How can these findings be explained? First, Codispoti and colleagues used a passive viewing paradigm (Codispoti et al., 2011; Codispoti et al., 2009). In our design, by contrast, participants were explicitly instructed to ignore the emotional and neutral background images and to focus on the foreground task. Second, the LPP may signify sustained attention toward affective images, indicating their detailed, in-depth processing (see Hajcak, MacNamara, & Olvet, 2010, for an overview). In the present study, however, a background image was not only task-irrelevant and to be ignored, but also remained on the screen for only 133 ms before being masked. This short presentation may have triggered only initial extraction of affective content, with the immediate masking preventing elaborative processing. This may account for the absence of conclusive evidence for a reliable modulation of the LPP.

Our previous experiment using the same distraction paradigm displayed emotional images for much longer (~2–3 s; Schönwald & Müller, 2014). In that study, we observed a larger (i.e., more positive) LPP amplitude for unpleasant than for neutral IAPS distractors between 700 and 1,000 ms after the onset of a concrete image. This effect reflected more detailed processing and a sustained allocation of attention toward emotional background pictures (Hajcak, MacNamara, Foti, Ferri, & Keil, 2013; Sabatinelli et al., 2013; Schupp et al., 2000). It emerged even under conditions of competition, most likely because the image was still on the screen. In the present study, competition for attentional resources, as suggested by the biased competition model (Desimone & Duncan, 1995), together with the presentation of a mask, might therefore have prevented the emergence of an LPP. Moreover, the absence of a color effect on the LPP is consistent with previous research. Unlike early ERP components (Bradley et al., 2007), the LPP does not appear to be susceptible to bottom-up perceptual features such as color (Codispoti et al., 2011), picture size (De Cesarei & Codispoti, 2006), complexity (Bradley et al., 2007), or spatial frequencies (De Cesarei & Codispoti, 2011).

Conclusions

The present study offers the first electrophysiological evidence for a close interaction between color as a global visual feature and the preferential attentional processing of distracting emotional scenes during a visual detection task. First, the perceptual processing of color is tightly interwoven with the higher-order process of emotional content identification. Classical and cluster-based nonparametric ERP analyses revealed that, when the background images were shown in color rather than in grayscale, unpleasant relative to neutral scenes elicited a more pronounced negative deflection in the time range of the N1–EPN complex. This modulatory impact of color on emotional content extraction was most pronounced between 175 and 285 ms after picture onset at parieto-occipital sensors. This likely reflects the recruitment of neural networks associated with emotional content extraction. Second, color influenced the duration of the ongoing attentional bias in early visual cortex in favor of task-irrelevant emotional scenes. The reduction in SSVEP amplitudes for unpleasant relative to neutral scenes lasted ~400 ms longer when the scenes were presented in color than when they were presented in grayscale. Taken together, the present findings emphasize the modulatory role of color in a cascade of coordinated perceptual processes. Affective content encoding was enhanced for color relative to grayscale images. Following this, attentional resources were withdrawn from the foreground task for longer when unpleasant scenes were shown in color rather than in grayscale.

Notes

IAPS numbers. Neutral: 2037, 2102, 2190, 2191, 2221, 2235, 2240, 2272, 2372, 2393, 2396, 2435, 2441, 2442, 2480, 2485, 2512, 2560, 2570, 2749, 2840, 2850, 4100, 4542, 7140, 7500, 7546, 7550, 8010, 8090, 8130, 8162, 8232, 8250, 8330, 8371, 8620, 9210. Unpleasant: 1200, 1300, 2375.1, 2661, 2683, 2691, 2703, 2710, 2730, 2800, 2811, 3030, 3053, 3060, 3064, 3101, 3110, 3120, 3130, 3170, 3220, 3225, 3230, 3266, 3301, 3350, 3500, 3530, 6022, 6213, 6313, 6360, 6510, 6550, 6560, 8230, 9040, 9042, 9181, 9250, 9254, 9300, 9410, 9433, 9520. EmoPicS numbers. Neutral: 91, 100, 114, 124, 126, 135, 139.

References

Andersen, S. K., & Müller, M. M. (2010). Behavioral performance follows the time course of neural facilitation and suppression during cued shifts of feature-selective attention. Proceedings of the National Academy of Sciences, 107, 13878–13882.

Baguley, T. (2012). Calculating and graphing within-subject confidence intervals for ANOVA. Behavior Research Methods, 44, 158–175. doi:10.3758/s13428-011-0123-7

Bakeman, R. (2005). Recommended effect size statistics for repeated measures designs. Behavior Research Methods, 37, 379–384. doi:10.3758/BF03192707

Bekhtereva, V., & Craddock, M. (2017). Calculating and adjusting luminance and contrast for grey and RGB images. Unpublished article. doi:10.17605/OSF.IO/3D2M5

Bekhtereva, V., Craddock, M., & Müller, M. M. (2015). Attentional bias to affective faces and complex IAPS images in early visual cortex follows emotional cue extraction. NeuroImage, 112, 254–266.

Bekhtereva, V., & Müller, M. M. (2015). Affective facilitation of early visual cortex during rapid picture presentation at 6 and 15 Hz. Social Cognitive and Affective Neuroscience, 10, 1623–1633.

Bradley, M. M., Hamby, S., Löw, A., & Lang, P. J. (2007). Brain potentials in perception: Picture complexity and emotional arousal. Psychophysiology, 44, 364–373. doi:10.1111/j.1469-8986.2007.00520.x

Bradley, M. M., & Lang, P. J. (1994). Measuring emotion: The self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25, 49–59. doi:10.1016/0005-7916(94)90063-9

Bullmore, E. T., Suckling, J., Overmeyer, S., Rabe-Hesketh, S., Taylor, E., & Brammer, M. J. (1999). Global, voxel, and cluster tests, by theory and permutation, for a difference between two groups of structural MR images of the brain. IEEE Transactions on Medical Imaging, 18, 32–42.

Cano, M. E., Class, Q. A., & Polich, J. (2009). Affective valence, stimulus attributes, and P300: Color vs. black/white and normal vs. scrambled images. International Journal of Psychophysiology, 71, 17–24.

Carretié, L. (2014). Exogenous (automatic) attention to emotional stimuli: A review. Cognitive, Affective, & Behavioral Neuroscience, 14, 1228–1258. doi:10.3758/s13415-014-0270-2

Codispoti, M., De Cesarei, A., & Ferrari, V. (2011). The influence of color on emotional perception of natural scenes. Psychophysiology, 49, 11–16.

Codispoti, M., Mazzetti, M., & Bradley, M. M. (2009). Unmasking emotion: Exposure duration and emotional engagement. Psychophysiology, 46, 731–738.

Dan-Glauser, E. S., & Scherer, K. R. (2011). The Geneva Affective Picture Database (GAPED): A new 730-picture database focusing on valence and normative significance. Behavior Research Methods, 43, 468–477. doi:10.3758/s13428-011-0064-1

De Cesarei, A., & Codispoti, M. (2006). When does size not matter? Effects of stimulus size on affective modulation. Psychophysiology, 43, 207–215.

De Cesarei, A., & Codispoti, M. (2011). Scene identification and emotional response: Which spatial frequencies are critical? Journal of Neuroscience, 31, 17052–17057.

Delorme, A., & Makeig, S. (2004). EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134, 9–21. doi:10.1016/j.jneumeth.2003.10.009

Delplanque, S., N’diaye, K., Scherer, K., & Grandjean, D. (2007). Spatial frequencies or emotional effects? A systematic measure of spatial frequencies for IAPS pictures by a discrete wavelet analysis. Journal of Neuroscience Methods, 165, 144–150.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18, 193–222. doi:10.1146/annurev.ne.18.030195.001205

Di Russo, F., Pitzalis, S., Aprile, T., Spitoni, G., Patria, F., & Stella, A. (2007). Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Human Brain Mapping, 28, 323–334.

Dienes, Z. (2014). Using Bayes to get the most out of non-significant results. Frontiers in Psychology, 5, 781. doi:10.3389/fpsyg.2014.00781

Gabor, D. (1946). Theory of communication. Journal of the Institution of Electrical Engineers, Part III, 93, 429–441. doi:10.1049/ji-3-2.1946.0074

Gegenfurtner, K. R. (2003). Cortical mechanisms of colour vision. Nature Reviews Neuroscience, 4, 563–572.

Gegenfurtner, K. R., & Rieger, J. (2000). Sensory and cognitive contributions of color to the recognition of natural scenes. Current Biology, 10, 805–808.

Gelman, A., Carlin, J. B., Stern, H. S., & Rubin, D. B. (2014). Bayesian data analysis (Vol. 2). Boca Raton, FL: Chapman & Hall/CRC.

Goffaux, V., Jacques, C., Mouraux, A., Oliva, A., Schyns, P., & Rossion, B. (2005). Diagnostic colours contribute to the early stages of scene categorization: Behavioural and neurophysiological evidence. Visual Cognition, 12, 878–892.

Groppe, D. M., Urbach, T. P., & Kutas, M. (2011). Mass univariate analysis of event-related brain potentials/fields I: A critical tutorial review. Psychophysiology, 48, 1711–1725.

Hajcak, G., MacNamara, A., Foti, D., Ferri, J., & Keil, A. (2013). The dynamic allocation of attention to emotion: Simultaneous and independent evidence from the late positive potential and steady state visual evoked potentials. Biological Psychology, 92, 447–455.

Hajcak, G., MacNamara, A., & Olvet, D. M. (2010). Event-related potentials, emotion, and emotion regulation: An integrative review. Developmental Neuropsychology, 35, 129–155. doi:10.1080/87565640903526504

Hansen, T., & Gegenfurtner, K. R. (2009). Independence of color and luminance edges in natural scenes. Visual Neuroscience, 26, 35–49.

Hindi Attar, C., Andersen, S. K., & Müller, M. M. (2010). Time course of affective bias in visual attention: Convergent evidence from steady-state visual evoked potentials and behavioral data. NeuroImage, 53, 1326–1333.

Hindi Attar, C., & Müller, M. M. (2012). Selective attention to task-irrelevant emotional distractors is unaffected by the perceptual load associated with a foreground task. PLoS ONE, 7, e37186. doi:10.1371/journal.pone.0037186

Itti, L., & Koch, C. (2000). A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research, 40, 1489–1506. doi:10.1016/S0042-6989(99)00163-7

Junghöfer, M., Bradley, M. M., Elbert, T. R., & Lang, P. J. (2001). Fleeting images: A new look at early emotion discrimination. Psychophysiology, 38, 175–178.

Junghöfer, M., Elbert, T., Tucker, D. M., & Rockstroh, B. (2000). Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology, 37, 523–532.