Abstract

When making discrimination decisions between two stimulus categories, subjective confidence judgments are more positively affected by evidence in support of a decision than negatively affected by evidence against it. Recent theoretical proposals suggest that this “positive evidence bias” may be due to observers adopting a detection-like strategy when rating their confidence—one that has functional benefits for metacognition in real-world settings where detectability and discriminability often go hand in hand. However, it is unknown whether, or how, this evidence-weighting asymmetry affects detection decisions about the presence or absence of a stimulus. In four experiments, we first successfully replicate a positive evidence bias in discrimination confidence. We then show that detection decisions and confidence ratings paradoxically suffer from an opposite “negative evidence bias” to negatively weigh evidence even when it is optimal to assign it a positive weight. We show that the two effects are uncorrelated and discuss our findings in relation to models that account for a positive evidence bias as emerging from a confidence-specific heuristic, and alternative models where decision and confidence are generated by the same, Bayes-rational process.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

When considering two alternative hypotheses, the probability of the chosen hypothesis being correct is a function of the availability of evidence supporting not only the chosen hypothesis but also the unchosen one. For example, when deciding that there are more ants in the kitchen than in the living room, confidence should not only positively weigh the number of ants found in the kitchen (positive evidence) but also negatively weigh the number of ants found in the living room (negative evidence). Specifically, a decision should be based on the difference in the number of ants between the kitchen and the living room, but not on the total number of ants found in both rooms together (we refer to these quantities as relative evidence and sum evidence, respectively).

While sum evidence is irrelevant to discrimination decisions between two symmetrical hypotheses (e.g., kitchen or living room), it is highly informative with respect to detection decisions about the presence or absence of a signal. For example, when deciding that an ant colony is nesting in the house, we should also care about the total number of ants, irrespective whether they are found in the kitchen or living room (see Fig. 1).

Discrimination and detection in a two-dimensional signal detection theory (SDT) model. Left: In a two-dimensional SDT model, evidence e is sampled from one of two Gaussian distributions (here, centered at [0,1] and [1,0]). We define relative evidence as eS1 − eS2 and sum evidence as eS1 + eS2. Circles represent contours of two-dimensional distributions. Center and Right: Decision and confidence accuracy are maximized when based on a log-likelihood ratio for the two stimulus categories. Center: In discrimination, this yields optimal decision and confidence criteria that are based on relative evidence (distance from the main diagonal), irrespective of sum evidence. Right: In detection, this yields optimal decision and confidence that are based on a nonlinear interaction between relative and sum evidence. The third circle centred at (0,0) represents the two-dimensional distribution of percepts in the absence of stimuli. (Colour figure online)

A surprising finding is that, despite the irrelevance of sum evidence to the accuracy of discrimination decisions, people are systematically more confident in their perceptual discrimination decisions when sum evidence is high. For example, Zylberberg et al. (2012) had subjects judge which of two flickering stimuli was brighter on average. Subjects were more confident in their decisions when both stimuli were brighter, indicating an effect of sum evidence (here, overall luminance) on decision confidence. A positive effect of sum evidence on decision confidence is mathematically equivalent to a disproportional weighting of positive evidence over negative evidence, also known as a positive evidence bias (Koizumi et al., 2015; Peters et al., 2017; Rollwage et al., 2020; Samaha & Denison, 2020; Sepulveda et al., 2020; Zylberberg et al., 2012). The two are equivalent because positively weighing the sum of positive and negative evidence effectively weakens the negative contribution of negative evidence to decision confidence, while strengthening the contribution of positive evidence. Notably, this finding stands in contrast to what is expected from the exponential scaling of sensory noise relative to stimulus energy (Weber’s law). Instead, an effect of sum evidence on discrimination confidence may indicate a profound link between how confidence is formed in general, and the processes underpinning perceptual detection (Rausch et al., 2018; Samaha et al., 2020).

Different models identify the origin of this evidence-weighting asymmetry at different levels of the cognitive hierarchy, ranging from positing a metacognitive bias that ignores conflicting information (Maniscalco et al., 2016; metacognitive level, Peters et al., 2017), to asymmetries in the active sampling of evidence (attention allocation level; Sepulveda et al., 2020), and down to perceptual asymmetries between the representations of signal and noise (perception level; Miyoshi & Lau, 2020; Webb et al., 2021). These models vary in whether they postulate separate evidence accumulation processes for decisions and confidence judgments, and in whether they model confidence formation as following a suboptimal heuristic, or alternatively as being optimal with respect to available information (information which may be limited or corrupted by noise).

Here we focus on a subset of models which assume that subjects are rational decision makers equipped with veridical beliefs about the world, but who only have limited access to noisy evidence. Our models further assume that subjects’ confidence ratings are Bayesian estimates of the probability of being correct, given the exact same evidence that was used to make the decision. The models do not postulate any metacognitive biases, heuristics, or suboptimalities. We show that two of these models reproduce a positive evidence bias (that is, a positive effect of sum evidence) in discrimination confidence. The same models also make predictions for evidence weighting in detection judgments and confidence ratings. In four experiments, reverse correlation analysis revealed evidence weighting patterns that only partly agree with the predictions of our models. Most notably, our four models fail to account for a negative evidence bias we observed in detection decisions and confidence: a tendency to irrationally place a negative weighting on evidence, such as being more confident in the presence of a bright stimulus when one of the presented stimuli was unusually dark. In what follows we first describe the four models and the predictions they make, before turning to empirical findings from our four experiments.

Computational models

We model a setting in which agents are presented with a sequence of samples from two noisy sensory channels: E1 and E2. The agents’ task is to decide which of the two channels was the signal channel (discrimination), or whether any of the channels had signal in it at all (detection). When a signal is present in a channel, evidence E is sampled from a normal distribution \(\mathcal{N}\left(0.5,1\right)\), and when a signal is absent evidence is sampled from \(\mathcal{N}\left(0,1\right)\) (see Fig. 2, upper panel). In all four models agents only have access to a noisy version of these samples E′, corrupted by additional internal sensory noise. After each time step, they update their belief about the relative likelihood of the observed samples under the two possible world states (signal in Channel 1 versus 2, or signal presence versus absence), and given full knowledge of the true sample-generating process, including the properties of sensory noise. Each trial comprises 12 time steps. At the end of a trial, agents report the world state that maximizes the likelihood of the observed evidence, and rate their confidence as the objective probability that their decision was correct given the accumulated likelihood estimates. The four models vary in the properties of sensory noise, and in the selection of some channels for inspection by selection mechanisms.

Computational models. Upper panel: True world model. Stimuli span 12 time points, each comprising values from two sensory channels (here, presented as luminance values). In discrimination blocks, values in one channel are sampled from the noise distribution (red), and values in the other channel are sampled from the signal distribution (blue). In detection blocks, on half of the trials, all values are sampled from the noise distribution (red). Vanilla model: On each time point, participants perceive both channels, corrupted by sensory noise that is sampled from a normal distribution. They then update their beliefs accordingly. Firing rate model: Sensory samples are sampled from a Poisson distribution. Random attention model: Agents only attend one channel at a time. The attended channel is chosen at random per time point, with a strong bias which is consistent within a trial. Goal-directed attention model: Channels that are likely to include signal (as determined by previous samples) are more likely to be attended. (Colour figure online)

Vanilla model

In the basic, vanilla model, sensory noise is sampled from a normal distribution \(\mathcal{N}\left(0,2\right)\). This model corresponds to a standard equal-variance signal detection model, as illustrated in Fig. 1.

Firing rate model

The firing rate model is similar to the vanilla model, with the exception that perceived values are sampled from a Poisson, rather than a normal distribution. An important property of the Poisson distribution family, commonly used to model firing rates in neuronal populations, is that their mean and variance are lawfully coupled: the stronger the activation, the more variable it is. When applied to sensory neurons, this results in strong stimuli being subjectively perceived as noisier, consistent with the Weber–Fechner law (Fechner & Adler, 1860). In identifying the origin of the positive evidence bias at the perceptual level, this model shares a family resemblance with the unequal-variance model by Miyoshi and Lau (2020). An important feature of this model is that perceptual noise is conditioned not on stimulus class, but on the perceptual sample. This seems plausible, as the perceptual system has no access to stimulus class beyond the information that is available in perceptual samples.

Random attention model

Like the vanilla model, sensory noise is again sampled from \(\mathcal{N}\left(0,2\right)\). Unlike the vanilla model, however, here agents have access to one channel per time point only (they ‘attend’ to one channel at a time). At the start of each trial, agents randomly choose a preferred channel. Then, on each time point, they attend to the preferred channel with probability 0.95, and the nonpreferred channel with probability 0.05, and update their beliefs accordingly. We include this model because it is inherently asymmetric: on each trial, evidence from the preferred channel contributes more to both decision and confidence, simply because it is more visible to the agent.

Goal-directed attention model

This model is similar to the random attention model, except that here attention is biased towards channels that are more likely to include signal. Specifically, agents track the log likelihood ratio LLRr between signal presence in the left or in the right channels, with the probability of attending the right channel being dynamically set at each time point to S(LLRr) where S is a sigmoid function with a steep slope of 5 and LLRr is based on all previous sensory samples in the trial. A conceptually similar drift diffusion model was previously shown to produce a positive evidence bias in confidence ratings (Sepulveda et al., 2020).

Simulations

We simulated 20,000 discrimination and 20,000 detection trials per model (100 trials × 200 simulated agents per model). On each discrimination trial, the signal channel was designated as right or left with equal probability. On half of the detection trials both channels were noise channels. We then sampled, for each trial, 12 values from each channel. These 24 values were then passed on to the simulated agent, who returned a decision and a confidence rating. We then subjected the agents’ decisions and confidence ratings to a reverse correlation analysis. We now turn to describe this analysis, which will also be used to analyze the behaviour of human participants in Exps. 1–4.

Reverse correlation analysis

Following Zylberberg et al. (2012), we took a reverse correlation approach and asked which sources of evidence (positive, negative, relative, and sum evidence) contribute to agents’ decisions and confidence ratings. This analysis focuses on random fluctuations in signal intensity, and asks how they affect behaviour (here, decisions and confidence in these decisions). Accordingly, in analyzing data from our simulated agents, we contrasted external stimulus energy (E) and not internal stimulus energy (E’) leaving internal noise hidden.

Methodological note: Positive evidence bias in perceptual decisions

The positive evidence bias in decision confidence is often seen as particularly striking, given that positive and negative evidence are equally weighted in forming a decision (Peters et al., 2017; Zylberberg et al., 2012). For example, using reverse correlation, Zylberberg et al. (2012) showed that momentary fluctuations in the availability of perceptual evidence for and against a decision were equally predictive of the decision itself. Similarly, Peters et al. (2017) showed that in classifying rapidly presented images as ‘face’ or ‘house’, decisions are not solely guided by positive evidence (e.g., face-related brain activity when deciding ‘face’), but also by negative evidence (e.g., house-related brain activity when deciding ‘face’).

In both cases, it is useful to ask what it would look like for an agent to only consider positive evidence in making a decision. This soon becomes circular, because positive and negative evidence are defined with respect to the decision itself. For example, when analyzing the decisions of an agent that consistently ignores evidence for one alternative (similar to the random attention model above), both positive and negative evidence should still be predictive of decisions. The effect of positive evidence is then driven by those trials in which the agent selected the attended alternative, and the effect of negative evidence by those trials in which the agent selected the ignored alternative (because the evidence for the attended alternative was insufficient). Put differently, asymmetries of positive and negative evidence cannot affect the decision itself, because at the time of making the decision there is no positive and negative evidence to speak of—instead, there are two sources of evidence that may become positive or negative, depending on the decision that is selected. For this reason, in measuring evidence weighting in decision formation, we defined relative and sum evidence relative to the ground truth rather than the agents’ decision.

Discrimination decisions

From each trial (tr) we extracted random fluctuations in perceptual evidence in the signal \({E}_s^{tr}(t)\) and nonsignal \({E}_n^{tr}(t)\) sensory channels. To make sure we are measuring true random fluctuations and not systematic differences between noise and signal channels, we mean centered the signal channels across trials to 0, such that the average time course across all agents and trials was constant at 0. For simplicity, in extracting qualitative predictions from model simulations we averaged all time points in a trial to obtain trial-level estimates \({E}_s^{tr}\) and \({E}_n^{tr}\). Human data were analyzed in a similar fashion, but separately for each time point. Time-resolved decision and confidence kernels derived from model simulations are available in the Appendix.

‘Relative evidence’ was defined as the difference in noise terms between the signal and nonsignal channels (\({E}_{relative}^{tr}={E}_s^{tr}-{E}_n^{tr}\)). To obtain a decision kernel, we took the difference between the average relative evidence in trials where agents chose the signal and nonsignal channels \({E}_{relative}={\left\langle {E}_{relative}^{tr}\right\rangle}_{CORRECT}-{\left\langle {E}_{relative}^{tr}\right\rangle}_{INCORRECT}\). This was done separately for each simulated agent, and the resulting values were tested against zero in a t test. In all four models, relative evidence was higher on trials in which the agent correctly identified the signal channel (Fig. 3A, orange markers).

Simulated predictions for the reverse correlation analysis, derived from the four models. A Effects of relative (orange markers) and sum (black markers) evidence on discrimination decisions. B Effects of evidence for the chosen (green markers) and unchosen (purple markers) alternatives on discrimination confidence. C Effects of sum and relative evidence (defined with respect to participants’ decisions) on discrimination confidence. D, F, and H Effects of evidence in the signal channel (blue markers) and in the nonsignal channel (red markers) on detection decisions, confidence in yes responses, and confidence in no responses, respectively. E, G, and I Effects of relative evidence (orange markers) and sum evidence (black markers) on detection decisions, confidence in yes responses, and confidence in no responses, respectively. For scale, grid lines are plotted in common arbitrary units

‘Sum evidence’ was defined as the total sum of noise terms across both channels (\({E}_{sum}^{tr}={E}_s^{tr}+{E}_n^{tr}\)). Similarly, we used the difference between sum evidence in correct and incorrect trials \({E}_{sum}={\left\langle {E}_{sum}^{tr}\right\rangle}_{CORRECT}-{\left\langle {E}_{sum}^{tr}\right\rangle}_{INCORRECT}\) to probe effects of sum evidence on decision. Sum evidence had no effect on decision in any of the four models (Fig. 3A, black markers).

Discrimination confidence

In all four models, confidence was defined as the Bayesian probability of being correct, given an equal prior over the two world states (see Appendix). The median confidence rating was used to split evidence channels into four sets, according to decision (chosen or unchosen, depending on the agent’s decision) and confidence level (high or low). Confidence kernels for the chosen and unchosen channels were then extracted by subtracting the mean low-confidence from the mean high-confidence values for each channel:

Confidence kernels were also extracted for relative and sum evidence:

In all four models, high confidence ratings were associated with stronger evidence in the chosen channel (Fig. 3B, green markers) and weaker evidence in the unchosen channel (Fig. 3B, purple markers). As expected, this translated to an effect of relative evidence on decision confidence: agents were more confident when the evidence difference between the chosen and unchosen channels (Econf − relative) was high (Fig. 3C, orange markers).

Critically, only the firing rate and goal-directed attention models produced an effect of sum evidence (Econf − sum) on decision confidence, such that agents were more confident when overall evidence was high (Fig. 3C, black markers). As reviewed above, this effect is consistent with a positive evidence bias in discrimination confidence.

Detection decisions

For the reverse correlation analysis of detection decisions, we focused on trials in which a signal was present. This allowed us to disentangle the effects of evidence in the signal and nonsignal channels on detection decisions and confidence. We subtracted evidence in trials that resulted in a ‘no’ (target absent) decision from evidence in trials that resulted in a ‘yes’ (target present) decision, separately for the signal and nonsignal channels:

We similarly obtained detection kernels as a function of relative and sum evidence:

In all four models, ‘yes’ responses were associated with stronger evidence in the signal channel (Fig. 3D, blue markers). Importantly, the same was true for evidence in the nonsignal channel: Agents were more likely to respond ‘yes’ when evidence was stronger in this channel too (Fig. 3D, red markers). This is a key prediction of our Bayes-rational models: In detection, evidence in both channels should be weighted positively, as the agent’s goal is to detect any signal relative to noise. Together, these two positive effects translated to a strong effect of sum evidence on detection decisions: Agents were more likely to respond ‘yes’ when the total sum of evidence was high (Fig. 3E, black markers). A weaker effect of relative evidence on detection decisions was observed in all models except for the random attention model (Fig. 3E, orange markers).

Detection confidence

Similar to the discrimination task, the median confidence rating was used to split evidence channels into four sets, according to signal (signal channel or nonsignal channel) and confidence level (high or low). This was done separately for ‘yes’ and ‘no’ responses. Confidence kernels for the signal and nonsignal channels were then extracted by subtracting the mean low-confidence from the mean high-confidence evidence values for each channel and decision. For example, for ‘yes’ responses this meant computing:

In all four models, agents were more confident in their decisions about signal presence when evidence in the signal channel was stronger (Fig. 3F, blue markers). Mirroring the detection decision kernel means, confidence in signal presence was also positively affected by evidence for signal in the nonsignal channel (Fig. 3F, red markers). Together, these two positive effects produced an overall positive effect of sum evidence on confidence in signal presence (Fig. 3G, black markers). All four models predicted a weaker effect of relative evidence (Fig. 3G, orange markers).

Finally, we asked how random variability in sensory noise contributed to confidence in detection “no” responses. Here, a low number of misses made it difficult to reliably estimate confidence kernels for the firing rate model. In the remaining three models, agents were more confident in decisions about signal absence when evidence in both signal and nonsignal channels was weaker (Fig. 3H, blue and red markers, respectively). Together, these negative effects translated to a total negative effect of sum evidence on confidence in absence (Fig. 3I, black markers). None of the four models predicted a negative effect of relative evidence on confidence in absence, but the random attention model predicted a subtle positive effect (Fig. 3I, orange markers).

Equipped with qualitative predictions from four Bayes-rational models, we now turn to describing our empirical results. As we report below, these models failed to account for a key signature of human decision making: in both decisions and confidence ratings, subjects negatively weigh evidence in the nonsignal channel when inferring signal presence, as if they are making a discrimination judgment about the origin of the signal, rather inferring signal presence.

Experiment 1

Methods

Participants

The research complied with all relevant ethical regulations and was approved by the Research Ethics Committee of University College London (UCL; study ID number 1260/003). Ten participants were recruited via the UCL’s psychology subject pool, and gave their informed consent prior to their participation. Each participant performed four sessions of 600 trials each, in blocks of 100 trials. Sessions took place on different days and consisted of three discrimination blocks interleaved with three detection blocks.

Experimental procedure

The experimental procedure for Exp. 1 largely followed the procedure described in Zylberberg et al. (2012), Exp. 1. Participants observed a random-dot kinematogram for a fixed duration of 700 ms. In discrimination trials, the direction of motion was one of two opposite directions with equal probability, and participants reported the observed direction by pressing one of two arrow keys on a standard keyboard. In detection blocks, participants reported whether there was any coherent motion by pressing one of two arrow keys on a standard keyboard. In half of the detection trials, dots moved coherently to one of two opposite directions, and in the other half all dots moved randomly.

In both detection and discrimination blocks, participants indicated their confidence following each decision. Confidence was reported on a continuous scale ranging from chance to complete certainty. To avoid systematic response biases affecting confidence reports, the orientation (vertical or horizontal) and polarity (e.g., right or left) of the scale was set to agree with the Type 1 response. For example, following an up-arrow press, a vertical confidence bar was presented where ‘guess’ is at the center of the screen and ‘certain’ appeared at the upper end of the scale (see Fig. 4).

Task design for Experiment 1. In both discrimination and detection blocks, participants viewed 700 ms of a random dot motion array, after which they made a keyboard response to indicate their decision (motion direction in discrimination, signal absence or presence in detection), followed by a continuous confidence report using the mouse. Five participants viewed vertically moving dots and indicated their detection responses on a horizontal scale, and five participants viewed horizontally moving dots and indicated their detection responses on a vertical scale

To control for response requirements, for five subjects, the dots moved to the right or to the left, and for the other five subjects, they moved upward or downward. The first group made discrimination judgments with the right and left keys and detection judgments with the up and down keys, and this mapping was reversed for the second group. The number of coherently moving dots (‘motion coherence’) was adjusted to maintain performance at around 70% accuracy for detection and discrimination tasks independently. This was achieved by measuring mean accuracy after every 20 trials, and adjusting coherence by a step of 3% if accuracy fell below 60% or went above 80%. We opted for a block-wise staircasing procedure in order to keep motion energy relatively stable across trials, allowing participants to optimally place their detection criterion. The staircasing procedure for both tasks started at a coherence value of 1.0.

Stimuli for discrimination blocks were generated using the exact same procedure reported in Zylberberg et al. (2012).Footnote 1 Trials started with a presentation of a fixation cross for 1 second, immediately followed by stimulus presentation. The stimulus consisted of 152 white dots (diameter = 0.14∘), presented within a 6.5∘ circular aperture centered on the fixation point for 700 ms (42 frames, frame rate = 60 Hz). Dots were grouped in two sets of 76 dots each. Every other frame, the dots of one set were replaced with a new set of randomly positioned dots. For each coherence value of c′, a proportion of c′ of the dots from the second set moved coherently in one direction by a fixed distance of 0.33∘, while the remaining dots in the set moved in random directions by a fixed distance of 0.33∘. On the next update, the sets were switched, to prevent participants from tracing the position of specific dots. Frame-specific coherence values were sampled for each screen update from a normal distribution centred around the coherence value c with a standard deviation of 0.07, with the constraint that c′ must be a number between 0 and 1.

Stimuli for detection blocks were generated using a similar procedure, where on a random half of the trials coherence was set to 0%, without random sampling of coherence values for different frames.

To probe global metacognitive estimates of task performance, at the end of each experimental block (100 trials) participants estimated the number of correct responses they have made. Analysis of these global metacognitive estimates is provided in the Appendix.

Analysis

Experiment 1 was preregistered (preregistration document is available here: https://osf.io/z2s93/). Our full preregistered analysis is available in the Appendix.

Reverse correlation analysis

For the reverse correlation analysis, we followed a procedure similar to the one described in Zylberberg et al. (2012). For each of the four directions (right, left, up and down), we applied two spatiotemporal filters to the frames of the dot motion stimuli as described in previous studies (Adelson & Bergen, 1985; Zylberberg et al., 2012). The outputs of the two filters were squared and summed, resulting in a three-dimensional matrix with motion energy in a specific direction as a function of x, y, and time. We then took the mean of this matrix across the x and y dimensions to obtain an estimate of the overall temporal fluctuations in motion energy in the selected direction. Using this filter, we obtained estimates of temporal fluctuations in the mean and variance of motion energy for upward, downward, leftward and rightward motion within each trial. We refer to these temporal estimates as motion energy vectors, where each such vector consists of 42 entries, one per time point. Additionally, for every time point we extracted the variance along the x and y dimensions, but given the high correlation between our estimates of mean and variance, we focused our analysis on the mean motion energy.

In order to distill random fluctuations in motion energy from mean differences between stimulus categories, we subtracted the mean motion energy from trial-specific motion energy vectors. The mean motion energy vectors were extracted by averaging the motion energy vectors of all participants, separately for each motion coherence level and motion direction. We chose this approach instead of the linear regression approach used by Zylberberg et al. (2012) in order to be sensitive to the possibility of nonlinear effects of coherence on motion energy.

Results

Decision accuracy

Overall proportion correct was 0.74 in the discrimination and 0.72 in the detection task. Performance in discrimination was significantly higher than in detection MD = 0.02, 95% CI [0.00, 0.04], t(9) = 2.43, p = .038. This difference in task performance reflected a slower convergence of the staircasing procedure for the discrimination task during the first session. When discarding all data from the first session and analyzing only data from the last three sessions (1,800 trials per participant), task performance was equated between the two tasks at the group level MD = 0.00, 95% CI [−0.02, 0.02], t(9) = − 0.05, p = .962; BF10 = 0.31. In order to avoid confounding differences between discrimination and detection decision and confidence profiles with more general task performance effects, the first session was excluded from all subsequent analyses.

Overall properties of response time and confidence distributions

In detection, participants were more likely to respond ‘yes’ than ‘no’ (mean proportion of ‘yes’ responses: M = 0.59, 95% CI [0.53, 0.64], t(9) = 3.45, p = .007). We did not observe a consistent response bias for the discrimination data (mean proportion of ‘rightward’ or ‘upward’ responses: M = 0.52, 95% CI [0.47, 0.57], t(9) = 1.00, p = .344).

Replicating previous studies (Kellij et al., 2021; Mazor et al., 2020, 2021; Meuwese et al., 2014), we find the typical asymmetries between detection ‘yes’ and ‘no’ responses in response time, overall confidence, and the alignment between subjective confidence and objective accuracy (also termed metacognitive sensitivity, measured as the area under the response-conditional Type 2 ROC curve). ‘No’ responses were slower compared with ‘yes’ responses (median difference: 85.37 ms), and accompanied by lower levels of subjective confidence (mean difference of 0.08 on a 0–1 scale). Metacognitive sensitivity was higher for detection ‘yes’ compared with detection ‘no’ responses (mean difference in area under the curve units: 0.11). No difference in response time, confidence, or metacognitive sensitivity was found between the two discrimination responses. For a detailed statistical analysis of these behavioural asymmetries, see Appendix.

Reverse correlation

Discrimination

Using reverse correlation we quantified the effect of random fluctuations in motion energy on the probability of correctly identifying the true direction of motion, and on the temporal dynamics of decision formation. Importantly, this analysis approach treats leftward and rightward motion energy as two independently represented quantities, assuming that the decision-making module has access to individual spatiotemporal filters, and not only to the difference between them (Adelson & Bergen, 1985; Levinson & Sekuler, 1975; Van Santen & Sperling, 1984). We return to this point in describing the rationale for Exp. 2.

Following Zylberberg et al. (2012), we focused our analysis on the first 300 ms of the trial. Participants’ discrimination responses were significantly affected by the relative evidence for the true direction of motion compared with the opposite direction, (Erelative; t(9) = 8.48, p < .001), whereas sum evidence (Esum; the total amount of energy in both directions) had no effect on discrimination accuracy, (t(9) = − 0.70, p = .502 see Fig. 5A). This is consistent with a symmetric weighting of evidence in decision formation, and with the predictions of all four models.

Reverse correlation, Exp. 1. A Effects of relative (orange curve) and sum (black curve) evidence on discrimination decisions. Note that relative evidence here is defined with respect to the true direction of motion, not participants’ decisions. B Effects of evidence for the chosen (green curve) and unchosen (purple curve) alternative on discrimination confidence. C Effects of sum and relative evidence (defined with respect to participants’ decisions) on discrimination confidence. D, F and H Effects of evidence for the true direction of motion (blue curve) and for the opposite direction of motion (red curve) on detection decisions, confidence in yes responses, and confidence in no responses, respectively. E, G, and I Effects of relative evidence (orange curve) and sum evidence (black curve) on detection decisions, confidence in yes responses, and confidence in no responses, respectively. The first 300 ms of the trial are marked in black. All nine panels are presented at the same scale, in arbitrary motion-energy units. Stars represent significance in a two-sided t test for the first 300 ms of the trial: *p < .05, **p < .01, ***p < .001. (Colour figure online)

We next turned to the contribution of motion energy to subjective confidence ratings. The median confidence rating in each experimental session was used to split all motion energy vectors into four groups, according to decision (chosen or unchosen directions) and confidence level (high or low). Confidence kernels for the chosen and unchosen directions were then extracted by subtracting the mean low-confidence from the mean high-confidence vectors for both the chosen and unchosen directions. Motion energy in the chosen direction (positive evidence) significantly increased confidence (Econf − chosen, t(9) = 4.99, p = .001), but we found no significant decrease in confidence with stronger motion energy in the opposite direction, (Econf − unchosen, t(9) = − 0.25, p = .807; see Fig. 5B). Equivalently, both relative and sum evidence positively contributed to decision confidence (Econf − relative, t(9) = 2.76, p = .022; Econf − sum: t(9) = 2.92, p = .017; see Fig. 5C). This is a replication of the positive evidence bias observed in Zylberberg et al. (2012), and consistent with the predictions of the firing rate and goal-directed attention models.

Detection

Participants were significantly more likely to respond ‘yes’ when fluctuations in motion energy during the first 300 ms of the trial strengthened motion energy in the true direction of motion (Edetection − s, t(9) = 6.06, p < .001; see Fig. 5D, blue curve). Critically, and in contrast to the predictions of all four Bayes-rational models, motion energy in the opposite direction had a negative, rather than a positive effect on the probability of responding ‘yes’ (Edetection − n, t(9) = − 2.89, p = .018; see Fig. 5D, red curve). In other words, stronger motion energy in the opposite direction made it less likely that people would say a signal was present.

Confidence ratings were higher in detection ‘yes’ responses when random noise strengthened the motion energy in the true direction of motion (Econf − yes − s; t(9) = 4.59, p = .001; see Fig. 5F, blue curve). Again, in contrast to our model predictions, motion energy in the opposite direction had a negative, rather than a positive effect on detection confidence. That is, subjects were more confident in the presence of coherent motion when there was an unusually low level of motion energy in one of the two directions (Econf − yes − n; t(9) = − 2.95, p = .016; see Fig. 5F, red curve).

Furthermore, unlike in the discrimination task, we found no effect of sum evidence on confidence ratings in ‘yes’ responses (Econf − yes − sum; t(9) = 0.14, p = .892; see Fig. 5G, black curve). To reiterate, while detection decisions were mostly sensitive to fluctuations in motion energy toward the true direction of motion, confidence judgments in detection ‘yes’ responses were equally sensitive (with opposite signs) to fluctuations in the true and opposite directions of motion. However, and to anticipate the results of Exp. 3, presented below, we note that this symmetric weighting of evidence in detection confidence was not replicated in a subsequent experiment designed to directly test this surprising result.

Finally, confidence in ‘no’ responses was independent of relative, sum, positive, and negative evidence (all ps > 0.1; see Fig. 6H).

Task design for Experiment 2. In both tasks, participants viewed two flickering patches for 480 ms, after which they made a keyboard response to indicate which of the patches was brighter (discrimination) or whether any of the patches was brighter than the background (detection). (Colour figure online)

Experiment 2

In Experiment 1, we replicated previous observations of a positive evidence bias in discrimination confidence, such that confidence scaled with the total sum of evidence for both hypotheses. In contrast, in detection an effect of sum evidence was apparent for the decision, but not for the confidence kernels. Furthermore, confidence in detection ‘no’ responses was unaffected by fluctuations in motion energy.

Importantly, our analysis treated energy in the leftward and rightward directions as two independently represented quantities. Although models of motion perception commonly include such direction-selective sensory channels (Adelson & Bergen, 1985; Levinson & Sekuler, 1975; Van Santen & Sperling, 1984), it is unclear to what degree left and right motion energy channels are available to decision-making modules, as opposed to a mere subtraction between the two. In Exp. 2, the two sensory channels corresponded to two separate stimuli, making it much more likely that subjects represented them in an independent manner. Using these stimuli, we tested the generalizability of these findings to a different type of stimuli (flickering patches) and mode of data collection (a ~10-minute online experiment). Our preregistered objectives (documented here: https://osf.io/d3vkm/) were (1) to replicate a positive evidence bias in discrimination confidence, (2) to replicate the absence of a positive evidence bias in detection confidence, and (3) to replicate the absence of an effect of evidence on confidence in ‘no’ judgments.

Methods

Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). 147 participants were recruited via Prolific (prolific.co) and gave their informed consent prior to their participation. They were selected based on their acceptance rate (>95%) and for being native English speakers. Following our preregistration, we aimed to collect data until we had reached 100 included participants based on our prespecified inclusion criteria (see https://osf.io/d3vkm/). Our final data set includes observations from 102 included participants. The entire experiment took around 10 minutes to complete. Participants were paid £1.25 for their participation, equivalent to an hourly wage of £7.50.

Experimental paradigm

A static demo of Exp. 2 is available on the project’s GitHub. The experiment was programmed using the jsPsych and P5 JavaScript packages (De Leeuw, 2015; McCarthy, 2015), and was hosted on a JATOS server (Lange et al., 2015). It consisted of two tasks (Detection and Discrimination) presented in separate blocks. A total of 56 trials of each task was delivered in two blocks of 28 trials each. The order of experimental blocks was interleaved, starting with discrimination.

The first discrimination block started after an instruction section, which included instructions about the stimuli and confidence scale, four practice trials and four confidence practice trials. Further instructions were presented before the second block. Instruction sections were followed by multiple-choice comprehension questions, to monitor participants’ understanding of the main task and confidence reporting interface. To encourage concentration, in addition to trial-wise feedback we also provided participants with feedback about their overall performance and mean confidence at the end of the second and fourth blocks.

Importantly, unlike in the lab-based experiment, there was no calibration of difficulty for the two tasks. The rationale for this is that in Exp. 1, perceptual thresholds for motion discrimination were highly consistent across participants, and staircasing took a long time to converge. Furthermore, in Exp. 1, we aimed to control for task difficulty, but this introduced differences between the stimulus intensities used for detection and discrimination. To complement our findings, here we aimed to match stimulus intensity between the two tasks and accepted that task performance might vary between detection and discrimination as a result.

Trial structure

In discrimination blocks, trial structure closely followed Exp. 2 from Zylberberg et al. (2012), with a few adaptations. Following a fixation cross (500 ms), two sets of four adjacent vertical gray bars were presented as a rapid serial visual presentation (RSVP; 12 frames, presented at 25 Hz), displayed to the left and right of the fixation cross (see Fig. 7). On each frame, the luminance of each bar was randomly sampled from a Gaussian distribution with a standard deviation of 10/255 units in the standard RGB 0-255 coordinate system. For one set of bars, this Gaussian distribution was centered at the same luminance value as the background (128/255). For the other set, it was centered at 133/255, making it brighter on average. Participants then reported which of the two sets was brighter on average using the ‘D’ and ‘F’ keys on the keyboard. After their response, they rated their confidence on a continuous scale, by controlling the size of a coloured circle with their mouse. High confidence was mapped to a big, blue circle, and low confidence to a small, red circle. To discourage hasty confidence ratings, the confidence rating scale stayed on the screen for at least 2,000 ms. Feedback about decision accuracy was delivered after the confidence rating phase.

Reverse correlation, Exp. 2. Same conventions as in Fig. 5. (Colour figure online)

Detection blocks were similar to discrimination blocks, with the exception that decisions were made about whether the average luminance of either of the two sets was brighter than the gray background, or not. In ‘different’ trials, the luminance of the four bars in one of the sets was sampled from a Gaussian distribution with mean 133/255, and the luminance of the other set from a Gaussian distribution with mean 128/255. In ‘same’ trials, the luminance of both sets was sampled from a distribution centered at 128/255. Participants were told that only one of the two patches could be bright, but never both. Decisions in Detection trials were reported using the ‘Y’ and ‘N’ keys. Confidence ratings and feedback were as in the discrimination task.

Results

Decision accuracy

Overall proportion correct was 0.85 in the discrimination and 0.67 in the detection task. Performance in discrimination was significantly higher than in detection (MD = 0.18), 95% CI [0.16, 0.20], t(101) = 18.01, p < .001. Unlike in Exp. 1, where we aimed to control for task difficulty, here we decided to match stimulus intensity between the two tasks, so a difference between detection and discrimination performance was expected (Wickens, 2002).

Overall properties of decision and confidence distributions

Similar to Exp. 1, participants were more likely to respond ‘yes’ than ‘no’ in the detection task (mean proportion of ‘yes’ responses: 0.54). We did not observe a consistent response bias in discrimination (mean proportion of ‘right’ responses: 0.50). The two detection responses showed the typical asymmetries, with ‘yes’ responses being faster (median difference of 77 ms) and accompanied by higher levels of confidence (mean difference of 0.10 on a 0–1 scale). Unlike in Exp. 1, here we found no evidence for a difference in metacognitive sensitivity between ‘yes’ and ‘no’ responses (mean difference of 0.02 in AUC units). No asymmetries were observed between the two discrimination responses. For a detailed statistical analysis, see Appendix.

Reverse correlation

Stimuli in Exp. 2 consisted of two flickering patches, each comprising four gray bars presented for 12 frames. Together, this summed to 96 random luminance values per trial, which we subjected to reverse correlation analysis, following the analysis procedure of Exp. 2 in Zylberberg et al. (2012).

Discrimination decisions

First, we asked whether random fluctuations in luminance influenced discrimination responses. Similar to the results obtained by Zylberberg et. al., discrimination decisions were sensitive to fluctuations in relative evidence (the difference in mean luminance between the left and right stimulus) during the first 300 ms of the trial (Erelative; t(100) = 14.29, p < .001; see Fig. 7A, orange curve). Furthermore, participants’ decisions were surprisingly more sensitive to evidence in the nontarget stimulus within the same time window, resulting in a negative effect of sum evidence (Esum; t(100) = − 2.29, p = .024; see Fig. 7A, black curve). Importantly, this negative effect of sum evidence on decision accuracy was not replicated in Exps. 3 and 4, and we do not interpret it further.

Discrimination confidence

Similar to Exp. 1, we observed a significant effect of positive (Econf − chosen; t(100) = 6.76, p < .001), and negative (Econf − unchosen; t(100) = − 4.28, p < .001), evidence on decision confidence within the first 300 ms of the stimulus (see Fig. 7B). When plotting the sum-evidence kernel, we observed an initial negative dip followed by a sustained positive effect of sum evidence on decision confidence, consistent with a positive evidence bias (Econf − sum; t(100) = 2.56, p = .012; see Fig. 7C, black curve).

Detection

Participants’ detection decisions were sensitive to fluctuations in the luminance of the target stimulus, such that ‘yes’ responses were associated with a brighter target stimulus (Edetection − s; t(101) = 9.39, p < .001; see Fig. 7D, blue curve). Similar to Exp. 1, and in contrast to the behaviour of Bayes-rational simulated agents, the luminance of the nontarget stimulus had a negative effect on the probability of responding ‘yes’ (Edetection − n; t(101) = − 4.64, p < .001; see Fig. 7D, red curve).

Confidence in detection ‘yes’ responses was similarly sensitive to fluctuations in the luminance of the target stimulus (Econf − yes − s; t(99) = 4.27, p < .001; see Fig. 7F, blue curve). Again, brighter nontarget stimuli made participants less, rather than more, confident in the presence of a signal (Econf − yes − n; t(99) = − 4.98, p < .001; see Fig. 7F, red curve). As in Exp. 1, here, too, sum evidence (overall luminance) had no significant effect on confidence in detection ‘yes’ responses (Econf − yes − sum; t(99) = − 0.28, p = .784; see Fig. 7G, black curve). However, this surprising result was not replicated in Experiments 3 and 4.

Finally, unlike in Exp. 1, confidence in detection ‘no’ responses was sensitive to random fluctuations in the luminance of the target, such that participants were more confident in the absence of a signal when the target stimulus was darker (Econf − no − s t(96) = − 2.28, p = .025; see Fig. 7H). The overall luminance of the display also had a negative effect on confidence in detection ‘no’ responses (Econf − no − sum; t(96) = − 2.04, p = .044; see Fig. 7I). The luminance of the nontarget stimulus (Econf − no − n; t(96) = − 0.71, p = .482), and the difference in luminance between the two stimuli (Econf − no − relative; t(96) = − 1.04, p = .301), had no significant effects on confidence in detection ‘no’ responses.

Experiment 3

In Exp. 3, we aimed to replicate our findings using a direct experimental manipulation in addition to employing reverse-correlation analysis. Our preregistered objectives (see our preregistration document: https://osf.io/hm3fn/) were (1) to replicate a positive evidence bias in discrimination confidence, (2) to replicate a positive evidence bias in detection decisions, and (3) to replicate the absence of a positive evidence bias in detection confidence.

Methods

Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). A total of 173 participants were recruited via Prolific (prolific.co) and gave their informed consent prior to their participation. They were selected based on their acceptance rate (>95%) and for being native English speakers. Following our preregistration, we aimed to collect data until we had reached 100 included participants, based on our prespecified inclusion criteria (see https://osf.io/hm3fn/). Our final data set includes observations from 100 included participants. The entire experiment took around 20 minutes to complete. Participants were paid £2.50 for their participation, equivalent to an hourly wage of £7.50.

Experimental paradigm

A static demo of Exp. 3 is available on the project’s GitHub. Experiment 3 was identical to Experiment 2 with two changes. First, on half of the trials (high-luminance trials) the luminance of both sets of bars was increased by 2/255 for the entire duration of the display, thereby increasing sum evidence without affecting relative evidence. Second, in order to increase our statistical power for detecting response-specific effects in detection, participants performed four detection blocks and two discrimination blocks. Each block comprised 56 trials. The order of blocks was [detection, discrimination, detection, discrimination, detection, detection] for all participants.

Results

Decision accuracy

Overall proportion correct was 0.87 in the discrimination and 0.67 in the detection task. Performance in discrimination was significantly higher than in detection (MD = 0.21), 95% CI [0.19, 0.22], t(99) = 30.35, p < .001, as expected.

Overall properties of decision and confidence distributions

Similar to Exps. 1 and 2, participants were more likely to respond ‘yes’ than ‘no’ in the detection task (mean proportion of ‘yes’ responses: 0.53). We did not observe a consistent response bias in the discrimination task (mean proportion of ‘right’ responses: 0.50). The two detection responses showed the typical asymmetries, with ‘yes’ responses being faster (median difference of 69 ms) and accompanied by higher levels of confidence (mean difference of 0.09 on a 0–1 scale). As in Exp. 1, metacognitive sensitivity was higher for ‘yes’ than for ‘no’ responses (mean difference of 0.03 in AUC units). No asymmetries were observed between the two discrimination responses. For a detailed statistical analysis, see Appendix.

Reverse correlation

We first focused on reverse correlation analyses, pooling data from both high-luminance and standard trials (after mean-centering luminance in each), in order to replicate the findings of Exps. 1 and 2. We note the results are qualitatively similar when including standard trials only, with the exception of confidence in detection ‘no’ responses (see Appendix).

Discrimination decisions

Discrimination decisions were sensitive to relative evidence during the first 300 ms of the trial (Erelative; t(99) = 19.17, p < .001; see Fig. 8A) with no effect of sum evidence (Esum;t(99) = 0.23, p = .817).

Reverse correlation, Exp. 3. Same conventions as in Fig. 5. (Colour figure online)

Discrimination confidence

Decision confidence was sensitive to positive (Econf − s; t(99) = 6.27, p < .001) and negative (Econf − n; t(99) = − 5.29, p < .001) evidence within the first 300 ms of the stimulus (see Fig. 8B). Reverse correlation revealed no effect of random fluctuations in sum evidence on decision confidence (Econf − sum; t(99) = 0.75, p = .455), but an effect of sum evidence was found when directly contrasting high- and low-luminance trials, as we show in the “Evidence-weighting” section below.

Detection

Participants’ detection decisions were sensitive to fluctuations in the luminance of the target stimulus, such that ‘yes’ responses were associated with brighter target stimuli (Edetection − s; t(99) = 13.01, p < .001; see Fig. 8D, blue curve). Replicating the surprising results of Exps. 1 and 2, the luminance of the nontarget stimulus had a negative effect on the probability of responding ‘yes’ in the detection task (Edetection − n; t(99) = − 8.91, p < .001; see Fig. 8D, red curve). Together, detection decisions were sensitive to relative evidence (Edetection − relative, or the difference in luminance between the target and nontarget stimuli; t(99) = 15.95, p < .001), and to sum evidence (Edetection − relative, or the overall luminance of the display; t(99) = 4.29, p < .001; see Fig. 8E, orange and black curves, respectively).

Confidence in detection ‘yes’ responses was similarly positively correlated with the luminance of the target stimulus (Econf − yes − s; t(99) = 8.37, p < .001), and negatively correlated with the luminance of the nontarget stimulus (Econf − yes − s; t(99) = − 3.58, p = .001; see Fig. 8F). This is again in contrast to what is expected from a Bayes-rational agent: the probability of being correct is positively correlated with evidence intensity in both signal and nonsignal channels. Recall that a surprising finding in Exps. 1 and 2 was that sum evidence (motion energy or luminance) had no effect on participants’ confidence in their judgments of stimulus presence. In contrast, in Exp. 3, sum luminance had a significant positive effect on decision confidence when reporting target presence (Econf − yes − sum; t(99) = 2.83, p = .006; see Fig. 8G, black curve).

Finally, and in line with what we observed in Exp. 2, confidence in detection ‘no’ responses was sensitive to random fluctuations in the luminance of the target, such that participants were more confident in the absence of a signal when the target stimulus was darker (Econf − no − s; t(98) = − 2.72, p = .008; see Fig. 8H). Relative evidence also had a marginally significant negative effect on confidence in decisions about absence (Econf − no − relative; t(98) = − 1.98, p = .050). The luminance of the nontarget stimulus and the overall luminance had no significant effects on confidence in detection ‘no’ responses (ps > 0.3).

Evidence-weighting

In Experiments 1 and 2, confidence in signal presence was invariant to sum evidence (overall motion energy in Exp.1, sum luminance in Exp. 2). This was surprising for two reasons. First, in both cases sum evidence did have a significant effect on detection decisions. Second, incorporating information about sum evidence into confidence in the presence of a stimulus is rational: A target stimulus is more likely to be present (in either location) when both target and nontarget stimuli are brighter compared with when both are dark. As we document above, however, the counterintuitive findings of Exps. 1 and 2 only partly replicated in Exp. 3: subjects still negatively weighted the luminance of the nontarget stimulus (despite this being irrational), but this negative effect was weaker than the positive effect of the luminance of the target stimulus, resulting in an overall positive effect of sum evidence on detection confidence.

To shed further light on this issue, in Exp. 3, half of the trials were manipulated to include slightly brighter stimuli, thereby increasing statistical power for tests of the effects of sum luminance on discrimination and detection decisions and confidence.

First, we established that participants were more likely to respond ‘yes’ on higher compared with lower luminance trials (M = 0.09), 95% CI [0.07, 0.11], t(99) = 8.73, p < .001, consistent with overall luminance providing a valid cue for signal presence.

We next turned to the effects of our luminance manipulation on confidence. For discrimination judgments, participants were also more confident in higher compared with lower luminance trials (M = 0.02), 95% CI [0.01, 0.03], t(99) = 3.20, p = .002 (see Fig. 9A), replicating a positive evidence bias in discrimination confidence. For detection judgments, in line with the reverse correlation analysis of Exp. 3 (and in contrast to the findings of Experiments 1 and 2), participants were more confident in their ‘yes’ responses when overall luminance was higher (M = 0.02), 95% CI [0.01, 0.03], t(99) = 3.00, p = .003. Our preregistered Bayesian analysis provided strong evidence for the alternative hypothesis that detection confidence is affected by this manipulation (BF10 = 10.57). Furthermore, this increase in confidence in presence as a function of the brightness manipulation was not significantly different from that observed for discrimination confidence (M = − 0.01), 95% CI [−0.03, 0.01], t(99) = − 0.57, p = .573. Finally, and in line with Exp. 2, overall luminance had a significant negative effect on confidence in ‘no’ responses (M = − 0.02), 95% CI [−0.03, −0.01], t(99) = − 3.09, p = .003, indicating that participants were more confident in the absence of a target when overall luminance was lower.

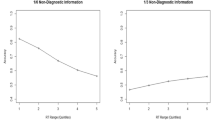

Difference in confidence between standard and higher evidence (luminance and hue) trials for the three response categories (detection ‘yes’ and ‘no’ responses, and discrimination responses) in Exps. 3 and 4. Box edges and central lines represent the 25, 50, and 75 quantiles. Whiskers cover data points within four interquartile ranges around the median. Stars represent significance in a two-sided t test: **p < .01, ***p < .001. (Colour figure online)

Experiment 4

A limitation of Exps. 2 and 3 is that apparent asymmetries in the weighting of positive and negative evidence may result from a nonlinear mapping between luminance in RGB space and screen brightness.Footnote 2 For example, a dark bar that is −2 RGB units from the mean does not necessarily cancel out a bright bar that is +2 RGB units from the mean (unless working with a gamma-corrected monitor), making positive evidence objectively more salient than negative evidence.

To address this concern, we include an additional experiment where evidence is sampled from a perceptually uniform space. Specifically, Exp. 4 was similar to Exp. 3 with the exception that flickering stimuli varied in their hue rather than luminance, and where hue values were sampled from a Gaussian distribution in the CIE L*a*b* colour space. Moreover, the roles of ‘target’ and ‘nontarget’ hues were counterbalanced between participants, such that any built-in asymmetries in the perception of positive and negative evidence should cancel out at the group level.

Methods

Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). A total of 117 participants were recruited via Prolific (prolific.co), and gave their informed consent prior to their participation. They were selected based on their acceptance rate (>95%) and for being native English speakers. Following our preregistration, we aimed to collect data until we had reached 100 included participants, based on our prespecified inclusion criteria (see https://osf.io/9zbpc). Our final data set includes observations from 100 included participants. The entire experiment took around 20 minutes to complete. Participants were paid £2.50 for their participation, equivalent to an hourly wage of £7.50.

Experimental paradigm

A static demo of Exp. 4 is available on the project’s GitHub. Experiment 4 was identical to Experiment 3, with two changes. First, flickering bars varied in hue, randomly sampled from a Gaussian distribution in the CIE L*a*b* colour space, centred at L = 54, a = 21.5 and b = 11.5, with a radius of 49 (Schurgin et al., 2020). For half of the participants, nontarget hues were sampled around an orientation of 1.85 radians with a standard deviation of 0.35, and target hues were sampled around an orientation of 2.1 with a standard deviation of 0.35. For the first group, target patches were little more orange than nontarget patches, and for the second group target patches were little more green than nontarget patches. To make sure nontarget patches were perceived as the absence of signal relative to the background, the RSVP display was overlaid on top of a rectangle with the mean colour of a nontarget patch. Second, in order to avoid interference with the colour-judgment task, the confidence circle was presented in gray. Third, subjects were allowed to repeat the multiple-choice questions up to three times. Finally, in addition to trial-wise feedback, block-wise feedback about overall performance and mean confidence in correct and incorrect responses was displayed at the end of each block.

Results

Decision accuracy

Overall proportion correct was 0.92 in the discrimination and 0.74 in the detection task. Performance in discrimination was significantly higher than in detection (ΔM = 0.18), 95% CI [0.16, 0.20], t(199.32) = 16.52, p < .001, as expected.

Overall properties of decision and confidence distributions

Similar to Exps. 1–3, participants were more likely to respond ‘yes’ than ‘no’ in the detection task (mean proportion of ‘yes’ responses: 0.52). A slight response bias in discrimination was not significant (mean proportion of ‘right’ responses: 0.51). The two detection responses showed the typical asymmetries, with ‘yes’ responses being faster (median difference of 48 ms) and accompanied by higher levels of confidence (mean difference of 0.07 on a 0–1 scale). A mean difference of 0.03 in metacognitive sensitivity (AUC units) was not significant. For a detailed statistical analysis, see Appendix.

Reverse correlation

Discrimination decisions

Discrimination decisions were sensitive to relative evidence during the first 300 ms of the trial (Erelative; t(102) = 10.23, p < .001; see Fig. 10A). Sum evidence had a positive effect on discrimination decisions, such that subjects were more likely to correctly select the target stimulus when the overall hue of both stimuli together was closer to the target hue (Esum; t(102) = 2.31, p = .023).

Reverse correlation, Exp. 4. Same conventions as in Fig. 5. (Colour figure online)

Discrimination confidence

Decision confidence was sensitive to positive (Econf − chosen; t(108) = 6.00, p < .001) and negative (Econf − unchosen ;t(108) = − 3.94, p < .001) evidence within the first 300 ms (see Fig. 10B). The effect of sum evidence on decision confidence was only marginally significant (Econf − sum; t(108) = 1.92, p = .058). An effect of sum evidence was found when directly contrasting high- and low-evidence trials, as we show in the “Evidence-weighting” section below.

Detection

Participants’ detection decisions were sensitive to fluctuations in the hue of the target stimulus (Edetection − s; t(101) = 10.65, p < .001; see Fig. 10D, blue curve). Fluctuations in the hue of the nontarget stimulus had the opposite effect on detection decisions, replicating again our main finding from Exps. 1–3 (Edetection − n; t(101) = − 4.22, p < .001; see Fig. 10D, red curve).

Confidence in detection ‘yes’ responses was positively sensitive to fluctuations in the hue of the target (Econf − yes − s; t(99) = 6.08, p < .001), and negatively sensitive to fluctuations in the hue of the nontarget stimulus (Econf − yes − n; t(99) = − 2.63, p = .010; see Fig. 13F). Similar to Exp. 3, here, too, sum evidence (deviation from the background hue toward the target hue) had a significant positive effect on decision confidence when reporting target presence (Econf − yes − sum; t(99) = 2.64, p = .010; see Fig. 10G).

Finally, confidence in detection ‘no’ responses was sensitive to random fluctuations in the hue of the target, such that participants were more confident in the absence of a signal when the target stimulus was closer in hue to the background (Econf − no − s; t(97) = − 2.23, p = .028; see Fig. 10H). Sum evidence (the overall hue of the display) had a marginal negative effect on confidence in absence (t(97) = − 1.95, p = .054). Relative evidence and negative evidence had no significant effects on confidence in detection ‘no’ responses (ps > 0.3).

Evidence-weighting

As in Exp. 3, on half of the trials (‘high-evidence’ trials), the hue of both patches was slightly shifted in the direction of the target stimulus (that is, made greener if the target stimulus was greener than the nontarget stimulus, or more orange otherwise). This allowed us to directly measure how sum evidence affects both detection decisions, and detection and discrimination confidence ratings. Overall, we obtained a similar pattern to Exp. 3: on high-evidence trials, participants were more likely to respond ‘yes’ in the detection task (M = 0.17, 95% CI [0.15, 0.19], t(101) = 17.39, p < .001), and became more confident in their discrimination judgments (M = 0.02, 95% CI [0.01, 0.03], t(109) = 3.75, p < .001), more confident in their detection ‘yes’ responses (M = 0.03, 95% CI [0.02, 0.04], t(101) = 4.76, p < .001), and less confident in detection ‘no’ responses (M = − 0.04, 95% CI [−0.05, −0.03], t(101) = − 6.34, p < .001; see Fig. 9B). Our preregistered Bayesian analysis provided strong evidence for the alternative hypothesis that detection confidence is affected by sum evidence (BF10 = 2547.94). One difference in comparison to Exp. 3 was that in Exp. 4 the increase in ‘yes’ response confidence as a function of the hue manipulation was significantly stronger than that observed for discrimination confidence (M = − 0.02, 95% CI [−0.04, −0.01], t(101) = − 2.61, p = .011).

Discussion

In four experiments, we compared the drivers of decisions and confidence ratings in perceptual discrimination and detection, in conditions either matched for difficulty (Exp. 1) or signal strength (Exps. 2–4). In order to measure the contribution of perceptual evidence to confidence in detection and discrimination judgments, we followed Zylberberg et al. (2012) in applying reverse correlation analysis to noisy stimuli in perceptual decision-making tasks. We fully replicated the main results of Zylberberg and colleagues: decisions and confidence were affected by perceptual evidence in the first 300 ms of the trial, peaking at around 200 ms. We also successfully replicated a positive evidence bias (PEB) for discrimination confidence: confidence in the discrimination task was more affected by supporting than by conflicting evidence—a pattern which may be indicative of a detection-like decision rule operating for discrimination confidence. This effect was qualitatively accounted for by two Bayes-rational decision-making models: a firing rate model, in which perceptual noise was stimulus dependent (inspired by Miyoshi & Lau, 2020), and a goal-directed attention model (inspired by Sepulveda et al., 2020).

These same two models also made corresponding predictions for the detection task: When attempting to detect signal presence in either channel, decisions and confidence ratings should positively weigh evidence for both alternatives (e.g., motion energy to the right and to the left). Paradoxically, however, in the detection task subjects adopted a discrimination-like disposition, negatively weighing evidence in the nonsignal channel. In other words, subjects were less likely to say a target was present (in either channel) when the weaker channel had more evidence. In Exps. 1 and 2, this negative weighting of evidence in the nonsignal channel was strong enough to bring the effect of sum evidence on detection confidence down to zero (as the surprising negative effect of nonsignal evidence canceled out the expected positive influence of signal evidence on detection probability). This negative weighting of evidence in the nonsignal channel remained significant in Experiments 3 and 4, although was now somewhat weaker than the positive weighting of the evidence in the signal channel, leading to an overall sum evidence effect on detection probability. Overall, then, subjects incorporated detection-relevant evidence into their confidence in discrimination judgments, and discrimination-relevant evidence into their detection judgments and confidence ratings.

What drives these discrimination-like evidence weighting profiles in detection? In Experiments 3 and 4, one explanation is that our evidence-boost manipulation may have rendered it rational for subjects to focus on the difference in evidence between the two sensory channels. If on a random subset of trials both stimuli are made brighter, focusing on overall brightness is not as informative as focusing on the contrast between the brightness of the two stimuli, which remains unaffected by the evidence-boost manipulation. This account fails to explain, however, the emergence of a negative effect of evidence in the nonsignal channel in Experiments 1 and 2, where the evidence-boost manipulation was not applied and where a rational agent should have positively weighted evidence from both channels.

Alternatively, changes to the global perception of overall stimulus intensity may have an internal source. For example, slow brain oscillations in the alpha band affect both detection criterion and discrimination confidence but have minimal effects on discrimination sensitivity: a nonselective effect on perception which has been attributed to a global change in the baseline firing rate of sensory neurons (Samaha et al., 2020). Similar to our evidence-boost manipulation, an overall increase in baseline firing rate increases sum evidence without affecting relative evidence. If agents do not have metacognitive access to the current excitability of their perceptual system but do know that such global effects exist, focusing on relative evidence in detection may be a rational way of dealing with this ambiguity of baseline excitability. This account fails to explain, however, why subjects in Exps. 2–4 did not use the static background rather than the nontarget stimulus as a reference point, given that it is presumably also susceptible to perceptual influences from global changes in the baseline firing rate of sensory neurons.

Finally, it may be that evidence accumulation in detection and discrimination depends on shared processes and internal representations. Outside of a laboratory setting, detectability and discriminability mostly go hand in hand; the farther away from ‘nothing’ a representation is, the more distinct and differentiated from other representations it becomes. Given these meta-level expectations about the distribution of evidence in the world, the overall availability of evidence may be a valid cue for confidence in discrimination judgments (Maniscalco et al., 2016). Conversely, asymmetries in the availability of evidence for two competing hypotheses may serve as a valid cue for the presence of signal in one of the channels.

If discrimination confidence and detection decisions are drawing on shared evidence weighting mechanisms, one might expect that person-specific tendencies to rely more on one or other evidence channel will be correlated across both tasks. For example, subjects whose discrimination confidence was strongly affected by sum evidence (or equivalently, showed a pronounced positive evidence bias), may also be sensitive to sum evidence in their detection decisions and confidence. Surprisingly, however, we find no evidence for such an effect (see Appendix). Across subjects, the effects of positive, negative, sum and relative evidence on discrimination confidence were not reliably correlated with their corresponding effects on detection decisions, nor with their effects on confidence in signal presence. This null result should be interpreted with caution: our experiments were not powered to identify correlations between participants, with Exp. 1 adopting a small-N, many-trials design, and Experiments 2–4 a high-N, few-trials design, with the attendant limitation of noisy single-subject estimates. Thus while our current results do not directly support a shared-resources account, they are not inconsistent with it.

Conclusion

In four experiments, we replicated previous findings of a “positive evidence bias”: a detection-like evidence weighting in discrimination confidence. This pattern was accounted for by models that posit asymmetries either in the distributions of sensory noise or allocation of attention between target and nontarget channels. However, these same models could not account for a surprising finding of discrimination-like evidence weighting in detection decisions and confidence. We suggest that these seemingly irrational positive and negative evidence biases may reflect, at least in part, shared representational resources being harnessed for detection decisions and discrimination confidence.

Notes

We reused the original MATLAB code that was used for Exp. 1 in Zylberberg et al. (2012), kindly shared by Ariel Zylberberg.

We thank an anonymous reviewer for bringing this issue to our attention.

References

Adelson, E. H., & Bergen, J. R. (1985). Spatiotemporal energy models for the perception of motion. Journal of the Optical Society of America A, 2(2), 284–299. https://doi.org/10.1364/JOSAA.2.000284

De Leeuw, J. R. (2015). jsPsych: A JavaScript library for creating behavioral experiments in a web browser. Behavior Research Methods, 47(1), 1–12.

Fechner, G. T., & Adler, H. E. (1860). Elemente der psychophysik [Elements of psychophysics]. Breitkopf and Ha Rtel.

Kellij, S., Fahrenfort, J., Lau, H., Peters, M. A., & Odegaard, B. (2021). An investigation of how relative precision of target encoding influences metacognitive performance. Attention, Perception, & Psychophysics, 83(1), 512–524.

Koizumi, A., Maniscalco, B., & Lau, H. (2015). Does perceptual confidence facilitate cognitive control? Attention, Perception, & Psychophysics, 77(4), 1295–1306.

Lange, K., Kuhn, S., & Filevich, E. (2015). Just another tool for online studies (JATOS): An easy solution for setup and management of web servers supporting online studies. PLOS ONE, 10(6), e0130834.

Levinson, E., & Sekuler, R. (1975). The independence of channels in human vision selective for direction of movement. The Journal of Physiology, 250(2), 347–366.

Li, Y., Chen, K., & Yan, J. (2018). Tls: Tools of total least squares in error-in-variables models. https://CRAN.R-project.org/package=tls. Accessed 1.3.2023.

Maniscalco, B., & Lau, H. (2014). Signal detection theory analysis of Type 1 and Type 2 data: Meta-d′, response-specific meta-d′ and the unequal variance SDT model. In S. M. Fleming & C. D. Frith (Eds.), The cognitive neuroscience of metacognition (pp. 25–66). Springer.

Maniscalco, B., Peters, M. A., & Lau, H. (2016). Heuristic use of perceptual evidence leads to dissociation between performance and metacognitive sensitivity. Attention, Perception, & Psychophysics, 78(3), 923–937.

Mazor, M., Friston, K. J., & Fleming, S. M. (2020). Distinct neural contributions to metacognition for detecting, but not discriminating visual stimuli. eLife, 9, e53900.

Mazor, M., Mazor, N., & Mukamel, R. (2019). A novel tool for time-locking study plans to results. European Journal of Neuroscience, 49(9), 1149–1156.

Mazor, M., Moran, R., & Fleming, S. (2021). Stage 2 registered report: Metacognitive asymmetries in visual perception. Neuroscience of Consciousness, 2021(1), niab025. https://doi.org/10.1093/nc/niab025

McCarthy, L. (2015). p5.js. https://P5js.org. Accessed 1.3.2023.

Meuwese, J. D., van Loon, A. M., Lamme, V. A., & Fahrenfort, J. J. (2014). The subjective experience of object recognition: Comparing metacognition for object detection and object categorization. Attention, Perception, & Psychophysics, 76(4), 1057–1068.

Miyoshi, K., & Lau, H. (2020). A decision-congruent heuristic gives superior metacognitive sensitivity under realistic variance assumptions. Psychological Review, 127(5), 655.

Peters, M. A., Thesen, T., Ko, Y. D., Maniscalco, B., Carlson, C., Davidson, M., ..., Lau, H. (2017). Perceptual confidence neglects decision-incongruent evidence in the brain. Nature Human Behaviour, 1(7), 1–8.

Rausch, M., Hellmann, S., & Zehetleitner, M. (2018). Confidence in masked orientation judgments is informed by both evidence and visibility. Attention, Perception, & Psychophysics, 80(1), 134–154.

Rollwage, M., Loosen, A., Hauser, T. U., Moran, R., Dolan, R. J., & Fleming, S. M. (2020). Confidence drives a neural confirmation bias. Nature Communications, 11(1), 1–11.

Samaha, J., & Denison, R. (2020). The positive evidence bias in perceptual confidence is not post-decisional. bioRxiv.

Samaha, J., Iemi, L., Haegens, S., & Busch, N. A. (2020). Spontaneous brain oscillations and perceptual decision-making. Trends in Cognitive Sciences, 24(8), 639–653.