Abstract

Listeners’ use of contour information as a basis for memory of rhythmic patterns was explored in two experiments. Both studies employed a short-term memory paradigm in which listeners heard a standard rhythm, followed by a comparison rhythm, and judged whether the comparison was the same as the standard. Comparison rhythms included exact repetitions of the standard, same contour rhythms in which the relative interval durations of successive notes (but not the absolute durations of the notes themselves) were the same as the standard, and different contour rhythms in which the relative duration intervals of successive notes differed from the standard. Experiment 1 employed metric rhythms, whereas Experiment 2 employed ametric rhythms. D-prime analyses revealed that, in both experiments, listeners showed better discrimination for different contour rhythms relative to same contour rhythms. Paralleling classic work on melodic contour, these findings indicate that the concept of contour is both relevant to one’s characterization of the rhythm of musical patterns and influences short-term memory for such patterns.

Over the years, multiple authors have highlighted the fundamental importance of contour in listeners’ musical processing (Dowling, 1978; Schmuckler, 2009, 2016). Evidence for the central role of this component can be seen across a wide swath of literature, including work on the perceptual organization of music (Bregman, 1990; Carlyon, 2004; Krumhansl & Schmuckler, 1986), perceived complexity of music (Eerola et al., 2006; Schmuckler, 1999), perceived similarity for melodies (Prince, 2014; Schmuckler, 2010), and musical memory (Dowling, 1971, 1972; Dowling & Fujitani, 1971; Halpern & Bartlett, 2010). Given the robustness of these findings, contour is clearly a fundamental component of virtually all aspects of musical behavior.

Typically, when contour is considered within a musical context, this concept involves the organization of pitch information. Thus, work on contour has almost exclusively focused on characterizing models of pitch structure (Adams, 1976; Friedmann, 1985; Marvin & Laprade, 1987; Quinn, 1999; Schmuckler, 1999, 2010). Although the concept of contour has been extended to other perceptible dimensions, most notably vision (Koenderink et al., 1997; Loffler, 2008; Taylor et al., 2014), it has only rarely been discussed with reference to other auditory and musical dimensions (but see McDermott et al., 2008, and Schmuckler & Gilden, 1993, for exceptions).

One notable exception to this generalization was provided by Schmuckler and Gilden (1993) in their investigation of perceived fractal contours in auditory sequences. In this work, Schmuckler and Gilden (1993) used random number sequences characterizable by different fractal dimensions (white noise, fractal dimension = 0.0; 1/f or flicker noise, fractal dimension = −1.0; brown noise, fractal dimension = −2.0) to generate auditory sequences in which the pitch changes, loudness changes, or duration changes of sequential tones were employed to encode the fractal structures. Listeners could accurately categorize these sequences on the basis of these underlying fractal dimensions when this structure was mapped into pitch or loudness changes, but not into successive note duration changes. Accordingly, this work suggested that the concept of perceived contour structure, operationalized as detecting higher/greater versus lower/lesser changes in sequential events, is applicable to pitch and loudness dimensions of auditory sequences, but not to the duration dimension of auditory tones. Put more simply, listeners can successfully perceive pitch and loudness contours, but cannot form duration contours of fractal structure.

Whether this result is the final word on the applicability of contour structure to duration remains equivocal. There are multiple reasons why these findings should not be taken as definitive regarding the possibility of contour formation in the auditory time dimension. First, and most fundamentally, Schmuckler and Gilden (1993) were primarily focused on investigating listeners’ percepts of fractal structure, not their ability to form and use contour information in changing note durations. As such, the methodology employed was not particularly optimal for exploring the question of duration contours. Second, and related to the first caveat, the actual changes in note durations used to create contours were not especially well-matched to the duration information that listeners typically encounter in musical contexts in general, or even in the quasimusical sequences used in this study. Specifically, Schmuckler and Gilden (1993) created duration contours by mapping random number sequences into 14 different duration “bins,” with these bins varying between 100 ms and 750 ms in 50 ms increments. Thus, the differences in note durations underlying the contours were linear in nature. Musical note durations, however, do not vary in such a continuous, linear fashion. Instead, musical durations are typically in ratio forms, with notes having 2:1 or 3:1 (or more complex) duration values, which are then used to produce complex musical rhythms. Accordingly, a more appropriate test of whether note durations can form duration contours would be in the context of rhythmic stimuli encompassing more musically realistic duration information.

Given these concerns, what might be a more appropriate context for investigating duration contour formation and use? One seemingly obvious characteristic would be to create rhythmic contours employing tones whose durations are drawn from typical musical events, with successive note durations comprising simple integer ratios (e.g., 1:2, 2:3, 3:4). Methodologically, it would make sense to employ paradigms that have successfully investigated perceived contour in other musical domains; in this case, it is most instructive to look at work investigating melodic contour. Specifically, Dowling and colleagues (Bartlett & Dowling, 1980; Dowling, 1971, 1972, 1978, 1994; Dowling & Bartlett, 1981; Dowling & Fujitani, 1971; Dowling et al., 1995; Halpern & Bartlett, 2010; Halpern et al., 1998) have provided some of the most comprehensive and well-known research on melodic contour. Although a thorough review of this work is beyond the scope of this report, this work has demonstrated that contour information is a central driver for both short- and long-term memory of musical melodies. Based on such a characterization, contour has been taken as a critical feature for remembering melodies (Dowling, 1991; Dowling et al., 1995). Moreover, contour information is especially critical for short-term memory melodic representations, and is enhanced when the melodies adhere to a coherent tonal framework (Dowling et al., 1995).

In Dowling’s work, melodic contour is coded as a simple series of +s and −s, representing ascending and descending (respectively) pitch differences between successive notes; this form of contour coding (or its equivalent code of 1s and −1s) has been employed by multiple authors (Friedmann, 1985; Marvin & Laprade, 1987; Quinn, 1999). In contrast, very little work exists on how to characterize duration or rhythmic contours. Most work analyzing rhythm has focused on patterns of stress and intonation rather than on durations, likely due to the emphasis on rhythm and prosody in speech and language (Aiello, 1994; Cooper & Meyer, 1960; Lerdahl & Jackendoff, 1983; Patel et al., 2006; Thaut, 2008). As an example, Cooper and Meyer’s (1960) classic text on the rhythmic structure of music explicitly related musical rhythmic structure to accented and unaccented groupings, using terminology drawn from work in prosody.

One of the few studies on this topic was provided by Marvin (1991), who proposed an alternative characterization of rhythmic contour that is more consistent with the framework employed in Dowling’s research. Specifically, Marvin suggested encoding rhythm contours “as analogous to melodic contours: they represent relative durations in much the same way that melodic contours represent relative pitch height, without a precise calibration of the intervals spanned” (Marvin, 1991, p. 64). Accordingly, in this scheme, rhythm contours would be similarly coded as a series of 1s and −1s representing increases and decreases in sequential note durations.
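As an illustration of this coding scheme, the following minimal Python sketch converts a list of note durations into a series of 1s and −1s. The function name `contour`, the example durations (in sixteenth-note units), and the use of 0 for repeated durations are assumptions made for illustration; they are not taken from Marvin (1991) or from the stimuli of the present study.

```python
def contour(durations):
    """Code each successive pair of durations as 1 (longer) or -1 (shorter)."""
    signs = []
    for previous, current in zip(durations, durations[1:]):
        if current > previous:
            signs.append(1)
        elif current < previous:
            signs.append(-1)
        else:
            signs.append(0)  # assumption: equal successive durations coded as 0
    return signs


# Hypothetical six-note rhythms, durations in sixteenth-note units.
standard = [4, 6, 2, 4, 2, 6]
same_contour = [2, 6, 4, 6, 2, 4]       # different durations, same ups and downs
different_contour = [6, 4, 2, 4, 6, 2]  # different pattern of ups and downs

print(contour(standard))           # [1, -1, 1, -1, 1]
print(contour(same_contour))       # [1, -1, 1, -1, 1]
print(contour(different_contour))  # [-1, -1, 1, 1, -1]
```

Because the code depends only on whether each duration is longer or shorter than its predecessor, scaling every duration by a constant (e.g., a tempo change) leaves the contour unchanged.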

With this characterization, it becomes possible to define a framework for determining whether contour coding is an appropriate characterization of rhythmic contour, and whether contour plays as significant a role in the processing of rhythmic information as it does with pitch information. Methodologically, the most straightforward approach involves building from the classic melodic contour memory work pioneered by Dowling and colleagues (e.g., Dowling, 1984; Dowling et al., 2008). Although Dowling’s work has employed multiple variations in its explorations, the most basic paradigm involved presenting an initial standard melody, followed by a subsequent comparison (test) melody, and asking participants if these two melodies were the same or different (e.g., Dowling et al., 2008).

The power of this melodic contour paradigm lies in manipulations of the relation between the standard and comparison melodies. As shown in Fig. 1, this relation can take multiple forms. Most simply, the comparison could be an exact repetition of the standard melody (see Fig. 1A), varying only in terms of its transposition to a different pitch level (see Fig. 1B). A second type of comparison could contain the same pattern of relative pitch differences, but different specific pitch intervals (see Fig. 1C); such a melody would be a same contour comparison. Finally, a third comparison could contain a different pattern of both relative pitch differences and specific pitch intervals (see Fig. 1D); this melody would be a different contour comparison. The goal of the current study was to adapt this general framework to investigate the perception of rhythmic contours.

Fig. 1. Example melodic contours, adapted from Dowling (1994). Panel A shows a Standard Contour Melody, Panel B shows an Exact Repetition Melody comparison contour, Panel C shows a Same Contour Melody comparison contour with different pitch intervals, and Panel D shows a Different Contour Melody comparison with different pitch intervals and direction. For all melodies, “pitch intervals” refers to the number of semitones between notes, and “direction” indicates whether successive tones are higher (+) versus lower (−) in pitch.

Experiment 1: Metric rhythms

Experiment 1 provided an initial test of coding for rhythms, and whether rhythm contour is indeed a factor in musical processing. This study adapted the basic short-term memory paradigm employed by Dowling and colleagues (Bartlett & Dowling, 1980; Dowling, 1978, 1991, 1994; Dowling et al., 2008; Dowling et al., 1995), with listeners hearing pairs of short standard-comparison rhythms, and then deciding whether the comparison rhythm was the same as the previously presented standard rhythm. Earlier work by Dowling manipulated multiple factors within this paradigm, including the pitch contour relation between standard and comparison melodies described earlier (exact transposition, same contour lures, different contour lures; see Fig. 1), the time delay between standard and comparison (e.g., Dowling, 1991; Dowling et al., 1995), the tempo of melodies (e.g., Dowling et al., 2008), whether melodies adhered to a tonal structure or were atonal (e.g., Dowling, 1978; Schulze et al., 2012), and the tonal relations between standard and comparison (e.g., Bartlett & Dowling, 1980). Generalizing from this substantial body of work, Dowling and colleagues observed that contour similarity was a critically important factor in memory for melodies, particularly at shorter time delays between the standard and comparison melodies. Dowling (1991), for instance, found that listeners were less likely to mistakenly respond “same” to a different melody with the same contour at long delays (39 s), particularly when the melodies were tonal. According to Dowling, these findings suggest that contour is particularly relevant for short-term musical memory, with tonality increasing in influence at longer delays.

Methods

Participants

Twenty undergraduate participants (13 females, mean age = 19.5 yrs, SD = 1.4 yrs, range: 18.6–23.7 yrs), drawn from the Introductory Psychology subject pool at the University of Toronto Scarborough, took part in this experiment. One additional participant completed this study, but their data were not used due to a failure to appropriately employ the rating scale. Listeners received credit in their Introductory Psychology course for participating. Participants were not selected based on any prior musical training. As such, participants evinced a range of musical backgrounds, including an average of 5.0 years playing an instrument or singing (SD = 4.7 yrs, range: 0.0–16.0 yrs), an average of 1.0 years of formal lessons (SD = 2.8 yrs, range: 0.0–12.0 yrs), an average of 1.1 hrs/wk involved in music-making activities (SD = 3.8 hrs/wk, range: 0.0–17.0 hrs/wk), and an average of 20.9 hrs/wk engaged in music listening (SD = 21.5 hrs/wk, range: 2.0–85.0 hrs/wk).

Apparatus

All experimental sessions were run on a PC-compatible computer (Windows 10), using custom-written software in MATLAB for stimulus presentation and response gathering. Participants viewed instructions and the experimental control procedure on a Dell 24-in. monitor (P2419H), heard auditory stimuli through over-ear headphones (Sennheiser HD280 Pro), and responded using the computer keyboard.

Stimuli, experimental conditions, and experimental design

Stimuli for this study consisted of six or eight note rhythms, played on a single pitch (F4, 349.23 Hz) using a piano sound. All stimuli were composed to be metric, adhering to the common Western meters of 3/4 (six notes) or 4/4 time (eight notes); two different time signatures were used to create metric variation in the stimuli, and to discourage participants from attempting to memorize the beginning or ending notes. Stimuli were initially created in Finale and were exported as .wav files. To instantiate a meter for these rhythms, six equiloudness beats of a hand clap sound were played prior to the start of the 3/4 rhythm, and eight beats were played prior to the start of the 4/4 rhythm, for both standard and comparison rhythms. The rhythm itself thus occurred over the two bars.

The rhythms employed consisted of a set of standard and comparison rhythm contours. Samples of these stimuli, in the 4/4 meter, appear in Fig. 2. The first rhythm (see Fig. 2A) represents both the standard rhythm and the exact repetition comparison rhythm. The only difference between the standard and the exact repetition comparison was that the standard was played at 120 beats per minute (bpm), whereas the exact repetition comparison was played at 150 bpm, slightly faster than the standard. The tempo difference between the standard and comparison rhythms was introduced to ensure that participants could not simply use absolute note durations (particularly for initial or final tones) as a cue for determining whether the rhythm contours were the same or different. Figure 2B presents a second comparison rhythm and represents a same contour comparison variant of the standard. This variant was also played at 150 bpm, and contained the same pattern of relative durations as seen in the standard rhythm. Figure 2C presents a third comparison rhythm and portrays a different contour comparison variant of the standard, again played at 150 bpm. In this rhythm the relative durations displayed a markedly different pattern than the standard. Twenty different sets of standard and comparison rhythm contours were created for this experiment.

Durations for the notes within each rhythm ranged from thirty-second notes to half notes in sixteenth note increments. Thus, for the standard stimuli at a tempo of 120 bpm the shortest duration tone (a thirty-second note) was 62.5 ms and the longest duration tone (a half note) was 1,000 ms. For the comparison stimuli at a tempo of 150 bpm, the shortest duration tone was 50 ms, and the longest duration tone was 800 ms. All rhythms lasted between 3 and 4 s, varying depending upon the specific note duration content of each rhythm.
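The relation between tempo and absolute note duration can be sketched as follows. This is a minimal illustration that assumes the quarter note carries the beat (as in 3/4 and 4/4 time); the function name `note_duration_ms` and the dictionary of note values are hypothetical and are not part of the original stimulus software.

```python
def note_duration_ms(beats, bpm):
    """Duration in ms of a note lasting `beats` quarter-note beats at tempo `bpm`."""
    return beats * 60_000 / bpm


# Note values expressed in quarter-note beats (assumption: quarter note = 1 beat).
NOTE_VALUES = {
    "thirty-second": 0.125,
    "sixteenth": 0.25,
    "eighth": 0.5,
    "quarter": 1.0,
    "half": 2.0,
}

for name, beats in NOTE_VALUES.items():
    standard_ms = note_duration_ms(beats, 120)    # standard tempo
    comparison_ms = note_duration_ms(beats, 150)  # comparison tempo
    print(f"{name:>13}: {standard_ms:7.1f} ms at 120 bpm, {comparison_ms:6.1f} ms at 150 bpm")

print(note_duration_ms(2.0, 120))    # 1000.0 (half note, standard)
print(note_duration_ms(2.0, 150))    # 800.0  (half note, comparison)
print(note_duration_ms(0.125, 150))  # 50.0   (thirty-second note, comparison)
```

Every duration at 150 bpm is 0.8 times its value at 120 bpm, so duration ratios between successive notes are preserved while absolute durations change.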

All listeners heard 80 trials of randomly ordered standard-comparison rhythm contour pairs. For each of the 20 stimulus sets, these pairs consisted of two repetitions of the standard rhythm paired with its exact repetition comparison, one repetition of the standard rhythm paired with its same contour comparison, and one repetition of the standard rhythm paired with its different contour comparison. The two repetitions of the exact repetition rhythm comparison were included to balance the number of objective “same” and “different” responses.
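The trial structure just described can be sketched in a few lines. The following Python fragment is an illustrative reconstruction only: the original experiment used custom MATLAB software, and the function name `build_trials`, the condition labels, and the seeding scheme are assumptions.

```python
import random


def build_trials(n_sets=20, seed=None):
    """Return a shuffled list of (stimulus_set, comparison_type) trials."""
    rng = random.Random(seed)
    trials = []
    for stimulus_set in range(n_sets):
        # Two exact repetitions balance the two objectively "different" trials.
        trials.append((stimulus_set, "exact_repetition"))
        trials.append((stimulus_set, "exact_repetition"))
        trials.append((stimulus_set, "same_contour"))
        trials.append((stimulus_set, "different_contour"))
    rng.shuffle(trials)
    return trials


trials = build_trials(seed=1)
print(len(trials))  # 80
```

With 20 stimulus sets, this yields 40 objectively "same" trials and 40 objectively "different" trials.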

Procedure

The experimental procedure and purpose of this study were explained to participants, after which they provided informed consent. Participants were told that this experiment was examining their memory for musical rhythms. They were told that on each trial they would hear pairs of rhythms, and that their task was to determine whether the standard and comparison rhythms were exactly the same, ignoring the overall tempo of the rhythms. Participants were asked to make these judgments using a 1 to 6 scale, with responses of 1 to 3 indicating the rhythms were different at varying levels of confidence (1 = very confident to 3 = mildly confident), and responses 4 to 6 indicating the rhythms were the same, again at varying levels of confidence (4 = mildly confident to 6 = very confident).

Listeners then began a practice block of trials in which 12 randomly chosen standard–comparison pairs (drawn from the complete set of 80 standard–comparisons) were presented. Listeners received feedback as to the correctness of their response during this block. After completing these practice trials, listeners received an experimental block of trials, with the 80 standard–comparison trials presented in different randomized orders for participants.

Within each trial, listeners initially heard the standard (first) rhythm, followed by a 400-ms pause, and then the comparison (second) rhythm. Participants typed in their response using the computer keyboard, after which there was a 500-ms pause, and then the next trial began. The experiment itself lasted approximately 20 minutes. After completing the final experimental trial, the hypotheses and purpose of the study were explained to participants. Participants then completed a music background questionnaire. The entire visit to the lab lasted about 35–40 minutes.

Results and discussion

For each listener, the 6-point rating scale was used to calculate percentage correct values for exact repetition rhythm comparisons (responses of 4 through 6), for same contour rhythm comparisons (responses of 1 through 3) and for different contour rhythm comparisons (responses of 1 through 3). These percentages were then used to calculate d-prime values for both the same contour rhythm comparisons and the different contour rhythm comparisons. In this analysis, the percentage correct for the same contour rhythm comparisons and different contour rhythm comparisons were each used as separate hit rate values, with 1 – percentage correct for the exact repetition rhythm comparisons used as the false-alarm rate for both the same contour rhythm comparisons and different contour rhythm comparisons (see Footnote 1).
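The scoring procedure described above can be sketched as follows, using the standard definition d′ = z(hit rate) − z(false-alarm rate). This is a minimal sketch rather than the authors’ analysis code: the function names and the example ratings are hypothetical, and the `clamp` step (which keeps rates away from 0 and 1 so that the z-transform is defined) is an assumption, since the correction actually used is not described in this section.

```python
from statistics import NormalDist


def proportion(ratings, predicate):
    """Proportion of ratings satisfying `predicate`."""
    return sum(1 for r in ratings if predicate(r)) / len(ratings)


def clamp(rate, n_trials):
    """Assumed correction: keep rates within [1/(2N), 1 - 1/(2N)]."""
    half_trial = 1 / (2 * n_trials)
    return min(max(rate, half_trial), 1 - half_trial)


def d_prime(hit_rate, false_alarm_rate):
    """d' = z(hit rate) - z(false-alarm rate)."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


def score_listener(exact, same, different):
    """Return (d' same contour, d' different contour) from 1-6 ratings."""
    # Responding "different" (1-3) to an exact repetition is a false alarm.
    fa = clamp(1 - proportion(exact, lambda r: r >= 4), len(exact))
    hit_same = clamp(proportion(same, lambda r: r <= 3), len(same))
    hit_diff = clamp(proportion(different, lambda r: r <= 3), len(different))
    return d_prime(hit_same, fa), d_prime(hit_diff, fa)


# Hypothetical ratings for one listener (40 exact, 20 same, 20 different trials).
exact = [5] * 32 + [2] * 8       # 80% correct "same" responses
same = [2] * 12 + [5] * 8        # 60% correct "different" responses
different = [1] * 16 + [4] * 4   # 80% correct "different" responses
d_same, d_diff = score_listener(exact, same, different)
print(round(d_same, 2), round(d_diff, 2))
```

Note that both d′ values share a single false-alarm rate, so any difference between them reflects only the difference in hit rates for the two comparison types.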

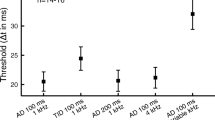

D-prime scores for the same contour rhythm and different contour rhythm comparisons were then compared in a paired-samples t test. This test revealed a significant difference in discrimination between the two comparison types, t(19) = 2.40, p < .05, with different contour rhythm comparisons (M d-prime = 1.40, SE = 0.20) better discriminated than same contour rhythm comparisons (M d-prime = 1.01, SE = 0.13). These means appear in Fig. 3A.
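For readers unfamiliar with the test, a paired-samples t statistic is the mean of the within-listener differences divided by the standard error of those differences, with n − 1 degrees of freedom. The sketch below uses only the Python standard library; the function name `paired_t` and the d-prime values are hypothetical and do not reproduce the data reported here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Return (t, df) for a paired-samples t test on equal-length lists."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # stdev uses the n - 1 denominator
    return mean(diffs) / standard_error, n - 1


# Hypothetical d-primes for four listeners (different vs. same contour).
different_contour = [1.8, 1.2, 2.1, 0.9]
same_contour = [1.1, 1.0, 1.5, 0.8]
t, df = paired_t(different_contour, same_contour)
print(f"t({df}) = {t:.2f}")
```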

These findings are straightforward in their implications. Convergent with research exploring the importance of pitch contour, the observed difference in discrimination indicates that the relative pattern of note durations can drive listeners’ short-term memory for rhythms, with comparable relative duration patterns (i.e., same contour rhythms) leading to more memory confusions than divergent relative duration patterns (i.e., different contour rhythms). Thus, in contrast with previous work (i.e., Schmuckler & Gilden, 1993), contour is a relevant characteristic for such rhythmic patterns, at least with the short six- and eight-note metric rhythms employed here.

Given these initial results, it is of interest to both replicate and expand these findings. One interesting extension would be to determine whether rhythm contour drives memory for rhythms that do not adhere to a metric framework. Put more simply, does rhythm contour influence short-term memory for ametric rhythms? Conceptually, exploring the impact of metric versus ametric rhythms is comparable to the work in pitch contour that has compared the impact of contour for tonal versus atonal melodies (Dowling, 1978, 1991; Dowling et al., 1995; Mikumo, 1992). Intriguingly, this research has observed that pitch contour is equally operative for both tonal and atonal melodies in musical memory. Accordingly, if contour plays a comparable role in rhythm processing, then contour should also be a factor in short-term memory for ametric stimuli, leading to poorer discrimination for ametric, same contour rhythms compared with ametric, different contour rhythms. Experiment 2 tests this prediction.

Experiment 2: Ametric rhythms

The goal of Experiment 2 was to replicate and extend the findings from Experiment 1, looking at the impact of duration contour information for ametric rhythms. If contour is an important characterizing component of rhythms, then the impact of this factor should still be evident with stimuli that do not adhere to typical, Western metrical structures.

Methods

Participants

Twenty undergraduate students (10 males, M = 20.7 yrs, SD = 1.6 yrs, range: 17.9–23.7 yrs) from the Introductory Psychology course at the University of Toronto Scarborough took part in this experiment; none of these participants took part in Experiment 1. Because these participants were not recruited on the basis of prior music training or experience, they exhibited a wide range of musical backgrounds. As a group, participants had an average of 6.5 years of playing an instrument or singing (SD = 5.2 yrs, range: 0.0–15.0 yrs), an average of 2.5 yrs of formal instruction on an instrument or voice (SD = 3.3 yrs, range: 0.0–10.0 yrs), an average of 4.8 hrs/wk involved in music-making activities (SD = 9.8 hrs/wk, range: 0.0–30.0 hrs/wk), and an average of 24.2 hrs/wk engaged in music listening (SD = 17.1 hrs/wk, range: 4.5–60 hrs/wk).

Apparatus, stimuli, experimental conditions, experimental design, and procedure

Most experimental parameters for the current study were comparable to the previous experiment. The principal difference in this study involved the stimuli, with these rhythms consisting of seven notes, as opposed to six or eight notes in the previous study, and most critically not occurring in any standard metric framework. Seven-note rhythms were employed (1) to highlight the ametric nature of these stimuli, given that two measures in a standard time signature have an even number of beats, and (2) to bring these rhythm contours into more direct alignment with the melodic contours of Dowling and colleagues, who typically employed seven-note melodies (see Dowling, 1994). Figure 4 shows a sample set of standard and comparison rhythms from this experiment. Given that there was no intended metric framework for these rhythms, there was no hand-clap lead-in to these rhythms. As in the previous study, all stimuli consisted of standard-comparison pairs of rhythms, with the exact repetition rhythm comparison (Fig. 4A), same contour rhythm comparison (Fig. 4B), and different contour rhythm comparison (Fig. 4C) played at a faster tempo (150 bpm) than the standard rhythm (120 bpm).

The procedure for this study was the same as in the previous experiment. Participants were told that they would hear pairs of rhythms and should indicate whether the second rhythm was exactly the same as the first, ignoring the difference in tempo. The structure of the study, including the 12 practice trials and the 80 experimental trials, was the same as in the previous study. Again, the experimental session lasted about 20 minutes.

Results and discussion

As in the previous study, the 6-point rating scale was scored dichotomously, calculating percentage correct values for the exact repetition rhythm trials (responses of 4 to 6) and the same contour rhythm and different contour rhythm trials (responses of 1 to 3), with separate scores for same and different contours. These percentages were then converted to d-primes, with the percentage correct for same contour rhythm and different contour rhythms used as separate hit rates, and 1 − percentage correct for exact repetition rhythms as the false-alarm rate. The d-primes for the same contour rhythm comparisons and different contour rhythm comparisons appear in Fig. 3B and were compared in a paired-samples t test. This analysis revealed a significant difference between the two types of contours, t(19) = 4.25, p < .001, with better discrimination for different contour rhythms (M = 2.03, SE = 0.16) relative to same contour rhythms (M = 1.67, SE = 0.13).

In a final analysis, the d-primes for Experiments 1 and 2 were compared in a two-way analysis of variance (ANOVA), with the within-subjects factor of Contour Type (same contour rhythms, different contour rhythms) and the between-subjects factor of Metric Framework (metric stimuli [Experiment 1], ametric stimuli [Experiment 2]). This analysis produced a main effect of Contour Type, F(1, 38) = 16.70, MSE = 0.17, p < .001, ηp² = 0.30, a main effect of Metric Framework, F(1, 38) = 9.85, MSE = 0.85, p = .003, ηp² = 0.21, and no interaction between the two factors, F(1, 38) = 0.03, ns. The main effect of Contour Type repeated the already observed difference between same contour rhythms and different contour rhythms (Ms = 1.34 and 1.72, SEs = 0.10 and 0.13, respectively). The main effect of Metric Framework demonstrated that listeners performed better with ametric rhythms than with metric rhythms (Ms = 1.85 and 1.21, SEs = 0.14 and 0.14, respectively).
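As a consistency check on effect sizes of this kind, partial eta squared can be recovered from an F ratio and its degrees of freedom, because SS_effect / (SS_effect + SS_error) = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies this identity to the Contour Type effect; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Contour Type main effect, F(1, 38) = 16.70
print(round(partial_eta_squared(16.70, 1, 38), 3))  # 0.305
```

The result of approximately 0.305 is in line with the value of 0.30 reported for Contour Type.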

Overall, this study produced comparable findings to those observed in the previous experiment. Rhythms employing the same relative pattern of short and long durations were harder to discriminate than rhythms containing different relative patterns of duration. Thus, fundamentally, these findings converge with comparable investigations of the role of pitch contour in melodic processing (Dowling, 1978, 1991; Dowling et al., 1995; Mikumo, 1992).

General discussion

Two experiments explored listeners’ use of contour information in short-term memory for rhythmic patterns. These studies found that the similarity of rhythm contour information led to memory confusions, such that listeners evinced more difficulty in discriminating pairs of rhythms in which the relative patterns of durations were comparable, relative to rhythms in which the relative patterns differed. Accordingly, these studies demonstrated that listeners can (1) form duration contours, thus encoding patterns of short and long durations, and (2) use these structures in basic cognitive tasks such as short-term memory comparisons. Thus, the concept of rhythm contour does play a role in listeners’ apprehension of musical information. As already highlighted, this process appears highly comparable to that observed in the processing of melodic patterns, in which listeners encode relative patterns of pitch changes, and use this information in their online processing of melodies (Eerola et al., 2006; Eerola et al., 2001; Schmuckler, 1999, 2010) as well as their memory for melodies (Croonen, 1994, 1995; Croonen & Kop, 1989; Dowling, 1971, 1972; Dowling & Fujitani, 1971; Halpern & Bartlett, 2010).

Before considering the implications of these findings in more depth, it is important to address one potential methodological issue with this study. Specifically, one concern is the possibility that the different contour comparison rhythms were simply more unusual or distinctive than the same contour comparison rhythms, relative to the standard rhythms, in some dimension other than the actual rhythm contour itself. In this regard, it is worth noting that the tempo difference between all of the comparison rhythms (exact repetition, same contour, and different contour; 150 bpm) and the standard rhythms (120 bpm) was employed specifically to reduce the possibility of using absolute note durations as a cue for performing this task. Nevertheless, it is worth checking whether there is some obvious cue that systematically distinguishes the different contour comparison rhythms from the same contour comparison rhythms, relative to the standard rhythms.

Candidate possibilities in this regard might involve the relative lengths of the initial tone in each rhythm, or the final tone; these possibilities arise out of standard primacy and recency factors well-known in memory research. Another possibility might lie in the overall length of the contours themselves, although such a factor is only applicable to the ametric stimuli of Experiment 2, given that the metric framework of Experiment 1 ensured that all comparison contours were of the same overall duration.

To examine this possibility, difference scores were created by subtracting from the standard rhythm the durations (coded in sixteenth note beats) for the same and different comparison rhythm contours for the first note and last note (Experiments 1 and 2), and for the total length of the rhythms (Experiment 2 only). These difference scores were then compared in a series of t tests, examining whether some factor systematically distinguished the different contour stimuli, relative to the same contour stimuli. Of these five comparisons (metric rhythms: first note, last note; ametric rhythms: first note, last note, total rhythm length), the only noteworthy result was a marginal effect for the first note difference scores for the ametric rhythms, t(19) = 1.99, p = .061, with a slightly larger difference for the different contour rhythms (M = −0.45, SE = 0.46) relative to the same contour rhythms (M = 0.10, SE = 0.49). For comparison, there was no effect for the first note durations for the metric rhythms, t(19) = −0.34, ns. Given that this was the only observed difference, as well as its marginal significance and its lack of consistency across experiments, it seems highly unlikely that this difference represents a systematic cue for reliably distinguishing standard and different rhythm contours, relative to standard and same rhythm contours. Accordingly, we believe the best explanation for the observed discrimination differences between same and different rhythm contours lies in the contour relations themselves, and not in any extraneous stimulus property.

Turning to a discussion of these findings, one interesting point of note is that the evidence for the formation and use of duration contours contrasts with the findings of Schmuckler and Gilden (1993). As described previously, in this earlier work these authors failed to find that listeners could accurately categorize duration contours based on fractal information. Thus, this earlier work suggested significant limitations in listeners’ abilities to form and use duration contours. In contrast, the current findings do indicate that listeners can use duration information to form and use contours.

As mentioned previously, these divergent results can be reconciled by considering several notable differences between the experimental context of Schmuckler and Gilden (1993) and that of the current project. For instance, Schmuckler and Gilden used durations that changed linearly across sequential tones, with tones varying between 100 ms and 750 ms in 50-ms steps. In contrast, the current study employed duration sequences more representative of naturalistic musical contexts, with sequential tones generally embodying small ratio values. As an example, in the standard contour for the metric stimuli shown in Fig. 2, based on a sixteenth note subdivision, the first two tones have durations in a 4:6 (2:3) ratio, the second and third tones have durations in a 6:2 (3:1) ratio, and the third and fourth tones have durations in a 2:4 (1:2) ratio. In fact, the unusual linear structure of the duration sequences was raised by Schmuckler and Gilden as potentially underlying listeners’ inability to successfully categorize duration contours in that project.
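
The ratio arithmetic in this example can be made concrete with a short Python sketch. The first four durations (4, 6, 2, 4 sixteenth-note beats) are taken from the ratios quoted above; the function names, the +1/−1/0 coding of contour, and the two comparison rhythms are assumptions introduced purely for illustration and are not the study's stimuli.

```python
from fractions import Fraction


def successive_ratios(durations):
    """Reduced duration ratio of each tone to the tone that follows it."""
    return [Fraction(a, b) for a, b in zip(durations, durations[1:])]


def contour(durations):
    """Code each successive change as +1 (longer), -1 (shorter), or 0 (equal)."""
    return [(b > a) - (b < a) for a, b in zip(durations, durations[1:])]


# First four tones of the metric standard, as implied by the ratios in the text.
standard = [4, 6, 2, 4]

# Hypothetical comparisons: same longer/shorter pattern vs. a different one.
same_contour = [2, 8, 4, 6]
different_contour = [4, 2, 6, 4]

print([str(r) for r in successive_ratios(standard)])
print(contour(standard))
print(contour(same_contour) == contour(standard))
print(contour(different_contour) == contour(standard))
```

In this coding, a same contour comparison changes the absolute durations while preserving the sequence of longer/shorter relations, whereas a different contour comparison alters at least one of those relations.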

Another factor of potential importance involves the overall length of the sequences. Specifically, Schmuckler and Gilden (1993) employed sequences that were 100 notes long, whereas the current project used sequences of six, seven, and eight notes. Somewhat obviously, the processing demands of 100-note sequences differ significantly from those of six- to eight-note sequences. Two points are noteworthy in this regard. First, it is possible to form and process contour information in extended sequences, such as those employed by Schmuckler and Gilden. Indeed, in this previous work, listeners were accurate in categorizing 100-note sequences when these passages varied in pitch and loudness. Accordingly, the absolute length of a sequence does not, in and of itself, determine listeners’ abilities to employ contour information.

Second, it is also important to note that very little work has examined the formation and use of contour information as a function of sequence length (but see Edworthy, 1985; Schulze et al., 2012, for exceptions). If one looks at the literature investigating melodic contour (e.g., Barnes-Burroughs et al., 2006; Dowling, 1991; Dowling & Fujitani, 1971; Dowling et al., 1995; Lee et al., 2011; Lu et al., 2017; Quinn, 1999), by and large the melodies employed as stimuli are short, ranging from 5 tones up to 8 tones. Work on interleaved melodies (Andrews & Dowling, 1991; Bey & McAdams, 2002, 2003; Dowling, 1973; Dowling et al., 1987) provides something of an exception to this trend by using more extended sequences. However, the sequences employed in this work are frequently highly overlearned melodies containing significant melodic repetition (i.e., simple nursery rhymes), thereby modifying our understanding of contour influences. One significant exception involves the contour investigations of Schmuckler (Prince, Schmuckler, et al., 2009a; Schmuckler, 1999, 2010), who employed 12-note melodies in initial work (Schmuckler, 1999) and longer melodies in subsequent work (Prince, Schmuckler, et al., 2009a; Schmuckler, 2010). As such, it is truly unknown what impact sequence length has on the process of contour formation. In fact, Schmuckler (2004, 2009, 2010, 2016) has explicitly suggested that the processing of contour might be fundamentally different as a function of sequence length. Along these lines, specific interval content, such as that described by Quinn and others (Friedmann, 1985, 1987; Marvin & Laprade, 1987; Quinn, 1999), is of principal use when listening to short melodies, in which such information can be easily retained. In contrast, more global characteristics, such as those captured in time series measures, and other features (e.g., number of contour reversals) could drive processing for longer melodies, in which it is more difficult to retain explicit pitch relations.

One of the more surprising results of the current project was the lack of an effect of metric structure on listeners’ abilities to employ contour information in their memory judgments. This result is intriguing given that one might expect that a metric structure would enhance rhythm processing. For example, multiple authors have argued that metrical structure guides attention in time, which then facilitates rhythmic processing (Fitzroy & Sanders, 2020; Jones, 2019; Jones & Boltz, 1989; Large & Jones, 1999; Nobre & van Ede, 2018). Along these lines, metric regularity allows for the temporal predictability of events, with such predictability enabling listeners to selectively attend to certain time points, thereby maximizing the apprehension of critical information. More broadly, meter is often characterized as the overarching temporal framework that allows listeners to perceive individual rhythms in the first place (London, 2002; Vuust & Witek, 2014). As such, it would be reasonable for meter to influence the apprehension of rhythm, with a predictable metric framework leading to increased accessibility of rhythmic contour information.

Given this context, the fact that the presence (versus absence) of a metric framework did not facilitate memory for rhythm contours, and actually produced somewhat poorer memory performance, is both surprising and intriguing. One possible explanation for this lack of a facilitatory effect for meter is that this framework might be functioning much like a tonal framework does for the apprehension and use of melodic contour. Work by Dowling and colleagues (Bartlett & Dowling, 1980; Dowling, 1978, 1991, 1994; Dowling & Fujitani, 1971; Dowling et al., 1995) has generally found that melodic contour is a critical factor in memory regardless of the degree of tonal structure in melodies. Dowling (1991), for instance, observed that, at short time delays, different contour lures were better detected than same contour lures regardless of whether the melodies were strongly tonal, weakly tonal, or atonal.

Despite these results, there is also abundant evidence that tonality does influence memory for musical information. Generally, strongly tonal melodies were better remembered than weakly tonal and atonal melodies. Even more centrally, at long delays, contour appeared not to be a factor in memory for strongly tonal melodies, although it continued to influence memory for weakly tonal and atonal melodies. As an aside, this finding highlights another result in work on melodic contour, which is that such information is most critical after short delays of a few seconds between standard and comparison, relative to long delays of 30 to 40 seconds (DeWitt & Crowder, 1986; Dowling, 1994; Dowling & Bartlett, 1981). It would be interesting to see if such a finding similarly held for rhythm contours. Regardless, the fact that contour tends to function irrespective of whether or not it is embedded within an overarching hierarchical framework (either tonal or metric) appears to be a very general characteristic underlying the use and importance of contour information.

This comparison between the use of contour in pitch and in rhythm leads naturally to questions about co-occurring pitch and rhythm contours. Indeed, one critically important limitation of this work is that the sequences employed contained only duration changes, without any changing pitch information. Such contours are hardly representative of realistic Western musical contours, in which pitch and temporal information change simultaneously, and thus simultaneously give rise to contour information. As such, a natural extension of this work would involve investigating listeners’ use of contour information when sequences vary simultaneously in their pitch and rhythmic content.

Such work is of interest for multiple reasons. First, previous work on melodic perception has demonstrated that both pitch and rhythm information play roles in the perception of melodic contour complexity and similarity (Eerola & Bregman, 2007; Eerola et al., 2006; Eerola et al., 2001; Prince, 2014; Schmuckler, 2010). Thus, a fuller understanding of the psychological processes underlying memory for music requires investigating the relative importance and use of contour information in pitch and time.

Second, and arising out of this first rationale, exploration of the role of pitch and rhythm contours in musical processing provides an additional window into a perennially thorny issue in music processing—namely, whether pitch and rhythm information are processed independently (Makris & Mullet, 2003; Monahan & Carterette, 1985; Palmer & Krumhansl, 1987a, 1987b; Pitt & Monahan, 1987; Prince, Schmuckler, et al., 2009b; Prince, Thompson, et al., 2009) or interactively (Abe & Okada, 2004; Boltz, 1989a, 1989b, 1989c, 1991, 1992, 1993; Crowder & Neath, 1995; Jones et al., 1982; Kidd et al., 1984; Monahan et al., 1987). By now, the answer to this question appears to be a solid “it depends,” with the factors underlying what it depends on varying as a function of stimulus materials, experimental task, and even the method by which the data were analyzed (Prince, 2011, 2014; Prince, Thompson, et al., 2009). A related issue, and one that has seemed somewhat more tractable, is whether there is a more general tendency to emphasize one or the other dimension in music listening, with some data suggesting that at least for Western music, pitch information seems somewhat primary over temporal information (Prince, 2011, 2014; Prince & Pfordresher, 2012; Prince, Schmuckler, et al., 2009b; Prince, Thompson, et al., 2009). If true, this might predict that listeners more naturally let pitch contour drive musical processing, relative to rhythm contour, with pitch contour influencing rhythm judgments, but not vice versa. Thus, investigating the simultaneous processing of pitch and rhythm contours in melodies provides a novel window into this longstanding question.

Finally, investigation of simultaneous pitch and rhythm contours provides a means for investigating the question of redundancy gains in musical processing, a topic on which there exist only a handful of studies (Acker & Pastore, 1996; Schmuckler & Gilden, 1993; Schröter et al., 2007; Tekman, 2002; Tierney et al., 2008). Classic work on dimensional redundancy (Garner, 1970, 1974, 1976; Garner & Felfoldy, 1970; Pomerantz & Garner, 1973) finds that when multiple dimensions within a stimulus are congruent or correlated in some fashion, observers typically experience facilitated processing of that stimulus, compared with a stimulus containing the relevant information in only a single dimension (Mordkoff & Miller, 1993; Shepherdson & Miller, 2014, 2016). For instance, Acker and Pastore (1996) had participants perform same/different judgments of the pitch of individual components of a major triad (i.e., either the major third or perfect fifth of the chord), when both of these components varied in either a correlated or orthogonal fashion. Discrimination scores indicated significant redundancy gains for determining frequency differences when the two components varied in a correlated fashion, and significant interference effects when these components varied orthogonally. Acker and Pastore interpreted these results as indicating that listeners process musical chords in an integral fashion.

With respect to the current findings, a natural extension of these results would be to examine whether pitch and rhythm contours also exhibit redundancy gains in the processing of melodies. Multiple questions could be considered in this regard, including whether comparable pitch and rhythm contours, relative to varying pitch and rhythm contours, induce more accurate short-term memory for melodies and increased accuracy in judgments of pitch and/or duration content in these melodies (e.g., did such melodies contain a given pitch or duration?). Interestingly, the implications of such work would converge with the questions, discussed previously, about the relation between pitch and temporal dimensions in music processing.

In summary, the current results, which demonstrate listeners’ abilities to form rhythm contours and to use such information in musical processing, provide an important extension to previous work on rhythm perception, as well as on the role of contour information in musical apprehension. Even more generally, these findings provide important insights into how listeners actively understand complex auditory sequences that continuously change in their multidimensional attributes (e.g., pitch, duration, loudness, timbre). Accordingly, such work has the potential to shed new light on general processes involved in the perception and organization of complex auditory information, with corresponding implications across a wide array of auditory domains.

Data availability

The data will be uploaded to OSF upon publication.

Notes

The original intent of employing the 1 to 6 rating scale was to use these confidence scores to calculate memory operating characteristic (MOC) curves for the same contour rhythm and different contour rhythm comparisons, using the area under the MOC curve as the principal dependent measure. However, initial calculations of the area under the MOC curve suggested that this more refined measure added no additional insight to the general pattern of findings observed. Accordingly, the more conceptually familiar and more easily interpreted d-prime values were employed as the principal dependent measure in this work.
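
For readers less familiar with the measure, d-prime is conventionally computed as the difference between the z-transformed hit rate and the z-transformed false-alarm rate. The following is a minimal Python sketch assuming that standard yes/no signal-detection definition; the text does not give the exact formula or any correction for extreme rates used in this work, and the function name and example rates are hypothetical.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """z(hit rate) - z(false-alarm rate); both rates must lie strictly between 0 and 1."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


# Hypothetical rates for illustration only (NOT values from the experiments).
print(round(d_prime(0.85, 0.30), 2))
```

Rates of exactly 0 or 1 have no finite z-score, so some adjustment would be needed in practice before applying this function; which adjustment, if any, was applied here is not stated.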

References

Abe, J., & Okada, A. (2004). Integration of metrical and tonality organization in melody perception. Japanese Psychological Research, 46, 298–307. https://doi.org/10.1111/j.1468-5584.2004.00262.x

Acker, B. E., & Pastore, R. E. (1996). Perceptual integrality of major chord components. Perception & Psychophysics, 58, 748–761. https://doi.org/10.3758/bf03213107

Adams, C. R. (1976). Melodic contour typology. Ethnomusicology, 20, 179–215. https://doi.org/10.2307/851015

Aiello, R. (1994). Music and language: Parallels and contrasts. In R. Aiello & J. Sloboda (Eds.), Musical perceptions (pp. 40–63). Oxford University Press.

Andrews, M. W., & Dowling, W. J. (1991). The development of perception of interleaved melodies and control of auditory attention. Music Perception, 8, 349–368. https://doi.org/10.2307/40285518

Barnes-Burroughs, K., Watts, C., Brown, O. L., & LoVetri, J. (2006). The visual/kinesthetic effects of melodic contour in musical notation as it affects vocal timbre in singers of classical and music theater repertoire. Journal of Voice, 19, 411–419. https://doi.org/10.1016/j.jvoice.2004.08.001

Bartlett, J. C., & Dowling, W. J. (1980). Recognition of transposed melodies: A key-distance effect in developmental perspective. Journal of Experimental Psychology: Human Perception and Performance, 6, 501–515. https://doi.org/10.1037//0096-1523.6.3.501

Bey, C., & McAdams, S. (2002). Schema-based processing in auditory scene analysis. Perception & Psychophysics, 64, 844–854. https://doi.org/10.3758/bf03194750

Bey, C., & McAdams, S. (2003). Postrecognition of interleaved melodies as an indirect measure of auditory stream formation. Journal of Experimental Psychology: Human Perception and Performance, 29, 267–279. https://doi.org/10.1037/0096-1523.29.2.267

Boltz, M. (1989a). Perceiving the end: Effects of tonal relationships on melodic completion. Journal of Experimental Psychology: Human Perception and Performance, 15, 749–761. https://doi.org/10.1037/0096-1523.15.4.749

Boltz, M. (1989b). Rhythm and “good endings”: Effects of temporal structure on tonality judgments. Perception & Psychophysics, 46, 9–17. https://doi.org/10.3758/BF03208069

Boltz, M. (1989c). Time judgments of musical endings: Effects of expectancies on the “filled interval effect”. Perception & Psychophysics, 46, 409–418. https://doi.org/10.3758/bf03210855

Boltz, M. (1991). Some structural determinants of melody recall. Memory & Cognition, 19, 239–251. https://doi.org/10.3758/bf03211148

Boltz, M. (1992). Temporal accent structure and the remembering of filmed narratives. Journal of Experimental Psychology: Human Perception and Performance, 18, 90–105. https://doi.org/10.1037/0096-1523.18.1.90

Boltz, M. (1993). The generation of temporal and melodic expectancies during musical listening. Perception & Psychophysics, 53, 585–600. https://doi.org/10.3758/bf03211736

Bregman, A. S. (1990). Auditory scene analysis: The perceptual organization of sound. MIT Press.

Carlyon, R. P. (2004). How the brain separates sounds. Trends in Cognitive Sciences, 8, 465–471. https://doi.org/10.1016/j.tics.2004.08.008

Cooper, G., & Meyer, L. B. (1960). The rhythmic structure of music. University of Chicago Press.

Croonen, W. L. M. (1994). Effects of length, tonal structure, and contour in the recognition of tone series. Perception & Psychophysics, 55, 623–632. https://doi.org/10.3758/bf03211677

Croonen, W. L. M. (1995). Two ways of defining tonal strength and implications for recognition of tone series. Music Perception, 13, 109–119. https://doi.org/10.2307/40285687

Croonen, W. L. M., & Kop, P. F. M. (1989). Tonality, tonal scheme and contour in delayed recognition of tone sequences. Music Perception, 7, 49–67. https://doi.org/10.2307/40285448

Crowder, R. G., & Neath, I. (1995). The influence of pitch on time perception in short melodies. Music Perception, 12, 379–386. https://doi.org/10.2307/40285672

DeWitt, L. A., & Crowder, R. G. (1986). Recognition of novel melodies after brief delays. Music Perception, 3, 259–274. https://doi.org/10.2307/40285336

Dowling, W. J. (1971). Recognition of inversions of melodies and melodic contours. Perception & Psychophysics, 9, 348–349. https://doi.org/10.3758/BF03212663

Dowling, W. J. (1972). Recognition of melodic transformations: Inversion, retrograde, and retrograde inversion. Perception & Psychophysics, 12, 417–421. https://doi.org/10.3758/BF03205852

Dowling, W. J. (1973). The perception of interleaved melodies. Cognitive Psychology, 5, 322–337. https://doi.org/10.1016/0010-0285(73)90040-6

Dowling, W. J. (1978). Scale and contour: Two components of a theory of memory for melodies. Psychological Review, 85, 341–354. https://doi.org/10.1037/0033-295X.85.4.341

Dowling, W. J. (1984). Musical experience and tonal scales in the recognition of octave-scrambled melodies. Psychomusicology, 4, 13–32. https://doi.org/10.1037/h0094206

Dowling, W. J. (1991). Tonal strength and melody recognition after long and short delays. Perception & Psychophysics, 50, 305–313. https://doi.org/10.3758/bf03212222

Dowling, W. J. (1994). Melodic contour in hearing and remembering melodies. In R. Aiello & J. A. Sloboda (Eds.), Musical perceptions (pp. 173–190). Oxford University Press.

Dowling, W. J., & Bartlett, J. C. (1981). The importance of interval information in long-term memory for melodies. Psychomusicology, 1, 30–49. https://doi.org/10.1037/h0094275

Dowling, W. J., Bartlett, J. C., Halpern, A. R., & Andrews, M. W. (2008). Melody recognition at fast and slow tempos: Effects of age, experience, and familiarity. Perception & Psychophysics, 70, 496–502. https://doi.org/10.3758/PP.70.3.496

Dowling, W. J., & Fujitani, D. (1971). Contour, interval and pitch recognition in memory for melodies. Journal of the Acoustical Society of America, 49, 524–531. https://doi.org/10.1121/1.1912382

Dowling, W. J., Kwak, S., & Andrews, M. W. (1995). The time course of recognition of novel melodies. Perception & Psychophysics, 57, 139–149. https://doi.org/10.3758/bf03206500

Dowling, W. J., Lung, K. M.-T., & Herrbold, S. (1987). Aiming attention in pitch and time in the perception of interleaved melodies. Perception & Psychophysics, 41, 642–656. https://doi.org/10.3758/bf03210496

Edworthy, J. (1985). Interval and contour in melody processing. Music Perception, 2, 375–388. https://doi.org/10.2307/40285305

Eerola, T., & Bregman, M. (2007). Melodic and contextual similarity of folk song phrases. Musicae Scientiae, Discussion Forum, 4A-2007, 211–233. https://doi.org/10.1177/102986490701100109

Eerola, T., Himberg, T., Toiviainen, P., & Louhivuori, J. (2006). Perceived complexity of western and African folk melodies by western and African listeners. Psychology of Music, 34, 337–371. https://doi.org/10.1177/0305735606064842

Eerola, T., Järvinen, T., Louhivuori, J., & Toiviainen, P. (2001). Statistical features and perceived similarity of folk melodies. Music Perception, 18, 275–296. https://doi.org/10.1525/mp.2001.18.3.275

Fitzroy, A. B., & Sanders, L. D. (2020). Subjective metric organization directs the allocation of attention across time. Auditory Perception & Cognition, 3, 212–237. https://doi.org/10.1080/25742442.2021.1898924

Friedmann, M. L. (1985). A methodology for the discussion of contour: Its application to Schoenberg’s music. Journal of Music Theory, 29, 223–248. https://doi.org/10.2307/843614

Friedmann, M. L. (1987). A response: My contour, their contour. Journal of Music Theory, 31, 268–274. https://doi.org/10.2307/843710

Garner, W. R. (1970). Good patterns have few alternatives. American Scientist, 58, 34–42.

Garner, W. R. (1974). The processing of information and structure. Erlbaum.

Garner, W. R. (1976). Interaction of stimulus dimensions in concept and choice processes. Cognitive Psychology, 8, 98–123. https://doi.org/10.1016/0010-0285(76)90006-2

Garner, W. R., & Felfoldy, G. L. (1970). Integrality of stimulus dimensions in various types of information processing. Cognitive Psychology, 1, 225–241. https://doi.org/10.1016/0010-0285(70)90016-2

Halpern, A. R., & Bartlett, J. C. (2010). Memory for melodies. In M. R. Jones, R. R. Fay, & A. N. Popper (Eds.), Music perception (pp. 233–258). Springer-Verlag.

Halpern, A. R., Bartlett, J. C., & Dowling, W. J. (1998). Perception of mode, rhythm and contour in unfamiliar melodies: Effects of age and experience. Music Perception, 15, 335–355. https://doi.org/10.2307/40300862

Jones, M. R. (2019). Time will tell—A theory of dynamic attending. Oxford University Press.

Jones, M. R., & Boltz, M. (1989). Dynamic attending and responses to time. Psychological Review, 96, 459–491. https://doi.org/10.1037/0033-295x.96.3.459

Jones, M. R., Boltz, M., & Kidd, G. (1982). Controlled attending as a function of melodic and temporal context. Perception & Psychophysics, 32, 211–218. https://doi.org/10.3758/bf03206225

Kidd, G. R., Boltz, M., & Jones, M. R. (1984). Some effects of rhythmic context on melody recognition. American Journal of Psychology, 97, 153–173. https://doi.org/10.2307/1422592

Koenderink, J. J., van Doorn, A. J., Kappers, A. M. L., & Todd, J. T. (1997). The visual contour in depth. Perception & Psychophysics, 59, 828–838. https://doi.org/10.3758/bf03205501

Krumhansl, C. L., & Schmuckler, M. A. (1986). The Petroushka chord: A perceptual investigation. Music Perception, 4, 153–184. https://doi.org/10.2307/40285359

Large, E. W., & Jones, M. R. (1999). The dynamics of attending: How we track time varying events. Psychological Review, 106, 119–159. https://doi.org/10.1037/0033-295X.106.1.119

Lee, Y.-S., Janata, P., Frost, C., Hanke, M., & Granger, R. (2011). Investigation of melodic contour processing in the brain using multivariate pattern-based fMRI. NeuroImage, 57, 293–300. https://doi.org/10.1016/j.neuroimage.2011.02.006

Lerdahl, F., & Jackendoff, R. (1983). A generative theory of tonal music. MIT Press.

Loffler, G. (2008). Perception of contours and shapes: Low and intermediate stage mechanisms. Vision Research, 48, 2106–2127. https://doi.org/10.1016/j.visres.2008.03.006

London, J. (2002). Cognitive constraints on metric systems: Some observations and hypotheses. Music Perception, 19, 529–550. https://doi.org/10.1525/mp.2002.19.4.529

Lu, X., Sun, Y., Ho, H. T., & Thompson, W. F. (2017). Pitch contour impairment in congenital amusia: New insights from the self-paced audio-visual contour task (SACT). PLoS One, 12, Article e0179252. https://doi.org/10.1371/journal.pone.0179252

Makris, I., & Mullet, É. (2003). Judging the pleasantness of contour-rhythm-pitch-timbre musical combinations. American Journal of Psychology, 116, 581–611. https://doi.org/10.2307/1423661

Marvin, E. W. (1991). The perception of rhythm in non-tonal music: Rhythmic contours in the music of Edgard Varèse. Music Theory Spectrum, 13, 61–78. https://doi.org/10.2307/745974

Marvin, E. W., & Laprade, P. A. (1987). Relating musical contours: Extensions of a theory for contour. Journal of Music Theory, 31, 225–267. https://doi.org/10.2307/843709

McDermott, J. H., Lehr, A. J., & Oxenham, A. J. (2008). Is relative pitch specific to pitch? Psychological Science, 19, 1263–1271.

Mikumo, M. (1992). Encoding strategies for tonal and atonal melodies. Music Perception, 10, 73–81. https://doi.org/10.2307/40285539

Monahan, C. B., & Carterette, E. C. (1985). Pitch and duration as determinants of musical space. Music Perception, 3, 1–32. https://doi.org/10.2307/40285320

Monahan, C. B., Kendall, R. A., & Carterette, E. C. (1987). The effect of melodic and temporal contour on recognition memory for pitch change. Perception & Psychophysics, 41, 576–600. https://doi.org/10.3758/BF03210491

Mordkoff, J. T., & Miller, J. (1993). Redundancy gains and coactivation with two different targets: The problem of target preferences and the effects of display frequency. Perception & Psychophysics, 53, 527–535. https://doi.org/10.3758/bf03205201

Nobre, A. C., & van Ede, F. (2018). Anticipated moments: Temporal structure in attention. Nature Reviews Neuroscience, 18, 34–38. https://doi.org/10.1038/nrn.2017.141

Palmer, C., & Krumhansl, C. L. (1987a). Independent temporal and pitch structures in determination of musical phrases. Journal of Experimental Psychology: Human Perception and Performance, 13, 116–126. https://doi.org/10.1037/0096-1523.13.1.116

Palmer, C., & Krumhansl, C. L. (1987b). Pitch and temporal contributions to musical phrase perception: Effects of harmony, performance timing, and familiarity. Perception & Psychophysics, 41, 505–518. https://doi.org/10.3758/BF03210485

Patel, A. D., Iversen, J. R., & Rosenberg, J. C. (2006). Comparing the rhythm and melody of speech and music: The case of British English and French. The Journal of the Acoustical Society of America, 119, 3034–3047. https://doi.org/10.1121/1.2179657

Pitt, M. A., & Monahan, C. B. (1987). The perceived similarity of auditory polyrhythms. Perception & Psychophysics, 41, 534–546. https://doi.org/10.3758/BF03210488

Pomerantz, J. R., & Garner, W. R. (1973). Stimulus configuration in selective attention tasks. Perception & Psychophysics, 14, 565–569. https://doi.org/10.3758/BF03211198

Prince, J. B. (2011). The integration of stimulus dimensions in the perception of music. The Quarterly Journal of Experimental Psychology, 64, 2125–2152. https://doi.org/10.1080/17470218.2011.573080

Prince, J. B. (2014). Contributions of pitch contour, tonality, rhythm, and meter to melodic similarity. Journal of Experimental Psychology: Human Perception and Performance, 40, 2319–2337. https://doi.org/10.1037/a0038010

Prince, J. B., & Pfordresher, P. Q. (2012). The role of pitch and temporal diversity in the perception and production of musical sequences. Acta Psychologica, 141, 184–198. https://doi.org/10.1016/j.actpsy.2012.07.013

Prince, J. B., Schmuckler, M. A., & Thompson, W. F. (2009a). Cross-modal melodic contour similarity. Canadian Acoustics, 37, 35–49.

Prince, J. B., Schmuckler, M. A., & Thompson, W. F. (2009b). The effect of task and pitch structure on pitch-time interactions in music. Memory & Cognition, 37, 368–381. https://doi.org/10.3758/MC.37.3.368

Prince, J. B., Thompson, W. F., & Schmuckler, M. A. (2009). Pitch and time, tonality and meter: How do musical dimensions combine? Journal of Experimental Psychology: Human Perception and Performance, 35, 1598–1617. https://doi.org/10.1037/a0016456

Quinn, I. (1999). The combinatorial model of pitch contour. Music Perception, 16, 439–456. https://doi.org/10.2307/40285803

Schmuckler, M. A. (1999). Testing models of melodic contour similarity. Music Perception, 16, 295–326. https://doi.org/10.2307/40285795

Schmuckler, M. A. (2004). Pitch and pitch structures. In J. Neuhoff (Ed.), Ecological psychoacoustics (pp. 271–315). Academic Press.

Schmuckler, M. A. (2009). Components of melodic processing. In S. Hallam, I. Cross, & M. Thaut (Eds.), Oxford handbook of music psychology (1st ed. pp. 93–106). Oxford University Press.

Schmuckler, M. A. (2010). Melodic contour similarity using folk melodies. Music Perception, 28, 169–194. https://doi.org/10.1525/mp.2010.28.2.169

Schmuckler, M. A. (2016). Tonality and contour in melodic processing. In S. Hallam, I. Cross, & M. Thaut (Eds.), The Oxford handbook of music psychology (2nd ed., pp. 143–165). Oxford University Press.

Schmuckler, M. A., & Gilden, D. L. (1993). Auditory perception of fractal contours. Journal of Experimental Psychology: Human Perception and Performance, 19, 641–660. https://doi.org/10.1037/0096-1523.19.3.641

Schröter, H., Ulrich, R., & Miller, J. (2007). Effects of redundant auditory stimuli on reaction time. Psychonomic Bulletin & Review, 14, 39–44. https://doi.org/10.3758/bf03194025

Schulze, K., Dowling, W. J., & Tillmann, B. (2012). Working memory for tonal and atonal sequences during a forward and a backward recognition task. Music Perception, 29, 255–267. https://doi.org/10.1525/mp.2012.29.3.255

Shepherdson, P., & Miller, J. (2014). Redundancy gain in semantic categorisation. Acta Psychologica, 148, 96–106. https://doi.org/10.1016/j.actpsy.2014.01.011

Shepherdson, P., & Miller, J. (2016). Non-semantic contributions to “semantic” redundancy gain. The Quarterly Journal of Experimental Psychology, 69, 1564–1582. https://doi.org/10.1080/17470218.2015.1088555

Taylor, G., Hipp, D., Moser, A., Dickerson, K., & Gerhardstein, P. (2014). The development of contour processing: Evidence from physiology and psychophysics. Frontiers in Psychology, 5, Article 719. https://doi.org/10.3389/fpsyg.2014.00719

Tekman, H. G. (2002). Perceptual integration of timing and intensity variations in the perception of musical accents. The Journal of General Psychology, 129, 181–191. https://doi.org/10.1080/00221300209603137

Thaut, M. H. (2008). Rhythm, music, and the brain: Scientific foundations and clinical applications. Routledge.

Tierney, A. T., Bergeson, T. R., & Pisoni, D. B. (2008). Effects of early musical experience on auditory sequence memory. Empirical Musicology Review, 3, 178–186. https://doi.org/10.18061/1811/35989

Vuust, P., & Witek, M. A. G. (2014). Rhythmic complexity and predictive coding: A novel approach to modeling rhythm and meter perception in music. Frontiers in Psychology, 5, Article 1111. https://doi.org/10.3389/fpsyg.2014.01111

Acknowledgments

This research was supported by a grant from the Natural Sciences and Engineering Research Council of Canada, awarded to M. A. Schmuckler. Portions of this work were presented at the Future Directions of Music Cognition conference, March 6–7, 2021.

Funding

The current research was funded by an NSERC Discovery Grant awarded to M. A. Schmuckler.

Ethics declarations

Ethics approval

The experimental protocol was approved by the University of Toronto ethics board (research protocol # 22701), and is in accordance with the 1964 Declaration of Helsinki.

Consent to participate

All participants provided written informed consent for participation.

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Schmuckler, M.A., Moranis, R. Rhythm contour drives musical memory. Atten Percept Psychophys 85, 2502–2514 (2023). https://doi.org/10.3758/s13414-023-02700-w

DOI: https://doi.org/10.3758/s13414-023-02700-w