Abstract

Speech input like [byt] has been shown to facilitate not only the subsequent processing of an identical target word /byt/ but also that of a target word /tyb/ that contains the same phonemes in a different order. Using the short-term phonological priming paradigm, we examined the role of lexical representations in driving the transposed-phoneme priming effect by manipulating lexical frequency. Results showed that the transposed-phoneme priming effect occurs when targets have a higher frequency than primes, but not when they have a lower frequency. Our findings thus support the view that the transposed-phoneme priming effect results from partial activation of the target word’s lexical representation during prime processing. More generally, our study provides further evidence for a role for position-independent phonemes in spoken word recognition.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

One particularity of speech is that it unfolds over time, and consequently, the first sounds that make up a word are heard and begin to be processed before later sounds. In direct connection with this linearity, the most influential models of spoken word recognition (Gaskell & Marslen-Wilson, 1997; Grossberg, 2003; Marslen-Wilson, 1990; Marslen-Wilson & Warren, 1994; Marslen-Wilson & Welsh, 1978; McClelland & Elman, 1986; Norris, 1994) make the strong assumption that the phonological form of words consists of an ordered sequence of sounds. As a result, within such a view the sounds extracted from the speech signal are encoded according to their position in the speech input in order to be successfully mapped onto an ordered sequence of sounds stored in long-term memory. In accordance with this theorizing, there is abundant evidence that words that share the same sounds at the same positions as those present in a given portion of the speech signal are activated and thus are potential candidates for recognition (e.g., Allopenna, Magnuson, & Tanenhaus, 1998; Dufour & Peereman, 2003; Marslen-Wilson, Moss, & van Halen, 1996; Zwitserlood, 1989).

Nonetheless, there are recent demonstrations that position-independent phonemes play a role in spoken word recognition; results that therefore challenge the dominant view of spoken word recognition according to which the precise order of segments must be encoded. Using the visual world paradigm, Toscano, Anderson, and McMurray (2013) examined the eye movements of participants who followed spoken instructions to manipulate objects pictured on a computer screen. They found more fixations on the picture representing a CAT than on a control picture (e.g., the picture of a MILL) when the spoken target was TACK, thus suggesting that CAT and TACK are confusable words, even if the shared consonants are not in the same position. Importantly the activation of CAT when the target is TACK cannot be attributed to shared features between the phonemes /t/ and /k/, since exactly the same pattern of results was found for pairs of words such as BUS and SUB, whose phonemes /s/ and /b/ are maximally distinct. Also, Toscano et al. (2013) showed that the probability of fixating transposed words was higher than the probability of fixating words sharing the same vowels at the same position plus one consonant in a different position (e.g., SUN–BUS). This finding suggests that the transposed-phoneme effect is due to more than just vowel position overlap in the transposed words. The main finding of Toscano et al. (2013) that CAT and TACK are confusable words was replicated in a following study by Gregg, Inhoff, and Connine (2019) with a larger set of items. At the same time, Gregg et al. (2019) showed that competitors without vowel position overlap (e.g., LEAF–FLEA) were not fixated more that unrelated words. Such a finding could argue for a special status for vowels, and in particular that positional vowel match is critical in the observation of transposed-phoneme effects (Gregg et al., 2019; see also Dufour & Grainger, 2019). It is also possible that the distance separating the transposed phonemes could be a factor determining the size of transposed-phoneme effects.

Recent experiments in our own laboratories (Dufour & Grainger, 2019) provided further evidence for a role for position-independent phonemes in spoken word recognition. This new evidence was obtained with a different experimental paradigm and a different language than in the abovementioned studies. Using the phonological priming paradigm, Dufour and Grainger (2019) showed that the French words BUT /byt/ “goal” facilitated the subsequent processing of the target word TUBE /tyb/ “tube.” This transposed-phoneme priming effect was found when unrelated words (MOULE /mul/ “mussel” – TUBE /tyb/ “tube”), vowel overlap words (PUCE /pys/ “flea” – TUBE /tyb/ “tube”) and vowel plus one consonant in a different position overlap words (BULLE /byl/ bubble – TUBE /tyb/ “tube”) were used as control conditions, thus strengthening the previous observations. Testing also the full repetition (TUBE /tyb/– TUBE /tyb/), we observed that the transposed-phoneme priming effect differed from the repetition priming effect by both its magnitude and its time course. The transposed-phoneme priming effect was significantly smaller than the repetition priming effect, and was only obtained using a short-term priming procedure with targets immediately following primes, while the repetition priming effect also occurred in a long-term priming paradigm with primes and targets presented in separated blocks of stimuli. An explanation for these findings is that target words in the transposed-phoneme condition are only partially activated during prime processing. As a result, this partial activation quickly dissipates over time, and only fully activated lexical representations resist the longer delay and impact of intervening items in long-term priming.

To our knowledge, there is currently only one model of spoken word recognition, the TISK model (Hannagan, Magnuson, & Grainger, 2013; see You & Magnuson, 2018, for a more recent implementation), that can account for transposed-phoneme effects.Footnote 1 TISK is an interactive-activation model similar to the TRACE model (McClelland & Elman, 1986), but it replaces the position-dependent units in TRACE by both a set of position-independent phoneme units and a set of open-diphone units that represent ordered sequences of contiguous and noncontiguous phonemes (cf. the open-bigram representations proposed by Grainger & van Heuven, 2003, for visual word recognition). Within such a framework, it is position-independent phoneme units that lead to partial activation of transposed words, while diphone units encode the order of phonemes and allow the model to distinguish between transposed words.

We explained the results of our previous study (Dufour & Grainger, 2019) as resulting from partial activation of the lexical representation of the target word during processing of transposed-phoneme primes. The key hypothesis being that a lexical representation can be activated by a prime that shares phonemes with the target word even when the phonemes occupy different positions in prime and target. However, an alternative account of this priming effect, which could easily be envisaged within TISK, is that the effect does not involve lexical representations, but merely results from the repeated activation of the same time-invariant prelexical units during processing of primes and targets. Within such a view, the transposed-phoneme priming effect would only reflect processes occurring before, but not during, lexical access. Here, we provide a test of the lexical and prelexical accounts of the transposed-phoneme priming effect by manipulating lexical frequency. The logic behind this manipulation is straightforward. If the transposed-phoneme priming effect only involves sublexical representations, then we expect no modulation in the magnitude of the effect as a function of lexical factors, such as word frequency.Footnote 2 In contrast, if the transposed-phoneme priming effect involves lexical representations, the effect should modulate as a function of lexical factors such as word frequency.

In order to optimize our chances of finding an impact of lexical frequency on the transposed-phoneme priming effect, we opted for a relative-frequency manipulation whereby prime–target pairs were selected such that prime word frequency was lower than target frequency or vice versa by simply changing the order of primes and targets. The choice of a relative-frequency manipulation was motivated by practical and theoretical reasons. Practically speaking, it was not possible to select two transposed target words, one of low frequency and the other of high frequency, for the same prime word. Most important, however, is a consideration of how prime and target frequency can modulate short-term priming effects based on the principles of interactive-activation, and notably in terms of the role of word frequency in determining the level of activation attained by a given lexical representation upon presentation of speech input.

Most models of spoken word recognition (e.g., Gaskell & Marslen-Wilson, 1997; Marslen-Wilson, 1990; McClelland & Elman, 1986) account for word-frequency effects by assuming that high-frequency words receive stronger bottom-up input from the speech signal than do low-frequency words. Interactive-activation models (Hannagan et al., 2013; McClelland & Elman, 1986) further assume that the higher the activation level of a lexical representation the more it can inhibit all other coactivated words via lateral inhibition, as attested by neighborhood frequency effects (e.g., Dufour & Frauenfelder, 2010, for spoken words, and Grainger, O’Regan, Jacobs, & Segui, 1989, for written words). In terms of modulating priming effects, the general idea is that in a short-term priming context where primes and targets are related (i.e., share phonemes) but are not the same words, then the amount of priming is determined by the level of activation reached by the target word representation during prime processing. On the basis of the abovementioned principles, we argue that, for a given amount of prime–target overlap, the greatest level of target word activation, and therefore the greatest amount of priming occurs when primes are low frequency and targets high frequency. During prime word processing, the more frequent a target word is the more it will benefit from bottom-up activation via phonemes shared with the prime, and the less frequent a prime word is the less it can inhibit the target word via lateral inhibition.

Method

Participants

Eighty French speakers from Aix-Marseille University participated in the experiment. All participants reported having no hearing or speech disorders. Half of them were tested in the higher frequency condition, and the other half were tested in the lower frequency condition.

Materials

Twenty-six pairs of CVC French words that shared all of the phonemes but with the two consonants in a different order (e.g., ROBE /ʀͻb/ “dress” – BORD /bͻʀ/ “edge”) were selected from Vocolex, a lexical database for French (Dufour, Peereman, Pallier, & Radeau, 2002). They were selected such as the difference in frequencies of the two words of a pair was greater than 45 occurrences per million. Each of the words of a pair was used as a prime or as a target, depending of the frequency condition. In each frequency condition and for each target word, a control prime word sharing with the target only the medial vowel was selected (e.g. VOL /vͻl/ “flight” – BORD /bͻʀ/ “edge” for the lower/higher frequency condition; SOMME /sͻm/ “sum” – ROBE /ʀͻb/ “dress” for the higher/lower frequency condition). All the words have their uniqueness point after the last phoneme. None of the transposed prime–target pairs were semantically related in any obvious way. The main characteristics of the prime and the target words are given in Table 1. The complete set of prime and target words are given in Appendix 1.Footnote 3

For each frequency condition, two experimental lists were created so that each of the 26 target words were preceded by the two types of prime (transposed, control), and participants were presented with each target word only once. Note that a between-participants design for the factor relative prime–target frequency was used in order to have a sufficient number of trials for each type of prime across lists (i.e., 13 in this present case), while avoiding stimuli repetition within participants, such that a given prime or target was never heard twice by a same participant. The follow-up statistical analyses were made accordingly with the factor prime–target relative frequency entered as a between factor. For the purpose of the lexical decision task, 26 target nonwords were added to each list. The nonwords were created by changing the last phoneme of words not used in the experiment (e.g., the nonword /bãʒ/ derived from the word /bãk/ banque “bank”). This allowed us to have wordlike nonwords, and to force participants to listen to the stimuli up to the end prior to giving their response. So that the nonwords followed the same criteria as the words, 13 of them were paired with a prime word sharing the same phonemes, but in a different order (e.g., the prime word JAMBE /ʒãb/ “leg” and the nonword target /bãʒ/). The 13 other nonwords were paired with a prime word sharing only the medial phoneme (e.g., the prime word FOUR /fuʀ/ “oven” and the nonword target /mup/). In addition, 78 unrelated prime–target pairs having no phoneme in common were added to each list. Half of the unrelated pairs consisted of a prime word and a target word (e.g., GUERRE /gɛʀ/ “war” – DANSE /dãs/ “dance”), and the other half consisted of a prime word and a target nonword (e.g., LUGE /lyʒ/ “luge” – /bif/). All of the stimuli were recorded by a female native speaker of French, in a sound attenuated room, and digitized at a sampling rate of 44 kHz with 16-bit analog to digital recording.

Procedure

The participants were tested in a sound-attenuated booth. Stimulus presentation and recording of the data were controlled by a PC running E-Prime software. The primes and the targets were presented over headphones at a comfortable sound level, and an interval of 20 ms (ISI) separated the offset of the prime and the onset of the target. Participants were asked to make a lexical decision as quickly and accurately as possible on the target stimuli, with “word” responses being made using their dominant hand on an E-Prime response box that was placed in front of them. RTs were recorded from the onset of stimuli. The prime–targets pairs were presented randomly, and an intertrial interval of 2,000 ms elapsed between the participant’s response and the presentation of the next pair. Participants were tested on only one experimental list and began the experiment with 12 practice trials.

Results

Three pairs of prime–target words that gave rise to an error rate of more than 60% in the lower frequency target condition were removed from the analyses. The mean RT and percentage of correct responses on target words in each priming condition are presented in Table 2. The percentage of correct responses was analyzed using a mixed-effects logit model (Jaeger, 2008). No significant effects were found in an analysis of error rates, and so we will not discuss them further.

RTs on target words were analyzed using linear mixed effects models with participants and target words as crossed random factors, using R software (R Development Core Team, 2016) and the lme4 package (Baayen, Davidson, & Bates, 2008; Bates & Sarkar, 2007). The RT analysis was performed on correct responses, thus removing 190 (10.33%) data points out of 1,840. RTs greater than 2,200 ms (2.24%) were also excluded from the analysis. For the model to meet the assumptions of normally distributed residuals and homogeneity of variance, a log transformation was applied to the RTs (Baayen & Milin, 2010) prior to running the model. The model was run on 1,613 data points. We tested a model with the variable prime type (transposed, control) and frequency (lower/higher, higher/lower) entered as fixed effects. The model also included participants and items as random intercepts, plus random participant and item slopes for the within factor prime type (see Barr, Levy, Scheepers, & Tily, 2013). Global effects were reported and were obtained using the “anova” function, and the p values were computed using the Satterwaite approximation for degrees of freedom using lmerTest (Kuznetsova, Brockhoff, & Christensen, 2013).

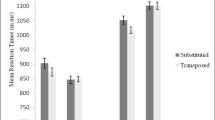

The main effect of frequency was significant, F(1, 108) = 5.84, p < .05, with RTs on target words being shorter in the lower/higher frequency condition than in the higher/lower frequency condition. The main effect of prime type was marginally significant, F(1, 354) = 3.58, p = .06. Crucially, the interaction between prime type and frequency was significant, F(1, 354) = 4.13, p < .05. Subsequent pairwise comparisons with a Bonferroni correction were done to assess the effect of priming within each frequency condition. A significant priming effect was observed only in the lower/higher frequency condition (z = −2.79, p < .05) with RTs on target words being 45 ms shorter when preceded by transposed primes in comparison with control primes. No significant priming effect was observed in the higher/lower frequency condition (z = 0.21, p > .20).

Discussion

Using the short-term phonological priming procedure, we recently showed that a speech input like [byt] not only facilitates the subsequent processing of the corresponding target word /byt/ but also that of the target word /tyb/ that contains the same phonemes in a different order (Dufour & Grainger, 2019). In the present study, we examined whether lexical representations are involved in the transposed priming effect by manipulating lexical frequency. The results are clear cut. Transposed-phoneme priming effects occurred when the targets were of higher frequency than the primes, but not when they were of lower frequency than the primes. Such a finding unequivocally argues in favor of an involvement of lexical representations in driving transposed-phoneme effects, and rules out an interpretation according to which priming would be uniquely due to residual activity in prelexical representations that carries over from prime to target processing. The present results therefore reinforce our claim that transposed-phoneme priming effects result from the greater activation of the lexical representations of target words during prime processing in the transposed-prime condition compared with control primes.

As discussed earlier, TISK (Hannagan et al., 2013) is currently the sole model that can account for transposed-phoneme effects. This is mainly because this model incorporates a set of position-independent phoneme units, which thus trigger activation of words that share all their phonemes with a given target word, but in a different order. Within this framework, how might the relative frequency of the prime and the target words affect the transposed priming effect? As noted in the Introduction, there are two mechanisms that likely contribute to the increase in priming effects when targets are more frequent than primes than vice versa. These mechanisms concern the way in which frequency influences bottom-up activation of lexical representations, and the way it modulates lateral inhibitory influences between coactivated lexical representations. The most straightforward way to conceptualize frequency effects in a connectionist model like TRACE (see Dahan, Magnuson, & Tanenhausl, 2001, for simulations), is in the connection strengths between phoneme representations and whole-word representations, with greater connections strength for high-frequency words. Although word frequency is not yet implemented in TISK, an implementation of frequency via variation in the connection strengths between sublexical phone and biphone representations and lexical representations would allow the position-independent phoneme units to generate more activation in the lexical representations of words that contain these phonemes when the words increase in frequency. As concerns the effects of lateral inhibition (lexical competition), a key principle in interactive-activation models like TISK (Hannagan et al., 2013) and its predecessor TRACE (McClelland & Elman, 1986), according to which coactivated lexical representations compete among themselves via lateral inhibition as a function of their relative activation levels, the more activated a lexical representation is the more it can inhibit competing representations. These two mechanisms work together to increase the activation level of target word representations during prime processing when the prime is lower frequency than the target. Low-frequency primes generate less inhibition on the target word representation, and high-frequency targets receive more bottom-up activation during prime processing.

We nevertheless acknowledge that the absolute frequency of both primes and targets was confounded with our relative frequency manipulation.Footnote 4 Future research could examine effects of absolute frequency on the transposed-priming effect by having prime–target pairs that are both high frequency or both low frequency. Given that the aim of the present work was to simply demonstrate an influence of lexical frequency on the transposed-phoneme priming effect, we opted for what was arguably the strongest manipulation possible. Our choice was determined by prior research showing an impact of the relative frequency of phonologically and/or orthographically similar words on spoken word recognition (e.g., Dufour & Frauenfelder, 2010) and visual word recognition (e.g., Grainger et al., 1989). In general, future studies could independently manipulate target word frequency, prime word frequency, and relative prime–target frequency in order to better specify the contribution of bottom-up factors (e.g., phone-to-word connection strengths) and lateral inhibition to transposed-phoneme priming effects.

Although our study clearly suggests that lexical representations are involved in the transposed-priming effect, this does not mean that the effect uniquely arises at the lexical level of processing. Indeed, as mentioned in Footnote 2, another possibility is that our effect results from the interaction between lexical and sublexical levels of representations, and thus in such a case the transposed-priming effect would have several loci. Models like TRACE (McClelland & Elman, 1986) and TISK (Hannagan et al., 2013) also include top-down connections between lexical and sublexical units, by which lexical representations, once activated, boost the activation level of their sublexical units. By this mechanism, high-frequency words that receive more activation than low-frequency words during prime processing return more activation to their (time-invariant) sublexical units, which in turn boost the activation level of the lexical representations that they are associated with. As a result, high-frequency words are more affected by the boost coming from the prelexical level than low-frequency words, thus providing another mechanism for the stronger priming effect observed with high frequency words. The important point here is that our results allow us to rule out a purely prelexical locus of the transposed-phoneme priming effect, and point to a role for lexical representation in driving this effect most likely via several of the mechanisms postulated in interactive-activation models.

In conclusion, the present study demonstrated that word frequency plays an important role in determining the size of transposed-phoneme priming effects. We are thus confident that the effect is driven by differences in the activation of lexical representations during prime word processing. Future computational work could conduct simulation studies to identify which mechanism or combination of mechanisms among lateral inhibition, resting level activation or phone-to-word connection strengths, and word–phone feedback best explains the modulation of the transposed-phoneme priming effect as a function of word frequency. Furthermore, because, to this date, transposed-priming effects have only been observed with short words, future research could also examine the contribution of factors such as word length, uniqueness point, position and proximity of the transposed phonemes. For example, given the sequentiality of the speech signal, a possibility is that the position of the initial phonemes would be more accurately encoded than that of the final phonemes. As a result, even if as shown in this study, the transposed-phoneme effect is observed in short-words with the first and last phonemes transposed, we might expect, especially in longer words, to observe greater priming effects when the transposed-phonemes occur at the end of words in comparison to the beginning of the word. Although our study opens up an intriguing set of questions, it nonetheless constitutes another demonstration of the existence of position-independent phonemes in auditory lexical access, which we believe provides additional strong constraints for the modeling of spoken word recognition. In a broader way, a parallelism could be made between the transposed-phoneme effects found in spoken word recognition and the transposition errors that commonly occur during speech production (e.g., Meyer, 1992). Transposed-phoneme effects thus appear to reinforce the view that speech perception and speech production are tightly linked, and that the phoneme could be the functional linguistic unit common to the production and comprehension of spoken language.

Notes

We note here that adding positional noise to models that provide a precise encoding of phoneme order might enable these models to account for transposed-phoneme effects, hence mimicking certain models of orthographic processing (e.g., Gomez, Ratcliff, & Perea, 2008), and their account of transposed-letter effects.

It could be argued that activity in such sublexical representations might be sensitive to word frequency via top-down feedback from lexical representations, but then the transposed-phoneme priming effect would no longer be purely prelexical in nature.

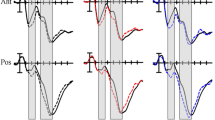

Intriguingly our two sets of words differ in their segmental contents in that 19 target words out of 26 in the higher/lower frequency condition began with a liquid (/l/ and /r/), and none of the target words began with a liquid in the lower/higher frequency condition. To test whether the transposed-phoneme priming effect could depend on the segmental contents of the target words, we conducted additional analyses on the set of words of our previous study (Dufour & Grainger, 2019, Experiment 3), in which vocalic overlap was also used as control. Among the 45 prime–target pairs used in that experiment, 11 consisted in targets beginning with a liquid (CALE /kal/ “wedge” – LAC /lac/ “lake”) and 13 consisted in targets beginning with a consonant other than a liquid but ending with a liquid (LOUPE /lup/ – “magnifying glass” – POULE /pul/ “hen”), thus mimicking the present set of words. Our analysis did not reveal a significant modulation of the priming effect as a function of the segmental contents. It was around 20 ms in the two groups (/cal/ – /lac/; Mean RTs: 1,025 ms and 1,005 ms for the control and transposed primes; /lup/ – /pul/; Mean RTs: 1,032 ms and 1,013 ms for the control and transposed primes). For the totality of the pairs the magnitude of the priming effect was around 26 ms.

Given the overlap between the frequency ranges of our two sets of stimuli, we examined the impact of this on the priming effects we observed. The overlap was due to only seven prime–target pairs out of 26. Removing these pairs did not change the pattern of results. The mean RTs were 1,119 ms and 1,122 ms for the control and transposed primes in the higher/lower frequency condition, and 1,024 ms and 983 ms for the control and transposed primes in the lower/higher frequency condition.

References

Allopenna, P. D., Magnuson, J. S., & Tanenhaus, M. K. (1998). Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language, 38, 419–439.

Baayen, R. H., & Milin, P. (2010). Analyzing reaction times. International Journal of Psychological Research, 3, 12–28.

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412.

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68, 255–278.

Bates, D. M., & Sarkar, D. (2007). lme4: Linear mixed-effects models using S4 classes (R Package Version 2.6) [Computer software]. Retrieved from http://lme4.r-forge.r-project.org

Dahan, D., Magnuson, J. S., Tanenhaus, M. K. (2001). Time course of frequency effects in spoken word recognition: Evidence from eye movements. Cognitive Psychology, 42, 317–367.

R Development Core Team. (2016). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from http://www.R-project.org

Dufour, S., & Frauenfelder, U. H. (2010). Phonological neighbourhood effects in French spoken word recognition. Quarterly Journal of Experimental Psychology, 63, 226–238.

Dufour, S., & Grainger, J. (2019): Phoneme-order encoding during spoken word recognition: A priming investigation. Cognitive Science,43, 1–16.

Dufour, S., & Peereman, R. (2003). Lexical competition in phonological priming: Assessing the role of phonological match and mismatch lengths between primes and targets. Memory & Cognition, 31, 1271–1283.

Dufour, S., Peereman, R., Pallier, C., & Radeau, M. (2002). VoCoLex: A lexical database on phonological similarity between French words. L’Année Psychologique, 102, 725–746.

Gaskell, M. G., & Marslen-Wilson, W. D. (1997). Integrating form and meaning: A distributed model of speech perception. Language and Cognitive Processes, 12, 613–656.

Gomez, P., Ratcliff, R., & Perea, M. (2008). The overlap model: A model of letter position coding. Psychological Review, 115, 577–601.

Grainger, J., & van Heuven, W. J. B. (2003). Modeling letter position coding in printed word perception. In P. Bonin (Ed.), The mental lexicon (pp. 1–23). New York, NY: Nova Science.

Grainger, J., O’Regan, K., Jacobs, A., & Segui, J. (1989). On the role of competing word units in visual word recognition: The neighborhood frequency effect. Perception & Psychophysics, 45, 189–195.

Gregg, J., Inhoff, A. W., & Connine, C. M. (2019). Re-reconsidering the role of temporal order in spoken word recognition. Quarterly Journal of Experimental Psychology, 72, 2574–2583.

Grossberg, S. (2003). Resonant neural dynamics of speech perception. Journal of Phonetics, 31, 423–445.

Hannagan, T., Magnuson, J. S., & Grainger, J. (2013). Spoken word recognition without a TRACE. Frontiers in Psychology, 4, 563.

Jaeger, T. F. (2008). Categorical data analysis: Away from ANOVAs (transformation or not) and towards logit mixed models. Journal of Memory and Language, 59, 434–446.

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2013). lmerTest: Tests in linear mixed effects models (R Package Version 2.0-11) [Computer software]. Retrieved from http://cran.r-project.org/web/packages/lmerTest/index.html

Marslen-Wilson, W. D. (1990). Activation, competition, and frequency in lexical access. In G. T. M. Altmann, Cognitive models of speech processing: psycholinguistic and computational perspectives (pp. 148–172). Cambridge, MA: MIT Press.

Marslen-Wilson, W. D., & Warren, P. (1994). Levels of perceptual representation and process in lexical access: Words, phonemes, and features. Psychological Review, 101, 653–675.

Marslen-Wilson, W. D., & Welsh, A. (1978). Processing interaction and lexical access during word recognition in continuous speech. Cognitive Psychology, 10, 29–63.

Marslen-Wilson, W. D., Moss, H. E., & van Halen, S. (1996). Perceptual distance and competition in lexical access. Journal of Experimental Psychology: Human Perception and Performance, 22, 1376–1392.

McClelland, J. L., & Elman, J. L. (1986). The TRACE model of speech perception. Cognitive Psychology, 18, 1–86.

Meyer, A. S. (1992). Investigation of phonological encoding through speech error analyses: Achievements, limitations and alternatives. Cognition, 42, 181–211.

Norris, D. (1994). SHORTLIST: A connectionist model of continuous speech recognition. Cognition, 52, 189–234.

Toscano, J. C., Anderson, N. D., & McMurray, B. (2013). Reconsidering the role of temporal order in spoken word recognition. Psychonomic Bulletin & Review, 20, 981–987.

You, H., & Magnuson, J. (2018). TISK 1.0: An easy-to-use Python implementation of the time-invariant string kernel model of spoken word recognition. Behavior Research Methods, 50, 871–889.

Zwitserlood, P. (1989). The locus of the effects of sentential-semantic context in spoken-word processing. Cognition, 32, 25–64.

Acknowledgements

This research was supported by ERC Grant No. 742141. We are grateful to three anonymous reviewers who provided helpful comments on an earlier version of this manuscript.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Primes and targets with their frequency (i.e., “Freq” in number of occurrences per million) used in the Experiment. Phonetic transcriptions and English translations are provided.

Rights and permissions

About this article

Cite this article

Dufour, S., Grainger, J. The influence of word frequency on the transposed-phoneme priming effect. Atten Percept Psychophys 82, 2785–2792 (2020). https://doi.org/10.3758/s13414-020-02060-9

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13414-020-02060-9