Abstract

Researchers have recognized the role that task representation plays in our behavior for many years. However, the specific influence that the structure of one’s task representation has on executive functioning has only recently been investigated. Prior research suggests that adjustments of cognitive control are affected by subtle manipulations of aspects of the stimulus–response pairs within and across task sets. This work has focused on examples of cognitive control such as response preparation, dual-task performance, and the congruency sequence effect. The current study investigates the effect of task representation on another example of control, post-error slowing. To determine if factors that influence how people represent a task affect how behavior is adjusted after an error, an adaptive attention-shifting task was developed with multiple task delimiting features. Participants were randomly assigned to a separate task set (two task sets) or an integrated task set (one task set) group. For the separate set group, the task sets switched after each trial. Results showed that only the integrated set group exhibited post-error slowing. This suggests that task representation influences the boundaries of cognitive control adjustments and has implications for our understanding of how control is organized when adjusting to errors in performance.

Grappling with an ever-changing complex environment can be difficult, given the limitations of our cognitive capacity (Schumacher & Hazeltine, 2016). Nevertheless, both humans and nonhumans routinely prioritize and switch between complex tasks. This adaptive behavior is the result of cognitive control, and it allows for the regulation of competing goals and habitual responses (Miller & Cohen, 2001; Norman & Shallice, 1986; Schumacher & Hazeltine, 2016). How does this flexible adaptive processing constrain itself when faced with complex situations? It is not yet clear what factors are responsible for guiding the resources of cognitive control.

Task representation and the boundaries of control

Stimulus–response (SR) associations have long been considered a fundamental component of action selection (cf. Hazeltine & Schumacher, 2016). Yet abstract relationships that span across sets of stimuli and responses (causing some SR pairs to be represented together as a task) explain important features of human performance (e.g., Duncan, 1977, 1979). The boundaries between tasks can be shaped by stimulus and response features (Dreisbach, 2012). Tasks can be thought of as explicit goal-directed schemas that have a hierarchical structure with subgoals (e.g., turning the faucet to the on position) nested within higher order goals (washing the dishes) and with abstract rules (in some cases scaffolded on differences in stimulus and response features) acting as task delimiters (Gozli, 2019). The higher order structure of a task representation may help determine how control is deployed given one’s current environment (Schumacher & Hazeltine, 2016).

We have previously reported evidence supporting the proposal that the structure of a task representation plays a role in setting the boundaries of cognitive control across a variety of experimental procedures. First, we showed that the size of the congruency sequence effect is modulated by task structure (Akçay & Hazeltine, 2008; Hazeltine, Lightman, Schwarb, & Schumacher, 2011). The congruency sequence effect is defined by a weaker congruency effect for trials following incongruent trials relative to trials following congruent trials (cf. Gratton, Coles, & Donchin, 1992). Some studies have reported this effect even when the tasks and SR sets change on subsequent trials (Freitas, Bahar, Yang, & Banai, 2007; Freitas & Clark, 2015; Weissman, Colter, Drake, & Morgan, 2015). Other studies have not observed such cross-task adjustments (Akçay & Hazeltine, 2008; Egner, Delano, & Hirsch, 2007; Funes, Lupiáñez, & Humphreys, 2010; Verguts & Notebaert, 2008). We found that this inconsistency may stem from subtle differences in the experimental designs used across these studies and that what really determines the span of the congruency-sequence effect is the way participants represent the tasks they are performing. For example, when stimulus or response modality could be used to segregate SR pairs to different tasks (e.g., left-hand vs. right-hand responses, visual vs. auditory stimuli), then the congruency sequence effect did not span changes in stimulus and/or response modality. However, when stimulus and response modality was irrelevant to the task, then the congruency sequence effect spanned stimulus and response modality changes. That is, cognitive control is bounded by the task representation (Hazeltine et al., 2011; Schumacher & Hazeltine, 2016).

Our laboratories have also produced evidence for a role of task structure in other domains. Task structure affects how effectively people are able to use a partially informative cue: We found both behavioral (Cookson, Hazeltine, & Schumacher, 2019) and neural (Cookson, Hazeltine, & Schumacher, 2016) evidence that preparatory processes prime responses within a task set but not across task sets, even when the cue provides the same amount of information about the upcoming SR pairs. We also found that dual-task interference is affected by task representation (Schumacher, Cookson, Smith, Nguyen, Sultan, Reuben, & Hazeltine, 2018). In that study, dual-task interference occurred when participants made two responses from two separate tasks, but not when the two responses were part of the same task set, even though the number and type of stimuli and responses were held constant across the two conditions. Together, these results suggest that the way participants represent the task or tasks they are engaged in can alter the deployment of cognitive control and radically affect behavioral outcomes.

Finally, we have summarized other evidence for how and why a theory of goal-directed behavior should include hierarchical representations of tasks across perceptual, motivational, cognitive, and motor domains (Hazeltine & Schumacher, 2016). Additionally, we have proposed an example of such a hierarchical structure, which we call a task file, and have reviewed how it may help explain complex behaviors (Bezdek, Godwin, Smith, Hazeltine, & Schumacher, 2019; Schumacher & Hazeltine, 2016). Briefly, task files are complex sets of hierarchical associations shaped by the interplay of goals and affordances to facilitate the pursuit of a goal state (Schumacher & Hazeltine, 2016). The task file framework provides an alternative to understanding tasks as simply being composed of SR associations. In short, because the system exerts control through task files, performance adjustments can be context specific. That is, because the SR pairs are segmented into tasks based on clusters of features (e.g., stimulus features, response sets, SR pairings), control parameters can be adjusted for one task segment at a time, and distinct control parameters may be applied to different tasks. Thus, performance can be governed by local (task-specific) control parameters. Still, even though much of control is local to the task, it remains possible that some types of performance adjustments span task representations.

Post-error adjustments

One area where global control processing (i.e., control spanning task sets) is plausible is performance adjustments following an error, where an unexpected error may lead to a global behavioral adjustment. Behaviorally, reaction times (RTs) on trials following errors are slower than those on trials following correct responses (e.g., Laming, 1968; Rabbitt, 1966; Wessel, 2018). Less commonly, post-error accuracy increases have been observed as well (Danielmeier, Eichele, Forstmann, Tittgemeyer, & Ullsperger, 2011; Laming, 1968, 1979; Marco-Pallarés, Camara, Munte, & Rodriguez-Fornells, 2008). The combination of post-error slowing and a post-error increase in accuracy suggests that participants may adjust their speed–accuracy trade-off parameters (or some other kind of adaptive process) after an error (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Ridderinkhof, 2002; Wessel, 2018). On the other hand, other studies have reported post-error decreases in accuracy, which implies that post-error effects might be maladaptive in nature (Fiehler, Ullsperger, & Von Cramon, 2005; Rabbitt & Rodgers, 1977).

The issue we address in the present experiment is whether post-error adjustments reflect a global process or one local to the task in which the error occurred. For example, an error might trigger a global change in processing, so that any response following an error is slowed (Notebaert, Houtman, Van Opstal, Gevers, Fias, & Verguts, 2009; Wessel, 2018). Alternatively, task structure may affect adaptive cognitive-control related post-error slowing. Thus, adjustments in error-related control parameters that lead to improved performance in one task may not necessarily translate to an improved performance on other tasks. Additionally, performance monitoring (e.g., tallying of previous trial response conflict, error likelihood expectations, deviation from some subgoal) might be conducted separately for the two component tasks.

The literature on the context specificity of post-error slowing is currently limited and inconsistent. In one study addressing this issue, Regev and Meiran (2014) report post-error slowing across trials that switch between two tasks requiring participants to make category judgments of visual stimuli using overlapping responses. In another study examining how task structure affects post-error adjustments, Forster and Cho (2014) used a procedure that switched between Stroop and Simon trials. They found evidence that the control processes mediating the congruency sequence effect did not span task boundaries, but that the error-adjustment processes did generalize across tasks. That is, they found that the size of the congruency effect was modulated by the congruency on the previous trial (the congruency sequence effect) only when the previous trial was the same task as the current trial (as in Akçay & Hazeltine, 2008; Hazeltine et al., 2011). However, RTs for trials following errors were slower (and accuracy was lower) regardless of the type of task performed on the previous trial. They even found that increases in RTs following an error spanned five trials (although this effect did attenuate over time). This difference in local versus global effect of previous trial congruency and errors suggests that the control processes mediating congruency are distinct from those mediating post-error effects. This stands in contrast to popular theories such as the conflict monitoring model (Botvinick et al., 2001), which explains the congruency sequence effect and post-error slowing with a common mechanism. That is, more difficult trials—indicated by decreased SR congruency, errors, or both—lead to increases in detected response conflict and subsequent adjustments in cognitive control.

Forster and Cho’s (2014) findings are also puzzling with regard to recent research. Using a dual-task procedure with trials consisting of a color flanker primary task and a pitch discrimination secondary task, Steinhauser et al. (2017) found evidence for time-dependent context sensitivity of post-error slowing. Errors on the primary task led to slowing on the secondary task, but this effect decayed with stimulus onset asynchrony and post-error slowing between trials only occurred within a task. Essentially, Steinhauser and colleagues observed a short-term global post-error slowing (which they speculated might stem from an attentional bottleneck produced by the error) and a long-term task specific post-error slowing. This differs from Forster and Cho, who observed sustained post-error slowing across task sets.

The task representation perspective offers a way to explain contradictory findings about the context specificity of post-error slowing (Forster & Cho, 2014; Steinhauser, Ernst, & Ibald, 2017). As stated previously, both Forster and Cho (2014) and Regev and Meiran (2014) used overlapping response sets and both found evidence for global post-error slowing (Regev & Meiran, 2014, also used the same stimuli for each judgement type). In contrast, Steinhauser et al. (2017) used tasks with different perceptual modalities and responses segregated by hand and found evidence that the post-error effect may be local to the task performed. Perceptual and response factors like these have been shown to play a role in the creation of task boundaries (Hazeltine et al., 2011). Given this, the procedure used by Steinhauser and colleagues may have been more conducive to the formation of separate task sets. Thus, it is possible that differences in task structure might be responsible for the contradictory findings in these post-error slowing studies, as it was able to account for the contradictory results in the congruency sequence effect literature (cf. Hazeltine et al., 2011). The present study tests this hypothesis using a procedure, inspired by the previously described research, that should bias participants towards deploying separate task sets by providing them with salient delimiters, along multiple dimensions (e.g., different response effectors, unique visual features, and unique stimulus locations for each task), between the subtasks. Having multiple delimiters provides participants with multiple features on which to base task set boundaries between SR pairs.

Finally, an additional limitation of Forster and Cho’s (2014) experiment and others investigating post-error slowing (e.g., Danielmeier & Ullsperger, 2011; Jentzsch & Dudschig, 2009) is that they often produce very few errors. Many studies of post-error effects report very high accuracy rates (e.g., Forster & Cho, 2014, had to use a high error rate subset of their participants for their primary analyses), which means that the estimate of post-error trial RT may not be a reliable estimate of the real underlying behavior. Adaptive procedures are one way to obtain high error rates across all participants (Danielmeier & Ullsperger, 2011; Notebaert et al., 2009). Higher error rates reduce the probability of noisy post-error slowing measurements. The present study used adaptive procedures to achieve a higher error rate and thereby gain a better understanding of the impact of task representational structure on post-error slowing.

Present experiment

The goal of the current experiment is to determine how task structure might account for the discrepancy in the findings of Steinhauser et al. (2017) and those of Forster and Cho (2014) (i.e., the local or global nature of post-error slowing) using a procedure to encourage distinct task representations and a high error rate. We tested two groups of participants as they performed an adaptive attention-shifting task. One group (the integrated set group) used SR mappings that encouraged an integrated representation for all responses. The other group (the separate set group) used SR mappings that encouraged participants to represent the responses in two separate groups. Given the boundaries that task structure places on cognitive control in other task situations (e.g., congruency sequence effect, response preparation, and dual-task processing), we predict that, contrary to Forster and Cho (2014), post-error slowing will be attenuated across task sets. That is, an error on one task will lead to less slowing on the subsequent trial of a different task than of the same task.

Method

Participants

Fifty-seven participants (40 males, 17 females), with ages ranging from 18 to 25 years, were recruited from the Georgia Institute of Technology student participant pool and tested under the guidelines of the Georgia Tech Institutional Review Board. Participants were compensated for their time with course credit. All participants were native English speakers and had normal or corrected-to-normal vision. Because overall accuracy levels have been shown to influence post-error adjustments (e.g., post-correct trial slowing when errors are more frequent than correct trials; Notebaert et al., 2009) participants with accuracy of 50% or lower were not included in the final analysis (four participants, three males, one female), leaving the final sample size at 53 participants.

Stimuli and apparatus

The experiment included two groups (integrated set and separate set) with different tasks and instructions (see Fig. 1). Both groups were required to press a button based on the identity of a stimulus that appeared briefly on the opposite side of the screen from a distractor.

Fig. 1 Example trials for both the vertically and horizontally oriented sets. Each trial began with a flickering equal sign followed by a stimulus and then a mask on the opposite side of the screen. Participants responded to the identity of the stimulus with a manual or pedal response. The integrated set group saw vertically oriented letters and responded with finger presses. The separate set group switched between vertically oriented letters and horizontally oriented digits and responded with foot presses or finger presses, respectively. The screen was black, letters were red, numerals were blue, and feedback was white in the actual experiment.

The experiment was coded in Psychology Software Tools’ E-Prime software and stimuli were presented on a CRT monitor. Participants were seated approximately 60 cm from the monitor. For both the integrated set and separate set groups, each trial began with a flickering equal sign cue that appeared approximately 15 cm from the center of the screen (either offset horizontally or vertically, see group task descriptions below). The cue appeared for 100 ms, disappeared for 50 ms, and then reappeared for another 100 ms. Fifty ms after the equal sign disappeared, the target stimulus appeared on the opposite side of the screen from the cue (also approximately 15 cm from the center of the screen). The stimulus duration varied depending on an adaptive performance procedure described below. The stimulus was replaced by a mask (an ampersand sign) for 960 ms. After the mask, there was an intertrial interval (ITI) that varied with a uniform distribution between 200, 600, 1,000, 1,400, 1,800, or 2,200 ms. Similar jittering procedures have been used in antisaccade experiments (a similar task) to mitigate anticipation effects (Wright, Dobson, & Sears, 2014).
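The event sequence described above can be laid out as a simple timeline. The following is only a sketch for checking the timing arithmetic, not the E-Prime implementation used in the experiment; the function name `trial_timeline` and the event labels are hypothetical.

```python
def trial_timeline(stimulus_ms, iti_ms):
    """Return (event, onset_ms, duration_ms) tuples for a single trial.

    Durations follow the description in the text: the cue flickers
    (100 ms on, 50 ms off, 100 ms on), the target follows 50 ms later,
    and the mask lasts 960 ms. The event labels are illustrative only.
    """
    events = [
        ("cue", 100),
        ("blank", 50),
        ("cue", 100),
        ("blank", 50),
        ("target", stimulus_ms),  # set by the adaptive procedure
        ("mask", 960),
        ("iti", iti_ms),          # one of 200, 600, ..., 2,200 ms
    ]
    timeline, onset = [], 0
    for name, duration in events:
        timeline.append((name, onset, duration))
        onset += duration
    return timeline


if __name__ == "__main__":
    for event in trial_timeline(stimulus_ms=100, iti_ms=200):
        print(event)
```

With a 100-ms target, the target appears 300 ms after the first cue onset and the mask 100 ms after that.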

Participants responded to the stimuli either with manual or pedal responses (as described below). To ensure that all participants were equally aware of their errors, which has been shown to affect post-error adjustments (cf. Nieuwenhuis, Ridderinkhof, Blom, Band, & Kok, 2001; Wessel, Danielmeier, & Ullsperger, 2011), the letter C was presented in the center of the screen during the ITI if the response on the previous trial was correct, and the letter E was presented if the previous trial was an error. Participants were told to perform as quickly and as accurately as possible and that both speed and accuracy were equally important. The minimum trial duration was 1,000 ms (40 ms minimum stimulus duration + 960 ms mask period); therefore, 1,000 ms was used for the response window for all trials.

For the adaptive performance procedure, stimulus duration was initially set to 100 ms, and every 24 trials it was either increased or decreased by 20 ms depending on the accuracy rate of the previous set of 24 trials. If accuracy was 70% or greater, the stimulus duration was decreased by 20 ms. If accuracy was less than 70% on the previous 24 trials, then the stimulus duration was increased by 20 ms. A floor of 40 ms was imposed on the adaptive procedure. The stimulus duration continued to adapt, for both groups, throughout the course of the experiment.
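The update rule can be written compactly. This is a minimal sketch of the rule as described in the text, with a hypothetical function name and parameter names; it is not taken from the experiment's E-Prime script, and it assumes no upper limit on duration because none is reported.

```python
def update_stimulus_duration(duration_ms, n_correct, n_trials=24,
                             threshold=0.70, step_ms=20, floor_ms=40):
    """Return the stimulus duration for the next set of 24 trials.

    Accuracy at or above the threshold shortens the stimulus (harder);
    accuracy below the threshold lengthens it (easier). The duration
    never drops below the floor.
    """
    accuracy = n_correct / n_trials
    if accuracy >= threshold:
        duration_ms -= step_ms
    else:
        duration_ms += step_ms
    return max(duration_ms, floor_ms)


if __name__ == "__main__":
    duration = 100  # initial duration in ms
    for n_correct in (20, 19, 14, 22):
        duration = update_stimulus_duration(duration, n_correct)
        print(duration)
```

Because 70% of 24 trials is 16.8, the rule effectively shortens the stimulus after 17 or more correct responses and lengthens it after 16 or fewer.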

Prior to the experimental blocks, participants completed 72 practice trials. These practice trials used the same adaptive stimulus duration procedure described above and were always followed by a feedback screen informing participants about their accuracy and RT on the preceding trial. The feedback screen was followed by an ITI in which a central fixation cross appeared for a fixed duration of 1,000 ms. After practice, both groups completed four experimental blocks of 192 trials each. After each block, participants received feedback for 4 s showing their mean RT and accuracy rate (RT and accuracy were collapsed across orientation for the separate group) for the preceding block.

The SR set was the only procedural difference between the two groups. In the separate set group, participants responded to two sets of stimuli, blue numerals (6 & 9) oriented horizontally, and red letters (M & N) oriented vertically. Participants responded to numerals with their thumbs via a handheld number pad, and they responded to the letters with foot pedal responses. The numeral 6 mapped to the left thumb key, and the numeral 9 mapped to the right thumb key. Given the location of the 6 and 9 keys, the thumb responses were oriented vertically. The keys of the number pad were covered with stickers in order to prevent confusion with the numbers on the keys. The letter M required participants to press the left-most foot pedal with their left foot, and the letter N required them to press the right-most foot pedal using their right foot. All trials switched between orientation sets (blue/horizontal/numeral/hand; red/vertical/letter/foot). In each block, all trial types (M-Up, M-Down, N-Up, N-Down, 6-Left, 6-Right, 9-Left, 9-Right) were presented 24 times each, and each trial type was followed by ITIs of each duration four times. Considering that no single feature seems to act as a hard boundary between task sets (Hazeltine et al., 2011; Weissman et al., 2015), the sets differed along four dimensions (character type, color, orientation, response mode) to promote the formation of separate task representations.
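The counterbalancing described above (eight trial types, 24 presentations each, every ITI duration paired with every trial type four times, strict alternation between sets) can be sketched as follows. The shuffling scheme and the choice of which set starts the block are assumptions made for illustration; the text does not specify them.

```python
import random
from itertools import product

ITIS_MS = (200, 600, 1000, 1400, 1800, 2200)
LETTER_TRIALS = ("M-Up", "M-Down", "N-Up", "N-Down")         # red, vertical, foot
NUMERAL_TRIALS = ("6-Left", "6-Right", "9-Left", "9-Right")  # blue, horizontal, hand


def build_block(rng):
    """Return 192 (trial_type, iti_ms) pairs that alternate between sets."""
    def shuffled_cells(trial_types):
        # Each trial type is paired with each ITI duration four times.
        cells = [cell
                 for cell in product(trial_types, ITIS_MS)
                 for _ in range(4)]
        rng.shuffle(cells)
        return cells

    letters = shuffled_cells(LETTER_TRIALS)
    numerals = shuffled_cells(NUMERAL_TRIALS)
    block = []
    # Assumption: the block starts with a letter trial.
    for letter_trial, numeral_trial in zip(letters, numerals):
        block.extend([letter_trial, numeral_trial])
    return block


if __name__ == "__main__":
    block = build_block(random.Random(0))
    print(len(block), block[:4])
```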

In the integrated set group, participants responded to vertically oriented red letters with their left and right middle and index fingers. There were no foot-based responses. The set of red letters included V, B, N, and M. Participants responded using a standard horizontally oriented keyboard using the left middle (V), left index (B), right index (N), and right ring (M) fingers. In each block, each trial type was presented 24 times. Targets were not repeated, and stimuli were chosen pseudorandomly with the constraints that each stimulus occurred an equal number of times and that trials alternated between two letter sets (V and B; N and M). The set alternation made the groups equivalent in terms of the number of potential stimuli–responses on a given trial. Each trial type was followed by each ITI duration four times.

Results

Data trimming and transformation

For the RT analyses, trials with responses of 200 ms or less were removed (0.1% of integrated set group trials & 0.9% of separate set group trials), but these trials were still used for determining previous trial error status. An arcsine transform was applied to accuracy data prior to analysis, but accuracy is presented as untransformed proportion correct in tables and figures.
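The order of operations matters here: each trial is labeled with the error status of the preceding trial before fast responses are dropped, so a trimmed trial still determines how the next trial is classified. The sketch below illustrates this with hypothetical helper names. The form of the arcsine transform, arcsin(√p), is an assumption, since the text does not state which variant was used.

```python
import math


def tag_previous_error(trials):
    """Label each trial (except the first) with the prior trial's error status."""
    tagged = []
    for previous, current in zip(trials, trials[1:]):
        tagged.append({**current, "prev_error": not previous["correct"]})
    return tagged


def trim_fast_responses(trials, cutoff_ms=200):
    """Drop trials with RTs of cutoff_ms or less from the RT analysis."""
    return [t for t in trials if t["rt"] > cutoff_ms]


def arcsine_transform(proportion):
    """Assumed variant of the arcsine transform: arcsin(sqrt(p))."""
    return math.asin(math.sqrt(proportion))


if __name__ == "__main__":
    trials = [
        {"rt": 150, "correct": False},
        {"rt": 600, "correct": True},
        {"rt": 180, "correct": True},
        {"rt": 650, "correct": True},
    ]
    print(trim_fast_responses(tag_previous_error(trials)))
    print(arcsine_transform(0.69))
```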

Group differences in stimulus duration and accuracy

Overall accuracy was 69% for the integrated set group and 67% for the separate set group. An independent-sample t test showed that the arcsine transformed accuracy proportions did not significantly differ between the groups, t(51) = 1.386, p = .172. The accuracy rates demonstrate that the adaptive difficulty procedure was effective at achieving roughly 70% accuracy in both groups. Also, the adaptive procedure did not lead to a significant difference between the stimulus durations of the tasks in the two groups. The mean stimulus duration for the integrated set group was 104 ms, and the mean duration for the separate set group was 136 ms, t(51) = −1.687, p = .098. These nonsignificant differences between the integrated and separate set groups are unlikely to produce the post-error slowing differences described below.

Orientation differences in reaction time and accuracy in the separate set group

A paired-sample t test conducted on the correct trial RT data collapsed across previous trial error status revealed that mean responses to the vertical orientation (foot responses) were slower (M = 697 ms, SD = 60 ms) than those of the horizontal orientation (hand responses; M = 586 ms, SD = 71 ms), t(25) = 11.445, p < .001. Additionally, a paired-sample t test on the arcsine transformed proportions yielded a statistically significant difference in accuracy between the orientations. Mean accuracy for vertical orientation trials was lower (M = .64, SD = .08) than mean accuracy for horizontal orientation trials (M = .71, SD = .07), t(25) = −3.014, p < .05.

Traditional post-error adjustment measures

Reaction time

All correct trials were classified based on the error status of the preceding trial. Given that the accuracy rates differed between the two orientations, the average of each participant’s vertical and horizontal trial means was used as the across-orientation measure of post-error RT and post-correct trial RT to ensure that both orientations received equal weight in the separate set group’s post-error and post-correct trial measurements. As shown in Fig. 2a, a two-factor mixed ANOVA (Group × Previous Trial Error Status) yielded a significant main effect of previous trial error status, F(1, 51) = 4.605, p < .05, \( \eta_p^2 \) = .083, and more importantly, a significant Group × Previous Trial Error Status interaction, F(1, 51) = 5.973, p < .05, \( \eta_p^2 \) = .105, driven by post-error slowing in the integrated set group and a lack of post-error slowing in the separate set group (Post-error RT − Post-correct RT Integrated Set: 16 ms; Post-error RT − Post-correct RT Separate Set: −1 ms). A paired-sample t test demonstrated statistically significant post-error slowing in the integrated set group, t(26) = 4.835, p < .001.
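The equal-weight averaging across orientations can be illustrated for a single participant. This is a sketch under assumed data structures (a list of trial dictionaries with hypothetical keys `rt`, `correct`, `prev_error`, and `orientation`), not the analysis code used for the reported results.

```python
from statistics import mean


def post_error_slowing(trials):
    """Post-error RT minus post-correct RT on correct trials.

    Means are computed within each orientation first and then averaged,
    so each orientation contributes equally regardless of trial counts.
    """
    post_error_means, post_correct_means = [], []
    for orientation in sorted({t["orientation"] for t in trials}):
        correct = [t for t in trials
                   if t["correct"] and t["orientation"] == orientation]
        post_error_means.append(
            mean(t["rt"] for t in correct if t["prev_error"]))
        post_correct_means.append(
            mean(t["rt"] for t in correct if not t["prev_error"]))
    return mean(post_error_means) - mean(post_correct_means)


if __name__ == "__main__":
    def trial(rt, prev_error, orientation, correct=True):
        return {"rt": rt, "correct": correct,
                "prev_error": prev_error, "orientation": orientation}

    data = [
        trial(700, True, "vertical"),
        trial(680, False, "vertical"),
        trial(700, False, "vertical"),
        trial(600, True, "horizontal"),
        trial(620, True, "horizontal"),
        trial(590, False, "horizontal"),
    ]
    print(post_error_slowing(data))
```

For the integrated set group, which has a single orientation, the same function reduces to the ordinary difference between the two means.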

Fig. 2 The mean reaction times (a) and the accuracy (b) for the integrated set and separate set groups for trials following errors and correct trials. The separate set means are collapsed across the vertical and horizontal orientations. The error bars represent the standard error of the Group × Previous Trial Error Status interaction.

As stated previously, the two trial orientations exhibited different accuracy rates and RTs. To investigate possible differences in post-error slowing for the two orientations in the separate set group, a two-way ANOVA with orientation (vertical and horizontal) and preceding trial error status was performed on the RT data from the separate set group. As shown in Table 1, only the main effect of orientation was statistically significant, F(1, 25) = 121.688, p < .001, \( \eta_p^2 \) = .830. The mean RT was slower for the vertical orientation than the horizontal orientation. Neither the main effect of previous trial error status, F(1, 25) = .028, p = .869, \( \eta_p^2 \) = .001, nor the interaction between orientation and error status, F(1, 25) = .013, p = .911, \( \eta_p^2 \) = .001, was significant. Thus, there was no evidence for post-error slowing in either orientation.

Accuracy

As shown in Fig. 2b, a two-factor mixed ANOVA (Group × Previous Trial Error Status) performed on the orientation collapsed arcsine transformed accuracy proportions yielded a significant main effect of previous trial error status, F(1, 51) = 202.144, p < .001, \( \eta_p^2 \) = .799, and an interaction between group and previous trial error status, F(1, 51) = 5.782, p < .05, \( \eta_p^2 \) = .102. As can be seen in Fig. 2b, accuracies were higher following correct trials overall, and the post-error accuracy decrease (relative to correct trials) was larger in the separate set group than in the integrated set group. Paired-sample t tests performed on the arcsine transformed accuracy proportions demonstrated a statistically significant post-error accuracy decrease in the integrated set group, t(26) = −10.410, p < .001, and the separate set group, t(25) = −9.994, p < .001.

To investigate possible differences in post-error effects for the two orientations in the separate set group, the arcsine transformed proportions for the separate set group were submitted to a two-way ANOVA with the same factors: preceding error status and orientation. Only the main effect of preceding trial error status on accuracy, F(1, 25) = 97.764, p < .001, \( \eta_p^2 \) = .796, and the main effect of orientation, F(1, 25) = 9.190, p < .05, \( \eta_p^2 \) = .269, were significant. Both sets were marked by a post-error decrease in accuracy (see Table 1).

Global performance fluctuations

Post-error slowing may arise from global performance fluctuation artifacts (Dutilh et al., 2012). For example, if participants grow fatigued over the course of the experiment, error frequency may increase as well as RTs for both correct and error trials. This would lead to post-error slowing, stemming, at least in part, from the overrepresentation of errors during the slower period of the experiment. Alternatively, a decrease in vigilance in the task by participants as the experiment progresses might lead to a liberalization of response criteria, which could produce both a higher error rate and a decrease in RT across the experiment leading to post-error speeding with traditional measures. Dutilh et al. (2012) proposed a solution to this problem that is robust against fluctuations in global performance. They proposed that post-error slowing should be calculated as the average of pairwise differences between the post-error trial and the trial preceding a given error.

To determine if global performance fluctuations across the experiment were present (as described by Dutilh et al., 2012; Forster & Cho, 2014), a one-way ANOVA of the block-level differences (employing the Greenhouse–Geisser correction) was used to analyze the arcsine transformed accuracy proportions across the four experimental blocks across groups. There were no significant accuracy changes for either set: integrated set, F(2.090, 54.329) = .517, p = .607, \( \eta^2 \) = .020; separate set, F(1.994, 49.855) = 1.377, p = .262, \( \eta^2 \) = .052. The means are shown in Table 2. There was no evidence for global fluctuations in accuracy across this experiment. Nevertheless, to be consistent with the analysis conducted by Forster and Cho (2014), we investigated our data for sustained effects of post-error slowing using the robust measure proposed by Dutilh et al. (2012).

Robust sustained post-error effects

The robust analysis included measures of post-error slowing at N + 1, N + 2, and N + 3 post-error trials. The trial preceding a given error was used as the baseline for all three post-error time points, if it was correct and if it was not a post-error trial. If the trial preceding an error was not correct, the sequence was not used in the analysis. Only N + 2 and N + 3 trials that were preceded by two or three consecutive correct trials, respectively, after the occurrence of the paired error trial and were not followed by an error trial were used in the analysis. These restrictions prevent trials from acting as both a post-error trial and a correct reference trial for another post-error trial. Unlike Forster and Cho (2014), we did not extend our analysis beyond N + 3, because there were very few trials to analyze that had runs of more than three correct trials as a result of our adaptive procedure.
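The pairwise logic of the robust measure can be sketched as follows for a single run of trials from one participant. The function name is hypothetical, and the sketch makes simplifying assumptions: it treats the input as one uninterrupted block, it requires all trials from N + 1 through the tested lag to be correct, and it omits the additional restriction that N + 2 and N + 3 trials not be followed by an error.

```python
from statistics import mean


def robust_post_error_slowing(rts, correct, lag=1):
    """Mean of RT[N + lag] - RT[N - 1] over eligible errors at position N.

    An error is eligible when the pre-error trial (N - 1) is correct and
    is not itself a post-error trial (so N - 2 must also be correct), and
    when trials N + 1 through N + lag are all correct.
    Returns None when no sequence qualifies.
    """
    differences = []
    for n in range(2, len(rts) - lag):
        if correct[n]:
            continue  # only error trials anchor a sequence
        baseline_ok = correct[n - 1] and correct[n - 2]
        run_ok = all(correct[n + 1:n + lag + 1])
        if baseline_ok and run_ok:
            differences.append(rts[n + lag] - rts[n - 1])
    return mean(differences) if differences else None


if __name__ == "__main__":
    rts = [500, 510, 480, 530, 520, 505]
    correct = [True, True, False, True, True, True]
    print(robust_post_error_slowing(rts, correct, lag=1))
    print(robust_post_error_slowing(rts, correct, lag=3))
```

Because each post-error trial is compared with the pre-error trial from the same local stretch of the session, slow drifts in overall speed affect both terms and largely cancel in the difference.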

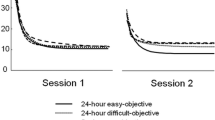

Figure 3 shows the sustained post-error slowing present in the integrated set group. For this group, a one-way ANOVA on mean RTs with trial since error (N + 1, N + 2, N + 3) as the factor did not yield a significant main effect of temporal distance on the robust measure of post-error slowing, F(1.732, 52) = 2.455, p = .10, η² = .086. Thus, RT slowing did not vary significantly with the time since an error occurred, although mean post-error slowing decreased numerically over time. Planned single-sample t tests yielded a significant robust post-error slowing effect for the integrated set group at N + 1, t(26) = 7.247, p < .001; N + 2, t(26) = 2.551, p < .05; and N + 3, t(26) = 2.482, p < .05. As also shown in Fig. 3, the separate set group did not exhibit post-error slowing for any comparison, N + 1: vertical, t(25) = .940, p = .356, horizontal, t(25) = .486, p = .631; N + 3: vertical, t(25) = 1.640, p = .114, horizontal, t(25) = .509, p = .615.

Fig. 3 Mean sustained post-error slowing for the integrated set and separate set groups across three post-error trials (N + 1, N + 2, and N + 3). For the separate set group, N + 2 was not computed, because it was confounded with orientation set switches. The bars represent the 95% confidence intervals for the corresponding single-sample t tests.

Bayesian multilevel analysis of robust sustained post-error slowing effects

The previously described analysis of the robust post-error slowing was conducted in a manner similar to the analysis of Forster and Cho (2014). However, given within-participant variance, potential interindividual variability, and differences in the number of trials per condition and per participant, multilevel modeling might be a superior analytical approach, especially for the separate set group, because relatively few trials contributed to the participant means for each cell. Instead of using only the means for each participant, the multilevel analysis considers the individual trials nested within participants. Both the integrated set and separate set groups were reexamined with multilevel intercept-only models (the intercept is the estimate of post-error slowing) for each trial type using penalized maximum likelihood estimation (Chung, Rabe-Hesketh, Dorie, Gelman, & Liu, 2013; Dorie & Dorie, 2015) of the random effects. Simply put, in each model a fixed intercept (average post-error slowing in this group) and the variance in participant intercepts were estimated. Parameter estimates were determined via maximum likelihood estimation assuming a gamma distribution for the random effect variance. Degrees of freedom were determined by the Kenward–Roger method (Kenward & Roger, 1997). Table 3 displays the fixed effects for the integrated set group’s intercept models. As with the robust sustained effects analysis (see Robust Sustained Post-error Effects section), all of the effects reached statistical significance.

As can be seen in Table 4, none of the fixed effect estimates for the separate set group reached statistical significance. This outcome is congruent with the analysis presented in the Robust Sustained Post-error Effects section. Overall, the multilevel modeling results reaffirm the findings of the robust sustained effects analysis presented above.
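For concreteness, an intercept-only multilevel model of the kind described in this section can be written as below. The notation is illustrative and not taken from the original analysis: y_{ij} is the robust post-error difference for sequence i of participant j, \beta_0 is the fixed intercept (the average post-error slowing in the group), u_j is participant j's deviation from that average, \tau^2 is the variance of the participant intercepts, and \sigma^2 is the residual variance.

$$
y_{ij} = \beta_0 + u_j + \varepsilon_{ij}, \qquad u_j \sim \mathcal{N}(0, \tau^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Under penalized maximum likelihood, the estimates maximize the log-likelihood plus a log-prior term on the between-participant variance parameter, which keeps that estimate away from the boundary value of zero:

$$
(\hat{\beta}_0, \hat{\tau}, \hat{\sigma}) = \arg\max_{\beta_0, \tau, \sigma} \left[ \log L(\beta_0, \tau, \sigma \mid y) + \log p(\tau) \right]
$$

Here p denotes the gamma prior on the random-effect variance parameter; its specific shape and rate are not given in the text and are left unspecified. The test reported for each model is then a test of \beta_0 = 0.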

Sustained accuracy adjustment analysis

The robust post-error slowing metric does not have an analog for accuracy adjustments. Nevertheless, sustained post-error accuracy effects were analyzed. Planned pairwise comparisons were made between sequences starting with errors and those starting with correct trials. For N + 1, post-error trial accuracy was simply compared with post-correct trial accuracy. For N + 2 and N + 3, trials between the starting trial in a sequence and the target trial (T) had to be correct (N + 2: ECT & CCT; N + 3: ECCT & CCCT). Paired-sample t tests showed significant post-error accuracy decreases for N + 1 trials, t(26) = −10.211, p < .001; N + 2 trials, t(26) = −4.174, p < .001; and N + 3 trials, t(26) = −2.642, p < .05, in the integrated set group. The same trend was found for vertical orientation trials, N + 1: t(25) = −11.411, p < .001; N + 2: t(25) = −6.053, p < .001; N + 3: t(25) = −3.632, p < .05, and horizontal orientation trials, N + 1: t(25) = −7.122, p < .001; N + 2: t(25) = −4.334, p < .001; N + 3: t(25) = −2.811, p < .05, in the separate set group. The means and standard deviations can be found in Table 5.
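The sequence-based accuracy comparison can be sketched as follows. The function name and data layout are hypothetical; the sketch only shows how target-trial accuracy might be tallied for sequences that begin with an error (E) versus a correct trial (C), with all intervening trials correct.

```python
def sequence_accuracy(correct, lag):
    """Target-trial accuracy `lag` trials after an error vs. after a correct start.

    lag=1 compares ET with CT, lag=2 ECT with CCT, lag=3 ECCT with CCCT.
    """
    hits = {"post_error": [], "post_correct": []}
    for start in range(len(correct) - lag):
        # Trials strictly between the start trial and the target must be correct.
        if not all(correct[start + 1 : start + lag]):
            continue
        key = "post_correct" if correct[start] else "post_error"
        hits[key].append(correct[start + lag])
    # Proportion of correct target trials in each sequence type.
    return {k: (sum(v) / len(v) if v else None) for k, v in hits.items()}

# Made-up sequence of correctness flags for one participant.
correct = [True, False, False, True, True, True]
print(sequence_accuracy(correct, lag=1))
print(sequence_accuracy(correct, lag=2))
```

Each participant would contribute one post-error and one post-correct proportion per lag, and these pairs would be the input to the paired-sample t tests.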

Discussion

General findings

Here we report the results of an experiment using an adaptive attention-shifting task with two groups: one with instructions and SR pairs designed to be represented as one task set (integrated set group) and another with instructions and SR pairs designed to be represented as two task sets (separate set group). Consistent with previous research (Forster & Cho, 2014), we found that post-error slowing occurs, and is sustained across three subsequent trials, in the integrated set group. The novel finding here is that, for the separate set group, trials were not affected by the error status of the preceding trial for either horizontally or vertically oriented tasks. Evidence from multiple commonly used experimental procedures has shown that the division of SR pairs along salient features can erect task boundaries around these features (cf. Hazeltine & Schumacher, 2016; Schumacher & Hazeltine, 2016). The presence of post-error slowing in the integrated set group and the absence of post-error slowing in the separate set group (see Figs. 2a and 3) support our hypothesis that task representation bounds the cognitive mechanisms that produce post-error slowing.

The effect of errors on subsequent trial accuracy is harder to interpret. We found post-error decreases in accuracy in both groups. In fact, as indicated by the significant interaction of group and previous trial error status, these decreases were slightly larger for the separate set group (Fig. 2b). This suggests that the presence of distinct task representations may influence the way errors affect the trade-off between speed and accuracy.

Alternatively, Danielmeier and Ullsperger (2011) propose that post-error slowing and accuracy adjustments have distinct underlying processes. Perhaps these processes are differentially affected by task representational structure. However, the adaptive difficulty procedure used here warrants caution when interpreting these accuracy results. The post-error decrease in accuracy could be explained by error streaks or clusters, which are sometimes induced by adaptive difficulty procedures (cf. Danielmeier & Ullsperger, 2011).

Another reason to be cautious about interpreting post-error accuracy effects is that the effect of making an error on subsequent trial accuracy is not consistent in the literature. Many studies report post-error increases in accuracy (Danielmeier et al., 2011; Laming, 1968, 1979; Maier, Yeung, & Steinhauser, 2011; Marco-Pallarés et al., 2008), others report no effect (Hajcak, McDonald, & Simons, 2003; Hajcak & Simons, 2008), and others report what we found here: post-error decreases in accuracy (Fiehler et al., 2005; Rabbitt & Rodgers, 1977). Regardless of the mechanisms involved, post-error accuracy decreases were found in both the integrated and the separate set groups, so their presence is unlikely to cause the group differences in post-error slowing reported here.

The effect of task structure on post-error slowing suggests that control processes that produce post-error slowing are deployed within a task set, and an error on one task does not necessarily lead to slowing on a different subsequent task. Thus, here we report evidence for a bounded effect of post-error slowing, local to the task where the error was produced, consistent with Steinhauser et al. (2017). Forster and Cho (2014), on the other hand, reported evidence for a global effect of post-error slowing. Why did we fail to replicate Forster and Cho? One potential explanation is that participants may use (and perhaps require) a variety of features salient to the task to create task representational boundaries. Forster and Cho had participants switch between Stroop and Simon trials. These tasks have important similarities. They may differ on a key feature, the type of conflict, but they are similar in the respect that responses were made with the participants’ hands. The key mappings for the two procedures even overlapped. Unlike Forster and Cho’s mixed Stroop–Simon blocks, the task used by Steinhauser and colleagues segregated responses by hand and presented stimuli in two different modalities. The present experiment also used a variety of salient features (stimulus color, stimulus category, response effector, and screen orientation) to support the development of separate task set representations (horizontal vs. vertical, blue vs. red, numbers vs. letters, hands vs. feet). Thus, these results indicate a way to reconcile seemingly contradictory findings in the literature. When the task representations are not sufficiently separate, post-error slowing can be observed even when the particular SR rules change as these alone may not be sufficient to create the requisite task boundaries for separate control settings.

However, Forster and Cho (2014) observed task specificity of the congruency sequence effect, but post-error slowing occurred across tasks. If their tasks were not sufficient to limit control for post-error slowing, it is not clear why they would be sufficient to bound control processes mediating the congruency sequence effect. Further research is needed to better understand the differences in the mechanisms responsible for the congruency sequence effect and the mechanisms responsible for post-error slowing. In any case, in the present experiment we find strong evidence for context and task specificity of post-error slowing.

Finally, multiple dimensions (character type, color, orientation, response effector) were used to encourage the formation of separate task sets, so it is unknown if all of these featural differences were necessary to erect task boundaries around the SR pairs, or if one or more of the features may have been sufficient. Even if one is not convinced that the featural differences divided the SR pairs into separate task sets, these data still demonstrate that post-error slowing is affected by the similarity across SR features in the task or tasks performed. Although the particular feature or features affecting post-error slowing cannot be identified, these findings demonstrate that post-error slowing is dependent on SR features and thus is sensitive to context.

Conclusions

The present results are best explained by task representation boundaries and are generally in accordance with the predictions of the task file account of cognitive control (Bezdek et al., 2019; Schumacher & Hazeltine, 2016). Consistent with other examples of control processing bounded by task structure (Cookson et al., 2016, 2019; Hazeltine et al., 2011; Schumacher et al., 2018; Schumacher et al., 2011; Schumacher & Schwarb, 2009), post-error performance also depends on the representational structure of the task or tasks performed. As is the case with the congruency sequence effect (Hazeltine et al., 2011), subtle features of task design can determine the presence or absence of post-error slowing.

Notes

N + 2 was measured in the integrated set group only because, for the separate set group, the constant switching between sets meant that the N + 2 pairs always had a baseline from a different task than the post-error trial.

References

Akçay, Ç., & Hazeltine, E. (2008). Conflict adaptation depends on task structure. Journal of Experimental Psychology: Human Perception and Performance, 34(4), 958–973. doi:https://doi.org/10.1037/0096-1523.34.4.958

Bezdek, M. A., Godwin, C. A., Smith, D. M., Hazeltine, E., & Schumacher, E. H. (2019). How conscious and unconscious aspects of task representations affect dynamic behavior in complex situations. Psychology of Consciousness: Theory, Research, and Practice, 6(3), 225–241. doi:https://doi.org/10.1037/cns0000184

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., & Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychological Review, 108(3), 624–652. doi:https://doi.org/10.1037/0033-295x.108.3.624

Chung, Y., Rabe-Hesketh, S., Dorie, V., Gelman, A., & Liu, J. (2013). A nondegenerate penalized likelihood estimator for variance parameters in multilevel models. Psychometrika, 78(4), 685–709. doi:https://doi.org/10.1007/s11336-013-9328-2

Cookson, S. L., Hazeltine, E., & Schumacher, E. H. (2016). Neural representation of stimulus–response associations during task preparation. Brain Research, 1648(Pt. A), 496–505. doi:https://doi.org/10.1016/j.brainres.2016.08.014

Cookson, S. L., Hazeltine, E., & Schumacher, E. H. (2019). Task structure boundaries affect response preparation. Psychological Research, 1–12. Advance online publication. doi:https://doi.org/10.1007/s00426-019-01171-9

Danielmeier, C., Eichele, T., Forstmann, B. U., Tittgemeyer, M., & Ullsperger, M. (2011). Posterior medial frontal cortex activity predicts post-error adaptations in task-related visual and motor areas. Journal of Neuroscience, 31(5), 1780–1789. doi:https://doi.org/10.1523/JNEUROSCI.4299-10.2011

Danielmeier, C., & Ullsperger, M. (2011). Post-error adjustments. Frontiers in Psychology, 2, 233. doi:https://doi.org/10.3389/fpsyg.2011.00233

Dorie, V., & Dorie, M. V. (2015). Package ‘blme’. Bayesian Linear Mixed-Effects Models. https://CRAN.R-project.org/package=blme

Dreisbach, G. (2012). Mechanisms of cognitive control: The functional role of task rules. Current Directions in Psychological Science, 21(4), 227–231. doi:https://doi.org/10.1177/0963721412449830

Duncan, J. (1977). Response selection rules in spatial choice reaction tasks. In S. Dornic (Ed.), Attention and performance (Vol. 6, pp. 49–61). Hillsdale, NJ: Erlbaum.

Duncan, J. (1979). Divided attention: The whole is more than the sum of its parts. Journal of Experimental Psychology: Human Perception and Performance, 5(2), 216–228. doi:https://doi.org/10.1037/0096-1523.5.2.216

Dutilh, G., van Ravenzwaaij, D., Nieuwenhuis, S., van der Maas, H. L., Forstmann, B. U., & Wagenmakers, E. J. (2012). How to measure post-error slowing: a confound and a simple solution. Journal of Mathematical Psychology, 56(3), 208–216. doi:https://doi.org/10.1016/j.jmp.2012.04.001

Egner, T., Delano, M., & Hirsch, J. (2007). Separate conflict-specific cognitive control mechanisms in the human brain. NeuroImage, 35(2), 940–948. doi:https://doi.org/10.1016/j.neuroimage.2006.11.061

Fiehler, K., Ullsperger, M., & Von Cramon, D. Y. (2005). Electrophysiological correlates of error correction. Psychophysiology, 42(1), 72–82. doi:https://doi.org/10.1111/j.1469-8986.2005.00265.x

Forster, S. E., & Cho, R. Y. (2014). Context specificity of post-error and post-conflict cognitive control adjustments. PLOS ONE, 9(3), e90281. doi:https://doi.org/10.1371/journal.pone.0090281

Freitas, A. L., Bahar, M., Yang, S., & Banai, R. (2007). Contextual adjustments in cognitive control across tasks. Psychological Science, 18(12), 1040–1043. doi:https://doi.org/10.1111/j.1467-9280.2007.02022.x

Freitas, A. L., & Clark, S. L. (2015). Generality and specificity in cognitive control: Conflict adaptation within and across selective-attention tasks but not across selective-attention and Simon tasks. Psychological Research, 79(1), 143–162. doi:https://doi.org/10.1007/s00426-014-0540-1

Funes, M. J., Lupiáñez, J., & Humphreys, G. (2010). Analyzing the generality of conflict adaptation effects. Journal of Experimental Psychology: Human Perception and Performance, 36(1), 147. doi:https://doi.org/10.1037/a0017598

Gratton, G., Coles, M. G., & Donchin, E. (1992). Optimizing the use of information: Strategic control of activation of responses. Journal of Experimental Psychology: General, 121(4), 480–506. doi:https://doi.org/10.1037//0096-3445.121.4.480

Gozli, D. (2019). Experimental psychology and human agency. Cham, Switzerland: Springer.

Hajcak, G., McDonald, N., & Simons, R. F. (2003). To err is autonomic: Error-related brain potentials, ANS activity, and post-error compensatory behavior. Psychophysiology, 40(6), 895–903. doi:https://doi.org/10.1111/1469-8986.00107

Hajcak, G., & Simons, R. F. (2008). Oops! . . . I did it again: An ERP and behavioral study of double-errors. Brain and Cognition, 68(1), 15–21. doi:https://doi.org/10.1016/j.bandc.2008.02.118

Hazeltine, E., Lightman, E., Schwarb, H., & Schumacher, E. H. (2011). The boundaries of sequential modulations: Evidence for set-level control. Journal of Experimental Psychology: Human Perception and Performance, 37(6), 1898–1914. doi:https://doi.org/10.1037/a0024662

Jentzsch, I., & Dudschig, C. (2009). Why do we slow down after an error? Mechanisms underlying the effects of post-error slowing. The Quarterly Journal of Experimental Psychology, 62(2), 209–218.

Kenward, M. G., & Roger, J. H. (1997). Small sample inference for fixed effects from restricted maximum likelihood. Biometrics, 53(3), 983–997. doi:https://doi.org/10.2307/2533558

Laming, D. R. J. (1968). Information theory of choice-reaction times. New York, NY: Academic Press.

Laming, D. (1979). Choice reaction performance following an error. Acta Psychologica, 43(3), 199–224. doi:https://doi.org/10.1016/0001-6918(79)90026-X

Maier, M. E., Yeung, N., & Steinhauser, M. (2011). Error-related brain activity and adjustments of selective attention following errors. NeuroImage, 56(4), 2339–2347. doi:https://doi.org/10.1016/j.neuroimage.2011.03.083

Marco-Pallarés, J., Camara, E., Münte, T. F., & Rodríguez-Fornells, A. (2008). Neural mechanisms underlying adaptive actions after slips. Journal of Cognitive Neuroscience, 20(9), 1595–1610. doi:https://doi.org/10.1162/jocn.2008.20117

Miller, E. K., & Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annual Review of Neuroscience, 24(1), 167–202. doi:https://doi.org/10.1146/annurev.neuro.24.1.167

Nieuwenhuis, S., Ridderinkhof, K. R., Blom, J., Band, G. P., & Kok, A. (2001). Error-related brain potentials are differentially related to awareness of response errors: Evidence from an antisaccade task. Psychophysiology, 38(5), 752–760.

Norman, D. A., & Shallice, T. (1986). Attention to action. In R. J. Davidson, G. E. Schwartz, & D. Shapiro (Eds.), Consciousness and self-regulation (pp. 1–18). Boston, MA: Springer. doi:https://doi.org/10.1007/978-1-4757-0629-1_1

Notebaert, W., Houtman, F., Van Opstal, F., Gevers, W., Fias, W., & Verguts, T. (2009). Post-error slowing: an orienting account. Cognition, 111(2), 275–279. doi:https://doi.org/10.1016/j.cognition.2009.02.002

Rabbitt, P. M. (1966). Errors and error correction in choice-response tasks. Journal of Experimental Psychology, 71(2), 264–272. doi:https://doi.org/10.1037/h0022853

Rabbitt, P., & Rodgers, B. (1977). What does a man do after he makes an error? An analysis of response programming. Quarterly Journal of Experimental Psychology, 29(4), 727–743. doi:https://doi.org/10.1080/14640747708400645

Regev, S., & Meiran, N. (2014). Post-error slowing is influenced by cognitive control demand. Acta Psychologica, 152, 10–18. doi:https://doi.org/10.1016/j.actpsy.2014.07.006

Ridderinkhof, R. K. (2002). Micro- and macro-adjustments of task set: Activation and suppression in conflict tasks. Psychological Research, 66(4), 312–323. doi:https://doi.org/10.1007/s00426-002-0104-7

Schumacher, E. H., Cookson, S. L., Smith, D. M., Nguyen, T. V., Sultan, Z., Reuben, K. E., & Hazeltine, E. (2018). Dual-task processing with identical stimulus and response sets: Assessing the importance of task representation in dual-task interference. Frontiers in Psychology, 9. doi:https://doi.org/10.3389/fpsyg.2018.01031

Schumacher, E. H., & Hazeltine, E. (2016). Hierarchical task representation: Task files and response selection. Current Directions in Psychological Science, 25(6), 449–454. doi:https://doi.org/10.1177/0963721416665085

Schumacher, E. H., Schwarb, H., Lightman, E., & Hazeltine, E. (2011). Investigating the modality specificity of response selection using a temporal flanker task. Psychological Research, 75(6), 499–512. doi:https://doi.org/10.1007/s00426-011-0369-9

Schumacher, E. H., & Schwarb, H. (2009). Parallel response selection disrupts sequence learning under dual-task conditions. Journal of Experimental Psychology: General, 138(2), 270–290. doi:https://doi.org/10.1037/a0015378

Steinhauser, M., Ernst, B., & Ibald, K. W. (2017). Isolating component processes of post-error slowing with the psychological refractory period paradigm. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(4), 653–659. doi:https://doi.org/10.1037/xlm0000329

Verguts, T., & Notebaert, W. (2008). Hebbian learning of cognitive control: Dealing with specific and nonspecific adaptation. Psychological Review, 115(2), 518–525. doi:https://doi.org/10.1037/0033-295X.115.2.518

Weissman, D. H., Colter, K., Drake, B., & Morgan, C. (2015). The congruency sequence effect transfers across different response modes. Acta Psychologica, 161, 86–94. doi:https://doi.org/10.1016/j.actpsy.2015.08.010

Wessel, J. R., Danielmeier, C., & Ullsperger, M. (2011). Error awareness revisited: Accumulation of multimodal evidence from central and autonomic nervous systems. Journal of Cognitive Neuroscience, 23(10), 3021–3036. doi:https://doi.org/10.1162/jocn.2011.21635

Wessel, J. R. (2018). An adaptive orienting theory of error processing. Psychophysiology, 55(3), e13041. doi:https://doi.org/10.1111/psyp.13041

Wright, C. A., Dobson, K. S., & Sears, C. R. (2014). Does a high working memory capacity attenuate the negative impact of trait anxiety on attentional control? Evidence from the antisaccade task. Journal of Cognitive Psychology, 26(4), 400–412. doi:https://doi.org/10.1080/20445911.2014.901331

Acknowledgements

The authors would like to thank Claire Allison and Tiffany Nguyen for their assistance with data collection. In addition, they would like to thank Clifford E. Hauenstein, IV, for his advice about multilevel modeling.

Open practices statement

All of the data and materials for the experiment reported here are available from the authors upon request. The experiment was not preregistered.

Ethics declarations

Declarations of interest

None

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Smith, D.M., Dykstra, T., Hazeltine, E. et al. Task representation affects the boundaries of behavioral slowing following an error. Atten Percept Psychophys 82, 2315–2326 (2020). https://doi.org/10.3758/s13414-020-01985-5

DOI: https://doi.org/10.3758/s13414-020-01985-5