Abstract

A successful language learner must be able to perceive and produce novel sounds in their second language. However, the relationship between learning in perception and production is unclear. Some studies show correlations between the two modalities; however, other studies have not shown such correlations. In the present study, I examine learning in perception and production after training in a distributional learning paradigm. Training modality is manipulated, while testing modality remained constant. Overall, participants showed substantial learning in the modality in which they were trained; however, learning across modalities shows a more complex pattern. Although individuals trained in perception improved in production, individuals trained in production did not show substantial learning in perception. That is, production during training disrupted perceptual learning. Further, correlations between learning in the two modalities were not strong. Several possible explanations for the pattern of results are explored, including a close examination of the role of production variability, and the results are explained using a paradigm appealing to shared cognitive resources. The article concludes with a discussion of the implications of these results for theories of second-language learning, speech perception, and production.

Similar content being viewed by others

In order to successfully communicate in a language, an individual must be able to both perceive and produce that language. Most current theories of speech perception or production assume a relatively straightforward relationship between the two modalities. That is, the two modalities are assumed to share representations and processes. In many models of speech perception, single word perception begins with auditory processing, and these sounds are then mapped onto phonetic and phonological representations, lexical representations, and semantic representations. Production is often described as being the nearly same process in reverse, beginning with accessing a semantic representation, a lexical representation, and then sound structure, before a word is produced using articulators. An abundance of recent work has shifted the focus from perception and production in isolation to the relationship between the two modalities. However, several recent studies have suggested that the relationship between perception and production may not be as straightforward as commonly assumed. The present studies examine the interaction between speech perception and production in a specific case: learning nonnative speech sound categories using a distributional learning paradigm. I present evidence that the relationship between perception and production at the earliest stages of nonnative speech category learning in each modality is complex and suggest that these data support a reformulation of current theories of both perception and production to account for the complexity of this relationship.

Relationship between perception and production

As stated above, the relationship between perception and production is the topic of an ongoing debate in the speech community.Footnote 1 Although it is clear that perception and production must interact in the systems of proficient, adult speakers of a language, it is unclear how this interaction occurs and how best to characterize the nature of the relationship between the two modalities. The strongest views on this matter have been posited by those who suggest that the two modalities are very closely related. For example, both the motor theory of speech production (Libermann, Cooper, Shankweiler, & Studdert-Kennedy, 1967; Libermann, Delattre, & Cooper, 1952; Libermann & Mattingly, 1985, 1989) and direct realist theories (e.g., Best, 1995; Fowler, 1986) posit common representations shared between perception and production during processing based on articulatory properties or motor representations.Footnote 2

These theories can be contrasted with a general auditory account of perception (see Diehl, Lotto, & Holt, 2004, for a review), which suggests that the object of speech perception is an acoustic target rather than an auditory one. Under this account, a variety of configurations are possible for the relationship of perception to production. For example, it is possible that both modalities share the same target; however, unlike direct realism or motor theory, this target would be an acoustic representation rather than a motor representation. This configuration is supported by work examining compensation for motor perturbation during production. In these studies, participants compensate for perturbation to achieve an acoustic target, suggesting that there is an acoustic component to production targets (e.g., Guenther, Hampson, & Johnson, 1998). Additional possible configurations under this account would not rely on identical targets in the two modalities; instead, it is possible that the targets of perception and production are distinct, and the modalities are linked at later stages of processing.

Previous experimental work has demonstrated mixed results, with some studies suggesting a close relationship between the two modalities (e.g., Goldinger, 1998), whereas other work suggests that there are few correlations between performance in the two modalities. In a recent intriguing finding, some work has also suggested that the two modalities may have a more antagonistic relationship (Baese-Berk & Samuel, 2016). Given the large variation in findings, it is important to continue investigations and to ask under what circumstances we may expect to see correlations or lack of correlations in performance between the two modalities, and how determining these circumstances could influence our understanding and theories of the interactions between perception and production. The present study provides an extension of the findings of Baese-Berk and Samuel (2016) and also suggests that production may, in some circumstances, disrupt perceptual learning, even when participants learn in production. Below, I review a selection of studies that investigate the relationship between perception and production to demonstrate the complex nature of previous findings.

Several studies have demonstrated some evidence for a close link between perception and production. Using imitation and shadowing (i.e., direct repetition without instruction for imitation), Goldinger and colleagues have demonstrated that tokens produced after exposure to a perceptual target are judged to be perceptually more similar to the target word than baseline productions (i.e., productions made before any exposure to the target speaker; Goldinger, 1998; Goldinger & Azuma, 2004). Shockley, Sabadini, and Fowler (2004) showed that not only are shadowed words judged to be more perceptually similar to target words but that specific acoustic properties of speakers’ shadowed tokens shift toward shadowed targets. When shadowing words with lengthened voice onset times (VOTs), speakers produced tokens with lengthened VOTs compared with the VOT of their baseline productions. This also suggests that on a fairly short time scale, fine-grained properties of perception can be transferred to production. Several other studies (e.g., Nye & Fowler, 2003; Vallabha & Tuller, 2004) have further examined the acoustic properties of shadowed speech, demonstrating that in shadowed speech some properties of the perceptual tokens are reflected in production. In a more naturalistic task, Pardo (2006) examined the relationship between speech perception and speech production via phonetic convergence during conversations. Using perceptual similarity ratings by naïve listeners, this data suggest that during a dialogue, speakers alter their speech to be more similar to that of their partner.

However, similar studies suggest that this process of phonetic convergence is modulated by a variety of factors. In an examination of what types of phonetic properties are imitated during shadowing, Mitterer and Ernestus (2008) suggested that only “phonologically relevant” properties are shadowed, and “phonologically irrelevant” properties are not. Specifically, they demonstrated that native Dutch speakers shadow prevoicing generally (compared with short-lag voicing), but the amount of prevoicing is not shadowed. However, using a combination of shadowing and short-term training, Nielsen (2011) showed that individuals shift their productions of VOT (a phonetically irrelevant contrast under Mitterer & Ernestus’s definition) to be closer to that of a target voice without any explicit instruction. Babel and colleagues (Babel, 2011; Babel, McGuire, Walters, & Nicholls, 2014) demonstrated that the amount of shadowing is influenced by linguistic factors (e.g., vowel is being shadowed) as well as social factors. Brouwer, Mitterer, and Huettig (2010) examined shadowing of canonical and reduced tokens and found that participants’ shadow both types of tokens, but do not shadow the magnitude of difference between canonical and reduced tokens.

These studies suggest that shadowing and accommodation depends on any number of factors, complicating the interpretation of these results for understanding the relationship between perception and production more specifically. Further, there are methodological and theoretical considerations in interpreting these findings. Only some of the studies assess similarity of the produced and perceived tokens based on acoustic measurements (Mitterer & Ernestus, 2008; Nielsen, 2011; Shockley et al., 2004; Vallabha & Tuller, 2004); many others rely instead on listeners’ perceptual similarity judgments. Although this sort of judgment implies that there are changes in production, it is more difficult to identify any single acoustic property or set of properties to demonstrate that productions are physically more similar to the target tokens. More critically, however, the bulk of these studies have examined aspects of production in the listener’s native language, or at least a language they speak proficiently. Therefore, it is difficult to know how general these patterns are, especially during development. Nonnative speech sound learning provides a possible avenue for examination of this relationship.

Speech sound learning

One of the hallmarks of perception of sounds in one’s native language is categorical perception, characterized by sharp categorization boundaries between sound categories of a language, and good discrimination across category boundaries found in a language, but not within categories of that language. Many studies have asked how an individual system becomes tuned to the native language, and whether it is possible to retune an individual’s system to a nonnative language. At a very early age, infants are able to discriminate relatively well between a wide variety of phonetic contrasts, both native and nonnative; however, they become less sensitive to nonnative contrasts during the first year of life (e.g., Werker & Tees, 1984). By adulthood, listeners are typically insensitive to most contrasts not found in their native language (MacKain, Best, & Strange, 1981). For example, native English listeners are able to categorize tokens from an /r/–/l/ continuum into two categories, a contrast that occurs in their native language. However, Japanese listeners demonstrate poor categorization of those same sounds, because the distinction does not exist in their native language (e.g., Goto, 1971). That is, their perception is reliant on the category structure of their language (e.g., Best, McRoberts, & Sithole, 1988; Kuhl, Williams, Lacerda, Stevens, & Lindblom, 1992; Libermann, Harris, Hoffman, & Griffith, 1957; Pegg, Werker, Ferguson, Menn, & Stoel-Gammon, 1992).

Over the past 3 decades, dozens of studies have demonstrated that listeners are able to increase their sensitivity to contrasts that are not found in their native language with training (Strange & Dittman, 1984). In the laboratory, various methods have been used to train nonnative listeners on the perception of novel phonetic contrasts (see Iverson, Hazan, & Bannister, 2005, for a comparison). These investigations have examined a variety of segments including Japanese listeners’ perception of English /r/ and /l/ (e.g., Logan, Lively, & Pisoni, 1991), English listeners’ perception of a three-way voicing contrast (e.g., McClaskey, Pisoni, & Carrell, 1983; Tremblay, Kraus, & McGee, 1998), English listeners’ perception of German vowels (e.g., Kingston, 2003), Spanish and German listeners’ perception of English vowels (e.g., Iverson & Evans, 2009), and English listeners’ perception of Mandarin tones (e.g., Wang, Spence, Jongman, & Sereno, 1999). In many cases cited above, after relatively brief exposure, listeners are better able to categorize sounds and/or discriminate between categories in a nonnative language. However, it is important to note that there is substantial variability in learner’s abilities to learn nonnative speech sounds, including, but not limited to their initial abilities to perceive or produce the contrast (see, e.g., Perrachione, Lee, Ha, & Wong, 2011).

Models of nonnative phoneme learning

In addition to types of training paradigms, other factors may also influence learning of novel categories. For example, Best et al. (1988) demonstrated that native English listeners are quite good at discriminating between Zulu clicks even though the contrast does not exist in English. They suggested that the relationship between sounds in the learner’s native language and in the target nonnative language could affect the listener’s ability to discriminate. The two predominant models of nonnative and second-language speech perception (the perceptual assimilation model: Best, 1994; Best, McRoberts, & Goodell, 2001; Best, McRoberts, & LaFleur, 1995; Best et al., 1988; PAM-L2: Best & Tyler, 2007; and the speech learning model: Flege, 1995, 1997) make explicit predictions that how listeners will perceive nonnative contrasts and the ease (or difficulty) with which they learn them is directly shaped by the similarity of these contrasts to contrasts in their native language.Footnote 3

Both PAM and SLM make strong claims about the relationship between perception and production. Although PAM itself does not make strong claims regarding production of novel contrasts, the model does posit that speech perception and production share representations. Further, perceptual assimilation under this hypothesis is driven by phonetic similarity of sounds. Because of these general claims, one is able to infer that learning in one modality should be strongly correlated to learning in the other modality. Because PAM posits a very close relationship between the two modalities, it is assumed to be the case that learning in each modality will be correlated under this model. SLM makes more explicit claims about the relationship of perception and production during learning. Specifically, it is claimed that perception leads production (i.e., should always occur first in terms of learning), and that perception and production become closer to one another over the course of learning.

However, evidence for this procession of learning is limited. Several studies have found evidence that directly contradicts these hypotheses. For example, Sheldon and Strange (1982) demonstrated that production learning can precede perceptual learning. Bradlow, Pisoni, Akahane-Yamada, and Tohkura, (1997) examined whether perceptual training transferred to production learning. At a population level, they demonstrated that learners improved on production of the tokens even without overt production training (for additional evidence of transfer from perceptual training to production learning, see Bradlow, Akahane-Yamada, Pisoni, & Tohkura, 1999; Wang, Jongman, & Sereno, 2003). However, the pattern of individual learning is much less clear. Some individual participants show substantial improvement in both perception and production. Others demonstrate improvement in perception alone, with no improvement in production. Still other participants show what are assumed to be floor or ceiling effects. Further, some participants demonstrate improvement in production and do not demonstrate any improvement in perception. This result runs counter to the predictions of PAM and SLM, both of which suggest such improvement should not occur in the absence of perceptual learning.

Of course, perception and production are starkly different tasks in terms of their demands on a learner. When producing, especially when repeating, a learner has increased cognitive demands as compared to perception alone. That is, to repeat a token, the learner must first encode what they have heard, and then retrieve a motor plan that corresponds with their percept to appropriately repeat the token. This increased processing demand could disrupt some aspects of learning. That is, if resources are shared between the two modalities during training, it is possible that perception and production may actually have an antagonistic role during learning, with training in one modality reducing the resources available for learning in the opposite modality.

Some recent evidence suggests that production during training does, in fact, incur a cost to the learner. Baese-Berk and Samuel (2016) examined perceptual learning for native Spanish speakers learning a new sound distinction in Basque. After 2 days of training, naïve participants trained in perception alone demonstrated substantial improvements in their ability to perceive the novel contrast. However, participants who were trained in a paradigm that alternated between perception exposure and production practice did not demonstrate learning in perception. That is, perceptual learning was disrupted by producing tokens during training. Interestingly, participants with more experience with the contrast (i.e., late learners of Basque) demonstrate less disruption to perceptual learning. This study, which is foundational for the current manuscript, is discussed in more detail below.

Previous work has also examined learning in each modality as a result of production-focused training. Hattori (2010) examined the perception and production of /r/ and /l/ by native Japanese speakers. He found that the baseline abilities in perception and production of the contrast was not highly correlated. After training listeners using articulatory, production-oriented training their productions of the contrast improved significantly according to a variety of measures; however, their perception was unchanged after this training (see Tateishi, 2013, for a similar finding). This finding contrasts with that of Leather (1990), who trained Dutch participants on the production of four Mandarin words differing in tone. After training in production, he found that participants generalized this learning to perception. However, the author concedes that this result is not conclusive as only one syllable was used during training and testing. Furthermore, there was no pretest, so it is unclear whether the participants were able to perceive this contrast before training.

Current studies

Even given the substantial body of previous work, the links between perceptual learning and production learning remain unclear. Specifically, although it appears that production can occasionally disrupt perceptual learning, it is unclear whether learning can emerge in production, even for those participants who do not learn in perception. Further, it is unclear how distributional information may differentially influence learning in each modality and whether exposure to clear distributions of tokens may impact the relationship between the two modalities.

In Baese-Berk and Samuel (2016), we demonstrated that producing tokens during training could disrupt perceptual learning. In the present study, I extend these results in two important ways: First, I vary the number of days of training (see Experiment 1 vs. Experiment 2), and second, I investigate production learning in addition to perceptual learning. By providing these two extensions, it is possible to begin to answer the question of under what circumstances we might expect to see a disruption of perceptual learning after production during training. Once we better understand those circumstances, we could provide tests of ways in which this disruption could be alleviated, which has potential real-world consequences, in addition to consequences for our scientific understanding of the relationship between the two modalities.

In the present study, a distributional learning paradigm is used to train participants and explicitly manipulate training modality while holding testing modality stable. That is, participants were trained either in perception alone or in a combination of perception and production practice. All participants, regardless of training modality, are tested in both perception and production. This allows a direct examination of the influence of training modality learning in both perception and production of a nonnative sound category. The results of the studies presented here will give us insight into how distributional information influences learning and will elucidate the relationship between the two modalities.

Method

Experimental stimuli and methods

Stimuli

Stimuli in all three experiments were modeled on those used in Maye and Gerken (2000), who demonstrated that participants can learn novel speech sound categories after exposure to a bimodal distribution, but not from a unimodal distribution. All stimuli were resynthesized tokens of syllables spoken by a female native speaker of American English. Three separate synthetic continua were formed, following Maye and Gerken, each with the stop consonant in a different vowel environment (i.e., before /a/, /æ/, and /ɚ/). Across continua, voice onset time and steepness of formant transitions were held constant. Vowel durations were equated within and across continua. Each continuum included eight equidistant steps. The syllables were resynthesized from naturally produced tokens of a contrast that English listeners are able to produce, but that English does not use contrastively: prevoiced to a short-lag alveolar stop. To produce a prevoiced stop, a speaker’s vocal folds are vibrating during the closure for the stop; critically, voicing begins before the release of the consonant. There is usually minimal disruption in vocal fold vibration following the stop release, unlike an aspirated stop. A short-lag stop has a brief period of aspiration after the stop release, and no voicing during the closure of the stop. For more discussion of realizations of this contrast, see Davidson (2016). Two phonetic cues are used to signal this contrast in the continua. The first is VOT (prevoiced vs. short lag); the second is the formant transitions from the stop consonant to the vowel (steeper for the prevoiced end of the continuum, and shallower for the short lag end).

Synthesis of stimuli

In order to create the continua, the voicing and formant transitions of a naturally produced token were covaried using Praat (Boersma & Weenink, 2015). The first two formants were resynthesized for each continuum. The tokens were synthesized using the prevoiced token as a base, so that each subsequent step had a smaller amount of prevoicing and less steep formant transitions. Following Maye and Gerken (2000), 78 ms of naturally occurring prevoicing were included in Token 1. Voice onset time was manipulated by gradually reducing the amount of prevoicing at a step size of approximately 13 ms, such that Token 8 had a positive voice onset time of 13 ms. Vowel formants were resynthesized using Praat’s LPC algorithm for the first 60 ms of each vowel. Slope was gradually reduced for each token along the continuum, mirroring slopes in Maye and Gerken. Because each vowel required a different end state, the formant transitions differed slightly across each continuum.

Training paradigm

Statistical learning studies have provided a means to examine novel category formation under slightly more naturalistic, though still controlled, laboratory training studies. In the present study, I use a distributional learning paradigm, following Maye and Gerken (2000). This paradigm is an ideal tool for examining the relationship between perception and production. Because participants are trained implicitly, no explicit instruction about the sound categories is needed during training, allowing for a more equal training in perception and production. Below, I report the data from the bimodal training groups, as the unimodal training group did not demonstrate significant learning in perception or production.

Procedure

All training and testing took place in a large, single-walled sound booth. Visual stimuli were presented on a computer screen. Audio stimuli were presented over speakers at a comfortable volume for the participant. All tasks were self-paced. Production responses were made using a head-mounted microphone. Responses in perception tasks were made using a button box.

Training

The training procedure was an implicit learning paradigm that used pictures to reinforce statistical distributional information given to participants. The procedure for training followed the basic procedure used by Maye and Gerken (2000). In Experiment 1, training took place over 2 consecutive days. In Experiment 2, training occurred over 3 consecutive days. Each day, training was broken into several blocks, with 16 repetitions of the target stimuli. The number of repetitions of each token on the continuum was determined by participant training group.

Figure 1 shows the distribution of tokens in each of the two types of training. Participants in the unimodal training groups received more repetitions of stimuli in the middle of the continuum (i.e., stimuli at Points 4 and 5 on the continuum) and fewer repetitions of those stimuli near the ends of the continuum (i.e., Stimuli 2 and 7), which created a single distribution on the continuum. Participants in the bimodal training groups, on the other hand, received more repetitions of stimuli at two points along the continuum (i.e., stimuli at Points 2 and 7 on the continuum) and fewer repetitions of the stimuli at the middle of the continuum (i.e., Stimuli 4 and 5), which creates two separate distributions on the continuum. (See Fig. 1 for examples of these distributions.) Participants in the bimodal training group should infer two novel categories, and participants in the unimodal group should infer only one category. Each participant heard 16 experimental tokens from each of three continua, for a total of 48 tokens per block. Participants were exposed to eight training blocks per day for a total of 384 training tokens each day.

All tokens were paired with a picture during presentation. The unimodal group saw one picture per continuum. The continuum for the bimodal group was divided in half, with one picture per half. These pictures reinforced the distributional information given to participants in their respective training groups. Pairings of pictures with continua were counterbalanced across participants.

Following Maye and Gerken (2000), participants were told nothing specific about the syllables they were listening to. Diverging from Maye and Gerken, training took place over 2 days to allow for an examination of the time course of learning. Additionally, this allowed for the inclusion of more testing without disrupting the training distributions presented to the participants.

Testing

During the testing phase, participants performed four tests, two focusing on perception (discrimination and identification) and two focusing on production (repetition and naming). Testing was identical for all subjects regardless of training group. Discrimination and repetition pre-tests occurred before training on each day of the experiment. At the end of each day, participants performed discrimination, repetition, categorization, and naming post-tests, though only discrimination and repetition data are presented here.

Discrimination test

On each trial of the discrimination test, participants heard a pair of tokens separated by a 500 millisecond interstimulus interval and were asked to indicate whether the tokens were the same or different. Feedback was not provided between trials or at the end of the test. Stimuli 1, 3, 6, and 8 from the continua were used during the discrimination test. These tokens are presented the same number of times during training to both the unimodal and bimodal training groups. Therefore, any differences in discrimination should be due to differences in how those tokens fell in the distribution participants were exposed to only, and, critically, not to how often they heard that particular token. Presentation of pairs of tokens and the order of tokens within a pair was fully counterbalanced. Participants heard a total of 48 pairs of tokens during the discrimination test. No visual stimuli were included for this portion of the test.

The discrimination test contained three types of comparisons: same, within category, and across category. For same comparisons, participants heard one of four acoustically identical pair types: 1–1, 3–3, 6–6, or 8–8. Within-category comparisons were either Tokens 1 and 3 or Tokens 6 and 8. These comparisons fall within as defined by the unimodal and bimodal distributions. Across-category comparison contained Pairs 3–6 or 1–8. These comparisons are of tokens that fall across categories as defined by the bimodal distribution, but of tokens which fall within a single category as defined by the unimodal distribution. Critically, the test tokens were presented the same number of times in each of the training paradigms, so differences in performance cannot be driven by exposure to the specific test tokens.

Repetition test

In the repetition test, participants were asked to repeat stimuli from the three continua. Participants heard a single token and were instructed to repeat the token. After participants produced a token, they pressed a button to advance to the next token. As in the discrimination test, the test tokens were Tokens 1, 3, 6, and 8 along the continuum. Four tokens of each of these points were presented. Presentation of stimuli was fully randomized. Participants were presented with a total of 48 tokens during this test. No visual stimuli were presented during this portion of the test.

Voice onset time and whole-word duration was measured for each token by two trained coders. Each coder marked burst onset, voicing onset, and end of vowel. If any amount of prevoicing was present before the burst onset, the onset of this prevoicing was also marked. Furthermore, if there were breaks between the prevoicing, and the onset of the burst, the offset of prevoicing was also marked (see Davidson, 2016, for a description of the various realizations of VOT by native English speakers). This allowed three measures to be calculated from each response each participant produced: the presence or absence of prevoicing, breaks in voicing during prevoiced tokens, and voice onset time (VOT; if positive, the duration between the burst onset or voicing onset; if negative, the duration between the onset of prevoicing and the onset of the burst). Interrater reliability was high (confidence interval for intraclass correlation coefficients [ICC] = .965 < ICC < .99).

For the sake of brevity, only data from the bimodal participants are presented in the current manuscript. Unimodal participants did not demonstrate improvement in perception or production from Day 1 to Day 2, as expected, given previous results using this paradigm. Although I describe all participants from the experiment below, only those from the bimodal training group are analyzed here.

Experiment 1

Method

Participants

Eighty-nine undergraduate students (62 females) participated in Experiment 1. Participants reported no speech or hearing deficits, nor did the groups of participants differ as a function of musicianship. Seventeen participants either did not complete both days of the experiment or were not native, monolingual American English speakers, leaving a total of 72 participants for analysis. Participants were divided into two training regimens, described below, with a total of 36 participants completing each training regimen. This sample size was chosen based on previously reported effect sizes for similar perception experiments, given the lack of similar studies examining production after training in a distributional learning paradigm.Footnote 4 Below, we report data from only the 36 participants who completed the bimodal training regimen.

Procedure: Perception-only training

Participants followed the general methods outlined above for training and testing. Participants were tested in perception and production at the beginning and end of each of 2 training days. During training, participants in this condition heard a token and saw the paired picture. They then pressed a button on the button box to advance to the next token. They were not required to actively engage with the token they heard during training. Participants were presented stimuli from either the bimodal or unimodal distribution described above. Assignment of these conditions was counterbalanced across participants, so 18 participants were trained in the perception-only regimen on a unimodal distribution, and 18 participants were trained in the perception-only regimen on a bimodal distribution. As stated above, only data from participants in the bimodal training groups are analyzed here.

Procedure: Perception + production training

Participants in the perception + production training regimen followed the same general methods for testing and training outlined above. The primary difference between this training regimen and the one outlined above is the task during training. As in the perception-only training, participants heard a token and saw the paired picture. However, before pressing the button to advance to the next token, participants were told to repeat the token they heard. They then pressed the button on the button box to advance to the next token. As in the perception-only training regimen, participants were presented stimuli from either a unimodal or a bimodal distribution. Assignment of these conditions was also counterbalanced. Eighteen participants were trained in the perception + production regimen on a unimodal distribution, and the remaining 18 participants were trained in the perception + production regimen on a bimodal distribution. As above, I only report data from the bimodal training groups here.

Production learning predictions

If participants learn to modify their productions of the training tokens as a result of their exposure, we may expect to see participants in the bimodal groups make a bigger difference in their repetitions of end-point tokens at the end of 2 days of training than they do at the beginning of training. Specifically, we should expect to see participants producing longer voice onset times for Token 8 than for Token 1, or more prevoicing on Token 1 than on Token 8. These differences ought to increase from pretest to posttest if participants are learning to change their productions as a result of training. Differences between perception-only and perception + production training would reflect differences in modality of training. Given previous results, we anticipate that participants in the perception + production training will demonstrate some improvement in their productions from pretest to posttest.

Production learning analysis

Participants produced a total of 48 tokens in each test (three continua, four points per continuum, and four repetitions per point). Participants’ productions were classified into one of four types: short-lag tokens, prevoiced tokens, mixed tokens (with substantial periods of both prevoicing and aspiration), and mixed tokens with a pause (with a substantial period of prevoicing, a period of silence, and a period of aspiration). Only short-lag and prevoiced tokens were used for the analyses reported here; however, Table 1 shows the proportion of each type of token.

Only the end-point tokens (Tokens 1 and 8) were compared because this is where participants are expected to make the largest differences in production. Because short-lag and prevoiced tokens are bimodally distributed, voice onset times for each token type were analyzed separately. Furthermore, because relatively few prevoiced tokens were produced, participants’ voice onset times were analyzed for short-lag tokens only, and for prevoiced tokens, the proportion of tokens that were prevoiced was the dependent measure. For short-lag tokens, both raw VOT and a measure normalizing VOT for vowel duration were calculated. However, the same pattern was found across the two measures. Raw VOT is reported below. Because continuum was not a significant predictor of VOT or the proportion of tokens that were prevoiced, all continua are collapsed in the plots and analyses below.

The data were analyzed using linear mixed-effects regressions for short-lag voice onset time (Baayen, Davidson, & Bates, 2008) and logistic mixed-effects regressions for proportion of tokens that were prevoiced, implemented with R package lme4 (Bates, Maechler, Bolker, & Walker, 2014). All regressions included the maximal random-effect structure justified by the model, and the random-effect structure for each model is specified below. Significance of each predictor in the linear regressions was assessed using model comparisons.

Production learning results

Figure 2 shows the results for voice onset time of short-lag tokens for the participants in the perception-only and perception + production training groups. Examining the figure, it is clear that participants in both training groups make small differences between the two end-point tokens at pretest. This is expected, given that previous research has suggested that speakers shadow VOT (Goldinger, 1998; Nielsen, 2011). Further, participants appear to make larger differences at posttest. It also appears as though this difference is larger for the perception + production training group than the perception-only training group.

The results of the mixed-effects model support this observation. The regression included training modality, training day, token number, and their interactions as fixed effects. Random effects included random slopes for training day by subject, and a random intercept for training continuum. Significance of each factor and interaction was calculated via model comparisons. First, training day was a significant predictor of model fit (β = −0.0012, SE = 0.0007, t = −1.54, χ2 = 10.001, p = .002), suggesting that participants changed their productions from Day 1 to Day 2. Token number was also a significant predictor of model of model fit (β = 0.0024, SE = 0.0007, t = 3.378, χ2 = 68.008, p < .001). However, training modality was not a significant predictor of model fit (χ2 < 1, p = .347).

Furthermore, the interaction between training day and token number was a significant predictor of model fit (β = 0.00027, SE = 0.001, t = −1.683, χ2 = 6.178, p = .013), suggesting that participants produce Tokens 1 and 8 on Day 1 rather than Day 2. The three-way interaction between training modality, training day, and token number also significantly predicted model fit, suggesting that (β = 0.0028, SE = 0.0014, t = 2.015, χ2 = 4.077, p = .043) participants in the perception + production training group make differences between Tokens 1 and 8 that interact with training day, but participants in the perception-only training group do not. No other interactions contributed significantly to the model fit (all χ2s < 1, ps > .1)

Figure 3 shows the results for voice onset time of short-lag tokens for the participants in the perception-only and perception + production training groups. Examining this figure it is clear that participants in both training groups prevoice tokens more on Day 2 than they do on Day 1. It also appears as though participants in the perception + production training group produce more prevoicing on Day 2 than participants in the perception-only training group.

A logistic regression was run comparing the proportions of prevoicing across the two experiments, using the same fixed-effects and random-effect structure as the model described above for voice onset time. Because logistic mixed-effects regressions use z values, I use these estimates to determine significance. The main effect of day was significant, suggesting that participants in both training groups produce prevoicing more often on Day 2 than on Day 1 (β = 1.09, SE = 0.41, z = 2.7, p = .007). No other main effects or interactions were significant (z values < 1). It should be noted, however, that one should be cautious in interpreting these results, given the relatively small number of prevoiced tokens produced.

These results suggest that although participants in the bimodal perception-only training group demonstrate some changes in production, the changes are not as robust as the bimodal perception + production training group. Although perceptual training can result in production learning, more robust production learning results from training that includes production. This finding is consistent with a wide array of literature suggesting that a learner’s ability to produce tokens can change with both perception and production training, but production training is more effective for eliciting changes in productions. Further, this work builds upon previous work using statistical learning, demonstrating that distributional exposure can influence production.

Perception results

Perceptual learning predictions

Turning our attention to perceptual learning, I ask here whether training modality influences perceptual learning. If participants in the bimodal training group successfully learn two novel categories after training, we expect their sensitivity to across-category comparisons should significantly increase from Day 1 pretest to Day 2 posttest. However, their sensitivity to within-category comparisons should remain stable or decrease if they have a high baseline sensitivity to the contrasts. If training modality influences learning, we expect to see differences in sensitivity to across-category comparisons between the perception-only and perception + production training groups. Given previous results (e.g., Baese-Berk & Samuel, 2016), we may expect to see an attenuation of learning for the perception + production trained groups.

Perceptual learning analysis

Mixed-effects models were conducted to analyze this data. Because no significant differences were found for location on the continuum to the regression (e.g., 1–8 comparisons vs. 3–6 comparisons), order of stimulus presentation (e.g., 1–8 vs. 8–1), or continuum (e.g., /da/ vs. /dæ/), these factors were not included in the models presented below, and all figures collapse over these distinctions.

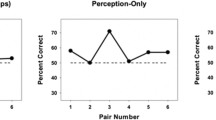

Perceptual learning results

Figure 4 shows sensitivity at posttest for participants in the perception-only and perception + production training groups. Examining the figure, it appears that participants in the perception-only training group show sensitivity to the across-category comparisons, but not the within-category comparisons. It is also clear from Fig. 4 that perceptual learning is attenuated for participants in the perception + production training group.

To compare perception-only and perception + production training regimens, regressions were run that used training modality, training day, and contrast type, and their interactions as fixed effects. Random-effect structure was the maximal structure justified by the model (using model comparisons) and included random slopes for training day and contrast type by participant and a random intercept for continuum. Significance was determined using model comparisons (see χ2 and p values that follow). Training day was a significant predictor of model fit (β = 0.76, SE = 0.12, t = 6.34, χ2 = 15.01, p = .008), suggesting that overall participants performed differently on Day 1 than on Day 2. Although no other main factors were significant predictors of model fit, several interactions did emerge as significant predictors. These interactions are summarized below.

First, the interaction between training modality (i.e., perception-only or perception + production) and training day was a significant predictor (β = −0.53, SE = 0.16, t = -3.27, χ2 = 5.36, p = .009). This suggests that the differences in performance across days are dependent on the training modality. The interaction between training day and comparison type also emerged as a significant predictor of model fit (β = −0.61, SE = 0.16, t = −3.86, χ2 = 11.27, p < .001). This suggests that participants perform differently on across-category and within-category comparisons on Day 1 and Day 2. The three-way interaction between training modality, training day, and comparison type is also a significant predictor of model fit (β = 0.45, SE = 0.22, t = 2.03, χ2 = 4.23, p = .039). These observations suggest that adding production to a perceptual training regimen negatively influences perceptual learning.

However, it is not the case that perceptual learning is depressed for all subjects. Examining individual data, several participants in the bimodal perception + production training group do show robust perceptual learning. A number of factors were examined as potential predictors for perceptual learning for participants in the bimodal training. Perception abilities on Day 1 during the pretest did not significantly improve the fit of the regression. It is also possible that because participants in the perception + production training group have larger demands on their attention (Baese-Berk & Samuel, 2016), and because participants do show learning in production, their perceptual learning may simply be slowed down. Perhaps with additional training time, participants in the perception + production training group would be able to learn to discriminate between the two new sound categories. This possibility is examined in Experiment 2.

Experiment 2

Method

Participants

Forty-nine Northwestern University undergraduates (31 females) participated in this experiment. Thirteen participants did not complete all 3 days of training and were excluded from analysis, leaving a total of 36 participants for analysis. All participants were native monolingual English speakers and did not report speech or hearing disorders. Training groups did not differ significantly in their musical experience. All participants were paid for their participation. As in Experiment 1, each training group in Experiment 2 contained 18 participants. Participants were divided into two training groups: a bimodal perception-only training group and a bimodal perception + production training group. Because participants in the unimodal groups in Experiment 1 demonstrated no learning, we restricted training to bimodal groups for Experiment 2.

Stimuli

The stimuli in Experiment 2 are identical to those in Experiment 1. Test and training stimuli are drawn from the same continua formed for Experiment 1. Because both training groups in Experiment 2 are bimodal exposure groups, there were no differences in the distributions given to participants in this study.

Procedure

The procedure was identical to that in Experiment 1. All training and testing occurred in the same order as in Experiments 1 and 2. However, participants in this study trained for 3 consecutive days. The testing and training order were the same on all 3 days of the experiment. Training and testing took around 1 hour each day of the training regimen.

Production learning predictions

In Experiment 1, participants in the bimodal perception-only training group did not demonstrate significant improvement in production, though there were trends toward improvement after 2 days of training. By examining repetition after 3 days of training, it is possible that learning will emerge for perception-only training in the nontrained modality of production. If these changes do occur, it should be expected that participants in the both will make a bigger difference in their repetitions of end-point tokens at the end of 3 days of training than they do at the beginning of training. Specifically, we should expect to see participants producing longer voice onset times for Token 8 than for Token 1. Furthermore, Token 1 should be prevoiced more often than Token 8. These differences ought to increase from pretest to posttest if participants are learning to change their productions. Participants in the bimodal perception + production training should show similar patterns of learning on Day 2 as the similar group did in Experiment 1. They may also continue to improve on Day 3, showing increased differences between the two end-point tokens.

Production learning analysis

As in Experiment 1, participants’ productions were classified into one of four groups: short-lag tokens, prevoiced tokens, and mixed tokens (with substantial periods of prevoicing and aspiration, sometimes also including a pause). Only short-lag and prevoiced tokens were used for the analyses reported here; however, Table 2 shows the proportion of each type of token.

Participants’ voice onset times for short-lag tokens were analyzed, due to the relatively small number of tokens that were prevoiced. Only the end-point tokens (Tokens 1 and 8) were compared because this is where participants are expected to make the largest differences in production. As in Experiment 1, VOT was calculated as a raw value and also as a ratio of the vowel duration. Because the two measures show similar patterns, I report raw VOT here. Additionally, the proportion of tokens that were prevoiced are also reported for Tokens 1 and 8. Because there were no significant differences across continua, all continua are collapsed in the analyses reported here.

Production learning results

Figure 5 shows the average voice onset time at Day 1 pretest and Days 2 and 3 posttest for short-lag tokens for the two bimodal training groups.

Once again, the data were analyzed using a linear mixed-effects regression that included training day, training modality, token number, their interactions, and the maximal random-effect structure justified by the model described above. The main effect of training day is significant (β = 0.0038, SE = 0.0006, t = 2.556, χ2 = 35.489, p < .001), as is the main effect of token (β = 0.0015, SE = 0.0007, t = 2.237, χ2 = 32.581, p < .001), suggesting that participants make distinctions between Tokens 1 and 8 and that their productions across training days differ. The main effect of training modality is not significant (β = 0.0044, SE = 0.0012, t = 3.436, χ2 = 3.5415, p = .059), though there is a numerical trend toward the participants in the perception + production training group producing longer voice onset times.

In terms of interactions, only the interaction between training day and training modality emerges as a significant predictor of model fit (β = −0.0045, SE = 0.0009, t = −4.726, χ2 = 30.289, p < .001). All other two-way interactions and the three-way interaction were not significant predictors of model fit (χ2 < 1, p > .1).

As in Experiment 1, participants in both training groups make numeric differences in short-lag VOT between Tokens 1 and 8. Specifically, Token 1 is produced with a shorter VOT than Token 8. Once again, participants appear to shadow some properties of the tokens they are repeating. Although this result is slightly different than Experiment 1 in which both groups showed some differences in voice onset time between Tokens 1 and 8 after training, this lack of differences is unsurprising when examining the data. The variance in this population is large and may mask some of the very small voice onset time differences.

Figure 6 shows the proportion of tokens that were prevoiced at pretest and posttest for the two bimodal training groups.

When examining the proportion of tokens that are prevoiced, a logistic mixed-effects model was used. Factors in the model included training modality, training day, token number, their interactions, and the maximal random-effect structure justified by the model. Training day was a significant predictor of model fit (β = 2.27, SE = 0.34, z = 3.5, p < .001), suggesting that participants prevoiced more often after training than they did before. Numerically, participants in both training groups do prevoice Token 1 more often than Token 8. However, token number was not a significant predictor of model fit (z < 1). The three-way interaction between training modality, training day, and token number (β = −0.15, SE = 0.06, z = −2.17, p = .03) was a significant predictor of model fit. Examining participants’ performance, it is clear that participants in the bimodal perception + production training prevoiced Token 1 more often than Token 8 on Day 3 of training. Participants in the bimodal perception-only training do not make such a large distinction.

To examine this finding in more detail, follow-up regressions were run comparing Day 1 pretest with Day 2 posttest and, separately, Day 1 pretest with Day 3 posttest. No main effects or interactions emerged as significant predictors in the model examining Day 1 pretest to Day 2 posttest (zs < 1). However, in the regression that compares Day 1 pretest to the Day 3 posttest, training day is a significant predictor of model fit (β = −3.8, SE = 1.6, z = −2.4, p = .032), as is the three-way interaction between training modality, training day, and token number (β = –3.7, SE = 1.8, z = −2.02, p = .043). This supports the explanation above that the changes in productions emerge on Day 3, but not yet on Day 2. As in the case of Experiment 1, one should be cautious in interpreting these results, given the relatively small number of prevoiced tokens produced.

These results support findings in Experiment 1. Though participants in the perception-only training do demonstrate small changes in production after training, this learning is not nearly as robust as production learning after training in perception + production. Although differences were not found in short-lag voice onset time in this study, it is possible that participants were more variable in their productions in the present experiment. When examining the perception + production group independent of the perception-only group, several significant differences emerge.

Perceptual learning predictions

First, participants in the perception-only training group should demonstrate robust learning after 2 days of learning, replicating the results from Experiment 1. Additionally, participants in the bimodal perception + production training group should not demonstrate perceptual learning after 2 days of training. However, on the third day, there may be a performance increase if learning in perception is simply slowed down for participants in the perception + production training group, rather than being completely disrupted. Furthermore, participants in the bimodal perception-only training group may improve their performance as a function of an increased amount of training.

Perceptual learning analysis

Analyses of the discrimination data were the same as those in Experiment 1. Linear mixed-effects regressions were used to analyze the data.

Perceptual learning results

Figure 6 shows posttest scores for both the perception-only and perception + production training groups. Examining this figure, it is clear that participants in perception-only training are quite sensitive to across-category comparisons after both 2 and 3 days of training. As expected, given the results of Experiment 1, it appears as though participants in the perception + production training demonstrate less perceptual sensitivity to the across-category contrasts after 2 days of training than participants in the perception-only training group. However, interestingly, these participants do show an improvement in sensitivity to across-category comparisons after 3 days of training, as demonstrated in the right most set of bars (Fig. 7).

To assess perceptual learning, a mixed-effects regression was performed on discrimination data from Day 1 pretest and posttests on Days 2 and 3. The regression model included the main effects of training modality, training day, comparison type, all of their interactions, and the maximal random-effect structure justified by the model with random slopes for day by contrast for participants. Contrast emerges as a significant predictor of model fit (β = −1.1659, SE = 0.2084, t = −5.594, χ2 = 75.516, p < .001), suggesting that participants have an increased sensitivity to the across-category contrasts compared to the within-category contrasts. Neither training modality nor day significantly improves model fit (both χ2 < 2, p > .10).

Examining the interactions included in the model, the three-way interaction between training day, training modality, and contrast type was not significant (χ2 < 1, p > .10). However, the two-way interaction between training day and modality was significant (β = .5538, SE = 0.2908, t = 1.904, χ2 = 5.9372, p = .015), as is the interaction between training modality and contrast type (β = 0.4857, SE = 0.2908, t = 1.67, χ2 = 4.4422, p = .035). The interaction between training day and contrast type is not significant, though there is a numerical trend toward across-category comparisons being more distinct on Day 2 than on Day 1 (β = −.3149, SE = 0.2948, t = -1.069, χ2 = 3.4823, p = .06).

In Experiments 1 and 2, participants show improvement that is largely tied to the modality of training. Participants trained in perception + production demonstrate substantial improvement in repetition from pretest to posttests, but only begin to show gains in perceptual learning after 3 days of training. Participants trained in perception-only demonstrate improvement in discrimination, but do not demonstrate learning in production. After 3 days of training, they do show some improvement in production, but these advances are relatively limited compared to participants in the perception + production training group.

At first blush, this is not surprising. Training focused on a particular modality should show substantial improvement within that modality. However, one aspect of this finding is rather puzzling. How do participants in the perception + production training learn in production when they are unable to perceive differences between the training tokens? This is particularly curious because the training task was a repetition task, which requires the learner to perceive the token they are trying to produce. Although the results of Experiment 2 suggest that perceptual learning is not entirely disrupted, the finding of reduced learning after 2 days of training in perception merits further review. In the next section, I investigate individual variability in perceptual learning and whether perceptual learning correlates with production learning for the training groups in Experiment 1. I then examine several possible factors underlying individual variability in perceptual learning.

Individual differences in perceptual learning

Figure 8 shows individual performance for the bimodal training groups from Experiments 1 and 2. Individual performance on the across-category comparisons during the Day 2 posttest are plotted here; participants are ordered by their final performance on the discrimination task. A few interesting observations can be made. First, there is substantial individual variation across participants in both training groups. However, focusing on the perception + production training group in the top panels of Fig. 8, it is clear that 11 of the 18 participants are performing at chance levels on the discrimination task, even after 2 days of learning.

This pattern was also seen in the individual performance for participants in Experiment 2. The individual data are plotted in the bottom panels of Fig. 8. Participants are ordered by their performance on the discrimination task on Day 2. Interestingly, some participants in the perception + production training group who do not learn after 2 days of training do demonstrate improvement from Day 2 to Day 3. However, a small number of participants still demonstrate very poor performance (i.e., chance performance on the discrimination task) even after 3 full days of training. This suggests that the source of disruption may not simply be a delay in learning, which could be alleviated by increased or prolonged exposure. I examine some possible options for this disruption below.

One may wonder whether participants who fail to learn in perception also fail to learn in production. It is possible that the gains in production learning for the perception + production training group are driven by the few participants who demonstrate perceptual learning. In order to ask this question, I examined correlations in perception and production for both training groups in Experiment 1.

To examine the correlation in learning across modalities, model comparisons were performed for models that included Day 2 discrimination performance as a predictor of the difference participants make between tokens in production on Day 2. The model that included Day 2 discrimination was a significantly better fit than the model that did not include that comparison (χ2 = 11.6, p < .03). This suggests that performance in the two modalities is related for participants in the perception-only training group. Figure 9 shows Day 2 discrimination performance and the amount of difference made between Tokens 1 and 8 in production.

Day 2 discrimination (d') and the amount of difference in voice onset time between Tokens 1 and 8 on Day 2. Perception-only training is shown in the left panel, and perception + production training is shown in the right panel. Discrimination is shown in d', and the production data are shown in VOT (seconds)

A similar comparison for the perception + production training group reveals a different pattern. The model that included Day 2 discrimination was not a significantly better fit than the model that did not include that factor (χ2 = 8.1, p = .09). This suggests that for the perception + production participants, performance in production is not related to performance in perception. The right panel of Fig. 9 shows Day 2 discrimination and Day 2 repetition performance for the perception + production training group. Of particular interest are the 11 subjects who do not learn in perception (clustered around the zero point on the horizontal axis). Several of these participants demonstrate production differences on Day 2, suggesting that learning in production is not tied to learning in production.

Although it is clear that performance on perception and production tasks is not closely tied for the participants in the perception + production training, a number of other factors could influence the disruption of perceptual learning. Several of these possibilities were examined using model comparisons. Day 1 pretest performance on the discrimination task does not significantly predict model fit for the perception + production training group (χ2 < 1).

One intuitive explanation is that participants who were “bad” at the repetition task were also “bad” learners. That is, participants who gave themselves productions that deviated from the distributions given to participants during training may have caused a disruption in their own perceptual learning. Taken in its most basic form, this does not appear to be true. As demonstrated above, examining only the perception + production training group, Day 2 posttest production performance does not significantly improve model fit. Baseline production abilities also do not significantly improve model fit (χ2 < 1), nor does average performance on either of the two end-point tokens (χ2 < 1).

However, it is possible that average production abilities do not accurately characterize what participants produce during test and training. Substantial previous work has suggested that variability plays a critically important role in learning. In order to examine the role of production variability during learning, I measured all productions of Tokens 1 and 8 during training and test.Footnote 5 I then calculated the variability for each token. For visualization purposes, participants in the bimodal training group into two groups: learners and nonlearners. Participants were grouped as a function of whether their d' values at posttest for the across-category comparison were above chance (learners) or at chance (nonlearners). Figure 10 shows the variability in voice onset time for Tokens 1 and 8 for learners and nonlearners. This figure shows that participants who do not learn in perception are more variable than participants who do learn in perception, specifically on Token 1. Recall that Token 1 is at the prevoiced end of the continuum. It appears that participants are more variable on the end of the continuum that is less frequent in English and is likely more novel to participants in the present study.

Variability in voice onset time for the perception + production training group in Experiment 1, divided by learners and nonlearners

The results of a linear mixed model support this observation. A mixed model was run with the variability in voice onset time as the dependent variable, and learner status (i.e., learner vs. nonlearner) and token (1 vs. 8) and their interaction as fixed factors, and the maximal random-effect structure justified by the model. A model comparison demonstrated inclusion of learner status significantly improves model fit (χ2 = 4.517, p = .033), but inclusion of token and the interaction of token and learner status do not improve model fit (both χ2 < 1).

General discussion

The results of these studies replicate previous findings that participants trained in both perception and production demonstrate disrupted perceptual learning as compared with participants trained in perception alone. Specifically, participants trained in perception alone demonstrate robust perceptual learning. Participants trained in both perception and production demonstrate robust learning in production, but less learning in perception. Greater improvement in the modality that was the focus of training is not a particularly surprising finding. However, it is rather surprising that participants in the bimodal perception + production training learn to produce tokens more accurately after training, even though they do not show evidence of perceptual discrimination between these two categories. An additional key finding, further differentiating this work from Baese-Berk and Samuel (2016) is that a third day of training partially alleviates the disruption to perceptual learning after training emphasizing production. However, substantial individual differences in perceptual learning remain, even after a third day of training. Therefore, a key question about this data is why perceptual learning is disrupted when participants produce tokens. An investigation of the results reveals several unlikely sources for the disruption, as well as some possible avenues for future research.

Below, I outline the implications of this work for our understanding of variability during training and how perception and production may be susceptible to different types of learning. I also discuss the implications of these results for our understanding of the relationship between perception and production, specifically during learning, and outline a proposed framework for understanding how the two modalities interact with one another.

Variability in production and learning

The results in the present study revealed no significant correlation between perception and production abilities for the participants trained in perception + production, suggesting that the lack of perceptual learning is not driven only by participants who also do not demonstrate learning in production. This is supported by analyses suggesting that neither participants’ baseline production abilities nor their production abilities at posttest predict their performance on the perception tasks. It is also not the case that participants baseline perception abilities are predictive of performance in perception after training. However, an examination of variability, especially variability on the “new” token seems to at least partially predict performance on the perception task. That is, increased variability in production correlates with a disruption in perceptual learning.

These results suggest that variability influences performance in a different way than absolute accuracy. Before beginning this discussion, it is important to note that variability can occur in a number of different forms: variability in learner performance, acoustic variability in productions from speakers, acoustic variability in the input to listeners, semantic variability, and speaker variability. Although it is clear that each of these types of variability have properties that are quite different from one another, substantial previous work has treated variability as a monolithic construct. Below, I describe some previous work on variability, particularly with regard to nonnative speech learning, and address how the results of the present study fit into this prior work.

Some previous work has suggested that nonnative speech can be more variable than native speech (e.g., Baese-Berk & Morrill, 2015; Wade, Jongman, & Sereno, 2007); however, other work suggests that, under some circumstances, nonnative speech is less variable than native speech (e.g., Morrill, Baese-Berk, & Bradlow, 2016; Vaughn, Baese-Berk, & Idemaru, 2018). This is, perhaps, unsurprising because native speakers produce substantial amounts of variability. Therefore, it may be more appropriate to reframe the notion of “correct” production. Instead, nonnative speakers must learn how to appropriately deploy variability in their productions and interpret variability in their production.

Taking this reframing, these results have interesting implications for how variability affects learning. If a learner must be able to acquire appropriate variability, but variability correlates in this study with a disruption of perceptual learning, how can we reconcile this conflict? In fact, this study is not the first to recognize such a conflict. Substantial prior research has demonstrated two conflicting consequences of variability during training—some in which it hinders learning and some in which it helps learning (e.g., Barcroft & Sommers, 2005; Sommers & Barcroft, 2007). One critical question is under what circumstances variability may have a positive impact and under what circumstances it can be disruptive.

In general, variability is thought to be one of the primary challenges of speech perception. The listener must determine what variability in the acoustic stream is meaningful for phonetic contrasts and what variability is not meaningful for phonetic contrasts.Footnote 6 However, it is also important to understand the range of variability that a particular phonetic feature may map on to. Substantial previous work has suggested that variability in both words and voices can benefit lexical learning and phonetic learning for nonnative speakers (Bradlow et al., 1997; Iverson et al., 2005; Sommers & Barcroft, 2007). Further, it is clear that exposure to multiple speakers can help listeners better understand a novel speaker from either a familiar (Bradlow & Bent, 2008; Sidaras, Alexander, & Nygaard, 2009) or unfamiliar (Baese-Berk, Bradlow, & Wright, 2013) background. However, additional work by Barcroft and Sommers (2005) has demonstrated that some types of variability can hinder the learning of novel words. Specifically, variability in the semantic representation learners are exposed to can disrupt learning, whereas variability in the form tends to enhance it.

In the present study, the variability being considered is always in the form, but it is still possible that it differs from variability in studies discussed above in important ways. Specifically, in previous studies, the experimenter has controlled the amount of variability that a listener is exposed to. This is true even in the distributions provided to learners in the present study. However, what is not controlled in the present study is the variability learners are exposed to in their own productions. It is possible that experimenter-given variability is structured in a particular way such that the variability itself is more stable. However, when listeners are exposed to their own voices, is it possible that this variability differs in its structure. That is, the variability could be even less predictable for the learner than the variability they are exposed to in other studies, where it is more carefully controlled by the experimenter. Further, it is possible that listeners weigh variability in their own productions differently than variability they are exposed to in other productions. When examining adaptation to an unfamiliar speaker or production, Sumner (2011) suggests that the type of variability is likely to affect how learning and adaptation proceed, which is consistent with the results of the present study. Previous work has also suggested that variability may facilitate learning when that variability is tied to indexical features (e.g., Rost & McMurray, 2010); however, when variability is within a contrastive acoustic dimension it can disrupt learning (e.g., Clayards, Tanenhaus, Aslin, & Jacobs, 2008; Holt & Lotto, 2006; Lim & Holt, 2011). Therefore, perhaps the variability we see in the present study (within a contrastive acoustic dimension) could be a source of disrupting perceptual learning precisely because it is within a contrastive acoustic dimension.

It is also possible that the tasks themselves could directly impact variability. Previous work has demonstrated that dual tasks increase variability in speech motor performance (Dromey & Benson, 2003), and the production + perception condition in the present study is an example of a dual task. However, it should be noted that in the case of variability as it is investigated here, all participants are completing the same tasks; therefore, the explanation that dual tasks increase variability cannot entirely account for our results. That is, participants in the perception + production training were differently variable from one another, even though they were completing the same tasks. That said, it is possible that dual tasks have a greater impact on some learners than others, with regard to variability in production.

Different types of learning in perception and production

As discussed above, understanding variability is critically important for understanding category learning. But one must ask whether participants in this experiment are learning categories at all, or whether different types of learning may be emerging in different training groups. The type of discrimination tested here requires learners to develop category representations, as learning was defined as an increase in across-category discrimination from pretest to posttest, but critically not within-category learning. Of course, it is possible that learners could improve at both within-category and across-category distinctions; however, this would suggest that their overall discrimination was improving and would not suggest that they were forming two novel categories in perception. That is, a lack of learning in perception could imply a lack of learning, or it could imply simply a different type of learning. Rather than acquiring a novel category, the learner may be more proficient at fine-grained discrimination. Obviously, this skill is less useful for phonological category learning, which requires a listener to be able to generalize over irrelevant variability to acquire a novel category. However, to imply that this is not learning may be an inappropriate interpretation of the results.

Whether phonologically categorical learning is occurring at all for participants in the present study is, in fact, unclear. Some previous work has directly addressed the issue of what is required during repetition and whether phonological categories are reflected in imitation, especially, for a second language. For example, Hao and de Jong (2016) examine phonological mediation during imitation, and demonstrate that second-language (L2) speakers show little evidence for phonological encoding during this task. This suggests that perhaps imitation or repetition in L2 does not directly require phonological (i.e., categorical) information. Therefore, a learner could improve at repetition in two ways. One is to acquire a novel category and to be able to more accurately select an exemplar for production from that category. However, a learner could also improve at repetition simply by more accurately matching their production to the token they hear. This would not require formation or access of a category. Naming, on the other hand, requires learners to access categorical representations, and improvement of the second type above would not allow for improvement in naming performance.

Similarly, in perception, it is possible that learners are not actually acquiring phonological (or even linguistic) categories, even in the bimodal case. That is, it is possible that a learner is simply acquiring the fact that the distribution of sounds is bimodal, but not necessarily that sounds in each of the two modes are from distinct categories. In fact, this is all that is required for learning to occur in some models (see, e.g., Kronrod, Coppess, & Feldman, 2016). Therefore, it is necessary to further probe linguistic knowledge, especially phonological category knowledge, in order to determine what, exactly, participants are learning. This suggests that a wide range of skills should be tested in order to better understand whether learners truly are acquiring novel categories.Footnote 7