Abstract

Interceptive actions, such as catching, are a fundamental component of many activities and require knowledge of advanced kinematic information and ball flight characteristics to achieve successful performance. Rather than combining these sources of information, recent exploration of interceptive actions has presented them individually. Thus, it is still unclear how the information available from advanced cues influences eye movement behaviour. By integrating advanced visual information with novel ball projection technology, this study examined how the availability of advanced information influenced visual tracking during a one-handed catching task. Four cueing conditions were used: no image (ball flight only with no advanced information), non-informative (ball flight coupled with ball release information), directional (ball flight coupled with directional information), and kinematic (ball flight coupled with video of a throwing action). The findings illustrated no differences in catching performance across conditions; however, tracking of the ball was initiated earlier, lasted longer, and covered a greater proportion of the ball’s trajectory in the directional and kinematic conditions. Significant differences between a directional cue and kinematic cue were not evident, suggesting a simple cue that provided information on the time of release and direction of ball flight was sufficient for successfully anticipating ball release and constraining eye movements. These findings highlight the relationship between advanced information and gaze behaviour during ball flight, and the performance of dynamic interceptive actions. We discuss the implications and potential limitations (e.g. variability between throwing image and ball projection) of the findings in the context of recent research on catching.

Introduction

Little is known about how the information available from advanced cues, or cue specificity, influences visual behaviour during externally timed actions. Interceptive actions, such as hitting, kicking, and catching require coupling different sources of emergent visual information prior to and following ball release (e.g. advanced information from an individual’s kinematics, and/or ball flight characteristics), to successfully coordinate movement (Dicks, Davids, & Button, 2009; Pinder et al., 2011; Shim et al., 2005). Information is specifying when it holds a strong relationship between a source of information from the environment and a future visual event, resulting in accurate movement coordination. Identifying how the information present in the environment influences human behaviour is crucial for improving our understanding of interceptive actions, as not all perceptual cues can facilitate appropriate responses; some may be irrelevant or may even cause a distraction to performers (Greenwood, 2014). Variations in the information available may lead to changes in perception (Panchuk et al., 2013) and, in turn, modification of the individual’s visual behaviours (Müller & Abernethy, 2006; Savelsbergh et al., 2003). While empirical research highlights the coupling between an individual and the environment, there is a lack of understanding of the role informational constraints play in regulating eye tracking behaviour in dynamic interceptive actions, particularly when the type of advanced perceptual information available to a performer varies.

Due to the intricate coupling between perception and action, movement coordination during interception relies upon precise and continuous adjustments in vision, which plays a prospective role in determining the future position of a moving target (Diaz et al., 2013; Land, 2006; Lopez-Moliner, Field, & Wann, 2007). Skilled performers direct their gaze towards an opponent’s kinematic actions prior to release (Dicks, Davids, & Button, 2009). For example, in cricket, the bowler’s arm actions may be used to make a prediction about time of release and trajectory (Land & McLeod, 2000; Land, 2006). After release, visual tracking of the object’s trajectory can provide spatial and temporal information (e.g. time-to-contact) that helps the performer couple their actions to emergent visual information from the object (Caljouw, van der Kamp, & Savelsbergh, 2004; Davids et al., 2006). In this context, precise timing of eye movements is vital for guiding actions; the gaze control system distinguishes the identity and location of the object and directs the hands to it (Land, 2006). While theories of vision for action have indicated that both advanced information, available prior to flight, and information from an object’s trajectory are essential for the successful performance of interceptive actions (van der Kamp et al., 2008), the sources of information that constrain action are unknown.

Previous experimental research has addressed the contribution of advanced kinematic information and ball flight characteristics independently when exploring interceptive actions. For example, studies have investigated anticipation when only advanced kinematic information is available (e.g. Müller, Abernethy, & Farrow, 2006), while others have examined interceptive behaviour when only ball trajectory is provided (e.g. Mazyn et al., 2007). In either case, information available to performers is incomplete and thus limits our understanding of the role that this information plays in eye movement control. When these sources of perceptual information are removed, the natural task is altered by eliminating stimuli that support behaviour and decoupling the link between visual perception and the movement response (Müller & Abernethy, 2006). Research that integrates advanced sources of information and ball flight (e.g. Panchuk et al., 2013; Stone et al., 2014) has provided new insights into the nature of perception-action coupling during interceptive actions. Although advanced information, as a function of performance, has been well documented in previous research, how the information content of advanced cues can facilitate eye tracking behaviour is still not well understood, particularly when determining the position and timing of the to-be-intercepted object for successful interception.

In the absence of any advanced information, there is a delay in tracking onset after a ball is released (Panchuk et al., 2013). However, individuals can use advanced visual information from the kinematics of the individual throwing the ball, to track the ball more effectively, which, in turn, enables more controlled hand movements for successful interception (Stone et al., 2013). Objects that move in a slow, predictable way tend to be tracked for a greater duration. In contrast, fast approaching, less predictable, objects result in a delayed tracking onset (Croft, Button, & Dicks, 2010; Land & McLeod, 2000). When conflicting information sources are presented, individuals do not track the entire trajectory of the approaching object to the point of interception and different gaze behaviours have been observed depending on the predictability of flight of the to-be-intercepted object (Croft, Button, & Dicks, 2010). This relationship between the level of specification provided by advanced cues and performance has, to some extent, been demonstrated previously (Panchuk et al., 2013; Stone et al., 2014). While these studies show a link between advanced information and visual tracking of an object, they have not examined how the information available within the advanced cues explicitly influences tracking behaviour.

In this study, we sought to determine how the type of advanced cue information available to the performer was used to regulate gaze behaviour during interceptive actions. Specifically, this study evaluated catching performance and visual tracking behaviours when participants were presented with video images that contained varying degrees of ball release and trajectory information during a one-handed catching task. In line with previous research that has compared interceptive actions when only ball flight information was available, and a combination of advanced information and ball flight, we expected tracking duration to increase as the timing and direction of ball release became more certain. When presented with video footage that provided all information regarding the forthcoming throw and was representative of an actual throwing action (e.g. ball direction and timing of ball release), an earlier tracking onset and longer gaze duration on the ball would be observed. Conversely, with no visual information present, we anticipated that the participants would begin tracking the ball later and for a shorter duration. We also expected catching success to improve when additional information regarding release time and direction was available.

Materials and methods

Participants

A sample size of 15 participants was recommended for the study based on an a priori power analysis using an effect size of 0.22 (as per Panchuk et al., 2013), with a power of 0.90 and an alpha of 0.05. Fifteen participants (14 males, 1 female; mean age: 24 ± 6.21 years), with normal or corrected-to-normal vision, volunteered for the study. Eleven participants were right-hand dominant and four participants were left-hand dominant. A sport participation questionnaire was completed prior to the experiment; all but one participant had gained some competitive or recreational experience with sports requiring a catching component, including: Australian Rules football, cricket, basketball, soccer goalkeeping, rugby, netball, or slow-pitch. Catching skill was determined by a pre-test, where participants were required to successfully catch at least 16 out of 20 (10 left, 10 right; Catching performance: M = 17.73 ± 1.91) balls projected at 50 km/h from the ball projection machine, using only one hand (Stone et al., 2014). The local Ethical Review Board provided approval for the study, and all participants provided their informed consent prior to participation.

Apparatus

Ball projection machine

A custom-built apparatus combined a tennis ball projection machine (Spinfire Pro 2) integrated with a PC (Fig. 1). The PC controlled the ball release mechanism of the projection machine through an electromechanical piston in the ball feed chute, which permitted synchronisation of video images and ball projection at pre-determined time points during a video sequence (for a detailed description, see Stone et al., 2013 & Panchuk et al., 2013). The ball machine was placed behind a free-standing projection screen (Grandview), with a 15 cm hole cut into its surface, enabling the ball to travel through unobstructed. A video projector (BenQ MP776st) was positioned in front of the screen and adjusted so the point of ball release was aligned with the hole in the screen. The video images and the time to ball release were accurately synchronised to give the appearance of the ball being thrown towards the participant. The vertical trajectory of the release from pilot testing (assuming a ball released straight from the machine equalled 0°) was typically between 2.7° and 6.8°. Launch direction was manipulated by a laser positioned on the ball machine chute to ensure precise alignment to the left and right consistently for each trial. The horizontal location of the ball’s trajectory was scaled to the movement capabilities of each individual. This was determined prior to testing by projecting balls to the participant’s left and right during practice trials. The exact spatial variability (horizontal and vertical) of the projection machine was not determined; pilot testing and previous experimental research (Panchuk et al., 2013; Stone et al., 2013) with the apparatus demonstrated a high degree of spatial consistency in projection location with 97.5 % of balls being catchable. All balls were projected at 50 km/h for this experiment and the ball machine had a high degree of consistency (SD = 0.8 km/h) at this speed. 
At a distance of 7 m (participant location) this translated into a ball flight time of approximately 504 ± 8 ms.
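The flight time quoted above follows from simple kinematics. As a minimal sketch, assuming the ball covers the 7 m at constant speed (drag and the small vertical launch angle are ignored, and the function name is illustrative rather than part of the apparatus software), the nominal time and the spread implied by the 0.8 km/h SD can be checked as follows:

```python
def flight_time_ms(distance_m: float, speed_kmh: float) -> float:
    """Time to cover distance_m at a constant speed_kmh, in milliseconds."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return distance_m / speed_m_per_s * 1000.0   # s -> ms


DISTANCE_M = 7.0   # participant stood 7 m from the screen
SPEED_KMH = 50.0   # nominal projection speed
SPEED_SD = 0.8     # reported SD of projection speed (km/h)

nominal = flight_time_ms(DISTANCE_M, SPEED_KMH)
fastest = flight_time_ms(DISTANCE_M, SPEED_KMH + SPEED_SD)
slowest = flight_time_ms(DISTANCE_M, SPEED_KMH - SPEED_SD)

print(f"nominal: {nominal:.0f} ms")
print(f"one SD faster: {fastest:.0f} ms, one SD slower: {slowest:.0f} ms")
```

Under these assumptions a speed one SD above or below 50 km/h shifts the flight time by roughly 8 ms in either direction, which is consistent with the reported 504 ± 8 ms.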

Stimuli

Four different stimuli were developed for the experiment. The stimuli were selected to provide differing levels of advanced information to the participant. Video clips were created (Fig. 2) and edited using Final Cut Pro (Apple; http://www.apple.com) to produce an identical duration and release point on the projection screen. The stimuli were:

(1) No image: no video image was shown and only ball flight was available. Information regarding ball direction and time-to-release was not available to the participant.

(2) Non-informative: an image of a spot appeared on the screen for 2 s, followed by ball release. This was a non-directional cue that provided information that ball release was imminent.

(3) Directional: an arrow was shown on the screen for 2 s. The participant was provided with ball direction and information that ball release was imminent.

(4) Kinematic: video image of an actor mimicking the throwing action from the participant’s perspective. To create the video stimuli, a camera was placed at the location where participants would stand during the experiment. An actor was then recorded throwing the ball to a 1 m × 1 m target located at the approximate catching location (hand height: 1.2 m) to the right of the mark where the participants would stand. The velocity of the actor’s throw was recorded using a radar gun to ensure the throwing velocity was within the range of the ball projection machine (50.0 ± 0.8 km/h). The video was then mirrored in software (Windows Movie Maker, Microsoft; http://windows.microsoft.com) to imply that the throw was directed to the left of the participant. This ensured that there was no variability in the kinematic actions of the thrower. Ball ejection occurred when the thrower released the ball from their hand, and the throwing speed of the actor matched the ball release speed. The participant was thus provided with kinematic information of the full throwing action to determine the ball’s direction and timing of release.

The ball speed and trajectory were deliberately controlled as the goal was to determine the influence of advanced information on catching and tracking performance, rather than other factors. Previous research that has used a variety of ball speeds (Panchuk et al., 2013; Stone et al., 2014, 2015) has demonstrated that coordination of a catching action and gaze behaviour are influenced by the presence (or absence) of advanced information more so than ball speed. Therefore, a speed of 50 km/h was chosen to allow us to isolate the effects of various advanced information on tracking behaviour and to provide direct comparisons with this previous research.

Eye movement recording

Each participant wore a mobile eye tracking device (Mobile Eye, Applied Science Laboratories, Bedford, MA) during the experimental task. The Mobile Eye is a head-mounted eye tracking system that uses corneal reflection to measure monocular eye-line-of-gaze in the field of view, with a spatial accuracy of 0.5° and a precision of 0.1° of visual angle (as reported by the manufacturer). The glasses are lightweight and carry two high-resolution cameras that record the scene image and the participant’s eye at 30 Hz. Mounted on safety glasses, the system allows the participant to move freely within the environment, without restricting peripheral vision.

Procedure

After providing informed consent, an overview of the experiment and apparatus was given to the participant. Twenty practice trials (10 left, 10 right), with a blank screen, at projection speeds of 50 km/h were performed to allow the participant to become accustomed to the apparatus and ensure the equipment was functioning correctly. Once the participant felt comfortable, they performed a 20-trial assessment of catching skill without any video stimuli. Following the assessment, the Mobile Eye was fitted and calibrated to five points on the projection screen, and calibration was continually monitored throughout the testing. The experiment consisted of 80 randomised trials divided into 20 trials for each condition, 10 each for the left and right side. For each trial, the participant stood 7 m away from the screen, with arms at their side, and attempted to catch the ball using the hand to which the ball was directed (only one-handed catches were permitted). The instructions given to the participants were to attempt to catch the ball with one hand; the participants were not informed of the ball’s direction during the trials, and no other instructions were provided regarding gaze or movement behaviour. When the participant was ready, a ball was placed into the ball machine, and following a random interval of 0–2 s, the video image was displayed (according to the experimental condition) and the ball was projected at 50 km/h. The outcome of each trial was recorded on a scoring sheet by two researchers to ensure reliability. A 2-min break was given after each block of 20 trials in order to prevent fatigue. No participant reported any discomfort or interference with their catching action when wearing the equipment. Participants were instructed to look at the bottom of the screen (away from the projection hole) following each trial to eliminate any directional advantage that they might perceive from the movement of the ball machine. 
Each participant also wore headphones with background white noise to remove any acoustic information from the ball machine that may be used to time their actions. Each trial was monitored for quality of ball projection. Trials were removed if the ball was deemed to be uncatchable (e.g. the ball was not projected to a comfortable catching position or was out of reach) or if the participant had to move their feet to catch the ball.
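The trial structure described above (four conditions, two sides, ten trials per combination, and a random 0–2 s interval before each stimulus) can be sketched in a few lines. This is only an illustration: it assumes the 80 trials were fully interleaved and that the interval was drawn uniformly, neither of which is stated explicitly, and the function and field names are hypothetical.

```python
import random
from collections import Counter

CONDITIONS = ["no image", "non-informative", "directional", "kinematic"]
SIDES = ["left", "right"]
TRIALS_PER_CELL = 10  # 4 conditions x 2 sides x 10 = 80 trials


def build_schedule(seed=None):
    """Return a shuffled list of trials with a random pre-stimulus delay."""
    rng = random.Random(seed)
    trials = [
        {"condition": condition, "side": side}
        for condition in CONDITIONS
        for side in SIDES
        for _ in range(TRIALS_PER_CELL)
    ]
    rng.shuffle(trials)  # assumption: full interleaving of all 80 trials
    for trial in trials:
        # assumption: delay drawn uniformly from the stated 0-2 s range
        trial["pre_stimulus_delay_s"] = rng.uniform(0.0, 2.0)
    return trials


schedule = build_schedule(seed=1)
cell_counts = Counter((t["condition"], t["side"]) for t in schedule)
print(len(schedule), "trials;", len(cell_counts), "condition-by-side cells")
```

Fixing the seed makes a given participant's order reproducible, while a different seed per participant would give each a different order.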

Data processing and analysis

Of 1200 trials captured across all participants, only 1 trial was removed from analysis due to a technical fault. Visual tracking of the ball’s flight was coded, frame-by-frame, using QuickTime Player (Apple). Tracking behaviour was coded when the gaze cursor remained within 3° of visual angle (which corresponded to the size of the gaze cursor at 7 m and the typically reported size of the fovea; Carpenter, 1988) on the moving ball for a minimum of three frames (100 ms; Vickers, 2007). Absolute tracking latency was measured as the duration between the time of ball release and the onset of ball tracking (ms), and absolute tracking duration was the total time (ms) spent tracking the ball’s flight. To account for inherent variability of ball flight from the projection machine (0.8 km/h), absolute times were also converted to a percentage of total ball flight time to provide relative tracking latency and relative tracking duration and both values are reported. Participant catching success was calculated as a percentage of total balls caught relative to the total number of trials in each condition. A subsample of data was coded by two independent coders to establish reliability of the processing methods; interclass correlations greater than 0.90 were found for all variables, indicating strong agreement between coders.
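The coding rule above was applied manually, frame by frame. Purely as an illustration of how the same criteria translate into tracking latency and duration, the sketch below assumes a hypothetical per-frame list of angular distances (in degrees) between the gaze cursor and the ball, starting at the frame of ball release. The data format and function name are assumptions, not part of the original analysis.

```python
FRAME_MS = 1000.0 / 30.0   # eye tracker sampled at 30 Hz
THRESHOLD_DEG = 3.0        # gaze must be within 3 deg of the ball
MIN_FRAMES = 3             # at least three consecutive frames (100 ms)


def tracking_metrics(offsets_deg, flight_time_ms):
    """Latency and duration of ball tracking from per-frame gaze offsets."""
    runs = []      # (first_frame, end_frame_exclusive) of each tracking bout
    start = None
    # A sentinel value closes any bout still open at the last frame.
    for i, offset in enumerate(list(offsets_deg) + [float("inf")]):
        on_ball = offset <= THRESHOLD_DEG
        if on_ball and start is None:
            start = i
        elif not on_ball and start is not None:
            if i - start >= MIN_FRAMES:
                runs.append((start, i))
            start = None
    if not runs:
        return None  # no tracking bout met the criteria
    latency_ms = runs[0][0] * FRAME_MS
    duration_ms = sum(end - begin for begin, end in runs) * FRAME_MS
    return {
        "latency_ms": latency_ms,
        "duration_ms": duration_ms,
        "relative_latency_pct": 100.0 * latency_ms / flight_time_ms,
        "relative_duration_pct": 100.0 * duration_ms / flight_time_ms,
    }


# Made-up offsets: frames 3-7 qualify; frames 9-10 are too short to count.
offsets = [10, 8, 6, 2, 1, 1, 2, 1, 9, 2, 2, 5, 7, 9, 12]
example = tracking_metrics(offsets, flight_time_ms=504.0)
print(example)
```

Dividing by the flight time of each trial, rather than a fixed 504 ms, is what makes the relative measures robust to the small trial-to-trial variation in ball speed.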

Catching performance was analysed using a one-way (Condition: no image, non-informative, directional, kinematic) within-subjects, repeated measures ANOVA. Paired samples t-tests were used for follow-up analysis of significant differences and a Bonferroni correction was applied. A nominal P-value of .05 was used for initial tests and an adjusted P-value of .008 was used for follow-up tests. Effect sizes were reported using partial eta squared (ηp²). Each eye tracking variable (absolute and relative tracking latency and duration) was analysed separately using linear mixed models (LMM) with condition and outcome as fixed factors, trial number as a repeated measure, and participant as a random factor. The fit of the model was adjusted by inclusion of random intercepts and slopes, changing the variance structure, and removing non-significant effects. Goodness of fit between models was compared using the Akaike information criterion. Follow-up comparison tests were carried out on the estimated marginal means with a Bonferroni correction applied. Effect sizes were reported as 95 % confidence intervals (95 % CI).
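The adjusted threshold of .008 is what a Bonferroni correction gives when the nominal .05 is divided across all pairwise comparisons among four conditions. A short sketch of that arithmetic (the variable names are illustrative):

```python
from itertools import combinations

CONDITIONS = ["no image", "non-informative", "directional", "kinematic"]
NOMINAL_ALPHA = 0.05

# Every unordered pair of conditions is one follow-up comparison.
pairs = list(combinations(CONDITIONS, 2))
adjusted_alpha = NOMINAL_ALPHA / len(pairs)

print(f"{len(pairs)} pairwise comparisons")
print(f"Bonferroni-adjusted alpha: {adjusted_alpha:.4f}")
```

With four conditions there are six pairs, so the adjusted value is .05 / 6, or about .0083, which rounds to the .008 quoted above.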

Results

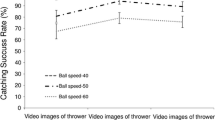

Catching performance

Analysis of catching performance revealed no significant differences between the conditions, F(3, 42) = .303, P = .823, ηp² = .021. Catching success was 79 % (SD = 13.8) in the no image condition, 79 % (SD = 16.7) in the non-informative condition, 81 % (SD = 11.3) in the directional condition, and 79 % (SD = 16.6) in the kinematic condition. Catching performance in the experimental task was consistent with the skill level required for participant inclusion in the study (80 %).
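As a consistency check, the reported effect size can be recovered from the F statistic and its degrees of freedom using the standard identity for partial eta squared. The worked values below use only the numbers reported above:

```latex
\eta_p^2
  = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
  = \frac{0.303 \times 3}{0.303 \times 3 + 42}
  = \frac{0.909}{42.909}
  \approx 0.021
```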

Absolute tracking latency

For absolute tracking latency, there was a significant effect of condition (P < .001). Follow-up comparisons revealed that tracking latency occurred significantly later in the no image condition compared to the non-informative [P = .018; 95 % CI (1.87, 31.63 ms)], directional [P < .001; 95 % CI (15.78, 46.43 ms)], and kinematic [P = .001; 95 % CI (6.40, 36.39 ms)] conditions. Tracking latency was also different for catches and misses [Table 1; P < .001; 95 % CI (6.90, 23.53 ms)]. The interaction of condition and outcome was not significant (P = .202).

Relative tracking latency

For relative tracking latency, there was a significant effect of condition (P < .001). Follow-up comparisons revealed that tracking latency occurred significantly later in the no image condition compared to the non-informative [P = .001; 95 % CI (0.92, 5.17 %)], directional [P < .001; 95 % CI (3.49, 8.01 %)], and kinematic [P < .001; 95 % CI (3.34, 7.68 %)] conditions (see Fig. 3). Relative tracking latency also occurred later in the non-informative condition compared to the directional [P = .008; 95 % CI (0.49, 4.92 %)] and kinematic [P = .014; 95 % CI (0.33, 4.61 %)] conditions. Relative tracking latency was also different for catches and misses [Table 1, Fig. 4; P = .001; 95 % CI (0.80, 3.23 %)]. The interaction of condition and outcome was not significant (P = .490).

Absolute tracking duration

For absolute tracking duration, there was a significant effect of condition (P < .001). Follow-up comparisons revealed that tracking duration was significantly shorter in the no image condition compared to the non-informative [P = .012; 95 % CI (−30.46, −2.45 ms)], directional [P < .001; 95 % CI (−49.88, −21.04 ms)], and kinematic [P < .001; 95 % CI (−50.57, −22.35 ms)] conditions. Absolute tracking duration was also shorter in the non-informative condition compared to the directional [P = .003; 95 % CI (−33.39, −4.63 ms)] and kinematic [P = .001; 95 % CI (−34.07, −5.94 ms)] conditions. Absolute tracking duration was also shorter on misses compared to catches [Table 1; P < .001; 95 % CI (−28.23, −12.58 ms)]. The interaction of condition and outcome was not significant (P = .238).

Relative tracking duration

For relative tracking duration, there was a significant effect of condition (P < .001). Follow-up comparisons revealed that relative tracking duration was significantly shorter in the no image condition compared to the non-informative [P = .002; 95 % CI (−5.51, −0.85 %)], directional [P < .001; 95 % CI (−8.92, −3.92 %)], and kinematic [P < .001; 95 % CI (−9.76, −4.93 %)] conditions (see Fig. 5). Relative tracking duration was also shorter in the non-informative condition compared to the directional [P = .003; 95 % CI (−5.69, −0.77 %)] and kinematic [P < .001; 95 % CI (−6.50, −1.83 %)] conditions. Relative tracking duration was also shorter on misses compared to catches [Table 1, Fig. 6; P < .001; 95 % CI (−4.20, −1.53 %)]. The interaction of condition and outcome was not significant (P = .197).

Discussion

While we know that the integration of advanced information and ball flight characteristics is essential for accurate coordination of interceptive actions (e.g. Panchuk et al., 2013; Stone et al., 2014), questions remain about how the information contained within advanced cues supports successful interception. By integrating advanced visual information with ball projection technology, this study examined how varying the information contained within cues prior to ball flight affected performance and visual tracking behaviour during a one-handed catching task. As predicted, tracking began earlier when informational cues were presented. When no advanced information was presented (i.e. no image) or the advanced information lacked directional cues (i.e. non-informative), participants began tracking the ball relatively later. Tracking duration on the ball also differed between conditions, with the participants tracking the ball for a longer period when release time and direction were available (i.e. directional and kinematic), thus supporting our hypothesis. Specifically, tracking duration (both relative and absolute) differed between the no image/non-informative conditions and the directional/kinematic conditions. Contrary to our hypotheses, catching performance did not vary between conditions. There were, however, differences in tracking behaviour between catches and misses; successful catches were characterised by earlier tracking after ball release and longer tracking durations.

The findings illustrated significant changes in visual tracking behaviour when the information provided to participants varied between the four conditions. In general, when timing and directional information was available, visual tracking behaviours started earlier after release and lasted longer. The findings are in accordance with previous research that has highlighted the importance of coupling perception and action during performance of interceptive actions (Panchuk et al., 2013; Stone et al., 2014) and support the argument that advanced visual information influences performance of interceptive timing tasks (Pinder et al., 2011; van der Kamp et al., 2008). These data also provide specific evidence that gaze behaviours were strongly influenced by the type of advanced visual information provided; when the advanced cue did not contain directional information, tracking performance was negatively impacted and later/shorter tracking was associated with poor catching success. Without appropriate information to constrain their actions, the participants were unable to anticipate ball release and directional information prior to ball projection; this led to a reactive style of tracking behaviour as they were required to wait until ball flight information became available to determine the direction and flight of the ball. While this did not influence catching success between conditions (i.e. number of catches), across conditions, successful performances (i.e. catches) were characterised by earlier and longer tracking. This suggests that, at this particular ball speed, participants were able to use information emerging from ball flight to efficiently coordinate their actions, but were more likely to be successful when they could effectively coordinate their tracking behaviour with ball flight. The importance of advanced information to support tracking behaviour on the ball may become more relevant at higher speeds than those used here.

Based on the analyses of absolute and relative tracking latency, the inclusion of directional information (e.g. an arrow or kinematic information) resulted in a quicker recognition of initial ball release and onset of ball tracking, compared to a later onset of ball tracking when limited information was available (i.e. no image and non-informative conditions). This supports the contention that valid advanced information, providing the timing and direction of ball release, supports visual tracking behaviour. In the absence of directional information to assist with guiding their actions, the participants were forced to rely only on ball flight information to constrain their movements, resulting in the emergence of a more reactive style of visual tracking. However, once the additional perceptual information provided by the directional cue was available, along with ball projection, participants were able to visually track the ball more effectively, as they were exposed to constraints that allowed them to use this information to anticipate ball release. It is worth noting that, while there was a statistically significant difference in latency between the directional and no image condition, the difference in absolute (2.9 ms) and relative (0.2 %) latency between the no image and kinematic conditions approached significance (P = .05 and P = .02, respectively), and it is highly likely that there is a meaningful distinction between the two. The use of a single trajectory to project the ball to the left or the right limits the generalisability of these findings. It would be interesting to see how a directional cue compares to kinematic cues when multiple potential trajectories are used. For example, would tracking in the kinematic and directional conditions be equivalent if balls were projected high, low, and further to the right/left? 
Based on seminal research in the priming of actions (Rosenbaum, 1980), we expect that differences would begin to emerge between directional and kinematic cues as predictability of the trajectory changed.

One of the more interesting findings from this experiment was that, although providing more specific information enhanced visual tracking, a directional cue without any kinematic information was essentially equivalent to kinematics in terms of its influence on tracking ability. This could have an impact on the amount of advanced information required to elicit natural tracking behaviour; an arrow and a warning of when an event will occur may be a sufficient cue for an individual to initiate an interceptive action. This may be explained by the theoretical framework proposed by van der Kamp et al. (2008), who suggested the use of advanced information during the performance of an interceptive action is likely to depend on the level of anticipation needed. In tasks that require a high degree of anticipation, like catching, the advanced information available may act to constrain gaze behaviours and the subsequent performance of an interceptive action. Under these particular task constraints, the image of the arrow provided the participants with as much perceptual information for constraining their gaze behaviour as the image of the actor. Therefore, it appears that if advanced cues provide information regarding release time and direction, there are potential visual tracking benefits (Pinder et al., 2011). At some point, however, it is possible that additional contextual information (e.g. seeing the individual throwing the ball) may actually become redundant for constraining eye movements, provided there is perceptual information available that specifies direction and time of release. As noted earlier though, the predictability of ball trajectory and speed could also influence the generalisability of this statement and additional research, with more variety in ball projection, is necessary to determine under what conditions this is the case.

While our results suggest that a directional cue may be sufficient for constraining gaze behaviour, in naturalistic tasks such as cricket or baseball where a variety of trajectories are used, this may not be the case. One could argue that training with directional cues (e.g. arrows as opposed to a thrower) may be equivalent to using a live thrower; however, the transferability of this training could be questioned due to the use of non-specific information. Practically, though, it may be beneficial to expose individuals to predictable and consistent ball trajectories to promote development of tracking capability and coordinating tracking with the actions of a thrower. For example, using a consistent throwing trajectory and speed would allow a developing batter to learn the relationship between the thrower’s actions and their tracking behaviour. Once the relationship has been established, the variety of trajectories and speeds could then be increased to promote more adaptable tracking behaviours. It is important to note that, other than recording the outcome, movement patterns were not measured in this study. Pinder et al. (2011) have shown that movement kinematics were also influenced by the availability of advanced information. Although the present outcomes indicated that an arrow was equivalent to a thrower for constraining eye movements, it is not yet known if this would be a sufficient source of information for constraining movement coordination in other, more applied contexts.

There are some limitations to the current findings and additional questions for investigation. In the present study, balls were projected at the same speed for all trials, which casts doubt on whether the results would be consistent at other ball speeds. Previous research (e.g. Stone et al., 2015) has shown that tracking duration is linked to ball speed, suggesting that differences in eye movement behaviours are more pronounced when projection speeds are more variable and thus may also influence catching success. An interesting issue to address in future research is the extent to which changes in projectile speed and trajectory affect tracking ability. The contextual or kinematic information available in the display also raises questions about tracking behaviour. The throwing action used in the present study was consistent across all trials, and we were unable to match the inherent variability in the ball machine and the throwing action exactly from trial to trial. There is evidence, however, to suggest that catching performance and coordination are maintained and individuals are largely unaware of any manipulation when thrower kinematics and ball trajectory information are de-coupled (Panchuk et al., 2013; Stone et al., 2015). It would be worth investigating how much de-coupling can be tolerated before performance, coordination, and awareness become affected. It is possible that actions which exaggerate aspects of the throw or are deceptive in nature may lead to different visual tracking behaviour and catching performance. Research using point-light displays to examine action observation has shown that identifying the relative motion of joints is sufficient to distinguish a movement (Calvo-Merino et al., 2010). Future research should address the idea that some relative information may be redundant, and the implementation of a point-light display may be a way to determine how contextual information associated with the throwing action is used to guide tracking behaviour.
Coordination patterns of the catching movement were not measured in this study; therefore, the influence of varying levels of advanced information available in the cues on movement organisation was not determined. It is possible that movement patterns may have differed among the conditions. Investigating the nature of this relationship could establish how movement patterns change when responding to varying sources of advanced information and the link between perceptual behaviour and action control.

These data have implications for the design of future studies exploring interceptive actions. Most previous research exploring interceptive actions has focused on perceptual anticipation in the absence of ball flight, while other studies have examined ball flight exclusively. Studies involving occlusion and simulation-based paradigms fail to capture the dynamic nature of advanced kinematic information when they exclude the movement of the interceptive action, which is essential in a natural performance environment. The removal of this information alters the constraints available to coordinate an individual’s visual behaviour, and calls into question the degree to which such paradigms capture the influence of perception-action coupling and advanced information on interceptive actions. Along with the studies by Panchuk et al. (2013) and Stone et al. (2014), the present study provides further evidence that both sources of information are essential to obtain a true representation of human performance.

Conclusions

In summary, this study combined advanced visual information and ball flight to examine visual tracking behaviour using cues that varied in the amount of advanced information available during a one-handed catching task. Novel ball projection technology was used to demonstrate that, when informative visual cues providing time of release and direction were available, tracking was initiated earlier and maintained over a greater proportion of the ball’s flight. The findings suggest that a simple informational cue, such as an arrow, can provide the information necessary to successfully constrain eye movements, and they highlight the importance of the relationship between advanced information and visual tracking during the performance of a dynamic interceptive action.

References

Caljouw, S. R., van der Kamp, J., & Savelsbergh, G. J. P. (2004). Catching optical information for the regulation of timing. Experimental Brain Research, 155, 427–438. doi:10.1007/s00221-003-1739-3

Calvo-Merino, B., Ehrenberg, S., Leung, D., & Haggard, P. (2010). Experts see it all: Configural effects in action observation. Psychological Research, 74, 400–406. doi:10.1007/s00426-009-0262-y

Carpenter, R. H. S. (1988). Movements of the eyes. London: Pion.

Croft, L., Button, C., & Dicks, M. (2010). Visual strategies of sub-elite cricket batsmen in response to different ball velocities. Human Movement Science, 29(5), 751–763. doi:10.1016/j.humov.2009.10.004

Davids, K., Button, C., Araújo, D., Renshaw, I., & Hristovski, R. (2006). Movement models from sports provide representative task constraints for studying adaptive behavior in human movement systems. Adaptive Behavior, 14(1), 73–95. doi:10.1177/105971230601400103

Diaz, G., Cooper, J., Rothkopf, C., & Hayhoe, M. (2013). Saccades to future ball location reveal memory-based prediction in a virtual-reality interception task. Journal of Vision, 13(1), 1–14. doi:10.1167/13.1.20

Dicks, M., Davids, K., & Button, C. (2009). Representative task designs for the study of perception and action in sport. International Journal of Sport Psychology, 40, 506–524.

Greenwood, D. A. (2014). Informational constraints on performance of dynamic interceptive actions. PhD Thesis, Queensland University of Technology, Brisbane.

Land, M. F., & McLeod, P. (2000). From eye movements to actions: How batsmen hit the ball. Nature Neuroscience, 3, 1340–1345. doi:10.1038/81887

Land, M. F. (2006). Eye movements and the control of actions in everyday life. Progress in Retinal and Eye Research, 25, 296–324. doi:10.1016/j.preteyeres.2006.01.002

Lopez-Moliner, J., Field, D. T., & Wann, J. P. (2007). Interceptive timing: Prior knowledge matters. Journal of Vision, 7(13), 1–8. doi:10.1167/7.13.11

Mazyn, L. I. N., Savelsbergh, G. J. P., Montagne, G., & Lenoir, M. (2007). Planning and on-line control of catching as a function of perceptual-motor constraints. Acta Psychologica, 126(1), 59–78. doi:10.1016/j.actpsy.2006.10.001

Müller, S., & Abernethy, B. (2006). Batting with occluded vision: An in situ examination of the information pick-up and interceptive skills of high- and low-skilled cricket batsmen. Journal of Science and Medicine in Sport, 9, 446–458. doi:10.1016/j.jsams.2006.03.029

Müller, S., Abernethy, B., & Farrow, D. (2006). How do world-class cricket batsmen anticipate a bowler's intention? Quarterly Journal of Experimental Psychology, 59(12), 2162–2186. doi:10.1080/02643290600576595

Panchuk, D., Davids, K., Sakajian, A., MacMahon, C., & Parrington, L. (2013). Did you see that? Dissociating advanced visual information and ball flight constrains perception and action processes during one-handed catching. Acta Psychologica, 142, 394–401. doi:10.1016/j.actpsy.2013.01.014

Pinder, R. A., Davids, K., Renshaw, I., & Araújo, D. (2011). Manipulating informational constraints shapes movement reorganization in interceptive actions. Attention, Perception, & Psychophysics, 73, 1242–1254. doi:10.3758/s13414-011-0102-1

Rosenbaum, D. A. (1980). Human movement initiation: Specification of arm, direction, and extent. Journal of Experimental Psychology: General, 109(4), 444–474.

Savelsbergh, G., Rosengren, K., van der Kamp, J., & Verheul, M. (2003). Catching action development. Development of movement co-ordination in children: Applications in the fields of ergonomics, health sciences, and sport (pp. 191–212). New York: Routledge.

Shim, J., Carlton, L. G., Chow, J. W., & Chae, W. (2005). The use of anticipatory visual cues by highly skilled tennis players. Journal of Motor Behavior, 37(2), 164–175. doi:10.3200/JMBR.37.2.164-175

Stone, J. A., North, J. S., Panchuk, D., Davids, K., & Maynard, I. W. (2015). (De)synchronisation of advanced visual information and ball flight characteristics constrains emergent information-movement couplings during one-handed catching. Experimental Brain Research, 232(2), 449–458. doi:10.1007/s00221-014-4126-3

Stone, J. A., Panchuk, D., Davids, K., North, J. S., Fairweather, I., & Maynard, I. W. (2013). An integrated ball projection technology for the study of dynamic interceptive actions. Behavior Research Methods, 46(4), 984–991. doi:10.3758/s13428-013-0429-8

Stone, J. A., Panchuk, D., Davids, K., North, J. S., & Maynard, I. W. (2014). Integrating advanced visual information with ball projection technology constrains dynamic interceptive actions. Procedia Engineering, 72, 156–161. doi:10.1016/j.proeng.2014.06.027

van der Kamp, J., Rivas, F., van Doorn, H., & Savelsbergh, G. (2008). Ventral and dorsal system contributions to visual anticipation in fast ball sports. International Journal of Sport Psychology, 39, 100–130.

Vickers, J. N. (2007). Perception, cognition, and decision training: The quiet eye in action. Champaign: Human Kinetics.

Additional information

Data collection was carried out at the College of Sport and Exercise Science, Victoria University.

Cite this article

Akl, J., Panchuk, D. Cue informativeness constrains visual tracking during an interceptive timing task. Atten Percept Psychophys 78, 1115–1124 (2016). https://doi.org/10.3758/s13414-016-1080-0

DOI: https://doi.org/10.3758/s13414-016-1080-0