Abstract

There is an ongoing debate whether or not multisensory interactions require awareness of the sensory signals. Static visual and tactile stimuli have been shown to influence each other even in the absence of visual awareness. However, it is unclear if this finding generalizes to dynamic contexts. In the present study, we presented visual and tactile motion stimuli and induced fluctuations of visual awareness by means of binocular rivalry: two gratings which drifted in opposite directions were displayed, one to each eye. One visual motion stimulus dominated and reached awareness while the other visual stimulus was suppressed from awareness. Tactile motion stimuli were presented at random time points during the visual stimulation. The motion direction of a tactile stimulus always matched the direction of one of the concurrently presented visual stimuli. The visual gratings were differently tinted, and participants reported the color of the currently seen stimulus. Tactile motion delayed perceptual switches that ended dominance periods of congruently moving visual stimuli compared to switches during visual-only stimulation. In addition, tactile motion fostered the return to dominance of suppressed, congruently moving visual stimuli, but only if the tactile motion started at a late stage of the ongoing visual suppression period. At later stages, perceptual suppression is typically decreasing. These results suggest that visual awareness facilitates but does not gate multisensory interactions between visual and tactile motion signals.


Introduction

Visual and tactile motion perception are closely intertwined. For example, the perceived direction and speed of tactile motion can be biased towards the direction and speed of synchronously presented visual motion (Bensmaïa, Killebrew, & Craig, 2006; Craig, 2006; Lyons, Sanabria, Vatakis, & Spence, 2006). The perceived direction of visual motion, in turn, can be biased by concurrently presented tactile motion, if the visual motion stimulus is ambiguous (Blake, Sobel, & James, 2004; Butz, Thomaschke, Linhardt, & Herbort, 2010; Pei et al., 2013). Furthermore, the simultaneous presentation of congruently moving visual and tactile stimuli leads to lower direction (Ushioda & Wada, 2007) and speed discrimination thresholds (Gori, Mazzilli, Sandini, & Burr, 2011; Gori et al., 2013) compared to separate presentations of the same stimuli. Even motion after-effects transfer between vision and touch (Konkle, Wang, Hayward, & Moore, 2009). In sum, there is ample evidence for interactions between visual and tactile motion signals during perception.

Interactions between visual and tactile motion signals might occur in unisensory or in multisensory brain areas. For one, visual and tactile motion signals converge at the human analogue of the ventral intraparietal cortex (VIP, Bremmer et al., 2001), an area showing neural signatures of interactions between visual and tactile signals in monkeys (Avillac, Hamed, & Duhamel, 2007). However, visual as well as tactile motion stimuli additionally activate the extrastriate hMT+/V5-complex (Blake et al., 2004; Hagen et al., 2002; Summers, Francis, Bowtell, McGlone, & Clemence, 2009; but see Jiang, Beauchamp, & Fine, 2015), a region traditionally associated with visual information processing only. Thus, interactions between visual and tactile motion stimuli possibly arise already in classical “unisensory” areas. Consistent with interactions between visual and tactile motion signals in unisensory areas, directionally congruent visual-tactile motion stimuli induce synchronized gamma-band oscillations over primary and secondary visual as well as somatosensory regions (Krebber, Harwood, Spitzer, Keil, & Senkowski, 2015). However, on its own this evidence is not conclusive: activity in unisensory areas could either reflect early interactions of cross-modal signals or arise due to feedback from later processing stages. Likewise, activity in parietal association cortex could either reflect a late locus of visual-tactile interactions or be driven by interactions at earlier processing stages that project into the respective area. The timing of direction-specific interactions between visual and tactile motion stimuli could differentiate these options; however, interactions between visual and tactile motion stimuli have not been investigated with time-sensitive methods.

To test the role of awareness in interactions between visual and tactile motion signals, we manipulated visual awareness while keeping the physical stimulation constant using the binocular rivalry paradigm. In binocular rivalry, two different images are presented to corresponding retinal locations of both eyes. Visual awareness typically alternates between the two images over time (for reviews on binocular rivalry see Alais, 2012; Blake & Logothetis, 2002). Only one image is perceived at a time (the dominant image), the other image is suppressed from awareness. Image-features, such as image contrast, can influence the duration of the dominance and suppression periods of an image (Levelt, 1965). Crucially, if an experimental manipulation targeted at a currently suppressed image affects its perception, this effect occurs in the absence of visual awareness.

Binocular rivalry impedes processing of a suppressed visual stimulus at multiple stages. Thereby, the depth of suppression is assumed to increase along the processing pathway (Nguyen, Freeman, & Alais, 2003; Nguyen, Freeman, & Wenderoth, 2001). Consequently, it needs to be clarified that a suppressed visual motion stimulus is processed to an extent that allows for interactions with motion stimuli presented in other modalities. Local motion cues seem to be fully processed in the absence of awareness, because they induce motion after-effects even when being perceptually suppressed during the adaptation phase (Lehmkuhle & Fox, 1975). Similarly, after-effects to spiral motion occur even if the stimulus was suppressed during adaptation – although these effects are reduced in magnitude compared to adaptation with motion stimuli participants were aware of (Kaunitz, Fracasso, & Melcher, 2011). Thus, translational and more complex types of visual motion, associated with processing within the primary visual cortex and the V5/MT+-complex (Adelson & Movshon, 1982; Movshon & Newsome, 1996), seem to be processed in the absence of visual awareness.

Multisensory interactions in the absence of visual awareness exist for several stimulus features (reviewed in Faivre, Arzi, Lunghi, & Salomon, 2017). Most relevant to our study, visual and tactile texture information interact in the absence of visual awareness: Touching a plastic grating stimulus brings a suppressed visual grating stimulus back into awareness more quickly if the tactile and the suppressed visual stimulus match in orientation and frequency (Lunghi & Alais, 2013; Lunghi, Binda, & Morrone, 2010). Further, the sensitivity to visual probe stimuli, which are superimposed on a suppressed congruent visual stimulus, increases if congruent haptic stimuli are presented (Lunghi & Alais, 2015). Thus, static visual and tactile stimuli interact in the absence of visual awareness.

However, whether these findings generalize to a dynamic context remains unclear. Under visual awareness, the currently perceived rotation of a visually presented bistable globe remained dominant for longer time periods if participants at the same time touched a globe rotating in the same direction (Blake et al., 2004). However, tactile rotation in the other, unseen direction shortened the duration of the suppression period for only three out of five participants. Moreover, this effect of congruent tactile motion on suppressed visual motion was weaker than the corresponding effect on dominant visual motion. Therefore, it remains open whether dynamic visual stimuli need to reach awareness before they can be subject to modulation from other sensory modalities. Notably, studies in which awareness appeared to be necessary for multisensory interactions involved dynamic visual-auditory stimulus pairs matched with respect to melody, rhythm, or motion (Conrad, Bartels, Kleiner, & Noppeney, 2010; Kang & Blake, 2005; Lee, Blake, Kim, & Kim, 2015; Moors, Huygelier, Wagemans, de-Wit, & van Ee, 2015; but see Lunghi, Morrone, & Alais, 2014), suggesting that the role of awareness for cross-modal interactions differs between static and dynamic stimuli.

The present study investigated the role of visual awareness for interactions between tactile and visual translational motion signals. To this end, we dichoptically presented visual motion stimuli under binocular motion rivalry and intermittently added periods of tactile motion stimulation. We predicted that interactions between visual and tactile motion signals bias the temporal dynamics of rivalry in favor of the congruently moving visual stimulus. We expected visual-tactile interactions under awareness to be reflected in an extension of the time until perception switches away from a congruently moving dominant visual stimulus compared to a unimodal baseline. If visual-tactile motion signals interact in the absence of awareness, perception is expected to switch faster towards a congruently moving suppressed visual stimulus. Thus, binocular rivalry offers the unique advantage of simultaneously testing for multisensory interactions in the presence and in the absence of visual awareness.

The depth of binocular rivalry suppression changes over the course of an ongoing perceptual period: The suppression of a signal is stronger at the beginning than towards the end of a suppression period (Alais, Cass, O’Shea, & Blake, 2010). Therefore, we presented the tactile stimulus at varying points within the time course of binocular rivalry, to test for graded effects of visual awareness on visual-tactile interactions.

Methods

Participants

Twenty-one students from the University of Hamburg took part in the experiment (age: M = 23 years, SD = 3.5, 16 female). The data for one participant were excluded because of poor visual acuity (< 0.7 decimal acuity) and the data for another one were excluded because of a technical problem during data acquisition. All remaining 19 participants had normal or corrected-to-normal vision as checked by a Snellen visual acuity screening (Freiburger Visual Acuity Test, Bach, 2006), as well as normal color- and stereovision according to self-report. All participants reported being free of tactile impairments and were naïve with respect to the purpose of the experiment. They gave written informed consent prior to the experiment and received course credits in return for their participation. The study was conducted in accordance with the Declaration of Helsinki and was approved by the local ethics committee.

Materials

Visual stimuli

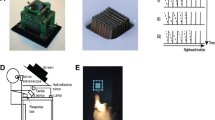

Visual stimuli (Fig. 1a) consisted of two horizontally oriented sinusoidal gratings (size: 2.8 × 2.8° or 2.3 × 2.3 cm; spatial frequency: 3.4 cycles/° or 3 cycles/cm). The gratings were framed by a Gaussian envelope (SD = 0.75°) and presented on a uniformly gray background (40 cd/m2). The stimuli were viewed dichoptically with an effective viewing distance of about 47 cm. The distance between the gratings was adjusted to fit participants’ interocular distance. The phase of each grating was shifted gradually, thereby inducing the perception of vertical translational motion for both gratings (temporal frequency of 0.97 Hz equivalent to 3.3 °/s or 2.65 cm/s), but in opposite directions (upwards vs. downwards). One grating was tinted in orange, the other one in blue (CIE 1931 x = 0.42, y = 0.39 and x = 0.23, y = 0.23; average luminance of 45 and 36 cd/m2, respectively). As color and motion perception can become uncoupled during binocular rivalry (Carney, Shadlen, & Switkes, 1987; Hong & Blake, 2009), we ensured in a control experiment that in our setup color and motion percepts remained coupled under binocular rivalry (see Supplementary Material, Experiment 1). To ease vergence of the eyes during binocular rivalry trials, a centrally presented, red fixation cross (height and width 1.12°) and simple monochromatic frames surrounding the rivalry stimuli (spanning 4.5° to the left and right from the fixation cross) were presented to both eyes. Visual stimuli were created and presented using Matlab (2014b) with the Psychtoolbox (3.0) extension (Brainard, 1997; Kleiner et al., 2007; Pelli, 1997).
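The correspondence between the angular and metric grating size reported above can be checked with the standard visual-angle formula. The following is a minimal sketch, assuming a flat display viewed head-on at the effective viewing distance of 47 cm; the function names are hypothetical and chosen for illustration only.

```python
import math


def angle_to_size_cm(angle_deg: float, distance_cm: float) -> float:
    """Extent on a flat screen that subtends `angle_deg`, centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def size_to_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Inverse conversion: visual angle subtended by an extent of `size_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


# Grating size from the text: 2.8 degrees at an effective distance of about 47 cm.
grating_cm = angle_to_size_cm(2.8, 47.0)
print(f"{grating_cm:.2f} cm")
```

Under these assumptions the result comes out at roughly 2.3 cm, in line with the reported grating size.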

Stimuli and experimental setup. (a) Visual stimuli were comprised of two horizontal gratings, which drifted in opposite vertical directions and were tinted in different colors. Vergence of the eyes was facilitated by presenting black and white frames and a fixation cross to both eyes. (b) For tactile stimulation, the index finger rested on a plastic wheel with an imprinted horizontal grating structure. Rotation of the wheel created the percept of vertical tactile motion. (c) Technical (left) and 3-D illustration (right) of the experimental setup. Participants viewed the visual stimuli through a stereoscope. The display was reflected by a mirror mounted below the screen. A box on the table held the structured plastic wheel. Participants placed their index finger on a hole in the box to touch the wheel. The box was slanted to match the virtual visual plane and positioned to match the individually perceived position of the visual stimulus

Tactile stimuli

Tactile stimuli were induced by rotation of a plastic wheel on which the index finger rested. The wheel was imprinted with a sinusoidal grating of horizontally alternating ridges and grooves (Fig. 1b). The spatiotemporal profile of the sinusoids was identical to that of the visual gratings, with a spatial frequency of 3 cycles/cm (around 2 mm maximal indentation depth), moving at a speed of 2.65 cm/s. The sinusoids were perceived as moving vertically, that is, upwards or downwards in eye-centered coordinates. We verified in a separate control experiment that the induced directional motion percepts were clearly identifiable despite concurrent visual motion stimulation (see Supplementary Material, Experiment 2).

Apparatus and setup

We used a mirror setup to spatially align tactile and visual stimuli so that the visual stimulus appeared to be located in the same point in space as the tactile stimulus (Fig. 1c). Visual stimuli were presented on a calibrated LCD-computer screen (50.8 × 28.5 cm, resolution: 1,920 × 1,080 pixel, 8-bit color-depth, 60-Hz refresh rate), which was mounted in parallel to the tabletop. A mirror stereoscope (Screenscope, Stereoaids, Albany, WA, Australia) was used to present the visual stimuli dichoptically. The plastic wheel (9.5 cm diameter, 3 cm width) used for tactile stimulation was rotated by a stepper motor, controlled by a microcontroller board (Arduino Uno R3). The wheel was contained within a box which was slanted analogous to the perceived visual plane. Participants sat in a dimly lit room and listened to white noise presented via headphones to mask any sounds produced by the motor. Button press responses were registered by an external device synchronized with the computer (Neurocore, Germany).

Procedure

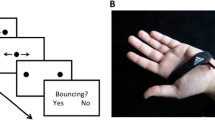

Before each trial, a red fixation cross was dichoptically presented, that is, one half of the cross was presented to either eye. The trial started once participants reported perceiving one fused fixation cross in the center of the screen, as this indicated successful vergence of the eyes onto one point. In each trial, two differently colored gratings, drifting in opposite vertical directions, were presented for 69 s. Four tactile motion stimuli were presented per trial. Each of the motion stimuli lasted 5 s (Fig. 2a) and motion stimuli started at semi-regular intervals, that is, 10, 10.5, 11, 11.5, or 12 s with an onset jitter of -200 to 200 ms (uniform distribution) after the end of the preceding tactile stimulus. Each combination of visual motion direction (up, down) and color (orange, blue) as well as the left-right arrangement of the two stimuli was shown 13 times, presented in randomized order across the experiment. Both tactile motion directions (up, down) were presented 104 times; tactile motion directions were randomized within trials. All but two participants performed a total of 52 trials. The remaining two participants performed slightly fewer trials (49 and 43 trials, respectively) due to time constraints. Testing time amounted to approximately 90 min, including breaks.
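The timing of the tactile stimuli within a trial can be illustrated with a short sketch. It draws four onsets using the intervals and jitter given above. Two points are assumptions made here for illustration and are not stated in the text: the first interval is counted from trial onset, and the base intervals are drawn with equal probability. All names are hypothetical.

```python
import random

TRIAL_S = 69.0            # trial duration
TACTILE_S = 5.0           # duration of each tactile stimulus
N_STIMULI = 4             # tactile stimuli per trial
INTERVALS_S = (10.0, 10.5, 11.0, 11.5, 12.0)
JITTER_S = 0.2            # uniform onset jitter of +/- 200 ms


def draw_onsets(rng: random.Random) -> list:
    """Draw tactile onsets (s) for one trial."""
    onsets = []
    previous_end = 0.0  # assumption: the first interval starts at trial onset
    for _ in range(N_STIMULI):
        # assumption: base intervals are equally likely
        gap = rng.choice(INTERVALS_S) + rng.uniform(-JITTER_S, JITTER_S)
        onset = previous_end + gap
        onsets.append(onset)
        previous_end = onset + TACTILE_S
    return onsets


onsets = draw_onsets(random.Random(1))
print([round(t, 2) for t in onsets])
```

Under these assumptions, even the longest possible schedule (four gaps of 12.2 s plus four 5-s stimuli) ends within the 69-s trial.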

Independent and dependent variables. (a) This figure illustrates the timeline of an example trial: Participants continuously reported the color of the grating they were currently seeing. These color reports were used to infer the perceived visual motion direction. Opaque colored rectangles represent the reported color. Four tactile stimuli were presented per trial, represented here as shaded rectangles. The color of the rectangles indicates whether the tactile motion was direction-congruent to the currently dominant (matching colors) or to the currently suppressed visual stimulus (mismatching colors). (b) Each trial was cut into segments (black boxes), which were locked to the onset of the tactile stimulation. Visual-tactile segments started and unimodal segments ended with the onset of a tactile stimulus. The analyzed segments were longer than the tactile stimulation (+2.5 s) to encompass switches in visual motion perception occurring shortly after the offset of a tactile stimulation. (c) Enlarged view of the second and third visual-tactile segments from the above shown perceptual timeline. Within each segment, we measured switch times, that is, the time until the first switch in visual motion perception from the onset of the segment (indicated by an arrow). In the left segment, the first switch in perception following the onset of tactile stimulation ends a suppression period of the congruent (blue) visual stimulus. In the right segment, the first switch ends a dominance period of the congruent (orange) visual stimulus. Curly brackets indicate the time period since the last switch before the beginning of the segment. Note that the time period starting with the last switch preceding the segment (tactile stimulus onset) and the time period until the first switch within the segment taken together describe one perceptual period

Task

At most time points during a trial, participants saw only one of the two different visual gratings. Participants were asked to continuously report the color of the currently seen – that is, the dominant visual stimulus – by keeping the corresponding button pressed. Whenever they had a percept in which information from both images was fused (such as when perceiving a patchy or unidentifiable color or a motion standstill), participants were asked to release both buttons.

Design

We tested the influence of tactile stimulation on the duration of dominance and suppression periods of the congruently moving visual stimulus. To this aim, we analyzed the length of the time interval from the onset of the tactile stimulation to the first perceptual switch, in short “switch times” (Fig. 2c). This analysis included three different factors: (1) stimulus modality, contrasting phases of visual-tactile stimulation with phases of unimodal (visual-only) stimulation; (2) perceptual state of the congruently moving visual stimulus, which could either be dominant or suppressed at onset of the tactile stimulation; (3) depth of suppression, indicated by the duration of the ongoing perceptual period at the onset of a tactile stimulation.

Stimulus modality: Visual-tactile and unimodal time-segments

Each trial was cut into visual-tactile and unimodal time-segments locked to the onsets of the tactile stimuli presented during that trial. Visual-tactile segments started with the onset of a tactile stimulus; unimodal segments ended with the onset of a tactile stimulus (Fig. 2b). All segments were 7.5 s long, which is the maximal length of a segment without creating a temporal overlap between subsequent segments. We used a segment length that exceeded the tactile stimulus duration, because the effect of a tactile stimulus on the duration of a perceptual period might unfold after or extend beyond the end of the tactile stimulation.
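The segmentation rule can be sketched as follows: each tactile onset yields one unimodal segment that ends at the onset and one visual-tactile segment that starts at it, both 7.5 s long. The data layout (dictionaries with `kind`, `start`, and `end`) and the example onsets are hypothetical.

```python
SEGMENT_S = 7.5  # segment length in seconds


def cut_segments(tactile_onsets):
    """Return unimodal and visual-tactile segments locked to each tactile onset."""
    segments = []
    for onset in tactile_onsets:
        # Unimodal (visual-only) segment: ends with the tactile onset.
        segments.append({"kind": "unimodal", "start": onset - SEGMENT_S, "end": onset})
        # Visual-tactile segment: starts with the tactile onset.
        segments.append({"kind": "visual-tactile", "start": onset, "end": onset + SEGMENT_S})
    return segments


def has_overlap(segments):
    """True if any segment starts before the preceding one has ended."""
    ordered = sorted(segments, key=lambda s: s["start"])
    return any(b["start"] < a["end"] for a, b in zip(ordered, ordered[1:]))


# Hypothetical onsets spaced 15 s apart (5-s stimulus plus a 10-s interval).
example = cut_segments([10.0, 25.0, 40.0, 55.0])
print(len(example), has_overlap(example))
```

With onsets 15 s apart, neighboring segments touch but do not overlap, which illustrates why a longer segment would no longer fit between two stimuli.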

Perceptual state of the congruent visual stimulus: Dominant and suppressed

We categorized visual-tactile segments with respect to the perceptual state of the congruent visual stimulus at the onset of the tactile stimulation. Unimodal segments cannot be categorized this way, as visual-tactile congruency requires bimodal stimulation. We pseudo-randomly categorized unimodal segments as congruent or incongruent, while ensuring that in each category the frequency of the different visual stimuli (for example, orange, upwards moving, presented to the right eye) in unimodal segments matched their frequency in visual-tactile segments (for details, see Supplementary Material, Fig. S3). By doing so we compared similar numbers of visual-tactile and unimodal segments and, at the same time, controlled for potential effects of visual stimulus identity (such as biases for one color or eye).

Depth of suppression: Length of the ongoing perceptual period

We coded for each segment the length of the time interval since the last perceptual switch before the onset of the tactile stimulation or the beginning of the segment (Fig. 2c).
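The two timing measures per segment, the switch time and the time since the last preceding switch, can be derived from a sorted list of perceptual switch times. The sketch below assumes such a list is available; the function name and the example values are hypothetical.

```python
from bisect import bisect_right


def switch_measures(switch_times, segment_start):
    """Return (time since last switch, time until first switch) for one segment.

    `switch_times` must be sorted in ascending order (seconds).
    A value of None means that no such switch exists in the list.
    """
    # Index of the first switch strictly after the segment start.
    idx = bisect_right(switch_times, segment_start)
    time_since_last = segment_start - switch_times[idx - 1] if idx > 0 else None
    switch_time = switch_times[idx] - segment_start if idx < len(switch_times) else None
    return time_since_last, switch_time


# Hypothetical switches at 3.0, 8.6, 12.1, and 19.4 s; segment starting at 10.0 s.
since_last, until_next = switch_measures([3.0, 8.6, 12.1, 19.4], 10.0)
print(since_last, until_next)
```

Taken together, the two values span one complete perceptual period, as noted in the caption of Fig. 2.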

Adjusting for motor delays

Button presses are significantly delayed relative to the perceptual changes they inform about; here we assumed an average motor response time of 350 ms (Dieter, Melnick, & Tadin, 2015) and adjusted the response timeline accordingly.
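In code, this adjustment amounts to shifting every response event earlier by the assumed delay. The sketch below is illustrative only: the event format is hypothetical, and clipping at zero for events within the first 350 ms is an assumption made here.

```python
MOTOR_DELAY_S = 0.350  # assumed average motor response time


def adjust_for_motor_delay(events, delay_s=MOTOR_DELAY_S):
    """Shift (time_s, label) response events earlier by the motor delay.

    Times are clipped at 0 so that no event precedes trial onset (an assumption).
    """
    return [(max(0.0, t - delay_s), label) for t, label in events]


# Hypothetical button events: (time in s, reported color).
print(adjust_for_motor_delay([(0.2, "blue"), (1.0, "orange")]))
```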

Data preprocessing

We asked participants to continuously report their perception to be able to identify and remove time periods with mixed or otherwise unclear percepts. These periods were indicated by longer periods of time (> 350 ms) in which neither of the two buttons was pressed. The mean duration of these unclear or fusion percepts was 880 ms (SD = 520 ms) and they made up 4% of the recorded timepoints per participant (SD = 4%). Longer periods (> 350 ms) in which both buttons were pressed were removed as well (1.1% of the recorded time points per participant, SD = 1.5%). Removal of these time periods led to removal of 5% of all segments per participant (SD = 4.9%), as segments starting with an unclear percept could not be categorized as congruent or incongruent.

During transitions from pressing one button to pressing the other button, participants often accidentally pressed either both or none of the buttons for short periods of time (< 350 ms). Such undefined, presumably accidental responses were assigned to the subsequently pressed button.
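The handling of undefined responses can be sketched on a timeline of intervals. Short intervals with no or both buttons pressed are assigned to the button pressed next, and longer ones are marked as unclear. The interval format is hypothetical, and two simplifications are assumptions made here: only the immediately following interval is inspected, and an interval of exactly 350 ms counts as unclear.

```python
THRESHOLD_S = 0.350
UNDEFINED = ("none", "both")


def clean_timeline(intervals):
    """Clean a list of (start_s, end_s, state) intervals.

    state is "orange", "blue", "none" (no button), or "both" (both buttons).
    """
    cleaned = []
    for i, (start, end, state) in enumerate(intervals):
        if state in UNDEFINED:
            following = intervals[i + 1][2] if i + 1 < len(intervals) else None
            if end - start < THRESHOLD_S and following not in UNDEFINED + (None,):
                state = following      # presumably accidental: assign to next button
            else:
                state = "unclear"      # mixed or unclear percept, removed later
        if cleaned and cleaned[-1][2] == state:
            # Merge with the preceding interval of the same state.
            cleaned[-1] = (cleaned[-1][0], end, state)
        else:
            cleaned.append((start, end, state))
    return cleaned


timeline = [
    (0.0, 2.0, "orange"),
    (2.0, 2.1, "none"),   # 100-ms gap during a transition
    (2.1, 5.0, "blue"),
    (5.0, 6.0, "none"),   # 1-s period without a response
    (6.0, 8.0, "orange"),
]
print(clean_timeline(timeline))
```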

The distribution of switch times was markedly right-skewed with a long distribution tail. Segments with switch times longer than the segment length were removed (0.5% of all segments per participant, SD = 0.7%), because often the time point of such switches lay within the boundaries of the subsequent segment. For analogous reasons, segments with very long periods between the last preceding perceptual switch and the onset of the segment (> 7.5 s) were removed as well (1.5% of all segments per participant, SD = 1.8%).

Finally, around 1% of visual-tactile segments had to be removed due to a technical error of the response recording device.

Around 93% of all recorded segments (SD = 5.5%) were analyzed, comprising on average 184 visual-tactile and 189 unimodal segments per participant. In half of the visual-tactile segments (on average 91 segments per participant, SD = 10), the congruent visual stimulus was dominant and in the other half (on average 93 segments per participant, SD = 11), the congruent visual stimulus was suppressed at the onset of tactile stimulation.

Statistical analysis

We fitted generalized linear mixed models with a Gamma distribution family and log link function to the data. The Gamma family accounts for the skewness of the switch time distribution and the log link function translates the expected values onto an unbounded (log-) scale.

Model 1 quantified the effect of congruent tactile stimulation upon currently dominant visual stimuli. We compared switch times ending dominance periods of congruent stimuli between visual-tactile and unimodal segments (factor: stimulus modality). The durations for which the respective stimulus had already been dominant prior to the start of a segment, that is, the time periods since the preceding perceptual switch, were log-transformed and entered as covariates into the model.

Model 2 quantified the effect of congruent tactile stimulation upon currently suppressed visual stimuli. We compared switch times ending the suppression periods of congruent visual stimuli between visual-tactile segments and unimodal segments. As before, the duration for which the respective stimulus had already been suppressed prior to the start of a segment was entered as a log-transformed covariate into the model.
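The structure shared by Models 1 and 2 can be written out as a sketch. The notation is introduced here for illustration only and is an assumption about how the described terms combine: $T_{ij}$ is the switch time of segment $i$ for participant $j$, $m_{ij}$ is the sum-coded stimulus modality (visual-tactile vs. unimodal), $d_{ij}$ is the time since the preceding perceptual switch, $\nu$ is the Gamma shape parameter, and $u_{kj}$ are participant-specific random effects.

```latex
\begin{align}
T_{ij} &\sim \mathrm{Gamma}(\mu_{ij}, \nu), \\
\log \mu_{ij} &= (\beta_0 + u_{0j}) + (\beta_1 + u_{1j})\, m_{ij}
  + (\beta_2 + u_{2j}) \log d_{ij}
  + (\beta_3 + u_{3j})\, m_{ij} \log d_{ij}
\end{align}
```

Because of the log link, each coefficient acts multiplicatively on the expected switch time: for example, a change of $\beta_1$ on the log scale scales $\mu_{ij}$ by the factor $e^{\beta_1}$.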

Significant interactions were resolved by splitting the data along each participant’s median of the time interval between the last preceding perceptual switch and the onset of the segment. Both subsets of data were tested for an effect of stimulus modality on switch times by comparing visual-tactile against unimodal segments.
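The per-participant median split used for these follow-up tests can be sketched as below. The record layout is hypothetical, and assigning values equal to the median to the "recent" subset is an assumption made here.

```python
from collections import defaultdict
from statistics import median


def median_split(segments):
    """Split segments at each participant's own median of `time_since_last`.

    Returns (recent, longer_ago); ties at the median go to `recent` (an assumption).
    """
    by_participant = defaultdict(list)
    for seg in segments:
        by_participant[seg["participant"]].append(seg["time_since_last"])
    medians = {p: median(values) for p, values in by_participant.items()}

    recent, longer_ago = [], []
    for seg in segments:
        if seg["time_since_last"] <= medians[seg["participant"]]:
            recent.append(seg)
        else:
            longer_ago.append(seg)
    return recent, longer_ago


# Hypothetical segments from two participants.
data = [
    {"participant": "p1", "time_since_last": 1.0},
    {"participant": "p1", "time_since_last": 2.0},
    {"participant": "p1", "time_since_last": 3.0},
    {"participant": "p2", "time_since_last": 0.5},
    {"participant": "p2", "time_since_last": 4.0},
]
recent, longer_ago = median_split(data)
print(len(recent), len(longer_ago))
```

Splitting within participants rather than across the pooled data keeps both subsets balanced for every participant, regardless of individual differences in switch rate.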

All three models were fitted in R 3.4.2 (2017) using the lme4-package (Bates, Mächler, Bolker, & Walker, 2015). The significance level was set to 5%. We applied summation contrast coding for categorical factors and omnibus p-values were derived via likelihood ratio tests. For follow-up tests on interactions, p-values were adjusted for multiple testing using the Holm-method (Holm, 1979). In all models, we included random intercepts as well as all random slopes to fit individual biases, thereby keeping the random effect structure maximal (Barr, Levy, Scheepers, & Tily, 2013).
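Two of the inferential steps named above can be illustrated without fitting a model: converting a likelihood ratio statistic with one degree of freedom into a p-value, and adjusting a set of follow-up p-values with the Holm method. This is a minimal standard-library sketch, not the R code used for the analysis; the function names and the example p-values are hypothetical.

```python
import math


def lrt_p_value_df1(chi2: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    For df = 1, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    """
    return math.erfc(math.sqrt(chi2 / 2.0))


def holm_adjust(p_values):
    """Holm step-down adjustment; returns adjusted p-values in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value is multiplied by (m - k + 1);
        # the running maximum keeps the adjusted values monotone.
        running_max = max(running_max, (m - rank) * p_values[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


# A statistic near the 5% critical value for one degree of freedom.
print(round(lrt_p_value_df1(3.841), 3))

# Hypothetical raw p-values from two follow-up tests.
print(holm_adjust([0.04, 0.01]))
```

With two follow-up tests, the Holm method doubles the smaller p-value and leaves the larger one unchanged unless the doubled value exceeds it.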

Results

Switch times ending dominance periods of congruent visual stimuli

Perceptual switches ending dominance periods of visual stimuli occurred significantly later under concurrent congruent tactile stimulation than during unimodal periods (χ2(1) = 4.43, p = 0.035; Fig. 3a, left panel). Perceptual switches occurred generally earlier if the congruent stimulus had already been dominant for a longer time period (χ2(1) = 143, p < 0.001). However, the effect of stimulus modality did not significantly vary with the duration for which the congruent visual stimulus had already been dominant (χ2(1) = 0.021, p = 0.650).

Switch times as a function of the perceptual status of the congruent visual stimulus and the time passed since the preceding perceptual switch. (a) Perceptual switches occurring during visual-tactile (red) and unimodal segments (blue) could either end dominance (left panel, Model 1) or suppression periods (right panel, Model 2) of congruently moving visual stimuli and their correspondents during unimodal stimulation. Group mean switch times and standard errors are shown separately for segments in which the preceding switch occurred “recently” and segments for which it occurred “longer ago” (relative to the median). In the statistical model these durations were entered as a continuous variable; the median split was used to follow-up on significant interactions involving this continuous variable. (b) Switch times for perceptual switches ending suppression periods of congruent visual stimuli as a function of the time passed since the last preceding perceptual switch. For this illustration, the time passed since the last preceding perceptual switch has been averaged into 5 temporal bins each spanning 1,500 ms. The size of the marker indicating the mean of the switch times is scaled by the average number of switch times within the respective temporal bin per participant. Note that in most cases, switches preceding the current perceptual switch occurred less than 2 s ago (with a median of 1,400 ms)

Switch times ending suppression periods of congruent visual stimuli

Switches of perception ending suppression periods of visual stimuli did not generally occur significantly faster under concurrent, congruent tactile stimulation than during unimodal periods (χ2(1) = 1.19, p = 0.276). Switches of perception generally occurred earlier if the congruent visual stimulus had already been suppressed for a longer time period (χ2(1) = 167, p < 0.001). Importantly, the effect of stimulus modality significantly increased with the length of the time period for which the congruent visual stimulus had been suppressed prior to the start of the segment (χ2(1) = 9.5, p = 0.002). Splitting the data along the median of time periods since the last perceptual switch preceding the segment revealed a significant effect of stimulus modality only if the congruent visual stimulus had been suppressed for a longer time period at the onset of tactile stimulation (χ2(1) = 10.2, p = 0.003): Congruently moving visual stimuli remained suppressed for shorter time periods than corresponding visual stimuli in unimodal segments, if the tactile stimulus set in at a later stage of the suppression period (Fig. 3a, right panel; Fig. 3b).

Discussion

The present study investigated whether tactile motion influences the perception of direction-congruent visual motion in the presence and the absence of visual awareness. Alternations in awareness were induced by presenting two competing motion stimuli in a binocular rivalry paradigm. Tactile motion stimulation prolonged the time periods for which a congruently moving visual stimulus dominated perception, which indicates interactions of visual and tactile motion signals in the presence of awareness. In contrast, tactile motion stimuli shortened the suppression periods of congruently moving visual stimuli only if the congruent visual motion percept had been suppressed for a longer time at the onset of the tactile stimulus. Thus, tactile motion signals interacted with suppressed visual motion signals only at late stages of the suppression period, when the strength of suppression is supposed to decrease (Alais et al., 2010).

Overall, our results provide evidence for an interdependence of visual awareness and interactions between visual and tactile motion signals: The effect of congruent tactile motion stimuli on visual motion perception under awareness was stronger and more robust than its counterpart in the absence of awareness. This effect was independent of the duration of the perceptual period. In contrast, cross-modal effects on the currently suppressed visual stimuli were tied to a later stage of the suppression period. Visual stimuli that reach awareness are associated with stronger neuronal activation than stimuli outside the realm of awareness (Moutoussis & Zeki, 2002; Zeki & Ffytche, 1998), and exhibit more widespread and more persistent activity (Boly et al., 2011; Cul, Baillet, & Dehaene, 2007; Engel & Singer, 2001; Uhlhaas et al., 2009). Thus, awareness may increase the availability of information across different parts of the processing stream and longer time periods, which in turn might facilitate the integration of additional information (Mudrik, Faivre, & Koch, 2014). Processing of stimuli that do not reach awareness, instead, is assumed to be locally restricted and predominantly feed-forward (Boly et al., 2011), rendering information “encapsulated” (Mudrik et al., 2014, p. 490) and less accessible for integration with information from other modalities. Awareness might be particularly relevant for the integration of complex stimulus features, which require integration across time and space (Mudrik et al., 2014), such as the cross-modal motion stimuli. Consistent with this notion, many studies in which cross-modal congruency was defined by a dynamic feature, such as melody, rhythm, or motion, found no significant multisensory interactions in the absence of awareness (Kang & Blake, 2005; Lee et al., 2015; Moors et al., 2015).

We did find influences of tactile motion on the perception of suppressed visual motion stimuli if the tactile motion set in after longer periods of visual suppression. Under this condition, tactile motion appeared to facilitate the return to dominance of the currently suppressed congruent visual motion stimulus. Therefore, rather than gating visual-tactile interactions, visual awareness might facilitate interactions of visual and tactile motion signals by allocating more processing resources to the dominant congruent visual stimulation. Interactions between visual and tactile motion stimuli potentially rely on the availability of visual information at later processing stages. Due to the competition between visual signals at preceding processing stages and inhibitory feedback from neural populations representing the currently dominant visual percept, the non-dominant visual motion signal might not always reach such processing stages under binocular rivalry. As the depth of neural suppression of a visual stimulus declines over the course of a suppression period (Alais et al., 2010), the previously suppressed visual stimulus is expected to become increasingly available for multisensory interactions.

However, reduced depth of suppression does not exhaustively explain why tactile motion induced switches ending the perception of suppressed congruent visual stimuli only at later stages of a suppression period. If depth of suppression were the only regulating factor, ongoing tactile stimulation should elicit effects on suppressed visual stimuli during a later stage of the suppression period, too. However, effects of tactile motion on suppressed visual stimuli were restricted to instances in which tactile stimulation started – rather than continued – late into a visual suppression period. Therefore, additional factors besides suppression depth must determine whether tactile stimuli influence visual perception in the absence of awareness. Perhaps cross-modal information has to be novel or particularly salient to bias visual competition. Possibly, tactile motion signals moving in the same direction as the suppressed visual motion are weakened too, and this effect might be proportional to the degree of the visual suppression. As a result, only salient tactile motion combined with weak visual suppression at the end of a suppression period might allow for a change in visual perception in favor of the suppressed congruent visual motion stimulus.

Analogous to our results, visual attention influences perception under binocular rivalry most strongly when the attentional cues are presented at the end of a dominance or suppression period (Dieter et al., 2015). This finding has been interpreted in the light of the biased competition theory of attention, which predicts stronger attentional effects on visual processing when visual conflict is high (Desimone, 1998; Desimone & Duncan, 1995). During advanced stages of a perceptual period, competition between stimuli transpires through multiple processing stages, thereby creating a situation of high conflict for the visual system (Dieter et al., 2015) and a call for attentional investment. Analogously to attentional control, cross-modal influences on visual perception might be particularly strong when visual conflict is high, such as at the end of a perceptual period in binocular rivalry.

We interpret our results as influences of tactile motion on congruently moving visual stimuli. However, in the present experiment, the competing visual stimuli always drifted in opposite directions. Therefore, an effect on the perception of a congruent visual stimulus always implied the reversed effect on incongruent visual stimuli. Yet, previous studies have indicated that cross-modal congruency – rather than incongruency – guides direction-specific interactions between motion signals. For instance, directional sounds have been shown to affect the perception of congruent but not of incongruent visual motion stimuli when the stimuli separately competed against a directionally neutral stimulus in a binocular rivalry paradigm (see Conrad et al., 2010, for a thorough discussion of this issue). Furthermore, congruent cross-modal motion signals are associated with improved performance in direction and speed discrimination tasks compared to unimodal stimuli. In contrast, incongruent cross-modal motion signals do not result in lower performance compared to unimodal conditions (Gori et al., 2011; Meyer, Wuerger, Röhrbein, & Zetzsche, 2005). Notably, the stimuli used in these studies were very similar to our stimuli (Gori et al., 2011). In specific scenarios, incongruent motion signals interact across modalities (Gori et al., 2011; Soto-Faraco, Spence, & Kingstone, 2004). However, such interactions should produce effects orthogonal to ours, which further speaks against influences of touch on incongruent visual motion signals in our study. Based on this literature, we argue that tactile motion signals interacted with congruent visual motion stimuli independent of their perceptual state.

Conclusion

In conclusion, tactile motion can influence the perception of congruent visual motion in the presence and the absence of awareness, but in the latter case only if the suppression of awareness has decreased to some degree. We argue that this pattern of results reflects a crucial role of visual awareness in facilitating but not gating interactions between visual and tactile motion signals.

References

Adelson, E. H., & Movshon, J. A. (1982). Phenomenal coherence of moving visual patterns. Nature, 300(5892), 523. doi:https://doi.org/10.1038/300523a0

Alais, D. (2012). Binocular rivalry: Competition and inhibition in visual perception. Wiley Interdisciplinary Reviews: Cognitive Science, 3(1), 87–103. doi:https://doi.org/10.1002/wcs.151

Alais, D., Cass, J., O’Shea, R. P., & Blake, R. (2010). Visual sensitivity underlying changes in visual consciousness. Current Biology, 20(15), 1362–1367. doi:https://doi.org/10.1016/j.cub.2010.06.015

Avillac, M., Hamed, S. B., & Duhamel, J.-R. (2007). Multisensory Integration in the Ventral Intraparietal Area of the Macaque Monkey. Journal of Neuroscience, 27(8), 1922–1932. doi:https://doi.org/10.1523/JNEUROSCI.2646-06.2007

Bach, M. (2006). The Freiburg visual acuity test-variability unchanged by post-hoc re-analysis. Graefe’s Archive for Clinical and Experimental Ophthalmology, 245(7), 965–971. doi:https://doi.org/10.1007/s00417-006-0474-4

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68(3), 255–278. doi:https://doi.org/10.1016/j.jml.2012.11.001

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1). doi:https://doi.org/10.18637/jss.v067.i01

Bensmaïa, S. J., Killebrew, J. H., & Craig, J. C. (2006). Influence of visual motion on tactile motion perception. Journal of Neurophysiology, 96(3), 1625–1637. doi:https://doi.org/10.1152/jn.00192.2006

Blake, R., & Logothetis, N. K. (2002). Visual competition. Nature Reviews Neuroscience, 3(1), 13–21. doi:https://doi.org/10.1038/nrn701

Blake, R., Sobel, K. V., & James, T. W. (2004). Neural synergy between kinetic vision and touch. Psychological Science, 15(6), 397–402. doi:https://doi.org/10.1111/j.0956-7976.2004.00691.x

Boly, M., Garrido, M. I., Gosseries, O., Bruno, M.-A., Boveroux, P., Schnakers, C., … Friston, K. (2011). Preserved feedforward but impaired top-down processes in the vegetative state. Science, 332(6031), 858–862. doi:https://doi.org/10.1126/science.1202043

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436. doi:https://doi.org/10.1163/156856897X00357

Bremmer, F., Schlack, A., Shah, N. J., Zafiris, O., Kubischik, M., Hoffmann, K.-P., … Fink, G. R. (2001). Polymodal motion processing in posterior parietal and premotor cortex: A human fMRI study strongly implies equivalencies between humans and monkeys. Neuron, 29(1), 287–296. doi:https://doi.org/10.1016/S0896-6273(01)00198-2

Butz, M. V., Thomaschke, R., Linhardt, M. J., & Herbort, O. (2010). Remapping motion across modalities: Tactile rotations influence visual motion judgments. Experimental Brain Research, 207(1–2), 1–11. doi:https://doi.org/10.1007/s00221-010-2420-2

Carney, T., Shadlen, M., & Switkes, E. (1987). Parallel processing of motion and colour information. Nature, 328(6131), 647–649. doi:https://doi.org/10.1038/328647a0

Conrad, V., Bartels, A., Kleiner, M., & Noppeney, U. (2010). Audiovisual interactions in binocular rivalry. Journal of Vision, 10(10), 27. doi:https://doi.org/10.1167/10.10.27

Craig, J. C. (2006). Visual motion interferes with tactile motion perception. Perception, 35(3), 351–367. doi:https://doi.org/10.1068/p5334

Cul, A. D., Baillet, S., & Dehaene, S. (2007). Brain dynamics underlying the nonlinear threshold for access to consciousness. PLOS Biology, 5(10), e260. doi:https://doi.org/10.1371/journal.pbio.0050260

Desimone, R. (1998). Visual attention mediated by biased competition in extrastriate visual cortex. Philosophical Transactions of the Royal Society B: Biological Sciences, 353(1373), 1245–1255.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18(1), 193–222. doi:https://doi.org/10.1146/annurev.ne.18.030195.001205

Dieter, K. C., Melnick, M. D., & Tadin, D. (2015). When can attention influence binocular rivalry? Attention, Perception & Psychophysics, 77(6), 1908–1918. doi:https://doi.org/10.3758/s13414-015-0905-6

Engel, A. K., & Singer, W. (2001). Temporal binding and the neural correlates of sensory awareness. Trends in Cognitive Sciences, 5(1), 16–25. doi:https://doi.org/10.1016/S1364-6613(00)01568-0

Faivre, N., Arzi, A., Lunghi, C., & Salomon, R. (2017). Consciousness is more than meets the eye: A call for a multisensory study of subjective experience. Neuroscience of Consciousness, 2017(1). doi:https://doi.org/10.1093/nc/nix003

Gori, M., Mazzilli, G., Sandini, G., & Burr, D. (2011). Cross-sensory facilitation reveals neural interactions between visual and tactile motion in humans. Frontiers in Psychology, 2, 55. doi:https://doi.org/10.3389/fpsyg.2011.00055

Gori, M., Sciutti, A., Jacono, M., Sandini, G., Morrone, C., & Burr, D. C. (2013). Long integration time for accelerating and decelerating visual, tactile and visuo-tactile stimuli. Multisensory Research, 26(1–2), 53–68. doi:https://doi.org/10.1163/22134808-00002397

Hagen, M. C., Franzén, O., McGlone, F., Essick, G., Dancer, C., & Pardo, J. V. (2002). Tactile motion activates the human middle temporal/V5 (MT/V5) complex: Tactile motion in hMT/V5. European Journal of Neuroscience, 16(5), 957–964. doi:https://doi.org/10.1046/j.1460-9568.2002.02139.x

Holm, S. (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2), 65–70.

Hong, S. W., & Blake, R. (2009). Interocular suppression differentially affects achromatic and chromatic mechanisms. Attention, Perception & Psychophysics, 71(2), 403–411. doi:https://doi.org/10.3758/APP.71.2.403

Jiang, F., Beauchamp, M. S., & Fine, I. (2015). Re-examining overlap between tactile and visual motion responses within hMT+ and STS. NeuroImage, 119, 187–196. doi:https://doi.org/10.1016/j.neuroimage.2015.06.056

Kang, M.-S., & Blake, R. (2005). Perceptual synergy between seeing and hearing revealed during binocular rivalry. Psichologija, 32, 7–15.

Kaunitz, L., Fracasso, A., & Melcher, D. (2011). Unseen complex motion is modulated by attention and generates a visible aftereffect. Journal of Vision, 11(13), 10–10. doi:https://doi.org/10.1167/11.13.10

Kleiner, M., Brainard, D., Pelli, D., Ingling, A., Murray, R., & Broussard, C. (2007). What’s new in psychtoolbox-3. Perception, 36(14), 1–16. doi:https://doi.org/10.1068/v070821

Konkle, T., Wang, Q., Hayward, V., & Moore, C. I. (2009). Motion aftereffects transfer between touch and vision. Current Biology, 19(9), 745–750. doi:https://doi.org/10.1016/j.cub.2009.03.035

Krebber, M., Harwood, J., Spitzer, B., Keil, J., & Senkowski, D. (2015). Visuotactile motion congruence enhances gamma-band activity in visual and somatosensory cortices. NeuroImage, 117, 160–169. doi:https://doi.org/10.1016/j.neuroimage.2015.05.056

Lee, M., Blake, R., Kim, S., & Kim, C.-Y. (2015). Melodic sound enhances visual awareness of congruent musical notes, but only if you can read music. Proceedings of the National Academy of Sciences, 112(27), 8493–8498. doi:https://doi.org/10.1073/pnas.1509529112

Lehmkuhle, S. W., & Fox, R. (1975). Effect of binocular rivalry suppression on the motion aftereffect. Vision Research, 15(7), 855–859. doi:https://doi.org/10.1016/0042-6989(75)90266-7

Levelt, W. J. M. (1965). On Binocular Rivalry. Retrieved from http://www.mpi.nl/world/materials/publications/levelt/Levelt_Binocular_Rivalry_1965.pdf

Lunghi, C., & Alais, D. (2013). Touch interacts with vision during binocular rivalry with a tight orientation tuning. PloS One, 8(3), e58754. doi:https://doi.org/10.1371/journal.pone.0058754

Lunghi, C., & Alais, D. (2015). Congruent tactile stimulation reduces the strength of visual suppression during binocular rivalry. Scientific Reports, 5, 9413. doi:https://doi.org/10.1038/srep09413

Lunghi, C., Binda, P., & Morrone, M. C. (2010). Touch disambiguates rivalrous perception at early stages of visual analysis. Current Biology, 20(4), R143–R144. doi:https://doi.org/10.1016/j.cub.2009.12.015

Lunghi, C., Morrone, M. C., & Alais, D. (2014). Auditory and tactile signals combine to influence vision during binocular rivalry. Journal of Neuroscience, 34(3), 784–792. doi:https://doi.org/10.1523/JNEUROSCI.2732-13.2014

Lyons, G., Sanabria, D., Vatakis, A., & Spence, C. (2006). The modulation of crossmodal integration by unimodal perceptual grouping: A visuotactile apparent motion study. Experimental Brain Research, 174(3), 510–516. doi:https://doi.org/10.1007/s00221-006-0485-8

Meyer, G. F., Wuerger, S. M., Röhrbein, F., & Zetzsche, C. (2005). Low-level integration of auditory and visual motion signals requires spatial co-localisation. Experimental Brain Research, 166(3–4), 538–547. doi:https://doi.org/10.1007/s00221-005-2394-7

Moors, P., Huygelier, H., Wagemans, J., de-Wit, L., & van Ee, R. (2015). Suppressed visual looming stimuli are not integrated with auditory looming signals: Evidence from continuous flash suppression. I-Perception, 6(1), 48–62. doi:https://doi.org/10.1068/i0678

Moutoussis, K., & Zeki, S. (2002). The relationship between cortical activation and perception investigated with invisible stimuli. Proceedings of the National Academy of Sciences, 99(14), 9527–9532. doi:https://doi.org/10.1073/pnas.142305699

Movshon, J. A., & Newsome, W. T. (1996). Visual response properties of striate cortical neurons projecting to area MT in macaque monkeys. Journal of Neuroscience, 16(23), 7733–7741.

Mudrik, L., Faivre, N., & Koch, C. (2014). Information integration without awareness. Trends in Cognitive Sciences, 18(9), 488–496. doi:https://doi.org/10.1016/j.tics.2014.04.009

Nguyen, V. A., Freeman, A. W., & Alais, D. (2003). Increasing depth of binocular rivalry suppression along two visual pathways. Vision Research, 43(19), 2003–2008. doi:https://doi.org/10.1016/S0042-6989(03)00314-6

Nguyen, V. A., Freeman, A. W., & Wenderoth, P. (2001). The depth and selectivity of suppression in binocular rivalry. Perception & Psychophysics, 63(2), 348–360. doi:https://doi.org/10.3758/BF03194475

Pei, Y.-C., Chang, T.-Y., Lee, T.-C., Saha, S., Lai, H.-Y., Gomez-Ramirez, M., … Wong, A. (2013). Cross-modal sensory integration of visual-tactile motion information: Instrument design and human psychophysics. Sensors, 13(6), 7212–7223. doi:https://doi.org/10.3390/s130607212

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10(4), 437–442. doi:https://doi.org/10.1163/156856897X00366

Soto-Faraco, S., Spence, C., & Kingstone, A. (2004). Cross-modal dynamic capture: Congruency effects in the perception of motion across sensory modalities. Journal of Experimental Psychology: Human Perception and Performance, 30(2), 330–345. doi:https://doi.org/10.1037/0096-1523.30.2.330

Summers, I. R., Francis, S. T., Bowtell, R. W., McGlone, F. P., & Clemence, M. (2009). A functional-magnetic-resonance-imaging investigation of cortical activation from moving vibrotactile stimuli on the fingertip. The Journal of the Acoustical Society of America, 125(2), 1033–1039. doi:https://doi.org/10.1121/1.3056399

Uhlhaas, P. J., Pipa, G., Lima, B., Melloni, L., Neuenschwander, S., Nikolić, D., & Singer, W. (2009). Neural synchrony in cortical networks: History, concept and current status. Frontiers in Integrative Neuroscience, 3, 17. doi:https://doi.org/10.3389/neuro.07.017.2009

Ushioda, H., & Wada, Y. (2007). Multisensory integration between visual and tactile motion information: evidence from redundant-signals effects on reaction time. Proceedings of Fechner Day, 23(1). Retrieved from http://www.ispsychophysics.org/fd/index.php/proceedings/index

Zeki, S., & Ffytche, D. H. (1998). The Riddoch syndrome: Insights into the neurobiology of conscious vision. Brain: A Journal of Neurology, 121(1), 25–45.

Acknowledgements

This work was supported by funding from the European Research Council (ERC-2009-AdG 249425-CriticalBrainChanges) and the German Research Foundation (DFG Ro 2625/10-1) to BR and (BA 5600/1-1) to SB. We thank Liesa Zwetzschke for help with data collection.

Cite this article

Hense, M., Badde, S. & Röder, B. Tactile motion biases visual motion perception in binocular rivalry. Atten Percept Psychophys 81, 1715–1724 (2019). https://doi.org/10.3758/s13414-019-01692-w
