Abstract

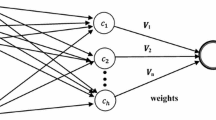

This paper proposes a reservoir radial-basis function neural network that combines ideas from reservoir computing and neural networks. It is designed for extrapolating nonlinear, non-stationary stochastic and chaotic time series when only a short learning sample is available. The network is built on a radial-basis function neural network with a specially organized input layer and a kernel membership function. The proposed system provides high approximation quality in terms of mean squared error and, thanks to a second-order learning procedure, a high convergence speed. A software implementation of the proposed network has been developed, and a number of experiments were carried out to study the system's properties. The experimental results show that the developed architecture can be used in Data Mining tasks and that the proposed network is more accurate than traditional forecasting neural systems.
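To make the ingredients named in the abstract more concrete, here is a minimal Python sketch of a kernel-based radial-basis predictor for a short chaotic series. It is not the architecture from the paper: the tapped-delay input, the Epanechnikov kernel, the fixed grid of centers, the use of recursive least squares as the second-order procedure, the logistic map as test data, and all function names are assumptions chosen for illustration.

```python
import numpy as np

def lagged_inputs(series, n_lags):
    """Assumed input layer: rows of n_lags past values, target is the next value."""
    X = np.array([series[i:i + n_lags] for i in range(len(series) - n_lags)])
    y = series[n_lags:]
    return X, y

def epanechnikov_features(X, centers, width):
    """Kernel activations max(0, 1 - ||x - c||^2 / width^2), plus a bias column."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    phi = np.maximum(0.0, 1.0 - d2 / width ** 2)
    return np.hstack([phi, np.ones((len(X), 1))])

def rls_fit(Phi, y, delta=1e3):
    """Recursive least squares on the output weights (one second-order method)."""
    n = Phi.shape[1]
    w = np.zeros(n)
    P = delta * np.eye(n)              # large initial covariance = weak prior
    for phi, target in zip(Phi, y):
        Pphi = P @ phi
        gain = Pphi / (1.0 + phi @ Pphi)
        w = w + gain * (target - phi @ w)
        P = P - np.outer(gain, Pphi)   # P is symmetric, so phi^T P == Pphi
    return w

# Short chaotic sample: logistic map x_{t+1} = r * x_t * (1 - x_t) (assumed test data).
r, x = 3.9, 0.3
values = []
for _ in range(200):
    values.append(x)
    x = r * x * (1.0 - x)
series = np.array(values)

X, y = lagged_inputs(series, n_lags=2)
grid = np.linspace(0.0, 1.0, 5)
centers = np.array([[a, b] for a in grid for b in grid])  # fixed 5x5 grid of centers

X_train, y_train = X[:150], y[:150]
X_test, y_test = X[150:], y[150:]

Phi_train = epanechnikov_features(X_train, centers, width=0.5)
Phi_test = epanechnikov_features(X_test, centers, width=0.5)

w = rls_fit(Phi_train, y_train)
mse = np.mean((y_test - Phi_test @ w) ** 2)
print(f"one-step-ahead test MSE: {mse:.6f}")
```

Only the linear output weights are trained here, which keeps the least-squares problem convex and lets the recursive update process one observation at a time. Adapting the centers and widths as well would require a different procedure.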



Additional information

The article is published in the original.

About this article

Cite this article

Tyshchenko, O.K. A reservoir radial-basis function neural network in prediction tasks. Aut. Control Comp. Sci. 50, 65–71 (2016). https://doi.org/10.3103/S0146411616020061

DOI: https://doi.org/10.3103/S0146411616020061