Abstract

In this study, a novel reinforcement learning task supervisor (RLTS) with memory in a behavioral control framework is proposed for human-multi-robot coordination systems (HMRCSs). Existing HMRCSs suffer from high decision-making time costs and large task tracking errors caused by repeated human intervention, which restricts the autonomy of multi-robot systems (MRSs). Moreover, existing task supervisors in the null-space-based behavioral control (NSBC) framework require many manually formulated priority-switching rules, which makes it difficult to realize an optimal behavioral priority adjustment strategy when there are multiple robots and multiple tasks. The proposed RLTS with memory integrates a deep Q-network (DQN) and a long short-term memory (LSTM) knowledge base within the NSBC framework, to achieve an optimal behavioral priority adjustment strategy in the presence of task conflict and to reduce the frequency of human intervention. Specifically, the proposed RLTS with memory memorizes the human intervention history when the robot systems lack confidence in an emergency, and then reloads that history when it encounters a situation that humans have already handled. Simulation results demonstrate the effectiveness of the proposed RLTS. Finally, an experiment using a group of mobile robots subject to external noise and disturbances validates the effectiveness of the proposed RLTS with memory in uncertain real-world environments.
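To make the role of behavioral priority concrete, the following is a minimal sketch of the standard two-task NSBC velocity composition, v = v_hi + (I - J_hi⁺ J_hi) v_lo, in which the lower-priority task velocity is projected into the null space of the higher-priority task. It is not the authors' implementation: the two tasks, their Jacobians, and their desired rates are hypothetical values chosen for illustration, and each task is assumed to be scalar (a 1 x n Jacobian) so that the pseudoinverse reduces to a dot product. A task supervisor such as the RLTS would decide which task is passed as `high`.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def task_velocity(jac, rate):
    """Minimum-norm velocity J^+ * rate for a scalar task with 1 x n Jacobian."""
    scale = rate / dot(jac, jac)
    return [scale * j for j in jac]


def null_project(jac, v):
    """Apply N = I - J^+ J to v, removing the part that would disturb the task."""
    scale = dot(jac, v) / dot(jac, jac)
    return [vi - scale * ji for vi, ji in zip(v, jac)]


def compose(high, low):
    """Two-task NSBC composition: v = v_high + N_high * v_low."""
    jac_hi, rate_hi = high
    jac_lo, rate_lo = low
    v_hi = task_velocity(jac_hi, rate_hi)
    v_lo = task_velocity(jac_lo, rate_lo)
    return [a + b for a, b in zip(v_hi, null_project(jac_hi, v_lo))]


# Hypothetical tasks for a planar robot, given as (Jacobian row, desired task rate).
move_to_goal = ([1.0, 0.0], 1.0)
avoid_obstacle = ([0.6, 0.8], 0.5)

# The same two tasks under the two possible priority orders.
v_goal_first = compose(move_to_goal, avoid_obstacle)
v_avoid_first = compose(avoid_obstacle, move_to_goal)

print("goal first: ", v_goal_first)
print("avoid first:", v_avoid_first)
```

In either order the higher-priority task rate is met exactly, while the lower-priority task is only satisfied as far as the null space allows. This is why the choice of priority order matters when tasks conflict.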

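Abstract (translated from the Chinese)

A reinforcement learning task supervisor (RLTS) with memory, built on a behavioral control framework, is proposed for human-multi-robot coordination systems. Because of repeated human intervention, existing human-multi-robot coordination systems incur high decision-making time costs and large task tracking errors, which limits the autonomy of multi-robot systems. In addition, task supervisors based on the null-space-based behavioral control framework rely on manually formulated priority-switching rules, which makes it difficult to achieve an optimal behavioral priority adjustment strategy with multiple robots and multiple tasks. The proposed RLTS with memory integrates a deep Q-network and a long short-term memory neural network knowledge base within the null-space-based behavioral control framework, achieving an optimal behavioral priority adjustment strategy under task conflict and reducing the frequency of human intervention. When the robots lack confidence in an emergency, the proposed RLTS with memory memorizes the human intervention history and reloads the historical control signals when the same human-intervention situation is encountered again. Simulation results verify the effectiveness of the method. Finally, an experiment with a group of mobile robots subject to external noise and disturbances verifies the effectiveness of the proposed RLTS with memory in uncertain real-world environments.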

References

Antonelli G, Chiaverini S, 2006. Kinematic control of platoons of autonomous vehicles. IEEE Trans Rob, 22(6):1285–1292. https://doi.org/10.1109/TRO.2006.886272

Aviv Y, Pazgal A, 2005. A partially observed Markov decision process for dynamic pricing. Manag Sci, 51(9):1400–1416. https://doi.org/10.1287/mnsc.1050.0393

Baizid K, Giglio G, Pierri F, et al., 2015. Experiments on behavioral coordinated control of an unmanned aerial vehicle manipulator system. IEEE Int Conf on Robotics and Automation, p.4680–4685. https://doi.org/10.1109/ICRA.2015.7139848

Baizid K, Giglio G, Pierri F, et al., 2017. Behavioral control of unmanned aerial vehicle manipulator systems. Auton Robot, 41(5):1203–1220. https://doi.org/10.1007/s10514-016-9590-0

Bajcsy A, Herbert SL, Fridovich-Keil D, et al., 2019. A scalable framework for real-time multi-robot, multi-human collision avoidance. Int Conf on Robotics and Automation, p.936–943. https://doi.org/10.1109/ICRA.2019.8794457

Bluethmann W, Ambrose R, Diftler M, et al., 2003. Robonaut: a robot designed to work with humans in space. Auton Robot, 14(2):179–197. https://doi.org/10.1023/A:1022231703061

Bogacz R, Brown E, Moehlis J, et al., 2006. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev, 113(4):700–765. https://doi.org/10.1037/0033-295X.113.4.700

Chen YT, Zhang ZY, Huang J, 2020. Dynamic task priority planning for null-space behavioral control of multi-agent systems. IEEE Access, 8:149643–149651. https://doi.org/10.1109/ACCESS.2020.3016347

Fu HJ, Chen SC, Lin YL, et al., 2019. Research and validation of human-in-the-loop hybrid-augmented intelligence in Sawyer. Chin J Intell Sci Technol, 1(3):280–286 (in Chinese). https://doi.org/10.11959/j.issn.2096-6652.201933

Gans NR, Rogers JG III, 2021. Cooperative multirobot systems for military applications. Curr Robot Rep, 2(1):105–111. https://doi.org/10.1007/s43154-020-00039-w

Graves A, Schmidhuber J, 2005. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neur Netw, 18(5–6):602–610. https://doi.org/10.1016/j.neunet.2005.06.042

Honig S, Oron-Gilad T, 2018. Understanding and resolving failures in human-robot interaction: literature review and model development. Front Psychol, 9:861. https://doi.org/10.3389/fpsyg.2018.00861

Huang J, Zhou N, Cao M, 2019. Adaptive fuzzy behavioral control of second-order autonomous agents with prioritized missions: theory and experiments. IEEE Trans Ind Electron, 66(12):9612–9622. https://doi.org/10.1109/TIE.2019.2892669

Huang J, Wu WH, Zhang ZY, et al., 2020. A human decision-making behavior model for human-robot interaction in multi-robot systems. IEEE Access, 8:197853–197862. https://doi.org/10.1109/ACCESS.2020.3035348

Lee WH, Kim JH, 2018. Hierarchical emotional episodic memory for social human robot collaboration. Auton Robot, 42(5):1087–1102. https://doi.org/10.1007/s10514-017-9679-0

Lippi M, Marino A, 2018. Safety in human-multi robot collaborative scenarios: a trajectory scaling approach. IFAC-PapersOnLine, 51(22):190–196. https://doi.org/10.1016/j.ifacol.2018.11.540

Lippi M, Marino A, Chiaverini S, 2019. A distributed approach to human multi-robot physical interaction. IEEE Int Conf on Systems, Man and Cybernetics, p.728–734. https://doi.org/10.1109/SMC.2019.8914468

Mnih V, Kavukcuoglu K, Silver D, et al., 2015. Human-level control through deep reinforcement learning. Nature, 518(7540):529–533. https://doi.org/10.1038/nature14236

Mo ZB, Zhang ZY, Chen YT, et al., 2022. A reinforcement learning mission supervisor with memory for human-multi-robot coordination systems. Proc Chinese Intelligent Systems Conf, p.708–716. https://doi.org/10.1007/978-981-16-6320-8_72

Moreno L, Moraleda E, Salichs MA, et al., 1993. Fuzzy supervisor for behavioral control of autonomous systems. Proc 19th Annual Conf of IEEE Industrial Electronics, p.258–261. https://doi.org/10.1109/IECON.1993.339071

Queralta JP, Taipalmaa J, Pullinen BC, et al., 2020. Collaborative multi-robot search and rescue: planning, coordination, perception, and active vision. IEEE Access, 8:191617–191643. https://doi.org/10.1109/ACCESS.2020.3030190

Robla-Gómez S, Becerra VM, Llata JR, et al., 2017. Working together: a review on safe human-robot collaboration in industrial environments. IEEE Access, 5:26754–26773. https://doi.org/10.1109/ACCESS.2017.2773127

Rosenfeld A, Agmon N, Maksimov O, et al., 2017. Intelligent agent supporting human—multi-robot team collaboration. Artif Intell, 252:211–231. https://doi.org/10.1016/j.artint.2017.08.005

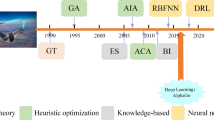

Wang HN, Liu N, Zhang YY, et al., 2020. Deep reinforcement learning: a survey. Front Inform Technol Electron Eng, 21(12):1726–1744. https://doi.org/10.1631/FITEE.1900533

Watkins CJCH, Dayan P, 1992. Q-learning. Mach Learn, 8(3–4):279–292. https://doi.org/10.1007/BF00992698

Zhang KQ, Yang ZR, Başar T, 2021. Decentralized multi-agent reinforcement learning with networked agents: recent advances. Front Inform Technol Electron Eng, 22(6):802–814. https://doi.org/10.1631/FITEE.1900661

Zheng NN, Liu ZY, Ren PJ, et al., 2017. Hybrid-augmented intelligence: collaboration and cognition. Front Inform Technol Electron Eng, 18(2):153–179. https://doi.org/10.1631/FITEE.1700053

Zhou BT, Sun CJ, Lin L, et al., 2018. LSTM based question answering for large scale knowledge base. Acta Sci Nat Univ Pek, 54(2):286–292 (in Chinese). https://doi.org/10.13209/j.0479-8023.2017.155

Author information

Contributions

Jie HUANG and Zhibin MO designed the research. Zhibin MO and Zhenyi ZHANG processed the data and drafted the paper. Jie HUANG and Yutao CHEN helped organize the paper. Jie HUANG, Zhibin MO, and Yutao CHEN revised and finalized the paper.

Ethics declarations

Jie HUANG, Zhibin MO, Zhenyi ZHANG, and Yutao CHEN declare that they have no conflict of interest.

Additional information

Project supported by the National Natural Science Foundation of China (No. 61603094).

List of supplementary materials

Video S1 Behavioral control based on reinforcement learning for human—multi-robot coordination systems

About this article

Cite this article

Huang, J., Mo, Z., Zhang, Z. et al. Behavioral control task supervisor with memory based on reinforcement learning for human—multi-robot coordination systems. Front Inform Technol Electron Eng 23, 1174–1188 (2022). https://doi.org/10.1631/FITEE.2100280

DOI: https://doi.org/10.1631/FITEE.2100280

Key words

- Human-multi-robot coordination systems

- Null-space-based behavioral control

- Task supervisor

- Reinforcement learning

- Knowledge base