Abstract

The FDA Modernization Act 2.0, signed into law by President Biden in December 2022, encourages the use of alternatives to animal testing for drug discovery. Cell-based assays are one important alternative; however, they are currently not fit for purpose. 3D, tissue-engineered models represent a key development opportunity, enabling models of human tissues and organs to be built. However, much remains to be done in understanding the materials, both bio-derived and synthetic, that can be incorporated into these models to provide structural support and functional readouts. This perspective provides an overview of the history of drug safety testing, including a brief account of the origins of the Food and Drug Administration (FDA). It then discusses the current status of drug testing, outlining some of the limitations of animal models. In vitro, cell-based models are discussed as an alternative for some parts of the drug discovery process, with a brief foray into the beginnings of tissue culture and a comparison of 2D and 3D cell culture. Finally, this perspective lays out the argument for incorporating tissue engineering methods into in vitro models for drug discovery and safety testing.

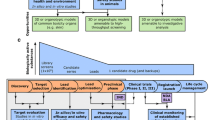

Graphical abstract

Drug safety testing is a long and expensive process. Advanced, tissue-engineered (human) models such as organs-on-chips, spheroids and organoids are higher-throughput methods that can be used to complement, or sometimes replace, the animal models currently used. Made with biorender.com

Introduction

Experimentation on animals has been a feature of medical research for centuries. Describing early experiments carried out at the Royal Society in London in 1667, Robert Hooke expressed his distaste at experimenting on dogs.[1] Clearly, the ethical dilemma surrounding animal experimentation for human gain is not just a feature of the twentieth and twenty-first centuries but has been debated for much longer. It is only in recent decades, however, that a move away from animal experimentation has seemed possible. Indeed, evidence is accumulating to suggest that alternatives to (non-human) animal testing could be more accurate in predicting the toxicity of compounds to humans. In December 2022, I came across the following headline: “The FDA no longer needs to require animal tests before human drug trials”.[2] The article was referring to the FDA Modernization Act 2.0, signed into law by President Biden, which authorizes alternatives to animal testing for drug discovery, encouraging cell-based and in silico approaches. In fact, on closer inspection, the FDA did not remove a requirement but instead made it clearer what is considered an acceptable alternative to animal testing. Now, more than ever, viable, robust alternatives need to be developed. Most likely, some element of animal testing will still be required for many drugs to come to market, although hopefully the scale of this testing will be greatly reduced. The new act is also a call to action for Materials Scientists. In this, the 50th year of the Materials Research Society’s existence, the increasing sophistication of materials and devices being applied to biology and medicine is remarkable.
In my particular area of interest, building tissue-engineered in vitro models for studying disease and screening new therapeutic compounds, the integration of synthetic or bio-derived materials as architectural scaffolds, in devices for reading out cellular function, or even for stimulating cellular function is helping to drive progress. Given novel fabrication methods that allow miniaturisation, multiplexing and additive manufacturing, it is clear to me that Materials Scientists will play an important role in the field of in vitro tissue engineering in the years to come. In this perspective I’ll describe the history of drug safety testing, outlining why it was necessary and how it has evolved over the past ~100 years (see Fig. 1). I’ll then give my take on the early years of in vitro tissue culture (cell growth in the laboratory) and explain why it was essential to integrate lessons from tissue engineers, who were more focused on growing cellular constructs for implantation into the human body. I’ll end with some thoughts on future directions for this very exciting research area and their relevance to Materials Scientists.

A short history of drug safety testing

In the early part of the twentieth century in the United States, it was not uncommon for travelling salesmen or pharmacists to promote wonder cures or beauty-enhancing products, correctly identified by some savvy members of the public as snake oil. Others, however, were duped by everything from eyelash dyes (Lash-Lure) that caused horrific blisters, ulcers and, for some, even blindness, to radium-containing tonics claimed to cure tuberculosis. The Elixir Sulfanilamide scandal, however, was the final nail in the coffin that led to the modern FDA, the Food and Drug Administration.[3] One salesman in Tennessee, realising that customers preferred a liquid dose to a tablet, proposed to his parent company, Massengill, that the powdered sulfanilamide antibiotic be dissolved in a solvent. Sulfanilamide is poorly soluble in water, so a different solvent was chosen: diethylene glycol, a close chemical relative of ethylene glycol (more commonly known as antifreeze) and, like it, toxic. Because the solvent was not very palatable, a raspberry flavouring was added. Following sales of the Elixir, 107 people died after suffering nausea and vomiting followed by kidney failure. After a public outcry, the Federal Food, Drug, and Cosmetic Act was signed into law by Franklin D. Roosevelt the next year. The company’s chief chemist, who had formulated the Elixir, committed suicide. The company, however, admitted no fault, since what it had done was not strictly illegal at the time (Fig. 2).[4]

From left to right: the Elixir Sulfanilamide bottle; a diet cure involving sanitized tapeworms; the Lash-Lure packaging (top) and an FDA campaign poster from the 1950s dispelling rumours from “quacks” about malnutrition (bottom); and a poster from the US federal civil service highlighting the role of Frances Kelsey of the FDA in saving lives. From FDA/Flickr.

The FDA was set up primarily as a consumer protection agency, while in Europe the European Medicines Agency (EMA) was set up in 1995 to harmonise and simplify drug approval processes across member states. Drugs marketed in both regions typically require approval from both the EMA and the FDA, although some efforts are afoot to avoid redundancy, as the two approval processes have a lot in common.[5] During the recent pandemic caused by the SARS-CoV-2 virus, the procedures for approving new medicines, and crucially vaccines, were hugely accelerated: between 2011 and 2020 the median time for vaccine approval at the FDA was around 12 months, whereas during the pandemic the median review time was 21 days, thanks to Emergency Use Authorizations.[6] Despite much political wrangling, with different countries backing different vaccines and politicians weighing in on the safety of different formulations, a huge number of people were vaccinated.[7] The post-pandemic era is set to be hugely exciting, not least because of the acceleration of some drug safety testing processes in emergency situations and the development of new nucleic acid-based vaccines, which could revolutionise healthcare if we can only figure out how to deliver these medicines safely and specifically in humans.
Back at the beginning of the last century, Paul Ehrlich, a pioneer of the magic bullet approach (die Zauberkugel) to developing medicines, had shown that different dyes, often taken from the cloth-dyeing industry, could bind to specific molecules in the cell, providing contrast and allowing different subcellular compartments to be distinguished.[8] Ehrlich looked to nature, observing: “antitoxins and antibacterial substances are, so to speak, charmed bullets which strike only those objects for whose destruction they are produced by the organism.”[9] Since then, perhaps as Ehrlich foresaw, medicines have moved from small chemical molecules to the newer biologics, which include biopolymers, proteins, nucleic acids and even cells. Ehrlich’s magic bullet concept still holds, however, and although biologics (generally defined as biomolecules larger than 1 kDa that are used as therapeutics) have an enormous capacity for the specificity he longed for, that specificity still has to be tested very carefully.

The human organism is enormously complicated. While it may be appropriate to take a cell in isolation and study how it grows, or how a chemical molecule might enter and interact with it, it is quite a stretch to claim that this represents how the molecule will interact with all cells, or even with the same cell once placed in the context of the body. The chemical or biological molecule must also be carefully characterised to ensure that it is always the same. The thalidomide scandal of the early 1960s is an example: a drug formulation given to pregnant women to relieve nausea caused horrifying birth defects. Babies born to mothers who had been given the anti-emetic often suffered from malformed limbs and heart defects, and tragically a large percentage of them died at birth.[10] Frances Kelsey, a reviewer at the FDA, had repeatedly asked for safety evidence on the drug, developed by the German company Grünenthal, notably regarding its use in pregnancy, and, not receiving it, refused to approve the drug for use in the USA. When the scandal broke in 1961, showing that thalidomide use was linked to birth defects, Kelsey’s vigilance was vindicated, and the US Congress passed stricter laws to further strengthen drug regulation. This again highlights the FDA’s role as a consumer protection agency rather than simply a regulatory body. From a scientific point of view, thalidomide is an interesting example, as it exists in two forms (enantiomers), each the mirror image of the other. The commonly accepted view was that while one form (R) was useful as a sedative, the other (S) was teratogenic, causing birth defects. Until this scandal broke, drug formulations had been developed without concern for separating mixtures of chiral, or mirror-image, molecules.
This version of events is disputed, with other reports showing that both enantiomers are equally toxic, but it nevertheless raised the issue that enantiomers could have different effects.[11] After the thalidomide disaster, pharmaceutical companies were required to separate the enantiomers and test them individually and as mixtures. The realisation that enzymes and biological receptors, the targets of many drugs, are specific enough to distinguish between molecules of identical chemical composition that differ only in being mirror images of each other was also an enormous opportunity to achieve even more exquisite targeting of biological processes. Chiral drugs are still seen as potentially risky, as some can switch between forms.[12]

From 1938, when the FDA mandated that drugs be tested on animals for safety and efficacy (given a lack of viable alternatives) before progressing to human trials,[13] until December 2022, it was not possible to have a medicine approved without going through animal testing. Just before the turn of this century, however, a distinction was drawn between medicines and cosmetics and, starting with the UK in 1998, many countries began to ban the testing of cosmetics on animals. Particularly cruel forms of this testing, such as the Draize irritancy tests developed by the FDA in 1944,[14] were a touch paper for animal rights activists and animal lovers alike. Unlike that for medicines, the justification for testing cosmetics on animals was not strong enough to withstand a barrage of negative attention. Between 1998 and today, a flurry of jurisdictions (including Mexico, Brazil, India and the EU) have banned cosmetics developed using animal testing. In the US, at the time of writing, 10 states have banned cosmetics tested on animals, and the federal Humane Cosmetics Act was reintroduced in Congress in 2021. However, this could not have happened without the parallel development of lab-based models to replace animal testing. On the subject of eye irritancy, the OECD (Organisation for Economic Co-operation and Development) approved non-animal eye irritancy and skin allergy tests in 2022 as alternatives to the Draize tests. Various agencies, banded together in the International Cooperation on Alternative Test Methods (ICATM),[15] have championed alternative methods, and scientists have come up with replacement tests, often using live human cells, that effectively assess skin and eye toxicity. Thanks to these efforts, the testing of cosmetics on animals has been relegated to a dusty storage container, hopefully never to be reopened. The testing of drugs for healthcare, however, is another matter.
The new law signed by President Biden in December 2022 means that animal tests no longer have to precede human clinical trials in the US. However, many critics have argued that alternative methods for screening compounds for safety are still in their infancy and not yet suitable. There is thus an urgent call to develop effective and appropriate alternatives that can replace at least some aspects of animal testing. Thankfully, a growing number of in vitro tests have been approved for very specific purposes, and these can be built upon to expand the repertoire of available assays. A likely future scenario is that animal tests will be replaced not by a single in vitro test but by a series of tests, each designed to evaluate an individual safety or toxicity concern. A note of caution, however: the criticisms levelled at animal studies, such as lack of reproducibility, poor experimental methods, experimental bias and unclear genetic background, can also be applied to in vitro testing.[16] To quote DG Altman, “we need less research, better research, and research done for the right reasons”.[17]

The current status of drug safety testing

For a drug to be approved in the US, the FDA typically requires toxicity tests in a rodent species, e.g., mice or rats (sometimes involving hundreds of animals), followed by one non-rodent species such as a dog or a non-human primate (usually tens of animals). Provided the compound is shown to be non-toxic, testing can then move into clinical trials, first at small scale (again, tens of volunteers), then at large scale (hundreds of volunteers). However, fewer than 10% of compounds that enter clinical trials are successful,[18] with >30% failing in late-stage clinical trials.[19] The result is an extremely lengthy and expensive process, with estimated costs of around $2.6 billion and 12 years per drug.[20,21] This situation is perplexing enough to have earned its own law, Eroom’s law (Moore spelled backwards), so called because the number of new drugs approved by the FDA per billion US dollars of (inflation-adjusted) R&D spending has halved roughly every 9 years since 1950.[22] Many point to inefficiencies in the drug discovery process, with basic errors such as underappreciating the physicochemical properties of drugs and underpredicting safety-related failures.[23] A typical drug development process involves 4–5 years of in vitro testing followed by extensive animal testing; however, this combination often leads to dead ends, with both false negatives and false positives. As the field moves increasingly into the development of biologics, which may, as Ehrlich predicted, have greater specificity, it will be interesting to see whether the poor success rate for drug approval changes with the move away from small molecules. At present, however, biologics represent a small fraction of approved drugs, so more data are needed.
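Eroom’s law describes a simple exponential decay, which may be easier to grasp numerically. The short Python sketch that follows is illustrative only: it assumes a clean halving every 9 years from a 1950 baseline normalised to 1.0, i.e. E(t) = E(1950) × 0.5^((t − 1950)/9), and the names `relative_efficiency` and `fold_decline` are hypothetical helpers, not taken from the cited work.

```python
# Minimal sketch of Eroom's law as an idealised exponential decay.
# Assumption: R&D efficiency (new drugs approved per inflation-adjusted
# billion US dollars) halves every HALVING_YEARS years from a 1950 baseline.
# The baseline value of 1.0 is a normalisation, not real data.

HALVING_YEARS = 9.0
BASELINE_YEAR = 1950


def relative_efficiency(year: float) -> float:
    """Return R&D efficiency in `year` relative to the 1950 baseline (= 1.0)."""
    elapsed = year - BASELINE_YEAR
    return 0.5 ** (elapsed / HALVING_YEARS)


def fold_decline(start_year: float, end_year: float) -> float:
    """How many times lower efficiency is at end_year than at start_year."""
    return relative_efficiency(start_year) / relative_efficiency(end_year)


if __name__ == "__main__":
    for year in (1950, 1959, 1968, 1986, 2010):
        print(f"{year}: {relative_efficiency(year):.4f}")
    print(f"Fold decline 1950-2010: {fold_decline(1950, 2010):.0f}x")
```

Under this idealised model, a 60-year span (1950 to 2010) corresponds to a decline of 2^(60/9), roughly 100-fold; real approval data are noisier, so the figure is best read as an order of magnitude rather than a measurement.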
A strong economic and sustainability argument can be made that the process needs a major overhaul, and indeed the pharmaceutical industry has made some inroads into breaking Eroom’s law.[24] Analysts and industry have identified the most common reasons for attrition in the drug discovery process as a lack of efficacy of potential drugs and a lack of safety, resulting from poorly predictive pre-clinical models that are unable to demonstrate the drug’s mechanism of action.[25] Two of the priority areas for pharma are oncology and central nervous system disorders, both notoriously difficult to model adequately in small animals. Although the non-human primate brain is a better model of the human brain than the rodent brain, there are still significant differences in brain organisation and size (see Fig. 3).[26,27] Another crucial difference lies in the immune systems of laboratory animals, which differ markedly from that of humans, not only because the animals are bred for laboratory use but, more fundamentally, because of the types of cells present and their response to immune challenge.[28] The average rate of successful translation from animal studies to clinical cancer trials is particularly poor, at 8%.[29] Among the many differences between animals such as rodents and humans, one important yet overlooked difference is that mice and rats are nocturnal, a fact that is rarely taken into account despite a newfound appreciation of the role of chronobiology.[30] Increasingly, the use of animals in research is being questioned with a view to improving its utility and predictive power for human medicine, through more stringent experimental design that eliminates heterogeneity arising from factors such as the animals’ strain, housing conditions and even sex. Until recently, the exclusive use of male animals was common practice because of hormonal fluctuations in female animals.
In neuroscience studies in particular, this practice was justified by scientists who claimed that experiments using female animals were more complicated and time-consuming.[31]

Cartoon depicting size and life-span differences between humans and animal species typically used in drug testing (left). Images comparing the brains of a human, a non-human primate (macaque) and a mouse in terms of size and organisation (right). (A) Images of the dorsal surface of a human brain, macaque brain and mouse brain. Notice the striking differences in the size and convolution complexity of the cerebral cortex across the 3 species. Copyright (2019) National Academy of Sciences, reproduced from Ref. 26. (B) Coronal Nissl-stained sections of hippocampus-containing tissue in the 3 species. Copyright: © 2011 DeFelipe, reproduced from Ref. 27.

Are in vitro models of human biology a viable alternative?

In 2006, eight healthy human volunteers were enrolled in a Phase I clinical trial of a new drug, TGN1412, a monoclonal antibody designed to directly stimulate T cells.[32] In pre-clinical models, no adverse toxicity or pro-inflammatory effects had been observed. Within 90 min of the compound being infused, the volunteers who had received it began to experience effects such as headache and nausea, despite a dose ~500 times lower than that found to be safe in animal studies. Twelve hours later, all six volunteers who had received the drug were critically ill; the other two had received a placebo. The six affected volunteers were all found to have suffered a rapid and massive release of pro-inflammatory cytokines. Thankfully, after treatment, all six survived, but the incident highlighted the risks in the transition from the pre-clinical to the clinical phases of a trial. Following this disaster, the FDA introduced mandatory in vitro cytokine release assays to evaluate compounds that might over-stimulate the immune system.[33]

There are, of course, other reasons why compounds fail to be approved. Harking back to Paul Ehrlich’s magic bullet concept, therapeutic agents need to have high specificity for a target and low toxicity. Many tissue engineering and in vitro model developers have adopted George Box’s well-known aphorism: “All models are wrong, but some are useful.” More precisely, Box cautioned that scientists should be aware of the limitations of any given model.[34] This is very useful advice for developing in vitro models for drug discovery or toxicology monitoring. Specific models can be developed to understand different features of the adverse or off-target effects of particular compounds. Used in sequence after simpler, higher-throughput in vitro models, well-designed in vitro tissue models can inform the drug development process. Because human cells are used, species-specific effects can be identified and weighed against animal data. The 3Rs agenda of reducing, refining and replacing animal use will be propelled forward by effective in vitro models. Although in vitro models can be extremely useful, systemic effects are particularly difficult to assess, for example what pharmacologists call ADME (absorption, distribution, metabolism, excretion), in which a compound enters the body across a biological barrier (e.g., the intestine), is distributed in the bloodstream, may be metabolised by the liver, and is ultimately excreted by the kidney. Until the very adventurous DARPA-sponsored body-on-chip programs were launched in 2012, animal experiments were the only way to study this. Now, various systems have been developed that offer (micro)fluidic connections between chambers on chips, each chamber representing a different organ. Such chips are now in the commercial arena, with companies such as Emulate, Mimetas and CN Bio Innovations leading the way in making microphysiological systems (MPS) available for purchase.
Both single- and multi-organ chips have been shown to be capable of accurately modelling human organ physiology, even when compared with spheroids or organoids. The inclusion of mechanical stimuli such as shear stress, extracellular matrix components (gels) and multiple representative cell types has been shown to make cells behave in a functionally more in vivo-like manner, leading to more accurate toxicology predictions.[35] A landmark paper published by Emulate in collaboration with Donald Ingber’s group at the Wyss Institute in Boston showed that a liver-on-chip could predict liver toxicity with exceptionally high specificity and sensitivity.[36] The paper, published in December 2022, nicely coincided with the FDA’s announcement.
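Sensitivity and specificity, the two metrics used to judge the liver-chip predictions, can be made concrete with a small calculation. The Python sketch that follows assumes a simple binary classification (each compound is predicted toxic or non-toxic and compared with its known human outcome); the class and function names and all of the counts are hypothetical and are not the data from the cited study.

```python
# Minimal sketch: sensitivity and specificity of a binary toxicity predictor.
# The counts used below are hypothetical, NOT data from the liver-chip study.
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    true_positives: int   # toxic compounds correctly flagged as toxic
    false_negatives: int  # toxic compounds the model missed
    true_negatives: int   # non-toxic compounds correctly passed
    false_positives: int  # non-toxic compounds wrongly flagged as toxic


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of truly toxic compounds the model flags: TP / (TP + FN)."""
    return c.true_positives / (c.true_positives + c.false_negatives)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of truly non-toxic compounds the model passes: TN / (TN + FP)."""
    return c.true_negatives / (c.true_negatives + c.false_positives)


if __name__ == "__main__":
    # Hypothetical screen of 20 reference compounds with known human outcomes.
    counts = ConfusionCounts(true_positives=8, false_negatives=2,
                             true_negatives=9, false_positives=1)
    print(f"sensitivity = {sensitivity(counts):.2f}")
    print(f"specificity = {specificity(counts):.2f}")
```

With these made-up counts the script prints a sensitivity of 0.80 and a specificity of 0.90. For safety screening the two metrics matter in different ways: high sensitivity means few toxic compounds slip through to patients, while high specificity means few potentially useful compounds are discarded as falsely toxic.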

In vitro tissue culture; where it started and where it is now

For more than a century, cell biologists have enabled disease modelling and target discovery by cultivating live cells on 2D supports. This approach has yielded many advances; however, there is a disconnect between the pre-clinical and clinical stages of drug discovery, resulting in huge attrition in the number of therapeutics reaching patients. One major contributing factor is that 2D tissue culture does not accurately reflect the biological context from which the cells were originally taken (see Fig. 4). A quick foray into the origins of tissue culture helps to explain why cells were grown as 2D monolayers rather than as the more complex 3D tissues from which they originate.

Cells grown on a 2D support (left) experience very different cues from cells grown on a 3D support (e.g. a hydrogel or a scaffold; right). The 3D support mimics the biological environment underlying tissues, typically made up of extracellular matrix (ECM), to which cells can bind and through which they can even migrate in three dimensions (x, y, z). In 2D, cell movement is limited to two dimensions (x, y). Figure created in biorender.com.

When the first attempts were made to take cells from a mammal and cultivate them in the lab, there were many hurdles to overcome. Nowadays, looking at the gleaming stainless steel laminar flow hoods used to ensure sterility in the laboratory, it is hard to believe that early scientists were able to keep anything alive, much less avoid contamination from microorganisms circulating in the air or present on surfaces. Ross Granville Harrison, a pioneering embryologist working at Johns Hopkins Medical School, was one of the first to grow cells successfully outside the body. This resulted in the launch of a new discipline, called tissue culture. Growing cells in vitro (literally, “in glass”) meant that cells could be studied in isolation, outside the organism, allowing the functions of different cell types to be disentangled, in a sense. Thanks to the efforts of Harrison and others, enormous progress was made in understanding cell biology. Harrison was working on a theory of neuronal growth. Several competing theories prevailed at the time about how nerve cells grew and connected to each other, forming the complicated networks we now know are crucial for the complex functions of our nervous systems. Harrison’s breakthrough was the development of a new experimental method that allowed neurons to be dissected from an embryo and grown in the lab. He encountered many difficulties. In his ground-breaking paper, published in 1910 in the Journal of Experimental Zoology,[37] Harrison describes in painstaking detail how he overcame three main problems. The first was how to keep the explants alive. Initial attempts with a saline solution failed. The dissected tissues were suspended in a droplet on a thin piece of glass used for microscopy, known as a cover slip. The cover slip was placed on the stage of a microscope with the hanging droplet suspended on its underside. However, the tissue did not survive.
It was only when Harrison hit on the idea of using “lymph” from adult frogs that he made progress. Lymph is a fluid, not unlike blood, that bathes tissues and contains many different biomolecules, including, importantly, a biological polymer known as fibrinogen. Also found in blood, fibrinogen is involved in clotting. By using lymph, Harrison provided a liquid medium that allowed the explanted tissues to grow, because it contained biomolecules the cells needed to survive and thrive. However, even though he started with a liquid, components of the lymph changed it in a very important way, serendipitously helping with the second major issue: that of a support along which the nerve cells could sprout and elongate their nerve fibres. He noted that “one of the necessary conditions of outgrowth is in all probability a medium which affords some solid support to the fibres.” Fibrinogen forms clots made of tiny rope-like fibres that together form a jelly-like substance. The nerve cells added to this mixture were able to grow happily along these fibres, using them as a solid support. Harrison observed that when the tissue was “implanted in the lymph it shows a tendency to spread out… into a single layer of cells”. Other experiments using gelatine were unsuccessful. Although gelatine is a bio-derived product, it is a highly processed (boiled) biopolymer derived from collagen, in which the original proteins are denatured almost beyond recognition, at least to a cell. It does, however, retain its ability to gel, and is often used as a gelling agent, much as pectin is used in jam making. Amazingly, Harrison hit upon a method that was reintroduced many years later, presaging the idea of mechanotransduction, whereby cells respond to the stiffness of a surface. Had he tried to grow the cells directly on glass, he might not have succeeded. The gelatine experiments demonstrated that a physical support alone was insufficient; it had to be combined with the active biomolecules.
In later years, scientists realised that they could grow cells on glass surfaces, but in many cases a biomolecule coating was required to render the surface more attractive to cells. A third breakthrough, which may seem somewhat mundane to the modern scientist, was sterilisation. Despite taking immense care from dissection through to mounting the sample on the cover slip, Harrison experienced many failures and recorded very precisely the discovery of both bacteria and fungi in his samples. His solution was to wash the tissues repeatedly in sterile water and to sterilise, with dry heat, every single material used for dissecting and preparing the tissue mounts. In an address to the American Society of Anatomists in 1912, Harrison, championing the new science of in vitro cell culture, begged to be freed from the “concept of the domination of the organism as a whole”. He hoped to use his experimental method to study cells in isolation and so understand their function.[38]

The result of his efforts was a hugely exciting period. Harrison unleashed what he later called a Gold Rush of scientists thronging to explore the new techniques of tissue culture. Indeed, Johns Hopkins became an epicentre for the initial explorations in tissue culture, first with Montrose Burrows, who graduated from Johns Hopkins Medical School in 1909, later corresponded with Harrison and in some ways became his academic successor in the field.[39] Around the same time, the husband-and-wife team Margaret and Warren Lewis brought their knowledge of embryology and of developing media for organ culture to bear on the field. Following Warren Lewis’s retirement in 1937, his direct academic successor in tissue culture was George Gey. Gey, in his turn, worked closely with his wife Margaret Gey for nearly 40 years, setting up a tissue culture laboratory and establishing multiple normal and malignant cell types, including the infamous HeLa cells, which they showed could be infected with the poliomyelitis virus, later enabling production of a vaccine. Their work was highlighted in “The Immortal Life of Henrietta Lacks” by Rebecca Skloot. Consent is a central theme of the book: Henrietta Lacks’s cells were removed and turned into a formidable research tool, yet she was never consulted.[40] Since then, significant legislation has been introduced to protect patients, ensuring that consent is obtained and that the donor’s privacy is protected.

The development of tissue culture as a discipline was a massive breakthrough that facilitated huge numbers of biomedical advances. In the 1940s, Wilton Earle at the US National Cancer Institute provided painstaking detail on methods for sterilising media, preparing substrates and observing cells, including their spontaneous transformation into malignant cells, resulting in some cases in what we now call cell lines.[41] Earle and colleagues created a fibroblast cell line in 1943 (Fig. 1). Cell lines are a tool we now take somewhat for granted, but their ability to grow and divide continuously was a massive boon to researchers attempting to understand the functions of cells. For about 50 years, from the 1950s until around 2000, the standard approach was to grow cells as 2D monolayers on rigid plastic or glass surfaces, using complicated media formulations, often specific to each cell type. One major advantage of, and possibly driver for, the monolayer approach was that the cells could be easily observed under a microscope. The downside, however, was that the way cells were grown in vitro diverged from the understanding of how cells grow in vivo.

How does tissue engineering help with all of this?

Accounts of organ transplantation can be traced back to Galen in Rome, early Christian mythology and Arab scholars.[42] Fascinating as these accounts are, all but the simplest transfers of biological tissue were unsuccessful. Successful operations included the transfer of skin from one part of a patient’s body to another, wounded part (an autograft), such as the work of Tagliacozzi in sixteenth-century Italy, a pioneer in reconstructing severed noses. Other examples include early blood transfusions in the 1660s in France, where physicians serendipitously transferred blood of the same type from a human donor to a patient. Meanwhile, early physicians were developing materials for implantation, but here the focus was very much on architecture and shape. Early dental implants were shaped to fit the socket that had housed the rotten or diseased tooth, sometimes using an inert material like gold but often using teeth from a donor. From prosthetic limbs to dental implants, materials technology improved, starting from a very limited range of options: wood, for a start, as a material that could be shaped easily, and substances like catgut or silk for sutures. Gradually, however, the idea of regenerative medicine arose, using donor tissues or cells for implantation. This of course raised a major issue: incompatibility between the donor’s tissue and the patient. It was not until 1967, with the first successful heart transplant in South Africa, that some of these issues began to be solved, albeit slowly. Of course, a massively limiting factor was the lack of a ready source of compatible organs for transplantation.

Given the success of in vitro tissue culture, it is not surprising that attention turned to the possibility of generating tissues and even organs in the laboratory. Tissue engineering arose from the frustration of scientists and clinicians faced with a lack of donor material, rejection of implanted tissues due to incompatibility, and the general inability to have tissues available on demand. This was beautifully put by Robert Langer and Joseph Vacanti in their 1993 article in Science: “The loss or failure of an organ or tissue is one of the most frequent, devastating, and costly problems in human health care. A new field, tissue engineering, applies the principles of biology and engineering to the development of functional substitutes for damaged tissue.” The idea was to standardise some of the materials involved, integrating techniques to grow tissues in the laboratory and then to scale up production. To regenerative medicine specialists, it was naturally inconceivable that monolayers of cells adapted to a petri dish could be used; they were looking to tissue structure and shape and attempting to model it. It is generally accepted that a scaffold or template is needed to build a tissue in 3D. Using ready-made tissue, excised from a donor and used as is, is medicine; building a tissue from scratch, however, requires engineering. Biomimicry, the copying of a biological process or structure, is at the heart of tissue engineering, which strives to make tissues that look like human tissues (or indeed those of other animals or organisms, depending on the interest). Sometimes compromises are made because of the availability or source of a material; sometimes additional functionality is added.
Mimicking biological phenomena found in nature is the goal, so it should come as no surprise that the first stage in designing tissues should be a comprehensive study of the tissue as found in the body, followed by an attempt to copy it as faithfully as possible, complexity included. The road that in vitro tissue culture took, optimising the growth of cells in rigid, flat dishes with complicated cocktails of media, represents a major detour. Rather than revealing how things work in the body, in vitro tissue culture may often tell us only what happens when we grow cells in a dish, much in the same way that mouse studies tell us how to cure cancer in mice.

In 2006, reflecting on “The History of Tissue Engineering”, Charles Vacanti defined the modern field, distinguishing it from earlier work.[43] According to Vacanti, the first recorded use of the term tissue engineering, as it is used today, was in a manuscript entitled “Functional Organ Replacement: The New Technology of Tissue Engineering”. Vacanti distinguished this new technology from the use of prosthetic devices and the “surgical manipulation of tissues”. For Vacanti, tissue engineering involved the growth of new tissue using biologics, usually supported by “appropriate scaffolding material”. Here, “biologics” refers to biological materials, which could include cells or other biomolecules such as proteins.

Vacanti’s own lab had achieved fame (or perhaps notoriety) for images of the “mouse with the human ear”: a scaffold in the shape of an ear, loaded with cells from a calf and then surgically implanted onto the back of a laboratory mouse (Fig. 5).[44] As an early prototype, the ear on the mouse’s back typified the main drivers of the field of tissue engineering. A scaffold made of a synthetic polymer, poly(glycolic acid) (PGA), provided support. Chondrocytes, the cells that grow in cartilage tissue such as that found in the ear, were encouraged to grow into and fill the scaffold. The construct was placed in an environment where the cells could grow (the back of the mouse), generating cartilage and resulting in what looked like an intact ear. Because the scaffold was made of a biodegradable material, it could gradually degrade over time and be replaced by the newly forming cartilage. The promise of such implants was quickly realised when a young boy in Boston had his missing sternum replaced with a tissue engineered construct built on the same principles, although, importantly, using his own chondrocytes rather than ones from a calf.[43]

We are now closer to that goal than Vacanti and co-workers were in the late 20th century, but there is still a long way to go. Despite its early and continued promise, tissue engineering has yet to deliver the dividends promised at its inception at the end of the last century. However, many engineered tissues have huge utility in developing more complex, physiologically authentic models of human biology. An added advantage is that the more in vitro models are studied, the more likely it is that issues around material biocompatibility, compatibility with host immune systems and adhesion to target tissue in a potential patient will be solved. For now, advanced tissue engineered models of human biology can fill the gap I identified at the start of this article: complementing very simple 2D in vitro models and providing more accurate, predictive models for drug discovery and safety testing before progression to clinical trials. Early examples of tissue engineered models in vitro focussed on skin and retina, and indeed these are among the first to have been approved as alternatives.[45,46] In recent years, exciting examples of advanced tissue engineered models have emerged, exploiting key advances in cell biology such as human induced pluripotent stem cells to develop different cell lineages from a single genetic background,[47] the use of organoids[48] and even the inclusion of the microbiome.[49] We recently reviewed advanced tissue engineered human models[50] and highlighted the need to integrate adapted technology, be it fluidics to connect multiple organs on a chip[51] or electronics for monitoring tissue function.[52]

Interfacing tissue engineering with organ-on-chip

Where to now? Materials Scientists have an important role to play in tissue engineering. In a previous special issue of MRS Communications, published in 2017, I highlighted the need for the development of materials for 3D biology.[53] Now, on the occasion of the 50th anniversary of the Materials Research Society, I would like to reiterate that call, but this time to point out that materials science has an essential role to play in developing the structures and supports that will enable tissue engineering, and in particular in vitro tissue engineering. The benefits are twofold: such work is extremely useful for generating implants, fulfilling the vision of Langer, Vacanti and others, but it also has a place in drug discovery and toxicity testing. Advanced, tissue engineered models of human biology need to be developed to replace unnecessary and ineffective animal testing, but also to supplement existing 2D cell-based assays in the drug discovery pipeline. Materials Scientists understand materials, whether bio-derived or synthetic, and their input is essential. Other crucial areas for input are the systems and devices that enable flow, oxygen gradients, monitoring and more. It remains to be seen whether in vitro models will be able to capture effects such as chronobiology, gender and immune system differences. However, as mentioned before, specific, individual multicellular models can be used to gain mechanistic understanding of biological processes, which can then inform and refine further experiments on animals or human clinical trials. Pioneering work by Materials Scientists has shown how recapturing the physical and chemical environment of a tissue can induce stem cells to differentiate, generating many of the cell types found in vivo.
Indeed, rather than directing cell fate with biochemical cues alone, physical cues such as the shape of a tissue engineered scaffold, and flow, can reproduce biological phenomena such as epithelial cell renewal and stem cell migration. This was recently demonstrated elegantly by Lutolf and colleagues in the context of in vitro models of the human intestine.[54,55] Vascularisation of tissue in vitro provides the opportunity to observe molecules transferring from one compartment to another, modelling the movement of cells (e.g., immune cells) and small molecules, while shear stress induced by flow can drive cell differentiation.[56] Again, the key seems to be growing human-derived stem cells within soft matrices, such as extracellular-matrix-derived materials, while ensuring that cells receive appropriate biochemical, physical and chemical cues. A key challenge here is the low throughput and high cost of such models. A typical multi-organ model may take 3–4 weeks to develop, requiring off-chip or on-chip pumps and expensive cell culture reagents. Additional issues include the use of animal-derived media components and extracellular matrix molecules, whereas a human-only model must be the eventual goal. Materials science thus has enormous potential to help fulfil the promise of reducing animal experimentation and making more human-relevant models for drug discovery and safety testing. Input is urgently required in (1) developing tissue engineered models, (2) developing devices and structures to increase throughput and reduce cost, and (3) developing new, synthetic, defined materials to replace animal-derived components. Lessons learned from physical models will be greatly complemented by in silico techniques, amplifying their impact. Materials Scientists, working in an interdisciplinary framework, are central to the success of this effort.

Data Availability

This is a perspective; there are no associated data.

References

R. Hooke, An account of an experiment made by M. Hook, of preserving animals alive by blowing through their lungs with bellows. Philos. Trans. R. Soc. Lond. 2, 539–540 (1667)

FDA no longer needs to require animal tests before human drug trials. https://www.science.org/content/article/fda-no-longer-needs-require-animal-tests-human-drug-trials (Accessed 07 Mar 2023).

J.G. West, The Accidental Poison That Founded the Modern FDA. The Atlantic. https://www.theatlantic.com/technology/archive/2018/01/the-accidental-poison-that-founded-the-modern-fda/550574/ (Accessed 21 Feb 2023).

Elixir sulfanilamide—massengill. Calif. West. Med. 48(1), 68–70 (1938)

G.A. Van Norman, Drugs and devices: comparison of European and US approval processes. JACC Basic Transl. Sci. 1(5), 399–412 (2016). https://doi.org/10.1016/j.jacbts.2016.06.003

M.P. Lythgoe, P. Middleton, Comparison of COVID-19 vaccine approvals at the US food and drug administration, European medicines agency, and health Canada. JAMA Netw. Open 4(6), e2114531 (2021). https://doi.org/10.1001/jamanetworkopen.2021.14531

S. Boseley, How vaccine approval compares between the UK, Europe and the US. The Guardian (4 December 2020). https://www.theguardian.com/world/2020/dec/04/how-vaccine-approval-compares-between-the-uk-europe-and-the-us (Accessed 07 Mar 2023).

F. Bosch, L. Rosich, The contributions of Paul Ehrlich to pharmacology: a tribute on the occasion of the centenary of his Nobel Prize. Pharmacology 82(3), 171–179 (2008). https://doi.org/10.1159/000149583

P. Ehrlich, Experimental Researches on Specific Therapeutics (Lewis, London, 1908)

W.G. McBride, Thalidomide and congenital abnormalities. Lancet 278(7216), 1358 (1961). https://doi.org/10.1016/S0140-6736(61)90927-8

S. Fabro, R.L. Smith, R.T. Williams, Toxicity and teratogenicity of optical isomers of thalidomide. Nature 215(5098), 296–296 (1967). https://doi.org/10.1038/215296a0

I. Agranat, H. Caner, J. Caldwell, Putting chirality to work: the strategy of chiral switches. Nat. Rev. Drug Discov. 1(10), 753–768 (2002). https://doi.org/10.1038/nrd915

Office of the Commissioner, Part II: 1938, Food, Drug, Cosmetic Act. FDA (2019). https://www.fda.gov/about-fda/changes-science-law-and-regulatory-authorities/part-ii-1938-food-drug-cosmetic-act

K.R. Wilhelmus, The Draize eye test. Surv. Ophthalmol. 45(6), 493–515 (2001). https://doi.org/10.1016/S0039-6257(01)00211-9

International cooperation on alternative test methods. https://ntp.niehs.nih.gov/whatwestudy/niceatm/iccvam/international-partnerships/icatm/index.html (Accessed 21 Apr 2023).

T. Hartung, Food for thought ... look back in anger – what clinical studies tell us about preclinical work. ALTEX 30(3), 275 (2013)

D.G. Altman, The scandal of poor medical research. BMJ 308(6924), 283–284 (1994). https://doi.org/10.1136/bmj.308.6924.283

A. Mullard, Parsing clinical success rates. Nat. Rev. Drug Discov. 15(7), 447–447 (2016). https://doi.org/10.1038/nrd.2016.136

S. Galson, C.P. Austin, E. Khandekar, L.D. Hudson, J.A. DiMasi, R. Califf, J.A. Wagner, The failure to fail smartly. Nat. Rev. Drug Discov. 20(4), 259–260 (2020). https://doi.org/10.1038/d41573-020-00167-0

A.A. Seyhan, Lost in translation: the valley of death across preclinical and clinical divide – identification of problems and overcoming obstacles. Transl. Med. Commun. 4(1), 18 (2019). https://doi.org/10.1186/s41231-019-0050-7

J.A. DiMasi, H.G. Grabowski, R.W. Hansen, Innovation in the pharmaceutical industry: new estimates of R&D costs. J. Health Econ. 47, 20–33 (2016). https://doi.org/10.1016/j.jhealeco.2016.01.012

J.W. Scannell, A. Blanckley, H. Boldon, B. Warrington, Diagnosing the decline in pharmaceutical R&D efficiency. Nat. Rev. Drug Discov. 11(3), 191–200 (2012). https://doi.org/10.1038/nrd3681

M.J. Waring, J. Arrowsmith, A.R. Leach, P.D. Leeson, S. Mandrell, R.M. Owen, G. Pairaudeau, W.D. Pennie, S.D. Pickett, J. Wang, O. Wallace, A. Weir, An analysis of the attrition of drug candidates from four major pharmaceutical companies. Nat. Rev. Drug Discov. 14(7), 475–486 (2015). https://doi.org/10.1038/nrd4609

M.S. Ringel, J.W. Scannell, M. Baedeker, U. Schulze, Breaking Eroom’s law. Nat. Rev. Drug Discov. 19(12), 833–834 (2020). https://doi.org/10.1038/d41573-020-00059-3

P. Morgan, D.G. Brown, S. Lennard, M.J. Anderton, J.C. Barrett, U. Eriksson, M. Fidock, B. Hamrén, A. Johnson, R.E. March, J. Matcham, J. Mettetal, D.J. Nicholls, S. Platz, S. Rees, M.A. Snowden, M.N. Pangalos, Impact of a five-dimensional framework on R&D productivity at AstraZeneca. Nat. Rev. Drug Discov. 17(3), 167–181 (2018). https://doi.org/10.1038/nrd.2017.244

D.T. Gray, C.A. Barnes, Experiments in macaque monkeys provide critical insights into age-associated changes in cognitive and sensory function. Proc. Natl. Acad. Sci. 116(52), 26247–26254 (2019). https://doi.org/10.1073/pnas.1902279116

J. DeFelipe, The evolution of the brain, the human nature of cortical circuits, and intellectual creativity. Front. Neuroanat. (2011). https://doi.org/10.3389/fnana.2011.00029

Z.B. Bjornson-Hooper, G.K. Fragiadakis, M.H. Spitzer, D. Madhireddy, D. McIlwain, G.P. Nolan, A comprehensive atlas of immunological differences between humans, mice and non-human primates. bioRxiv (2019). https://doi.org/10.1101/574160

I.W. Mak, N. Evaniew, M. Ghert, Lost in translation: animal models and clinical trials in cancer treatment. Am. J. Transl. Res. 6(2), 114–118 (2014)

R.J. Nelson, J.R. Bumgarner, W.H. Walker, A.C. DeVries, Time-of-day as a critical biological variable. Neurosci. Biobehav. Rev. 127, 740–746 (2021). https://doi.org/10.1016/j.neubiorev.2021.05.017

R.M. Shansky, Are hormones a “female problem” for animal research? Science 364(6443), 825–826 (2019). https://doi.org/10.1126/science.aaw7570

G. Suntharalingam, M.R. Perry, S. Ward, S.J. Brett, A. Castello-Cortes, M.D. Brunner, N. Panoskaltsis, Cytokine storm in a phase 1 trial of the Anti-CD28 monoclonal antibody TGN1412. N. Engl. J. Med. 355(10), 1018–1028 (2006). https://doi.org/10.1056/NEJMoa063842

D. Finco, C. Grimaldi, M. Fort, M. Walker, A. Kiessling, B. Wolf, T. Salcedo, R. Faggioni, A. Schneider, A. Ibraghimov, S. Scesney, D. Serna, R. Prell, R. Stebbings, P.K. Narayanan, Cytokine release assays: current practices and future directions. Cytokine 66(2), 143–155 (2014). https://doi.org/10.1016/j.cyto.2013.12.009

G.E.P. Box, Science and statistics. J. Am. Stat. Assoc. 71(356), 791–799 (1976). https://doi.org/10.2307/2286841

D.E. Ingber, Human organs-on-chips for disease modelling, drug development and personalized medicine. Nat. Rev. Genet. 23(8), 467–491 (2022). https://doi.org/10.1038/s41576-022-00466-9

L. Ewart, A. Apostolou, S.A. Briggs, C.V. Carman, J.T. Chaff, A.R. Heng, S. Jadalannagari, J. Janardhanan, K.-J. Jang, S.R. Joshipura, M.M. Kadam, M. Kanellias, V.J. Kujala, G. Kulkarni, C.Y. Le, C. Lucchesi, D.V. Manatakis, K.K. Maniar, M.E. Quinn, J.S. Ravan, A.C. Rizos, J.F.K. Sauld, J.D. Sliz, W. Tien-Street, D.R. Trinidad, J. Velez, M. Wendell, O. Irrechukwu, P.K. Mahalingaiah, D.E. Ingber, J.W. Scannell, D. Levner, Performance assessment and economic analysis of a human liver-chip for predictive toxicology. Commun. Med. 2(1), 1–16 (2022). https://doi.org/10.1038/s43856-022-00209-1

R.G. Harrison, The outgrowth of the nerve fiber as a mode of protoplasmic movement. J. Exp. Zool. 9(4), 787–846 (1910). https://doi.org/10.1002/jez.1400090405

M. Abercrombie, Ross Granville Harrison, 1870–1959. Biogr. Mem. Fellows R. Soc. 7, 110–126 (1961). https://doi.org/10.1098/rsbm.1961.0009

F.B. Bang, History of tissue culture at Johns Hopkins. Bull. Hist. Med. 51(4), 516–537 (1977)

R. Skloot, The Immortal Life of Henrietta Lacks (Macmillan, London, 2011)

W.R. Earle, E.L. Schilling, T.H. Stark, N.P. Straus, M.F. Brown, E. Shelton, Production of malignancy in vitro. IV. The mouse fibroblast cultures and changes seen in the living cells. JNCI J. Natl. Cancer Inst. 4(2), 165–212 (1943). https://doi.org/10.1093/jnci/4.2.165

D. Hamilton, A History of Organ Transplantation: Ancient Legends to Modern Practice (University of Pittsburgh Press, Pittsburgh, 2012)

C.A. Vacanti, The history of tissue engineering. J. Cell. Mol. Med. 10(3), 569–576 (2006). https://doi.org/10.1111/j.1582-4934.2006.tb00421.x

Y. Cao, J.P. Vacanti, K.T. Paige, J. Upton, C.A. Vacanti, Transplantation of chondrocytes utilizing a polymer-cell construct to produce tissue-engineered cartilage in the shape of a human ear. Plast. Reconstr. Surg. 100(2), 297 (1997)

J.R. Yu, J. Navarro, J.C. Coburn, B. Mahadik, J. Molnar, J.H. Holmes IV, A.J. Nam, J.P. Fisher, Current and future perspectives on skin tissue engineering: key features of biomedical research, translational assessment, and clinical application. Adv. Healthc. Mater. 8(5), 1801471 (2019). https://doi.org/10.1002/adhm.201801471

S. Shafaie, V. Hutter, M.T. Cook, M.B. Brown, D.Y.S. Chau, In vitro cell models for ophthalmic drug development applications. BioRes. Open Access 5(1), 94–108 (2016). https://doi.org/10.1089/biores.2016.0008

M.J. Workman, M.M. Mahe, S. Trisno, H.M. Poling, C.L. Watson, N. Sundaram, C.-F. Chang, J. Schiesser, P. Aubert, E.G. Stanley, A.G. Elefanty, Y. Miyaoka, M.A. Mandegar, B.R. Conklin, M. Neunlist, S.A. Brugmann, M.A. Helmrath, J.M. Wells, Engineered human pluripotent-stem-cell-derived intestinal tissues with a functional enteric nervous system. Nat. Med. 23(1), 49–59 (2017). https://doi.org/10.1038/nm.4233

M. Hofer, M.P. Lutolf, Engineering organoids. Nat. Rev. Mater. 6(5), 402–420 (2021). https://doi.org/10.1038/s41578-021-00279-y

S. Jalili-Firoozinezhad, F.S. Gazzaniga, E.L. Calamari, D.M. Camacho, C.W. Fadel, A. Bein, B. Swenor, B. Nestor, M.J. Cronce, A. Tovaglieri, O. Levy, K.E. Gregory, D.T. Breault, J.M.S. Cabral, D.L. Kasper, R. Novak, D.E. Ingber, A complex human gut microbiome cultured in an anaerobic intestine-on-a-chip. Nat. Biomed. Eng. 3(7), 520 (2019). https://doi.org/10.1038/s41551-019-0397-0

C.-M. Moysidou, C. Barberio, R.M. Owens, Advances in engineering human tissue models. Front. Bioeng. Biotechnol. (2021). https://doi.org/10.3389/fbioe.2020.620962

M. Trapecar, E. Wogram, D. Svoboda, C. Communal, A. Omer, T. Lungjangwa, P. Sphabmixay, J. Velazquez, K. Schneider, C.W. Wright, S. Mildrum, A. Hendricks, S. Levine, J. Muffat, M.J. Lee, D.A. Lauffenburger, D. Trumper, R. Jaenisch, L.G. Griffith, Human physiomimetic model integrating microphysiological systems of the gut, liver, and brain for studies of neurodegenerative diseases. Sci. Adv. 7(5), eabd1707 (2021). https://doi.org/10.1126/sciadv.abd1707

C. Pitsalidis, D. van Niekerk, C.-M. Moysidou, A.J. Boys, A. Withers, R. Vallet, R.M. Owens, Organic electronic transmembrane device for hosting and monitoring 3D cell cultures. Sci. Adv. 8(37), eabo4761 (2022). https://doi.org/10.1126/sciadv.abo4761

R.M. Owens, D. Iandolo, K. Wittmann, A (bio) materials approach to three-dimensional cell biology. MRS Commun. 7(3), 287–288 (2017). https://doi.org/10.1557/mrc.2017.102

M. Nikolaev, O. Mitrofanova, N. Broguiere, S. Geraldo, D. Dutta, Y. Tabata, B. Elci, N. Brandenberg, I. Kolotuev, N. Gjorevski, H. Clevers, M.P. Lutolf, Homeostatic mini-intestines through scaffold-guided organoid morphogenesis. Nature 585(7826), 574–578 (2020). https://doi.org/10.1038/s41586-020-2724-8

N. Gjorevski, M. Nikolaev, T.E. Brown, O. Mitrofanova, N. Brandenberg, F.W. DelRio, F.M. Yavitt, P. Liberali, K.S. Anseth, M.P. Lutolf, Tissue geometry drives deterministic organoid patterning. Science 375(6576), eaaw9021 (2022). https://doi.org/10.1126/science.aaw9021

K. Ronaldson-Bouchard, D. Teles, K. Yeager, D.N. Tavakol, Y. Zhao, A. Chramiec, S. Tagore, M. Summers, S. Stylianos, M. Tamargo, B.M. Lee, S.P. Halligan, E.H. Abaci, Z. Guo, J. Jacków, A. Pappalardo, J. Shih, R.K. Soni, S. Sonar, C. German, A.M. Christiano, A. Califano, K.K. Hirschi, C.S. Chen, A. Przekwas, G. Vunjak-Novakovic, A multi-organ chip with matured tissue niches linked by vascular flow. Nat. Biomed. Eng. 6(4), 351–371 (2022). https://doi.org/10.1038/s41551-022-00882-6

Acknowledgments

I’d like to thank Luíseach Nic Eoin for providing critical feedback essential for shaping the manuscript. The Table of contents figure, figure 1, part of figure 3, and figure 4 were created with biorender.com.

Ethics declarations

Conflict of interest

The corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Owens, R.M. Advanced tissue engineering for in vitro drug safety testing. MRS Communications 13, 685–694 (2023). https://doi.org/10.1557/s43579-023-00421-7

DOI: https://doi.org/10.1557/s43579-023-00421-7