Abstract

The three-dimensional (3D) panoramic vision system plays a fundamental role in how organisms perceive external information, and it is naturally a key system through which embodied intelligence interacts with the outside world. A binocular vision system with rotating eyeballs has a long baseline, a large volume and weak sensitivity to motion. A compound eye system has a small volume and high sensitivity to motion, but poor precision. Here, a planar compound eye microsystem for high-precision 3D perception is proposed by combining semiconductor manufacturing processes with the biological compound eye structure. Using a semiconductor planar image sensor as the sensing unit, a space-coded planar sub-eye array is designed, and the sub field of view (FOV) of each sub-eye is dynamically mapped onto the image sensor. This resolves the problem that a traditional vision system cannot simultaneously offer a wide FOV with a long focal length, and high sensitivity to motion with high resolution. The parallax among different sub-eyes enables the system to accurately perceive and dynamically track the 3D position of a target within a range of 10 m and a 120° FOV using a single compound eye. The system is of great significance for intelligent robots and intelligent perception.


Introduction

Visual information perception is an important marker of biological evolution. By acquiring visual information, animals can grasp and dynamically predict their surroundings in more detail and with greater accuracy, and achieve more accurate predation, attack and avoidance [1]. This significantly promotes the development of biological intelligence. Some animals, especially large animals such as humans, have a binocular vision system composed of a pair of eyeballs [2]. Light emitted or reflected by the target is refracted by the cornea and lens and converges on the retina. The imaging parallax between the two eyes can be used for three-dimensional (3D) perception, positioning and tracking of targets. This system achieves 3D panoramic perception of the outside world by rotating the eyeballs and neck, and perceives near or far targets by adjusting the curvature of the lens [3]. However, its motion structure is complex, the long baseline between the two eyes results in a large volume, and a large amount of information must be processed to obtain the accurate position of the target. Some animals, such as fish, achieve wide field of view (FOV) perception through a fisheye system [1, 4]. Such systems have a gradient-index spherical lens that does not require eye movement for panoramic perception. However, the distortion at the edge of the FOV is large, so the perceived information has poor accuracy. Because the shape of the fish eye lens cannot be changed, it can only perceive nearby targets [5]. Meanwhile, most animals in nature, such as insects and crustaceans, achieve 3D panoramic perception through lightweight compound eye structures [6, 7]. The compound eye is a multi-view stereo vision system composed of a series of ommatidia pointing in different directions. Because the ommatidia are distributed over a curved surface, they can acquire information over a panoramic FOV.
The imaging resolution of a compound eye is determined by the number of ommatidia; the compound eye requires little computing power and has a strong dynamic perception ability. In addition, the small aperture of each ommatidium gives the compound eye system the imaging advantage of an infinite depth of field [8]. However, the short focal length and the short baseline between ommatidia lead to low perception accuracy and a short 3D perception distance range.

Inspired by the structure of biological compound eyes and building on existing photoelectric detection technology, a series of artificial compound eyes have been developed by combining biotechnology and information technology. By function, artificial compound eyes fall into two categories: one for two-dimensional (2D) image recovery and reconstruction [9,10,11,12], and the other for 3D target perception and tracking. In existing artificial compound eyes for 3D target perception, the compound eye optical system is composed of curved sub-eyes that acquire information over a panoramic FOV, as in insects. Machining curved optical systems is not compatible with current planar semiconductor processes, so they are difficult to manufacture [13]. Some of these systems have curved detectors modeled on insect neurons [9, 14]. Using flexible electronics manufacturing, the sensing units of the detector are matched one-to-one to the sub-eyes, and a mosaic-like image with a low sampling rate is obtained. Others use a common planar image sensor as the sensing device [15,16,17,18,19]. However, because the planar sensor does not match the curved optical system [20], a complex relay or waveguide system must be added [15, 16, 21]. Existing approaches to target perception fall into two types. The first codes the light intensity distribution of the target image at the sensing unit for different target positions, establishing a correspondence between the code and the target position [15,16,17,18, 22,23,24,25]. However, the morphology of the image spot depends strongly on the shape and light intensity of the target, so this code is not universal. The second uses the least squares method to find the intersection of the corresponding incident light vectors of different sub-eyes, and thereby determines the 3D position of the target [26,27,28,29].
However, the short focal length of the sub-eyes leads to inaccurate measurement of the incident light vector, which degrades the perception accuracy of the system and limits the perception range. The measurement distance range of existing compound eye systems is from tens to hundreds of millimeters, similar to that of insects (hundreds of millimeters) [30, 31]. Since some of these systems have a baseline much larger than that of insect compound eyes, the distance-baseline-ratio of existing 3D compound eye measurement systems is relatively small.

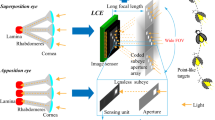

Here, a compound eye microsystem that combines the biological compound eye structure with the planar semiconductor process is developed. In this microsystem, the coding of the acquired information is moved forward from the sensing unit to the optical system, and a planar compound eye optical system with spacing-coded sub-eyes is designed that can be manufactured with conventional micro-electromechanical systems (MEMS) processing technology. Targets in different FOV regions are modulated by the coded sub-eyes and imaged onto the same planar image sensor. The microsystem obtains the 3D position of the target from the parallax between different pairs of sub-eyes, and achieves position perception at meter-level distances with a millimeter-level baseline. By using long-focal-length sub-eyes and image sensor multiplexing, the planar optical system breaks the mutual constraint between FOV and resolution found in traditional binocular vision systems. In addition, the sub-eye coding rules and sub-eye aperture shapes of the compound eye optical system are optimized to achieve better perceptual performance. The microsystem has a small size, a large FOV, a large measurement distance range, a large distance-baseline-ratio and low computing power requirements. This work provides an accurate 3D perception method and measurement system for micro embodied intelligent robots and unmanned aerial vehicles (UAV). The 3D target position information that the microsystem provides to embodied intelligent robots and UAVs has broad application value in visual 3D positioning, target tracking, obstacle avoidance, formation and other fields, as shown in Figs. 1(a) and (d).

Fig. 1 Principle and application of the microsystem. a Schematic diagram of a compound eye microsystem used on embodied intelligent robots. b Microsystem architecture and the image sensor multiplexing principle. c Principle of 3D position measurement. d Schematic diagram of applications of the compound eye microsystem in target perception, tracking, formation and other scenarios

Results and discussion

3D perception principle

The microsystem consists of a planar compound eye optical system with coded sub-eyes and a planar image sensor, as shown in Fig. 1(b). Light emitted or reflected by a close-range target (the angle of the target relative to the sub-eye is less than 1/10 of the sub-eye's FOV) passes through the optical system and forms an array of coded images, which is received by the image sensor. Following the image sensor multiplexing principle [32], the area of the optical system is much larger than that of the image sensor, so targets across a wide FOV are imaged onto the image sensor. Because the sub-eyes on the optical system are arranged according to a fixed code, the code of the target image differs for different target orientations. Using this code, the collected image can be matched to the coded sub-eyes. On the image sensor, the target appears as a diffuse light spot covering several pixels, and resolution finer than the pixel size can be achieved by determining the centroid of the light spot [33], similar to the concept of hyperacuity in biology [34].
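The centroiding step above can be illustrated with a minimal sketch. It assumes the spot has already been segmented into a small window of pixel intensities and uses a plain intensity-weighted centroid with a hypothetical background `threshold`; the paper does not state which centroid variant it uses, so this is only one common choice.

```python
def spot_centroid(window, threshold=0.0):
    """Return the sub-pixel (x, y) centroid of a light spot, in pixel units.

    window: 2D list indexed as window[row][col], i.e. window[y][x].
    threshold: hypothetical background level subtracted from every pixel;
    pixels at or below it carry no weight.
    """
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(window):
        for x, value in enumerate(row):
            weight = value - threshold
            if weight > 0:
                total += weight
                sum_x += weight * x
                sum_y += weight * y
    if total == 0:
        raise ValueError("no pixel above the background threshold")
    return sum_x / total, sum_y / total


# A spot spread over two pixels: the centroid falls between pixel centres.
spot = [
    [0, 0, 0, 0],
    [0, 1, 3, 0],
    [0, 0, 0, 0],
]
cx, cy = spot_centroid(spot)  # cx == 1.75, cy == 1.0
```

Multiplying the result by the pixel pitch (2.4 μm for the sensor used in this work) converts it to a physical position on the sensor; the sub-pixel value of `cx` is what the hyperacuity analogy refers to.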

When the target is infinitely far away, the incident light is parallel, and every pair of matched sub-eye and target image has the same direction vector. In this case, the collected information contains no 3D position information, only the 2D orientation of the target in the microsystem coordinate system, which is consistent with the previous work [32]. When the target is at a finite distance, its light propagates as a spherical wave rather than a plane wave, so the direction vectors of the matched sub-eye and target image pairs differ and contain the 3D position information of the target. The diverging light causes a difference between the imaging distance and the sub-eye aperture distance, as shown in Fig. 1(c). According to the parallax principle, the coordinates of the target \(P(x_p, y_p, z_p)\) at a finite distance can be expressed as follows (see Supplementary Note 1 for the detailed derivation):

where \(f\) is the focal length of the microsystem, \(n\) is the number of target images collected in a single measurement, \(x_{si}\) and \(y_{si}\) (\(i = 1, \dots, n\)) are the x and y coordinates of the centroids of the collected target images, \(x_{ai}\) and \(y_{ai}\) (\(i = 1, \dots, n\)) are the x and y coordinates of the centers of the sub-eye apertures corresponding to the collected target images, and \(k\) and \(l\) are the numbers of pairs in the x and y directions selected in different solving algorithms.

Based on the similar triangles in Fig. 1(c), the ratio R is defined as follows:

For a target at a fixed position, R takes the same value over every area of the collected image; it depends only on the depth \(z_p\) of the target and the focal length \(f\) of the system. Neglecting errors, R can be obtained from any two matched target images and their corresponding sub-eye coordinates. Combined with Equation (2), the coordinates of \(P(x_p, y_p, z_p)\) can be expressed as:
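Because Equations (1) to (3) are not reproduced here, the following is only a sketch of the same parallax idea under an assumed geometry: the aperture plane at z = 0, the image sensor parallel to it at z = -f, and the target at depth \(z_p > 0\) in front of the apertures. Under these assumptions a ray through aperture center \(x_{ai}\) lands at \(x_{si} = x_{ai} + (x_{ai} - x_p) f / z_p\), so every imaging distance equals the corresponding aperture distance scaled by \(R = 1 + f/z_p\), which gives \(z_p = f/(R-1)\) and \(x_p = (R x_{ai} - x_{si})/(R-1)\). The sign convention, the helper names and the averaging scheme (a plain mean over all pairs) are assumptions and may differ from the paper's formulas.

```python
from itertools import combinations
from statistics import mean


def project(target, aperture, f):
    """Assumed forward model: sensor point (z = -f) hit by the ray from
    target (x_p, y_p, z_p) through aperture centre (x_a, y_a) on z = 0."""
    xp, yp, zp = target
    xa, ya = aperture
    return (xa + (xa - xp) * f / zp, ya + (ya - yp) * f / zp)


def estimate_ratio(sensor_xy, aperture_xy, min_sep=1e-9):
    """R = mean of (imaging distance / aperture distance) over all pairs and
    both axes, skipping pairs whose aperture distance is ~0 on that axis."""
    ratios = []
    for i, j in combinations(range(len(aperture_xy)), 2):
        for axis in (0, 1):
            d_aperture = aperture_xy[i][axis] - aperture_xy[j][axis]
            if abs(d_aperture) > min_sep:
                d_image = sensor_xy[i][axis] - sensor_xy[j][axis]
                ratios.append(d_image / d_aperture)
    return mean(ratios)


def locate_target(sensor_xy, aperture_xy, f):
    """Recover (x_p, y_p, z_p) from matched centroids and aperture centres."""
    R = estimate_ratio(sensor_xy, aperture_xy)
    if R <= 1.0:
        raise ValueError("R <= 1: target effectively at infinity in this model")
    zp = f / (R - 1.0)
    pairs = list(zip(sensor_xy, aperture_xy))
    xp = mean((R * a[0] - s[0]) / (R - 1.0) for s, a in pairs)
    yp = mean((R * a[1] - s[1]) / (R - 1.0) for s, a in pairs)
    return xp, yp, zp


# Synthetic check with f = 7 mm and a target about 3 m away (units: mm).
f = 7.0
apertures = [(-1.0, -1.0), (0.5, -1.0), (-1.0, 0.8), (1.2, 1.1)]
target = (100.0, -50.0, 3000.0)
centroids = [project(target, a, f) for a in apertures]
estimate = locate_target(centroids, apertures, f)
```

In this model R tends to 1 as the target recedes, which mirrors the statement that parallel light carries only 2D orientation information; with noisy centroids, averaging over many matched pairs is what stabilizes R.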

Microsystem design

Many optical structures can achieve large-FOV perception; the two most common are the fisheye system and the compound eye system. Both the fisheye system and the traditional compound eye system guide light from various incident angles in the environment onto the image sensor through an optical device without direction specificity. At the sensing unit, incident light from different directions is distinguished by coding the position of the target image and its light intensity distribution. The planar compound eye 3D perception microsystem combines the optical structure of the compound eye with a retina-like planar image sensor. Unlike the uniform hexagonal mosaic structure of biological compound eyes, the positions and spacings of the sub-eyes are coded, and the code is moved forward from the sensing unit to the optical system. The image from each sub-eye can appear anywhere on the image sensor plane, depending on the angle of the incident light. The sensing unit only collects information and carries no code, which preserves the multiplexing of the photosensitive pixels, as shown in Fig. 2(a). Coding the geometric positions of the sub-eye apertures is more precise and offers higher resolution than coding the presence, brightness or shading of the target image, and the code value is almost unaffected by the shape and light intensity of the target, so it provides more accurate information for 3D perception.

Fig. 2 Coding and layout design of sub-eyes. a Perception principles of different systems. b Schematic diagram of the 2D code and the 1D matching operation, where STD is the standard deviation function, n is the number of sub-eye aperture distances in the x and y directions, and m is the number of imaging distances in the x and y directions

During 3D perception, the arrangement of the sub-eyes directly affects the matching between sub-eyes and target images, and therefore the perception accuracy. To limit computational complexity, the sub-eyes of the compound eye optical system are arranged on a grid, so that the 2D sub-eye aperture array can be compressed into two one-dimensional (1D) coded vectors, which greatly reduces the computing power required, as shown in Fig. 2(b). As the imaging principle in Fig. 2(a) shows, when light enters at a certain angle, some of the sub-eyes on the compound eye optical system are imaged onto the image sensor according to the projection relationship. Taking the x direction as an example, the x coordinates of the centroids of the target images are used to calculate the imaging distances in the x direction, \((\mathrm{collect}_{x1}, \dots, \mathrm{collect}_{xm})\). These imaging distances are then matched against the sub-eye aperture distances in the x direction, \((\mathrm{code}_{x1}, \dots, \mathrm{code}_{xn})\). Specifically, the ratios between the imaging distances and the sub-eye aperture distances are computed, and their standard deviation is determined. Sliding the imaging distances along the code yields the matching values at different positions. The matching function between target images and sub-eye apertures should have near-orthogonal characteristics: the matching value at the best-matched point should be clearly distinguishable from those at non-matched points, so that recognition is highly fault tolerant. Based on this principle, the code of the compound eye optical system (see Materials and methods) is designed with a genetic algorithm, inspired by orthogonal coding methods in communications [35]. As shown in Fig. 2(b), the matching value is 0 only at the best-matched point and is markedly larger at all other points.
The y direction uses the same code as the x direction (i.e., \(\mathrm{code}_{yi} = \mathrm{code}_{xi}\), \(i = 1, \dots, n\)). The two orthogonal 1D vectors can be extended into a grid-distributed sub-eye aperture array on the compound eye optical system. The average of the ratios between the imaging distances and the sub-eye aperture distances is R, which, together with the centroid coordinates of the target images and the center coordinates of the sub-eye apertures, gives the 3D position of the target according to Equation (3).
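The 1D matching step described above can be sketched as follows, taking the matching value at each offset to be the population standard deviation of the ratios between the collected imaging distances and a window of the code (the STD in Fig. 2(b)). The code values and the functions `matching_values`, `best_match` and `aperture_grid` are hypothetical illustrations, not the paper's designed code; `aperture_grid` additionally assumes that the code lists spacings between adjacent sub-eyes and that the first sub-eye sits at the origin.

```python
from itertools import accumulate
from statistics import mean, pstdev


def matching_values(code, collected):
    """Matching value at every offset k: STD of collected[i] / code[k + i]."""
    m = len(collected)
    if m > len(code):
        raise ValueError("more imaging distances than code entries")
    return [
        pstdev([c / a for c, a in zip(collected, code[k:k + m])])
        for k in range(len(code) - m + 1)
    ]


def best_match(code, collected):
    """Offset with the smallest matching value, that value, and R (mean ratio)."""
    values = matching_values(code, collected)
    k = min(range(len(values)), key=values.__getitem__)
    m = len(collected)
    R = mean(c / a for c, a in zip(collected, code[k:k + m]))
    return k, values[k], R


def aperture_grid(code_x, code_y):
    """Expand two 1D spacing codes into grid aperture centres (first at origin)."""
    xs = [0.0, *accumulate(code_x)]
    ys = [0.0, *accumulate(code_y)]
    return [(x, y) for y in ys for x in xs]


# Hypothetical code (spacings, arbitrary units) and three imaging distances
# produced by the spacings at offsets 3..5 under a spreading factor R = 1.2.
code = [3, 5, 4, 7, 6, 8, 5, 9]
collected = [1.2 * d for d in code[3:6]]
k, value, R = best_match(code, collected)  # k == 3, value ~ 0, R ~ 1.2
grid = aperture_grid(code, code)           # same code in x and y: 9 x 9 centres
```

Using the ratio rather than the raw difference makes the match insensitive to the unknown scale R, so the same code window is recognized whether the target is near or far; the minimum of the sliding STD then identifies which sub-eyes produced the images.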

Because of diffraction at the sub-eye aperture, the target image is a superposition of diffraction spots from different incident angles. The energy concentration of the diffraction spot directly affects the centroiding precision, and thus the measurement precision. The shape of the sub-eye aperture therefore needs to be optimized so that the diffraction spot energy for light at a given incident angle is as concentrated as possible, improving the imaging quality of the target. The sub-eye aperture was optimized in the previous work [32], but only for the main incidence angle (the line connecting the center of the sub-eye aperture and the center of the image sensor). As Fig. 3 shows, the sub-eye has a wide FOV (see Supplementary Table 2). Optimizing only for the main incidence angle leads to defocus of targets at the edge of the sub-eye FOV. In addition, the imaging quality differs markedly between the two sides of the image sensor (see Fig. S14), which further degrades the centroiding precision of the diffraction spots at the edge of the sub-eye FOV. The aperture size therefore needs to be optimized according to the FOV of each sub-eye. To simplify the analysis, a rectangular aperture is chosen for the sub-eye. The incident light is assumed to have a FOV that varies with the sub-eye position only in the plane formed by one side of the aperture (\(l_p\)) and the optical axis, and a fixed FOV along the other side (\(l_v\)) (see Figs. 3(a) and (c)). The Fresnel–Kirchhoff diffraction formula (Equation (4)) is used to simulate the diffraction spots under different conditions (see Materials and methods). With a 7 mm focal length and a 5 mm × 5 mm image sensor, the \(l_v\)-direction FOV (~±20°) changes the spot dispersion area by less than 10%, which can be ignored, as shown in Fig. 3(b).
The FOV in the \(l_p\) direction, by contrast, strongly influences the dispersion area of the diffraction spot, as shown in Fig. 3(c). The effect becomes more severe as the FOV range widens and moves toward the edge of the FOV (see Fig. 3(d) and Supplementary Note 2). (The influence of the sub-eye focal length and the wavelength of the incident light is discussed in Supplementary Note 3.) \(l_p\) is chosen to minimize both the mean and the standard deviation of the diffraction spot area over the sub-eye FOV (see Supplementary Note 2), and \(l_v\) is set to the \(l_p\) value of the sub-eye located on the optical axis. In this way, the imaging quality of each sub-eye is more uniform across its FOV, which keeps the spot centroiding precision consistent and thus ensures and improves the measurement precision of the system. For sub-eyes at different distances from the optical axis, the corresponding aperture size can be obtained from their FOV (see Supplementary Table 3).
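How a sub-eye's FOV depends on its distance from the optical axis can be illustrated with a one-axis geometric sketch. It assumes, as an approximation rather than the paper's Equations (5) and (6), that a sub-eye at offset \(x_a\) accepts every ray through its aperture center that lands on a sensor of side \(l_{\text{sensor}}\) centered on the optical axis at distance \(f\) behind the aperture plane; angles are measured from the optical axis, and `sub_eye_fov_deg` is a hypothetical helper.

```python
import math


def sub_eye_fov_deg(x_a, f=7.0, l_sensor=5.0):
    """Incidence-angle range (degrees) along one axis for a sub-eye whose
    aperture centre is x_a (mm) from the optical axis.

    Assumed geometry: rays pass through the aperture centre and must land on
    a sensor of side l_sensor (mm) centred on the axis, f (mm) behind it.
    """
    low = math.degrees(math.atan((x_a - l_sensor / 2) / f))
    high = math.degrees(math.atan((x_a + l_sensor / 2) / f))
    return low, high


on_axis = sub_eye_fov_deg(0.0)   # about (-19.65, 19.65)
off_axis = sub_eye_fov_deg(8.0)  # about (38.2, 56.3)
```

With the 7 mm focal length and 5 mm sensor, the on-axis sub-eye spans roughly ±20°, consistent with the \(l_v\)-direction value quoted above, and a sub-eye about 8 mm off-axis spans roughly 38° to 56°, close to the \(l_p\) range simulated in Fig. 3(c). Because the range shifts outward and narrows as \(x_a\) grows, an aperture sized only for the main incidence angle cannot suit every sub-eye, which motivates sizing \(l_p\) per sub-eye.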

Fig. 3 Optimal design of the sub-eye aperture. a Simulated influence of the FOV (-21° to 21°) in the \(l_v\) direction on the diffraction spot when the incident angle in the \(l_p\) direction is kept at 50°. Simulation parameters: \(l_p\) = 0.122 mm, \(l_v\) = 0.063 mm, wavelength 532 nm. b Effect of the FOV range in the \(l_v\) direction on the diffraction spot under different incident angles in the \(l_p\) direction. c Simulated effect of the FOV range (38° to 56°) in the \(l_p\) direction on the diffraction spot when the incident angle in the \(l_v\) direction is kept at 0°. Simulation parameters: \(l_p\) = 0.122 mm, \(l_v\) = 0.063 mm, wavelength 532 nm. d Diffraction spot area (EE80, see Materials and methods) for aperture sizes from 0.07 mm to 0.2 mm over the FOV range of 32° to 59°

Based on the above analysis, the designed compound eye optical system, shown in Fig. 4(a), has 2879 sub-eyes, comparable to the number of ommatidia in common insects [7]. It is fabricated with MEMS processing technology (see Materials and methods and Fig. 4(b)). Combined with an image sensor (2048 × 2048 pixels, 2.4 μm pixel pitch), the associated electronic processing circuit, mechanical structure, etc., a compound eye 3D perception microsystem with a focal length of 7 mm and a conical FOV of 120° was assembled, as shown in Figs. 4(c) and (d).

Experiment for 3D perception

An experimental setup was built to test the 3D perception ability of the microsystem, as shown in Fig. 5(a). The microsystem was placed on a rotating platform, and a luminous target (a white broadband light source with an illuminance of 100 lx and a size of ~10 cm) was placed on a guide rail so that it could be moved to any position over a large distance range. The spherical wave from the target illuminated the microsystem, and the coded pattern (Fig. 5(b)) was collected by the image sensor and solved with the 3D position determination algorithm (Equation (3)). The precision guide rail (positioning accuracy < 0.1 mm) provided the ground-truth distance for reference.

Fig. 5 Compound eye 3D perception experiment and results. a Experimental setup. b Images of insect-shaped and triangular targets. c Positioning results at different positions in the full FOV. d Comparison between the measured Euclidean distance from the target to the microsystem and the set value. e Test results of small-scale stepping motion. f, g Perception experiment and perception result, respectively, for a target moving along a horizontal path

The target was placed at different distances from the microsystem (from 500 mm to 10000 mm in steps of 500 mm), and the microsystem was rotated to different angles with the rotating platform (from 0° to 60° in steps of 20°) to test the accuracy at different spatial positions in the half FOV. Because the microsystem is symmetric, with identical designs in the x and y directions and the same measurement principle along the positive and negative x/y axes, this experiment represents the measurement performance of the microsystem over the entire conical FOV. At each position, 100 frames were collected; their average was taken as the measurement result and their standard deviation was used to evaluate precision. The results, shown in Figs. 5(c) and (d), demonstrate that the compound eye microsystem can perceive and measure the 3D position of the target within a distance range of 10 m and a conical FOV of 120°. The average positioning error over the full FOV is 4.86% within 5 m and 7.32% within 10 m; the error increases with the measuring distance (see Supplementary Note 4 for the detailed measurement data). Note that the sensing area of the image sensor is about 5 mm across, and only target images on the sensing area participate in the calculation, so the maximum baseline of the multi-aperture system is 5 mm. Since the measuring distance of the microsystem reaches 10 m, the distance-baseline-ratio exceeds 2000. Next, the target was placed at a fixed distance from the microsystem and moved in small steps (10 mm) to evaluate the resolution of the microsystem. For example, at 3000 mm, the fitted step of the microsystem's results is 10.83 mm, as shown in Fig. 5(e), close to the set value.
This verifies that the microsystem can resolve closely spaced positions, and that, within a certain range, the measured result increases linearly with the measurement distance. Finally, an insect-shaped target was moved along a horizontal path within the FOV of the microsystem, as shown in Fig. 5(f). The test result, shown in Fig. 5(g), agrees with the movement trajectory (see the Supplementary Movie for an intuitive display). These experiments demonstrate that the microsystem can locate spatial targets with high precision over a wide FOV and a large distance range.
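The distance-baseline-ratio quoted above follows from simple arithmetic. This sketch assumes, as the text does, that the usable baseline is the side length of the sensor's sensing area, computed here from the 2048-pixel, 2.4 μm-pitch sensor described in the design section; the variable names are illustrative.

```python
pixels_per_side = 2048
pixel_pitch_mm = 2.4e-3
max_range_mm = 10_000.0

# Side length of the sensing area, taken as the maximum baseline.
baseline_mm = pixels_per_side * pixel_pitch_mm
distance_baseline_ratio = max_range_mm / baseline_mm

# Relative deviation of the fitted 10.83 mm step from the 10 mm set step at 3 m.
step_deviation = (10.83 - 10.0) / 10.0
```

This gives a baseline of about 4.92 mm and a ratio of about 2035, consistent with the "more than 2000" stated above; the fitted step deviates from the set step by about 8%.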

Without the spatial constraints of the laboratory, the microsystem could likely measure longer distances. However, the measurement error also increases with distance, because the relationship between sub-eye imaging parallax and measurement distance is nonlinear. To achieve higher positioning accuracy within a specified range, the instrument can be calibrated before use by measuring a target at a known spatial location (see Supplementary Note 5). Imaging of target details is limited by diffraction at the sub-eye apertures; embedding microlenses or metalenses into the sub-eye apertures could provide clearer imaging and broader target recognition. Combined with cooperative targets carrying infrared light sources, the system could also measure relative distances in concealed environments. The system only computes the centroid coordinates of the target images, which is computationally simple and requires little computing power. The measurement update rate is mainly limited by the frame rate of the image sensor; a sensor with a higher frame rate would give higher sensitivity to motion.
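The nonlinearity mentioned above can be made concrete with a first-order error estimate. It uses an assumed pinhole model rather than the paper's Equations (1) to (3): apertures on the plane z = 0, the sensor a distance \(f\) behind them, and a target at depth \(z_p\), so that every imaging distance equals the corresponding aperture distance multiplied by \(R = 1 + f/z_p\).

```latex
z_p = \frac{f}{R - 1}, \qquad
\left|\frac{\partial z_p}{\partial R}\right| = \frac{f}{(R - 1)^2} = \frac{z_p^2}{f}
\quad\Longrightarrow\quad
\delta z_p \approx \frac{z_p^2}{f}\,\delta R .
```

Under this model, a fixed error \(\delta R\) in the estimated ratio produces a depth error that grows with the square of the distance, so doubling \(z_p\) from 5 m to 10 m quadruples \(\delta z_p\). With \(f\) = 7 mm and \(z_p\) = 10 m, \(R - 1 = 7 \times 10^{-4}\), so across a 5 mm baseline the images spread only about 3.5 μm (roughly 1.5 pixels of 2.4 μm) more than the apertures do, which illustrates why sub-pixel centroiding and averaging over many sub-eyes are important at long range.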

Conclusion

Existing 3D perception in scientific research and industry is mainly achieved through binocular stereo vision [36]. The baseline length directly affects the measurement distance and accuracy [37], so such systems are usually bulky, and their FOV is limited by the long focal length. Instruments that achieve large-distance-range, wide-FOV 3D measurement and perception, such as scanning lidar [38, 39] and laser trackers [40, 41], generally require laser transmitters, mechanical rotating devices, laser receivers, etc., so they are bulky and structurally complex. In this paper, a compound eye microsystem is developed that can perceive the 3D position of a target within a conical FOV of 120° and a distance range of 10 m. It is small, and its distance-baseline-ratio exceeds 2000, far higher than in the existing literature. Like a binocular vision system, this microsystem uses the parallax measurement principle. However, because the compound eye measurement system has ~3000 sub-eyes and ~100 of them participate in each calculation, averaging their results reduces the random error. In theory, the random error is reduced to \(1/\sqrt{50}\) of that of a binocular vision system with the same focal length and baseline, improving the measurement precision. The image sensor multiplexing principle gives the compound eye microsystem a much wider FOV than a binocular vision system. Compared with existing compound eye 3D perception systems, the long-focal-length sub-eyes ensure accurate measurement of the incident light vector and greatly improve perception accuracy and range.
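The \(1/\sqrt{50}\) figure is what averaging gives when each participating estimate carries an independent, zero-mean error of equal variance; one reading consistent with it is that the ~100 participating sub-eyes form ~50 independent pairs. The Monte Carlo sketch below checks only this statistical step under those assumptions; it does not model the optics, and `spread_of_mean` is a hypothetical helper.

```python
import math
import random
import statistics


def spread_of_mean(n_estimates, sigma=1.0, trials=4000, seed=0):
    """Standard deviation of the mean of n_estimates independent N(0, sigma^2)
    errors, estimated over `trials` simulated measurements."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n_estimates))
        for _ in range(trials)
    ]
    return statistics.pstdev(means)


single_pair = spread_of_mean(1)    # one binocular-style estimate
fifty_pairs = spread_of_mean(50)   # average of 50 such estimates
ratio = fifty_pairs / single_pair
expected = 1 / math.sqrt(50)       # about 0.141
```

If the pair errors were correlated (for example, through a shared calibration error in \(f\)), the reduction would be smaller than \(1/\sqrt{50}\); the figure is therefore an upper bound on the benefit of averaging.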

Compared with the previous work [32], the two microsystems share the same instrument architecture. The previous work measured the orientation of targets at infinite distance (i.e., sufficiently far away) with a wide FOV and high resolution. This paper proposes 3D spatial position measurement of targets at finite distance, an important supplement to and extension of the previous work. In addition, this paper proposes an orthogonal aperture coding design and a method for optimizing the aperture size according to the sub-eye FOV, which further improve the algorithm's redundancy, its computation speed and the image quality, while retaining the advantages of the previous work. Furthermore, based on the estimated sub-eye imaging parallax and R, the system can switch between 2D orientation perception of targets at infinite distance and 3D position perception of targets at finite distance, thus measuring the orientations and spatial coordinates of targets at both infinite and finite distances. This paper provides a 3D perception method with a high distance-baseline-ratio that, after adaptation to specific applications, can be widely used for interaction between embodied intelligence and the environment, visual positioning and navigation, UAV obstacle avoidance, target acquisition, unmanned system formation, etc.

Materials and methods

The design process of sub-eye code values by genetic algorithm

The genetic algorithm is a highly parallel, stochastic and adaptive global optimization algorithm based on natural selection and evolution in biology [42]. The steps for using a genetic algorithm to optimize the sub-eye arrangement template are: a) parameter encoding, b) initial population generation, c) fitness function computation, d) selection/replication, e) crossover, and f) mutation. Steps c) to f) are iterated until the convergence conditions are reached. In this paper, the minimum matching value over the non-matched points is used as the fitness function to ensure the orthogonality of the sub-eye aperture coding. This process can be implemented directly with MATLAB's Optimization Tool.
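Steps a) to f) can be sketched as a small, self-contained genetic algorithm. This is a hypothetical Python illustration, not the MATLAB setup used in the paper: the code is a vector of integer spacings in an assumed range, every window of `m` consecutive spacings is treated as a possible observation, and the fitness of a code is the smallest matching value (STD of ratios) at any non-matched offset, which the algorithm maximizes. Tournament selection, one-point crossover, per-gene mutation, elitism, and all sizes and rates are assumptions chosen for brevity, and a fixed number of generations stands in for a convergence test.

```python
import random


def std(values):
    """Population standard deviation (plain floats, fast enough for a GA loop)."""
    mu = sum(values) / len(values)
    return (sum((v - mu) ** 2 for v in values) / len(values)) ** 0.5


def fitness(code, m):
    """Smallest matching value at any non-matched offset, over all windows."""
    offsets = range(len(code) - m + 1)
    worst = float("inf")
    for s in offsets:                # window that the sensor might observe
        window = code[s:s + m]
        for k in offsets:            # candidate position in the code
            if k != s:
                ratios = [w / c for w, c in zip(window, code[k:k + m])]
                worst = min(worst, std(ratios))
    return worst


def tournament(pop, scores, rng, size=3):
    """d) Selection: the fittest of `size` randomly drawn individuals."""
    contenders = rng.sample(range(len(pop)), size)
    return pop[max(contenders, key=scores.__getitem__)]


def evolve(n=12, m=4, lo=3, hi=9, pop_size=30, generations=40, p_mut=0.1, seed=1):
    rng = random.Random(seed)
    # a) + b) integer spacing vectors as genomes, random initial population
    pop = [[rng.randint(lo, hi) for _ in range(n)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scores = [fitness(c, m) for c in pop]            # c) fitness
        best = max(range(pop_size), key=scores.__getitem__)
        history.append(scores[best])
        children = [pop[best][:]]                         # elitism
        while len(children) < pop_size:
            a, b = tournament(pop, scores, rng), tournament(pop, scores, rng)
            cut = rng.randint(1, n - 1)                   # e) one-point crossover
            child = a[:cut] + b[cut:]
            child = [rng.randint(lo, hi) if rng.random() < p_mut else g
                     for g in child]                      # f) mutation
            children.append(child)
        pop = children
    scores = [fitness(c, m) for c in pop]
    best = max(range(pop_size), key=scores.__getitem__)
    history.append(scores[best])
    return pop[best], scores[best], history


best_code, best_fitness, history = evolve()
```

Because the best individual is copied unchanged into each new generation, the recorded best fitness never decreases; a larger fitness means every wrong alignment produces a clearly non-zero matching value, which is the orthogonality property the code is designed for.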

Imaging simulation of sub-eye aperture

The target image formed by a sub-eye aperture is a diffraction image governed by the Fresnel–Kirchhoff diffraction formula (Equation (4)). For near-parallel light, the complex amplitude of the diffracted light wave becomes [32]:

where \(A\) is a constant related to the intensity of the target, \(\lambda\) is the wavelength of the light wave, \(k\) is the wavenumber, \(x_0\) and \(y_0\) are the horizontal and vertical coordinates of the integration surface element \({\text{d}}\upsigma\) in the aperture region, \(\alpha\) and \(\beta\) are two direction cosines of the incident light, the vectors \(\mathbf{n}\), \(\mathbf{r}\) and \(\mathbf{l}\) are defined in Fig. S18, and \(r\) is the norm of \(\mathbf{r}\).

For a sub-eye aperture at a given distance from the optical axis, the FOV in the x and y directions can be calculated with Equations (5) and (6) [32].

where \((x_a, y_a)\) are the x and y coordinates of the center of the sub-eye aperture, \(l_{\text{sensor}}\) is the size of the sensing area of the image sensor, and \(f\) is the focal length of the microsystem. The calculated values are substituted into Equation (4) as the incident angles of the light; together with the other parameter settings, this gives the simulated images for different parameters. The area containing 80% of the diffraction spot energy (called EE80) is used as the evaluation criterion [43], so that image quality can be assessed quantitatively.
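The EE80 criterion can be sketched as follows for a simulated spot sampled on a pixel grid. The paper does not spell out how the 80% energy area is delimited; this sketch assumes it is the smallest set of pixels holding 80% of the total energy (brightest pixels first), whereas an encircled-energy radius around the centroid is another common convention. `ee80_area` and `pixel_area` are hypothetical names.

```python
def ee80_area(spot, fraction=0.8, pixel_area=1.0):
    """Area of the smallest pixel set containing `fraction` of the spot energy.

    spot: 2D list of non-negative intensities; pixel_area: area of one sample.
    """
    values = sorted((v for row in spot for v in row), reverse=True)
    total = sum(values)
    if total <= 0:
        raise ValueError("spot has no energy")
    target = fraction * total
    accumulated = 0.0
    for count, value in enumerate(values, start=1):
        accumulated += value
        if accumulated >= target:
            return count * pixel_area
    return len(values) * pixel_area  # only reached through rounding error


compact = [[0, 1, 0], [1, 6, 1], [0, 1, 0]]   # most energy in the centre pixel
diffuse = [[1, 1, 1], [1, 2, 1], [1, 1, 1]]   # energy spread over the window
```

Both example spots carry the same total energy, but the compact one reaches 80% within 3 pixels and the diffuse one needs 7; a smaller EE80 indicates a more concentrated spot, which is what the aperture-size optimization seeks.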

Processing and manufacturing of compound eye optical system

The planar compound eye optical system is fabricated with the same MEMS process as in the previous work [32], as shown in Fig. 4(b). The substrate of the MEMS aperture array is quartz glass, which is first cleaned, and a 100 nm chromium layer is then deposited on its surface. In the MEMS mask fabrication process, photoresist is coated on the chromium-plated quartz substrate and exposed by a laser or electron beam according to the design of the compound eye optical system. After the exposed photoresist is removed, the underlying chromium is exposed and removed by etching. The etched regions then transmit light and act as sub-eyes, while the remaining regions block light. After the resist is stripped, the planar compound eye optical system is obtained. This fabrication method is compatible with semiconductor processes and does not require complex 3D etching or integration processes.

Availability of data and materials

The datasets used during the current study are available from the corresponding author on reasonable request.

Abbreviations

- 1D: One-dimensional
- 2D: Two-dimensional
- 3D: Three-dimensional
- FOV: Field of view
- MEMS: Micro-electromechanical systems
- UAV: Unmanned aerial vehicle

References

Lee LP, Szema R. Inspirations from biological, optics for advanced phtonic systems. Science. 2005;310:1148–50. https://doi.org/10.1126/science.1115248.

Sarkar M, Theuwissen A. A biologically inspired CMOS image sensor. Studies in Computational Intelligence, vol. 461. Berlin: Springer-Verlag; 2013. p. 1–11.

Toates FM. Accommodation function of the human eye. Physiol Rev. 1972;52:828. https://doi.org/10.1152/physrev.1972.52.4.828.

Jagger WS. The optics of the spherical fish lens. Vision Res. 1992;32:1271–84. https://doi.org/10.1016/0042-6989(92)90222-5.

Easter SS, Hitchcock PF. The myopic eye of the black moor goldfish. Vision Res. 1986;26:1831. https://doi.org/10.1016/0042-6989(86)90135-5.

Rossel S. Binocular stereopsis in an insect. Nature. 1983;302:821–2. https://doi.org/10.1038/302821a0.

Sarkar M, Theuwissen A. A biologically inspired CMOS image sensor. Studies in Computational Intelligence, vol. 461. Berlin: Springer-Verlag; 2013. p. 13–48.

Land MF, Nilsson DE. Apposition compound eyes. New York: Oxford Univ Press; 2012.

Song YM, et al. Digital cameras with designs inspired by the arthropod eye. Nature. 2013;497:95–9. https://doi.org/10.1038/nature12083.

Keum D, et al. Xenos peckii vision inspires an ultrathin digital camera. Light: Science & Applications. 2018;7:7. https://doi.org/10.1038/s41377-018-0081-2.

Kim K, Jang KW, Ryu JK, Jeong KH. Biologically inspired ultrathin arrayed camera for high-contrast and high-resolution imaging. Light: Science & Applications. 2020;9:7. https://doi.org/10.1038/s41377-020-0261-8.

Kogos LC, et al. Plasmonic ommatidia for lensless compound-eye vision. Nature Communications. 2020;11:9. https://doi.org/10.1038/s41467-020-15460-0.

Zhu L, Zhang YL, Sun HB. Miniaturising artificial compound eyes based on advanced micronanofabrication techniques. Light: Advanced Manufacturing. 2021;2:84–100. https://doi.org/10.37188/lam.2021.007.

Floreano D, et al. Miniature curved artificial compound eyes. Proc Natl Acad Sci U S A. 2013;110:9267–72. https://doi.org/10.1073/pnas.1219068110.

Shi CY, et al. SCECam: a spherical compound eye camera for fast location and recognition of objects at a large field of view. Optics Express. 2017;25:32333–45. https://doi.org/10.1364/oe.25.032333.

Zheng YL, Song L, Huang JX, Zhang HY, Fang FZ. Detection of the three-dimensional trajectory of an object based on a curved bionic compound eye. Opt Lett. 2019;44:4143–6. https://doi.org/10.1364/ol.44.004143.

Ma MC, et al. Target orientation detection based on a neural network with a bionic bee-like compound eye. Optics Express. 2020;28:10794–805. https://doi.org/10.1364/oe.388125.

Dai B, et al. Biomimetic apposition compound eye fabricated using microfluidic-assisted 3D printing. Nature Communications. 2021;12:11. https://doi.org/10.1038/s41467-021-26606-z.

Feng X, et al. A Meniscus Multifocusing Compound Eye Camera Based on Negative Pressure Forming Technology. Micromachines. 2023;14:12. https://doi.org/10.3390/mi14020420.

Hu ZY, et al. Miniature optoelectronic compound eye camera. Nat Commun. 2022;13:5634. https://doi.org/10.1038/s41467-022-33072-8.

Zhang H, et al. Development of a low cost high precision three-layer 3D artificial compound eye. Optics Express. 2013;21:22232–45. https://doi.org/10.1364/oe.21.022232.

Chung-You L, Jun-Fu C, Ting-Chieh Y, Wang K. Use bionic microlens array and CMOS image sensor for three-dimensional motion detection. In: 2012 7th IEEE International Conference on Nano/Micro Engineered and Molecular Systems (NEMS). 2012. p. 388–91. https://doi.org/10.1109/nems.2012.6196800.

Chen AH, He BW, Gao CH. In: 3rd International Conference on Mechatronics and Control Engineering (ICMCE). Switzerland: Trans Tech Publications Ltd; 2014. p 290–4.

Frost SA, Gorospe GE, Teubert C. Compound eye sensor for real-time aircraft wing deflection measurement. AIAA Guidance Navigation and Control Conference. 2016;9–9. https://doi.org/10.2514/6.2016-1136.

Xian D, Su Q, Fengwen M, Weiqi J. Development of a 3×3-channel bionic compound eyes imaging system for target positioning. Proc SPIE. 2021;11885:118850V. https://doi.org/10.1117/12.2602394.

Ma MC, Guo F, Cao ZL, Wang KY. Development of an artificial compound eye system for three-dimensional object detection. Applied Optics. 2014;53:1166–72. https://doi.org/10.1364/ao.53.001166.

Jian HJ, He JZ, Jin XY, Chen XC, Wang KY. Automatic geometric calibration and three-dimensional detecting with an artificial compound eye. Applied Optics. 2017;56:1296–301. https://doi.org/10.1364/ao.56.001296.

Pang K, Fang FZ, Song L, Zhang Y, Zhang HY. Bionic compound eye for 3D motion detection using an optical freeform surface. J Opt Soc Am B-Opt Phys. 2017;34:B28–35. https://doi.org/10.1364/josab.34.000b28.

Li L, Hao YP, Xu JL, Liu FL, Lu J. The Design and Positioning Method of a Flexible Zoom Artificial Compound Eye. Micromachines. 2018;9:14. https://doi.org/10.3390/mi9070319.

Kral K, Poteser M. Motion parallax as a source of distance information in locusts and mantids. J Insect Behav. 1997;10:145–63. https://doi.org/10.1007/bf02765480.

Kapustjansky A, Chittka L, Spaethe J. Bees use three-dimensional information to improve target detection. Naturwissenschaften. 2010;97:229–33. https://doi.org/10.1007/s00114-009-0627-5.

Zhang L, Zhan HY, Liu XY, Xing F, You Z. A wide-field and high-resolution lensless compound eye microsystem for real-time target motion perception. Microsyst Nanoeng. 2022;8:9. https://doi.org/10.1038/s41378-022-00388-w.

Zhan H, et al. Analyzing the Effect of the Intra-Pixel Position of Small PSFs for Optimizing the PL of Optical Subpixel Localization. Engineering. 2023. https://doi.org/10.1016/j.eng.2023.03.009.

Braddick O. Visual hyperacuity. Nature. 1984;308:228–9. https://doi.org/10.1038/308228a0.

Liu B, He Z. Genetic Algorithm based MIMO Radar Polyphase Code Design. Journal of Electronic Measurement and Instrument. 2008;22:62–6.

Bhatti A, editor. Advances in Theory and Applications of Stereo Vision. Croatia: INTECH d.o.o.; 2011. p 39–43.

Okutomi M, Kanade T. A multiple-baseline stereo. IEEE Trans Pattern Anal Mach Intell. 1993;15:353–63. https://doi.org/10.1109/34.206955.

Wang DK, Watkins C, Xie HK. MEMS Mirrors for LiDAR: A Review. Micromachines. 2020;11:24. https://doi.org/10.3390/mi11050456.

Zhang XS, Kwon K, Henriksson J, Luo JH, Wu MC. A large-scale microelectromechanical-systems-based silicon photonics LiDAR. Nature. 2022;603:253. https://doi.org/10.1038/s41586-022-04415-8.

Muralikrishnan B, Phillips S, Sawyer D. Laser trackers for large-scale dimensional metrology: A review. Precis Eng-J Int Soc Precis Eng Nanotechnol. 2016;44:13–28. https://doi.org/10.1016/j.precisioneng.2015.12.001.

Vikas, Sahu RK. A review on application of laser tracker in precision positioning metrology of particle accelerators. Precis Eng-J Int Soc Precis Eng Nanotechnol. 2021;71:232–49. https://doi.org/10.1016/j.precisioneng.2021.03.015.

Katoch S, Chauhan SS, Kumar V. A review on genetic algorithm: past, present, and future. Multimed Tools Appl. 2021;80:8091–126. https://doi.org/10.1007/s11042-020-10139-6.

Zhang YF, et al. Conceptual design of the optical system of the 6.5m wide field multiplexed survey telescope with excellent image quality. PhotoniX. 2023;4:25. https://doi.org/10.1186/s43074-023-00094-4.

Acknowledgements

Not applicable.

Funding

This work was financially supported by the National Key Research and Development Program of China (2023YFB3906300).

Author information


Contributions

LZ was responsible for instrument design, fabrication, experiments, data analysis and writing of the paper. HZ and HC were responsible for revising the paper and analyzing the experimental data. XL was responsible for implementing the instrument. FX guided the whole process. ZY proposed suggestions for improvement. All authors read and approved the final manuscript.

Author’s information

Not applicable.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors agree to the publication of this article.

Competing interests

The authors declare no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Material 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, L., Zhan, H., Liu, X. et al. A planar compound eye based microsystem for high precision 3D perception. PhotoniX 5, 21 (2024). https://doi.org/10.1186/s43074-024-00136-5


DOI: https://doi.org/10.1186/s43074-024-00136-5