Abstract

Background

There is a lack of consensus about how to prioritize potential implementation strategies for HIV pre-exposure prophylaxis (PrEP) delivery. We compared several prioritization methods for their agreement and pragmatism in practice in a resource-limited setting.

Methods

We engaged diverse stakeholders with clinical PrEP delivery and PrEP decision-making experience across 55 facilities in Kenya to prioritize 16 PrEP delivery strategies. We compared four strategy prioritization methods: (1) “past experience surveys” with experienced practitioners reflecting on implementation experience (N = 182); (2 and 3) “pre- and post-small-group ranking” surveys before and after group discussion (N = 44 and 40); (4) “go-zone” quadrant plots of perceived effectiveness vs feasibility. Kendall’s correlation analysis was used to compare strategy prioritization using the four methods. Additionally, participants were requested to group strategies into three bundles with up to four strategies/bundle by phone and online survey.

Results

The strategy ranking correlation was strongest between the pre- and post-small-group rankings (Tau: 0.648; p < 0.001). There was moderate correlation between go-zone plots and post-small-group rankings (Tau: 0.363; p = 0.079) and between past-experience surveys and post-small-group rankings (Tau: 0.385; p = 0.062). For strategy bundling, participants primarily chose bundles of strategies in the order in which they were listed, reflecting option ordering bias. Neither the phone nor online approach was effective in selecting strategy bundles. Participants agreed that the strategy ranking activities conducted during the workshop were useful in prioritizing a final set of strategies.

Conclusions

Both experienced and inexperienced stakeholder participants’ strategy rankings tended to prioritize strategies perceived as feasible. Small group discussions focused on feasibility and effectiveness revealed moderately different priorities than individual rankings. The strategy bundling approach, though less time- and resource-intensive, was not effective. Future research should further compare the relative effectiveness and pragmatism of methodologies to prioritize implementation strategies.

Introduction

While there are several established methods for barrier-strategy matching, prioritizing which implementation strategies to test or deliver remains a considerable challenge in implementation science (IS), particularly when prioritization engages a diverse set of stakeholders. Stakeholder engagement has been shown to increase acceptability of proposed interventions, enhance community buy-in, and ensure cultural appropriateness of the intervention [1,2,3]. Stakeholder-driven implementation science methods, including prioritization efforts, are a critical frontier in implementation science. Two posited advantages of stakeholder-driven approaches are that strategies can be specified in more detail because of stakeholders’ content and context expertise, and that strategies that are theoretically well-suited to barriers but not feasible within the given context can be de-prioritized. Many of the existing methods for mapping and criteria for selecting strategies are “top down” approaches, in which scientific experts decide what to implement in consultation with community or content experts. In this paper, we present methods that are “bottom up,” which allow diverse stakeholders to directly prioritize strategies to test based on their own firsthand experiences and understanding, without extensive implementation science training.

In the process of choosing which implementation strategies to test or deliver, there are several robust methods for barrier-strategy mapping or matching that include stakeholder engagement. Intervention mapping, which has been applied to IS as implementation mapping, focuses on highly impactful barriers identified during planning that are mapped to appropriate strategies using a theoretical understanding of the relationships between barriers and strategies [4,5,6]. The Behavior Change Wheel (BCW) and COM-B model are also well-suited to barrier-strategy matching, particularly through highlighting the behavioral mechanism through which a strategy acts. The CFIR-ERIC matching tool is well-suited to provide a provisional list of ERIC strategies that are matched to CFIR-derived barriers. However, none of implementation mapping, BCW-COM-B, or the CFIR-ERIC matching tool has scales or criteria for prioritization built in. The study that yielded the creation of the ERIC and linked publications of groupings of similar strategy types did utilize feasibility and effectiveness as criteria, but the matching tool does not include this type of exercise for prioritization after candidate strategy selection. Therefore, although barrier-strategy matching tools exist to guide matching, we are still left with the problem of how to prioritize among the candidate strategies that come out of a matching exercise. APEASE provides a series of criteria that can be used to inform prioritization efforts but no specific approach for translating its six criteria into a ranked list. APEASE is typically utilized by a content expert team with some consultation of frontline stakeholders but is not a stakeholder-driven, or bottom-up, approach. While the criteria in APEASE exist and are likely useful for driving prioritization, we are not aware of any evidence base that suggests their superiority over any other set of criteria or approach in terms of selecting ultimately impactful strategies.

Group consensus-generating tools are important and have been used variably in low-resource contexts. The Delphi method, nominal group technique, and the consensus development conference center stakeholder expertise for prioritizing problems and solutions during group discussions [1, 7,8,9,10]. Creation of “go-zone” plots—in which two selection criteria are plotted on x- and y-axes and the quadrant that is “high” for both criteria is the “go-zone”—is a stakeholder-engaged visual method of strategy prioritization [11]. Finally, a variety of ranking approaches (best–worst choice, ranking attributes, constant sum scaling, conjoint analysis, discrete choice experiment, etc.) may be used to individually rank strategies for prioritization with group averages used in lieu of consensus [12,13,14,15,16,17]. In addition to selecting which strategies to prioritize, a linked challenge is which strategies to bundle together. To guide strategy bundling, concept mapping is well-suited, revealing relationships between ideas that are explicitly mapped out based on compiled individual sorting data; these data can be used to understand which strategies might be conceptually similar, as well as important [18,19,20].

The existing methods of strategy prioritization described above have varying time and resource complexity profiles and researchers have called for increased comparative research and economic evaluations to compare the various methodologic approaches [21]. Additionally, there is a notable lack of comparative data about the use of these methods in low- and middle-income country (LMIC) settings; previous work has highlighted the need for comparative testing of different strategy prioritization methods to determine stakeholder feasibility and acceptability, the diversity of prioritized strategies by each method, and the efficiency of prioritization methods [6].

In this study, we sought to address the gap in comparative testing of different strategy prioritization methods in a LMIC setting. We utilized a variety of strategy ranking approaches to determine whether more or less time- and resource-intensive methods could provide similar prioritization profiles. We also aimed to provide a nuanced description of the similarities and differences in ranking outcomes between methods.

Methods

Study context

Pre-exposure prophylaxis (PrEP) is an evidence-based, once-daily pill that has demonstrated efficacy and safety for use by women during pregnancy [22,23,24]. While PrEP delivery has been broadly scaled up for sero-discordant couples and adolescent girls and young women, PrEP delivery in maternal and child health (MCH) clinics has been slower due to the additional time required for diffusion and uptake of new service delivery methods [25,26,27,28]. Several studies in Kenya have demonstrated that integrating delivery of PrEP into MCH clinics is feasible [29,30,31], but barriers to successful implementation were noted. The ongoing PrEP in Pregnancy, Accelerating Reach and Efficiency study (PrEPARE; NCT04712994) utilized stakeholder engagement to obtain both qualitative and quantitative data regarding determinants of PrEP implementation and the prioritization of PrEP implementation strategies in MCH clinics in Kenya.

Study design and participants

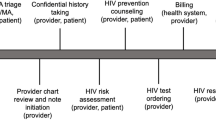

This was a cross-sectional study to evaluate four strategy prioritization activities and a strategy bundling exercise via two stakeholder engagement methods (Fig. 1). The data for this study were collected with diverse stakeholders in Kisumu, Homa Bay, and Siaya Counties in Kenya. Two distinct populations contributed to the sequential data collection in this study. First, healthcare workers (HCWs), who were selected for their prior experience delivering PrEP in MCH clinics at 55 facilities, participated in HCW surveys between October 2020 and July 2021. The facilities from which HCWs were recruited had previously participated in studies seeking to integrate PrEP in MCH clinics. Second, a group of diverse PrEP stakeholders was recruited for an in-person stakeholder workshop held in August 2021. Stakeholders included national- and county-level officials from the Kenyan Ministry of Health with PrEP policy and implementation experience, PrEP users, and HCWs; participants were purposively sampled for their breadth of experience (e.g., government, facility, lived experience) and different levels of government service (subcounty, county, and national).

Data collection

Overview

Study participants rated and ranked 16 unique PrEP delivery implementation strategies, which were identified in previous qualitative work that used the Consolidated Framework for Implementation Research (CFIR) to inform the question guide and deductive coding [32, 33]. Participants were initially asked about perceived benefits and challenges of PrEP implementation in MCH clinics. The main barriers identified were insufficient providers and inadequate training, insufficient space to deliver PrEP, and high volume of patients [32]. The 16 identified strategies to overcome these barriers are provided in Additional file 1. While no framework was utilized to inform strategy-barrier mapping, the selected strategies mapped reasonably well to several ERIC strategies post hoc, including revision of professional roles (task shifting), developing and distributing educational materials and conducting educational meetings (communication aids and health talks), and changing service sites (delivering PrEP and health talks in MCH) [34].

Data collection for each of the four prioritization activities and the strategy bundling exercise are summarized below. Participants also completed a brief workshop evaluation survey to assess whether the workshop objectives were met, including facilitation of strategy prioritization, balancing potential power dynamics between stakeholders, and meeting stakeholder expectations. All data was collected through online REDCap and Poll Everywhere surveys; a summary is included in Fig. 1 [35,36,37].

Strategy prioritization activities

The four prioritization activities compared in this paper are the following: (1) “past experience surveys” with experienced practitioners reflecting on their experience of implementation success; (2 and 3) “pre- and post-small-group ranking” surveys before and after small group discussion; (4) “go-zone” quadrant plots of perceived effectiveness vs feasibility (Fig. 1). Three bundles of strategies were ultimately selected for testing: bundle 1, fast-tracking PrEP clients in MCH and retraining providers; bundle 2, task-shifting PrEP risk counseling to HIV testing service providers and training different providers to deliver PrEP; and bundle 3, PrEP health talks in waiting bays and provision of communication aids. All bundles will also include delivering PrEP in MCH clinics, retraining providers, and audit and feedback processes. Given the exploratory nature of identifying potential PrEP implementation strategies, we sought to foster community-academic partnerships across a range of stakeholder experiences (government, facility, lived experience), and we selected relatively simple prioritization activities (e.g., surveys) to facilitate non-researcher stakeholder engagement. Furthermore, the nominal group technique (NGT) was used exclusively during the stakeholder workshop to facilitate stakeholder consensus generation; as such, three of the four prioritization methods from this analysis were conducted as part of the NGT [9].

Prioritization method 1—Surveys with PrEP experienced HCWs

The past experience surveys were conducted with HCWs in-person, online, or over the telephone; surveys were provided online and over the phone due to the COVID-19 pandemic. Participants were asked to rate 16 PrEP delivery strategies depending on whether each strategy had been tested at their facility and the impact that each tested strategy had on improving PrEP delivery. We considered PrEP delivery to include PrEP education prior to initiation; risk screening as part of risk reduction counseling; HIV testing services; PrEP offer; PrEP counseling for initiation, adherence, and continuation; and provision of PrEP pills. Strategies were rated on a 3-point Likert scale as “Tested and improved delivery,” “Tested but did not improve delivery,” and “Did not test.”
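As a rough illustration of how ratings on this three-category scale could be turned into a ranked list (the conversion is described under Data analysis), the following Python sketch ranks strategies by the percentage of respondents giving an accepted rating. The strategy labels, the responses, and the alphabetical tie-break are invented assumptions for illustration; they are not the study's data or code.

```python
# Rating categories as worded in the survey description.
IMPROVED = "Tested and improved delivery"
NOT_IMPROVED = "Tested but did not improve delivery"
NOT_TESTED = "Did not test"

# Hypothetical responses: one dict per respondent (strategy -> rating).
responses = [
    {"Strategy A": IMPROVED, "Strategy B": IMPROVED, "Strategy C": NOT_TESTED},
    {"Strategy A": IMPROVED, "Strategy B": NOT_IMPROVED, "Strategy C": NOT_TESTED},
    {"Strategy A": NOT_IMPROVED, "Strategy B": IMPROVED, "Strategy C": IMPROVED},
    {"Strategy A": IMPROVED, "Strategy B": NOT_TESTED, "Strategy C": NOT_TESTED},
]


def percent_meeting(responses, strategy, accepted):
    """Percentage of respondents whose rating for `strategy` is in `accepted`."""
    hits = sum(1 for r in responses if r[strategy] in accepted)
    return 100.0 * hits / len(responses)


def rank_strategies(responses, accepted):
    """Rank strategies from 1 (highest percentage) downward.

    Ties are broken alphabetically, which is an assumption of this sketch.
    """
    strategies = sorted(responses[0])
    scores = {s: percent_meeting(responses, s, accepted) for s in strategies}
    ordered = sorted(strategies, key=lambda s: (-scores[s], s))
    return {s: position for position, s in enumerate(ordered, start=1)}


# Primary definition: tested and improved delivery.
primary_ranks = rank_strategies(responses, {IMPROVED})
# Sensitivity definition: tested at all, regardless of impact.
sensitivity_ranks = rank_strategies(responses, {IMPROVED, NOT_IMPROVED})
```

Passing a different `accepted` set is what switches between the "tested and improved" and "tested at all" definitions.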

Prioritization methods 2–4—Rankings from the stakeholder workshop

In the workshop, pre-small-group rankings, go-zone plot rankings, and post-small-group rankings were generated. In the pre- and post-small-group rankings, participants were asked to respond to an online REDCap survey and individually rank a set of 16 PrEP delivery strategies on their perceived effectiveness to improve PrEP delivery in MCH for pregnant women. Strategies were sequentially ranked from 1 (most effective) to 16 (least effective). The go-zone plots were generated in small groups where participants rated each strategy on a 5-point Likert scale for perceived feasibility and effectiveness. Each small group’s average feasibility and effectiveness scores were plotted; strategies with mean feasibility and effectiveness scores of 2.5 or higher were considered within the “go-zone.”
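The go-zone rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the strategy labels and scores are invented, and averaging the small-group means per strategy before applying the 2.5 cut-off is one plausible reading of the procedure, not a confirmed description of the study's analysis.

```python
from statistics import mean

# Hypothetical small-group mean scores on the 1-5 scale:
# strategy -> list of (feasibility, effectiveness), one pair per small group.
group_means = {
    "Strategy A": [(4.5, 4.0), (4.0, 3.5)],
    "Strategy B": [(2.0, 4.5), (2.5, 4.0)],
    "Strategy C": [(2.0, 2.0), (1.5, 2.5)],
}

GO_ZONE_THRESHOLD = 2.5  # cut-off stated in the text


def go_zone_summary(group_means, threshold=GO_ZONE_THRESHOLD):
    """Average each criterion across groups and flag go-zone membership."""
    summary = {}
    for strategy, pairs in group_means.items():
        feasibility = mean(f for f, _ in pairs)
        effectiveness = mean(e for _, e in pairs)
        summary[strategy] = {
            "feasibility": feasibility,
            "effectiveness": effectiveness,
            # In the go-zone only if BOTH criteria meet the threshold.
            "in_go_zone": feasibility >= threshold and effectiveness >= threshold,
        }
    return summary


summary = go_zone_summary(group_means)
```

In this toy data, "Strategy B" scores well on effectiveness but falls outside the go-zone because its mean feasibility is below the cut-off, which is the kind of divergence a two-criterion plot is meant to surface.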

Workshop evaluation

Following the workshop, participants were asked to complete a workshop evaluation survey. Participants rated their agreement with a series of statements about the workshop on a 5-point Likert scale from “Strongly disagree” to “Strongly agree.” The workshop evaluation surveys were completed online through REDCap.

Strategy bundling exercise

In a separate analysis, the strategy bundling exercise was tested as an alternative to concept mapping as traditional concept mapping is time-intensive and relatively technologically complex [38]. The strategy bundling exercise was conducted with HCWs through the HCW surveys. They were asked to create three combination packages using up to four strategies in each package based on which strategies they thought would work best in combination; strategies could be used in more than one bundle and some strategies were not used at all. They described their reasons for bundling certain strategies and how they envisioned the packages might be implemented.

Data analysis

Strategy prioritization activities

In each of the four methods used, participants rated or ranked 16 strategies; rating involved assigning a value to each strategy, while ranking involved assigning each strategy a position from 1 to 16 relative to the others. For this analysis, participant ratings were converted to rankings in which all 16 strategies were placed on a list from 1 to 16, with 1 representing the highest aggregate rating and 16 representing the lowest. The past experience survey ratings were converted to ranks in two ways: (1) the percentage of respondents who reported the strategy as having been tested and improving PrEP delivery (versus not tested or tested and did not improve delivery) and (2) the percentage of respondents who reported having tested the strategy at all, regardless of whether or not PrEP delivery improved. The go-zone plot rankings for each strategy were calculated as an average of averages: mean feasibility and effectiveness scores from each small group were calculated, and then an overarching group average was calculated for the strategies’ overall ranks. Pre- and post-small-group rankings for each strategy were obtained by averaging the rank position across all workshop participants. After the four ranked lists were created, we used Kendall’s correlation analysis to determine the similarities between strategy prioritization profiles and provide a correlation metric between ranking profiles for each of the six possible pairwise methodology comparisons [39, 40]. A sensitivity analysis was also conducted, recalculating the past experience rankings to include strategies that had been tested overall rather than those that specifically tested and improved delivery. Kendall’s correlation coefficients were calculated across all relevant methodological comparisons for each of these measures. In an additional sensitivity analysis, we assessed the correlation between strategies ranked in the top and bottom three positions across each method. Strategy rankings were transformed into a categorical variable (1 = top 3 strategy, 0 = middle strategy, -1 = bottom 3 strategy), and Kendall’s correlation coefficients were calculated for the six main comparisons across the four methods. Finally, the overall rankings of each method were plotted to assess ranking spread across the different ranking and rating methods.
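The correlation steps above can be sketched in plain Python. The sketch assumes the tau-b variant of Kendall's coefficient, which adjusts for ties and therefore suits the three-level top/middle/bottom variable; the text does not state which variant was used. The rank lists are invented for illustration, and p-values are not computed here (a statistics package such as SciPy's `scipy.stats.kendalltau` reports them).

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equal-length sequences (adjusts for ties)."""
    if len(x) != len(y):
        raise ValueError("sequences must have the same length")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for i, j in combinations(range(len(x)), 2):
        dx, dy = x[i] - x[j], y[i] - y[j]
        if dx == 0 and dy == 0:
            continue  # tied on both variables: excluded from every count
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    untied = concordant + discordant
    denominator = sqrt((untied + tied_x_only) * (untied + tied_y_only))
    return (concordant - discordant) / denominator if denominator else float("nan")


def top_bottom_category(ranks, k=3):
    """Recode ranks as 1 (top k), -1 (bottom k), or 0 (middle)."""
    n = len(ranks)
    return [1 if r <= k else -1 if r > n - k else 0 for r in ranks]


# Hypothetical rank lists (1 = highest priority) for six strategies.
rankings = {
    "past_experience": [1, 2, 3, 4, 5, 6],
    "pre_small_group": [2, 1, 3, 5, 4, 6],
    "post_small_group": [1, 2, 4, 3, 5, 6],
    "go_zone": [3, 1, 2, 6, 4, 5],
}

# Four methods yield 4 * 3 / 2 = 6 pairwise comparisons.
pairwise_tau = {
    (a, b): kendall_tau_b(rankings[a], rankings[b])
    for a, b in combinations(rankings, 2)
}
```

For the top/bottom sensitivity analysis, the same function would be applied to `top_bottom_category(...)` of each list rather than to the raw ranks.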

Due to data collection errors, two strategies—“Coordination with adolescent friendly services” and “Task shifting any other component of PrEP counseling, assessment, or dispensing”—were excluded at the analysis stage from the original list of 16.

Strategy bundling exercise

Participants were asked to create the strategy bundles once over the phone with a study nurse and once online using a self-administered online survey; an additional sample of facility in-charges was asked to complete the strategy bundles online only. For each survey method, a composite list of all unique strategy bundles was created, and the top three and four strategy bundles were identified by calculating the frequency of respondents who selected each bundle. A sensitivity analysis was conducted to assess any differences in strategy bundle selection between phone and online survey methods among the participants who completed both surveys. Two Sankey diagrams, traditionally used to illustrate flows of energy, materials, costs, etc. between defined categories, were constructed to map the order of strategy selection in each bundle based on the first strategy selected among phone and online participants [41]; these diagrams were completed among the survey participants who selected exactly 4 strategies per bundle using SankeyMATIC [42].
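The bundle tally described above could look something like the following Python sketch. It assumes that a bundle is identified by its set of strategy numbers regardless of selection order and that each respondent counts at most once per bundle; neither assumption is confirmed by the text. The submissions are invented, and `is_consecutive` is a hypothetical helper for flagging bundles that simply follow the survey's list order.

```python
from collections import Counter

# Hypothetical submissions: each respondent proposes up to three bundles,
# each bundle holding up to four strategy numbers from the survey list.
submissions = [
    [(5, 6, 7, 8), (1, 2, 3, 4), (9, 10, 11, 12)],
    [(8, 7, 6, 5), (1, 2, 3, 4)],
    [(5, 6, 7, 8), (2, 9, 14)],
]


def bundle_frequencies(submissions):
    """Count how many respondents selected each unique bundle."""
    counts = Counter()
    for bundles in submissions:
        # frozenset ignores selection order; the enclosing set prevents
        # one respondent from being counted twice for the same bundle.
        for bundle in {frozenset(b) for b in bundles}:
            counts[bundle] += 1
    return counts


def is_consecutive(bundle):
    """True if the strategy numbers form an unbroken run in list order."""
    ordered = sorted(bundle)
    return all(b - a == 1 for a, b in zip(ordered, ordered[1:]))


counts = bundle_frequencies(submissions)
top_bundles = counts.most_common(3)
consecutive_share = sum(is_consecutive(b) for b in counts) / len(counts)
```

A high `consecutive_share` among the most frequent bundles would be one simple signal that respondents followed the listed order rather than weighing combinations.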

Workshop evaluation

The proportions of participants’ agreement with each statement were graphed to show the distribution of agreement.

Reporting guidelines

A completed copy of the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) guidelines for cross-sectional studies can be found in Additional file 2 [43].

Results

Participant demographics

Out of the 185 HCWs asked to participate in the past experience surveys, 182 completed the survey (N = 127 for both online and phone surveys; N = 55 facility in-charges for online survey only). Of the 48 participants invited to the stakeholder workshop, the majority completed the pre- and post-small-group discussion rankings (N = 44 and N = 40, respectively). As previously described, the PrEPARE stakeholder workshop participants were older on average than the PrEP-experienced HCWs (median age 40 and 32, respectively). Both groups had similar gender distribution and educational attainment: 62.8% of HCWs and 56.5% of workshop participants were female, and 95.6% and 93.5%, respectively, had attended college or university. Among the PrEP-experienced HCWs, there was a median of 2.3 years providing care to pregnant and postpartum women and providing PrEP to this population. Additionally, 61.8% of HCWs had received training on providing PrEP adherence counseling to pregnant and postpartum women.

Correlation between prioritization methodologies’ ranking profiles

We compared each pair of prioritization method rankings using correlation coefficients (Table 1). The correlation was strongest and significant between the pre- and post-small-group rankings (Tau: 0.648; p < 0.001). There was a moderate degree of correlation trending towards statistical significance between the past-experience surveys and the post-small-group rankings (Tau: 0.385; p = 0.062). In a sensitivity analysis, we re-computed past experience survey rankings focusing on strategies that had been tested overall, regardless of impact on delivery (sensitivity analysis: strategy was tested at all vs original analysis: strategy was tested and improved delivery; Table 1). There was stronger correlation across all methodological comparisons; for example, comparing the past experience surveys and the post-small-group rankings, the sensitivity analysis correlation was stronger than the original (sensitivity: Tau: 0.451, p = 0.026 vs original: Tau: 0.385, p = 0.062).

Additionally, in the sensitivity analysis comparing strategies ranked in the top or bottom three positions for each method, we found the highest correlation between the past experience surveys, pre-small-group rankings, and post-small-group rankings (sensitivity: Tau: 0.632 for all comparisons). In contrast, the go-zone plot rankings had lower correlation across all comparisons, ranging from a Tau of 0.298 compared to pre-small-group rankings to a Tau of 0.456 compared to the post-small-group rankings.

Ranking spread

The ranking spread for each strategy across the past experience surveys, pre/post-small-group rankings, and go-zone plots is depicted in Fig. 2. The highest and lowest ranked strategies had the least spread, with an overall difference of only two ranked positions. However, the middle ranked 12 strategies had a much higher degree of heterogeneity in the rankings across the four methodologies. Several strategies had very disparate rankings between the pre- and post-small-group ranks and the go-zone plot ranks. For example, “Task shifting PrEP counseling” was ranked 4th and 2nd in the pre- and post-small-group rankings, respectively, while it was ranked 10th in the go-zone plots. Similarly, “Fast tracking in MCH clinics” was ranked 1st and 3rd in the pre- and post-small-group rankings while it dipped to 9th in the go-zone plot rankings. The small group discussions of these two strategies revealed feasibility concerns. In their reports, facilitators noted broad discussion of the perceived feasibility drawbacks of both strategies, despite strong perceptions of effectiveness for each. It was noted that both of these strategies required additional staff, may infringe on the rights of non-PrEP clients at the clinics, and may conflict with implementing partner priorities.

Strategy bundling approaches—accuracy and pragmatic considerations

Selected strategy bundles were similar between the HCWs who completed the surveys over the phone compared to online. Among the phone surveys, 226 unique strategy bundles were identified. Of these, 179 bundles (79.2%) were selected by a single participant, 29 (12.8%) were selected by 2 participants, and 18 (8.0%) were selected by three or more participants. The top three to four strategy bundles are outlined in Table 2 and aligned most with the order in which the strategies appeared in the survey list. The strategy bundle that was most frequently identified by participants included all four strategies that related to fast tracking PrEP clients (n = 31; strategies 5, 6, 7, 8 in the list). Participants also identified strategy bundles that focused on task shifting in different PrEP delivery locations (n = 28; strategies 1, 2, 3, 4 in the list) as well as patient PrEP education and provider training (n = 9; strategies 9, 10, 11, 12 in the list).

Among the online surveys, 350 unique strategy bundles were identified. Like the phone surveys, 300 bundles (85.7%) were identified by one participant, 32 (9.1%) were identified by 2 participants, and 18 (5.1%) were identified by three or more. The top four strategy bundles also focused on fast tracking (n = 20; strategies 5, 6, 7, 8 in the list), task shifting (n = 20; strategies 1, 2, 3, 4 in the list), and patient education (n = 5; strategies 9, 10, 15, 16 in the list). However, the online survey respondents included “provision of communication aids” and “coordination with adolescent friendly services” in the patient education bundle rather than focusing on provider training.

The sensitivity analysis showed similar results to both the phone and online surveys (Table 2). The plurality of the most frequently identified strategy bundles consisted of consecutively ordered strategies from the overall list. The Sankey diagrams for both phone and online survey participants demonstrate the diversity of strategy bundles selected by participants (Fig. 3). The plurality of participants selected strategy bundles that were thematically grouped by task shifting (strategies 1, 2, 3, 4 in the list) and fast-tracking PrEP clients (strategies 5, 6, 7, 8 in the list). However, it is clear that certain strategies such as “Adolescent friendly services” were popular across participants of both the phone and online surveys; although this strategy was not consistently grouped with a particular strategy bundle, it was frequently included in strategy bundles (Fig. 3: panel A, n = 80; panel B, n = 93).

While completing the strategy bundling exercise, participants were given the option to explain why they selected these strategy bundles in open answer text boxes. These qualitative responses from participants highlighted a variety of motivations for strategy bundle selection, including saving time for providers and mothers, motivating clients to start and maintain PrEP use, reducing provider workload, and avoiding missed opportunities to initiate clients on PrEP. Most responses focused on the impact of the individual strategies, rather than describing how the strategies might synergistically work together.

Workshop evaluations

Of the 48 workshop participants, 46 (95.8%) completed the workshop evaluation surveys. Overall, 91% of participants agreed that there was sufficient time to discuss and review the proposed strategies, and the majority of workshop participants agreed or strongly agreed with all statements (Fig. 4). The statements with which participants most disagreed were having the support of their bosses (21%) and colleagues (14%) for participation in the workshop. No participants disagreed with the statement that they were “able to rank potential strategies easily,” and 89% of participants agreed or strongly agreed that the workshop led to the identification of appropriate strategies.

Discussion

In this study, we compared four strategy prioritization activities with the goal of understanding the practicality of these approaches and making recommendations for streamlining strategy prioritization in future work. We observed the strongest correlation between the pre- and post-small-group rankings, suggesting that the small group discussion between the two rankings did not substantially alter individual rankings. We hypothesize several reasons why the discussions did not have an impact on individual rankings. The small groups placed participants with others of a similar cadre to reduce power imbalances and each group only discussed a subset of 3–5 of the 16 strategies to align stakeholders’ relevant experience to potential strategies. However, reviewing a subset of strategies may have made it difficult to adapt ranking of the full 16 strategies during the post-small-group rankings. Additionally, the small group rankings reflected the perceptions of those who were selected to discuss that strategy in a small group setting, preferentially those with more experience with a particular system or strategy. Finally, time constraints during the workshop may have left insufficient time for logistical processing, including adequate time for presentations on each strategy to achieve group consensus on strategy definitions. Based on these data, we recommend that future strategy prioritization workshops create and send pre-reads to participants that define the implementation science terms to be evaluated (e.g., feasibility, effectiveness) and outline the strategies to be considered. Use of pre-reads, followed by an overview presentation during the workshop, will enable a more rapid, mutual understanding of strategies and implementation science terms. 
Future methodologic comparisons of prioritization methods should develop a shorter list of strategies for consideration and ask participants to evaluate all potential strategies rather than a subset to learn whether small group discussions impact individual rankings.

We observed that the ranking spread across strategies using different methods was quite consistent for the highest and lowest ranked items, but less tightly aligned in the middle ranks. In our sensitivity analysis of the top and bottom ranked strategies, we observed that the past experience surveys and the pre- and post-small-group rankings were much more tightly correlated with one another than with the go-zone plot rankings. The correlation between methodologies may have been impacted by having too many items to rank. Ranking tasks are more difficult to complete with a larger list of items; while participants are often able to clearly identify the highest and lowest ranked items, there is often less distinction between the items in the middle of the list [44], with more unexplained variance in ranking choices as the number of ranking choices increases [45]. Strategy prioritization exercises should consider limiting the number of options when using ranking. However, in the three methods that required participants to rank all 16 strategies, there was good agreement about the top and bottom three strategies, indicating that participants were able to reach a general consensus about the strategies they would prefer to implement based on perceived feasibility and effectiveness. Had participants been required to complete the go-zone plots evaluating all 16 strategies rather than a subset, we may have observed higher correlation across these methodological comparisons. This discrepancy further demonstrates the need to rank all strategies in each method when multiple methods are being utilized.

We noted that workshop rankings correlated more closely with sensitivity analyses of past experience survey rankings focused on whether a strategy had been tested at all, rather than tested and improved delivery. Although these comparisons were drawn across two different populations, they highlight how decision inertia may impact decision-making [46, 47]. Cognitive research has shown that when new evidence is presented that aligns with an individual’s initial choice, that evidence is processed more efficiently [48]. As workshop stakeholders were familiar with which strategies had been tested rather than those that actually improved delivery, there may have been bias to align their rankings accordingly. It is particularly interesting that feasibility pre- and post-small-group rankings were more tightly correlated than effectiveness rankings because participants were specifically asked to consider effectiveness in their pre- and post-small-group rankings. Study staff noted that stakeholders, in particular HCWs, were more likely to view feasibility as something within their control, depending on the implementation of the individual strategy. However, effectiveness was seen as a patient-dependent construct and beyond the control of implementing HCWs, indicating that potential effectiveness may be more difficult to judge than feasibility. The use of pre-reads may assist in overcoming these challenges. For example, the pre-reads may be used to define implementation terms and familiarize participants with all strategies, while the workshop may be used to review the pre-read content in a presentation and conduct the ranking activities. Additionally, obtaining and presenting patient-level preferences regarding the considered strategies may provide further insights into strategy effectiveness; as Kenyan stakeholders perceived effectiveness to be indicative of a patient-level construct, they may benefit from understanding patient preferences as they consider strategies’ effectiveness.

Decision inertia and decision-making bias may be addressable with group decision-making or instructed dissent to encourage dissenting viewpoints [46, 49]. We utilized the nominal group technique (NGT) in this study to encourage democratic participation, as this method is designed to quickly reach consensus and has been increasingly used in medical and health services research [7, 50, 51, 52, 53, 54]. However, the NGT may not have been able to sufficiently overcome decision inertia because of the homogeneity of the small groups: we had created small groups of similar cadre to reduce power imbalances between patients, providers, and policymakers [46]. Group strategy prioritization exercises may still be useful to interrupt decision inertia, but careful attention should be paid to balancing the goals of homogeneity to reduce power dynamics and heterogeneity to interrupt decision inertia.

The results from the workshop evaluation surveys demonstrate a high level of acceptability regarding the methods for strategy prioritization utilized during the workshop. The majority of participants felt that the NGT and go-zone plots were useful to ensure that all voices were heard and to select appropriate strategies moving forward. Although the majority of participants felt there was sufficient time to discuss potential strategies, there was a slightly elevated proportion of participants who felt the tasks during the workshop were unclear; the proposed use of pre-reads may provide the additional time necessary to more fully describe the workshop activities and ensure mutual understanding of the proposed strategies among participants. Care needs to be taken to ensure that individuals’ participation is supported by their bosses and colleagues, particularly for HCWs as their absence from work may impact clinic flow; extending the workshop would likely make this stakeholder engagement strategy less acceptable.

Our strategy bundling exercise tested an approach to conducting stakeholder-engaged prioritization that required less time and fewer resources than concept mapping. However, our strategy bundling exercise was not effective, as participants defaulted to creating bundles that mostly reflected the ordered list provided. This reflects order bias and may be partially attributable to the design of the strategy list, which was compiled by broad themes (e.g., fast tracking, task shifting), prompting participants to select strategies thematically rather than creating synergistic bundles [55]. Strategy bundling exercises should consider randomizing strategy order, as is recommended in survey literature [56, 57].
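As an illustration of the randomization recommendation, the sketch below shuffles the strategy list separately for each participant so that list position no longer tracks theme. The strategy labels, the `randomized_order` helper, and the seeding scheme are hypothetical; a survey platform's built-in randomization option would serve the same purpose.

```python
import random

# Hypothetical placeholder labels; the study's 16 strategies are not reproduced here.
STRATEGIES = ["Strategy A", "Strategy B", "Strategy C", "Strategy D"]


def randomized_order(strategies, participant_id, base_seed=2023):
    """Return a per-participant shuffled copy of the strategy list."""
    # Seeding from the participant ID keeps each participant's order
    # reproducible, so the presented order can be recovered during analysis.
    rng = random.Random(f"{base_seed}-{participant_id}")
    shuffled = list(strategies)  # copy so the master list stays untouched
    rng.shuffle(shuffled)
    return shuffled


order_for_participant_1 = randomized_order(STRATEGIES, participant_id=1)
```

Recording the presented order alongside each response would also allow a later check of whether position in the list still predicts bundle membership.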

In addition to the comparisons of prioritization activities, we evaluated a simplified strategy bundling exercise to determine whether this approach is comparable to more traditional approaches such as concept mapping. We compared two levels of time and staff resources by collecting strategy bundling data by phone and online survey. We found that individuals who completed the survey over the phone with study staff were much more likely to choose the required number of strategies per bundle than those who completed the survey independently online. The advantage conferred by staff correcting participants’ number of strategies selected could be overcome with online constraints that force inclusion of the required number of strategies. Alternatively, concept mapping could be a better approach because its highly structured nature removes the possibility of incorrectly including or excluding particular strategies. A pooled analysis of 69 concept mapping studies found high internal representational validity and reliability, suggesting that concept mapping is likely a superior method of identifying strategy bundles despite its time and resource complexity [58]. We recommend that teams consider concept mapping as a first choice; if utilizing an alternative bundling approach, we recommend using surveys that enforce bundling rules. The qualitative responses participants provided explaining why they chose each strategy bundle demonstrated a variety of motivations for bundle selection, but a health systems view was not applied. Study staff noted that HCWs tended to view the potential strategies from an HCW-cost vs. client-benefit perspective, first considering the challenges to strategy implementation and weighing them against the potential benefits to clients, which may explain why HCWs were more likely to highlight individual benefits to strategy implementation. Additionally, HCWs had previous experience organically testing PrEP implementation strategies at the facilities, potentially promoting the view that strategies are individual entities rather than elements of an intervention that can work in tandem to address multiple barriers. Future work in this area should consider additional prompting and targeted questions to address facility and program determinants of intervention implementation and how different types of strategies may work synergistically to produce the desired outcomes, particularly among HCWs.
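The recommendation to enforce bundling rules in an online survey can be sketched as a simple validation step that rejects a response until it satisfies the rules. The limits below reflect the study design (three bundles with up to four strategies per bundle); the `validate_bundles` function, the minimum of one strategy per bundle, and the no-repeat rule are assumptions added for illustration.

```python
MAX_BUNDLES = 3     # study design: three bundles
MAX_PER_BUNDLE = 4  # study design: up to four strategies per bundle


def validate_bundles(bundles, known_strategies):
    """Return a list of rule violations; an empty list means the response is accepted."""
    errors = []
    if len(bundles) != MAX_BUNDLES:
        errors.append(f"expected {MAX_BUNDLES} bundles, got {len(bundles)}")
    for idx, bundle in enumerate(bundles, start=1):
        # Assumed rule: each bundle needs at least one strategy.
        if not 1 <= len(bundle) <= MAX_PER_BUNDLE:
            errors.append(
                f"bundle {idx} has {len(bundle)} strategies (allowed: 1-{MAX_PER_BUNDLE})"
            )
        # Assumed rule: a strategy may appear only once within a bundle.
        if len(set(bundle)) != len(bundle):
            errors.append(f"bundle {idx} repeats a strategy")
        unknown = sorted(set(bundle) - set(known_strategies))
        if unknown:
            errors.append(f"bundle {idx} contains unknown strategies: {unknown}")
    return errors


# Hypothetical responses using placeholder strategy labels.
known = ["S1", "S2", "S3", "S4", "S5", "S6", "S7", "S8"]
accepted = validate_bundles([["S1", "S2"], ["S3"], ["S4", "S5", "S6"]], known)
rejected = validate_bundles([["S1", "S2", "S3", "S4", "S5"], ["S6"], ["S7"]], known)
```

Returning all violations at once, rather than stopping at the first, mirrors what study staff did by phone: the participant sees everything that needs correcting before resubmitting.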

Additionally, it may be difficult to achieve full consideration of strategy synergies through a strategy bundling exercise. As it remains challenging for individuals to consider and hold all potential downstream impacts of strategy combinations, previous work has called for a complex systems lens with regard to combination HIV prevention in order to differentiate between additive and synergistic effects of multiple interventions [59, 60]. Simulation modeling-based approaches are useful for tackling complex systems in public health, including intervention evaluations prior to their actual implementation [60, 61]. Future strategy prioritization and bundling activities should consider the use of simulation modeling techniques such as discrete event simulation and system dynamics models to more accurately assess how strategy combinations will synergistically impact clinic operations and patient flow, as has been implemented in the U.S. Department of Veterans Affairs [60, 62].
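To give a sense of what a simulation-based assessment involves, the following is a deliberately small discrete-event-style sketch of a first-come, first-served clinic queue. Everything in it is an assumption made for illustration: the `simulate_clinic` function, exponential arrival and service times, and the parameter values are hypothetical and do not represent the study's clinics or any model used in the cited work.

```python
import heapq
import random


def simulate_clinic(n_clients, n_providers, mean_interarrival, mean_service, seed=0):
    """Simulate a first-come, first-served clinic and return the mean wait in minutes."""
    rng = random.Random(seed)
    # Min-heap of the times at which each provider next becomes free.
    free_at = [0.0] * n_providers
    heapq.heapify(free_at)
    clock = 0.0
    total_wait = 0.0
    for _ in range(n_clients):
        # Arrival event: advance the clock by a random inter-arrival gap.
        clock += rng.expovariate(1.0 / mean_interarrival)
        # The client is seen by whichever provider becomes free earliest.
        provider_free = heapq.heappop(free_at)
        start = max(clock, provider_free)
        total_wait += start - clock
        # Departure event: that provider is busy until service ends.
        service = rng.expovariate(1.0 / mean_service)
        heapq.heappush(free_at, start + service)
    return total_wait / n_clients


# Hypothetical comparison: one provider versus two (e.g., after task shifting),
# using the same seed so both runs see identical arrivals and service times.
wait_one = simulate_clinic(200, 1, mean_interarrival=10.0, mean_service=8.0, seed=1)
wait_two = simulate_clinic(200, 2, mean_interarrival=10.0, mean_service=8.0, seed=1)
```

A model suited to decision-making would need locally measured arrival and service patterns, multiple service steps, and explicit representation of how each strategy changes them; the sketch only shows the mechanics of comparing scenarios before implementation.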

Implications for practice

As previously discussed, our recommendations for future strategy prioritization work include limiting the number of strategies for each prioritization activity and ranking all strategies in each prioritization activity when multiple activities are utilized. While the past experience surveys with healthcare workers provided a useful starting point for identifying implementation strategies that aligned with stakeholder goals, the prioritization activities from the stakeholder workshop were able to incorporate a greater diversity of perspectives and explicitly consider both feasibility and effectiveness. In the context of a stakeholder workshop, we recommend sending pre-reads to participants to explain the strategies under consideration and the implementation science terms that will be used, and providing patient-level data on strategy preferences for consideration in discussions of potential effectiveness. Finally, in future strategy bundling exercises, we recommend using methods such as discrete event simulation or system dynamics modeling approaches to more fully account for the complexity involved in assessing strategy synergies.

Next steps for research

Future research should directly assess the ideal number of strategies to include in prioritization activities. Additionally, there is a dearth of comparative effectiveness literature for strategy prioritization activities. There is a need for future work involving more than one prioritization method to make direct comparisons between the results of these methods in order to optimize time and resources expended on strategy prioritization.

This study included diverse stakeholder perspectives in the evaluation of modified strategy prioritization methodologies. Few studies have had the ability to draw comparisons between ranking methodologies within the same study. Limitations of the PrEPARE study include potential recall bias in healthcare worker responses, power differentials in the stakeholder workshop small groups, and the use of purposive sampling. The primary limitation of this analysis is that the PrEPARE study was not designed to directly compare the strategy prioritization methodologies. However, there is still value in assessing the comparative usefulness of these methodologies as they were applied in practice and to inform future structured experiments, as has been done in studies comparing the time, resources, and yield of strategy elicitation exercises [63]. As the 16 implementation strategies were thematically identified through prior qualitative work, we were unable to systematically specify the strategies as per Proctor et al. [64]. However, after the prioritization process, all strategies to be tested were specified by the study team. We also did not perform explicit strategy-barrier mapping, but several of the prioritized strategies matched reasonably well to the barriers identified post hoc, such as retraining and training new providers to address insufficient provider-patient ratios. Additionally, we were unable to analyze strategies individually to determine whether the rankings changed during the workshop due to barriers in data structure and linkages. While small group discussions may be helpful in strategy consideration, the use of go-zone plots may not be additionally useful to identify a final set of strategies for implementation. Furthermore, there was no a priori rationale for thematically grouping strategies in the provided list for the strategy bundling exercise; the challenges with strategy non-randomization presented here highlight the need for future strategy bundling activities to randomize the strategies provided to participants as part of best practices. In the past experience surveys, the use of self-reported improvements in PrEP service delivery may be subject to recall bias. However, we are utilizing PrEP-experienced HCWs’ perceived service delivery improvement to generate further discussion of potential strategies and offer a starting point for identifying implementation strategies that align with stakeholder goals. We are currently testing the prioritized strategies to provide empiric evidence of any observed improvements in PrEP service delivery.

Conclusion

In this study, we conducted the first head-to-head comparison of pragmatic stakeholder engagement methods utilized in low-resource settings, including four implementation strategy prioritization activities and a strategy bundling exercise. We found that participants were more likely to prioritize familiar strategies and that ranking exercises were less effective with large numbers of strategies. In future strategy prioritization activities, we recommend using pre-reads to define both implementation science terminology and strategies as well as providing a short list of strategies for small group discussions, followed by a ranking of all listed strategies. Additionally, we found that a low time- and resource-intensive strategy bundling exercise was not effective in identifying strategy bundles. In future strategy bundling exercises, we recommend utilizing existing methods with high internal validity like concept mapping, or simulation modeling-based approaches.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to limitations in data sharing permissions from the Kenyan ethical review boards but are available from the corresponding author on reasonable request.

Abbreviations

- ERC: Ethics and Research Committee
- HCW: Healthcare worker
- HIV: Human immunodeficiency virus
- HTS: HIV testing services
- IRB: Institutional Review Board
- IS: Implementation Science
- KNH-UoN: Kenyatta National Hospital-University of Nairobi
- LMIC: Low- and middle-income countries
- MCH: Maternal and child health
- NGT: Nominal group technique
- PrEP: Pre-exposure prophylaxis
- PrEPARE: PrEP in Pregnancy, Accelerating Reach and Efficiency
- RAST: Rapid Assessment Screening Tool
- UW: University of Washington
- WHO: World Health Organization

References

Murphy MK, Black NA, Lamping DL, McKee CM, Sanderson CF, Askham J, et al. Consensus development methods, and their use in clinical guideline development. Health Technol Assess Winch Engl. 1998;2(3):i–iv, 1–88.

Brett J, Staniszewska S, Mockford C, Herron-Marx S, Hughes J, Tysall C, et al. Mapping the impact of patient and public involvement on health and social care research: a systematic review. Health Expect Int J Public Particip Health Care Health Policy. 2014;17(5):637–50.

Gray-Burrows KA, Willis TA, Foy R, Rathfelder M, Bland P, Chin A, et al. Role of patient and public involvement in implementation research: a consensus study. BMJ Qual Saf. 2018;27(10):858–64.

Fernandez ME, ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7. Available from: https://www.frontiersin.org/articles/10.3389/fpubh.2019.00158 Cited 2022 Aug 10

Bartholomew Eldredge LK, Markham CM, Ruiter RAC, Fernández ME, Kok G, Parcel GS. Planning health promotion programs: an intervention mapping approach. Hoboken, UNITED STATES: John Wiley & Sons, Incorporated; 2016. Available from: http://ebookcentral.proquest.com/lib/washington/detail.action?docID=4312654 Cited 2021 Oct 4

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94.

Harvey N, Holmes CA. Nominal group technique: an effective method for obtaining group consensus. Int J Nurs Pract. 2012;18(2):188–94.

de Meyrick J. The Delphi method and health research. Health Educ. 2003;103(1):7–16.

Allen J, Dyas J, Jones M. Building consensus in health care: a guide to using the nominal group technique. Br J Community Nurs. 2004;9(3):110–4.

Wortman PM, Vinokur A, Sechrest L. Do consensus conferences work? A process evaluation of the NIH Consensus Development Program. J Health Polit Policy Law. 1988;13(3):469–98.

Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10(1):109.

Mangham LJ, Hanson K, McPake B. How to do (or not to do) … Designing a discrete choice experiment for application in a low-income country. Health Policy Plan. 2009;24(2):151–8.

Bridges JFP, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, et al. Conjoint analysis applications in health—a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14(4):403–13.

Orme B. Getting started with conjoint analysis. Mark Res. Winter. 2005;17(4):42.

Marley AAJ, Pihlens D. Models of best–worst choice and ranking among multiattribute options (profiles). J Math Psychol. 2012;56(1):24–34.

Kaynak E. Service Industries in Developing Countries. London, UNITED KINGDOM: Taylor & Francis Group; 2004. Available from: http://ebookcentral.proquest.com/lib/washington/detail.action?docID=1395316 Cited 2021 Oct 26

Green PE, Krieger AM, Wind Y. Thirty years of conjoint analysis: reflections and prospects. Interfaces. 2001;31(3):S56.

Davies M. Concept mapping, mind mapping and argument mapping: what are the differences and do they matter? High Educ. 2011;62(3):279–302.

Trochim W, Kane M. Concept mapping: an introduction to structured conceptualization in health care. Int J Qual Health Care. 2005;17(3):187–91.

Kane M, Trochim W. Concept Mapping for Planning and Evaluation. 2455 Teller Road, Thousand Oaks California 91320 United States of America: SAGE Publications, Inc.; 2007. Available from: http://methods.sagepub.com/book/concept-mapping-for-planning-and-evaluation Cited 2021 Oct 26

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7. Available from: https://www.frontiersin.org/articles/10.3389/fpubh.2019.00003 Cited 2022 Aug 10

Mofenson LM, Baggaley RC, Mameletzis I. Tenofovir disoproxil fumarate safety for women and their infants during pregnancy and breastfeeding. AIDS. 2017;31(2):213–32.

Joseph Davey DL, Pintye J, Baeten JM, Aldrovandi G, Baggaley R, Bekker LG, et al. Emerging evidence from a systematic review of safety of pre-exposure prophylaxis for pregnant and postpartum women: where are we now and where are we heading? J Int AIDS Soc. 2020;23(1): e25426.

Heffron R, Pintye J, Matthews LT, Weber S, Mugo N. PrEP as Peri-conception HIV Prevention for Women and Men. Curr HIV/AIDS Rep. 2016;13(3):131–9.

Fonner VA, Dalglish SL, Kennedy CE, Baggaley R, O’Reilly KR, Koechlin FM, et al. Effectiveness and safety of oral HIV preexposure prophylaxis for all populations. AIDS. 2016;30(12):1973–83.

Baeten JM, Donnell D, Ndase P, Mugo NR, Campbell JD, Wangisi J, et al. Antiretroviral prophylaxis for HIV prevention in heterosexual men and women. N Engl J Med. 2012;367(5):399–410.

Celum CL, Delany-Moretlwe S, McConnell M, Rooyen H van, Bekker LG, Kurth A, et al. Rethinking HIV prevention to prepare for oral PrEP implementation for young African women. J Int AIDS Soc 18(1). Available from: https://go-gale-com.offcampus.lib.washington.edu/ps/i.do?p=AONE&sw=w&issn=17582652&v=2.1&it=r&id=GALE%7CA435190660&sid=googleScholar&linkaccess=abs Cited 2021 Sep 27

Pintye J, Baeten JM, Celum C, Mugo N, Ngure K, Were E, et al. Maternal tenofovir disoproxil fumarate use during pregnancy is not associated with adverse perinatal outcomes among HIV-infected East African women: a prospective study. J Infect Dis. 2017;216(12):1561–8.

Mugwanya KK, Pintye J, Kinuthia J, Abuna F, Lagat H, Begnel ER, et al. Integrating preexposure prophylaxis delivery in routine family planning clinics: A feasibility programmatic evaluation in Kenya. PLoS Med. 2019;16(9): e1002885.

Pintye J, Kinuthia J, Roberts DA, Wagner AD, Mugwanya K, Abuna F, et al. Brief Report: Integration of PrEP services into routine antenatal and postnatal care: experiences from an implementation program in Western Kenya. JAIDS J Acquir Immune Defic Syndr. 2018;79(5):590–5.

Dettinger JC, Kinuthia J, Pintye J, Mwongeli N, Gómez L, Richardson BA, et al. PrEP Implementation for Mothers in Antenatal Care (PrIMA): study protocol of a cluster randomised trial. BMJ Open. 2019;9(3):e025122.

Beima-Sofie K. Implementation challenges and strategies in integration of PrEP into maternal and child health and family planning services: Experiences of frontline healthcare workers in Kenya. Mexico City, Mexico.: 10th International AIDS Society (IAS) Conference on HIV Science; 2019.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81.

Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O’Neal L, et al. The REDCap consortium: Building an international community of software platform partners. J Biomed Inform. 2019;1(95): 103208.

Poll Everywhere. Poll Everywhere. Available from: https://www.polleverywhere.com Cited 2022 Aug 16

Trochim WM, McLinden D. Introduction to a special issue on concept mapping. Eval Program Plann. 2017;1(60):166–75.

Akoglu H. User’s guide to correlation coefficients. Turk J Emerg Med. 2018;18(3):91–3.

Kendall MG. A new measure of rank correlation. Biometrika. 1938;30(1–2):81–93.

Bakenne A, Nuttall W, Kazantzis N. Sankey-diagram-based insights into the hydrogen economy of today. Int J Hydrog Energy. 2016;41(19):7744–53.

SankeyMATIC: A Sankey diagram builder for everyone. Available from: https://sankeymatic.com/ Cited 2022 Aug 22

Cuschieri S. The STROBE guidelines. Saudi J Anaesth. 2019;13(Suppl 1):S31–4.

Iarossi G. The power of survey design: a user’s guide for managing surveys, interpreting results, and influencing respondents. The World Bank; 2006.

Bradley M, Daly A. Use of the logit scaling approach to test for rank-order and fatigue effects in stated preference data. Transportation. 1994;21(2):167–84.

Bang D, Frith CD. Making better decisions in groups. R Soc Open Sci. 2017;4(8): 170193.

Akaishi R, Umeda K, Nagase A, Sakai K. Autonomous mechanism of internal choice estimate underlies decision inertia. Neuron. 2014;81(1):195–206.

Talluri BC, Urai AE, Tsetsos K, Usher M, Donner TH. Confirmation bias through selective overweighting of choice-consistent evidence. Curr Biol. 2018;28(19):3128-3135.e8.

Herbert TT, Estes RW. Improving executive decisions by formalizing dissent: the corporate devil’s advocate. Acad Manage Rev. 1977;2(4):662–7.

Nominal Group Technique (NGT) - Nominal Brainstorming Steps | ASQ. Available from: https://asq.org/quality-resources/nominal-group-technique Cited 2022 Mar 24

Jones J, Hunter D. Consensus methods for medical and health services research. BMJ. 1995;311(7001):376–80.

Dzinamarira T, Mulindabigwi A, Mashamba-Thompson TP. Co-creation of a health education program for improving the uptake of HIV self-testing among men in Rwanda: nominal group technique. Heliyon. 2020;6(10): e05378.

Mashamba-Thompson TP, Lessells R, Dzinamarira T, Drain P, Thabane L. Co-creation of HIVST delivery approaches for improving urban men’s engagement with HIV services in eThekwini District, KwaZulu-Natal: nominal group technique. 2021; Available from: https://www.preprints.org/manuscript/202106.0273/v1 Cited 2022 Mar 24

Hajizadevalokolaee. Strategies for improving the integrated program of HIV/AIDS with sexual and reproductive health: using nominal group technique. Available from: https://www.jnmsjournal.org/article.asp?issn=2345-5756;year=2018;volume=5;issue=4;spage=147;epage=152;aulast=Hajizadevalokolaee Cited 2022 Mar 24

Drury DH, Farhoomand A. Improving management information systems research: question order effects in surveys. Inf Syst J. 1997;7(3):241–51.

Wilson EV, Lankton NK. Some unfortunate consequences of non-randomized, grouped-item survey administration in IS research. In: Proceedings of the International Conference on Information Systems, ICIS 2012, Orlando, Florida, USA, December 16–19, 2012. Association for Information Systems; 2012. Available from: http://aisel.aisnet.org/icis2012/proceedings/ResearchMethods/4 Cited 2022 Aug 23

Loiacono ET, Wilson EV. Do we truly sacrifice truth for simplicity: comparing complete individual randomization and semi- randomized approaches to survey administration. AIS Trans Hum-Comput Interact. 2020;12(2):45–69. https://doi.org/10.17705/1thci.00128.

Rosas SR, Kane M. Quality and rigor of the concept mapping methodology: a pooled study analysis. Eval Program Plann. 2012;35(2):236–45.

Brown G, Reeders D, Dowsett GW, Ellard J, Carman M, Hendry N, et al. Investigating combination HIV prevention: isolated interventions or complex system. J Int AIDS Soc. 2015;18(1):20499.

Wagner AD, Crocker J, Liu S, Cherutich P, Gimbel S, Fernandes Q, et al. Making smarter decisions faster: systems engineering to improve the global public health response to HIV. Curr HIV/AIDS Rep. 2019;16(4):279–91.

Cooper K, Brailsford SC, Davies R. Choice of modelling technique for evaluating health care interventions. J Oper Res Soc. 2007;58(2):168–76.

Zimmerman L, Lounsbury DW, Rosen CS, Kimerling R, Trafton JA, Lindley SE. Participatory system dynamics modeling: increasing stakeholder engagement and precision to improve implementation planning in systems. Adm Policy Ment Health Ment Health Serv Res. 2016;43(6):834–49.

Becker-Haimes EM, Ramesh B, Buck JE, Nuske HJ, Zentgraf KA, Stewart RE, et al. Comparing output from two methods of participatory design for developing implementation strategies: traditional contextual inquiry vs. rapid crowd sourcing. Implement Sci IS. 2022;17(1):46.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci IS. 2013;1(8):139.

Acknowledgements

We thank the Kenyan Ministry of Health and the Kisumu County, Siaya County, and Homa Bay County Health Departments for their support. We thank the women and healthcare workers who participated in this study.

Funding

This study was supported by the National Institutes of Health (NIH) (R01 HD094630-03S1 and K01MH121124). The funding agencies had no role in the study design, data collection, analysis, interpretation of data, or writing of the manuscript or the decision to submit it for publication.

Author information

Contributions

GJS, JK, and ADW are principal investigators of the study. GJS, JK, ADW, JCD, FA, BO, and NN designed the study and prepared the protocol. FA, BO, NM, LG, JS, GO, ES, and JK led the study implementation and data collection and data management. SH conducted the analysis, drafted the tables and figures, wrote the first draft, and conducted edits for the draft. GJS, BJW, ADW, JK, and JCD provided analytic and scientific guidance on analyses and interpretation. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethical approval for this study was obtained from the University of Washington (UW) Human Subjects Division Institutional Review Board (IRB) and Kenyatta National Hospital-University of Nairobi (KNH-UoN) Ethics and Research Committee (ERC). Informed consent was obtained from participants completing the past experience surveys and the workshop using the electronic consent module through REDCap.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Strategies prioritized for testing.

Additional file 2:

STROBE Statement—Checklist of items that should be included in reports of cross-sectional studies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hicks, S., Abuna, F., Odhiambo, B. et al. Comparison of methods to engage diverse stakeholder populations in prioritizing PrEP implementation strategies for testing in resource-limited settings: a cross-sectional study. Implement Sci Commun 4, 76 (2023). https://doi.org/10.1186/s43058-023-00457-9
