Abstract

Background

Clinical decision support (CDS) is increasingly used to facilitate chronic disease care. Despite increased availability of electronic health records and the ongoing development of new CDS technologies, uptake of CDS into routine clinical settings is inconsistent. This qualitative systematic review seeks to synthesise healthcare provider experiences of CDS—exploring the barriers and enablers to implementing, using, evaluating, and sustaining chronic disease CDS systems.

Methods

A search was conducted in Medline, CINAHL, APA PsycInfo, EconLit, and Web of Science from 2011 to 2021. Primary research studies incorporating qualitative findings were included if they targeted healthcare providers and studied a relevant chronic disease CDS intervention. Relevant CDS interventions were electronic health record-based and addressed one or more of the following chronic diseases: cardiovascular disease, diabetes, chronic kidney disease, hypertension, and hypercholesterolaemia. Qualitative findings were synthesised using a meta-aggregative approach.

Results

Thirty-three primary research articles were included in this qualitative systematic review. Meta-aggregation of qualitative data revealed 177 findings and 29 categories, which were aggregated into 8 synthesised findings. The synthesised findings related to clinical context, user, external context, and technical factors affecting CDS uptake. Key barriers to uptake included CDS systems that were simplistic, had limited clinical applicability in multimorbidity, and integrated poorly into existing workflows. Enablers to successful CDS interventions included perceived usefulness in providing relevant clinical knowledge and structured chronic disease care; user confidence gained through training and post-training follow-up; external contexts comprising strong clinical champions, allocated personnel, and technical support; and CDS technical features that are both highly functional and attractive.

Conclusion

This systematic review explored healthcare provider experiences, focussing on barriers and enablers to CDS use for chronic diseases. The results provide an evidence-base for designing, implementing, and sustaining future CDS systems. Based on the findings from this review, we highlight actionable steps for practice and future research.

Trial registration

PROSPERO CRD42020203716

Background

Introduction

Clinical decision support (CDS) refers to a wide range of tools that present clinical or patient-related information, “intelligently filtered or presented at appropriate times” to enhance healthcare processes and patient outcomes [1]. Modern computerised CDS systems are data-driven and utilise individual patient data from existing electronic health records (EHRs) [2]. EHR data is extracted and processed via algorithms, and decision support outputs are displayed to users within EHRs themselves or in standalone web-based applications. Provider-facing CDS functions include tools for clinical documentation, data presentation, order or prescription creation, protocol or pathway support, reference guidance, and alerts or reminders [3, 4]. Typically, CDS systems incorporate one or more of these functions.

As early as 1998, decision support was identified as a fundamental area of high-quality chronic disease management within the Chronic Care Model [5]. The current COVID-19 pandemic has accelerated the need for virtual models of care in addition to traditional face-to-face chronic disease care [6]; improving chronic disease care through technology-enabled care models is also a goal of the current Australian national digital health strategy [7]. EHR-based CDS is a digital health intervention that can facilitate the whole spectrum of chronic disease care from screening and diagnosis; to management and optimisation of care pathways; to ongoing individual and population-level disease monitoring.

CDS has the potential to improve health process outcomes, such as adherence to recommended preventative care measures [8,9,10,11,12]. However, meta-analyses of CDS intervention studies show substantial heterogeneity in effect sizes [8,9,10]. Furthermore, widespread adoption of CDS systems has not been achieved [13], and access to CDS does not guarantee user uptake or acceptance in clinical settings [14,15,16]. For example, a review of 23 studies examining medication CDS systems found that between 46 and 96% of medication alerts were overridden by clinicians [15]. Other studies have found that an overload of CDS alerts can even contribute to burnout amongst time-poor clinicians [17]. Why do some CDS implementations succeed, whereas others fall short of expectations [18]? Inconsistent CDS uptake stems from technical issues during development, and contextual challenges to implementing the technology [19, 20]. From a technical perspective, CDS development requires complex clinical logic to be converted into computer-executable algorithms, necessitating skills in both medicine and informatics [13]. From an implementation perspective, CDS interventions commonly encounter user and organisational barriers.

Despite a sizeable volume of perspective pieces on why CDS undertakings succeed or fail, there remain few contemporary qualitative systematic reviews on the topic. Several systematic reviews have investigated CDS uptake using a quantitative approach, reporting user satisfaction scores, or reporting meta-regression results to identify factors statistically associated with positive CDS outcomes [8, 9, 21, 22]. Other reviews investigate CDS uptake by summarising the experience of healthcare providers through narrative or thematic meta-synthesis of primary qualitative research findings [18, 23,24,25,26,27,28]. Two of these qualitative systematic reviews summarised early primary research studies in the field from the 1990s and 2000s [18, 25]. More recent reviews have been conducted to investigate barriers and enablers to CDS uptake, but have predominantly focussed on a single type of CDS, for example, CDS that is medication-related [24, 25] or CDS systems that are alert-based [26]. One 2015 study by Miller et al. used a comprehensive definition of computerised CDS; however, the authors selected only 9 of 56 eligible qualitative studies for inclusion in the final synthesis, as studies of limited methodological quality were excluded [23].

Building on previous studies, we sought to conduct a systematic review of barriers and enablers affecting EHR-based CDS adoption for chronic disease care. Barriers and enablers are determinants of practice that prevent or enable knowledge translation [29, 30]. A barrier adequately addressed becomes a facilitator for health intervention implementation. A synthesis of barriers and enablers across multiple studies can bring valuable perspectives not found within an individual study. A myriad of qualitative synthesis methods have been developed, each with its own epistemological stance and stated purpose [31, 32]. Broadly speaking, qualitative synthesis methods lie on a continuum from primarily aggregative to primarily interpretive approaches [31, 33]. Aggregative approaches such as meta-aggregation seek to pool findings across studies, mirroring the meta-analysis process used in quantitative studies. In contrast, interpretive approaches such as meta-ethnography are grounded in social science research traditions and seek to re-interpret primary research findings to develop theories and frameworks [34, 35]. We use a JBI (formerly Joanna Briggs Institute) meta-aggregation approach for synthesising qualitative evidence for two main reasons. Firstly, aggregative rather than interpretive synthesis methods are most appropriate for qualitative data in the CDS field, with its limited availability of contextually “thick” and conceptually “rich” qualitative findings [36, 37]. Secondly, meta-aggregation best aligns with our review objective of identifying actionable steps at a practice level. A preliminary search of PROSPERO, Cochrane Database of Systematic Reviews, JBI database and Medline did not reveal published studies or studies in progress with a similar scope of work.

Objectives

This qualitative systematic review aims to describe healthcare provider experiences of implementing, using, evaluating, and sustaining EHR-based CDS interventions for chronic disease care. Barriers and enablers experienced by providers will be described from the perspectives of individual clinicians and healthcare services.

Methods

The systematic review is registered on PROSPERO (CRD42020203716). The overall approach to this CDS review was a mixed method review incorporating qualitative, effectiveness, and economic evaluation components. Only the qualitative component of the systematic review is reported in this paper, and the effectiveness and economic reviews will be reported separately. Methods for the qualitative systematic review are informed by JBI methodology for systematic reviews of qualitative evidence, and JBI methodology for text and opinion [38]. The review was reported according to Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines—see Additional file 1.

Searches

Database searches included PubMed (Medline), EBSCOhost (CINAHL, APA PsycInfo, EconLit), and Web of Science. MeSH terms and title/abstract search terms included synonyms of “clinical decision support systems,” “cardiovascular disease,” “diabetes,” “chronic kidney disease,” “hypertension,” and “hypercholesterolaemia.” The full search strategy for PubMed (Medline) is outlined in Additional file 2. Studies were restricted to English language studies from January 2011 to January 2021. Studies prior to 2011 have been adequately covered by previous reviews [8, 18, 25], and our review targeted contemporary EHR-based CDS system implementations; hence, we restricted the search to studies published within the past decade.

Study inclusion and exclusion criteria

Population

Our population of interest was individual clinicians (e.g. doctors, nurses, pharmacists) or other health service staff (e.g. clinic managers) using EHR CDS systems as a point-of-care tool for patients with one or more of five chronic diseases. Even though CDS can be both provider-facing and patient-facing, we focussed on provider perspectives since providers are the main implementers and users of CDS systems. The five related chronic diseases were chronic kidney disease, cardiovascular disease, diabetes, hypertension, and hypercholesterolaemia. CDS targeting the whole spectrum of chronic disease care was considered, including but not limited to CDS used for screening and diagnosis, pathway support, pharmacological management, and non-pharmacological management of chronic diseases. Basic EHR-based CDS functions with limited scope, such as single medication CDS (e.g. warfarin dosing) and simple recall alerts, were excluded from this study.

Phenomena of interest and context

The phenomena of interest were healthcare provider experiences of chronic disease CDS systems, focussing on barriers and enablers to implementing, using, evaluating, and sustaining CDS interventions. We sought to understand “real-world” provider experiences; therefore, CDS prototypes not used in clinical settings were excluded from the study. The contexts of interest were non-acute settings delivering chronic disease care, which included primary care, specialist outpatient, and community healthcare services. CDS systems used only in emergency or other acute settings, such as CDS for inpatient management of blood glucose levels, were excluded from this study because optimal healthcare processes to address chronic diseases are inherently different from those of acute hospital services [5, 39]. See Additional file 3 for detailed inclusion and exclusion criteria.

Other eligibility criteria

Primary research studies with CDS systems implemented for clinical use were included; thus, CDS research protocols and articles describing methods of CDS development without real-world implementation were excluded. Secondary research such as perspective pieces and systematic reviews was excluded. Study designs considered included qualitative studies and other evaluation studies with a qualitative inquiry component. These included studies conducting interviews, surveys, focus group discussions, and mixed methods studies. For the purposes of this review, “qualitative studies” referred to studies with an explicit qualitative methodological approach (e.g. phenomenology). “Other evaluation studies” referred to studies incorporating a qualitative inquiry component (e.g. user feedback study) but without an explicit qualitative methodological approach. We include both types of studies in our review, recognising that evidence from both formal qualitative studies and “other evaluation studies” with qualitative findings can enrich a synthesis [37].

Study selection

Identified citations were uploaded into Covidence software (Veritas Health Innovation, Melbourne, Victoria, Australia) [40] for screening and article selection. Titles and abstracts were screened independently by two reviewers (WC, and BB or PC). Conflicts were resolved by reaching a consensus between the two reviewers, and where this was not possible, conflicts were resolved by a third team member. Full text screening was conducted, and studies were classified into qualitative, effectiveness, or economic categories by one reviewer (WC). Reasons for exclusion at full text stage were recorded. Citations for inclusion were imported into the JBI System for the Unified Management, Assessment and Review of Information (JBI SUMARI) software (JBI, Adelaide, Australia) [41] for critical appraisal and data extraction.
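The conflict-resolution rule for title and abstract screening can be sketched as a small decision function. This is an illustrative sketch only and not the logic of the Covidence software; the function name `resolve_screening` and its parameters are hypothetical.

```python
from typing import Optional


def resolve_screening(vote_a: bool, vote_b: bool,
                      consensus: Optional[bool] = None,
                      third_vote: Optional[bool] = None) -> bool:
    """Return True if a citation proceeds to full text review.

    vote_a, vote_b: independent include/exclude votes of the two reviewers.
    consensus: outcome agreed by the two reviewers after discussion, if any.
    third_vote: decision of a third team member, used only if no consensus.
    All names here are hypothetical and chosen for illustration.
    """
    if vote_a == vote_b:
        # No conflict: the shared vote stands.
        return vote_a
    if consensus is not None:
        # Conflict resolved by consensus between the two reviewers.
        return consensus
    if third_vote is None:
        raise ValueError("unresolved conflict requires a third team member")
    # Conflict resolved by the third team member.
    return third_vote
```

For example, `resolve_screening(True, False, third_vote=True)` models a disagreement settled by the third team member in favour of inclusion.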

Study quality assessment

Critical appraisal of methodological quality was conducted using the JBI Critical Appraisal Checklist for Qualitative Research 2017 [38] by two reviewers (WC and CO). A random 25% sample of included studies had methodological quality assessed by both reviewers independently, and disagreements were resolved through consensus. This review took an inclusive approach to incorporate a broad range of healthcare provider experiences; therefore, study quality assessment was conducted, but studies were not excluded from data extraction or synthesis based on methodological quality scoring.
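Drawing a random 25% sample for dual appraisal could look like the sketch below. The rounding rule (rounding up), the fixed seed, and the study identifiers are all assumptions made for illustration; the review does not state how the sample was drawn.

```python
import math
import random


def dual_appraisal_sample(study_ids, fraction=0.25, seed=0):
    """Pick a random subset of studies for independent appraisal by both reviewers.

    Rounding up and seeding are assumptions, not details from the review.
    """
    rng = random.Random(seed)  # seeded so the draw is reproducible
    k = math.ceil(len(study_ids) * fraction)
    return sorted(rng.sample(list(study_ids), k))


# Hypothetical identifiers for the 33 included studies.
study_ids = [f"S{i:02d}" for i in range(1, 34)]
sample = dual_appraisal_sample(study_ids)
```

Under these assumptions, 33 studies give a dual-appraised sample of 9 (8.25 rounded up).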

Data extraction and synthesis

We based our qualitative data extraction and synthesis on the JBI meta-aggregation approach [38]. Meta-aggregation is philosophically grounded in pragmatism and transcendental phenomenology [31]. Its pragmatist roots are reflected in an emphasis on identifying “lines of action” for policy and practice, which contrasts with other synthesis methods (e.g. meta-ethnography) that focus on mid-level theory generation [42]. The influence of transcendental phenomenology is seen in meta-aggregation bracketing, where findings are extracted as given without re-analysis or re-interpretation based on prior concepts regarding the phenomena of interest [43]. Through bracketing, meta-aggregation seeks to aggregate primary study findings in a way that represents the original authors’ intended meaning, with minimal influence from the reviewer [43, 44]. The qualitative meta-aggregation process generates a hierarchy of findings, categories, and synthesised statements, pictorially represented in Fig. 1. In meta-aggregation, level 1 findings are extracted findings (e.g. themes) reported verbatim from primary research authors, which are backed with supportive illustrations (e.g. interview excerpts). Level 2 findings are reviewer-defined categories, which group two or more level 1 findings based on similarities in meaning. Level 3 findings are synthesised statements, which represent the collective meaning of categories and provide recommendations for practice [38, 44].

For data extraction, two reviewers extracted study characteristics and qualitative findings from included studies using JBI SUMARI software (WC and CO) [41], according to a modified JBI meta-aggregation approach [38]. Study characteristics were extracted using the standardised JBI SUMARI tool and included method of data collection and analysis, study setting, phenomena of interest, and participant characteristics. Findings referred to verbatim extracts of the author’s interpretation of qualitative or mixed methods results; illustrations were direct quotations or other supporting data from the primary study (e.g. interviews, surveys). In addition to primary qualitative research findings, verbatim author text and opinion describing primary quantitative research data (e.g. survey data) were also extracted for synthesis. A level of credibility from unequivocal (U), credible (C) to not supported (N) was assigned to each finding. Qualitative findings were pooled—only unequivocal (U) and credible (C) findings were grouped into categories, which were meta-aggregated into synthesised findings. Not supported (N) findings are presented separately.
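The three-level hierarchy and the credibility rule described above can be pictured as a simple data structure. The sketch below is illustrative only and is not how JBI SUMARI represents data; the class names, the helper `split_by_credibility`, and the placeholder findings are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Credibility(Enum):
    UNEQUIVOCAL = "U"
    CREDIBLE = "C"
    NOT_SUPPORTED = "N"


@dataclass
class Finding:
    """Level 1: a finding extracted verbatim, with an optional illustration."""
    text: str
    credibility: Credibility
    illustration: str = ""


@dataclass
class Category:
    """Level 2: groups two or more poolable level 1 findings."""
    label: str
    findings: List[Finding]

    def __post_init__(self) -> None:
        if len(self.findings) < 2:
            raise ValueError("a category groups two or more findings")
        if any(f.credibility is Credibility.NOT_SUPPORTED for f in self.findings):
            raise ValueError("not supported findings are presented separately")


@dataclass
class SynthesisedFinding:
    """Level 3: a statement representing the collective meaning of categories."""
    statement: str
    categories: List[Category]


def split_by_credibility(findings):
    """Separate poolable (U, C) findings from not supported (N) findings."""
    pooled = [f for f in findings if f.credibility is not Credibility.NOT_SUPPORTED]
    separate = [f for f in findings if f.credibility is Credibility.NOT_SUPPORTED]
    return pooled, separate


# Hypothetical placeholder findings, not taken from the included studies.
extracted = [
    Finding("placeholder finding A", Credibility.UNEQUIVOCAL, "placeholder quote"),
    Finding("placeholder finding B", Credibility.CREDIBLE, "placeholder quote"),
    Finding("placeholder finding C", Credibility.NOT_SUPPORTED),
]
pooled, separate = split_by_credibility(extracted)
category = Category("placeholder category", pooled)
synthesis = SynthesisedFinding("placeholder synthesised statement", [category])
```

Enforcing the two-or-more rule and the credibility filter in `Category` keeps not supported findings out of the synthesis by construction, mirroring the separate presentation described in the text.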

Results

Included studies

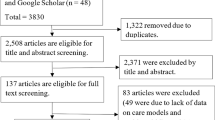

Abstract and title screening was conducted for 7999 citations. Articles were excluded at this stage (n = 7374) mainly due to an absence of a chronic disease CDS mentioned in the abstract and title. Full text review was conducted for 625 articles. Reasons for exclusion were as follows: 377 based on article type (e.g. protocol, non-primary research articles) and 148 based on article content. The most common reasons for exclusion based on article content were that the CDS did not have an EHR component (n = 65) or that the EHR did not have a CDS component (n = 29). Thirty-three studies met the inclusion criteria for the qualitative outcome component of this systematic review, of which 13 were qualitative studies and 20 were other evaluation studies with qualitative findings. All 33 studies were included for data extraction and qualitative evidence synthesis (meta-aggregation). See also Fig. 2 for PRISMA flow diagram [45]. Effectiveness and economic evaluation components of the systematic review will be reported separately.
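The screening counts reported above can be cross-checked with simple arithmetic. The sketch below uses only figures stated in this paragraph; the interpretation of the remainder after full text exclusion is an assumption, since the paragraph reports only the qualitative component.

```python
# Counts as reported in the text.
screened = 7999
excluded_title_abstract = 7374
full_text_reviewed = screened - excluded_title_abstract

excluded_article_type = 377
excluded_article_content = 148
excluded_full_text = excluded_article_type + excluded_article_content

# Assumption: the remainder spans the qualitative, effectiveness, and
# economic components of the wider mixed methods review.
remaining_after_full_text = full_text_reviewed - excluded_full_text

qualitative_included = 33
qualitative_studies = 13
other_evaluation_studies = 20

print(full_text_reviewed, excluded_full_text, remaining_after_full_text)
```

The difference between citations screened and citations excluded matches the 625 articles taken to full text review, and the two study types sum to the 33 included studies.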

Study quality assessment

Overall methodological quality was moderate for the 13 qualitative studies—main issues identified during quality assessment related to limited descriptions of methodological approach (JBI Checklist for Qualitative Research Q1 and Q2) and limited description of researcher context (Q6 and Q7). For the 20 other evaluation studies with qualitative findings, Q1 to Q7 were considered not applicable. The main issue identified in other evaluation studies was that few (n = 6) had evidence of ethics approval. See Additional file 4 for methodological quality of included studies.

Characteristics of included studies

The highest number of studies were conducted in the USA (n = 13), Australia (n = 7), and India (n = 3) (Fig. 3). Year of publication ranged from 2011 to 2020, with the highest number of articles (48%) published in 2018 and 2019. The most common disease focus of the CDS systems was cardiovascular risk factors (n = 13), followed by diabetes (n = 5), hypertension (n = 4), and multiple medications (n = 4) (Fig. 4). The majority of studies were conducted in primary care (n = 27), which referred to general practices, community health clinics, and primary care practices. The remaining studies were conducted in specialist outpatients (n = 3) and multiple settings (n = 3). A range of CDS types were included: from screening and diagnosis, to pathway support, pharmacological management, and non-pharmacological management. See Table 1 for characteristics of included studies.

The median number of provider participants was 19 (IQR 10 to 63); 4 studies did not state the number of participants included. Methods used for data collection included interviews (n = 11), surveys (n = 7), or more than one method (n = 14). Those using more than one data collection method mainly used surveys and interviews, or surveys and focus groups. For data analysis, 6 studies used an inductive approach to thematic analysis and 2 used deductive coding—utilising the NASSS framework [46] and Rogers’ diffusion of innovation [80]. The remaining qualitative studies used other evaluation approaches, drawing on theoretical frameworks such as the Consolidated Framework for Implementation Research (CFIR) [57], realist evaluation framework [70], Normalisation Process Theory (NPT) [85], and Centre for eHealth Research (CeHRes) Roadmap [54].

Review findings and meta-aggregation

From included articles, 177 findings were extracted on barriers and enablers to implementation and use of CDS. Out of these findings, 40 (22%) were unequivocal, 65 (37%) were credible, and 72 (41%) were not supported. For the full list of findings with illustrations, see Additional file 5. The findings were grouped into 29 categories, which were synthesised into 8 findings. Findings, categories, and synthesised findings are presented in Tables 2, 3, 4, and 5.
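As a quick consistency check, the credibility tallies can be summed and the pooling rule from the Methods applied. This is a sketch using only the counts reported above; the figure of findings eligible for categorisation is derived here and is not stated in the text.

```python
# Credibility counts as reported: unequivocal (U), credible (C), not supported (N).
counts = {"U": 40, "C": 65, "N": 72}

total_findings = sum(counts.values())

# Per the Methods, only U and C findings are grouped into categories.
eligible_for_categories = counts["U"] + counts["C"]
presented_separately = counts["N"]

print(total_findings, eligible_for_categories, presented_separately)
```

Under this rule, the unequivocal and credible findings together would feed the 29 categories, while the not supported findings are presented separately.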

Clinical context barriers and enablers

“Clinical context” referred to clinical factors during the consultation—relating to the patient’s medical condition, patient-clinician interaction, or the clinician’s management of the patient. CDS barriers encountered during the consultation included “distorted priorities” and “blanket recommendations” that did not account for the patient’s agenda and the need to consider multiple clinical guidelines in multimorbidity presentations [74]. Enablers to CDS uptake included tools that facilitated structured chronic disease care or triggered relevant discussions during the clinical consultation. Clinicians also responded positively to CDS that facilitated their own judgment or provided a safety net to “avoid dangerous situations” [59].

Example finding 1: Distorted Priorities [74]

Illustration from interview: “One of the dangers I would see with this is the encouragement of game playing…So an electronic decision support module that is only related to cardiovascular disease…could lead you to focus on getting cholesterol and things done and perhaps forget immunisations or pap smears or the housing forms because that’s what the computer is flashing up at you.”

User barriers and enablers

“User” factors referred to the individual attributes of the provider. Barriers arose from low awareness and low familiarity with the CDS technology. Lack of trust was also a major barrier: some clinicians felt “marked down” by an external authority [46, 74]. Perceived usefulness to skill expansion and user confidence were facilitators to CDS use. Building user confidence required deliberate effort from the implementation team in providing both initial training and follow-up training or individualised support. As the research team plays a central role in driving engagement, one study described a plateauing of CDS use post-trial [72].

Example finding 2: Confidence [75]

Illustration from interview: “First, we were afraid that there was the need to handle computers and touch screens, but later after training, we were able to understand it. After we did 1 or 2 tests, it became easy, and we can do it better now.”

External context barriers and enablers

“External context” referred to service and other macro context factors. Lack of time, interruption to workflow, and lack of resources were key barriers to CDS uptake. Time and remuneration are tightly coupled in fee-for-service settings, such as private general practice clinics. One team sought to include CDS use as a billable item number on the fee-for-service schedule in Australia but was informed that payment for CDS use was not possible from a legislative point of view [46]. Healthcare providers identified engaged clinical champions and allocated personnel to operate the CDS, including allocated technical support, as key enablers to successful implementation.

Example finding 3: Allocated person [72]

Illustration from interview: “A good single person allocated, keep monitoring, keep going, these tools will be very, very good. Yeah.”

Technical barriers and enablers

“Technical” refers to technical features that affected CDS uptake. The alert burden was a recurring theme and contributed to CDS prompts being overridden [50]. Although the CDS systems included in this study were all EHR-linked, poor integration and the need for additional manual data entry were nevertheless barriers to uptake. Useful technical functions and attractive CDS design features were enablers to uptake. Clinicians valued CDS functions that provided relevant and immediate “hands-on information” [59]. Appropriate use of colour elicited desirability and “almost an emotional response” to the CDS [72].

Example finding 4: Integration [46]

Illustration from interview: “Nothing from HealthTracker populated into the EMR; [only] the reverse occurred.”

Discussion

Main findings and recommendations

Our systematic review aimed to describe the experience of healthcare providers in implementing, using, evaluating, and sustaining CDS systems. The findings of this review predominantly outline factors influencing implementation and use, since few findings from included primary research studies addressed factors influencing the evaluation or sustainability of CDS projects. The eight synthesised findings from meta-aggregation of qualitative findings related to clinical context, user-related, external context, and technical factors that influenced CDS uptake. We found that these factors influencing CDS uptake were universal across the broad range of included CDS studies, regardless of CDS type (e.g. by disease focus), and shared across both high and lower middle-income settings. Our findings indicate that adequate attention and resourcing need to be directed at each of these domains when undertaking a CDS project. We summarise actionable steps that can be taken to address the challenges in each of these domains in Table 6.

In the CDS literature, external context and user factors affecting uptake have remained relatively constant over time and across heterogeneous CDS implementations [18, 25, 86]. In particular, the macro external contextual “barriers of insufficiency” (lack of funding, training, and supportive policy) have changed little since barriers to health information technology were first described in the 1960s [18, 87]. Several included studies illustrate the challenge of overcoming barriers of insufficiency beyond the project implementation phase. Patel et al. highlighted the critical role of the CDS research team in driving training and user engagement; the authors noted that when this support was withdrawn post-trial, CDS-related improvements in health outcomes plateaued [72]. In Abimbola et al., the research team sought government funding to reimburse clinicians for CDS use on the national fee-for-service Medicare Benefits Schedule (Australia) but were informed that “from a legislative viewpoint, MBS items can’t be attached to software” [46]. Although there have been examples of national-level financial incentives for EHR-based CDS uptake (e.g. in the USA) [88], the lack of clear macro-level CDS reimbursement strategies continues to impede its widespread uptake into routine clinical practice [89, 90].

In contrast to external context and user factors, the clinical and technological expectations we identified in this review are specific to our highly digitised era. The “five rights” of CDS interventions include providing the right information, to the right person, in the right format, through the right channel, at the right time [4]. Providing the “right information” is increasingly hard to achieve in the face of multimorbidity. Whilst single-disease digitalised guidelines may be appropriate in acute settings (e.g. stroke assessment), we found that a lack of CDS algorithm complexity frustrates clinicians and limits CDS applicability in multimorbid, chronic disease settings [28]. Clinician expectations for what constitutes the right EHR information have also evolved: in an age where handheld devices synchronise instantaneously to the cloud and across multiple devices, healthcare providers now expect pertinent individual EHR data to be immediately integrated within CDS systems. Today’s CDS users also expect the “right format” not only in terms of correct functionality, but also in terms of desirability. Design principles suggest that attractive designs “look easier to use… whether or not they actually are easier to use” [91]. Although user experience (UX) is an established sector outside of medicine, the application of these principles to CDS research remains preliminary in nature [92, 93].

Thus, our results suggest that an ideal chronic disease CDS system would be wide rather than narrow in clinical scope to reflect the complex nature of care in multimorbidity. For example, some authors have proposed problem-orientated patient summaries with decision support as a potential way to improve CDS usability in multimorbidity settings [94, 95]. Such broad-scope CDS interventions require improved clinical collaboration beyond that of specialty or disease-specific interests [28, 96]. More work also needs to be done to develop CDS systems that are both functional and attractive. These goals are conceptually obvious but difficult to achieve in practice. For example, a patient’s data is often distributed across “archipelagos” of EHR sources, which may not adhere to interoperability standards [90]—greater collaboration between EHR vendors and developers is needed to enable a greater variety of EHR data to be extracted, which would increase CDS algorithm complexity and improve clinician workflow [95, 97, 98].

Comparison with previous studies

In terms of methodology, our review is most similar to the systematic review of CDS studies conducted by Miller et al. (year 2000 to 2013), who used an inductive approach to qualitative evidence synthesis, similar to the JBI meta-aggregation method we used. However, the review by Miller et al. had a narrower scope than ours; whereas we examined both barriers and enablers, their search strategy narrowed the article search to primary research describing barriers or problems with CDS interventions only [23]. More recently, two 2021 systematic reviews examined barriers and enablers to using CDS systems: one investigating CDS targeting medication use [24] and one focussing on alert-based CDS systems [26]. Both of these reviews synthesised qualitative findings using thematic analysis with a deductive approach, utilising the “Human, Organization, and Technology–Fit” (HOT–fit) framework [99]. Several barriers and enablers identified in our study are similar to the human, technological, and organisation factors influencing uptake described by the HOT-fit framework [86, 99, 100]. In one of these reviews, Westerbeek et al. highlighted that disease-specific factors were not adequately captured within the HOT-fit framework [24]. Likewise, we found a large number of qualitative findings relating to “clinical context” factors (e.g. distorted clinical priorities) that are not sufficiently incorporated into HOT-fit [99] and similar determinant frameworks used for health information technology interventions [25, 58, 86].

Strengths and limitations

To date, the discourse on CDS systems implementation has typically focussed on barriers to uptake. This study takes a unique approach to synthesising provider experiences, focussing not only on deficits but also on potential solutions to improving CDS interventions [101]—specifically, our meta-aggregation process extracted and synthesised findings on healthcare provider perspectives of CDS enablers and presented them as actionable steps for practice. Another strength of our review was the breadth of primary research articles included to capture the experience of CDS interventions across different settings and clinical specialties. We utilised a broad search strategy for EHR-based CDS systems, across high- and lower middle-income countries, and across various non-acute clinical settings. To incorporate the spectrum of experience across both early and established CDS systems, we included qualitative research and “other evaluation” studies with qualitative findings. Even though studies in the other evaluation category tended to have limited methodological rigour, we included them to improve generalisability of our findings. We note that other evaluation studies with qualitative findings are often conducted as early precursors of formal qualitative studies, or are sometimes the only evaluations that took place if projects lacked sufficient sustainability to undertake formal qualitative evaluations.

There are several limitations to our review methodology. Whilst we employed a comprehensive search strategy across several databases, we did not include grey literature. Furthermore, non-research articles such as perspective pieces and systematic reviews were not included, as the focus was on synthesis of primary research findings. This review included common, related cardiometabolic chronic diseases with similar modifiable risk factors; other chronic diseases such as COPD or chronic pain were not included. Healthcare providers are the primary targets of EHR-based CDS and thus were the population of interest in this study. However, multiple perspectives, including those of patients, EHR vendors, CDS developers, and other collaborators, are also of interest in fully understanding the barriers and enablers to CDS success [102, 103].

The JBI approach to meta-aggregation is one of many forms of qualitative research synthesis, and debates over the preferred systematic review synthesis methodology continue [34, 104]. Consistent with pragmatism, knowledge from meta-aggregation does not indicate hypothetical explanation [105], and some critics note a lack of re-interpretation and generation of theoretical understandings with this approach. We recognise the important contributions of theory and frameworks in the field of implementation research, which facilitates the systematic uptake of effective health technologies into routine clinical contexts [86, 106, 107, 108]. Diverse theoretical lenses have been used in describing CDS uptake [47, 100, 109, 110], and we provide a comprehensive description of theoretical frameworks used to guide qualitative data analysis within the primary research articles included in our review (e.g. NASSS framework, CFIR framework). However, because meta-aggregation is descriptive rather than interpretive, a limitation of this synthesis method is that it does not further develop existing theoretical frameworks. The merits of alternative qualitative synthesis approaches over meta-aggregation lie in their ability to contribute to mid-level theory; such alternatives include meta-ethnography and deductive thematic analysis using an existing implementation science framework. Nevertheless, meta-aggregation was selected as the most appropriate synthesis method for our review given the limited availability of “thick” and “rich” qualitative data [36, 37] in the CDS field. We also selected meta-aggregation because it is a structured and transparent method for synthesising findings into concrete statements (practice-level theory) that are practical and accessible to healthcare providers at the coalface of CDS implementation [42, 111].

Conclusion

Qualitative findings on barriers and enablers to CDS uptake provide valuable insights into why some projects are successfully implemented, whereas others fail to achieve uptake. Our systematic review summarises provider experiences in implementing and using chronic disease CDS systems across a broad range of studies. We identified clinical context, user, external context, and technological factors affecting uptake. The meta-aggregated findings and summary of recommendations provide an evidence base for designing, implementing, and sustaining future CDS systems.

Availability of data and materials

The data supporting the findings of this study are available within the article and Supplementary Information.

Abbreviations

- AF: Atrial fibrillation

- CDS: Clinical decision support

- CeHRes: Centre for eHealth Research (CeHRes) Roadmap

- CFIR: Consolidated Framework for Implementation Research

- CKD: Chronic kidney disease

- CVRF: Cardiovascular risk factors

- EHR: Electronic health record

- GP: General practitioners

- HITREF: Health Information Technology Evaluation Framework

- HOT-fit: Human, Organization, and Technology–Fit framework

- JBI: Formerly Joanna Briggs Institute

- JBI SUMARI: JBI System for the Unified Management, Assessment and Review of Information

- NASSS: Nonadoption, Abandonment, and challenges to Scale-up, Spread and Sustainability framework

- NPT: Normalisation Process Theory

- PRISMA: Preferred Reporting Items for Systematic reviews and Meta-Analyses

- UX: User experience

References

Osheroff JA, Healthcare Information and Management Systems Society. Improving outcomes with clinical decision support: an implementer’s guide, vol. xxiii. 2nd ed. Chicago: HIMSS; 2012. p. 323.

Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med. 2020;3:17.

Agency for Healthcare Research and Quality. Digital healthcare research: section 4 - types of CDS interventions. Rockville: U.S. Department of Health & Human Services; 2021. Available from: https://digital.ahrq.gov/ahrq-funded-projects/current-health-it-priorities/clinical-decision-support-cds/chapter-1-approaching-clinical-decision/section-4-types-cds-interventions. Cited 2021 November

Sirajuddin AM, Osheroff JA, Sittig DF, Chuo J, Velasco F, Collins DA. Implementation pearls from a new guidebook on improving medication use and outcomes with clinical decision support. Effective CDS is essential for addressing healthcare performance improvement imperatives. J Healthc Inf Manag. 2009;23(4):38–45.

Wagner EH, Austin BT, Von Korff M. Organizing care for patients with chronic illness. Milbank Q. 1996;74(4):511–44.

Smithson R, Roche E, Wicker C. Virtual models of chronic disease management: lessons from the experiences of virtual care during the COVID-19 response. Aust Health Rev. 2021;45(3):311–6.

Australian Digital Health Agency. National digital health strategy and framework for action. Canberra: Australian Government; 2021. Available from: https://www.digitalhealth.gov.au/about-us/national-digital-health-strategy-and-framework-for-action. Cited 2021 November

Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29–43.

Kwan JL, Lo L, Ferguson J, Goldberg H, Diaz-Martinez JP, Tomlinson G, et al. Computerised clinical decision support systems and absolute improvements in care: meta-analysis of controlled clinical trials. BMJ. 2020;370:m3216.

Groenhof TKJ, Asselbergs FW, Groenwold RHH, Grobbee DE, Visseren FLJ, Bots ML. The effect of computerized decision support systems on cardiovascular risk factors: a systematic review and meta-analysis. BMC Med Inform Decis Mak. 2019;19(1):108.

Bryan C, Boren SA. The use and effectiveness of electronic clinical decision support tools in the ambulatory/primary care setting: a systematic review of the literature. Inform Prim Care. 2008;16(2):79–91.

Moja L, Kwag KH, Lytras T, Bertizzolo L, Brandt L, Pecoraro V, et al. Effectiveness of computerized decision support systems linked to electronic health records: a systematic review and meta-analysis. Am J Public Health. 2014;104(12):e12–22.

Greenes RA, Bates DW, Kawamoto K, Middleton B, Osheroff J, Shahar Y. Clinical decision support models and frameworks: seeking to address research issues underlying implementation successes and failures. J Biomed Inform. 2018;78:134–43.

Jing X, Himawan L, Law T. Availability and usage of clinical decision support systems (CDSSs) in office-based primary care settings in the USA. BMJ Health Care Inform. 2019;26(1):e100015.

Nanji KC, Slight SP, Seger DL, Cho I, Fiskio JM, Redden LM, et al. Overrides of medication-related clinical decision support alerts in outpatients. J Am Med Inform Assoc. 2014;21(3):487–91.

Kouri A, Yamada J, Lam Shin Cheung J, Van de Velde S, Gupta S. Do providers use computerized clinical decision support systems? A systematic review and meta-regression of clinical decision support uptake. Implement Sci. 2022;17(1):21.

Jankovic I, Chen JH. Clinical decision support and implications for the clinician burnout crisis. Yearb Med Inform. 2020;29(1):145–54.

Kaplan B. Evaluating informatics applications--clinical decision support systems literature review. Int J Med Inform. 2001;64(1):15–37.

Agency for Healthcare Research and Quality. Challenges and barriers to clinical decision support (CDS) design and implementation experienced in the Agency for Healthcare Research and Quality CDS Demonstrations. Rockville: U.S. Department of Health and Human Services; 2010. Available from: https://digital.ahrq.gov/sites/default/files/docs/page/CDS_challenges_and_barriers.pdf. Cited 2021 November

Eichner J, Das M. Challenges and barriers to clinical decision support (CDS) design and implementation experienced in the Agency for Healthcare Research and Quality CDS Demonstrations, Prepared for the AHRQ National Resource Center for Health Information Technology under Contract No. 290-04-0016. Rockville: Agency for Healthcare Research and Quality; 2010. Available from: https://digital.ahrq.gov/sites/default/files/docs/page/CDS_challenges_and_barriers.pdf. Updated 01/01; Cited 2021 November

Van de Velde S, Heselmans A, Delvaux N, Brandt L, Marco-Ruiz L, Spitaels D, et al. A systematic review of trials evaluating success factors of interventions with computerised clinical decision support. Implement Sci. 2018;13(1):114.

Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765.

Miller A, Moon B, Anders S, Walden R, Brown S, Montella D. Integrating computerized clinical decision support systems into clinical work: a meta-synthesis of qualitative research. Int J Med Inform. 2015;84(12):1009–18.

Westerbeek L, Ploegmakers KJ, de Bruijn G-J, Linn AJ, van Weert JCM, Daams JG, et al. Barriers and facilitators influencing medication-related CDSS acceptance according to clinicians: a systematic review. Int J Med Inform. 2021;152:104506.

Moxey A, Robertson J, Newby D, Hains I, Williamson M, Pearson S-A. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Inform Assoc. 2010;17(1):25–33.

Olakotan OO, Mohd Yusof M. The appropriateness of clinical decision support systems alerts in supporting clinical workflows: a systematic review. Health Inform J. 2021;27(2):14604582211007536.

Kilsdonk E, Peute LW, Jaspers MW. Factors influencing implementation success of guideline-based clinical decision support systems: a systematic review and gaps analysis. Int J Med Inform. 2017;98:56–64.

Fraccaro P, Casteleiro MA, Ainsworth J, Buchan I. Adoption of clinical decision support in multimorbidity: a systematic review. JMIR Med Inform. 2015;3(1):e4.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35.

Legare F, Zhang P. Chapter 3.3a Barriers and facilitators. In: Straus S, Tetroe J, Graham I, editors. Knowledge translation in health care: moving from evidence to practice. New Jersey: Wiley; 2013.

Hannes K, Lockwood C. Synthesizing qualitative research: choosing the right approach. Hoboken: Wiley; 2012.

Bearman M, Dawson P. Qualitative synthesis and systematic review in health professions education. Med Educ. 2013;47(3):252–60.

Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Syst Rev. 2012;1:28.

Lockwood C, Pearson A. A comparison of meta-aggregation and meta-ethnography as qualitative review methods. United States: Lippincott Williams & Wilkins; 2013.

Sattar R, Lawton R, Panagioti M, Johnson J. Meta-ethnography in healthcare research: a guide to using a meta-ethnographic approach for literature synthesis. BMC Health Serv Res. 2021;21(1):50.

Flemming K, Booth A, Garside R, Tunçalp Ö, Noyes J. Qualitative evidence synthesis for complex interventions and guideline development: clarification of the purpose, designs and relevant methods. BMJ Glob Health. 2019;4(Suppl 1):e000882.

Noyes J, Booth A, Cargo M, Flemming K, Harden A, Harris J, et al. Chapter 21: Qualitative evidence. In: Cochrane handbook for systematic reviews of interventions version 6.3 (updated February 2022): Cochrane; 2022. Available from: https://training.cochrane.org/handbook/current/chapter-21.

Aromataris E, Munn Z. JBI manual for evidence synthesis: JBI; 2020. Available from: https://synthesismanual.jbi.global. Cited 2021 November

Wagner EH, Austin BT, Davis C, Hindmarsh M, Schaefer J, Bonomi A. Improving chronic illness care: translating evidence into action. Health Aff. 2001;20(6):64–78.

Covidence. Covidence systematic review software. Melbourne: Veritas Health Innovation; 2021. Available from: https://www.covidence.org/. Cited 2021 November

JBI. JBI SUMARI software. Adelaide: The University of Adelaide; 2021. Available from: https://sumari.jbi.global/. Cited 2021 November

Lockwood C, Munn Z, Porritt K. Qualitative research synthesis: methodological guidance for systematic reviewers utilizing meta-aggregation. JBI Evid Implement. 2015;13(3):179–87.

Tufanaru C. Theoretical foundations of meta-aggregation: insights from Husserlian phenomenology and American pragmatism. Adelaide: University of Adelaide; 2016. Available from: https://hdl.handle.net/2440/98255. Cited 2022 May

Munn Z, Dias M, Tufanaru C, Porritt K, Stern C, Jordan Z, et al. The “quality” of JBI qualitative research synthesis: a methodological investigation into the adherence of meta-aggregative systematic reviews to reporting standards and methodological guidance. JBI Evid Synth. 2021;19(5):1119–39.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Abimbola S, Patel B, Peiris D, Patel A, Harris M, Usherwood T, et al. The NASSS framework for ex post theorisation of technology-supported change in healthcare: worked example of the TORPEDO programme. BMC Med. 2019;17(1):233.

Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A’Court C, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res. 2017;19(11):e367.

Ballard AY, Kessler M, Scheitel M, Montori VM, Chaudhry R. Exploring differences in the use of the statin choice decision aid and diabetes medication choice decision aid in primary care. BMC Med Inform Decis Mak. 2017;17(1):118.

Chiang J, Furler J, Boyle D, Clark M, Manski-Nankervis JA. Electronic clinical decision support tool for the evaluation of cardiovascular risk in general practice: a pilot study. Aust Fam Physician. 2017;46(10):764–8.

Cho I, Slight SP, Nanji KC, Seger DL, Maniam N, Dykes PC, et al. Understanding physicians’ behavior toward alerts about nephrotoxic medications in outpatients: a cross-sectional analysis. BMC Nephrol. 2014;15:200.

Conway N, Adamson KA, Cunningham SG, Emslie Smith A, Nyberg P, Smith BH, et al. Decision support for diabetes in Scotland: implementation and evaluation of a clinical decision support system. J Diabetes Sci Technol. 2018;12(2):381–8.

Dagliati A, Sacchi L, Tibollo V, Cogni G, Teliti M, Martinez-Millana A, et al. A dashboard-based system for supporting diabetes care. J Am Med Inform Assoc. 2018;25(5):538–47.

Dixon BE, Alzeer AH, Phillips EO, Marrero DG. Integration of provider, pharmacy, and patient-reported data to improve medication adherence for type 2 diabetes: a controlled before-after pilot study. JMIR Med Inform. 2016;4(1):e4.

Fico G, Hernanzez L, Cancela J, Dagliati A, Sacchi L, Martinez-Millana A, et al. What do healthcare professionals need to turn risk models for type 2 diabetes into usable computerized clinical decision support systems? Lessons learned from the MOSAIC project. BMC Med Inform Decis Mak. 2019;19(1):163.

van Gemert-Pijnen JEWC, Nijland N, van Limburg AHM, Ossebaard HC, Kelders SM, Eysenbach G, et al. A holistic framework to improve the uptake and impact of eHealth technologies. J Med Internet Res. 2011;13(4):e111.

Gill J, Kucharski K, Turk B, Pan C, Wei W. Using electronic clinical decision support in patient-centered medical homes to improve management of diabetes in primary care: the DECIDE study. J Ambul Care Manage. 2019;42(2):105–15.

Gold R, Bunce A, Cowburn S, Davis JV, Nelson JC, Nelson CA, et al. Does increased implementation support improve community clinics’ guideline-concordant care? Results of a mixed methods, pragmatic comparative effectiveness trial. Implement Sci. 2019;14(1):100.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

Helldén A, Al-Aieshy F, Bastholm-Rahmner P, Bergman U, Gustafsson LL, Höök H, et al. Development of a computerised decisions support system for renal risk drugs targeting primary healthcare. BMJ Open. 2015;5(7):e006775.

Holt TA, Dalton AR, Kirkpatrick S, Hislop J, Marshall T, Fay M, et al. Barriers to a software reminder system for risk assessment of stroke in atrial fibrillation: a process evaluation of a cluster randomised trial in general practice. Br J Gen Pract. 2018;68(677):e844–e51.

Jindal D, Gupta P, Jha D, Ajay VS, Goenka S, Jacob P, et al. Development of mWellcare: an mHealth intervention for integrated management of hypertension and diabetes in low-resource settings. Glob Health Action. 2018;11(1):1517930.

Kumar S, Woodward-Kron R, Frank O, Knieriemen A, Lau P. Patient-directed reminders to improve preventive care in general practice for patients with type 2 diabetes: a proof of concept. Aust J Gen Pract. 2018;47(6):383–8.

Litvin CB, Hyer JM, Ornstein SM. Use of clinical decision support to improve primary care identification and management of chronic kidney disease (CKD). J Am Board Fam Med. 2016;29(5):604–12.

Lopez PM, Divney A, Goldfeld K, Zanowiak J, Gore R, Kumar R, et al. Feasibility and outcomes of an electronic health record intervention to improve hypertension management in immigrant-serving primary care practices. Med Care. 2019;57:S164–S71.

Lugtenberg M, Pasveer D, van der Weijden T, Westert GP, Kool RB. Exposure to and experiences with a computerized decision support intervention in primary care: results from a process evaluation. BMC Fam Pract. 2015;16:141.

Majka DS, Lee JY, Peprah YA, Lipiszko D, Friesema E, Ruderman EM, et al. Changes in care after implementing a multifaceted intervention to improve preventive cardiology practice in rheumatoid arthritis. Am J Med Qual. 2019;34(3):276–83.

Meador M, Osheroff JA, Reisler B. Improving identification and diagnosis of hypertensive patients hiding in plain sight (HIPS) in health centers. Jt Comm J Qual Patient Saf. 2018;44(3):117–29.

Millery M, Shelley D, Wu D, Ferrari P, Tseng TY, Kopal H. Qualitative evaluation to explain success of multifaceted technology-driven hypertension intervention. Am J Manag Care. 2011;17(12 Spec No.):Sp95–102.

O'Reilly DJ, Bowen JM, Sebaldt RJ, Petrie A, Hopkins RB, Assasi N, et al. Evaluation of a chronic disease management system for the treatment and management of diabetes in primary health care practices in Ontario: an observational study. Ont Health Technol Assess Ser. 2014;14(3):1–37.

Orchard J, Li J, Gallagher R, Freedman B, Lowres N, Neubeck L. Uptake of a primary care atrial fibrillation screening program (AF-SMART): a realist evaluation of implementation in metropolitan and rural general practice. BMC Fam Pract. 2019;20(1):1–13.

Pawson R, Tilley N. Realistic evaluation bloodlines. Am J Eval. 2001;22(3):317–24.

Patel B, Usherwood T, Harris M, Patel A, Panaretto K, Zwar N, et al. What drives adoption of a computerised, multifaceted quality improvement intervention for cardiovascular disease management in primary healthcare settings? A mixed methods analysis using normalisation process theory. Implement Sci. 2018;13(1):140.

Murray E, Treweek S, Pope C, MacFarlane A, Ballini L, Dowrick C, et al. Normalisation process theory: a framework for developing, evaluating and implementing complex interventions. BMC Med. 2010;8(1):63.

Peiris D, Usherwood T, Weeramanthri T, Cass A, Patel A. New tools for an old trade: a socio-technical appraisal of how electronic decision support is used by primary care practitioners. Sociol Health Illn. 2011;33(7):1002–18.

Praveen D, Patel A, Raghu A, Clifford GD, Maulik PK, Abdul AM, et al. SMARTHealth India: development and field evaluation of a mobile clinical decision support system for cardiovascular diseases in Rural India. JMIR Mhealth Uhealth. 2014;2(4):e3568.

Raghu A, Praveen D, Peiris D, Tarassenko L, Clifford G. Engineering a mobile health tool for resource-poor settings to assess and manage cardiovascular disease risk: SMARThealth study. BMC Med Inform Decis Mak. 2015;15:36.

Regan ME. Implementing an evidence-based clinical decision support tool to improve the detection, evaluation, and referral patterns of adult chronic kidney disease patients in primary care. J Am Assoc Nurse Pract. 2017;29(12):741–53.

Romero-Brufau S, Wyatt KD, Boyum P, Mickelson M, Moore M, Cognetta-Rieke C. A lesson in implementation: a pre-post study of providers’ experience with artificial intelligence-based clinical decision support. Int J Med Inform. 2020;137:1–6.

Shemeikka T, Bastholm-Rahmner P, Elinder CG, Vég A, Törnqvist E, Cornelius B, et al. A health record integrated clinical decision support system to support prescriptions of pharmaceutical drugs in patients with reduced renal function: design, development and proof of concept. Int J Med Inform. 2015;84(6):387–95.

Singh K, Johnson L, Devarajan R, Shivashankar R, Sharma P, Kondal D, et al. Acceptability of a decision-support electronic health record system and its impact on diabetes care goals in South Asia: a mixed-methods evaluation of the CARRS trial. Diabet Med. 2018;35(12):1644–54.

Rogers EM. Diffusion of innovations. 5th ed. New York: Free Press; 2003.

Sperl-Hillen JM, Crain AL, Margolis KL, Ekstrom HL, Appana D, Amundson G, et al. Clinical decision support directed to primary care patients and providers reduces cardiovascular risk: a randomized trial. J Am Med Inform Assoc. 2018;25(9):1137–46.

Vedanthan R, Blank E, Tuikong N, Kamano J, Misoi L, Tulienge D, et al. Usability and feasibility of a tablet-based Decision-Support and Integrated Record-keeping (DESIRE) tool in the nurse management of hypertension in rural western Kenya. Int J Med Inform. 2015;84(3):207–19.

Wan Q, Makeham M, Zwar NA, Petche S. Qualitative evaluation of a diabetes electronic decision support tool: views of users. BMC Med Inform Decis Mak. 2012;12:61.

Patel A, Praveen D, Maharani A, Oceandy D, Pilard Q, Kohli MPS, et al. Association of multifaceted mobile technology-enabled primary care intervention with cardiovascular disease risk management in rural Indonesia. JAMA Cardiol. 2019;4(10):978–86.

Ross J, Stevenson F, Lau R, Murray E. Factors that influence the implementation of e-health: a systematic review of systematic reviews (an update). Implement Sci. 2016;11(1):146.

Kaplan B. The Medical Computing “lag”: perceptions of barriers to the application of computers to medicine. Int J Technol Assess Health Care. 1987;3(1):123–36.

Murphy EV. Clinical decision support: effectiveness in improving quality processes and clinical outcomes and factors that may influence success. Yale J Biol Med. 2014;87(2):187–97.

Tcheng JE, Bakken S, Bates DW, Bonner H III, Gandhi TK, Josephs M, et al. Optimizing strategies for clinical decision support (meeting). Washington, D.C.: National Academy of Sciences; 2017. Available from: https://lccn.loc.gov/2017056753. Cited 2022 June

Kawamoto K, McDonald CJ. Designing, conducting, and reporting clinical decision support studies: recommendations and call to action. Ann Intern Med. 2020;172(11_Supplement):S101–S9.

Lidwell W. In: Butler J, Holden K, editors. Universal principles of design: 100 ways to enhance usability, influence perception, increase appeal, make better design decisions, and teach through design. Gloucester: Rockport; 2003.

Miller K, Mosby D, Capan M, Kowalski R, Ratwani R, Noaiseh Y, et al. Interface, information, interaction: a narrative review of design and functional requirements for clinical decision support. J Am Med Inform Assoc. 2018;25(5):585–92.

Payne PRO. Advancing user experience research to facilitate and enable patient-centered research: current state and future directions. EGEMS (Wash DC). 2013;1(1):1026.

Semanik MG, Kleinschmidt PC, Wright A, Willett DL, Dean SM, Saleh SN, et al. Impact of a problem-oriented view on clinical data retrieval. J Am Med Inform Assoc. 2021;28(5):899–906.

Curran RL, Kukhareva PV, Taft T, Weir CR, Reese TJ, Nanjo C, et al. Integrated displays to improve chronic disease management in ambulatory care: a SMART on FHIR application informed by mixed-methods user testing. J Am Med Inform Assoc. 2020;27(8):1225–34.

Sarkar U, Samal L. How effective are clinical decision support systems? BMJ. 2020;370:m3499.

Kawamoto K, Del Fiol G, Lobach DF, Jenders RA. Standards for scalable clinical decision support: need, current and emerging standards, gaps, and proposal for progress. Open Med Inform J. 2010;4:235–44.

Kawamoto K, Kukhareva PV, Weir C, Flynn MC, Nanjo CJ, Martin DK, et al. Establishing a multidisciplinary initiative for interoperable electronic health record innovations at an academic medical center. JAMIA Open. 2021;4(3):ooab041.

Yusof MM, Kuljis J, Papazafeiropoulou A, Stergioulas LK. An evaluation framework for health information systems: human, organization and technology-fit factors (HOT-fit). Int J Med Inform. 2008;77(6):386–98.

Cresswell K, Williams R, Sheikh A. Developing and applying a formative evaluation framework for health information technology implementations: qualitative investigation. J Med Internet Res. 2020;22(6):e15068.

Saleebey D. The strengths perspective in social work practice: extensions and cautions. Soc Work. 1996;41(3):296–305.

Ash JS, Sittig DF, McMullen CK, Wright A, Bunce A, Mohan V, et al. Multiple perspectives on clinical decision support: a qualitative study of fifteen clinical and vendor organizations. BMC Med Inform Decis Mak. 2015;15(1):35.

Kumar A. Stakeholder’s perspective of clinical decision support system. Open J Bus Manag (Irvine, CA). 2016;4(1):45–50.

Bergdahl E. Is meta-synthesis turning rich descriptions into thin reductions? A criticism of meta-aggregation as a form of qualitative synthesis. Nurs Inq. 2019;26(1):e12273.

Hannes K, Lockwood C. Pragmatism as the philosophical foundation for the Joanna Briggs meta-aggregative approach to qualitative evidence synthesis. J Adv Nurs. 2011;67(7):1632–42.

Varsi C, Solberg Nes L, Kristjansdottir OB, Kelders SM, Stenberg U, Zangi HA, et al. Implementation strategies to enhance the implementation of ehealth programs for patients with chronic illnesses: realist systematic review. J Med Internet Res. 2019;21(9):e14255.

Wensing M. Implementation science in healthcare: introduction and perspective. Z Evid Fortbild Qual Gesundhwes. 2015;109(2):97–102.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53.

Sockolow PS, Bowles KH, Rogers M. Health Information Technology Evaluation Framework (HITREF) comprehensiveness as assessed in electronic point-of-care documentation systems evaluations. Stud Health Technol Inform. 2015;216:406–9.

Liberati EG, Ruggiero F, Galuppo L, Gorli M, González-Lorenzo M, Maraldi M, et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implement Sci. 2017;12(1):113.

Davidoff F, Dixon-Woods M, Leviton L, Michie S. Demystifying theory and its use in improvement. BMJ Qual Saf. 2015;24(3):228.

Acknowledgements

The researchers gratefully acknowledge the Royal Australian College of General Practitioners (RACGP) Foundation for their support of this project. We thank Ms Lisa Ban (Charles Darwin University academic research librarian) for her review of the search strategy.

Funding

This research was supported by the RACGP, the Australian Government Research Training Program (RTP) Scholarship, and Menzies School of Health Research scholarship.

Author information

Contributions

WC, GG, AC, KH, and AA contributed to the conception and design of the study. WC conducted the search strategy. WC, BB, and PC screened titles and abstracts for eligibility. WC screened full texts for inclusion. WC and CO conducted critical appraisal and data extraction. WC analysed the data and prepared the original draft. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Supplementary Information

Additional file 1. PRISMA 2020 Checklist.

Additional file 2. Search strategy.

Additional file 3. Detailed inclusion and exclusion criteria.

Additional file 4. Methodological quality of included studies.

Additional file 5. List of findings with illustrations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Chen, W., O’Bryan, C.M., Gorham, G. et al. Barriers and enablers to implementing and using clinical decision support systems for chronic diseases: a qualitative systematic review and meta-aggregation. Implement Sci Commun 3, 81 (2022). https://doi.org/10.1186/s43058-022-00326-x
