Abstract

Background

To combat the opioid epidemic in the USA, unprecedented federal funding has been directed to states and territories to expand access to prevention, overdose rescue, and medications for opioid use disorder (MOUD). Similar to other states, California rapidly allocated these funds to increase reach and adoption of MOUD in safety-net, primary care settings such as Federally Qualified Health Centers. Typical of current real-world implementation endeavors, a package of four implementation strategies was offered to all clinics. The present study examines (i) the pre-post effect of the package of strategies, (ii) whether/how this effect differed between new (start-up) versus more established (scale-up) MOUD practices, and (iii) the effect of clinic engagement with each of the four implementation strategies.

Methods

Forty-one primary care clinics were offered access to four implementation strategies: (1) Enhanced Monitoring and Feedback, (2) Learning Collaboratives, (3) External Facilitation, and (4) Didactic Webinars. Using linear mixed effects models, RE-AIM-guided outcomes of reach, adoption, and implementation quality were assessed at baseline and at 9-month follow-up.

Results

Of the 41 clinics, 25 (61%) were at the MOUD start-up phase and 16 (39%) were at the scale-up phase. A pre-post difference was observed for the primary outcome, the percent of patients prescribed MOUD (reach) (βtime = 3.99; 95% CI = 0.73 to 7.26; p = 0.02). The largest magnitude of change occurred in implementation quality (ES = 0.68; 95% CI = 0.66 to 0.70). Baseline MOUD capability moderated the change in reach (start-ups 22.60%, 95% CI = 16.05 to 29.15; scale-ups −4.63%, 95% CI = −7.87 to −1.38). Improvements in adoption and implementation quality were moderately associated with early prescriber engagement in Learning Collaboratives (adoption: ES = 0.61; 95% CI = 0.25 to 0.96; implementation quality: ES = 0.55; 95% CI = 0.41 to 0.69). Improvement in adoption was also associated with early prescriber engagement in Didactic Webinars (adoption: ES = 0.61; 95% CI = 0.20 to 1.05).

Conclusions

Rather than providing an all-clinics-get-all-components package of implementation strategies, these data suggest that it may be more efficient and effective to tailor the provision of implementation strategies to the needs of each clinic. Future implementation endeavors could benefit from (i) greater precision in the provision of implementation strategies based on contextual determinants, and (ii) the inclusion of strategies targeting engagement.


Introduction

Background

Opioid overdose deaths in the USA continue to rise with the use of prescription opioids, heroin, synthetic opioids, and tainted stimulants. More than 100 individuals die daily from an opioid overdose [1]. Although three evidence-based medications for opioid use disorder (MOUD: buprenorphine, naltrexone, and methadone) exist and are available through the Drug Addiction Treatment Act of 2000 [2,3,4,5], only 10% of people across the country who need these medications receive them [6,7,8]. To combat the opioid epidemic, unprecedented federal funding has been directed to states and territories to increase access to MOUD and reduce opioid overdose deaths through prevention, treatment, and recovery efforts [9,10,11,12,13,14,15].

Among other efforts, California deployed an 18-month multi-component implementation program within local safety-net, primary care clinics to install or expand access to medications for opioid use disorder (reach), increase the number of active x-waivered providers (adoption), and augment MOUD quality of care (implementation quality). These primary care clinics were either at the start-up phase, with no current MOUD capability, or at the scale-up phase, with some existing MOUD capability.

Safety-net primary care clinics offer services to low-income and underserved populations regardless of their ability to pay [16]. Because they are usually the first point of contact for identifying and treating health conditions, these clinics are well-positioned to screen, triage, and treat opioid use disorder (OUD). Patients receiving care at these clinics often face adverse social determinants of health and added challenges in accessing specialty care services [17]. Clinicians at these clinics also report higher time pressure and burnout [18, 19], an important consideration when asking them to engage in implementation activities.

The multi-component implementation program combined access to four commonly used implementation strategies: (1) Enhanced Monitoring and Feedback, (2) Learning Collaboratives, (3) External Facilitation, and (4) Didactic Webinars. These were selected based on evidence from the literature and lessons learned from a previous iteration of implementing MOUD in a similar setting [20,21,22]. Typical of other real-world implementation endeavors [23, 24], no plan was devised for offering different strategies to different clinics based on MOUD capability, specific implementation barriers, or other contextual factors. In large part, this is due to a dearth of literature and implementation expertise on how best to accomplish this. The present work evaluates the relative effectiveness of these four commonly used strategies on reach, adoption, and implementation quality, given determinants of context and engagement.

Despite massive economic investments, the opioid crisis is far from resolved. Findings from this study not only contribute to future efforts to implement MOUD and to optimize system-level efforts to alter the trajectory of the opioid epidemic, but may also be relevant to other implementation efforts within safety-net practices, primary care clinics, and other medication management settings, by addressing how heterogeneous contextual determinants and engagement in implementation strategies may differentially affect implementation outcomes.

Methods

Aims

Specific aims and hypotheses were specified prior to data analysis.

The primary aim was (a) to test whether the provision of the multi-component implementation program affected change in the percent of patients prescribed MOUD (primary outcome); (b) to estimate (and rank) the magnitude of change in the reach, adoption, and implementation quality outcomes; and (c) to estimate how this effect differed among clinics subgrouped by their MOUD capability at baseline, prior to enrolling in the program. It was hypothesized that (a) the primary outcome, the percent of patients prescribed MOUD, would change over time; (b) all outcomes would improve gradually over time, with the largest magnitude of change in implementation quality, given that expanding quality (or capability) is a precursor to the adoption and sustainment of evidence-based practices [25]; and (c) the program would have a greater effect on change over time for clinics with lower MOUD capability at baseline because of their larger opportunity for improvement.

The secondary aim was to estimate the effect of early engagement in the four components of the program on the outcomes of reach, adoption, and implementation quality. For each implementation strategy, the hypothesis was that early engagement in the component with a prescriber would be associated with better outcomes in future quarters than either no early engagement or early engagement without a prescriber. We expected that prescribers, as key actors in the implementation, would need to engage in the program components for those components to effect meaningful change [26, 27].

Study design

This manuscript presents a prospective, longitudinal evaluation that focuses on the period from pre (January to March 2019) to mid-active implementation (October to December 2019). Figure 1 illustrates the program activities and measurement timeline. The Standards for Reporting Implementation Studies (StaRI) checklist [28] was used to guide reporting (Additional file 1).

Clinics

Forty-two (55%) of the 76 clinics that applied to participate in the program were enrolled. Clinics were eligible if they (1) provided care in the State of California; (2) met the definition of a safety-net health care organization; (3) met the definition of a non-profit, tax-exempt entity under section 501(c)(3) of the Internal Revenue Code (IRC) or were a governmental, tribal, or public entity; (4) provided comprehensive primary care services; and (5) were interested in initiating or expanding MOUD efforts. Clinics were deemed ineligible if they (1) submitted an incomplete application, (2) provided care outside of California, or (3) did not meet the definition of a non-profit. Upon receipt of applications, a panel of addiction and quality improvement experts further screened potential participating clinics for MOUD program readiness, as well as team and leadership commitment. Retained clinics either had no existing MOUD capability (start-up clinics) or were seeking to optimize and expand existing MOUD capability (scale-up clinics).

Clinic types included Federally Qualified Health Centers (FQHC) and FQHC look-alikes, community clinics, rural health clinics, ambulatory care clinics owned and operated by hospitals, and Indian Health Service clinics. FQHCs are nonprofits or public organizations that receive funding from the Health Resources & Services Administration and are legislatively mandated to provide primary care under a sliding fee discount program in medically underserved areas [29]. FQHC look-alikes are community health centers that provide primary care and are eligible for FQHC reimbursement structures and discounted drug pricing [30]. Participating clinics ranged from densely urban to frontier rural and primarily served low-income patients, those living in medically underserved areas, and/or those within specific racial and ethnic subgroups (e.g., Native American Tribes and Alaska Native people, Latinos, Koreans).

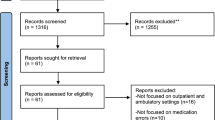

Clinics were compensated in a stage-wise fashion, with funds of up to $50,000 upon meeting pre-determined benchmarks. It was made clear to all clinics and clinic leadership that this compensation was for providing research data, not for receiving or taking part in the implementation strategies; thus, no compensation was provided to engage clinics in the implementation strategies. One clinic withdrew after enrollment, leaving a final sample of 41 clinics. Figure 2 presents an extended CONSORT diagram. Figure 3 is a geographic representation of participating clinics by start-up and scale-up designation.

Figure 2. CONSORT diagram of clinic recruitment, enrollment, retention, and early engagement. Note: Under Early Clinic Engagement in Discrete Implementation Strategies, n refers to the number of clinics that attended the first session of the specified combinations of implementation activities. Because External Facilitation sessions are scheduled by the external facilitator and the clinic team, attendance at the kick-off session is counted only if it took place within the first month of active implementation.

Implementation strategies

Clinics were offered four types of discrete implementation support activities: (1) ongoing Enhanced Monitoring and Feedback, (2) two Learning Collaboratives, (3) monthly External Facilitation, and (4) monthly Didactic Webinars [22]. Within the confines of each strategy, the content of the implementation support activities—as well as approaches to engaging clinics in these activities—was tailored to address the barriers that patients at safety-net primary care clinics often face. However, the choice of implementation strategy offered was not tailored; instead, all four strategies were provided to all clinics as a single package (Fig. 1).

Enhanced Monitoring and Feedback

Similar to audit and feedback, a commonly used evidence-based implementation strategy [31], Enhanced Monitoring and Feedback (EMF) uses performance data to trigger change and to guide the decisions and actions of quality improvement efforts [32, 33]. EMF augments traditional audit and feedback by feeding back information on organizational (e.g., leadership, culture, workflow) and system-level (e.g., policies, community norms, network connectivity) factors in addition to individual performance. Throughout the pre- and active implementation phases, performance indicators pertaining to reach, adoption, and implementation of MOUD were gathered and fed back to clinics quarterly via automatically generated run charts. Clinics were also provided with the aggregate average of all other clinics, allowing them to compare their performance with the program average.

Learning Collaboratives

The Learning Collaborative strategy is widely used across quality improvement efforts, particularly those targeting complex interventions that involve systems and multidisciplinary teams [34,35,36]. It has also shown a positive effect on both quality- and quantity-type outcomes for MOUD expansion in primary care [37]. Learning Collaboratives, led by a panel of addiction and quality improvement experts, were held in April and September 2019. These in-person sessions covered MOUD practice and quality improvement strategies. The MOUD practice component consisted of presentations by addiction treatment experts and primary care peers on topics related to MOUD, such as how to kick-start MOUD; strategies for managing complex cases, diversion, co-occurring stimulant use disorders, and pregnancy; and approaches to addressing stigma and negative beliefs and attitudes about treating addiction in primary care. The quality improvement segment consisted of interactive workshop activities in which clinic teams identified drivers of and barriers to implementing or expanding MOUD practice and set SMART (Specific, Measurable, Achievable, Relevant, and Time-bound) goals to take back to their clinic.

External Facilitation

External Facilitation, sometimes also referred to as implementation facilitation, coaching, or mentoring, has been used extensively and successfully across a wide range of implementation efforts in medical and non-medical settings [38,39,40,41,42]. Each clinic was assigned an external facilitator for up to 25 h of implementation support. Clinics were encouraged to meet with their facilitator (via phone or teleconference) at least once per quarter to review ongoing MOUD expansion efforts, discuss successes, identify areas for improvement, and troubleshoot existing barriers, such as retaining patients who are homeless or have transportation needs in MOUD care. The external facilitators were addiction psychiatrists, nurses, social workers, or behavioral health specialists with extensive experience providing MOUD care in primary care settings.

Didactic Webinars

Didactic Webinars have a demonstrated positive impact on reach and adoption of MOUD [20]. Fifteen 1-h webinars were developed and made available over the course of the pre- and active implementation periods. The webinars were led by addiction psychiatrists, nurses, social workers, or behavioral health specialists with extensive experience providing MOUD care in primary care settings [43]. Topics included MOUD overview and management, contingency management, teleconsultation support for clinicians, peer support from people with lived experience, and fundamentals of compassionate care. To acknowledge staff burnout, a common barrier in safety-net primary care settings, Didactic Webinars integrated interactive activities such as guided meditations and open discussions that allowed staff to reflect on their work and experiences. Instant feedback was collected through polls at the end of each webinar to ensure the content was helpful and relevant.

Measures

Time

In this study, time (t) is operationalized as the quarter (Q) of data collection: t = 0 refers to data collected in January to March 2019 (Q0; pre-implementation), t = 1 refers to April to June 2019 (Q1), t = 2 refers to July to September 2019 (Q2), and t = 3 refers to October to December 2019 (Q3; mid-active implementation).
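The quarter coding above can be expressed as a small helper. This is a minimal sketch; the function name `quarter_index` and the choice to reject dates outside calendar year 2019 are illustrative assumptions, not part of the program's actual data pipeline.

```python
from datetime import date

# Hypothetical helper: Q0 (Jan-Mar 2019, pre-implementation) through Q3 (Oct-Dec 2019, mid-active).
STUDY_START = date(2019, 1, 1)   # first day of Q0
N_QUARTERS = 4                   # t = 0, 1, 2, 3


def quarter_index(d: date) -> int:
    """Map a calendar date to the study time index t (0-3).

    Raises ValueError for dates outside January-December 2019.
    """
    months_since_start = (d.year - STUDY_START.year) * 12 + (d.month - STUDY_START.month)
    t = months_since_start // 3  # three calendar months per quarter
    if months_since_start < 0 or t >= N_QUARTERS:
        raise ValueError(f"{d} is outside the Q0-Q3 study window")
    return t
```

Because t enters the models as a number rather than a category, a one-unit change in t corresponds to one quarter.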

Outcome variables

All outcomes of interest—reach, adoption, and implementation quality—were guided by RE-AIM, a framework developed and commonly used to study the quality, speed, and public health impact of efforts to implement evidence-based practices in real-world settings [44].

Reach and adoption

Reach and adoption were operationalized as counts and percentages for a given quarter. Reach was (1) the number of patients with a current diagnosis of OUD; (2) the number of patients with a current, active MOUD prescription, such as buprenorphine or long-acting injectable naltrexone; and (3) the percent of OUD patients with a current, active MOUD prescription (primary outcome). A prescription was considered active if it covered any of the past 30 days of the reporting month.
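The reach definitions, including the 30-day active-prescription rule, can be sketched as follows. The patient and prescription records, their field names, and the reading of "past 30 days" as the 30-day window ending on the last day of the reporting month are hypothetical assumptions for illustration; so is restricting the MOUD count to patients with an OUD diagnosis, as the percentage in measure (3) requires.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Prescription:
    start: date  # first day covered by the MOUD prescription
    end: date    # last day covered (inclusive)


@dataclass
class Patient:
    has_oud_dx: bool                             # current OUD diagnosis
    moud_rx: list = field(default_factory=list)  # list of Prescription records


def has_active_moud(p: Patient, month_end: date) -> bool:
    """True if any MOUD prescription covers at least one of the 30 days ending on month_end."""
    window_start = month_end - timedelta(days=29)  # 30 days inclusive of month_end
    return any(rx.start <= month_end and rx.end >= window_start for rx in p.moud_rx)


def reach(patients, month_end: date):
    """Return (n with OUD, n with OUD on active MOUD, percent on MOUD) for one reporting month."""
    oud = [p for p in patients if p.has_oud_dx]
    on_moud = [p for p in oud if has_active_moud(p, month_end)]
    pct = 100.0 * len(on_moud) / len(oud) if oud else float("nan")
    return len(oud), len(on_moud), pct
```

A clinic with no OUD patients yields an undefined percentage (NaN) rather than 0%, which keeps start-up clinics with empty panels from being scored as having zero reach.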

Adoption was (1) the number of prescribers; (2) the number of x-waivered prescribers; (3) the number of active x-waivered prescribers, i.e., those who prescribed MOUD during the 3-month data reporting period; and (4) the percent of the clinic's prescribers who were x-waivered.
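The four adoption measures can be computed from a per-clinic prescriber roster, as in this minimal sketch. The `Prescriber` record and its fields are hypothetical; the program collected these counts through its data portal rather than from individual-level records described here.

```python
from dataclasses import dataclass


@dataclass
class Prescriber:
    x_waivered: bool         # holds an x-waiver
    moud_rx_in_quarter: int  # MOUD prescriptions written in the 3-month reporting period


def adoption(prescribers):
    """Return the four adoption measures for one clinic and quarter."""
    n = len(prescribers)
    waivered = [p for p in prescribers if p.x_waivered]
    active = [p for p in waivered if p.moud_rx_in_quarter > 0]  # prescribed MOUD this quarter
    pct = 100.0 * len(waivered) / n if n else float("nan")
    return {
        "n_prescribers": n,
        "n_x_waivered": len(waivered),
        "n_active_x_waivered": len(active),
        "pct_x_waivered": pct,
    }
```

Separating "x-waivered" from "active x-waivered" distinguishes prescribers who are able to prescribe MOUD from those who actually did so in the quarter.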

Reach and adoption were collected quarterly via a secure online data portal customized for the program. To ensure data accuracy, clinics were trained on data collection through webinars and one-on-one phone sessions at the program start. Data validation was performed quarterly by a trained member from the research team to ensure completeness and accuracy as well as resolve discrepancies.

Implementation quality

The Integrating Medications for Addiction Treatment (IMAT) in Primary Care Index is a self-reported, team-based assessment designed to evaluate implementation quality of and capability for MOUD [45]. The IMAT includes elements of the Addiction Care Cascade and measures guideline adherence against policies, expert consensus recommendations, and best practices [46, 47]. It comprises 45 benchmark items across 7 domains that assess implementation quality with regard to Infrastructure; Clinic Culture and Environment; Patient Identification and Initiating Care; Care Delivery and Treatment Response; Care Coordination; Workforce; and Staff Training and Development. Each item is rated on a 5-point scale anchored at 1 (Not Integrated), 3 (Partially Integrated), and 5 (Fully Integrated). Scores can be generated for each domain, as well as a total composite score. The IMAT is psychometrically acceptable, with good internal consistency, concurrent validity, and predictive validity [48]. The IMAT was administered at baseline and at the active implementation midpoint.
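The section states that domain and composite scores can be generated but does not give the scoring rule. The sketch below assumes, hypothetically, that a domain score is the mean of its item ratings and that the composite is the unweighted mean of the seven domain scores; the official IMAT scoring may differ.

```python
from statistics import mean

IMAT_DOMAINS = (
    "Infrastructure",
    "Clinic Culture and Environment",
    "Patient Identification and Initiating Care",
    "Care Delivery and Treatment Response",
    "Care Coordination",
    "Workforce",
    "Staff Training and Development",
)


def score_imat(ratings):
    """Score one team's IMAT responses.

    ratings: dict mapping each domain name to a list of item ratings (1-5).
    Assumption: domain score = mean of its items; composite = mean of domain scores.
    """
    domain_scores = {}
    for domain in IMAT_DOMAINS:
        items = ratings[domain]
        if not items or any(r not in (1, 2, 3, 4, 5) for r in items):
            raise ValueError(f"{domain}: ratings must be integers from 1 to 5")
        domain_scores[domain] = mean(items)
    composite = mean(domain_scores.values())  # each domain weighted equally
    return domain_scores, composite
```

Averaging domain scores (rather than all 45 items) keeps domains with many items from dominating the composite; whether the IMAT does this is not stated here.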

MOUD capability

Prior to implementation, each clinic was categorized as either a start-up clinic or a scale-up clinic. Start-up clinics had a small number of x-waivered prescribers and no or few patients on MOUD; scale-up clinics were more established in their OUD care. These categories were developed by an expert team with qualifications in addiction medicine, addiction psychiatry, primary care management, and quality improvement, and they correspond to stages of the Exploration, Preparation, Implementation, and Sustainment (EPIS) framework of Aarons et al.: Exploration and Preparation (start-up clinics) and Implementation and Sustainment (scale-up clinics) [25].

Early engagement

Early engagement is the focal predictor variable of the secondary aim. It was operationalized as clinic attendance at the first session of each implementation activity. Clinics were grouped into one of three categories: (1) no member of the clinic attended, (2) at least one member of the clinic attended, but none was a prescriber, and (3) at least one member of the clinic attended, including a prescriber. Prescribers are physicians, physician assistants, and nurse practitioners who are eligible for or already have an x-waiver.
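The three-level early-engagement variable can be derived from first-session sign-in records, as sketched here. The input format (a list of attendee role strings per clinic) and the role labels used to identify prescribers are hypothetical; the study defined prescribers by x-waiver eligibility or status, which a role string only approximates.

```python
from enum import Enum

# Hypothetical role labels standing in for "eligible for or already holding an x-waiver".
PRESCRIBER_ROLES = {"physician", "physician assistant", "nurse practitioner"}


class Engagement(Enum):
    DID_NOT_ATTEND = 1
    ATTENDED_WITHOUT_PRESCRIBER = 2
    ATTENDED_WITH_PRESCRIBER = 3


def classify_engagement(attendee_roles):
    """Classify one clinic's attendance at the first session of one strategy."""
    roles = [r.strip().lower() for r in attendee_roles]
    if not roles:
        return Engagement.DID_NOT_ATTEND
    if any(r in PRESCRIBER_ROLES for r in roles):
        return Engagement.ATTENDED_WITH_PRESCRIBER
    return Engagement.ATTENDED_WITHOUT_PRESCRIBER
```

The classification is applied separately for each strategy, so one clinic can fall into different categories for Learning Collaboratives, External Facilitation, and Didactic Webinars.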

Attendance data, including the name and role of attendees as well as the associated clinic, were collected by program staff at each activity. Learning Collaborative and Didactic Webinar attendance were recorded through sign-in documentation. External facilitators utilized facilitation logs to track monthly encounters. Engagement with EMF was not tracked as all clinics received it continuously throughout the program.

Statistical analyses

Analyses were performed in R version 4.0.2. Additional file 2 provides additional details regarding the methods used.

Primary aim: pre-post differences of longitudinal outcomes given MOUD capability

A linear mixed-effects (LME) [49] model was used to examine changes in reach, adoption, and implementation quality as a function of time (here, pre-post) and MOUD capability. The pre-specified LME model included fixed effects for the intercept, time (t = 0,1,2,3), MOUD capability at baseline (1 = scale-up, 0 = start-up), and the interaction of time and baseline MOUD capability. The LME also included a random intercept and an independent covariance structure for the residual errors. For ease of interpretation, MOUD capability was mean-centered, so that the intercept and time fixed effects can be interpreted as the marginal mean at baseline and the marginal slope (i.e., averaged over MOUD capability), respectively. The same LME model was used for all three parts of the primary aim.
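As a sketch of the primary-aim model described above, using notation introduced here for illustration ($Y_{it}$ is an outcome for clinic $i$ at quarter $t$, $C_i$ the baseline capability indicator, $\bar{C}$ its mean across clinics) and the usual Gaussian assumptions for the random intercept and residuals, the model and the reason mean-centering makes $\beta_1$ the marginal slope can be written as:

```latex
\begin{aligned}
Y_{it} &= \beta_0 + \beta_1 t + \beta_2 \tilde{C}_i + \beta_3\, t\, \tilde{C}_i + b_{0i} + \varepsilon_{it},
  \qquad t \in \{0, 1, 2, 3\},\\
\tilde{C}_i &= C_i - \bar{C}, \qquad C_i = 1 \ (\text{scale-up}), \quad C_i = 0 \ (\text{start-up}),\\
b_{0i} &\sim \mathcal{N}(0, \sigma_b^2), \qquad \varepsilon_{it} \overset{\text{iid}}{\sim} \mathcal{N}(0, \sigma^2),\\
\text{slope}_{\text{start-up}} &= \beta_1 - \bar{C}\,\beta_3, \qquad
\text{slope}_{\text{scale-up}} = \beta_1 + (1 - \bar{C})\,\beta_3,\\
(1 - \bar{C})\,\text{slope}_{\text{start-up}} + \bar{C}\,\text{slope}_{\text{scale-up}} &= \beta_1 .
\end{aligned}
```

The $\beta_3$ terms cancel in the weighted average, so $\beta_1$ is the per-quarter change averaged over capability, and the capability-averaged change from Q0 to Q3 is $3\beta_1$.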

Secondary aim: effects of early clinic engagement

An LME model was used to examine the association between early engagement in each implementation strategy and the reach and adoption outcomes. The pre-specified LME analysis included fixed effects for the intercept, time (t = 1,2,3), early engagement, and the interaction of time and early engagement. The LME also included a random intercept and an independent covariance structure for the residual errors.

For the implementation quality outcome, a standard regression model (not longitudinal) was fitted instead, given that implementation quality was only measured at pre-implementation (t = 0) and mid-active implementation (t = 3). This model included an intercept and early engagement.

To reduce the possibility of bias in the estimated effect of early engagement due to common correlates of the outcomes and engagement [50, 51], all models included two types of baseline covariates: (i) a theory-informed list of pre-implementation variables hypothesized to be associated with the outcomes, and (ii) a list of pre-implementation variables found to correlate moderately or strongly with early engagement. Additional information on covariate selection is provided in Additional file 2.
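A corresponding sketch of the secondary-aim models, again with notation introduced here for illustration: $E_{ik}$ indicates that clinic $i$ falls in early-engagement category $k$ (one category serves as the reference; Figure 8 uses clinics that attended without a prescriber), and $\mathbf{X}_i$ collects the baseline covariates just described. $Q_{i3}$ is the mid-active IMAT score; whether baseline IMAT enters through $\mathbf{X}_i$ is not stated in this section.

```latex
\begin{aligned}
\text{Reach, adoption:}\quad
Y_{it} &= \gamma_0 + \gamma_1 t + \sum_{k} \gamma_{2k} E_{ik} + \sum_{k} \gamma_{3k}\, t\, E_{ik}
          + \boldsymbol{\delta}^{\top}\mathbf{X}_i + b_{0i} + \varepsilon_{it},
          \qquad t \in \{1, 2, 3\},\\
\text{Implementation quality:}\quad
Q_{i3} &= \alpha_0 + \sum_{k} \alpha_k E_{ik} + \boldsymbol{\lambda}^{\top}\mathbf{X}_i + e_i .
\end{aligned}
```

The implementation quality model has no time index or random intercept because it uses a single post-baseline measurement per clinic.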

Effect sizes

For all estimated effects, effect sizes [52] are reported to ease interpretation of results and compare the effects across outcomes. An effect size of 0.5 (medium) or greater was considered clinically meaningful.
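The effect-size formula itself is not spelled out in this section (it follows [52] and Additional file 2). As a hedged sketch, assuming one common growth-model convention, ES = (model-implied change over the period) / (raw outcome SD), and using Cohen's conventional 0.2/0.5/0.8 benchmarks, the 0.5 threshold could be applied as follows; all function names are hypothetical.

```python
def growth_effect_size(slope_per_quarter: float, n_quarters: int, outcome_sd: float) -> float:
    """Assumed definition: change implied by the slope over n_quarters, divided by the raw SD."""
    if outcome_sd <= 0:
        raise ValueError("outcome_sd must be positive")
    return slope_per_quarter * n_quarters / outcome_sd


def cohen_label(es: float) -> str:
    """Label |ES| with Cohen's conventional benchmarks (0.2 small, 0.5 medium, 0.8 large)."""
    size = abs(es)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


def clinically_meaningful(es: float, threshold: float = 0.5) -> bool:
    """Apply the stated cut-off of 0.5 or greater (to the magnitude, an assumption)."""
    return abs(es) >= threshold
```

Standardizing by the raw outcome SD puts counts, percentages, and IMAT scores on a common scale, which is what allows the magnitudes of change to be ranked across outcomes.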

Missing data

For all variables considered, there was ≤10% missingness. To address missingness, the data were multiply imputed using a chained equations approach [53]. Twenty complete (imputed) datasets were generated. All results reported below were calculated using Rubin’s rules for combining the results of identical analyses on each of the 20 datasets [54, 55].
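Rubin's rules combine the 20 completed-data analyses by averaging the point estimates and adding the within- and between-imputation variances. A minimal pure-Python sketch for a single scalar parameter follows (the function name is hypothetical; the study's analyses were run in R):

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool one scalar parameter across m imputed datasets with Rubin's rules.

    estimates: point estimates from each completed-data analysis
    variances: the corresponding squared standard errors
    Returns (pooled estimate, total variance, degrees of freedom).
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least 2 imputations and one variance per estimate")
    q_bar = mean(estimates)          # pooled point estimate
    w = mean(variances)              # within-imputation variance
    b = variance(estimates)          # between-imputation variance (divisor m - 1)
    total = w + (1 + 1 / m) * b      # total variance T
    if b == 0:
        df = math.inf                # no between-imputation variability
    else:
        # Rubin's (1987) large-sample degrees of freedom
        df = (m - 1) * (1 + w / ((1 + 1 / m) * b)) ** 2
    return q_bar, total, df
```

A 95% interval is then q_bar ± t(df, 0.975) × sqrt(total). With few imputations or small samples, the Barnard-Rubin small-sample degrees of freedom are often preferred.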

Results

Descriptive

Baseline clinic characteristics

Twenty-five (61%) clinics were MOUD start-ups, and 16 (39%) were scale-ups. Most clinics were FQHCs (n = 28; 68.3%), and most were located in urban or metropolitan areas (n = 34; 82.9%). On average, clinics had fewer than one addiction-certified physician (SD = 0.6) or psychiatrist (SD = 0.9) and only one mental health and addiction-certified behavioral clinician on staff (SD = 1.5). At program start, a mean of 5 prescribers per clinic (41.3%) were x-waivered (clinic range 0 to 23), and a mean of 30 patients per clinic diagnosed with OUD (44.4%) were receiving MOUD (clinic range 0 to 301). Baseline characteristics of clinics are presented in Table 1.

Early engagement

Figure 4 displays a breakdown of early clinic and prescriber engagement in each of the discrete implementation strategies. All clinics attended the kick-off Learning Collaborative (n = 41, 100%), 37 (90.2%) clinics engaged in External Facilitation within the first 2 months, and 33 (80.5%) clinics participated in the initial Didactic Webinar. Early prescriber engagement was highest for Learning Collaboratives (n = 35, 85.4%), followed by External Facilitation (n = 26, 63.4%) and Didactic Webinars (n = 17, 41.5%).

Figure 4. Early engagement in the multi-component implementation program (n = 41). Note: The n's represent clinic counts, not individual attendees. Early engagement was operationalized as clinic attended with a prescriber, clinic attended without a prescriber, or clinic did not attend. Early engagement was not reported for Enhanced Monitoring and Feedback (EMF) because all clinics received EMF continuously.

Primary aim: pre-post differences of longitudinal outcomes given MOUD capability

Consistent with our hypothesis, we observed a pre-post difference in the percent of patients prescribed MOUD, the primary outcome (βtime = 3.99 per quarter; 95% CI = 0.73 to 7.26; p = 0.02).

As anticipated, reach, adoption, and implementation quality all improved from pre- to mid-active implementation (see Table 2). Implementation quality had the largest magnitude of change (ES = 0.68; 95% CI = 0.66 to 0.70), with an estimated mean improvement of 0.53 from pre- to mid-active implementation. The number of x-waivered prescribers actively prescribing grew by a mean of 1.32, the second-largest magnitude of change (ES = 0.34; 95% CI = 0.32 to 0.36). The percent of patients prescribed MOUD had an estimated mean improvement of 11.98 percentage points, the third-largest magnitude of change (ES = 0.27; 95% CI = 0.18 to 0.37).

As expected, MOUD capability moderated the change in longitudinal outcomes. Compared with scale-up clinics, start-ups experienced a greater positive change in the percent of patients prescribed MOUD (22.60 percentage points; 95% CI = 16.05 to 29.15), implementation quality, number of active x-waivered prescribers, and number of prescribers. In contrast (and surprisingly), scale-up clinics saw a 4.63-percentage-point (95% CI = −7.87 to −1.38) reduction in the proportion of patients prescribed MOUD. Table 2 summarizes, and Figs. 5, 6, and 7 contrast, the estimated changes in reach, adoption, and implementation quality, respectively, between baseline and mid-active implementation by MOUD capability.
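Because capability was mean-centered, the subgroup changes in reach should average, weighted by the number of clinics in each subgroup (25 start-ups, 16 scale-ups), to the capability-averaged change over three quarters, 3 × βtime. A quick arithmetic check with the reported estimates (the helper name is illustrative):

```python
def marginal_change(change_startup, change_scaleup, n_startup, n_scaleup):
    """Clinic-weighted average of two subgroup changes: the marginal change implied
    by mean-centering a binary moderator at its sample mean."""
    n = n_startup + n_scaleup
    return (n_startup * change_startup + n_scaleup * change_scaleup) / n


# Reported Q0-to-Q3 changes in percent of OUD patients prescribed MOUD (percentage points)
avg = marginal_change(22.60, -4.63, n_startup=25, n_scaleup=16)
beta_time = 3.99  # reported marginal slope per quarter
print(round(avg, 2), round(3 * beta_time, 2))  # prints: 11.97 11.97
```

Both quantities agree with the estimated mean improvement of 11.98 percentage points to within rounding of the published estimates.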

Secondary aim: effects of early clinic engagement

Tables 3, 4, and 5 present the estimated effect and effect size of clinic engagement in each implementation strategy. Figure 8 illustrates the effect size of early engagement between clinics with prescriber attendance and clinics without prescriber attendance by implementation strategy.

Figure 8. Effect size of early engagement with a prescriber compared with early engagement without a prescriber in discrete implementation strategies. Note: An asterisk (*) indicates that the 95% CI of the effect size crossed zero; exact values are given in Tables 3, 4, and 5. The reference group is clinics that attended without a prescriber; the comparison group is clinics that attended with a prescriber.

Consistent with our expectation, early clinic engagement with a prescriber was associated with better outcomes in future quarters than early clinic engagement without a prescriber. Prescriber attendance at the first Learning Collaborative was moderately associated with improvement in the number of active x-waivered prescribers (ES = 0.61; 95% CI = 0.25 to 0.96) and IMAT implementation quality (ES = 0.55; 95% CI = 0.41 to 0.69). By mid-active implementation, clinics that attended the first Learning Collaborative session with a prescriber had an estimated mean of 1.10 (95% CI = 0.46 to 1.74) additional x-waivered prescribers actively prescribing and an IMAT implementation quality score 0.33 (95% CI = 0.25 to 0.42) points higher. Prescriber attendance at the first Didactic Webinar was moderately associated with improvement in the number of x-waivered prescribers (ES = 0.61; 95% CI = 0.20 to 1.05). By mid-active implementation, clinics that attended the first Didactic Webinar with a prescriber had an estimated mean of 1.66 (95% CI = 0.54 to 2.78) more x-waivered prescribers than clinics that attended without a prescriber.

Discussion

Summary

This study aimed to evaluate whether, and to what extent, a large-scale multi-component implementation support campaign led to changes in reach, adoption, and implementation quality for MOUD. Findings show that reach, adoption, and implementation quality all improved over time following the provision of a multi-component implementation program, with the greatest change in implementation quality and the least change in the number of patients with OUD. These changes differed by MOUD capability at baseline. The most substantial difference was in the percent of patients prescribed MOUD, which improved over time for start-ups but worsened for scale-ups. Changes in outcomes also differed by engagement in implementation strategies. Early engagement with a prescriber in the Learning Collaborative strategy had a moderate, positive effect on the number of active x-waivered prescribers and on implementation quality. Similarly, early engagement with a prescriber in the Didactic Webinar strategy had a moderate, positive effect on the number of x-waivered prescribers.

Implications

These findings provide insight into the effectiveness of various implementation strategies on reach, adoption, and implementation quality given determinants of context and engagement. They begin to inform what outcomes to target, how to target them, and whom and when to target, offering more precise guidance for future real-world, large-scale implementation-as-usual endeavors and a departure from the "everything but the kitchen sink" approach [56]. There has been unprecedented national investment in combating the opioid and stimulant overdose crises, with a major focus on expanding access to life-saving medications, i.e., MOUD [9,10,11,12,13,14,15]. Yet scientific understanding of the effectiveness of the implementation strategies being used to scale up and sustain access to MOUD and its guideline-adherent delivery remains sparse [57,58,59,60]. The current study contributes to addressing this gap.

The importance of implementation quality

Findings suggest that the multi-component implementation program had the largest effect on implementation quality. This is significant because quality and fidelity are essential to optimizing the benefit of any evidence-based intervention. As previously argued [61, 62], enhancing the quality of health care delivery has the potential to maximize return on investment by "delivering the right care at the right time." To thoroughly evaluate the effectiveness and sustainability of an implementation program, it is therefore insufficient to evaluate only quantity as an indicator of improvement. It is also critical to examine the quality, or capability, of intervention delivery as a predictor of sustainment: capacity cannot be maximized without capability. In addition to highlighting the importance of quality, our observations may imply that change in quality precedes change in quantity, which will need to be investigated in future studies. Examining the temporality of change during an implementation program can begin to address questions such as whether a peak in quality predicts a large improvement in reach and adoption, and whether these improvements can be sustained over time.

Better accommodating the needs of scale-up sites

By mid-active implementation, start-up clinics saw gains in the percent of patients prescribed MOUD, whereas scale-up clinics saw a decrease. One possible explanation is that start-up clinics had more room for improvement: some start-up clinics were new to MOUD and thus had no patients with OUD or on MOUD at program start. Scale-up clinics, on the other hand, may already have had a panel of identified OUD patients waiting to receive treatment, yet some of these patients may not have been eligible for MOUD. As a result, the number of OUD patients in scale-up clinics may have grown faster than the number of patients prescribed MOUD; this should be further investigated at program end. An alternative explanation is that scale-up clinics have different needs from start-up clinics. Clinics that are just starting up might require a higher intensity and wider range of supports, such as all four of the strategies offered in this multi-component implementation program. Sites that are scaling up, on the other hand, might experience implementation fatigue and need lighter-touch but targeted scale-up strategies [63, 64], such as train-the-trainer initiatives, infrastructure development, and quality improvement collaboratives. Such strategies, which build human capacity and implementation capability and develop a culture of change among staff and leadership, might be better suited to the barriers that sites encounter during scale-up. Lastly, improving quality may come at the expense of quantity because of competing resources and demands. Through participating in this implementation effort, scale-up clinics may have recognized the need to improve the quality of their MOUD services and devoted more time and resources to it, for example to existing MOUD workflows and clinic culture, temporarily at the expense of their goal of increasing reach.

Facilitating early engagement among key actors

As expected, early prescriber engagement in Didactic Webinars and Learning Collaboratives was associated with improvement in the percent of x-waivered prescribers (adoption), and early prescriber engagement in Learning Collaboratives was also associated with improvement in implementation quality. Consistent with the literature [65], these findings suggest the importance of engaging MOUD prescribers, the key actors in the implementation effort, early on. When designing implementation strategies, special attention should be given to encouraging early engagement from key actors and proactively preventing disengagement.

Limitations

Although our implementation took place across primary care clinics in the State of California, central California was underrepresented, even though MOUD implementation there may face a different set of challenges. The use of clinic-reported data, though prone to missingness and less objective than other data sources such as claims and uniform electronic health records, is a pragmatic and affordable method of data collection that is widely used [66]. To mitigate potential errors, our program staff systematically reviewed and validated the clinic-reported data to optimize data quality. Further, although clinics were instructed to complete the team-based implementation quality assessment (IMAT) as conservatively as possible, social desirability bias may have influenced implementation quality ratings.

Operationalizing engagement as attendance is only one way to conceptualize and measure engagement; we were limited by the data available. Given the multidimensional and complex nature of engagement, future studies should examine (i) other approaches to engaging clinics in the implementation strategies being offered and (ii) how best to engage clinics in research (i.e., in collecting outcomes of reach, adoption, and implementation quality) to further advance the field. We also believe that in many settings where research outcomes are self-reported (as opposed to passively collected), distinguishing between engagement in the implementation strategies (something an implementer does) and engagement in obtaining the research outcomes (something a researcher does) could lead to improved implementation science.

Conclusion

These findings suggest that providing an all-clinics-get-all-components package of implementation strategies may be both inefficient and ineffective. To meet the needs of all clinics, implementers need better guidance from implementation scientists on how to select and “prescribe” the initial strategy or strategies with greater precision. Similarly, implementers could benefit from guidance on how to monitor the success of strategies early on, and how to subsequently adapt or alter the strategies being offered depending on such measures of success or failure [67, 68]. The findings also begin to point to the importance of building packages of implementation strategies that successfully engage clinics [27, 69, 70], or that monitor clinics for early signs of disengagement and then course-correct if needed.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available because the data belong to the participating clinics, but they are available from the corresponding author on reasonable request.

Abbreviations

- CI: Confidence interval
- CONSORT: Consolidated Standards of Reporting Trials
- EE: Estimated effect
- EMF: Enhanced monitoring and feedback
- ES: Effect size
- FQHC: Federally Qualified Health Center
- IMAT: Integrating Medications for Addiction Treatment Index
- LME: Linear mixed effects
- MOUD: Medications for opioid use disorder
- OUD: Opioid use disorder

References

Centers for Disease Control and Prevention. Understanding the epidemic. 2020 [cited 2020 Oct 10]. Available from: https://www.cdc.gov/drugoverdose/epidemic/index.html

Bilirakis M. H.R.4365 - 106th Congress (1999-2000): Children’s Health Act of 2000. 2000 [cited 2020 Mar 14]. Available from: https://www.congress.gov/bill/106th-congress/house-bill/4365

Murthy VH. Ending the opioid epidemic — a call to action. N Engl J Med. 2016;375(25):2413–5.

Nielsen S, Larance B, Degenhardt L, Gowing L, Kehler C, Lintzeris N. Opioid agonist treatment for pharmaceutical opioid dependent people. Cochrane Database Syst Rev. 2016;2016(5). Available from: www.cochranelibrary.com.

Volkow ND, Frieden TR, Hyde PS, Cha SS. Medication-assisted therapies — tackling the opioid-overdose epidemic. N Engl J Med. 2014;370(22):2063–6.

Aletraris L, Bond Edmond M, Roman PM. Adoption of injectable naltrexone in U.S. substance use disorder treatment programs. J Stud Alcohol Drugs. 2015;76(1):143–51.

Timko C, Schultz NR, Cucciare MA, Vittorio L, Garrison-Diehn C. Retention in medication-assisted treatment for opiate dependence: a systematic review. J Addict Dis. 2016;35(1):22–35.

Turner L, Kruszewski SP, Alexander GC. Trends in the use of buprenorphine by office-based physicians in the United States, 2003-2013. Am J Addict. 2015;24(1):24–9.

Bipartisan Policy Center. Tracking federal funding to combat the opioid crisis. 2019. Available from: https://bipartisanpolicy.org/wp-content/uploads/2019/03/Tracking-Federal-Funding-to-Combat-the-Opioid-Crisis.pdf

Office of National Drug Control Policy. National Drug Control Strategy FY 2021 Budget and Performance Summary. 2020. Available from: https://trumpwhitehouse.archives.gov/wp-content/uploads/2020/06/2020-NDCS-FY-2021-Budget-and-Performance-Summary.pdf

Office of National Drug Control Policy. National Drug Control Strategy FY 2022 Funding Highlights. 2021. Available from: https://www.whitehouse.gov/wp-content/uploads/2021/05/National-Drug-Control-Budget-FY-2022-Funding-Highlights.pdf

The White House. Biden-Harris administration calls for historic levels of funding to prevent and treat addiction and overdose. The White House. 2021 [cited 2021 Aug 17]; Available from: https://www.whitehouse.gov/ondcp/briefing-room/2021/05/28/biden-harris-administration-calls-for-historic-levels-of-funding-to-prevent-and-treat-addiction-and-overdose/

Bonamici S. H.R.34 - 114th Congress (2015-2016): 21st Century Cures Act. 2016. Available from: https://www.congress.gov/bill/114th-congress/house-bill/34

Substance Abuse and Mental Health Services Administration. State targeted response to the opioid crisis grants; 2016. Available from: https://www.samhsa.gov/grants/grant-announcements/ti-17-014

Substance Abuse and Mental Health Services Administration. State Opioid Response Grants. 2018. Available from: https://www.samhsa.gov/grants/grant-announcements/ti-18-015

Institute of Medicine (US) Committee on the Changing Market, Managed Care, and the Future Viability of Safety Net Providers. In: Ein Lewin M, Altman S, editors. America’s health care safety net: intact but endangered. Washington (DC): National Academies Press (US); 2000. [cited 2022 Apr 29]. Available from: http://www.ncbi.nlm.nih.gov/books/NBK224523/.

Jones EB. Medication-assisted opioid treatment prescribers in federally qualified health centers: capacity lags in rural areas. J Rural Health. 2018;34(1):14–22.

Satterwhite S, Knight KR, Miaskowski C, Chang JS, Ceasar R, Zamora K, et al. Sources and impact of time pressure on opioid management in the safety-net. J Am Board Fam Med. 2019;32(3):375–82.

Edwards ST, Marino M, Balasubramanian BA, Solberg LI, Valenzuela S, Springer R, et al. Burnout among physicians, advanced practice clinicians and staff in smaller primary care practices. J Gen Intern Med. 2018;33(12):2138–46.

Caton L, Shen H, Assefa M, Fisher T, McGovern MP. Expanding access to medications for opioid use disorder in primary care: an examination of common implementation strategies. J Addict Res Ther. 2020;11:407.

Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas R, Mittman B, et al. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15(1):21.

McGovern M, Assefa M, Abraibesh A. Center for care innovations treating addiction in primary care: evaluation. Center Behavioral Health Services and Implementation Research, Stanford University; 2018.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7 [cited 2021 Feb 2]. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6350272/.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. 2011;38(1):4–23.

Dixon-Woods M, McNicol S, Martin G. Ten challenges in improving quality in healthcare: lessons from the Health Foundation’s programme evaluations and relevant literature. BMJ Qual Saf. 2012;21(10):876–84.

Siriwardena AN. Engaging clinicians in quality improvement initiatives: art or science? Qual Prim Care. 2009;17(5):303–5.

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. 2017;356:i6795.

Health Resources & Services Administration. Federally Qualified Health Centers. Official web site of the U.S. Health Resources & Services Administration. 2017 [cited 2021 Apr 4]. Available from: https://www.hrsa.gov/opa/eligibility-and-registration/health-centers/fqhc/index.html

Health Resources & Services Administration. Federally Qualified Health Center Look-Alike. Official web site of the U.S. Health Resources & Services Administration. 2017 [cited 2021 Apr 4]. Available from: https://www.hrsa.gov/opa/eligibility-and-registration/health-centers/fqhc-look-alikes/index.html

Hysong SJ, SoRelle R, Hughes AM. Prevalence of effective audit-and-feedback practices in primary care settings: a qualitative examination within veterans health administration. Hum Factors. 2022;64(1):99–108.

Gould NJ, Lorencatto F, Stanworth SJ, Michie S, Prior ME, Glidewell L, et al. Application of theory to enhance audit and feedback interventions to increase the uptake of evidence-based transfusion practice: an intervention development protocol. Implement Sci. 2014;9(1):92.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(6):CD000259. Available from: http://doi.wiley.com/10.1002/14651858.CD000259.pub3.

Hulscher MEJL, Schouten LMT, Grol RPTM, Buchan H. Determinants of success of quality improvement collaboratives: what does the literature show? BMJ Qual Saf. 2013;22(1):19–31.

Schouten LMT, Hulscher MEJL, van Everdingen JJE, Huijsman R, Grol RPTM. Evidence for the impact of quality improvement collaboratives: systematic review. BMJ. 2008;336(7659):1491–4.

Clarke JR. The use of collaboration to implement evidence-based safe practices. J Public Health Res. 2013;2(3):e26.

Nordstrom BR, Saunders EC, McLeman B, Meier A, Xie H, Lambert-Harris C, et al. Using a learning collaborative strategy with office-based practices to increase access and improve quality of care for patients with opioid use disorders. J Addict Med. 2016;10(2):117–23.

Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med. 2012;10(1):63–74.

Kirchner JAE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care–mental health. J Gen Intern Med. 2014;29(4):904–12.

Kirchner JE, Waltz TJ, Powell BJ, Smith JL, Proctor EK. Implementation strategies. In: Dissemination and implementation research in health. 2nd ed. New York: Oxford University Press; 2017. Available from: https://www.oxfordscholarship.com/10.1093/oso/9780190683214.001.0001/oso-9780190683214-chapter-15.

Kirchner JE, Ritchie MJ, Dollar KM, Gundlach P, Smith JL. Implementation facilitation training manual: using external and internal facilitation to improve care in the veterans health administration; 2013. Available from: http://www.queri.research.va.gov/tools/implementation/Facilitation-Manual.pdf

Ritchie MJ, Parker LE, Edlund CN, Kirchner JE. Using implementation facilitation to foster clinical practice quality and adherence to evidence in challenged settings: a qualitative study. BMC Health Serv Res. 2017;17(1):294.

Center for Care Innovations. ATSH Primary Care Program Page. Center for Care Innovations. 2021 [cited 2021 Jul 11]. Available from: https://www.careinnovations.org/atshprimarycare-teams/

Glasgow RE, Harden SM, Gaglio B, Rabin B, Smith ML, Porter GC, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health. 2019;7 [cited 2021 Jan 3]. Available from: https://www.frontiersin.org/articles/10.3389/fpubh.2019.00064/full.

McGovern MP, Hurley B, Caton L, Fisher T, Newman S, Copeland M. Integrating medications for addiction treatment in primary care – Opioid Use Disorder Version 1.3: an index of capability at the organizational/clinic level. 2019. Available from: https://med.stanford.edu/content/sm/cbhsir/di-tools-and-resources/_jcr_content/main/panel_builder/panel_0/accordion/accordion_content6/panel_builder/panel_1/download/file.res/IMAT-PC%20v1.3%20GENERIC%20030119.pdf

Williams AR, Nunes EV, Bisaga A, Levin FR, Olfson M. Development of a cascade of care for responding to the opioid epidemic. Am J Drug Alcohol Abuse. 2019;45(1):1–10.

Williams AR, Nunes EV, Bisaga A, Pincus HA, Johnson KA, Campbell AN, et al. Developing an opioid use disorder treatment cascade: a review of quality measures. J Subst Abus Treat. 2018;91:57–68.

Chokron Garneau H, Hurley B, Fisher T, Newman S, Copeland M, Caton L, et al. The integrating medications for addiction treatment (IMAT) index: a measure of capability at the organizational level. J Subst Abus Treat. 2021;126:108395.

Hedeker D, Gibbons R. Longitudinal data analysis. 1st ed: Wiley-Interscience; 2006. p. 360. [cited 2021 Jul 11]. Available from: https://www.amazon.com/Longitudinal-Data-Analysis-Donald-Hedeker/dp/0471420271

VanderWeele TJ. Principles of confounder selection. Eur J Epidemiol. 2019;34(3):211–9.

VanderWeele TJ, Shpitser I. On the definition of a confounder. Ann Stat. 2013;41(1):196–220.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale: Routledge; 1988. p. 400.

Azur MJ, Stuart EA, Frangakis C, Leaf PJ. Multiple imputation by chained equations: what is it and how does it work? Int J Methods Psychiatr Res. 2011;20(1):40–9.

van Buuren S. Flexible imputation of missing data. 1st ed. Boca Raton: Chapman and Hall/CRC; 2012. p. 342.

Rubin DB. Multiple imputation for nonresponse in surveys. New York: Wiley; 2004. p. 258. [cited 2021 Jul 29]. Available from: https://www.wiley.com/en-us/Multiple+Imputation+for+Nonresponse+in+Surveys-p-9780471655749

Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7(1):50.

Caton L, Yuan M, Louie D, Gallo C, Abram K, Palinkas L, et al. The prospects for sustaining evidence-based responses to the US opioid epidemic: state leadership perspectives. Subst Abuse Treat Prev Policy. 2020;15(1):84.

Becker WC, Krebs EE, Edmond SN, Lin LA, Sullivan MD, Weiss RD, et al. A research agenda for advancing strategies to improve opioid safety: findings from a VHA state of the art conference. J Gen Intern Med. 2020;35(Suppl 3):978–82.

Ducharme LJ, Wiley TRA, Mulford CF, Su ZI, Zur JB. Engaging the justice system to address the opioid crisis: the justice community opioid innovation network (JCOIN). J Subst Abus Treat. 2021;128 [cited 2022 Apr 29]. Available from: https://www.journalofsubstanceabusetreatment.com/article/S0740-5472(21)00033-7/fulltext.

Chandler RK, Villani J, Clarke T, McCance-Katz EF, Volkow ND. Addressing opioid overdose deaths: the vision for the HEALing communities study. Drug Alcohol Depend. 2020;217:108329.

Oxman TE, Schulberg HC, Greenberg RL, Dietrich AJ, Williams JW, Nutting PA, et al. A fidelity measure for integrated management of depression in primary care. Med Care. 2006;44(11):1030–7.

Woolf SH, Johnson RE. The break-even point: when medical advances are less important than improving the fidelity with which they are delivered. Ann Fam Med. 2005;3(6):545–52.

Barker PM, Reid A, Schall MW. A framework for scaling up health interventions: lessons from large-scale improvement initiatives in Africa. Implement Sci. 2016;11:12.

Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond “implementation strategies”: classifying the full range of strategies used in implementation science and practice. Implement Sci. 2017;12(1):125.

Saldana L, Chamberlain P, Wang W, Brown CH. Predicting program start-up using the stages of implementation measure. Adm Policy Ment Health Ment Health Serv Res. 2012;39(6):419–25.

Franklin M, Thorn J. Self-reported and routinely collected electronic healthcare resource-use data for trial-based economic evaluations: the current state of play in England and considerations for the future. BMC Med Res Methodol. 2019;19(1):8.

Kilbourne AM, Smith SN, Choi SY, Koschmann E, Liebrecht C, Rusch A, et al. Adaptive school-based implementation of CBT (ASIC): clustered-SMART for building an optimized adaptive implementation intervention to improve uptake of mental health interventions in schools. Implement Sci. 2018;13(1):119.

Quanbeck A, Almirall D, Jacobson N, Brown RT, Landeck JK, Madden L, et al. The balanced opioid initiative: protocol for a clustered, sequential, multiple-assignment randomized trial to construct an adaptive implementation strategy to improve guideline-concordant opioid prescribing in primary care. Implement Sci. 2020;15(1):26.

White M, Butterworth T, Wells JS. Healthcare quality improvement and ‘work engagement’; concluding results from a national, longitudinal, cross-sectional study of the ‘productive Ward-releasing time to care’ Programme. BMC Health Serv Res. 2017;17:510.

Sheard L, Marsh C, O’Hara J, Armitage G, Wright J, Lawton R. Exploring how ward staff engage with the implementation of a patient safety intervention: a UK-based qualitative process evaluation. BMJ Open. 2017;7(7):e014558.

van Buuren S, Groothuis-Oudshoorn K. Mice: multivariate imputation by chained equations in R. J Stat Softw. 2011;45(3):1–67.

Snijders TAB. Multilevel analysis: an introduction to basic and advanced multilevel modeling. 2nd ed: SAGE Publications Ltd; 2011. p. 368.

Acknowledgements

The authors would like to thank Dr. Rita Popat for her generous guidance on the methodology for this project and Ms. Mina Yuan for her diligence with data entry. The staff at the Center for Care Innovations contributed significantly to the implementation and data collection efforts. This work would not have been possible without the tremendous effort of the providers and staff who care for patients with opioid use disorder and who diligently reported data during the Addiction Treatment Starts Here program.

Funding

This project was funded by the California Department of Health Care Services (18-95529) via the Substance Abuse and Mental Health Services Administration (SAMHSA) State Opioid Response Grant and the Cedars-Sinai Community Benefit Giving Office. Funding for HC, MPM, HCG, and DA’s contributions was provided by the National Institute on Drug Abuse (R01DA052975 for HC, MPM, HCG; P50DA054039 and R01DA039901 for DA). The funders were not involved in the design of the study or in the collection, analysis, and interpretation of data.

Author information

Contributions

HC, MPM, HCG, and DA conceived of the idea and developed the aims. HC and DA developed and refined the methods. HC cleaned and analyzed the data under the guidance of DA. MPM, BH, TF, and MC designed the implementation program and coordinated the data collection efforts. All authors contributed meaningfully to the drafting, editing, and approval of the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable because only aggregated clinic-level data were collected.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Standards for Reporting Implementation Studies: the StaRI checklist for completion [28].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Cheng, H., McGovern, M.P., Garneau, H.C. et al. Expanding access to medications for opioid use disorder in primary care clinics: an evaluation of common implementation strategies and outcomes. Implement Sci Commun 3, 72 (2022). https://doi.org/10.1186/s43058-022-00306-1

DOI: https://doi.org/10.1186/s43058-022-00306-1