Abstract

Background

Despite an increasing number of training opportunities in implementation science becoming available, the demand for training amongst researchers and practitioners is unmet. To address this training shortfall, we developed the King’s College London ‘Implementation Science Masterclass’ (ISM), an innovative 2-day programme (and currently the largest of its kind in Europe), developed and delivered by an international faculty of implementation experts.

Methods

This paper describes the ISM and provides delegates’ quantitative and qualitative evaluations (gathered through a survey at the end of the ISM) and faculty reflections over the period it has been running (2014–2019).

Results

Across the 6-year evaluation, a total of 501 delegates have attended the ISM, with numbers increasing yearly from 40 (in 2014) to 147 (in 2019). Delegates represent a diversity of backgrounds and 29 countries from across the world. The overall response rate for the delegate survey was 64.5% (323/501). Annually, the ISM has been rated ‘highly’ in terms of delegates’ overall impression (92%), clear and relevant learning objectives (90% and 94%, respectively), the course duration (85%), pace (86%) and academic level (87%), and the support provided on the day (92%). Seventy-one percent of delegates reported the ISM would have an impact on how they approached their future work. Qualitative feedback revealed that key strengths include the opportunities to meet with an international and diverse pool of experts and individuals working in the field, the interactive nature of the workshops and training sessions, and the breadth of topics and contexts covered.

Conclusions

Yearly, the UK ISM has grown, both in size and in its international reach. Rated consistently favourably by delegates, the ISM helps to tackle current training demands from all those interested in learning and building their skills in implementation science. Evaluation of the ISM will continue to be an annual iterative process, reflective of changes in the evidence base and delegates’ changing needs as the field evolves.

Introduction

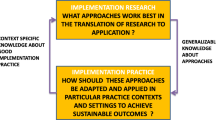

Implementation science is a rapidly evolving discipline with a significant role in bridging the widely cited ‘research to practice’ gap [1,2,3]. On average, it takes an estimated 17 years for research findings to be translated into their intended clinical settings [4,5,6]. Many studies never go beyond publication [7, 8], and of those that do, widespread and systematic implementation of findings is seldom achieved [9, 10]. This consistent failure to efficiently implement evidence into practice not only represents a missed opportunity to improve health outcomes and save lives but also results in significant resource burden for the health and social care system as a whole [10,11,12,13]. Uncovering ways to close this quality chasm is fundamental, if health and social care outcomes are to be improved [9, 14, 15].

The field of implementation science has experienced significant growth over the last two decades [1, 16, 17]. As interest in the field has increased [1], so has the appetite of researchers and implementers (those tasked with implementing healthcare evidence) to learn about implementation science methodologies [18,19,20,21,22]. To keep abreast of these demands, opportunities for building capacity in implementation science are essential [19, 20, 23,24,25]. The field has responded to this increased demand, and a variety of teaching initiatives and training programmes have emerged [3, 17, 21, 26,27,28]. Typically, these take the form of webinars or short courses and ‘taster’ sessions over 1–5 days [29,30,31,32,33] but may also form part of masters, doctoral, or post-doctoral programmes [21, 34,35,36,37]. The USA and Canada have paved the way in many of these efforts with the establishment of training institutes [25,26,27,28, 38], academic courses and certificate programmes [10, 35, 39,40,41,42,43,44], and webinar series [45, 46]. Training opportunities in the UK [47, 48], other parts of Europe [21, 37], Asia [49], Australia [29, 50], and Africa [51] have also been reported, along with the recent development of a Massive Open Online Course (MOOC) in Implementation Research by the Special Programme for Research and Training in Tropical Diseases (TDR) [52].

In 2015, Implementation Science highlighted a renewed interest in manuscripts describing and appraising education and training offerings [23] (such as those described), so that the educational effectiveness of the training courses and events on offer can be formally appraised. Further to this, in 2017, a review of dissemination and implementation capacity-building initiatives in the USA raised the importance of formal evaluation to ensure users’ needs are being met and to inform the planning of future initiatives [18].

Research in the field on the impact of training on knowledge acquisition, understanding, and interest has shown considerable promise. Taken collectively, findings demonstrate the value in preparing new researchers to conduct implementation research, upskilling those already working in the field, increasing confidence in the application of acquired skills, and forging working relationships between multidisciplinary audiences [2, 17, 26,27,28, 33, 51, 53]. While findings are positive, much of the evidence to date is USA- and Canada-centric [3, 26,27,28, 31,32,33,34, 38, 53, 54], with fewer evaluations of training endeavours in other parts of the world [21, 29, 37, 51] and a noticeable gap in evidence within the UK context.

This paper aims to build on the existing literature and address this evidence gap. We report an innovative UK training initiative, which to the best of our knowledge is the largest of its kind in Europe: the ‘Implementation Science Masterclass’ (ISM), led by the Centre for Implementation Science, King’s College London. We describe the course development and delivery and report on delegates’ evaluations and faculty reflections over its first 6 years (2014–2019).

Methods

Institutional context

The ISM is partly funded by the National Institute for Health Research’s (NIHR) Applied Research Collaboration (ARC) South London, 2019–2024 (formerly the ‘Collaboration for Leadership in Applied Health Research and Care’ (CLAHRC) South London, 2013–2018). This is one of a number of applied health research collaborations across England, centrally funded by the NIHR to produce highly implementable and high-quality applied health research that addresses urgent health and healthcare needs of the English population, and also to develop capacity in applied health research and healthcare implementation (including implementation research and practice). The NIHR ARC South London comprises a diverse and multidisciplinary team working collaboratively between academic institutions and NHS organisations across South London.

Within this research infrastructure, the Centre for Implementation Science was established in 2014 and became operational in 2015. Based within the Health Service and Population Research Department of King’s College London, the Centre leads implementation research and education activities and supports the research carried out by the ARC South London with the primary goal of helping to better implement research-driven best practices. The Centre is currently the largest implementation research infrastructure of its kind in the UK and Europe, hosting over 50 staff members—including faculty, scientists, and managerial and administrative personnel. The Centre is the organisation that hosts and delivers the ISM.

Rationale and aims of the ISM

The ISM was first conceptualised in 2013, as part of a drive to augment the UK capacity and capability in implementation research and practice. At the time of inception (2013), there was a significant shortage of UK training opportunities for those interested in learning how to better implement research findings into clinical practice [55]. Training opportunities offered outside the UK (past and present) have not been able to address the training need: many are/have been restricted to small numbers (e.g. capped at 12–20 individuals [26, 27, 38, 56]), involve a competitive application process [26, 27, 36, 38, 56], or are restricted to specific professions [23, 36], contexts [27, 29, 38], specific stages of career [27, 28, 35, 38, 49, 54], or those with established opportunities to conduct an implementation research project in their workplace [10, 36].

The ISM’s goal was to overcome these barriers and address the implementation research capacity shortage in the UK—and potentially also Europe. The primary aim of the ISM was to provide a training mechanism for all individuals interested in the application of implementation science methodologies and techniques, irrespective of their professional background, where they fall on the career trajectory, or their expertise. Secondary to this, we wanted to help encourage collaborative work through developing a network of implementation scientists from diverse disciplines, professions, work settings, and socio-demographics.

ISM annual development and delivery cycle

The ISM is delivered annually, in July, in London, over 2 full days. All aspects of the ISM are delivered face-to-face, except where unanticipated issues prevent a speaker from attending in person; in these circumstances, video conferencing is used. The development and delivery of the ISM follows an annual multiphase, iterative educational cycle within the UK academic year:

Development stage (September to January): the ISM core content and faculty composition for the upcoming July delivery are reviewed and agreed in light of the preceding year’s evaluation and faculty reflections. In the ISM’s first development iteration (2013–2014), this stage also included a needs assessment of healthcare professionals and a curriculum mapping exercise of other relevant training initiatives in order to establish research and education priorities. This activity is now undertaken periodically to ensure the ISM remains relevant and addresses current needs in the UK (and further afield).

Delivery stage (January to July): the ISM is fully planned—including detailed description of the learning objectives of the core elements of the course; description of the course specialist elements; specification of interactive workshops; faculty formulation; finalisation of length, structure, and pedagogical approach(es); and administrative and communications arrangements for the course (incl. course location, communications materials, and handouts). Early in the calendar year, the course registration also becomes available for delegates.

Evaluation phase (July to August): this relates to the collection and analysis of (1) delegates’ evaluations of the ISM (July), along with (2) faculty reflections (July to August), which are then used to inform the content and structure of the course for the following year—hence closing an iterative annual feedback and learning loop.

Delegates’ evaluations: a structured and standardised evaluation form is used to assess delegates’ overall impression of the ISM as well as attitudes towards the ISM’s relevance and clarity of learning objectives, the appropriateness of its pace, duration and academic level, and the level of support provided by the faculty and organising committee before and during the 2-day ISM. Evaluation forms are included in the information pack delegates receive on the first day of the ISM. Delegates are encouraged to complete and hand in the evaluation survey at the end of the ISM; for those unable to do this, the survey is circulated via email the day after the ISM. Responses are provided on a 5-point ordinal scale (e.g. ‘What was your overall impression of the course?’: ‘very poor’, ‘poor’, ‘fair’, ‘good’, ‘very good’) or a 3-point ordinal scale (e.g. ‘What did you think of the course duration?’: ‘too short’, ‘about right’, ‘too long’). For the purpose of the evaluation, the positive and negative anchors derived from the 5-point scales were amalgamated (e.g. ‘very good’ and ‘good’ were combined to make ‘good’).

Delegates are also given the opportunity to provide free-text feedback on perceived key strengths of the ISM and what they felt could be done differently. Data are aggregated and fully anonymized for yearly ISM evaluation reports for the Centre for Implementation Science. Individual data are then destroyed in accordance with the General Data Protection Regulation (GDPR), a set of data protection rules implemented in UK law as the Data Protection Act 2018 (https://www.legislation.gov.uk/ukpga/2018/12/contents/enacted).
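As an illustration only, the amalgamation of the 5-point anchors described above could be sketched as follows. The mapping, the function names, and the treatment of ‘fair’ and of unanswered items are assumptions made for this sketch; the source gives only the ‘very good’ plus ‘good’ example and does not describe the analysis code.

```python
from collections import Counter

# Hypothetical mapping: the paper states only that positive and negative
# anchors were amalgamated (e.g. 'very good' + 'good' -> 'good').
# Keeping 'fair' as its own category is an assumption.
COLLAPSE = {
    "very poor": "poor",
    "poor": "poor",
    "fair": "fair",
    "good": "good",
    "very good": "good",
}


def collapse_responses(responses):
    """Count collapsed categories, skipping unanswered items (None)."""
    return Counter(COLLAPSE[r] for r in responses if r is not None)


def percent_positive(responses):
    """Percentage of answered items falling in the collapsed 'good' category."""
    counts = collapse_responses(responses)
    answered = sum(counts.values())
    if answered == 0:
        return None  # no one answered this item
    return 100 * counts["good"] / answered


# Made-up responses, for illustration only (not study data).
example = ["very good", "good", "fair", None, "poor", "very good"]
print(collapse_responses(example))
print(percent_positive(example))
```

Excluding unanswered items from the denominator is one way to handle item-level non-response; it would be consistent with the differing denominators reported for each survey item, although the source does not state how missing answers were treated.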

Faculty reflections: the ISM faculty are annually invited to take part in two debriefings: one ‘hot debrief’ and one ‘cold debrief’. The hot debrief takes place upon completion of the ISM—in a faculty group session or in smaller groups, facilitated by one of the two ISM co-directors. The aim is to capture initial thoughts and the experience of the course immediately upon its completion that will otherwise be forgotten or be filtered through subsequent reflection. The cold debrief is always virtual. The faculty are invited to submit their reflections on the course in August and September through a faculty group email, facilitated by one of the two co-directors. All emails are collected and collated, and then submitted to the ISM organising committee at their first meeting for the subsequent year’s ISM (typically in September to October annually), alongside the detailed evaluation report, which includes a summary of the delegates’ ISM evaluations. Annual ISM improvements are driven by these faculty reflections and the delegates’ summary evaluation report.

Faculty and organising committee

The faculty represent a range of countries and continents, professional backgrounds (e.g. medics, researchers, service users, and patient representatives), disciplines (e.g. psychology, psychiatry, public health, medicine, and epidemiology), work settings (e.g. academic or healthcare organisations and services), and health services (e.g. cancer, surgery, diabetes). Taken collectively, the faculty hold expertise spanning all areas of implementation science.

The organising committee is chaired by 2 academic co-directors and includes experienced implementation scientists, communication officers, education programme managers, and experienced administrators. The committee oversees the scientific direction of the ISM, procurement and allocation of resources, substantive iterations to content (year on year), and dissemination of relevant information, materials, and guidance to the wider faculty and delegates (see Table 1 for a full list of the faculty over the 6 years of the ISM).

Course development, curriculum, and structure

Five guiding principles underpin the ISM curriculum: (1) to have relevance to both researchers and implementers; (2) to focus on different health and care contexts, locally, nationally, and internationally, to illustrate that implementation issues are endemic in any care setting; (3) to take a ‘systems’ approach to highlight that implementation is a multi-level phenomenon (e.g. involving individuals, teams, organisations); (4) to comprise a mixture of teaching approaches and interactive sessions to meet the educational needs of new and intermediary learners as well as those with more expertise; and (5) to enable formal and informal interactions with faculty and delegates to forge experiential and transdisciplinary understanding of the methodological issues and conceptual challenges within the field.

Based on these principles, the ISM curriculum follows a 4-block structure, with each block delivered within a half-day session. The blocks cover the following broad thematic areas:

- Block 1, introduction to implementation science: delivered as the first half-day of the ISM; this block introduces the delegates to the basic concepts and definitions of the science, its historic provenance, and how it differs from/relates to other areas of health research (e.g. clinical effectiveness research).

- Block 2, implementation theories and frameworks: this block exposes delegates to theoretical frameworks developed and used by implementation scientists, including exemplar applications within research studies.

- Block 3, implementation research and evaluation methods and designs: this block covers research design elements applicable to implementation research, including an introduction to hybrid effectiveness-implementation designs, other trial and observational designs of relevance to implementation research questions, the Medical Research Council Framework for evaluation of complex health interventions, process evaluation approaches, and the use of logic models/theory-of-change methodology in implementation studies.

- Block 4, specialist topics: the final half-day of the ISM varies on an annual basis, thus offering the opportunity to cover prominent or topical implementation research issues. To date, this block has featured (amongst other topics) sessions on how implementation science relates to improvement science and knowledge mobilisation, the advantages and disadvantages of different implementation research designs, and the usefulness of implementation research for practical policy-making applications. The format of this block is accordingly flexible.

To deliver the four ‘blocks’, the ISM comprises a mixture of plenary lectures, workshops and breakout sessions, and debate panels. The plenaries and lectures describe and discuss the conceptual foundations and methodologies of implementation science. The workshops and breakout sessions focus on the application of specific tools and techniques. The debate panels address current controversies or ‘hot topics’ in implementation science as well as the relationship between implementation science and the related field of applied health research.

The content of the ISM was initially developed by drawing on the core faculty’s extensive research and education expertise. In addition, we reviewed published resources including a list of established core competencies in knowledge translation [25], taken from a 2011 Canadian training initiative, as well as a 2012 framework for training healthcare professionals in implementation and dissemination science [41]. Newer evidence in the field (i.e. post-inception of the ISM in 2013) is considered yearly and used to help inform the following year’s curriculum as needed: examples include published evaluations of implementation science training initiatives [21, 27, 28, 31, 32, 34, 37, 38, 54], the National Implementation Research Network’s recent 2018 working draft of core competencies for an implementation practitioner [57], and a scoping review of core knowledge translation competencies [58]. The ISM involves no summative assessment, but understanding is assessed formatively through the interactive group-based sessions.

Registration for the ISM has been through a simple online process with places offered on a first-come-first-served basis.

Further information on any aspect of the ISM is available from the lead author (RD) upon request.

Results

Following the initial course in July 2014, the ISM has been held annually in London, UK. The course is currently in its sixth year of running, with the 2020 ISM fully scheduled.

Each year, the number allowed to register has increased, to account for growing demand: starting from 40 delegates in 2014, with the most recent year (2019) capped at 150. A standardised fee structure is applied, in tandem with the host academic institution’s (KCL) short course fee structure to ensure transparency and equity. Discounted fees are offered to specific groups of individuals (e.g. low- and middle-income country (LMIC) nationals, service users, those working in non-governmental organisations (NGOs) and/or within the ARC South London footprint).

Information on delegates

To date, 501 delegates from over 29 countries have attended the masterclass. Most delegates (75%) have been UK-based (380/501) with 51% (258/501) residing in London, UK. The number of overseas delegates has increased year-on-year, from 13% (N = 5/40) in year 1 (2014) to 33% (N = 48/147) in year 6 (2019) (see Table 2).

The course attracts delegates from a range of cultural, ethnic, and professional backgrounds (e.g. nurses, doctors, healthcare managers, psychologists, economists, policy makers, patient representatives, and epidemiologists), and different stages of their career (e.g. doctoral students, post-doctoral fellows, research staff, junior, and senior faculty), representing multiple academic departments, including social work, public health, medicine, pharmacy, and psychology, as well as those from non-academic health and social care organisations.

Delegates’ evaluations of the ISM

The overall response rate from delegates across the six years was 64.5% (323/501). Table 3 displays the breakdown of data in relation to the evaluation survey. An overwhelming majority of delegates (92%, 294/318 responses) reported that their overall impression of the course was ‘good’ or ‘very good’. The majority also stated that the learning objectives were relevant (94%, 287/306), clear (90%, 277/307), and reached (84%, 256/306). Seventy-four percent of delegates (233/313) rated the pre-course reading material favourably, with most agreeing that the pace of the course (86%, 275/320), the course duration (85%, 271/319), and the academic level (87%, 275/316) were ‘about right’. Seventy-one percent (219/307) felt that the ISM would have an impact (‘definitely’ or ‘partly’) on how they approach their practice and/or future research, with the majority also rating both the level of pre-course support for delegates and support during the day as ‘high’ (89%, 276/310, and 92%, 281/304, respectively).
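The percentages above use a different denominator for each item, because not every respondent answered every question. A minimal sketch of that arithmetic, using counts taken from the paragraph above, is shown here; the helper name `pct` and the dictionary labels are illustrative and not part of the original analysis.

```python
def pct(numerator, denominator):
    """Percentage to one decimal place."""
    return round(100 * numerator / denominator, 1)


# (count, number answering that item), as reported in the text.
reported = {
    "survey response rate": (323, 501),
    "overall impression good or very good": (294, 318),
    "learning objectives relevant": (287, 306),
    "learning objectives clear": (277, 307),
    "learning objectives reached": (256, 306),
    "impact on future practice or research": (219, 307),
}

for label, (count, answered) in reported.items():
    print(f"{label}: {count}/{answered} = {pct(count, answered)}%")
```

Rounded to whole numbers, these values agree with the percentages quoted in the text.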

Free-text feedback on the ISM was provided by more than 65% of delegates. Comments on the ‘strengths’ of the course across all 6 years focused on the following:

- Breadth and variety of topics: e.g. ‘nice breadth of topics’, ‘comprehensive overview of the field’.

- Quality of the speakers: e.g. ‘the speakers were all excellent. Very engaged and helpful’, ‘clearly incredibly knowledgeable and approachable’.

- Benefit of interactive sessions: e.g. ‘it was useful to apply the learnings from the lectures in the workshops’, ‘very valuable having the small group sessions’, ‘the discussions gave me the chance to really understand and apply the content being presented’.

- Networking opportunities: e.g. ‘interacting with experts in the field, meeting colleagues and networking informally’, ‘I loved having the opportunity to interact with researchers in the field and editors of journals’.

- Diversity of faculty and audience: e.g. ‘speakers from a variety of fields’, ‘getting the perspectives of researchers internationally’, ‘global perspective of implementation science from a diversity of fields’.

- Consolidation of learning: e.g. ‘enough repetition and continuity to embed ideas’, ‘reinforced concepts I was familiar with and stretched my thinking about challenges and questions to be answered’, ‘validated what I understood already’.

- Structure: e.g. ‘mixture of presentations and group-based sessions’, ‘combination of lectures followed by workshops’.

Comments on how the ISM could be improved in the future centred on the following:

- Greater focus and separation on LMIC and other contexts: e.g. ‘I don’t think I was the right person for the course because I work in LMIC and did not feel the content was relevant to these contexts’, ‘the focus on healthcare – I would have liked to have seen more relevance to social care’, ‘it would have been useful to separate out local and global streams’.

- Opportunities to discuss own work: e.g. ‘more time for small group work to discuss individual projects’, ‘some project clinic time for reflection on specific projects’, ‘more opportunities to talk about my own work’, ‘clinic to discuss own projects with experts’.

- Greater interactivity: e.g. ‘would have liked it to be more interactive’, ‘even more group-based sessions’, ‘greater opportunities to ask questions and engage with tasks’.

- Support required in the application of knowledge: e.g. ‘I have learned a lot but I am not able to implement the knowledge’, ‘I know what implementation science is now but am still unsure how to apply it to my field’.

Faculty reflections

The faculty reflections have focused on several areas over the years—summarised as follows:

Educational delivery methods: balance of didactic lectures and workshops; this is a recurring theme. The ISM has always included a mixture of didactic and interactive components. The balance of them as well as the nature of the activities carried out during the interactive workshops has been regularly reflected upon. The faculty have remained keen on the mixture of activities to continue. The interactive workshops have changed in nature—from sessions where the contents of the immediately preceding lecture were reflected upon and discussed in the context of specific delegates’ projects, to delegates submitting summary projects which were themed and reviewed at the workshops, to, more recently, the workshops focusing on specialist topic areas that require a hands-on interactive approach—including how to publish implementation studies, how to apply the concept of a learning healthcare system, and how to carry out stakeholder engagement activity and other specialist topics.

ISM educational level: introductory vs advanced curriculum streams; in its early years and following the original needs assessment, the ISM was designed as a plenary course—i.e. all delegates were always kept together in plenary sessions, and attended similarly themed workshops. However, over the years, this approach shifted—such that as of 2019 the ISM includes two streams, one aimed at introductory learners and the other aimed at advanced learners. The lectures and workshops are themed accordingly for these two streams.

Selection and prioritisation of topic areas within the curriculum: the faculty have been very keen on keeping the curriculum relevant but also refreshing it annually. This has meant that the curriculum has gravitated towards coverage of key methodological aspects of implementation science (including hybrid designs, implementation theories and frameworks, application of theory of change/logic model methodology, and complex intervention evaluation design). At the same time, the need to refresh the ISM and keep it current has meant that a number of specialist areas have also been covered over the years—including the interface of implementation with improvement science and knowledge mobilisation, funding implementation research, and implementation research in the context of global health.

Provision of project (incl. papers, grants, service implementation projects) development support and mentoring: the faculty have often reflected that some of the more advanced learners who are at a stage of their careers at which they are submitting funding bids or designing implementation studies would benefit from a mentoring scheme to be delivered through the ISM. A pilot operationalization of this reflection will be offered as part of the 2020 ISM.

Examples of iterations to the course

Designing and delivering the ISM each year is a continual process. Key changes we have made centre on the registration process, the setting, the content, and the structure. For example, after the 2015 ISM, we streamlined the registration process so that delegates no longer had to submit an abstract (which detailed an outline of an implementation project for discussion and development) because this acted as a deterrent to some, especially if they were new to the field. After the 2014 ISM, we changed the venue and room layout after many individuals commented on how the workshops and group work felt fragmented and broken up by walking to different venues. In 2016, after delegates stated that they were ‘hoping for more on evaluations’, we incorporated sessions on evaluating complex interventions in the curriculum for the following years. We have, over the years, also reduced the number of didactic classes per day and increased (where possible) formal and informal opportunities to network and discuss research projects with faculty and peers. In 2019, we also introduced an advanced implementation science stream (mentioned in the faculty reflections above) based on requests from a growing number of delegates and the need to cater for their differing levels of expertise.

Wider impact and evolution of additional training offerings

We have developed several other training initiatives that have stemmed either directly or partly from feedback on the ISM. Key outputs include the following:

- Project advice clinics: we offer bespoke advice clinics, introduced in 2017 as a direct result of repeated and growing numbers of requests from ISM delegates for feedback on their own implementation science projects. Anyone can apply, and clinics run throughout the year, providing real-time feedback to individuals as and when they need it. To date, we have conducted 39 clinics (2017 = 15, 2018 = 13, 2019 = 11); these figures reflect the capacity we have had to deliver the clinics rather than the demand for them. Feedback collected from attendees has been extremely positive in terms of the usefulness of the clinics and level of support provided.

- UK Implementation Science Annual Research Conference: as the ISM has grown, it has become harder to accommodate delegates’ desires to showcase and discuss their own research in the group-based sessions. In direct response to this expressed need, we developed the UK Implementation Science Annual Research Conference, which is the largest conference of its kind in the UK (and will be in its 3rd year in 2020). In 2018, 116 delegates attended the conference from 16 countries; this number increased to 148 in 2019, with delegates from 17 countries, including (but not limited to) Austria, Belgium, South Africa, Spain, New Zealand, USA, Canada, Sweden, Nigeria, Taiwan, and Hong Kong.

- Additional offerings: we have developed a range of lectures and half or full day training offerings embedded within formal postgraduate courses at King’s College London (e.g. the Masters in Public Health and MSc Global Mental Health). These offerings were driven, in part, by feedback from ISM delegates regarding the lack of training opportunities in implementation science. We have also designed and delivered a range of bespoke courses for health and social care organisations. Typically (but not always), these requests originate from individuals who attend the ISM and wish to offer similar training to their host organisation; we then deliver training tailored to their specific needs and context.

Discussion

This paper describes the development and evaluation of the UK ISM, led annually by the Centre for Implementation Science, King’s College London in London, UK. Over the 6-year period, the ISM has grown considerably, both in size and in terms of its international reach with delegates attending from all over the world. Across the evaluation, consistently favourable results were reported in terms of knowledge gained, relevance of content, and potential impact on future work. Noteworthy strengths included the breadth of the curriculum and opportunities to network with individuals from a diversity of backgrounds. Several areas of improvement were identified, including allowing more time for group discussions and placing greater emphasis on implementation science in low- and middle-income countries (LMICs) and social care contexts.

The ISM was developed to address the significant lack of training opportunities in implementation science in the UK. At its inception (and currently still), it is the largest initiative of its kind in the UK that provides training for all individuals irrespective of their professional background, qualifications, and expertise or where they fall on the career trajectory. The substantial breadth of topics covered together with the cross-disciplinary, international composition of faculty and delegates provides a rich and varied training environment as well as helping to foster collaborative opportunities.

Our findings, consistent with analogous research [26,27,28, 33, 37, 38, 54], demonstrate the need for, and value of, training initiatives in implementation science. A key strength of our research is the longitudinal nature of the evaluative data, collected at the end of each ISM, providing ‘real-time’ feedback over a 6-year period. To the best of our knowledge, this is the only research that describes the development and evaluation of a training initiative delivered in the UK, focused solely on implementation science. Papers like ours are essential in order to gain a field-wide perspective on the nature and range of initiatives available so that training gaps can be identified and addressed in future capacity-building endeavours [2, 18,19,20, 59, 60]. A limitation of our research is that only about two thirds of delegates (64.5%) completed our evaluation survey. However, while lower than we would have liked, this response rate was consistently achieved across the whole evaluation period, enabling comparability of findings across the years.

Developing and delivering the ISM has not been without its challenges. We are still grappling with some of these issues but feel it is important to reflect on our learnings to date. Many obstacles we have encountered are interrelated; while not insurmountable, they certainly compound the complexities of building capacity in the field. We reflect on these here so they can be borne in mind by educators who may be looking to establish similar training initiatives.

A strong underlying aim of our ISM is its transdisciplinary and cross-professional approach. While this component is critical to the success of the course, such diversity can create considerable differences in perspectives and training expectations amongst delegates. While this is neither avoidable nor unwanted (given the importance of addressing complex healthcare problems from a variety of angles), it can also be obstructive to meeting individual needs. Given our wide target audience, we could not tailor our curriculum to specific disciplines or contexts. At times, this made it hard for some delegates (particularly those with less experience) to assimilate the taught concepts and methodologies in a way that made sense to them and was applicable to their own practice setting.

Equally, an intentional feature of our ISM was to target both junior and established investigators as well as those newer to the field, as many of the current training opportunities are aimed at those with more experience [26,27,28, 38]. This resulted in some delegates expressing a desire for sessions to be split based on levels of expertise, a view that has become more prevalent in recent ISMs as the course has grown. In 2019, we made efforts to address this through the inclusion of an ‘advanced stream’. The need to account for differential competencies for the beginner and advanced learner in implementation science has also been raised more widely in the literature [33, 61, 62], but we found it difficult to decide the level of content and specific points of focus for each stream. We also did not find any similar attempts reported in the published literature that we could use as a benchmark.

An additional aspect of our ISM was its dual focus on both implementers and researchers, which is important for two key reasons. First, training efforts in implementation science are typically aimed at researchers, not implementers [32]. Second, bringing together researchers and implementers provides an open forum to raise key obstacles to implementing evidence into practice and generates research and practice discussions on how these can be overcome [19, 51]. When the ISM was initially developed in 2013/2014, core competencies for the implementation researcher and practitioner did not exist. This resulted in considerable debate amongst faculty over what topics we should include, and how. More recently, important steps have been taken to establish curricular expectations [25, 41, 57, 59, 63] and a working draft of competencies for the implementation specialist has been produced [57]; however, a consistent curriculum, focused on inter-disciplinary competencies, is yet to emerge [26, 27, 41, 64].

A final, but equally important, challenge we have encountered is keeping up with training demands, reflected by the increasing number of delegates wanting to register for our ISM, including those from overseas. A key advantage of our ISM is that it provides efficiency in scale, attracting a range of international faculty and delegates to a single event. In the early years, when the ISM was smaller, delegates were able to benefit greatly from the expertise of the faculty by signing up for one-on-one time to gain feedback on their individual projects. We were also able to better align individuals in the group sessions and workshops in terms of interests and experience, providing greater opportunities to discuss techniques and methods that could be applied to their specific needs. Now that the ISM is substantially bigger, it has become harder to tailor it in this way, and in the interest of fairness, we no longer hold one-on-one sessions with faculty because we cannot do this for all attendees. This presents us with somewhat of a quandary. While we do not want to hinder the growth of the ISM, we do not have the capacity to keep up with this growth if it continues at its current pace. This issue resonates with wider literature that has shown there is a shortage of spaces on implementation science-focused training opportunities, with demand notably exceeding availability [19, 26, 27, 38, 62]. Finding ways to build capacity in the field to reach out to a wider critical mass is essential if we are to cope with this growing demand. The online avenue holds promise, with several organisations paving the way and releasing web-based courses in dissemination and implementation science in recent years [52, 65, 66].

Finally, it is also important to note that as part of this evaluation, we did not assess whether training resulted in improved implementation of evidence-based interventions. Recent evidence has shown that training initiatives in implementation and dissemination science can lead to sustained improvements in applying evidence into practice [54], and also result in peer-reviewed publications, grant applications, and subsequent funding [26, 27, 38, 67] with scholarly productivity increasing the longer the duration of training [67]. While it was not the intention of our research to examine this, it nonetheless remains an important area of exploration to help highlight and strengthen the value and impact of training in the field. We are mindful of this and are exploring ways to assess such benefits in our future capacity-building endeavours.

Conclusions

As evident in this article, interest in the UK ISM is growing year on year and on an international level. The development of the ISM curriculum will continue to be an annual iterative process, reflective of the evidence base as it evolves and the directly expressed needs of the delegates that attend the course. As an emerging field of interest, implementation science measures and methods are still developing [68, 69], but as new research unfolds, we will move more towards a clearer and more established consensus on teaching priorities and approaches. There is no easy formula to address some of the challenges we have faced when developing and delivering the ISM, but our consistently positive findings across a 6-year evaluation period indicate that we are at least some of the way to getting the ingredients right.

Availability of data and materials

The datasets are available from the authors on reasonable request.

Abbreviations

- ISM: Implementation Science Masterclass
- LMICs: Low- and middle-income countries
- NIHR: National Institute for Health Research
- ARC: Applied Research Collaboration
- CLAHRC: Collaboration for Leadership in Applied Health Research and Care
- KCL: King’s College London

References

Norton W, Lungeanu A, Chambers DA, Contractor N. Mapping the growing discipline of dissemination and implementation science in health. Scientometrics. 2017;112:1367–90.

Chambers DA, Pintello D, Juliano-Bult J. Capacity-building and training opportunities for implementation science in mental health. Psychiatry Res. 2020;283:112511.

Norton W. Advancing the science and practice of dissemination and implementation in health: a novel course for public health students and academic researchers. Public Health Rep. 2014;129(6):536–42.

Balas E, Boren S. Managing clinical knowledge for health care improvement. In: van Bemmel JH, McCray AT, editors. Yearbook of medical informatics. Stuttgart: Schattauer Verlagsgesellschaft mbH; 2000. p. 65–70.

Grant J, Green L, Mason B. Basic research and health: a reassessment of the scientific basis for the support of biomedical science. Res Eval. 2003;12:217–24.

Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. J Roy Soc Med. 2011;104:510–20.

Wensing M. Implementation science in healthcare: introduction and perspective. Z Evid Fortbild Qual Gesundhwes. 2015;109(2):97–102.

Rapport F, Clay-Williams R, Churruca K, Shih P, Hogden A, Braithwaite J. The struggle of translating science into action: foundational concepts of implementation science. J Eval Clin Pract. 2018;24(1):117–26.

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3:32.

Burton DL, Lubotsky Levin B, Massey T, Baldwin J, Williamson H. Innovative graduate research education for advancement of implementation science in adolescent behavioral health. J Behav Health Serv Res. 2016;43:172–86.

Grol R. Successes and failures in the implementation of evidence-based guidelines for clinical practice. Med Care. 2001;39:46–54.

McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348:2635–45.

Seddon ME, Marshall MN, Campbell SM, Roland MO. Systematic review of studies of quality of clinical care in general practice in the UK, Australia and New Zealand. Qual Health Care. 2001;10:152–8.

Mallonee S, Fowler C, Istre GR. Bridging the gap between research and practice: a continuing challenge. Inj Prev. 2006;12(6):357–9.

Ginossar T, Heckman KJ, Cragun D, et al. Bridging the chasm: challenges, opportunities, and resources for integrating a dissemination and implementation science curriculum into medical education. J Med Ed Curr Dev. 2018;5:1–11.

Sales AE, Wilson PM, Wensing M, et al. Implementation science and implementation communications: our aims, scope and reporting expectations. Implement Sci. 2019;14:77.

Brownson RC, Proctor EK, Luke DA, et al. Building capacity for dissemination and implementation research: one university’s experience. Implement Sci. 2017;12:104.

Proctor EK, Chambers DA. Training in dissemination and implementation research: a field-wide perspective. Transl Behav Med. 2017;7(3):624–35.

Tabak R, Padek MM, Kerner JF. Dissemination and implementation science training needs: insights from practitioners and researchers. Am J Prev Med. 2017;52(3):S322–9.

Chambers DA, Proctor EK, Brownson RC, Straus SE. Mapping training needs for dissemination and implementation research: lessons from a synthesis of existing D&I research training programs. Transl Behav Med. 2017;7(3):593–601.

Carlfjord S, Roback K, Nilsen P. Five years’ experience of an annual course on implementation science: an evaluation among course participants. Implement Sci. 2017;12:101.

Ford BS, Rabin B, Morrato EH, Glasgow RE. Online resources for dissemination and implementation science: meeting the demand and lessons learned. J Clin Transl Sci. 2018;2(5):259–66.

Straus S, Sales A, Wensing M, Michie S, Kent B, Foy R. Education and training for implementation science: our interest in manuscripts describing education and training materials. Implement Sci. 2015;10:136.

Smits PA, Denis JL. How research funding agencies support science integration into policy and practice: an international overview. Implement Sci. 2014;9:28.

Straus SE, Brouwers M, Johnson D, et al. Core competencies in the science and practice of knowledge translation: description of a Canadian strategic training initiative. Implement Sci. 2011;6:127.

Meissner H, Glasgow RE, Vinson CA, et al. The US training institute for dissemination and implementation research in health. Implement Sci. 2013;8:12.

Proctor EK, Landsverk J, Baumann AA, et al. The implementation research institute: training mental health implementation researchers in the United States. Implement Sci. 2013;8:105.

Proctor E, Ramsey AT, Brown MT, Malone S, Hooley C, McKay V. Training in Implementation Practice Leadership (TRIPLE): evaluation of a novel practice change strategy in behavioural health organisations. Implement Sci. 2019;14:66.

Goodenough B, Fleming R, Young M, Burns K, Jones C, Forbes F. Raising awareness of research evidence among professionals delivering dementia care: are knowledge translation workshops useful? Gerontology Geriat Ed. 2017;38(4):392–406.

Jones K, Armstrong R, Pettman T, Waters E. Cochrane Update. Knowledge translation for researchers: developing training to support public health researchers KTE efforts. J Public Health. 2015;37(2):364–6.

Marriott BR, Rodriguez AL, Landes SJ, Lewis CC, Comtois KA. A methodology for enhancing implementation science proposals: comparison of face-to-face versus virtual workshops. Implement Sci. 2016;11:62.

Moore JE, Rashid S, Park JS, Khan S, Straus SE. Longitudinal evaluation of a course to build core competencies in implementation practice. Implement Sci. 2018;13:106.

Morrato EH, Rabin B, Proctor J, et al. Bringing it home: expanding the local reach of dissemination and implementation training via a university-based workshop. Implement Sci. 2015;10:94.

Ramaswamy R, Mosnier J, Reed K, Powell BJ, Schenck AP. Building capacity for Public Health 3.0: introducing implementation science into a MPH curriculum. Implement Sci. 2019;14:18.

Means AR, Phillips DE, Lurton G, et al. The role of implementation science training in global health: from the perspective of graduates of the field’s first dedicated doctoral program. Glob Health Action. 2016;9(1):31899.

Ackerman S, Boscardin C, Karliner L, et al. The Action Research Program: experiential learning in systems-based practice for first year medical students. Teach Learn Med. 2016;28(2):183–91.

Ulrich C, Mahler C, Forstner J, Szecsenyi J, Wensing M. Teaching implementation science in a new Master of Science program in Germany: a survey of stakeholder expectations. Implement Sci. 2017;12:55.

Padek M, Mir N, Jacob RR, et al. Training scholars in dissemination and implementation research for cancer prevention and control: a mentored approach. Implement Sci. 2018;13:18.

‘Public Health Implementation Science’ Virginia Tech. https://www.tbmh.vt.edu/focus-areas/health-implement.html (accessed 9/1/2019).

‘Fundamentals of Implementation Science’, University of Washington https://edgh.washington.edu/courses/fundamentals-implementation-science (accessed 9/1/2019).

Gonzalez R, Handley MA, Ackerman S, O’Sullivan PS. Increasing the translation of evidence into practice, policy and public health improvements: a framework for training health professionals in implementation and dissemination science. Acad Med. 2012;87(3):271–8.

‘Introduction to implementation science, theory and design’ University of California, San Francisco. https://epibiostat.ucsf.edu/introduction-implementation-science-theory-and-design (accessed 12/1/2019).

‘Implementation science certificate’ University of California, San Francisco. https://epibiostat.ucsf.edu/faqs-implementation-science-certificate (accessed 12/1/2019).

‘Implementation and dissemination science’ University of Maryland https://www.graduate.umaryland.edu/research/ (accessed 12/1/2019).

‘Implementation Science Seminar Series’. Quality Enhancement Research Initiative (QUERI) Implementation Seminar Series, U.S. Department of Veterans Affairs. https://www.hsrd.research.va.gov/cyberseminars/series.cfm (accessed 12/1/2019).

‘Implementation Science Research Webinar Series’ National Cancer Institute http://news.consortiumforis.org/training/ucsf-implementation-science-online-training-program/ (accessed 12/1/2019).

‘UK Implementation Science Masterclass’, King’s College London. https://www.kcl.ac.uk/events/implementation-science-masterclass (accessed 12/1/2019).

‘Implementation science module’. University of Exeter. http://medicine.exeter.ac.uk/programmes/programme/modules/module/?moduleCode=HPDM026&ay=2015/6 (accessed 12/1/2019).

Wahabi HA, Al-Ansary A. Innovative teaching methods for capacity building in knowledge translation. BMC Med Ed. 2011;11:85.

‘Specialist certificate in implementation science’ University of Melbourne. https://study.unimelb.edu.au/find/courses/graduate/specialist-certificate-in-implementation-science/ (accessed 12/1/2019).

Osanjo GO, Oyugi JO, Kibwage IO, et al. Building capacity in implementation science research training at the University of Nairobi. Implement Sci. 2016;11:30.

‘Massive open online course on implementation research (MOOC)’. The Special Programme for Research and Training in Tropical Diseases. https://www.publichealthupdate.com/registration-open-tdr-massive-open-online-course-mooc-on-implementation-research/ (accessed 12/1/2019).

Luke DA, Baumann AA, Carothers BJ, Landsverk J, Proctor EK. Forging a link between mentoring and collaboration: a new training model for implementation science. Implement Sci. 2016;11:137.

Park JS, Moore JE, Sayal R, et al. Evaluation of the “Foundations in knowledge translation” training initiative: preparing end users to practice KT. Implement Sci. 2018;13:63.

Fade S, Halter M. Understanding South London’s Capacity for Implementation and Improvement Science, 2014. Report prepared for Health Innovation Network South London (south London’s academic health science network) and the National Institute for Health Research Collaboration for Leadership in Applied Health Research and Care (CLAHRC) South London.

Kho ME, Estey EA, Deforge RT, Mak L, Bell BL. Riding the knowledge translation roundabout: lessons learned from the Canadian Institutes of Health Research Summer Institute in knowledge translation. Implement Sci. 2009;4:33.

Metz A, Louison L, Ward C, Burke K. Implementation specialist practice profile: skills and competencies for implementation practitioners. Working draft, 2018. https://www.effectiveservices.org/downloads/Implementation_specialist_practice_profile.pdf (accessed 12/1/2019).

Mallidou AA, Atherton P, Chane L, Frisch N, Glegg S, Scarrow G. Core knowledge translation competencies: a scoping review. BMC Health Serv Res. 2018;18:502.

Newman K, Van Eerd D, Powell B, et al. Identifying emerging priorities in Knowledge Translation from the perspective of trainees. BMC Health Serv Res. 2014;14(Suppl 2):P130.

Darnell D, Dorsey CN, Melvin A, Chi J, Lyon AR, Lewis CC. A content analysis of dissemination and implementation science resource initiatives: what types of resources do they offer to advance the field? Implement Sci. 2017;12:137.

Padek M, Brownson R, Proctor E, et al. Developing dissemination and implementation competencies for training programs. Implement Sci. 2015;10(Suppl 1):A39.

Proctor E, Carpenter C, Brown CH, et al. Advancing the science of dissemination and implementation: three “6th NIH Meetings” on training, measures, and methods. Implement Sci. 2015;10(Suppl 1):A13.

Padek M, Colditz G, Dobbins M, Koscielniak N, Proctor EK, Sales AE, et al. Developing educational competencies for dissemination and implementation research training programs: an exploratory analysis using card sorts. Implement Sci. 2015;10:114 https://doi.org/10.1186/s13012-015-0304-3.

Chambers D, Proctor EK. Advancing a comprehensive plan for dissemination and implementation research training 6th NIH Meeting on Dissemination and Implementation Research in Health: a working meeting on training. In National Cancer Institute Division of Cancer Control & Population Science Implementation Science Webinar Series; January, 28th 2014. Retrieved from https://researchtoreality.cancer.gov/node/1281.

‘Implementation Science online training programme’. University of California, San Francisco. http://news.consortiumforis.org/training/ucsf-implementation-science-online-training-program/ (accessed 12/1/2019).

‘Inspiring change: creating impact with evidence-based implementation’. The Centre for Implementation. https://thecenterforimplementation.teachable.com/p/inspiring-change (accessed 12/1/2019).

Baumann AA, Carothers BJ, Landsverk J, et al. Evaluation of the implementation research institute: trainees’ publications and grant productivity. Adm Policy Ment Health Ment Health Serv Res. 2019;8(1):105.

Stamatakis KA, Norton WE, Stirman SW, Melvin C, Brownson RC. Developing the next generation of dissemination and implementation researchers: insights from initial trainees. Implement Sci. 2013;8:29.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38:65–76. https://doi.org/10.1007/s10488-010-0319-7.

Acknowledgements

We would like to thank the delegates who took the time to complete the evaluation survey. We would also like to thank any individuals who assisted in the marketing of the ISM, its organisation and/or delivery and those who helped facilitate or chair workshops or teaching sessions. There are too many individuals to name but thank you to you all!

Funding

RD, NS, MB, AK, GT, JS, and LG are supported by the National Institute for Health Research (NIHR) Applied Research Collaboration: South London at King’s College Hospital NHS Foundation Trust. NS and LG are members of King’s Improvement Science, which offers co-funding to the NIHR ARC South London and comprises a specialist team of improvement scientists and senior researchers based at King’s College London. Its work is funded by King’s Health Partners (Guy’s and St Thomas’ NHS Foundation Trust, King’s College Hospital NHS Foundation Trust, King’s College London and South London and Maudsley NHS Foundation Trust), Guy’s and St Thomas’ Charity, and the Maudsley Charity. GT is also supported by the NIHR Asset Global Health Unit award, the UK Medical Research Council in relation to the Emilia (MR/S001255/1) and Indigo Partnership (MR/R023697/1) awards and receives support from the National Institute of Mental Health of the National Institutes of Health under award number R01MH100470 (Cobalt study). The views expressed in this publication are those of the author(s) and not necessarily those of the NHS, NIHR, or the Department of Health and Social Care.

Author information

Contributions

RD designed the paper; was responsible for its conceptualization, drafting, and edits; and has been involved in the organising committee of the ISM. NS and BM are directors of the ISM; BM has been involved since its inception and NS from 2015 to the present. NS has been involved in editing the manuscript. GT, LG, and JS have contributed to the design and delivery of the curriculum of the ISM. AK, MB, and LG have been part of the ISM organising committee and compiled and analysed the data for the evaluation. LG and JS have been involved in the organisation of the ISM and the design and delivery of the course curriculum. All authors read and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

This paper presents the findings of an education evaluation of the ‘UK Implementation Science Masterclass’. Ethical approval was not required. Completing the evaluation form was interpreted as informed consent to participate.

Consent for publication

Not applicable

Competing interests

Nick Sevdalis is the Director of London Safety and Training Solutions Ltd, which provides quality and safety training and advisory services on a consultancy basis to healthcare organisations globally. Brian Mittman (2014–present) and Nick Sevdalis (2015–present) are co-directors of the ISM. Graham Thornicroft was a co-director of the ISM in 2014. The other authors have no conflicts to declare.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Davis, R., Mittman, B., Boyton, M. et al. Developing implementation research capacity: longitudinal evaluation of the King’s College London Implementation Science Masterclass, 2014–2019. Implement Sci Commun 1, 74 (2020). https://doi.org/10.1186/s43058-020-00066-w
