Abstract

Background

Use of the Debriefing Assessment for Simulation in Healthcare (DASH©) would be beneficial for novice debriefers with little or no formal training in debriefing. However, a DASH translated into Korean and tested for its psychometric properties is not yet available. Thus, this study aimed to develop a Korean version of the DASH student version (SV) and test its reliability and validity among baccalaureate nursing students in Korea.

Methods

The participants were 99 baccalaureate nursing students. Content validity using content validity index (CVI), construct validity using exploratory factor analysis (EFA) and confirmatory factor analysis (CFA), and internal consistency using Cronbach’s alpha coefficient were assessed.

Results

Both Item-CVIs and Scale-CVI were acceptable. EFA supported the unidimensional latent structure of Korean DASH-SV and results of CFA indicated 6 items converged within the extracted factor, significantly contributing to the factor (p ≤ .05). Items were internally consistent (Cronbach’s α = 0.82).

Conclusion

The Korean version of the DASH-SV is arguably a valid and reliable measure of instructor behaviors that could improve faculty debriefing and student learning in the long term.

Background

The vulnerabilities of relying on clinical training placements alone for new nurse readiness and the importance of nursing faculty being prepared to use alternate training modalities such as simulation have been studied [3]. Simulation-based learning is a potent complement to clinical placements and, in some countries, a potent substitute up to a certain curriculum proportion [1, 12]. However, this substitution requires a trained faculty cadre who are comfortable with simulation-based learning including debriefing. The challenge in many Korean nursing programs is that there is little or no faculty development for crucial debriefing skills [20].

One approach to closing this gap is to utilize debriefing assessment tools to create standards and develop shared agreements among faculty as to what constitutes high-impact and psychologically safe debriefing. Inviting student assessment of faculty debriefing skills leverages the ubiquitous presence of students to provide feedback via ratings. To date, to the authors’ knowledge, there is no such debriefing tool widely available and validated in the Korean language. To address this need, our team translated and conducted several tests of the validity of a Korean language translation of a leading debriefing assessment tool, the Debriefing Assessment for Simulation in Healthcare (DASH).

The DASH tool allows for the evaluation of instructor behaviors that facilitate learning and change in experiential contexts based on six elements, each scored on a 7-point scale from 1 = extremely ineffective/detrimental to 7 = extremely effective/outstanding [2, 21]. There are three ways in which instructors can seek evaluation: they can self-evaluate (Instructor Version) or receive evaluation from students (Student Version) or trained raters (Rater Version). Instructors, students, or raters can each use a short or long form to provide ratings and feedback on the six DASH elements or the twenty-three behaviors associated with the elements. While these tools, founded on identical elements and behaviors, are available in multiple languages, a version that has been formally translated into Korean and tested for its psychometric properties is not yet available. Thus, the purpose of the study was to develop a Korean version of the DASH-Student Version (SV), which can serve as the basis for translating all other versions, and to test its reliability and validity among baccalaureate nursing students in South Korea.

Methods

Design

The World Health Organization’s process of translation and adaptation of instruments was used to develop a Korean version of the DASH-Student Version [23]. The validity and reliability of the Korean version of the DASH were then tested using data collected from a survey. Figure 1 outlines the translation and psychometric testing process.

Setting and participants

A convenience sample of nursing students was recruited from one college of nursing in a major university in a large metropolitan city in South Korea. Eligible participants were 4th year nursing students who (1) had experienced both simulation education and clinical experiences, and (2) were currently taking an “Integrated comprehensive clinical simulation” course that included various simulation scenarios covering clinical subject matter learned throughout the entire program, including medical-surgical, pediatric, gerontology, and emergency nursing. This simulation course provided students with an opportunity to apply what they had learned from previous courses and demonstrate their readiness for clinical practice as senior students.

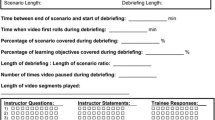

The four simulation scenarios that this cohort of students participated in were (1) a diabetic patient experiencing hypoglycemia, (2) a pediatric patient with febrile seizure, (3) an older adult patient at risk for falls, and (4) a post-operative orthopedic patient. Simulation and debriefing of each of the cases were facilitated by an instructor as groups of 6–7 students rotated through all four cases as a part of their regular simulation experience, over a period of 8 weeks (Fig. 2). Recruitment and data collection were conducted at the end of the fourth scenario so that students had time to grow comfortable with simulation and debriefing, and to control the number of simulation and debriefing exposures.

Translation

The Korean version of the DASH was developed following the process of translation and adaptation of the instrument proposed by the World Health Organization [23]. First, we acquired permission from the original developers of DASH [2]. Second, one bilingual professional in nursing who is experienced in facilitating clinical simulations independently conducted forward translation. Third, an expert panel, consisting of six faculty members of nursing schools currently teaching clinical simulation courses, evaluated the translated instrument for the content validity index (CVI). The expert panel rated each item of the K-DASH in terms of its relevance to the underlying construct on a 4-point scale (1 = not relevant, 2 = somewhat relevant, 3 = quite relevant, 4 = highly relevant) [6]. In addition, the expert panel commented on each item if they had any suggestions or questions. Through the expert panel discussion, the translated instrument was adjusted, and the K-DASH was produced for back-translation. Then, the back-translation of the K-DASH from Korean to English was conducted by a bilingual translator. The back-translated K-DASH (in English) was compared against the original DASH by one of the original developers of DASH-Student Version (R.S.). Feedback was incorporated to yield the final K-DASH, which was used for psychometric testing at a college of nursing in a major university in a large metropolitan city in South Korea.

Data collection

Data were collected using a self-reported questionnaire at the end of the fourth case. To recruit study participants prior to the fourth simulation scenario, a researcher [BKP] provided the overall information of this study including that participation in this study would not affect their grade in this course. Then, the researcher left the classroom to eliminate the risk of unintended coercion by faculty. A trained research assistant provided additional explanation and distributed consent forms to students who volunteered to participate in the study. After the simulation and debriefing were complete, participants filled out the K-DASH survey which also included an opportunity to provide feedback on the K-DASH items themselves (e.g., related to readability of the items). Figure 2 shows the flow of simulation offerings and data collection. Students’ comments were taken into consideration in finalizing all six versions of the K-DASH, i.e., short and long DASH instructor, rater, and student versions.

Ethical considerations

This study was reviewed and approved by the corresponding author’s Institutional Review Board (2018–0050). The researchers did not engage in recruitment or data collection. The informed consent was voluntarily obtained from all participants. Research participants were provided with $10 worth of stationery as a token of appreciation, as disclosed in the consent form.

Data analysis

Data were first entered into IBM SPSS 25.0 (IBM Corp, Armonk, NY, USA) for data cleaning and analyzed in both IBM SPSS and Mplus 8.1 (Muthén & Muthén, Los Angeles, CA, USA). Descriptive statistics were used to characterize the sample. Inter-item correlations were computed to evaluate the adequacy of items and Cronbach’s alpha was used to measure reliability.
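The reliability coefficient named above can be illustrated with a minimal sketch. It assumes the standard Cronbach's alpha formula applied to a respondents-by-items table; the function name `cronbach_alpha` and the ratings are hypothetical and are not the study data or the SPSS procedure used by the authors.

```python
from statistics import variance
from typing import List


def cronbach_alpha(scores: List[List[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of ratings."""
    k = len(scores[0])  # number of items
    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from five respondents on six 7-point items
# (illustrative only; not the study data).
hypothetical_scores = [
    [6, 6, 7, 6, 5, 6],
    [5, 6, 6, 5, 5, 6],
    [7, 7, 7, 6, 7, 7],
    [4, 5, 5, 4, 5, 4],
    [6, 7, 6, 6, 6, 7],
]
alpha = cronbach_alpha(hypothetical_scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Alpha rises as the items covary more strongly relative to their individual variances, which is why it is read as an index of internal consistency.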

To determine the content validity index for individual items (I-CVI), six members of the expert panel rated each K-DASH item in terms of its relevance to the underlying construct on a 4-point scale. All members of the expert panel had more than 3 years of simulation and debriefing experience. Then, I-CVI was computed for each item as the number of experts giving a rating of either 3 or 4, divided by the number of experts which led to the proportion of agreement about relevance. The content validity index for the scale (S-CVI) was calculated as the average of the I-CVIs for all items on the scale [19]. An I-CVI higher than 0.78 is considered excellent, and S-CVI higher than 0.80 is considered acceptable (Polit and Beck, 2020).
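The I-CVI and S-CVI calculations described above can be sketched as follows. This is an illustration under stated assumptions: the function names `item_cvi` and `scale_cvi_average` and the expert ratings are hypothetical, chosen only to show the arithmetic for a panel of six experts.

```python
from typing import List


def item_cvi(ratings: List[int]) -> float:
    """I-CVI: proportion of experts rating the item 3 or 4 on the 4-point scale."""
    relevant = sum(1 for r in ratings if r >= 3)
    return relevant / len(ratings)


def scale_cvi_average(ratings_by_item: List[List[int]]) -> float:
    """S-CVI: average of the I-CVIs across all items on the scale."""
    i_cvis = [item_cvi(ratings) for ratings in ratings_by_item]
    return sum(i_cvis) / len(i_cvis)


# Hypothetical relevance ratings: one row per item, one column per expert
# (six experts; illustrative only, not the panel's actual ratings).
hypothetical_ratings = [
    [4, 4, 3, 4, 4, 3],
    [4, 3, 4, 4, 2, 4],
    [3, 4, 4, 4, 4, 4],
]
for index, ratings in enumerate(hypothetical_ratings, start=1):
    print(f"Item {index}: I-CVI = {item_cvi(ratings):.2f}")
print(f"S-CVI = {scale_cvi_average(hypothetical_ratings):.2f}")
```

With six experts, a single rating of 1 or 2 lowers an item's I-CVI from 1.00 to 5/6, or about 0.83.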

While the items in the K-DASH student version were measured on a 7-point scale, most participants rated all items between 4 and 7, with higher scores indicating more positive evaluations. Using the first group (n = 49), exploratory factor analysis (EFA) with polychoric correlation coefficients was performed to examine the underlying theoretical structure of the translated K-DASH-Student Version; Cronbach’s alpha was computed for test reliability. Lastly, using the second group (n = 50), confirmatory factor analysis (CFA) with a diagonally weighted least squares (WLSMV) estimator rather than a maximum likelihood (ML) estimator was performed to evaluate the factorial validity of the K-DASH student version. For the CFA, we used Kenny’s recommendations [14] for a good fitting model: (a) the ratio of chi-square to degrees of freedom (df) should be 2:1 or less with non-significance; (b) the root mean square error of approximation (RMSEA) should be 0.08 or less; (c) the comparative fit index (CFI) and Tucker-Lewis index (TLI) should be 0.95 or greater; and (d) the standardized root mean square residual (SRMR) should be 0.08 or less. Statistical significance was set at p ≤ 0.05.
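The fit criteria listed above amount to a simple checklist, which can be sketched as below. The function name `check_fit` and the input values are hypothetical and do not come from the Mplus output of this study; only the cutoffs are taken from the text.

```python
from typing import Dict


def check_fit(chi2: float, df: int, rmsea: float,
              cfi: float, tli: float, srmr: float) -> Dict[str, bool]:
    """Compare fit indices against the cutoffs stated in the text."""
    return {
        "chi2/df <= 2": chi2 / df <= 2.0,
        "RMSEA <= 0.08": rmsea <= 0.08,
        "CFI >= 0.95": cfi >= 0.95,
        "TLI >= 0.95": tli >= 0.95,
        "SRMR <= 0.08": srmr <= 0.08,
    }


# Hypothetical fit statistics (illustrative only; not the study results).
hypothetical_fit = check_fit(chi2=12.0, df=9, rmsea=0.06,
                             cfi=0.98, tli=0.97, srmr=0.05)
for criterion, met in hypothetical_fit.items():
    print(f"{criterion}: {'met' if met else 'not met'}")
```

Reporting each criterion separately, rather than a single pass or fail verdict, makes it easier to see which index drives any departure from good fit.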

Face validity and finalization of K-DASH

Based on the statistical analysis and comments from students, the K-DASH was adjusted. The expert panel that completed the CVI reviewed the updated K-DASH for face validity and subjectively evaluated whether the tool appeared to achieve its intended goal of assessing debriefing.

Results

All 99 students who were eligible participated and completed the survey. Most of the students who completed the survey were female (84.8%). On the 7-point DASH scale (from 1 to 7), the average score of 6 items in the Korean version of the DASH was 6.12 (range 5.98–6.27, SD 0.803) (Table 1). The frequency of students’ responses to each item was mainly distributed between 4 and 6 without any from 1 through 3.

Inter-item correlations and reliability

Inter-item correlations were computed for items in the Korean DASH to examine the adequacy of items. All 6 items were significantly correlated with each other (p ≤ 0.05), with coefficients ranging between 0.29 and 0.62, close to or above the recommended value of r = 0.30. The Cronbach’s alpha was 0.82, suggesting that the items have relatively high internal consistency.

Content validity

Both the I-CVI and S-CVI of the Korean version of the DASH were excellent, with an I-CVI of 0.83 or higher for all items and an S-CVI of 0.94 (Table 2), suggesting that K-DASH is measuring its underlying construct of debriefing. The overall recommendations from the expert panel were not about the content but rather focused on conserving the original meaning of DASH items, which could sometimes be altered in the process of translation from one language to another and back again. For example, “set the stage for” in Korean does not deliver the full sense of the original meaning, thus we translated this phrase as “prepare the conditions for.” The authors made every effort to develop a Korean version that accounts for the simulation education environment and culture in Korea while conserving the original intent and underlying meaning of each of the behaviors and elements.

Construct validity

Exploratory factor analysis

Exploratory factor analysis using Kaiser’s rule [13] of an eigenvalue greater than 1 to extract factors and Promax rotation produced a one-factor solution, accounting for 55.4% of the variance. This suggested a unidimensional latent structure of the Korean version of the DASH; that is, based on the data collected from students, the K-DASH measures one construct (i.e., debriefing).
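Kaiser's rule, as used above, retains the factors whose eigenvalues exceed 1. The sketch below applies the rule to a hypothetical 6-item correlation matrix with a uniform inter-item correlation of 0.45. It assumes NumPy is available, and it uses neither polychoric correlations nor the study data, so it illustrates only the retention rule and not the authors' full EFA.

```python
import numpy as np


def kaiser_retained(corr: np.ndarray):
    """Return eigenvalues (descending) and the count exceeding 1 (Kaiser's rule)."""
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order
    n_factors = int(np.sum(eigenvalues > 1.0))
    return eigenvalues, n_factors


# Hypothetical correlation matrix: six items, every pair correlated at 0.45
# (illustrative only; not the polychoric matrix from the study).
n_items = 6
hypothetical_corr = np.full((n_items, n_items), 0.45)
np.fill_diagonal(hypothetical_corr, 1.0)

eigenvalues, n_factors = kaiser_retained(hypothetical_corr)
print("Eigenvalues:", np.round(eigenvalues, 2))
print("Factors retained:", n_factors)
```

In this toy matrix only the first eigenvalue exceeds 1, so a single factor is retained, which is the pattern a unidimensional scale would be expected to show.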

Confirmatory factor analysis

Results of confirmatory factor analysis with the WLSMV estimator indicated that the fit indices were largely satisfactory, with a nonsignificant χ2/df ratio of 1.39, SRMR of 0.065, CFI of 0.98, and TLI of 0.97, although the RMSEA of 0.089 was marginally above the 0.08 criterion. Additionally, all standardized loadings for items were statistically significant (p ≤ 0.05) with moderately large values ranging between 0.56 and 0.90, indicating that each of the 6 items converges within the extracted factor and significantly contributes to the factor. The composite reliability (CR) was 0.87 and the average variance extracted (AVE) was 0.53, indicating good convergent validity, the degree of confidence that a trait is well measured by its indicators [9]. These results support the findings of EFA and the reliability of the scale, suggesting that the set of items in K-DASH relates to the given latent variable (i.e., instructor behaviors that facilitate learning and change in experiential contexts) and captures a good amount of the variance in the trait or the latent variable. The results of both EFA and CFA are summarized in Table 3.
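Composite reliability and average variance extracted are both functions of the standardized loadings, following Fornell and Larcker [9]. The sketch below shows the arithmetic under the assumption of a single factor with uncorrelated residuals; the function names and the loadings are hypothetical and are not the values in Table 3.

```python
from typing import List


def composite_reliability(loadings: List[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances)."""
    squared_sum = sum(loadings) ** 2
    residual_variances = sum(1 - loading ** 2 for loading in loadings)
    return squared_sum / (squared_sum + residual_variances)


def average_variance_extracted(loadings: List[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Hypothetical standardized loadings for six items
# (illustrative only; not the estimates reported in the study).
hypothetical_loadings = [0.60, 0.70, 0.75, 0.80, 0.65, 0.85]
print(f"CR  = {composite_reliability(hypothetical_loadings):.2f}")
print(f"AVE = {average_variance_extracted(hypothetical_loadings):.2f}")
```

An AVE above 0.50 means the factor accounts for more than half of the variance in its indicators on average, which is the usual reading of adequate convergent validity.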

Discussion

The internal consistency of the Korean version of the DASH was high (Cronbach’s alpha = 0.82), comparable to that of the original instrument (Cronbach’s alpha = 0.89), although the original study was conducted using the rater version [2]. This finding aligned with the reliability reported for a study conducted in the USA, using the student version with nursing students (Cronbach’s alpha = 0.82) [7]. The results of EFA suggested a unidimensional latent structure of the Korean version of the DASH, and the results of CFA confirmed that each of the 6 items related to or converged with the extracted factor. Therefore, this suggests that the 6 items captured a good amount of the instructor debriefing behaviors which the K-DASH is intended to assess. To the best of the authors’ knowledge, this is the first study exploring the content validity and construct validity of the DASH specifically using CVI, EFA, and CFA, and it may serve as a point of comparison for future psychometric studies.

In South Korea, the use of simulation in nursing colleges is growing sharply, but debriefing methods are often neglected by instructors [11]. There is a lack of simulation instructors who are trained for structured debriefing [20]. The largest gap between the significance of practice and actual performance was reported to be in reflection and facilitation [20]. Structured debriefing is one of the important characteristics of effective debriefing in simulation-based learning [8]. From the students’ perspective, the debriefing process, from addressing emotions to reflecting and summarizing, improved their learning [8]. To provide structured debriefing, facilitators require formal training in debriefing techniques [22] as well as formal tools, such as the DASH, to assess debriefing skills. Considering the current situation of nursing simulation in Korea, the use of the DASH would be beneficial for novice debriefers with little or no formal training in debriefing structure and techniques because it will provide a reference for the standard behaviors required for effective debriefing, organized into six key elements [2]. The DASH also suggests the need to address physical or environmental barriers to quality debriefing, such as lack of time and space for debriefing or the large number of students in a simulation session, which have been identified as reasons why debriefing is overlooked or shortened in South Korea [11]. The Korean version of the DASH can help instructors improve the effectiveness of their debriefings and thereby contribute to promoting the quality of nursing simulation education in Korea.

Of note, the negative answer choices, (1) extremely ineffective/detrimental, (2) consistently ineffective/very poor, and (3) mostly ineffective/poor, were not selected by the student participants in this study. Ratings were primarily distributed between 4 and 6 without any from 1 through 3. The total mean score was 6.12 out of 7 (extremely effective/outstanding) and each element's mean score ranged from 5.98 to 6.27. This result was different from a study conducted in the US and Australia with students, doctors, and registered nurses, which had a wider range of DASH mean scores from 5.00 to 7.00 [5]. Another study of simulation education for pediatric and anesthesia residents reported a wide range of DASH-Student Version median scores from 4.00 to 7.00 [10]. While these results may purely reflect the quality of the debriefing, it would be prudent to consider the cultural aspects. In certain Asian cultures, students are expected to show respect to teachers, and students often do not consider themselves to be in a position to negatively critique their professors. One of the students commented that it would not be appropriate to use those strong negative descriptions, such as detrimental, in their ratings. Some students seemed to be comfortable with giving a rating of 7 (strong positive descriptions). Considering students' tendency to choose the neutral mid-point category, a 7-point rating scale may provide a wider range of variance to differentiate raters’ perspectives, compared to a 5-point rating scale. Conversely, reducing the number of choices to a 5-point rating scale may yield meaningful differences (e.g., a difference between 4 and 5 could be greater in a 5-point rating scale than a 7-point rating scale). Another cultural difference was the participation rate of students: 100% of eligible students participated even when a research assistant, rather than their professor, conducted recruitment and data collection. In Korea, students are generally accepting of academic assignments and research. It is not uncommon to see exceptionally high participation rates [15, 18]. Regardless of cultural differences, the high participation rate could be attributed to the fact that students completed K-DASH right after debriefing.

The final version of the K-DASH, evaluated for face validity by the expert panel, can be found at https://harvardmedsim.org/debriefing-assessment-for-simulation-in-healthcare-dash-korean.

Limitations

As with all psychometric testing studies, the findings from this particular study are based on data collected in this setting and population. Further psychometric testing in different Korean settings with different populations is warranted to increase generalizability. Additionally, future investigation with a larger sample size would confirm the stability of results in this study as the current study had a minimal sample size needed to split the sample for both EFA and CFA. The split was necessary to conduct EFA and CFA on a different sample as running the analysis on the same sample can introduce bias, leading to overfitting and compromising the validity of findings. While a larger sample size should be preferred to ensure the stability and reliability of the factor structure, studies with smaller sample sizes can still provide meaningful insights under certain circumstances. As MacCallum et al. [16] demonstrated, for example, the factor recovery can be acceptable with smaller samples if the communalities are high and factors are well-defined, which was the case for K-DASH containing 6 items designed to form a single factor. Additionally, item factor loadings for this study were substantial and significant, providing meaningful preliminary insight about the factor structure of K-DASH.

While the translation was conducted following the process of translation and adaptation of the instrument proposed by the World Health Organization and was validated by content experts, the interpretation of linguistic and cultural nuances could vary among learners in different age groups (e.g., baby boomers and Generation Z students), settings (e.g., academic or healthcare industry), disciplines (including intra- and inter-disciplinary), or with different life experiences (e.g., those with or without study abroad experiences). Considering that “learning assumptions”, cultural context, and team dynamics may influence the active reflection and participation required in a debriefing session, the culturally sensitive application of models and tools is crucial for maximized learning outcomes [4]. This translated tool can benefit from further linguistic/cultural validation following the process used by Muller-Botti and colleagues [17].

Cultural differences, such as preference towards positive rating versus negative rating can be a limitation. Providing students with an orientation to the tool, including an introduction to the purpose of the assessment and the concept of constructive feedback may help address this tendency.

Conclusions

As nursing schools worldwide scramble to meet the growing demand for work-ready graduates, robust faculty development is crucial. One proven way to prepare faculty to lead robust simulation-based learning activities is to strengthen their debriefing skills via standards and feedback. The Korean version of the DASH appears to be a reasonably valid and reliable measure of instructor behaviors that facilitate learning and change in faculty skills, and by extension, nurse trainee skills in experiential contexts in Korea.

Availability of data and materials

The datasets used and/or analyzed in this study are available from the corresponding author on reasonable request.

Abbreviations

- DASH©: The Debriefing Assessment for Simulation in Healthcare
- DASH-SV: DASH-Student Version
- CVI: Content validity index
- EFA: Exploratory factor analysis
- CFA: Confirmatory factor analysis
- WLSMV: Diagonally weighted least squares
- ML: Maximum likelihood
- RMSEA: Root mean square error of approximation
- CFI: Comparative fit index
- TLI: Tucker-Lewis index
- SRMR: Standardized root mean square residual
- CR: Composite reliability
- AVE: Average variance extracted

References

Alexander M, Durham CF, Hooper JI, Jeffries PR, Goldman N, Kardong-Edgren SS, Kesten KS, Spector N, Tagliareni E, Radtke B, Tillman C. NCSBN simulation guidelines for prelicensure nursing programs. J Nurs Regul. 2015;6(3):39–42. https://doi.org/10.1016/S2155-8256(15)30783-3.

Brett-Fleegler M, Rudolph J, Eppich W, Monuteaux M, Fleegler E, Cheng A, Simon R. Debriefing assessment for simulation in healthcare: development and psychometric properties. Simulation in Healthcare. 2012;7(5):288–94. https://doi.org/10.1097/SIH.0b013e3182620228.

Cant RP, Cooper SJ. Use of simulation-based learning in undergraduate nurse education: an umbrella systematic review. Nurse Educ Today. 2017;49:63–71. https://doi.org/10.1016/j.nedt.2016.11.015.

Chung HS, Dieckmann P, Issenberg SB. It is time to consider cultural differences in debriefing. Simulation in Healthcare. 2013;8(3):166–70. https://doi.org/10.1097/SIH.0b013e318291d9ef.

Coggins A, Hong SS, Baliga K, Halamek LP. Immediate faculty feedback using debriefing timing data and conversational diagrams. Adv Simul. 2022;7:7. https://doi.org/10.1186/s41077-022-00203-6.

Davis LL. Instrument review: Getting the most from a panel of experts. Appl Nurs Res. 1992;5(4):194–7. https://doi.org/10.1016/S0897-1897(05)80008-4.

Dreifuerst KT. Using debriefing for meaningful learning to foster development of clinical reasoning in simulation. J Nurs Educ. 2012;51(6):326–33. https://doi.org/10.3928/01484834-20120409-02.

Fey MK, Scrandis D, Daniels A, Haut C. Learning through debriefing: Students’ perspectives. Clin Simul Nurs. 2014;10(5):e249–56. https://doi.org/10.1016/j.ecns.2013.12.009.

Fornell C, Larcker DF. Evaluating structural equation models with unobservable variables and measurement error. J Mark Res. 1981;18(1):39–50. https://doi.org/10.1177/002224378101800104.

Gross IT, Whitfill T, Auzina L, Auerbach M, Balmaks R. Telementoring for remote simulation instructor training and faculty development using telesimulation. BMJ Simulation & Technology Enhanced Learning. 2021;7(2):61–5. https://doi.org/10.1136/bmjstel-2019-000512.

Ha E-H, Song H-S. The effects of structured self-debriefing using on the clinical competency, self-efficacy, and educational satisfaction in nursing students after simulation. The Journal of Korean academic society of nursing education. 2015;21(4):445–54. https://doi.org/10.5977/jkasne.2015.21.4.445.

Hayden J, Keegan M, Kardong-Edgren S, Smiley RA. Reliability and validity testing of the Creighton Competency Evaluation Instrument for use in the NCSBN National Simulation Study. Nurs Educ Perspect. 2014;35(4):244–52. https://doi.org/10.5480/13-1130.1.

Kaiser HF. The application of electronic computers to factor analysis. Educ Psychol Measur. 1960;20:141–51. https://doi.org/10.1177/001316446002000116.

Kenny DA. Measuring model fit. 2012. http://davidakenny.net/cm/fit.htm.

Lee MK, Park BK. Effects of flipped learning using online materials in a surgical nursing practicum: a pilot stratified group-randomized trial. Healthcare informatics research. 2018;24(1):69. https://doi.org/10.4258/hir.2018.24.1.69.

MacCallum RC, Widaman KF, Zhang S, Hong S. Sample size in factor analysis. Psychol Methods. 1999;4(1):84–99. https://doi.org/10.1037/1082-989X.4.1.84.

Muller-Botti S, Maestre JM, Del Moral I, Fey M, Simon R. Linguistic validation of the debriefing assessment for simulation in healthcare in Spanish and cultural validation for 8 Spanish speaking countries. Simulation in Healthcare. 2021;16(1):13–9. https://doi.org/10.1097/SIH.0000000000000468.

Park BK. Factors influencing ehealth literacy of middle school students in Korea: A descriptive cross-sectional study. Healthcare Informatics Research. 2019;25(3):221. https://doi.org/10.4258/hir.2019.25.3.221.

Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health. 2007;30(4):459–67. https://doi.org/10.1002/nur.20199.

Roh YS, Kim M, Issenberg SB. Perceived competence and training priorities of Korean nursing simulation instructors. Clin Simul Nurs. 2019;26:54–63. https://doi.org/10.1016/j.ecns.2018.08.001.

Rudolph JW, Simon R, Dufresne RL, Raemer DB. There’s no such thing as “nonjudgmental” debriefing: a theory and method for debriefing with good judgment. Simulation in Healthcare. 2006;1(1):49–55. https://doi.org/10.1097/01266021-200600110-00006.

Watts PI, McDermott DS, Alinier G, Charnetski M, Ludlow J, Horsley E, Meakim C, Nawathe PA. Healthcare simulation standards of best practice™ simulation design. Clin Simul Nurs. 2021;58:14–21. https://doi.org/10.1016/j.ecns.2021.08.009.

World Health Organization. Process of translation and adaptation of instruments. 2021. https://www.who.int/substance_abuse/research_tools/translation/en/. Accessed 20 Jan 2023.

Acknowledgements

The authors would like to thank the participants of this study and the members of the expert panel for their support with this work, as well as Dr. Suzie Kardong-Edgren, Associate Professor of Health Professions Education at MGH Institute of Health Professions, for her review of the manuscript.

Funding

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Government of Korea (MISP No. 2016R1C1B2013649) for study design and data collection, as well as a National Research Foundation of Korea (NRF) grant funded by the Government of Korea (MSIT No. 2020R1C1C1010602) for data analysis, manuscript development, and publication.

Author information

Contributions

Seon-Yoon Chung: conceptualization, methodology, investigation, writing—original draft. Bu Kyung Park: conceptualization, methodology, investigation, resources, writing—original draft. Myoung Jin Kim: methodology, formal analysis, writing—original draft. Jenny Rudolph: writing—review and editing, supervision. Mary Fey: writing—original draft. Robert Simon: writing—DASH senior author, review of the back translation, review and editing, supervision. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was reviewed and approved by the corresponding author’s Institutional Review Board (KNU-2018–0050). The informed consent was voluntarily obtained from all participants.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Chung, SY., Park, B.K., Kim, M.J. et al. Testing reliability and validity of the Korean version of Debriefing Assessment for Simulation in Healthcare (K-DASH). Adv Simul 9, 32 (2024). https://doi.org/10.1186/s41077-024-00305-3

DOI: https://doi.org/10.1186/s41077-024-00305-3