Abstract

Background

Diabetic retinopathy (DR) is a leading cause of blindness. Our objective was to evaluate the performance of an artificial intelligence (AI) system integrated into a handheld smartphone-based retinal camera for DR screening using a single retinal image per eye.

Methods

Images were obtained by trained operators from individuals with diabetes during a mass DR screening program in Blumenau, Southern Brazil. Automatic analysis was performed with an AI system (EyerMaps™, Phelcom Technologies LLC, Boston, USA) using one macula-centered, 45-degree field-of-view retinal image per eye. The results were compared with the assessment of a retinal specialist, considered the ground truth, who graded two images per eye. Patients with ungradable images were excluded from the analysis.

Results

A total of 686 individuals (mean age 59.2 ± 13.3 years, 56.7% women, diabetes duration 12.1 ± 9.4 years) were included in the analysis. The rates of insulin use, daily glycemic monitoring, and treatment for systemic hypertension were 68.4%, 70.2%, and 70.2%, respectively. Although 97.3% of patients were aware of the risk of blindness associated with diabetes, more than half of them underwent their first retinal examination during the event. The majority (82.5%) relied exclusively on the public health system, and 43.4% were either illiterate or had not completed elementary school. DR classification based on the ground truth was as follows: absent or mild nonproliferative DR, 86.9%; more than mild (mtm) DR, 13.1%. For mtmDR, the AI system achieved a sensitivity of 93.6% (95% CI 87.8–97.2), a specificity of 71.7% (67.8–75.4), a positive predictive value of 42.7% (39.3–46.2), and a negative predictive value of 98.0% (96.2–98.9). The area under the receiver operating characteristic (ROC) curve was 86.4%.

Conclusion

The portable retinal camera combined with AI demonstrated high sensitivity for DR screening using only one image per eye, offering a simpler protocol compared to the traditional approach of two images per eye. Simplifying the DR screening process could enhance adherence rates and overall program coverage.

Background

Screening for diabetic retinopathy (DR) is a cornerstone of blindness prevention and is recommended by many countries as well as by the World Health Organization [1]. Successful screening strategies worldwide, such as the English NHS Diabetic Eye Screening Programme, are usually based on color fundus photographs (CFPs) [1]. However, preventing blindness secondary to diabetes remains an unmet need in most low- and middle-income countries [2] and also in some high-income countries: in the USA, rates of screening as low as have been reported [3].

Solutions for increasing screening rates include public health policies, health education [2], and technological breakthroughs that may render the process simpler and more cost-effective. Telemedicine protocols, handheld devices, and artificial intelligence (AI) have all been shown to increase the efficiency of screening [4]. Recently, autonomous AI systems have been granted regulatory approval for the detection of DR based on the analysis of two retinal images per eye [5, 6].

The imaging protocol for DR screening has evolved over the last decades, from the original seven-field ETDRS protocol to the now widely accepted protocol of two retinal images per eye [7]. Simpler protocols have been associated with increased adherence, ultimately contributing to a program's efficiency [7]. The challenge is to simplify the protocol without losing image quality or diagnostic accuracy. A protocol based on a single image per eye may save substantial examination time in high-burden settings such as mass screening campaigns, where more than one thousand people are screened for DR in a single morning. Such a protocol may also suit a staged mydriasis strategy: because the camera flash triggers the pupillary light reflex, the second image is harder to obtain without pharmacological mydriasis, so the ungradable rate is expected to be higher when two photographs are required.

Our objective was to evaluate the performance of a DR screening protocol that employed a single retinal photo per eye, obtained with a handheld retinal camera and evaluated by an embedded AI system.

Methods

Study design, population and setting

This retrospective study enrolled a convenience sample of individuals aged over 18 years with a previous diagnosis of type 1 or type 2 diabetes mellitus (DM) who were summoned to attend the Blumenau Diabetes Campaign, a DR screening initiative held from February to November 2021 in the city of Blumenau, Southern Brazil. The study protocol was approved by the Ethics Committee of Fundação Universidade Regional de Blumenau (#39352320.5.0000.5370) and was conducted in compliance with the Declaration of Helsinki and institutional ethics requirements. After signing informed consent, participants answered a questionnaire on demographic and self-reported clinical characteristics: age, gender, income, profession, educational level, type of diabetes, and diabetes duration. Patients then underwent ocular imaging.

Imaging acquisition and grading

The imaging acquisition protocol and expert reading are detailed elsewhere [8]. Briefly, smartphone-based handheld devices (Eyer, Phelcom Technologies LLC, Boston, MA) were used to acquire two 45° field-of-view images of the posterior segment of each eye, one macula-centered and one disc-centered, after mydriasis induced by 1% tropicamide eye drops. Image acquisition was performed at public primary care health units by a team of previously trained medical students. Human image reading was performed in a store-and-forward fashion on the EyerCloud platform (Phelcom Technologies LLC, Boston, MA) by a single retinal specialist (FMP) after anonymization and quality evaluation. This ground-truth grading was based on two images per eye. DR was classified per individual, considering the most affected eye, according to the International Council of Ophthalmology Diabetic Retinopathy (ICDR) classification. Patients with ungradable fundus images had their anterior segment evaluated for cataract or other media opacities. No information other than the ocular images was available to the reader, and the human grader was masked to the automated evaluation described below.
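The per-individual classification rule (worst eye on the ICDR scale, dichotomized into mtmDR versus absent or mild DR) can be sketched as follows. This is a minimal illustration, not the study's software. The enum and function names are hypothetical. We assume that mtmDR means moderate nonproliferative DR or worse, which matches the split used in the Abstract. The text does not say whether diabetic macular edema alone also counted as mtmDR, so it is ignored here.

```python
from enum import IntEnum


class ICDRGrade(IntEnum):
    """ICDR retinopathy severity levels, ordered from least to most severe (hypothetical names)."""
    NO_DR = 0
    MILD_NPDR = 1
    MODERATE_NPDR = 2
    SEVERE_NPDR = 3
    PDR = 4


def individual_grade(right_eye: ICDRGrade, left_eye: ICDRGrade) -> ICDRGrade:
    """Per-individual grade taken from the most affected eye."""
    return max(right_eye, left_eye)


def is_mtm_dr(grade: ICDRGrade) -> bool:
    """More than mild DR: assumed here to be moderate NPDR or worse (macular edema ignored)."""
    return grade >= ICDRGrade.MODERATE_NPDR


if __name__ == "__main__":
    grade = individual_grade(ICDRGrade.MILD_NPDR, ICDRGrade.MODERATE_NPDR)
    print(grade.name, is_mtm_dr(grade))  # MODERATE_NPDR True
```

Because `IntEnum` members compare as integers, `max` directly returns the more severe eye's grade.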

Automated detection of DR

One macula-centered image of each eye was graded by an AI system trained on the Kaggle Diabetic Retinopathy dataset (EyePACS) and fine-tuned by transfer learning on a dataset of approximately 16,000 fundus images captured with the Phelcom Eyer. Our group previously validated the system for the detection of more than mild DR (mtmDR), as described elsewhere [8]. Only individuals whose images had sufficient quality were included in the analysis. In brief, the system is a modified version of the Xception convolutional neural network (CNN). The input was changed to accept 699 × 699 × 3 RGB images, and two fully connected layers of 2100 neurons were added at the top, followed by an output layer of two neurons with softmax activation that classifies the input image. Softmax normalizes the input values of these output neurons into a probability distribution that sums to 1. The prediction therefore lies between 0 and 1 and indicates the likelihood of DR.
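To illustrate the softmax step and how a probability is turned into a screening call, the sketch below converts a pair of output-layer values into a DR probability. It makes two hypothetical assumptions: index 1 of the two-neuron output is the DR class, and a probability at or above the 0.3 operating point (stated under Statistical analysis) counts as positive. The network itself is not reproduced, and the function names are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating; outputs sum to 1."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dr_probability(logits):
    """Probability of the DR class; assumes output index 1 is 'DR' and index 0 is 'no DR'."""
    return softmax(logits)[1]


def is_positive(logits, threshold=0.3):
    """Positive (referable) call when the DR probability reaches the operating point."""
    return dr_probability(logits) >= threshold


if __name__ == "__main__":
    # Hypothetical output-layer values for two images.
    for logits in ([1.0, 0.0], [0.5, 0.0]):
        p = dr_probability(logits)
        print(f"{logits} -> p(DR)={p:.3f}, positive={is_positive(logits)}")
```

With these made-up values, the first image gets p(DR) ≈ 0.27 and is negative. The second gets p(DR) ≈ 0.38 and is flagged, even though "no DR" still has the larger probability. This is how an operating point below 0.5 favors sensitivity over specificity.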

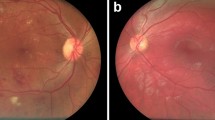

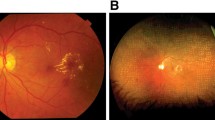

To visualize the image regions most important to the CNN for discriminating between classes, Gradient-weighted Class Activation Mapping (GradCam) was used. It generates a heat map (EyerMaps, Phelcom Technologies LLC, Boston, MA) that highlights the detected changes (Fig. 1).

Fig. 1. Example of heatmap visualization. (A, C and E) Color fundus photographs depicting clinical signs of diabetic retinopathy, such as hard exudates and hemorrhages. (B, D and F) The heatmap overlay can help identify lesions, flagged on a color scale from blue (low importance) to red (high importance).
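Once a deep learning framework has produced two things, the Grad-CAM computation itself is short. Those inputs are the activations of a convolutional layer and the gradients of the DR class score with respect to those activations. The sketch below follows the published Grad-CAM recipe on plain nested lists: average-pooled gradients serve as channel weights, the feature maps are summed with those weights, and a ReLU is applied. It is not the EyerMaps implementation, which is not described here. The choice of layer, the upsampling to 699 × 699 pixels, and the color overlay are omitted. The function name is hypothetical.

```python
def grad_cam(feature_maps, gradients):
    """Grad-CAM map for one convolutional layer, scaled to [0, 1].

    feature_maps, gradients: K channels, each an H x W nested list of floats, where
    gradients[k][i][j] is d(DR class score) / d(feature_maps[k][i][j]).
    """
    num_channels = len(feature_maps)
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel importance weights: global average pooling of the gradients.
    weights = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(num_channels)
    ]
    # Weighted sum of the feature maps; the ReLU keeps only evidence in favor of the class.
    cam = [
        [max(0.0, sum(weights[k] * feature_maps[k][i][j] for k in range(num_channels)))
         for j in range(width)]
        for i in range(height)
    ]
    # Normalize so the map can be rendered on a blue (low) to red (high) color scale.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam
```

In practice, the low-resolution map returned here would be resized to the fundus image and blended with it to produce overlays like those in Fig. 1.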

Statistical analysis

Data were collected in MS Excel 2010 files (Microsoft Corporation, Redmond, WA, USA). Statistical analyses were performed using SPSS 19.0 for Windows (SPSS Inc., Chicago, IL, USA). Participants' characteristics and quantitative variables are presented as mean and standard deviation (SD). Sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and their 95% confidence intervals (CIs) were calculated for the device outputs (no or mild DR versus mtmDR). These outputs were compared against the corresponding reference standard classifications, with human reading as the ground truth. Expert reading was based on two retinal images per eye, whereas the AI output considered only a single, macula-centered image per eye. A threshold of 0.3 was chosen as the operating point (see Supplementary Material). Diagnostic accuracy is reported according to the Standards for Reporting of Diagnostic Accuracy Studies (STARD) [9].
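The comparison described above works as follows: each individual's AI probability is dichotomized at 0.3 and cross-tabulated against the specialist's mtmDR grade, and each metric is reported with a 95% CI. The sketch below implements these steps. The paper does not state which interval method was used. The Wilson score interval here is a swapped-in choice, so its intervals may differ slightly from the published ones (for example, from exact Clopper-Pearson intervals). All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # AI mtmDR, specialist mtmDR
    fp: int  # AI mtmDR, specialist absent/mild DR
    fn: int  # AI absent/mild DR, specialist mtmDR
    tn: int  # AI absent/mild DR, specialist absent/mild DR


def tabulate(scores, labels, threshold=0.3):
    """Cross-tabulate AI probabilities (dichotomized at threshold) against specialist labels."""
    cm = ConfusionMatrix(0, 0, 0, 0)
    for score, has_mtm_dr in zip(scores, labels):
        predicted = score >= threshold
        if predicted and has_mtm_dr:
            cm.tp += 1
        elif predicted:
            cm.fp += 1
        elif has_mtm_dr:
            cm.fn += 1
        else:
            cm.tn += 1
    return cm


def wilson_ci(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (about 95% for z = 1.96)."""
    if total == 0:
        raise ValueError("empty denominator")
    p = successes / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


def diagnostic_metrics(cm):
    """Map each metric name to (point estimate, CI lower bound, CI upper bound)."""
    counts = {
        "sensitivity": (cm.tp, cm.tp + cm.fn),
        "specificity": (cm.tn, cm.tn + cm.fp),
        "ppv": (cm.tp, cm.tp + cm.fp),
        "npv": (cm.tn, cm.tn + cm.fn),
    }
    return {name: (k / n, *wilson_ci(k, n)) for name, (k, n) in counts.items()}
```

Keeping the tabulation separate from the interval calculation makes it easy to substitute a different CI method without touching the counts.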

Results

Digital fundus photographs were obtained for both eyes of 817 individuals, 131 of whom (16%) could not be automatically analyzed because of insufficient image quality. The remaining 686 individuals (mean age 59.2 ± 13.3 years, 56.7% women) met the inclusion criteria and had their images analyzed by the automatic system. Diabetes duration was 12.1 ± 9.4 years. Rates of insulin use, daily glycemic monitoring, and treatment for systemic hypertension were 68.4%, 70.2%, and 70.2%, respectively. Even though 97.3% of patients knew about the risk of blindness due to diabetes, 52.3% underwent their first retinal examination during the event. The majority (82.5%) relied exclusively on the public health system, and 43.4% were illiterate or had not completed elementary school. DR classification according to the ground truth was as follows (Table 1): absent, 68.1%; mild nonproliferative (NP) DR, 19.1%; moderate NPDR, 6.8%; severe NPDR, 2.0%; proliferative DR, 4.5%. Diabetic macular edema was detected in 7.7%.

Artificial intelligence system performance

Against the human grading standard, the sensitivity and specificity of the device for detecting mtmDR were 93.6% (95% CI 87.9–97.2) and 71.8% (95% CI 67.9–75.4), respectively. The confusion matrix is presented in Table 2. Figure 2 depicts the STARD diagram for the algorithm's mtmDR output. PPV and NPV for mtmDR were 42.7% (39.3–46.2) and 98.0% (96.2–98.9), respectively. The area under the receiver operating characteristic (ROC) curve was 0.86.
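The area under the ROC curve can be read as the probability that a randomly chosen individual with mtmDR receives a higher AI score than a randomly chosen individual without it. The sketch below computes it in that pairwise (Mann-Whitney) form, counting ties as one half. It is an illustration with a hypothetical function name, not the SPSS procedure used in the study.

```python
def roc_auc(scores, labels):
    """AUC as P(score of an mtmDR case > score of a non-mtmDR case); ties count 1/2."""
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

The double loop is quadratic, which is negligible for a sample of a few hundred individuals. Because the result depends only on the ranking of scores, it does not depend on the chosen operating point.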

Discussion

We report the results of automatic analysis for the detection of mtmDR from a single retinal image per eye, obtained with a portable smartphone-based retinal camera. A high sensitivity (91.27%) had previously been described for algorithmic evaluation of a two-image protocol with the same device [8]. The high sensitivity of the embedded AI system in our real-world sample compares well with previous reports on other automated systems [5, 10, 11]. The first two AI systems approved by the FDA for DR screening, IDx-DR [5] and EyeArt [6], rely on protocols of two retinal images per eye acquired with traditional tabletop retinal cameras. A recent real-world validation of seven AI systems for DR screening, based on protocols of two retinal images per eye, found sensitivities ranging from 50.98% to 85.90% and specificities from 60.42% to 83.69% [11].

Portable, handheld, low-cost retinal cameras have the potential to broaden the reach of DR screening programs, covering wider geographic areas and reaching populations that would otherwise not be screened by traditional methods [12]. These features may increase a program's efficiency through higher coverage and adherence. A handheld device with integrated AI analysis, used with four fundus images per eye, has been reported to achieve a sensitivity of 95.8% and a specificity of 80.2% for the detection of any DR [13]. Another recent study used a handheld device and the same AI system, but with a protocol of five fundus images per eye. It reported a sensitivity of 87.0% and a specificity of 78.6% for the detection of referable DR [14].

We studied the performance of AI on a protocol based on a single fundus image per eye. For expert human reading, a single-image protocol has been shown to lose diagnostic accuracy compared with a two-image protocol [15]. With automatic reading, however, performance was considered satisfactory for screening, with the clear advantage of obtaining only one image per eye. Efforts to make the process easier and less time-consuming are warranted to increase efficiency. Interestingly, the macula-centered region has been considered the most important region for deep learning systems evaluating DR [16]. Our diagnostic accuracy was comparable to that reported by Nunez do Rio and colleagues [17]. Their deep learning algorithm for detecting referable DR from one retinal image per eye achieved a sensitivity of 72.08% and a specificity of 85.65%, values corresponding to our results at a threshold of 0.85 (see Supplementary Material 1). The performance of our strategy also compares well with that of trained human readers analyzing one image per eye [18].
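The comparison with Nunez do Rio and colleagues relies on the fact that a single continuous AI output supports many operating points. Raising the threshold from 0.3 toward 0.85 trades sensitivity for specificity. The sketch below illustrates this with hypothetical function names and made-up data. It computes sensitivity and specificity at a given threshold, then picks the highest (most specific) threshold that still meets a minimum sensitivity. The study's actual values at each threshold are in its Supplementary Material.

```python
def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    tp = fn = tn = fp = 0
    for score, has_mtm_dr in zip(scores, labels):
        positive = score >= threshold
        if has_mtm_dr:
            tp += int(positive)
            fn += int(not positive)
        else:
            fp += int(positive)
            tn += int(not positive)
    return tp / (tp + fn), tn / (tn + fp)


def most_specific_threshold(scores, labels, thresholds, min_sensitivity):
    """Highest candidate threshold whose sensitivity still meets the target, or None.

    Sensitivity can only fall as the threshold rises, so the last qualifying
    threshold in ascending order is also the most specific one.
    """
    best = None
    for threshold in sorted(thresholds):
        sensitivity, _ = sens_spec(scores, labels, threshold)
        if sensitivity >= min_sensitivity:
            best = threshold
    return best
```

Fixing a sensitivity floor first and then maximizing specificity mirrors the priority of a screening program, where missed cases are costlier than extra referrals.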

Compared with other algorithms, our system has a relatively low specificity of 71.8% (95% CI 67.9–75.4). However, this is not an autonomous system: retina specialists review the images before referring patients for an in-person evaluation. This approach helps minimize costs while improving the specificity of the method, since specialist evaluation is reserved for the patients who truly require it. In line with this strategy, Xie et al. demonstrated that assistive, non-autonomous systems are more cost-effective than purely autonomous systems [19]. Improvements in the algorithm may increase its specificity without sacrificing its main characteristic as a high-sensitivity method for mass screening programs.

Another important aspect is pupil status during retinal imaging, which may affect both the number of ungradable images and AI performance. Piyasena et al. reported that the proportion of ungradable images was 18.6% in non-mydriatic settings, compared with 6.2% in mydriatic settings [20]. The present study was conducted in a mass screening program, and pupil dilation was performed to ensure faster image acquisition. Pupil dilation does reduce the number of ungradable images and may improve algorithm performance. One alternative is staged mydriasis, in which only patients whose undilated images are of insufficient quality have their pupils dilated.

The PPV in the present study was 42.7%, and the NPV was 98.1%. For screening purposes, a high NPV is important to ensure that negative cases indeed do not have DR, whereas a low threshold for unclear cases may lead to a low PPV [11]. A recent real-world validation of seven AI systems for DR screening, based on protocols of four retinal images per eye, found PPVs ranging from 36.46% to 50.80% and NPVs from 82.72% to 93.69% [11]. The heterogeneous distribution of PPVs across datasets has been attributed to differences in disease prevalence, on the basis of Bayes' theorem: sites with higher prevalence had higher PPVs [11]. Of note, most patients in our sample (68%) had no signs of disease, which may account for the relatively low PPV.
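The prevalence argument follows directly from Bayes' theorem: with sensitivity and specificity held fixed, PPV rises and NPV falls as prevalence increases. The sketch below computes both predictive values from sensitivity, specificity, and prevalence. It is a minimal illustration with a hypothetical function name, and the prevalences in the usage example are illustrative, not study data.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) via Bayes' theorem, using expected proportions of the population."""
    tp = sensitivity * prevalence              # diseased and test-positive
    fp = (1 - specificity) * (1 - prevalence)  # healthy but test-positive
    fn = (1 - sensitivity) * prevalence        # diseased but test-negative
    tn = specificity * (1 - prevalence)        # healthy and test-negative
    return tp / (tp + fp), tn / (tn + fn)


if __name__ == "__main__":
    # Sensitivity and specificity as reported for mtmDR; prevalences are illustrative only.
    for prevalence in (0.10, 0.20, 0.30):
        ppv, npv = predictive_values(0.936, 0.718, prevalence)
        print(f"prevalence {prevalence:.2f}: PPV {ppv:.2f}, NPV {npv:.3f}")
```

With these inputs, the PPV rises from about 0.27 to about 0.59 across the three prevalences, while the NPV falls only from about 0.99 to about 0.96. This is why a sample dominated by disease-free individuals tends to show a modest PPV alongside a very high NPV.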

Even though the screening was performed in a state with the third-highest human development index (HDI) in the country [21], over half of the participants had their first fundus evaluation during this initiative, despite a diabetes duration of 12.1 ± 9.4 years. This shows that access to healthcare is lacking even in such high-ranking settings of a middle-income country. Brazil is considered to have the sixth-largest population of individuals with diabetes worldwide [22]. As a country of continental dimensions, it also has heterogeneous social and economic realities. For example, data collected in Blumenau (Southern Brazil) differ markedly from data collected in Itabuna, in Bahia state (Northeastern Brazil, ranked 22nd for HDI), in the health profile of patients who underwent DR screening. The present sample from Blumenau consisted of 686 individuals aged 59.2 ± 13.3 years with a mean diabetes duration of 12.1 years. Insulin use was reported by 68.4%, more than mild DR was present in 12.8%, and educational level was up to elementary school in 43.3%. In contrast, a sample of 940 individuals with diabetes from Itabuna was aged 60.8 ± 11.4 years, with a mean diabetes duration of 10.4 years. Insulin use was reported by 25.8%, more than mild DR was present in 25.7%, and educational level was up to elementary school in 54.4% [15].

Although the southern region is one of the most developed in Brazil, and the municipality of Blumenau has one of the highest HDI levels nationwide, access to early detection of diabetic retinopathy remains highly limited. The need for mass screening programs highlights the population's lack of access to DR evaluation. Thus, implementing mass screening programs, and potentially incorporating regular and continuous assessment with portable cameras in primary healthcare facilities, could help decrease waiting times and improve access. This approach could serve as an effective strategy to reduce diabetes-related blindness.

We believe the main strength of this study is that it presents an automatic system with the potential to achieve high sensitivity for DR screening from a single retinal image per eye. Of note, the sensitivity attained was higher than the pre-specified endpoint for FDA approval of an automatic DR screening system [5]. As a next step, a DR screening program deploying this tool could acquire a second fundus image per eye only for detected cases. This would make the screening process simpler for most patients, who would need only one image; further studies are needed to investigate this hypothesis.
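The two-step idea above can be sketched as per-eye decision logic, possibly combined with the staged mydriasis strategy discussed earlier. This is a hypothetical workflow that the study did not evaluate. `capture` is a stand-in for acquiring an image of a given field and scoring it with the AI; it returns None when the image is ungradable. The 0.3 operating point is reused from the Methods, and all names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical callback: capture(field, dilated) acquires an image of the given field
# ("macula" or "disc") and returns the AI probability of DR, or None if ungradable.
CaptureFn = Callable[[str, bool], Optional[float]]


@dataclass
class EyeOutcome:
    status: str        # "negative", "refer_to_specialist" or "ungradable"
    images_taken: int  # every capture attempt, including a retake after dilation
    dilated: bool


def screen_eye(capture: CaptureFn, threshold: float = 0.3) -> EyeOutcome:
    """Single macula-centered image first; escalate only when needed."""
    images, dilated = 1, False
    score = capture("macula", dilated)
    if score is None:
        # Staged mydriasis: dilate only when the undilated image is ungradable.
        dilated = True
        images += 1
        score = capture("macula", dilated)
    if score is None:
        return EyeOutcome("ungradable", images, dilated)
    if score < threshold:
        return EyeOutcome("negative", images, dilated)
    # AI-positive: add the disc-centered image for the specialist's review;
    # its AI score is not used in this sketch.
    capture("disc", dilated)
    return EyeOutcome("refer_to_specialist", images + 1, dilated)
```

Under this design, an AI-negative eye with a gradable undilated image needs only one photograph. Extra images and dilation are reserved for the minority of eyes that need them.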

Our study has several limitations, the most notable of which is that human grading was performed by a single specialist, a potential source of bias. Additionally, automatic evaluation was performed only on images of sufficient quality. This partially limits the applicability of our conclusions to real-world settings, where a considerable proportion of patients have ungradable images, mainly because of cataract. Furthermore, diabetic maculopathy was not evaluated with gold-standard methods; instead, its presence was inferred from non-stereoscopic images. Finally, the lack of comprehensive clinical and laboratory data is also a limitation of the current study.

This study presents a new concept: a single-image approach for diabetic retinopathy screening. However, because of its methodological limitations, particularly the reliance on a single evaluator, its results should be interpreted with caution. A high-sensitivity protocol was obtained for DR screening with a portable retinal camera and automatic analysis of only one image per eye. Further studies are needed to clarify whether this simpler strategy, compared with the traditional protocol of two images per eye, could contribute to superior patient outcomes, including increased adherence rates and greater overall efficacy of DR screening programs.

Data Availability

Not applicable.

Abbreviations

- AI: Artificial intelligence
- CFPs: Color fundus photographs
- CNN: Convolutional neural network
- CIs: Confidence intervals
- DM: Diabetes mellitus
- DR: Diabetic retinopathy
- GradCam: Gradient-weighted Class Activation Mapping
- ICDR: International Council of Ophthalmology Diabetic Retinopathy
- mtm: More than mild
- NPV: Negative predictive value
- NP: Non-proliferative
- PPV: Positive predictive value
- SD: Standard deviation

References

1. Scanlon PH. The contribution of the English NHS Diabetic Eye Screening Programme to reductions in diabetes-related blindness, comparisons within Europe, and future challenges. Acta Diabetol. 2021 Apr;58(4):521–30. https://doi.org/10.1007/s00592-021-01687-w. Epub 2021 Apr 8. PMID: 33830332; PMCID: PMC8053650.

2. Malerbi FK, Melo GB. Feasibility of screening for diabetic retinopathy using artificial intelligence, Brazil. Bull World Health Organ. 2022 Oct 1;100(10):643–7. https://doi.org/10.2471/BLT.22.288580. Epub 2022 Aug 22. PMID: 36188015; PMCID: PMC9511671.

3. Song A, Lusk JB, Roh KM, et al. Practice Patterns of Fundoscopic Examination for Diabetic Retinopathy Screening in Primary Care. JAMA Netw Open. 2022 Jun 1;5(6):e2218753. https://doi.org/10.1001/jamanetworkopen.2022.18753. PMID: 35759262; PMCID: PMC9237789.

4. Ruamviboonsuk P, Tiwari R, Sayres R, et al. Real-time diabetic retinopathy screening by deep learning in a multisite national screening programme: a prospective interventional cohort study. Lancet Digit Health. 2022 Apr;4(4):e235–44. https://doi.org/10.1016/S2589-7500(22)00017-6. Epub 2022 Mar 7. PMID: 35272972.

5. Abràmoff MD, Lavin PT, Birch M, et al. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med. 2018;1:39. https://doi.org/10.1038/s41746-018-0040-6.

6. Ipp E, Liljenquist D, Bode B, et al.; EyeArt Study Group. Pivotal Evaluation of an Artificial Intelligence System for Autonomous Detection of Referrable and Vision-Threatening Diabetic Retinopathy. JAMA Netw Open. 2021 Nov 1;4(11):e2134254. https://doi.org/10.1001/jamanetworkopen.2021.34254. Erratum in: JAMA Netw Open. 2021 Dec 1;4(12):e2144317. PMID: 34779843; PMCID: PMC8593763.

7. Huemer J, Wagner SK, Sim DA. The evolution of Diabetic Retinopathy Screening Programmes: a chronology of retinal photography from 35 mm slides to Artificial Intelligence. Clin Ophthalmol. 2020 Jul 20;14:2021–35. https://doi.org/10.2147/OPTH.S261629. PMID: 32764868; PMCID: PMC7381763.

8. Malerbi FK, Andrade RE, Morales PH, et al. Diabetic Retinopathy Screening using Artificial Intelligence and Handheld Smartphone-Based retinal camera. J Diabetes Sci Technol. 2022 May;16(3):716–23. https://doi.org/10.1177/1932296820985567. Epub 2021 Jan 12. PMID: 33435711; PMCID: PMC9294565.

9. Šimundić AM. Measures of Diagnostic Accuracy: Basic Definitions. EJIFCC. 2009 Jan 20;19(4):203–11. PMID: 27683318; PMCID: PMC4975285.

10. Verbraak FD, Abramoff MD, Bausch GCF, et al. Diagnostic accuracy of a device for the automated detection of diabetic retinopathy in a primary care setting. Diabetes Care. 2019;42:651–6.

11. Lee AY, Yanagihara RT, Lee CS, et al. Multicenter, Head-to-Head, real-world validation study of seven automated Artificial Intelligence Diabetic Retinopathy Screening Systems. Diabetes Care. 2021 May;44(5):1168–75. https://doi.org/10.2337/dc20-1877. Epub 2021 Jan 5. PMID: 33402366; PMCID: PMC8132324.

12. Salongcay RP, Aquino LAC, Salva CMG, et al. Comparison of Handheld Retinal Imaging with ETDRS 7-Standard Field Photography for Diabetic Retinopathy and Diabetic Macular Edema. Ophthalmol Retina. 2022 Jul;6(7):548–56. Epub 2022 Mar 9. PMID: 35278726.

13. Rajalakshmi R, Subashini R, Anjana RM, Mohan V. Automated diabetic retinopathy detection in smartphone-based fundus photography using artificial intelligence. Eye (Lond). 2018 Jun;32(6):1138–44. https://doi.org/10.1038/s41433-018-0064-9. Epub 2018 Mar 9. PMID: 29520050; PMCID: PMC5997766.

14. Kim TN, Aaberg MT, Li P, et al. Comparison of automated and expert human grading of diabetic retinopathy using smartphone-based retinal photography. Eye (Lond). 2021 Jan;35(1):334–42. https://doi.org/10.1038/s41433-020-0849-5. Epub 2020 Apr 27. PMID: 32341536; PMCID: PMC7852658.

15. Malerbi FK, Morales PH, Farah ME, et al.; Brazilian Type 1 Diabetes Study Group. Comparison between binocular indirect ophthalmoscopy and digital retinography for diabetic retinopathy screening: the multicenter brazilian type 1 diabetes study. Diabetol Metab Syndr. 2015 Dec 21;7:116. https://doi.org/10.1186/s13098-015-0110-8. PMID: 26697120; PMCID: PMC4687381.

16. Bora A, Balasubramanian S, Babenko B, et al. Predicting the risk of developing diabetic retinopathy using deep learning. Lancet Digit Health. 2021 Jan;3(1):e10–9. https://doi.org/10.1016/S2589-7500(20)30250-8. Epub 2020 Nov 26. PMID: 33735063.

17. Nunez do Rio JM, Nderitu P, Bergeles C, et al. Evaluating a Deep Learning Diabetic Retinopathy Grading System developed on mydriatic retinal images when Applied to Non-Mydriatic Community Screening. J Clin Med. 2022 Jan 26;11(3):614. https://doi.org/10.3390/jcm11030614. PMID: 35160065; PMCID: PMC8836386.

18. Srinivasan S, Shetty S, Natarajan V, et al. Development and Validation of a Diabetic Retinopathy Referral Algorithm Based on Single-Field Fundus Photography. PLoS One. 2016 Sep 23;11(9):e0163108. https://doi.org/10.1371/journal.pone.0163108. PMID: 27661981; PMCID: PMC5035083.

19. Xie Y, Nguyen QD, Hamzah H, et al. Artificial intelligence for teleophthalmology-based diabetic retinopathy screening in a national program: an economic analysis modelling study. Lancet Digit Health. 2020 May;2(5):e240–9. https://doi.org/10.1016/S2589-7500(20)30060-1. Epub 2020 Apr 23.

20. Piyasena MMPN, Murthy GVS, Yip JLY, et al. Systematic review and meta-analysis of diagnostic accuracy of detection of any level of diabetic retinopathy using digital retinal imaging. Syst Rev. 2018;7(1):182. https://doi.org/10.1186/s13643-018-0846-y.

21. http://www.atlasbrasil.org.br/ranking (accessed April 15, 2023).

22. Magliano DJ, Boyko EJ; IDF Diabetes Atlas 10th edition scientific committee. IDF Diabetes Atlas. Brussels: International Diabetes Federation; 2022. https://www.ncbi.nlm.nih.gov/pubmed/35914061.

Acknowledgements

We thank Associação Filosófica e Beneficente Justiça e Trabalho, Lions Clube Blumenau, Associação Renal Vida and Prefeitura Municipal de Blumenau for their support in this project.

Funding

No financial support.

Author information


Contributions

All authors participated in data collection, manuscript preparation, and critical revision of the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

The study was performed with the approval of the Research Ethics Committee of FURB (Fundação Universidade Regional de Blumenau) under protocol number 39352320.5.0000.5370 and complied with the guidelines of the Declaration of Helsinki.

Consent for publication

It is a retrospective cohort; no consent could be obtained.

Competing interests

Jose Augusto Stuchi, Diego Lencione, Paulo Victor de Souza Prado and Fernando Yamanaka work at Phelcom Technologies, São Carlos (SP), Brazil, which is related to this publication. Fernando Korn Malerbi has received consulting fees from Phelcom Technologies. Fernando Lojudice is an employee of Bayer Healthcare – Brazil, São Paulo (SP), Brazil, but has nothing to disclose regarding this publication.

Fernando Penha serves as Associate Editor of this journal; however, this article was independently handled by a member of the Editorial Board.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Penha, F.M., Priotto, B.M., Hennig, F. et al. Single retinal image for diabetic retinopathy screening: performance of a handheld device with embedded artificial intelligence. Int J Retin Vitr 9, 41 (2023). https://doi.org/10.1186/s40942-023-00477-6


DOI: https://doi.org/10.1186/s40942-023-00477-6