Abstract

Purpose

Dynamic PET is an essential tool in oncology due to its ability to visualize and quantify radiotracer uptake, which has the potential to improve imaging quality. However, image noise caused by a low photon count in dynamic PET is more significant than in static PET. This study aims to develop a novel denoising method, namely the Guided Block Matching and 4-D Transform Domain Filter (GBM4D) projection, to enhance dynamic PET image reconstruction.

Methods

The sinogram was first transformed using the Anscombe method, then denoised using a combination of hard thresholding and Wiener filtering. Each denoising step involved guided block matching and grouping, collaborative filtering, and weighted averaging. The guided block matching was performed on accumulated PET sinograms to prevent mismatching due to low photon counts. The performance of the proposed denoising method (GBM4D) was compared to other methods such as wavelet, total variation, non-local means, and BM3D using computer simulations on the Shepp–Logan and digital brain phantoms. The denoising methods were also applied to real patient data for evaluation.

Results

In all phantom studies, GBM4D outperformed other denoising methods in all time frames based on the structural similarity and peak signal-to-noise ratio. Moreover, GBM4D yielded the lowest root mean square error in the time-activity curve of all tissues and produced the highest image quality when applied to real patient data.

Conclusion

GBM4D demonstrates excellent denoising and edge-preserving capabilities, as validated through qualitative and quantitative assessments of both temporal and spatial denoising performance.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Introduction

Positron emission tomography (PET) is a non-invasive imaging technique that collects information on the annihilation of positrons and electrons via high-energy photon detection. When used in conjunction with 2-deoxy-2-[18F]fluoro-D-glucose (FDG), PET is widely recognized as a valuable tool for detecting pathological changes in various applications, including neurology, cardiology, and oncology [1]. Dynamic PET is particularly valuable in oncology due to its ability to visualize and quantify radiotracer uptake [2]. Additionally, dynamic PET enables analysis by the time activity curve (TAC) based on the compartment model [3]. However, compared to static PET, dynamic PET images suffer from higher noise levels in single voxels, which poses a significant challenge to denoising techniques [2]. Noise can be reduced by reconstruction methods, such as the iterative-based ordered subset expectation maximization (OSEM) reconstruction method, but excessive blurring may occur with an increase in the number of subsets and iteration times [4]. Furthermore, the PET denoising algorithm helps to obtain images at the same noise level with reduced injection activity and scanning time. Thus, the development of denoising algorithms suitable for PET imaging is an active area of research. Common denoising techniques such as Gaussian, median, and Wiener filters are not very effective in reducing noise in PET images due to spillover activity or low efficiency [5]. To address this, various edge-preserving or non-local algorithms have been proposed to denoise the PET images, mostly post-reconstruction, including bilateral filtering, wavelet-based techniques, guided image filtering, temporal and spatiotemporal smoothing techniques, and non-local filters like non-local mean (NLM) and block-matching 3D (BM3D) along with its higher dimensional form BM4D and BM5D [6,7,8,9,10,11,12,13,14,15,16,17]. Combined with anatomical information, the performance of various filters can be further improved [18,19,20,21].

BM3D is conducted by block matching followed by 3-D transform domain filtering. The block-matching process generates grouped fragments by collecting similar patches and stacking them into a 3-D group. BM3D exploits the similarity between the blocks to enhance the sparsity so that the transformed coefficients of grouped fragments can be better shrunk. The similarity between small blocks at the different spatial and temporal positions in PET images is common, which motivates the use of grouping and collaborative filtering for PET images, especially dynamic PET images [9, 10]. Ote et al. [8] proposed a post-reconstruction kinetics-induced BM5D filtering to denoise dynamic PET images. Radioactive decay is well-modeled as a Poisson process. Block matching and transform-domain collaborative filtering based on Anscombe transformation has been proven successful in denoising images with Poisson noise [22]. However, the reconstructed is decidedly non-Poisson [23].

Given the unique characteristics of dynamic PET images, simplifying the block-matching process using an accumulative activity map, as outlined in this work, can be highly beneficial. This involves performing block matching in 2D on an accumulative sinogram (referred to as guide image in this work), allowing corresponding 3D dynamic sinogram blocks to be easily grouped and stacked into 4D stacks, which reduces the likelihood of mismatching and minimizes computational costs associated with grouping. By stacking similar patches and filtering in the 4-D transform domain of the dynamic PET sinogram, the sparsity of transformed coefficients of the patches can be further reduced based on kinetic information in sinograms, enabling TAC denoising and spatial denoising to be conducted simultaneously.

Here, we developed guided block matching and 4-D transform domain filter (GBM4D) projection denoising method for dynamic PET image reconstruction. To evaluate the performance of the GBM4D algorithm, a simulation sinogram of two digital phantoms and a clinical head PET sinogram was included in this work for quantitative and qualitative evaluation.

Methods

Algorithm

Overview of GBM4D

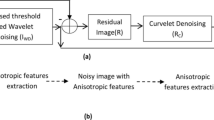

The noise of dynamic PET sinogram can be well-modeled as Poisson distribution. BM3D and BM4D methods are designed for Gaussian noise. Thus, the generalization Anscombe transform was first applied to the sinogram. The general procedure of GBM4D is demonstrated in Fig. 1.

The final estimation is obtained by inversed Anscombe transformation of GBM4D filtered sinogram. The algorithm consists of two steps: hard thresholding step and Wiener filtering step. Each of the processes consists of block-matching and collaborative filtering by shrinkage in a 4-D transform domain as follows:

-

Find blocks that are similar to the reference block in the cumulative PET sinogram. 2-D blocks at the corresponding spatial position in each scanning frame are stacked together to generate 3-D sinogram blocks. All similar blocks were stacked together to form a 4-D array (group).

-

Perform collaborative filtering of the group, then aggregate the sinograms by returning the filtered 3-D blocks to the original position.

Detailed of the Anscombe transformation and denoising Step 1 and Step 2 in Fig. 1 will be described in the following sections.

Guided block matching and grouping in Step 1

Considering Poisson noise in noisy sinogram \(z:X, T \to {\mathbb{R}}\) as the form

where \({\mathcal{P}}\) is independent random Poisson distribution, and y is the true sinogram. The Anscombe transformation was first conducted on the sinogram before Step 1. In this case, only pure Poisson noise is considered. After denoising process (both Step 1 and Step 2 in Fig. 1), inverse Anscombe exact unbiased transformation were conducted using validated database to avoid biased inverse result in low-count Poisson image [22].

After general Anscombe transformation, it is reasonable to assume that the noisy sinogram \(z:X, T \to {\mathbb{R}}\) as the form

where \(x\) is the 2-D spatial position of the sinogram \(X \subset {\mathbb{Z}}^{2}\), t is the temporal position of dynamic sinograms \(T \subset {\mathbb{R}}^{ + }\) and \(\eta \left( \cdot \right)\sim N\left( {0,\sigma^{2} } \right)\). In the block-matching process, the similarity of two blocks were measured by the inverse of the \(\ell^{2}\)-distance. If the true image y were available, the block distance would be measured as:

where \(\left\| \cdot \right\|_{2}\) denotes the \(\ell^{2}\)-norm, and the blocks \(Z_{{x_{R} }}^{t} \;{\text{and}}\;Z_{x}^{t}\) are in \(z\) and are located at \(x_{R}\) and \(x \in X\) at time \(t \in T\) and \(x_{R}\) are located at the reference position, and blocks \(Y_{{x_{R} }}^{t} { }\;{\text{and}}\;{ }Y_{x}^{t}\) are located at \(x_{R}\) and \(x \in X\) at time \(t \in T\) in \(y\), N denotes the block size. In realistic situations, only noisy blocks \(Z_{{x_{R} }}^{t} { }\;{\text{and}}\; Z_{x}^{t}\) are available. Therefore, the distance is estimated as:

The distance is a non-central chi-square random variable with expectation

and variance

The variance grows asymptotically with \({\mathcal{O}}\left( {\sigma^{4} } \right)\). For dynamic PET sinograms, the noise in each frame is relatively large compared with accumulative sinograms due to fewer photon counts in each frame. For larger \(\sigma\), the probability densities of different \(\hat{d}\left( {Z_{{x_{R} }}^{t} ,{ }Z_{x}^{t} } \right)\) might overlap heavily. Such mismatches can worsen the sparsity in the 4-D groups, which may lead to inefficiency in the collaborative filtering process. Previous work used coarse prefiltering to avoid such mismatch [8, 9], which is realized by linear transform on blocks and hard-thresholding. In this work, coarse prefiltering was applied to avoid mismatch along with the introduction of guide image (accumulated transformed PET sinograms). The distance after coarse prefiltering can be written as:

where \(\Upsilon^{\prime }\) is the hard-thresholding operator and \({\mathcal{T}}\) is the linear transformation and

As stated previously, the transformed accumulated PET sinogram has smaller noise compared with each dynamic PET sinogram frame. Therefore, we used accumulated transformed PET sinograms as guide images. For Step 1, the blocking set at \(x_{R}\), \(S_{{x_{R} }}\), generated by block matching contains the blocks in each frame at \(x_{R}\) and \(x\) where \(Z_{{x_{R} }}\) and \(Z_{x}\) of guide image is similar:

where \(\tau_{{{\text{match}}}}\) is the maximum \(\hat{d}\left( {Z_{{x_{R} }} , Z_{x} } \right)\) for which the block is considered similar to reference block. The block group is formed base on \(S_{{x_{R} }}\) by stacking \(Z_{{xS_{{x_{R} }} }}^{t}\) into a 4-D array. The array is of size \(N \times N \times \left| T \right| \times \left| {S_{{x_{R} }} } \right|\).

Collaborative filtering using hard-thresholding in Step 1

The collaborative filtering of \(Z_{{x\varepsilon S_{{x_{R} }} }}^{t}\) is conducted in 4-D domain using hard-thresholding in Step 1 in Fig. 1. This filtering can maintain good sparsity while obtaining the information of the correlation 1) between the pixels of a single block 2) between the pixels at the corresponding spatial position in grouped blocks 3) between the pixels at the corresponding temporal position in grouped blocks.

Similar 3-D patches were stacked to form 4-D patches to conduct collaborative filtering. For BM3D, denoising takes advantage of the sparsity in the spectrum of 3-D similar block groups. As demonstrated in Fig. 2, the sparsity of the 3-D block spectrum is enhanced by introducing kinetic information since the temporal correlation in the signals is also considered in GBM4D. The hard-thresholding filtering in the 4-D domain is expressed as:

Example of a 3-D spectrum of a group in BM3D method performed on a single sinogram frame of dynamic PET transformed by 3-D linear transform b 4-D spectrum of the group in GBM4D method performed on a dynamic PET sinogram transformed by 4-D linear transform. The 4-D spectrum is sparser than the 3-D spectrum

\({\mathcal{T}}_{4D}\) and \({\mathcal{T}}_{4D}^{ - 1}\) are the normalized 4-D linear transform and inverse transform. In this work, 3-D DCT in spatial and temporal domain followed by 1D DCT transform in group direction and its inverse transform are applied. \({\Upsilon }\) denotes the hard-thresholding process in Step 1 in Fig. 1:

Here, \(\lambda_{4D}\) is set to 2.8 based on a previous study [24]. After aggregation by weighted average (detailed stated in 2.1.5), the filtered blocks were returned to the original position to form the basic estimation of sinogram \(\hat{y}^{{{\text{basic}}}} \left( {x, t} \right)\) in Step 1 in Fig. 1.

Grouping and collaborative wiener filtering in Step 2

Step 1 gives a basic estimation of true dynamic PET sinogram \(\hat{y}^{{{\text{basic}}}} \left( {x, t} \right)\). By accumulated sinogram based on \(\hat{y}^{{{\text{basic}}}} \left( {x, t} \right)\), the guide image in Step 2 is calculated as:

The denoising is further improved by performing the grouping in Step 2 in Fig. 1 using the basic estimation and applying Wiener filtering.

As stated previously, the accumulated basic estimation, referring to guide image, is significantly attenuated, which helps to find more accurate block groups. The match blocks in Step 2 were generated as:

\(\hat{Y}_{{S_{{x_{R} }}^{{{\text{Wie}}}} }}^{{{\text{basic}}_{{\text{t}}} }}\) as the stacked block of grouped basic estimation blocks and \(Z_{{S_{{x_{R} }}^{{{\text{Wie}}}} }}^{{{\text{basic}}_{{\text{t}}} }}\) as the stacked block of grouped noisy sinogram blocks. The Wiener shrinkage coefficient is calculated as:

where

By using the Wiener filtering, power spectrum of the basic estimate can be used to filter the groups by minimizing the least-square of the difference between modeled and filtered signals. After aggregation by weighted average, the Weiner filtered blocks were returned to the original position to form the final estimation of sinogram.

Aggregation by weighted average in Step 1 and Step 2

By returning the filtered block to the original position, the estimation of \(\hat{y}^{{{\text{basic}}}} \; {\text{and }}\; \hat{y}^{{{\text{wiener}}}}\) can be calculated for both Step 1 and Step 2 in Fig. 1, which is called aggregation. Weighted average aggregation was adopted in this work as:

where \(\chi_{{x_{m} }} :X \to \left\{ {0, 1} \right\}\) is the characteristic function of the block and \(\omega_{{x_{R} }}\) is the weight function based on [7]. Kaiser window is also part of the weights to reduce border effects [7, 25].

Experimental setup

Computer simulation

We performed computer simulation on the Shepp–Logan phantom (SLP) [26] and a digital brain phantom developed by Martin A. Belzunce et al. [27] For SLP, only physical decay was considered when generating the sinogram. The reconstruction image size was 8 × 128 × 128 × 128. For the digital brain phantom, TACs of gray matter, white matter and tumor tissue were calculated by compartment model. The pharmacokinetic parameters of gray matter, white matter and tumor were \(K_{1}\) = 0.1104, 0.0622, 0.0640 mL/min/mL, \(k_{2}\) = 0.1910, 0.1248, 0.0890 mL/min/mL, \(k_{3}\) = 0.1024, 0.0070, 0.0738 mL/min/mL. \(F_{{\text{v}}}\) were set to 0. The input function was extracted from previous work [28]. According to a previous study [2], a dynamic PET of 8 \(\times\) 6 min was performed. The tumor size is 4 × 4 × 4 pixels. The size of sinograms is 8 × 128 × 128 × 128. The sinogram is generated by forward radon transformation using Python scikit-image toolkit. After generating the noise-free sinogram, Poisson noise was added to the sinogram assuming the total photon count of 5 \(\times\) 108 according to previous simulation work [8]. The dynamic PET was then reconstructed using 2D-OSEM with twenty iterations and eight subsets with matrix size of 128 × 128 and no post-filter. The update equation for the OSEM can be briefly described as:

where \(f\) is the image under reconstruction, j and k are voxel indices, n is iteration number b is the subset number, \(i\) is the sinogram indices and \(S_{b}\) is subset \(b\). \(p\) is the sinogram voxel measurement, and \(H\) is the system matrix generated by inversed radon transform using Python scikit-image toolkit. The size of the reconstruction image was 8 × 128 × 128 × 128, and the voxel size is 1.5 × 1.5 × 1.5 mm3. All simulation and reconstruction were performed based on PYTHON.

To validate the performance of GBM4D compared with other algorithms, total variation, wavelet, non-local means (NLM), and BM3D method were applied to denoise the sinogram using skimage toolkit in PYTHON except for BM3D. Total variation denoising aims at obtaining an image that has a minimal total variation norm. The weight of the total variation is set to 0.1 [29]. The non-local means algorithm replaces the value of a pixel by an average of a selection of other similar non-local pixels values. The patch size and the search area of NLM are set to 5 × 5 and 13 × 13 pixels [30]. Wavelet denoising uses the wavelet representation of the image to removed noise by shrinking all coefficients toward zero by a given amount. Soft thresholding and Bayes shrinking methods were adopted for wavelet denoising [31]. During the denoising process, the robust wavelet-based estimator of the noise standard deviation was applied based on a previous study [32]. Before the denoising, generalized Anscombe transformation was performed on all sinograms since all the methods were designed based on Gaussian noise instead of Poisson noise. The exact unbiased inverse of the Anscombe transformation was then performed on the denoised sinogram before the reconstruction. To exclude the effect of the reconstruction algorithm, the ground truth images were the reconstruction of the noiseless sinogram.

For the quantitative evaluation of different denoising methods, the structural similarity (SSIM) and peak signal-to-noise ratio (PSNR) were calculated. SSIM measures the similarity of ground truth and denoised image based on the degradation of structural information [33]:

where \(\mu_{g}\), \(\mu_{d}\) are the mean of the ground truth image and the denoised image, \(\sigma_{g}^{2}\), \(\sigma_{d}^{2}\) are the deviation of the ground truth image and the denoised image, \(\sigma_{gd}\) is the covariance of the ground truth image and the denoised image, \(c_{1}\) = \(c_{2}\) = 0.012. PSNR was calculated in this work to measure the image quality at the pixel level:

where RMSE is the root mean square error between the ground truth image and the denoised image and peak is the peak value of the ground truth image. SSIM and PSNR in each time frame were measured. The TACs of different tissues were measured in the volume of interest of 4 × 4 × 4 pixels. The region of interest (ROI) positions can be seen in Fig. 7. To quantitatively measure the temporal smoothing performance of GBM4D, RMSE of TACs in different tissues measured from denoised images compared with the ground truth were calculated.

Real patient data

The real patient data in this retrospective study are based on an open accessed dynamic PET list-mode sinogram data source, which is acquired on a Siemens Biograph mMR, using amyloid tracer 18F-florbetapir, provided by Avid Radiopharmaceuticals, Inc., a wholly-owned subsidiary of Lilly [34, 35]. The data extraction and reconstruction of the dynamic PET data were performed offline using NiftyPET. The reconstruction was performed using histogram mode with image matrix sizes of 344 × 344 and no post-filter. [36]. The frame setting is also 8 \(\times\) 6 min. The dynamic PET images were then reconstructed using OSEM with four iterations and eight subsets. The reconstruction PET image size was 8 × 127 × 344 × 344. The direct sinogram and oblique sinograms were denoised separately. The denoising methods and parameters are the same as stated in the previous section.

Result

Computer simulation

To qualitatively validate the algorithm when only considering physical attenuation, Fig. 3 shows the 8th frame of the reconstructed dynamic PET image of SLP before and after applying different denoising approaches with 1.4M counts per slice. The wavelet method can preserve the structural details, but the noise was not properly removed. The total variation method tends to over-smooth the image, causing the loss of image details. An obvious image distortion can be seen in NLM denoised image. GBM4D shows better performance in edge-preserving compared with BM3D. As shown in Fig. 4, the denoising performance of GBM4D can be further demonstrated with the horizontal profile of the reconstruction image before denoising and after different denoising approaches. GBM4D denoised image shows great consistency with the ground truth. To quantitatively measure the performance of different denoising approaches, SSIM and PSNR were measured as shown in Table 1. For both indexes, GBM4D shows the best denoising performance compared with other approaches. SLP is a phantom with rather uniform tissue distribution locally, causing less chance of mismatching in BM3D and better accuracy of denoised image patch in NLM. Therefore, the performances between different algorithms are similar except for total variation denoising. The respectively small noise and artifact in noisy images also contribute to the overall better denoising performance of different algorithms. The differences of indexes in different frames caused by the photon counts were also small for all algorithms.

Figure 5 shows the digital brain phantom sinogram before and after applying different denoising approaches. When considering biokinetic in the phantom, GBM4D still showed better denoising performance in sinogram perspective. Figure 6 shows the digital brain phantom images reconstructed from the sinogram before and after applying different denoising approaches. When considering the simulated digital brain phantom, GBM4D showed better denoising performance, especially for the 1st frame which is of fewer photon counts. It is shown in Fig. 6 that tumor tissue can be only detected when using the GBM4D denoising technique. Because wavelet is not able to represent discontinuities along edges or curves in images or objects efficiently, wavelet denoised reconstructed image showed ring artifacts caused by the streaking artifacts in wavelet denoised sinogram. Similar to the previous result, the total variation method tends to over-smooth the image. Compared with NLM and BM3D, GBM4D showed greater denoising and edge-preserving performance, especially for the frame of lower photon counts. Such a result can be also demonstrated in Fig. 7. Figure 7 shows the great consistency of the ground truth and the GBM4D denoised image horizontal profile. To quantitatively evaluate the performance of GBM4D and other denoising approaches, SSIM and PSNR were calculated. Table 2 shows GBM4D significantly improved SSIM and PSNR for each frame (p < 0.001). The differences of indexes in different frames caused by the photon counts were also reduced while using GBM4D compared with other denoising methods. For wavelet, NLM and BM3D methods, the denoising performance for frames with fewer photon counts was significantly inferior to frames with higher counts.

Figure 8 shows that GBM4D also has the best temporal denoising performance compared with other denoising approaches considered in this work. The GBM4D denoised image shows great consistency with ground truth in TACs of different tissue. The RMSE of different tissue TACs when using GBM4D is the lowest among all (0.51, 0.49, 0.31 for gray matter, tumor and white matter).

Real patient data

Figure 9 shows the denoising result of real patient brain PET data. Only NLM, BM3D and GBM4D results were shown for the superior performance shown in the previous section. The boundary of the ventricle can be more clearly shown in the GBM4D denoised image, especially for frames of lower counts. Figure 10 shows the TACs of white matter and gray matter in real patient data. The superior temporal denoising performance of GBM4D can be also observed in real patient data.

Discussion

This work proposed a new approach of block matching and collaborative filtering method using guide image and 4D filtering designed for dynamic PET images. The guide image combined with coarse prefiltering prevents mismatching in the grouping process, which leads to superior performance in edge-preserving in both computer-simulating images and real patient images. The mismatching during the grouping process can be significantly reduced, which can be observed from the reduction of artifacts in reconstructed images using BM3D and GBM4D denoised sinograms. 4D filtering provided a sparser spectrum of image blocks, leading to the significantly improved temporal and spatial denoising performance of GBM4D compared with the traditional BM3D method. Therefore, TACs of different tissues can be more accurately estimated and the denoising performance of GBM4D when applied to images of lower counts are superior.

For quantitative evaluation, considering the phantom of only physical decay and the phantom of both biomedical decay and physical decay, SSIM and PSNR are both significantly improved using the GBM4D approach. The indexes showed that GBM4D can successively remove the Poisson noise in the dynamic PET sinogram. The RMSE of TACs in ROI in digital brain phantoms was significantly reduced when applying GBM4D. The kinetic analysis of different denoised reconstructed dynamic PET images will be studied in future work.

Previous research also developed various types of block matching and collaborative approaches [7,8,9,10]. When considering dynamic PET sinogram image, BM5D can also be applied to remove the Poisson image. However, the computational burden would be dramatically increased. Using a guided image in GBM4D can reduce the computational burden during the grouping process and prevent mismatching. Most works aimed at denoising images after OSEM reconstruction using block matching and collaborative approaches. In this way, the computational burden can be reduced. The noise of reconstruction images is not exact Poisson noise but has the form of Poisson noise [10]. The Anscombe transformation and its inverse transformation would lead to bias in activity and inferior performance of denoising approaches, as shown in Fig. 11.

GBM4D, when applied to dynamic PET with more frames, has greater potential for image quality improvement compared with BM3D, for more information is contained in the temporal aspect during the collaborative process and relatively fewer chances of mismatching using guide image. Only eight frames were considered in this work to evaluate the performance of such a denoising approach to avoid the dramatic differences between the sparsity of 3D blocks and 4D blocks spectrum, which helps to reduce the possibility of mismatching in BM3D.

A great amount of image-denoising methods based on deep convolution net neural (CNN) have been developed [37,38,39,40]. Further work would be done to compare GBM4D with CNN-based approaches. GBM4D, as a new non-local denoising method, suffered from the same disadvantage shared with other non-local methods, which is the computational burden. GBM4D can be transplanted to GPU devices, which can significantly reduce the computation time. Further comparison between BM5D and GBM4D will be conducted in the future when acceptable computational burden for both methods were achievable.

Conclusion

In this study, we developed a guided block matching and 4-D transform domain filter projection denoising method for dynamic PET image reconstruction and thoroughly investigate the performance of the approach. GBM4D shows great denoising and edge-preserving function. The temporal and spatial denoising performances were both validated qualitatively and quantitatively. Additionally, the use of a guide image in the block-matching process and 4-D filtering allowed for the reduction of artifacts and improved accuracy in TACs estimation. The GBM4D outperformed BM3D in terms of sparsity and denoising performance. Quantitative evaluation using SSIM, PSNR, and RMSE of TACs showed that GBM4D can effectively remove Poisson noise in dynamic PET sinograms. GBM4D, as a new form of block matching and collaborative denoising method, can significantly improve the denoising performance for dynamic PET image reconstruction both spatially and temporally compared with traditional BM3D.

Availability of data and materials

The data used in this study are available from the corresponding author on reasonable request.

References

Ziegler SI. Positron emission tomography: principles, technology, and recent developments. Nucl Phys A. 2005;752:679–87. https://doi.org/10.1016/j.nuclphysa.2005.02.067.

Rahmim A, Lodge MA, Karakatsanis NA, Panin VY, Zhou Y, McMillan A, et al. Dynamic whole-body PET imaging: principles, potentials and applications. Eur J Nucl Med Mol Imaging. 2019;46(2):501–18. https://doi.org/10.1007/s00259-018-4153-6.

Gallezot JD, Lu Y, Naganawa M, Carson RE. Parametric Imaging with PET and SPECT. IEEE Trans Radiat Plasma Med Sci. 2020;4(1):1–23. https://doi.org/10.1109/TRPMS.2019.2908633.

Ozaki S, Haga A, Chao E, Maurer C, Nawa K, Ohta T, et al. Fast statistical iterative reconstruction for mega-voltage computed tomography. J Med Investig. 2020;67(12):30–9. https://doi.org/10.2152/jmi.67.30.

Mohd Sagheer SV, George SN. A review on medical image denoising algorithms. Biomed Signal Process Control. 2020;61:102036. https://doi.org/10.1016/j.bspc.2020.102036.

Buades A, Coll B, Morel JM, editors. A non-local algorithm for image denoising. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05); 2005 20–25 June 2005.

Dabov K, Foi A, Katkovnik V, Egiazarian K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans Image Process. 2007;16(8):2080–95. https://doi.org/10.1109/TIP.2007.901238.

Ote K, Hashimoto F, Kakimoto A, Isobe T, Inubushi T, Ota R, et al. Kinetics-induced block matching and 5-D transform domain filtering for dynamic PET Image denoising. IEEE Trans Plasma Med Sci. 2020;4(6):720–8. https://doi.org/10.1109/TRPMS.2020.3000221.

Peltonen S, Tuna U, Sánchez-Monge E, Ruotsalainen U, editors. PET sinogram denoising by block-matching and 3D filtering. 2011 IEEE Nuclear Science Symposium Conference Record; 2011 23–29 Oct. 2011.

Yu S, Muhammed HH, editors. Noise type evaluation in positron emission tomography images. 2016 1st International Conference on Biomedical Engineering (IBIOMED); 2016 5–6 Oct. 2016.

Hofheinz F, Langner J, Beuthien-Baumann B, Oehme L, Steinbach J, Kotzerke J, et al. Suitability of bilateral filtering for edge-preserving noise reduction in PET. EJNMMI Res. 2011;1(1):23. https://doi.org/10.1186/2191-219x-1-23.

Lin JW, Laine AF, Bergmann SR. Improving PET-based physiological quantification through methods of wavelet denoising. IEEE Trans Biomed Eng. 2001;48(2):202–12. https://doi.org/10.1109/10.909641.

Hashimoto F, Ohba H, Ote K, Tsukada H. Denoising of dynamic sinogram by image guided filtering for positron emission tomography. IEEE Trans Radiat Plasma Med Sci. 2018;2(6):541–8. https://doi.org/10.1109/TRPMS.2018.2869936.

He K, Sun J, Tang X. Guided image filtering. IEEE Trans Pattern Anal Mach Intell. 2013;35(6):1397–409. https://doi.org/10.1109/tpami.2012.213.

Reader AJ, Verhaeghe J. 4D image reconstruction for emission tomography. Phys Med Biol. 2014;59(22):R371–418. https://doi.org/10.1088/0031-9155/59/22/r371.

Christian BT, Vandehey NT, Floberg JM, Mistretta CA. Dynamic PET denoising with HYPR processing. J Nucl Med. 2010;51(7):1147–54. https://doi.org/10.2967/jnumed.109.073999.

Tauber C, Stute S, Chau M, Spiteri P, Chalon S, Guilloteau D, et al. Spatio-temporal diffusion of dynamic PET images. Phys Med Biol. 2011;56(20):6583–96. https://doi.org/10.1088/0031-9155/56/20/004.

Arabi H, Zaidi H. Spatially guided nonlocal mean approach for denoising of PET images. Med Phys. 2020;47(4):1656–69. https://doi.org/10.1002/mp.14024.

Boussion N, Cheze Le Rest C, Hatt M, Visvikis D. Incorporation of wavelet-based denoising in iterative deconvolution for partial volume correction in whole-body PET imaging. Eur J Nucl Med Mol Imaging. 2009;36(7):1064–75. https://doi.org/10.1007/s00259-009-1065-5.

Chan C, Fulton R, Barnett R, Feng DD, Meikle S. Postreconstruction nonlocal means filtering of whole-body PET with an anatomical prior. IEEE Trans Med Imaging. 2014;33(3):636–50. https://doi.org/10.1109/tmi.2013.2292881.

Le Pogam A, Hanzouli H, Hatt M, Cheze Le Rest C, Visvikis D. Denoising of PET images by combining wavelets and curvelets for improved preservation of resolution and quantitation. Med Image Anal. 2013;17(8):877–91. https://doi.org/10.1016/j.media.2013.05.005.

Makitalo M, Foi A. Optimal inversion of the generalized anscombe transformation for Poisson–Gaussian noise. IEEE Trans Image Process. 2013;22(1):91–103. https://doi.org/10.1109/tip.2012.2202675.

Teymurazyan A, Riauka T, Jans HS, Robinson D. Properties of noise in positron emission tomography images reconstructed with filtered-backprojection and row-action maximum likelihood algorithm. J Digit Imaging. 2013;26(3):447–56. https://doi.org/10.1007/s10278-012-9511-5.

Maggioni M, Katkovnik V, Egiazarian K, Foi A. Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans Image Process. 2013;22(1):119–33. https://doi.org/10.1109/TIP.2012.2210725.

Yang J, Zhang X, Yue H, Cai C, Hou C. IBM3D: integer BM3D for efficient image denoising. Circuits Syst Signal Process. 2019;38(2):750–63. https://doi.org/10.1007/s00034-018-0882-9.

Shepp LA, Logan BF. The Fourier reconstruction of a head section. IEEE Trans Nucl Sci. 1974;21(3):21–43. https://doi.org/10.1109/TNS.1974.6499235.

Belzunce MA, Reader AJ. Technical note: ultra high-resolution radiotracer-specific digital pet brain phantoms based on the BigBrain atlas. Med Phys. 2020;47(8):3356–62. https://doi.org/10.1002/mp.14218.

Feng D, Wong KP, Wu CM, Siu WC. A technique for extracting physiological parameters and the required input function simultaneously from PET image measurements: theory and simulation study. IEEE Trans Inf Technol Biomed. 1997;1(4):243–54. https://doi.org/10.1109/4233.681168.

Qiao Z, Redler G, Epel B, Halpern H. A balanced total-variation-Chambolle-Pock algorithm for EPR imaging. J Magn Reson. 2021;328:107009. https://doi.org/10.1016/j.jmr.2021.107009.

Jomaa H, Mabrouk R, Khlifa N, Morain-Nicolier F. Denoising of dynamic PET images using a multi-scale transform and non-local means filter. Biomed Signal Process Control. 2018;41:69–80. https://doi.org/10.1016/j.bspc.2017.11.002.

Chang SG, Bin Y, Vetterli M. Adaptive wavelet thresholding for image denoising and compression. IEEE Trans Image Process. 2000;9(9):1532–46. https://doi.org/10.1109/83.862633.

Donoho DL, Johnstone IM. Ideal spatial adaptation by wavelet shrinkage. Biometrika. 1994;81:425–55.

Zhou W, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process. 2004;13(4):600–12. https://doi.org/10.1109/TIP.2003.819861.

Single amyloid PET scan on the Siemens Biograph mMR. [database on the Internet]2018. Available from: https://doi.org/10.5281/ZENODO.1472951. Accessed: 2023, February 13.

Lane CA, Parker TD, Cash DM, Macpherson K, Donnachie E, Murray-Smith H, et al. Study protocol: Insight 46—a neuroscience sub-study of the MRC National Survey of Health and Development. BMC Neurol. 2017;17(1):75. https://doi.org/10.1186/s12883-017-0846-x.

Markiewicz PJ, Ehrhardt MJ, Erlandsson K, Noonan PJ, Barnes A, Schott JM, et al. NiftyPET: a high-throughput software platform for high quantitative accuracy and precision PET imaging and analysis. Neuroinformatics. 2018;16(1):95–115. https://doi.org/10.1007/s12021-017-9352-y.

Cui J, Gong K, Guo N, Wu C, Meng X, Kim K, et al. PET image denoising using unsupervised deep learning. Eur J Nucl Med Mol Imaging. 2019;46(13):2780–9. https://doi.org/10.1007/s00259-019-04468-4.

He Y, Cao S, Zhang H, Sun H, Wang F, Zhu H, et al. Dynamic PET image denoising with deep learning-based joint filtering. IEEE Access. 2021;9:41998–2012. https://doi.org/10.1109/access.2021.3064926.

Liu Q, Liu H, Mirian N, Ren S, Viswanath V, Karp J, et al. A personalized deep learning denoising strategy for low-count PET images. Phys Med Biol. 2022. https://doi.org/10.1088/1361-6560/ac783d.

Sun H, Peng L, Zhang H, He Y, Cao S, Lu L. Dynamic PET image denoising using deep image prior combined with regularization by denoising. IEEE Access. 2021;9:52378–92. https://doi.org/10.1109/access.2021.3069236.

Acknowledgements

Not applicable.

Funding

This work was supported by the National Natural Science Foundation of China (32227801).

Author information

Authors and Affiliations

Contributions

LX performed the methodology design, validation, data analysis and writing; WZ performed review & editing; HL performed review & editing; TX performed review & editing and funding acquisition.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

This work does not involve humans or animal participants.

Consent for publication

The work described has not been published before.

Competing interest

The authors have no relevant conflicts of interest to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xin, L., Zhuo, W., Liu, H. et al. Guided block matching and 4-D transform domain filter projection denoising method for dynamic PET image reconstruction. EJNMMI Phys 10, 59 (2023). https://doi.org/10.1186/s40658-023-00580-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40658-023-00580-5