Abstract

Background

Emotional cognitive impairment is a core phenotype of the clinical symptoms of psychiatric disorders. The ability to measure emotional cognition is useful for assessing neurodegenerative conditions and treatment responses. However, certain factors such as culture, gender, and generation influence emotional recognition, and these differences require examination. We investigated the characteristics of healthy young Japanese adults with respect to facial expression recognition.

Methods

We generated 17 models of facial expressions for each of the six basic emotions (happiness, sadness, anger, fear, disgust, and surprise) at three levels of emotional intensity using the Facial Action Coding System (FACS). Thirty healthy young Japanese adults judged the type and intensity of the emotion that each model expressed.

Results

Assessment accuracy for all emotions except fear exceeded 60% in approximately half of the videos, whereas facial expressions of fear were rarely recognized accurately. Gender differences were observed for both the faces and the participants: expressions on female faces were more recognizable than those on male faces, and female participants perceived facial emotions more accurately than male participants.

Conclusion

The videos used in this study, with the possible exception of those representing fear, could serve as a dataset. Participants’ ability to recognize the type and intensity of emotions was affected by both the gender of the portrayed face and the gender of the evaluator. These gender differences must be considered when developing a scale of facial expression recognition.


Background

Affective cognitive impairment is a core clinical symptom of many neurological and psychiatric disorders [1,2,3]. Emotion is expressed through the face and voice, and the inability to recognize facial expressions of emotion is closely associated with neurological and psychiatric disorders [4,5,6,7,8]. Therefore, assessing facial emotion recognition is useful for clarifying disease pathogenesis. Currently, mental health care follows a disease-specific approach. However, many mental illnesses (such as depression, schizophrenia, and other affective disorders) and psychiatric symptoms (such as low motivation, depression, and cognitive impairment) overlap extensively [9,10,11]. Separate diagnostic methods developed for individual diseases are therefore inadequate for such patients, and treatment should focus on specific clinical conditions as well as on diseases. Impaired recognition of facial expressions of emotion is found in a variety of neurological and psychiatric disorders and is associated with declines in social skills and quality of life. Accurately assessing facial emotion recognition, and improving it with treatment focused on specific clinical symptoms such as the misrecognition of emotions, can improve patients’ quality of life.

However, gender, age, and culture clearly influence emotion recognition. Previous studies on facial expression recognition have shown that older people recognize negative emotions such as anger, sadness, and fear less accurately than younger people, whereas no consistent age-related difficulties have been found for happiness, surprise, or disgust [12, 13]. Westerners tend to identify expressions of fear and disgust accurately, whereas East Asians do not identify them reliably, even though both are among the six basic emotions considered universal [14, 15]. Japanese people have been reported to suppress emotional expressions more than Westerners [16, 17], and this suppression is reportedly stronger in men than in women [18]. When recognizing facial expressions, East Asians have been shown to look mainly at the area around the eyes, whereas Westerners tend to look mainly at the area around the mouth [19, 20], suggesting that the way facial expressions are recognized may also differ. These differences are thought to be rooted in cultural and gender norms that differ between countries and across generations, in addition to physical factors such as aging. Thus, facial expression recognition is influenced by many factors and varies by culture, gender, and generation. To assess emotion recognition in patients with affective disorders, it is therefore necessary to investigate how each of these factors affects facial expression recognition before examining the characteristics of emotion recognition in particular diseases and symptoms.

Furthermore, the importance of recognizing dynamic facial expressions, in addition to the traditional use of still images, has recently been discussed. Many studies of facial expression have used still images, but studies using dynamic images have recently begun [21,22,23,24]. Dynamic facial expressions have been shown to elicit stronger emotional responses than still images [25, 26], and dynamic stimuli have been suggested to be useful in assessing psychiatric disorders that may impair emotion recognition [27, 28]. In recent years, methods for evaluating emotion recognition using the Facial Action Coding System (FACS) have gained international recognition [29,30,31] and are expected to be used in the medical field [32, 33]. FACS classifies human facial movements by their appearance and is used as a standard for systematically describing the physical expression of emotions. Computerized, automated systems based on FACS can detect faces in videos, extract facial geometric features, and create a temporal profile of each facial movement. Because humans recognize emotions by observing facial expressions in motion, we believe that video-based indicators better represent facial expressions as they occur in reality.

We investigated the emotion recognition of healthy young Japanese adults in response to videos of facial expressions. We chose university students aged 20–23 years because we expected them to show less variability in emotion recognition than younger or older people [34,35,36,37,38]. Verifying the characteristics of Japanese facial expression recognition, including judgments of emotion category and emotional intensity, is essential for guiding clinical treatment and therapeutic assessment in Japanese psychiatric patients. We investigated these characteristics in young Japanese individuals to establish a video-based method of assessing facial expression recognition for Japanese populations. Because patients with psychiatric disorders, including affective disorders, have been reported to perceive both the intensity [39, 40] and the type [41, 42] of emotion differently from healthy controls, we evaluated both.

Although own-age and own-race biases have been found in facial expression recognition [43,44,45,46], the influence of the gender of the stimulus face has rarely been discussed. We hypothesized that the gender of the stimulus face might affect emotion recognition, just as its age and race do. We therefore investigated the effects of the gender of both the evaluator and the stimulus face on facial expression recognition.

Methods

Healthy Japanese participants were asked to identify emotions and evaluate the intensity of emotions after viewing videos of facial expressions.

Pre-experiment

Researchers at the University of Glasgow created 2,400 facial expression models by randomly manipulating facial muscle movements defined by FACS. Each model took the form of an animated image that changed from an emotionally neutral face to an emotional expression and then back to a neutral face through manipulated changes in the facial muscles. In each model, the expression peaked approximately 0.5 s after the beginning of the video, and the entire sequence lasted approximately 1.25 s. In the pre-experiment, 12 healthy Japanese undergraduates (6 males and 6 females; mean age 21.3 ± 0.6 years) evaluated each of the 2,400 models with respect to the six basic emotions (happiness, sadness, anger, fear, disgust, and surprise). For each emotion, we selected the 17 models most frequently judged by the evaluators as expressing that emotion, and manipulated these models using FACS to produce three levels of emotional intensity.
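The selection step above can be sketched in Python. This is a minimal illustration, not the procedure the authors ran: it assumes (hypothetically) that each pre-experiment evaluator assigned exactly one of the six labels to each model, that each model counts toward its most frequently chosen emotion, and that "top" means the largest number of agreeing evaluators. The function `select_top_models` and the `judgments` mapping are hypothetical names.

```python
from collections import Counter

EMOTIONS = ("happiness", "sadness", "anger", "fear", "disgust", "surprise")


def select_top_models(judgments, k=17):
    """Pick, for each emotion, the k models most often judged as that emotion.

    judgments: hypothetical mapping model_id -> list of emotion labels,
    one label per pre-experiment evaluator. Each model is assigned to its
    modal (most frequent) label and ranked within that emotion by the
    number of evaluators who chose it.
    """
    ranked = {e: [] for e in EMOTIONS}
    for model_id, labels in judgments.items():
        label, votes = Counter(labels).most_common(1)[0]
        if label not in ranked:
            raise ValueError(f"unknown emotion label: {label}")
        ranked[label].append((votes, model_id))
    # Highest agreement first; ties broken by model_id for reproducibility.
    return {
        e: [m for _, m in sorted(items, key=lambda t: (-t[0], t[1]))[:k]]
        for e, items in ranked.items()
    }


example = {
    "m1": ["fear"] * 7 + ["surprise"] * 5,
    "m2": ["fear"] * 9 + ["sadness"] * 3,
    "m3": ["happiness"] * 12,
}
print(select_top_models(example, k=1)["fear"])  # ['m2']
```

Ranking by raw agreement counts is only one reasonable reading of "top"; a proportion-based ranking would give the same order when every model is rated by the same 12 evaluators.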

Experiment

Participants

The participants in the main experiment were 30 healthy Japanese university students (15 males, mean age 21.4 ± 1.1 years; 15 females, mean age 21.1 ± 0.6 years) who had not participated in the pre-experiment. None had visual impairments (including loss of vision), autism spectrum disorder, prosopagnosia, or other conditions that could make it difficult to recognize emotions, and none had learning disabilities or synesthesia. All participants had been exposed to Japanese culture throughout their lives, and none had spent long periods outside Japan or been exposed to non-Japanese culture for extended periods.

Materials

In total, 306 facial expressions (6 emotions × 17 expression models × 3 intensity levels) created in the pre-experiment were applied to four Japanese faces (two of each gender), yielding 1,224 animated facial expression images. The images were displayed on a monitor (23.6-inch; aspect ratio 16:9; resolution 1920 × 1080 pixels) placed 69 cm from the participant, so that the displayed faces subtended a visual angle of 14.4° vertically and 9.7° horizontally. Each display had a black background. The experiment was conducted in a quiet, shielded room with no external light. Each participant sat in a chair with their chin on a desk-mounted chinrest and held a computer mouse in the dominant hand.
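The viewing geometry can be checked with the standard visual-angle relation, size = 2 · d · tan(θ/2). The sketch below converts the reported angles and viewing distance into an approximate on-screen face size; it assumes square pixels and that 23.6 inches is the visible screen diagonal, and the helper names are hypothetical.

```python
import math


def stimulus_size_cm(visual_angle_deg, distance_cm):
    """Physical extent that subtends a given visual angle at a viewing distance.

    Uses size = 2 * d * tan(theta / 2).
    """
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


def pixels_per_cm(diagonal_in, res_w, res_h):
    """Pixel density from the screen diagonal and native resolution.

    Assumes square pixels and that the diagonal is the visible area.
    """
    diagonal_cm = diagonal_in * 2.54
    diagonal_px = math.hypot(res_w, res_h)
    return diagonal_px / diagonal_cm


height_cm = stimulus_size_cm(14.4, 69)  # vertical extent of the face
width_cm = stimulus_size_cm(9.7, 69)    # horizontal extent of the face
ppcm = pixels_per_cm(23.6, 1920, 1080)
print(f"{height_cm:.1f} cm x {width_cm:.1f} cm "
      f"(about {height_cm * ppcm:.0f} x {width_cm * ppcm:.0f} px)")
```

With the reported values, the face spans roughly 17.4 cm by 11.7 cm, or about 640 by 430 pixels, which fits comfortably on a 1920 × 1080 display of that size.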

Procedure

For each of the 1,224 facial expression videos, participants judged the type of emotion and evaluated its intensity. The videos were randomly divided into six sessions, each consisting of four blocks of 51 videos (51 × 4 × 6 = 1,224), and participants took breaks between sessions as needed. Each video was presented only once, in random order. Options for the type of emotion (happiness, surprise, fear, disgust, anger, and sadness) and intensity (strong, intermediate, or weak) were displayed on the screen, and the next video was displayed after a choice was made (Fig. 1). We used PsychoPy3 (University of Nottingham) to present the videos and record responses.
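One way to implement the random division described above is to shuffle the full stimulus list once and slice it into sessions and blocks. This plain-Python sketch is an assumption about the procedure (the text does not say how the randomization was done, and the PsychoPy experiment itself is not shown); `make_schedule` and the video IDs are hypothetical.

```python
import random


def make_schedule(stimuli, n_sessions=6, n_blocks=4, block_size=51, seed=None):
    """Shuffle stimuli once and cut them into sessions of equal-sized blocks.

    Every stimulus appears exactly once; raises if the counts do not match.
    """
    if len(stimuli) != n_sessions * n_blocks * block_size:
        raise ValueError("stimulus count must equal sessions * blocks * block size")
    order = list(stimuli)
    random.Random(seed).shuffle(order)  # seeded for a reproducible order
    schedule = []
    for s in range(n_sessions):
        session = []
        for b in range(n_blocks):
            start = (s * n_blocks + b) * block_size
            session.append(order[start:start + block_size])
        schedule.append(session)
    return schedule


videos = [f"video_{i:04d}" for i in range(1224)]  # hypothetical IDs
schedule = make_schedule(videos, seed=1)
```

A single global shuffle guarantees that each video is shown exactly once while leaving block and session membership random.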

Statistical analysis

First, we calculated the mean and standard deviation of accuracy, defined as the percentage of trials in which evaluators chose the intended type of emotion. Second, to examine the effects of evaluator and stimulus-face gender, we performed a three-way ANOVA (gender of facial images × gender of evaluators × emotion) using SPSS (version 27).
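Accuracy as defined above can be computed from trial-level responses. The sketch assumes a hypothetical record format of (participant, intended emotion, chosen emotion) and takes the mean and standard deviation over per-participant percentages; the text does not specify the unit over which the standard deviation was taken, so that choice is an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev


def accuracy_by_emotion(trials):
    """Percent correct per intended emotion, summarised across participants.

    trials: iterable of (participant_id, intended_emotion, chosen_emotion).
    Returns {emotion: (mean_percent, sd_percent)}, where the mean and the
    sample SD are taken over per-participant percentages.
    """
    hits = defaultdict(lambda: defaultdict(list))
    for pid, intended, chosen in trials:
        hits[intended][pid].append(intended == chosen)
    summary = {}
    for emotion, per_pid in hits.items():
        pcts = [100 * sum(v) / len(v) for v in per_pid.values()]
        sd = stdev(pcts) if len(pcts) > 1 else 0.0
        summary[emotion] = (mean(pcts), sd)
    return summary
```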

Third, we analyzed the perceived intensity of emotions separately for each emotion type. Intensity was scored as 1 point for weak, 2 points for intermediate, and 3 points for strong. The intended intensity was subtracted from the intensity rated by the evaluator, and this difference was used in further analyses of emotional intensity. We calculated the mean and standard deviation of the differences and performed an ANOVA to examine the effects of evaluator and stimulus-face gender.
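The intensity coding can be expressed as a small scoring function. This sketch assumes the sign convention stated in the caption of the intensity figure (evaluated minus intended), so positive values mean an expression was judged stronger than designed, and it keeps only trials in which the emotion type was judged correctly, as described in the Results. The dictionary keys and the function name are hypothetical.

```python
# Point scheme from the text: weak = 1, intermediate = 2, strong = 3.
INTENSITY_POINTS = {"weak": 1, "intermediate": 2, "strong": 3}


def intensity_differences(trials):
    """Signed intensity error for correctly classified trials only.

    trials: iterable of dicts with keys 'intended_emotion', 'chosen_emotion',
    'intended_intensity' and 'rated_intensity' (labels of INTENSITY_POINTS).
    Returns evaluated - intended scores, each in the range -2..2.
    """
    diffs = []
    for t in trials:
        if t["chosen_emotion"] != t["intended_emotion"]:
            continue  # intensity is analysed only when the type was correct
        diffs.append(INTENSITY_POINTS[t["rated_intensity"]]
                     - INTENSITY_POINTS[t["intended_intensity"]])
    return diffs
```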

Results

Evaluation of the types of emotions

The accuracy for each emotion was as follows: happiness, 97.9%; sadness, 54.2%; anger, 65.5%; fear, 19.4%; disgust, 68.2%; and surprise, 91.8%. For every emotion except fear, evaluators were more than 60% accurate for approximately half of the videos. In contrast, the highest accuracy achieved for any fear video was only 57%, and only a few fear videos were recognized as such.
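The statement that accuracy exceeded 60% for about half of the videos is a per-video summary. A minimal sketch, assuming per-video percent-correct values have already been computed (the input structure and function name are hypothetical):

```python
def share_of_videos_above(per_video_accuracy, threshold=60.0):
    """Fraction of videos per emotion whose accuracy exceeds a threshold (%).

    per_video_accuracy: mapping emotion -> list of per-video percent-correct
    values. A strict '>' is used because the text says "more than 60%".
    """
    return {
        emotion: sum(acc > threshold for acc in accs) / len(accs)
        for emotion, accs in per_video_accuracy.items()
        if accs
    }
```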

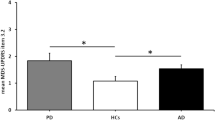

The ANOVA showed main effects of emotion (F (5, 2376) = 1016.47, p < 0.001, η2p = 0.681), the gender of facial images (F (1, 2376) = 6.87, p = 0.009, η2p = 0.003), and the gender of evaluators (F (1, 2376) = 44.07, p < 0.001, η2p = 0.018) (Table 1). There were interactions between the gender of facial images and emotion (F (5, 2376) = 17.25, p < 0.001, η2p = 0.035) and between the gender of evaluators and emotion (F (5, 2376) = 11.10, p < 0.001, η2p = 0.023), but not between the gender of facial images and the gender of evaluators (F (1, 2376) = 0.003, p = 0.957, η2p = 0.000) (Table 1).

Separate analyses for each emotion showed main effects of the gender of facial images for every emotion except fear (happiness, F (1, 404) = 36.93, p < 0.001, η2p = 0.084; sadness, F (1, 404) = 6.83, p = 0.009, η2p = 0.017; anger, F (1, 404) = 18.72, p < 0.001, η2p = 0.044; fear, F (1, 356) = 0.57, p = 0.450, η2p = 0.002; disgust, F (1, 404) = 15.03, p < 0.001, η2p = 0.036; surprise, F (1, 404) = 75.91, p < 0.001, η2p = 0.158), and main effects of the evaluators’ gender for every emotion except happiness and anger (happiness, F (1, 404) = 0.00, p = 1.000, η2p = 0.000; sadness, F (1, 404) = 25.01, p < 0.001, η2p = 0.058; anger, F (1, 404) = 1.63, p = 0.203, η2p = 0.004; fear, F (1, 356) = 63.38, p < 0.001, η2p = 0.151; disgust, F (1, 404) = 14.49, p < 0.001, η2p = 0.035; surprise, F (1, 404) = 9.28, p = 0.002, η2p = 0.022) (Table 2). These results indicate that female faces were more recognizable when they showed happiness, sadness, disgust, and surprise, whereas male faces were more recognizable when they showed anger. Female evaluators recognized sad, fearful, disgusted, and surprised faces more accurately than male evaluators did (Fig. 2). For happy faces, there was an interaction between the gender of the faces and that of the evaluators (F (1, 404) = 9.77, p = 0.002, η2p = 0.024), suggesting that female evaluators had the highest accuracy for happy female faces and the lowest accuracy for happy male faces.
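Because partial eta squared equals SS_effect / (SS_effect + SS_error), and F = (SS_effect / df_effect) / (SS_error / df_error), it can be recovered from any reported F and its degrees of freedom as F · df_effect / (F · df_effect + df_error). The sketch below uses this identity to check the effect sizes of the omnibus ANOVA reported above; it is a consistency check on the published statistics, not a re-analysis of the data.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic.

    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2).
    """
    num = f_value * df_effect
    return num / (num + df_error)


# (F, df_effect, df_error, reported partial eta squared)
reported = {
    "emotion": (1016.47, 5, 2376, 0.681),
    "face gender": (6.87, 1, 2376, 0.003),
    "evaluator gender": (44.07, 1, 2376, 0.018),
    "face gender x emotion": (17.25, 5, 2376, 0.035),
    "evaluator gender x emotion": (11.10, 5, 2376, 0.023),
}
for name, (f, df1, df2, eta) in reported.items():
    print(f"{name}: {partial_eta_squared(f, df1, df2):.3f} (reported {eta})")
```

Each recomputed value rounds to the reported one, which is a quick way to catch transcription errors in F or degrees of freedom.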

Accuracy depends on the gender of the faces and the evaluators. Female faces were more recognizable than male faces for most emotions, the exceptions being anger and fear; male faces were more recognizable when they showed anger. Female evaluators identified most emotions more accurately than male evaluators, except happiness and anger. Notes: Error bars indicate standard errors

Evaluation of the intensity of emotion

We analyzed evaluators’ judgments of emotional intensity only for trials in which they had correctly judged the type of emotion. Intensity was scored as 1 point for weak, 2 points for intermediate, and 3 points for strong, and an ANOVA was used to analyze the difference between the intended score of each video and the evaluator’s score. Main effects of the face’s gender were observed for happy (F (1,5984) = 130.78, p < 0.001, η2p = 0.021), fearful (F (1,1045) = 6.27, p = 0.012, η2p = 0.006), and surprised (F (1,5603) = 26.45, p < 0.001, η2p = 0.005) faces, but not for sad (F (1,3308) = 1.77, p = 0.184, η2p = 0.001), angry (F (1,4001) = 3.22, p = 0.073, η2p = 0.001), or disgusted (F (1,4163) = 1.36, p = 0.243, η2p = 0.000) faces (Table 2). Main effects of the evaluators’ gender were observed for happy (F (1,5984) = 22.45, p < 0.001, η2p = 0.004), sad (F (1,3308) = 4.32, p = 0.038, η2p = 0.001), disgusted (F (1,4163) = 84.74, p < 0.001, η2p = 0.020), and surprised (F (1,5603) = 25.68, p < 0.001, η2p = 0.005) faces, but not for angry (F (1,4001) = 5.55, p = 0.02, η2p = 0.001) or fearful (F (1,1045) = 0.086, p = 0.77, η2p = 0.000) faces (Table 2). These results suggest that, although male and female faces showed identical facial movements, happy and surprised expressions were perceived as more intense on female faces, whereas fearful expressions were perceived as less intense on female faces. Female evaluators perceived happy, sad, disgusted, and surprised expressions as more intense than male evaluators did (Fig. 3). For happy faces, an interaction between the gender of the face and that of the evaluators was observed: perceived intensity was highest when female evaluators rated female faces and lowest when male evaluators rated male faces.

Gender differences in the recognition of emotional intensity. Intensity was scored as 1 point for weak, 2 points for intermediate, and 3 points for strong. Differences in intensity were calculated by subtracting the intended value from the evaluated value. Fear was judged to be less intense on female faces than on male faces. Female evaluators tended to perceive most emotions as more intense than male evaluators did. Notes: Error bars indicate standard errors

Discussion

We focused on the factors that influence the evaluation of emotional facial expressions by healthy Japanese subjects to develop a method for assessing the pathophysiology of psychiatric disorders. In particular, we investigated the influence of the gender of the faces and evaluators on emotional recognition.

The results showed that females recognized facial emotions more accurately than males. The gender of the faces also influenced the evaluators’ judgments: male faces were more recognizable for anger, and female faces for most other emotions. Evaluations of emotional intensity also differed according to the gender of the faces and evaluators.

Evaluators’ gender

Our results showed that females recognized emotions from facial expressions more accurately than males, especially sadness, fear, disgust, and surprise. This is consistent with previous studies reporting higher emotion recognition accuracy in females [47,48,49]. Females are generally considered more social than males [50,51,52], and our results may reflect this.

Happiness was recognized more easily when the gender of the face matched that of the evaluator. People tend to show own-gender biases when recognizing faces; in particular, females tend to recognize female faces more accurately than male faces [53]. An own-gender bias in male evaluators has not yet been established [53]; however, for obvious facial expressions such as happiness, this bias could also be enhanced in males, resulting in more accurate recognition of same-gender facial expressions.

Facial gender

The facial expression videos in this study were created by applying the same facial expression models to both male and female faces, eliminating any difference in facial movement between the male and female expressions. Nevertheless, we found a gender difference in evaluation accuracy, suggesting that facial gender affects how accurately expressed emotions are recognized. Female faces were more recognizable in happy, sad, disgusted, and surprised expressions, whereas male faces were more recognizable in angry expressions. We propose two hypotheses to explain this difference. First, female evaluators, who are considered to have superior emotion recognition abilities, may recognize female faces more easily because of own-gender bias. Females also tend to show greater sociality in recognizing emotions than males, and own-sex advantages combined with higher sociality may contribute to greater accuracy when observing female faces. Second, Japanese culture discourages the overt expression of emotions. A previous study [54] found that American students expressed disgust when viewing highly stressful films, whereas Japanese students concealed their feelings. It has also been suggested that this suppression of emotional expression among Japanese students is stronger in males than in females [55]. Because females show their feelings in their facial expressions more than males do, Japanese people may be more familiar with perceiving emotions on female faces than on male faces, and the evaluators may therefore have found it easier to recognize expressions on female faces. Conversely, males tend to express anger more than females [55], which may make male angry faces more recognizable; consistent with this, accuracy was higher for male angry faces than for female angry faces.

Previous studies have shown that happiness is recognized more accurately and quickly on female faces than on male faces, whereas anger is recognized more accurately and quickly on male faces than on female faces [56, 57]. Our results are consistent with these findings and further show that the sex of the face affects emotion recognition even when facial muscle movements are identical.

Emotional intensity

Female evaluators judged expressions of happiness, sadness, disgust, and surprise to be more intense than male evaluators did. A previous study of Chinese adolescents reported that female participants judged emotionally negative and positive photos to be more intense than male participants did [58]. However, this finding varies by age and culture: a German study reported that females perceived emotionally negative photos as more intense than males did, but emotionally positive photos as less intense [58]. Thus, gender differences among evaluators in recognizing emotional intensity are not universal and vary according to gender norms and stereotypes shaped by culture and generation. In the present study, Japanese female evaluators judged happy, sad, disgusted, and surprised faces to be more intense than male evaluators did, consistent with the Chinese study. This may be related to similarities between Japanese and Chinese culture, including gender roles [59].

The evaluators perceived happiness and surprise as more intense on female faces, whereas they perceived fear as more intense on male faces. Because male and female faces were presented with the same facial muscle movements, this unexpected difference in perceived intensity may reflect the evaluators’ gender-related preferences. Gender stereotypes [60, 61], such as “Females are emotional” and “Males should not be vulnerable,” may have influenced the evaluators more strongly when more potent emotions such as happiness and surprise were displayed, leading to differences in perceived emotional intensity.

Another factor that may have influenced the evaluation of emotional intensity is the difference in facial features between the male and female faces. Although the relationship between facial features and emotion recognition remains unclear, masculine features such as a square jaw, thick eyebrows, and a high forehead have been reported to match features that express anger [56, 62]. The structural features of the male and female faces may therefore have influenced the evaluators’ judgments of emotional intensity. A previous functional magnetic resonance imaging study found that amygdala activity increases when a male observes a female face, regardless of the type of emotion expressed. No such increase was observed when a female viewed a male face or when observers of either sex viewed a face of their own gender [63]. Such differences in brain activity may lead to differences in the perception of emotional intensity and of the emotions themselves.

Differences by type of emotion

The accuracy for each emotion was as follows: happiness, 97.9%; sadness, 54.2%; anger, 65.5%; fear, 19.4%; disgust, 68.2%; and surprise, 91.8%. The evaluators identified only 19.4% of the facial expressions intended to express fear, and they rarely judged other facial expressions as fear: fear was chosen for only 6.18% of all facial expression images, well below the expected 16.7% (one in six). These results are consistent with previous studies showing that Japanese people are less likely to recognize fear [64] and less accurate in judging fear as fear [64, 65], suggesting that fear is less recognizable to Japanese people. However, 46% of the images judged as fearful had been designed to express fear. This suggests that Japanese people can recognize facial expressions of fear as fear but are less likely to do so than for other emotions. Fear tended to be confused with sadness and disgust.
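The fear results above combine three different proportions: the share of fear stimuli recognized as fear (recall, the "accuracy" reported here), the share of all responses that were "fear", and the share of "fear" responses that were correct (precision). The sketch below computes these, plus the share of fear stimuli in the set, from a confusion matrix; `label_summary` is a hypothetical name, and the counts in the usage example are invented for illustration and are not the study's data.

```python
def label_summary(confusion, target):
    """Recall, response rate, stimulus share and precision for one label.

    confusion: nested mapping intended -> chosen -> count.
    recall         = P(chosen == target | intended == target)
    response_rate  = P(chosen == target) over all trials
    stimulus_share = P(intended == target) over all trials
    precision      = P(intended == target | chosen == target)
    """
    total = sum(sum(row.values()) for row in confusion.values())
    row = confusion.get(target, {})
    intended_target = sum(row.values())
    hits = row.get(target, 0)
    chosen_target = sum(r.get(target, 0) for r in confusion.values())
    nan = float("nan")
    return {
        "recall": hits / intended_target if intended_target else nan,
        "response_rate": chosen_target / total,
        "stimulus_share": intended_target / total,
        "precision": hits / chosen_target if chosen_target else nan,
    }


# Invented counts, for illustration only.
example = {
    "fear": {"fear": 2, "sadness": 5, "disgust": 3},
    "surprise": {"surprise": 8, "fear": 2},
}
print(label_summary(example, "fear"))
```

A response rate well below the stimulus share, as reported for fear, means the label was under-used overall, which depresses recall even when precision is moderate.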

According to Paul Ekman [66], the facial expression of fear is easily confused with surprise; its characteristics are 1) eyebrows raised and pulled together, 2) raised upper eyelids, 3) tensed lower eyelids, and 4) an open, dropped jaw with the lips stretched horizontally backward. The most “fear-like” image in this study (Fig. 4) included these eyebrow and lip features but was not a typical facial expression of fear in other respects. However, the facial expressions identified as fear, including those designed to represent other emotions, shared the same eyebrow action. Previous studies have shown that Westerners pay closer attention to mouth movements when recognizing emotions from facial expressions, whereas most Japanese people pay closer attention to the eyes [20, 67]. Consistent with these findings, our results suggest that Japanese people tend to infer emotions mainly from the movements of the eyebrows and eyes rather than from those of the mouth and jaw.
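Ekman’s four features correspond to FACS action units (AUs): brows raised and drawn together (AU1 + AU2 + AU4), raised upper lids (AU5), tensed lower lids (AU7), stretched lips (AU20), and dropped jaw (AU26). The sketch below compares this commonly cited fear prototype with a commonly cited surprise prototype (AU1 + AU2 + AU5 + AU26) to illustrate why the two are easily confused. Both AU sets are assumptions drawn from the general FACS literature rather than from the stimuli used in this study, and AU intensity codes are ignored.

```python
# Commonly cited AU prototypes (an assumption; published combinations vary
# between sources, and intensity codes such as "5B" are ignored here).
PROTOTYPES = {
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "surprise": {1, 2, 5, 26},
}
AU_NAMES = {
    1: "inner brow raiser", 2: "outer brow raiser", 4: "brow lowerer",
    5: "upper lid raiser", 7: "lid tightener", 20: "lip stretcher",
    26: "jaw drop",
}


def shared_units(a, b):
    """Return the AUs two prototypes share and their Jaccard overlap."""
    shared = PROTOTYPES[a] & PROTOTYPES[b]
    jaccard = len(shared) / len(PROTOTYPES[a] | PROTOTYPES[b])
    return sorted(shared), jaccard


units, overlap = shared_units("fear", "surprise")
for au in units:
    print(f"AU{au}: {AU_NAMES[au]}")
print(f"Jaccard overlap: {overlap:.2f}")
```

Under these prototypes, the brow-raising units (AU1, AU2) are common to both expressions, so an observer who relies mainly on the eyebrow region would have few cues left to separate fear from surprise.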

Clinical application of facial recognition assessment and future directions of the study

This study aimed to establish a method for assessing psychiatric disorders in Japanese populations using facial expression recognition. Because various factors, such as cultural and gender differences, affect facial expression recognition, these factors must be investigated in detail before an assessment scale can be established. In this preliminary study, we investigated sex differences in facial expression recognition in a single age group exposed to a single culture. Although studies assessing facial expression recognition in psychiatric disorders usually control for age and race, few have discussed gender bias. In this regard, we believe it is significant that this study showed that not only the gender of the evaluator but also that of the facial stimuli affected the evaluation of facial expressions.

In addition, the young adult participants in this study were homogeneous in culture and age. Because young adults are theoretically at the peak of cognitive functioning, similar experiments with other age groups could provide a basis for investigating physiological and pathological age-related changes in facial emotion recognition, and could shed light on age-related deficits in emotion recognition and on the pathophysiological neural processes underlying additional impairments. Neurophysiological activity during facial emotion recognition has been reported to vary with the evaluator’s age [68, 69], sex [70], and even culture [71]. Future work combining a dataset matched for culture and age with neurophysiological techniques may enable the identification of core neural networks associated with emotion recognition.

Limitations of this study

This study has some limitations. First, the sample size was small. Small samples lead to high variability, which may introduce bias, so a larger number of participants is needed to draw firm conclusions. Second, the participants were young. Own-age bias has been suggested to be involved in facial expression recognition [43, 44]; if an assessment of facial expression recognition is to be used as a scale to evaluate mental status, it will be necessary to confirm how facial expressions are recognized not only by younger people but also by older people. Third, facial expressions intended to express fear were often judged incorrectly by the evaluators. Although Japanese people can recognize facial expressions of fear, they appear less likely to do so than for other emotions. Further studies should investigate which facial expressions Japanese people recognize as fearful.

Conclusions

This study investigated factors influencing the emotional evaluation of facial expression videos. Although the gender of the evaluators and that of the faces influenced how emotions were judged, the emotional facial videos used in this study, except those for fear, were evaluated accurately, suggesting that they could be used as a dataset. This study has limitations regarding sample size and the specific sample studied. Nevertheless, it showed that, at least among young, healthy Japanese evaluators, females tended to recognize the intended emotion more accurately and to perceive it as more intense than males, and that female faces were more recognizable than male faces for most emotions. Because these tendencies might be related to gender norms, age, and culture, further studies of other age groups and cultures are required to create a more comprehensive dataset. In particular, fear was difficult for the participants, all of whom were Japanese, to recognize. It is therefore necessary to identify the facial features that Japanese people would recognize as expressing fear.

Availability of data and materials

The datasets used and analyzed in the current study are available from the corresponding author upon reasonable request.

Abbreviations

- FACS: Facial Action Coding System
- ANOVA: Analysis of variance

References

Affective symptoms of institutionalized adults with mental retardation [press release]. US: American Assn on Mental Retardation; 1993.

Kennedy SH. Core symptoms of major depressive disorder: relevance to diagnosis and treatment. Dialogues Clin Neurosci. 2008;10(3):271–7.

Hill NL, Mogle J, Wion R, Munoz E, DePasquale N, Yevchak AM, et al. Subjective cognitive impairment and affective symptoms: a systematic review. Gerontologist. 2016;56(6):e109–27.

Rubinow DR, Post RM. Impaired recognition of affect in facial expression in depressed patients. Biol Psychiatry. 1992;31(9):947–53.

Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. J Autism Dev Disord. 1999;29(1):57–66.

Addington J, Saeedi H, Addington D. Facial affect recognition: a mediator between cognitive and social functioning in psychosis? Schizophr Res. 2006;85(1–3):142–50.

Vrijen C, Hartman CA, Oldehinkel AJ. Slow identification of facial happiness in early adolescence predicts onset of depression during 8 years of follow-up. Eur Child Adolesc Psychiatry. 2016;25(11):1255–66.

Gao Z, Zhao W, Liu S, Liu Z, Yang C, Xu Y. Facial emotion recognition in schizophrenia. Front Psychiatry. 2021;12:633717.

Bambole V, Johnston ME, Shah NB, Sonavane SS, Desouza A, Shrivastava AK. Symptom overlap between schizophrenia and bipolar mood disorder: Diagnostic issues. Open J Psychiatry. 2013;3:8–15.

Malhi GS, Byrow Y, Outhred T, Fritz K. Exclusion of overlapping symptoms in DSM-5 mixed features specifier: heuristic diagnostic and treatment implications. CNS Spectr. 2016;22(2):126–33.

Zhu Y, Womer FY, Leng H, Chang M, Yin Z, Wei Y, et al. The Relationship between cognitive dysfunction and symptom dimensions across schizophrenia, bipolar disorder, and major depressive disorder. Front Psychiatry. 2019;10:253.

Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neurosci Biobehav Rev. 2008;32(4):863–81.

Grainger SA, Henry JD, Phillips LH, Vanman EJ, Allen R. Age deficits in facial affect recognition: the influence of dynamic cues. J Gerontol Series B. 2015;72(4):622–32.

Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol Bull. 2002;128(2):203–35.

Russell JA. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol Bull. 1994;115(1):102–41.

Friesen WV. Cultural differences in facial expressions in a social situation: An experimental test on the concept of display rules. San Francisco: University of California; 1973.

Schouten A, Boiger M, Kirchner-Häusler A, Uchida Y, Mesquita B. Cultural differences in emotion suppression in Belgian and Japanese couples: a social functional model. Front Psychol. 2020;11:1048.

Inamine M, Endo M. The effects of context and gender on the facial expressions of emotions. Jpn J Res Emot. 2009;17(2):134–42.

Jack RE, Garrod OG, Yu H, Caldara R, Schyns PG. Facial expressions of emotion are not culturally universal. Proc Natl Acad Sci U S A. 2012;109(19):7241–4.

Yuki M, Maddux WW, Masuda T. Are the windows to the soul the same in the East and West? Cultural differences in using the eyes and mouth as cues to recognize emotions in Japan and the United States. J Exp Soc Psychol. 2007;43(2):303–11.

Ambadar Z, Schooler JW, Cohn JF. Deciphering the enigmatic face: the importance of facial dynamics in interpreting subtle facial expressions. Psychol Sci. 2005;16(5):403–10.

Bould E, Morris N, Wink B. Recognising subtle emotional expressions: The role of facial movements. Cogn Emot. 2008;22(8):1569–87.

Krumhuber EG, Kappas A, Manstead ASR. Effects of dynamic aspects of facial expressions: a review. Emot Rev. 2013;5(1):41–6.

Tobin A, Favelle S, Palermo R. Dynamic facial expressions are processed holistically, but not more holistically than static facial expressions. Cogn Emot. 2016;30(6):1208–21.

Holland CAC, Ebner NC, Lin T, Samanez-Larkin GR. Emotion identification across adulthood using the Dynamic FACES database of emotional expressions in younger, middle aged, and older adults. Cogn Emot. 2019;33(2):245–57.

Kamachi M, Bruce V, Mukaida S, Gyoba J, Yoshikawa S, Akamatsu S. Dynamic properties influence the perception of facial expressions. Perception. 2013;42(11):1266–78.

Blair RJ, Colledge E, Murray L, Mitchell DG. A selective impairment in the processing of sad and fearful expressions in children with psychopathic tendencies. J Abnorm Child Psychol. 2001;29(6):491–8.

Gepner B, Deruelle C, Grynfeltt S. Motion and emotion: a novel approach to the study of face processing by young autistic children. J Autism Dev Disord. 2001;31(1):37–45.

Valstar MF, Mehu M, Jiang B, Pantic M, Scherer K. Meta-analysis of the first facial expression recognition challenge. IEEE Trans Syst Man Cybern B Cybern. 2012;42(4):966–79.

Vaiman M, Wagner MA, Caicedo E, Pereno GL. Development and validation of an Argentine set of facial expressions of emotion. Cogn Emot. 2017;31(2):249–60.

Krumhuber EG, Küster D, Namba S, Skora L. Human and machine validation of 14 databases of dynamic facial expressions. Behav Res Methods. 2021;53(2):686–701.

Lautenbacher S, Kunz M. Facial pain expression in dementia: a review of the experimental and clinical evidence. Curr Alzheimer Res. 2017;14(5):501–5.

Kirsch A, Brunnhuber S. Facial expression and experience of emotions in psychodynamic interviews with patients with PTSD in comparison to healthy subjects. Psychopathology. 2007;40(5):296–302.

Visser M. Emotion recognition and aging. Comparing a labeling task with a categorization task using facial representations. Front Psychol. 2020;11:139.

Ochi R, Midorikawa A. Decline in emotional face recognition among elderly people may reflect mild cognitive impairment. Front Psychol. 2021;12:664367.

Cortes DS, Tornberg C, Bänziger T, Elfenbein HA, Fischer H, Laukka P. Effects of aging on emotion recognition from dynamic multimodal expressions and vocalizations. Sci Rep. 2021;11(1):2647.

Lawrence K, Campbell R, Skuse D. Age, gender, and puberty influence the development of facial emotion recognition. Front Psychol. 2015;6:761.

Ruffman T, Kong Q, Lim HM, Du K, Tiainen E. Recognition of facial emotions across the lifespan: 8-year-olds resemble older adults. Br J Dev Psychol. 2023;41(2):128–39.

Monkul ES, Green MJ, Barrett JA, Robinson JL, Velligan DI, Glahn DC. A social cognitive approach to emotional intensity judgment deficits in schizophrenia. Schizophr Res. 2007;94(1):245–52.

Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, et al. Facial emotion recognition in schizophrenia: intensity effects and error pattern. Am J Psychiatry. 2003;160(10):1768–74.

Zhao L, Wang X, Sun G. Positive classification advantage of categorizing emotional faces in patients with major depressive disorder. Front Psychol. 2022;13:734405.

Tsypes A, Burkhouse KL, Gibb BE. Classification of facial expressions of emotion and risk for suicidal ideation in children of depressed mothers: Evidence from cross-sectional and prospective analyses. J Affect Disord. 2016;197:147–50.

Rhodes M, Anastasi J. The own-age bias in face recognition: a meta-analytic and theoretical review. Psychol Bull. 2011;138:146–74.

Surian D, van den Boomen C. The age bias in labeling facial expressions in children: Effects of intensity and expression. PLoS One. 2022;17(12):e0278483.

Wang X, Han S. Processing of facial expressions of same-race and other-race faces: distinct and shared neural underpinnings. Soc Cogn Affect Neurosci. 2021;16(6):576–92.

Wong HK, Stephen ID, Keeble DRT. The own-race bias for face recognition in a multiracial society. Front Psychol. 2020;11:208.

Hall JA, Matsumoto D. Gender differences in judgments of multiple emotions from facial expressions. Emotion (Washington, DC). 2004;4(2):201.

Weisenbach SL, Rapport LJ, Briceno EM, Haase BD, Vederman AC, Bieliauskas LA, et al. Reduced emotion processing efficiency in healthy males relative to females. Soc Cogn Affect Neurosci. 2012;9(3):316–25.

Kessels RP, Montagne B, Hendriks AW, Perrett DI, de Haan EH. Assessment of perception of morphed facial expressions using the Emotion Recognition Task: Normative data from healthy participants aged 8–75. J Neuropsychol. 2014;8(1):75–93.

Groves KS. Gender differences in social and emotional skills and charismatic leadership. J Leadersh Organ Stud. 2005;11(3):30–46.

Abdi B. Gender differences in social skills, problem behaviours and academic competence of Iranian kindergarten children based on their parent and teacher ratings. Procedia Soc Behav Sci. 2010;5:1175–9.

Gomes RMS, Pereira AS. Influence of age and gender in acquiring social skills in Portuguese preschool education. Psychology. 2014;5(2):99–103.

Herlitz A, Lovén J. Sex differences and the own-gender bias in face recognition: A meta-analytic review. Vis Cogn. 2013;21(9–10):1306–36.

Friesen WV. Cultural differences in facial expressions in a social situation: An experimental test on the concept of display rules. 1973.

Inamine M, Endo M. The effects of context and gender on the facial expressions of emotions: a study of Japanese college students (in Japanese). Jpn J Res Emot. 2009;17(2):134–42.

Becker DV, Kenrick DT, Neuberg SL, Blackwell KC, Smith DM. The confounded nature of angry men and happy women. J Pers Soc Psychol. 2007;92(2):179–90.

Hess U, Adams RB Jr., Grammer K, Kleck RE. Face gender and emotion expression: are angry women more like men? J Vis. 2009;9(12):19.1-8.

Gong X, Wong N, Wang D. Are gender differences in emotion culturally universal? Comparison of emotional intensity between Chinese and German samples. J Cross Cult Psychol. 2018;49(6):993–1005.

Zhou M. Changes in the gender stereotypes in contrastive studies of Japanese and Chinese: focusing on gender expressions and the usage of language by women and men (in Japanese). J Educ Res. 2020;25:45–53.

Fischer AH, Manstead ASR. The relation between gender and emotion in different cultures. In: Gender and emotion: social psychological perspectives. Studies in emotion and social interaction, second series. New York, NY, US: Cambridge University Press; 2000. p. 71–94.

Plant EA, Hyde JS, Keltner D, Devine PG. The gender stereotyping of emotions. Psychol Women Q. 2000;24(1):81–92.

When two do the same, it might not mean the same: the perception of emotional expressions shown by men and women. New York, NY, US: Cambridge University Press; 2007.

Kret M, Pichon S, Grezes J, De Gelder B. Men fear other men most: gender specific brain activations in perceiving threat from dynamic faces and bodies – an fMRI study. Front Psychol. 2011;2:3.

Takagi S, Hiramatsu S, Tanaka A. Emotion perception from audiovisual expressions by faces and voices. Cogn Stud Bull Jpn Cogn Sci Soc. 2014;21(3):344–62.

Shioiri T, Someya T, Helmeste D, Tang S. Cultural difference in recognition of facial emotional expression: Contrast between Japanese and American raters. Psychiatry Clin Neurosci. 1999;53:629–33.

Fear. 2022. Available from: https://www.paulekman.com/universal-emotions/what-is-fear/.

Senju A, Vernetti A, Kikuchi Y, Akechi H, Hasegawa T, Johnson MH. Cultural background modulates how we look at other persons’ gaze. Int J Behav Dev. 2013;37(2):131–6.

Izumika R, Cabeza R, Tsukiura T. Neural mechanisms of perceiving and subsequently recollecting emotional facial expressions in young and older adults. J Cogn Neurosci. 2022;34(7):1183–204.

Keightley ML, Chiew KS, Winocur G, Grady CL. Age-related differences in brain activity underlying identification of emotional expressions in faces. Soc Cogn Affect Neurosci. 2007;2(4):292–302.

Jenkins LM, Kendall AD, Kassel MT, Patrón VG, Gowins JR, Dion C, et al. Considering sex differences clarifies the effects of depression on facial emotion processing during fMRI. J Affect Disord. 2018;225:129–36.

Harada T, Mano Y, Komeda H, Hechtman LA, Pornpattananangkul N, Parrish TB, et al. Cultural influences on neural systems of intergroup emotion perception: An fMRI study. Neuropsychologia. 2020;137:107254.

Acknowledgements

The authors are responsible for the scientific content of this study. We thank the members of the Department of Neuropsychiatry, Graduate School of Medicine, Nippon Medical School and the members of the Department of Medical Technology, Ehime Prefectural University of Health Sciences, for their support. We are grateful to Prof. Rachael E. Jack at the School of Psychology and Neuroscience, University of Glasgow, for her helpful suggestions regarding the study procedures.

Funding

This study was supported by the Japan Society for the Promotion of Science (grant numbers 19K08028 and 16KK0212 to MK), which funded the design of the study and the collection, analysis, and interpretation of the data. It was also supported by an Ehime Prefectural University of Health Sciences Grant-in-Aid for Education and Research to TH, which funded the design of the study, the collection and interpretation of the data, and the writing of the manuscript.

Author information

Contributions

MK planned the study protocol, created the tasks, performed the experiments, and supervised the writing of the dissertation. TH created the tasks, performed the experiments, analyzed the data, and was a major contributor to writing the manuscript. All authors have read and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

All participants provided written informed consent, and the study was approved by the ethics committees of Nippon Medical School Tama Nagayama Hospital (approval number 660) and Ehime Prefectural University of Health Sciences (approval number 21–018).

Consent for publication

Written informed consent for publication was obtained from each participant in this study.

Competing interests

The authors declare no conflicts of interest in this study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hama, T., Koeda, M. Characteristics of healthy Japanese young adults with respect to recognition of facial expressions: a preliminary study. BMC Psychol 11, 237 (2023). https://doi.org/10.1186/s40359-023-01281-5

DOI: https://doi.org/10.1186/s40359-023-01281-5