Abstract

Background

Studies on animal behaviour often involve the quantification of the occurrence and duration of various activities. When direct observations are challenging (e.g., at night, in a burrow, at sea), animal-borne devices can be used to remotely record the movement and behaviour of an animal (e.g., changing body posture and movement, geographical position) and/or its immediate surrounding environment (e.g., wet or dry, pressure, temperature, light). Changes in these recorded variables are related to different activities undertaken by the animal. Here we explored the use of animal-borne acoustic recorders to automatically infer activities in seabirds.

Results

We deployed acoustic recorders on Cape gannets and analysed sound data from 10 foraging trips. The different activities (flying, floating on water and diving) were associated with clearly distinguishable acoustic features. We developed a method to automatically identify the activities of equipped individuals, exclusively from animal-borne acoustic data. A random subset of four foraging trips was manually labelled and used to train a classification algorithm (k-nearest neighbour model). The algorithm correctly classified activities with a global accuracy of 98.46%. The model was then used to automatically assess the activity budgets on the remaining non-labelled data, as an illustrative example. In addition, we conducted a systematic review of studies that have previously used data from animal-borne devices to automatically classify animal behaviour (n = 61 classifications from 54 articles). The majority of studies (82%) used accelerometers (alone or in combination with other sensors, such as gyroscopes or magnetometers) for classifying activities, and to a lesser extent GPS, acoustic recorders or pressure sensors, all potentially providing a good accuracy of classification (> 90%).

Conclusion

This article demonstrates that acoustic data alone can be used to reconstruct activity budgets with very good accuracy. In addition to the animal’s activity, acoustic devices record the environment of equipped animals (biophony, geophony, anthropophony) that can be essential to contextualise the behaviour of animals. They hence provide a valuable alternative to the set of tools available to assess animals’ behaviours and activities in the wild.

Background

Studies on animal behaviour often involve the quantification of individuals’ activities [1], from the definition of an ethogram to the quantification of an activity budget [2]. Knowledge on how individuals allocate their time according to different activities is important in terms of understanding their flexibility towards changes in the environment, such as variations in temperature [3, 4], habitat [5, 6], social systems [7] or prey availability [8, 9].

Traditionally, the assessment of activity budgets has required long hours of observations in the field [10], and has been applied to various species (e.g., in primates [11,12,13], birds [14, 15], deer [16], rodents [17], fish [18], bats [19], insects [20], seals [21], cetaceans [22]). However, this is not always practical. For example, direct observations are hindered when animals are active at night, when they spend time in hidden enclosed places (e.g., burrow), or when they travel long distances in remote areas (e.g., dense forest, ocean). In addition, the presence of a human observer can potentially disturb the animals and impact the integrity of collected information on their behaviours [23]. Recent technological developments have given rise to devices that can be deployed on animals (i.e., animal-borne devices) and that can thus remotely record variables that are related to different activities undertaken by study animals. This has greatly enhanced our understanding of time allocation in elusive wild populations [24,25,26,27].

Several types of instruments have been used to study animal activity budgets. First, changes in the geographic location of an animal may inform on its activity. From geographical positions recorded using tracking devices such as radio-tracking or global positioning systems (GPS), the speed [28, 29], the sinuosity [30, 31] or a combination of the two [32] can be derived to infer behavioural activities. Second, if species are moving through different environments to engage in various activities, distinctive features of the environments can be recorded and related to activities. For example, in seabirds, sensors recording the accumulated time spent immersed in water inform on the time spent floating on the water or diving [33,34,35,36]. Similarly, temperature loggers have been used to estimate the time spent in different environments, such as in the water, in the air and on the land [37, 38]. For diving species, detailed information on animals’ diving behaviours can be obtained from the use of time depth recorders [39,40,41]. Ultimately, combining data from different sources, e.g., recordings of the depth, the temperature and the light, has been shown to allow for robust interpretations of activities undertaken by elusive animals [42].

Third, since the animal’s behaviour is the direct consequence of its coordinated body movement [43], the body motion and posture of an animal can be monitored and allow researchers to make inferences about an animal’s behaviour. Acceleration sensors have hence often been used to study animal behaviour [44]. The further design of bi- [45] and then tri-axial accelerometry [46] allowed for more detailed study of animal movements in three dimensions and increased the number of different activities that could be recorded and automatically identified [46]. In addition to time-activity budgets, such information is increasingly used to assess energy expenditure during each activity [47]. Provided that sufficient knowledge of the species and of their movements during different activities is available to correctly interpret the motion in every axis, accelerometers are extremely powerful tools to record animals’ activities remotely. As such, they have been widely used on a great diversity of species (reviewed in [48]). However, accelerometry data are limited in terms of the surrounding environmental information they can yield, although such information may underpin a meaningful interpretation of these behaviours.

Animal-borne acoustic devices can record and monitor the vocalization of animals in various contexts. In addition to these vocalizations, sound recordings can also provide information on the activities of animals, since different activities generate different sounds and background noise. Hence, information on speed of movement (particle flow), different environments (open air, shelter, water) and environmental interactions (browsing, gnawing, digging, scratching, diving, etc.) can be captured. With the recent advancement of acoustic recording technologies, this concept has been explored and applied to visually identify the flipper strokes of seals [49] and the foraging behaviour of deer [50] and bats [51] from spectrograms. Furthermore, the automatic detection of the behaviours and activities of birds from sound data has previously been demonstrated [52]. Acoustic recorders have also been used to improve automatic classification of behaviours from accelerometers [53, 54].

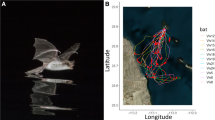

Here, we aimed to first solve the challenge of recording sound data through instrument deployment on wild free-ranging seabirds, i.e., species that move both in the air and in the water, where most dive to feed on marine resources. Second, we developed a procedure based on existing statistical learning methods to automatically identify the activities of equipped individuals, exclusively from animal-borne acoustic data, to assess their time-activity budgets. Our study species is the Cape gannet Morus capensis, an endangered seabird endemic to southern Africa [55]. This species has been recently classified as endangered by the IUCN red list because of a drastic loss of more than 50% of the population over three generations [56]. This has mostly been related to a massive decrease of their natural feeding resources due to fisheries [57,58,59]. Cape gannets feed mainly on small pelagic fish, sardines Sardinops sagax and anchovies Engraulis encrasicolus [60]. Their foraging effort, in terms of trip duration and time spent in different activities, reflects the abundance of their natural prey in the local marine environment [61,62,63,64]. Furthermore, their foraging effort directly influences their breeding investment and success [65, 66]. As a consequence, the monitoring of their foraging activities at sea is of particular interest in relation to both the local marine ecosystem and the management of this threatened species. We deployed acoustic recorders on chick-rearing Cape gannets to record their behaviour at sea (data from 10 adults used in this study). Based on previous work with observations from bird-borne video cameras [67] we identified three different main activities: floating on the water, flying, and diving. These activities are associated with different sounds that can be identified by a trained human ear so that they were manually labelled on a subset of the data set (data from four individuals randomly selected representing ~ 33 h of acoustic data). 
Thirty-five acoustic features were then extracted to acoustically describe the activities. A supervised learning algorithm was trained on the labelled data to automatically identify activities on non-labelled data (total of ~ 93 h of acoustic data). To do this, five types of supervised learning algorithms were tested using the Classification Learner App (Statistics and Machine Learning Toolbox, Matlab R2019b) and the k-nearest neighbour model was finally chosen for its performance on the diving-class activity (rare class of high interest). The resulting time-activity budget of foraging Cape gannets, as quantified from acoustic data exclusively, is presented and compared with results obtained from previous studies on the same species but using different devices. Furthermore, we conducted a systematic review of studies that automatically classified activities from animal-borne devices and compared the performances obtained from the analysis of various types of devices.

Results

Different sounds for different activities

Each activity undertaken by the Cape gannets when foraging at sea was associated with different sounds recorded by the bird-borne acoustic devices (Fig. 1A).

Illustration of (A) the sound spectrogram along with (B) the manual identification and labelling of activities and (C, D) the predictions before and after revision. Three main activities were defined and included in the budget (flying, diving and floating on the water) and two additional transition activities (entering water and taking off) were used exclusively for the revision algorithm. These transition activities were used to confirm dive and flying events, and then merged into their corresponding main activity. Isolated segments were removed and relabelled and predictions were smoothed using a moving median over 6 segments
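The smoothing step mentioned in this caption can be illustrated with a short sketch. It is not the revision procedure used in the study. It only shows what a moving median over 6 segments does to an isolated misclassified segment, under assumptions that are ours: activities are encoded as integers (the result of a median depends on that ordering), the even-length window covers three segments before and two after the current one, windows are truncated at the edges, and the lower of the two middle values is taken so that the output remains a valid class code. The function name `moving_median` is hypothetical.

```python
from statistics import median_low


def moving_median(codes, window=6):
    """Smooth a sequence of integer class codes with a moving median.

    Assumptions (not taken from the study): the window spans `window // 2`
    segments before the current one and the remainder from the current
    segment onwards, it is truncated at both ends of the sequence, and
    median_low is used so the result is always one of the input codes.
    """
    half = window // 2
    smoothed = []
    for i in range(len(codes)):
        start = max(0, i - half)
        stop = min(len(codes), i + window - half)
        smoothed.append(median_low(codes[start:stop]))
    return smoothed


# Hypothetical encoding: 0 = floating, 1 = flying, 2 = diving.
predictions = [0, 0, 0, 0, 2, 0, 0, 0, 0, 0]
smoothed = moving_median(predictions)  # the isolated "2" is replaced by 0
```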

Different values of acoustic features were measured for each activity (Fig. 2), as calculated on sound segments of length ~ 1.4 s (corresponding to 2¹⁴ samples). For example, the sound spectrogram (Fig. 1A) shows that the sound is louder and spans a wider frequency range during flying compared to diving or when floating on water, and this was measured by their mean RMS and spectral bandwidth values (see red crosses in Fig. 2A, B). For all the features though, the distributions for each activity overlap in some way (Fig. 2).

Density estimation of a selection of acoustic features for each activity (8 out of 21 temporal features, 8 out of 14 spectral features). Means and medians are represented by blue and black lines, respectively. The red crosses indicate the values for each feature calculated on the data sample illustrated on Fig. 1 (calculated as means on all segments per class)

Automatic identification of activities from sound data

Among the five types of supervised learning algorithms that were tested (see “Materials and methods”), the k-nearest neighbour model was finally chosen, because its ratio between true and false positives for the diving class (of highest interest in our study case) was higher than that in other algorithms, still with a similar global accuracy.
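For readers unfamiliar with the k-nearest neighbour approach, the principle can be sketched as follows. This is a generic, minimal illustration and not the Matlab Classification Learner model used in the study: the function name `knn_predict`, the choice of k = 3, the Euclidean distance and the two-feature toy data (already scaled to a 0–1 range) are all assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Return the majority label among the k labelled points closest to `query`."""
    # Euclidean distance from the query to every labelled segment
    distances = [
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy data invented for illustration: (scaled RMS, scaled spectral bandwidth)
train_features = [(0.80, 0.90), (0.75, 0.85), (0.10, 0.20),
                  (0.12, 0.25), (0.30, 0.10), (0.28, 0.12)]
train_labels = ["flying", "flying", "floating",
                "floating", "diving", "diving"]

predicted = knn_predict(train_features, train_labels, (0.78, 0.88))
```

In practice, features measured on different scales would need to be standardised before computing distances, otherwise the feature with the largest numerical range dominates the neighbour search.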

The classification procedure was able to correctly classify the activities of Cape gannets (the “labelled set”) with a global accuracy of 98.46%. The performances, as measured by the global confusion matrix and the area under the ROC curve (AUC) for each class, varied per activity (Additional file 1: Figure S1). The sensitivity was lowest for the class “diving” compared to the other classes (Fig. 3), meaning that over all “diving” segments, 62.3% (908/1457) were correctly detected (others were wrongly classified as floating or flying), whereas for “flying” and “floating” segments, > 98% of segments were correctly detected (Fig. 3). Nonetheless, when diving was predicted, it was reliable given the high precision value (95.5%, Fig. 3). The classes “floating on water” and “flying” were predicted with high accuracy, given the high values of both indicators in all instances (> 97%, Fig. 3). Overall, the number of false negatives and false positives was low, as measured by the high value of “Informedness” at 97.66% (the multi-class equivalent of Youden’s index). These results were consistent among the four individuals studied, with the classification performances being similar between individuals (Additional file 2: Figure S2).

Performances of the algorithm (after classification and revision) on the labelled data set (data points correspond to time segments ~ 1.4 s) summed over all individual bird files (4 individuals). The confusion matrices (square 3 × 3 matrices) show the number of correctly classified events (True Positives, TP) for each class on the diagonal, the number of False Positives (FP) per column for each class (except the value on the diagonal) and the number of False Negatives (FN) per line for each class (except the value on the diagonal). Performance indices of Precision (TP/(TP + FP)) and Sensitivity (TP/(TP + FN)) are shown for each class on the bottom row and right column, respectively
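The indices defined in this caption can be computed from any confusion matrix laid out in the same way (observed classes as lines, predicted classes as columns). The sketch below uses a small matrix whose counts are invented purely for illustration and are not the values of Fig. 3. The function names are hypothetical, and global accuracy is taken here in its usual sense of the sum of the diagonal divided by the total number of segments.

```python
def per_class_metrics(confusion, classes):
    """Precision and sensitivity per class from a square confusion matrix.

    confusion[i][j] = number of segments observed as class i and
    predicted as class j (lines = observed, columns = predicted).
    """
    metrics = {}
    for i, name in enumerate(classes):
        tp = confusion[i][i]
        fn = sum(confusion[i]) - tp                 # rest of the line
        fp = sum(row[i] for row in confusion) - tp  # rest of the column
        metrics[name] = {
            "precision": tp / (tp + fp),
            "sensitivity": tp / (tp + fn),
        }
    return metrics


def global_accuracy(confusion):
    """Share of all segments that lie on the diagonal."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Invented counts, for illustration only
classes = ["floating", "flying", "diving"]
confusion = [
    [90, 8, 2],
    [5, 94, 1],
    [6, 4, 10],
]
metrics = per_class_metrics(confusion, classes)
accuracy = global_accuracy(confusion)
```

With such a layout, a rare class can show a low sensitivity while retaining a high precision, which is the pattern reported above for diving.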

When studied in terms of activity budget (meaning that 1.4 s segments are grouped into “events” of the same activity), it appeared that the number of predicted events was over-estimated, although they were predicted with shorter duration (Fig. 3B). Nonetheless, when studied in terms of time-activity budget, the predicted time spent in each activity was very close to the observed time (between 0.3 and 1.1% of difference depending on the activity, Table 1).
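The distinction between an activity budget counted in events and a time-activity budget can be made concrete with a short sketch. It assumes, for illustration only, that an “event” is a run of consecutive segments sharing the same label and that all segments have equal duration, so that the share of segments equals the share of time. The function names and the label sequence are hypothetical.

```python
from itertools import groupby


def segments_to_events(labels):
    """Group consecutive identical labels into (activity, n_segments) events."""
    return [(label, sum(1 for _ in run)) for label, run in groupby(labels)]


def time_budget(labels):
    """Percentage of segments (hence of time) spent in each activity."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return {label: 100 * n / len(labels) for label, n in counts.items()}


# Invented sequence of per-segment labels
labels = ["floating"] * 4 + ["flying"] * 3 + ["diving"] * 2 + ["floating"]
events = segments_to_events(labels)
budget = time_budget(labels)
```

A single misclassified segment in the middle of a long event splits it into three shorter events, which inflates the event count while barely changing the percentage of time per activity.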

Acoustic-based time-activity budget of a seabird

Applying the algorithm to non-labelled data, we found that when foraging in December 2015 from Bird Island (Algoa Bay, South Africa), chick-rearing Cape gannets spent on average 35.1%, 63.7%, and 1.2% of their time flying, on the water and diving, respectively. Eight of the nine individuals spent most of their time floating on the water, although this varied largely per individual (range 43.3–80.1% of time, Fig. 5, Additional file 5: Table S1). The number of dives estimated per individual also varied greatly between individuals, from 23 to 174 dives per trip (Fig. 4).

Systematic review on automatic classification of activities from animal-borne devices

We extracted information from 61 reviewed classifications (54 articles, including our study), published between 2000 and the 5th of April 2021, that automatically classified activities using supervised learning algorithms and based on data from animal-borne devices (Table 2).

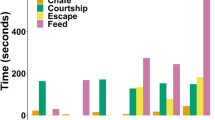

Terrestrial species were by far the most studied species (n = 40, Table 2, Fig. 5), followed by aquatic species (n = 13) and flying species (n = 8). The most commonly used devices were accelerometers (82% of reviewed studies, Table 2), either alone (n = 34 studies) or in association with other devices (n = 16). Acoustic recorders have rarely been used in this context as we found only three studies that met our criteria for the systematic review. The weight of devices was reported in only 48% of the studies and ranged widely for all device categories (Table 2). The different types of devices varied in terms of sampling frequency, with the GPS devices being the most limited (1 Hz at the highest), while acoustic recorders provided the highest sampling frequency (> 10 kHz). In comparison, accelerometers were used over a large range of sampling rates, from 0.02 to 100 Hz (Table 2). Although the sampling frequency did not seem to be directly related to the global accuracy, a higher sampling frequency seemed to allow for a higher number of activities studied in the activity budget (Additional file 3: Figure S3).

Performance of automatic classifications of activity budgets as measured by the global accuracy, as a function of the type of devices used in the 61 reviewed classifications (from 54 articles, including our study). Colours indicate a categorisation of species: n = 40 terrestrial species (green), n = 13 aquatic species (blue), n = 8 flying species (orange). GPS: global positioning systems. Accel: accelerometers. Other devices deployed concomitantly with accelerometers included GPS, gyroscopes, magnetometers, pressure sensors, and acoustic recorders [26, 32, 52,53,54, 68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115]

The number of activities studied in a budget varied greatly among studies, from two to 19 (Table 2), with a mode at three activities (Fig. 6). The highest number of activities (19, Table 2) was extracted from acoustic recorders, followed by a study based on accelerometers (12 activities). The global accuracy of classification reported in the reviewed studies varied between 65 and 100% (Table 2) and this did not seem to be related to the size of the different data sets studied (Additional file 4: Figure S4). The highest accuracies were obtained from accelerometer data (Figs. 5, 6), even though a good accuracy (> 90%) could be achieved using data collected from all types of devices (Fig. 5). Among all articles reviewed, the performance of our classification (98.46%) based exclusively on acoustic data appeared very high and demonstrated that the activity budget of wild animals can be recorded and reconstructed exclusively from acoustic data.

Performance of automatic classifications of activity budgets as measured by the global accuracy, as a function of the number of activities in the budget, extracted from 61 reviewed classifications (54 articles, including our study). Symbols indicate the type of animal-borne devices used to remotely record the behaviour of study animals and the full red circle indicates the values obtained in our study. The numbers of activities are all integers, but a random horizontal offset was added for the figure display to limit overlap of points. GPS: global positioning systems. Other devices deployed concomitantly with accelerometers included GPS, gyroscopes, magnetometers, pressure sensors and acoustic recorders [26, 32, 52,53,54, 68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115]

Ultimately, the potentially most important difference among the different types of devices in terms of data yield might be the nature of other types of information provided, in addition to the animal’s activities themselves (Table 2). Accelerometers have been used to reconstruct the energy budget associated with different activities; GPS devices provide information on the geographical position and distribution of the animals; pressure sensors provide information on the diving profiles of aquatic species. In comparison, acoustic recorders provide information on all the sounds surrounding an animal: the biophony (including vocalisations from the equipped animal, its conspecifics, but also heterospecifics), the geophony (all natural but non-biological sounds related to the habitat), and the anthropophony (human-generated sounds).

Discussion

The different activities undertaken by our study animals were associated with distinguishable sets of acoustic features. They could then be automatically identified from sound data exclusively, with very good accuracy (98.5% global accuracy). Although the performances varied per class (i.e., the three main activities, floating on water, flying, and diving), the precision was consistently very high (95.5–99.4%, n = 3 activities), showing that the activities could be predicted with high confidence, especially if studied as percentage of time spent in each of the activities. Our results compared favourably to those of other studies using acoustic data to infer behaviour [52,53,54] and compared very well to all previously published studies that automatically classified activities based on animal-borne devices (Fig. 6). Interestingly, our results based on acoustic data showed a higher classification performance compared to a previous study classifying the same activities on the same study species based on speed and turning angles derived from geographical location data (92.3% global accuracy, 91.8–94.8% precision [32]). In addition to high predictive performances, acoustic devices provide additional information on the surrounding biophony, geophony and anthropophony that can be used to contextualize the observed behaviours. They thus appear to be a valuable alternative to other devices for the monitoring of animals’ behaviours.

By inferring the behaviour of birds from acoustic data, we were able to estimate the time-activity budget of breeding Cape gannets during their foraging trips. Our estimations are comparable with previous studies on the same species, with Cape gannets always spending proportionately more time on the water than flying: 64% and 35% (this study), 58% and 41% (breeding season 2001–2002 at Bird Island in Lambert’s Bay, based on three-dimensional accelerometry data [116]), 68% and 31% (breeding season 2012–2013 at Bird Island in Algoa Bay, based on geographical location data [117]), respectively. The number of dives predicted in our study was also within similar range compared to previous studies: 23–174 (this study), 10–110 (breeding season 2012–2013 at Bird Island in Algoa Bay, based on time-depth recorders, [118]), 12–218 (breeding seasons 2012 and 2014 on Malgas, based on time-depth recorders, [57]).

Various devices are available to remotely record an animal’s behaviours and activities. Our systematic review showed that accelerometers are the devices most commonly used for this purpose, even though a good accuracy of classification can be obtained from a range of devices. The weight of devices did not appear to be the most limiting factor, since all types of devices can be found at a relatively small size (< 20 g, the smallest device being an accelerometer at 2 g). Otherwise, the sampling frequency of the different types of devices might also be an important factor, since our results suggest that a higher sampling frequency may provide access to a higher number of recorded activities, and thus a more detailed description of the animal’s behaviours. In this respect, the most limiting device would be the GPS, and the device with the highest potential would be the acoustic recorder. Ultimately, if technical aspects can be overcome (e.g., deployment techniques and weight of devices, data analyses and classification algorithm using recent machine learning techniques), our systematic review suggested that the most important factor to be considered when choosing a device for recording an animal’s activities should be access to additional information. Indeed, if all types of devices can provide a good accuracy of classification on the animal’s activities, they all record different variables. As a consequence, they each provide additional information on different aspects related to the animal’s behaviours. Accelerometers record the fine-scale movements of animals in three dimensions, and thus provide details on movement related activities [48, 116, 119]. In addition to behavioural activities, accelerometers can be used to measure the energy expenditures of animals during different activities and thus allow for reconstructing dynamic energy budget models [47]. 
Time-depth recorders are best adapted for aquatic animals by providing detailed information on their diving behaviour [40, 120, 121]. In comparison, acoustic recorders do not measure the displacement or body movement of animals directly, yet our study showed that they can be used alone to reconstruct the activities of animals with very high accuracy, comparable to that obtained using other devices, such as accelerometers. In addition, acoustic recorders simultaneously record the biophony, geophony and anthropophony in the environment of equipped animals, and thus provide a large diversity of other information that can be essential to interpret the animals’ behaviours in a meaningful way. The physiology (heart rate) and the breeding behaviour (hatchling sounds in a burrow) of some species can be recorded remotely using acoustic devices [122]. The surrounding environment of equipped animals is also recorded and could help contextualize specific behaviours [52]. The vocalizations of equipped animals allow the study of variations in social interactions and grouping behaviours in different contexts [123, 124]. Furthermore, multi-species associations can be recorded. For example, in our data set, we recorded dolphin whistles underwater during some of the dives performed by equipped Cape gannets (data not shown). Interactions between seabirds and fisheries or other human marine activities could conceivably be recorded as well. Similar information on the surrounding context of animals can also be obtained using animal-borne video cameras [125,126,127], but in comparison acoustic recorders are much smaller in size and weight (which can be crucial for deployments on wild animals), they can record continuously for a much longer duration, and they record sounds from all directions, whereas cameras are limited by their field of view. 
Ultimately, combining different recorders may help reconstruct a more comprehensive understanding of animal behaviour in their natural environment [42, 53, 54], as long as this is done without compromising the welfare and behaviour of the study animals [128].

Conclusion

This article demonstrates the use of animal-borne acoustic data alone to automatically infer the activities of wild elusive animals with high accuracy. In addition to animals’ activities, acoustic recorders provide information on the surrounding environment of equipped animals (biophony, geophony, anthropophony) that can be essential to contextualize and interpret the behaviour of study animals. They therefore show promise to become a valuable and more regularly used alternative to the set of devices used to record animal activities remotely.

Materials and methods

Data collection

Fieldwork took place on Bird Island (Algoa Bay, South Africa) during December 2015. We deployed twenty devices (details below) on chick-rearing Cape gannets to record their behaviour while foraging at sea. Four individuals were randomly selected for manual identification of activity and model training. The trained model was then applied to automatically predict the time-activity budget on the data for which the entire foraging trip was recorded, which comprised another six individuals (trips not recorded in full resulted from progressive water damage).

Deployment procedure

Birds on departure to sea were captured near their nest using a pole with a hook on the end. Only one parent was captured per nest and devices were attached for one foraging trip only (usually 1–2 days), while the partner was on the nest guarding the chick. Nests were then monitored every hour from sunrise to sunset, and the deployed birds were captured again soon after their return to the colony and the devices were retrieved. Birds were handled for 8 and 6 min on average for the first and second capture, respectively. The handling procedure consisted of attaching devices (using adhesive tape, Tesa, Germany) and measuring the bird's body mass for the first capture (average 2580 g, n = 10 birds, measured with Pesola, Baar, Switzerland, precision 50 g), and retrieving devices and taking standard measurements (not used in this study) for the second capture. Acoustic recorders were deployed in combination with a GPS (global positioning system) device on eight birds (total mass 60 g, 2.3% of bird body mass), a GPS and a video camera on one bird (90 g, 3.4% of bird body mass), or a time-depth recorder and a video camera on 11 birds (80 g, 3.1% of bird body mass). The devices had no significant effect on the duration of foraging trips, when compared between equipped and non-equipped birds (for details see [124]), so normal behaviour was assumed. Only the data from the acoustic recorders were used in this study.

Acoustic recorders

Audio recorders (Edic-mini Tiny + B80, frequency response 100 Hz–10 kHz ± 3 dB, 65 dB dynamic range, TS-Market Ltd., Russia, fitted with a CR2450 battery, 16.2 g, autonomy estimated for ~ 50 h at 22 kHz in our study, and provided for 190 h at 8 kHz by the manufacturer) were set up to record sound in mono at a sampling frequency of 22.05 kHz. They recorded continuously, hence collecting data during the whole foraging trip of the birds. The main challenge for collecting such acoustic data was to ensure high quality recordings on board a flying and diving bird. To limit disturbance from the wind, we placed the audio recorder on the lower back of the bird, under feathers and facing backwards. In addition, a thin layer of foam was added after the first deployment to reduce flow and background noise. We sealed the microphones in nitrile glove materials (amplitude attenuation of 6 dB SPL both in the air and in the water, no modification of the frequency response, as measured in the laboratory) to keep the devices sufficiently dry when immersed in the sea water but still ensure good quality sound recordings (avoiding thick waterproof casing).

Manual identification and label of activities

The activities of Cape gannets when foraging at sea were manually identified on a subset of our data set (henceforth referred to as “labelled dataset”). The data retrieved from four deployed Cape gannets were randomly selected, comprising ~ 33 h of recordings. Based on previous work with observations from bird-borne video cameras [67] we identified three different main activities: floating on the water, flying, and diving. Those three activities are associated with different sounds that can clearly be identified by a trained human ear (Fig. 1). When the bird is flying, the wind is usually loud and the wing flapping can sometimes be heard. When the bird is on water the ambient noise is usually lower, sometimes with water splashing sounds. The take-off is distinguishable with loud flapping at a high rate. Gannets dive in the water at high speed, up to 24 m s⁻¹ [129], so they enter the water with a loud impact noise, often saturating the amplitude of recording. Coming out of the water is also usually loud with sounds of rising bubbles. To manually label these data, the spectrograms of the selected sound data were visually observed and the sound was played concomitantly using the software Avisoft-SASLab Pro (version 5.2.09, Avisoft Bioacoustics, Germany). A total of 318 events “floating on the water”, 391 events “flying” and 243 events “diving” were identified and labelled. Those labelled data were then used to characterize the acoustic properties of each activity and to train the classification algorithm (using a cross-validation procedure, details below).

Characterization of activity from acoustic features

To characterize the bird’s activity from the sound recordings, an automatic feature extraction was applied. For each sound recording, the algorithm followed four steps. First, the sound data were downsampled to 12 kHz. Second, to remove low-frequency acoustic noise, the sound recordings were high-pass filtered (above 10 Hz) using a second-order Butterworth filter. Third, the recordings were divided into short sound segments of ~ 1.4 s (corresponding to 2^14 samples). This segment length was chosen to reflect the movement dynamics of our study species. In particular, dives last on average 20 s (minimum 6 s) and always start with an ‘entering the water’ phase that displays very specific sound features (Fig. 2) and lasts 1–2 s. A segment length of 2^14 samples (corresponding to 1.4 s) thus appeared most appropriate. The algorithm was also tested using segment lengths of 2^13 (0.68 s) and 2^15 (2.76 s) samples, which led to similar results (not shown). Fourth, a set of temporal (n = 21) and spectral (n = 14) features was extracted from each sound segment to acoustically describe the activities. Temporal features included envelope features, such as root mean square (RMS), peak-to-peak and peak-to-RMS values (means and standard deviations), as well as signal skewness, kurtosis, entropy, quantiles and zero crossing rate. Spectral features were computed from the power spectrum (fast Fourier transform) and included dominant frequency features (dominant frequency value, magnitude, ratio to the total energy, bandwidth at − 10 dB, spectral centroid and spectral flatness (the two latter computed as per [130])), in addition to quartiles of energy and the ratio of energy above three fixed thresholds (300, 1500, 5000 Hz). All acoustic features were computed using custom scripts in Matlab R2019b.
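The segmentation and a few of the temporal features can be illustrated with a minimal sketch. It is written in Python rather than the Matlab used in the study, and it is not the authors' script: the function names are hypothetical, the downsampling and Butterworth filtering steps are omitted, and the segment length is assumed to be 2^14 = 16,384 samples, which matches the stated ~ 1.4 s at 12 kHz.

```python
import math

SAMPLE_RATE_HZ = 12_000   # rate after downsampling, as stated in the text
SEGMENT_LEN = 2 ** 14     # assumed segment length: 16,384 samples (~1.4 s)


def split_segments(signal, seg_len=SEGMENT_LEN):
    """Cut a sequence of samples into consecutive full-length segments."""
    n_full = len(signal) // seg_len
    return [signal[i * seg_len:(i + 1) * seg_len] for i in range(n_full)]


def temporal_features(segment):
    """Return a small, illustrative subset of temporal features."""
    n = len(segment)
    rms = math.sqrt(sum(x * x for x in segment) / n)
    peak = max(abs(x) for x in segment)
    # A zero crossing is a sign change between consecutive samples.
    crossings = sum(
        1 for a, b in zip(segment, segment[1:]) if (a < 0) != (b < 0)
    )
    return {
        "rms": rms,
        "peak_to_peak": max(segment) - min(segment),
        "peak_to_rms": peak / rms if rms > 0 else 0.0,
        "zero_crossing_rate": crossings / (n - 1),
    }


# Usage on synthetic data: a 3-s, 440 Hz tone yields two full segments.
tone = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE_HZ)
        for t in range(3 * SAMPLE_RATE_HZ)]
segments = split_segments(tone)
features = [temporal_features(s) for s in segments]
```

Trailing samples that do not fill a complete segment are dropped here; how the original scripts handled the end of a recording is not stated.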

The three main activities were re-defined into five categories: floating on the water, taking-off (first three segments of flying when preceded by floating on the water), flying, entering water (first segment of diving when preceded by flying), and diving. The two transition classes were used for the ‘revision algorithm’ as described in the following section (“Classification procedure”).
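As an illustration of this re-definition, the following Python sketch (a hypothetical helper, not the authors' Matlab code) converts a sequence of three-class segment labels into the five categories. The label strings are assumptions chosen for readability.

```python
def add_transition_classes(labels):
    """Relabel 'floating'/'flying'/'diving' segments into five categories."""
    out = list(labels)
    i = 0
    while i < len(labels):
        # Find the end of the current run of identical labels.
        j = i
        while j < len(labels) and labels[j] == labels[i]:
            j += 1
        previous = labels[i - 1] if i > 0 else None
        if labels[i] == "flying" and previous == "floating":
            # First three segments of a flight that follows floating.
            for k in range(i, min(i + 3, j)):
                out[k] = "taking-off"
        elif labels[i] == "diving" and previous == "flying":
            # First segment of a dive that follows flying.
            out[i] = "entering water"
        i = j
    return out


example = ["floating", "floating", "flying", "flying", "flying", "flying",
           "diving", "diving", "floating"]
relabelled = add_transition_classes(example)
```

Flights shorter than three segments are relabelled entirely as taking-off in this sketch; the text does not say how such cases were treated.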

Classification procedure

The labelled data set was used to train and test a classification algorithm following a fivefold cross-validation procedure. Briefly, this procedure consisted of splitting the data set into a training set containing 4/5 of the data to train the algorithm, and testing it on the remaining 1/5. This partitioning of the data into training and test sets was done five times, and the performances of the algorithm on the test sets were averaged over those five replications.
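A fivefold partition of this kind can be sketched in Python as follows. The function names, the random shuffling and the seed are assumptions made for illustration; the study itself relied on Matlab, and its exact partitioning scheme is not described here.

```python
import random


def kfold_indices(n_items, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i
                     for idx in fold]
        yield train_idx, test_idx


def cross_validate(n_items, evaluate, k=5, seed=0):
    """Average a user-supplied score over the k held-out folds."""
    scores = [evaluate(train, test)
              for train, test in kfold_indices(n_items, k, seed)]
    return sum(scores) / len(scores)
```

Here `evaluate` stands for any function that trains on the training indices and returns a score computed on the test indices, so each segment is used for testing exactly once.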

Five types of supervised learning algorithms were tested (decision trees, discriminant analysis, support vector machines, nearest neighbour classifiers and ensemble classifiers), with some providing high classification accuracy (above 90%). Among them, the k-nearest neighbour model was finally chosen, because its ratio between true and false positives for the diving class (of highest interest in our study case) was higher than that of the other algorithms, with a similar global accuracy. The k-nearest neighbour algorithm was implemented with five neighbours, Euclidean distance as the distance metric and equal distance weights.
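The decision rule of such a classifier (five neighbours, Euclidean distance, equal weights) can be written in a few lines of Python. This is a sketch of the general technique, not the Matlab toolbox implementation used in the study; `knn_predict` is a hypothetical name, and the handling of tied votes is an assumption.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=5):
    """Predict a label by unweighted majority vote among k nearest points."""
    distances = sorted(
        (math.dist(row, query), label)
        for row, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    # On a tied vote, the label met first (closest neighbour) is returned.
    return votes.most_common(1)[0][0]


# Toy two-feature example with made-up values.
train_x = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5),
           (10, 10), (10, 11), (11, 10)]
train_y = ["floating"] * 5 + ["flying"] * 3
near_origin = knn_predict(train_x, train_y, (0.2, 0.2))
far_away = knn_predict(train_x, train_y, (10.5, 10.5))
```

In practice, features measured on very different scales are usually standardised before computing Euclidean distances; whether this was done in the study is not stated.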

In all tested models, each sound segment was treated as independent of the others. Because successive segments are in fact strongly dependent (for instance, Cape gannets do not fly just after diving without transitioning on the water), a ‘revision algorithm’ was subsequently applied to the results of the classification procedure. First, ‘entering water’ segments were used to confirm a dive event, or were deleted if no dive segment followed them. A similar procedure was used with the take-off and flying segments. Then, transition segments were merged into their corresponding class (entering water was relabelled and merged with its associated diving event, and take-off was similarly merged with flying). Isolated segments (defined as segments of one type occurring within a 6-segment-long window of segments sharing a different label) were removed and relabelled so that a coherent 6-segment-long window of a single event was kept (Fig. 1C, D). Finally, predictions were smoothed using a moving median over a 6-segment-long window (corresponding to ~ 8.42 s) to further reduce rapid changes in the predicted class over short durations and thus improve the prediction of events.
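Two of these revision steps can be sketched in Python. The first function merges the transition classes into their parent class. The second is a stand-in for the smoothing step: it applies a moving majority vote over a 6-segment window rather than the moving median described above, because a median requires an ordering of the classes that is not specified in the text. Function names and label strings are hypothetical, and the confirmation of dive and take-off events is not covered.

```python
from collections import Counter

MERGE = {"taking-off": "flying", "entering water": "diving"}


def merge_transitions(labels):
    """Fold the two transition classes into their parent activity."""
    return [MERGE.get(label, label) for label in labels]


def smooth_majority(labels, window=6):
    """Replace each label by the most frequent label in a window around it."""
    half = window // 2
    smoothed = []
    for i in range(len(labels)):
        lo = max(0, i - half)
        hi = min(len(labels), i - half + window)
        counts = Counter(labels[lo:hi])
        best = max(counts.values())
        # Keep the current label when it ties for the highest count.
        if counts[labels[i]] == best:
            smoothed.append(labels[i])
        else:
            smoothed.append(counts.most_common(1)[0][0])
    return smoothed
```

With this rule, a single ‘floating’ segment inside a run of ‘flying’ segments is relabelled as ‘flying’, whereas a genuine change between two long runs is left untouched.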

All algorithms were implemented using Matlab R2019b and the Statistics and Machine Learning Toolbox. Four metrics were used to assess the accuracy of prediction: the global accuracy (total number of segments correctly classified divided by the total number of segments); the sensitivity (also called recall or true positive rate), which measures the proportion of actual positives that are correctly classified; the precision (also called positive predictive value), which measures the ratio of True Positives to all predicted positives; and the “Informedness” (the multi-class equivalent of Youden’s index), which is a summarised performance measure of sensitivity and precision indices for all classes.

Precision = TP/(TP + FP) and Sensitivity = TP/(TP + FN), where TP stands for True Positive, FP for False Positive and FN for False Negative.
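These definitions translate directly into code. The following Python sketch (hypothetical function name, made-up example labels) computes the global accuracy together with the per-class precision and sensitivity from paired true and predicted labels; Informedness is not included.

```python
def classification_metrics(true_labels, predicted_labels):
    """Return (global accuracy, {class: precision and sensitivity})."""
    pairs = list(zip(true_labels, predicted_labels))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    per_class = {}
    for c in sorted(set(true_labels) | set(predicted_labels)):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        per_class[c] = {
            # Undefined ratios (no positives) are reported as NaN.
            "precision": tp / (tp + fp) if tp + fp else float("nan"),
            "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        }
    return accuracy, per_class


truth = ["diving", "diving", "flying", "flying", "floating", "floating"]
guess = ["diving", "flying", "flying", "flying", "floating", "diving"]
accuracy, per_class = classification_metrics(truth, guess)
```

In this toy example, four of the six segments are correct, and the diving class has one true positive, one false positive and one false negative, giving a precision and a sensitivity of 0.5 each.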

Application: acoustic-based time-activity budget of Cape gannets

The classification algorithm was applied to unlabelled acoustic data to predict the activities of Cape gannets when foraging. Only the data with full foraging trips were kept at this stage. These included six new individuals, plus one individual for which part of the data was labelled and used in the trained model. The activity of birds was then predicted on a total of ~ 93 h of acoustic recordings. The time-activity budgets (based on the number and duration of events) of unlabelled trips were computed by grouping successive segments (1.4 s) of similar activity into ‘events’ (see, for example, Fig. 1D). For instance, a 7-s period of diving, corresponding to 5 continuous time segments labelled as diving, was considered as one diving ‘event’.
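The grouping of segments into events amounts to a run-length encoding of the predicted labels. A minimal Python sketch, assuming a nominal segment duration of 1.4 s and hypothetical function names, could look like this:

```python
from itertools import groupby

SEGMENT_S = 1.4  # nominal segment duration in seconds


def segments_to_events(labels, segment_s=SEGMENT_S):
    """Group successive identical labels into (activity, duration_s) events."""
    return [(activity, sum(1 for _ in run) * segment_s)
            for activity, run in groupby(labels)]


def time_activity_budget(events):
    """Count events and sum their durations for each activity."""
    budget = {}
    for activity, duration in events:
        entry = budget.setdefault(activity, {"n_events": 0, "total_s": 0.0})
        entry["n_events"] += 1
        entry["total_s"] += duration
    return budget


predicted = (["flying"] * 3 + ["diving"] * 5 +
             ["floating"] * 2 + ["flying"] * 4)
events = segments_to_events(predicted)
budget = time_activity_budget(events)
```

The five consecutive diving segments in this example form a single diving event of about 7 s, in line with the example given in the text, and the two separate flights are counted as two flying events.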

Systematic review

To place our study into perspective and discuss the use of acoustic recorders among the different devices available for remotely recording and inferring behaviour, we conducted a systematic review of articles that automatically classified activities from animal-borne devices. We searched for articles in a systematic, repeatable way, using the ISI Web of Science Core Collection database. Our search included articles in English from 2000 to 2021, and was based on the following keywords:

(((((((((TS = ((“time budget*” OR "time-budget*" OR “activity budget*” OR "activity-budget*" OR “time-activity budget*” OR “state budget*” OR “behavio*ral state*” OR “behavio*r-time budget*” OR “behavio*r* classif*” OR “behavio*r discrimination” OR "behavio*r* categor*" OR "scene-classif*") AND (recorder* OR device* OR tag* OR biologging OR bio-logging OR logger* OR datalogger* OR biologger* OR bio-logger* OR collar* OR sensor* OR "animal-borne" OR "animal borne") AND (behavio*r*) AND (classif* OR accuracy OR “machine-learning” OR “machine learning” OR “supervised learning” OR “feature learning” OR "infer* behavio*r*"))))))))))).

On the 5th of April 2021 this query resulted in a list of 202 articles. These articles were first checked for relevance to our scope: use of animal-borne devices on non-human animals to record and infer activity budgets, training of an automatic classification (supervised learning algorithm) on data with direct observation (visual or video recorded) and with a quantification of algorithm performance. This resulted in a final list of 54 articles from which information was extracted. If several classifications were performed in an article (data from different devices, or classification of different activities), one line of data was extracted for each classification. The information extracted included: the species studied, a categorisation of the species (flying, terrestrial, aquatic), the number of individuals equipped, the devices attached on animals (all devices, the ones used to infer activities, the ones used to train and validate classification), the weight of devices (as a mass and as a percentage of the animal’s body mass), the size of the data set (as a number of data points), the sampling frequency, the number of activities, the list of activities, the algorithms used, the global accuracy obtained, other performances (when provided), the percentage of data used for training, the use (or not) of a cross-validation procedure. The entire data table can be found in Additional file 6: Table S2, from which we extracted the information provided in the main text. We identified five categories of devices used: accelerometers alone, accelerometers combined with other devices, GPS devices, acoustic recorders, and pressure sensors. We then compared the global accuracy obtained by the different studies, as a function of the type of devices used to infer activities and the number of activities in the budget. We acknowledge that the measure of global accuracy is limited and does not fully describe the performance of a classification. In particular, this measure does not inform on the performance for the different behaviours and can hide a poor performance on rare behaviours (which are often of higher interest in biology and ecology). However, the measure of global accuracy is the most standard performance measure used, and was the only one that we could extract from (almost) all reviewed articles to allow comparison.

Availability of data and materials

The data sets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Tinbergen N. On aims and methods of ethology. Ethology. 1963. https://doi.org/10.1111/j.1439-0310.1963.tb01161.x.

Lehner PN. Handbook of ethological methods. Cambridge: Cambridge University Press; 1998.

Hanya G. Seasonal variations in the activity budget of Japanese macaques in the coniferous forest of Yakushima: effects of food and temperature. Am J Primatol. 2004;63:165–77.

Rieger I, Weihe WH. Weather dependent behaviour of captive striped hyaenas (Hyaena hyaena). Int J Biometeorol. 1975;19:122–6.

Collins WB, Urness PJ, Austin DD. Elk diets and activities on different lodgepole pine habitat segments. J Wildl Manag. 1978;42:799–810.

Wauters L, Swinnen C, Dhondt AA. Activity budget and foraging behaviour of red squirrels (Sciurus vulgaris) in coniferous and deciduous habitats. J Zool. 1992;227:71–86.

Yahner RH. The adaptive nature of the social system and behavior in the eastern chipmunk, Tamias striatus. Behav Ecol Sociobiol. 1978;3:397–427.

Boyd IL. Foraging and provisioning in Antarctic fur seals: interannual variability in time-energy budgets. Behav Ecol. 1999;10:198–208.

Davoren GK. Variability in foraging in response to changing prey distributions in rhinoceros auklets. Mar Ecol Prog Ser. 2000;198:283–91.

Altmann J. Observational study of behavior: sampling methods. Behaviour. 1974;49:227–66.

Altmann SA. Baboons, space, time, and energy. Integr Comp Biol. 1974;14:221–48.

Seyfarth RM, Cheney DL, Marler P. Vervet monkey alarm calls: semantic communication in a free-ranging primate. Anim Behav. 1980;28:1070–94.

Strayer FF, Strayer J. An ethological analysis of social agonism and dominance relations among preschool children. Child Dev. 1976;47:980–9.

Caraco T. Time budgeting and group size: a test of theory. Ecology. 1979;60:618–27.

Puttick GM. Foraging behaviour and activity budgets of Curlew Sandpipers. Ardea. 1979;67:1–122.

Turner DC. An analysis of time-budgeting by Roe Deer (Capreolus capreolus) in an agricultural area. Behaviour. 1979;71:246–89.

Baumgardner DJ, Ward SE, Dewsbury DA. Diurnal patterning of eight activities in 14 species of muroid rodents. Anim Learn Behav. 1980;8:322–30.

Nursall JR. The activity budget and use of territory by a tropical blenniid fish. Zool J Linn Soc. 1981;72:69–92.

Anthony ELP, Stack MH, Kunz TH. Night roosting and the nocturnal time budget of the little brown bat, Myotis lucifugus: effects of reproductive status, prey density, and environmental conditions. Oecologia. 1981;51:151–6.

Abrol DP. Time and energy budgets of alfalfa pollinating bees Megachile nana bingh and Megachile flavipes Spinola (Hymenoptera: Megachilidae). Proc Indian Acad Sci (Anim Sci). 1986;95:579–86.

Boness DJ. Activity budget of male gray seals, Halichoerus grypus. J Mammal. 1984;65:291–7.

Mann J. Behavioral sampling methods for cetaceans: a review and critique. Mar Mamm Sci. 1999;15:102–22.

Burghardt GM, Bartmess-LeVasseur JN, Browning SA, Morrison KE, Stec CL, Zachau CE, et al. Perspectives—minimizing observer bias in behavioral studies: a review and recommendations. Ethology. 2012;118:511–7.

Cooke SJ, Hinch SG, Wikelski M, Andrews RD, Kuchel LJ, Wolcott TG, et al. Biotelemetry: a mechanistic approach to ecology. Trends Ecol Evol (Amst). 2004;19:334–43.

Ropert-Coudert Y, Wilson RP. Trends and perspectives in animal-attached remote sensing. Front Ecol Environ. 2005;3:437–44.

Shamoun-Baranes J, Bom R, van Loon EE, Ens BJ, Oosterbeek K, Bouten W. From sensor data to animal behaviour: an oystercatcher example. PLoS ONE. 2012;7:e37997.

Wilmers CC, Nickel B, Bryce CM, Smith JA, Wheat RE, Yovovich V. The golden age of bio-logging: how animal-borne sensors are advancing the frontiers of ecology. Ecology. 2015;96:1741–53.

Mullers RHE, Navarro RA, Daan S, Tinbergen JM, Meijer HAJ. Energetic costs of foraging in breeding Cape gannets Morus capensis. Mar Ecol Prog Ser. 2009;393:161–71.

Palomares F, Delibes M. Determining activity types and budgets from movement speed of radio-marked mongooses. J Wildl Manag. 1993;57:164–7.

Benhamou S. How to reliably estimate the tortuosity of an animal’s path: straightness, sinuosity, or fractal dimension? J Theor Biol. 2004;229:209–20.

Bonadonna F, Bajzak C, Benhamou S, Igloi K, Jouventin P, Lipp HP, et al. Orientation in the wandering albatross: interfering with magnetic perception does not affect orientation performance. Proc R Soc B. 2005;272:489–95.

Thiebault A, Dubroca L, Mullers RHE, Tremblay Y, Pistorius PA. “m2b” package in r: deriving multiple variables from movement data to predict behavioural states with random forests. Methods Ecol Evol. 2018. https://doi.org/10.1111/2041-210X.12989/abstract.

Cairns DK, Bredin KA, Montevecchi WA. Activity budgets and foraging ranges of breeding common murres. Auk. 1987;104:218–24.

Gutowsky SE, Gutowsky LF, Jonsen ID, Leonard ML, Naughton MB, Romano MD, et al. Daily activity budgets reveal a quasi-flightless stage during non-breeding in Hawaiian albatrosses. Mov Ecol. 2014;2:23.

LeFebvre EA, Birkebak RC, Dorman FD. A flight-time integrator for birds. Auk. 1967;84:124–8.

Prince PA, Francis MD. Activity budgets of foraging gray-headed albatrosses. The Condor. 1984;86:297–300.

Garthe S, Grémillet D, Furness RW. At-sea-activity and foraging efficiency in chick-rearing northern gannets Sula bassana: a case study in Shetland. Mar Ecol Prog Ser. 1999;185:93–9.

Wilson RP, Weimerskirch H, Lys P. A device for measuring seabird activity at sea. J Avian Biol. 1995;26:172–5.

Madsen PT, Payne R, Kristiansen NU, Wahlberg M, Kerr I, Møhl B. Sperm whale sound production studied with ultrasound time/depth-recording tags. J Exp Biol. 2002;205:1899–906.

Naito Y, Asaga T, Ohyama Y. Diving behavior of Adélie penguins determined by time-depth recorder. The Condor. 1990;92:582–6.

Wilson RP, Bain CAR. An inexpensive depth gauge for penguins. J Wildl Manag. 1984;48:1077–84.

Tremblay Y, Cherel Y, Oremus M, Tveraa T, Chastel O. Unconventional ventral attachment of time–depth recorders as a new method for investigating time budget and diving behaviour of seabirds. J Exp Biol. 2003;206:1929–40.

Tinbergen N. “Derived” activities; their causation, biological significance, origin, and emancipation during evolution. Q Rev Biol. 1952;27:1–32.

Mohus I. A storing telemetry-transmitter for recording bird activity. Ornis Scandinavica (Scand J Ornithol). 1987;18:227–30.

Yoda K, Sato K, Niizuma Y, Kurita M, Bost C, Maho YL, et al. Precise monitoring of porpoising behaviour of Adelie penguins determined using acceleration data loggers. J Exp Biol. 1999;202:3121–6.

Yoda K, Naito Y, Sato K, Takahashi A, Nishikawa J, Ropert-Coudert Y, et al. A new technique for monitoring the behaviour of free-ranging Adelie penguins. J Exp Biol. 2001;204:685–90.

Chimienti M, Desforges J-P, Beumer LT, Nabe-Nielsen J, van Beest FM, Schmidt NM. Energetics as common currency for integrating high resolution activity patterns into dynamic energy budget-individual based models. Ecol Model. 2020;434:109250.

Shepard ELC, Wilson RP, Quintana F, Laich AG, Liebsch N, Albareda DA, et al. Identification of animal movement patterns using tri-axial accelerometry. Endanger Species Res. 2008;10:47–60.

Insley SJ, Robson BW, Yack T, Ream RR, Burgess WC. Acoustic determination of activity and flipper stroke rate in foraging northern fur seal females. Endanger Species Res. 2008;4:147–55.

Lynch E, Angeloni L, Fristrup K, Joyce D, Wittemyer G. The use of on-animal acoustical recording devices for studying animal behavior. Ecol Evol. 2013;3:2030–7.

Greif S, Yovel Y. Using on-board sound recordings to infer behaviour of free-moving wild animals. J Exp Biol. 2019;222:jeb184689.

Stowell D, Benetos E, Gill LF. On-bird sound recordings: automatic acoustic recognition of activities and contexts. IEEE/ACM Trans Audio Speech Lang Process. 2017;25:1193–206.

Studd EK, Boudreau MR, Majchrzak YN, Menzies AK, Peers MJL, Seguin JL, et al. Use of acceleration and acoustics to classify behavior, generate time budgets, and evaluate responses to moonlight in free-ranging snowshoe hares. Front Ecol Evol. 2019. https://doi.org/10.3389/fevo.2019.00154/full.

Wijers M, Trethowan P, Markham A, du Preez B, Chamaillé-Jammes S, Loveridge A, et al. Listening to lions: animal-borne acoustic sensors improve bio-logger calibration and behaviour classification performance. Front Ecol Evol. 2018. https://doi.org/10.3389/fevo.2018.00171/full.

Sherley RB, Crawford RJ, Dyer BM, Kemper J, Makhado AB, Masotla M, et al. The status and conservation of the Cape Gannet Morus capensis. Ostrich. 2019;90:335–46.

BirdLife International. Morus capensis. The IUCN Red List of Threatened Species 2018. 2018. https://doi.org/10.2305/IUCN.UK.2018-2.RLTS.T22696668A132587992.en. Accessed 26 Dec 2018.

Grémillet D, Péron C, Kato A, Amélineau F, Ropert-Coudert Y, Ryan PG, et al. Starving seabirds: unprofitable foraging and its fitness consequences in Cape gannets competing with fisheries in the Benguela upwelling ecosystem. Mar Biol. 2016;163:35.

Coetzee JC, van der Lingen CD, Hutchings L, Fairweather TP. Has the fishery contributed to a major shift in the distribution of South African sardine? ICES J Mar Sci. 2008;65:1676–88.

Crawford RJM, Dundee BL, Dyer BM, Klages NTW, Meÿer MA, Upfold L. Trends in numbers of Cape gannets (Morus capensis), 1956/1957–2005/2006, with a consideration of the influence of food and other factors. ICES J Mar Sci. 2007;64:169–77.

Green DB, Klages NTW, Crawford RJM, Coetzee JC, Dyer BM, Rishworth GM, et al. Dietary change in Cape gannets reflects distributional and demographic shifts in two South African commercial fish stocks. ICES J Mar Sci. 2014. http://icesjms.oxfordjournals.org/content/early/2014/11/23/icesjms.fsu203. Accessed 27 Nov 2014.

Cohen LA, Pichegru L, Grémillet D, Coetzee J, Upfold L, Ryan PG. Changes in prey availability impact the foraging behaviour and fitness of Cape gannets over a decade. Mar Ecol Prog Ser. 2014;505:281–93.

Green DB, Coetzee JC, Rishworth GM, Pistorius PA. Foraging distribution of Cape gannets in relation to oceanographic features, prey availability and marine protected areas. Mar Ecol Prog Ser. 2015;537:277–88.

Mullers RHE, Tinbergen JM. Parental provisioning behaviour predicts survival of cape gannet chicks under poor conditions. Ardea. 2009;97:89–98.

Pichegru L, Ryan PG, van der Lingen CD, Coetzee J, Ropert-Coudert Y, Grémillet D. Foraging behaviour and energetics of Cape gannets Morus capensis feeding on live prey and fishery discards in the Benguela upwelling system. Mar Ecol Prog Ser. 2007;350:127–36.

Mullers RHE, Navarro RA. Foraging behaviour of Cape gannets as an indicator of colony health status. Endanger Species Res. 2010;12:193–202.

Rishworth GM, Pistorius PA. Protection and provisioning: the role of parental behaviour in terms of chick growth and survival in a pelagic seabird. Mar Ecol Prog Ser. 2015;530:153–62.

Thiebault A, Mullers R, Pistorius P, Meza-Torres MA, Dubroca L, Green D, et al. From colony to first patch: processes of prey searching and social information in Cape Gannets. Auk. 2014;131:595–609.

Yang Y, Yeh H-G, Zhang W, Lee CJ, Meese EN, Lowe CG. Feature extraction, selection, and K-nearest neighbors algorithm for shark behavior classification based on imbalanced dataset. IEEE Sens J. 2021;21:6429–39.

Brennan J, Johnson P, Olson K. Classifying season long livestock grazing behavior with the use of a low-cost GPS and accelerometer. Comput Electron Agric. 2021;181:105957.

Sutton GJ, Bost CA, Kouzani AZ, Adams SD, Mitchell K, Arnould JPY. Fine-scale foraging effort and efficiency of Macaroni penguins is influenced by prey type, patch density and temporal dynamics. Mar Biol. 2021;168:3.

Brewster LR, Dale JJ, Guttridge TL, Gruber SH, Hansell AC, Elliott M, et al. Development and application of a machine learning algorithm for classification of elasmobranch behaviour from accelerometry data. Mar Biol. 2018;165:62.

Studd EK, Landry-Cuerrier M, Menzies AK, Boutin S, McAdam AG, Lane JE, et al. Behavioral classification of low-frequency acceleration and temperature data from a free-ranging small mammal. Ecol Evol. 2019;9:619–30.

Hammond TT, Springthorpe D, Walsh RE, Berg-Kirkpatrick T. Using accelerometers to remotely and automatically characterize behavior in small animals. J Exp Biol. 2016;219:1618–24.

Nuijten R, Prins EF, Lammers J, Mager C, Nolet BA. Calibrating tri-axial accelerometers for remote behavioural observations in Bewick’s swans. JZAR. 2020;8:231–8.

Jeantet L, Dell’Amico F, Forin-Wiart M-A, Coutant M, Bonola M, Etienne D, et al. Combined use of two supervised learning algorithms to model sea turtle behaviours from tri-axial acceleration data. J Exp Biol. 2018;221. https://jeb.biologists.org/content/221/10/jeb177378. Accessed 16 Feb 2021.

Ladds MA, Thompson AP, Slip DJ, Hocking DP, Harcourt RG. Seeing it all: evaluating supervised machine learning methods for the classification of diverse otariid behaviours. PLoS ONE. 2016;11:e0166898.

Homburger H, Schneider MK, Hilfiker S, Lüscher A. Inferring behavioral states of grazing livestock from high-frequency position data alone. PLoS ONE. 2014;9:e114522.

Jeantet L, Planas-Bielsa V, Benhamou S, Geiger S, Martin J, Siegwalt F, et al. Behavioural inference from signal processing using animal-borne multi-sensor loggers: a novel solution to extend the knowledge of sea turtle ecology. R Soc Open Sci. 2020;7:200139.

Martiskainen P, Järvinen M, Skön J-P, Tiirikainen J, Kolehmainen M, Mononen J. Cow behaviour pattern recognition using a three-dimensional accelerometer and support vector machines. Appl Anim Behav Sci. 2009;119:32–8.

Hu S, Ingham A, Schmoelzl S, McNally J, Little B, Smith D, et al. Inclusion of features derived from a mixture of time window sizes improved classification accuracy of machine learning algorithms for sheep grazing behaviours. Comput Electron Agric. 2020;179:105857.

Chakravarty P, Cozzi G, Dejnabadi H, Léziart P-A, Manser M, Ozgul A, et al. Seek and learn: automated identification of microevents in animal behaviour using envelopes of acceleration data and machine learning. Methods Ecol Evol. 2020;11:1639–51.

DeSantis DL, Mata-Silva V, Johnson JD, Wagler AE. Integrative framework for long-term activity monitoring of small and secretive animals: validation with a Cryptic Pitviper. Front Ecol Evol. 2020. https://doi.org/10.3389/fevo.2020.00169/full.

Barwick J, Lamb DW, Dobos R, Welch M, Schneider D, Trotter M. Identifying sheep activity from tri-axial acceleration signals using a moving window classification model. Remote Sens. 2020;12:646.

Fogarty ES, Swain DL, Cronin GM, Moraes LE, Trotter M. Behaviour classification of extensively grazed sheep using machine learning. Comput Electron Agric. 2020;169:105175.

Phi Khanh PC, Tran D-T, Duong VT, Thinh NH, Tran D-N. The new design of cows’ behavior classifier based on acceleration data and proposed feature set. Math Biosci Eng. 2020;17:2760–80.

Eerdekens A, Deruyck M, Fontaine J, Martens L, Poorter ED, Joseph W. Automatic equine activity detection by convolutional neural networks using accelerometer data. Comput Electron Agric. 2020;168:105139.

Flaspohler GE, Caruso F, Mooney TA, Katija K, Fontes J, Afonso P, et al. Quantifying the swimming gaits of veined squid (Loligo forbesii) using bio-logging tags. J Exp Biol. 2019. https://doi.org/10.1242/jeb.198226.

Achour B, Belkadi M, Aoudjit R, Laghrouche M. Unsupervised automated monitoring of dairy cows’ behavior based on Inertial Measurement Unit attached to their back. Comput Electron Agric. 2019;167:105068.

Sakai K, Oishi K, Miwa M, Kumagai H, Hirooka H. Behavior classification of goats using 9-axis multi sensors: the effect of imbalanced datasets on classification performance. Comput Electron Agric. 2019;166:105027.

Riaboff L, Aubin S, Bédère N, Couvreur S, Madouasse A, Goumand E, et al. Evaluation of pre-processing methods for the prediction of cattle behaviour from accelerometer data. Comput Electron Agric. 2019;165:104961.

Fannjiang C, Mooney TA, Cones S, Mann D, Shorter KA, Katija K. Augmenting biologging with supervised machine learning to study in situ behavior of the medusa Chrysaora fuscescens. J Exp Biol. 2019. https://doi.org/10.1242/jeb.207654.

Hurme E, Gurarie E, Greif S, Herrera MLG, Flores-Martínez JJ, Wilkinson GS, et al. Acoustic evaluation of behavioral states predicted from GPS tracking: a case study of a marine fishing bat. Mov Ecol. 2019;7:21.

Beltramino LE, Venerus LA, Trobbiani GA, Wilson RP, Ciancio JE. Activity budgets for the sedentary Argentine sea bass Acanthistius patachonicus inferred from accelerometer data loggers. Austral Ecol. 2019;44:397–408.

Tamura T, Okubo Y, Deguchi Y, Koshikawa S, Takahashi M, Chida Y, et al. Dairy cattle behavior classifications based on decision tree learning using 3-axis neck-mounted accelerometers. Anim Sci J. 2019;90:589–96.

le Roux SP, Wolhuter R, Niesler T. Energy-aware feature and model selection for onboard behavior classification in low-power animal borne sensor applications. IEEE Sens J. 2019;19:2722–34.

Hounslow JL, Brewster LR, Lear KO, Guttridge TL, Daly R, Whitney NM, et al. Assessing the effects of sampling frequency on behavioural classification of accelerometer data. J Exp Mar Biol Ecol. 2019;512:22–30.

Benaissa S, Tuyttens FAM, Plets D, Cattrysse H, Martens L, Vandaele L, et al. Classification of ingestive-related cow behaviours using RumiWatch halter and neck-mounted accelerometers. Appl Anim Behav Sci. 2019;211:9–16.

Peng Y, Kondo N, Fujiura T, Suzuki T, Wulandari, Yoshioka H, et al. Classification of multiple cattle behavior patterns using a recurrent neural network with long short-term memory and inertial measurement units. Comput Electron Agric. 2019;157:247–53.

Mansbridge N, Mitsch J, Bollard N, Ellis K, Miguel-Pacheco GG, Dottorini T, et al. Feature selection and comparison of machine learning algorithms in classification of grazing and rumination behaviour in sheep. Sensors. 2018;18:3532.

le Roux SP, Wolhuter R, Stevens N, Niesler T. Reduced energy and memory requirements by on-board behavior classification for animal-borne sensor applications. IEEE Sens J. 2018;18:4261–8.

Walton E, Casey C, Mitsch J, Vázquez-Diosdado JA, Yan J, Dottorini T, et al. Evaluation of sampling frequency, window size and sensor position for classification of sheep behaviour. R Soc Open Sci. 2018;5:171442.

Tofastrud M, Hegnes H, Devineau O, Zimmermann B. Activity patterns of free-ranging beef cattle in Norway. Acta Agric Scand Sect A Anim Sci. 2018;68:39–47.

Uijl ID, Álvarez CBG, Bartram D, Dror Y, Holland R, Cook A. External validation of a collar-mounted triaxial accelerometer for second-by-second monitoring of eight behavioural states in dogs. PLoS ONE. 2017;12:e0188481.

Heerah K, Woillez M, Fablet R, Garren F, Martin S, De Pontual H. Coupling spectral analysis and hidden Markov models for the segmentation of behavioural patterns. Mov Ecol. 2017;5:20.

Clark PE, Johnson DE, Ganskopp DC, Varva M, Cook JG, Cook RC, et al. Contrasting daily and seasonal activity and movement of sympatric elk and cattle. Rangel Ecol Manage. 2017;70:183–91.

Smith D, Rahman A, Bishop-Hurley GJ, Hills J, Shahriar S, Henry D, et al. Behavior classification of cows fitted with motion collars: decomposing multi-class classification into a set of binary problems. Comput Electron Agric. 2016;131:40–50.

Gaylord AJ, Sanchez DM, Van Sickle J. Choosing sampling interval durations for remotely classifying rocky mountain elk behavior. J Fish Wildl Manag. 2016;7:213–21.

Lush L, Ellwood S, Markham A, Ward AI, Wheeler P. Use of tri-axial accelerometers to assess terrestrial mammal behaviour in the wild. J Zool. 2016;298:257–65.

Dutta R, Smith D, Rawnsley R, Bishop-Hurley G, Hills J, Timms G, et al. Dynamic cattle behavioural classification using supervised ensemble classifiers. Comput Electron Agric. 2015;111:18–28.

González LA, Bishop-Hurley GJ, Handcock RN, Crossman C. Behavioral classification of data from collars containing motion sensors in grazing cattle. Comput Electron Agric. 2015;110:91–102.

Broekhuis F, Grünewälder S, McNutt JW, Macdonald DW. Optimal hunting conditions drive circalunar behavior of a diurnal carnivore. Behav Ecol. 2014;25:1268–75.

Gerencsér L, Vásárhelyi G, Nagy M, Vicsek T, Miklósi A. Identification of behaviour in freely moving dogs (Canis familiaris) using inertial sensors. PLoS ONE. 2013;8:e77814.

Nadimi ES, Jørgensen RN, Blanes-Vidal V, Christensen S. Monitoring and classifying animal behavior using ZigBee-based mobile ad hoc wireless sensor networks and artificial neural networks. Comput Electron Agric. 2012;82:44–54.

Umstätter C, Waterhouse A, Holland JP. An automated sensor-based method of simple behavioural classification of sheep in extensive systems. Comput Electron Agric. 2008;64:19–26.

Schlecht E, Hülsebusch C, Mahler F, Becker K. The use of differentially corrected global positioning system to monitor activities of cattle at pasture. Appl Anim Behav Sci. 2004;85:185–202.

Ropert-Coudert Y, Grémillet D, Kato A, Ryan PG, Naito Y, Le Maho Y. A fine-scale time budget of Cape gannets provides insights into the foraging strategies of coastal seabirds. Anim Behav. 2004;67:985–92.

Botha JA, Rishworth GM, Thiebault A, Green DB, Pistorius PA. Sex-specific foraging over space and time in Cape gannets during chick rearing. Mar Ecol Prog Ser. 2017;579:157–67.

Rishworth GM, Tremblay Y, Green DB, Pistorius PA. An automated approach towards measuring time-activity budgets in colonial seabirds. Methods Ecol Evol. 2014;5:854–63.

Kawabe R, Kawano T, Nakano N, Yamashita N, Hiraishi T, Naito Y. Simultaneous measurement of swimming speed and tail beat activity of free-swimming rainbow trout Oncorhynchus mykiss using an acceleration data-logger. Fish Sci. 2003;69:959–65.

Bost CA, Handrich Y, Butler PJ, Fahlman A, Halsey LG, Woakes AJ, et al. Changes in dive profiles as an indicator of feeding success in king and Adélie penguins. Deep Sea Res Part II. 2007;54:248–55.

Tremblay Y, Cherel Y. Synchronous underwater foraging behavior in penguins. The Condor. 1999;101:179–85.

Couchoux C, Aubert M, Garant D, Réale D. Spying on small wildlife sounds using affordable collar-mounted miniature microphones: an innovative method to record individual daylong vocalisations in chipmunks. Sci Rep. 2015;5:10118.

McInnes AM, Thiebault A, Cloete T, Pichegru L, Aubin T, McGeorge C, et al. Social context and prey composition are associated with calling behaviour in a diving seabird. Ibis. 2019;162:1047–59.

Thiebault A, Charrier I, Pistorius P, Aubin T. At sea vocal repertoire of a foraging seabird. J Avian Biol. 2019. https://doi.org/10.1111/jav.02032.

Mattern T, McPherson MD, Ellenberg U, van Heezik Y, Seddon PJ. High definition video loggers provide new insights into behaviour, physiology, and the oceanic habitat of marine top predators. PeerJ Inc.; 2017 Mar. Report No.: e2765v2. https://peerj.com/preprints/2765.

Tremblay Y, Thiebault A, Mullers R, Pistorius P. Bird-borne video-cameras show that seabird movement patterns relate to previously unrevealed proximate environment, not prey. PLoS ONE. 2014;9:e88424.

Votier SC, Bicknell A, Cox SL, Scales KL, Patrick SC. A bird’s eye view of discard reforms: bird-borne cameras reveal seabird/fishery interactions. PLoS ONE. 2013;8:e57376.

Vandenabeele SP, Shepard EL, Grogan A, Wilson RP. When three per cent may not be three per cent; device-equipped seabirds experience variable flight constraints. Mar Biol. 2012;159:1–14.

Lee DN, Reddish PE. Plummeting gannets: a paradigm of ecological optics. Nature. 1981;293:293–4.

Ramalingam A, Krishnan S. Gaussian mixture modeling of short-time Fourier transform features for audio fingerprinting. IEEE Trans Inf Forensics Secur. 2006;1:457–63.

Acknowledgements

We thank South African National Parks for logistical support during fieldwork. We thank Rabi’a Ryklief, Jonathan Botha and Melanie Wells for their help in the field.

Funding

AT was funded by Nelson Mandela University. Acoustic devices were funded by the CNRS. The rest of the material and all fieldwork expenses were funded by South Africa’s National Research Foundation.

Author information

Contributions

Idea conception: TA, IC. Data collection: AT, PP, TA, IC. Data analyses: AT, CH, TA, IC. Writing: AT, CH. All authors substantively revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Fieldwork took place under a permit from SANParks: PISTP1238. All experimental protocols were approved by both SANParks and the Nelson Mandela University Ethics Committee (Reference: A10-SCI-ZOO-008), and were carried out in accordance with the approved guidelines.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Figure S1.

Classification performance of the algorithm used in the study, showing (A) the global confusion matrix (on labelled data) and (B) the receiver operating characteristic curves for the diving, floating on water and flying classes, respectively. dv = diving, ent_wat = entering water, wat = floating on water, takeoff = taking off, fly = flying.

Additional file 2: Figure S2.

Performance of the algorithm (after classification and revision) on the training data set, for each individual bird file (17M, 19M, 34M, 41M). The confusion matrices (square 3 × 3 matrices) are shown together with the precision (bottom row) and the sensitivity (right column).

Additional file 3: Figure S3.

(A) Performance of automatic classifications as measured by the global accuracy and (B) number of activities in the budget, as a function of the sampling frequency at which data were recorded, in the 61 reviewed classifications (from 54 articles, including our study) [26, 32, 52–54, 68–115].

Additional file 4: Figure S4.

Performance of automatic classifications as measured by the global accuracy, as a function of the size of data sets in the 61 reviewed classifications (from 54 articles, including our study). References: [26, 32, 52–54, 68–115].

Additional file 5: Table S1.

Number, duration and proportion of time spent in each activity (diving, floating on water and flying), for each of the 9 Cape gannets with full trips recorded. Individual 41M (used in model training) is not included here as its trip was only partially recorded. Individual 17M was manually labelled for only part of its trip.

Additional file 6: Table S2.

Systematic review of published articles that automatically classified activities based on data from animal-borne devices.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Thiebault, A., Huetz, C., Pistorius, P. et al. Animal-borne acoustic data alone can provide high accuracy classification of activity budgets. Anim Biotelemetry 9, 28 (2021). https://doi.org/10.1186/s40317-021-00251-1

DOI: https://doi.org/10.1186/s40317-021-00251-1