Abstract

Materials science research has witnessed an increasing use of data mining techniques in establishing process‐structure‐property relationships. Significant advances in high‐throughput experiments and computational capability have resulted in the generation of huge amounts of data. Various statistical methods are currently employed to reduce the noise, redundancy, and dimensionality of the data to make analysis more tractable. Popular reduction methods (such as principal component analysis) assume a linear relationship between the input and output variables. Recent developments in non‐linear reduction (neural networks, self‐organizing maps), though successful, have computational issues associated with convergence and scalability. Another significant barrier to using dimensionality reduction techniques in materials science is their lack of ease of use, owing to their complex mathematical formulations. This paper reviews various spectral‐based techniques that efficiently unravel linear and non‐linear structures in the data, which can subsequently be used to tractably investigate process‐structure‐property relationships. In addition, we describe techniques (based on graph‐theoretic analysis) to estimate the optimal dimensionality of the low‐dimensional parametric representation. We show how these techniques can be packaged into a modular, computationally scalable software framework with a graphical user interface, the Scalable Extensible Toolkit for Dimensionality Reduction (SETDiR). This interface separates the mathematical and computational aspects from the materials science applications, thus significantly enhancing its utility to the materials science community. The applicability of this framework in constructing reduced order models of complex materials datasets is illustrated with an example dataset of apatites described in structural descriptor space.
Cluster analysis of the low‐dimensional plots yielded interesting insights into the correlation between structural descriptors, such as ionic radius and covalence, and characteristic properties, such as apatite stability. This information is crucial as it can promote the use of apatite materials as a potential host system for immobilizing toxic elements.


1 Background

Using data mining techniques to probe and establish process‐structure‐property relationships has attracted growing interest owing to their ability to accelerate the tailoring of materials by design. Before the advent of data mining techniques, scientists used a variety of empirical and diagrammatic techniques [1], such as Pettifor maps [2], to establish relationships between structure and mechanical properties. Pettifor maps, one of the earliest graphical representation techniques, are highly effective but require a thorough understanding of, and intuition about, the materials. Recent progress in computational capabilities has led to more complex paradigms, the so‐called virtual interrogation techniques, which span from first‐principles calculations to multi‐scale models [3]‐[7]. These multi‐physics and/or statistical techniques and simulations [8],[9] form an integrated set of tools that can predict the relationships between chemical, microstructural, and mechanical properties, producing very large collections of data. Simultaneously, experimental methods (combinatorial materials synthesis [10],[11], high‐throughput experimentation, atom probe tomography) allow the synthesis and screening of a large number of materials while generating huge amounts of multivariate data.

A key challenge is then to efficiently probe these large datasets to extract correlations between structure and property. This data explosion has motivated the use of data mining techniques in materials science to explore, design, and tailor materials and structures. A key stage in this process is to reduce the size of the data while minimizing the loss of information. This process is called data dimensionality reduction. By definition, dimensionality reduction (DR) is the process of reducing the dimensionality of a given set of (usually unordered) data points and extracting a low‐dimensional (or parameter space) embedding in which a desired property (for example, distance or topology) is preserved. Examples of DR methods are principal component analysis (PCA) [12], Isomap [13], and Hessian locally linear embedding (hLLE) [14]. Applying DR methods enables visualization of high‐dimensional data and also estimates the optimal number of dimensions required to represent the data without considerable loss of information. Additionally, burgeoning cyberinfrastructure‐based tools and collaborations sustained by the government’s recent Materials Genome Initiative (MGI) provide a strong platform for leveraging data dimensionality reduction tools. This will enable integration of the information obtained from individual high‐throughput simulation and experimentation efforts in various domains (e.g., mechanical, electrical, electro‐magnetic) and at multiple length scales (macro‐meso‐micro‐nano) on an unprecedented scale [15].

Data dimensionality reduction is not a novel concept. Page [16] describes different techniques of data reduction and their applicability for establishing process‐structure‐property relationships. Statistical methods such as PCA [17] and factor analysis (FA) [18] have been used on materials data generated by first‐principles calculations or by experimental methods. However, when used to establish process‐structure‐property relationships, techniques such as PCA and factor analysis traditionally assume a linear relationship among the variables. This assumption is often not strictly valid; the data usually lie on a non‐linear manifold (or surface) [13],[19]. Non‐linear dimensionality reduction (NLDR) techniques can be applied to unravel the non‐linear structure from unordered data. For example, [19] uses NLDR to construct a low‐dimensional stochastic representation of property variations in random heterogeneous media. Another exciting application of data dimensionality reduction is its combination with quantum mechanics‐based calculations to predict structure [20]‐[22]. For a more mathematical survey of linear and non‐linear DR techniques, the interested reader can consult [23],[24].

In this paper, the theory and mathematics behind various linear and non‐linear dimensionality reduction methods are explained. The mathematical aspects of dimensionality reduction are packaged into an easy‐to‐use software framework called Scalable Extensible Toolkit for Dimensionality Reduction (SETDiR), which (a) provides a user‐friendly interface that abstracts the user from the mathematical intricacies, (b) allows for easy post‐processing of the data, and (c) represents the data visually and allows the user to store the output in a standard digital image format (e.g., JPEG), thus making the data more tractable and providing an intuitive understanding of it. We conclude by applying the techniques discussed to a dataset of apatites [25]‐[29] described using several structural descriptors. This paper extends our recent work [30]. Apatites ($A^{I}_{4}A^{II}_{6}(BO_4)_6X_2$) can accommodate numerous chemical substitutions and hence represent a unique family of crystal chemistries with properties catering to many technological applications, such as toxic element immobilization, luminescence, and electrolytes for intermediate temperature solid oxide fuel cells [25]‐[29].

The outline of the paper is as follows. The section ‘Methods: dimensionality reduction’ briefly describes the concepts of DR, the algorithms, and the estimators used to determine the dimensionality. The software framework SETDiR, developed to apply DR techniques, is described in the section ‘Software: SETDiR’. The section ‘Results and discussion’ interprets the low‐dimensional results obtained by applying SETDiR to the apatite dataset.

2 Methods: dimensionality reduction

The problem of dimensionality reduction can be formulated as follows. Consider a set of data, X, consisting of n data points $x_i$. Each data point $x_i$ is vectorized to form a ‘column’ vector of size D, where D is usually large. Thus, $X=\{x_0,x_1,\dots,x_{n-1}\}$, where $x_i \in \mathbb{R}^D$ and $D \gg 1$. Visualizing and analyzing correlations, patterns, and connections within a high‐dimensional dataset is difficult. Hence, we are interested in finding a set of equivalent low‐dimensional points, $Y=\{y_0,y_1,\dots,y_{n-1}\}$, that exhibit the same correlations, patterns, and connections as the high‐dimensional data. This is mathematically posed as: find $Y=\{y_0,y_1,\dots,y_{n-1}\}$ such that $y_i \in \mathbb{R}^d$, $d \ll D$, and $\forall i,j:\ |x_i - x_j|_h = |y_i - y_j|_h$. Here, $|a-b|_h$ denotes a specific norm that captures the properties, connections, or correlations we want to preserve during dimensionality reduction [23].

For instance, defining h as the Euclidean norm preserves Euclidean distances and yields a reduction equivalent to the standard technique of PCA [12]. Similarly, defining h as the angular distance (or conformal distance [31]) results in locally linear embedding (LLE) [32], which preserves local angles between points. In a typical application [33],[34], $x_i$ represents a state of the analyzed system, e.g., a temperature field, a concentration distribution, or the characteristic properties of a system. Such a state description can be derived from experimental sensor data or can be the result of a numerical simulation. Irrespective of the source, however, it is characterized by high dimensionality: D is typically of the order of $10^2$ to $10^6$ [35],[36]. While $x_i$ represents just a single state of the system, contemporary data acquisition setups deliver large collections of such observations, which correspond to the temporal or parametric evolution of the system [33]. Thus, the cardinality n of the resulting set X is usually large ($n \sim 10^2$ to $10^5$). Intuitively, information obfuscation increases with data dimensionality. Therefore, in the process of DR, we seek as small a dimension d as possible, given the constraints induced by the norm $|a-b|_h$ [23]. Routinely, $d < 4$, as this permits, for instance, visualization of the set Y.

The key mathematical idea underpinning DR can be explained as follows. We encode the desired information about X, i.e., topology or distance, in its entirety by considering all pairs of points in X. This encoding is represented as a matrix $A_{n\times n}$. Next, we subject A to a unitary transformation V, i.e., a transformation that preserves the norm of A (thus preserving connectivities and correlations in the data), to obtain its sparsest form Λ, where $A = V\Lambda V^{T}$. Here, $\Lambda_{n\times n}$ is a diagonal matrix with rapidly diminishing entries. As a result, it is sufficient to consider only a small number d of entries of Λ, together with the corresponding columns of V, to capture essentially all the information encoded in A. These d eigenpairs define the set Y. The procedure hinges on the fact that unitary transformations preserve the original properties of A [37]. Note also that it requires a method to construct the matrix A in the first place. Indeed, what differentiates the various spectral dimensionality reduction methods is the way information is encoded in A.
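To make this recipe concrete, here is a minimal NumPy sketch, assuming A has already been built and is symmetric positive semi‐definite (as for PCA and Isomap, where the d largest eigenpairs are kept; LLE and hLLE instead keep the smallest). The helper name `spectral_coordinates` is hypothetical and not part of SETDiR. It diagonalizes $A = V\Lambda V^T$ with `numpy.linalg.eigh` and scales the d leading eigenvectors by the square roots of their eigenvalues.

```python
import numpy as np

def spectral_coordinates(A, d):
    """Return n x d coordinates from the d largest eigenpairs of a symmetric PSD matrix A."""
    A = np.asarray(A, dtype=float)
    # eigh is meant for symmetric matrices; it returns eigenvalues in ascending order
    vals, vecs = np.linalg.eigh(A)
    order = np.argsort(vals)[::-1][:d]        # indices of the d largest eigenvalues
    lam = np.clip(vals[order], 0.0, None)     # guard against tiny negative round-off
    return vecs[:, order] * np.sqrt(lam)      # column j scaled by sqrt(lambda_j)

# A rank-2 Gram matrix: all of its information sits in two eigenpairs.
rng = np.random.default_rng(0)
B = rng.normal(size=(50, 2))
A = B @ B.T
Y = spectral_coordinates(A, 2)
print(np.allclose(Y @ Y.T, A))                                            # True
# The orthogonal (unitary) change of basis preserves the Frobenius norm: ||A|| = ||Lambda||.
print(np.isclose(np.linalg.norm(A), np.linalg.norm(np.linalg.eigvalsh(A))))  # True
```

Here the two retained eigenpairs reconstruct A exactly because A has rank 2; for real data, the truncation is only approximate and its quality is what the scree plot discussed below quantifies.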

We focus on four different DR methods: (a) PCA, a linear DR method; (b) Isomap, a non‐linear isometry‐preserving DR method; (c) LLE, a non‐linear conformal‐preserving DR method; and (d) Hessian LLE, a topology‐preserving DR method.

2.1 Principal component analysis

PCA is a powerful and popular DR strategy owing to its simplicity and ease of implementation. It is based on the premise that the high‐dimensional data are a linear combination of a set of hidden low‐dimensional axes. PCA extracts these latent parameters, or low‐dimensional axes, by reorienting the axes of the high‐dimensional space such that the variance of the data along the new axes is maximized [23].

PCA algorithm

1. Compute the pair‐wise Euclidean distance for all points in the input data X. Store it as a matrix [E].

2. Construct a matrix [W∗] whose elements are −0.5 times the squares of the elements of the Euclidean distance matrix [E].

3. Find the matrix [A] by double centering [W∗]:

$$[A] = [H]\,[W^{*}]\,[H] \qquad (1)$$

$$[H] = [I] - \frac{1}{n}\,\mathbf{1}\mathbf{1}^{T} \qquad (2)$$

where $\mathbf{1}$ is the $n\times 1$ vector of ones.

4. Solve for the largest d eigenpairs of [A]:

$$[A][V] = [V][\Lambda] \qquad (3)$$

5. Construct the low‐dimensional representation in $\mathbb{R}^d$ from the eigenpairs:

$$[Y] = [I]_{d\times n}\,[\Lambda]^{1/2}\,[V]^{T} \qquad (4)$$

The rectangular identity matrix $[I]_{d\times n}$ selects the d most important dimensions, i.e., those associated with the d largest eigenvalues of [A].
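The five PCA steps translate almost line by line into NumPy. This is a minimal sketch, assuming the full $n\times n$ distance matrix fits in memory; `pca_classical` is a hypothetical name, and the function returns Y as an $n\times d$ array (one row per point), i.e., the transpose of the $d\times n$ layout of Eq. (4).

```python
import numpy as np

def pca_classical(X, d):
    """PCA via steps 1-5: distances -> -0.5*E^2 -> double centering -> top-d eigenpairs."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Step 1: pairwise Euclidean distance matrix [E]
    E = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    # Step 2: [W*] holds -0.5 times the squared distances
    W = -0.5 * E**2
    # Step 3: double centering, [A] = [H][W*][H] with [H] = [I] - (1/n) 1 1^T
    H = np.eye(n) - np.ones((n, n)) / n
    A = H @ W @ H
    # Step 4: eigenpairs of the symmetric matrix [A], sorted by decreasing eigenvalue
    vals, vecs = np.linalg.eigh(A)
    order = np.argsort(vals)[::-1]
    vals, vecs = vals[order], vecs[:, order]
    # Step 5: keep the d leading eigenpairs; row i of the result is y_i
    Y = vecs[:, :d] * np.sqrt(np.clip(vals[:d], 0.0, None))
    return Y, vals

rng = np.random.default_rng(1)
P = rng.normal(size=(40, 2))
X = P @ np.array([[1.0, 2.0, 0.5], [0.0, 1.0, -1.0]])   # 2-D data embedded in 3-D
Y, eigvals = pca_classical(X, 2)
print(eigvals[:4])   # two dominant eigenvalues, the rest numerically zero
```

Because this X lies exactly on a plane, the two‐dimensional Y reproduces every pair‐wise distance of X; the returned eigenvalues are also what a scree plot would display.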

The limitation of PCA is that it assumes the data lie in a linear space; hence, it performs poorly on inherently non‐linear data. In such cases, PCA also tends to over‐estimate the dimensionality of the data.

2.2 Isomap

Isomap relaxes PCA's assumption that the data lie in a linear space. A classic example of a non‐linear manifold is the Swiss roll. Figure 1 shows how PCA tries to fit the best linear plane while Isomap unravels the low‐dimensional surface. Isomap essentially flattens the non‐linear manifold into a corresponding linear space and subsequently applies PCA. This flattening can intuitively be understood in the context of a spiral whose ends are pulled apart to straighten it into a line. Isomap accomplishes this mathematically by ensuring that the geodesic distances between data points are preserved under the transformation. The geodesic distance is the distance measured along the curved surface on which the points rest [23]. Since it preserves (geodesic) distances, Isomap is an isometric (distance‐preserving) transformation. The underlying mathematics of Isomap assumes that the data lie on a manifold that is convex (but not necessarily linear). Note that both PCA and Isomap are isometric mappings; PCA preserves pair‐wise Euclidean distances between points, while Isomap preserves geodesic distances.

Isomap algorithm

1. Compute the pair‐wise Euclidean distance matrix [E] from the input data X.

2. Compute the k‐nearest neighbors of each point from the distance matrix [E].

3. Compute the pair‐wise geodesic distance matrix [G] from [E] as shortest‐path lengths in the k‐nearest‐neighbor graph. This is done using Floyd’s algorithm [38].

4. Construct a matrix [W∗] whose elements are −0.5 times the squares of the elements of the geodesic distance matrix [G].

5. Find the matrix [A] by double centering [W∗]:

$$[A] = [H]\,[W^{*}]\,[H] \qquad (5)$$

$$[H] = [I] - \frac{1}{n}\,\mathbf{1}\mathbf{1}^{T} \qquad (6)$$

6. Solve for the largest d eigenpairs of [A]:

$$[A][V] = [V][\Lambda] \qquad (7)$$

7. Construct the low‐dimensional representation in $\mathbb{R}^d$ from the eigenpairs:

$$[Y] = [I]_{d\times n}\,[\Lambda]^{1/2}\,[V]^{T} \qquad (8)$$

The non‐linearity in the data is accounted for by using the geodesic distance metric. The graph distance is used to approximate the geodesic distance [39]. The graph distance between a pair of points in a graph (V, E) is the length of the shortest path connecting them. The graph distances are calculated using the well‐known Floyd’s algorithm [38].
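Isomap differs from the PCA steps only in how the distance matrix is built. The sketch below assumes a symmetrized k‐nearest‐neighbor graph with Euclidean edge weights and a vectorized Floyd‐Warshall pass (Floyd's algorithm) for the geodesic matrix [G]; `isomap` is a hypothetical function name, and the $O(n^3)$ shortest‐path step limits it to small datasets.

```python
import numpy as np

def isomap(X, k, d):
    """Isomap sketch: k-NN graph -> Floyd-Warshall geodesics -> classical scaling."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    E = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))   # step 1
    # Step 2: keep edges to the k nearest neighbours (symmetrized); other pairs start at infinity
    G = np.full((n, n), np.inf)
    nbrs = np.argsort(E, axis=1)[:, 1:k + 1]    # column 0 is the point itself
    for i in range(n):
        G[i, nbrs[i]] = E[i, nbrs[i]]
        G[nbrs[i], i] = E[i, nbrs[i]]
    np.fill_diagonal(G, 0.0)
    # Step 3: Floyd-Warshall, relaxing every (i, j) pair through intermediate vertex m
    for m in range(n):
        G = np.minimum(G, G[:, m:m + 1] + G[m:m + 1, :])
    if np.isinf(G).any():
        raise ValueError("k-NN graph is disconnected; increase k")
    # Steps 4-7: identical to PCA, but starting from the geodesic distances [G]
    W = -0.5 * G**2
    H = np.eye(n) - np.ones((n, n)) / n
    A = H @ W @ H
    vals, vecs = np.linalg.eigh(A)
    idx = np.argsort(vals)[::-1][:d]
    return vecs[:, idx] * np.sqrt(np.clip(vals[idx], 0.0, None))

t = np.linspace(0.0, np.pi, 40)
arc = np.column_stack([np.cos(t), np.sin(t)])   # points along a half circle (1-D manifold)
Y = isomap(arc, k=2, d=1)
```

On this half circle, the one‐dimensional Isomap coordinate orders the points monotonically by arc length (up to an arbitrary sign), which is the "unrolling" behavior described above.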

2.3 Locally linear embedding

In contrast to PCA and Isomap, which preserve distances, LLE preserves the local topology (i.e., the local orientation, or angles between data points). LLE uses the notion that a non‐linear manifold (or curve) is locally well approximated by a linear one. In other words, the manifold is locally linear and hence can be represented as a patchwork of linear patches. The algorithm first divides the manifold into patches and reconstructs each point in a patch from the information (or weights) obtained from its neighbors, i.e., it infers how a specific point is located with respect to its neighbors. This process extracts the local topology of the data. Finally, the algorithm reconstructs the global structure by combining the individual patches and finding an optimized, low‐dimensional representation. Numerically, the local topology information is constructed by finding the k‐nearest neighbors of each data point and expressing each point as a weighted combination of its neighbors. The global reconstruction from the local patches is accomplished by assembling the individual weight vectors into a global weight matrix [W] and computing the eigenvectors associated with the smallest eigenvalues of the matrix [A] built from [W].

LLE algorithm

1. For each of the n input vectors in $X=\{x_0,x_1,\dots,x_{n-1}\}$:

   (a) Find the k‐nearest neighbors of the data point $x_i$.

   (b) Construct the local covariance (Gram) matrix $G_i$:

   $$G_i(r,s) = (x_i - x_r)^{T}(x_i - x_s) \qquad (9)$$

   where $x_r$ and $x_s$ are neighbors of $x_i$.

   (c) Compute the weight vector $w_i$ by solving the linear system

   $$G_i\,w_i = \mathbf{1} \qquad (10)$$

   where $\mathbf{1}$ is a $k\times 1$ vector of ones, and rescale $w_i$ so that its entries sum to one.

2. Using the vectors $w_i$, build the sparse matrix W. The (i,j) entry of W is zero if $x_i$ and $x_j$ are not neighbors. If they are neighbors, W(i,j) takes the corresponding entry of the weight vector, $w_i(j)$.

3. From W, build A:

$$A = (I - W)^{T}(I - W) \qquad (11)$$

4. Compute the eigenpairs corresponding to the smallest eigenvalues of A:

$$A\,V = V\,\Lambda \qquad (12)$$

5. Compute the low‐dimensional points in $\mathbb{R}^d$ from the smallest eigenpairs, discarding the bottom eigenvector (the constant vector, with eigenvalue zero) and keeping the next d.
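The LLE steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: neighbors are found by brute force, and each local Gram matrix receives a small Tikhonov term (the hypothetical `reg` parameter, a common practical addition that is not part of the steps above) so that $G_i$ stays invertible when k exceeds the input dimension D. The function name `lle` is also hypothetical.

```python
import numpy as np

def lle(X, k, d, reg=1e-3):
    """LLE sketch following steps 1-5; `reg` is an added regularization (an assumption)."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    dist2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    nbrs = np.argsort(dist2, axis=1)[:, 1:k + 1]          # step 1(a), skipping the point itself
    W = np.zeros((n, n))
    for i in range(n):
        Z = X[i] - X[nbrs[i]]                              # rows: x_i - x_r
        G = Z @ Z.T                                        # step 1(b): local Gram matrix G_i
        G += reg * np.trace(G) / k * np.eye(k)             # keeps G_i invertible when k > D
        w = np.linalg.solve(G, np.ones(k))                 # step 1(c): G_i w_i = 1
        W[i, nbrs[i]] = w / w.sum()                        # step 2: each row sums to one
    I = np.eye(n)
    A = (I - W).T @ (I - W)                                # step 3
    _, vecs = np.linalg.eigh(A)                            # step 4: ascending eigenvalues
    # Step 5: drop the bottom (constant) eigenvector and keep the next d
    return vecs[:, 1:d + 1]

t = np.linspace(0.0, 1.0, 30)
segment = np.outer(t, [1.0, 2.0, 2.0]) / 3.0   # evenly spaced points on a line in 3-D
Y = lle(segment, k=4, d=1)
```

Since each row of W sums to one, $(I-W)\mathbf{1} = 0$, so the constant vector always has eigenvalue zero; this is why step 5 skips it.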

2.4 Hessian LLE

Hessian LLE [14] (hLLE) is a modification of LLE and Laplacian Eigenmaps [40]. Mathematically, hLLE replaces the Laplacian‐based quadratic form over the graph, which penalizes first derivatives (gradients), with a Hessian‐based quadratic form, which penalizes second derivatives. hLLE constructs patches, performs a local PCA on each patch, constructs a global Hessian from the eigenvectors thus obtained, and finally finds the low‐dimensional representation from the eigenpairs of the Hessian. hLLE is a topology‐preserving method and assumes that the manifold is locally linear.

hLLE algorithm

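1. At each point $x_i$, construct a $k\times D$ neighborhood matrix $M_i$ such that each row j represents a neighboring point $x_{i_j}$, centered on the neighborhood mean:

$$M_i(j,:) = \left(x_{i_j} - \bar{x}_i\right)^{T} \qquad (13)$$

where $\bar{x}_i$ is the mean of the k neighboring points.

2. Perform the singular value decomposition (SVD) of $M_i$ to obtain the SVD matrices U, Σ, V, with $M_i = U\,\Sigma\,V^{T}$.

3. Construct the $k\times\left(1+d+d(d+1)/2\right)$ local Hessian matrix $[H]_i$ such that the first column is a vector of all ones, the next d columns are the first d columns of [U], and the remaining $d(d+1)/2$ columns are the element‐wise products $U_a U_b$ ($a \le b$) of those d columns.

4. Apply Gram‐Schmidt orthogonalization [37] to each local matrix $[H]_i$ and accumulate the last $d(d+1)/2$ orthonormal vectors of each into the global Hessian matrix [A] [14].

5. Compute the eigenpairs corresponding to the smallest eigenvalues of the Hessian matrix:

$$[A][V] = [V][\Lambda] \qquad (14)$$

6. Compute the low‐dimensional points [Y] in $\mathbb{R}^d$ from the eigenpairs:

$$[Y] = \left[\,v_2\ \cdots\ v_{d+1}\,\right]^{T} \qquad (15)$$

where $v_2,\dots,v_{d+1}$ are the eigenvectors of the second through (d+1)th smallest eigenvalues; the first eigenvector corresponds to the constant vector.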
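The six hLLE steps can be sketched as follows. This is a minimal, unoptimized NumPy illustration under several assumptions: neighbors are found by brute force, a QR factorization stands in for explicit Gram‐Schmidt (it yields the same orthonormal basis up to signs), normalization details of reference implementations are omitted, and, instead of taking eigenvectors 2 to d+1 directly, the constant direction is removed explicitly from the (d+1)‐dimensional null space, which is more robust when those eigenvalues are numerically degenerate. The name `hessian_lle` is hypothetical and not the SETDiR API.

```python
import numpy as np

def hessian_lle(X, k, d):
    """Hessian LLE sketch (steps 1-6); returns an n x d embedding of the rows of X."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    dp = d * (d + 1) // 2
    if k < 1 + d + dp:
        raise ValueError("k must be at least 1 + d + d(d+1)/2")
    # Brute-force k-nearest neighbours; column 0 of each sorted row is the point itself.
    sq = np.sum(X**2, axis=1)
    dist2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    nbrs = np.argsort(dist2, axis=1)[:, 1:k + 1]

    A = np.zeros((n, n))
    for i in range(n):
        idx = nbrs[i]
        Mi = X[idx] - X[idx].mean(axis=0)                      # step 1: centred k x D matrix
        U = np.linalg.svd(Mi, full_matrices=False)[0][:, :d]   # step 2: local tangent coordinates
        # Step 3: columns [1 | U | U_a * U_b for a <= b], shape k x (1 + d + dp)
        prods = [U[:, a] * U[:, b] for a in range(d) for b in range(a, d)]
        Hi = np.column_stack([np.ones(k), U] + prods)
        # Step 4: orthonormalize; the last dp columns are orthogonal to 1 and U
        Q, _ = np.linalg.qr(Hi)
        Wi = Q[:, 1 + d:]
        A[np.ix_(idx, idx)] += Wi @ Wi.T                       # accumulate the global Hessian
    # Step 5: the d + 1 smallest eigenpairs span the constants plus the embedding coordinates
    _, vecs = np.linalg.eigh(A)
    null = vecs[:, :d + 1]
    # Step 6: strip the constant direction, then take an orthonormal basis of what remains
    null = null - null.mean(axis=0)
    basis = np.linalg.svd(null, full_matrices=False)[0][:, :d]
    return basis * np.sqrt(n)

rng = np.random.default_rng(0)
params = rng.uniform(0.0, 1.0, size=(300, 2))               # hidden 2-D coordinates
R = np.array([[1.0, 0.0, 0.0], [0.0, 0.6, 0.8]])           # orthonormal rows: plane in 3-D
X = params @ R
Y = hessian_lle(X, k=10, d=2)
```

For this flat example, the hidden coordinates are recovered up to an affine map, since the local Hessian estimator annihilates every function that is linear on the manifold.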

An important point to note here is that, as discussed in the section ‘Methods: dimensionality reduction’, matrix [A] encodes the required information for each DR technique, and the construction of this matrix is what differentiates one spectral DR method from another. Matrix [A] is a normalized Euclidean distance matrix in the case of PCA, a normalized geodesic distance matrix in the case of Isomap, a normalized Hessian matrix for hLLE, and so on.

2.5 Dimensionality estimators

A key step in constructing the low‐dimensional points from the data is the choice of the low (optimal) dimensionality d. Methods such as PCA and Isomap offer an implicit, approximate way of estimating it through scree plots. We introduce a graph‐based technique that rigorously estimates the latent dimensionality of the data and can be used in conjunction with the scree plot.

2.5.1 Dimensionality from the scree plot

A scree plot is a plot of the eigenvalues arranged in decreasing order of magnitude. Scree plots obtained from PCA and Isomap (distance‐preserving methods) give an estimate of the dimensionality. A heuristic way of identifying the dimensionality is to locate the elbow in the scree plot. A more quantitative estimate is obtained by requiring the percentage variability pvar(d) to reach a chosen minimum threshold. If $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n$ are the eigenvalues arranged in descending order, the percentage variability covered by the first d eigenvalues is

$$\mathrm{pvar}(d) = \frac{\sum_{i=1}^{d}\lambda_i}{\sum_{i=1}^{n}\lambda_i}\times 100\%$$

A common approach is to choose the smallest d that accounts for 95% of the variability.
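The pvar(d) criterion takes only a few lines. This sketch assumes non‐negative eigenvalues (Isomap can produce small negative ones, which one would typically clip or drop first); the function names and the example eigenvalues are hypothetical.

```python
def pvar(eigenvalues, d):
    """Percentage of variability captured by the d largest eigenvalues."""
    lam = sorted(eigenvalues, reverse=True)
    return 100.0 * sum(lam[:d]) / sum(lam)

def scree_dimension(eigenvalues, threshold=95.0):
    """Smallest d whose pvar(d) reaches the threshold (in percent)."""
    for d in range(1, len(eigenvalues) + 1):
        if pvar(eigenvalues, d) >= threshold:
            return d
    return len(eigenvalues)

eigs = [6.0, 2.5, 1.2, 0.2, 0.1]
print([round(pvar(eigs, d), 1) for d in range(1, 6)])  # [60.0, 85.0, 97.0, 99.0, 100.0]
print(scree_dimension(eigs))                           # 3
```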

2.5.2 Geodesic minimal spanning tree estimator

We have recently utilized a dimensionality estimator based on the BHH (Beardwood‐Halton‐Hammersley) theorem [41]. This theorem states that the rate of convergence of the length of the minimal spanning treeᵃ gives a measure of the latent dimensionality. It allows one to express the dimensionality d of an unordered dataset as a function of the length of the geodesic minimal spanning tree (GMST) of the graph of the dataset. Specifically, the GMST length $L_n$ grows as $n^{(d-1)/d}$ with the number n of randomly chosen data points, so the slope m of the $\log(L_n)$ vs. $\log(n)$ plot provides an estimate of the dimensionality: $d = 1/(1-m)$ [19].
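A minimal sketch of the slope estimate follows. It assumes the Euclidean minimal spanning tree of the complete graph as a stand‐in for the geodesic MST (a geodesic variant would restrict the edges to a k‐nearest‐neighbor graph, as in Isomap). The function names, subset sizes, and random seeds are illustrative choices, not SETDiR's.

```python
import numpy as np

def mst_length(X):
    """Total edge length of the Euclidean minimal spanning tree (Prim's algorithm, O(n^2))."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    in_tree = np.zeros(n, dtype=bool)
    best = np.full(n, np.inf)   # length of the cheapest edge linking each vertex to the tree
    best[0] = 0.0
    total = 0.0
    for _ in range(n):
        v = int(np.argmin(np.where(in_tree, np.inf, best)))
        in_tree[v] = True
        total += best[v]
        best = np.minimum(best, np.linalg.norm(X - X[v], axis=1))
    return total

def mst_dimension(X, sizes, seed=0):
    """Fit log(L_n) = m log(n) + c over random subsets; BHH scaling gives d = 1 / (1 - m)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    log_n, log_L = [], []
    for n in sizes:
        subset = X[rng.choice(len(X), size=n, replace=False)]
        log_n.append(np.log(n))
        log_L.append(np.log(mst_length(subset)))
    m = np.polyfit(log_n, log_L, 1)[0]
    return 1.0 / (1.0 - m)

rng = np.random.default_rng(42)
square = rng.uniform(size=(1600, 2))        # intrinsic dimension of this sample is 2
print(mst_dimension(square, [200, 400, 800, 1600]))
```

In practice, one would average the MST length over several random subsets per size to reduce the variance of the fitted slope.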

2.5.3 Correlation dimension

Correlation dimension is a space‐filling dimension derived from the more general fractal (q‐)dimension

$$d_q = \lim_{\varepsilon\to 0}\frac{1}{q-1}\,\frac{\log\int \mu\!\left(\bar{B}_\varepsilon(z)\right)^{q-1}\,d\mu(z)}{\log\varepsilon}$$

by setting q = 2. Here, μ is a Borel probability measure on a metric space $(\mathcal{Y},\rho)$, and $\bar{B}_\varepsilon(z)$ is a closed ball of radius ε centered on z.

Numerically, the correlation dimension is defined as

$$d_{corr} = \lim_{\varepsilon\to 0}\frac{\log C(\varepsilon)}{\log\varepsilon}, \qquad C(\varepsilon) = \frac{2}{n(n-1)}\sum_{i<j} H\!\left(\varepsilon - \|y_i - y_j\|\right)$$

where H is the Heaviside step function, so that C(ε) measures the proportion of pairwise distances less than ε [23],[42]. Intuitively, ε acts like a window through which one zooms in on the data. Too small an ε renders the data as isolated points, while too large an ε makes the entire dataset look like a single fuzzy spot. Hence, the correlation dimension is sensitive to the choice of ε. Note, however, that the correlation dimension provides the user with a lower bound on the optimal dimensionality.
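Because the limit ε → 0 cannot be taken on finite data, a common practical estimate is the slope of log C(ε) between two scales $\varepsilon_1 < \varepsilon_2$. The sketch below assumes that two‐scale form; the function names and the ε values in the usage lines are illustrative choices, and the dense $n\times n$ distance matrix limits it to a few thousand points.

```python
import numpy as np

def correlation_sum(X, eps):
    """C(eps): fraction of point pairs (i < j) whose distance is below eps."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    dist = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    iu = np.triu_indices(n, k=1)                  # each unordered pair counted once
    return np.mean(dist[iu] < eps)

def correlation_dimension(X, eps1, eps2):
    """Two-scale estimate: slope of log C(eps) between eps1 and eps2."""
    c1, c2 = correlation_sum(X, eps1), correlation_sum(X, eps2)
    return (np.log(c2) - np.log(c1)) / (np.log(eps2) - np.log(eps1))

rng = np.random.default_rng(0)
t = rng.uniform(size=800)
line = np.outer(t, [2.0, 1.0, 2.0]) / 3.0         # unit-length segment in 3-D (intrinsic d = 1)
square = rng.uniform(size=(800, 2))                # unit square (intrinsic d = 2)
print(correlation_dimension(line, 0.01, 0.05))
print(correlation_dimension(square, 0.02, 0.1))
```

Rerunning with other (ε₁, ε₂) pairs shows the sensitivity to ε described above: very small windows contain too few pairs, and large windows are biased by the boundaries of the data.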

2.6 Software: SETDiR

These DR techniques are packaged into a modular, scalable framework for ease of use by the materials science community. We call this package SETDiR. This framework contains two major components:

1. Core functionality: developed in C++

2. User interface: developed in Java (Swing)

Figure 2 describes the scope of the functionality of both modules in SETDiR.

2.6.1 Core functionality

The core functionality is developed in the object‐oriented C++ programming language. It implements the following methods: PCA, Isomap, LLE, and dimensionality estimators such as the GMST and correlation dimension estimators [23].

2.6.2 User interface

A graphical user interface (shown in Figure 3) is developed using Java™ Swing components, with the following features that make it user‐friendly:

1. It abstracts the user from the mathematical and programming details.

2. It displays the results graphically and enhances the visualization of low‐dimensional points.

3. It allows easy post‐processing of results: built‐in cluster analysis and the ability to save plots as image files.

4. It organizes the settings into tabs: depending on the user's expertise, the solver settings are grouped into Basic User and Advanced User tabs, which shield new users from otherwise overwhelming details.

This framework can be downloaded from SETDiR (http://setdir.engineering.iastate.edu/doku.php?id=download). A more detailed discussion of the parallel features of the code is deferred to another publication. We next showcase the framework and the mathematical strategies on the apatite dataset.

3 Results and discussion

In this section, we compare and contrast the algorithms on a dataset of apatites, which are of considerable technological and scientific significance. Apatites can accommodate numerous chemical substitutions and exhibit a broad range of multifunctional properties. Their rich chemical and structural diversity provides fertile ground for the synthesis of technologically relevant compounds [25]‐[29]. Chemically, apatites are conveniently described by the general formula $A^{I}_{4}A^{II}_{6}(BO_4)_6X_2$, where $A^{I}$ and $A^{II}$ are distinct crystallographic sites that usually accommodate larger monovalent (Na⁺, Li⁺, etc.), divalent (Ca²⁺, Sr²⁺, Ba²⁺, Pb²⁺, etc.), and trivalent (Y³⁺, Ce³⁺, La³⁺, etc.) cations; the B‐site is occupied by smaller tetrahedrally coordinated cations (Si⁴⁺, P⁵⁺, V⁵⁺, Cr⁵⁺, etc.); and the X‐site is occupied by halides (F⁻, Cl⁻, Br⁻), oxides, and hydroxides. Establishing the relationship between the microscopic and macroscopic properties of apatite compounds can deepen our understanding and promote the use of apatites in various technological applications. For example, information about the relative stability of apatite compounds can promote their use as host materials for immobilizing toxic elements such as lead, cadmium, and mercury, i.e., by identifying an apatite composition that contains at least one of these toxic elements yet remains thermodynamically stable. DR techniques offer unique insights into originally intractable high‐dimensional datasets by enabling visual clustering and pattern association, thereby helping to establish process‐structure‐property relationships for chemically complex solids such as apatites.

3.1 Apatite data description

The crystal structure of the aristotype apatite with a hexagonal unit cell is shown in Figure 4, with the atoms projected along the [001] axis. The polyhedral representations of the $A^{I}O_6$ and $BO_4$ structural units are clearly shown, with the $\mathrm{Ca}^{II}$ site (pink atoms) and the F site (green atoms) occupying the tunnel. The thin black line outlines the unit cell of the hexagonal lattice.

The sample apatite dataset consists of 25 compositions described using 29 structural descriptors. These structural descriptors, when modified, affect the crystal structure [44]. Therefore, by establishing the relationship between the crystal structure and these descriptors and analyzing the clustering of different compositions, conclusions can be drawn about how changes in the structural descriptors (which define the atomic features) could affect macroscopic properties such as elastic modulus, band gap, and conductivity. The bond length, bond angle, lattice constant, and total energy data are taken from the work of Mercier et al. [26]; the ionic radii are taken from the work of Shannon [45], and the electronegativity data are based on the Pauling scale [46]. The ionic radii of the $A^{I}$ site correspond to a coordination number of nine, and those of the $A^{II}$ site to a coordination number of seven (when the X‐site is F⁻) or eight (when the X‐site is Cl⁻ or Br⁻). Our database describes Ca, Ba, Sr, Pb, Hg, Zn, and Cd in the A‐site; P, As, Cr, V, and Mn in the B‐site; and F, Cl, and Br in the X‐site. The 25 compounds considered in this study belong to the aristotype P6₃/m hexagonal space group. We apply SETDiR to the apatite data and present some of the results below. More information regarding the source of the apatite data can be found in [44]. A preliminary analysis (focusing only on PCA) can be found in [30].

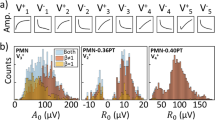

3.2 Dimensionality estimation

SETDiR first estimates the dimensionality using the scree plot. A scree plot is a plot of eigenvalues vs. eigenvalue indices. The occurrence of an elbow (or a sharp drop in the eigenvalues) in a scree plot gives an estimate of the dimensionality of the data. Figure 5 compares the scree plots obtained with and without normalization of the input vectors $\{x_0,x_1,\dots,x_{n-1}\}$. For comparison, we plot the eigenvalues obtained from both PCA and Isomap. The plot shows how the second eigenvalue collapses to zero when the input vectors are not normalized, which emphasizes the importance of normalizing the input vectorsᵇ. It is also interesting to compare the PCA and Isomap eigenvalues for normalized input: PCA, being a linear method, over‐estimates the dimensionality as 5, while Isomap estimates it to be 3. SETDiR subsequently uses the geodesic minimal spanning tree method to estimate the dimensionality of the apatite data. This method gives a rigorous estimate of 3 (Figure 6), which matches the outcome of the more heuristic scree plot estimate.
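The effect of normalization on the scree plot can be reproduced on synthetic data. The text does not state which normalization SETDiR applies; this sketch assumes column‐wise z‐score standardization of each descriptor and uses covariance‐based PCA eigenvalues (which match the non‐zero eigenvalues of the double‐centered Gram matrix up to a factor of n − 1). The data, scales, and function names are hypothetical.

```python
import numpy as np

def pca_eigenvalues(X):
    """Eigenvalues (descending) of the sample covariance of the rows of X."""
    Xc = X - X.mean(axis=0)
    return np.sort(np.linalg.eigvalsh(Xc.T @ Xc / (len(X) - 1)))[::-1]

def standardize(X):
    """Z-score each column (descriptor): zero mean, unit sample standard deviation."""
    return (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

rng = np.random.default_rng(0)
# Hypothetical descriptors: one recorded in large units, three of order one.
X = rng.normal(size=(25, 4)) * np.array([1000.0, 1.0, 1.0, 1.0])
raw = pca_eigenvalues(X)
scaled = pca_eigenvalues(standardize(X))
print(raw[1] / raw[0])        # tiny: the large-unit column swamps the rest
print(scaled[1] / scaled[0])  # of order one after standardization
```

Without normalization, a single descriptor with large numerical values dominates the variance, so every eigenvalue after the first appears to collapse toward zero, mirroring the behavior seen in the unnormalized scree plot.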

3.3 Low‐dimensional plots

In this section, we discuss the visual interpretation of the low‐dimensional plots obtained by applying the dimensionality reduction techniques (PCA, Isomap, LLE, and hLLE) to a set of apatites described using structural descriptors. Figure 7 (left) shows the 2D plot of principal components 2 and 3. We show this plot because the PC2‐PC3 map captures a pattern similar to that of Isomap components 1 and 2. Although we find associations among compounds similar to those shown in Figure 7 (right), the information is manifested differently. This is mainly attributed to the differences in the underlying mathematics of the two techniques: PCA is essentially linear, whereas Isomap is non‐linear. To further interpret the hidden information captured by the Isomap classification map (Figure 7), we focus on the three regions separately.

Apatite PCA (left) and Isomap (right) result interpretation [43].

Figure 7 (right) shows a two‐dimensional classification map with Isomap components 1 and 2 on the orthogonal axes. The map groups the apatite compounds into three distinct regions that capture various interactions between A‐, B‐, and X‐site ions in the complex apatite crystal structure. Region 1 corresponds to apatite compounds with the fluoride (F) ion in the X‐site. All compounds in this region contain only F in the X‐site but have different A‐site (Ca, Sr, Pb, Ba, Cd, Zn) and B‐site (P, Mn, V) elements. Therefore, this region separates F‐apatites from Cl‐ and Br‐apatites. Region 2 contains apatite compounds with phosphorus (P) in the B‐site and Cl or Br ions in the X‐site. The uniqueness of this region stems mainly from the presence of only the smaller P ions in the B‐site. Similarly, region 3 contains apatite compounds with Cl ions in the X‐site and the larger Cr, V, and As cations in the B‐site.

Figure 8 (right) presents the results from hLLE. Compounds with a highly covalent A‐site cation (e.g., Hg²⁺ and Pb²⁺) and a highly covalent B‐site cation (P⁵⁺) clearly separate from the rest. An exception to this rule is Pb10(CrO4)6Cl2. Our PCA‐derived structure map revealed a similar pattern, i.e., Pb10(CrO4)6Cl2 was found not to obey the general trend [44]. Note that the presence of the Cr cation in the B‐site is known to cause structural distortions in apatites.

Apatite LLE (left) and hLLE (right) result interpretation [43].

For example, Sr10(PO4)6Cl2 has P6₃/m symmetry, whereas Sr10(CrO4)6Cl2 has a distorted P6₃ symmetry [28]. Based on previous PCA work [44], we attribute this exception to two bond distortion angles: (i) the rotation angle of the $A^{II}$‐$A^{II}$‐$A^{II}$ triangular units and (ii) the angle that the $A^{I}$‐O1 bond makes with the c‐axis. In contrast to Hessian LLE, the LLE result (Figure 8, left) shows no clear pattern with respect to chemical bonding.

Figure 9 shows a zoomed‐in plot of the Hessian LLE resultᶜ. Around the origin, we find two clusters of compounds: (i) one on the left, with negative component 1 values, corresponding to compounds that have ionic alkaline earth metal cations in the A‐site, and (ii) one on the right, with positive component 1 values, corresponding to compounds that have covalent A‐site cations. An exception here is Ca10(CrO4)6Cl2, which is found in the covalent A‐site cluster, indicating that Ca10(CrO4)6Cl2 may have a distorted symmetry. It is important to recognize that neither PCA nor Isomap identifies Ca10(CrO4)6Cl2 as an exception. Because the LLE analysis yields no comparable insights, we do not discuss the LLE results further.

Apatite hLLE result interpretation [43].

Fully understanding high‐dimensional correlations and mappings requires exploring several manifold methods. Hence, we now examine the Isomap analysis in more detail.

In Figure 10 (region 1), the ionic radii of the A‐site elements increase along the direction shown, with Zn²⁺ the smallest and Ba²⁺ the largest. Note that this A‐site ionic radius trend is not clearly visible in the PC2‐PC3 classification map (Figure 7). A key outcome of Figure 10 is the identification of Pb10(PO4)6F2 as an outlier. On Shannon's ionic radii scale, Pb²⁺ is larger than Ca²⁺ but smaller than Sr²⁺. If apatites behaved as purely ionic crystals, Pb10(PO4)6F2 would therefore be expected to lie between Ca10(PO4)6F2 and Sr10(PO4)6F2 in the map; it does not. This deviation can be attributed to the electronic structure of Pb²⁺ ions [47]. Theoretical electronic structure calculations show that, in the atom‐projected densities of states, Pb²⁺ ions have active 6s² lone‐pair electrons that hybridize with oxygen 2p electrons, resulting in strong covalent bonding. Indeed, recent density functional theory (DFT) calculations [48] show that the electronic band gap of Pb10(PO4)6F2 at the generalized gradient approximation (GGA) level is 3.7 eV, roughly 1.7 to 2.0 eV smaller than those of Ca10(PO4)6F2 (5.67 eV) and Sr10(PO4)6F2 (5.35 eV).
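The outlier argument above is an ordering test: when compounds are sorted by an A‐site descriptor, they should appear in the same order along the trend direction of the map. The sketch below flags a compound if removing it restores a monotonic order. The labels and numbers are toy values, not Shannon radii or Isomap coordinates, and both helper names are hypothetical.

```python
def is_non_decreasing(values):
    """True if every value is at least as large as the one before it."""
    return all(a <= b for a, b in zip(values, values[1:]))


def order_outliers(descriptor, coordinate):
    """Flag compounds whose removal restores a monotonic trend.

    descriptor: dict label -> descriptor value (e.g. an A-site ionic radius).
    coordinate: dict label -> position projected onto the trend direction
        of the map, oriented so that the expected trend is increasing.
    """
    labels = sorted(descriptor, key=descriptor.get)
    coords = [coordinate[label] for label in labels]
    if is_non_decreasing(coords):
        return []
    return [
        label
        for i, label in enumerate(labels)
        if is_non_decreasing(coords[:i] + coords[i + 1:])
    ]


# Toy values: m2 should sit between m1 and m3 but lies far beyond them.
radius = {"m1": 1.0, "m2": 2.0, "m3": 3.0, "m4": 4.0}
position = {"m1": 0.0, "m2": 5.0, "m3": 2.0, "m4": 3.0}
print(order_outliers(radius, position))  # prints ['m2']
```

Testing by removal, rather than comparing each compound only with its immediate neighbors, avoids also flagging the well‐behaved compounds that happen to sit next to the outlier. It assumes a single outlier; several simultaneous outliers would call for something like a longest non‐decreasing subsequence.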

Figure 10. Apatite Isomap result interpretation (region 1) [43].

In our dataset, the electronic structure of the A‐site elements was quantified using Pauling's electronegativity values. Although PCA captures this behavior, the dominant effect of the electronic structure of Pb²⁺ ions is more transparent within the non‐linear Isomap framework.
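For readers less familiar with Isomap, its core computation can be sketched in NumPy: build a k‐nearest‐neighbor graph, approximate geodesic distances by shortest paths through that graph (here with Floyd's all‐pairs algorithm), and embed those distances with classical multidimensional scaling. This is a minimal sketch under those assumptions, not the SETDiR implementation; the function name and the default `n_neighbors` are illustrative choices.

```python
import numpy as np


def isomap(X, n_neighbors=5, n_components=2):
    """Minimal Isomap: k-NN graph, Floyd-Warshall geodesics, classical MDS.

    X: (n_samples, n_features) array, e.g. normalized structural descriptors.
    Assumes distinct samples and a connected neighborhood graph.
    """
    n = X.shape[0]
    # Pairwise Euclidean distances in descriptor space.
    diff = X[:, None, :] - X[None, :, :]
    D = np.sqrt((diff ** 2).sum(axis=-1))

    # Symmetric k-nearest-neighbor graph; column 0 of argsort is the sample itself.
    G = np.full((n, n), np.inf)
    np.fill_diagonal(G, 0.0)
    nbrs = np.argsort(D, axis=1)[:, 1:n_neighbors + 1]
    for i in range(n):
        G[i, nbrs[i]] = D[i, nbrs[i]]
        G[nbrs[i], i] = D[i, nbrs[i]]

    # Floyd-Warshall: shortest paths through the graph approximate geodesics.
    for k in range(n):
        G = np.minimum(G, G[:, k:k + 1] + G[k:k + 1, :])
    if np.isinf(G).any():
        raise ValueError("neighborhood graph is disconnected; increase n_neighbors")

    # Classical MDS on the squared geodesic distances.
    J = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * J @ (G ** 2) @ J
    eigvals, eigvecs = np.linalg.eigh(B)
    top = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, top] * np.sqrt(np.maximum(eigvals[top], 0.0))


# Usage: points on a straight line in 3-D, where geodesic and Euclidean distances agree.
t = np.linspace(0.0, 1.0, 10)
X = np.column_stack([t, 2 * t, -t])
Y = isomap(X, n_neighbors=2, n_components=1)
print(Y.shape)
```

On the straight‐line example the one‐dimensional embedding reproduces the spacing of the points up to a sign and a shift. The O(n³) Floyd–Warshall step is inexpensive for a few dozen compounds; larger datasets would normally use Dijkstra's algorithm on the sparse graph.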

Figure 10 also indicates that the bond distortions of the Zn apatite differ from those of the other compounds. This observation is consistent with the fact that Zn10(PO4)6F2 has not been obtained experimentally, owing to difficulties in its synthesis [49]. The relative position of Hg10(PO4)6F2, on the other hand, offers intriguing insights. In fact, the uniqueness of Hg10(PO4)6F2 chemistry was previously detected in a PCA‐derived structure map [44], which clearly identified this composition as an outlier among the isostructural compounds. Guided by this insight from PCA, Balachandran et al. [48] recently used DFT calculations to show that the ground‐state structure of Hg10(PO4)6F2 is triclinic (space group ). Although the ionic size of Hg²⁺ is very close to that of Ca²⁺, the aristotype P6₃/m symmetry distorts to triclinic symmetry in Hg10(PO4)6F2 because of the mixing of the fully occupied Hg 5d¹⁰ orbitals with the empty Hg 6s⁰ orbitals. This mixing is unavailable in Ca10(PO4)6F2, which lacks orbitals of the appropriate symmetry.

Figure 11 highlights region 2, where the apatite compounds show a clear trend with respect to the ionic radii of the A‐site elements. As in region 1, the Pb apatites appear as outliers in region 2, and we attribute their deviation from the expected trend to the unique electronic structure of Pb²⁺ cations, which form covalent bonds with oxygen 2p states. This covalent bonding in the Pb compounds appears to be independent of the X‐site anion when the B‐site is occupied by phosphorus. In Figure 11, Hg10(PO4)6Cl2 lies close to Ca10(PO4)6Br2, indicating some similarity in the bond distortions of the two compounds. Comparing the relative positions of all Cl‐containing apatites (except the Pb‐based compounds) in region 2, we predict that Hg10(PO4)6Cl2 adopts a stable apatite structure type, in sharp contrast to Hg10(PO4)6F2.
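Statements such as "Hg10(PO4)6Cl2 lies close to Ca10(PO4)6Br2" rest on nearest‐neighbor distances in the low‐dimensional map. A minimal sketch of that lookup, assuming proximity is measured as Euclidean distance in the embedding; the function name, labels, and coordinates are hypothetical.

```python
import math


def nearest_neighbors(embedding, query, k=1):
    """Return the k labels closest to `query` in a low-dimensional map.

    embedding: dict label -> tuple of embedding coordinates.
    Proximity is measured as Euclidean distance in the embedding.
    """
    origin = embedding[query]
    others = [label for label in embedding if label != query]
    others.sort(key=lambda label: math.dist(origin, embedding[label]))
    return others[:k]


# Hypothetical 2-D coordinates for illustration only.
emb = {"x": (0.0, 0.0), "y": (0.1, 0.05), "z": (1.0, 1.0), "w": (-2.0, 0.0)}
print(nearest_neighbors(emb, "x", k=2))  # prints ['y', 'z']
```

Distances in an Isomap map inherit what the method preserves (approximate geodesic distances), so proximity is more meaningful within a region than between widely separated parts of the plot.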

Figure 11. Apatite Isomap result interpretation (region 2) [43].

Figure 12 shows region 3, which contains clusters of apatite compounds with Cl ions in the X‐site and the larger V, Cr, and As cations in the B‐site. The ionic radius of the A‐site element increases in the direction shown in the figure, and in this case the Pb apatites are not outliers. We attribute this behavior to the presence of the large V, Cr, and As cations in the B‐site (compared with the smaller P cations in regions 1 and 2). Region 3 also reveals a complex relationship between the A‐site and B‐site chemistries in Cl apatites.

Figure 12. Apatite Isomap result interpretation (region 3) [43].

Several topological observations can be made about the data. First, because the low‐dimensional points obtained with Isomap and PCA differ, the apatite data could be interpreted as lying on a non‐linear manifold in the embedding space. However, a counterargument can be made from the fact that the PC2‐PC3 plot shows trends and clustering similar to those of the Isomap1‐Isomap2 plot. One possible explanation is that outliers dominate and deflect the first principal component (PC1), while Isomap is unaffected by these outliers; in that case, the data could actually lie on a linear manifold. Second, the different clustering observed across dimensionality reduction techniques may imply that the patterns seen in the PCA and Isomap clusters are a function of the distances preserved, whereas those in the hLLE and LLE results are a function of the topology preserved. Hence, the features represented by these clusters are those preserved throughout the reduction from the embedding space to the lower‐dimensional space.
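The outlier explanation can be illustrated on synthetic data: a single extreme point can capture PC1, pushing the dominant structure of the remaining points down to PC2. The data below are synthetic and chosen only to show the effect; `principal_axes` is a hypothetical helper, not part of SETDiR.

```python
import numpy as np


def principal_axes(X):
    """Principal axes (as rows) of X, ordered by decreasing variance."""
    Xc = X - X.mean(axis=0)
    # The right singular vectors of the centered data are the principal axes.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Vt


rng = np.random.default_rng(0)
# Synthetic data: large spread along x, smaller along y, none along z.
X = np.column_stack([rng.normal(0.0, 3.0, 50), rng.normal(0.0, 1.0, 50), np.zeros(50)])
# The same data plus one extreme point far out along z.
X_out = np.vstack([X, [0.0, 0.0, 60.0]])

print(np.round(np.abs(principal_axes(X)[0]), 2))      # PC1 of the clean data
print(np.round(np.abs(principal_axes(X_out)[:2]), 2))  # PC1, PC2 with the outlier
```

In this toy case PC1 of the contaminated data points almost entirely along z, while its PC2 recovers the x direction that was PC1 of the clean data, so the informative structure shifts one component down.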

4 Conclusions

In this paper, we have detailed a mathematical framework of various data dimensionality reduction techniques for constructing reduced order models of complicated datasets and discussed the key questions involved in data selection. We introduced the basic principles behind data dimensionality reduction (see endnote d). The techniques are packaged into a modular, computationally scalable software framework with a graphical user interface, SETDiR. This interface separates the mathematical and computational aspects from the scientific applications, thus significantly enhancing the utility of DR techniques to the scientific community. The applicability of this framework in constructing reduced order models of complicated materials datasets is illustrated with an example dataset of apatites: SETDiR was applied to 25 apatites described by 29 structural descriptors. The resulting low‐dimensional plots revealed previously unappreciated correlations between structural descriptors, such as ionic radius and bond covalence, and properties such as apatite compound formability and crystal symmetry. The plots also suggested that the surface on which the data lie could be non‐linear. This information is crucial, as it can promote the use of apatite materials as potential host lattices for immobilizing toxic elements.

5 Availability of supporting data

Information regarding the source of the apatite data can be found in [44].

6 Endnotes

a A tree is a graph in which each pair of vertices is connected by exactly one path. A spanning tree of a graph G(V,E) is a subgraph that is a tree and includes all vertices of the graph. A minimal spanning tree (MST) of a weighted graph G(V,E,W) is a spanning tree whose sum of edge weights (the length of the MST) is minimal. A geodesic minimal spanning tree (GMST) is an MST whose edge weights represent geodesic distances. Computationally, the GMST is obtained with Prim's greedy algorithm [50].
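Prim's algorithm grows the tree one vertex at a time, always adding the cheapest edge that connects a vertex not yet in the tree. A dense O(n²) sketch, assuming the edge weights are supplied as a full symmetric matrix. For a GMST those weights would be geodesic distances (for example, shortest‐path lengths on a neighborhood graph); the usage example uses Euclidean distances for brevity, and the function name is illustrative.

```python
import math


def prim_mst_length(W):
    """Length of a minimal spanning tree of a complete weighted graph.

    W: symmetric n x n list of lists of edge weights. For a GMST, W would hold
    geodesic distances (e.g. shortest-path distances on a k-NN graph).
    """
    n = len(W)
    in_tree = [False] * n
    best = [math.inf] * n  # cheapest known edge linking each vertex to the tree
    if n:
        best[0] = 0.0      # start the tree from vertex 0
    total = 0.0
    for _ in range(n):
        # Greedy step: add the outside vertex with the cheapest connecting edge.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        total += best[u]
        # Relax the connection costs of the remaining vertices through u.
        for v in range(n):
            if not in_tree[v] and W[u][v] < best[v]:
                best[v] = W[u][v]
    return total


# Usage: corners of a unit square; the MST uses three unit-length sides.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
W = [[math.dist(p, q) for q in square] for p in square]
print(prim_mst_length(W))  # prints 3.0
```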

b Normalization of a variable rescales its values, originally spanning [a, b], by dividing each value by the maximum absolute value of the variable, so that the result lies within [−1, 1], or within [0, 1] if all values are non‐negative.
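A minimal sketch of this normalization for one descriptor column; the function name is hypothetical, and in practice it would be applied to each descriptor separately.

```python
def normalize_by_max_abs(values):
    """Divide a descriptor column by its largest absolute value.

    Results lie in [-1, 1]; if all values are non-negative they lie in [0, 1].
    """
    m = max(abs(v) for v in values)
    if m == 0:
        return list(values)  # all zeros: nothing to rescale
    return [v / m for v in values]


print(normalize_by_max_abs([2.0, -4.0, 1.0]))  # prints [0.5, -1.0, 0.25]
```

Note that for a strictly positive range [a, b] this scaling yields [a/b, 1] rather than the full [0, 1]; min‐max scaling, (v − a)/(b − a), would be the alternative if the full interval is required.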

c Hessian LLE is highly sensitive to the neighborhood size and even more sensitive to the input estimate of the dimensionality. An incorrect dimensionality estimate leads to tangent planes of the wrong dimension, which in turn yields a sub‐optimal low‐dimensional representation.
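One concrete source of this sensitivity is that Hessian LLE fits, in every neighborhood, a constant term, d linear tangent coordinates, and d(d+1)/2 quadratic terms, so each neighborhood must contain at least 1 + d(d+3)/2 points. As far as we know, scikit‐learn's `LocallyLinearEmbedding(method="hessian")` enforces the equivalent condition `n_neighbors > n_components * (n_components + 3) / 2`. The helper below is a hypothetical illustration of that bound.

```python
def hlle_min_neighbors(d):
    """Smallest neighborhood size k for which Hessian LLE is well posed.

    Each local fit uses a constant term, d linear tangent coordinates and
    d(d+1)/2 quadratic terms, so k >= 1 + d + d(d+1)/2 = 1 + d(d+3)/2.
    d(d+3) is always even, so integer division is exact.
    """
    return 1 + d * (d + 3) // 2


for d in range(1, 5):
    print(d, hlle_min_neighbors(d))
```

Because the bound grows quadratically in d, overestimating the intrinsic dimensionality quickly forces larger neighborhoods, which for a dataset of a few dozen compounds can blur the local geometry that hLLE is meant to capture.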

d A comprehensive catalogue of non‐linear dimensionality reduction techniques, along with the mathematical prerequisites for understanding dimensionality reduction, can be found in [23].

References

[1] Rabe KM, Phillips JC, Villars P, Brown ID: Global multinary structural chemistry of stable quasicrystals, high‐Tc ferroelectrics, and high‐Tc superconductors. Phys Rev B 1992, 45:7650–7676. 10.1103/PhysRevB.45.7650

[2] Morgan D, Rodgers J, Ceder G: Automatic construction, implementation and assessment of Pettifor maps. J Phys: Condens Matter 2003, 15(25):4361.

[3] Chawla N, Ganesh VV, Wunsch B: Three‐dimensional (3D) microstructure visualization and finite element modeling of the mechanical behavior of SiC particle reinforced aluminum composites. Scripta Materialia 2004, 51(2):161–165. 10.1016/j.scriptamat.2004.03.043

[4] Langer SA, Fuller ER Jr, Carter WC: OOF: an image‐based finite‐element analysis of material microstructures. Comput Sci Eng 2001, 3(3):15–23. 10.1109/5992.919261

[5] Liu ZK, Chen LQ, Raghavan P, Du Q, Sofo JO, Langer SA, Wolverton C: An integrated framework for multi‐scale materials simulation and design. J Comput Aided Mater Des 2004, 11:183–199. 10.1007/s10820-005-3173-2

[6] van Rietbergen B, Weinans H, Huiskes R, Odgaard A: A new method to determine trabecular bone elastic properties and loading using micromechanical finite‐element models. J Biomech 1995, 28(1):69–81. 10.1016/0021-9290(95)80008-5

[7] Yue ZQ, Chen S, Tham LG: Finite element modeling of geomaterials using digital image processing. Comput Geotechnics 2003, 30(5):375–397. 10.1016/S0266-352X(03)00015-6

[8] McVeigh C, Liu WK: Linking microstructure and properties through a predictive multiresolution continuum. Comput Methods Appl Mech Eng 2008, 197(41–42):3268–3290. 10.1016/j.cma.2007.12.020

[9] Zabaras N, Sundararaghavan V, Sankaran S: An information‐theoretic approach for obtaining property PDFs from macro specifications of microstructural variability. TMS Lett 2006, 3:1–2.

[10] Meredith JC, Smith AP, Karim A, Amis EJ: Combinatorial materials science for polymer thin‐film dewetting. Macromolecules 2000, 33(26):9747–9756. 10.1021/ma001298g

[11] Takeuchi I, Lauterbach J, Fasolka MJ: Combinatorial materials synthesis. Mater Today 2005, 8(10):18–26. 10.1016/S1369-7021(05)71121-4

[12] Lumley JL: The structure of inhomogeneous turbulent flows. In: Atmospheric turbulence and radio wave propagation. 1967:166–178.

[13] Tenenbaum JB, de Silva V, Langford JC: A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290(5500):2319–2323. 10.1126/science.290.5500.2319

[14] Donoho DL, Grimes C: Hessian eigenmaps: new locally linear embedding techniques for high‐dimensional data. Proc Natl Acad Sci 2003, 100:5591–5596. 10.1073/pnas.1031596100

[15] Jain A, Ong SP, Hautier G, Chen W, Richards WD, Dacek S, Cholia S, Gunter D, Skinner D, Ceder G, Persson K: The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater 2013, 1(1):011002. 10.1063/1.4812323

[16] Le Page Y: Data mining in and around crystal structure databases. MRS Bull 2006, 31:991–994.

[17] Rajan K, Suh C, Mendez PF: Principal component analysis and dimensional analysis as materials informatics tools to reduce dimensionality in materials science and engineering. Stat Anal Data Mining 2009, 1(6):361–371. 10.1002/sam.10031

[18] Brasca R, Vergara LI, Passeggi MCG, Ferrona J: Chemical changes of titanium and titanium dioxide under electron bombardment. Mater Res 2007, 10:283–288.

[19] Ganapathysubramanian B, Zabaras N: A non‐linear dimension reduction methodology for generating data‐driven stochastic input models. J Comput Phys 2008, 227(13):6612–6637. 10.1016/j.jcp.2008.03.023

[20] Curtarolo S, Morgan D, Persson K, Rodgers J, Ceder G: Predicting crystal structures with data mining of quantum calculations. Phys Rev Lett 2003, 91:135503.

[21] Fischer CC, Tibbetts KJ, Morgan D, Ceder G: Predicting crystal structure by merging data mining with quantum mechanics. Nat Mater 2006, 5(8):641–646. 10.1038/nmat1691

[22] Morgan D, Ceder G, Curtarolo S: High‐throughput and data mining with ab initio methods. Meas Sci Technol 2005, 16(1):296. 10.1088/0957-0233/16/1/039

[23] Lee JA, Verleysen M: Nonlinear dimensionality reduction. Springer; 2007.

[24] Van der Maaten LJP, Postma EO, Van Den Herik HJ: Dimensionality reduction: a comparative review. 2009.

[25] Elliott JC: Structure and chemistry of the apatites and other calcium orthophosphates, volume 4. Elsevier, Amsterdam; 1994.

[26] Mercier PHJ, Le Page Y, Whitfield PS, Mitchell LD, Davidson IJ, White TJ: Geometrical parameterization of the crystal chemistry of P6₃/m apatites: comparison with experimental data and ab initio results. Acta Crystallogr Sect B: Structural Sci 2005, 61(6):635–655. 10.1107/S0108768105031125

[27] Pramana SS, Klooster WT, White TJ: A taxonomy of apatite frameworks for the crystal chemical design of fuel cell electrolytes. J Solid State Chem 2008, 181(8):1717–1722. 10.1016/j.jssc.2008.03.028

[28] White T, Ferraris C, Kim J, Madhavi S: Apatite: an adaptive framework structure. Rev Mineralogy Geochem 2005, 57(1):307–401. 10.2138/rmg.2005.57.10

[29] White TJ, Dong ZL: Structural derivation and crystal chemistry of apatites. Acta Crystallogr Sect B: Structural Sci 2003, 59(1):1–16. 10.1107/S0108768102019894

[30] Samudrala S, Rajan K, Ganapathysubramanian B: Data dimensionality reduction in materials science. In: Informatics for materials science and engineering: data‐driven discovery for accelerated experimentation and application. Elsevier Science; 2013.

[31] Bergman S: The kernel function and conformal mapping. Am Math Soc; 1950.

[32] Roweis ST, Saul LK: Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290(5500):2323–2326. 10.1126/science.290.5500.2323

[33] Fontanini A, Olsen M, Ganapathysubramanian B: Thermal comparison between ceiling diffusers and fabric ductwork diffusers for green buildings. Energy Buildings 2011, 43(11):2973–2987. 10.1016/j.enbuild.2011.07.005

[34] Amini H, Sollier E, Masaeli M, Xie Y, Ganapathysubramanian B, Stone HA, Di Carlo D: Engineering fluid flow using sequenced microstructures. Nat Commun 2013, 4:2013.

[35] Guo Q: Incorporating stochastic analysis in wind turbine design: data‐driven random temporal‐spatial parameterization and uncertainty quantification. Graduate Theses and Dissertations, Paper 13206, Iowa State University; 2013. http://lib.dr.iastate.edu/etd/13206

[36] Wodo O, Tirthapura S, Chaudhary S, Ganapathysubramanian B: A novel graph based formulation for characterizing morphology with application to organic solar cells. Org Electron 2012:1105–1113.

[37] Golub GH, Van Loan CF: Matrix computations. The Johns Hopkins University Press; 1996.

[38] Floyd RW: Algorithm 97: shortest path. Commun ACM 1962, 5(6):345. 10.1145/367766.368168

[39] Bernstein M, de Silva V, Langford JC, Tenenbaum JB: Graph approximations to geodesics on embedded manifolds. Technical report, Department of Psychology, Stanford University; 2000.

[40] Belkin M, Niyogi P: Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput 2003, 15(6):1373–1396. 10.1162/089976603321780317

[41] Beardwood J, Halton JH, Hammersley JM: The shortest path through many points. Math Proc Camb Philos Soc 1959, 55:299–327.

[42] Grassberger P, Procaccia I: Measuring the strangeness of strange attractors. Phys D: Nonlinear Phenomena 1983, 9(1–2):189–208. 10.1016/0167-2789(83)90298-1

[43] Balachandran PV: Statistical learning for chemical crystallography. PhD thesis, Iowa State University; 2011.

[44] Balachandran PV, Rajan K: Structure maps for AI4AII6(BO4)6X2 apatite compounds via data mining. Acta Crystallogr Sect B 2012, 68(1):24–33.

[45] Shannon RD: Revised effective ionic radii and systematic studies of interatomic distances in halides and chalcogenides. Acta Crystallogr Sect A: Crystal Phys Diffraction Theor Gen Crystallography 1976, 32(5):751–767. 10.1107/S0567739476001551

[46] Pauling L: The nature of the chemical bond and the structure of molecules and crystals: an introduction to modern structural chemistry, vol 18. Cornell University Press; 1960.

[47] Matsunaga K, Inamori H, Murata H: Theoretical trend of ion exchange ability with divalent cations in hydroxyapatite. Phys Rev B 2008, 78:094101.

[48] Balachandran PV, Rajan K, Rondinelli JM: Electronically driven structural transitions in A10(PO4)6F2 apatites (A = Ca, Sr, Pb, Cd and Hg). Acta Crystallogr Sect B 2014, 70:612–615.

[49] Flora NJ, Hamilton KW, Schaeffer RW, Yoder CH: A comparative study of the synthesis of calcium, strontium, barium, cadmium, and lead apatites in aqueous solution. Synthesis Reactivity Inorganic Metal‐organic Chem 2004, 34(3):503–521. 10.1081/SIM-120030437

[50] Prim RC: Shortest connection networks and some generalizations. Bell Syst Tech J 1957, 36(6):1389–1401. 10.1002/j.1538-7305.1957.tb01515.x

Acknowledgements

We gratefully acknowledge the support from the National Science Foundation (NSF) grant CDI‐NSF‐CDI‐PHY 09‐41576. KR acknowledges the support from NSF: DMR‐13‐07811 and DMS‐11‐25909, Department of Homeland Security/NSF‐ARI Program: CMMI 09‐389018; Army Research Office grant W911NF‐10‐0397, Air Force Office of Scientific Research SFA9550‐12‐1‐0456, and the Wilkinson Professorship of Interdisciplinary Engineering. BG also acknowledges the support from NSF CAREER CMMI‐11‐49365.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

SKS, BG, and JZ formulated the mathematical framework. SKS and JZ implemented the mathematical framework. SKS and PVB performed the model reduction on the apatite data to extract the low‐dimensional representation. PVB and KR interpreted the results. SKS, PVB, BG, JZ, and KR discussed the results and wrote the paper. All authors read and approved the final manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Samudrala, S.K., Balachandran, P.V., Zola, J. et al. A software framework for data dimensionality reduction: application to chemical crystallography. Integr Mater Manuf Innov 3, 205–224 (2014). https://doi.org/10.1186/s40192-014-0017-5
