Abstract

In this paper, Jeffreys priors for the step-stress partially accelerated life test (SSPALT) with Type-II adaptive progressive hybrid censoring scheme (APHCS) data are considered. Given a family of density functions satisfying certain regularity conditions, the Fisher information matrix and the Jeffreys priors are obtained. Taking the Weibull distribution as an example, the Jeffreys priors, the posterior analysis and the permissibility of the priors are discussed. The results show how the accelerated stress levels, the censored size, the hybrid censoring time and the stress change time affect the Jeffreys priors. In addition, a theorem establishing a relationship between a single observation and multiple observations for permissible priors is proved. Finally, using the Metropolis-within-Gibbs sampling algorithm, the effects of these factors are confirmed by computing frequentist coverage probabilities.

Background

Noninformative priors, which in some sense make Bayesian analysis a distinct field, have received a lot of attention in the past decades (see Berger et al. 2009, 2014; Guan et al. 2013; Jeffreys 1946). Jeffreys priors are among the most important noninformative priors because of their invariance and good frequentist properties.

Given a model \(\{p(y|{\theta }), y\in {\mathcal {Y}},{\theta }\in \Theta \}\) and a prior \(\pi ({\theta })\), for any invertible transform \(\eta =\eta ({\theta })\), the prior \(\pi ({\theta })\) is said to satisfy Jeffreys’ rule if

where \(|J(\eta )|\) is the Jacobian of the transformation from \(\eta\) to \({\theta }\).

Jeffreys (1946) proposed the well-known Jeffreys prior

$$\begin{aligned} \pi ({\theta })\propto |I({\theta })|^{1/2}, \end{aligned}$$

which satisfies Jeffreys’ rule (1), where \(|I({\theta })|\) denotes the determinant of the expected Fisher information matrix \(I({\theta })\). Another important property of the Jeffreys prior is its good frequentist behavior.

Given a prior \(\pi ({\theta })\) and a set of observations \(\mathbf y\) of size n, let \({\theta }_{n}^{\pi }({\alpha }|\mathbf y )\) denote the \({\alpha }\)th quantile of the posterior distribution of \({\theta }\), i.e.,

A prior \(\pi\) is said to be an ith order matching prior for \({\theta }\) if

In the uniparametric case, for any smooth prior \(\pi ^{*}({\theta })\) under regularity conditions, Welch and Peers (1963) show that the frequentist coverage probability is

That is, \(\pi ^{*}({\theta })\) is a first-order matching prior. However, for the Jeffreys prior \(\pi ({\theta })\),

that is, the Jeffreys prior \(\pi ({\theta })\) is a second-order matching prior. Moreover, Welch and Peers (1963) also show that the Jeffreys prior is the unique second-order matching prior under certain regularity conditions.

In survival analysis, two popular ways to reduce the cost of an experiment are accelerated life tests and censoring. As products become highly reliable with substantially long life-spans, time-consuming and expensive tests are often required to collect a sufficient amount of failure data for analysis. This problem has been addressed by the use of accelerated life tests (ALT), in which the units are subjected to higher than normal stress levels, such as pressure, voltage, vibration and temperature, to induce rapid failures. The test is called a step-stress partially accelerated life test (SSPALT) (see Dharmadhikari and Rahman 2003; Han 2015; Ismail 2014; Wu et al. 2014; Abd-Elfattah et al. 2008) if a test unit is first run at normal condition and, if it does not fail within a specified time, is then run at accelerated stress until failure occurs or the observation is censored.

In reliability experiments, another way to save time and reduce cost is censoring (see Voltermana et al. 2014; Wu et al. 2014; Balakrishnan and Kundu 2013; Park et al. 2015). If the experimental time is fixed, the scheme is called a Type-I censoring scheme; in this case the number of observed failures is a random variable. If instead the number of observed failures is fixed, the scheme is called a Type-II censoring scheme; in this case the experimental time is a random variable.
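The difference between the two schemes can be made concrete with a minimal Python sketch. The function names, the exponential lifetimes and the values of `t_max` and `m` are illustrative assumptions, not part of the paper.

```python
import random

def type1_censor(lifetimes, t_max):
    """Type-I censoring: stop at the fixed time t_max; the failure count is random."""
    observed = sorted(t for t in lifetimes if t <= t_max)
    return observed, t_max

def type2_censor(lifetimes, m):
    """Type-II censoring: stop at the m-th failure; the stopping time is random."""
    observed = sorted(lifetimes)[:m]
    return observed, observed[-1]

rng = random.Random(0)                                  # fixed seed, illustration only
lifetimes = [rng.expovariate(1.0) for _ in range(20)]   # hypothetical unit-mean lifetimes
obs1, stop1 = type1_censor(lifetimes, t_max=1.0)
obs2, stop2 = type2_censor(lifetimes, m=8)
print(len(obs1), stop1)   # the count changes with the sample, the stop time does not
print(len(obs2), stop2)   # the count is always m, the stop time changes with the sample
```

Rerunning with a different seed changes `len(obs1)` and `stop2` but never `stop1` or `len(obs2)`, which is exactly the trade-off described above.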

In this paper, we investigate the Jeffreys priors for the SSPALT with a Type-II adaptive progressive hybrid censoring scheme (APHCS); this censoring scheme is described in the next section. The main results of this paper can be summarized as follows.

- Given a model \(\{p(y|{\theta }),y\in {\mathcal {Y}},{\theta }\in \Theta \}\) under certain regularity conditions, the likelihood function for the SSPALT with Type-II APHCS data is unified in the “Likelihood function and special sub-likelihood functions” section.

- The elements of the Fisher information matrix are investigated under different censoring schemes, and the relationships among the Jeffreys priors obtained from censored and uncensored data are studied in the “Jeffreys priors for survival models” section.

- Taking the Weibull distribution as an example, the Jeffreys priors \(\pi _{1J}({\theta }),\) \(\pi _{2J}({\theta }),\) \(\pi _{3J}({\theta })\) and \(\pi _{4J}({\theta })\) based on the SSPALT with Type-II APHCS, Type-II APHCS, Type-II CS and complete samples, respectively, are discussed in the “Jeffreys priors for the Weibull distribution” section.

- The posterior analyses based on the Jeffreys priors are studied in the “Posterior analysis” section.

- The permissibility of the Jeffreys priors is analyzed, and a theorem establishing a relationship between a single observation and multiple observations for permissible priors is proved, in the “Permissible Jeffreys priors” section.

- Using the random-walk Metropolis-within-Gibbs sampling technique, a simulation study is carried out in the “Simulation studies and frequency analysis” section.

For convenience, we define the following notation:

- \(n_{u}\): the number of observed items at normal condition.

- \(n_{a}\): the number of observed items at accelerated condition.

- \(c_{u}\): the number of times items are censored at normal condition.

- \(c_{a}\): the number of times items are censored at accelerated condition.

- k: acceleration factor.

- \(\tau\): stress change time.

- \(\eta\): hybrid censoring time.

- \(I_{A}\): indicator function on a set A.

- \(R_{i}\): the number of units removed at the time of the ith failure.

- \({\mathbf y} ^{n}\): the vector \((y_{1},\dots ,y_{n})\); in particular, \({\mathbf y} ^{0}=0\).

- \({\mathbf y} ^{i,j}\): the vector \((y_{i},\dots ,y_{j})\) for \(1< i< j\le n\); it equals 0 for \(i>j\) and \(y_{i}\) for \(i=j\).

- \(y_{i:m:n}\): the ith observed failure time.

- \(A\mathop {=}\limits ^{\mathrm {def}}B\): A is defined as B.

Progressive hybrid Type-II censoring schemes

To overcome the drawbacks of the Type-I and Type-II censoring schemes, a mixture of the two, known as the hybrid censoring scheme, was originally introduced by Epstein (1954). If some surviving units are randomly removed from the experiment at each failure time, and all remaining surviving units are removed when the termination condition of the experiment is satisfied, the scheme is called a progressive censoring scheme (see Balakrishnan and Aggarwala 2000; Balakrishnan and Kundu 2013). In this paper, we consider the Type-II APHCS (see Ng et al. 2009), which is defined as follows:

1. Suppose n items are placed on a life test; the effective sample size \(m<n\) and the time \(\eta\) are fixed in advance.

2. At the time of the first failure, denoted \(Y_{1:m:n}\), \(R_{1}\) of the remaining \(n-1\) surviving units are randomly removed from the experiment.

3. The experiment continues; at the time of the kth failure, denoted \(Y_{k:m:n}\), \(R_{k}\) of the remaining \(n-k-\sum _{i=1}^{k-1}R_{i}\) surviving units are randomly removed from the experiment.

4a. If the mth failure occurs before time \(\eta\) \((i.e., Y_{m:m:n}<\eta )\), the experiment is terminated at time \(Y_{m:m:n}\) and all remaining \(n-m-\sum _{i=1}^{m-1}R_{i}\) surviving units are removed from the experiment.

4b. Otherwise, if the experimental time passes \(\eta\) before the number of observed failures reaches m \((i.e., Y_{j:m:n}<\eta <Y_{j+1:m:n}<Y_{m:m:n})\), no units are withdrawn until the time of the mth failure, at which all remaining \(n-m-\sum _{i=1}^{j}R_{i}\) surviving items are removed.

From now on, censoring scheme a and censoring scheme b denote the schemes \((1){\rightarrow }(2){\rightarrow }(3){\rightarrow }(4a)\) and \((1){\rightarrow }(2){\rightarrow }(3){\rightarrow }(4b)\), respectively.
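Steps 1 to 4b can be sketched as a small simulation. This is only an illustrative reading of the scheme: the function name `simulate_aphcs`, the use of pre-drawn latent lifetimes and the value of `eta` are assumptions made here, and the removal plan is the one quoted later in the simulation study.

```python
import random

def simulate_aphcs(lifetimes, scheme, m, eta, rng):
    """Return (failure times, realized removals) for one Type-II APHCS run.

    lifetimes: latent failure times of the n units (hypothetical input)
    scheme:    planned removals R_1, ..., R_{m-1}; R_m is fixed by the design
    """
    n = len(lifetimes)
    if m + sum(scheme[:m - 1]) > n:
        raise ValueError("scheme removes more units than are available")
    alive = sorted(lifetimes)
    failures, removed = [], []
    for i in range(1, m + 1):
        y = alive.pop(0)                  # next failure among the surviving units
        failures.append(y)
        if i == m:
            r = len(alive)                # steps 4a/4b: remove all remaining units
        elif y < eta:
            r = scheme[i - 1]             # steps 2-3: planned progressive removal
        else:
            r = 0                         # step 4b: no withdrawals once eta has passed
        for _ in range(r):
            alive.pop(rng.randrange(len(alive)))   # withdraw a random survivor
        removed.append(r)
    return failures, removed

rng = random.Random(1)
scheme = [0, 1, 1, 2, 1, 1, 2, 3, 1, 2, 2]          # R_1, ..., R_11
lifetimes = [rng.weibullvariate(1.0, 1.0) for _ in range(30)]
failures, removed = simulate_aphcs(lifetimes, scheme, m=12, eta=0.5, rng=rng)
print(failures[-1], removed)
```

In every run the realized removals satisfy `m + sum(removed) == n`; a very large `eta` reproduces scheme a, while a small `eta` pushes all withdrawals to the mth failure, as in scheme b.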

Likelihood function and special sub-likelihood functions

For simplicity, let \({\mathbf Y} ^{m}=(Y_{1},Y_{2},\dots ,Y_{m})\mathop {=}\limits ^{\mathrm {def}}(Y_{1:m:n},Y_{2:m:n},\dots ,Y_{m:m:n})\) denote a Type-II APHCS sample from a density function family \(\{f(y|{\theta }),y\in {\mathcal {Y}},{\theta }\in \Theta \}\) satisfying the Cramér–Rao regularity conditions, with distribution function \(F(y)\mathop {=}\limits ^{\mathrm {def}}F(y\mid {\theta })\) and survival function \(S(y)\mathop {=}\limits ^{\mathrm {def}}S(y\mid {\theta })\).

We use the variable transformation technique proposed by DeGroot and Goel (1979):

$$\begin{aligned} Y=\left\{ \begin{array}{ll} T, &{} T\le \tau ,\\ \tau +k^{-1}(T-\tau ), &{} T>\tau , \end{array}\right. \end{aligned}$$

where T is the lifetime of the unit under normal use condition, \(\tau\) is the stress change time and \(k>1\) is the acceleration factor. Under the SSPALT model, the density function and survival function of Y are given by, respectively,

where

Without loss of generality, we suppose that \(\tau \le \eta\) unless stated otherwise. To unify the likelihood function, we introduce the following indicator functions:

Then, following Ismail (2014), the joint density function of the SSPALT model with Type-II APHCS data is given by

where

\(R_{m}=n-m-\sum _{i=1}^{j}R_{i}\), for \(Y_{j}<\eta <Y_{j+1}\le Y_{m}\), and \(R_{m}=n-m-\sum _{i=1}^{m-1}R_{i}\), for \(Y_{m}<\eta .\) Obviously

Assume \(D_{1}\) and \(C_{1}\) are the sets of individuals for whom lifetimes are observed and censored at normal conditions, respectively. Similarly, suppose \(D_{2}\) and \(C_{2}\) are the sets of individuals for whom lifetimes are observed and censored at accelerated conditions, respectively. Then the likelihood function (3) can be rewritten as

Remark 1

Considering some special cases, we have the following results.

- If \({\mathbf R} ^{m-1}={\mathbf 0}\) and \(\tau <y_{m}\), then Eq. (4) reduces to

$$\begin{aligned} L({\theta };{\mathbf y} ^{m})=\prod _{i\in D_{k}}f_{k}(y_{i}\mid {\theta })S_{2}^{R_{m}}(y_{m}\mid {\theta }),\quad k=1,2, \end{aligned}$$(5)

that is, the likelihood function for an SSPALT model with Type-II censored data.

- Let the stress change time \(\tau\) be large enough that \(\tau >y_{m}\). Then the accelerated stress is never applied in the life test, and Eq. (4) becomes

$$\begin{aligned} L({\theta };{\mathbf y} ^{m})=\prod _{i\in D_{1}}f_{1}(y_{i}\mid {\theta })\prod _{i\in C_{1}}S_{1}^{R_{i}}(y_{i}\mid {\theta }), \end{aligned}$$(6)

that is, the likelihood function for a life test model with Type-II APHCS data.

- Let the pre-specified time \(\eta\) and the stress change time \(\tau\) be large enough that \(y_{m}<\min \{\tau ,\eta \}\). Then Eq. (4) simplifies to

$$\begin{aligned} L({\theta };{\mathbf y} ^{m})=\prod _{i=1}^{m}f_{1}(y_{i}\mid {\theta })S_{1}^{n-m}(y_{m}\mid {\theta }), \end{aligned}$$(7)

that is, the likelihood function for a life test model with Type-II censored data.
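As an illustration of the simplest special case, Eq. (7), the sketch below evaluates the Type-II censored log-likelihood. It assumes a Weibull family with scale \({\theta }\) and shape \({\beta }\), in the parameterization \(f(y)=({\beta }/{\theta })(y/{\theta })^{{\beta }-1}\exp \{-(y/{\theta })^{{\beta }}\}\); the function names are hypothetical.

```python
import math

def weibull_logpdf(y, theta, beta):
    """log f(y | theta, beta) for the assumed Weibull parameterization."""
    return (math.log(beta) - beta * math.log(theta)
            + (beta - 1) * math.log(y) - (y / theta) ** beta)

def weibull_logsf(y, theta, beta):
    """log S(y | theta, beta) = -(y / theta)^beta."""
    return -(y / theta) ** beta

def loglik_type2(ys, n, theta, beta):
    """Log of Eq. (7): m observed failures, n - m units censored at y_m."""
    ys = sorted(ys)
    m = len(ys)
    observed = sum(weibull_logpdf(y, theta, beta) for y in ys)
    censored = (n - m) * weibull_logsf(ys[-1], theta, beta)
    return observed + censored

print(loglik_type2([0.5, 1.0], n=4, theta=1.0, beta=1.0))
```

With \({\theta }={\beta }=1\) the model is the unit exponential, so the value is simply minus the total time on test, \(-(0.5+1.0+2\times 1.0)\).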

Jeffreys priors for survival models

Much work has been done on Jeffreys priors for life tests with censored data, going back to the early works of Santis et al. (2001) and Fu et al. (2012).

Let \({\mathbf Y} ^{n}\) be a set of data with size n, \(L({\theta };{\mathbf y} ^{n})\) be a likelihood function for an unknown parameter \({\theta }\), then the Jeffreys prior for \({\theta }\) is defined by

where \(\mid I({\theta })\mid\) denotes the determinant of the expected Fisher information matrix \(I({\theta })\), whose \(\{h,j\}\) element is given by

where the expectation \(E_{{\theta }}\) is taken with respect to the random variable Y.

In the life test with Type-II APHCS, the number \(R_{i}\) of units removed at the ith \((i=1,2,\dots ,m)\) failure is a random variable, so \({\mathbf R} ^{m}\) is a random vector. We suppose that \(R_{1},R_{2},\dots ,R_{m}\) are i.i.d., and

where \(R_{i}\) \((i=1,2,\dots ,m)\) may not be an integer, which is impossible in practice; in the simulation studies it is therefore converted to an integer by a suitable approximation.

For convenience, for \(k=1, 2\), we introduce the following notation:

Theorem 1

Let \({\varvec{Y}}^{n}\) be a sample of independent life data, with likelihood function \(L({\theta };{\varvec{y}}^{m})\) given in (4), then we have the following results:

(a)

$$\begin{aligned} J_{hj}=-E_{{\theta }}\left[ {\partial }_{hj}^{2} {\mathscr {L}}({\theta })\mid {\theta }\right] =-\left( H_{D}({\theta }_{h},{\theta }_{j})+H_{C}({\theta }_{h},{\theta }_{j})\right) , \end{aligned}$$

where

$$\begin{aligned} H_{D}({\theta }_{h},{\theta }_{j})& = {} {\mathscr {E}}^{D_{1}}_{\sum }({\theta })P\left\{ {\delta }_{1i}=1\mid {\theta }\right\} + {\mathscr {E}}^{D_{2}}_{\sum }({\theta })P\left\{ {\delta }_{1i}=0\mid {\theta }\right\} ,\\ H_{C}({\theta }_{h},{\theta }_{j})& = {} {\mathscr {E}}^{C_{1}}_{\sum }({\theta })P\left\{ {\delta }_{1i}=1\mid {\theta }\right\} +{\mathscr {E}}^{C_{2}}_{\sum }({\theta })P\left\{ {\delta }_{2i}^{c}=1\mid {\theta }\right\} . \end{aligned}$$

(b) If the data are i.i.d., then

$$\begin{aligned}&H_{D}({\theta }_{h},{\theta }_{j})=\sum _{k=1,2}\sum _{i=1}^{m}{\mathscr {E}}^{D_{k}}({\theta })P\left\{ {\delta }_{1i}=2-k\mid {\theta }\right\} ,\\&H_{C}({\theta }_{h},{\theta }_{j})={\mathscr {E}}^{C_{1}}({\theta })\sum _{i=1}^{m}P\left\{ {\delta }_{1i}=1\mid {\theta }\right\} + {\mathscr {E}}^{C_{2}}({\theta })\sum _{i=1}^{m}P\left\{ {\delta }_{2i}^{c}=1\mid {\theta }\right\} . \end{aligned}$$

Proof

See “Appendix 1”. \(\square\)

Theorem 2

Let \({\varvec{Y}}^{n}\) be a sample of independent life data, with likelihood function \(L({\theta };{\varvec{y}}^{n})\) given in (4), then

(a) \(J_{hj}=E\left[ n_{u}\mid {\theta }\right] \left( {\mathscr {E}}^{D_{2}}_{\sum }({\theta })+{\mathscr {E}}^{C_{2}}_{\sum }({\theta })- {\mathscr {E}}^{D_{1}}_{\sum }({\theta })-{\mathscr {E}}^{C_{1}}_{\sum }({\theta })\right) -m{\mathscr {E}}^{D_{2}}_{\sum }({\theta })-m{\mathscr {E}}^{C_{2}}_{\sum }({\theta }),\) if \(Y_{m}<\eta\).

(b) \(J_{hj}=E\left[ n_{u}\mid {\theta }\right] \left( {\mathscr {E}}^{D_{2}}_{\sum }({\theta })+{\mathscr {E}}^{C_{2}}_{\sum }({\theta })- {\mathscr {E}}^{D_{1}}_{\sum }({\theta })-{\mathscr {E}}^{C_{1}}_{\sum }({\theta })\right) -m{\mathscr {E}}^{D_{2}}_{\sum }({\theta })-(j+1){\mathscr {E}}^{C_{2}}_{\sum }({\theta }),\) if \(Y_{j}<\eta <Y_{j+1}<Y_{m}\).

Proof

See “Appendix 2”. \(\square\)

Theorem 3

Let \({\varvec{Y}}^{n}\) be a sample of i.i.d., life data, with likelihood function \(L({\theta };{\varvec{y}}^{m})\) given in (4), and \(Y_{j}<\eta <Y_{j+1}<Y_{m}\), then

Proof

If the data are i.i.d., then by Theorem 1 the expectation does not depend on the index i, so

that is

Similarly, we have

If \(\eta \le \tau <Y_{m}\), then censoring takes place only at time \(Y_{m}\). If \(Y_{m}<\tau\), then the accelerated stress is never applied. The results follow from a standard computation. \(\square\)

Based on Theorem 3, we obtain the following results.

Corollary 1

Let \({\varvec{Y}}^{n}\) be a sample of i.i.d., life data, with likelihood function \(L({\theta };{\varvec{y}}^{m})\) given in (4), \(C_{k}=\emptyset\) \((k=1,2)\), then

Corollary 2

Let \({\varvec{Y}}^{n}\) be a sample of i.i.d., life data, with likelihood function \(L({\theta };{\varvec{y}}^{n})\) given in (4), \(\dim ({\theta })=p\) and \(C_{k}=\emptyset\) \((k=1,2)\), then the Jeffreys prior is

where \(\widetilde{\pi }^{J}({\theta })\) is the Jeffreys prior for the uncensored case under normal conditions.

Jeffreys priors for the Weibull distribution

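In this section, we investigate the Jeffreys priors for the Weibull distribution. Suppose n independent units are placed on a life test, and \({\mathbf Y} ^{n}\) denotes a Type-II APHCS sample from the Weibull distribution with shape parameter \({\beta }\) and scale parameter \({\theta }\). The probability density function (pdf) of Y is given by

$$\begin{aligned} f(y\mid {\theta },{\beta })=\frac{{\beta }}{{\theta }}\left( \frac{y}{{\theta }}\right) ^{{\beta }-1}\exp \left\{ -\left( \frac{y}{{\theta }}\right) ^{{\beta }}\right\} ,\quad y>0. \end{aligned}$$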

In the setting of uncensored data without accelerated stress, Sun (1997) proved that the Jeffreys prior for the Weibull density function is

To obtain exact results, we assume in this section that the censoring scheme is b. Following Balakrishnan and Kundu (2013), the likelihood function under the SSPALT with Type-II APHCS is as follows

Let \(Y_{a}=\tau +k(Y-\tau )\), then the density function of \(Z_{a}=\left( Y_{a}/{\theta }\right) ^{{\beta }}\) can be obtained via a standard computation, that is

Since the density function of \(Z_{a}\) is complicated, for simplicity we also introduce the notation

provided that the integral exists, where \(E_{({\theta },{\beta })}\) denotes the expectation with respect to the random variable \(Z_{a}\).

If the acceleration factor \(k=1\), that is, \(Y=Y_{a}\), then \(Z=(Y/{\theta })^{{\beta }}\) is an exponential random variable with mean 1. For \(u\ge 1\), let

be the uth moment of \(\log (Z)\), we have the following relationships between \({\gamma }\) and \(\mathscr {E}\):

- \({\mathscr {E}}^{1,0}=1,\quad {\mathscr {E}}^{0,1}={\gamma }_{1}\),

- \({\mathscr {E}}^{1,1}=1+{\gamma }_{1},\quad {\mathscr {E}}^{1,2}={\gamma }_{2}+2{\gamma }_{1},\)

where \(-{\gamma }_{1}\) is Euler’s constant and \({\gamma }_{2}-{\gamma }_{1}^{2}\) is the variance of \(\log (Z)\). Further results, obtained by a standard computation, are collected in Lemma 1.
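The four identities relating \({\mathscr {E}}\) and \({\gamma }\) can be checked numerically. The sketch below assumes that, for \(k=1\), \({\mathscr {E}}^{a,b}\) reduces to \(E[Z^{a}(\log Z)^{b}]\) with \(Z\sim \exp (1)\), which is the reading consistent with the identities; the function name `moment` and the integration limits are choices made here.

```python
import math

def moment(a, b, lo=-60.0, hi=7.0, step=1e-3):
    """E[Z^a (log Z)^b] for Z ~ Exp(1).

    Uses the substitution z = exp(t), so the integrand becomes
    exp((a + 1) t - exp(t)) * t^b, and integrates with the trapezoid rule.
    """
    n = int(round((hi - lo) / step))
    total = 0.0
    for i in range(n + 1):
        t = lo + i * step
        w = 0.5 if i in (0, n) else 1.0        # trapezoid end-point weights
        total += w * math.exp((a + 1) * t - math.exp(t)) * t ** b
    return total * step

gamma1 = moment(0, 1)          # E[log Z], minus Euler's constant
gamma2 = moment(0, 2)          # E[(log Z)^2]
print(moment(1, 0), gamma1)
print(moment(1, 1) - (1 + gamma1))
print(moment(1, 2) - (gamma2 + 2 * gamma1))
```

The two differences printed last should be zero up to quadrature error, and `gamma2 - gamma1**2` should agree with \(\pi ^{2}/6\), the variance of \(\log (Z)\).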

Lemma 1

From the density function (11) and the likelihood function (13), we have

Proof

See “Appendix 3”. \(\square\)

Therefore, the expected Fisher information matrix of \(({\theta },{\beta })\) is

and the determinant of \(\Sigma\) is

where

The following result can be immediately obtained.

Theorem 4

Let \({\varvec{Y}}^{n}\) be the failure times observed from Weibull \(({\theta },{\beta })\), then the Jeffreys prior based on the SSPALT with Type-II APHCS is given by

where \(\psi _{1}({\beta })>0\) is a constraint which may not be satisfied in practice.

An obvious fact is that the Jeffreys prior depends on the accelerated stress levels, the number of observed units and the number of censored items. What happens without accelerated stress? In this case, \(n_{a}=c_{a}=0\) and \(n_{u}=m\), so

Theorem 5

Let \({\varvec{Y}}^{n}\) be the failure times observed from Weibull \(({\theta },{\beta })\), then the Jeffreys prior with the Type-II APHCS is given by

where \(\psi _{2}({\beta })=({\mathcal {M}}+1+{\mathcal {M}}{\beta }^{-1})(1+({\mathcal {M}}+1)({\gamma }_{2}+2{\gamma }_{1})) -(1-({\mathcal {M}}+1)({\gamma }_{1}+2))^{2}>0\).

If the experimenter does not remove any units at the failure times except at the mth failure, that is, under Type-II CS, then \(n_{u}=m\) and \(n_{u}{\mathcal {M}}=R_{m}\), and we have

The following results are obtained directly.

Theorem 6

Let \({\varvec{Y}}^{n}\) be the failure times observed from Weibull \(({\theta },{\beta })\), then the Jeffreys prior with the Type-II CS is given by

where \(\psi _{3}({\beta })=(m+R_{m}+R_{m}{\beta }^{-1})(m+(m+R_{m})({\gamma }_{2}+2{\gamma }_{1})) -(m(1+{\gamma }_{1})+R_{m}({\gamma }_{1}+2))^{2}>0\).

If no censoring occurs in the life test, then \(m=n\) and \(R_{m}=0\).

Corollary 3

Let \({\varvec{Y}}^{n}\) be the failure times observed from Weibull \(({\theta },{\beta })\), then the Jeffreys prior with complete sample is given by

where \(\psi _{4}={\gamma }_{2}-{\gamma }_{1}^{2}\) is the variance of \(\log (Z)\), \(Z\sim \exp (1)\).
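As a quick numerical check of this constant, the standard facts \(E[\log Z]=-0.5772\ldots\) (minus Euler's constant) and \(\mathrm {Var}(\log Z)=\pi ^{2}/6\) for \(Z\sim \exp (1)\) give the following values; the rounding to four decimals is ours.

$$\begin{aligned} {\gamma }_{1}&=E[\log Z]\approx -0.5772,\\ {\gamma }_{2}&=E[(\log Z)^{2}]={\gamma }_{1}^{2}+\frac{\pi ^{2}}{6}\approx 0.3332+1.6449=1.9781,\\ \psi _{4}&={\gamma }_{2}-{\gamma }_{1}^{2}=\frac{\pi ^{2}}{6}\approx 1.6449. \end{aligned}$$

In particular \(\psi _{4}\) does not depend on \({\beta }\) or on the sample size, unlike \(\psi _{2}({\beta })\) and \(\psi _{3}({\beta })\) above.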

Posterior analysis

Suppose \({\mathbf Y} ^{n}\) is a sample from Weibull \(({\theta },{\beta })\) under the SSPALT with Type-II APHCS. Let

- \(\sum _{i\in [1:n_{u}:j:m]}(y_{i},y_{ai},R_{i},{\beta }) \mathop {=}\limits ^{\mathrm {def}}\sum _{i=1}^{n_{u}}(1+R_{i})y_{i}^{{\beta }}+\sum _{i=n_{u}+1}^{j}(1+R_{i})y_{ai}^{{\beta }} +\sum _{i=j+1}^{m}y_{ai}^{{\beta }}+R_{m}y_{am}^{{\beta }};\)

- \(\prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},{\beta }) \mathop {=}\limits ^{\mathrm {def}}\prod _{i=1}^{n_{u}}y_{i}^{{\beta }-1}\prod _{i=n_{u}+1}^{m}y_{ai}^{{\beta }-1};\)

- \(m_{1}\mathop {=}\limits ^{\mathrm {def}}n_{u}({\mathcal {M}}+1)+ ({\mathcal {M}}c_{a}+n_{a}){\mathscr {E}}^{1,0}\);

- \(m_{2}\mathop {=}\limits ^{\mathrm {def}}m+n_{u}({\mathcal {M}}+1)({\gamma }_{2}+2{\gamma }_{1})+ ({\mathcal {M}}c_{a}+n_{a}){\mathscr {E}}^{1,2}\).

Since \(n_{u}+n_{a}=m\), it is clear that \(m_{1}>m\) and \(\sqrt{\psi _{1}({\beta })}\le m_{1}m_{2}+m_{2}(m_{1}-m){\beta }^{-1}\).

Let

If \(m\ge 2\), the observations are distinct, and there is a \(Z_{k}\) such that \(Z_{k}\le \max \{Z_{1},\dots ,Z_{m}\}\), then from Proposition 1 in Sun (1997) we have

Similarly, we can check the following result.

Theorem 7

If \(m\ge 2\) and \(Y_{1},\dots ,Y_{n_{u}},Y_{n_{u}+1},\dots ,Y_{m}\) are distinct, then the posterior distributions of \(({\theta },{\beta })\) based on the Jeffreys priors \(\pi _{1J},\pi _{2J},\pi _{3J},\pi _{4J}\) are all proper.

Under the conditions of this theorem, the following results follow from a standard computation.

Theorem 8

Given the Jeffreys prior \(\pi _{1J}\) based on the SSPALT with Type-II APHCS, then

(a) the marginal posterior density of \({\theta }\) is given by

$$\begin{aligned} h_{11}({\theta }|{{\varvec{data}}})=\frac{1}{c_{1}}\int _{0}^{\infty }\frac{{\beta }^{m}\sqrt{\psi _{1}({\beta })}}{{\theta }^{m{\beta }+1}} \prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},{\beta })\exp \left\{ -\frac{\sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},{\beta })}{{\theta }^{{\beta }}}\right\} d{\beta }; \end{aligned}$$

(b) the marginal posterior cumulative distribution function of \({\theta }\) is given by

$$\begin{aligned} H_{11}({\theta }|{{\varvec{data}}})& = {} \frac{1}{c_{1}}\int _{0}^{\infty }s^{m-1}\sqrt{\psi _{1}(s)} \prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},s)\left( \sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},s)\right) ^{-m}\\&\quad \times I_{\Gamma }\left( m,\frac{\sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},s)}{{\theta }^{s}}\right) ds; \end{aligned}$$

(c) the marginal posterior density of \({\beta }\) is given by

$$\begin{aligned} h_{12}({\beta }|{{\varvec{data}}})=\frac{1}{c_{1}}{\beta }^{m-1}\sqrt{\psi _{1}({\beta })}\Gamma (m) \prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},{\beta })\left( \sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},{\beta })\right) ^{-m}; \end{aligned}$$

(d) the marginal posterior cumulative distribution function of \({\beta }\) is given by

$$\begin{aligned} H_{12}({\beta }|{{\varvec{data}}})=\frac{1}{c_{1}}\Gamma (m)\int _{0}^{{\beta }}s^{m-1}\sqrt{\psi _{1}(s)} \prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},s)\left( \sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},s)\right) ^{-m}ds, \end{aligned}$$

where

$$\begin{aligned} c_{1}\mathop {=}\limits ^{\mathrm {def}}\Gamma (m)\int _{0}^{\infty }s^{m-1}\sqrt{\psi _{1}(s)} \prod _{i\in [1:n_{u}:m]}(y_{i},y_{ai},s)\left( \sum _{i\in [1:n_{u}:j:m]} (y_{i},y_{ai},R_{i},s)\right) ^{-m}ds \end{aligned}$$

is the normalizing constant, \(I_{\Gamma }(m,y)\mathop {=}\limits ^{\mathrm {def}}\int _{y}^{\infty }s^{m-1}\exp \{-s\}ds\) is the complementary incomplete Gamma function and \(\Gamma (\cdot )\) is the Gamma function.
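Part (c) lends itself to direct numerical evaluation, since the marginal posterior of \({\beta }\) is one-dimensional. The following sketch evaluates its kernel and normalizes it on a grid. It assumes censoring scheme b (so \(j<m\)), takes the failure times with the accelerated-stress ones already on the \(y_{a}\) scale, and treats \(\psi _{1}\) as a user-supplied function `psi`; the constant `psi` and the data in the usage lines are placeholders, not the paper's values.

```python
import math

def weighted_power_sum(ys, R, j, beta):
    """The sum over [1:n_u:j:m] defined in the text.

    ys: the m failure times (accelerated ones on the y_a scale)
    R:  removal counts (R_1, ..., R_m); j: number of failures before eta
    """
    m = len(ys)
    total = R[m - 1] * ys[m - 1] ** beta           # R_m * y_am^beta
    for i, y in enumerate(ys, start=1):
        weight = 1 + R[i - 1] if i <= j else 1     # removals occur only up to the j-th failure
        total += weight * y ** beta
    return total

def log_marginal_beta(beta, ys, R, j, psi):
    """Log kernel of h_12(beta | data): Theorem 8(c) without 1/c_1 and Gamma(m)."""
    m = len(ys)
    return ((m - 1) * math.log(beta) + 0.5 * math.log(psi(beta))
            + (beta - 1) * sum(math.log(y) for y in ys)
            - m * math.log(weighted_power_sum(ys, R, j, beta)))

def normalized_on_grid(ys, R, j, psi, grid):
    """Normalize the kernel on an equally spaced grid with the trapezoid rule."""
    logs = [log_marginal_beta(b, ys, R, j, psi) for b in grid]
    peak = max(logs)                               # subtract the peak for numerical stability
    dens = [math.exp(v - peak) for v in logs]
    step = grid[1] - grid[0]
    area = step * (sum(dens) - 0.5 * (dens[0] + dens[-1]))
    return [d / area for d in dens]

ys, R, j = [0.2, 0.5, 0.9, 1.4], [1, 0, 0, 2], 2   # hypothetical data
grid = [0.05 * i for i in range(1, 201)]
dens = normalized_on_grid(ys, R, j, lambda b: 1.0, grid)
print(grid[dens.index(max(dens))])                 # grid point with the highest density
```

Replacing the placeholder `psi` by the actual \(\psi _{1}\) only changes the `0.5 * math.log(psi(beta))` term; the grid normalization plays the role of \(c_{1}\) restricted to the grid range.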

Permissible Jeffreys priors

Permissible priors, as pointed out by Berger et al. (2014), can be viewed as objective priors that satisfy the expected logarithmic convergence condition. We first recall the following definitions; for more details, we refer to Kullback and Leibler (1951) and Berger et al. (2009).

Definition 1

(Kullback and Leibler 1951) The logarithmic divergence of a probability density \(\widetilde{p}(y)\) of the random vector \(y\in \mathcal {Y}\) from its true probability density p(y), denoted by \({\mathcal {K}}\{\widetilde{p}\mid p\}\), is

$$\begin{aligned} {\mathcal {K}}\{\widetilde{p}\mid p\}=\int _{{\mathcal {Y}}}p(y)\log \frac{p(y)}{\widetilde{p}(y)}dy, \end{aligned}$$

provided the integral (or the sum) is finite.

\({\mathcal {K}}\{\widetilde{p}\mid p\}\) provides a way to measure the discrepancy between the distributions p and \(\widetilde{p}\). Clearly \({\mathcal {K}}\{\widetilde{p}\mid p\}\) is not a norm in the sense of functional analysis, because in general \({\mathcal {K}}\{\widetilde{p}\mid p\}\ne {\mathcal {K}}\{p\mid \widetilde{p}\}\). Berger et al. (2014) pointed out that \({\mathcal {K}}\{\widetilde{p}\mid p\}\) is a divergence, not a distance; a generalized divergence, together with a discussion of its advantages, can be found in Bernardo (2005).

Definition 2

(Berger et al. 2009) Consider a parametric model \(\{p(y\mid {\theta }),y\in {\mathcal {Y}},{\theta }\in \Theta \}\), a strictly positive continuous function \(\pi ({\theta }),{\theta }\in \Theta\), and an approximating compact sequence \(\{\Theta _{i}\}_{i=1}^{\infty }\) of parameter spaces. The corresponding sequence of posteriors \(\{\pi _{i}({\theta }\mid y)\}_{i=1}^{\infty }\) is said to be expected logarithmically convergent to the formal posterior \(\pi ({\theta }\mid y)\) if

where \(p_{i}(y)=\int _{\Theta _{i}}p(y\mid {\theta })\pi _{i}({\theta })d{\theta }\).

Definition 3

(Berger et al. 2009) A strictly positive continuous function \(\pi ({\theta })\) is a permissible prior for model \(\{p(y\mid {\theta }),y\in {\mathcal {Y}},{\theta }\in \Theta \}\) if

1. for all \(y\in \mathcal {Y}\), \(\pi ({\theta }|y)\) is proper, that is, \(\int _{\Theta }p(y|{\theta })\pi ({\theta })d{\theta }<\infty\);

2. for some approximating compact sequence, the corresponding posterior sequence is expected logarithmically convergent to \(\pi ({\theta }|y)\propto p(y|{\theta })\pi ({\theta })\).

Since \({\theta }\) is a scale parameter of \(Weibull({\theta },{\beta })\), the results of Corollary 2 in Berger et al. (2009) give

where \({\varepsilon }>0\) is a constant; hence \(\pi ({\theta })={\theta }^{-1}\) is a permissible prior function for the Weibull \(({\theta },{\beta })\) probability density function.

As noted by Berger et al. (2009), a prior might be permissible for a larger sample size even if it is not permissible for a minimal sample size. Conversely, if a prior is permissible for the minimal sample size, is it also permissible for a larger sample size? The answer is yes. In fact, there is a relationship between a single observation and multiple observations concerning the permissibility of a prior. This relationship was first illustrated by Berger et al. (2009), and based on it we have the following theorem.

Theorem 9

Let \(\{p({\varvec{y}}^{n}\mid {\theta })=p({\varvec{y}}^{k}\mid {\varvec{y}}^{k+1,n},{\theta })p({\varvec{y}}^{k+1,n}\mid {\theta }),k=1,2,\dots ,n,y_{i}\in {\mathcal {Y}}_{i}\subset {\mathcal {Y}},{\theta }\in \Theta \}\) be a likelihood function family. Consider a continuous improper prior \(\pi ({\theta })\) satisfying

For any compact set \(\Theta _{0}\subset \Theta\), \(\pi _{0}({\theta })=\frac{\pi ({\theta }){\varvec{I}}_{\Theta _{0}}}{\int _{\Theta _{0}}\pi ({\theta })d{\theta }}\), then

where

Proof

See “Appendix 4”. \(\square\)

Theorem 9 guarantees that the Jeffreys prior \(\pi ({\theta })={\theta }^{-1}\) is a permissible prior function for multiple observations. The theorem also shows that the expected logarithmic discrepancy is monotonically non-increasing in the sample size; the following corollary quantifies exactly how much it decreases.

Corollary 4

Let \({\mathcal {Y}}\) be a sample space, \(\{p({\varvec{y}}^{n}\mid {\theta })=p({\varvec{y}}^{k}\mid {\varvec{y}}^{k+1,n},{\theta })p({\varvec{y}}^{k+1,n}\mid {\theta }),k=1,2,\dots ,n,y_{i}\in {\mathcal {Y}}_{i}\subset {\mathcal {Y}},{\theta }\in \Theta \}\) be a likelihood function family. Consider a continuous improper prior \(\pi ({\theta })\) satisfying

For any compact set \(\Theta _{0}\subset \Theta\), \(\pi _{0}({\theta })=\frac{\pi ({\theta }){\varvec{I}}_{\Theta _{0}}}{\int _{\Theta _{0}}\pi ({\theta })d{\theta }}\), then

where \(\pi _{0}({\theta }, {\varvec{y}}^{k,n}\mid {\varvec{y}}^{k-1}),m_{0}( {\varvec{y}}^{k-1}\mid {\varvec{y}}^{k,n})\) are the same as in Theorem 9.

Simulation studies and frequency analysis

Coverage probabilities are used to assess the quality of a prior. The idea, as suggested by Ye (1993), is that if prior \(\pi _{1}\) generally yields a smaller difference between the posterior probabilities of Bayesian credible sets and the frequentist probabilities of the corresponding confidence sets than prior \(\pi _{2}\) does, then \(\pi _{1}\) is preferable.
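This comparison can be sketched in code. The sketch assumes the one-sided reading of coverage, namely the frequency with which the true parameter value lies below the posterior \({\alpha }\)-quantile over replicated data sets, and it approximates each quantile from posterior draws; the function names and the toy draws are hypothetical.

```python
import math

def empirical_quantile(draws, alpha):
    """alpha-quantile of a list of posterior draws (inverse empirical CDF)."""
    s = sorted(draws)
    idx = min(len(s) - 1, max(0, math.ceil(alpha * len(s)) - 1))
    return s[idx]

def coverage(true_value, posterior_draws_per_dataset, alpha):
    """Fraction of replicated data sets whose posterior alpha-quantile is >= true_value."""
    hits = sum(true_value <= empirical_quantile(d, alpha)
               for d in posterior_draws_per_dataset)
    return hits / len(posterior_draws_per_dataset)

# Toy input: posterior draws for two replicated data sets.
replicates = [list(range(1, 11)), list(range(11, 21))]
print(coverage(7, replicates, alpha=0.5))
```

A prior whose estimated coverage stays close to the nominal \({\alpha }\) across parameter values and censoring settings is the preferable one in the sense described above.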

Let \(Y\sim Weibull({\theta },{\beta })\). Given the Jeffreys prior \(\pi _{1J}\) based on the SSPALT with Type-II APHCS data, the joint posterior density function is very complicated. The conditional distributions of \({\theta }\) and \({\beta }\) do not reduce to well-known distributions, so sampling directly by standard methods may be difficult. We therefore resort to the hybrid algorithm introduced by Tierney (1994), which combines Metropolis sampling with the Gibbs sampling scheme using a normal proposal distribution. Solimana et al. (2012) referred to this algorithm as the hybrid MCMC method.

Notice that the full posterior conditional distributions of \({\theta }\) and \({\beta }\) are given by, respectively

where \(\sum\), \(\prod\), \(\psi _{1}\) can be found in “Jeffreys priors for the Weibull distribution” section, \(y_{i}(i=1,\ldots ,m)\) are Type-II progressive censoring samples generated by using the algorithm presented in Balakrishnan and Sandhu (1995) and Balakrishnan and Aggarwala (2000).

Given the current value \({\theta }^{(j-1)}\), to sample \({\theta }\) from (16), a proposal value \({\theta }^{'}\) is drawn from \(N({\theta }^{(j-1)},K_{{\theta }}V_{{\theta }})\), and \({\theta }^{(j-1)}\) is then replaced by \({\theta }^{'}\) with a certain acceptance probability, where \(V_{{\theta }}\) and \(K_{{\theta }}\) are the variance-covariance matrix and the scaling factor that tunes the rate of rejected or accepted samples. Similarly, given the current value \({\beta }^{(j-1)}\), to sample \({\beta }\) from (17), a proposal value \({\beta }^{'}\) is drawn from \(N({\beta }^{(j-1)},K_{{\beta }}V_{{\beta }})\), and \({\beta }^{(j-1)}\) is then replaced by \({\beta }^{'}\) with a certain acceptance probability. Robert et al. (1996) suggested that a rejection rate in [0.15, 0.5] may yield good results. Specifically, the algorithm can be described as follows:

1. Start from the current values \({\theta }^{(j-1)}\) and \({\beta }^{(j-1)}\).

2. Using the random-walk Metropolis algorithm, generate \({\theta }^{(j)}\) from \(\pi _{1}({\theta }|{\beta }^{(j-1)},{\mathbf{data} })\) with normal proposal distribution \(N({\theta }^{(j-1)},K_{{\theta }}V_{{\theta }})\).

   - 2a. Simulate a candidate value \({\theta }^{'}\) from the proposal density \(N({\theta }^{(j-1)},K_{{\theta }}V_{{\theta }})\).

   - 2b. Compute the ratio \(r_{1}=\frac{\pi _{1}({\theta }^{'}|{\beta }^{(j-1)},{\mathbf{data} })}{\pi _{1}({\theta }^{(j-1)}|{\beta }^{(j-1)},{\mathbf{data} })}\).

   - 2c. Compute the acceptance probability \(p_{1}=\min \{1,r_{1}\}\).

   - 2d. Set \({\theta }^{(j)}={\theta }^{'}\) with probability \(p_{1}\); otherwise set \({\theta }^{(j)}={\theta }^{(j-1)}\).

3. Using the random-walk Metropolis algorithm, generate \({\beta }^{(j)}\) from \(\pi _{2}({\beta }|{\theta }^{(j)},{\mathbf{data} })\) with normal proposal distribution \(N({\beta }^{(j-1)},K_{{\beta }}V_{{\beta }})\).

   - 3a. Simulate a candidate value \({\beta }^{'}\) from the proposal density \(N({\beta }^{(j-1)},K_{{\beta }}V_{{\beta }})\).

   - 3b. Compute the ratio \(r_{2}=\frac{\pi _{2}({\beta }^{'}|{\theta }^{(j)},{\mathbf{data} })}{\pi _{2}({\beta }^{(j-1)}|{\theta }^{(j)},{\mathbf{data} })}\).

   - 3c. Compute the acceptance probability \(p_{2}=\min \{1,r_{2}\}\).

   - 3d. Set \({\beta }^{(j)}={\beta }^{'}\) with probability \(p_{2}\); otherwise set \({\beta }^{(j)}={\beta }^{(j-1)}\).

4. Repeat steps 1 to 3 N times.
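The steps above can be sketched in code. The following Python sketch is only an illustration under stated assumptions, not the implementation used in this paper: the function names, the scalar proposal standard deviations `sd_theta` and `sd_beta` (standing in for \(K_{{\theta }}V_{{\theta }}\) and \(K_{{\beta }}V_{{\beta }}\)), and the toy target `toy_log_post` are hypothetical. The toy target is the posterior of a complete (uncensored) Weibull sample with scale \({\theta }\), shape \({\beta }\) and prior proportional to \(1/({\theta }{\beta })\); it does not reproduce the conditional posteriors (16) and (17).

```python
import math
import random


def metropolis_within_gibbs(log_post, theta0, beta0, sd_theta, sd_beta, n_iter, seed=0):
    """Update theta and beta in turn with random-walk Metropolis steps.

    log_post(theta, beta) is the log joint posterior up to a constant. With the
    other parameter held fixed, the ratio of full conditionals in steps 2b and
    3b equals the ratio of joint posteriors, so one function serves both steps.
    """
    rng = random.Random(seed)
    theta, beta = theta0, beta0
    current = log_post(theta, beta)
    chain, rejected = [], [0, 0]
    for _ in range(n_iter):
        # Steps 2a-2d: propose theta' ~ N(theta, sd_theta^2), accept w.p. min(1, r1).
        cand = rng.gauss(theta, sd_theta)
        cand_lp = log_post(cand, beta)
        if math.log(1.0 - rng.random()) < cand_lp - current:  # uniform on (0, 1]
            theta, current = cand, cand_lp
        else:
            rejected[0] += 1
        # Steps 3a-3d: propose beta' ~ N(beta, sd_beta^2), accept w.p. min(1, r2).
        cand = rng.gauss(beta, sd_beta)
        cand_lp = log_post(theta, cand)
        if math.log(1.0 - rng.random()) < cand_lp - current:
            beta, current = cand, cand_lp
        else:
            rejected[1] += 1
        chain.append((theta, beta))
    return chain, [r / n_iter for r in rejected]


def toy_log_post(data):
    """Hypothetical stand-in target (assumption): complete Weibull sample,
    scale theta, shape beta, prior proportional to 1 / (theta * beta)."""
    n = len(data)
    sum_log = sum(math.log(y) for y in data)

    def log_post(theta, beta):
        if theta <= 0 or beta <= 0:
            return -math.inf  # outside the parameter space: always rejected
        try:
            s = sum((y / theta) ** beta for y in data)
        except OverflowError:
            return -math.inf
        return (n * math.log(beta) - n * beta * math.log(theta)
                + (beta - 1) * sum_log - s - math.log(theta) - math.log(beta))

    return log_post


data_rng = random.Random(1)
data = [data_rng.weibullvariate(1.0, 1.0) for _ in range(30)]  # scale 1, shape 1
chain, rates = metropolis_within_gibbs(toy_log_post(data), 1.0, 1.0, 0.4, 0.3, 5000, seed=2)
kept = chain[500:]  # discard a burn-in period
print("rejection rates (theta, beta):", rates)
print("posterior mean of theta:", sum(t for t, _ in kept) / len(kept))
```

The proposal standard deviations play the role of the scaling factors: increasing them raises the rejection rate, and decreasing them lowers it.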

Let the sample size be \(n=30\), the censoring scheme \(R=(0,1,1,2,1,1,2,3,1,2,2,2)\), the stress change time the 7th unit failure time, and the true parameter values \(({\theta },{\beta })=(1,1)\). We run the algorithm to generate a Markov chain with 50,000 observations and discard the first 500 values as the burn-in period. Figures 1 and 2 show the outputs of the Markov chain under the normal use condition and the accelerated condition, respectively. The chains clearly converge well. The rejection rates for \({\theta }\) and \({\beta }\) are about 0.19 and 0.22 in Fig. 1, and 0.37 and 0.41 in Fig. 2, respectively.
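As a building block for such a simulation, a progressively Type-II censored sample can be generated with the uniform-transform algorithm of Balakrishnan and Sandhu (1995). The Python sketch below is illustrative only. It assumes a Weibull distribution with scale \({\theta }\) and shape \({\beta }\) (a hypothetical parametrisation), the function name is invented for this example, and it produces a plain progressive Type-II sample under one stress level. The adaptive hybrid modification and the stress change are not included.

```python
import math
import random


def progressive_type2_weibull(scheme, theta, beta, rng):
    """Progressively Type-II censored Weibull sample (uniform-transform method).

    scheme = (R_1, ..., R_m) gives the number of units withdrawn at each failure.
    Assumed parametrisation: F(y) = 1 - exp(-(y / theta) ** beta).
    """
    m = len(scheme)
    # V_i = W_i ** (1 / (i + R_m + ... + R_{m-i+1})), with W_i uniform on (0, 1].
    v = []
    tail = 0
    for i in range(1, m + 1):
        tail += scheme[m - i]  # running sum R_m + ... + R_{m-i+1}
        w = 1.0 - rng.random()
        v.append(w ** (1.0 / (i + tail)))
    # U_i = 1 - V_m * V_{m-1} * ... * V_{m-i+1}; then Y_i = F^{-1}(U_i).
    sample = []
    prod = 1.0
    for i in range(1, m + 1):
        prod *= v[m - i]  # prod equals 1 - U_i
        sample.append(theta * (-math.log(prod)) ** (1.0 / beta))
    return sample


R = (0, 1, 1, 2, 1, 1, 2, 3, 1, 2, 2, 2)  # scheme from the text: m = 12, n = 30
y = progressive_type2_weibull(R, theta=1.0, beta=1.0, rng=random.Random(0))
print(len(R) + sum(R), [round(t, 3) for t in y])
```

The scheme has \(m=12\) observed failures and removes 18 units in total, which matches the sample size \(n=30\).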

Let \({\theta }^{\pi }({\alpha }|{\mathbf{data} })\) be the posterior \({\alpha }\)-quantile of \({\theta }\) given \({\mathbf{data} }\), that is, \(F({\theta }^{\pi }({\alpha }|{\mathbf{data} })|{\mathbf{data} })={\alpha }\), where \(F(\cdot |{\mathbf{data} })\) is the marginal posterior distribution function of \({\theta }\). The frequentist coverage probability of the corresponding one-sided credible interval for \({\theta }\) is given by

$$\begin{aligned} Q^{\pi }({\alpha };{\theta })=P_{({\theta },{\beta })}\left( {\theta }<{\theta }^{\pi }({\alpha }|{\mathbf{data} })\right) . \end{aligned}$$

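Similarly, let \({\beta }^{\pi }({\alpha }|{\mathbf{data} })\) be the posterior \({\alpha }\)-quantile of \({\beta }\) given \({\mathbf{data} }\). The frequentist coverage probability of the corresponding one-sided credible interval for \({\beta }\) is given by

$$\begin{aligned} Q^{\pi }({\alpha };{\beta })=P_{({\theta },{\beta })}\left( {\beta }<{\beta }^{\pi }({\alpha }|{\mathbf{data} })\right) . \end{aligned}$$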

To sum up, taking \(Q^{\pi }({\alpha };{\theta })\) as an example, the frequentist coverage probabilities are computed by the following procedure.

1. Given the true values of \({\theta }\) and \({\beta }\), generate a Type-II APHCS sample \({\mathbf y }^{n}\) from the distribution \(Weibull({\theta },{\beta })\).

2. For each generated sample \({\mathbf y} ^{n}\), estimate the posterior \({\alpha }\)-quantile of \({\theta }\), \({\theta }^{\pi }({\alpha }|{\mathbf y }^{n})\), by the above hybrid MCMC method.

3. Repeat steps 1 and 2 N times. The frequentist coverage probability \(Q^{\pi }({\alpha };{\theta })\) can then be estimated by the relative frequency

$$\begin{aligned} \frac{\sharp \{{\theta }<{\theta }^{\pi }({\alpha }|{\mathbf y} ^{n})\}}{N}, \end{aligned}$$

where \(\sharp \{{\theta }<{\theta }^{\pi }({\alpha }|{\mathbf y} ^{n})\}\) denotes the number of replications in which the true value \({\theta }\) is less than the random variable \({\theta }^{\pi }({\alpha }|{\mathbf y} ^{n})\).
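The three steps of this procedure can be sketched as a short Monte Carlo loop. The Python sketch below is a simplified illustration under loudly stated assumptions: it replaces the Type-II APHCS Weibull model by complete exponential samples with mean \({\theta }\) and prior \(1/{\theta }\), and it replaces the hybrid MCMC step by direct posterior draws. All function names are hypothetical. In this stand-in model the one-sided coverage equals \({\alpha }\) exactly, which gives a convenient check of the loop.

```python
import random


def coverage_probability(simulate, posterior_quantile, theta_true, alpha, n_rep, rng):
    """Relative frequency of {theta_true < posterior alpha-quantile} over n_rep samples."""
    hits = 0
    for _ in range(n_rep):
        y = simulate(theta_true, rng)          # step 1: generate a sample
        q = posterior_quantile(y, alpha, rng)  # step 2: posterior alpha-quantile
        if theta_true < q:                     # step 3: count the event
            hits += 1
    return hits / n_rep


def simulate_exponential(theta, rng, n=30):
    """Stand-in data model (assumption): complete exponential sample with mean theta."""
    return [rng.expovariate(1.0 / theta) for _ in range(n)]


def quantile_by_sampling(y, alpha, rng, n_draws=1000):
    """Empirical posterior quantile of theta from direct posterior draws.

    Under the prior 1 / theta, the rate 1 / theta given y follows a gamma
    distribution with shape n and rate sum(y); gammavariate takes a scale.
    """
    n, total = len(y), sum(y)
    draws = sorted(1.0 / rng.gammavariate(n, 1.0 / total) for _ in range(n_draws))
    return draws[int(alpha * n_draws)]


rng = random.Random(0)
cov_low = coverage_probability(simulate_exponential, quantile_by_sampling, 1.0, 0.05, 400, rng)
cov_high = coverage_probability(simulate_exponential, quantile_by_sampling, 1.0, 0.95, 400, rng)
print("estimated coverage at alpha = 0.05 and 0.95:", cov_low, cov_high)
```

For the model of this paper, `simulate` would generate a Type-II APHCS sample and `posterior_quantile` would take the empirical quantile of the MCMC output after burn-in.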

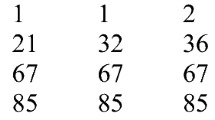

Tables 1, 2 and 3 are obtained with the above algorithm. Several points are clear from the numerical results.

- As expected, Table 1 shows that all frequentist coverage probabilities improve as the sample size increases and the censored sample size decreases, and that they are sensitive to the stress levels k.

- The results reported in Table 2 show that the frequentist coverage probabilities decrease as the proportion of censored observations increases.

- However, Table 3 shows that the frequentist coverage probabilities are not very sensitive to the true parameter values \(({\theta },{\beta })\).

Concluding remarks

The Jeffreys prior, one of the most important noninformative priors, is discussed under the SSPALT setting with Type-II adaptive progressive hybrid censored data. The likelihood function, which covers the two cases of adaptive progressive hybrid censoring data, is unified. For densities \(f(y|{\theta })\) in the family \(\{f(y|{\theta }),y\in {\mathcal {Y}},{\theta }\in \Theta \}\), the Jeffreys priors for the survival models are obtained.

Taking the Weibull distribution as an example, the Jeffreys priors based on the SSPALT with Type-II APHCS data are discussed, and other special cases are also obtained. In addition, the posterior analyses based on these priors are studied. Using the Kullback–Leibler divergence as a measure of the distance between two distributions, the permissibility of the priors is presented.

First, given a prior, a future observation can be predicted from the observed data by using the Bayesian predictive density function. However, few references study prediction based on noninformative priors. Work in this direction is in progress, and we hope to report the findings in future work. Second, an alternative generalisation of the Kullback–Leibler divergence is the \({\alpha }\)-divergence suggested by Amari (1985). It would be of great interest to establish the permissibility of a prior based on the \({\alpha }\)-divergence, and more work is needed in this direction.

References

Abd-Elfattah AM, Hassan AS, Nassr SG (2008) Estimation in step-stress partially accelerated life tests for the Burr type XII distribution using type I censoring. Stat Methodol 5:502–514

Amari S (1985) Differential-geometrical methods in statistics, vol 28. Lecture Notes in Statistics. Springer, New York

Balakrishnan N, Aggarwala R (2000) Progressive censoring theory, methods, and application, statistics for industry and technology. Birkhäuser, Boston

Balakrishnan N, Sandhu RA (1995) A simple simulational algorithm for generating progressive type-II censored samples. Am Stat 49:229–230

Balakrishnan N, Kundu D (2013) Hybrid censoring: models, inferential results and applications. Comput Stat Data Anal 57:166–209

Berger JO, Bernardo JM, Sun DC (2009) The formal definition of reference priors. Ann Stat 37:905–938

Berger JO, Bernardo JM, Sun DC (2014) Overall objective priors. Bayesian Analysis TBA 1-36

Bernardo JM (2005) Handbook of statistics 25 (DK Dey and CR Rao eds). Elsevier, Amsterdam

DeGroot MH, Goel PK (1979) Bayesian and optimal design in partially accelerated life testing. Naval Res Logist 16:223–235

Dharmadhikari AD, Rahman MM (2003) A model for step-stress accelerated life testing. Naval Res Logist 50:841–868

Epstein B (1954) Truncated life-test in exponential case. Ann Math Stat 25:555–564

Fu JY, Xu AC, Tang YC (2012) Objective Bayesian analysis of Pareto distribution under progressive type-II censoring. Stat Probab Lett 82:1829–1836

Guan Q, Tang YC, Xu AC (2013) Objective Bayesian analysis for bivariate Marshall–Olkin exponential distribution. Comput Stat Data Anal 64:299–313

Han D (2015) Estimation in step-stress life tests with complementary risks from the exponentiated exponential distribution under time constraint and its applications to UAV data. Stat Methodol 23:103–122

Ismail AA (2014) Likelihood inference for a step-stress partially accelerated life test model with type-I progressively hybrid censored data from Weibull distribution. J Stat Comput Simul 84:2486–2494

Jeffreys H (1946) An invariant form for the prior probability in estimation problems. Proc R Soc Lond Ser A Math Phys Sci 186:453–461

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22:79–86

Ng HKT, Kundu D, Chan PS (2009) Statistical analysis of exponential lifetimes under an adaptive hybrid type-II progressive censoring scheme. Naval Res Logist 56:687–698

Park S, Ng HKT, Chan PS (2015) On the Fisher information and design of a flexible progressive censored experiment. Stat Probab Lett 97:142–149

Robert GO, Gelman A, Gilks WR (1996) Weak convergence and optimal scaling of random walk Metropolis algorithms. Ann Appl Probab 7:110–120

Santis FD, Mortera J, Nardi A (2001) Jeffreys priors for survival models with censored data. J Stat Plan Inference 99:193–209

Soliman AA, Abd-Ellah AH, Abou-Elheggag NA, Ahmed EA (2012) Modified Weibull model: a Bayes study using MCMC approach based on progressive censoring data. Reliab Eng Syst Saf 100:48–57

Sun DC (1997) A note on noninformative priors for Weibull distributions. J Stat Plan Inference 61:319–338

Tierney L (1994) Markov chains for exploring posterior distributions (with discussion). Ann Stat 22:1701–1762

Volterman W, Balakrishnan N, Cramer E (2014) Exact meta-analysis of several independent progressively type-II censored data. Appl Math Model 38:949–960

Welch BL, Peers HW (1963) On formulae for confidence points based on integrals of weighted likelihoods. Biometrika 76:604–608

Wu M, Shi YM, Sun YD (2014) Inference for accelerated competing failure models from Weibull distribution under type-I progressive hybrid censoring. J Comput Appl Math 263:423–431

Ye KY (1993) Reference prior when the stopping rule depends on the parameter of interest. J Am Stat Assoc 88:360–363

Authors’ contributions

YS conceived the initial manuscript, briefly introduced the SSPALT and Type-II APHCS, and investigated the Jeffreys priors of the survival models. He was also involved in designing the experiment. FZ constructed the statistical model, unified the likelihood function of the models and discussed the permissibility of the Jeffreys priors. He also implemented the experiment in R and analysed the experimental outcomes. All authors read and approved the final manuscript.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 71571144, 71171164, 71401134, and 70471057), and Natural Science Basic Research Program of Shaanxi Province (2015JM1003).

Competing interests

The authors declare that they have no competing interests.

Appendices

Appendix 1: Proof of Theorem 1

Proof

From Eq. (4), we have

Then

Notice that

Similarly,

In the above equations we used the linearity of the mathematical expectation. Then, we get

If the data are i.i.d., the expectation does not depend on the index i, and we arrive at

Similarly,

\(\square\)

Appendix 2: Proof of Theorem 2

Proof

Notice that \(n_{u}+n_{a}=m\), and

If \(Y_{m}<\eta\), then,

If \(Y_{j}<\eta <Y_{j+1}<Y_{m}\), then \({\delta }_{2i}^{c}={\delta }_{2i}^{c\eta }\), and \(\sum _{i=1}^{m}{\delta }_{2i}^{c\eta }=j-n_{u}-1+1+1=j-n_{u}+1\), we have

\(\square\)

Appendix 3: Proof of Lemma 1

Proof

Let \(y_{ai}=\tau +k(y_{i}-\tau )\) \((i=1,\dots ,m)\). Substituting the density function (11) into Eq. (13) and taking logarithms on both sides of the equation, we get

Taking the partial derivatives with respect to \({\theta },{\beta }\), respectively, yields

Since the data are i.i.d. and \(E(R_{1})={\mathcal {M}}\), combining (14) and (15) implies

Similarly, we have

\(\square\)

Appendix 4: Proof of Theorem 9

Proof

Observe that \(f(y\mid {\theta })\) and \(\pi ({\theta })\) are continuous on \({\mathcal {Y}}\) and \(\Theta\), respectively, so the order of integration can be interchanged by the Fubini theorem. We now check the first inequality.

where

where

In the above equalities we used \(\idotsint \limits _{\prod _{i=1}^{n-1}{\mathcal {Y}}_{i}} m_{0}({\mathbf y} ^{n-1}\mid y_{n}) d{\mathbf y} ^{n-1}=1\).

Notice that

Using the concavity of \(\log (t)\) on \({\mathbb {R}}^{+}\), we obtain

Therefore, (18)–(21) imply that

which is the first inequality.

Similarly, for \(k=2,3,\dots ,n\), we get

\(\square\)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhang, F., Shi, Y. Permissible noninformative priors for the accelerated life test model with censored data. SpringerPlus 5, 366 (2016). https://doi.org/10.1186/s40064-016-2004-0

DOI: https://doi.org/10.1186/s40064-016-2004-0

Keywords

- Jeffreys priors

- Permissible priors

- Partially accelerated life test

- Progressive Type-II censoring

- Metropolis-within-Gibbs sampling