Abstract

Background

Hospital-acquired pneumonia (HAP) and its specific subset, non-ventilator hospital-acquired pneumonia (nvHAP), are significant contributors to patient morbidity and mortality. Automated surveillance systems for these healthcare-associated infections have emerged as a potentially beneficial replacement for manual surveillance. This systematic review aims to synthesise the existing literature on the characteristics and performance of automated nvHAP and HAP surveillance systems.

Methods

We conducted a systematic search of publications describing automated surveillance of nvHAP and HAP. Our inclusion criteria covered articles that described fully and semi-automated systems without limitations on patient demographics or healthcare settings. We detailed the algorithms in each study and reported the performance characteristics of automated systems that were validated against specific reference methods. Two published metrics were employed to assess the quality of the included studies.

Results

Our review identified 12 eligible studies that collectively describe 24 distinct candidate definitions, 23 for fully automated systems and one for a semi-automated system. These systems were employed exclusively in high-income countries and the majority were published after 2018. The algorithms commonly included radiology, leukocyte counts, temperature, antibiotic administration, and microbiology results. The performance of validated surveillance systems varied, with sensitivities for fully automated systems ranging from 40 to 99%, specificities from 58 to 98%, and positive predictive values from 8 to 71%. Validation was often carried out on small, pre-selected patient populations.

Conclusions

Recent years have seen a steep increase in publications on automated surveillance systems for nvHAP and HAP, which increase efficiency and reduce manual workload. However, the performance of fully automated surveillance remains moderate when compared to manual surveillance. The considerable heterogeneity in candidate surveillance definitions and reference standards, as well as validation on small or pre-selected samples, limits the generalisability of the findings. Further research involving larger and broader patient populations is required to better understand the performance and applicability of automated nvHAP surveillance.

Background

Non-ventilator-associated hospital-acquired pneumonia (nvHAP) is a specific subset of hospital-acquired pneumonia (HAP) that affects patients without an invasive respiratory assist device, thereby differentiating it from ventilator-associated pneumonia (VAP) [1]. Although nvHAP is one of the most common healthcare-associated infections (HAIs) [2,3,4], has considerable implications for patient morbidity, mortality, and healthcare expenditure [5], and contributes notably to increased antibiotic use, it has long been overlooked by the infection prevention and control (IPC) community [6, 7]. Recently, the importance and unique risk factors of nvHAP have led to its inclusion in internationally recognised IPC guidelines [8], and research into interventions to mitigate nvHAP has been gaining momentum over the past five years [9,10,11,12].

Surveillance is widely recognised as a fundamental aspect of infection prevention and control (IPC), instrumental in detecting outbreaks, shaping preventative initiatives, and assessing the efficacy of interventions [1]. Traditionally, HAI surveillance constitutes a labour-intensive exercise, heavily dependent on manual data collection and the nuanced clinical insights of IPC specialists. The emergence of fully and semi-automated surveillance systems holds the promise of a significant turning point in IPC [13]. These novel systems aim to streamline data acquisition, improve analytical precision, and expedite intervention, thereby maximising the utilisation of human and financial resources. However, the successful deployment of these automated systems often depends on the availability of the required data in a structured, electronic form. Complicating this is the presence of multiple, sometimes discordant, IT solutions within healthcare settings. Despite these challenges, the transformative potential of automated systems to reshape traditional surveillance methodologies highlights the increasing role of information technology and data science in contemporary healthcare environments [14]. The PRAISE network, a collaboration involving 30 experts from 10 European countries, provides a comprehensive roadmap for transitioning from conventional manual surveillance to automated systems [15]. The guidance underscores the importance of uniform data, stakeholder engagement, and methodological re-evaluation as crucial steps for successful large-scale implementation to elevate the quality of care.

While automated surveillance offers considerable advantages, there is a noticeable gap in both the scholarly and practical discourse about its applicability to nvHAP. Given the condition's widespread prevalence and its implications for the health of virtually all hospitalised patients, it is imperative to assess the performance of automated surveillance systems in detecting nvHAP as a foundation for preventative measures. Additionally, the unique complexities and challenges associated with nvHAP, including surveillance definitions that typically rely on unstructured data formats for signs and symptoms, may necessitate tailored solutions distinct from those for other HAIs. A 2019 systematic review of electronically aided surveillance systems for HAIs in general also covered performance metrics for lower respiratory tract infections but did not distinguish between nvHAP and VAP [16]. In light of the rapidly evolving literature on automated nvHAP surveillance, our systematic review aims to fill this knowledge gap. We focus on delineating the current state of fully automated and semi-automated surveillance systems specific to HAP, with a special focus on nvHAP.

Methods

We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) recommendations when conducting this systematic review [17]. The study was registered at PROSPERO (Ref CRD42023444958). We searched Medline/Ovid, EMBASE, and the Cochrane Library for studies published before May 24th, 2023, without language restriction. The detailed search strategy was developed in collaboration with a health sciences librarian and is included in Additional file 1. Duplicates were excluded, and additional articles were identified by searching the reference lists of articles undergoing full-text review.

We included studies that detailed automated surveillance methodologies for non-ventilator hospital-acquired pneumonia (nvHAP), with automated surveillance understood as defined by the PRAISE Roadmap [15]. This encompassed both fully and semi-automated detection approaches, utilising data sources from electronic medical records, laboratory data and administrative claims data. Our review included not only articles specifically targeting nvHAP surveillance but also those focused on HAP overall, provided they did not exclusively concentrate on ventilator-associated pneumonia. We imposed no limitations on patient demographics or healthcare settings, embracing both hospital environments and other care facilities such as nursing homes or rehabilitation centres. The included articles were categorised based on whether they solely described the automated surveillance methodology or also provided validation of the system. Works limited to abstracts or posters were excluded from the review.

Two independent reviewers (AW and HS) conducted title and abstract screening. Any paper selected by either reviewer advanced to a full-text review stage. Subsequently, full-text evaluations were independently carried out by the same two reviewers. Discrepancies concerning article inclusion were deliberated between the two reviewers. In cases where consensus could not be reached, a third reviewer (AS) was consulted for final adjudication.

Utilising a standardised template, we extracted the following variables: year of publication, country, year and setting of surveillance, patient population, number of patients monitored, and the type of pneumonia (either nvHAP or HAP). We also catalogued the type of surveillance (fully or semi-automated), components incorporated into the selection algorithm, and incidence or incidence rates of nvHAP or HAP as determined by the automated surveillance. Additionally, the type of publication—whether it solely described the method or also included validation—was noted. For studies that validated their surveillance system, we further documented the type of reference standard used, and various performance metrics, including sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and workload reduction.

To evaluate the quality of the study design across all included papers, we employed the quality assessment instrument outlined by Streefkerk et al., utilising five of the six included quality indicators [16]. In the case of studies that validated automated surveillance methods against a reference standard, we used a modified version of the QUADAS-2 tool that was designed for assessing the quality of diagnostic accuracy studies, applying nine of its eleven ‘signalling questions’ [18].

Data were synthesised and presented in tables and within the full text. Given the considerable variability in automated surveillance methodologies, reference standards, and patient populations among the studies, we opted not to conduct a meta-analysis. Ethical approval was deemed unnecessary for this literature review.

Results

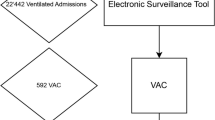

After eliminating duplicates, our database and manual reference searches yielded 380 articles. Following the screening of titles and abstracts, 328 articles were excluded. The full-text review of the remaining 52 articles left 13 (3.4% of the initial total) that satisfied our eligibility criteria and were included in the final review (Fig. 1). It is noteworthy that two of these articles described the same automated surveillance system and patient cohort, but each from a different perspective: one from an infection prevention and control (IPC) perspective [19], and the other from an information technology (IT) perspective [20]. These articles are jointly cited in subsequent sections [19, 20], bringing the count to 12 unique studies for our review.

Of the studies reviewed, 11 featured fully automated surveillance systems, while one showcased a semi-automated approach [21] (Table 1). Geographically, all the articles originated from high-income countries: eight from the United States, two from Switzerland, and one each from Australia and France. All articles were published in 2005 or later, with nine (75%) appearing in or after 2018. Six studies specifically focused on non-ventilator hospital-acquired pneumonia (nvHAP), while the remaining six examined hospital-acquired pneumonia (HAP) more broadly.

Table 2 delineates 24 unique candidate definitions for surveillance systems, 23 fully automated and one semi-automated, with each publication contributing between one and ten definitions. Four articles examined iterations of fully automated nvHAP surveillance systems that incorporate impaired oxygenation in various combinations with chest radiology, fever, leukocyte count, microbiology, and antibiotic use [22,23,24,25]. Chest radiology was included as an indicator in seven systems, leucocytosis or leukopenia in eight, and fever in nine. Two surveillance systems integrated radiology with fever or with leucocytosis or leukopenia, aligning with the ECDC or (when coupled with altered mental status) the CDC's pneumonia definition criteria [1, 26]. Microbiology results were incorporated in nine systems, and antibiotic administration was part of 14. Three articles focused exclusively on automated surveillance systems using ICD-10 discharge diagnostic codes [27,28,29], while two others combined ICD-10 codes with additional algorithmic elements [10, 30]. Three studies explored surveillance systems that employed natural language processing of radiology reports, clinical notes, or discharge summaries [10, 19, 20, 31].
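To make the structure of such candidate definitions concrete, the following minimal Python sketch combines a radiology flag with fever or an abnormal leukocyte count, loosely following the pattern described above. The `PatientDay` record, its field names, and the numeric thresholds are illustrative assumptions for this sketch and do not reproduce the definition used in any of the included studies.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative thresholds (assumptions for this sketch, not taken from the reviewed studies).
FEVER_C = 38.0    # a temperature above this value counts as fever
WBC_LOW = 4.0     # leukopenia: below this value (10^9 cells/L)
WBC_HIGH = 12.0   # leucocytosis: at or above this value (10^9 cells/L)


@dataclass
class PatientDay:
    """Hypothetical structured record for one patient on one hospital day."""
    radiology_suggests_pneumonia: bool
    max_temperature_c: Optional[float] = None
    wbc_count: Optional[float] = None
    on_ventilator: bool = False


def is_nvhap_candidate(day: PatientDay) -> bool:
    """Flag a patient-day as an nvHAP candidate: radiology AND (fever OR abnormal WBC)."""
    # Ventilated patients and patients without suggestive radiology are never flagged.
    if day.on_ventilator or not day.radiology_suggests_pneumonia:
        return False
    # Missing measurements are treated as "criterion not met".
    fever = day.max_temperature_c is not None and day.max_temperature_c > FEVER_C
    abnormal_wbc = day.wbc_count is not None and (
        day.wbc_count < WBC_LOW or day.wbc_count >= WBC_HIGH
    )
    return fever or abnormal_wbc
```

Treating missing measurements as "criterion not met" is one possible design choice; a real system would need an explicit policy for incomplete data, since that policy directly affects sensitivity.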

Among the 24 surveillance systems described, 14 underwent validation. Three algorithms (No. 1, 8, and 10) were validated against multiple reference standards [22,23,24,25], while one paper validated several algorithms (No. 18-23) against a single reference standard [29] (Table 3). Eight studies validated their automated systems using well-established criteria such as those of the National Nosocomial Infections Surveillance System (NNIS) [19, 20], the National Healthcare Safety Network of the Centers for Disease Control and Prevention (NHSN-CDC) [22, 24, 25, 28], Hospitals in Europe Link for Infection Control through Surveillance/European Centre for Disease Prevention and Control (HELICS/ECDC) [21, 27, 29], or the Veterans Affairs Surgical Quality Improvement Program (VASQIP) [31], applied through manual chart review by one or two reviewers. One publication described validation against discharge diagnostic codes [22], while two studies utilised diagnoses provided by treating physicians [22, 23]. Additional validations were performed against discharge summaries [22], nvHAP as defined by an expert reviewer [22, 24], or a composite of the aforementioned criteria [25].

For fully automated surveillance, the sensitivity of the algorithms varied between 40 and 99%, specificity ranged from 58 to 98%, PPV spanned from 8 to 71%, and NPV extended from 74 to 100%. The only study describing semi-automated surveillance reported a sensitivity and NPV of 98% and 99%, respectively [21]. While all fully automated surveillance systems inherently achieve a 100% reduction in workload, the actual time saving was not reported in any of the studies. The only semi-automated system documented a 94% decrease in patients requiring manual screening but did not report the time reduction either [21].
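The performance measures reported here all derive from a 2×2 table comparing the automated system with the reference standard. The sketch below shows how they, and the workload reduction of a semi-automated system, can be computed. The function names and all counts are hypothetical and chosen for illustration only; they are not data from any included study.

```python
def performance_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Return sensitivity, specificity, PPV and NPV from a 2x2 validation table."""
    def ratio(numerator: int, denominator: int) -> float:
        # Return NaN instead of raising when a margin of the table is empty.
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),  # reference-positive cases that were flagged
        "specificity": ratio(tn, tn + fp),  # reference-negative patients not flagged
        "ppv": ratio(tp, tp + fp),          # flagged patients who are true cases
        "npv": ratio(tn, tn + fn),          # unflagged patients who are true non-cases
    }


def workload_reduction(n_flagged: int, n_total: int) -> float:
    """Share of patients who no longer need manual review in a semi-automated system."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return 1 - n_flagged / n_total


# Hypothetical validation cohort of 1000 patients with 50 reference-standard cases.
metrics = performance_metrics(tp=45, fp=30, fn=5, tn=920)
reduction = workload_reduction(n_flagged=75, n_total=1000)
```

With these invented counts, sensitivity is 45/50 and PPV is 45/75, which illustrates how a system can detect most cases while still flagging many patients who do not have pneumonia.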

Table 4 presents the quality scores for the included papers, which varied from 10 to 23 out of a possible 25 points as per the modified quality assessment tool by Streefkerk et al. [16]. Suboptimal scoring was common in separating the test from the validation cohorts (“Indicator 1”), as only one study included a separate derivation and a validation cohort [31], and in reporting the scope of performance characteristics (“Indicator 5”), with five studies scoring 0 because they did not validate the automated system or did not report sensitivity. The scores achieved in the adapted QUADAS-2 instrument [18] ranged between 7 and 9 out of a maximum of 9 points. Seven studies scored 0 in either the item “Did the study avoid inappropriate exclusion?” or “Did all patients receive a reference standard?” as either the surveillance or the reference standard was only applied to a subset of patients.

Discussion

We performed a systematic literature review on automated surveillance of HAP, with a specific focus on nvHAP. We found 13 articles representing 12 distinct studies, with 9 published in or after 2018, of which 6 focussed specifically on nvHAP [10, 21,22,23,24,25]. Except for one article, all described fully automated systems, featuring 24 different candidate definitions for surveillance. Validation was performed for 14 of these systems and relied on a range of mostly manual reference standards, most frequently employing definitions from authoritative organisations like the ECDC and the CDC. The performance of the fully automated surveillance systems varied, with higher sensitivity often correlated with lower positive predictive values (PPV) and vice versa.

Key metrics for evaluating automated surveillance systems include sensitivity, specificity, PPV, and NPV. The PRAISE network emphasises the importance of these metrics and recommends study designs to minimise differential and partial bias [15]. In our review, all but one validation study reported PPV. The majority also reported sensitivity, specificity, and NPV. The one semi-automated system we reviewed stood out with a sensitivity of 98% [21]. According to guidelines by van Mourik et al., semi-automated systems should ideally achieve a sensitivity above 90% [15]. In contrast, the fully automated systems with high sensitivity often lagged in PPV and specificity. Wrongly classifying patients as having nvHAP could undermine trust among clinicians and administrators. Yet, Stern et al. point out that manual surveillance is not without its own reliability issues: the authors found a simple agreement of 75% between two reviewers assessing patients for CDC-NHSN pneumonia criteria, and a moderate interrater agreement (Cohen's kappa: 0.5) [22]. This suggests that the reliability of automated systems may be comparable to that of human reviewers. While the subjectivity, complexity, and ambiguity of clinical and surveillance definitions for pneumonia have been extensively debated [22], the gold standard for diagnosis, namely pathology, is seldom available. Currently, there are no universally accepted guidelines for validating automated HAI surveillance systems, leaving key questions about the minimal number of reviewers and performance criteria unanswered. Establishing such guidelines would significantly advance the development and validation of automated systems for nvHAP and other HAIs. Streefkerk et al. suggested an overall performance score (i.e. multiplying sensitivity and specificity) of ≥ 0.85 as a standard [16]. Notably, none of the fully automated systems in our review met this criterion.
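Two of the quantities discussed in this paragraph are simple to compute, and a short sketch may help readers apply them to their own validation data. The first function multiplies sensitivity and specificity as described for the overall performance score; the second computes Cohen's kappa for two reviewers from a 2×2 agreement table. The function names and the reviewer counts are hypothetical; the counts were invented to reproduce an agreement pattern of 75% with a kappa of 0.5 and are not the data reported by Stern et al.

```python
def overall_performance(sensitivity: float, specificity: float) -> float:
    """Overall performance score: the product of sensitivity and specificity."""
    return sensitivity * specificity


def cohens_kappa(both_yes: int, a_only: int, b_only: int, both_no: int) -> float:
    """Cohen's kappa for two reviewers rating the same patients as case / non-case."""
    n = both_yes + a_only + b_only + both_no
    if n == 0:
        raise ValueError("agreement table is empty")
    observed = (both_yes + both_no) / n                 # simple agreement
    a_yes = (both_yes + a_only) / n                     # reviewer A's share of "case"
    b_yes = (both_yes + b_only) / n                     # reviewer B's share of "case"
    expected = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)  # agreement expected by chance
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# A system with 90% sensitivity and 90% specificity still falls below a 0.85 threshold.
score = overall_performance(0.90, 0.90)

# Hypothetical table: 200 patients, the reviewers agree on 150 of them (75%).
kappa = cohens_kappa(both_yes=75, a_only=25, b_only=25, both_no=75)
```

Because the score is a product, both sensitivity and specificity must be roughly 92% or higher to reach 0.85 when the two are equal, which helps explain why none of the fully automated systems met the criterion.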

Most validation studies in this review, except for two [19, 20, 29], assessed automated systems on preselected patient groups. Such selection often limits the system's applicability to a broader patient base. Furthermore, many studies had small sample sizes, between 120 and 250 patients, leading to less precise performance metrics.
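The effect of sample size on precision can be illustrated with a confidence interval for a proportion such as sensitivity. The sketch below uses the Wilson score interval, one common choice among several; the function name and the example counts are assumptions for illustration and do not come from the included studies. Note that for sensitivity the relevant denominator is the number of reference-standard cases, which is usually much smaller than the number of patients in the cohort.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95% coverage)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


# Same observed sensitivity of 70%, estimated from 20 versus 200 confirmed cases.
small_low, small_high = wilson_interval(14, 20)
large_low, large_high = wilson_interval(140, 200)
```

With 20 confirmed cases, the interval around an observed sensitivity of 70% spans roughly 48% to 85%, whereas with 200 cases it is several times narrower.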

Broadly, the identified automated surveillance systems fall into three categories: those utilising clinical data (some applying NLP methods for data extraction), those relying on discharge diagnostic codes, and those employing a combination of both. Systems relying mainly on pneumonia discharge codes show poor results, with sensitivities between 40 and 60% and even lower PPVs of 18–36% [27,28,29], raising questions about their inclusion in algorithms. In terms of components of systems using clinical data, earlier studies used factors like microbiology and antibiotic prescriptions, while recent ones focus on internationally accepted nvHAP criteria [1, 26], such as radiology, fever, and abnormal leukocyte counts. Antibiotic use is frequently included, given its role in treating HAP, which is rarely of purely viral aetiology [21]. A group of researchers has significantly shaped this field since 2019, developing automated systems based on CDC definitions for pneumonia and ventilator-associated events [32, 33]. These systems focus on "worsening oxygenation" as a key criterion [22,23,24,25], and have been tested across multiple hospitals in pre-selected patient groups with deteriorating oxygen levels. Depending on the manual reference method and the candidate surveillance definition, sensitivities ranged from 56 to 71% and PPVs from 35 to 81%. However, the focus on deteriorating oxygen levels is debatable. While such patients may be more likely to experience adverse outcomes like ICU admission and death, the extent of nvHAP occurrence among patients who do not experience oxygenation impairment is still unknown. Considering antibiotic stewardship, this latter group could also significantly impact the number of preventable antibiotic prescriptions.

While many existing surveillance systems currently rely on structured data formats, established definitions and clinical diagnoses of pneumonia often include symptoms or findings typically recorded in unstructured text, such as clinical notes or discharge summaries, or in images. Although three studies applied natural language processing (NLP) technology, the potential of artificial intelligence (AI) has not yet been fully exploited in the published studies. The inclusion of AI could address this gap, further limiting manual work in semi-automated surveillance or increasing the performance of fully automated surveillance. Initial efforts date back to as early as 2005, spearheaded by researchers like Mendonca and Haas et al. [19, 20]. These advancements show great potential for incorporating often-overlooked symptomatology, such as coughing or auscultation findings, into future automated surveillance systems. For example, cutting-edge technologies like GPT-4, as explored by Perret and Schmid [34], could facilitate such integration. Furthermore, AI algorithms have already demonstrated capabilities that equal or surpass those of radiologists in identifying singular anomalies in chest X-rays [35].

Our review has limitations. While we aimed to include all validation studies on automated nvHAP surveillance, we may have missed some without validation that were part of intervention studies. The studies we did include showed considerable heterogeneity in study methodologies, surveillance algorithms, patient cohorts, and quality indicators, making a meta-analysis to calculate a collective performance impractical and precluding a precise identification of the most promising system elements. The lack of multi-setting validation and the small sample sizes in most studies affect the robustness of our conclusions [21,22,23, 27, 28, 31].

Conclusion

Automated surveillance undeniably reduces workload, allows real-time reporting, and enables rapid interventions. Progress has been made in recent years to develop and validate automated nvHAP surveillance systems. However, the varied study designs and validation methods reviewed do not allow us to conclusively determine which features of nvHAP surveillance algorithms are most effective. Based on careful analysis and practical insights, some general advice can nevertheless be offered. Firstly, we recommend integrating indicators in nvHAP selection algorithms that are present in all nvHAP patients, such as radiology. For indicators with lower sensitivity, such as discharge diagnostic codes or positive microbiology results, a judicious application is advised. These might still be used as optional criteria or components of a sophisticated multivariable regression model. When the sensitivity of a specific indicator is uncertain, a detailed evaluation in a larger patient cohort with confirmed (nv)HAP, determined through manual surveillance, is essential. Incorporating recognised surveillance elements like fever or abnormal leucocyte counts can enhance the alignment with manual methods. Although the end goal is a fully automated HAP surveillance system, adopting semi-automated systems in the interim might be a practical approach, at least until the reliability of fully automated systems is indisputably established. Currently, the adequacy of fully automated systems, as indicated by the available performance metrics, remains a subject for debate. To provide a more conclusive evaluation, future research should employ a rigorous validation process to avoid bias and include broad patient populations. The implementation of emerging AI techniques holds the potential to revolutionise surveillance in the near future, provided challenges such as data privacy and AI biases can be overcome [36]. The capability of AI to mine extensive information from unstructured clinical data, especially concerning symptomatology and radiology, could significantly enhance the performance of automated surveillance systems.

Availability of data and materials

Not applicable.

References

European Centre for Disease Prevention and Control. Point prevalence survey of healthcare-associated infections and antimicrobial use in European acute care hospitals–protocol version 5.3. Stockholm: ECDC; 2016. http://ecdc.europa.eu/en/publications/Publications/PPS-HAI-antimicrobial-use-EU-acute-care-hospitals-V5-3.pdf. Accessed 4 Jan 2024.

Magill SS, Edwards JR, Bamberg W, Beldavs ZG, Dumyati G, Kainer MA, Lynfield R, Maloney M, McAllister-Hollod L, Nadle J, Ray SM, Thompson DL, Wilson LE, Fridkin SK, Emerging Infections Program Healthcare-Associated Infections and Antimicrobial Use Prevalence Survey Team. Multistate point-prevalence survey of health care-associated infections. N Engl J Med. 2014;370(13):1198–208.

Zingg W, Metsini A, Balmelli C, Neofytos D, Behnke M, Gardiol C, Widmer A, Pittet D, on behalf of the Swissnoso Network. National point prevalence survey on healthcare-associated infections in acute care hospitals, Switzerland 2017. Euro Surveill. 2019;24:32.

European Centre for Disease Prevention and Control. Point prevalence survey of healthcare-associated infections and antimicrobial use in European acute care hospitals, 2016–2017. Stockholm: ECDC; 2023. https://www.ecdc.europa.eu/sites/default/files/documents/healthcare-associated--infections-antimicrobial-use-point-prevalence-survey-2016-2017.pdf. Accessed 4 Jan 2024.

Davis J, Finley E. The breadth of hospital-acquired pneumonia: nonventilated versus ventilated patients in Pennsylvania. Pennsylvania Patient Safety Advisory. 2012;9(3).

Ewan VC, Witham MD, Kiernan M, Simpson AJ. Hospital-acquired pneumonia surveillance—an unmet need. Lancet Respir Med. 2017;5:771–2.

Munro SC, Baker D, Giuliano KK, Sullivan SC, Haber J, Jones BE, Crist MB, Nelson RE, Carey E, Lounsbury O, Lucatorto M, Miller R, Pauley B, Klompas M. Nonventilator hospital-acquired pneumonia: a call to action. Infect Control Hosp Epidemiol. 2021;42(8):991–6.

Klompas M, Branson R, Cawcutt K, Crist M, Eichenwald EC, Greene LR, Lee G, Maragakis LL, Powell K, Priebe GP, Speck K, Yokoe DS, Berenholtz SM. Strategies to prevent ventilator-associated pneumonia, ventilator-associated events, and nonventilator hospital-acquired pneumonia in acute-care hospitals: 2022 Update. Infect Control Hosp Epidemiol. 2022;43(6):687–713.

Wolfensberger A, Clack L, von Felten S, Faes Hesse M, Saleschus D, Meier MT, Kusejko K, Kouyos R, Held L, Sax H. Prevention of non-ventilator-associated hospital-acquired pneumonia in Switzerland: a type 2 hybrid effectiveness-implementation trial. Lancet Infect Dis. 2023;23(7):836–46.

Lacerna CC, Patey D, Block L, Naik S, Kevorkova Y, Galin J, Parker M, Betts R, Parodi S, Witt D. A successful program preventing nonventilator hospital-acquired pneumonia in a large hospital system. Infect Control Hosp Epidemiol. 2020;41(5):547–52.

Sopena N, Isernia V, Casas I, Diez B, Guasch I, Sabria M, Pedro-Botet ML. Intervention to reduce the incidence of non-ventilator-associated hospital-acquired pneumonia: a pilot study. Am J Infect Control. 2023;51:1324–8.

Klompas M. Progress in preventing non-ventilator-associated hospital-acquired pneumonia. Lancet Infect Dis. 2023;23(7):769–71.

van Mourik MSM. Getting it right: automated surveillance of healthcare-associated infections. Clin Microbiol Infect. 2021;27(Suppl 1):S1–2.

Martischang R, Peters A, Guitart C, Tartari E, Pittet D. Promises and limitations of a digitalized infection control program. J Adv Nurs. 2020;76(8):1876–8.

van Mourik MSM, van Rooden SM, Abbas M, Aspevall O, Astagneau P, Bonten MJM, Carrara E, Gomila-Grange A, de Greeff SC, Gubbels S, Harrison W, Humphreys H, Johansson A, Koek MBG, Kristensen B, Lepape A, Lucet JC, Mookerjee S, Naucler P, Palacios-Baena ZR, Presterl E, Pujol M, Reilly J, Roberts C, Tacconelli E, Teixeira D, Tangden T, Valik JK, Behnke M, Gastmeier P, PRAISE Network. PRAISE: providing a roadmap for automated infection surveillance in Europe. Clin Microbiol Infect. 2021;27(Suppl 1):S3–19.

Streefkerk HRA, Verkooijen RP, Bramer WM, Verbrugh HA. Electronically assisted surveillance systems of healthcare-associated infections: a systematic review. Euro Surveill. 2020;25(2):1900321.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hrobjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, McGuinness LA, Stewart LA, Thomas J, Tricco AC, Welch VA, Whiting P, Moher D. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372: n71.

Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MM, Sterne JA, Bossuyt PM, QUADAS-2 Group. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011;155(8):529–36.

Haas JP, Mendonca EA, Ross B, Friedman C, Larson E. Use of computerized surveillance to detect nosocomial pneumonia in neonatal intensive care unit patients. Am J Infect Control. 2005;33(8):439–43.

Mendonca EA, Haas J, Shagina L, Larson E, Friedman C. Extracting information on pneumonia in infants using natural language processing of radiology reports. J Biomed Inform. 2005;38(4):314–21.

Wolfensberger A, Jakob W, Faes Hesse M, Kuster SP, Meier AH, Schreiber PW, Clack L, Sax H. Development and validation of a semi-automated surveillance system-lowering the fruit for non-ventilator-associated hospital-acquired pneumonia (nvHAP) prevention. Clin Microbiol Infect. 2019;25(11):1428.

Stern SE, Christensen MA, Nevers MR, Ying J, McKenna C, Munro S, Rhee C, Samore MH, Klompas M, Jones BE. Electronic surveillance criteria for non-ventilator-associated hospital-acquired pneumonia: assessment of reliability and validity. Infect Control Hosp Epidemiol. 2023;8:1–7.

Ji W, McKenna C, Ochoa A, Ramirez Batlle H, Young J, Zhang Z, Rhee C, Clark R, Shenoy ES, Hooper D, Klompas M, CDC Prevention Epicenters Program. Development and assessment of objective surveillance definitions for nonventilator hospital-acquired pneumonia. JAMA Netw Open. 2019;2(10):e1913674.

Ramirez Batlle H, Klompas M, CDC Prevention Epicenters Program. Accuracy and reliability of electronic versus CDC surveillance criteria for non-ventilator hospital-acquired pneumonia. Infect Control Hosp Epidemiol. 2020;41(2):219–21.

Jones BE, Sarvet AL, Ying J, Jin R, Nevers MR, Stern SE, Ochoa A, McKenna C, McLean LE, Christensen MA, Poland RE, Guy JS, Sands KE, Rhee C, Young JG, Klompas M. Incidence and outcomes of non-ventilator-associated hospital-acquired pneumonia in 284 US Hospitals using electronic surveillance criteria. JAMA Netw Open. 2023;6(5):e2314185.

CDC NHSN; Pneumonia (Ventilator-associated [VAP] and non-ventilator-associated Pneumonia [PNEU]) event. https://www.cdc.gov/nhsn/pdfs/pscmanual/6pscvapcurrent.pdf. Accessed 23 Oct 2023.

Wolfensberger A, Meier AH, Kuster SP, Mehra T, Meier MT, Sax H. Should international classification of diseases codes be used to survey hospital-acquired pneumonia? J Hosp Infect. 2018;99:81–4.

Valentine JC, Gillespie E, Verspoor KM, Hall L, Worth LJ. Performance of ICD-10-AM codes for quality improvement monitoring of hospital-acquired pneumonia in a haematology-oncology casemix in Victoria, Australia. Health Inf Manag. OnlineFirst, November 14, 2022

Bouzbid S, Gicquel Q, Gerbier S, Chomarat M, Pradat E, Fabry J, Lepape A, Metzger MH. Automated detection of nosocomial infections: evaluation of different strategies in an intensive care unit 2000–2006. J Hosp Infect. 2011;79(1):38–43.

Zilberberg MD, Nathanson BH, Sulham K, Fan W, Shorr AF. A novel algorithm to analyze epidemiology and outcomes of carbapenem resistance among patients with hospital-acquired and ventilator-associated pneumonia: a retrospective cohort study. Chest. 2019;155(6):1119–30.

FitzHenry F, Murff HJ, Matheny ME, Gentry N, Fielstein EM, Brown SH, Reeves RM, Aronsky D, Elkin PL, Messina VP, Speroff T. Exploring the frontier of electronic health record surveillance: the case of postoperative complications. Med Care. 2013;51(6):509–16.

CDC NHSN; Ventilator-associated event (VAE). https://www.cdc.gov/nhsn/pdfs/pscmanual/10-vae_final.pdf. Accessed 23 Oct 2023.

CDC NHSN; Pneumonia (Ventilator-associated [VAP] and non-ventilator associated Pneumonia [PNEU]) event. https://www.cdc.gov/nhsn/pdfs/pscmanual/6pscvapcurrent.pdf. Accessed 23 Oct 2023.

Perret J, Schmid A. Application of OpenAI GPT-4 for the retrospective detection of catheter-associated urinary tract infections in a fictitious and curated patient data set. Infect Control Hosp Epidemiol. 2023;45:1–4.

Chassagnon G, Vakalopoulou M, Paragios N, Revel MP. Artificial intelligence applications for thoracic imaging. Eur J Radiol. 2020;123: 108774.

Marra AR, Nori P, Langford BJ, Kobayashi T, Bearman G. Brave new world: leveraging artificial intelligence for advancing healthcare epidemiology, infection prevention, and antimicrobial stewardship. Infect Control Hosp Epidemiol. 2023;44(12):1909–12.

Acknowledgements

We would like to thank Martina Gosteli, Liaison Librarian at the University Library Zurich, for her valuable assistance with the literature search strategy and execution.

Funding

No funding was received for this systematic literature review.

Author information

Contributions

AW, AS, and HS designed the study. Title and abstract screening, as well as full-text review of all potentially eligible articles was done independently by AW and HS, and AS resolved any disagreements. AW, AS, and HS extracted, analysed and interpreted the data. AW and HS drafted the manuscript, and AS provided a critical review. All authors agree with the content and conclusions of this manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

We declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Embase Search Strategy.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wolfensberger, A., Scherrer, A.U. & Sax, H. Automated surveillance of non-ventilator-associated hospital-acquired pneumonia (nvHAP): a systematic literature review. Antimicrob Resist Infect Control 13, 30 (2024). https://doi.org/10.1186/s13756-024-01375-8

DOI: https://doi.org/10.1186/s13756-024-01375-8