Abstract

Background

Mammal populations are declining globally. Strategies increasingly employed to recover threatened mammal populations include protecting populations inside predator-free havens and translocating animals from one site to another, or from a captive breeding program. These approaches can expose predator-naïve animals to predators they have never encountered, and as a result many conservation projects have failed because individuals lacking appropriate anti-predator responses were lost to predation. Robust ways to measure anti-predator responses are therefore urgently needed to help identify naïve populations at risk, to select appropriate animals for translocation, and to monitor managed populations for trait change. Here, we outline a protocol for a systematic review that will collate existing behavioural assays developed to quantify anti-predator responses, and identify the assay types and predator cues that provoke the greatest behavioural responses.

Methods

We will retrieve articles from academic bibliographic databases and grey literature sources (such as government and conservation management reports) using a Boolean search string. Each article will be screened against eligibility criteria defined using the PICO (Population, Intervention, Comparator, Outcome) framework to yield the final article pool. Using metadata extracted from each article, we will map all known behavioural assays for quantifying anti-predator responses in mammals and then examine the context in which each assay has been implemented (e.g. species tested, predator cue characteristics). Finally, using mixed effects models, we will determine which of these assays and predator cue types elicit the greatest behavioural responses (the standardised difference in response between treatment and control groups). The final review will highlight the most robust methodologies, reveal promising techniques on which to focus future assay development, and collate relevant information for conservation managers.


Background

The need to quantify anti-predator responses

Mammals are experiencing an alarming rate of extinction [1,2,3] due to anthropogenic impacts such as habitat loss and fragmentation, illegal hunting, and exotic predators [4]. Redressing this loss of biodiversity requires well-informed and well-tested management interventions. Many of these interventions will need to be underpinned by a mechanistic understanding of species’ behaviour.

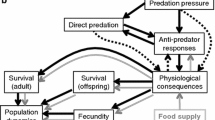

How an animal responds to predators has a substantial bearing on its ability to survive. Predation, particularly by introduced predators, has been a major driver of mammal declines and extinctions around the world [5,6,7,8,9]. This is especially true for individuals and populations that have had limited or no exposure to predators, such as many island populations [10, 11], individuals raised in captivity, and those moved to an environment with novel predators [12,13,14]. Improving our understanding of how animals behave in response to predatory stimuli should provide crucial insights for their conservation management and can improve our ability to retain anti-predator traits in managed populations [12, 15, 16]. An animal's response to predators may be behavioural (e.g. spatial and temporal avoidance [17, 18], avoiding detection [19] and evasion [20]) or physical (e.g. chemical [21] and physical defences [22]). Behavioural responses are likely to be more plastic, and to change over shorter time frames, than physical responses; they are therefore particularly important when considering the acute impacts of predators on the persistence of predator-naïve species.

Common strategies employed to prevent faunal extinctions include captive breeding [23], translocations (the deliberate movement of animals from one population or site for release in another [24]) and the establishment of populations in predator-free havens (areas isolated from predators by a geographical or physical barrier, such as islands or fenced enclosures [25,26,27]). Such approaches have secured populations of a range of mammals, including African elephants [28, 29], European lynx [30], elk [31], giant pandas [32], and Tasmanian devils [33]. Despite their initial successes, these strategies are at risk of longer-term failure because they can expose naïve individuals to novel contexts for which they may lack appropriate behavioural responses. Further, such populations are vulnerable to acute collapse following uncontrolled predator incursions.

Australia provides a compelling case study to illustrate the challenges of mammal conservation. More than one third of modern mammal extinctions have occurred in Australia, largely due to the introduction of feral cats and foxes [34]. In response, havens free of introduced predators are a key component of conserving much of the remaining mammal fauna [26, 27, 35]. Australia’s current network of havens provides habitats for at least 32 mammal species, and has secured at least 188 populations and sub-populations [26]. Evidence is emerging, however, that in the absence of feral and/or native predators, havened populations no longer exhibit anti-predator behaviours [13, 36,37,38,39,40]. This renders individuals in these populations fundamentally unfit for reintroduction back into areas where predators still persist. Because the success of many translocations has ultimately been compromised by predation [35, 41, 42], the future of mammal conservation in Australia, and more broadly, hinges on developing methods and strategies that can quantify and conserve antipredator behaviours in havened and translocated populations [39].

To undertake an adaptive management approach, we require monitoring and evaluation of anti-predator responses in mammalian species. Despite awareness that behavioural traits such as boldness or shyness can influence conservation outcomes, such traits are rarely measured as part of monitoring and management [16, 43]. Anti-predator responses have only recently been identified as a potential barrier to the success of conservation projects [13, 37,38,39], and while a body of academic literature details various methods for measuring these behaviours [15, 38, 39, 44,45,46,47,48], accessing the methodologies, comparing their rigour and identifying the most appropriate measure is labour intensive. Stakeholders, such as conservation and population managers, are likely to be seeking this information but are also likely to be limited by the time and resources needed to find it. Ultimately, we currently lack a robust framework for the universal monitoring and evaluation of anti-predator traits [49]. The first step toward developing such a framework is to understand which behavioural assays have been conducted, which are most effective (i.e. provoke or capture the greatest behavioural response), and whether the type of predator cue matters. Without this crucial information, inappropriate and poorly performing behavioural metrics may continue to be adopted.

Identification and engagement of stakeholders

In addition to the review team, stakeholders relevant to this review have been identified as those who research or manage animal populations, for example members of species recovery teams (Fig. 1). To ensure that the information collected in this review is tailored toward the target audience, and is therefore as relevant as possible for application, a variety of stakeholders from each of the categories in Fig. 1 were consulted during the development of this protocol. We invited 27 stakeholders to comment on the draft protocol, received 16 replies (ten from Australia and six from other countries), and incorporated their suggestions.

Objective of the review

We will present all known behavioural assays for measuring or quantifying anti-predator responses in mammals, collated in an accessible format. Specifically, we will: (1) reveal the different methods, (2) describe the context in which each method was applied, and (3) highlight methods or aspects that warrant further examination, thus guiding the future development of behavioural assays. Then, using a modelling approach, we will identify which types of behavioural assays and predator cues elicit the greatest responses in mammals (measured as the standardised effect size of the difference between treatment and control conditions). A formal evidence synthesis is required to explore all potential methods and to avoid bias toward those published in academic journals, because much relevant information may come from government reports and species recovery plans [16, 50]. The final review will act as a guide: it will highlight existing methodologies and provide additional information to assess their relevance, allowing stakeholders to select the most appropriate and effective behavioural assay for their purpose.

Using the PICO (Population—Intervention—Comparator—Outcome) framework [51], we have broken our review into two questions that will define our search scope. We will first systematically map all known methodologies answering a primary question: what behavioural assays have been used to quantify anti-predator responses in mammals? The elements of this question are:

Population

Free-living, wild-caught, or captive mammals (global).

Intervention

- (i) A behavioural assay that quantifies anti-predator responses to predator exposure
- (ii) A behavioural assay that quantifies anti-predator responses to predator cues

Articles that conform to both the Population and Intervention criteria will be used to answer this primary question. We will also address a secondary question quantitatively, by modelling the metadata collected from each article: which assay types and predator cues elicit the greatest behavioural responses? This question uses the same Population and Intervention criteria as the primary question, but requires further assessment against Comparator and Outcome criteria to select studies for the systematic review. The additional elements of the secondary question are:

Comparator

Comparison between levels of predator exposure (e.g. before versus after exposure, exposure versus no exposure) or comparison between exposure to a predator cue versus a control.

Outcome

Difference in the behavioural response between the treatment (e.g. predator/predator cue exposure) and control conditions. Metrics of responses will differ between studies depending on assay type and will be compared using standardised effect sizes.

Articles that include at least one Comparator element (and report the Outcome) will then additionally be considered for the systematic review, to investigate which Intervention elements (behavioural assays and predator cues) produce the greatest Outcome. The PICO elements of our two questions are illustrated in Fig. 2.

Methods

Searching for articles

Scoping

To develop a search strategy, an initial scoping exercise was conducted using a test list of 10 benchmark articles that assess anti-predator responses (Additional file 1), selected because together they cover a variety of assays and predator scenarios. The titles, keywords, and abstracts of each scoping article were mined, both manually and using word clouds (R package wordcloud [52] in the R environment [53]), to determine the most appropriate search terms [54]. An initial search string was then created by using Boolean operators to combine relevant terms drawn from the review team's knowledge and from the scoping articles. Trial searches were conducted in Web of Science: Core Collection, and terms that appeared to broaden the search beyond the scope of the review were systematically removed. To check that the proposed strategy adequately returned relevant literature, the search output was scanned for relevant articles and for each of the scoping benchmark articles. Any benchmark articles that were not returned were closely inspected, and the search strategy was adjusted until it retrieved all 10 [51]. The comprehensiveness of the search strategy was then tested using a list of 5 independent articles (Additional file 1), all of which were retrieved by the final search strategy.
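The text-mining step can be approximated in a few lines. The sketch below is not the authors' procedure (they used the R package wordcloud); it is a hypothetical Python equivalent, assuming the titles, keywords and abstracts are available as plain strings, that counts candidate terms and lists any benchmark articles a trial search missed. The stop-word list, the example texts and the function names are illustrative assumptions.

```python
import re
from collections import Counter

# Illustrative stop-word list (an assumption); a real exercise would use a fuller list.
STOP_WORDS = {"the", "of", "and", "in", "a", "an", "to", "for", "on", "with", "by", "is", "are"}


def term_frequencies(texts, min_length=3):
    """Count candidate search terms across titles, keywords and abstracts."""
    counts = Counter()
    for text in texts:
        # Lower-case, then keep runs of ASCII letters and hyphens so that
        # "anti-predator" stays whole; accented forms such as "naïve" would
        # need normalising first.
        words = re.findall(r"[a-z][a-z-]*", text.lower())
        counts.update(w for w in words if len(w) >= min_length and w not in STOP_WORDS)
    return counts


def missing_benchmarks(retrieved_ids, benchmark_ids):
    """Benchmark articles that a trial search failed to return."""
    return sorted(set(benchmark_ids) - set(retrieved_ids))


# Invented example text and identifiers, for illustration only
scoping_texts = [
    "Anti-predator behaviour of naive prey",
    "Predator odour and anti-predator responses in a naive marsupial",
]
print(term_frequencies(scoping_texts).most_common(5))
print(missing_benchmarks(["wos:001", "wos:002"], ["wos:001", "wos:002", "wos:003"]))
```

Any identifier returned by `missing_benchmarks` corresponds to an article whose wording would be inspected before adjusting the search string.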

Search strategy

To begin collating articles for this review, bibliographic databases will be searched using the following search string (adapted to the syntax of each database):

TS = ((("antipredator response$" OR "anti-predator response$" OR "antipredator behavio$r" OR "anti-predator behavio$r" OR "escape behavio$r" OR "giving$up density" OR "FID" OR "GUD" OR "flight initiation distance") AND ("predator exposure" OR "prey naivete" OR "naive prey" OR "los$" OR "trait" OR "predator avoid*")) OR (("predator recognition" OR "predator exposure" OR "predation risk" OR "introduced predator$" OR "novel predator$" OR "predator odour") AND ("naive prey" OR "prey naivete" OR "escape behavio$r" OR "giving$up density" OR "flight initiation distance" OR "FID" OR "GUD" OR "predator odour")) OR (("antipredator response$" OR "anti-predator response$" OR "antipredator behavio$r" OR "anti-predator behavio$r" OR "escape behavio$r") AND ("predator recognition" OR "predator exposure" OR "introduced predator$" OR "novel predator$"))).
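Because the string nests three (A AND B) blocks, small slips such as an unbalanced parenthesis, a lower-case operator or a stray space inside quotes are easy to introduce. One way to reduce this risk, sketched below under the assumption that each term group is kept as a list, is to generate the string programmatically and check its parentheses. The helper names are hypothetical; the `TS =` field tag follows the Web of Science syntax used above, and translating wildcards such as `$` for other databases is not handled.

```python
def or_group(terms):
    """Quote each term (trimming stray spaces) and join the terms with OR in parentheses."""
    return "(" + " OR ".join(f'"{term.strip()}"' for term in terms) + ")"


def and_block(left_terms, right_terms):
    """One (A AND B) block of the search string."""
    return f"({or_group(left_terms)} AND {or_group(right_terms)})"


def build_query(blocks, field_tag="TS"):
    """Combine (A AND B) blocks with OR under a single field tag."""
    return f"{field_tag} = (" + " OR ".join(and_block(a, b) for a, b in blocks) + ")"


def is_balanced(query):
    """True if every opening parenthesis has a matching closing one."""
    depth = 0
    for ch in query:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


# Abbreviated term groups taken from the third block of the string above
response_terms = ["antipredator response$", "anti-predator response$", "escape behavio$r"]
context_terms = ["predator recognition", "introduced predator$", "novel predator$"]
query = build_query([(response_terms, context_terms)])
print(query)
print(is_balanced(query))
```

Passing all three blocks to `build_query` would reproduce the overall structure of the full string, with operators always in upper case.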

Academic literature

We selected bibliographic databases according to their subject coverage and will search the following for peer-reviewed journal articles: Web of Science (Core Collection, BIOSIS Citation Index, Zoological Record, CAB Abstracts) and Scopus.

Grey literature

To reduce bias toward published literature, we will also search a variety of grey literature sources [49, 50]. Using the search string above, we will collate theses and dissertations from two bibliographic databases specific to grey literature: ProQuest Dissertations & Theses and EThOS: UK Theses and Dissertations. Conference proceedings will be searched in the Web of Science database using the same search strategy. The following websites will also be searched, using the search terms “anti-predator” and “antipredator”: opengrey.eu and trove.nla.gov.au. Specialist documents will be searched within the following repositories, using the same two search terms: IUCN general publications (https://portals.iucn.org/library/dir/publications-list); IUCN Conservation Planning Specialist Group (http://www.cpsg.org/document-repository); Conservation Evidence (http://www.ConservationEvidence.com); WWF (https://www.worldwildlife.org/publications). To supplement our search results, we will also search Google (www.google.com): the first 50 links returned for each combination of the search terms “anti-predator/antipredator” and “behaviour/behavior” will be inspected, and articles not yet identified will be added to the article pool [55].
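The supplementary Google search amounts to a small, fixed set of queries. Assuming that "each combination" means pairing each spelling of “anti-predator” with each spelling of “behaviour”, there are four queries and at most 200 links to inspect. The sketch below enumerates them and filters out links already in the pool; treating a link as an article identifier is a simplification, and the names are hypothetical.

```python
from itertools import product

PREFIXES = ["anti-predator", "antipredator"]
SUFFIXES = ["behaviour", "behavior"]
LINKS_PER_QUERY = 50  # only the first 50 links of each search are inspected


def google_queries():
    """Every pairing of the two spellings of each search term."""
    return [f"{prefix} {suffix}" for prefix, suffix in product(PREFIXES, SUFFIXES)]


def new_links(results, pool):
    """Links among the first LINKS_PER_QUERY results that are not yet in the pool."""
    added = []
    for link in results[:LINKS_PER_QUERY]:
        if link not in pool and link not in added:
            added.append(link)
    return added


queries = google_queries()
print(queries)
print(len(queries) * LINKS_PER_QUERY)  # upper bound on links to inspect
```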

Additional literature

Based on the knowledge of the review team and stakeholders, additional publications not identified by the search strategy may also be included.

Search results will be limited to articles written and published in English (due to the language capabilities of the review team). All database and grey-literature searches will be documented, and this information will be made available with the final review publication. All searches will be conducted within two years of the final analysis being submitted for publication.

Article screening and study eligibility criteria

Duplicate articles will be removed, and article screening will be conducted in CADIMA [51, 56]. To reduce bias, two screeners will independently assess articles for relevance, first at title and abstract level (considering both together) and then at full-text level, to decide which meet the inclusion criteria. At both stages, the two screeners will assess an overlapping subset of 10% of all articles (to a maximum of 50 articles). Reliability between screeners will be assessed using Kappa statistics (with values > 0.5 deemed acceptable [12, 57]). Where screeners disagree on the inclusion or exclusion of an article, they will discuss it and, if necessary, consult a third member of the review team. If theses or dissertations have also been published as journal articles or specialist reports, we will assess the methods described in both and include only the version that provides the most detail. Although not anticipated, if reviewers find themselves assessing their own work, an impartial third member of the review team will oversee the assessment of those articles. A full list of excluded articles, with the reason for each exclusion, will be made available with the final review.
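The overlap size and the reliability check can be expressed directly. The sketch below assumes that the Kappa statistic is Cohen's kappa for two raters, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each screener's marginal frequencies, and that 10% of the pool is rounded up; both are assumptions about details the protocol does not specify. The function names are hypothetical.

```python
from collections import Counter


def overlap_size(n_articles, percent=10, cap=50):
    """Articles screened by both reviewers: percent of the pool (rounded up), capped."""
    return min((n_articles * percent + 99) // 100, cap)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two screeners' include/exclude decisions."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty lists of decisions")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    # Chance agreement from each screener's marginal proportions
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_expected == 1:
        return 1.0  # both screeners used one identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


a = ["include", "include", "exclude", "exclude"]
b = ["include", "exclude", "exclude", "exclude"]
kappa = cohens_kappa(a, b)
print(kappa, kappa > 0.5)
```

In this toy example the screeners agree on three of four articles (p_o = 0.75), chance agreement is 0.5, and κ = 0.5, which falls just short of the > 0.5 threshold. The same `overlap_size` helper with `percent=5, cap=20` would give the overlap used for critical appraisal and data extraction.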

Each article will be screened against eligibility criteria based on the PICO framework, as outlined in Table 1. The screeners will first assess each article on its title and abstract together, to determine whether it satisfies the eligibility criteria (Table 1).

Articles that satisfy the Population and Intervention eligibility criteria will be used to address the primary question. They will then also be assessed against the Comparator and Outcome eligibility criteria for inclusion in the secondary, quantitative component, which addresses the effectiveness of the Intervention elements (assay types or predator cue types). All articles considered for this analysis must incorporate at least one of the Comparator elements and all of the Outcome elements listed in Table 1. In articles with more than one predator cue or population type (e.g. current, historic and control predator cues; or populations exposed to predators more than five years ago, exposed within the last five years, and never exposed), we will extract the effect size (difference between the treatment condition and the control) for the cue or population that the authors hypothesised would elicit the largest response, thus limiting the data entries from each article to one per assay.
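The one-entry-per-assay rule can be applied mechanically once each extracted contrast is tagged with the authors' hypothesis. In the sketch below, each contrast is a dictionary with hypothetical fields `article_id`, `assay`, `cue` and `hypothesised_rank` (1 for the cue or population the authors expected to elicit the largest response); these field names are assumptions, not part of the protocol's data sheet.

```python
def one_per_assay(contrasts):
    """Keep, for each (article, assay) pair, the contrast with the lowest
    hypothesised rank, i.e. the one expected to elicit the largest response."""
    best = {}
    for contrast in contrasts:
        key = (contrast["article_id"], contrast["assay"])
        current = best.get(key)
        if current is None or contrast["hypothesised_rank"] < current["hypothesised_rank"]:
            best[key] = contrast
    return list(best.values())


# Invented example: one article testing two cues with a giving-up density assay
# and one cue with a flight initiation distance assay
contrasts = [
    {"article_id": "A1", "assay": "GUD", "cue": "historic predator", "hypothesised_rank": 2},
    {"article_id": "A1", "assay": "GUD", "cue": "current predator", "hypothesised_rank": 1},
    {"article_id": "A1", "assay": "FID", "cue": "current predator", "hypothesised_rank": 1},
]
for kept in one_per_assay(contrasts):
    print(kept["assay"], kept["cue"])
```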

Study validity assessment

Studies that satisfy the Population and Intervention criteria but not the Comparator and Outcome criteria will not be critically appraised and will be used exclusively in the narrative synthesis of methodologies for quantifying anti-predator responses. Studies that satisfy all four (Population, Intervention, Comparator and Outcome) eligibility criteria will undergo further critical appraisal using the CEE critical appraisal tool (Additional file 2, [59]). Critical appraisal will be undertaken by two members of the review team, each of whom will assess an overlapping subset of 5% of studies (to a maximum of 20) to ensure consistency. If appraisers reach different conclusions about any study, the validity criteria will be refined and consistency checking will be repeated.

Data coding and extraction strategy

Once screened, the following meta-data variables will be extracted or scored where possible:

- Species
  - Common name
  - Latin name
  - IUCN conservation status
  - Size (small < 5 kg, medium 5–20 kg, large > 20 kg)
- Assay
  - Assay type (e.g. flight initiation distance, trap behaviour, giving-up density)
  - Behaviour measured (e.g. avoidance, docility, exploratory behaviour, fear)
  - What equipment is required (e.g. camera traps, specialist equipment)
- Type of predator exposure
  - Comparison between populations with varying exposure to predators (yes/no)
  - Use of predator cue (yes/no)
    - Direct or contextual
    - Olfactory, visual, or acoustic
    - Type of cue (e.g. faeces, urine, call, taxidermied model)
    - Cue properties
      - Did the cue move?
      - Size of cue (small < 5 kg, medium 5–20 kg, large > 20 kg)
      - Type of predator (e.g. terrestrial or aerial)
- Robustness of methods
  - Sample size
    - Number of individuals
    - Number of populations (treatment groups)
    - Number of repeat measures per individual
    - Number of repeat measures per population
  - Measure of repeatability
    - Within individuals
    - Within populations
  - Was there a control treatment (exposure or cue)?
  - If/how the methods were validated (e.g. fate of individuals, success criteria)
- Effect size (difference in means between treatment and control group)
  - Mean response (and standard deviation) of treatment group
  - Sample size of treatment group
  - Mean response (and standard deviation) of control group
  - Sample size of control group
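A coding sheet like this maps naturally onto a typed record. The sketch below is a hypothetical Python representation of a subset of the variables (the actual data sheet is Additional file 3), including the size classes used for both species and predator cues. Following the stated inequalities, exactly 5 kg and exactly 20 kg fall in the medium class. The field names and the example values are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


def size_class(mass_kg: float) -> str:
    """Size class for species and predator cues: small < 5 kg,
    medium 5-20 kg, large > 20 kg."""
    if mass_kg < 5:
        return "small"
    if mass_kg <= 20:
        return "medium"
    return "large"


@dataclass
class ExtractionRecord:
    """A subset of the meta-data variables for one assay in one article."""
    article_id: str
    common_name: str
    latin_name: str
    iucn_status: str
    body_mass_kg: float
    assay_type: str                      # e.g. "giving-up density"
    behaviour_measured: str              # e.g. "avoidance"
    predator_cue_used: bool
    cue_modality: Optional[str] = None   # "olfactory", "visual" or "acoustic"
    control_treatment: bool = False
    mean_treatment: Optional[float] = None
    sd_treatment: Optional[float] = None
    n_treatment: Optional[int] = None
    mean_control: Optional[float] = None
    sd_control: Optional[float] = None
    n_control: Optional[int] = None

    @property
    def size(self) -> str:
        return size_class(self.body_mass_kg)


# Placeholder values, for illustration only
record = ExtractionRecord("A1", "example species", "Genus species", "VU", 1.5,
                          "giving-up density", "avoidance", True, "olfactory")
print(record.size)
```

Leaving unreported values as `None` keeps missing information explicit, which matters for the exclusion rules applied during extraction.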

-

For the quantitative component, we will extract the mean response of each treatment, its corresponding measure of dispersion (standard deviation, standard error or variance), and its sample size. Where this information is presented only graphically, we will estimate the values from the figures using the software ImageJ [60], calibrating measurements against the figure axes. Metadata will be scored using a customised data sheet (Additional file 3; adapted from [61]) by two members of the review team. Each member will cross-check 5% of articles (to a maximum of 20) to ensure consistency; if the extracted information differs, the metadata protocol will be refined and the cross-check repeated until all extracted data are consistent. Where any information is unclear or missing, authors will be contacted. If, after contacting authors, the treatment or control standard deviations or sample sizes are still absent, or more than 50% of metadata are still missing, the article will be excluded from the quantitative component of the review. Extracted data will be made available with the full review as supplementary material.
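Two routine conversions sit behind this step. Reported standard errors and variances must be converted to standard deviations (SD = SE × √n; SD = √variance), and values read off a figure must be mapped from pixel coordinates to data units using two reference ticks on the axis. The sketch below shows both, together with the exclusion rule described above. It assumes a linear axis and that each article's metadata are held in a dictionary with `None` for missing values; the function names and keys are hypothetical, and the pixel coordinates themselves would come from a tool such as ImageJ.

```python
import math


def to_sd(value, kind, n):
    """Convert a reported dispersion measure to a standard deviation."""
    if kind == "sd":
        return value
    if kind == "se":
        return value * math.sqrt(n)  # SE = SD / sqrt(n)
    if kind == "variance":
        return math.sqrt(value)
    raise ValueError(f"unknown dispersion measure: {kind}")


def pixel_to_value(pixel, pixel_ref0, pixel_ref1, value_ref0, value_ref1):
    """Map a digitised pixel coordinate to data units on a linear axis,
    using two reference ticks whose values are read from the figure."""
    scale = (value_ref1 - value_ref0) / (pixel_ref1 - pixel_ref0)
    return value_ref0 + (pixel - pixel_ref0) * scale


def eligible_for_quantitative(record):
    """Exclude if any treatment/control SD or sample size is missing,
    or if more than half of the meta-data fields are missing (None)."""
    required = ("sd_treatment", "n_treatment", "sd_control", "n_control")
    if any(record.get(key) is None for key in required):
        return False
    n_missing = sum(value is None for value in record.values())
    return n_missing <= 0.5 * len(record)


# A bar top at pixel row 150, with axis ticks 0 at row 200 and 10 at row 100
print(pixel_to_value(150, 200, 100, 0.0, 10.0))
print(to_sd(2.0, "se", 25))
```

Because screen y-coordinates usually increase downwards, the calibration scale comes out negative, and the linear mapping handles this without special cases.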

Potential effect modifiers/reasons for heterogeneity

The following additional factors to be investigated by the review were compiled using the expertise of the review team and suggestions from stakeholders. By searching only articles written in English, we may unintentionally exclude some useful data. There may be a bias in the types of animals for which measures have been developed, for example toward threatened or charismatic species. The type of predator cue used may substantially affect the outcome, as less effective cues may not represent an individual's response to a true predation event [62,63,64,65]. For the most robust quantification of behaviour, methodologies should use repeat measures, incorporate measures of repeatability, and validate the assays, for example by quantifying the fitness outcomes of different behavioural responses [66, 67]. Through this systematic review, we hope to highlight where biases may be occurring and to reveal areas where more robust methodology is needed to guide the development of behavioural assays.

Data synthesis and presentation

The results of this systematic review will be presented both as a narrative synthesis (to address the primary question) and as a quantitative analysis (to address the secondary question) [51]. To answer the primary question (what behavioural assays have been used to quantify anti-predator responses in mammals?), each article and its associated metadata will be detailed in a table of findings, grouped by assay type. Specific examples of different methods will be discussed in further detail in the text of the review. Descriptive statistics based on the metadata will be used to reveal patterns, such as which species have been tested. We will discuss techniques that are used regularly and aspects of existing methodology that have been well developed and tested. For example, we will quantify the number of replicates per study, report the proportion of studies that incorporated measures of repeatability, and assess how existing methods have been validated (describing the mechanisms used). We will also discuss features that are lacking from existing methodology and characteristics that are poorly represented (e.g. specific taxonomic groups). A final section will offer suggestions for the future development of behavioural assays.
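The descriptive statistics mentioned above are simple tallies over the extracted metadata. A minimal sketch, assuming each study is a dictionary with hypothetical keys `assay_type`, `species`, `n_individuals`, `repeatability_measured` and `validated`; here the mean number of individuals stands in for "replicates per study", which is itself an assumption.

```python
from collections import Counter


def summarise(studies):
    """Descriptive statistics for the narrative synthesis."""
    if not studies:
        raise ValueError("no studies to summarise")
    n = len(studies)
    return {
        "studies_per_assay": Counter(s["assay_type"] for s in studies),
        "studies_per_species": Counter(s["species"] for s in studies),
        "mean_individuals": sum(s["n_individuals"] for s in studies) / n,
        "prop_repeatability": sum(s["repeatability_measured"] for s in studies) / n,
        "prop_validated": sum(s["validated"] for s in studies) / n,
    }


# Invented records, for illustration only
studies = [
    {"assay_type": "GUD", "species": "sp1", "n_individuals": 10,
     "repeatability_measured": True, "validated": False},
    {"assay_type": "FID", "species": "sp1", "n_individuals": 30,
     "repeatability_measured": False, "validated": False},
]
print(summarise(studies))
```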

The secondary question (which assay types and predator cues elicit the greatest behavioural response?) will be answered using the metadata extracted on the experimental design of each study. Using the treatment and control means, standard deviations and sample sizes extracted from each study, we will calculate a standardised measure of effect size for the difference between means using Hedges’ g [58]:

\[ g = \frac{{\mu }_{t} - {\mu }_{c}}{{s}_{p}} \]

where \({\mu }_{t}\) is the mean of the treatment group, \({\mu }_{c}\) is the mean of the control group and \({s}_{p}\) is the pooled standard deviation. The formula for pooled standard deviation is:

\[ {s}_{p} = \sqrt{\frac{({n}_{t} - 1){s}_{t}^{2} + ({n}_{c} - 1){s}_{c}^{2}}{{n}_{t} + {n}_{c} - 2}} \]

where \({n}_{t}\) and \({s}_{t}\) are the number of observations and standard deviation for the treatment group respectively, and \({n}_{c}\) and \({s}_{c}\) are the number of observations and standard deviation for the control group respectively. Hedges’ g was chosen over other effect size measures, such as Cohen’s d, because it is suited to a range of sample sizes and facilitates comparisons across studies by weighting each measure by the number of observations [68]. We will build two mixed effects models in R [53] to identify which predator cue types and which behavioural assay types elicit the largest effect sizes (Hedges’ g), while controlling for potential confounding factors where possible. Each study’s unique identifier will be included as a random effect in both models to account for the non-independence of multiple effect sizes from the same study. The protocol for this review adheres to the ROSES guidelines (see Additional file 4 for checklist).
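Hedges’ g as described here, g = (μ_t − μ_c)/s_p with s_p the pooled standard deviation that weights each group’s variance by its degrees of freedom (n − 1), translates directly into code. The sketch below is written in Python for illustration, whereas the protocol specifies R for the modelling. The optional small-sample correction factor J = 1 − 3/(4(n_t + n_c) − 9) and the approximate sampling variance (often used as inverse-variance weights in meta-analytic mixed models) are common additions and are assumptions here, not part of the protocol’s stated formula.

```python
import math


def pooled_sd(n_t, s_t, n_c, s_c):
    """Pooled standard deviation of the treatment and control groups."""
    return math.sqrt(((n_t - 1) * s_t**2 + (n_c - 1) * s_c**2) / (n_t + n_c - 2))


def hedges_g(mu_t, s_t, n_t, mu_c, s_c, n_c, small_sample_correction=False):
    """Standardised mean difference (mu_t - mu_c) / s_p."""
    g = (mu_t - mu_c) / pooled_sd(n_t, s_t, n_c, s_c)
    if small_sample_correction:
        # Optional bias correction J = 1 - 3 / (4 * (n_t + n_c) - 9); an assumption,
        # not part of the formula stated in the protocol
        g *= 1 - 3 / (4 * (n_t + n_c) - 9)
    return g


def g_variance(g, n_t, n_c):
    """Approximate sampling variance of g, often used for inverse-variance weights."""
    return (n_t + n_c) / (n_t * n_c) + g**2 / (2 * (n_t + n_c))


# Illustrative values only: treatment 10 (SD 2, n = 10) versus control 8 (SD 2, n = 10)
g = hedges_g(10, 2, 10, 8, 2, 10)
print(g)  # 1.0
print(g_variance(g, 10, 10))
```

Before pooling, the sign of g may need aligning across response metrics so that a positive value always indicates a stronger anti-predator response (for example, a longer flight initiation distance or a higher giving-up density under predator cues).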

Availability of data and materials

The only data used in the preparation of this manuscript were those from the scoping exercise, which are available in the additional material. The datasets generated and/or analysed during this study will be made available in the Dryad digital repository.

References

Ceballos G, Ehrlich PR. Mammal population losses and the extinction crisis. Science. 2002;296(5569):904–7.

Schipper J, Chanson JS, Chiozza F, Cox NA, Hoffmann M, Katariya V, et al. The status of the world’s land and marine mammals: diversity, threat, and knowledge. Science. 2008;322(5899):225.

Spooner FEB, Pearson RG, Freeman R. Rapid warming is associated with population decline among terrestrial birds and mammals globally. Glob Change Biol. 2018;24(10):4521–31.

Newbold T, Hudson LN, Hill SLL, Contu S, Lysenko I, Senior RA, et al. Global effects of land use on local terrestrial biodiversity. Nature. 2015;520(7545):45–50.

Ross AK, Letnic M, Blumstein DT, Moseby KE. Reversing the effects of evolutionary prey naiveté through controlled predator exposure. J Appl Ecol. 2019;56(7):1761–9.

Salo P, Korpimäki E, Banks PB, Nordström M, Dickman CR. Alien predators are more dangerous than native predators to prey populations. Proc Biol Sci. 2007;274(1615):1237–43.

Radford JQ, Woinarski JCZ, Legge S, Baseler M, Bentley J, Burbidge AA, et al. Degrees of population-level susceptibility of Australian terrestrial non-volant mammal species to predation by the introduced red fox (Vulpes vulpes) and feral cat (Felis catus). Wildl Res. 2018;45(7):645–57.

Murphy BP, Woolley L-A, Geyle HM, Legge SM, Palmer R, Dickman CR, et al. Introduced cats (Felis catus) eating a continental fauna: the number of mammals killed in Australia. Biol Cons. 2019;237:28–40.

Clavero M, García-Berthou E. Invasive species are a leading cause of animal extinctions. Trends Ecol Evol. 2005;20(3):110.

Sax DF, Gaines SD, Brown JH. Species invasions exceed extinctions on islands worldwide: a comparative study of plants and birds. Am Nat. 2002;160(6):766–83.

Loehle C, Eschenbach W. Historical bird and terrestrial mammal extinction rates and causes. Divers Distrib. 2012;18(1):84–91.

Greggor AL, Price CJ, Shier DM. Examining the efficacy of anti-predator training for increasing survival in conservation translocations: a systematic review protocol. Environ Evid. 2019;8(1):11.

Jolly CJ, Phillips BL. Rapid evolution in predator-free conservation havens and its effects on endangered species recovery. Conserv Biol. 2021;35(1):383–5.

Tavecchia G, Viedma C, Martínez-Abraín A, Bartolomé M-A, Gómez JA, Oro D. Maximizing re-introduction success: assessing the immediate cost of release in a threatened waterfowl. Biol Cons. 2009;142(12):3005–12.

West R, Letnic M, Blumstein DT, Moseby KE. Predator exposure improves anti-predator responses in a threatened mammal. J Appl Ecol. 2018;55(1):147–56.

Greggor AL, Blumstein DT, Wong BBM, Berger-Tal O. Using animal behavior in conservation management: a series of systematic reviews and maps. Environ Evid. 2019;8(1):23.

Grassel SM, Rachlow JL, Williams CJ. Spatial interactions between sympatric carnivores: asymmetric avoidance of an intraguild predator. Ecol Evol. 2015;5(14):2762–73.

Higdon SD, Diggins CA, Cherry MJ, Ford WM. Activity patterns and temporal predator avoidance of white-tailed deer (Odocoileus virginianus) during the fawning season. J Ethol. 2019;37(3):283–90.

Hébert M, Versace E, Vallortigara G. Inexperienced preys know when to flee or to freeze in front of a threat. Proc Natl Acad Sci. 2019;116(46):22918–20.

Stankowich T, Coss RG. Effects of risk assessment, predator behavior, and habitat on escape behavior in Columbian black-tailed deer. Behav Ecol. 2006;18(2):358–67.

Medill SA, Renard A, Larivière S. Ontogeny of antipredator behaviour in striped skunks (Mephitis mephitis). Ethol Ecol Evol. 2011;23(1):41–8.

Emlen DJ. The evolution of animal weapons. Annu Rev Ecol Evol Syst. 2008;39(1):387–413.

Rummel L, Martínez-Abraín A, Mayol J, Ruiz-Olmo J, Mañas F, Jiménez J, et al. Use of wild–caught individuals as a key factor for success in vertebrate translocations. Anim Biodivers Conserv. 2016;39(2):207–91.

Langridge J, Sordello R, Reyjol Y. Outcomes of wildlife translocations in protected areas: what is the type and extent of existing evidence? A systematic map protocol. Environ Evid. 2020;9(1):16.

Hayward MW, Kerley GIH. Fencing for conservation: Restriction of evolutionary potential or a riposte to threatening processes? Biol Cons. 2009;142(1):1–13.

Legge S, Woinarski JCZ, Burbidge AA, Palmer R, Ringma J, Radford JQ, et al. Havens for threatened Australian mammals: the contributions of fenced areas and offshore islands to the protection of mammal species susceptible to introduced predators. Wildl Res. 2018;45(7):627–44.

Ringma J, Legge S, Woinarski J, Radford J, Wintle B, Bode M. Australia’s mammal fauna requires a strategic and enhanced network of predator-free havens. Nat Ecol Evol. 2018;2(3):410–1.

Evans K, Moore R, Harris S. The social and ecological integration of captive-raised adolescent male African elephants (Loxodonta africana) into a wild population. PLoS ONE. 2013;8(2):e55933.

Pinter-Wollman N, Isbell LA, Hart LA. Assessing translocation outcome: Comparing behavioral and physiological aspects of translocated and resident African elephants (Loxodonta africana). Biol Cons. 2009;142(5):1116–24.

Müller J, Wölfl M, Wölfl S, Müller DWH, Hothorn T, Heurich M. Protected areas shape the spatial distribution of a European lynx population more than 20 years after reintroduction. Biol Cons. 2014;177:210–7.

Muller LI, Murrow JL, Lupardus JL, Clark JD, Yarkovich JG, Stiver WH, et al. Genetic structure in Elk persists after translocation. J Wildl Manag. 2018;82(6):1124–34.

Wei F, Swaisgood R, Hu Y, Nie Y, Yan L, Zhang Z, et al. Progress in the ecology and conservation of giant pandas. Conserv Biol. 2015;29(6):1497–507.

Thalmann S, Peck S, Wise P, Potts JM, Clarke J, Richley J. Translocation of a top-order carnivore: tracking the initial survival, spatial movement, home-range establishment and habitat use of Tasmanian devils on Maria Island. Aust Mammal. 2016;38(1):68–79.

Woinarski JCZ, Burbidge AA, Harrison PL. Ongoing unraveling of a continental fauna: decline and extinction of Australian mammals since European settlement. Proc Natl Acad Sci. 2015;112(15):4531–40.

Morris SD, Brook BW, Moseby KE, Johnson CN. Factors affecting success of conservation translocations of terrestrial vertebrates: a global systematic review. Glob Ecol Conserv. 2021;28:e01630.

Muralidhar A, Moore FL, Easton LJ, Jamieson IG, Seddon PJ, van Heezik Y. Know your enemy? Conservation management causes loss of antipredator behaviour to novel predators in New Zealand robins. Anim Behav. 2019;149:135–42.

Blumstein DT, Daniel JC. The loss of anti-predator behaviour following isolation on islands. Proc R Soc B. 2005;272(1573):1663–8.

Blumstein DT, Daniel JC, Springett BP. A test of the multi-predator hypothesis: rapid loss of antipredator behavior after 130 years of isolation. Ethology. 2004;110(11):919–34.

Jolly CJ, Webb JK, Phillips BL. The perils of paradise: an endangered species conserved on an island loses antipredator behaviours within 13 generations. Biol Lett. 2018;14:6.

Cooper WE, Pyron RA, Garland T. Island tameness: living on islands reduces flight initiation distance. Proc R Soc B. 2014;281(1777):1–7.

Moseby KE, Cameron A, Crisp HA. Can predator avoidance training improve reintroduction outcomes for the greater bilby in arid Australia? Anim Behav. 2012;83(4):1011–21.

Moseby KE, Read JL, Paton DC, Copley P, Hill BM, Crisp HA. Predation determines the outcome of 10 reintroduction attempts in arid South Australia. Biol Conserv. 2011;144(12):2863–72.

Berger-Tal O, Blumstein DT, Carroll S, Fisher RN, Mesnick SL, Owen MA, et al. A systematic survey of the integration of animal behavior into conservation. Conserv Biol. 2016;30(4):744–53.

Tay NE, Fleming PA, Warburton NM, Moseby KE. Predator exposure enhances the escape behaviour of a small marsupial, the burrowing bettong. Anim Behav. 2021;175:45–56.

Blumstein DT, Mari M, Daniel JC, Ardron JG, Griffin AS, Evans CS. Olfactory predator recognition: wallabies may have to learn to be wary. Anim Conserv. 2002;5(2):87–93.

Saxon-Mills EC, Moseby K, Blumstein DT, Letnic M. Prey naïveté and the anti-predator responses of a vulnerable marsupial prey to known and novel predators. Behav Ecol Sociobiol. 2018;72(9):151.

Steindler LA, Blumstein DT, West R, Moseby KE, Letnic M. Exposure to a novel predator induces visual predator recognition by naïve prey. Behav Ecol Sociobiol. 2020;74(8):102.

Bannister H, Brandle R, Moseby K. Antipredator behaviour of a native marsupial is relaxed when mammalian predators are excluded. Wildl Res. 2018;45(8):726–36.

Berger-Tal O, Greggor AL, Macura B, Adams CA, Blumenthal A, Bouskila A, et al. Systematic reviews and maps as tools for applying behavioral ecology to management and policy. Behav Ecol. 2019;30(1):1–8.

Rothstein HR, Sutton AJ, Borenstein M. Publication Bias in Meta-Analysis: Prevention, Assessment and Adjustments. Wiley; 2005.

Collaboration for Environmental Evidence. Guidelines for Systematic Review and Evidence Synthesis in Environmental Management. www.environmentalevidence.org/Documents/Guidelines/Guidelines4.2.pdf; 2013.

Fellows I. wordcloud: Word Clouds. R package version 2.6. 2018.

R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2020.

Foo YZ, O'Dea RE, Koricheva J, Nakagawa S, Lagisz M. A practical guide to question formation, systematic searching and study screening for literature reviews in ecology and evolution. Methods Ecol Evol. 2021.

Smart JM, Burling D. Radiology and the internet: a systematic review of patient information resources. Clin Radiol. 2001;56(11):867–70.

Kohl C, McIntosh EJ, Unger S, Haddaway NR, Kecke S, Schiemann J, et al. Online tools supporting the conduct and reporting of systematic reviews and systematic maps: a case study on CADIMA and review of existing tools. Environ Evid. 2018;7(1):8.

Edwards P, Clarke M, DiGuiseppi C, Pratap S, Roberts I, Wentz R. Identification of randomized controlled trials in systematic reviews: accuracy and reliability of screening records. Stat Med. 2002;21(11):1635–40.

Hedges LV. Distribution theory for Glass’s estimator of effect size and related estimators. J Educ Stat. 1981;6(2):107–28.

Konno K, Livoreil B, Pullin AS. CEECAT: Collaboration for Environmental Evidence Critical Appraisal Tool Version 0.2 (prototype). 2021.

Schneider CA, Rasband WS, Eliceiri KW. NIH Image to ImageJ: 25 years of image analysis. Nat Methods. 2012;9:671–5.

Snijders L, Greggor AL, Hilderink F, Doran C. Effectiveness of animal conditioning interventions in reducing human–wildlife conflict: a systematic map protocol. Environ Evid. 2019;8(1):10.

Nolte DL, Mason JR, Epple G, Aronov E, Campbell DL. Why are predator urines aversive to prey? J Chem Ecol. 1994;20(7):1505–16.

Smith ME, Belk MC. Risk assessment in western mosquitofish (Gambusia affinis): do multiple cues have additive effects? Behav Ecol Sociobiol. 2001;51:101–7.

Griffin AS, Blumstein DT, Evans CS. Training captive-bred or translocated animals to avoid predators. Conserv Biol. 2000;14(5):1317–26.

Edwards MC, Ford C, Hoy JM, FitzGibbon S, Murray PJ. How to train your wildlife: a review of predator avoidance training. Appl Anim Behav Sci. 2021;234:105170.

Bell AM, Hankison SJ, Laskowski KL. The repeatability of behaviour: a meta-analysis. Anim Behav. 2009;77:771–83.

Hantula DA. Editorial: replication and reliability in behavior science and behavior analysis: a call for a conversation. Perspect Behav Sci. 2019;42(1):1–11.

Harrison F. Getting started with meta-analysis. Methods Ecol Evol. 2011;2(1):1–10.

Acknowledgements

The authors thank Terena Solomons for training NDH in searching bibliographic databases and the stakeholders for their feedback on earlier drafts of the review protocol.

Funding

NDH is funded through the Australian Commonwealth Government RTP Scholarship, the Hermon Slade Foundation (HSF21054 to Nicola Mitchell) and the Holsworth Wildlife Research Endowment. The funding bodies played no role in the development of this study.

Author information

Contributions

NDH wrote the draft protocol. All authors conceived the study and contributed substantially to the final manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study does not report on any studies conducted on humans, and exclusively reports on studies conducted on animals by other researchers. The authors rely on the responsibility of the relevant researchers to have sought the approval of an appropriate ethics committee. Studies that fail to report ethics approval (or fail to disclose this information once authors have been contacted if required) will be excluded from the final review.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

List of benchmark and test scoping articles.

Additional file 2.

CEE critical appraisal tool sheet.

Additional file 3.

Customised data collection sheet.

Additional file 4.

ROSES checklist.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Harrison, N.D., Phillips, B.L., Hemmi, J.M. et al. Identifying the most effective behavioural assays and predator cues for quantifying anti-predator responses in mammals: a systematic review protocol. Environ Evid 10, 38 (2021). https://doi.org/10.1186/s13750-021-00253-9

DOI: https://doi.org/10.1186/s13750-021-00253-9