Abstract

This review addresses human capacity for movement in the context of extreme loading and, with it, the combined effects of metabolic, biomechanical and gravitational stress on the human body. This topic ranges from extreme duration, as in ultra-endurance competitions (e.g. adventure racing and transcontinental races) and expeditions (e.g. polar crossings), to the more gravitationally limited load carriage (e.g. in the military context). Juxtaposed with these circumstances is the extreme metabolic and mechanical unloading associated with space travel, prolonged bedrest and a sedentary lifestyle, which may be at least as problematic and is therefore included as a reference, e.g. when considering exposure, dangers and (mal)adaptations. As in the other reviews in this series, we describe the nature of the stress and its consequences; illustrate relevant regulations, including why and how they are set; present the pros and cons of self- versus prescribed acute and chronic exposure; describe humans’ (mal)adaptations; and finally suggest future directions for practice and research. In summary, we describe adaptation patterns that are often U- or J-shaped, and we show that, over time, minimal or no load carriage decreases global load carrying capacity and eventually leads to severe adverse effects and manifest disease under loads that are minimal in absolute terms but high in relative terms. We suggest that load carrying capacity, and the mechanisms that lead to its adverse effects, may be studied advantageously from this perspective. With improved access to insightful and portable technologies, there are exciting possibilities to explore these questions in this context.


Background

This review within the series Moving in Extreme Environments addresses human capacity for movement in the context of extreme loading and, with it, the combined effects of metabolic, biomechanical and gravitational stress on the human body. This topic ranges from extreme duration, as in ultra-endurance competitions (e.g. adventure racing and transcontinental races) and expeditions (e.g. polar crossings), to the more gravitationally limited load carriage (e.g. in the military context). Because these circumstances overlap with each other and with other reviews in this series, we discuss gravitational and energetic load within the ultra-endurance, expedition and occupational settings, leaving detailed discussion of the effects of related environmental factors on human tolerance and performance to those reviews. The exception is cold, whose effects are not discussed elsewhere in the series. Juxtaposed with these circumstances is the extreme metabolic and mechanical unloading associated with space travel, prolonged bedrest and a sedentary lifestyle, which may be at least as problematic and is therefore included as a reference (e.g. when considering exposure, dangers and (mal)adaptations).

Extreme loading pertains to the physical demands of carrying or towing mass, including, or even exclusively, one’s own body, as far or as quickly as possible. The major resistive force is nearly always gravitational; hence the major stress is weight (in newtons, the product of mass and gravitational acceleration). Such stress affects all physiological systems. While the term ultra-endurance can describe exercise lasting more than 4 h [1–3], our focus is on the more extreme end of this continuum: exercise lasting many hours per day over multiple consecutive days (e.g. >40-day Arctic expeditions [4, 5] or military training or operations [6–12]) or almost continuously for several days (e.g. adventure racing [13, 14]). Ultra-endurance competition might appear to be a relatively recent phenomenon: for example, the first adventure race (Raid Gauloises) was held in 1989, the first official 100-mile Western States Endurance Run in the United States of America in 1977, the first Hawaii Ironman in 1978 and, ~50 years earlier, the American Bunion Derby transcontinental foot races in 1928 and 1929. The modern cycling Grand Tour stage races of Europe [i.e. Tour de France (first raced in 1903), Giro d’Italia (1909) and Vuelta a España (1935)] have a longer history of challenging human capacities. All of these were preceded by the first long-distance cycle race in 1869 (from L’Arc de Triomphe in Paris to the Cathedral in Rouen). Yet load carriage in the military context, and its impact on human capabilities, has been an issue for many centuries (see [15, 16] and Fig. 1). In addition, some modern ultra-endurance events and expeditions relive historical occupational tasks (esp. goods delivery before engine-based transport; e.g. the Iditarod race [17]), and a form of ultra-endurance loading is present in centuries-old spiritual pilgrimages as well as in the hunter–gatherer societies of past millennia.
Indeed, endurance loading has shaped our genome and hence several important distinguishing features of our anatomy and physiology [18]. Perhaps the earliest account of the consequences of extreme physiological loading is that of Pheidippides, a hero of ancient Greece who reportedly collapsed and died after relaying the message of victory over Persia in the Battle of Marathon in 490 BC. Thus, the question of how the human body copes with and responds to extreme feats of endurance has ancient origins and is still being considered and challenged today.

Fig. 1 Historical representation of means and ranges of loads carried by soldiers (reproduced with permission from [16])

The purpose of this review, as with the others in this series, is to (1) describe the nature of the stress (i.e. extreme loading) and the associated dangers/consequences; (2) illustrate what, if any, regulations are in place as well as why and how they are set; (3) present the pros and cons for self versus prescribed acute and chronic exposure; (4) describe humans’ adaptation and/or maladaptation; and finally (5) suggest future directions for practice and research in this area.

Review

What is the stressor/danger for human movement?

Common to all the activities covered in this review is the requirement to carry or tow a load; at minimum, individuals simply carry themselves, metabolically and mechanically, against gravity, which can involve several vertical kilometres of ascent and descent. Additional load can be carried in a backpack and webbing (ranging from a hydration system or survival equipment weighing <5 kg to large loads weighing >40 kg), towed in a sled (e.g. 120 kg [4] or 222 kg [5]), carried by hand (e.g. weapons or tools), worn as protection from environmental conditions or hostile elements (e.g. body armour, ~10 kg [19]) or some combination of these. The obvious consequence of this added load is the extra effort and physiological/physical cost (e.g. energetic cost, stress fractures, eccentric muscle damage) of carrying or towing it, which is further affected by the environmental conditions in which the work is undertaken. These issues have been researched over several decades (e.g. [15, 20–22]) and reviewed accordingly [16, 19, 23–26]. Detailed discussion is beyond the scope of this review; in brief, carrying more weight has a known additional cost (e.g. [22, 27, 28]), which is lessened by carrying the load closer to the centre of gravity (e.g. [23, 29]), thereby also lessening the additional perceived exertion [30]. The increased energy expenditure and physiological strain lower work capacity, diminish capabilities (although not uniformly across all physical tasks [31]), increase dietary requirements, increase heat stress (particularly if protective clothing is worn; see [32]), reduce mobility and potentially increase the risk of harm, ranging from musculoskeletal strains, to injury resulting from fatigue-related reductions in cognitive performance, through to fatality [e.g. carried loads of 27–41 kg were implicated in many drownings during the D-Day landings at Omaha Beach in World War II (see [15, 16])].
Yet leaving critical items behind to reduce the carried load can be just as fatal, so a trade-off between carrying essentials (e.g. food, clothing and weaponry) and moving quickly and effectively is fundamental in all of the settings discussed here: sport, occupation and military.

Illness and injury are obvious dangers of extreme loading. Fordham and colleagues found that 73 % of the 223 adventure racing athletes they studied reported musculoskeletal problems requiring them to stop training for at least 1 day, reduce training, take medication or seek medical aid. We found a similarly high incidence of injury and illness: 38 of 48 athletes (79 %) reported a total of 49 musculoskeletal injuries during an adventure race [33]. Also prevalent in this 4- to 5-day near-continuous event were skin wounds and infections (43/49), upper respiratory illness (28/49) and gastrointestinal (GI) complaints (8/49; a further five four-member teams withdrew due to GI complaints) [33]. One seemingly minor injury issue common to all extreme loading settings is repetitive rubbing, on the locomotive limbs (usually the feet and/or groin/thighs) and against items of carried load, which can develop into blistering and/or overuse injuries. Blistering and tissue degeneration can also result from intense or sustained exposure to heat, cold (see below) or water. While such injuries may have no more than a race-ending consequence in sport, in other settings, such as unsupported polar crossings or combat, the reduced capability and mobility and/or the elevated risk of infection can be life-threatening. Optimising equipment (e.g. footwear, pack, body armour), reducing load and improving load distribution are well-recognised ways of reducing the incidence of injury [23], but they are not always possible.

One environmental extreme addressed briefly here is cold air exposure, because several features of prolonged exercise increase the risk of hypothermia and of cold-related tissue injuries such as frostnip and frostbite. For example, polar expeditioning, cross-country ski racing, adventure racing and some military settings involve exposure ranging from moderate dry or wet cold stress (e.g. in adventure racing [13]) to extremely cold air (as low as −45 °C [5]), with only modest rates of heat production (see below). Cold stress is intensified by wind chill (see [34]), while some physical and physiological effects of cold stress are amplified by factors such as hypobaric hypoxia (e.g. at the 3000 m elevation of the Polar plateau [5]), sleep deprivation and sustained energy deficit [12]. Prolonged exertion can impair cold tolerance by delaying the onset of shivering [10], impairing the vasoconstrictive capacity of the exercised limbs [35], impairing thermogenic capacity [36, 37] and impairing dexterity and strength by at least 50 %, even without core cooling [37, 38]. Yet humans’ behavioural drive to minimise cold exposure is very strong [37], so their actual risk depends on whether their situation allows them to act on that drive. Interestingly, whereas humans show strong adaptive responses to many aspects of prolonged loading (see below), little meaningful adaptation to cold exposure develops that would increase tolerance at the whole-body [39, 40] or local [41] level, despite recent studies showing that some browning of adipose tissue, which would increase thermogenic capacity, can occur with repeated cold exposure [42, 43]. Overall, the potential risks for human movement in cold air range from reduced strength and manual dexterity, to loss of mobility and function as a consequence of frostbite, to hypothermia-induced coma and death if the cold exposure is not interrupted.

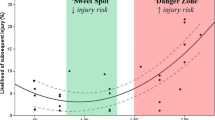

In summary, all physiological systems are affected by the prolonged metabolic and mechanical demands of sustained loading, whether in sport, expeditioning or military settings. The consequences of such stress range from little more than a nuisance to life-threatening. These dangers should also be contextualised against those of the extreme unloading caused by sedentariness, whether arising from bedrest, fear-avoidance behaviour due to chronic pain disorders or personal preference. Figure 2 therefore summarises the consequences at both extremes of the loading spectrum, by physiological system (different panels) and across exposure time. Within a few hours of ceasing movement, blood glucose regulation and endothelial function become impaired [44–46]. By 24 h, insulin desensitisation and loss of plasma volume also become evident. Even simply reducing normal daily activity (steps) is enough to impair metabolic control and aerobic fitness [47]. These collective effects can ultimately be more debilitating, and make ‘physical inactivity’ the fourth largest contributor to early mortality in the world today [48]. The dangers of sedentary behaviour are thus increasingly recognised as both important and distinct from those of insufficient exercise, based on emerging evidence of its rapid-onset pathophysiological effects [46, 49] and on epidemiological evidence [50]. Importantly, unlike in the high-load scenarios described above, these effects are initially insidious, and the behaviour that causes them appeals to humans’ desire for comfort. Finally, the two extremes of loading can also be linked through loading-induced injury, which can cause immobilisation acutely (e.g. through fracture, sprain or strain) or chronically during or after many years of extreme loading (e.g. osteoarthritis). Thus, one danger of acute or chronic extreme loading is consequent chronic unloading.

Fig. 2 Illustration of adverse effects of the extremes of physical loading as a function of exposure duration. Phys physical, physiol physiological, MAP mean arterial blood pressure, BRS baroreflex sensitivity, PaCO2 partial pressure of arterial carbon dioxide, SCD sudden cardiac death, CAD coronary artery disease, MI myocardial infarction, CBF cerebral blood flow, PAD peripheral arterial disease, TG triglycerides

What regulations are established, and why/how are they set?

Fatalities in the occupational or recreational setting often prompt reviews, discussion and/or an inquiry, which then lead to new regulations and/or practices to minimise the overt risks associated with extreme high-loading settings.

Ultra-endurance competition

The death of Nigel Aylott from a falling boulder dislodged by a fellow competitor in the 2004 Primal Quest adventure race highlights the risks and responsibilities that both racers and race organisers need to consider in conditions made extreme by both physiological (e.g. sleep deprivation, prolonged and continuous competitive exercise stress) and environmental factors inherent to such events (see [51]). Adventure races that are part of the Adventure Racing World Series have a set of Rules of Competition and a Mandatory Equipment list for safety purposes [52], e.g. team members must always be within 50 m of each other, each competitor must carry their own survival equipment and each team a communication device for emergencies. Additional items may be added by race organisers where they are specific to the location, conditions or laws of the host country. Technical competency requirements are also common (e.g. white-water or rope skills), and minimum experience standards may also be applied. Thus, the industry has provided its own regulatory standard, which is aligned with (and ultimately legally bound by) Occupational Health and Safety standards of the host country. Further, organisations such as the United States Adventure Racing Association have been established to guide and assist race directors and committees in conducting fun, safe and fair events [53].

For events such as the Marathon des Sables (~6 marathons run in the desert over 6 days), race rules stipulate that competitors who cannot keep fluids down will be given fluid intravenously [54]. Interestingly, this ‘regulation’ comes with a time penalty, which has the potential to create a negative perception of appropriate and necessary treatment. Entry requires medical certification of one’s ability to participate and a resting electrocardiogram report, both presented to the event’s medical team. Other requirements include ceasing forward travel during sandstorms.

Conditions during cold (Arctic Circle Race)

In popular cross-country skiing competitions, temperatures below −25 °C on the major part of the course lead to race cancellation or delay, and at temperatures between −15 and −25 °C, caution and specific information for participants on cold-weather precautions are mandatory (see [55]). These temperatures are not uncommon in the Arctic Circle Race in Greenland, and wind chill may make race conditions difficult, especially when temperatures are on the cusp of the −25 °C postponement threshold. Race guidelines suggest that competitors should eat and drink whenever possible and every hour throughout the race. Such recommendations are intended to meet not only the increased energy and water-turnover requirements of the exercise (see below) but also those of thermogenesis during exercise with cold stress [37].
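To make these thresholds concrete, the following Python sketch classifies race conditions from air temperature using the −25 °C and −15 °C limits quoted above and reports a wind chill temperature alongside. It is a sketch under stated assumptions, not an implementation of any race rule: the function names are hypothetical, the thresholds are applied to air temperature only (as the rule is stated above), and wind chill is computed with the 2001 North American wind chill index (used by Environment Canada and the US National Weather Service), which is not necessarily the index used by race organisers or in [34].

```python
"""Illustrative sketch only: race thresholds as quoted in the text, applied to air temperature."""


def wind_chill_c(air_temp_c: float, wind_kmh: float) -> float:
    """Wind chill temperature (°C) from the 2001 North American wind chill index.

    Defined for air temperatures <= 10 °C and wind speeds >= 4.8 km/h
    (measured at 10 m height); outside that range the air temperature
    is returned unchanged.
    """
    if air_temp_c > 10.0 or wind_kmh < 4.8:
        return air_temp_c
    v016 = wind_kmh ** 0.16
    return 13.12 + 0.6215 * air_temp_c - 11.37 * v016 + 0.3965 * air_temp_c * v016


def race_status(air_temp_c: float) -> str:
    """Hypothetical encoding of the cross-country race thresholds quoted above."""
    if air_temp_c < -25.0:
        return "cancel_or_delay"
    if air_temp_c <= -15.0:
        return "caution_mandatory_cold_info"
    return "normal"


if __name__ == "__main__":
    # Hypothetical (air temperature, wind speed) readings
    for temp_c, wind_kmh in [(-10.0, 10.0), (-20.0, 20.0), (-24.0, 30.0), (-27.0, 5.0)]:
        print(f"{temp_c:6.1f} °C, {wind_kmh:4.1f} km/h -> {race_status(temp_c)}, "
              f"wind chill {wind_chill_c(temp_c, wind_kmh):.1f} °C")
```

With these inputs, −24 °C with a 30 km/h wind falls in the caution band by air temperature, yet its wind chill is roughly −38 °C, which illustrates why conditions near the −25 °C postponement threshold can be harsher than the air temperature alone suggests.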

To participate in this and other popular cross-country ski races, competitors must abide by the rules and regulations of the International Ski Federation (FIS [56]) and hold a racer’s licence. Interestingly, most of the requirements for attaining an FIS racing licence, and the rules that determine appropriate conduct as a licence holder, are administrative and logistical (e.g. arriving at the correct time, overtaking protocol), while responsibility for competitors’ health is deferred to National Associations. Thus, standardised and transparent health criteria for participation are not always evident.

In another extreme cold event, the Iditarod race (a 1000-mile sled dog race across Alaska [17]), competitors qualify via the Muster Assessment form, which is completed by judges and officials from other similar events. The assessment form considers ‘skills’ such as general attitude; ability to compete; physical stamina; cold weather preparedness and tolerance; compliance with race rules and policies; sleep deprivation tolerance; equipment selection; mental perseverance; organisation and efficiency; wilderness survival skills; and how an applicant treats their dogs. While this list comprehensively covers the potential stressors and behaviours that may be relevant to performance and survival, the ‘tick the box’ nature of the form again seems relatively subjective.

Overall, both the adventure racing and the expedition/Nordic racing regulations seem light on rigour. However, there is perhaps less need to regulate these types of events, since they typically attract individuals who are looking to challenge themselves and have outdoor/wilderness experience, and who therefore knowingly accept the responsibility and potential consequences. Yet some duty of care should be expected of event organisers regardless of competitors’ experience and willingness to engage in such extreme events, as illustrated by Nigel Aylott’s death during the 2004 Primal Quest. Further, the lure of prize money (US$100,000 for winning that event) perhaps jeopardises racers’ safety to a greater extent than do the effects of sleep deprivation and environmental stressors. Ordinarily, little such lure exists in ultra-endurance events, and it is both impossible and counter to the culture to remove all risks, so athletes who declare themselves experienced and aware of the disclosed risks (and agree to them via signed informed consent) must be expected to accept at least some responsibility for mishaps.

Military guidelines

The military has been a key player in setting industry standards for load carriage, especially in the heat. Guidelines have been set for the work-to-rest ratio and the amount of fluid to be consumed. These are determined by the exogenous thermal stress (typically assessed via the wet-bulb globe temperature index), the extent of physical exertion or load carried, and other factors (esp. acclimatisation and protective clothing). The relevant research is reviewed extensively elsewhere (e.g. [57–59]), as are guidelines for operational procedures for the acute and chronic protection of military personnel (e.g. [60–63]).
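For readers unfamiliar with the index, the sketch below computes the wet-bulb globe temperature (WBGT) from its component temperatures using the standard weightings (as given, for example, in ISO 7243). It deliberately stops there: the work-to-rest and fluid-intake tables that translate WBGT into prescriptions are found in the cited guidelines and are not reproduced, so no thresholds are assumed. The function names are illustrative.

```python
def wbgt_outdoor(natural_wet_bulb_c: float, globe_c: float, dry_bulb_c: float) -> float:
    """WBGT (°C) with solar load: 0.7*Tnwb + 0.2*Tg + 0.1*Tdb."""
    return 0.7 * natural_wet_bulb_c + 0.2 * globe_c + 0.1 * dry_bulb_c


def wbgt_indoor(natural_wet_bulb_c: float, globe_c: float) -> float:
    """WBGT (°C) without solar load: 0.7*Tnwb + 0.3*Tg."""
    return 0.7 * natural_wet_bulb_c + 0.3 * globe_c


if __name__ == "__main__":
    # Hypothetical readings: natural wet bulb 25 °C, black globe 40 °C, dry bulb 30 °C
    print(round(wbgt_outdoor(25.0, 40.0, 30.0), 1))  # 28.5
```

The natural wet-bulb term carries 70 % of the weight, reflecting how strongly humidity limits evaporative heat loss, which is why a humid day can yield a higher WBGT than a hotter but drier one.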

Sedentary activity, avoidance behaviour and bedrest

Chronic underloading is a danger with relatively high costs for quality of life, morbidity and mortality, and it affects many more people in modern societies than do the settings mentioned above. It is also important to remember that such dangers are not cancelled out by regular exercise [50]. While exercise is recommended within the public health guidelines of many countries, and is mandated in the educational curricula of some, regulations generally do not exist either to reduce sedentary behaviour or to require asymptomatic people to undertake moderate-to-vigorous physical activity, including exercise [64]. However, for chronic pain disorders (e.g. fibromyalgia, chronic low back pain) and in the rehabilitation phase after musculoskeletal injuries, treatment standards are increasingly being established by national and international medical societies to prevent secondary disabilities or ongoing chronification caused by inappropriate, prolonged immobilisation or unconscious protective behaviour [65, 66]. Similarly, cardiac rehabilitation guidelines now recommend exercise training rather than bedrest, with exercise-based rehabilitation shown to reduce total mortality, cardiac mortality and hospital readmissions [67]. Ironically, this treatment strategy for cardiac rehabilitation is also a primary prevention strategy for the original disease.

Pros and cons of self vs prescribed exposure

Multiday adventure racing perhaps represents the upper limit of acute sustained loading, with competitors exercising nearly continuously for 3–10 days on very limited sleep (e.g. 1+ h/day). While fellow team members can certainly exert external pressure to continue exercising (often minimised by selecting team members of similar ability), such events provide a model for examining the upper limit of ‘self-prescribed’ exercise. The evidence to date indicates that homeostatic control of key regulated variables such as body core temperature and blood glucose is well maintained, despite a wide range of exercise intensities and ambient temperatures and a large energy deficit [13, 68]. Thus, the prolonged and sustained nature of this acute exposure, along with the effects of sleep deprivation in and of itself [69–71], appears to be enough to counter athletes’ strong intrinsic motivation, such that pace selection across the whole race remains appropriate to homeostatic requirements. Regulations or restrictions therefore do not seem necessary, as physiological feedback mechanisms, together with the altered perception of exertion and reduced motivation that accompany sleep deprivation [69, 71], appear capable of protecting individuals from homeostatic failure. Recently, reduced central drive has been shown to occur during prolonged ultra-endurance exercise (a 110-km run [72]), providing further evidence for the ‘self’-preservation of homeostasis in this setting. Conversely, the high prevalence of non-steroidal anti-inflammatory drug (NSAID) and analgesic use among these ultra-endurance athletes [33, 73], often alongside stimulants (e.g. caffeine) taken during competition to ward off the effects of sleep deprivation, may affect this homeostatic control.
The net effect of such acute and chronic drug use on this type of performance and on long-term health is unclear and requires further research [73].

Interestingly, the self-selected sustainable pace during these types of events (~40 % VO2 peak [13, 14]) is very similar to the work intensity maintained during multiday military operations (30–40 % VO2 max [74–77]) and to that predicted from laboratory-based work with varying carried loads for both males and females (~45 % VO2 max) [78]. Because these are relative measures of aerobic power, the same relative intensity corresponds to different absolute work rates in different individuals; achieving optimal outcomes, whether in sport, military or other ultra-endurance tasks, therefore requires distributing the workload within the group so as to maximise the group’s effective velocity. Indeed, towing and load sharing are common practice in adventure racing. However, the range of absolute aerobic capacities within a group may become an issue when the prescribed parameters of the task are not flexible, e.g. when load sharing is not permitted or prudent. Historically, this is a classic scenario in military training operations, where individuals are exposed to external (and internal; e.g. squad selection criteria) pressures to continue exercising and perform as instructed.
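The point about relative versus absolute aerobic power can be illustrated numerically. The Python sketch below is an assumption-laden illustration, not the method of [78]: it swaps in the Pandolf et al. (1977) load-carriage equation to estimate metabolic rate, converts watts to oxygen uptake assuming ~20.1 kJ per litre of O2, and uses two hypothetical team members whose body masses, VO2 max values, shared load, speed and gradient are invented for the example. It compares splitting the shared load equally with splitting it in proportion to each member’s absolute VO2 max.

```python
"""Illustrative sketch: load sharing and relative intensity (% VO2max) in a team.

Assumptions (not from the review): metabolic rate from the Pandolf et al. (1977)
load-carriage equation, ~20.1 kJ per litre of O2, and invented team data.
"""
from dataclasses import dataclass

KJ_PER_LITRE_O2 = 20.1  # assumed energy equivalent of oxygen (~4.8 kcal/L)


@dataclass
class Member:
    name: str
    body_kg: float
    vo2max_ml_kg_min: float


def pandolf_watts(body_kg: float, load_kg: float, speed_ms: float,
                  grade_pct: float = 0.0, terrain: float = 1.0) -> float:
    """Metabolic rate (W) for loaded walking, Pandolf et al. (1977).

    M = 1.5W + 2.0(W + L)(L/W)^2 + eta(W + L)(1.5V^2 + 0.35 V G)
    with W body mass (kg), L load (kg), V speed (m/s), G grade (%), eta terrain factor.
    """
    total_kg = body_kg + load_kg
    return (1.5 * body_kg
            + 2.0 * total_kg * (load_kg / body_kg) ** 2
            + terrain * total_kg * (1.5 * speed_ms ** 2 + 0.35 * speed_ms * grade_pct))


def percent_vo2max(member: Member, load_kg: float, speed_ms: float,
                   grade_pct: float = 0.0) -> float:
    """Predicted oxygen uptake as a percentage of the member's VO2max."""
    watts = pandolf_watts(member.body_kg, load_kg, speed_ms, grade_pct)
    vo2_l_min = watts * 60.0 / (KJ_PER_LITRE_O2 * 1000.0)  # J/min -> L O2/min
    vo2_ml_kg_min = vo2_l_min * 1000.0 / member.body_kg
    return 100.0 * vo2_ml_kg_min / member.vo2max_ml_kg_min


def team_peak(members, loads_kg, speed_ms: float, grade_pct: float) -> float:
    """Highest relative intensity in the team, i.e. the member who limits group pace."""
    return max(percent_vo2max(m, l, speed_ms, grade_pct)
               for m, l in zip(members, loads_kg))


if __name__ == "__main__":
    team = [Member("A", 80.0, 60.0), Member("B", 60.0, 40.0)]  # hypothetical
    shared_kg, speed_ms, grade_pct = 40.0, 1.2, 5.0             # hypothetical

    equal = [shared_kg / len(team)] * len(team)
    # Split in proportion to absolute VO2max (mL/min = body mass x relative VO2max)
    capacity = [m.body_kg * m.vo2max_ml_kg_min for m in team]
    proportional = [shared_kg * c / sum(capacity) for c in capacity]

    for label, loads in [("equal split", equal), ("split by absolute VO2max", proportional)]:
        pcts = [percent_vo2max(m, l, speed_ms, grade_pct) for m, l in zip(team, loads)]
        print(f"{label}: " + ", ".join(f"{m.name} {p:.1f} %" for m, p in zip(team, pcts))
              + f"; team peak {team_peak(team, loads, speed_ms, grade_pct):.1f} %")
```

With these made-up inputs, the less aerobically fit member works at roughly 56 % VO2 max under the equal split but roughly 51 % under the proportional split, so the intensity that limits the group’s pace falls. Both values sit above the ~40 % described for multiday events, which simply reflects the arbitrary speed and gradient chosen.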

The ‘cons’ of self-prescribed acute exposure seem more relevant in shorter exposures, where strong intrinsic motivation has the potential to override physiological feedback. Indeed, the first 12 h of an adventure race are associated with more intense stress, in that competitors’ exercise pace far exceeds what is sustainable for the whole race [13, 14], perhaps reflecting a perception that giving up ground to other competitors early will impair the overall outcome, even though the finish is some days away. As such, even among elite adventure racing athletes, the purely ‘self-prescribed’ pace in these early stages is somewhat influenced by other competitors and/or other external factors (e.g. dark zone regulations, whereby night travel is prohibited on some waterways). An unresolved question, to our knowledge, is whether this asymmetric pacing is optimal in very prolonged endurance activity with or without substantial load carriage. Events such as Ironman triathlon, single-day multisport events (e.g. New Zealand’s Coast to Coast race, >12 h) and multiday stage events (e.g. the cycling Grand Tours) involve much higher intensities, typically around the anaerobic threshold (e.g. ~80 % [79–82]). It is in shorter periods like these that behaviour can compromise the effectiveness of physiological negative feedback loops and hence homeostasis. Indeed, hypohydration and hyponatraemia have been reported during this type of ultra-endurance exercise [83] but are rare in longer events [13, 84–88], as is hypoglycaemia, except perhaps during arm-dependent ultra-endurance exercise [68, 89]. Nevertheless, regardless of how motivated an individual is, the centralised control of homeostasis [90–92] will eventually prioritise survival if an organ’s nutrient or metabolite status is compromised (e.g. via fainting/collapse). The issue is how much strain accrues on the way to that endpoint (e.g. in body core temperature, electrolyte content, endotoxic load and musculoskeletal trauma) and whether sufficient resources are available to recover homeostasis in any given environment.

Back at the other extreme, in the context of underloading caused by a sedentary lifestyle, self-prescribed exposure is clearly a global disaster, and one that is worsening as labour-saving devices and procedures develop further. While the benefits of regular physical activity are widely acknowledged, including by people whose activity levels do not meet public health guidelines, the population is largely unaware of the differential effects of exercise vs. inactivity. As mentioned above, regular exercise does not cancel out the effects of sedentary behaviour [50], and this becomes more relevant in a built environment that seeks to reduce labour and is not conducive to activity (e.g. replacement of stairs with escalators, remote-controlled devices), removing opportunities for brief periods of activity/loading that can have positive effects on health [93]. Thus, both social and biological factors contribute to this epidemic of sedentary behaviour in the global population. This is why the biopsychosocial model has become a central strategy for the physical and mental behavioural treatment of patients with chronic, geriatric and mental disorders in occupational, rehabilitation and pain medicine [94].

What are the acute and adaptive and/or maladaptive responses to extreme loading?

Musculoskeletal

Depending on the nature of exposure, ultra-loading events may endanger the musculoskeletal system in different locations and ways. Because ultra-endurance races generally involve covering long distances on foot or by non-motorised vehicles, the lower extremities are the most heavily loaded parts of the human locomotor system. Until this century, little was known about the consequences of the ongoing biomechanical burden of ultra-endurance events on the bones, joints and soft tissues of the feet and legs. Even now, most investigations of ultra-endurance events are limited to field studies of single events (adventure races, marathons, triathlons, cycling and ski races, etc.) by relatively few researchers, relying on laboratory-based analyses, biomechanical measurements and non-criterion anthropometric methods [95]. The diagnostic procedure of choice for endurance-related overuse injuries is magnetic resonance imaging (MRI) [96, 97], which is logistically challenging to implement in the field. Consequently, direct visualisation and analysis of biomechanical overuse reactions of the musculoskeletal tissues to ultra-endurance activity had not been investigated systematically until very recently. In 2009, the first (and still only) MRI field study was conducted in athletes completing a multistage ultra-endurance running event [the TransEurope FootRace project (TEFR project)]. While following a large cohort of ultra-runners (n = 44) on their way across Europe (~4500 km over more than 64 days), a mobile MRI unit was used to obtain specific MRI data on overuse injuries [98]. The results of the TEFR project gave new insights into the adaptive capacity and maladaptive responses of lower extremity tissues to ultra-running loads.
Key findings from this project illustrated how ultra-running affects the joints and cartilage, providing important objective data for the debate on whether it increases the risk of arthrosis in the hip, knee or ankle joints [99, 100], and on the circumstances leading to stress fractures.

The impact of prolonged repetitive stress on bone health is usually inferred from general rules and formulated propositions, notably Wolff’s law [101]. Modern theories of bone remodelling predict the functional adaptation of bone [102, 103], with its resilience to biomechanical impact depending on several individual factors, including age, genetic background, preparation time (specific training), hormonal status, sex, locomotor technique, peak load and location [104]. However, much less is known about joint cartilage and its relationship with mechanical demand and biological adaptation. Serial quantitative biochemical MRI of the cartilage of the hindfoot, ankle and knee joints, undertaken as part of the TEFR project, contradicted the hypothesis (and earlier reports) that ongoing ultra-endurance running is harmful to healthy lower extremity joints in the absence of obesity, proprioceptive deficit, poor muscle tone or malalignment [105, 106]. On the contrary, the results indicated for the first time that normal cartilage matrix can partially regenerate under an ongoing multistage ultra-marathon burden in the ankle and hindfoot joints [98]. Thus, running generally appears to be joint protective [107, 108], and the distance at which running might become dangerous for joint tissues may be much greater than previously predicted.

The main reason for withdrawal from ultra-endurance competitions is overuse injury of the soft tissues of the legs, mainly the tendons, muscles and fascia, summarised as the musculotendinous and myofascial system. Running-specific terms such as shin splints [109] and runner’s knee [110] are established for common overuse syndromes in endurance running [111, 112], and their underlying pathophysiology is generally considered clarified. Mobile MRI of the legs of TEFR project athletes showed that overuse injuries in ultra-running are mainly inflammatory processes of the intermuscular fascia, beginning in one part of the leg. As detailed TEFR project images showed, the so-called shin-splint syndrome is mostly associated not with inflammation of the periosteum, as is commonly assumed, but rather with myofascial inflammation of the extensors of the lower leg (see Fig. 3).

Fig. 3 Highly water-sensitive MRI of the left lower leg (TIRM: turbo inversion recovery magnitude): severe “shin splint” leading to premature withdrawal from the TEFR (47 years, male, stage 5 of TEFR, after 261 km of running). Thick arrow: panniculitis, epifasciitis; thin arrow: myofasciitis and intermuscular fasciitis (extensors of the lower leg); * inert cortical bone (tibia) without any periosteal bone reaction

These processes often spread along intermuscular fascial guide rails and, because a runner with pain in one leg tends to run asymmetrically, lead to overuse problems in the same tissues of the contralateral leg. Pain-related cessation of running then becomes more likely. Figure 4 shows an example of such myofascial overuse problems in the upper legs of an experienced ultra-athlete from the TEFR. Although myofascial and musculotendinous overuse injuries often lead ultra-endurance athletes to withdraw from a race, the pictured case and many others from the TEFR show that athletes can mostly run through them without further tissue damage [98]. Nevertheless, there is likely a limit to the inflammatory burden these tissues can tolerate, and a functional compartment syndrome [113], as the potential endpoint of such processes, must be taken seriously: continued, unreduced loading may lead to severe tissue necrosis and permanent damage [114]. Adequate arterial and venous blood circulation is the basic prerequisite for withstanding ultra-endurance loads without further tissue damage, and circulation is limited not only by physical stress but also by environmental conditions [115]. As a phylogenetic exception, the human foot appears highly resistant to mechanical impact, even at ultra-endurance loads: relevant injuries are seldom observed and, when they are, occur mainly in maladapted and untrained individuals [116–118].

Fig. 4 Water-sensitive MRI of the upper legs (PDw: proton density weighting): muscle lesions and myofascial inflammation in the upper legs (56 years, male, stage 21 of TEFR, after 1521 km of running). Thick arrow: muscle bundle rupture and myositis (M. quadriceps, vastus intermedius); thin arrow: neurovascular bundle; * panniculitis, fasciitis; ** intermuscular fasciitis. Mq M. quadriceps, -vl vastus lateralis, -vi vastus intermedius, -vm vastus medialis, Mam M. adductor magnus, Msa M. sartorius, Mgr M. gracilis, Msm M. semimembranosus, Mst M. semitendinosus, Mbf M. biceps femoris, -cl caput longum, -cb caput breve

Extrapolating these TEFR observations of musculoskeletal (mal)adaptations to other recently studied ultra-endurance events with extreme lower-limb loading (e.g. adventure racing and mountain ultra-marathons such as the Tor des Géants) seems reasonable and is relevant in two respects. First, such changes in the musculoskeletal system presumably contribute neural signals for pace selection [119]. Second, fatigue in such events appears to have a strong central component that develops relatively early and thus helps protect the musculoskeletal system. Evidence for such protection includes (i) direct measurements of neuromuscular fatigue before, during and after the Tor des Géants [120]; (ii) findings of equivalent losses of strength and strength endurance in the upper and lower limbs across an adventure race, even though the lower limbs are used most heavily [121]; and (iii) the reduction in these functional capacities being much smaller than the reduction in the exercise intensity of racing itself [121].

Neuroendocrine

Desensitisation to, or depletion of, stress-related hormones, humoral factors and neurotransmitters appears to have a role in the ‘selection’ of intensity during ultra-endurance exercise [92]. Research on prolonged, multi-day military training indicates that chronic elevation of circulating noradrenaline may lead to desensitisation of the sympathetic response [7, 8, 77, 122, 123], which has even been observed within a single bout of exercise (36–135 min at 5–10 % below the anaerobic threshold [124]). Consistent with this, heart rate at submaximal exercise intensity is lower despite a higher plasma noradrenaline concentration following a 24-h adventure race simulation [125, 126]. The lower heart rate may therefore reflect desensitisation, specifically of cardiac muscle, acting as a protective mechanism.

Cardiovascular

On the other hand, cardiac dysfunction and ‘damage’ following ultra-endurance exercise have been reported repeatedly (reviewed in [127]). The adaptive desensitisation may reduce pulse pressure and the frequency and force of ventricular contractions, temporarily reducing work capacity and aiding homeostasis, whereas chronically, prolonged and repeated myocardial loading is associated with functional and structural (mal)adaptations. Specifically, functional changes appear mostly reversible after 1 or 2 weeks of recovery [128, 129], whereas structural remodelling of the right ventricle and myocardial fibrosis in the interventricular septum are evident in some ultra-endurance athletes (e.g. [128]). Further, it has been suggested that (mal)adaptive changes in cardiac tissue from prolonged exposure to exercise may explain the elevated prevalence of arrhythmias and sudden cardiac death in chronically trained athletes [130–133]. Others [134], however, argue that the primary animal data supporting this do not convincingly translate to humans, and that the epidemiological data on sudden cardiac death during marathon events neither distinguish recreational from elite athletes nor account for pre-existing, undiagnosed cardiac conditions that the prolonged exercise may have unmasked [134].

In addition, Masters athletes with a lifelong history of exercise training appear to have a blunted cerebrovascular response to the arterial partial pressure of carbon dioxide (PaCO2) [135], which seems paradoxical given the established link between impaired cerebrovascular responsiveness and disease (e.g. hypertension [136], diabetes [137] and dementia [138]) and its prediction of all-cause cardiovascular mortality [139]. Thomas and colleagues suggested that the blunted response in their habitually fit Masters athletes was a consequence of prolonged exposure to elevated arterial CO2 during exercise (i.e. a chronic adaptation), which would presumably include ultra-endurance exercise. Finally, the peripheral vasculature may also respond maladaptively to a prolonged history of ultra-endurance running, with recent reports showing lower large-artery compliance in runners than in controls [140]. Collectively, there is limited direct evidence of permanent cardiac, cerebrovascular or peripheral vascular damage after ultra-endurance exercise, acutely or chronically, although an inverted U- or J-shaped adaptive pattern may be present. Further work is needed to elucidate this area.

Cerebral

Understanding how the brain contributes to optimising performance in extreme environments has recently gained attention. Paulus and colleagues [141] showed that adventure racing athletes have altered (insular) cortex activation during an aversive interoceptive challenge consisting of increased respiratory effort. Interoception is suggested to be important for optimal performance because it links perturbations of the internal state caused by external demands to goal-directed actions that maintain homeostatic balance [142]. Further, these findings in adventure racers resemble the differential modulation of the right insular cortex observed in elite military personnel during combat-like performance [143]. Whether these differences in brain activation result from chronic adaptation, or whether individuals self-select into these activities, perhaps as a biological consequence of their neuroanatomy, remains to be determined. Nevertheless, Noakes’ premise [91], that sensory feedback to the brain and its integration and interpretation within the brain (as reflected in behavioural outcomes such as ratings of perceived exertion or pace selection) regulate exercise performance, and that this interpretation may be adaptable, appears to be emerging as important for optimal performance in extreme environments. Indeed, ‘brain endurance training’ to improve endurance performance may be an example of how the brain can adapt (see [144, 145]), and supports a role for the brain in regulating power output. However, how effective brain training is in the context of extreme loading (e.g. adventure racing), which, as already mentioned, is often associated with severe sleep deprivation and energy deficits, is unknown. In addition, brain energetics likely play a role in performance in this context, since animal studies have shown that both exhaustive exercise [146, 147] and sleep deprivation [148] reduce brain glycogen stores. Matsui and colleagues have also shown that the brain adapts to exercise in a way similar to skeletal muscle, with brain glycogen increasing above basal levels after both exhaustive exercise and 4 weeks of exercise training [147]. Interestingly, the most affected brain areas are the cortex and hippocampus, which are involved in motor control and cognitive function.

Despite all of these findings, our understanding of the specific neuropsychophysiological processes under ultra-endurance conditions remains limited. As modern research methods and techniques become accessible in extreme loading settings (e.g. a mobile MRI unit), opportunities to improve this understanding are increasing and have already provided new and unexpected insights. For example, MRI voxel-based morphometry (VBM) showed a brain volume reduction of about 6 % across the 2 months of the TEFR in the ultra-endurance runners competing in that event [149]. As normal age-related brain volume reduction is less than 0.2 % per year [150, 151], these results appear to have significant implications. However, caution must be taken when interpreting these observations. The grey matter (GM) volume reduction was specific to distinct brain regions, notably those normally associated with visuospatial and language tasks [152], which likely received reduced activation during this repetitive and relatively isolated 2-month task. Interestingly, the energy-intensive default mode network also showed reductions in GM volume. Given that 60–80 % of the brain’s high energy consumption is devoted to baseline activity [153], perhaps this resting-state system is less important during such prolonged running, and its deactivation serves to conserve energy during such a catabolic state [152]. In any case, all of these acute changes in brain composition observed during the TEFR had returned to pre-race volumes within 8 months after the event. Further, these pre-race volumes did not differ from those of a group of moderately active control participants, indicating no chronic (mal)adaptation from training for this event. Collectively, these structural brain data indicate that despite substantial changes to brain composition during the catabolic stress of an ultra-marathon, the observed differences seem to be reversible and adaptive.
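A rough worked comparison shows why the TEFR figure stands out. It assumes, as a simplification, that the reported ~6 % reduction accrued linearly over 2 months and can be compared directly with the whole-brain ageing rate; because the change was regional and reversible, this annualisation is only indicative.

```latex
\[
\frac{6\,\%}{(2/12)\,\mathrm{yr}} = 36\,\%\,\mathrm{yr}^{-1},
\qquad
\frac{36\,\%\,\mathrm{yr}^{-1}}{0.2\,\%\,\mathrm{yr}^{-1}} = 180 .
\]
```

Because normal age-related loss is below 0.2 % per year, the annualised TEFR rate is more than 180 times that upper bound; equivalently, normal ageing would account for less than roughly 0.033 % over the same 2 months.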

A specific field of research is developing from the recognition that evaluating the pain resilience and mental characteristics of individuals who repeatedly complete ultra-endurance competitions unscathed can serve as a counter-model for research on pain and mental disorders. Although the athletes’ behaviour of repeated, exhausting and painful training every day for several years might suggest that they have better pain control, the results of Tesarz et al. [154] support the opposite interpretation. There seem to be similarities but also differences in the mechanisms of pain perception and pain control in endurance athletes compared with controls [118]. As discussion of physical and mental resilience to internal and external stimuli grows [155], further investigation of personality traits in ultra-endurance athletes may become a relevant part of this new field of research.

Metabolic

The capacity of an individual to sustain exercise for prolonged periods, whether for more than 100 near-continuous hours or for many hours repeated over many days, will depend partly on their capacity for endurance-related metabolism. Indeed, there is ample evidence of metabolic adaptation to extreme loading scenarios. Increased fat oxidation has been reported in studies of polar expeditions [4, 5], although without an evident increase in the fat oxidative capacity of the sampled muscle, and with a differential response between exercising muscles of the upper limb (increased fat oxidation) and lower limb (decreased fat oxidation) [4, 156]. Metabolic adaptations to an adventure race also reveal an extremely pronounced shift towards fat metabolism [68], as also occurs in multi-day military operations [157]. This shift to, and reliance on, fat metabolism at the predominantly low-to-moderate exercise intensities of ultra-endurance exercise seems critical, as food intake can be restricted for several reasons, such as carrying capacity and availability. Indeed, large energy deficits are evident in these settings [5, 14, 158, 159], as illustrated by the Stroud et al. study in which both participants were virtually devoid of body fat (~2 %) and severely hypoglycaemic (0.3 mmol L−1) by the end of their 95-day Antarctic expedition [5].

Energy and nutrient stores

Energy expenditure can reach 70 MJ in a single 24-h exercise bout, but appears to be typically 30–45 MJ per day during multi-day semi-continuous exercise (adventure racing [14, 158]) or grand tour cycle races [160]. Consequently, and as mentioned above, a significant energy deficit is typically observed in this setting, yet this appears not to result in hypoglycaemia [68]. The energy deficit leads to loss of fat mass and lean mass, but these are regained when adequate recovery is allowed after the event [161, 162]. The homeostatic balance of micronutrients and trace elements is probably also compromised during prolonged continuous exercise; however, this has not been established and may not be of major importance over this time frame. Overall, performance and the minimal energy (macronutrient) needs required to continue exercise until completion are determined by the balance between carbohydrate consumption, the shift towards fat oxidation, the mode(s) and duration of exercise, and the combination of upper-body and lower-body exercise.
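To put these figures in more familiar dietary units, the sketch below converts MJ to kcal using the thermochemical calorie (1 kcal = 4.184 kJ) and sums a multi-day energy deficit. The helper names are hypothetical, and the intake in the usage example (20 MJ per day against an expenditure of 40 MJ per day, within the range quoted above) is an assumed illustration, not data from the cited studies.

```python
"""Minimal sketch: energy unit conversion and cumulative energy deficit.

The intake values used below are hypothetical, for illustration only.
"""

KJ_PER_KCAL = 4.184  # thermochemical kilocalorie, exact by definition


def mj_to_kcal(mj: float) -> float:
    """Convert megajoules to kilocalories."""
    return mj * 1000.0 / KJ_PER_KCAL


def cumulative_deficit_mj(expenditure_mj: list[float], intake_mj: list[float]) -> float:
    """Sum daily (expenditure - intake); a positive result is a net energy deficit."""
    if len(expenditure_mj) != len(intake_mj):
        raise ValueError("expenditure and intake must cover the same days")
    return sum(e - i for e, i in zip(expenditure_mj, intake_mj))


if __name__ == "__main__":
    for mj in (30, 45, 70):
        print(f"{mj} MJ = {mj_to_kcal(mj):,.0f} kcal")
    # Hypothetical 5-day race: 40 MJ/day expended, 20 MJ/day eaten (assumed values).
    deficit = cumulative_deficit_mj([40.0] * 5, [20.0] * 5)
    print(f"5-day deficit: {deficit:.0f} MJ ({mj_to_kcal(deficit):,.0f} kcal)")
```

Run as a script, it shows that 30, 45 and 70 MJ correspond to roughly 7,170, 10,755 and 16,730 kcal, and that the assumed 5-day scenario leaves a deficit of 100 MJ (about 23,900 kcal).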

Conclusions

Suggestions and future directions: Practice and research

In the present review, we have primarily focused on the upper end of load carriage and of exercise tolerance and capacity. The acute musculoskeletal impacts of such loading are intuitive, but the (mal)adaptations are less so. All physiological systems are affected, and these generally have a strong capacity for adaptation. However, adaptation patterns of musculoskeletal and physiological systems are often U- or J-shaped, and over time minimal or no load carriage will decrease one’s global load carrying capacity and eventually lead to severe adverse effects and manifest disease under minimal absolute but high relative loads. We advocate that load carrying capacity, and the inherent mechanisms leading to adverse effects, may advantageously be studied from this perspective. Indeed, improved access to insightful and portable technologies is providing opportunities to explore the questions raised throughout this review.

For the ultra-endurance industry, imposing regulations or restrictions on competitions such as adventure racing does not seem necessary, since evidence to date indicates that physiological feedback mechanisms, together with changes in perceived exertion and motivation resulting from sleep deprivation, appear capable of protecting individuals from homeostatic failure. However, the net effect on ultra-endurance performance and the long-term health consequences of acute and chronic use of non-steroidal anti-inflammatory drugs and analgesics, often taken in combination with stimulants such as caffeine during competition, require clarification, as does how these drugs may affect this homeostatic control and therefore athlete safety.

Finally, while humans have many intrinsic mechanisms to protect themselves against acute and, to some extent, chronic overloading, it is now clear that no such mechanisms effectively protect against the numerous harmful impacts of chronic underloading. Hence, guidelines or policies addressing chronic underloading seem at least as important as any directed against overloading.

Abbreviations

- GI: gastrointestinal
- Phys: physical
- Physiol: physiological
- MAP: mean arterial blood pressure
- BRS: baroreflex sensitivity
- PaCO2: partial pressure of arterial carbon dioxide
- SCD: sudden cardiac death
- CAD: coronary artery disease
- MI: myocardial infarction
- CBF: cerebral blood flow
- PAD: peripheral arterial disease
- TG: triglycerides
- FIS: International Ski Federation
- VO2 max: maximal oxygen consumption
- MRI: magnetic resonance imaging
- TEFR: TransEurope FootRace
- Mq: musculus quadriceps
- vl: vastus lateralis
- vi: vastus intermedius
- vm: vastus medialis
- Mam: musculus adductor magnus
- Msa: musculus sartorius
- Mgr: musculus gracilis
- Msm: musculus semimembranosus
- Mst: musculus semitendinosus
- Mbf: musculus biceps femoris
- Cl: caput longum
- Cb: caput breve
- PCO2: partial pressure of carbon dioxide
- GM: grey matter

References

Hawley JA, Hopkins WG. Aerobic glycolytic and aerobic lipolytic power systems; a new paradigm with implications for endurance and ultraendurance events. Sports Med. 1995;19(4):240–50.

Kreider RB. Physiological considerations of ultraendurance performance. Int J Sport Nutr. 1991;1(1):3–27.

Laursen PB, Rhodes EC. Factors affecting performance in an ultraendurance triathlon. Sports Med. 2001;31(3):195–209.

Helge JW, et al. Skiing across the Greenland icecap: divergent effects on limb muscle adaptations and substrate oxidation. J Exp Biol. 2003;206(6):1075–83.

Stroud MA, et al. Energy expenditure using isotope-labeled water (²H₂¹⁸O), exercise performance, skeletal muscle enzyme activities and plasma biochemical parameters in humans during 95 days of endurance exercise with inadequate energy intake. Eur J Appl Physiol. 1997;76:243–52.

Opstad PK, et al. Performance, mood, and clinical symptoms in men exposed to prolonged, severe physical work and sleep deprivation. Aviat Space Environ Med. 1978;49(9):1065–73.

Opstad PK. Adrenergic desensitization and alterations in free and conjugated catecholamines during prolonged physical strain and energy deficiency. Biog Amines. 1990;7:625–39.

Opstad PK, Asbjorn A, Rognum TO. Altered hormonal responses to short-term bicycle exercise in young men after prolonged physical strain, caloric deficit, and sleep deprivation. Eur J Appl Physiol. 1980;45:51–62.

Castellani JW, et al. Energy expenditure in men and women during 54 h of exercise and caloric deprivation. Med Sci Sports Exerc. 2006;38(5):894–900.

Castellani JW, et al. Eighty-four hours of sustained operations alter thermoregulation during cold exposure. Med Sci Sports Exerc. 2003;35(1):175–81.

Castellani JW, et al. Thermoregulation during cold exposure after several days of exhaustive exercise. J Appl Physiol. 2001;90(3):939–46.

Young AJ, et al. Exertional fatigue, sleep loss, and negative energy balance increase susceptibility to hypothermia. J Appl Physiol. 1998;85(4):1210–7.

Lucas SJE, et al. Intensity and physiological strain of competitive ultra-endurance exercise in humans. J Sports Sci. 2008;26(5):477–89.

Enqvist JK, et al. Energy turnover during 24 hours and 6 days of adventure racing. J Sports Sci. 2010;28(9):947–55.

Marshall SLA. The soldier’s load and the mobility of a nation. Quantico: Marine Corps Association; 1950.

Orr R. The history of the soldier’s load. Australian Army J. 2010;7(2):67–88. Available at: http://works.bepress.com/rob_orr/4.

Bramble DM, Lieberman DE. Endurance running and the evolution of Homo. Nature. 2004;432(7015):345–52.

Larsen BB, Netto KP, Aisbett BP. The effect of body armor on performance, thermal stress, and exertion: a critical review. Mil Med. 2011;176(11):1265–73.

Soule RG, Pandolf KB, Goldman RF. Energy expenditure of heavy load carriage. Ergonomics. 1978;21(5):373–81.

Ricciardi R, Deuster PA, Talbot LA. Metabolic demands of body armor on physical performance in simulated conditions. Mil Med. 2008;173(9):817–24.

Givoni B, Goldman RF. Predicting metabolic energy cost. J Appl Physiol. 1971;30(3):429–33.

Knapik J, Harman E, Reynolds K. Load carriage using packs: a review of physiological, biomechanical and medical aspects. Appl Ergon. 1996;27(3):207–16.

Goldman RF. Environmental limits, their prescription and proscription. Int J Environ Stud. 1973;5(1–4):193–204.

Passmore R, Durnin JVGA. Human energy expenditure. Physiol Rev. 1955;35(4):801–40.

Golriz S, Walker B. Can load carriage system weight, design and placement affect pain and discomfort? a systematic review. J Back Musculoskelet Rehabil. 2011;24(1):1–16.

Beekley MD, et al. Effects of heavy load carriage during constant-speed, simulated, road marching. Mil Med. 2007;172(6):592–5.

Pandolf KB, Givoni B, Goldman RF. Predicting energy expenditure with loads while standing or walking very slowly. J Appl Physiol Respir Environ Exerc Physiol. 1977;43(4):577–81.

Soule RG, Goldman RF. Energy cost of loads carried on the head, hands, or feet. J Appl Physiol. 1969;27(5):687–90.

Holewun M, Lotens WA. The influence of backpack design on physical performance. Ergonomics. 1992;35(2):149–57.

Nindl BC, et al. Physical performance responses during 72 h of military operational stress. Med Sci Sports Exerc. 2002;34(11):1814–22.

Lucas RAI, Epstein Y, Kjellstrom T. Excessive occupational heat exposure: a significant ergonomic challenge and health risk for current and future workers. Extrem Physiol Med. 2014. doi:10.1186/2046-7648-3-14.

Anglem N, et al. Mood, illness and injury responses and recovery with adventure racing. Wilderness Environ Med. 2008;19(1):30–8.

Holmer I. Evaluation of cold workplaces: an overview of standards for assessment of cold stress. Ind Health. 2009;47(3):228–34.

Castellani JW, et al. Thermoregulation during cold exposure: effects of prior exercise. J Appl Physiol. 1999;87(1):247–52.

Haight JSJ, Keatinge WR. Failure of thermoregulation in the cold during hypoglycaemia induced by exercise and ethanol. J Physiol. 1973;229(1):87–97.

Thompson RL, Hayward JS. Wet-cold exposure and hypothermia: thermal and metabolic responses to prolonged exercise in rain. J Appl Physiol. 1996;81(3):1128–37.

Cheung SS, et al. Changes in manual dexterity following short-term hand and forearm immersion in 10 ℃ water. Aviat Space Environ Med. 2003;74(9):990–3.

Tipton MJ, et al. Habituation of the metabolic and ventilatory responses to cold-water immersion in humans. J Therm Biol. 2013;38(1):24–31.

Brazaitis M, et al. Time course of physiological and psychological responses in humans during a 20 day severe-cold–acclimation programme. PLoS One. 2014;9(4):e94698.

Daanen HAM, Koedam J, Cheung SS. Trainability of cold induced vasodilatation in fingers and toes. Eur J Appl Physiol. 2012;112(7):2595–601.

van der Lans AAJJ, et al. Cold acclimation recruits human brown fat and increases nonshivering thermogenesis. J Clin Investig. 2013;123(8):3395–403.

van Marken Lichtenbelt WD, et al. Cold acclimation and health: effect on brown fat, energetics, and insulin sensitivity. Extrem Physiol Med. 2015;4(Suppl 1):45.

McManus AM, et al. Impact of prolonged sitting on vascular function in young girls. Exp Physiol. 2015;100(11):1379–87.

Thosar SS, et al. Effect of prolonged sitting and breaks in sitting time on endothelial function. Med Sci Sports Exerc. 2015;47(4):843–9.

Thorp AA, et al. Alternating bouts of sitting and standing attenuate postprandial glucose responses. Med Sci Sports Exerc. 2014;46(11):2053–61.

Krogh-Madsen R, et al. A 2-week reduction of ambulatory activity attenuates peripheral insulin sensitivity. J Appl Physiol. 2010;108(5):1034–40.

WHO. Global recommendations on physical activity for health. Geneva: WHO Press, World Health Organization; 2012.

Walhin J-P, et al. Exercise counteracts the effects of short-term overfeeding and reduced physical activity independent of energy imbalance in healthy young men. J Physiol. 2013;591(24):6231–43.

Veerman JL, et al. Television viewing time and reduced life expectancy: a life table analysis. Br J Sports Med. 2012;46(13):927–30.

Yen YW. Fatal path. In: Sports Illustrated. 2004. http://www.si.com/vault/2004/10/11/8188159/fatal-path.

Knapik J, et al. Load carriage in military operations: a review of historical, physiological, biomechanical and medical aspects. In: Santee WR, Friedl KE, editors. Military quantitative physiology: problems and concepts in military operational medicine. Fort Detrick: Office of the Surgeon General and the Borden Institute; 2012. p. 303–37.

Knapik JJ, Reynolds KL, Harman E. Soldier load carriage: historical, physiological, biomechanical, and medical aspects. Mil Med. 2004;169(1):45–56.

Orr RM, et al. Load carriage: minimising Soldier Injuries through physical conditioning—a narrative review. J Mil Veterans Health. 2010;18(3):31–8.

Drain J, et al. Load carriage capacity of the dismounted combatant—a commander’s guide: DSTO-TR-2765. Australia: Human Protection and Performance Division, DSTO Defence Science and Technology Organisation; 2012.

Sawka MN, et al. Heat stress control and heat casualty management. No. MISC-04-13. Natick: US Army Research Institute of Environmental Medicine; 2003.

Nevola VR, Staerck J, Harrison M. Commanders’ guide fluid intake during military operations in the heat. Reference guide for commanders. (DSTL/CR15164). Farnborough: Defence Science and Technology Laboratory and QinetiQ ltd; 2005.

Messer P, Owen G, Casey A. Commanders guide. Nutrition for health and performance. A reference guide for commanders (QINETIQ/KI/CHS/CR021120). Farnborough: QinetiQ ltd; 2005.

Bull F, et al. Turning the tide: national policy approaches to increasing physical activity in seven European countries. Br J Sports Med. 2015;49(11):749–56.

Mackichan F, Adamson J, Gooberman-Hill R. Living within your limits: activity restriction in older people experiencing chronic pain. Age Ageing. 2013;42(6):702–8.

McGregor AH, et al. Rehabilitation following surgery for lumbar spinal stenosis: a Cochrane review. Spine. 2014;39(13):1044–54.

Heran BS, et al. Exercise-based cardiac rehabilitation for coronary heart disease. Cochrane Database Syst Rev. 2011;(7):CD001800.

Helge JW, et al. Increased fat oxidation and regulation of metabolic genes with ultraendurance exercise. Acta Physiol. 2007;191(1):77–86.

Martin BJ. Effect of sleep deprivation on tolerance of prolonged exercise. Eur J Appl Physiol. 1981;47(4):345–54.

Martin BJ, Gaddis GM. Exercise after sleep deprivation. Med Sci Sports Exerc. 1981;13(4):220–3.

Rodgers CD, et al. Sleep deprivation: effects on work capacity, self-paced walking, contractile properties and perceived exertion. Sleep. 1995;18(1):30–8.

Temesi J, et al. Central fatigue assessed by transcranial magnetic stimulation in ultratrail running. Med Sci Sports Exerc. 2014;46(6):1166–75.

Wichardt E, et al. Rhabdomyolysis/myoglobinemia and NSAID during 48 h ultra-endurance exercise (adventure racing). Eur J Appl Physiol. 2011;111(7):1541–4.

Myles WS, Eclache JP, Beury J. Self-pacing during sustained, repetitive exercise. Aviat Space Environ Med. 1979;50(9):921–4.

Myles WS, Romet TT. Self-paced work in sleep deprived subjects. Ergonomics. 1987;30(8):1175–84.

Opstad PK, et al. Plasma renin activity and serum aldosterone during prolonged physical strain. The significance of sleep and energy deprivation. Eur J Appl Physiol. 1985;54(1):1–6.

Opstad PK, et al. Adrenaline stimulated cyclic adenosine monophosphate response in leucocytes is reduced after prolonged physical activity combined with sleep and energy deprivation. Eur J Appl Physiol. 1994;69(5):371–5.

Evans WJ, et al. Self-paced hard work comparing men and women. Ergonomics. 1980;23(7):613–21.

Rogers I, Speedy D. Fluid balance in a wilderness multisport endurance competitor (letter to the editor). New Zealand J Sports Med. 2000;28(1):3.

Laursen PB, Rhodes EC. Physiological analysis of a high intensity ultraendurance event. Strength Cond J. 1999;21(1):26–38.

Kimber NE, Ross JJ. Heart rate responses during an ultra-endurance multisport event. In: Partners in performance national conference. Wellington; 1996.

Laursen PB, et al. Core temperature and hydration status during an Ironman triathlon. Br J Sports Med. 2006;40(4):320–5.

Speedy DB, et al. Exercise-induced hyponatremia in ultradistance triathletes is caused by inappropriate fluid retention. Clin J Sport Med. 2000;10:272–8.

Neumayr G, et al. Heart rate response to ultraendurance cycling. Br J Sports Med. 2003;37(1):89–90.

Knechtle B, et al. Effect of a multistage ultra-endurance triathlon on body composition: world challenge Deca iron triathlon 2006. Br J Sports Med. 2008;42(2):121–5.

Knechtle B, et al. Effect of a multistage ultraendurance triathlon on aldosterone, vasopressin, extracellular water and urine electrolytes. Scott Med J. 2012;57(1):26–32.

Knechtle B, et al. A triple iron triathlon leads to a decrease in total body mass but not to dehydration. Res Q Exerc Sport. 2010;81(3):319–27.

Rüst C, et al. No case of exercise-associated hyponatraemia in top male ultra-endurance cyclists: the ‘Swiss cycling marathon’. Eur J Appl Physiol. 2012;112(2):689–97.

Noakes TD, et al. Physiological and biochemical measurements during a 4-day surf-ski marathon. S Afr Med J. 1985;67:212–6.

Noakes TD, St Clair Gibson A, Lambert EV. From catastrophe to complexity: a novel model of integrative central neural regulation of effort and fatigue during exercise in humans: summary and conclusions. Br J Sports Med. 2005;39(2):120–4.

Noakes TD. Fatigue is a brain-derived emotion that regulates the exercise behavior to ensure the protection of whole body homeostasis. Front Physiol. 2012;3:82.

Nybo L, Secher NH. Cerebral perturbations provoked by prolonged exercise. Prog Neurobiol. 2004;72:223–61.

Peddie MC, et al. Breaking prolonged sitting reduces postprandial glycemia in healthy, normal-weight adults: a randomized crossover trial. Am J Clin Nutr. 2013;98(2):358–66.

Kontos N. Perspective: biomedicine—menace or straw man? reexamining the biopsychosocial argument. Acad Med. 2011;86(4):509–15.

Daniel JA, Sizer PS Jr, Latman NS. Evaluation of body composition methods for accuracy. Biomed Instrum Technol. 2005;39(5):397–405.

Shalabi A, et al. Tendon injury and repair after core biopsies in chronic Achilles tendinosis evaluated by serial magnetic resonance imaging. Br J Sports Med. 2004;38(5):606–12.

Collins MS. Imaging evaluation of chronic ankle and hindfoot pain in athletes. Magn Reson Imaging Clin N Am. 2008;16(1):39–58.

Schütz UHW, et al. The TransEurope Footrace Project: longitudinal data acquisition in a cluster randomized mobile MRI observational cohort study on 44 endurance runners at a 64-stage 4486 km transcontinental ultramarathon. BMC Med. 2012;10:78.

Marti B, et al. Is excessive running predictive of degenerative hip disease? controlled study of former elite athletes. Br Med J. 1989;299(6691):91–3.

Schueller-Weidekamm C, et al. Incidence of chronic knee lesions in long-distance runners based on training level: findings at MRI. Eur J Radiol. 2006;58(2):286–93.

Roesler H. The history of some fundamental concepts in bone biomechanics. J Biomech. 1987;20(11):1025–34.

Huiskes R, et al. Effects of mechanical forces on maintenance and adaptation of form in trabecular bone. Nature. 2000;405(6787):704–6.

Van Rietbergen B, et al. The mechanism of bone remodeling and resorption around press-fitted THA stems. J Biomech. 1993;26(4–5):369–82.

Fries JF, et al. Running and the development of disability with age. Ann Intern Med. 1994;121(7):502–9.

Schütz UHW, et al. Biochemical cartilage alteration and unexpected signal recovery in T2* mapping observed in ankle joints with mobile MRI during a transcontinental multistage footrace over 4486 km. Osteoarthr Cartil. 2014;22(11):1840–50.

Felson DT. An update on the pathogenesis and epidemiology of osteoarthritis. Radiol Clin North Am. 2004;42(1):1–9.

Cymet TC, Sinkov V. Does long-distance running cause osteoarthritis? J Am Osteopath Assoc. 2006;106(6):342–5.

Krampla W, et al. Changes on magnetic resonance tomography in the knee joints of marathon runners: a 10-year longitudinal study. Skeletal Radiol. 2008;37(7):619–26.

Franklyn M, Oakes B. Aetiology and mechanisms of injury in medial tibial stress syndrome: current and future developments. World J Orthop. 2015;6(8):577–89.

Rochcongar P. Knee tendinopathies and sports practice. Rev Prat. 2009;59(9):1257–60.

Schulze I. Transeuropalauf 2003. Lissabon–Moskau 5036 km in 64 Tagesetappen [TransEurope run 2003. Lisbon–Moscow 5036 km in 64 daily stages]. 1st ed. Engelsdorfer Verlagsgesellschaft; 2004.

Lewis B. Running the Trans America footrace: trials and triumphs of life on the road. 1st ed. Mechanicsburg, PA: Stackpole Books; 1994.

Shah S, Miller B, Kuhn J. Chronic exertional compartment syndrome. Am J Orthop. 2004;33(7):335–41.

Jefferies JG, Carter T, White TO. A delayed presentation of bilateral leg compartment syndrome following non-stop dancing. BMJ Case Rep. 2015. doi:10.1136/bcr-2014-208630.

Cheung SS, Daanen HAM. Dynamic adaptation of the peripheral circulation to cold exposure. Microcirculation. 2012;19(1):65–77.

Dixon SJ, Creaby MW, Allsopp AJ. Comparison of static and dynamic biomechanical measures in military recruits with and without a history of third metatarsal stress fracture. Clin Biomech. 2006;21(4):412–9.

Finestone A, Milgrom C. How stress fracture incidence was lowered in the Israeli Army: a 25-year struggle. Med Sci Sports Exerc. 2008;40(11):S623–9.

Freund W, et al. Ultra-marathon runners are different: investigations into pain tolerance and personality traits of participants of the TransEurope FootRace 2009. Pain Pract. 2013;13(7):524–32.

Noakes TD. Time to move beyond a brainless exercise physiology: the evidence for complex regulation of human exercise performance. Appl Physiol Nutr Metab. 2011;36(1):23–35.

Saugy J, et al. Alterations of neuromuscular function after the world’s most challenging mountain ultra-marathon. PLoS One. 2013;8(6):e65596.

Lucas SJE, et al. The impact of 100 h of exercise and sleep deprivation on cognitive function and physical capacities. J Sports Sci. 2009;27(7):719–28.

Opstad PK. Alterations in the morning plasma levels of hormones and the endocrine responses to bicycle exercise during prolonged strain: the significance of energy and sleep deprivation. Acta Endocrinol. 1991;125:14–22.

Opstad PK, et al. The dynamic response of the β2- and α2-adrenoreceptors in human blood cells to prolonged exhausting strain, sleep and energy deficiency. Biog Amines. 1994;10:329–44.

Eysmann SB, et al. Prolonged exercise alters beta-adrenergic responsiveness in healthy sedentary humans. J Appl Physiol. 1996;80(2):616–22.

Lucas SJE, et al. Cardiorespiratory and sympathetic responsiveness during very prolonged exercise. In: Medical sciences congress of New Zealand. Queenstown; 2008. Available online at: http://physoc.org.nz/assets/files/proceedings/MedSci2008%20abstracts.pdf.

Mattsson CM, et al. Reversed drift in heart rate but increased oxygen uptake at fixed work rate during 24 h ultra-endurance exercise. Scand J Med Sci Sports. 2010;20(2):298–304.

George K, et al. The endurance athletes heart: acute stress and chronic adaptation. Br J Sports Med. 2012;46(Suppl 1):i29–36.

La Gerche A, et al. Exercise-induced right ventricular dysfunction and structural remodelling in endurance athletes. Eur Heart J. 2012;33(8):998–1006.

Shave R, et al. Postexercise changes in left ventricular function: the evidence so far. Med Sci Sports Exerc. 2008;40(8):1393–9.

Baldesberger S, et al. Sinus node disease and arrhythmias in the long-term follow-up of former professional cyclists. Eur Heart J. 2008;29(1):71–8.

Aizer A, et al. Relation of vigorous exercise to risk of atrial fibrillation. Am J Cardiol. 2009;103(11):1572–7.

La Gerche A, Prior DL, Heidbüchel H. Strenuous endurance exercise: is more better for everyone? Our genes won’t tell us. Br J Sports Med. 2011;45(3):162–4.

Guasch E, Nattel S. CrossTalk proposal: prolonged intense exercise training does lead to myocardial damage. J Physiol. 2013;591(20):4939–41.

Ruiz JR, Joyner M, Lucia A. CrossTalk opposing view: prolonged intense exercise does not lead to cardiac damage. J Physiol. 2013;591(20):4943–5.

Thomas BP, et al. Life-long aerobic exercise preserved baseline cerebral blood flow but reduced vascular reactivity to CO2. J Magn Reson Imaging. 2013;38(5):1177–83.

Immink RV, et al. Impaired cerebral autoregulation in patients with malignant hypertension. Circulation. 2004;110(15):2241–5.

Kim Y-S, et al. Dynamic cerebral autoregulatory capacity is affected early in type 2 diabetes. Clin Sci. 2008;115(8):255–62.

den Abeelen AS, et al. Impaired cerebral autoregulation and vasomotor reactivity in sporadic Alzheimer’s disease. Curr Alzheimer Res. 2014;11(1):11–7.

Portegies ML, et al. Cerebral vasomotor reactivity and risk of mortality: the Rotterdam study. Stroke. 2014;45(1):42–7.

Burr JF, et al. Long-term ultra-marathon running and arterial compliance. J Sci Med Sport. 2014;17(3):322–5.

Paulus MP, et al. Subjecting elite athletes to inspiratory breathing load reveals behavioral and neural signatures of optimal performers in extreme environments. PLoS One. 2012;7(1):e29394.

Paulus MP, et al. A neuroscience approach to optimizing brain resources for human performance in extreme environments. Neurosci Biobehav Rev. 2009;33(7):1080–8.

Paulus MP, et al. Differential brain activation to angry faces by elite warfighters: neural processing evidence for enhanced threat detection. PLoS One. 2010;5(4):e10096.

McMillan S. Looking back at ECSS 2014. Sports Med - Open. 2015;1(1):25.

Marcora SM, Staiano W, Merlini M. A randomized controlled trial of brain endurance training (BET) to reduce fatigue during endurance exercise. In: Annual meeting of the American College of Sports Medicine. San Diego; 2015.

Matsui T, et al. Brain glycogen decreases during prolonged exercise. J Physiol. 2011;589(13):3383–93.

Matsui T, et al. Brain glycogen supercompensation following exhaustive exercise. J Physiol. 2012;590(3):607–16.

Kong J, et al. Brain glycogen decreases with increased periods of wakefulness: implications for homeostatic drive to sleep. J Neurosci. 2002;22(13):5581–7.

Freund W, et al. Substantial and reversible brain gray matter reduction but no acute brain lesions in ultramarathon runners: experience from the TransEurope-FootRace Project. BMC Med. 2012;10:170.

Smith CD, et al. Age and gender effects on human brain anatomy: a voxel-based morphometric study in healthy elderly. Neurobiol Aging. 2007;28(7):1075–87.

Good CD, et al. A voxel-based morphometric study of ageing in 465 normal adult human brains. NeuroImage. 2001;14(1):21–36.

Freund W, et al. Regionally accentuated reversible brain grey matter reduction in ultra marathon runners detected by voxel-based morphometry. BMC Sports Sci Med Rehabil. 2014;6:4.

Raichle ME, Mintun MA. Brain work and brain imaging. Annu Rev Neurosci. 2006;29(1):449–76.

Tesarz J, et al. Alterations in endogenous pain modulation in endurance athletes: an experimental study using quantitative sensory testing and the cold-pressor task. Pain. 2013;154(7):1022–9.

Freund W, et al. Correspondence to Tesarz et al. Alterations in endogenous pain modulation in endurance athletes: An experimental study using quantitative sensory testing and the cold pressor task. PAIN® 154, 1022–29, 2013. Pain. 2013;154(10):2234–5.

Fernström M, et al. Reduced efficiency, but increased fat oxidation, in mitochondria from human skeletal muscle after 24-h ultraendurance exercise. J Appl Physiol. 2007;102(5):1844–9.

Guezennec CY, et al. Physical performance and metabolic changes induced by combined prolonged exercise and different energy intakes in humans. Eur J Appl Physiol. 1994;68(6):525–30.

Doel K, Hellemans I, Thomson C. Energy expenditure and energy balance in an adventure race. In: Combined annual meetings of sports medicine New Zealand and sport and exercise science New Zealand. Queenstown: Sports Medicine New Zealand Inc; 2005.

Ranchordas M. Nutrition for adventure racing. Sports Med. 2012;42(11):915–27.

Saris WHM, et al. Study on food intake and energy expenditure during extreme sustained exercise: the Tour de France. Int J Sports Med. 1989;10(Suppl 1):S26–31.

Helge JW, et al. Low-intensity training dissociates metabolic from aerobic fitness. Scand J Med Sci Sports. 2008;18(1):86–94.

Rosenkilde M, et al. Inability to match energy intake with energy expenditure at sustained near-maximal rates of energy expenditure in older men during a 14-day cycling expedition. Am J Clin Nutr. 2015. doi:10.3945/ajcn.115.109918.

Authors’ contributions

SJEL participated in the conception and outline, led the drafting of the manuscript and made critical revisions. JWH and JDC participated in the conception and outline, manuscript drafting and critical revision. UHWS participated in manuscript drafting and critical revision. RFG participated in the conception and outline and contributed to manuscript drafting. All authors read and approved the final manuscript.

Acknowledgements

The authors wish to thank Extreme Physiology and Medicine for hosting these reviews, Dr. Robin Orr for his permission to use Fig. 1 and colleagues Dr. Olivia Faull and Mr. Neil Dallaway for their contribution and feedback on brain-related topics contained within this review.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Lucas, S.J.E., Helge, J.W., Schütz, U.H.W. et al. Moving in extreme environments: extreme loading; carriage versus distance. Extrem Physiol Med 5, 6 (2016). https://doi.org/10.1186/s13728-016-0047-z

DOI: https://doi.org/10.1186/s13728-016-0047-z