Abstract

By introducing parameters perturbed by white noise, we propose a class of stochastic inertial neural networks in random environments. By constructing two Lyapunov–Krasovskii functionals, we establish the mean-square exponential input-to-state stability of the addressed model, which generalizes and refines recent results. In addition, an example with numerical simulation is given to support the theoretical findings.

1 Introduction

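Babcock and Westervelt [1, 2] introduced the well-known inertial neural networks, which take the form of the following second-order delay differential equations:

\[ x_{i}''(t)=-a_{i}x_{i}'(t)-b_{i}x_{i}(t)+\sum_{j=1}^{n}c_{ij}f_{j}\bigl(x_{j}(t)\bigr)+\sum_{j=1}^{n}d_{ij}g_{j}\bigl(x_{j}(t-\tau _{j})\bigr)+I_{i}(t),\quad t\geq 0, i\in J=\{1,2,\ldots ,n\}, \tag{1.1} \]

to discover the complicated dynamic behavior of electronic neural networks. Here the initial conditions are defined as

\[ x_{i}(s)=\psi _{i}(s),\qquad x_{i}'(s)=\psi _{i}'(s),\quad s\in [-\tau ,0], i\in J, \tag{1.2} \]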

where \(x(t)=(x_{1}(t), x_{2}(t),\ldots , x_{n}(t))\) is the state vector, \(x_{i}''(t)\) is called the ith inertial term, the positive parameters \(a_{i}\), \(b_{i}\), the nonnegative parameters \(\tau _{j}\), and the other parameters \(c_{ij}\), \(d_{ij}\) are all constant, \(I_{i}(t)\) is the external input of the ith neuron at time t, and \(I=(I_{1}(t), I_{2}(t),\ldots , I_{n}(t))\in \ell _{\infty }\), where \(\ell _{\infty }\) denotes the family of essentially bounded functions I from \([0,\infty )\) to \(\mathbb{R}^{n}\) with norm \(\|I\|_{\infty }=\operatorname{ess}\sup_{t\geq 0}\sqrt{\sum_{i=1}^{n}I_{i}^{2}(t)} \). The activation functions \(f_{j} \) and \(g_{j} \) satisfy \(f_{j}(0)=g_{j}(0)=0\) and Lipschitz conditions, i.e., there exist positive constants \(F_{j}\) and \(G_{j}\) such that

\[ \bigl|f_{j}(u)-f_{j}(v)\bigr|\leq F_{j}|u-v|,\qquad \bigl|g_{j}(u)-g_{j}(v)\bigr|\leq G_{j}|u-v|,\quad u,v\in \mathbb{R}, j\in J. \tag{1.3} \]

There are two main methods to study inertial neural network (1.1). One is the so-called reduced order method, which has been adopted to study Hopf bifurcation [3–8], stability of equilibrium points [9–13], periodicity [14–16], synchronization [17–21], and dissipativity [22, 23]. The other is the non-reduced order method, which avoids the large increase in dimension, and many researchers have used this approach to study the dynamic behavior of (1.1) and its generalizations [22–36].

However, the studies based on both the reduced order and the non-reduced order methods involve only deterministic inertial neural networks and do not address stochastic inertial neural networks subject to environmental fluctuations. Notably, Haykin [37] has pointed out that synaptic transmission is a noisy process, caused by random fluctuations in neurotransmitter release and other probabilistic factors, both in real nervous systems and in the implementation of artificial neural networks. Since this noise is unavoidable, it should be taken into account in modeling.

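Assume that the parameter \(b_{i}\) (\(i\in J\)) is affected by environmental noise, with \(b_{i}\rightarrow b_{i}-\sigma _{i}\,dB_{i}(t)\), where \(B_{i}(t)\), \(i\in J\), are independent white noises (i.e., standard Brownian motions) with \(B_{i}(0) = 0\) defined on a complete probability space \((\Omega ,\{\mathcal{F}_{t}\}_{t\geq 0},\mathcal{P})\), and \(\sigma _{i}^{2}\) denotes the noise intensity. Then, corresponding to inertial neural network (1.1), we obtain the following stochastic system:

\[ dx_{i}'(t)=\Biggl[-a_{i}x_{i}'(t)-b_{i}x_{i}(t)+\sum_{j=1}^{n}c_{ij}f_{j}\bigl(x_{j}(t)\bigr)+\sum_{j=1}^{n}d_{ij}g_{j}\bigl(x_{j}(t-\tau _{j})\bigr)+I_{i}(t)\Biggr]\,dt+\sigma _{i}x_{i}(t)\,dB_{i}(t),\quad t\geq 0, i\in J. \tag{1.4} \]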

Obviously, the white noise disturbance term \(\sigma _{i}x_{i}(t)\,dB_{i}(t)\) introduces randomness, so that the deterministic inertial neural network (1.1) becomes the stochastic system (1.4). One difficulty of this paper is handling the white noise disturbances; the other is introducing a suitable concept of stability that describes the dynamics of (1.4) precisely. The main aim of this paper is to investigate the mean-square exponential input-to-state stability of stochastic inertial neural network (1.4) with initial conditions (1.2). Traditional notions such as asymptotic stability, almost sure stability, and exponential stability require the system states to converge to an equilibrium point as time tends to infinity. Input-to-state stability, by contrast, describes system states that vary within a certain region under the influence of external inputs. For more details about input-to-state stability, one can refer to [38–42]. However, as far as we know, the mean-square exponential input-to-state stability of stochastic inertial neural networks has hardly been studied.
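The origin of the disturbance term can be made explicit with a short formal calculation. The sketch below assumes that \(b_{i}\) enters (1.1) through a restoring term \(-b_{i}x_{i}(t)\), which is consistent with the sign of the noise term quoted above, and applies the substitution to the increment \(b_{i}\,dt\):

\[ -b_{i}x_{i}(t)\,dt\;\longrightarrow\;-x_{i}(t)\bigl(b_{i}\,dt-\sigma _{i}\,dB_{i}(t)\bigr)=-b_{i}x_{i}(t)\,dt+\sigma _{i}x_{i}(t)\,dB_{i}(t). \]

Under this reading, the drift keeps its deterministic form and only the diffusion coefficient \(\sigma _{i}x_{i}(t)\) is new. The noise is multiplicative: it vanishes when \(x_{i}(t)=0\).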

The remaining part of this paper consists of three sections. In Sect. 2, we give the main result: several sufficient conditions that ensure that the stochastic inertial neural network (1.4) is mean-square exponentially input-to-state stable. In Sect. 3, we provide a numerical example to check the effectiveness of the developed result. Finally, we summarize and evaluate our work in Sect. 4.

2 Mean-square exponential stability

Although Wang and Chen [43] have studied the mean-square exponential stability of stochastic inertial neural network (1.4) with two groups of different initial conditions (1.2), their notion is not suited to mean-square exponential input-to-state stability. Motivated by Zhu and Cao [38], who introduced the definition of mean-square exponential input-to-state stability for stochastic delayed neural networks, and by the mean-square exponential stability of Wang and Chen [43], we present the following definition.

Definition 2.1

Let \(x (t,\psi )=(x_{1}(t), x_{2}(t),\ldots , x_{n}(t))\) be a solution of (1.4) with initial conditions (1.2) \(\psi (s)=(\psi _{1}(s), \psi _{2}(s),\ldots , \psi _{n}(s))\). The stochastic inertial neural network (1.4) is said to be mean-square exponentially input-to-state stable if there exist positive constants λ, η, and K such that

where \(\|\bullet \|\) means square norm.

Theorem 2.1

Under assumptions (1.3), the stochastic inertial neural network (1.4) is mean-square exponentially input-to-state stable if there exist positive constants \(\beta _{i}\), \(\bar{\beta }_{i}\), and nonzero constants \(\alpha _{i}\), \(\gamma _{i}\), \(\bar{\alpha }_{i}\), \(\bar{\gamma }_{i}\), \(i\in J\) such that

and

where

Proof

Let \(x (t)=(x_{1}(t), x_{2}(t),\ldots , x_{n}(t))\) be a solution of stochastic system (1.4) with initial values (1.2) such that \(x_{i}(s)=\psi _{i}(s)\), \(x_{i}'(s) = \psi _{i}'(s)\), \(s\in [-\tau , 0]\), \(i\in J\). In view of (2.1) and (2.2), for \(i\in J\), we can find a sufficiently small positive number λ such that

and

where

Then we construct the following two Lyapunov–Krasovskii functionals:

and

Using Itô’s formula, we obtain the following stochastic differential:

and

where \(\mathcal{L}\) is the weak infinitesimal operator such that

and

Integrating both sides of (2.5), (2.6) and taking the expectation operator, we obtain from (2.3), (2.4), (2.7), and (2.8) that

and

Choosing \(\gamma =\max_{i\in J}\{\alpha _{i}^{2}+|\alpha _{i}\gamma _{i}|, \bar{\beta }_{i}+\bar{\alpha }_{i}^{2}+|\bar{\alpha }_{i}\bar{\gamma }_{i}| \} \) and \(\beta =\min_{i\in J}\{\beta _{i},\bar{\beta }_{i}\}\), we obtain from (2.9) and (2.10) that

and

Combining (2.11) and (2.12), the following holds:

which, together with Definition 2.1, implies that the stochastic inertial neural network (1.4) is mean-square exponentially input-to-state stable. This completes the proof of Theorem 2.1. □
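The Itô step of the proof can be illustrated on the simplest quadratic terms. As a sketch, write \(y_{i}(t)=x_{i}'(t)\) and let \(h_{i}(t)\) denote the drift of \(dy_{i}(t)\) in (1.4). The symbol \(h_{i}\) is introduced here only for illustration, and the computation assumes that the diffusion coefficient is \(\sigma _{i}x_{i}(t)\), as in the disturbance term of Sect. 1. Since \(dx_{i}=y_{i}\,dt\) has no martingale part, only \(y_{i}^{2}\) acquires an Itô correction:

\[
\begin{aligned}
d\bigl(x_{i}^{2}\bigr) &= 2x_{i}y_{i}\,dt, \\
d(x_{i}y_{i}) &= \bigl(y_{i}^{2}+x_{i}h_{i}\bigr)\,dt+\sigma _{i}x_{i}^{2}\,dB_{i}, \\
d\bigl(y_{i}^{2}\bigr) &= \bigl(2y_{i}h_{i}+\sigma _{i}^{2}x_{i}^{2}\bigr)\,dt+2\sigma _{i}x_{i}y_{i}\,dB_{i}.
\end{aligned}
\]

Multiplying any of these terms by \(e^{\lambda t}\) adds \(\lambda e^{\lambda t}(\cdot )\,dt\) to its drift. After integrating and taking expectations, the \(dB_{i}\) integrals vanish provided the integrands are square-integrable, so only the drift parts remain. The noise then enters the estimates through the term \(\sigma _{i}^{2}x_{i}^{2}\).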

Remark 2.1

From Definition 2.1, it is clear that if a stochastic inertial neural network is mean-square exponentially input-to-state stable, then the second moments of the states and of their first-order derivatives remain bounded but need not converge to the equilibrium point. This reveals that the external inputs influence the dynamics of stochastic inertial neural networks: when the inputs are bounded, so are these second moments. In Theorem 2.1, we derive sufficient conditions ensuring the mean-square exponential input-to-state stability of stochastic inertial neural network (1.4). To the best of our knowledge, this is the first time that mean-square exponential input-to-state stability has been considered for stochastic inertial neural networks. References [1–18] and [20–36] are concerned with deterministic inertial neural networks, Prakash et al. [19] consider only synchronization of Markovian jumping inertial neural networks, and the authors of [38–42] study only input-to-state stability of non-inertial neural networks. Hence those results cannot be applied to the mean-square exponential input-to-state stability of stochastic inertial neural network (1.4).
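The behaviour described in this remark can be explored numerically. The following is a minimal Monte Carlo sketch, not the authors' simulation: it uses a single neuron without coupling or delay, \(dx=y\,dt\), \(dy=(-ay-bx+I(t))\,dt+\sigma x\,dB\), discretized by the Euler–Maruyama scheme. The function name `mean_square_at` and all parameter values are hypothetical choices made for illustration.

```python
import math
import random


def mean_square_at(t_end, input_fn, a=2.0, b=3.0, sigma=0.5,
                   x0=1.0, y0=0.0, dt=0.01, n_paths=200, seed=1):
    """Monte Carlo estimate of E[x(t_end)^2 + x'(t_end)^2].

    Toy model (illustrative values, a single uncoupled neuron, no delay):
        dx = y dt,   dy = (-a*y - b*x + I(t)) dt + sigma*x dB.
    """
    rng = random.Random(seed)          # fixed seed for reproducibility
    steps = int(round(t_end / dt))
    sqrt_dt = math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        x, y = x0, y0
        for k in range(steps):
            dB = rng.gauss(0.0, sqrt_dt)            # Brownian increment
            drift = -a * y - b * x + input_fn(k * dt)
            # Euler-Maruyama update; both right-hand sides use the old state.
            x, y = x + y * dt, y + drift * dt + sigma * x * dB
        total += x * x + y * y
    return total / n_paths


ms_free = mean_square_at(10.0, lambda t: 0.0)    # no external input
ms_forced = mean_square_at(10.0, lambda t: 1.0)  # bounded input, |I(t)| = 1
print(f"zero input:    {ms_free:.3e}")
print(f"bounded input: {ms_forced:.3e}")
```

For this toy model one would expect the estimate with zero input to be close to zero at \(t=10\), while the estimate with a bounded nonzero input stays bounded but does not vanish, in line with the remark.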

3 An illustrative example

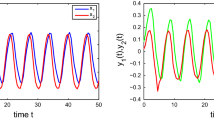

To verify the correctness and effectiveness of the theoretical results, we present an example with numerical simulations.

Example 3.1

where \(f_{i}(u) = g_{i}(u) = 0.25(|u + 1|-|u-1|)\), \(i=1,2 \).
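The activation above is piecewise linear: it equals \(0.5u\) for \(|u|\leq 1\) and \(\pm 0.5\) otherwise. It therefore vanishes at the origin and is Lipschitz with constant 0.5, as assumption (1.3) requires. The short check below is an illustrative sketch; the helper `max_slope` and the grid it uses are hypothetical.

```python
def f(u):
    """Activation from Example 3.1: f(u) = 0.25 * (|u + 1| - |u - 1|)."""
    return 0.25 * (abs(u + 1.0) - abs(u - 1.0))


def max_slope(fn, lo=-5.0, hi=5.0, n=2001):
    """Largest difference quotient of fn over neighbouring grid points."""
    h = (hi - lo) / (n - 1)
    pts = [lo + k * h for k in range(n)]
    return max(abs(fn(q) - fn(p)) / (q - p) for p, q in zip(pts, pts[1:]))


print("f(0) =", f(0.0))                      # activation vanishes at the origin
print("estimated Lipschitz constant:", max_slope(f))
```

The estimate suggests that \(F_{i}=G_{i}=0.5\) is an admissible choice in (1.3) for this example.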

Choosing \(\alpha _{1}=\alpha _{2}=\gamma _{1}=\gamma _{2}=1\), \(\beta _{1}=8\), \(\beta _{2}=9\), \(\bar{\alpha }_{1}=\frac{1}{10}\), \(\bar{\alpha }_{2}=\frac{1}{4}\), \(\bar{ \gamma }_{1}=10\), \(\bar{\gamma }_{2}=4\), \(\bar{\beta }_{1}=1.1\), \(\bar{\beta }_{2}=1\), we obtain \(A_{1}=-0.65\), \(A_{2} =-1.2\), \(B_{1}=-2.95\), \(B_{2}=-3.6\), \(C_{1}=-2\), \(C_{2}=-4\), \(\bar{A}_{1}=-0.7715\), \(\bar{A}_{2} =-1.3375\), \(\bar{B}_{1}=-1.757\), \(\bar{B}_{2}=-4.2219\), \(\bar{C}_{1}=0.82\), \(\bar{C}_{2}=0.14\). Then (2.1) and (2.2) hold. Therefore, by Theorem 2.1, we see that the stochastic inertial neural network (3.1) is mean-square exponentially input-to-state stable. Furthermore, Fig. 1 shows this fact.
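For the constants chosen above, the quantities \(\gamma \) and \(\beta \) defined in the proof of Theorem 2.1 can be evaluated directly. The snippet below is a small arithmetic sketch using only the values listed in this example; the variable names are illustrative.

```python
# Constants from Example 3.1 (index 0 -> i = 1, index 1 -> i = 2).
alpha = [1.0, 1.0]
gamma_i = [1.0, 1.0]
beta_i = [8.0, 9.0]
alpha_bar = [0.1, 0.25]
gamma_bar = [10.0, 4.0]
beta_bar = [1.1, 1.0]

# gamma = max_i { alpha_i^2 + |alpha_i gamma_i|,
#                 beta_bar_i + alpha_bar_i^2 + |alpha_bar_i gamma_bar_i| }
candidates = []
for i in range(2):
    candidates.append(alpha[i] ** 2 + abs(alpha[i] * gamma_i[i]))
    candidates.append(beta_bar[i] + alpha_bar[i] ** 2
                      + abs(alpha_bar[i] * gamma_bar[i]))
gamma_const = max(candidates)

# beta = min_i { beta_i, beta_bar_i }
beta_const = min(beta_i + beta_bar)

print(round(gamma_const, 4), beta_const)  # 2.11 1.0
```

The maximum is attained by the barred term with \(i=1\), namely \(1.1+0.01+1=2.11\), and the minimum by \(\bar{\beta }_{2}=1\).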

Figure 1 The states and their first-order derivatives of (3.1) with initial values \((x_{1}(s),x_{2}(s),x'_{1}(s),x'_{2}(s))=(1,-3,0,0)\), \(s\in [-2,0]\)
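A trajectory of this kind can be produced with the Euler–Maruyama scheme applied to the first-order form of the model. The sketch below is not the authors' code: it assumes the form \(dx_{i}=y_{i}\,dt\), \(dy_{i}=[-a_{i}y_{i}-b_{i}x_{i}+\sum_{j}c_{ij}f(x_{j}(t))+\sum_{j}d_{ij}g(x_{j}(t-\tau _{j}))+I_{i}(t)]\,dt+\sigma _{i}x_{i}\,dB_{i}\) with \(y_{i}=x_{i}'\). The activation, the initial values, and the history interval \([-2,0]\) are taken from the example, while all coefficients, the inputs, and the function name `simulate_path` are hypothetical placeholders rather than the values of (3.1).

```python
import math
import random


def f(u):
    """Activation from Example 3.1."""
    return 0.25 * (abs(u + 1.0) - abs(u - 1.0))


def simulate_path(a, b, c, d, sigma, tau, inputs, f, g, x0, y0,
                  t_end=20.0, dt=0.01, seed=0):
    """One Euler-Maruyama path; constant history x(s)=x0, x'(s)=y0 for s<=0.

    Returns the list of x-vectors at t = 0, dt, ..., t_end and the final y.
    """
    rng = random.Random(seed)
    n = len(x0)
    lags = [int(round(tj / dt)) for tj in tau]   # delays in grid steps
    hist = max(lags)
    steps = int(round(t_end / dt))
    xs = [list(x0) for _ in range(hist + 1)]     # x on the history interval
    y = list(y0)
    sqrt_dt = math.sqrt(dt)
    for k in range(steps):
        t = k * dt
        x = xs[-1]
        new_x, new_y = [], []
        for i in range(n):
            drift = -a[i] * y[i] - b[i] * x[i] + inputs(i, t)
            for j in range(n):
                delayed = xs[-1 - lags[j]][j]    # x_j(t - tau_j)
                drift += c[i][j] * f(x[j]) + d[i][j] * g(delayed)
            dB = rng.gauss(0.0, sqrt_dt)         # independent increment per neuron
            new_x.append(x[i] + y[i] * dt)
            new_y.append(y[i] + drift * dt + sigma[i] * x[i] * dB)
        xs.append(new_x)
        y = new_y
    return xs[hist:], y


# Hypothetical coefficients and bounded inputs, NOT the values of (3.1).
path, y_end = simulate_path(
    a=[2.0, 2.5], b=[3.0, 3.5],
    c=[[0.2, -0.1], [0.1, 0.3]], d=[[0.1, 0.2], [-0.2, 0.1]],
    sigma=[0.3, 0.3], tau=[2.0, 2.0],
    inputs=lambda i, t: math.sin(t) if i == 0 else math.cos(t),
    f=f, g=f, x0=[1.0, -3.0], y0=[0.0, 0.0])
print("x(t_end) =", path[-1], " x'(t_end) =", y_end)
```

Plotting the components of `path` against time gives curves of the type shown in Fig. 1. Reproducing the figure itself would require the actual coefficients of (3.1).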

4 Concluding remarks

In this paper, we have studied the mean-square exponential input-to-state stability of a class of stochastic inertial neural networks. By applying the non-reduced order method and Lyapunov–Krasovskii functionals, we have obtained several sufficient conditions that guarantee the mean-square exponential input-to-state stability of the proposed stochastic system, a problem that few authors have considered. An example and its numerical simulation have been presented to support the theoretical result.

Availability of data and materials

Data sharing not applicable to this paper as no data sets were generated or analyzed during the current study.

References

[1] Babcock, K., Westervelt, R.: Stability and dynamics of simple electronic neural networks with added inertia. Physica D 23, 464–469 (1986)

[2] Babcock, K., Westervelt, R.: Dynamics of simple electronic neural networks. Physica D 28, 305–316 (1987)

[3] Ge, J., Xu, J.: Weak resonant double Hopf bifurcations in an inertial four neuron model with time delay. Int. J. Neural Syst. 22, 63–75 (2012)

[4] Li, C., Chen, G., Liao, L., Yu, J.: Hopf bifurcation and chaos in a single inertial neuron model with time delay. Eur. Phys. J. B 41, 337–343 (2004)

[5] Liu, Q., Liao, X., Liu, Y., Zhou, S., Guo, S.: Dynamics of an inertial two-neuron system with time delay. Nonlinear Dyn. 58, 573–609 (2009)

[6] Song, Z., Xu, J., Zhen, B.: Multi-type activity coexistence in an inertial two-neuron system with multiple delays. Int. J. Bifurc. Chaos 25, 1530040 (2015)

[7] Wheeler, D., Schieve, W.: Stability and chaos in an inertial two-neuron system. Physica D 105, 267–284 (1997)

[8] Zhao, H., Yu, X., Wang, L.: Bifurcation and control in an inertial two-neuron system with time delays. Int. J. Bifurc. Chaos 22, 1250036 (2012)

[9] Ke, Y., Miao, C.: Stability analysis of inertial Cohen–Grossberg-type neural networks with time delays. Neurocomputing 117, 196–205 (2013)

[10] Yu, S., Zhang, Z., Quan, Z.: New global exponential stability conditions for inertial Cohen–Grossberg neural networks with time delays. Neurocomputing 151, 1446–1454 (2015)

[11] Zhang, Z., Quan, Z.: Global exponential stability via inequality technique for inertial BAM neural networks with time delays. Neurocomputing 151, 1316–1326 (2015)

[12] Wang, J., Tian, L.: Global Lagrange stability for inertial neural networks with mixed time varying delays. Neurocomputing 235, 140–146 (2017)

[13] Kumar, R., Das, S.: Exponential stability of inertial BAM neural network with time-varying impulses and mixed time-varying delays via matrix measure approach. Commun. Nonlinear Sci. Numer. Simul. 81, 105016 (2020)

[14] Ke, Y., Miao, C.: Stability and existence of periodic solutions in inertial BAM neural networks with time delay. Neural Comput. Appl. 23, 1089–1099 (2013)

[15] Ke, Y., Miao, C.: Anti-periodic solutions of inertial neural networks with time delays. Neural Process. Lett. 45, 523–538 (2017)

[16] Xu, C., Zhang, Q.: Existence and global exponential stability of anti-periodic solutions for BAM neural networks with inertial term and delay. Neurocomputing 153, 108–116 (2015)

[17] Cao, J., Wan, Y.: Matrix measure strategies for stability and synchronization of inertial BAM neural network with time delays. Neural Netw. 53, 165–172 (2014)

[18] Lakshmanan, S., Prakash, M., Lim, C., Rakkiyappan, R., Balasubramaniam, P., Nahavandi, S.: Synchronization of an inertial neural network with time varying delays and its application to secure communication. IEEE Trans. Neural Netw. Learn. Syst. 29(1), 195–207 (2018)

[19] Prakash, M., Balasubramaniam, P., Lakshmanan, S.: Synchronization of Markovian jumping inertial neural networks and its applications in image encryption. Neural Netw. 83, 86–93 (2016)

[20] Rakkiyappan, R., Kumari, E., Chandrasekar, A., Krishnasamy, R.: Synchronization and periodicity of coupled inertial memristive neural networks with supremums. Neurocomputing 214, 739–749 (2016)

[21] Rakkiyappan, R., Premalatha, S., Chandrasekar, A., Cao, J.: Stability and synchronization analysis of inertial memristive neural networks with time delays. Cogn. Neurodyn. 10, 437–451 (2016)

[22] Tu, Z., Cao, J., Alsaedi, A., Alsaadi, F.: Global dissipativity of memristor-based neutral type inertial neural networks. Neural Netw. 88, 125–133 (2017)

[23] Tu, Z., Cao, J., Hayat, T.: Matrix measure based dissipativity analysis for inertial delayed uncertain neural networks. Neural Netw. 75, 47–55 (2016)

[24] Li, X., Li, X., Hu, C.: Some new results on stability and synchronization for delayed inertial neural networks based on non-reduced order method. Neural Netw. 96, 91–100 (2017)

[25] Huang, C., Liu, B.: New studies on dynamic analysis of inertial neural networks involving non-reduced order method. Neurocomputing 325, 283–287 (2019)

[26] Xu, Y.: Convergence on non-autonomous inertial neural networks with unbounded distributed delays. J. Exp. Theor. Artif. Intell. (2019). https://doi.org/10.1080/0952813X.2019.1652941

[27] Yao, L.: Global exponential stability on anti-periodic solutions in proportional delayed HIHNNs. J. Exp. Theor. Artif. Intell. (2020). https://doi.org/10.1080/0952813X.2020.1721571

[28] Huang, C.: Exponential stability of inertial neural networks involving proportional delays and non-reduced order method. J. Exp. Theor. Artif. Intell. (2019). https://doi.org/10.1080/0952813X.2019.1635654

[29] Huang, C., Yang, L., Liu, B.: New results on periodicity of non-autonomous inertial neural networks involving non-reduced order method. Neural Process. Lett. 50, 595–606 (2019)

[30] Huang, C., Liu, B., Qian, C., Cao, J.: Stability on positive pseudo almost periodic solutions of HPDCNNs incorporating D operator. Math. Comput. Simul. 190, 1150–1163 (2021)

[31] Zhang, X., Hu, H.: Convergence in a system of critical neutral functional differential equations. Appl. Math. Lett. 107, 106385 (2020)

[32] Huang, C., Huang, L., Wu, J.: Global population dynamics of a single species structured with distinctive time-varying maturation and self-limitation delays. Discrete Contin. Dyn. Syst., Ser. B (2021). https://doi.org/10.3934/dcdsb.2021138

[33] Tan, Y.: Dynamics analysis of Mackey–Glass model with two variable delays. Math. Biosci. Eng. 17(5), 4513–4526 (2020)

[34] Zhang, J., Huang, C.: Dynamics analysis on a class of delayed neural networks involving inertial terms. Adv. Differ. Equ. 2020, 120 (2020)

[35] Huang, C., Zhang, H.: Periodicity of non-autonomous inertial neural networks involving proportional delays and non-reduced order method. Int. J. Biomath. 12(2), 1950016 (2019)

[36] Huang, C., Yang, H., Cao, J.: Weighted pseudo almost periodicity of multi-proportional delayed shunting inhibitory cellular neural networks with D operator. Discrete Contin. Dyn. Syst., Ser. S 14(4), 1259–1272 (2021)

[37] Haykin, S.: Neural Networks. Prentice Hall, New York (1994)

[38] Zhu, Q., Cao, J.: Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 131, 157–163 (2014)

[39] Wang, W., Gong, S., Chen, W.: New result on the mean-square exponential input-to-state stability of stochastic delayed recurrent neural networks. Syst. Sci. Control Eng. 6(1), 501–509 (2018)

[40] Shu, Y., Liu, X., Wang, F., Qiu, S.: Exponential input-to-state stability of stochastic neural networks with mixed delays. Int. J. Mach. Learn. Cybern. 9, 807–819 (2018)

[41] Zhou, L., Liu, X.: Mean-square exponential input-to-state stability of stochastic recurrent neural networks with multi-proportional delays. Neurocomputing 219, 396–403 (2017)

[42] Zhou, W., Teng, L., Xu, D.: Mean-square exponentially input-to-state stability of stochastic Cohen–Grossberg neural networks with time-varying delays. Neurocomputing 153, 54–61 (2015)

[43] Wang, W., Chen, W.: Mean-square exponential stability of stochastic inertial neural networks. Int. J. Control (2020). https://doi.org/10.1080/00207179.2020.1834145

Funding

This work was supported by the Natural Scientific Research Fund of Zhejiang Provincial of China (grant no. LY16A010018).

Contributions

The authors declare that the study was realized in collaboration with the same responsibility. All authors read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, W., Chen, W. Mean-square exponential input-to-state stability of stochastic inertial neural networks. Adv Differ Equ 2021, 430 (2021). https://doi.org/10.1186/s13662-021-03586-4
