Abstract

In this paper, we first recall some well-known results on the solvability of the generalized Lyapunov equation and rewrite this equation as a generalized Stein equation by using the Cayley transformation. Then we introduce matrix versions of the biconjugate residual (BICR), biconjugate gradients stabilized (Bi-CGSTAB), and conjugate residual squared (CRS) algorithms. The primary motivation of this study is to avoid the increase in computational complexity caused by the Kronecker product and vectorization. Finally, we offer several numerical examples to show the effectiveness of the derived algorithms.


1 Introduction

In this paper, we consider the generalized Lyapunov equation

$$AX+XA^{T}+\sum_{j=1}^{m}N_{j}XN_{j}^{T}+C=0, \tag{1}$$

where A, \(N_{j}\in \mathbb{R}^{n\times n}\) (\(j=1,2,\ldots ,m\)) with \(m\ll n\), \(C\in \mathbb{R}^{n\times n}\) is symmetric, and \(X\in \mathbb{R}^{n\times n}\) is the symmetric solution of (1).

The generalized Lyapunov equation (1) is related to several linear matrix equations displayed in Table 1. A large and growing body of literature addresses the solution of these equations; see [1, 2] and the references therein for an overview of developments and methods.

The generalized Lyapunov equation (1) often appears in the context of bilinear systems [3, 4], stability analysis of linear stochastic systems [5, 6], special linear stochastic differential equations [7], and other areas. As an example, we describe how Eq. (1) arises from bilinear systems. Bilinear systems form an interesting subclass of nonlinear control systems that occurs naturally in some boundary control dynamics [6]. The bilinear control system has been studied for many years and has the following state space representation:

where t is the time variable, \(x(t)\in \mathbb{R}^{n}\), \(u(t)\in \mathbb{R}^{m}\), \(y(t)\in \mathbb{R}^{n}\) are the state, input, and output vectors, respectively, \(u_{j}(t)\) is the jth component of \(u(t)\), \(B \in \mathbb{R}^{n\times m}\), and A, \(N_{j}\), C are defined in (1).

For the bilinear control system (2), define

Using the concept of reachability in [3, 8], the reachability corresponding to (2) is

where P is the solution of (1).

Moreover, the generalized Lyapunov equation (1) also arises in the control of PDEs. Consider the heat equation subject to mixed boundary conditions [9]

where \(\Gamma _{1}\), \(\Gamma _{2}\), \(\Gamma _{3}\) and \(\Gamma _{4}\) are the boundaries of Ω. For example, for a simple \(2\times 2\) mesh, the state vector \(x=[x_{11}, x_{21}, x_{12}, x_{22}]^{T}\) contains the temperatures at the inner points and the Laplacian is approximated via

with meshsize \(h=1/3\). If the Robin condition is imposed on the whole boundary, then we have

Altogether this leads to the bilinear system

where \(E_{j}=e_{j}e_{j}^{T}\) with canonical unit vector \(e_{j}\in \mathbb{R}^{2}\), and

Thus, the optimal control problem for (4) reduces to the bilinear control system (2), and we ultimately need to solve the generalized Lyapunov equation:

Therefore, in view of these important applications, the solution of the generalized Lyapunov equation (1) has attracted considerable attention in recent years. Damm proposed direct methods and ADI-preconditioned Krylov subspace methods for the generalized Lyapunov equation [9]. Fan et al. transformed this equation into the generalized Stein equation by a generalized Cayley transformation and solved it using GSM [10]. Dai et al. proposed the HSS algorithm to solve the generalized Lyapunov equation. Li et al. proposed the PHSS iterative method for solving this equation when A is nonsymmetric positive definite [11]. Building on these results, we mainly discuss matrix iteration algorithms for the generalized Lyapunov equation (1).

The rest of the paper is organized as follows. In Sect. 2, we recall some known results on the solvability of the generalized Lyapunov equation and rewrite this equation as the generalized Stein equation by using the Cayley transformation. In Sect. 3, we present the matrix versions and variant forms of the BICR, Bi-CGSTAB, and CRS algorithms. In Sect. 4, we offer several numerical examples to test the effectiveness of the derived algorithms. In Sect. 5, we draw some concluding remarks.

Throughout this paper, we adopt the following notation. \(\mathbb{R}^{m\times n}\) and \(\mathbb{Z}^{+}\) denote the set of all \(m\times n\) real matrices and the set of positive integers, respectively. For \(A = (a_{ij})=(a_{1},a_{2},\ldots , a_{n})\in \mathbb{R}^{m\times n}\), the symbol \(\text{vec}(A)\) denotes the vector \(\text{vec}(A) = (a_{1}^{T},a_{2}^{T},\ldots , a_{n}^{T})^{T}\). \(A^{T}\) and \(\|A\|\) denote the transpose and the 2-norm of the matrix A, respectively. The symbol \(A\geq 0\) means that A is symmetric positive semi-definite. For \(B\in \mathbb{R}^{m\times n}\), the Kronecker product and the inner product of A and B are defined by \(A\otimes B = (a_{ij}B)\) and \(\langle A, B\rangle = \text{tr}(B^{T}A)\). The open right-half and left-half planes are denoted by \(\mathbb{C}_{+}\) and \(\mathbb{C}_{-}\), respectively.
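As a quick check of this notation, the identity \(\text{vec}(AXB)=(B^{T}\otimes A)\,\text{vec}(X)\), which underlies every Kronecker reformulation of (1), and the trace form of the inner product can be verified numerically. The sketch below uses NumPy (our choice; the experiments in Sect. 4 use MATLAB), and the helper name `vec` is ours. Column-major stacking (`order="F"`) matches the column-wise definition of vec above.

```python
import numpy as np

def vec(M):
    """Stack the columns of M into one vector (column-major order)."""
    return M.reshape(-1, order="F")

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 5))
B = rng.standard_normal((5, 2))

lhs = vec(A @ X @ B)                 # vec(AXB), length 3*2
rhs = np.kron(B.T, A) @ vec(X)       # (B^T kron A) vec(X)
print(np.allclose(lhs, rhs))

# <X, Y> = tr(Y^T X) equals the sum of entrywise products
Y = rng.standard_normal((4, 5))
print(np.isclose(np.trace(Y.T @ X), np.sum(X * Y)))
```

Computing the inner product entrywise avoids forming the product \(Y^{T}X\), which is how the matrix iterations can evaluate traces cheaply.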

2 Solvability and Cayley transformation

2.1 Solvability of the generalized Lyapunov equation

This subsection recalls results on the solvability of the generalized Lyapunov equation (1).

Denote by \(\sigma (T)\subset \mathbb{C}\) the spectrum of a linear operator T and by \(\rho (T)=\max \{|\lambda | : \lambda \in \sigma (T)\}\) its spectral radius. Define the linear matrix operators \(\mathcal{L}_{A}, \Pi :\mathbb{R}^{n\times n}\rightarrow \mathbb{R}^{n\times n}\) by

$$\mathcal{L}_{A}(X)=AX+XA^{T},\qquad \Pi (X)=\sum_{j=1}^{m}N_{j}XN_{j}^{T}. \tag{5}$$
Obviously, \(\Pi (X)\geq 0\) when \(X\geq 0\).
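Assuming, consistently with (1), that \(\mathcal{L}_{A}(X)=AX+XA^{T}\) and \(\Pi (X)=\sum_{j}N_{j}XN_{j}^{T}\), both operators can be applied without forming any \(n^{2}\times n^{2}\) matrix. The NumPy sketch below (function names are ours) also checks numerically that Π maps a positive semi-definite X to a positive semi-definite matrix, i.e., that Π is a positive operator.

```python
import numpy as np

def lyap_op(A, X):
    """L_A(X) = A X + X A^T."""
    return A @ X + X @ A.T

def pi_op(Ns, X):
    """Pi(X) = sum_j N_j X N_j^T."""
    return sum(N @ X @ N.T for N in Ns)

rng = np.random.default_rng(1)
n, m = 5, 2
Ns = [rng.standard_normal((n, n)) for _ in range(m)]
G = rng.standard_normal((n, 3))
X = G @ G.T                           # symmetric positive semi-definite (rank 3)

P = pi_op(Ns, X)
eigs = np.linalg.eigvalsh((P + P.T) / 2)
# Pi(X) is symmetric and its smallest eigenvalue is nonnegative up to rounding
print(np.allclose(P, P.T), eigs.min() >= -1e-10 * abs(eigs).max())
```

Each term \(N_{j}XN_{j}^{T}\) satisfies \(v^{T}N_{j}XN_{j}^{T}v=(N_{j}^{T}v)^{T}X(N_{j}^{T}v)\geq 0\), which is the one-line reason behind the observation above.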

Therefore, using Theorem 3.9 in [6], we immediately obtain the following stability result for the generalized Lyapunov equation.

Theorem 2.1

Let \(A\in \mathbb{R}^{n\times n}\) and Π be positive. The following conclusions are equivalent:

(a) For all \(Y>0\), \(\exists X>0\) such that \(\mathcal{L}_{A}(X)+\Pi (X)=-Y\);

(b) \(\exists Y, X>0\) such that \(\mathcal{L}_{A}(X)+\Pi (X)=-Y\);

(c) \(\exists Y\geq 0\) with \((A,Y)\) controllable, \(\exists X>0\) such that \(\mathcal{L}_{A}(X)+\Pi (X)=-Y\);

(d) \(\sigma (\mathcal{L}_{A}+\Pi )\subset \mathbb{C}_{-}\);

(e) \(\sigma (\mathcal{L}_{A})\subset \mathbb{C}_{-}\) and \(\rho (\mathcal{L}^{-1}_{A}\Pi )<1\),

where the linear matrix operators \(\mathcal{L}_{A}\) and Π are defined by (5).
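For small n, the spectral conditions \(\sigma (\mathcal{L}_{A}+\Pi )\subset \mathbb{C}_{-}\) and \(\sigma (\mathcal{L}_{A})\subset \mathbb{C}_{-}\), \(\rho (\mathcal{L}_{A}^{-1}\Pi )<1\) can be checked directly on matrix representations. Assuming \(\mathcal{L}_{A}(X)=AX+XA^{T}\) and \(\Pi (X)=\sum_{j}N_{j}XN_{j}^{T}\), these representations under the column-major vec are \(I\otimes A+A\otimes I\) and \(\sum_{j}N_{j}\otimes N_{j}\). The helper names are ours, and this brute-force check costs \(O(n^{6})\) operations, so it is only an illustration for tiny examples.

```python
import numpy as np

def kron_matrices(A, Ns):
    """Matrices of X -> A X + X A^T and X -> sum_j N_j X N_j^T acting on vec(X)."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(I, A) + np.kron(A, I)
    Pi = sum(np.kron(N, N) for N in Ns)
    return L, Pi

def check_conditions(A, Ns):
    """Return (condition (d), condition (e)) of Theorem 2.1 as Python bools."""
    L, Pi = kron_matrices(A, Ns)
    cond_d = np.linalg.eigvals(L + Pi).real.max() < 0
    cond_e = (np.linalg.eigvals(L).real.max() < 0 and
              np.abs(np.linalg.eigvals(np.linalg.solve(L, Pi))).max() < 1)
    return bool(cond_d), bool(cond_e)

# Small N_j: both conditions hold; large N_j: both fail, as the equivalence predicts.
print(check_conditions(-np.eye(3), [0.3 * np.eye(3)]))
print(check_conditions(-np.eye(3), [2.0 * np.eye(3)]))
```

In the second call, \(\mathcal{L}_{A}\) is still stable, but the noise term \(\Pi\) is too large, which illustrates why condition (e) needs both parts.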

Remark 2.1

For the generalized Lyapunov equation (1), we often choose \(C=BB^{T}\), i.e., C is symmetric positive semi-definite. By Theorem 2.1, Eq. (1) has a positive definite solution X if A is stable, \((A, B)\) is controllable, and the norms of the \(N_{j}\) are sufficiently small.
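The situation in this remark can be illustrated on a tiny example by solving the \(n^{2}\times n^{2}\) Kronecker system directly. The sketch assumes (1) has the form \(AX+XA^{T}+\sum_{j}N_{j}XN_{j}^{T}+C=0\); the matrices are our own choice: A is stable (upper triangular with negative diagonal), \(B=e_{3}\) makes \((A,B)\) controllable, and \(N_{1}=0.2I\) is small. This dense approach is exactly what the matrix algorithms of Sect. 3 are designed to avoid for large n.

```python
import numpy as np

def solve_gen_lyap_dense(A, Ns, C):
    """Solve A X + X A^T + sum_j N_j X N_j^T + C = 0 via the Kronecker system."""
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A) + np.kron(A, I) + sum(np.kron(N, N) for N in Ns)
    x = np.linalg.solve(K, -C.reshape(-1, order="F"))
    return x.reshape((n, n), order="F")

A = np.array([[-2.0, 1.0, 0.0],
              [0.0, -3.0, 1.0],
              [0.0, 0.0, -1.5]])      # stable: eigenvalues -2, -3, -1.5
B = np.array([[0.0], [0.0], [1.0]])   # (A, B) controllable for this A
Ns = [0.2 * np.eye(3)]                # small N_1
C = B @ B.T

X = solve_gen_lyap_dense(A, Ns, C)
print(np.linalg.eigvalsh((X + X.T) / 2))   # all eigenvalues should be positive
```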

2.2 Cayley transformation for (1)

In this subsection, we apply the Cayley transformation to the generalized Lyapunov equation.

It is well known that the Cayley transformation links the classical Lyapunov and Stein equations. Fan et al. [10] have shown that the stability conditions of the Lyapunov and Stein equations differ. It is therefore natural to ask whether the two formulations also behave differently when solved by iterative methods. Section 4 verifies that our iteration methods are more efficient after the Cayley transformation is applied to the generalized Lyapunov equation. We first recall the definition of the Cayley transformation.

Definition 2.1

(Cayley transformation)

Let \(M\in \mathbb{R}^{n\times n}\) be a skew-symmetric matrix. Then \(\mathcal{N}=(I+M)^{-1}(I-M)\) is called the Cayley transformation of M.
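A standard property of this definition is that the Cayley transformation of a skew-symmetric matrix is orthogonal; \(I+M\) is always nonsingular because the eigenvalues of M are purely imaginary. The NumPy sketch below (helper name ours) checks this numerically.

```python
import numpy as np

def cayley(M):
    """Return (I + M)^{-1} (I - M) without forming the inverse explicitly."""
    I = np.eye(M.shape[0])
    return np.linalg.solve(I + M, I - M)

rng = np.random.default_rng(2)
G = rng.standard_normal((4, 4))
M = G - G.T                 # skew-symmetric, so I + M is nonsingular
Q = cayley(M)
print(np.allclose(Q.T @ Q, np.eye(4)))   # Q is orthogonal
```

Orthogonality follows because \(I-M\) and \(I+M\) commute, so \(Q^{T}Q=(I+M)(I-M)^{-1}(I+M)^{-1}(I-M)=I\).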

Next, we show that the generalized Lyapunov equation can be transformed into the generalized Stein equation by the Cayley transformation.

Theorem 2.2

For the generalized Lyapunov equation (1), take the positive parameter γ such that the matrices \((\gamma I+A)\) and \((\gamma I+A^{T})\) are both nonsingular. Then (1) is equivalent to the generalized Stein equation

where

Proof

Introducing the positive parameter γ to (1), we get

Since \((\gamma I+A)\) and \((\gamma I+A^{T})\) are both nonsingular, premultiplying both sides of (7) by \((\gamma I+A)^{-1}\) and postmultiplying them by \((\gamma I+A^{T})^{-1}\) yields (6). This completes the proof of Theorem 2.2. □
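For reference, the identity behind this proof can be written out explicitly. Assuming (1) has the form \(AX+XA^{T}+\sum_{j}N_{j}XN_{j}^{T}+C=0\), a direct expansion gives

$$(\gamma I+A)X(\gamma I+A^{T})-(\gamma I-A)X(\gamma I-A^{T})=2\gamma \bigl(AX+XA^{T}\bigr),$$

so multiplying (1) by \(2\gamma\) yields

$$(\gamma I+A)X(\gamma I+A^{T})-(\gamma I-A)X(\gamma I-A^{T})+2\gamma \sum_{j=1}^{m}N_{j}XN_{j}^{T}+2\gamma C=0 .$$

Premultiplying by \((\gamma I+A)^{-1}\) and postmultiplying by \((\gamma I+A^{T})^{-1}\) then gives a Stein-type equation

$$X-A_{\gamma}XA_{\gamma}^{T}+2\gamma \sum_{j=1}^{m}\tilde{N}_{j}X\tilde{N}_{j}^{T}=-2\gamma \tilde{C},$$

with \(A_{\gamma}=(\gamma I+A)^{-1}(\gamma I-A)\), \(\tilde{N}_{j}=(\gamma I+A)^{-1}N_{j}\), and \(\tilde{C}=(\gamma I+A)^{-1}C(\gamma I+A^{T})^{-1}\). The symbols \(A_{\gamma}\), \(\tilde{N}_{j}\), \(\tilde{C}\) are our own notation, and the scaling may differ from the matrices used in (6).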

Remark 2.2

Theorem 2.2 involves a positive parameter γ. We offer a practical way to choose γ. Set

then \((\gamma I+A)\) and \((\gamma I+A^{T})\) are both nonsingular, so the condition of Theorem 2.2 is satisfied. This choice can be adjusted for specific problems.

Remark 2.3

Next, we show the relationship between the generalized Lyapunov equation (1) and the generalized Stein equation (6) from the viewpoint of preconditioning linear systems.

By utilizing the operator vec, the generalized Lyapunov equation can be rewritten as

The generalized Stein equation can be rewritten as

Hence, it is not difficult to derive the following relation between \(\mathcal{A}_{1}\) and \(\mathcal{A}_{2}\):

where

is the preconditioning matrix and the corresponding generalized Stein equation is the preconditioning system.

By Remark 2.2, the operator \(\mathcal{P}\) can be defined as

In Sect. 3, we apply this operator to derive the variant forms of the BICR, Bi-CGSTAB, and CRS algorithms. The numerical examples in Sect. 4 indicate that the resulting iteration methods are efficient.
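The preconditioning relation can be checked numerically for a tiny example. As an assumption (the exact form of \(\mathcal{P}\) is not reproduced in this text), take the Stein operator obtained by multiplying (1) by \(2\gamma\), premultiplying by \((\gamma I+A)^{-1}\), and postmultiplying by \((\gamma I+A^{T})^{-1}\). Its Kronecker matrix is then \(\mathcal{A}_{2}=\mathcal{P}\mathcal{A}_{1}\) with \(\mathcal{P}=2\gamma ((\gamma I+A)\otimes (\gamma I+A))^{-1}\). The function names and test matrices are ours.

```python
import numpy as np

def kron_lyap(A, Ns):
    """Kronecker matrix of X -> A X + X A^T + sum_j N_j X N_j^T (column-major vec)."""
    n = A.shape[0]
    I = np.eye(n)
    return np.kron(I, A) + np.kron(A, I) + sum(np.kron(N, N) for N in Ns)

def kron_stein(A, Ns, gamma):
    """Kronecker matrix of X -> X - Ag X Ag^T + 2*gamma*sum_j Nt_j X Nt_j^T,
    where Ag = (gamma I + A)^{-1}(gamma I - A) and Nt_j = (gamma I + A)^{-1} N_j."""
    n = A.shape[0]
    I = np.eye(n)
    Minv = np.linalg.inv(gamma * I + A)
    Ag = Minv @ (gamma * I - A)
    Nts = [Minv @ N for N in Ns]
    return np.eye(n * n) - np.kron(Ag, Ag) + 2 * gamma * sum(np.kron(Nt, Nt) for Nt in Nts)

A = np.array([[-2.0, 1.0, 0.0], [0.0, -3.0, 1.0], [0.0, 0.0, -1.5]])
Ns = [np.array([[0.1, 0.0, 0.2], [0.0, 0.1, 0.0], [0.3, 0.0, 0.1]])]
gamma = 1.0                                        # gamma I + A is nonsingular here
M = gamma * np.eye(3) + A

A1 = kron_lyap(A, Ns)
A2 = kron_stein(A, Ns, gamma)
Pmat = 2 * gamma * np.linalg.inv(np.kron(M, M))    # candidate preconditioner
print(np.allclose(A2, Pmat @ A1))
```

The check relies on \(\text{vec}(M^{-1}YM^{-T})=(M^{-1}\otimes M^{-1})\text{vec}(Y)\), so applying \(\mathcal{P}\) never requires the Kronecker matrix in practice: it amounts to two triangular or LU solves with \(\gamma I+A\).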

3 Iteration algorithms

This section presents the matrix versions and variant forms of the BICR, Bi-CGSTAB, and CRS algorithms in three subsections.

3.1 BICR algorithm

The BiCR method [12] was proposed as a generalization of the conjugate residual (CR) method [13] to nonsymmetric matrices. Recently, Abe and Fujino designed a BiCR variant for complex symmetric matrices (SCBiCR) and analyzed the factors behind its loss of convergence speed [14]. The BICR algorithm is formulated for linear systems and therefore cannot be applied directly to the generalized Lyapunov equation. One could convert the matrix equation into an \(n^{2}\times n^{2}\) linear system by using the Kronecker product and the vec operator. However, this makes the computation very expensive: storing the coefficient matrix requires \(O(n^{4})\) memory in general, so for large n the approach quickly becomes impractical on computers with limited memory.

Therefore, we need to modify the BICR algorithm so that its computational cost remains low. In this subsection, we propose the matrix version of the BICR algorithm (Algorithm 1) and then present its variant version (Algorithm 2).

Using the iteration schemes of Algorithms 1 and 2, we can solve the generalized Lyapunov equation directly. Further, we establish the biorthogonality properties and the convergence of Algorithm 1 in Theorems 3.1 and 3.2.
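Algorithm 1 itself is not reproduced in this version of the text. As a hedged illustration of what a matrix version of BiCR can look like, the sketch below transcribes the standard BiCR recurrences of [12] with vectors replaced by \(n\times n\) matrices, the inner product \(\langle X,Y\rangle =\text{tr}(Y^{T}X)\), and matrix-vector products replaced by \(\mathcal{L}(X)=AX+XA^{T}+\sum_{j}N_{j}XN_{j}^{T}\) and its adjoint \(\mathcal{L}^{*}(X)=A^{T}X+XA+\sum_{j}N_{j}^{T}XN_{j}\) (the two operators that appear in the Appendix). The function names, the shadow choice equal to the initial residual, the zero initial guess, and the stopping rule are our assumptions; the iterates are not claimed to coincide with Algorithm 1, and breakdown (division by zero) is not handled.

```python
import numpy as np

def op(A, Ns, X):
    """L(X) = A X + X A^T + sum_j N_j X N_j^T."""
    return A @ X + X @ A.T + sum(N @ X @ N.T for N in Ns)

def op_adj(A, Ns, X):
    """Adjoint of L for <X, Y> = tr(Y^T X): A^T X + X A + sum_j N_j^T X N_j."""
    return A.T @ X + X @ A + sum(N.T @ X @ N for N in Ns)

def inner(X, Y):
    """<X, Y> = tr(Y^T X), computed entrywise."""
    return float(np.sum(X * Y))

def matrix_bicr(A, Ns, C, tol=1e-10, maxit=500):
    """BiCR recurrences for L(X) = -C with matrix iterates, starting from X = 0."""
    X = np.zeros_like(C)
    R = -C - op(A, Ns, X)
    Rs = R.copy()                                   # shadow residual
    LR, LtRs = op(A, Ns, R), op_adj(A, Ns, Rs)
    P, LP, LtPs = R.copy(), LR.copy(), LtRs.copy()  # P, L(P), L*(P*)
    rho = inner(Rs, LR)
    r0 = np.linalg.norm(R)
    for k in range(maxit):
        if np.linalg.norm(R) <= tol * r0:
            return X, k
        alpha = rho / inner(LtPs, LP)
        X = X + alpha * P
        R = R - alpha * LP
        Rs = Rs - alpha * LtPs
        LR, LtRs = op(A, Ns, R), op_adj(A, Ns, Rs)
        rho_new = inner(Rs, LR)
        beta = rho_new / rho
        rho = rho_new
        P = R + beta * P                            # search direction
        LP = LR + beta * LP                         # L(P) by recurrence
        LtPs = LtRs + beta * LtPs                   # L*(P*) by recurrence
    return X, maxit

rng = np.random.default_rng(3)
n = 6
A = -3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
Ns = [0.2 * rng.standard_normal((n, n))]
B = rng.standard_normal((n, 1))
C = B @ B.T
X, its = matrix_bicr(A, Ns, C)
```

Each iteration applies \(\mathcal{L}\) and \(\mathcal{L}^{*}\) once, at \(O(mn^{3})\) cost, and stores only a handful of \(n\times n\) matrices instead of an \(n^{2}\times n^{2}\) coefficient matrix.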

Theorem 3.1

For Algorithm 1, assume that there exists a positive integer r such that \(W(k)\neq 0\) and \(R(k)\neq 0\) for all \(k=1, 2, \ldots , r\). Then we get

For the proof of Theorem 3.1, refer to the Appendix.

Theorem 3.2

For Algorithm 1, the relative residual error has the following property:

Proof

Using Theorem 3.1, we have

Hence, the proof of Theorem 3.2 is completed. □

Remark 3.1

In view of Theorem 3.2, the property \(\|R(k+1)\|\leq \|R(k)\|\) ensures that the residual norm of Algorithm 1 never increases, so its convergence is smooth.

3.2 Bi-CGSTAB algorithm

Sonneveld [15] proposed a variant of BiCG known as the conjugate gradient squared (CGS) method. Van der Vorst [16] derived one of the most successful variants of BiCG, the Bi-CGSTAB method, which is effective for solving large sparse linear systems [16, 17]. Chen et al. [18] proposed a flexible version of the BiCGStab algorithm for solving linear systems. Like BICR, the Bi-CGSTAB algorithm cannot be applied directly to the generalized Lyapunov equation, so we modify it into a matrix version, summarized in Algorithm 3. The variant form of the Bi-CGSTAB algorithm is shown in Algorithm 4.

From the iteration schemes, it can be seen that Algorithm 3 is a direct matrix form of the Bi-CGSTAB algorithm. Hence, Algorithm 3 has the same properties as the Bi-CGSTAB algorithm. Algorithm 4 is an improved version of the Bi-CGSTAB algorithm with higher computational efficiency, as the numerical examples in Sect. 4 show.
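Algorithms 3 and 4 are not reproduced in this text. As a hedged sketch of a matrix Bi-CGSTAB, the code below follows the standard recurrences of van der Vorst [16] with vectors replaced by \(n\times n\) matrices and the inner product \(\langle X,Y\rangle =\text{tr}(Y^{T}X)\); only \(\mathcal{L}(X)=AX+XA^{T}+\sum_{j}N_{j}XN_{j}^{T}\) is needed, never its adjoint. The zero initial guess, the shadow matrix equal to the initial residual, the names, and the stopping rule are our assumptions, not necessarily those of Algorithm 3.

```python
import numpy as np

def op(A, Ns, X):
    """L(X) = A X + X A^T + sum_j N_j X N_j^T."""
    return A @ X + X @ A.T + sum(N @ X @ N.T for N in Ns)

def inner(X, Y):
    """<X, Y> = tr(Y^T X)."""
    return float(np.sum(X * Y))

def matrix_bicgstab(A, Ns, C, tol=1e-10, maxit=500):
    """Bi-CGSTAB for L(X) = -C with matrix iterates, starting from X = 0."""
    X = np.zeros_like(C)
    R = -C - op(A, Ns, X)
    Rhat = R.copy()                        # fixed shadow residual
    r0 = np.linalg.norm(R)
    rho_old = alpha = omega = 1.0
    V = np.zeros_like(C)
    P = np.zeros_like(C)
    for k in range(maxit):
        if np.linalg.norm(R) <= tol * r0:
            return X, k
        rho = inner(Rhat, R)
        beta = (rho / rho_old) * (alpha / omega)
        P = R + beta * (P - omega * V)
        V = op(A, Ns, P)
        alpha = rho / inner(Rhat, V)
        S = R - alpha * V
        if np.linalg.norm(S) <= tol * r0:  # early exit avoids 0/0 in omega
            return X + alpha * P, k + 1
        T = op(A, Ns, S)
        omega = inner(T, S) / inner(T, T)  # local residual minimization step
        X = X + alpha * P + omega * S
        R = S - omega * T
        rho_old = rho
    return X, maxit

rng = np.random.default_rng(4)
n = 6
A = -3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
Ns = [0.2 * rng.standard_normal((n, n))]
B = rng.standard_normal((n, 1))
C = B @ B.T
X, its = matrix_bicgstab(A, Ns, C)
```

Each iteration costs two applications of \(\mathcal{L}\), the same count as BiCR, but avoids the transposed products \(A^{T}X\), \(N_{j}^{T}XN_{j}\).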

3.3 CRS algorithm

Zhang et al. proposed the conjugate residual squared (CRS) method in [19, 20] to solve nonsymmetric linear systems. The CRS algorithm mainly aims to avoid using the transpose of A in the BiCR algorithm and to obtain faster convergence at the same computational cost [19]. Recently, Ma et al. [21] used the matrix CRS iteration method to solve a class of coupled Sylvester-transpose matrix equations. Later, they extended two mathematically equivalent forms of the CRS algorithm to solve the periodic Sylvester matrix equation by applying the Kronecker product and the vectorization operator [21]. In fact, in many cases the CRS algorithm converges twice as fast as the BiCR algorithm [22, 23]. The BiCR method can be derived from the preconditioned conjugate residual (CR) algorithm [24]. In exact arithmetic, both methods terminate after a finite number of iterations. In short, we can expect the CRS algorithm to work well in many cases, and the numerical examples in Sect. 4 support this expectation.

Like BICR and Bi-CGSTAB, the CRS algorithm cannot be applied directly to the generalized Lyapunov equation, so we modify it into a matrix version, summarized in Algorithm 5. The variant version of the CRS algorithm is shown in Algorithm 6.

From the iteration schemes, it can be seen that Algorithm 5 is a direct matrix form of the CRS algorithm. Hence, Algorithm 5 has the same properties as the CRS algorithm. Algorithm 6 is the variant version of the CRS algorithm and has higher computational efficiency. The numerical examples in Sect. 4 verify the validity of these iteration algorithms.
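Algorithms 5 and 6 are not reproduced in this text, and the sketch below is not the recurrence of [19]. It illustrates the squaring idea under our own reading: BiCR with shadow residual \(R^{*}\) uses the same coefficients as BiCG with shadow \(\mathcal{L}^{*}(R^{*})\), so running the CGS recurrences of Sonneveld [15] with the shadow matrix \(\mathcal{L}^{*}(R(1))\) squares the BiCR residual polynomial in exact arithmetic. For simplicity, the adjoint is applied once to build that shadow matrix, whereas CRS avoids the transpose entirely. Names, the zero initial guess, and the stopping rule are assumptions.

```python
import numpy as np

def op(A, Ns, X):
    """L(X) = A X + X A^T + sum_j N_j X N_j^T."""
    return A @ X + X @ A.T + sum(N @ X @ N.T for N in Ns)

def op_adj(A, Ns, X):
    """Adjoint of L for <X, Y> = tr(Y^T X)."""
    return A.T @ X + X @ A + sum(N.T @ X @ N for N in Ns)

def inner(X, Y):
    """<X, Y> = tr(Y^T X)."""
    return float(np.sum(X * Y))

def matrix_crs_sketch(A, Ns, C, tol=1e-10, maxit=500):
    """CGS recurrences with shadow matrix L*(R(1)) for L(X) = -C, starting from X = 0."""
    X = np.zeros_like(C)
    R = -C - op(A, Ns, X)
    Rt = op_adj(A, Ns, R)            # adjoint applied once, to build the shadow
    r0 = np.linalg.norm(R)
    rho_old, P, Q = None, None, None
    for k in range(maxit):
        if np.linalg.norm(R) <= tol * r0:
            return X, k
        rho = inner(Rt, R)           # equals <R(1), L(R)>, the BiCR-type product
        if rho_old is None:
            U = R.copy()
            P = U.copy()
        else:
            beta = rho / rho_old
            U = R + beta * Q
            P = U + beta * (Q + beta * P)
        V = op(A, Ns, P)
        alpha = rho / inner(Rt, V)
        Q = U - alpha * V
        Uh = U + Q
        X = X + alpha * Uh
        R = R - alpha * op(A, Ns, Uh)
        rho_old = rho
    return X, maxit

rng = np.random.default_rng(5)
n = 6
A = -3.0 * np.eye(n) + 0.3 * rng.standard_normal((n, n))
Ns = [0.2 * rng.standard_normal((n, n))]
B = rng.standard_normal((n, 1))
C = B @ B.T
X, its = matrix_crs_sketch(A, Ns, C)
```

Like CGS, this squared iteration can converge quickly but irregularly; a smoothing variant such as the one in [22] addresses that behavior.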

Remark 3.2

The BICGSTAB and CRS algorithms have orthogonality properties similar to those of BICR; the proofs are analogous and are therefore omitted.

The convergence of Algorithms 2 to 6 is summarized in Theorem 3.3.

Theorem 3.3

For the generalized Lyapunov equation (1), if Algorithms 2 to 6 do not break down by division by zero, then for any initial matrix \(X(1)\in \mathbb{R}^{n\times n}\), Algorithms 2 to 6 compute the solution of (1) within a finite number of iterations in the absence of roundoff errors.

4 Numerical experiments

In this section, we give several examples to show the numerical feasibility and effectiveness of Algorithm 1 (BICR algorithm), Algorithm 3 (Bi-CGSTAB algorithm), Algorithm 5 (CRS algorithm), and their improved versions, namely Algorithm 2 (Var-BICR algorithm), Algorithm 4 (Var-Bi-CGSTAB algorithm), and Algorithm 6 (Var-CRS algorithm). Set \(tol=1.0e-8\). The numerical behavior of the iteration methods is reported in terms of the number of iteration steps (ITs), the computing time in seconds (CPU), and the relative residual error (Error). All experiments are performed in MATLAB (version R2017a) with double precision on a personal computer with a 3.20 GHz central processing unit (Intel(R) Core(TM) i5-6500 CPU), 6.00 GB memory, and the Windows 7 operating system.

Example 4.1

Consider the generalized Lyapunov equation (1) with

Set the initial value

We use Table 2 to show the error analysis for this example.

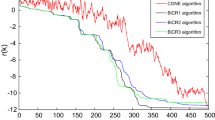

Moreover, when \(n=600\), we use Fig. 1 to show the error analysis of Algorithms 1 to 6.

Fig. 1 Comparison of the residual errors of Algorithms 1 to 6 for Example 4.1

Comparing these algorithms, we see that their efficiency improves greatly after the Cayley transformation is applied. The variant versions of the Bi-CGSTAB and CRS algorithms are the most efficient.

Example 4.2

Let P be the block tridiagonal sparse \(m^{2}\times m^{2}\) matrix given by a finite difference discretization of the heat equation (3) on an \(m\times m\) mesh, i.e.,

If the Robin condition is imposed on the whole boundary, then we have

where \(E_{j} = e_{j}e_{j}^{T}\) with canonical unit vector \(e_{j}\). The coefficient matrices \(N_{j}\) and the columns \(b_{j}\) of B corresponding to the left, upper, lower, and right boundaries are given by

Then the above heat equation’s optimal control problem reduces to solving the generalized Lyapunov equation (1).
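The interior finite difference stencil behind the block tridiagonal matrix can be assembled with Kronecker products. This is a hedged sketch: it assumes Ω is the unit square (consistent with \(h=1/3\) for the \(2\times 2\) mesh in Sect. 1), uses the ordering \(x=[x_{11},x_{21},x_{12},x_{22}]^{T}\) (first index fastest), and treats values outside the grid as zero. The Robin boundary terms, the matrices \(N_{j}\), and the columns \(b_{j}\) are not reproduced, so the matrix below is not the exact coefficient matrix of this example. The function name is ours.

```python
import numpy as np

def laplacian_2d(m):
    """5-point finite difference Laplacian on the m x m interior grid of the unit square.

    Unknowns are ordered x11, x21, ..., xm1, x12, ... (first index fastest),
    the mesh size is h = 1/(m+1), and values outside the grid are taken as zero.
    """
    h = 1.0 / (m + 1)
    T = -2.0 * np.eye(m) + np.eye(m, k=1) + np.eye(m, k=-1)   # 1D stencil [1, -2, 1]
    I = np.eye(m)
    return (np.kron(I, T) + np.kron(T, I)) / h**2

A2x2 = laplacian_2d(2)      # the 2 x 2 mesh with h = 1/3 from Sect. 1
print(np.round(A2x2, 6))
```

For \(m=2\) this gives \(9\) times the matrix with diagonal \(-4\) and off-diagonal entries \(1\) between grid neighbors; its eigenvalues \(-54,-36,-36,-18\) are all negative, so the stencil part is stable.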

Table 3 reports the residual error analysis. The Var-Bi-CGSTAB algorithm performs best among the tested algorithms.

Further, we use Fig. 2 to show the error analysis when \(n = 64\). It can be seen that the variant versions of the algorithms perform better.

Fig. 2 Comparison of the residual errors of Algorithms 1 to 6 for Example 4.2

Example 4.3

Consider the RC trapezoidal circuit with m resistors with g extensions

Since the original system is nonlinear, it is approximated by second-order Carleman bilinearization, which yields a bilinear system of order \(n= m+m^{2}\).

The matrices A, N, and b are given in [25]. The corresponding generalized Lyapunov equation is

We use Table 4 to show the residual error analysis. Further, we use Fig. 3 to show the error analysis when \(n = 8\). It can be seen that the Var-Bi-CGSTAB algorithm performs best.

Fig. 3 Comparison of the residual errors of Algorithms 3 to 6 for Example 4.3

Remark 4.1

From the three numerical examples above, it can be seen that the variant algorithms proposed in this paper greatly improve computational efficiency. In other words, the conjugate gradient-like methods are more efficient when applied to the generalized Stein equation obtained by the Cayley transformation than when applied to the original generalized Lyapunov equation.

5 Conclusions

This paper has proposed the matrix versions of the BICR algorithm, Bi-CGSTAB algorithm, and CRS algorithm to solve the generalized Lyapunov equation (1). Then we have introduced the variant versions of these three algorithms. Finally, we have provided numerical examples to illustrate the feasibility and effectiveness of the derived algorithms.

Availability of data and materials

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

[1] Kürschner, P.: Efficient Low-Rank Solution of Large-Scale Matrix Equations. Dissertation, Otto von Guericke Universität, Magdeburg (2016)

[2] Simoncini, V.: Analysis of the rational Krylov subspace projection method for large-scale algebraic Riccati equations. SIAM J. Matrix Anal. Appl. 37, 1655–1674 (2016)

[3] d'Alessandro, P., Isidori, A., Ruberti, A.: Realization and structure theory of bilinear dynamical systems. SIAM J. Control Optim. 12, 517–535 (1974)

[4] Al-Baiyat, S.A., Bettayeb, M., Al-Saggaf, U.M.: New model reduction scheme for bilinear systems. Int. J. Syst. Sci. 25, 1631–1642 (1994)

[5] Kleinman, D.L.: On the stability of linear stochastic systems. IEEE Trans. Autom. Control 14, 429–430 (1969)

[6] Benner, P., Damm, T.: Lyapunov equations, energy functionals, and model order reduction of bilinear and stochastic systems. SIAM J. Control Optim. 49, 686–711 (2011)

[7] Gray, W.S., Mesko, J.: Energy functions and algebraic Gramians for bilinear systems. IFAC Proc. Vol. 31, 101–106 (1998)

[8] Dorissen, H.: Canonical forms for bilinear systems. Syst. Control Lett. 13, 153–160 (1989)

[9] Damm, T.: Direct methods and ADI-preconditioned Krylov subspace methods for generalized Lyapunov equation. Numer. Linear Algebra Appl. 15, 853–871 (2008)

[10] Fan, H.Y., Weng, P., Chu, E.: Numerical solution to generalized Lyapunov, Stein and rational Riccati equations in stochastic control. Numer. Algorithms 71, 245–272 (2016)

[11] Li, S.Y., Shen, H.L., Shao, X.H.: PHSS iterative method for solving generalized Lyapunov equations. Mathematics 7, 1–13 (2019)

[12] Sogabe, T., Sugihara, M., Zhang, S.L.: An extension of the conjugate residual method to nonsymmetric linear systems. J. Comput. Appl. Math. 226, 103–113 (2009)

[13] Stiefel, E.L.: Relaxationsmethoden bester Strategie zur Lösung linearer Gleichungssysteme. Comment. Math. Helv. 29, 157–179 (1955)

[14] Abe, K., Fujino, S.: Converting BiCR method for linear equations with complex symmetric matrices. Appl. Math. Comput. 321, 564–576 (2018)

[15] Sonneveld, P.: CGS, a fast Lanczos-type solver for nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 10, 36–52 (1989)

[16] van der Vorst, H.A.: Bi-CGSTAB: a fast and smoothly converging variant of Bi-CG for the solution of nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 13, 631–644 (1992)

[17] Hajarian, M.: Developing Bi-CG and Bi-CR methods to solve generalized Sylvester-transpose matrix equations. Int. J. Autom. Comput. 11, 25–29 (2014)

[18] Chen, J., McInnes, L.C., Zhang, H.: Analysis and practical use of flexible BiCGStab. J. Sci. Comput. 68, 803–825 (2016)

[19] Zhang, L.T., Zuo, X.Y., Gu, T.X., Huang, T.Z.: Conjugate residual squared method and its improvement for non-symmetric linear systems. Int. J. Comput. Math. 87, 1578–1590 (2010)

[20] Zhang, L.T., Huang, T.Z., Gu, T.X., Zuo, X.Y.: An improved conjugate residual squared algorithm suitable for distributed parallel computing. Microelectron. Comput. 25, 12–14 (2008) (in Chinese)

[21] Chen, L.J., Ma, C.F.: Developing CRS iterative methods for periodic Sylvester matrix equation. Adv. Differ. Equ. 1, 1–11 (2019)

[22] Zhao, J., Zhang, J.H.: A smoothed conjugate residual squared algorithm for solving nonsymmetric linear systems. In: 2009 Second International Conference on Information and Computing Science, vol. 4, pp. 364–367 (2009)

[23] Sogabe, T., Zhang, S.L.: Extended conjugate residual methods for solving nonsymmetric linear systems. In: International Conference on Numerical Optimization and Numerical Linear Algebra, pp. 88–99 (2003)

[24] Sogabe, T., Sugihara, M., Zhang, S.L.: An extension of the conjugate residual method to nonsymmetric linear systems. J. Comput. Appl. Math. 226, 103–113 (2009)

[25] Bai, Z.J., Skoogh, D.: A projection method for model reduction of bilinear dynamical systems. Linear Algebra Appl. 415, 406–425 (2006)

Acknowledgements

The research is supported by Hunan Key Laboratory for Computation and Simulation in Science and Engineering, School of Mathematics and Computational Science, Xiangtan University.

Funding

The work was supported in part by National Natural Science Foundation of China (11771368, 11771370) and the Project of Education Department of Hunan Province (19A500).

Author information


Contributions

All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Appendix: Proof of Theorem 3.1


We prove Theorem 3.1 by mathematical induction on v and u. It suffices to prove (8)–(11) for \(1\leq u < v \leq r\).

(i) If \(v=2\) and \(u=1\), then we have

$$\begin{aligned} &\operatorname{tr}\bigl(R(2)^{T}W(1)\bigr)=\operatorname{tr}\bigl( \bigl(R(1)-\alpha (1)W(1)\bigr)^{T}W(1)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(R(2)^{T}W(1)\bigr)}=\operatorname{tr}\bigl(R(1)^{T}W(1)\bigr)-\operatorname{tr} \bigl(W(1)^{T}R(1)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(R(2)^{T}W(1)\bigr)}=0, \\ &\operatorname{tr}\bigl(S(2)^{T}Z(1)\bigr)=\operatorname{tr}\bigl( \bigl(S(1)-\beta (1)Z(1)\bigr)^{T}Z(1)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(S(2)^{T}Z(1)\bigr)}=\operatorname{tr}\bigl(S(1)^{T}Z(1)\bigr)-\operatorname{tr} \bigl(Z(1)^{T}S(1)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(S(2)^{T}Z(1)\bigr)}=0, \\ &\operatorname{tr}\bigl(Z(2)^{T}Z(1)\bigr) \\ &\quad =\operatorname{tr}\Biggl( \Biggl(A^{T}R(2)+R(2)A+\sum_{j=1}^{m}N_{j}^{T}R(2)N_{j}- \eta (1)Z(1)\Biggr)^{T}Z(1)\Biggr) \\ &\quad =\operatorname{tr}\bigl(\bigl(A^{T}R(2)\bigr)^{T}Z(1) \bigr) + \operatorname{tr}\bigl(\bigl(R(2) A\bigr)^{T} Z(1)\bigr) + \operatorname{tr}\Biggl(\Biggl( \sum_{j=1}^{m}N_{j}^{T}R(2)N_{j} \Biggr)^{T}Z(1)\Biggr) \\ &\qquad {}-\operatorname{tr}\bigl(Z(1)^{T}\bigl(A^{T}R(2)\bigr) \bigr)-\operatorname{tr}\bigl(Z(1)^{T}\bigl(R(2)A\bigr)\bigr)- \operatorname{tr}\Biggl(Z(1)^{T}\Biggl( \sum _{j=1}^{m}N_{j}^{T}R(2)N_{j} \Biggr)\Biggr) \\ &\quad =0, \end{aligned}$$

and

$$\begin{aligned} \operatorname{tr}\bigl(W(2)^{T}W(1)\bigr)={}&\operatorname{tr}\Biggl( \Biggl(AS(2)+S(2)A^{T}+\sum_{j=1}^{m}N_{j}S(2)N_{j}^{T}- \gamma (1)W(1)\Biggr)^{T}W(1)\Biggr) \\ ={}&\operatorname{tr}\Biggl(\Biggl(AS(2)+S(2)A^{T}+\sum_{j=1}^{m}N_{j}S(2)N_{j}^{T} \Biggr)^{T}W(1)\Biggr) \\ &{}-\operatorname{tr}\Biggl(W(1)^{T}\Biggl(AS(2)+S(2)A^{T}+ \sum_{j=1}^{m}N_{j}S(2)N_{j}^{T} \Biggr)\Biggr) \\ ={}&0. \end{aligned}$$

(ii) Now, for \(u< w< r\), assume that

$$\begin{aligned}& \operatorname{tr}\bigl(R(w)^{T}W(u)\bigr)=0, \\& \operatorname{tr}\bigl(S(w)^{T}Z(u)\bigr)=0, \\& \operatorname{tr}\bigl(Z(w)^{T}Z(u)\bigr)=0, \\& \operatorname{tr}\bigl(W(w)^{T}W(u)\bigr)=0. \end{aligned}$$

(iii) Next, we prove (8)–(11) for \(w+1\). Using the induction hypothesis, we get

$$\begin{aligned}& \operatorname{tr}\bigl(R(w+1)^{T}W(u)\bigr)= \operatorname{tr}\bigl( \bigl(R(w)-\alpha (w)W(w)\bigr)^{T}W(u)\bigr)=0, \\& \operatorname{tr}\bigl(S(w+1)^{T}Z(u)\bigr)= \operatorname{tr}\bigl( \bigl(S(w)-\beta (w)Z(w)\bigr)^{T}Z(u)\bigr)=0, \end{aligned}$$

and

$$\begin{aligned} &\operatorname{tr}\bigl(Z(w+1)^{T}Z(u)\bigr) \\ &\quad =\operatorname{tr}\Biggl(\Biggl(A^{T}R(w+1)+R(w+1)A+\sum _{j=1}^{m}N_{j}^{T}R(w+1)N_{j}- \eta (w)Z(w)\Biggr)^{T}Z(u)\Biggr) \\ &\quad = \frac{1}{\beta (u)}\Biggl[\operatorname{tr}\bigl(R(w+1)^{T} A\bigl(S(u)-S(u+1)\bigr)\bigr) \\ &\qquad {}+\operatorname{tr}\bigl(R(w+1)^{T} \bigl(S(u)-S(u+1)\bigr)A^{T}\bigr) \\ &\qquad {}+\operatorname{tr}\Biggl(R(w+1)^{T}\sum _{j=1}^{m}N_{j} \bigl(S(u)-S(u+1)\bigr)N_{j}^{T}\Biggr)\Biggr] \\ &\quad =\frac{1}{\beta (u)}\bigl[\operatorname{tr}\bigl(R(w+1)^{T} \bigl(W(u)+\gamma (u-1)W(u-1)\bigr)\bigr) \\ &\qquad {}-\operatorname{tr}\bigl(R(w+1)^{T}\bigl(W(u+1)+\gamma (u)W(u) \bigr)\bigr)\bigr] \\ &\quad =-\frac{1}{\beta (u)}\operatorname{tr}\bigl(R(w+1)^{T}W(u+1) \bigr), \end{aligned} \tag{12}$$

$$\begin{aligned} &\operatorname{tr}\bigl(W(w+1)^{T}W(u)\bigr) \\ &\quad =\operatorname{tr}\Biggl(\Biggl(AS(w+1)+S(w+1)A^{T}+\sum _{j=1}^{m}N_{j}S(w+1)N_{j}^{T}-\gamma (w)W(w)\Biggr)^{T}W(u)\Biggr) \\ &\quad =\operatorname{tr}\bigl(S(w+1)^{T}A^{T}W(u)\bigr)+\operatorname{tr}\bigl(S(w+1)^{T}W(u)A\bigr) +\operatorname{tr}\Biggl(S(w+1)^{T}\sum _{j=1}^{m}N_{j}^{T}W(u)N_{j}\Biggr) \\ &\quad = \frac{1}{\alpha (u)}\Biggl[\operatorname{tr}\bigl(S(w+1)^{T} A^{T}\bigl(R(u)-R(u+1)\bigr)\bigr) +\operatorname{tr}\bigl(S(w+1)^{T} \bigl(R(u)-R(u+1)\bigr)A\bigr) \\ &\qquad {}+\operatorname{tr}\Biggl(S(w+1)^{T}\sum _{j=1}^{m}N_{j}^{T} \bigl(R(u)-R(u+1)\bigr)N_{j}\Biggr)\Biggr] \\ &\quad =\frac{1}{\alpha (u)}\operatorname{tr}\bigl(S(w+1)^{T} \bigl(Z(u)+\eta (u-1)Z(u-1)-Z(u+1)-\eta (u)Z(u)\bigr)\bigr) \\ &\quad =-\frac{1}{\alpha (u)}\operatorname{tr}\bigl(S(w+1)^{T}Z(u+1)\bigr). \end{aligned} \tag{13}$$

For \(u=w\), again from the induction hypothesis we obtain

$$\begin{aligned} \operatorname{tr}\bigl(R(w+1)^{T}W(w)\bigr) &= \operatorname{tr} \bigl(\bigl(R(w)-\alpha (w)W(w)\bigr)^{T}W(w)\bigr) \\ &=\operatorname{tr}\bigl(R(w)^{T}W(w)\bigr)-\operatorname{tr} \bigl(W(w)^{T}R(w)\bigr)= 0, \\ \operatorname{tr}\bigl(S(w+1)^{T}Z(w)\bigr) &= \operatorname{tr} \bigl(\bigl(S(w)-\beta (w)Z(w)\bigr)^{T} Z(w)\bigr) \\ &= \operatorname{tr}\bigl(S(w)^{T}Z(w)\bigr)-\operatorname{tr} \bigl(Z(w)^{T}S(w)\bigr) \\ &=0, \end{aligned}$$

and

$$\begin{aligned} &\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)=\operatorname{tr} \Biggl(\Biggl(A^{T}R(w+1)+R(w+1)A \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)=}{}+\sum_{j=1}^{m}N_{j}^{T}R(w+1)N_{j}- \eta (w)Z(w)\Biggr)^{T}Z(w)\Biggr) \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)}=\operatorname{tr}\bigl(\bigl(A^{T}R(w+1)\bigr)^{T}Z(w) \bigr) + \operatorname{tr}\bigl(\bigl(R(w+1) A\bigr)^{T} Z(w)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)=}{}+\operatorname{tr}\Biggl(\Biggl(\sum_{j=1}^{m}N_{j}^{T}R(w+1)N_{j} \Biggr)^{T}Z(w)\Biggr) \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)=}{}-\operatorname{tr}\bigl(Z(w)^{T}\bigl(A^{T}R(w+1)\bigr) \bigr)-\operatorname{tr}\bigl(Z(w)^{T}\bigl(R(w+1)A\bigr)\bigr) \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)=}{}-\operatorname{tr}\Biggl(Z(w)^{T}\Biggl(\sum _{j=1}^{m}N_{j}^{T}R(w+1)N_{j} \Biggr)\Biggr) \\ &\hphantom{\operatorname{tr}\bigl(Z(w+1)^{T}Z(w)\bigr)}=0, \\ &\operatorname{tr}\bigl(W(w+1)^{T}W(w)\bigr) \\ &\quad =\operatorname{tr} \Biggl(\Biggl(AS(w+1)+S(w+1)A^{T} \\ &\qquad {}+\sum_{j=1}^{m}N_{j}S(w+1)N_{j}^{T}- \gamma (w)W(w)\Biggr)^{T}W(w)\Biggr) \\ &\quad =\operatorname{tr}\Biggl(\Biggl(AS(w+1)+S(w+1)A^{T}+\sum _{j=1}^{m}N_{j}S(w+1)N_{j}^{T} \Biggr)^{T}W(w)\Biggr) \\ &\qquad {}-\operatorname{tr}\Biggl(W(w)^{T}\Biggl(AS(w+1)+S(w+1)A^{T}+ \sum_{j=1}^{m}N_{j}S(w+1)N_{j}^{T} \Biggr)\Biggr) \\ &\quad =0. \end{aligned}$$

Noting that \(\operatorname{tr}(Z(w)^{T}Z(u)) = 0\), \(\operatorname{tr}(R(w+1)^{T}W(w)) = 0\) with (12) we deduce that

Similarly from \(\operatorname{tr}(W(w)^{T}W(u))=0\), \(\operatorname{tr}(S(w)^{T}Z(w))=0\) and (13), it can be seen that

Hence, (8)–(11) hold true for \(w+1\). Using mathematical induction, we complete the proof.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, J., Kang, H. & Li, S. Matrix iteration algorithms for solving the generalized Lyapunov matrix equation. Adv Differ Equ 2021, 221 (2021). https://doi.org/10.1186/s13662-021-03381-1
