Abstract

In this work, we formulate and analyze a new fractional-order mathematical model for the COVID-19 epidemic with an isolated class. The model is described by a system of fractional-order differential equations and includes five classes, namely, S (susceptible class), E (exposed class), I (infected class), Q (isolated class), and R (recovered class). Dynamics and numerical approximations for the proposed fractional-order model are studied. Firstly, positivity and boundedness of the model are established. Secondly, the basic reproduction number of the model is calculated by using the next generation matrix approach. Then, asymptotic stability of the model is investigated. Lastly, we apply the adaptive predictor–corrector algorithm and the fourth-order Runge–Kutta (RK4) method to simulate the proposed model. A set of numerical simulations is performed to support the validity of the theoretical results. The numerical simulations indicate good agreement between the theoretical and numerical results.

1 Introduction

Mathematical models describing infectious diseases play an important role in both theory and practice (see, for example, [6–8, 18, 34]). The construction and analysis of such models help us understand the transmission mechanism and the characteristics of diseases, and therefore allow us to propose effective strategies to predict, prevent, and restrain diseases, as well as to protect population health. Up to now, many mathematical models formulated by differential equations have been constructed and analyzed to study the spreading of viruses. Recently, mathematical models for the COVID-19 epidemic have attracted the attention of many mathematicians, biologists, epidemiologists, pharmacists, and chemists, with many notable and important results (see, for instance, [1, 5, 9, 14, 15, 19, 23, 26, 28–30, 33, 35, 36] and references therein). This can be considered an effective approach to study, simulate, and predict the mechanism and transmission of COVID-19.

Motivated by the above, in this work, we formulate and analyze a new mathematical model for the COVID-19 epidemic. The model is described by a system of fractional-order differential equations and includes five classes, namely, S (susceptible class), E (exposed class), I (infected class), Q (isolated class), and R (recovered class). It is a generalization of a well-known ODE model formulated in [35]. In the proposed fractional-order model, we use the Caputo fractional derivative instead of the integer-order one because fractional-order models can describe real-world processes and phenomena with memory more accurately than integer-order ones. In particular, fractional-order models provide more degrees of freedom and retain unlimited memory, in contrast to integer-order models with limited memory [11, 24, 27].

Our main aim is to study the dynamics and numerical approximations for the proposed fractional-order model. Firstly, the positivity and boundedness of the model are investigated based on standard techniques of mathematical analysis. Secondly, the basic reproduction number of the model is calculated by using the next generation matrix approach. Then, asymptotic stability of the model is investigated based on the Lyapunov stability theorem for fractional dynamical systems. Lastly, we apply the adaptive predictor–corrector algorithm formulated in [20] and the fourth-order Runge–Kutta (RK4) method to simulate the proposed model. A set of numerical simulations is performed to support the validity of the theoretical results. The numerical simulations indicate good agreement between the theoretical and numerical results.

This work is organized as follows. The fractional-order differential model is formulated in Sect. 2. The dynamics of the model are investigated in Sect. 3. Numerical simulations by the adaptive predictor–corrector algorithm are performed in Sect. 4. The last section presents some remarks, conclusions, and discussions.

2 Model formulation

The total population is divided into five compartments: susceptible (S), exposed (E), infected (I), isolated (Q), and recovered (R) from the disease. We assume that all the classes are normalized. This leads to the mathematical model formulation in which the interaction of the exposed population and infected population is linked to the susceptible population. In this model, we assume that the natural death rate includes the disease death rate. When there is no symptom of the disease, the exposed class moves with a certain rate to the isolated class, but in the case when symptoms are developed, it moves to the infected class. Keeping in mind the above assumptions, we obtain the following ODE model (see [35]):

where the parameters and variables used are described in Table 1.

Let us introduce the notation

From the ODE system (1), we obtain

which implies that the total population is constant in this case, because \(\varLambda =\mu N\).
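For orientation, the verbal description above is consistent with a system of the following form. This is a reconstruction from the stated assumptions and from the parameter names used in Sect. 4.2, so it should be read as an assumption to be checked against [35], not as a quotation of system (1):

\[
\begin{aligned}
\frac{dS}{dt} &= \varLambda - \mu S - \beta S (E + I), \\
\frac{dE}{dt} &= \beta S (E + I) - \pi E - (\mu + \gamma ) E, \\
\frac{dI}{dt} &= \pi E - \sigma I - \mu I, \\
\frac{dQ}{dt} &= \gamma E + \sigma I - \theta Q - \mu Q, \\
\frac{dR}{dt} &= \theta Q - \mu R .
\end{aligned}
\]

Under this assumed form, adding the five equations cancels every transfer term. Writing \(N = S + E + I + Q + R\), one is left with

\[
\frac{dN}{dt} = \varLambda - \mu N ,
\]

which vanishes exactly when \(\varLambda = \mu N\).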

To incorporate the past history, or hereditary properties, into the model (1), we replace the first derivative by the Caputo fractional derivative. More precisely, we propose the following system of fractional differential equations:

where \(0 < \alpha < 1\), and \({}_{0}^{C}D_{t}^{\alpha }\) denotes the fractional derivative in the Caputo sense. We refer the readers to [2–4, 10, 25] for the definition of the fractional Caputo derivative.
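For convenience, we recall the standard definition for the range of \(\alpha \) used here (see, e.g., [25]). For \(0 < \alpha < 1\) and a sufficiently smooth function f,

\[
{}_{0}^{C}D_{t}^{\alpha } f(t) = \frac{1}{\Gamma (1-\alpha )} \int _{0}^{t} (t-s)^{-\alpha } f'(s) \,ds .
\]

The kernel \((t-s)^{-\alpha }\) weights the whole history of \(f'\) on \([0,t]\), which is the memory effect referred to above. As \(\alpha \to 1^{-}\), the operator recovers the ordinary derivative \(f'(t)\).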

3 Dynamics of the fractional-order model

3.1 Positivity and boundedness

Let us denote

The following theorem is proved by using a generalized mean value theorem [21, 22] and a fractional comparison principle [16, Lemma 10].

Theorem 1

(Positivity and boundedness)

Let \((S_{0}, E_{0}, I_{0}, Q_{0})\) be any initial data belonging to \(\mathbb{R}_{+}^{4}\) and \((S(t), E(t), I(t), Q(t) )\) be the solution corresponding to the initial data. Then, the set \(\mathbb{R}^{4}_{+}\) is a positively invariant set of the model (3). Furthermore, we have

Proof

First, the existence and uniqueness of solutions of the model follow from the results proved in [17].

For the model (3), we have

By (5) and the generalized mean value theorem [21, 22], we deduce that \(S(t), E(t), I(t), Q(t) \geq 0\) for all \(t \geq 0\).

From the first equation of the system (3), we obtain

By using the fractional comparison principle, we have the first estimate of (4).
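This step can be spelled out as follows, under the assumption that the inequality obtained from the first equation has the form \({}_{0}^{C}D_{t}^{\alpha } S \leq \varLambda - \mu S\) (which holds whenever the infection term is nonnegative). Comparing with the linear equation \({}_{0}^{C}D_{t}^{\alpha } u = \varLambda - \mu u\), \(u(0) = S_{0}\), gives

\[
S(t) \leq \biggl( S_{0} - \frac{\varLambda }{\mu } \biggr) E_{\alpha } \bigl(-\mu t^{\alpha } \bigr) + \frac{\varLambda }{\mu }, \qquad E_{\alpha }(z) = \sum_{k=0}^{\infty } \frac{z^{k}}{\Gamma (\alpha k + 1)},
\]

where \(E_{\alpha }\) is the one-parameter Mittag-Leffler function. Since \(E_{\alpha }(-\mu t^{\alpha }) \to 0\) as \(t \to \infty \), this yields \(\limsup_{t \to \infty } S(t) \leq \varLambda /\mu \).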

From the second equation of the system (3), we have

which implies that

Consequently, we have the second estimate of (4).

From the third equation of the system (3), we get

for t large enough. This implies the third estimate of (4).

From the fourth equation of the system (3), we have

for t large enough. From this we get the fourth estimate of (4).

Finally, the fifth equation of (3) implies that

which implies the fifth estimate of (4). The proof is complete. □

3.2 Equilibria and the reproduction number

Firstly, to find equilibria of the model (3), we consider the following algebraic system:

By some algebraic manipulations, we obtain two solutions of the system (6) that are

and

Note that \(S^{*}\), \(E^{*}\), \(I^{*}\), \(Q^{*}\) and \(R^{*}\) are positive if and only if

We now compute the reproduction number of the model (3) by using the next generation matrix approach developed by van den Driessche and Watmough [31]. Let \(x = (E, I, Q, R, S)\). We rewrite the model (3) in the matrix form

where

Hence, the reproduction number of the model (3) can be determined by

It is easy to verify that \(S^{*}\), \(E^{*}\), \(I^{*}\), \(Q^{*}\), and \(R^{*} > 0\) if and only if \(\mathcal{R}_{0} > 1\).
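The next generation matrix computation can be illustrated numerically. The sketch below is not taken from the article: it assumes the model has the SEIQR form of [35], with new infections \(\beta S(E+I)\) entering E, and it restricts attention to the infected compartments (E, I). The function names and the closed-form expression are illustrative and may differ in presentation from the formula obtained above.

```python
import math


def next_generation_r0(Lam, mu, beta, pi_, gamma, sigma):
    """Spectral radius of F V^{-1} for the infected compartments (E, I).

    Assumed model form (hypothetical, see lead-in):
      new infections into E:  beta * S * (E + I)
      outflow from E:         (pi_ + mu + gamma) * E
      flow E -> I:            pi_ * E, outflow from I: (sigma + mu) * I
    """
    s0 = Lam / mu  # susceptible level at the disease-free equilibrium
    F = [[beta * s0, beta * s0], [0.0, 0.0]]
    V = [[pi_ + mu + gamma, 0.0], [-pi_, sigma + mu]]

    # Invert the 2x2 matrix V explicitly.
    det_v = V[0][0] * V[1][1] - V[0][1] * V[1][0]
    V_inv = [[V[1][1] / det_v, -V[0][1] / det_v],
             [-V[1][0] / det_v, V[0][0] / det_v]]

    # K = F V^{-1} is the next generation matrix.
    K = [[sum(F[i][k] * V_inv[k][j] for k in range(2)) for j in range(2)]
         for i in range(2)]

    # Eigenvalues of a 2x2 matrix from its trace and determinant.
    tr = K[0][0] + K[1][1]
    det = K[0][0] * K[1][1] - K[0][1] * K[1][0]
    root = math.sqrt(max(tr * tr - 4.0 * det, 0.0))
    return max(abs((tr + root) / 2.0), abs((tr - root) / 2.0))


def closed_form_r0(Lam, mu, beta, pi_, gamma, sigma):
    """Closed form implied by the assumed F and V above."""
    return beta * Lam * (pi_ + sigma + mu) / (mu * (pi_ + mu + gamma) * (sigma + mu))


# Parameter values quoted in Sect. 4.2.
r0_numeric = next_generation_r0(0.145, 0.000411, 0.00038, 0.00211, 0.0021, 0.0169)
r0_formula = closed_form_r0(0.145, 0.000411, 0.00038, 0.00211, 0.0021, 0.0169)
print(r0_numeric, r0_formula)
```

Because the second row of F is zero, the next generation matrix has a single nonzero eigenvalue, so the spectral radius and the closed form agree up to rounding.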

Theorem 2

(Equilibria)

The model (3) always possesses a disease-free equilibrium (DFE) point \(F_{0} = (S_{0}, E_{0}, I_{0}, Q_{0}, R_{0})\) given by (8) for all values of the parameters. Moreover, the model has a unique disease endemic equilibrium point \(F^{*} = (S^{*}, E^{*}, I^{*}, Q^{*}, R^{*})\) given by (9) if and only if \(\mathcal{R}_{0} > 1\).

3.3 Stability analysis

Theorem 3

The DFE point of the model (3) is locally asymptotically stable if \(\mathcal{R}_{0} < 1\).

Proof

The Jacobian matrix of the model (3) at the DFE point is

The characteristic polynomial of J is

where

It is easy to verify that if \(\mathcal{R}_{0} < 1\), then \(\tau _{1} > 0\) and \(\tau _{2} > 0\). This implies that the two roots of the polynomial \(\lambda ^{2} + \tau _{1} \lambda + \tau _{2}\) have negative real parts. Consequently, the real parts of the five eigenvalues of the matrix \(J(F_{0})\) are all negative, or equivalently, \(F_{0}\) is locally asymptotically stable. The proof is complete. □
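The step from the signs of \(\tau _{1}\), \(\tau _{2}\) to the location of the roots can be made explicit. If \(\lambda _{1}\), \(\lambda _{2}\) denote the roots of \(\lambda ^{2} + \tau _{1} \lambda + \tau _{2}\), then

\[
\lambda _{1} + \lambda _{2} = -\tau _{1} < 0, \qquad \lambda _{1} \lambda _{2} = \tau _{2} > 0 ,
\]

so two real roots must both be negative, while a complex-conjugate pair has common real part \(-\tau _{1}/2 < 0\). For a Caputo system of order \(0 < \alpha < 1\), the standard linearization criterion asks that every eigenvalue of the Jacobian satisfy \(|\arg \lambda | > \alpha \pi /2\). An eigenvalue with negative real part satisfies \(|\arg \lambda | > \pi /2 > \alpha \pi /2\), so the criterion is met.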

We now prove the uniform asymptotic stability of the DFE point of the model (3) by using the Lyapunov stability theorem [12, Theorem 3.1].

Theorem 4

If \(\mathcal{R}_{0} < 1\), then the DFE point of the model (3) is not only locally asymptotically stable but also uniformly asymptotically stable.

Proof

Since the first three equations of the model (3) do not depend on the states Q and R, we need only consider the following subsystem:

From (4), it is sufficient to consider the model (3) in its feasible set defined by

Consider a Lyapunov function \(V: \varOmega \to \mathbb{R}_{+}\) given by

By using the linearity property of the Caputo derivative and [32, Lemma 3.1] and some algebraic manipulations, we obtain

where

It is easy to check that if \(\mathcal{R}_{0} < 1\), then \(\tau _{1}, \tau _{2} < 0\). Consequently, by the Lyapunov stability theorem for fractional dynamical systems, we deduce that \(F_{0}\) is uniformly asymptotically stable. The proof is complete. □

Remark 1

The analysis of stability of \(F^{*}\) is an interesting mathematical problem, but in this work, we mainly focus on the case \(\mathcal{R}_{0} < 1\) to find an effective strategy to prevent the disease.

3.4 \(\mathcal{R}_{0}\) sensitivity analysis

Theorem 3 suggests that we should control the parameters such that \(\mathcal{R}_{0} < 1\). This provides a good strategy to prevent and restrain the disease. More precisely, when \(\mathcal{R}_{0} < 1\), then

which means that the disease will be completely controlled and prevented. Motivated by this, we now perform an \(\mathcal{R}_{0}\) sensitivity analysis to find ways to choose suitable parameters.

It is easy to verify that

Equation (16) suggests some ways to choose the parameters such that \(\mathcal{R}_{0} < 1\). Hence, based on this, we can propose suitable strategies to control and prevent the disease.
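One way to explore such a sensitivity analysis numerically is through normalized forward sensitivity indices, \((\partial \mathcal{R}_{0} / \partial p)(p / \mathcal{R}_{0})\), approximated by central differences. The sketch below is illustrative only: the expression used for \(\mathcal{R}_{0}\) is an assumption derived from the SEIQR form of [35] and should be replaced by the article's own formula if it differs, and the function and dictionary names are hypothetical.

```python
def r0(p):
    """Assumed closed form of the reproduction number (see lead-in)."""
    return (p["beta"] * p["Lambda"] * (p["pi"] + p["sigma"] + p["mu"])
            / (p["mu"] * (p["pi"] + p["mu"] + p["gamma"]) * (p["sigma"] + p["mu"])))


def sensitivity_index(p, name, rel_step=1e-6):
    """Normalized forward sensitivity index of R0 with respect to p[name].

    The derivative is approximated by a central difference with a step
    proportional to the parameter value.
    """
    step = p[name] * rel_step
    up, down = dict(p), dict(p)
    up[name] += step
    down[name] -= step
    derivative = (r0(up) - r0(down)) / (2.0 * step)
    return derivative * p[name] / r0(p)


# Parameter values quoted in Sect. 4.2 (theta does not enter the assumed R0).
params = {"Lambda": 0.145, "mu": 0.000411, "beta": 0.00038,
          "pi": 0.00211, "gamma": 0.0021, "sigma": 0.0169}

indices = {name: sensitivity_index(params, name) for name in params}
for name, value in sorted(indices.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>6s}: {value:+.4f}")
```

A positive index means that increasing the parameter increases \(\mathcal{R}_{0}\), and a negative index means the opposite. Parameters with the largest absolute index are the most effective targets when trying to bring \(\mathcal{R}_{0}\) below one.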

4 Numerical simulations by the adaptive predictor–corrector algorithm

4.1 The adaptive predictor–corrector algorithm

In this section, we review the method proposed in [20]. The algorithm is given as follows. Consider the initial value problem (IVP) governed by:

where \(D^{\alpha ,\rho }_{a+}\) is the generalized Caputo-type fractional derivative operator given in [20, Definition 4]. For \(m-1< \alpha \leq m\), \(a\geq 0\), \(\rho >0\) and \(y\in C^{m} ([a,T])\), the IVP (17) is equivalent, by [20, Theorem 3], to the Volterra integral equation:

where

The first step of our algorithm, under the assumption that the function f is such that a unique solution exists on some interval \([a,T]\), consists of dividing the interval \([a,T]\) into N unequal subintervals \([t_{k},t_{k+1} ]\), \(k=0,1,\ldots ,N-1\) using the mesh points

where \(h=\frac{(T^{\rho }-a^{\rho })}{N}\). Now, we are going to generate the approximations \(y_{k}\), \(k=0,1,\ldots ,N\), to solve numerically the IVP (17). The basic step, assuming that we have already evaluated the approximations \(y_{j}\approx y(t_{j} )\) (\(j=1,2,\ldots ,k\)), is that we want to get the approximation \(y_{k+1}\approx y(t_{k+1})\) by means of the integral equation

Making the substitution

we get

that is,

Next, if we use the trapezoidal quadrature rule with respect to the weight function \((t_{k+1}^{\rho }-z)^{\alpha -1}\) to approximate the integrals appearing on the right-hand side of Eq. (24), replacing the function \(f (z^{\frac{1}{\rho }},y(z^{\frac{1}{\rho }}) )\) by its piecewise linear interpolant with nodes chosen at the \(t_{j}^{\rho } \) (\(j=0,1,\ldots ,k+1\)), then we obtain

Thus, substituting the above approximations into Eq. (24), we obtain the corrector formula for \(y(t_{k+1})\), \(k=0,1,\ldots ,N-1\),

where

The last step of our algorithm is to replace the quantity \(y(t_{k+1})\) on the right-hand side of formula (26) with the predictor value \(y^{p} (t_{k+1})\), which can be obtained by applying the one-step Adams–Bashforth method to the integral equation (23). In this case, by replacing the function \(f (z^{\frac{1}{\rho }},y(z^{\frac{1}{\rho }}) )\) with the quantity \(f (t_{j},y(t_{j} ) )\) at each integral in Eq. (24), we get

Therefore, the present adaptive predictor–corrector algorithm, for evaluating the approximation \(y_{k+1}\approx y(t_{k+1})\), is completely described by the formula

where \(y_{j}\approx y(t_{j} )\), \(j=0,1,\ldots ,k\), and the predicted value \(y_{k+1}^{p}\approx y^{p} (t_{k+1})\) can be determined as described in Eq. (28) with the weights \(a_{j,k+1}\) being defined according to (27). When \(\rho =1\), the present adaptive predictor–corrector algorithm reduces to the predictor–corrector approach presented in [13].
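The steps above can be sketched in code for \(0 < \alpha \leq 1\). The sketch below is a minimal illustration, not the authors' implementation: the mesh \(t_{k} = (a^{\rho } + kh)^{1/\rho }\), the predictor weights \((k+1-j)^{\alpha } - (k-j)^{\alpha }\), and the corrector weights are written as recalled from [13, 20] and should be checked against Eqs. (20)–(29). The function name `generalized_pc` and its argument order are hypothetical.

```python
import math


def generalized_pc(f, y0, alpha, rho, a, T, N):
    """Predictor-corrector sketch for D^{alpha,rho} y = f(t, y), y(a) = y0.

    f maps (t, list) -> list; y0 is a list; 0 < alpha <= 1; rho > 0.
    Returns the mesh points and the list of approximate states.
    """
    h = (T ** rho - a ** rho) / N
    # Mesh that is uniform in the variable t**rho (assumed form of the mesh).
    t = [(a ** rho + k * h) ** (1.0 / rho) for k in range(N + 1)]
    dim = len(y0)
    ys = [list(y0)]
    fs = [f(t[0], ys[0])]  # stored right-hand sides f(t_j, y_j)

    c_pred = rho ** (-alpha) * h ** alpha / math.gamma(alpha + 1)
    c_corr = rho ** (-alpha) * h ** alpha / math.gamma(alpha + 2)

    for k in range(N):
        # Predictor: rectangle-type rule over the whole history.
        b = [(k + 1 - j) ** alpha - (k - j) ** alpha for j in range(k + 1)]
        y_pred = [y0[i] + c_pred * sum(b[j] * fs[j][i] for j in range(k + 1))
                  for i in range(dim)]

        # Corrector weights a_{j,k+1} of the product trapezoidal rule.
        w = [k ** (alpha + 1) - (k - alpha) * (k + 1) ** alpha]
        w += [(k - j + 2) ** (alpha + 1) + (k - j) ** (alpha + 1)
              - 2.0 * (k - j + 1) ** (alpha + 1) for j in range(1, k + 1)]

        f_pred = f(t[k + 1], y_pred)
        y_next = [y0[i] + c_corr * (sum(w[j] * fs[j][i] for j in range(k + 1))
                                    + f_pred[i])
                  for i in range(dim)]
        ys.append(y_next)
        fs.append(f(t[k + 1], y_next))
    return t, ys


# Usage: fractional relaxation D^alpha y = -y, y(0) = 1, with rho = 1.
mesh, states = generalized_pc(lambda s, y: [-y[0]], [1.0], 0.9, 1.0, 0.0, 1.0, 50)
print(mesh[-1], states[-1][0])
```

Each step sums over the whole stored history, so the cost grows quadratically with N. This is the price of the memory carried by the fractional operator.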

4.2 Implementation with numerical simulation

In this section, we numerically solve the model (3) using the method given in the previous section. In view of the above algorithm, and following the rule (27), the approximations \(S_{k+1}\), \(E_{k+1}\), \(I_{k+1}\), \(Q_{k+1}\), and \(R_{k+1}\) can be evaluated using the following iterative formulas, for \(N\in \mathbb{N}\) and \(T>0\),

where \(h=\frac{T^{\rho }}{N}\) and

The parameter values used in the numerical example, calculated for the baseline period of the coronavirus outbreak, are \(\varLambda =0.145\); \(\mu =0.000411\); \(\beta =0.00038\); \(\pi =0.00211\); \(\gamma =0.0021\); \(\sigma =0.0169\); \(\theta =0.0181\), with total population \(N = 355\) and initial data \((S_{0},E_{0},I_{0},Q_{0},R_{0} )=(153,55,79,68,20)\).

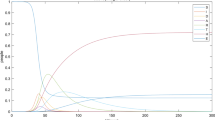

In Figs. 1–5, we plot numerical solutions of the model (3) with \(T=30\) obtained using the proposed algorithm and the RK4 method when \(N=355\) and \((S_{0},E_{0},I_{0},Q_{0},R_{0} )=(153,55,79,68,20)\) for \(\alpha =1\). The graphical results in Figs. 1–5 show that the results obtained using the proposed algorithm closely match those of the RK4 method, which indicates that the presented method reproduces the behavior of these variables accurately over the interval under consideration.
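For readers who wish to reproduce the integer-order reference solution (\(\alpha =1\)), a classical RK4 integration can be sketched as follows. The right-hand side is an assumption: it encodes the SEIQR form of [35] as described in Sect. 2 and is not copied from system (1). The step count and the names `seiqr_rhs`, `rk4`, `PARAMS`, and `Y0` are illustrative choices.

```python
def seiqr_rhs(y, p):
    """Assumed right-hand side of the integer-order SEIQR model (alpha = 1)."""
    S, E, I, Q, R = y
    infection = p["beta"] * S * (E + I)
    return [
        p["Lambda"] - p["mu"] * S - infection,
        infection - p["pi"] * E - (p["mu"] + p["gamma"]) * E,
        p["pi"] * E - (p["sigma"] + p["mu"]) * I,
        p["gamma"] * E + p["sigma"] * I - (p["theta"] + p["mu"]) * Q,
        p["theta"] * Q - p["mu"] * R,
    ]


def rk4(rhs, y0, p, T, n_steps):
    """Classical fourth-order Runge-Kutta method with a fixed step T / n_steps."""
    h = T / n_steps
    y = list(y0)
    trajectory = [list(y)]
    for _ in range(n_steps):
        k1 = rhs(y, p)
        k2 = rhs([yi + 0.5 * h * ki for yi, ki in zip(y, k1)], p)
        k3 = rhs([yi + 0.5 * h * ki for yi, ki in zip(y, k2)], p)
        k4 = rhs([yi + h * ki for yi, ki in zip(y, k3)], p)
        y = [yi + h / 6.0 * (a + 2.0 * b + 2.0 * c + d)
             for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]
        trajectory.append(list(y))
    return trajectory


# Parameter values and initial data quoted in Sect. 4.2.
PARAMS = {"Lambda": 0.145, "mu": 0.000411, "beta": 0.00038, "pi": 0.00211,
          "gamma": 0.0021, "sigma": 0.0169, "theta": 0.0181}
Y0 = [153.0, 55.0, 79.0, 68.0, 20.0]

trajectory = rk4(seiqr_rhs, Y0, PARAMS, T=30.0, n_steps=3000)
print([round(v, 3) for v in trajectory[-1]])
```

Since the assumed right-hand sides sum to \(\varLambda - \mu (S+E+I+Q+R)\), the computed total population can be compared with the exact solution of this linear equation as a quick consistency check on an implementation.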

Figures 6–10 show the approximate solutions for \(S(t)\), \(E(t)\), \(I(t)\), \(Q(t)\), and \(R(t)\) obtained for different values of α using the proposed algorithm. The graphical results in Figs. 6–10 indicate that the approximate solutions depend continuously on the order α of the fractional derivative. The accuracy of this approach can be improved by decreasing the step size, at the expense of additional computation.

5 Remarks and conclusions

In this paper, we have formulated and analyzed a new mathematical model for the COVID-19 epidemic. The model is described by a system of fractional-order differential equations and includes five classes, namely, S (susceptible class), E (exposed class), I (infected class), Q (isolated class), and R (recovered class). It should be emphasized that the model is a generalization of the model proposed in our recent work [35].

Firstly, the positivity, boundedness, and stability of the model have been established. Furthermore, the basic reproduction number of the model has been calculated by using the next generation matrix approach. Lastly, we have applied the adaptive predictor–corrector algorithm and fourth-order Runge–Kutta (RK4) method to simulate the proposed model. A set of numerical simulations has been performed to support the validity of the theoretical results. The numerical simulations indicate that there is a good agreement between theoretical results and numerical ones.

In the near future, we will extend the results of this work to propose new mathematical models for the COVID-19 epidemic. In particular, effective strategies to control and prevent the disease will be investigated.

References

Abdo, M.S., Hanan, K.S., Satish, A.W., Pancha, K.: On a comprehensive model of the novel coronavirus (COVID-19) under Mittag-Leffler derivative. Chaos Solitons Fractals 135, 109867 (2020)

Atangana, A.: Fractal-fractional differentiation and integration: connecting fractal calculus and fractional calculus to predict complex system. Chaos Solitons Fractals 102, 396–406 (2017). https://doi.org/10.1016/j.chaos.2017.04.027

Atangana, A.: Blind in a commutative world: simple illustrations with functions and chaotic attractors. Chaos Solitons Fractals 114, 347–363 (2018). https://doi.org/10.1016/j.chaos.2018.07.022

Atangana, A.: Fractional discretization: the African’s tortoise walk. Chaos Solitons Fractals 130, 109399 (2020). https://doi.org/10.1016/j.chaos.2019.109399

Bekiros, S., Kouloumpou, D.: SBDiEM: a new mathematical model of infectious disease dynamics. Chaos Solitons Fractals 136, 109828 (2020)

Bocharov, G., Volpert, V., Ludewig, B., Meyerhans, A.: Mathematical Immunology of Virus Infections. Springer, Berlin (2018)

Brauer, F.: Mathematical epidemiology: past, present, and future. Infect. Dis. Model. 2, 113–127 (2017)

Brauer, F., van den Driessche, P., Wu, J.: Mathematical Epidemiology. Springer, Berlin (2008)

Cakan, S.: Dynamic analysis of a mathematical model with health care capacity for COVID-19 pandemic. Chaos Solitons Fractals 139, 110033 (2020)

Caputo, M.: Linear models of dissipation whose Q is almost frequency independent-II. Geophys. J. Int. 13(5), 529–539 (1967). https://doi.org/10.1111/j.1365-246X.1967.tb02303.x

Daftardar-Gejji, V.: Fractional Calculus and Fractional Differential Equations. Birkhäuser, Basel (2019)

Delavari, H., Baleanu, D., Sadati, J.: Stability analysis of Caputo fractional-order nonlinear systems revisited. Nonlinear Dyn. 67, 2433–2439 (2012)

Diethelm, K., Ford, N., Freed, A.: A predictor–corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn. 29, 3–22 (2002)

Higazy, M.: Novel fractional order SIDARTHE mathematical model of COVID-19 pandemic. Chaos Solitons Fractals 138, 110007 (2020)

Kumar, S., Cao, J., Abdel-Aty, M.: A novel mathematical approach of COVID-19 with non-singular fractional derivative. Chaos Solitons Fractals 139, 110048 (2020)

Li, Y., Cheng, Y., Podlubny, I.: Mittag-Leffler stability of fractional order nonlinear dynamic systems. Automatica 45, 1965–1969 (2009)

Lin, W.: Global existence theory and chaos control of fractional differential equations. J. Math. Anal. Appl. 332(1), 709–726 (2007). https://doi.org/10.1016/j.jmaa.2006.10.040

Martcheva, M.: An Introduction to Mathematical Epidemiology. Springer, New York (2015)

Ming, W., Huang, J.V., Zhang, C.J.P.: Breaking down of the healthcare system: mathematical modelling for controlling the novel coronavirus (2019-nCoV) outbreak in Wuhan, China (2020). https://doi.org/10.1101/2020.01.27.922443

Odibat, Z., Baleanu, D.: Numerical simulation of initial value problems with generalized Caputo-type fractional derivatives. Appl. Numer. Math. 156, 94–110 (2020)

Odibat, Z., Baleanu, D.: Numerical simulation of initial value problems with generalized Caputo-type fractional derivatives. Appl. Numer. Math. 156, 94–105 (2020). https://doi.org/10.1016/j.apnum.2020.04.015

Odibat, Z., Shawagfeh, N.: Generalized Taylor’s formula. Appl. Math. Comput. 186(1), 286–293 (2007). https://doi.org/10.1016/j.amc.2006.07.102

Okuonghae, D., Omame, A.: Analysis of a mathematical model for COVID-19 population dynamics in Lagos, Nigeria. Chaos Solitons Fractals 139, 110032 (2020)

Oldham, K., Spanier, J.: The Fractional Calculus: Theory and Applications of Differentiation and Integration to Arbitrary Order. Elsevier, Amsterdam (1974)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1998)

Postnikov, E.B.: Estimation of COVID-19 dynamics “on a back-of-envelope”: does the simplest SIR model provide quantitative parameters and predictions? Chaos Solitons Fractals 135, 109841 (2020)

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives. Taylor & Francis, Milton Park (1993)

Shah, K., Abdeljawad, T., Mahariq, I., Jarad, F.: Qualitative analysis of a mathematical model in the time of COVID-19. BioMed Res. Int. 2020, Article ID 5098598 (2020). https://doi.org/10.1155/2020/5098598

Torrealba-Rodriguez, O., Conde-Gutiérrez, R.A., Hernández-Javiera, A.L.: Modeling and prediction of COVID-19 in Mexico applying mathematical and computational models. Chaos Solitons Fractals 138, 109946 (2020)

Ud Din, R., Shah, K., Ahmad, I., Abdeljawad, T.: Study of transmission dynamics of novel COVID-19 by using mathematical model. Adv. Differ. Equ. 2020, 323 (2020)

van den Driessche, P., Watmough, J.: Reproduction numbers and sub-threshold endemic equilibria for compartmental models of disease transmission. Math. Biosci. 180, 29–48 (2002)

Vargas-De-Leon, C.: Volterra-type Lyapunov functions for fractional-order epidemic systems. Commun. Nonlinear Sci. Numer. Simul. 24, 75–85 (2015)

Yousaf, M., Muhammad, S.Z., Muhammad, R.S., Shah, H.K.: Statistical analysis of forecasting COVID-19 for upcoming month in Pakistan. Chaos Solitons Fractals 138, 109926 (2020)

Zaman, G., Jung, H., Torres, D.F.M., Zeb, A.: Mathematical modeling and control of infectious diseases. Comput. Math. Methods Med. 2017, Article ID 7149154 (2017)

Zeb, A., Alzahrani, E., Erturk, V.S., Zaman, G.: Mathematical model for coronavirus disease 2019 (COVID-19) containing isolation class. BioMed Res. Int. 2020, Article ID 3452402 (2020). https://doi.org/10.1155/2020/3452402

Zhang, Z.: A novel COVID-19 mathematical model with fractional derivatives: singular and nonsingular kernels. Chaos Solitons Fractals 139, 110060 (2020). https://doi.org/10.1016/j.chaos.2020.110060

Acknowledgements

The authors thank the editor and anonymous referees for useful comments that led to a great improvement of the paper.

Availability of data and materials

The authors confirm that the data supporting the findings of this study are available within the article cited herein.

Funding

This paper was supported by the Natural Science Foundation of the Higher Education Institutions of Anhui Province (No. KJ2020A0002).

Contributions

Authors have equally contributed in preparing this manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that there is no conflict of interest regarding the publication of this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Z., Zeb, A., Egbelowo, O.F. et al. Dynamics of a fractional order mathematical model for COVID-19 epidemic. Adv Differ Equ 2020, 420 (2020). https://doi.org/10.1186/s13662-020-02873-w

DOI: https://doi.org/10.1186/s13662-020-02873-w