Abstract

We study the inhomogeneous nonlinear time-fractional Schrödinger equation with a linear potential, where the order α of the fractional time derivative satisfies \(0 < \alpha < 1\). Starting from the standard Schrödinger equation, we introduce the time-fractional Schrödinger equation by means of the Caputo fractional derivative in natural units. We approximate the inhomogeneous nonlinear time-fractional Schrödinger equation by applying a finite-difference formula for the time discretization and cubic B-splines for the spatial variable. The main advantages of this method are its simplicity of implementation and its low computational cost. We prove the convergence of the method and determine its order of convergence by deriving an upper bound for the error. Finally, we solve several examples using cubic B-splines for the spatial variable and plot the approximate and exact solutions obtained with noisy data.


1 Introduction

The Schrödinger equation is the best-known equation of quantum mechanics. It describes the behavior of particles whose states are elements of a Hilbert space. The equation can be obtained in several ways in quantum physics. For example, in the canonical quantization of mechanics, the time evolution of the wave function leads to the Schrödinger equation. Feynman [1] instead used a path-integral approach with a Gaussian probability distribution function to derive it. Mathematically, the Schrödinger equation resembles a diffusion equation, which can be derived from probability distributions.

In recent years, the term fractional has been widely used in various sciences, especially physics and mathematics. The closely related concept of a fractal was introduced by Mandelbrot [2]. Feynman's path integral first brought the concept of fractals into quantum mechanics.

The fractional Schrödinger equation was introduced by Laskin [3–7] about two decades ago as an extension of the Feynman path integral formalism, which is the basis of fractional quantum mechanics. The standard Schrödinger equation has two parts: a first-order time derivative and a second-order space derivative. Laskin [3–7] used the time-fractional Schrödinger equation. He showed that the statistical properties of the quantum paths are of the same nature as the basic equations of quantum mechanics. In Laskin's framework, the time-fractional Schrödinger equation is associated with non-Markovian evolution. He also introduced the space-fractional derivative through Lévy distributions over all possible paths. Furthermore, it was shown that the fractional Hamiltonian and parity are conserved. The energy spectra of the hydrogen atom and the harmonic oscillator were also computed with the fractional derivative. Laskin also investigated the energy levels of the fractional three-dimensional Bohr atom with a Coulomb potential; see [3–7]. Hu and Kallianpur [8] investigated initial value problems for the Schrödinger equation with a fractional Laplacian and represented the solutions probabilistically. Guo and Xu [9] computed the Green function for quantum scattering and studied the effect of a potential barrier.

Naber [10] investigated some properties of the time-fractional Schrödinger equation, such as the fractal oscillator. He obtained this equation by replacing the first-order time derivative with a Caputo fractional derivative [11], while keeping the second-order space derivative unchanged. In [10], the order of the time-fractional derivative satisfies \(0< \alpha < 1\), and with this order the equation was solved for a free particle and for a finite potential well. Wang and Xu [12] subsequently developed the Schrödinger equation with both space- and time-fractional derivatives. They solved it for a free particle and for an infinite rectangular potential well. Solutions of the fractional Schrödinger equation for the Coulomb, linear, and δ-potentials were investigated in [13].

In this paper, we study the Schrödinger equation in fractional (fractal) time, which has been discussed in [3–7, 10, 14–21]. In [14], Caputo fractional derivatives were used to represent the Planck mass and the Planck constant by fractional expressions whose dimensions are also fractional quantities. With this technique, the authors showed that the time-dependent fractional Schrödinger equation for a particle in a potential field exactly matches the standard form of the equation. In [10], the fractional-time Schrödinger wave function for a free particle and for potential wells was obtained using the Mittag-Leffler function with a complex argument, and the corresponding eigenvalues were given. In [3–7], Laskin considered a time-independent fractional Schrödinger equation and obtained several results for \(0 < \alpha < 1\). These include the form of the wave function and its exact solution, the wave functions and eigenvalues for infinite potential wells, and, in particular, the values for a linear potential field. In all of these papers, the time-fractional Schrödinger equation was investigated, and the wave functions and eigenvalues were determined for different potentials. In this paper, we use the B-spline method [22] to solve the general form of the inhomogeneous time-fractional differential equation corresponding to an inhomogeneous time-fractional Schrödinger equation, and we obtain numerical solutions. To make this concrete, we apply the method to the time-fractional Schrödinger equation for a linear potential field and present the exact solutions.

2 The inhomogeneous nonlinear time-fractional Schrödinger equation

One way to construct the time-fractional form of the nonlinear Schrödinger equation is to work in natural units, \(\hbar =c=1\). Some authors, e.g., [10], use Planck units instead; for our model the two choices are equivalent. Planck units [23] are defined in terms of three fundamental constants of theoretical physics, \(G\), \(\hbar\), and \(c\). Here G is the gravitational constant, ħ is the reduced Planck constant, and c is the speed of light:

\[ l_{P}=\sqrt{\frac{\hbar G}{c^{3}}},\qquad t_{P}=\sqrt{\frac{\hbar G}{c^{5}}},\qquad m_{P}=\sqrt{\frac{\hbar c}{G}},\qquad E_{P}=m_{P}c^{2}=\sqrt{\frac{\hbar c^{5}}{G}}, \]

where the last expression is the energy at the Planck scale. In natural units, \(\hbar =c=1\), these reduce to \(l_{P}=t_{P}=\sqrt{G}\) and \(m_{P}=E_{P}=1/\sqrt{G}\).
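As a quick numerical illustration of the Planck-unit definitions in terms of \(G\), \(\hbar\), and \(c\), the short Python sketch below evaluates them. It uses CODATA 2018 values of the constants, which are hard-coded here as an assumption since the text does not give them. It also shows that, once \(\hbar=c=1\), the Planck length equals the Planck time and the Planck mass equals the Planck energy. The value `G=2.0` in the last call is purely hypothetical.

```python
import math

# CODATA 2018 values in SI units (assumed here; not given in the text)
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 299_792_458.0        # speed of light, m s^-1

def planck_units(G=G, hbar=HBAR, c=C):
    """Return Planck length, time, mass and energy built from G, hbar and c."""
    l_p = math.sqrt(hbar * G / c**3)   # length
    t_p = math.sqrt(hbar * G / c**5)   # time (= l_p / c)
    m_p = math.sqrt(hbar * c / G)      # mass
    e_p = m_p * c**2                   # energy (= sqrt(hbar c^5 / G))
    return l_p, t_p, m_p, e_p

l_p, t_p, m_p, e_p = planck_units()
print(f"l_P = {l_p:.4e} m, t_P = {t_p:.4e} s, m_P = {m_p:.4e} kg, E_P = {e_p:.4e} J")

# Natural units: hbar = c = 1 (G = 2.0 is a hypothetical value for illustration).
nat = planck_units(G=2.0, hbar=1.0, c=1.0)
```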

To construct the nonlinear time-fractional Schrödinger equation in natural units, we start from the standard form of the Schrödinger equation. The one-dimensional Schrödinger equation, as given in [24], reads as follows:

where \(V(x)\) is the potential of the system. In the quantum mechanics literature, various potentials are chosen to model different systems. To construct a nonlinear time-fractional Schrödinger equation, we first write Eq. (2) in natural units:

We now apply the Caputo fractional derivative to introduce the nonlinear time-fractional Schrödinger equation in one space dimension:

In Eq. (4), \(D^{\nu }_{t}\) denotes the Caputo fractional time derivative of order \(0<\nu <1\); when it acts on \(\varPsi\), we also write it as \(\frac{\partial ^{\nu } \varPsi }{{\partial t}^{\nu }}\). The main aim of this paper is to study the time-fractional evolution equation (4) in a potential well.
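For reference, the block below shows the standard one-dimensional Schrödinger equation for a particle of mass \(m\) in a potential \(V(x)\), together with its form in natural units (\(\hbar=1\)). This is the usual textbook form, and we assume it corresponds to Eqs. (2) and (3). The time-fractional model (4) is obtained from the second equation by using the Caputo derivative \(D^{\nu}_{t}\) in place of \(\partial/\partial t\). The exact placement of constants in Eq. (4) is not reproduced here.

```latex
\begin{align}
  i\hbar\,\frac{\partial \Psi(x,t)}{\partial t}
    &= -\frac{\hbar^{2}}{2m}\,\frac{\partial^{2}\Psi(x,t)}{\partial x^{2}} + V(x)\,\Psi(x,t), \\
  i\,\frac{\partial \Psi(x,t)}{\partial t}
    &= -\frac{1}{2m}\,\frac{\partial^{2}\Psi(x,t)}{\partial x^{2}} + V(x)\,\Psi(x,t)
    \qquad (\hbar = 1).
\end{align}
```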

We now consider the motion of a particle in a linear potential field [25] generated by an external field. The potential arising from this field reads as follows:
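Assume, as usual, that the force and the potential are related by \(F=-\,dV/dx\), with a constant force \(F\) along the x-axis. Integrating once then gives the linear potential below. The additive constant is chosen so that \(V(0)=0\), and the sign convention may differ between references.

```latex
F = -\frac{dV}{dx} = \text{const}
\quad\Longrightarrow\quad
V(x) = -F\,x .
```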

where F is the force exerted on the particle by the external field. An example of an inhomogeneous nonlinear time-fractional Schrödinger equation with a linear potential is then given by

The Schrödinger equation is the basic equation of quantum mechanics. It governs the wave function, which generates the probability density describing the temporal and spatial evolution of a mechanical system. In this article, we consider the time-fractional Schrödinger equation

where δ is a real constant. The initial, boundary, and overspecified conditions read, respectively, as follows:

Therefore, we have

Substituting (9) into (7), we obtain the following coupled system of real differential equations:
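To illustrate how such a splitting works, consider a hypothetical model equation with a cubic nonlinearity, \(iD_{t}^{\alpha}\varPsi+\varPsi_{xx}+\delta|\varPsi|^{2}\varPsi=f\), where \(f=f_{1}+if_{2}\) is a complex source. This model is an assumption chosen for illustration and is not claimed to be Eq. (7). The Caputo derivative has a real kernel and is therefore real-linear. Writing \(\varPsi=u+iv\) and separating real and imaginary parts gives

```latex
\begin{aligned}
  -D_{t}^{\alpha} v + u_{xx} + \delta\,(u^{2}+v^{2})\,u &= f_{1}(x,t),\\
   D_{t}^{\alpha} u + v_{xx} + \delta\,(u^{2}+v^{2})\,v &= f_{2}(x,t).
\end{aligned}
```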

For a positive integer m with \(m-1<\alpha <m\), the Caputo fractional derivative of order α is defined as follows:
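For reference, the block below gives the standard definition of the Caputo derivative of order \(\alpha\) with \(m-1<\alpha<m\), and its special case \(m=1\), which is the one used for \(0<\alpha<1\). It also includes a worked example for \(t^{2}\). That example follows from a Beta-function integral and is convenient for checking time discretizations.

```latex
\begin{aligned}
  D_{t}^{\alpha}u(x,t) &= \frac{1}{\Gamma(m-\alpha)}\int_{0}^{t}(t-s)^{m-\alpha-1}\,
      \frac{\partial^{m}u(x,s)}{\partial s^{m}}\,ds, \qquad m-1<\alpha<m,\\
  D_{t}^{\alpha}u(x,t) &= \frac{1}{\Gamma(1-\alpha)}\int_{0}^{t}(t-s)^{-\alpha}\,
      \frac{\partial u(x,s)}{\partial s}\,ds, \qquad 0<\alpha<1,\\
  D_{t}^{\alpha}\,t^{2} &= \frac{1}{\Gamma(1-\alpha)}\int_{0}^{t}(t-s)^{-\alpha}\,2s\,ds
      = \frac{2\,t^{2-\alpha}}{\Gamma(3-\alpha)}.
\end{aligned}
```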

Let \(h=\frac{1}{N}\) be the step size in x and \(\Delta t=\frac{T}{M}\) the step size in t, so that \(x_{i}=ih\) and \(t_{k}=k\Delta t\). Then, at any grid point \((x_{i}, t_{k})\), we have

Using the discretization of the time-fractional derivative term given in [26], we have

where \(u_{i}^{k}\) denotes the numerical approximation of \(u(x_{i}, t_{k})\) and
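In its standard form, the discretization of [26] is the L1 scheme,
\(D_{t}^{\alpha}u(x_{i},t_{k})\approx\frac{1}{\gamma_{\alpha}}\sum_{j=0}^{k-1}b_{j}\,(u_{i}^{k-j}-u_{i}^{k-j-1})\),
with \(b_{j}=(j+1)^{1-\alpha}-j^{1-\alpha}\) and \(\gamma_{\alpha}=(\Delta t)^{\alpha}\Gamma(2-\alpha)\). Its truncation error is \(O(\Delta t^{2-\alpha})\) for smooth u. The Python sketch below implements this formula at a single spatial point. The function name `l1_caputo` is hypothetical. The check uses the exact value \(D_{t}^{\alpha}t^{2}=2t^{2-\alpha}/\Gamma(3-\alpha)\).

```python
import math
import numpy as np

def l1_caputo(u, dt, alpha):
    """L1 approximation of the Caputo derivative D_t^alpha u at t_1, ..., t_M.

    u     : samples u(t_0), ..., u(t_M) on the uniform grid t_k = k * dt
    alpha : fractional order, 0 < alpha < 1
    Returns an array of length M; entry k-1 approximates D_t^alpha u(t_k).
    """
    u = np.asarray(u, dtype=float)
    M = len(u) - 1
    j = np.arange(M)
    b = (j + 1) ** (1 - alpha) - j ** (1 - alpha)      # weights b_j
    gamma_alpha = dt ** alpha * math.gamma(2 - alpha)   # (dt)^alpha * Gamma(2 - alpha)
    diffs = np.diff(u)                                  # diffs[n] = u^{n+1} - u^n
    out = np.empty(M)
    for k in range(1, M + 1):
        # sum_{j=0}^{k-1} b_j (u^{k-j} - u^{k-j-1})
        out[k - 1] = np.dot(b[:k], diffs[k - 1::-1]) / gamma_alpha
    return out

alpha, T, M = 0.5, 1.0, 1000
t = np.linspace(0.0, T, M + 1)
approx = l1_caputo(t**2, T / M, alpha)[-1]
exact = 2.0 * T ** (2 - alpha) / math.gamma(3 - alpha)
print(f"L1: {approx:.8f}  exact: {exact:.8f}  error: {abs(approx - exact):.2e}")
```

Because the scheme interpolates u linearly on each time step, it is exact for functions that are linear in t. This gives a convenient sanity check.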

3 Implementation of the cubic B-spline functions

In this section, we introduce a set of nodes over \([0,1]\),
\[0=x_{0}<x_{1}<\cdots<x_{N}=1,\]

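and \(h=x_{i+1} - x_{i}\), \(i=0,1,\ldots, N-1\), is the step length. We extend this set by three nodes on each side with the same spacing, \(x_{-3}<x_{-2}<x_{-1}<x_{0}\) and \(x_{N}<x_{N+1}<x_{N+2}<x_{N+3}\).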

Definition 3.1

Let

Then the cubic B-spline is defined as

for \(i=-1, 0, \ldots, N+1\).

Using Definition 3.1, the values of \(B_{i}(x)\) and its derivatives at the nodes \(x_{j}\) are given by
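The nodal values are not reproduced in this text. The Python sketch below therefore assumes the widely used normalization \(B_{i}(x_{i})=4\), \(B_{i}(x_{i\pm1})=1\), which may differ from Definition 3.1 by a constant factor. Under this normalization, the B-spline centered at \(x_{i}\) equals \(4-6s^{2}+3s^{3}\) for \(s=|x-x_{i}|/h\le1\) and \((2-s)^{3}\) for \(1<s<2\). The function `cubic_bspline` is a hypothetical helper that returns the value and the first two x-derivatives.

```python
import numpy as np

def cubic_bspline(x, xi, h):
    """Value, first and second x-derivative of the cubic B-spline centred at xi.

    Assumed normalisation (may differ from Definition 3.1 by a constant factor):
    B_i(x_i) = 4, B_i(x_{i+-1}) = 1, support [xi - 2h, xi + 2h].
    """
    t = (np.asarray(x, dtype=float) - xi) / h        # signed distance in units of h
    s = np.abs(t)
    inner = s <= 1.0                                   # |x - x_i| <= h
    outer = (s > 1.0) & (s < 2.0)                      # h < |x - x_i| < 2h
    B = np.where(inner, 4 - 6 * s**2 + 3 * s**3, np.where(outer, (2 - s) ** 3, 0.0))
    dB_ds = np.where(inner, -12 * s + 9 * s**2, np.where(outer, -3 * (2 - s) ** 2, 0.0))
    d2B_ds2 = np.where(inner, -12 + 18 * s, np.where(outer, 6 * (2 - s), 0.0))
    # chain rule: ds/dx = sign(t) / h
    return B, np.sign(t) * dB_ds / h, d2B_ds2 / h**2

h = 0.1
B, dB, d2B = cubic_bspline(np.array([0.0, h, -h]), 0.0, h)   # at x_i, x_{i+1}, x_{i-1}
print(B, dB, d2B)
```

With this normalization, the splines \(B_{-1},\ldots,B_{N+1}\) sum to 6 everywhere on \([0,1]\). This is a quick consistency check of an implementation.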

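Let \(c_{i}(t)\) and \(d_{i}(t)\) be unknown time-dependent coefficients to be determined. Then u and v are approximated by
\[U(x,t)=\sum_{i=-1}^{N+1}c_{i}(t)B_{i}(x),\qquad V(x,t)=\sum_{i=-1}^{N+1}d_{i}(t)B_{i}(x).\]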
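Assume the common normalization \(B_{i}(x_{i})=4\), \(B_{i}(x_{i\pm1})=1\), \(B_{i}'(x_{i\pm1})=\mp 3/h\), \(B_{i}''(x_{i})=-12/h^{2}\), \(B_{i}''(x_{i\pm1})=6/h^{2}\), which may differ from Definition 3.1 by a constant factor. Then evaluating the expansion \(U(x,t)=\sum_{i=-1}^{N+1}c_{i}(t)B_{i}(x)\) at a node \(x_{m}\) leaves only three nonzero terms:

```latex
\begin{aligned}
  U(x_{m},t)      &= c_{m-1}+4c_{m}+c_{m+1},\\
  U_{x}(x_{m},t)  &= \frac{3}{h}\,\bigl(c_{m+1}-c_{m-1}\bigr),\\
  U_{xx}(x_{m},t) &= \frac{6}{h^{2}}\,\bigl(c_{m-1}-2c_{m}+c_{m+1}\bigr).
\end{aligned}
```

The same relations hold for \(V\) with \(d_{i}\) in place of \(c_{i}\).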

By discretizing the time-fractional derivative in Eq. (10) with the finite-difference formula above, we have

where Δt is the time step. By applying (15) and (16) at the point \(x = x_{m}\) and using (14), we have

where \(\gamma _{\alpha }=(\Delta t)^{\alpha } \varGamma (2-\alpha )\). For simplicity, we set

and

Then we have

System (17) consists of \(2(N + 1)\) equations in \(2(N + 3)\) unknown coefficients. To complete the system, we impose the boundary conditions (8), which give the following additional equations:

where \(x_{s}=x^{*}, 1\leq s \leq N-1\). This system can be rewritten as

where

The matrices \(\mathbf{M}_{1}\) and O have the same size \((N + 3)\times (N + 3)\), where O is the zero matrix and

and

where

Also,

By solving (18), the coefficients \(c_{j}\) and \(d_{j}\) are obtained.
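The counting behind system (17) can be illustrated with the nodal collocation matrices. The Python sketch below assumes the normalization \(B_{i}(x_{i})=4\), \(B_{i}(x_{i\pm1})=1\). It builds the \((N+1)\times(N+3)\) matrices that map the coefficients \(c_{-1},\ldots,c_{N+1}\) to \(U\), \(U_{x}\), and \(U_{xx}\) at the nodes \(x_{0},\ldots,x_{N}\). The function name is hypothetical, and the physical terms of (17) are not included.

```python
import numpy as np

def collocation_matrices(N, h):
    """Nodal matrices for U(x) = sum_{i=-1}^{N+1} c_i B_i(x) at x_0, ..., x_N.

    Returns (A0, A1, A2) with U(x_m) = (A0 @ c)[m], U'(x_m) = (A1 @ c)[m] and
    U''(x_m) = (A2 @ c)[m]; column 0 holds c_{-1}, so each matrix is (N+1) x (N+3).
    Assumes the normalisation B_i(x_i) = 4, B_i(x_{i+-1}) = 1 (hypothetical here).
    """
    A0 = np.zeros((N + 1, N + 3))
    A1 = np.zeros_like(A0)
    A2 = np.zeros_like(A0)
    for m in range(N + 1):
        cols = slice(m, m + 3)                   # columns of c_{m-1}, c_m, c_{m+1}
        A0[m, cols] = [1.0, 4.0, 1.0]
        A1[m, cols] = [-3.0 / h, 0.0, 3.0 / h]
        A2[m, cols] = [6.0 / h**2, -12.0 / h**2, 6.0 / h**2]
    return A0, A1, A2

N, h = 10, 0.1
A0, A1, A2 = collocation_matrices(N, h)
# Two fields (c for u, d for v): 2(N+1) collocation rows versus 2(N+3) coefficients,
# i.e. a deficit of four equations for the spline coefficients alone.
rows, cols = 2 * A0.shape[0], 2 * A0.shape[1]
print(rows, cols, cols - rows)
```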

Hence, for \(j=0, 1, \ldots, N\), we have

By using the initial and boundary conditions in (8), the initial vector \(C^{0}\) can be obtained as

or

where

Since the matrix A is singular or nearly singular (ill-conditioned), the estimate of \(X^{0}\) from (20) is unstable. We therefore adopt the Tikhonov regularization method (TRM) to solve the matrix equations (18) and (20). The regularized systems are given by

For first- and second-order TRM, the regularization matrices \(R^{(1)}\) and \(R^{(2)}\) are given by [27]

where \(M=2(N+3)\). Therefore for equations (18) and (20), by using TRM, we have
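The Python sketch below shows first- and second-order Tikhonov regularization in minimal form. It assumes that \(R^{(1)}\) and \(R^{(2)}\) are the first- and second-order difference operators of sizes \((M-1)\times M\) and \((M-2)\times M\). This is a common choice; we assume it matches [27], although scaling conventions vary. It also assumes the regularized solution is obtained from the normal equations \((A^{T}A+\sigma R^{T}R)\,x_{\sigma}=A^{T}b\). The function names and the 2×2 test system are hypothetical.

```python
import numpy as np

def regularization_matrix(M, order):
    """First- or second-order difference operator used as the Tikhonov matrix R.

    order=1 gives rows (-1, 1), shape (M-1, M); order=2 gives rows (1, -2, 1),
    shape (M-2, M).  Scaling and sign conventions are assumptions.
    """
    if order not in (1, 2):
        raise ValueError("order must be 1 or 2")
    return np.diff(np.eye(M), n=order, axis=0)

def tikhonov_solve(A, b, sigma, R):
    """Return x minimising ||A x - b||^2 + sigma * ||R x||^2 via the normal equations."""
    lhs = A.T @ A + sigma * (R.T @ R)
    return np.linalg.solve(lhs, A.T @ b)

# Illustrative nearly singular system with a slightly inconsistent right-hand side.
A = np.array([[1.0, 1.0], [1.0, 1.0 + 1e-10]])
b = np.array([2.0, 2.0 + 1e-3])
x_reg = tikhonov_solve(A, b, 1e-6, regularization_matrix(2, order=1))
print(x_reg)   # stays near (1, 1), whereas the unregularised solution is of order 1e7
```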

To choose a suitable value of the regularization parameter σ, we use the generalized cross-validation (GCV) scheme (see [28–30]).
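One common form of the GCV criterion chooses σ to minimize \(G(\sigma)=\|(I-H_{\sigma})b\|^{2}/[\operatorname{tr}(I-H_{\sigma})]^{2}\). Here \(H_{\sigma}=A(A^{T}A+\sigma R^{T}R)^{-1}A^{T}\) is the influence matrix. The Python sketch below performs a simple grid search over σ. The grid, the Vandermonde test matrix, and the function name are illustrative assumptions. For large systems, a factorization-based evaluation of \(G\), as discussed in [29], would be preferable to forming \(H_{\sigma}\) explicitly.

```python
import numpy as np

def gcv_choose_sigma(A, b, R, sigmas):
    """Grid search for sigma minimising G(sigma) = ||(I - H) b||^2 / trace(I - H)^2,
    where H = A (A^T A + sigma R^T R)^{-1} A^T (a constant factor n is often included
    in G; it does not change the minimiser)."""
    n = A.shape[0]
    best_sigma, best_value = None, np.inf
    for sigma in sigmas:
        H = A @ np.linalg.solve(A.T @ A + sigma * (R.T @ R), A.T)
        resid = b - H @ b
        value = (resid @ resid) / np.trace(np.eye(n) - H) ** 2
        if value < best_value:
            best_sigma, best_value = sigma, value
    return best_sigma

rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 20)
A = np.vander(t, 8, increasing=True)            # ill-conditioned 20 x 8 test matrix
b = A @ np.ones(8) + 1e-3 * rng.standard_normal(20)
R = np.diff(np.eye(8), n=2, axis=0)             # second-order difference operator
sigmas = np.logspace(-10, 0, 21)
sigma_gcv = gcv_choose_sigma(A, b, R, sigmas)
print(f"GCV choice: sigma = {sigma_gcv:.1e}")
```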

4 Convergence analysis

In this section, we discuss the convergence of scheme (7). For this purpose, we introduce a partition Δ of the interval \([a, b]\):
\[\Delta :\ a=x_{0}<x_{1}<\cdots <x_{N}=b.\]

Let \(S_{\Delta }\) denote the cubic spline that interpolates the values of a function \(u\in C^{4} [a, b]\) at the nodes of Δ. We begin with a useful theorem proved by Stoer and Bulirsch [31].

Theorem 4.1

Suppose that \(u \in C^{4} [a, b]\) and that \(|u^{(4)} (x)| \leq L\) for \(x \in [a, b]\). Then there exist constants \(\lambda _{j} \leq 2\), which do not depend on the partition Δ, such that for \(x \in [a, b]\) it follows that
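Spline-collocation analyses commonly quote this estimate in the form below, which assumes a uniform partition with spacing h. For a non-uniform partition, h is replaced by the mesh norm \(\|\Delta\|=\max_{j}(x_{j+1}-x_{j})\), possibly together with a mesh-ratio factor. This should therefore be read as a hedged restatement rather than a quotation of [31].

```latex
\bigl\| D^{j}\bigl(u - S_{\Delta}\bigr) \bigr\|_{\infty} \le \lambda_{j}\, L\, h^{4-j},
\qquad j = 0, 1, 2, 3 .
```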

Theorem 4.2

The collocation approximations \(U_{n}(x)\) and \(V_{n}(x)\) for the solutions \(u_{n}(x)\) and \(v_{n}(x)\) of problem (7) satisfy, for any constant \(\mu >0\), the following error estimate:

Proof

Let \(\hat{U}_{n} (x,t)\) and \(\hat{V}_{n} (x,t)\) be the computed splines for \(U_{n}(x,t)\) and \(V_{n} (x,t)\) defined in (15), such that

and

Following (18), for \(\hat{U}_{n}\) and \(\hat{V}_{n}\), we have

where

and

By subtracting (18) and (25), we have

where

and for every \(0 \leq m \leq N\), we have

Therefore

By using the Cauchy–Schwarz inequality, we have

Then, after simplification and differentiation, we have

By using Theorem 4.1, we have

After simplification, we get

So we can rewrite (28) as follows:

where

Similarly, we have

If \(\mathbf{M} = \max \{M_{1}, M_{2}\}\), then

Equation (29) then follows from (27):

By applying (26) and (21), we have

Using relation (30) and taking the infinity norm, we find

where

Thus

such that

and

We can easily see that

so

Therefore, according to (23) and (32), we obtain

Setting \(\gamma = 2 \lambda _{0} L h^{2} + 20 \mathbf{M}_{1} \), we have

We now analyze the time discretization of Eq. (17). To this end, we discretize system (7) in the time variable:

Hence, according to Theorem 4.2 and Eqs. (33) and (34), we have

where τ is a constant. Hence the order of convergence is \(O(\Delta t^{2-\alpha } +h^{2})\). □
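The temporal part of this rate can be checked numerically. The Python sketch below is self-contained and uses a compact re-implementation of the L1 formula, which we assume is the scheme of [26]. It approximates \(D_{t}^{\alpha}t^{2}\) at \(t=1\) with \(\Delta t=1/200\) and \(\Delta t=1/400\). It then estimates the observed order \(\log_{2}(e_{\Delta t}/e_{\Delta t/2})\), which should be close to \(2-\alpha\). The check covers only the time discretization, not the spatial \(O(h^{2})\) term or the full coupled scheme.

```python
import math
import numpy as np

def l1_error(alpha, M):
    """Error of the L1 formula for D_t^alpha t^2 at t = 1 using M uniform steps."""
    dt = 1.0 / M
    t = np.linspace(0.0, 1.0, M + 1)
    j = np.arange(M)
    b = (j + 1) ** (1 - alpha) - j ** (1 - alpha)       # L1 weights b_j
    diffs = np.diff(t**2)                                # u^{n+1} - u^n
    approx = np.dot(b, diffs[::-1]) / (dt**alpha * math.gamma(2 - alpha))
    exact = 2.0 / math.gamma(3 - alpha)                  # D_t^alpha t^2 at t = 1
    return abs(approx - exact)

for alpha in (0.3, 0.5, 0.7):
    e1, e2 = l1_error(alpha, 200), l1_error(alpha, 400)
    order = math.log2(e1 / e2)
    print(f"alpha = {alpha}: observed order {order:.3f}, expected {2 - alpha:.1f}")
```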

5 Illustrative examples

We compare the results of the proposed method with the radial basis function method (RBFM) and report the accuracy of the method in all examples. The root mean square (RMS) error, or total error, is defined as follows:

where M is the total number of estimated values. In all examples, we take \(T=1\), \(h=\frac{1}{10}\) (i.e., \(N=10\)), and \(\Delta t=\frac{1}{1000}\).
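The RMS error is \(\sqrt{\frac{1}{M}\sum_{j=1}^{M}(\text{exact}_{j}-\text{approx}_{j})^{2}}\). The Python helper below computes it. The perturbed data in the usage lines are a hypothetical stand-in for a numerical solution, not results from the paper.

```python
import numpy as np

def rms_error(exact, approx):
    """Root-mean-square (total) error: sqrt((1/M) * sum_j (exact_j - approx_j)^2)."""
    exact = np.asarray(exact, dtype=float).ravel()
    approx = np.asarray(approx, dtype=float).ravel()
    return float(np.sqrt(np.mean((exact - approx) ** 2)))

# Illustration only: a smooth function on the grid h = 1/10 and a hypothetical
# perturbation of it standing in for a numerical solution.
x = np.linspace(0.0, 1.0, 11)
u_exact = np.sin(x)
rng = np.random.default_rng(0)
u_approx = u_exact + 1e-3 * rng.standard_normal(x.size)
print(f"RMS error: {rms_error(u_exact, u_approx):.2e}")
```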

Example 5.1

Let

with the initial and boundary conditions as follows:

and the exact solution is \(\varPsi (x,t)= t^{2} ( \sin (x) + i \cos (x))\).
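As a check on this example, take \(u=t^{2}\sin x\) and \(v=t^{2}\cos x\). Then the Caputo derivative of \(t^{2}\), the second x-derivative, and the modulus are all available in closed form. These are the quantities needed to verify a source term for this exact solution; the source terms of the example are not reproduced here.

```latex
\begin{aligned}
  D_{t}^{\alpha}\varPsi &= \frac{2\,t^{2-\alpha}}{\Gamma(3-\alpha)}\,(\sin x + i\cos x),\\
  \varPsi_{xx} &= t^{2}\,(-\sin x - i\cos x) = -\varPsi,\\
  |\varPsi|^{2} &= t^{4}\,(\sin^{2}x+\cos^{2}x) = t^{4},\qquad \varPsi(x,0)=0.
\end{aligned}
```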

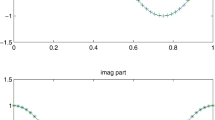

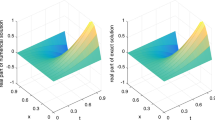

The approximate and exact solutions for \(p_{1}(t)\), \(p_{2}(t)\) and for \(u(x,t)\), \(v(x,t)\) in Example 5.1 with noisy data are plotted in Fig. 1 and Fig. 2, respectively. The errors in \(u(x,t)\) and \(v(x,t)\) for Example 5.1 with noisy data are plotted in Fig. 3. The numerical solutions of Example 5.1 with \(\alpha=0.5\) are listed in Table 1.

Fig. 1 Approximate and exact solutions of \(p_{1}(t)\) and \(p_{2}(t)\) for Example 5.1 with noisy data

Fig. 2 Approximate and exact solutions of \(u(x,t)\) and \(v(x,t)\) for Example 5.1 with noisy data

Fig. 3 Errors in \(u(x,t)\) and \(v(x,t)\) for Example 5.1 with noisy data

Example 5.2

Let

with the initial and boundary conditions as follows:

The exact solution is

Also, \(R(x)\) is determined from the conditions of the problem.

As in Example 5.1, the approximate and exact solutions for \(p_{1}(t)\), \(p_{2}(t)\) and for \(u(x,t)\), \(v(x,t)\) in Example 5.2 with noisy data are plotted in Fig. 4 and Fig. 5, respectively. The errors in \(u(x,t)\) and \(v(x,t)\) for Example 5.2 with noisy data are plotted in Fig. 6. The numerical solutions of Example 5.2 with \(\alpha=0.75\) are listed in Table 2.

Fig. 4 Approximate and exact solutions of \(p_{1}(t)\) and \(p_{2}(t)\) for Example 5.2 with noisy data

Fig. 5 Approximate and exact solutions of \(u(x,t)\) and \(v(x,t)\) for Example 5.2 with noisy data

Fig. 6 Errors in \(u(x,t)\) and \(v(x,t)\) for Example 5.2 with noisy data

6 Conclusion

In quantum mechanics, the time evolution of a system, that is, of its wave function, is described by the Schrödinger equation. We obtained a numerical method for the inhomogeneous nonlinear time-fractional Schrödinger equation by applying a finite-difference formula for the time discretization and cubic B-splines for the spatial variable. Its main advantages are simplicity of implementation and low computational cost. We proved the convergence of the method by deriving an upper bound for the error, and in the convergence analysis we showed that the order of convergence is \(O(\Delta t^{2-\alpha }+h^{2})\). Finally, in the illustrative examples section, we presented two examples solved with cubic B-splines in the spatial variable. The approximate and exact solutions with noisy data are plotted in the figures.

References

Feynman, R.P., Hibbs, A.R.: Quantum Mechanics and Path Integrals. McGraw-Hill, New York (1965)

Mandelbrot, B.B.: The Fractal Geometry of Nature. Freeman, New York (1982)

Laskin, N.: Fractional quantum mechanics. Phys. Rev. E 62, 3135–3145 (2000)

Laskin, N.: Fractional quantum mechanics and Lévy path integrals. Phys. Lett. A 268, 298 (2000). arXiv:hep-ph/9910419

Laskin, N.: Fractional Schrödinger equation. Phys. Rev. E 66, 056108 (2002). arXiv:quant-ph/020609

Laskin, N.: Fractals and quantum mechanics. Chaos 10, 780 (2000)

Laskin, N.: Lévy flights over quantum paths. e-print. arXiv:quant-ph/0504106

Hu, Y., Kallianpur, G.: Schrödinger equations with fractional Laplacians. Appl. Math. Optim. 42, 281 (2000)

Guo, X.Y., Xu, M.Y.: Some physical applications of fractional Schrödinger equation. J. Math. Phys. 47, 082104 (2006)

Naber, M.: Time fractional Schrödinger equation. J. Math. Phys. 45(8), 3339–3352 (2004)

Caputo, M.: Linear models of dissipation whose Q is almost frequency independent—II. Geophys. J. Int. 13(5), 529–539 (1967). https://doi.org/10.1111/j.1365-246X.1967.tb02303.x

Wang, S.W., Xu, M.Y.: Generalized fractional Schrödinger equation with space-time fractional derivatives. J. Math. Phys. 48, 043502 (2007)

Dong, J., Xu, M.: Some solutions to the space fractional Schrödinger equation using momentum representation method. J. Math. Phys. 48, 072105 (2007). https://doi.org/10.1063/1.2749172

Achar, B.N.N., Yale, B.T., Hanneken, J.W.: Time fractional Schrödinger equation revisited. Adv. Math. Phys. 2013, 290216 (2013). https://doi.org/10.1155/2013/290216

Bashan, A., Murat Yagmurlu, N., Yusuf Ucar, Y., Esen, A.: An effective approach to numerical soliton solutions for the Schrödinger equation via modified cubic B-spline differential quadrature method. Chaos Solitons Fractals 100, 45–56 (2017)

Bashan, A.: A mixed method approach to Schrödinger equation: finite difference method and quartic B-spline based differential quadrature method. Int. J. Optim. Control Theor. Appl. 9(2), 223–235 (2019)

Bashan, A., Murat Yagmurlu, N., Yusuf Ucar, Y., Esen, A.: A new perspective for quintic B-spline based Crank–Nicolson-differential quadrature method algorithm for numerical solutions of the nonlinear Schrödinger equation. Eur. Phys. J. Plus 133(12), 129–142 (2018)

Bashan, A.: An efficient approximation to numerical solutions for the Kawahara equation via modified cubic B-spline differential quadrature method. Mediterr. J. Math. 16, 14 (2019)

Ucar, Y., Murat Yagmurlu, N., Bashan, A.: Numerical solutions and stability analysis of modified Burgers equation via modified cubic B-spline differential quadrature methods. Sigma J. Eng. & Nat. Sci. 37(1), 129–142 (2019)

Bashan, A., Ucar, Y., Murat Yagmurlu, N., Esen, A.: Numerical solutions for the fourth order Extended Fisher–Kolmogorov equation with high accuracy by differential quadrature method. Sigma J. Eng. & Nat. Sci. 9(3), 273–284 (2018)

Bashan, A.: An effective application of differential quadrature method based on modified cubic B-splines to numerical solutions of the KdV equation. Turk. J. Math. 42, 373–394 (2018)

Erfanian, M., Zeidabadi, H.: Approximate solution of linear Volterra integro-differential equation by using cubic B-spline finite element method in the complex plane. Adv. Differ. Equ. 2019, 62 (2019)

Hatfield, B.: Quantum Field Theory of Point Particles and Strings. Addison-Wesley, Reading (1992)

Gasiorowicz, S.: Quantum Physics. Wiley, New York (1974)

Landau, L.D., Lifshitz, E.M.: Quantum Mechanics (Non-Relativistic Theory), Course of Theoretical Physics, vol. 3, 3rd edn. Butterworth, Oxford (2003)

Murio, D.A.: Implicit finite difference approximation for time fractional diffusion equations. Comput. Math. Appl. 56, 1138–1145 (2008)

Martin, L., Elliott, L., Heggs, P.J., Ingham, D.B., Lesnic, D., Wen, X.: Dual reciprocity boundary element method solution of the Cauchy problem for Helmholtz-type equations with variable coefficients. J. Sound Vib. 297, 89–105 (2006)

Aster, R.C., Borchers, B., Thurber, C.: Parameter Estimation and Inverse Problems. Textbook, New Mexico (2003)

Elden, L.: A note on the computation of the generalized cross-validation function for ill-conditioned least squares problems. BIT 24, 467–472 (1984)

Golub, G.H., Heath, M., Wahba, G.: Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics 21(2), 215–223 (1979)

Stoer, J., Bulirsch, R.: An Introduction to Numerical Analysis. Springer, Berlin (1991)

Acknowledgements

This work was funded by University of Zabol, Iran (PR-UOZ-97-18). The authors would like to express their gratitude to the Vice Chancellery for Research and Technology, University of Zabol, for funding this study.

Availability of data and materials

Please contact the author for data requests.

Funding

We have no funding.

Author information


Contributions

All authors read and approved the final version of the manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Erfanian, M., Zeidabadi, H., Rashki, M. et al. Solving a nonlinear fractional Schrödinger equation using cubic B-splines. Adv Differ Equ 2020, 344 (2020). https://doi.org/10.1186/s13662-020-02776-w


DOI: https://doi.org/10.1186/s13662-020-02776-w