Abstract

In this paper, we investigate the Sumudu transforms and homotopy analysis method (S-HAM) for solving a system of fractional partial differential equations. A general framework for solving such a kind of problems is presented. The method can also be utilized to solve systems of fractional equations of unequal orders. The algorithm is reliable and robust. Existence and convergence results concerning the proposed solution are given. Numerical examples are introduced to demonstrate the efficiency and accuracy of the algorithm.

1 Introduction

Fractional differential equations (FDEs) have become one of the most important research topics in recent years, not only because they can be used to model real-life phenomena, but also because they give researchers wide flexibility in describing material properties. Fractional-order models are often more adequate than the previously used integer-order models [1, 2], because fractional-order derivatives and integrals enable the description of the memory and hereditary properties of different substances. Systems of fractional partial differential equations are used to model phenomena in several fields, such as fluid mechanics, biology, finance, and materials science.

Finding the exact solution of an FDE is very difficult, even in the linear case, so approximate solutions are needed. Systems of fractional partial differential equations have been treated by several researchers: Ertürk and Momani applied the differential transform method [3], Ghazanfari investigated the fractional complex transform method [4], Jafari et al. presented a Laplace transform combined with an iterative method [5], and Ahmed et al. used the Laplace Adomian decomposition method and the Laplace variational iteration method [6].

The homotopy analysis method (HAM) is one of the most effective methods for solving FDEs [7, 8]. It yields a series solution whose convergence is governed by a convergence-control parameter, and the series can be expressed in terms of various basis functions. The major drawback of the method is that each term requires solving a sub-differential equation or evaluating a sub-integral, which affects speed and memory usage. These limitations call for more efficient and practical algorithms. In this article, we use the Sumudu transform to overcome them while retaining all of the HAM features, such as the freedom to choose the initial guess, the control parameter, and the basis functions. The Sumudu transform has been combined with several other methods, such as the homotopy perturbation method [9], the Adomian decomposition method [10], and the homotopy analysis method [11, 12]. Compared with the standard HAM, the proposed method is capable of reducing the volume of computational work while maintaining the high accuracy of the numerical results, and therefore amounts to an improvement in the performance of the approach [13].

The rest of the paper is organized as follows. In Sect. 2, we review some facts about fractional derivatives and the Sumudu transform, and in Sect. 3 we introduce the solution procedure. Existence and convergence results are given in Sect. 4. Numerical examples illustrating the theoretical results are provided in Sect. 5, and Sect. 6 concludes the paper.

2 Preliminaries and notations

In this section, some definitions and properties of the fractional calculus and Sumudu transform are briefly mentioned. For more details see [14–20].

2.1 Fractional calculus

We start with the following definition.

Definition 2.1

A real function \(f(t)\); \(t > 0\), is said to be in the space \(C_{\mu }\); \(\mu \in \Re \), if there exists a real number \(p > \mu \), such that \(f(t)=t^{p}f_{1}(t)\), where \(f_{1}(t)\in C(0,\infty )\), and it is said to be in the space \(C_{\mu }^{n}\) if and only if \(f^{(n)}\in C_{\mu }\); \(n \in \mathbb{N}\).

Now we can give the main definitions of fractional integrals and derivatives.

Definition 2.2

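The Riemann–Liouville fractional integral operator \((J^{\alpha })\) of order \(\alpha \geq 0\), of a function \(f\in C_{\mu }\), \(\mu \geq -1\), is defined as

$$ J^{\alpha }f(t)=\frac{1}{\varGamma (\alpha )} \int _{0}^{t}(t-\tau )^{\alpha -1}f(\tau )\,d\tau ,\quad \alpha >0, \qquad J^{0}f(t)=f(t), $$

where \(\varGamma (\alpha )\) is the well-known gamma function.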

Definition 2.3

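The fractional derivative of a function \(f\in C_{-1}^{n}\) in the Caputo sense is defined as

$$ D^{\alpha }f(t)=J^{n-\alpha }D^{n}f(t)=\frac{1}{\varGamma (n-\alpha )} \int _{0}^{t}(t-\tau )^{n-\alpha -1}f^{(n)}(\tau )\,d\tau , $$

where \(n-1< \alpha < n\) and \(n \in \mathbb{N}\).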

We mention the following basic properties of fractional derivatives and integrals:

1. If \(f\in C_{-1}^{n}\) for some \(n \in \mathbb{N}\), then \(D^{\alpha }f\) is well defined for all \(0\leq \alpha \leq n\) with \(D^{\alpha }f \in C_{-1}\).

2. If \(f\in C_{\mu }^{n}\) for some \(\mu \geq -1\), then

$$ \bigl(J^{\alpha }D^{\alpha }\bigr)f(t) = f(t) - \sum _{k = 0}^{n - 1} {f^{(k)} \bigl(0^{+} \bigr)} \frac{{t^{k} }}{{k!}}, \tag{4} $$

provided \(n-1< \alpha \leq n\).

3. For all \(\gamma >\alpha \) one has

$$ D^{\alpha }t^{\gamma }= \frac{\varGamma (\gamma +1)}{\varGamma (\gamma -\alpha +1)}t^{\gamma - \alpha }. $$
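The power rule for \(D^{\alpha }t^{\gamma }\) is easy to evaluate numerically. The following is a minimal sketch using only the Python standard library; the function name `caputo_power` is hypothetical and chosen for illustration, and the sketch assumes \(\gamma >\alpha >0\) as in the property above.

```python
from math import gamma

def caputo_power(gamma_exp: float, alpha: float):
    """Return (coefficient, exponent) of D^alpha t**gamma_exp via the power rule.

    Assumes gamma_exp > alpha > 0, matching the stated property.
    """
    if not gamma_exp > alpha > 0:
        raise ValueError("this sketch assumes gamma_exp > alpha > 0")
    coeff = gamma(gamma_exp + 1) / gamma(gamma_exp - alpha + 1)
    return coeff, gamma_exp - alpha

# Half-order derivative of t^2: coefficient Gamma(3)/Gamma(2.5), exponent 1.5
coeff, power = caputo_power(2.0, 0.5)
```

For \(\alpha =1\) the rule reduces to the ordinary derivative, for instance `caputo_power(3.0, 1.0)` gives the coefficient 3 and the exponent 2.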

2.2 Sumudu transform

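The Sumudu transform is given by [21]

$$ S\bigl[f(t)\bigr]=F(\eta )= \int _{0}^{\infty }f(\eta t)e^{-t}\,dt,\quad \eta \in (-\tau _{1},\tau _{2}), $$

where \(f\in A\) with

$$ A= \bigl\{ f(t) \mid \exists M,\tau _{1},\tau _{2}>0, \bigl\vert f(t) \bigr\vert < Me^{ \vert t \vert /\tau _{j}} \text{ if } t\in (-1)^{j}\times [0,\infty ) \bigr\} . $$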

The Sumudu transform possesses the following main properties:

1. \(S[c]=c\) for any constant c;

2. \(S[t^{m}]=\varGamma (m+1)\eta ^{m}\) for any \(m>0\);

3. \(S[\alpha f(t)\pm \beta g(t)]=\alpha S[f(t)]\pm \beta S[g(t)]\);

4. For \(n-1<\alpha \leq n\), we have

$$ S\bigl[D^{\alpha }_{t} f(t)\bigr]=\eta ^{-\alpha }S\bigl[f(t) \bigr]-\sum_{i=0}^{n-1}\eta ^{- \alpha +i}f^{(i)}\bigl(0^{+}\bigr). $$
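The first two properties can be checked numerically from the standard integral form of the Sumudu transform, \(S[f](\eta )=\int _{0}^{\infty }f(\eta t)e^{-t}\,dt\), for which the monomial \(t^{m}\) gives \(\varGamma (m+1)\eta ^{m}\). The sketch below is only an illustration: the function name `sumudu`, the truncation point `upper`, and the Simpson step count are assumptions of this example, not part of the method.

```python
from math import exp, gamma

def sumudu(f, eta, upper=60.0, steps=6000):
    """Approximate S[f](eta) = integral_0^inf f(eta*t) * exp(-t) dt.

    Composite Simpson rule on [0, upper]; the tail beyond `upper` is
    neglected, which is reasonable only for f of modest growth.
    """
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of subintervals
    h = upper / steps
    total = f(0.0) + f(eta * upper) * exp(-upper)
    for k in range(1, steps):
        t = k * h
        weight = 4 if k % 2 else 2
        total += weight * f(eta * t) * exp(-t)
    return total * h / 3

# Compare the quadrature with the closed form Gamma(m+1) * eta**m for m = 2
numeric = sumudu(lambda t: t ** 2, 0.5)
closed_form = gamma(3) * 0.5 ** 2
```

The quadrature is accurate for smooth integrands; for non-integer powers the integrand is not smooth at the origin, so a finer grid or a different rule would be needed.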

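The inverse Sumudu transform of a function \(F(\eta )\) is given by [21]

$$ S^{-1}\bigl[F(\eta )\bigr]=f(t)=\frac{1}{2\pi i} \int _{c-i\infty }^{c+i\infty }e^{st}F \biggl(\frac{1}{s} \biggr)\frac{ds}{s}=\sum \operatorname{residues} \biggl[e^{st}\frac{F(1/s)}{s} \biggr], $$

which exists provided \(F(1/s)/s\) is a meromorphic function, with singularities s satisfying \(\mathit{Re}(s) < c\) for some constant c, and

$$ \biggl\vert \frac{F(1/s)}{s} \biggr\vert < MR^{-K} $$

for some positive constants R, M, and K.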

3 Solution procedure

To express the solution by the proposed method, let us consider the fractional partial differential equation

where \(n-1<\alpha <n\) for positive integer n, subject to the initial conditions

By taking the Sumudu transform for both sides of Eq. (6), we have

where

Now the main difficulty here is to find the solution \(u(x,t)\) by invoking the inverse Sumudu transform for Eq. (10), in particular for the nonlinear term \(\eta ^{\alpha }S[N[u(x,t)]]\). To tackle this, we can utilize the HAM by defining the homotopy map

where \(q\in [0,1]\) is an embedding parameter, ħ is the convergence control parameter, \(N_{1}[\phi (x,t;q)]\) the nonlinear operator given by

and \(\phi (x,t;q)\) is a Taylor series with respect to q defined by

We note that, as q increases from 0 to 1, the solution \(\phi (x,t;q)\) of the zeroth-order deformation equation (11) varies from the initial guess \(\phi (x,t;0)=u_{0}(x,t)\) to the exact solution \(\phi (x,t;1)=u(x,t)\).

We have the following auxiliary result.

Theorem 3.1

The nonlinear term \(N[\phi (x,t;q)]\) satisfies the property

Proof

The Maclaurin series of \(N[\phi (x,t;q)]\) with respect to q is given by

which completes the proof. □
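The Maclaurin expansion in q can be made concrete for a specific nonlinearity. As a hypothetical illustration (the quadratic operator is an assumption of this example, not taken from the problems above), take \(N[\phi ]=\phi ^{2}\) with \(\phi =\sum_{m}u_{m}q^{m}\); the coefficient of \(q^{m}\) is then the Cauchy product \(\sum_{i=0}^{m}u_{i}u_{m-i}\). The sketch below computes these coefficients for scalar values \(u_{m}\), for example the series terms evaluated at a fixed point \((x,t)\).

```python
def homotopy_coeffs_square(u):
    """Coefficients of q**m in (sum_m u[m] * q**m)**2 for m = 0..len(u)-1.

    Each coefficient is the Cauchy product sum_{i=0}^{m} u[i] * u[m-i].
    """
    return [sum(u[i] * u[m - i] for i in range(m + 1)) for m in range(len(u))]

# Example: u_0 = 1, u_1 = 2, u_2 = 3
coeffs = homotopy_coeffs_square([1, 2, 3])
```

Only the terms \(u_{0},\ldots ,u_{m}\) enter the coefficient of \(q^{m}\), which is what allows the series terms to be computed one after another.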

The next theorem presents the recursive formula of the unknown coefficients \(u_{m}(x,t)\).

Theorem 3.2

If we substitute Eq. (13) into the zeroth-order deformation equation (11), then the unknown functions \(u_{m}(x,t)\) are given by

where

and

for all \(m=1,2,3,\ldots \).

Proof

By substituting the series in Eq. (13) in the left-hand side of Eq. (11) and equating the coefficients of the powers \(q^{i}\), \(i=1,2,\ldots, m\), we have

With the aid of Theorem 3.1, the right-hand side can be written as

from which follows that

Applying the inverse Sumudu transform for Eq. (17) yields

and this ends the proof. □
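To see the recursion at work, consider a toy problem that is not one of the examples of this paper: the scalar fractional relaxation equation \(D^{\alpha }u=-u\), \(u(0)=1\), \(0<\alpha \leq 1\). The sketch below assumes the deformation \((1-q)S[\phi -u_{0}]=q\hbar N[\phi ]\) with \(N[\phi ]=S[\phi ]-u(0)+\eta ^{\alpha }S[\phi ]\), which gives \(u_{m}=(\chi _{m}+\hbar )u_{m-1}-\hbar (1-\chi _{m})u(0)+\hbar J^{\alpha }u_{m-1}\) with \(\chi _{1}=0\) and \(\chi _{m}=1\) for \(m>1\). This particular formulation and all function names are assumptions made for illustration. Each term is stored as the list of coefficients of \(t^{k\alpha }\).

```python
from math import exp, gamma

def frac_integral(coeffs, alpha):
    """J^alpha of sum_k coeffs[k] * t**(k*alpha), as a new coefficient list."""
    out = [0.0]
    for k, c in enumerate(coeffs):
        out.append(c * gamma(k * alpha + 1) / gamma((k + 1) * alpha + 1))
    return out

def sham_relaxation(alpha, hbar, order, u_init=1.0):
    """Coefficients of the S-HAM partial sum u_0 + ... + u_order for D^alpha u = -u."""
    u_prev = [u_init]            # initial guess u_0(t) = u(0)
    total = [u_init]
    for m in range(1, order + 1):
        chi = 0.0 if m == 1 else 1.0
        ju = frac_integral(u_prev, alpha)
        u_m = [0.0] * len(ju)
        for k, c in enumerate(u_prev):
            u_m[k] += (chi + hbar) * c
        u_m[0] -= hbar * (1.0 - chi) * u_init
        for k, c in enumerate(ju):
            u_m[k] += hbar * c
        total = total + [0.0] * (len(u_m) - len(total))
        for k, c in enumerate(u_m):
            total[k] += c
        u_prev = u_m
    return total

def evaluate(coeffs, alpha, t):
    """Evaluate sum_k coeffs[k] * t**(k*alpha)."""
    return sum(c * t ** (k * alpha) for k, c in enumerate(coeffs))

# With alpha = 1 the exact solution is exp(-t)
approx = evaluate(sham_relaxation(1.0, -1.0, 20), 1.0, 0.5)
```

With \(\hbar =-1\) the terms reduce to \(u_{m}=(-1)^{m}t^{m\alpha }/\varGamma (m\alpha +1)\), the Mittag-Leffler series of the exact solution; other values of ħ in the convergence region give the same limit with a different rate.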

In practice, we define the mth approximate solution of the given problem as

while the residual error for the given solution is defined as

4 Existence and convergence results

In this section, we introduce the main results regarding the existence and convergence of the proposed algorithm.

Theorem 4.1

If an optimal \(\hbar \neq 0\) exists, and \(u_{0}(x,t)\) is properly chosen in Eq. (15) in such a way that \(\Vert u_{n+1}(x,t) \Vert \leq \lambda \Vert u_{n}(x,t) \Vert \), where \(0\leq \lambda < 1\), then the series \(\sum_{n=0}^{\infty }u_{n}(x,t)\) converges uniformly, where \(\Vert \cdot \Vert \) denotes the usual infinity norm.

Proof

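Let \(S_{n}\) be the sequence of partial sums \(S_{n}=\sum_{i=0}^{n} u_{i}(x,t)\). We show that the sequence \(\{S_{n}\}_{n=0}^{\infty }\) is Cauchy. First we observe that

$$ \Vert S_{n+1}-S_{n} \Vert = \Vert u_{n+1} \Vert \leq \lambda \Vert u_{n} \Vert \leq \lambda ^{2} \Vert u_{n-1} \Vert \leq \cdots \leq \lambda ^{n+1} \Vert u_{0} \Vert . $$

With the help of the above equation, for all \(n,m\in \mathbb{N} \) with \(n\geq m\), we have

$$ \Vert S_{n}-S_{m} \Vert = \Biggl\Vert \sum_{i=m+1}^{n}(S_{i}-S_{i-1}) \Biggr\Vert \leq \sum_{i=m+1}^{n} \Vert S_{i}-S_{i-1} \Vert \leq \sum_{i=m+1}^{n}\lambda ^{i} \Vert u_{0} \Vert , $$

which leads to

$$ \Vert S_{n}-S_{m} \Vert \leq \frac{1-\lambda ^{n-m}}{1-\lambda }\lambda ^{m+1} \Vert u_{0} \Vert , $$

and consequently \(\Vert S_{n}-S_{m} \Vert \rightarrow 0\) as \(n,m\rightarrow \infty \). Thus, the sequence \(\{S_{n}\}\) is a Cauchy sequence, and hence it converges. □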

Corollary 4.2

Suppose \(\sum_{i=0}^{\infty }u_{i}(x,t)\) converges to the solution \(u(x,t)\) of Eq. (6) and satisfies the hypotheses of Theorem 4.1. Then the maximal absolute truncation error using the terms up to \(u_{m}\) in the domain \((x,t)\in \varOmega \) can be estimated as

$$ \sup_{(x,t)\in \varOmega } \Biggl\vert u(x,t)-\sum_{i=0}^{m}u_{i}(x,t) \Biggr\vert \leq \frac{\lambda ^{m+1}}{1-\lambda }\varXi , $$

where \({\varXi =\sup_{\substack{(x,t)\in \varOmega }} \vert u_{0}(x,t) \vert }\).

Proof

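Since \(S_{n}=\sum_{i=0}^{n} u_{i}(x,t)\), as \(n\rightarrow \infty \) the partial sum \(S_{n}\rightarrow u(x,t)\). Therefore, Eq. (19) can be written as

$$ \bigl\Vert u(x,t)-S_{m} \bigr\Vert \leq \frac{\lambda ^{m+1}}{1-\lambda } \bigl\Vert u_{0}(x,t) \bigr\Vert . $$

Thus, the maximum absolute truncation error on Ω satisfies

$$ \sup_{(x,t)\in \varOmega } \Biggl\vert u(x,t)-\sum_{i=0}^{m}u_{i}(x,t) \Biggr\vert \leq \frac{\lambda ^{m+1}}{1-\lambda }\sup_{(x,t)\in \varOmega } \bigl\vert u_{0}(x,t) \bigr\vert , $$

which ends the proof. □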
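When the contraction condition \(\Vert u_{n+1} \Vert \leq \lambda \Vert u_{n} \Vert \) of Theorem 4.1 holds, the tail of the series is dominated by a geometric series, which gives the bound \(\lambda ^{m+1}\varXi /(1-\lambda )\) after the term \(u_{m}\). The sketch below evaluates this bound and finds how many terms are needed for a given tolerance. It assumes the bound has exactly this geometric form, and the function names and the sample values of λ and Ξ are hypothetical.

```python
def truncation_bound(lam, xi, m):
    """Geometric-series bound lam**(m+1) / (1 - lam) * xi on the tail after u_m."""
    if not 0 <= lam < 1:
        raise ValueError("requires 0 <= lam < 1")
    return lam ** (m + 1) / (1 - lam) * xi

def terms_needed(lam, xi, tol):
    """Smallest m for which truncation_bound(lam, xi, m) <= tol."""
    if tol <= 0:
        raise ValueError("tol must be positive")
    m = 0
    while truncation_bound(lam, xi, m) > tol:
        m += 1
    return m

# Sample values: lam = 0.5 and sup|u_0| = 2
bound_after_u3 = truncation_bound(0.5, 2.0, 3)
m_required = terms_needed(0.5, 2.0, 1e-3)
```

In practice λ is not known in advance; one rough way to use the bound is to estimate λ from the ratios of successive computed terms, which is a heuristic rather than a guarantee.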

It is worth mentioning that, for the initial value problem, we can choose the initial guess as \(u_{0}(x,t)=\sum_{i=0}^{M-1}f_{i}(x)\frac{t^{i}}{i!}\). Moreover, when \(N[\phi (x,t;q)]\) is a polynomial in \(\phi (x,t;q)\) and its derivatives, and the nonhomogeneous term is analytic at the initial point, then \(R_{m}\) can be written as \(\sum_{i=0}^{\infty }c_{i}(x)t^{ri}\) for \(r\in \Re \) and \(0<\alpha \leq 1\).

Theorem 4.3

If \(R_{m-1}\) in Eq. (15) is of the form \(R_{m-1}=c_{0}(x)+\sum_{n=1}^{M}c_{n}(x)t^{rn}\) for a positive real number r, then Eq. (6) subject to the initial conditions Eq. (7) admits at least one solution.

Proof

Using the properties of the Sumudu transform, we have

Since \(\alpha >0\), the Sumudu inverse for \(\eta ^{\alpha }S[R_{m-1}]\) exists and is given by

Hence, as \(M\rightarrow \infty \) the series \(u(x,t)=\lim_{M\rightarrow \infty }\sum_{n=0}^{M}u_{n}(x,t)\) becomes a solution of Eq. (6) and it satisfies the initial conditions by choosing \(u_{0}(x,t)=\sum_{i=0}^{M-1}f_{i}(x)\frac{t^{i}}{i!}\). This completes the proof. □
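The key step of the argument is that \(S^{-1}[\eta ^{\alpha }S[\cdot ]]\) can be applied term by term to a sum of powers of t. Since \(S[t^{p}]=\varGamma (p+1)\eta ^{p}\) for \(p>-1\), each term \(c\,t^{p}\) is mapped to \(c\,\varGamma (p+1)t^{p+\alpha }/\varGamma (p+\alpha +1)\). A minimal sketch follows, with a hypothetical function name and with the coefficients \(c_{n}(x)\) frozen at a fixed x so that they are plain numbers.

```python
from math import gamma

def inverse_sumudu_shift(terms, alpha):
    """Apply S^{-1}[eta**alpha * S[.]] to a sum of c * t**p given as (c, p) pairs.

    Uses S[t**p] = Gamma(p+1) * eta**p term by term, valid for p > -1.
    """
    return [(c * gamma(p + 1) / gamma(p + alpha + 1), p + alpha) for c, p in terms]

# R = 1 + 2 t with alpha = 1 gives t + t^2, the ordinary integral of R
shifted = inverse_sumudu_shift([(1.0, 0.0), (2.0, 1.0)], 1.0)
```

Every exponent increases by α and every coefficient stays finite, which is why the inverse transform exists for each term of the series.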

5 Numerical examples

In this section we present several examples to show the feasibility and robustness of the proposed technique.

5.1 Example 1

Consider the linear system of fractional PDEs [6]

where \(0<\alpha ,\beta \leq 1\), subject to the initial conditions

According to the solution procedure, we can choose \(u_{0}(x,t)=1+e^{x}\) and \(v_{0}(x,t)=e^{x}-1\). To determine \(R_{m-1}\), we substitute

in Eq. (16) to give

and

Then the mth-order approximations are given by

Then the first few terms of the series are

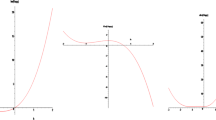

To determine the region for which the solution is convergent, we plot the ħ–curve in Fig. 1. Clearly, the values of \(D_{t}^{0.99}u(0.9,0)\) and \(D_{t}^{0.99}v(0.9,0)\) do not change in the region \(-1.5\leq \hbar \leq -0.5\). For simplicity, we fix \(\hbar =-1\). Then the solution for Example 1 becomes

where \(E_{\gamma ,1}(z)=\sum_{k=0}^{\infty }\frac{z^{k}}{\varGamma (\gamma k+1)}\) is the Mittag-Leffler function; this is the exact solution. We note that, for \(\hbar =-1\), the S-HAM solution reproduces the Laplace Adomian decomposition solution given in [6].
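The Mittag-Leffler function \(E_{\gamma ,1}(z)\) can be evaluated directly from its series for moderate \(\vert z \vert \). The sketch below is a plain truncated sum using the Python standard library; the function name, the default number of terms, and the cutoff that avoids overflow in `math.gamma` are assumptions of this example, and the sketch is not intended for large arguments.

```python
from math import cosh, exp, gamma

def mittag_leffler(z, g, terms=100):
    """Truncated series E_{g,1}(z) = sum_{k>=0} z**k / Gamma(g*k + 1), g > 0."""
    total = 0.0
    for k in range(terms):
        arg = g * k + 1
        if arg > 170:  # math.gamma overflows a float just above 171
            break
        total += z ** k / gamma(arg)
    return total

# Known special cases: E_{1,1}(z) = exp(z) and E_{2,1}(z) = cosh(sqrt(z))
e_one = mittag_leffler(1.0, 1.0)
e_two = mittag_leffler(1.0, 2.0)
```

The two special cases give a quick sanity check before the function is used with fractional values of γ.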

5.2 Example 2

Consider the fractional coupled Burgers equations [22]

subject to the initial conditions \(u(x,0)=v(x,0)=\cos x\). According to the S-HAM algorithm, we can choose \(u_{0}=v_{0}=\cos x\). The mth orders are given by

The first few terms of the series solutions are

With \(\alpha =\beta \) and \(\hbar =-1\), the solutions become

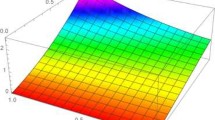

For \(\alpha \neq \beta \), we present the solution for \(\alpha =0.9\), \(\beta =0.8\), and \(\hbar =-0.2\) in Fig. 2 and its residual error in Fig. 3. We note that S-HAM yields the exact solution of the fractional coupled Burgers equations when \(\alpha =\beta \), whereas the fractional variational iteration method (FVIM) yields only an approximate one; see [22]. Moreover, the S-HAM solution is discussed for \(t\in [0,1]\), whereas the FVIM solution is addressed only for the short time interval \(t\in [0,0.005]\). Figure 4 represents the S-HAM solution when \(\alpha =0.5\) and \(\beta =0.25\) for \(t\in [0,1]\) with \(\hbar =-0.324\).

5.3 Example 3

Consider the following nonlinear FPDE:

where \(0<\alpha \leq 1\) and \(0<\beta \leq 1\), subject to the initial conditions \(u(x,0)=e^{x}\), \(v(x,0)=e^{-x}\). According to the solution procedure, we can choose \(u_{0}(x,t)=e^{x}\) and \(v_{0}(x,t)=e^{-x}\). The mth-order terms are given by

Thus, the solution becomes

To determine the region for which the solution converges, we plot the ħ-curve in Fig. 5. It is clear that the values of \(D_{t}^{0.99}u(0.9,0)\) and \(D_{t}^{0.98}v(0.9,0)\) do not change in the region \(-1.5\leq \hbar \leq -0.5\). For simplicity, we fix \(\hbar =-1\). When \(\alpha =\beta =1\) the solution becomes

The solution for Example 3 is presented in Fig. 6 and the residual error is given in Fig. 7. Clearly, the present method can solve this kind of system of fractional partial differential equations with accuracy within \(10^{-7}\). Finally, the solution when \(\alpha =0.7\) and \(\beta =0.5\) is plotted in Fig. 8. Tables 1–3 present the solutions and their residual errors for several values of α and β along x and \(t \in [0,1]\) with a proper selection of ħ. From those tables, we can observe that the method is effective for these kinds of problems. Unlike the published research [23], the present work considers this problem both when \(\alpha =\beta \) and when \(\alpha \neq \beta \).

6 Conclusion

Our concern was to provide asymptotic solutions to the system of fractional partial differential equations, using a relatively new analytical technique, the homotopy-Sumudu transformation method. A sufficient condition for convergence is presented. Moreover, based on sufficient conditions for convergence, an estimation of the maximum absolute error is obtained. Several examples are presented to demonstrate the efficiency of the method. Besides, the calculations involved in the method are very simple and straightforward.

References

1. Vyawahare, V., Nataraj, P.S.: Fractional-Order Modeling of Nuclear Reactor: From Subdiffusive Neutron Transport to Control-Oriented Models: A Systematic Approach. Springer, Berlin (2018)

2. Yu, Q., Vegh, V., Liu, F., Turner, I.: A variable order fractional differential-based texture enhancement algorithm with application in medical imaging. PLoS ONE 10(7), e0132952 (2015)

3. Ertürk, V.S., Momani, S.: Solving systems of fractional differential equations using differential transform method. J. Comput. Appl. Math. 215(1), 142–151 (2008)

4. Ghazanfari, B., Ghazanfari, A.G.: Solving system of fractional differential equations by fractional complex transform method. Asian J. Appl. Sci. 5(6), 438–444 (2012)

5. Jafari, H., Nazari, M., Baleanu, D., Khalique, C.M.: A new approach for solving a system of fractional partial differential equations. Comput. Math. Appl. 66(5), 838–843 (2013)

6. Ahmed, H.F., Bahgat, M.S., Zaki, M.: Numerical approaches to system of fractional partial differential equations. J. Egypt. Math. Soc. 25(2), 141–150 (2017)

7. Alomari, A.K., Awawdeh, F., Tahat, N., Ahmad, F.B., Shatanawi, W.: Multiple solutions for fractional differential equations: analytic approach. Appl. Math. Comput. 219(17), 8893–8903 (2013)

8. Alomari, A.K.: A novel solution for fractional chaotic Chen system. J. Nonlinear Sci. Appl. 8, 478–488 (2015)

9. Khader, M.M.: Application of homotopy perturbation method for solving nonlinear fractional heat-like equations using Sumudu transform. Sci. Iran. 24(2), 648 (2017)

10. Patel, T., Meher, R.: Adomian decomposition Sumudu transform method for convective fin with temperature-dependent internal heat generation and thermal conductivity of fractional order energy balance equation. Int. J. Appl. Comput. Math. 3(3), 1879–1895 (2017)

11. Rathore, S., Kumar, D., Singh, J., Gupta, S.: Homotopy analysis Sumudu transform method for nonlinear equations. Int. J. Ind. Math. 4(4), 301–314 (2012)

12. Pandey, R.K., Mishra, H.K.: Homotopy analysis Sumudu transform method for time-fractional third order dispersive partial differential equation. Adv. Comput. Math. 43(2), 365–383 (2017)

13. Singh, J., Shishodia, Y.S., et al.: An efficient analytical approach for MHD viscous flow over a stretching sheet via homotopy perturbation Sumudu transform method. Ain Shams Eng. J. 4(3), 549–555 (2013)

14. Alomari, A.K., Noorani, M.S.M., Nazar, R., Li, C.P.: Homotopy analysis method for solving fractional Lorenz system. Commun. Nonlinear Sci. Numer. Simul. 15(7), 1864–1872 (2010)

15. Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications, vol. 198. Elsevier, San Diego (1998)

16. Eltayeb, H., Kilicman, A., Mesloub, S.: Application of Sumudu decomposition method to solve nonlinear system Volterra integrodifferential equations. Abstr. Appl. Anal. 2014, 503141 (2014)

17. Eltayeb, H., Kilicman, A.: Application of Sumudu decomposition method to solve nonlinear system of partial differential equations. Abstr. Appl. Anal. 2012, 412948 (2012)

18. Kilicman, A., Silambarasan, R.: Computing new solutions of algebro-geometric equation using the discrete inverse Sumudu transform. Adv. Differ. Equ. 2018(1), 323 (2018)

19. Geethamalini, S., Balamuralitharan, S.: Semianalytical solutions by homotopy analysis method for EIAV infection with stability analysis. Adv. Differ. Equ. 2018(1), 356 (2018)

20. Saad, K.M., Al-Sharif, E.H.: Analytical study for time and time–space fractional Burgers equation. Adv. Differ. Equ. 2017(1), 300 (2017)

21. Belgacem, F.B.M., Karaballi, A.A., Kalla, S.L.: Analytical investigations of the Sumudu transform and applications to integral production equations. Math. Probl. Eng. 2003(3), 103–118 (2003)

22. Prakash, A., Kumar, M., Sharma, K.K.: Numerical method for solving fractional coupled Burgers equations. Appl. Math. Comput. 260, 314–320 (2015)

23. Ahmed, H.F., Bahgat, M.S., Zaki, M.: Numerical approaches to system of fractional partial differential equations. J. Egypt. Math. Soc. 25(2), 141–150 (2017)

Acknowledgements

The author would like to thank the editor and the reviewers for their valuable comments, which improved the paper.

Availability of data and materials

Not applicable.

Funding

No funding was received for this paper.

Author information

Contributions

All of this work was done by A.K. Alomari. The author read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Competing interests

The author declares no competing interests.

Consent for publication

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alomari, A.K. Homotopy-Sumudu transforms for solving system of fractional partial differential equations. Adv Differ Equ 2020, 222 (2020). https://doi.org/10.1186/s13662-020-02676-z

DOI: https://doi.org/10.1186/s13662-020-02676-z