Abstract

In this paper, the iterative learning control (ILC) technique is extended to multi-input multi-output (MIMO) systems governed by parabolic partial difference equations with time delay. Two types of ILC algorithms are presented, for systems with state delay and with input delay, respectively. Sufficient conditions for convergence of the tracking error are established under suitable assumptions. A detailed convergence analysis, based on the discrete Gronwall’s inequality and the discrete Green’s formula, is given for systems with time-varying uncertain coefficients. Numerical results show the effectiveness of the proposed ILC algorithms.


1 Introduction

Systems governed by partial difference equations form an important class of dynamic systems, which first arose as numerical solutions of partial differential equations. In fact, the state space model of a partial difference system can describe many natural laws, such as the logistic model with spatial migration, processes in mathematical physics (the discrete heat equation), and engineering technology (image processing, digital signal processing, circuit systems) (see [1, 2] and the references therein). There are many excellent results on partial difference equations/systems, including existence and nonexistence of solutions [3, 4], stability [5], oscillation [6], and positivity of solutions [7]. From a practical point of view, implementing control for distributed parameter systems modeled by partial differential equations first requires discretizing the system variables, so the control of partial difference systems is of great value.

Iterative learning control (ILC) is an intelligent control method that imitates human learning behavior [8]. For a system that repeats a task over a given finite time interval, ILC uses the tracking objective together with the input and output information from previous iterations to improve system performance as the iteration number increases. ILC algorithms are simple, yet they can deal with many complex systems with nonlinear and uncertain characteristics [9, 10]. As an effective control algorithm, ILC has been used to track given targets in many types of systems, including ordinary difference/differential systems [11, 12], partial differential systems or distributed parameter systems [13, 14], impulsive systems [15], stochastic systems [16], and fractional systems [17]. However, there are few results on ILC for partial difference systems.

Motivated by the above, in this paper we investigate the ILC problem for MIMO parabolic partial difference equations with delay. A P-type ILC scheme and an ILC scheme with a time delay parameter are proposed for systems with state delay and with input delay, respectively. Convergence conditions are derived using the discrete Gronwall’s inequality and the discrete Green’s formula. It is shown that a suitable choice of the learning gain guarantees convergence of the output tracking error between the desired output and the actual output as the iteration number increases.

Compared with the existing literature, the main features of this work are as follows. (1) It handles MIMO parabolic partial difference systems. Although Refs. [18, 19] studied ILC for partial difference systems, those systems are only single-input single-output (SISO). A MIMO system involves several input and output variables, whereas a SISO system has a single input and a single output, so the mathematical analysis of a MIMO system is more complex than that of a SISO system. (2) The systems include time delay in the state and in the input. ILC for systems with time delay is studied in [12, 20], but those systems are described by ordinary differential or difference equations, unlike the systems in this paper. The systems here are governed by partial difference equations and simultaneously involve three indices, namely time, space, and iteration, so the convergence analysis is more complex. (3) We use techniques from the theory of partial difference equations that have been applied to the stability analysis of partial difference systems [2, 4–6], instead of the Lyapunov method or linear matrix inequalities (used for multidimensional dynamic systems) [21, 22].

The rest of the paper is organized as follows. Section 2 presents the problem formulation and some preliminaries. Section 3 provides the ILC design and a rigorous convergence analysis. Section 4 presents simulation results, and Sect. 5 concludes the paper.

2 ILC system description

In this paper, we consider the following two classes of parabolic type partial difference systems, which run a given task repeatedly on a finite time interval \([0,J]\). The first class is the system with time delay in state as follows:

The second class is the system with time delay in input, that is,

In systems (1a)–(1b) and (2a)–(2b), k is the iteration index. \(\mathbf {Z}_{k}\in \mathbb{R}^{n}\), \(\mathbf {U}_{k}\in\mathbb{R}^{m}\), and \(\mathbf {Y}_{k}\in\mathbb {R}^{l}\) denote the system state vector, input vector, and output vector, respectively. \(x\) and \(s\) are the discrete spatial and time variables, respectively, with \(1\leq x\leq I\), \(0\leq s\leq J\), where \(I,J\) are given integers. \(\mathbf {A}(s),{\mathbf {A}}_{\tau}(s)\in\mathbb {R}^{n\times n}\), \(\mathbf {B}(s),{\mathbf {B}}_{\tau}(s)\in\mathbb{R}^{n\times m}\), \(\mathbf {C}(s)\in\mathbb{R}^{l\times n}\), \(\mathbf {G}(s),{\mathbf {G}}_{\tau}(s)\in\mathbb{R}^{l\times m}\) are uncertain bounded real matrices for all \(0\leq s\leq J\), and \(\mathbf {D}(s)\) is a positive bounded diagonal matrix for all \(0\leq s\leq J\), written as

where \(p_{i}\) is a known constant for \(i=1,2,\ldots,n\), and τ is a known time delay. The corresponding boundary and initial conditions of systems (1a)–(1b) and (2a)–(2b) will be given later. In both systems, the partial differences are defined as usual, i.e.,

where (3a) is the first-order difference scheme for the time variable s, and (3b) and (3c) are the first-order and second-order difference schemes for the space variable x, respectively.
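Because the displays (3a)–(3c) are not reproduced here, the following NumPy sketch assumes the usual forward-difference definitions: \(\Delta_{1}\mathbf{Z}(x,s)=\mathbf{Z}(x,s+1)-\mathbf{Z}(x,s)\), \(\Delta_{2}\mathbf{Z}(x,s)=\mathbf{Z}(x+1,s)-\mathbf{Z}(x,s)\), and \(\Delta_{2}^{2}\mathbf{Z}(x,s)=\mathbf{Z}(x+1,s)-2\mathbf{Z}(x,s)+\mathbf{Z}(x-1,s)\). The array layout `Z[x, s, i]` and the function names are hypothetical.

```python
import numpy as np

# Hypothetical storage: Z[x, s, i] with x = 0..I+1 (boundary rows included),
# s = 0..J, and state component i = 0..n-1.

def delta_time(Z):
    """Assumed (3a): Z(x, s+1) - Z(x, s), for s = 0..J-1."""
    return Z[:, 1:, :] - Z[:, :-1, :]

def delta_space(Z):
    """Assumed (3b): Z(x+1, s) - Z(x, s), for x = 0..I."""
    return Z[1:, :, :] - Z[:-1, :, :]

def delta_space2(Z):
    """Assumed (3c): Z(x+1, s) - 2 Z(x, s) + Z(x-1, s), for interior x = 1..I."""
    return Z[2:, :, :] - 2.0 * Z[1:-1, :, :] + Z[:-2, :, :]

# Sanity check: for Z = x^2 the second spatial difference equals 2 at every interior point.
I, J, n = 4, 3, 2
x = np.arange(I + 2, dtype=float)
Z = np.broadcast_to((x ** 2)[:, None, None], (I + 2, J + 1, n)).copy()
print(delta_space2(Z)[:, 0, 0])  # [2. 2. 2. 2.]
```

Under these definitions, the second-order difference is the first-order spatial difference applied twice and shifted by one point.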

The control objective of this paper is to design an ILC controller that tracks the given desired target \(\mathbf {Y}_{d}(x,s)\) based on the measurable system output \(\mathbf {Y}_{k}(x,s)\), so that the tracking error \({\mathbf {e}}_{k}(x,s)\) vanishes as the iteration number k tends to infinity, that is,

For convenience, some notations used in this paper are defined as follows.

(1) The norm \(\|\cdot\|\) is defined as \(\|{\mathbf {A}}\|=\sqrt{\lambda _{\max}({\mathbf {A}}^{\mathrm{T}}\mathbf {A})},{\mathbf {A}}\in\mathbb{R}^{n\times n}\), where \(\lambda_{\max}\) denotes the maximum eigenvalue. If \({\mathbf {A}}(s):\{0,1,2,\ldots,J\}\rightarrow\mathbb{R}^{n\times n}\), then \(\|{\mathbf {A}}\|=\sqrt{\lambda_{\max_{0\leq s\leq J }}({\mathbf {A}(s)}^{\mathrm{T}}\mathbf {A}(s))} \), where \(\lambda_{\max_{0\leq s\leq J }}\) denotes the largest eigenvalue of \({\mathbf {A}(s)}^{\mathrm{T}}\mathbf {A}(s)\) over all \(0\leq s\leq J\). For brevity, we write \(\bar{\lambda}_{A}=\|{\mathbf {A}}\|^{2}\).

(2) For \({\mathbf {f}}(x,s)\in\mathbb{R}^{n}\), \(0\leq x\leq I, 0\leq s\leq J\), the \(\mathbf {L}^{2}\)-norm of \({\mathbf {f}}(\cdot,s)\) is defined by \(\|{\mathbf {f}}(\cdot,s)\|^{2}_{\mathbf {L}^{2}}=\sum_{x=1}^{I}({\mathbf {f}(x,s)}^{\mathrm{T}}{\mathbf {f}}(x,s))\). For a given constant \(\lambda>0\), the \((\mathbf {L}^{2},\lambda)\)-norm of \({\mathbf {f}}(x,s)\) can be defined as

(3) For \(\mathbf {f}_{k}(x,s)\in\mathbb{R}^{n}\) and \(\xi \geq 1\), the squared norm \(\|\mathbf {f}_{k}\|^{2}_{(\mathbf {L}^{2},\lambda(\xi))}\) (the underlying norm satisfies the three norm axioms) is defined as follows:

If \(\xi=1\), then \(\|\mathbf {f}\|^{2}_{(\mathbf {L}^{2},\lambda(1))}=\|\mathbf {f}\| ^{2}_{(\mathbf {L}^{2},\lambda)}\).

(4) According to the Rayleigh–Ritz theorem, for a symmetric matrix \({\mathbf {A}}\in\mathbb{R}^{n\times n}\), we have \(\lambda_{1}x^{\mathrm{T}}x\leq x^{\mathrm{T}}{\mathbf {A}}x \leq \lambda_{n}x^{\mathrm{T}}x\) for \(x\in\mathbb{R}^{n}\), where \(\lambda_{i}\ (i=1,2,\ldots,n,\ \lambda _{1}\leq \cdots \leq \lambda_{n})\) are the eigenvalues of A. A similar result holds for a time-varying matrix: for symmetric \({\mathbf {A}(j)}\in\mathbb{R}^{n\times n}\ (0\leq j\leq J)\), we have \(\lambda_{\min_{0\leq j\leq J }}(\mathbf {A}(j))x^{\mathrm{T}}x\leq x^{\mathrm{T}}{\mathbf {A}{(j)}}x \leq \lambda_{\max_{0\leq j\leq J }}(\mathbf {A}{(j)})x^{\mathrm{T}}x\), where \(\lambda_{\min_{0\leq j\leq J }}(\mathbf {A}{(j)})\) and \(\lambda_{\max_{0\leq j\leq J }} ({\mathbf {A}}{(j)})\) denote the minimum and maximum eigenvalues of \({\mathbf {A}}(j)\) over \(0\leq j\leq J\), respectively.
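The quantities in (1) and (2) are straightforward to compute for sampled matrices and grid functions. In the sketch below, the array layouts and function names are our own. The \((\mathbf{L}^{2},\lambda)\)-norm is coded under the assumption that it is the maximum over s of \(\lambda^{s}\|\mathbf{f}(\cdot,s)\|^{2}_{\mathbf{L}^{2}}\), a form common in the ILC literature, because the defining display is not reproduced here.

```python
import numpy as np

def lam_bar(A_seq):
    """bar{lambda}_A = ||A||^2: largest eigenvalue of A(s)^T A(s) over all s.

    A_seq has shape (J + 1, rows, cols), one matrix per time step s = 0..J.
    """
    return max(float(np.linalg.eigvalsh(A.T @ A).max()) for A in A_seq)

def l2_norm_sq(f_s):
    """||f(., s)||_{L^2}^2 = sum_{x=1}^{I} f(x, s)^T f(x, s); f_s has shape (I, n)."""
    return float(np.sum(f_s * f_s))

def l2_lambda_norm_sq(f, lam):
    """ASSUMED (L^2, lambda)-norm: max over s of lam^s * ||f(., s)||_{L^2}^2.

    f has shape (I, J + 1, n); the paper's own definition is not reproduced here.
    """
    return max(lam ** s * l2_norm_sq(f[:, s, :]) for s in range(f.shape[1]))

A_seq = np.array([np.diag([3.0, 1.0]), np.diag([1.0, 2.0])])  # A(0), A(1)
print(lam_bar(A_seq))
```

For the two diagonal matrices shown, \(\bar{\lambda}_{A}\) equals 9, which comes from \(\mathbf{A}(0)\).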

The following lemmas will be used in later sections.

Lemma 1

(Discrete Gronwall’s inequality, [2, 5])

Let \(\{v(x)\}, \{B(x)\}\), and \(\{D(x)\}\) be real sequences defined for \(x\geq 0\) that satisfy

Then

Lemma 2

(Discrete Green’s formula for vector functions)

Under the zero boundary value condition, i.e., \({\mathbf {Z}}_{k}(0,s)=0={\mathbf {Z}}_{k}(I+1,s)\), for system (1a)–(1b), we have

Proof

In view of the boundary condition \({\mathbf {Z}}_{k}(0,s)=0={\mathbf {Z}}_{k}(I+1,s)\), we can obtain that

This completes the proof of Lemma 2. □
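Lemma 1 can be sanity-checked numerically. Because its displayed hypothesis and conclusion are not reproduced here, the sketch assumes the common form: if \(v(x+1)\leq B(x)v(x)+D(x)\) with \(B(x)\geq 0\), then \(v(x)\leq v(0)\prod_{i=0}^{x-1}B(i)+\sum_{j=0}^{x-1}D(j)\prod_{i=j+1}^{x-1}B(i)\). The code generates a sequence that satisfies the hypothesis with random positive slack and checks that it stays below the bound. The data are arbitrary.

```python
import numpy as np

def gronwall_bound(v0, B, D):
    """Assumed conclusion of Lemma 1, evaluated for x = 0..len(B):
    v0 * prod_{i<x} B(i) + sum_{j<x} D(j) * prod_{j<i<x} B(i)."""
    N = len(B)
    bound = np.empty(N + 1)
    for x in range(N + 1):
        total = v0 * np.prod(B[:x])          # empty product is 1
        for j in range(x):
            total += D[j] * np.prod(B[j + 1:x])
        bound[x] = total
    return bound

rng = np.random.default_rng(1)
N = 30
B = rng.uniform(0.0, 1.5, N)                 # B(x) >= 0, as the lemma assumes
D = rng.uniform(-1.0, 1.0, N)
v = np.empty(N + 1)
v[0] = 2.0
for x in range(N):
    # Any sequence with v(x+1) <= B(x) v(x) + D(x); the slack is random and positive.
    v[x + 1] = B[x] * v[x] + D[x] - rng.uniform(0.1, 0.5)

bound = gronwall_bound(v[0], B, D)
print(bool(np.all(v <= bound + 1e-9)))       # True
```

The bound is exactly the solution of the recursion taken with equality. Since \(B(x)\geq 0\), induction shows that any sequence satisfying the inequality lies below it.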

3 ILC design and convergence analysis

In this section, we propose our iterative learning control algorithms, establish sufficient conditions for their convergence, and give rigorous proofs. We first consider the system with time delay in state, i.e., system (1a)–(1b), in Sect. 3.1.

3.1 System with time delay in state

For system (1a)–(1b), we assume the following initial and boundary conditions:

for \(k=1,2,\ldots \) .

For system (1a)–(1b), we propose the following P-type iterative learning control algorithm, which generates the control input sequence \(\mathbf {U}_{k+1}(x,s) \):

where \(\mathbf {e}_{k}(x,s)=\mathbf {Y}_{d}(x,s)-{\mathbf {Y}}_{k}(x,s)\) is the kth output error corresponding to the kth input \(\mathbf {U}_{k}(x,s)\), and \(\mathbf {\Gamma}(s)\) is the gain matrix in the learning process. Thus, for system (1a)–(1b), (4) is transformed into

For simplicity of presentation, we denote

Then, from the kth and the \((k+1)\)th iterations of (1a)–(1b), we have

In order to derive the convergence conditions of the ILC algorithm described by (7), we give the following proposition.

Proposition 1

Under the initial and boundary conditions given in (5) and (6), for \(\bar {{\mathbf {Z}}}_{k}(x,s)\) (\(0\leq s\leq J\)) in (9a)–(9b), we have

where \(c_{1},c_{2},c_{3}\) are positive bounded constants that will be given later.

Proof

By (9a) and the definition of partial difference (3a), we have

Here and in later sections, \(\mathbf{I}\) denotes the identity matrix.

Multiplying both sides of (11) from the left by \({\bar{{\mathbf {Z}}}_{k}}^{\mathrm{T}}(x,s)\), we have

On the other hand, from (3a), we have

(13) yields

Then, substituting (12) into (14), we have

Summing both sides of (15) from \(x=1\) to I, we get

where \(\Omega_{i}\ (i=1,2,\ldots,5)\) denote the first through fifth terms on the right-hand side of the first equality in (16), respectively. We estimate each \(\Omega_{i}\) separately.

For \(\Omega_{1}\), by (9a) and (3c), we have

Using the elementary inequality \((\sum_{i=1}^{N}z_{i})^{2}\leq N\sum_{i=1}^{N}z_{i}^{2}\), one can show that

where

Furthermore, boundary condition (6) implies

Then

By Lemma 2 and the positive definiteness of \(D(s)\), we have

where \(d_{\min}=\min_{0\leq s\leq J}\{d_{1}(s),d_{2}(s),\ldots ,d_{n}(s)\}>0\) exists because \(p_{i}\) is known.
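The estimate obtained from Lemma 2 and the positive definiteness of \(\mathbf{D}(s)\) rests on summation by parts. The following is a minimal numerical check at one fixed s. It assumes that the vector Green’s formula reads \(\sum_{x=1}^{I}\mathbf{Z}^{\mathrm{T}}(x,s)\mathbf{D}(s)\Delta_{2}^{2}\mathbf{Z}(x,s)=-\sum_{x=0}^{I}[\Delta_{2}\mathbf{Z}(x,s)]^{\mathrm{T}}\mathbf{D}(s)\Delta_{2}\mathbf{Z}(x,s)\) with forward differences; this is our assumption, because the display is not reproduced. The check also uses \(d_{\min}\) as a lower bound on the diagonal of \(\mathbf{D}(s)\). All data are random and hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
I, n = 8, 3
d = rng.uniform(0.5, 2.0, n)
D = np.diag(d)                                  # positive diagonal D(s) at one fixed s

Z = np.zeros((I + 2, n))                        # rows x = 0..I+1
Z[1:I + 1] = rng.normal(size=(I, n))            # zero boundary: Z(0,s) = Z(I+1,s) = 0

second = Z[2:] - 2.0 * Z[1:-1] + Z[:-2]         # Delta_2^2 Z(x, s), x = 1..I
first = Z[1:] - Z[:-1]                          # Delta_2 Z(x, s),   x = 0..I

lhs = sum(Z[x] @ D @ second[x - 1] for x in range(1, I + 1))
rhs = -sum(first[x] @ D @ first[x] for x in range(I + 1))
print(np.isclose(lhs, rhs))                     # True: summation by parts

# Positive definiteness of D then gives lhs <= -d_min * sum_x |Delta_2 Z(x, s)|^2.
d_min = d.min()
print(lhs <= -d_min * np.sum(first ** 2) + 1e-12)  # True
```

Summation by parts turns the diffusion term into a nonpositive quantity. This is the sign information that the estimate needs.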

For \(\Omega_{3}\), \(\Omega_{4}\), and \(\Omega_{5}\), using the inequality \(y^{\mathrm{T}}H^{\mathrm{T}}Lz\leq \frac{1}{2} (y^{\mathrm{T}}H^{\mathrm{T}}Hy+z^{\mathrm{T}}L^{\mathrm{T}}Lz)\) (\(H\in\mathbb{R}^{n\times m},L \in\mathbb{R}^{n\times l},y\in\mathbb{R}^{m},z\in\mathbb {R}^{l}\)), we have

where the constant \(g=\lambda_{\max_{0\leq s\leq J}}[(I+2A(s))^{\mathrm{T}}(I+2A(s))]+1\).
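Both elementary inequalities used in these estimates are easy to sanity-check numerically. The first is \(2a^{\mathrm{T}}b\leq a^{\mathrm{T}}a+b^{\mathrm{T}}b\) with \(a=Hy\) and \(b=Lz\). The second is tested in its vector form, \(\|\sum_{i=1}^{N}v_{i}\|^{2}\leq N\sum_{i=1}^{N}\|v_{i}\|^{2}\). The dimensions below are arbitrary; N = 5 simply mirrors the factor 5 in \(c_{1},c_{2},c_{3}\).

```python
import numpy as np

rng = np.random.default_rng(3)
n, m, l, N = 4, 3, 2, 5          # arbitrary dimensions; N = 5 mirrors the factor 5 in c1, c2, c3
for _ in range(1000):
    H = rng.normal(size=(n, m))
    L = rng.normal(size=(n, l))
    y = rng.normal(size=m)
    z = rng.normal(size=l)
    a, b = H @ y, L @ z
    # y^T H^T L z <= (y^T H^T H y + z^T L^T L z) / 2, i.e. 2 a^T b <= a^T a + b^T b
    assert a @ b <= 0.5 * (a @ a + b @ b) + 1e-12

    # Vector form of (sum_i z_i)^2 <= N sum_i z_i^2: |sum_i v_i|^2 <= N sum_i |v_i|^2
    v = rng.normal(size=(N, n))
    total = v.sum(axis=0)
    assert total @ total <= N * np.sum(v * v) + 1e-12
print("both inequalities held on every sample")
```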

Finally, substituting (17)–(21) into (16), we obtain

where \(c_{1}=5\bar{\lambda}_{A-2D}+10{\bar{\lambda}}_{D}+g+\bar{\lambda}_{A}+\bar{\lambda}_{B}\), \(c_{2}=5\bar{\lambda}_{A_{\tau}}+1\), \(c_{3}=5\bar{\lambda}_{B}+1\).

This completes the proof of Proposition 1. □
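Given sampled coefficient matrices, the constants of Proposition 1 can be evaluated directly. The sketch follows the stated formulas for \(c_{1},c_{2},c_{3}\) and g. It reads \(\bar{\lambda}_{A-2D}\) as the largest eigenvalue of \((\mathbf{A}-2\mathbf{D})^{\mathrm{T}}(\mathbf{A}-2\mathbf{D})\) over s. The matrices in the usage lines are hypothetical and are not the example matrices of Sect. 4.

```python
import numpy as np

def lam_bar(M_seq):
    """Largest eigenvalue of M(s)^T M(s) over all time steps s."""
    return max(float(np.linalg.eigvalsh(M.T @ M).max()) for M in M_seq)

def proposition1_constants(A, A_tau, B, D):
    """c1, c2, c3 of Proposition 1 from sampled matrices of shape (J + 1, rows, cols)."""
    n = A.shape[1]
    g = lam_bar(np.eye(n) + 2.0 * A) + 1.0
    c1 = 5 * lam_bar(A - 2.0 * D) + 10 * lam_bar(D) + g + lam_bar(A) + lam_bar(B)
    c2 = 5 * lam_bar(A_tau) + 1.0
    c3 = 5 * lam_bar(B) + 1.0
    return c1, c2, c3

# Hypothetical time-varying data with n = 2 states, m = 1 input, J = 10.
J, n, m = 10, 2, 1
s = np.arange(J + 1)
A = np.stack([np.array([[-0.3, 0.05 * np.cos(0.1 * t)], [0.0, -0.2]]) for t in s])
A_tau = np.broadcast_to(0.05 * np.eye(n), (J + 1, n, n))
B = np.broadcast_to(np.array([[0.1], [0.05]]), (J + 1, n, m))
D = np.broadcast_to(np.diag([0.2, 0.25]), (J + 1, n, n))
print(proposition1_constants(A, A_tau, B, D))
```

As a simple check, take \(\mathbf{A}=0\), \(\mathbf{A}_{\tau}=0\), \(\mathbf{B}=0\), and \(\mathbf{D}=\mathbf{I}\). The formulas then give \(c_{1}=20+10+2=32\) and \(c_{2}=c_{3}=1\).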

With the help of the above lemmas and Proposition 1, the following theorem establishes convergence conditions for the partial difference system with time delay in state described by (1a)–(1b).

Theorem 1

If the gain matrix \(\mathbf {\Gamma}(s)\) in algorithm (7) satisfies

Then, under the initial and boundary conditions (5), (6), the output error of system (1a)–(1b) converges to zero in mean \(\mathbf{L}^{2}\) norm, that is,

Proof

According to algorithm (7), we have

where \({\hat{\mathbf {G}}}(s)={\mathbf {I}}-{\mathbf {G}}(s){\mathbf {\Gamma}}(s)\).

Multiplying both sides of (25) from the left by \({{\mathbf {e}}_{k+1}^{\mathrm{T}}}(x,s)\), we have

where \(\rho={\lambda}_{\max_{0\leq s\leq J}} ({\hat{{\mathbf {G}}}^{\mathrm{T}}(s)}\hat{\mathbf {G}}(s) )\) and \({\bar{\lambda}_{C}}=\lambda_{\max_{0\leq s\leq J}} (\mathbf {C}^{\mathrm{T}}(s){\mathbf {C}}(s) )\).
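The step from (25) to (26) can be made explicit under two assumptions, which we state here rather than take from the displays that are not reproduced. First, the output equation (1b) reads \(\mathbf{Y}_{k}=\mathbf{C}(s)\mathbf{Z}_{k}+\mathbf{G}(s)\mathbf{U}_{k}\). Second, (7) is the P-type update \(\mathbf{U}_{k+1}=\mathbf{U}_{k}+\mathbf{\Gamma}(s)\mathbf{e}_{k}\). We also write \(\bar{\mathbf{Z}}_{k}=\mathbf{Z}_{k+1}-\mathbf{Z}_{k}\). Then, using \((a-b)^{\mathrm{T}}(a-b)\leq 2a^{\mathrm{T}}a+2b^{\mathrm{T}}b\) in the last line:

```latex
\begin{aligned}
\mathbf{e}_{k+1}(x,s) &= \mathbf{e}_{k}(x,s)-\bigl[\mathbf{Y}_{k+1}(x,s)-\mathbf{Y}_{k}(x,s)\bigr]\\
&= \mathbf{e}_{k}(x,s)-\mathbf{C}(s)\bar{\mathbf{Z}}_{k}(x,s)-\mathbf{G}(s)\mathbf{\Gamma}(s)\mathbf{e}_{k}(x,s)\\
&= \hat{\mathbf{G}}(s)\mathbf{e}_{k}(x,s)-\mathbf{C}(s)\bar{\mathbf{Z}}_{k}(x,s),\\
\mathbf{e}_{k+1}^{\mathrm{T}}\mathbf{e}_{k+1} &\leq 2\,\mathbf{e}_{k}^{\mathrm{T}}\hat{\mathbf{G}}^{\mathrm{T}}\hat{\mathbf{G}}\,\mathbf{e}_{k}+2\,\bar{\mathbf{Z}}_{k}^{\mathrm{T}}\mathbf{C}^{\mathrm{T}}\mathbf{C}\,\bar{\mathbf{Z}}_{k}
\leq 2\rho\,\mathbf{e}_{k}^{\mathrm{T}}\mathbf{e}_{k}+2\bar{\lambda}_{C}\,\bar{\mathbf{Z}}_{k}^{\mathrm{T}}\bar{\mathbf{Z}}_{k}.
\end{aligned}
```

Under these assumptions, the factor 2 in front of ρ is the origin of the requirement \(2\rho<1\) used later in the proof.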

Summing both sides of (26) from \(x=1\) to I, we get

Multiplying both sides of (27) by \(\lambda^{s}\ (0<\lambda<1)\) and using the definition of \(\|\cdot\|_{\mathbf{L}^{2}}^{2}\), we have

thus

We rewrite the conclusion of Proposition 1 as follows:

Then, by Lemma 1, we obtain

By (5), the initial setting is the same in every iteration, so we have

We consider \(\sum_{t=0}^{s-1}c_{2}\|\bar{{\mathbf {Z}}}_{k}(\cdot,t-\tau)\| ^{2}_{{ \mathbf {L}}^{2}}c_{1}^{s}\) as follows:

If \(s\leq\tau\), then \(t-\tau\leq s-1-\tau<0\) for all \(0\leq t\leq s-1\),

If \(s>\tau\), then

Thus, from (32) and (33), we obtain

On the other hand, by iterative learning control scheme (7) again, we have

which yields

where \({\bar{\lambda}_{\Gamma}}=\lambda_{\max_{0\leq s\leq J}} (\mathbf {\Gamma}^{\mathrm{T}}(s)\mathbf {\Gamma}(s) )\). Hence

Then, by (34) and (36), we obtain

Multiplying both sides of (37) by \(\lambda^{s}\ (0<\lambda<1)\) and taking λ small enough that \(\lambda(c_{1}+c_{2})<1\), we get

then we have

namely

Therefore, substituting (39) into (29), we have

Let \(\delta=2\rho+\lambda\frac{2 c_{3}\bar{\lambda}_{C}\bar{\lambda}_{\Gamma}}{1-\lambda(c_{1}+c_{2})}\). Since \(2\rho<1\) and the second term of δ tends to zero as \(\lambda\rightarrow 0\), we can take λ small enough that \(\delta<1\). Rewrite (40) as

Then, from (41), we have

Selecting ξ such that \(\xi>1\) and \(\delta\xi<1\), and multiplying both sides of (42) by \(\xi^{k}\), we obtain

Therefore,

Since \(\xi>1\) and \(I,J,\lambda\) are fixed and bounded in (44), we obtain

This completes the proof of Theorem 1. □
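The closing argument of the proof is constructive. Once \(\rho, c_{1}, c_{2}, c_{3}, \bar{\lambda}_{C}\), and \(\bar{\lambda}_{\Gamma}\) are known, one can search for λ with \(\lambda(c_{1}+c_{2})<1\) and \(\delta<1\), and then pick any ξ with \(1<\xi<1/\delta\). The following is a minimal sketch. It takes the denominator of δ to be \(1-\lambda(c_{1}+c_{2})\), which is positive under the requirement \(\lambda(c_{1}+c_{2})<1\) imposed on λ in the proof. The constants in the usage line are hypothetical.

```python
def delta(lam, rho, c1, c2, c3, lam_C, lam_Gamma):
    """delta = 2 rho + lam * 2 c3 lam_C lam_Gamma / (1 - lam (c1 + c2))."""
    return 2.0 * rho + lam * 2.0 * c3 * lam_C * lam_Gamma / (1.0 - lam * (c1 + c2))

def choose_lambda(rho, c1, c2, c3, lam_C, lam_Gamma, lam=0.5):
    """Halve lam until lam (c1 + c2) < 1 and delta < 1; needs 2 rho < 1 (Theorem 1)."""
    if not 2.0 * rho < 1.0:
        raise ValueError("Theorem 1 requires 2 * rho < 1")
    while lam * (c1 + c2) >= 1.0 or delta(lam, rho, c1, c2, c3, lam_C, lam_Gamma) >= 1.0:
        lam /= 2.0   # delta -> 2 rho < 1 as lam -> 0, so this loop terminates
    d = delta(lam, rho, c1, c2, c3, lam_C, lam_Gamma)
    xi = 0.5 * (1.0 + 1.0 / d) if d > 0.0 else 2.0   # any xi with 1 < xi < 1 / delta works
    return lam, d, xi

# Hypothetical constants, for illustration only.
lam, d, xi = choose_lambda(rho=0.3, c1=32.0, c2=1.5, c3=2.0, lam_C=1.0, lam_Gamma=0.25)
print(lam, d, xi)
```

Halving λ is only one convenient search strategy. Any λ meeting both inequalities serves the proof equally well.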

Next, we will consider system (2a)–(2b) in the following Sect. 3.2.

3.2 System with time delay in input

We assume the following initial and boundary conditions for system (2a)–(2b):

for \(k=1,2,\ldots \) .

For system (2a)–(2b), we propose the iterative learning control scheme

where \(-\tau \leq s\leq J-\tau\).
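The displayed scheme (48) is not reproduced here. One natural reading, consistent with the range \(-\tau\leq s\leq J-\tau\), is \(\mathbf{U}_{k+1}(x,s)=\mathbf{U}_{k}(x,s)+\mathbf{\Gamma}_{\tau}(s)\mathbf{e}_{k}(x,s+\tau)\). Under this reading, the input applied at time s is corrected using the error it causes at time \(s+\tau\). The sketch below implements this assumed form with a hypothetical storage convention. It tests the update on a toy state-free delayed output \(\mathbf{Y}_{k}(x,s)=\mathbf{G}_{\tau}\mathbf{U}_{k}(x,s-\tau)\), which is not the paper's system (2a)–(2b).

```python
import numpy as np

def ilc_delay_update(U, e, Gamma_tau):
    """Hypothetical form of (48): U_{k+1}(x,s) = U_k(x,s) + Gamma_tau(s) e_k(x,s+tau).

    Storage convention (our assumption): U[x, i] holds U(x, s) at s = i - tau,
    so i = 0..J covers -tau <= s <= J - tau, and e[x, i] holds e(x, i).
    Under this convention e_k(x, s + tau) lives at the same index i as U(x, s).
    Shapes: U (I, J+1, m), e (I, J+1, l), Gamma_tau (J+1, m, l).
    """
    return U + np.einsum("iml,xil->xim", Gamma_tau, e)

# Toy check with a state-free delayed output Y(x,s) = G_tau U(x, s - tau),
# which in this storage is Y[x, i] = G_tau U[x, i]. All values are hypothetical.
rng = np.random.default_rng(4)
I, J, m, l = 5, 20, 2, 2
G_tau = np.array([[1.0, 0.2], [0.0, 0.8]])
Gamma = np.broadcast_to(0.7 * np.linalg.inv(G_tau), (J + 1, m, l))  # I - G_tau Gamma = 0.3 I
Y_d = rng.normal(size=(I, J + 1, l))
U = np.zeros((I, J + 1, m))
errors = []
for k in range(10):
    e = Y_d - np.einsum("lm,xim->xil", G_tau, U)
    errors.append(np.sqrt(np.sum(e ** 2)))
    U = ilc_delay_update(U, e, Gamma)
print(errors[-1] / errors[0])  # 0.3 ** 9 up to rounding
```

In this toy setting the error obeys \(\mathbf{e}_{k+1}=(\mathbf{I}-\mathbf{G}_{\tau}\mathbf{\Gamma}_{\tau})\mathbf{e}_{k}\) exactly. This reflects the role of \(\rho_{\tau}\) in Theorem 2.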

Theorem 2

If the gain matrix \(\mathbf {\Gamma_{\tau}}(s)\) in algorithm (48) satisfies

Then, under the initial setting (46) and boundary condition (47), the output error of system (2a)–(2b) converges to zero in mean \(\mathbf {L}^{2}\) norm, that is,

Proof

According to algorithm (48) with \(-\tau \leq s\leq J-\tau\), we have

that is,

Furthermore,

where \(\rho_{\tau}={\lambda}_{\max_{0\leq s\leq J}} (\hat{\mathbf {G}}_{\tau}^{\mathrm{T}}(s)\hat{\mathbf {G}}_{\tau}(s) )\).

On the other hand, similar to Proposition 1, we can obtain

where \(c_{4},c_{5}\) are positive bounded constants.

Applying Lemma 1 again to (54) and noting (47), we conclude

Multiplying both sides of (55) by \(\lambda^{j}\), we get

Substituting (56) into (53), we have

Since \(2\rho_{\tau}<1\) by the hypothesis of Theorem 2, we can find λ such that

Then, similar to Theorem 1, we can obtain that

Finally, we have

This completes the proof of Theorem 2. □

Remark 1

The discrete spatial and time variables take finitely many values, \(x=0,1,\ldots ,I\) and \(s=0,1,2,\ldots,J\), with \(I,J\) bounded. Convergence in the norms of Theorems 1 and 2 therefore implies convergence at every grid point, so (4) follows from Theorems 1 and 2. That is, the actual (iterative) output tracks the desired output exactly as the iteration number tends to infinity, for both system (1a)–(1b) and system (2a)–(2b).

4 Numerical simulations

To illustrate the effectiveness of the algorithms, we give two examples, one for system (1a)–(1b) and one for system (2a)–(2b). First, for system (1a)–(1b), let the system state, the control input, and the output be

The space and time variables satisfy \((x,s)\in[0,10]\times[0,200]\), and the time delay is \(\tau=5\). The coefficient matrices and gain matrices are as follows:

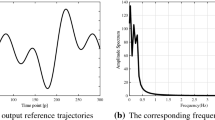

The desired trajectory is

From \({\hat{\mathbf {G}}}(s)={\mathbf {I}}-{\mathbf {G}}(s){\mathbf {\Gamma}}(s)\), a direct calculation gives \(\rho<0.5\), so the condition of Theorem 1 is satisfied.
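The calculation of ρ takes only a few lines. The actual \(\mathbf{G}(s)\) and \(\mathbf{\Gamma}(s)\) of this example appear only in the image above, so the matrices below are hypothetical. They serve only to show how the condition of Theorem 1 can be checked over the whole time horizon.

```python
import numpy as np

def rho(G_seq, Gamma_seq):
    """rho = max over s of lambda_max(Ghat(s)^T Ghat(s)), with Ghat(s) = I - G(s) Gamma(s)."""
    l = G_seq.shape[1]
    G_hat = np.eye(l) - G_seq @ Gamma_seq          # batched over s: shape (J + 1, l, l)
    return max(float(np.linalg.eigvalsh(M.T @ M).max()) for M in G_hat)

# Hypothetical data: time-varying 2x2 feedthrough G(s) and constant gain Gamma.
J = 200
G_seq = np.stack([np.array([[1.0, 0.1 * np.sin(0.05 * t)], [0.0, 1.2]]) for t in range(J + 1)])
Gamma_seq = np.broadcast_to(np.diag([0.8, 0.7]), (J + 1, 2, 2))
r = rho(G_seq, Gamma_seq)
print(r, r < 0.5)   # Theorem 1 asks for 2 * rho < 1
```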

Figures 1 and 3 show the desired surfaces, and Figs. 2 and 4 show the output surfaces at the 20th iteration. Figures 5 and 6 show the corresponding error surfaces at the 20th iteration. Figure 9 shows the history of the \({\mathbf {L}}^{2}\) error over the iterations; the maximum errors at the 20th iteration are \(2.0615\times10^{-7}\) and \(1.9855\times 10^{-6}\), respectively. Therefore, the iterative learning algorithm (7) is effective for system (1a)–(1b).
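The qualitative behavior can be reproduced with a minimal simulation of P-type learning on a state-delay system of this class. The sketch rests on several assumptions, stated explicitly here:

- The state equation is \(\Delta_{1}\mathbf{Z}_{k}=\mathbf{D}\Delta_{2}^{2}\mathbf{Z}_{k}+\mathbf{A}\mathbf{Z}_{k}+\mathbf{A}_{\tau}\mathbf{Z}_{k}(x,s-\tau)+\mathbf{B}\mathbf{U}_{k}\), with output \(\mathbf{Y}_{k}=\mathbf{C}\mathbf{Z}_{k}+\mathbf{G}\mathbf{U}_{k}\).
- Boundary values and the initial history are zero.
- The learning update is \(\mathbf{U}_{k+1}=\mathbf{U}_{k}+\mathbf{\Gamma}\mathbf{e}_{k}\).

All matrices, grid sizes, and the desired trajectory are hypothetical, not the values used for Figs. 1–10.

```python
import numpy as np

# Hypothetical problem data (the paper's matrices are not reproduced here).
I, J, tau = 10, 60, 5               # spatial points x = 1..I, times s = 0..J, state delay
n = m = l = 2
A = -0.3 * np.eye(n)
A_tau = 0.05 * np.eye(n)
B = 0.05 * np.eye(n, m)
C = np.eye(l, n)
G = np.array([[1.0, 0.2], [0.0, 1.0]])
D = 0.2 * np.eye(n)                 # positive diagonal diffusion matrix
Gamma = 0.7 * np.linalg.inv(G)      # gives I - G Gamma = 0.3 I, so rho = 0.09 < 0.5

x = np.arange(1, I + 1)[:, None]
s = np.arange(J + 1)[None, :]
Y_d = np.stack([np.sin(np.pi * x / (I + 1)) * np.sin(np.pi * s / J),
                0.5 * np.sin(2 * np.pi * x / (I + 1)) * (s / J)], axis=-1)  # (I, J+1, l)

def simulate(U):
    """Explicit time stepping of the ASSUMED form of (1a)-(1b):
    Delta_1 Z = D Delta_2^2 Z + A Z + A_tau Z(., s - tau) + B U,   Y = C Z + G U,
    with Z(0,s) = Z(I+1,s) = 0 and zero history Z(x,s) = 0 for s <= 0."""
    Z = np.zeros((I + 2, J + 1, n))                       # includes boundary rows x = 0, I+1
    for t in range(J):
        lap = Z[2:, t] - 2.0 * Z[1:-1, t] + Z[:-2, t]     # Delta_2^2 Z(x, t), x = 1..I
        delayed = Z[1:-1, t - tau] @ A_tau.T if t >= tau else 0.0
        Z[1:-1, t + 1] = (Z[1:-1, t] + lap @ D.T + Z[1:-1, t] @ A.T
                          + delayed + U[:, t] @ B.T)
    return Z[1:-1] @ C.T + U @ G.T                        # Y(x, s), shape (I, J+1, l)

U = np.zeros((I, J + 1, m))
errors = []
for k in range(20):
    e = Y_d - simulate(U)                                 # e_k = Y_d - Y_k
    errors.append(np.sqrt(np.sum(e ** 2)))                # discrete L^2 norm over (x, s)
    U = U + e @ Gamma.T                                   # P-type update U_{k+1} = U_k + Gamma e_k
print(errors[0], errors[-1])
```

With \(\mathbf{I}-\mathbf{G}\mathbf{\Gamma}=0.3\mathbf{I}\) and weak coupling through \(\mathbf{B}\), the discrete \(L^{2}\) error in this toy setting shrinks at every iteration. With stronger dynamics, the error can grow transiently before it decays, which is one reason ILC analyses, including the one above, work with λ-weighted norms.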

Second, we consider system (2a)–(2b) with input time delay. Let

and select the time delay \(\tau=8\) and \(\mathbf {G}_{\tau}=\mathbf {G}\); the remaining settings are the same as for system (1a)–(1b). Figures 7 and 8 show the two error surfaces at the 20th iteration, whose maximum values are \(0.8856\times10^{-15}\) and \(0.8913\times10^{-15}\), respectively. In addition, Figs. 9 and 10 show that the tracking errors are already acceptable at the 10th iteration. The two error curves in Figs. 9 and 10 also demonstrate the efficacy of the proposed algorithms (7) and (48).

5 Conclusions

In this paper, the ILC problem for parabolic partial difference systems with time delay has been studied. Convergence results were proved for two different time delay cases, namely state delay and input delay. Simulation studies illustrate the applicability of the theoretical results.

References

Roesser, R.P.: A discrete state-space model for linear image processing. IEEE Trans. Autom. Control 20(1), 1–10 (1975)

Cheng, S.S.: Partial Difference Equations. Advances in Discrete Mathematics and Applications, vol. 3. Taylor Francis, London (2003)

Cheng, S.S.: Sturmian theorems for hyperbolic partial difference equations. J. Differ. Equ. Appl. 2(4), 375–387 (1996)

Wong, P.J.Y., Agarwal, R.P.: Nonexistence of unbounded nonoscillatory solutions of partial difference equations. J. Math. Anal. Appl. 214(2), 503–523 (1997)

Xie, S.L., Cheng, S.S.: Stability criteria for parabolic type partial difference equations. J. Comput. Appl. Math. 75(1), 57–66 (1996)

Zhang, B.G., Tian, C.J.: Oscillation criteria of a class of partial difference equation with delays. Comput. Math. Appl. 48(1), 291–303 (2004)

Liu, S.T., Guan, X.P., Jun, Y.: Nonexistence of positive solutions of a class of nonlinear delay partial difference equations. J. Math. Anal. Appl. 234(2), 361–371 (1999)

Arimoto, S., Kawamura, S., Miyazaki, F.: Bettering operation of robots by learning. J. Robot. Syst. 1(2), 123–140 (1984)

Sun, M.X., Wang, D.W.: Iterative learning control design for uncertain dynamic systems with delayed states. Dyn. Control 10(4), 341–357 (2000)

Sun, M.X., Wang, D.W.: Initial condition issues on iterative learning control for nonlinear systems with time delay. Int. J. Syst. Sci. 32(11), 1365–1375 (2001)

Zhu, Q., Hu, G.-D., Liu, W.-Q.: Iterative learning control design method for linear discrete-time uncertain systems with iteratively periodic factors. IET Control Theory Appl. 9(15), 2305–2311 (2015)

Li, X.-D., Chow, T.W.S., Ho, J.K.L.: 2D system theory based iterative learning control for linear continuous systems with time delays. IEEE Trans. Circuits Syst. I, Regul. Pap. 52(7), 1421–1430 (2005)

He, W., Meng, T.T., Huang, D.Q., Li, X.F.: Adaptive boundary iterative learning control for an Euler–Bernoulli beam system with input constrain. IEEE Trans. Neural Netw. Learn. Syst. 29(5), 1539–1549 (2018)

Dai, X.S., Xu, C., Tian, S.P., Li, Z.L.: Iterative learning control for MIMO second-order hyperbolic distributed parameter systems with uncertainties. Adv. Differ. Equ. 2016(1), 94 (2016)

Liu, S.D., Wang, J.R., Wei, W.: A study on iterative learning control for impulsive differential equations. Commun. Nonlinear Sci. Numer. Simul. 24(1), 4–10 (2015)

Shen, D., Xu, J.-X.: A novel Markov chain based ILC analysis for linear stochastic systems under general data dropouts environments. IEEE Trans. Autom. Control 62(11), 5850–5857 (2018)

Li, Y., Jiang, W.: Fractional order nonlinear systems with delay in iterative learning control. Appl. Math. Comput. 257(15), 546–552 (2015)

Dai, X., Mei, S., Tian, S.: D-type iterative learning control for a class of parabolic partial difference systems. Trans. Inst. Meas. Control 40(10), 3105–3114 (2018)

Dai, X., Tian, S., Guo, Y.: Iterative learning control for discrete parabolic distributed parameter systems. Int. J. Autom. Comput. 12(3), 316–322 (2015)

Liang, C., Wang, J., Feckan, M.: A study on ILC for linear discrete systems with single delay. J. Differ. Equ. Appl. 24(3), 358–374 (2018). https://doi.org/10.1080/10236198.2017.140922

Meng, D.Y., Jia, Y.M., Du, J.P., Yu, F.: Robust iterative learning control design for uncertain time-delay systems based on a performance index. IET Control Theory Appl. 4(5), 759–772 (2010)

Cichy, B., Galkowski, K., Rogers, E.: Iterative learning control for spatio-temporal dynamics using Crank–Nicholson discretization. Multidimens. Syst. Signal Process. 23(1), 185–208 (2012)

Funding

The authors gratefully acknowledge the financial support of the National Natural Science Foundation of China (Grant Nos. 61863004, 61364006, 61563005), the Natural Science Foundation of Guangxi (Grant No. 2017GXNSFAA198179), and the Key Laboratory of Industrial Process Intelligent Control Technology of Guangxi Higher Education Institutes Director Foundation (No. IPICT-2016-02).

Author information


Contributions

This work was carried out in collaboration among all authors. XD raised these interesting problems in this research. XM (xuemin@ku.edu), YZ (zhaoyong_er@126.com), and GX (253475365@qq.com) proved the theorems, interpreted the results, and wrote the article. The numerical example is given by XY (staryuz@163.com). All authors defined the research theme, read, and approved the manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Dai, X., Tu, X., Zhao, Y. et al. Iterative learning control for MIMO parabolic partial difference systems with time delay. Adv Differ Equ 2018, 344 (2018). https://doi.org/10.1186/s13662-018-1797-2


DOI: https://doi.org/10.1186/s13662-018-1797-2