Abstract

In this paper, the problem of synchronization control is investigated for complex dynamical networks with discrete interval and distributed time-varying delays. The main objective is to design a proper pinning controller under which the error system of the complex dynamical networks is asymptotically stable. Based on Lyapunov stability theory and the linear matrix inequality (LMI) technique, a suitable Lyapunov-Krasovskii functional is constructed in terms of the Kronecker product, and a novel synchronization criterion is then obtained. Finally, a numerical example is given to illustrate the effectiveness of the proposed method.


1 Introduction

Over the past two decades, extensive efforts have been devoted to research on complex dynamical networks owing to their theoretical importance and their practical applications in various fields, such as the Internet and the World Wide Web, food webs, genetic networks, and neural networks [1–6]. Many of these networks exhibit complexity both in their overall topological properties and in the dynamical properties of the network nodes and coupled units. It is therefore not surprising that research interest in the dynamical behavior of complex dynamical networks has surged.

An important problem in the study of complex dynamical networks is synchronization, where the goal is to design a proper controller under which the considered network synchronizes. Many significant advances on this issue have been reported in the literature; see [7–22] and the references therein. Non-fragile approaches have been studied in [7–10]. The authors of [7] investigated controlled synchronization for complex dynamical networks with random delayed information exchanges, using stochastic variables to model randomly occurring phenomena. Reference [9] investigated non-fragile synchronization of bidirectional associative memory neural networks with time-varying delays and derived sufficient conditions guaranteeing synchronization based on a master-slave approach. Discrete-time complex dynamical networks with interval time-varying delays were studied in [11], where randomly occurring perturbations, assumed to follow a binomial sequence, were considered. In [12], complex dynamical networks were synchronized by a sampled-data control method, and pinning sampled-data control for synchronization of complex networks with probabilistic time-varying delays was studied in [14] by applying a quadratic convex approach. Impulsive control for synchronization of complex networks has also been investigated [15–17]. In [15], impulsive control was applied to the synchronization of complex dynamical networks with coupling delays, and two types of time-varying coupling delays were considered: delays without any restriction on their derivatives, and delays whose derivatives are strictly less than one. Pinning control [18–22] is one of the most commonly used methods for achieving synchronization among nodes.
In [18], pinning synchronization of nonlinearly coupled complex networks with time-varying delays was investigated using M-matrix strategies. These works all achieve synchronization through pinning control, albeit by different methods. For instance, [19] investigated pinning adaptive hybrid synchronization of two general complex dynamical networks with mixed coupling, and the authors of [22] studied intermittent pinning control for cluster synchronization of delayed heterogeneous dynamical networks, in which the network consists of non-identical delayed dynamical nodes. As these works show, pinning control can effectively reduce the number of nodes that must be controlled for the network to achieve synchronization, which makes it valuable to study the synchronization of complex dynamical networks via pinning control.

Time delays are common in various systems, and it is well known that they can destabilize the behavior of networks, which makes systems with time delays both complicated and interesting. In particular, time delays can degrade synchronization performance or even destroy synchronization, so they should be taken into account in the synchronization of complex networks. Mixed delays, which include both discrete and distributed delays, bring the model closer to practical applications. Many papers have studied delays in complex networks using different methods; see, for instance, [23–33]. In [25], the authors considered synchronization and exponential estimates of complex networks with mixed time-varying coupling delays: a generalized complex network model involving both neutral and retarded delays was presented, and a less conservative delay-dependent synchronization criterion was derived by utilizing the free-weighting matrix technique. In [27], delay-distribution-dependent synchronization of T-S fuzzy stochastic complex networks with mixed time delays was studied, where the mixed time delays are composed of discrete and distributed delays and the discrete delays are assumed to be random with a probability distribution known a priori. In [30], output feedback tracking control with prescribed performance was investigated for stochastic nonlinear time-delay systems. The synchronization of T-S fuzzy complex dynamical networks with time delays, impulsive delays, and stochastic effects was studied in [33] based on T-S fuzzy methods and the LMI approach. The above discussion shows that time delays play a significant role in such systems.
Therefore, it is worthwhile to study the synchronization of complex dynamical networks with mixed mode-dependent time delays.

Inspired by the above discussion, in this paper we deal with the synchronization of complex dynamical networks with mixed mode-dependent time delays. The main contributions of this paper are summarized as follows: (1) a more general system is introduced to address practical problems; (2) sufficient conditions are obtained that ensure synchronization of the complex dynamical networks; (3) a pinning controller is designed to ensure synchronization of the complex dynamical networks; (4) the effect of the designed controller is illustrated in Figures 1-6; (5) the simulation results demonstrate the feasibility of the method.

The rest of the paper is organized as follows. In Section 2, the complex dynamical network model is introduced, together with several assumptions, lemmas, and definitions. The main results on the synchronization of complex dynamical networks are given in Section 3. In Section 4, a numerical example illustrates the effectiveness of the obtained results. Conclusions are drawn in Section 5.

Notation: The notation in this paper is standard. \(\mathbb{R}^{n}\) denotes the n-dimensional Euclidean space, and \(\mathbb{R}^{m \times n}\) represents the set of all \(m \times n\) real matrices. For real symmetric matrices X and Y, the notation \(X > Y\) (respectively, \(X \geqslant Y\)) means that \(X - Y\) is positive definite (respectively, positive semi-definite). The superscript ‘T’ denotes matrix transposition. Moreover, in symmetric block matrices, ‘∗’ is used as an ellipsis for the terms that are introduced by symmetry, and \(\operatorname{diag}\{\cdots\}\) denotes a block-diagonal matrix. Let \(\tau > 0\) and let \(C([-\tau,0],\mathbb{R}^{n})\) denote the family of continuous functions ϕ from \([-\tau,0]\) to \(\mathbb{R}^{n}\). \((\Omega,F,\{F_{t}\}_{t \geqslant 0},P)\) is a complete probability space with a filtration \(\{F_{t}\}_{t \geqslant 0}\) satisfying the usual conditions. \(\mathcal{E}\{\cdot\}\) represents the mathematical expectation, and \(\lambda_{\max}(\cdot)\) denotes the largest eigenvalue of a matrix. Unless explicitly stated otherwise, matrices are assumed to have compatible dimensions.

2 System description and preliminary lemmas

Let \(\{ r(t), t \geqslant 0\}\) be a right-continuous Markov chain on the probability space \((\Omega,F,\{F_{t}\}_{t \geqslant 0},P)\) taking values in the finite set \(S = \{1,2,\ldots,m\}\) with generator \(\Pi = (\pi_{ij})_{m \times m}\) (\(i,j \in S\)) given by

\[ \Pr\{ r(t+\Delta t) = j \mid r(t) = i \} = \begin{cases} \pi_{ij}\Delta t + o(\Delta t), & i \ne j, \\ 1 + \pi_{ii}\Delta t + o(\Delta t), & i = j, \end{cases} \]

where \(\Delta t > 0\), \(\lim_{\Delta t \to 0} o(\Delta t)/\Delta t = 0\), and \(\pi_{ij}\) is the transition rate from mode i to mode j, satisfying \(\pi_{ij} \geqslant 0\) for \(i \ne j\) and \(\pi_{ii} = -\sum_{j = 1, j \ne i}^{m} \pi_{ij}\) (\(i \in S\)).
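The generator conditions above can be made concrete with a short Python sketch (NumPy assumed available). It checks that a candidate rate matrix has non-negative off-diagonal entries and zero row sums, and draws one sample path of \(r(t)\) by the standard construction for a continuous-time Markov chain: the chain stays in mode i for an exponentially distributed time with rate \(-\pi_{ii}\), then jumps to \(j \ne i\) with probability \(\pi_{ij}/(-\pi_{ii})\). The function names and the two-mode matrix are hypothetical and are not taken from Example 1; modes are indexed from 0 rather than 1.

```python
import numpy as np

def is_generator(Pi, tol=1e-12):
    """Check pi_ij >= 0 for i != j and zero row sums (pi_ii = -sum_{j != i} pi_ij)."""
    Pi = np.asarray(Pi, dtype=float)
    off_diag = Pi - np.diag(np.diag(Pi))
    return bool(np.all(off_diag >= -tol) and np.allclose(Pi.sum(axis=1), 0.0, atol=tol))

def sample_markov_path(Pi, r0, t_end, rng):
    """Return jump times and (0-based) modes of a continuous-time Markov chain on [0, t_end)."""
    Pi = np.asarray(Pi, dtype=float)
    times, modes = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate = -Pi[i, i]                          # total exit rate of mode i
        if rate <= 0.0:                           # absorbing mode: no further jumps
            break
        t += rng.exponential(1.0 / rate)          # exponential holding time in mode i
        if t >= t_end:
            break
        probs = Pi[i].copy()
        probs[i] = 0.0                            # jump only to modes j != i
        i = int(rng.choice(len(probs), p=probs / rate))
        times.append(t)
        modes.append(i)
    return np.array(times), np.array(modes)

# Hypothetical two-mode generator (not the matrix used in Example 1).
Pi = np.array([[-3.0, 3.0],
               [4.0, -4.0]])
rng = np.random.default_rng(0)
times, modes = sample_markov_path(Pi, r0=0, t_end=10.0, rng=rng)
print(is_generator(Pi), len(times))
```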

Consider the following Markovian jumping complex network with mixed mode-dependent time-varying delays, consisting of N nodes, each of which is an n-dimensional dynamical subsystem:

where \(x_{k}(t) = (x_{k1}(t), x_{k2}(t), \ldots, x_{kn}(t))^{T} \in \mathbb{R}^{n}\) denotes the state vector of the kth node; \(D(r(t)) = \operatorname{diag}\{d_{1,r(t)}, d_{2,r(t)}, \ldots, d_{n,r(t)}\} > 0\); \(A(r(t))\), \(B(r(t))\), and \(C(r(t)) \in \mathbb{R}^{n \times n}\) are, respectively, the connection weight matrix, the discretely delayed connection weight matrix, and the distributively delayed connection weight matrix; \(\Gamma(r(t))\) is the inner-coupling matrix; \(c > 0\) represents the coupling strength; the bounded functions \(\tau_{1}(t)\) and \(\tau_{2}(t)\) represent the unknown discrete delay and distributed delay of the system, with \(0 \leqslant \tau_{1}(t) \leqslant \tau_{1}\), \(0 \leqslant \tau_{2}(t) \leqslant \tau_{2}\), \(0 \leqslant \dot{\tau}_{1}(t) \leqslant d_{1}\), and \(0 \leqslant \dot{\tau}_{2}(t) \leqslant d_{2}\), where \(\tau_{1}\), \(\tau_{2}\), \(d_{1}\), and \(d_{2}\) are positive constants. \(G = (G_{kj})_{N \times N}\) denotes the coupling configuration matrix: if there is a connection between node k and node j (\(k \ne j\)), then \(G_{kj} = G_{jk} = 1\); otherwise \(G_{kj} = G_{jk} = 0\). The row sums of G are zero, i.e. \(\sum_{j = 1, j \ne k}^{N} G_{kj} = -G_{kk}\), \(k = 1,2,\ldots,N\).
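The structure of G described above can be illustrated by assembling it from an undirected edge list: each connected pair gets off-diagonal entries equal to 1, and each diagonal entry is chosen so that its row sums to zero. The sketch below uses a hypothetical three-node path graph, which is not necessarily the matrix G of Example 1; node indices are 0-based.

```python
import numpy as np

def coupling_matrix(n_nodes, edges):
    """Build G with G_kj = G_jk = 1 for each edge (k != j) and G_kk = -sum_{j != k} G_kj."""
    G = np.zeros((n_nodes, n_nodes))
    for k, j in edges:
        if k == j:
            raise ValueError("self-loops are not allowed")
        G[k, j] = G[j, k] = 1.0
    # The diagonal is still zero here, so each row sum equals its off-diagonal sum.
    np.fill_diagonal(G, -G.sum(axis=1))
    return G

# Hypothetical path graph 0 - 1 - 2.
G = coupling_matrix(3, [(0, 1), (1, 2)])
print(G)
```

Because G is symmetric with zero row sums, \(-G\) is the Laplacian of the underlying graph, so all eigenvalues of G are non-positive and 0 is one of them.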

Correspondingly, the response complex networks with the control inputs \({u_{k}}(t) \in{\mathbb{R}^{n}}\) (\(k = 1,2, \ldots,N\)) can be written as

where \({u_{k}}(t)\) is given as

For simplicity, when \(r(t) = i\) we denote \(D(r(t)) = D_{i}\), \(A(r(t)) = A_{i}\), \(B(r(t)) = B_{i}\), \(C(r(t)) = C_{i}\), and \(\Gamma(r(t)) = \Gamma_{i}\). Letting the error be \(e_{k}(t) = y_{k}(t) - x_{k}(t)\), we arrive at the error dynamical network:

where

Using the Kronecker product, the error network (5) can be rewritten in the following compact form:

where

The purpose of this paper is to design a series of pinning controllers (4) that ensure the asymptotic synchronization of the complex dynamical networks (2). Before proceeding with the main results, we present the following definitions, lemmas, and assumptions.
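The compact form (6) is obtained with the Kronecker product. As a sketch, assuming the coupling term of the error network (5) has the usual diffusive form \(c\sum_{j} G_{kj}\Gamma_{i} e_{j}(t)\) (the displayed equations are not reproduced here), stacking \(e(t) = [e_{1}^{T}(t), \ldots, e_{N}^{T}(t)]^{T}\) turns the node-wise coupling into \(c(G \otimes \Gamma_{i})e(t)\), and a node-wise term such as \(D_{i}e_{k}(t)\) into \((I_{N} \otimes D_{i})e(t)\). The NumPy snippet below checks both identities on random data; all sizes and matrices are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
N, n, c = 3, 2, 1.0                       # hypothetical network size, node dimension, coupling strength
G = np.array([[-1.0, 1.0, 0.0],
              [1.0, -2.0, 1.0],
              [0.0, 1.0, -1.0]])          # zero row sums
Gamma = np.diag([1.0, 0.5])               # hypothetical inner-coupling matrix
D = np.diag([2.0, 3.0])                   # hypothetical diagonal matrix playing the role of D_i
E = rng.standard_normal((N, n))           # row k holds e_k(t)

# Node-wise evaluation: c * sum_j G_kj * Gamma @ e_j and D @ e_k for each node k.
coupling_nodewise = np.stack([c * sum(G[k, j] * Gamma @ E[j] for j in range(N)) for k in range(N)])
decay_nodewise = np.stack([D @ E[k] for k in range(N)])

# Compact evaluation on the stacked vector e = [e_1; e_2; ...; e_N].
e = E.reshape(-1)                         # row-major flatten stacks e_1, e_2, ... in order
coupling_compact = c * np.kron(G, Gamma) @ e
decay_compact = np.kron(np.eye(N), D) @ e

print(np.allclose(coupling_nodewise.reshape(-1), coupling_compact),
      np.allclose(decay_nodewise.reshape(-1), decay_compact))
```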

Definition 2.1

[34]

Complex dynamical networks (2) are said to be asymptotically synchronized by pinning control if \(\lim_{t \to \infty} \Vert x_{k}(t) - y_{k}(t) \Vert = 0\), \(k = 1,2,\ldots,N\).

Lemma 2.1

(Jensen’s inequality)

For a positive definite matrix M and scalars \(h_{U} > h_{L} > 0\) such that the following integrals are well defined, we have:

(i) \(- (h_{U} - h_{L})\int_{t - h_{U}}^{t - h_{L}} x^{T}(s) M x(s)\,ds \le - \left(\int_{t - h_{U}}^{t - h_{L}} x^{T}(s)\,ds\right) M \left(\int_{t - h_{U}}^{t - h_{L}} x(s)\,ds\right)\),

(ii) \(- \frac{h_{U}^{2} - h_{L}^{2}}{2}\int_{t - h_{U}}^{t - h_{L}} \int_{s}^{t} x^{T}(u) M x(u)\,du\,ds \le - \left(\int_{t - h_{U}}^{t - h_{L}} \int_{s}^{t} x^{T}(u)\,du\,ds\right) M \left(\int_{t - h_{U}}^{t - h_{L}} \int_{s}^{t} x(u)\,du\,ds\right)\).
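Part (i) of Lemma 2.1 can be sanity-checked numerically. The sketch below samples a hypothetical vector function \(x(s) \in \mathbb{R}^{2}\) on \([t - h_{U}, t - h_{L}]\), approximates both integrals with the trapezoidal rule, and compares the two sides for a random positive definite M. Because the trapezoidal weights are positive and sum to \(h_{U} - h_{L}\), the discretized inequality is itself an instance of Jensen's inequality, so the comparison does not hinge on discretization error.

```python
import numpy as np

def trapezoid_weights(s):
    """Trapezoidal-rule weights for sample points s (positive, summing to s[-1] - s[0])."""
    h = np.diff(s)
    w = np.zeros_like(s)
    w[:-1] += h / 2
    w[1:] += h / 2
    return w

rng = np.random.default_rng(2)
t, h_L, h_U = 5.0, 0.5, 2.0                         # hypothetical time and delay bounds
s = np.linspace(t - h_U, t - h_L, 2001)
x = np.stack([np.sin(3 * s), np.exp(-s) + s**2])    # hypothetical x(s) in R^2, shape (2, len(s))
A = rng.standard_normal((2, 2))
M = A @ A.T + 0.1 * np.eye(2)                       # random positive definite M

w = trapezoid_weights(s)
int_x = x @ w                                       # approximates the integral of x(s) ds
int_xMx = np.einsum('is,ij,js->s', x, M, x) @ w     # approximates the integral of x^T(s) M x(s) ds

lhs = -(h_U - h_L) * int_xMx
rhs = -int_x @ M @ int_x
print(lhs <= rhs)
```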

Lemma 2.2

[35]

For any constant matrix \(R \in \mathbb{R}^{n \times n}\) with \(R = R^{T} > 0\), scalar \(\gamma > 0\), and vector function \(\phi: [0,\gamma] \to \mathbb{R}^{n}\) such that the integrations concerned are well defined, the following inequality holds:

Lemma 2.3

[36]

Let \(f(\cdot)\) be a non-negative function defined on \([0,+\infty)\). If \(f(\cdot)\) is Lebesgue integrable and uniformly continuous on \([0,+\infty)\), then \(\lim_{t \to \infty} f(t) = 0\).

Lemma 2.4

[37]

For a given symmetric positive definite matrix \(R > 0\) and any differentiable signal \(\omega: [a,b] \to \mathbb{R}^{n}\), the following inequality holds:

where \(\eta(a,b) = \omega(b) - \omega(a)\), \({\nu_{0}}(a,b) = \frac{{\omega(b) + \omega(a)}}{2} - \frac{1}{{b - a}}\int_{a}^{b} {\omega(u)} \,du\).
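The displayed inequality of Lemma 2.4 is not reproduced above. Assuming it is the Wirtinger-based integral inequality of [37], which in the notation of \(\eta\) and \(\nu_{0}\) reads \(\int_{a}^{b} \dot{\omega}^{T}(u) R \dot{\omega}(u)\,du \geqslant \frac{1}{b-a}\eta^{T}R\eta + \frac{12}{b-a}\nu_{0}^{T}R\nu_{0}\), the sketch below evaluates both sides for a hypothetical smooth signal and also reports the plain Jensen bound (the first term alone). The gap between the two lower bounds illustrates why Remark 1 describes the resulting condition as less conservative.

```python
import numpy as np

a, b = 0.0, 2.0
u = np.linspace(a, b, 20001)
omega = np.stack([np.sin(u), u**3])             # hypothetical signal omega(u) in R^2
omega_dot = np.stack([np.cos(u), 3 * u**2])     # its exact derivative
R = np.array([[2.0, 0.5],
              [0.5, 1.0]])                      # hypothetical R > 0

def trapz(y, x):
    """Trapezoidal rule along the last axis (avoids NumPy-version differences in np.trapz)."""
    return np.sum((y[..., 1:] + y[..., :-1]) * np.diff(x) / 2, axis=-1)

lhs = trapz(np.einsum('iu,ij,ju->u', omega_dot, R, omega_dot), u)
eta = omega[:, -1] - omega[:, 0]                                  # omega(b) - omega(a)
nu0 = (omega[:, -1] + omega[:, 0]) / 2 - trapz(omega, u) / (b - a)
jensen = eta @ R @ eta / (b - a)                                  # Jensen-type lower bound
wirtinger = jensen + 12 * nu0 @ R @ nu0 / (b - a)                 # assumed Lemma 2.4 lower bound
print(lhs >= wirtinger >= jensen)
```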

Assumption 2.1

The nonlinear function \(f:{\mathbb{R}^{n}} \to{\mathbb{R}^{n}}\) satisfies

where U and \(V \in \mathbb{R}^{n \times n}\) are constant matrices with \(V - U > 0\). For simplicity of presentation and without loss of generality, it is assumed that \(f(0) = 0\).
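The displayed condition of Assumption 2.1 is not reproduced above. Assuming it is the usual sector-bounded form \([f(x) - f(y) - U(x - y)]^{T}[f(x) - f(y) - V(x - y)] \leqslant 0\) for all \(x, y \in \mathbb{R}^{n}\), the sketch below checks it on random sample pairs for the componentwise saturation \(f_{j}(x) = \frac{1}{2}(\vert x_{j} + 1 \vert - \vert x_{j} - 1 \vert)\) used in Example 1, with the illustrative choice \(U = 0\), \(V = I\). These are not necessarily the matrices used in Example 1.

```python
import numpy as np

def sat(x):
    """Componentwise saturation f_j(x) = (|x_j + 1| - |x_j - 1|) / 2."""
    return 0.5 * (np.abs(x + 1) - np.abs(x - 1))

def sector_value(f, x, y, U, V):
    """Left-hand side of the assumed sector condition; it should be <= 0."""
    df, dx = f(x) - f(y), x - y
    return (df - U @ dx) @ (df - V @ dx)

n = 2
U, V = np.zeros((n, n)), np.eye(n)                 # illustrative sector bounds with V - U > 0
rng = np.random.default_rng(3)
samples = rng.uniform(-4, 4, size=(10000, 2, n))   # random pairs (x, y)
worst = max(sector_value(sat, x, y, U, V) for x, y in samples)
print(worst <= 1e-12)
```

The choice \(U = 0\), \(V = I\) works here because each saturation component has slopes between 0 and 1.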

Remark 1

In Assumption 2.1, the sector-bounded description of the nonlinear term is quite general and includes the common Lipschitz and norm-bounded conditions as special cases. It also makes it possible to reduce the conservatism of the main results caused by quantifying the nonlinear functions, via well-known convex optimization techniques. In addition, Lemma 2.4 plays a key role in deriving a delay-dependent condition that is less conservative than one based on the general Jensen inequality used in [38].

3 Main results

Theorem 3.1

The error dynamical network (6) with mixed time-varying delays \(\tau_{1}(t)\) and \(\tau_{2}(t)\) is asymptotically stable if there exist constant matrices \(P_{i} > 0\), \(R_{kl} > 0\), \(N_{kl} > 0\) (\(l = 1,2,3,4\)), \(W_{kb} > 0\), \(M_{kb} > 0\), and \(K_{kb}\) (\(b = 1,2\)) with appropriate dimensions such that the following LMIs hold for all \(k \in S\):

where

Proof

Define the Lyapunov functional candidate as follows:

Let \({\mathcal{L}}\) be the weak infinitesimal generator of the random process:

Then for each \(i \in S\) along the trajectory of (6),

where

Then it follows from Lemma 2.1, Lemma 2.2, and Lemma 2.4 that

Similarly,

It follows from Assumption 2.1 that, for any \(\lambda_{1} > 0\) and \(\lambda_{2} > 0\),

where

Substituting (9)-(23) into (8) yields

Then from (24) we can obtain

where

Defining \(\lambda^{*} = \lambda_{\max}(\Theta(t))\), it follows from (7) and (25) that

By integrating the inequality in (26) between 0 and t and taking the expectation, one can readily obtain

Since \(\lambda^{*} < 0\) and \(V(e(t),i,t) > 0\), we infer that \(\mathcal{E}\int_{0}^{t} \Vert e(s) \Vert^{2}\,ds\) converges as \(t \to \infty\). Then we have \(\mathcal{E}\int_{0}^{+\infty} \Vert e(s) \Vert^{2}\,ds \le \frac{-\mathcal{E}V(0)}{\lambda^{*}}\).
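The integration step behind this bound can be spelled out as follows. This is a sketch assuming that (26) has the form \(\mathcal{L}V(e(t),i,t) \le \lambda^{*}\Vert e(t) \Vert^{2}\) (the displayed inequality is not reproduced above) and using Dynkin's formula; the last step uses \(V \geqslant 0\) and \(-\lambda^{*} > 0\).

```latex
\begin{aligned}
\mathcal{E}V(e(t),r(t),t) - \mathcal{E}V(e(0),r(0),0)
  &= \mathcal{E}\int_{0}^{t} \mathcal{L}V(e(s),r(s),s)\,ds
   \le \lambda^{*}\,\mathcal{E}\int_{0}^{t} \Vert e(s) \Vert^{2}\,ds, \\
\mathcal{E}\int_{0}^{t} \Vert e(s) \Vert^{2}\,ds
  &\le \frac{\mathcal{E}V(0) - \mathcal{E}V(e(t),r(t),t)}{-\lambda^{*}}
   \le \frac{-\mathcal{E}V(0)}{\lambda^{*}} .
\end{aligned}
```

The right-hand side does not depend on t, so letting \(t \to \infty\) gives the stated bound on \(\mathcal{E}\int_{0}^{+\infty} \Vert e(s) \Vert^{2}\,ds\).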

It can be shown from Lemma 2.3 that

By Definition 2.1, the complex dynamical networks (2) are asymptotically synchronized via the pinning controller. The proof is completed. □

Remark 2

The main purpose of this paper is to design a suitable pinning controller under which the error system of the complex dynamical networks is asymptotically stable. The constants \(\sigma_{k}\) in the controller can be chosen suitably to adjust the synchronization criterion and the identification speed. Note that the LMIs (7) are only sufficient conditions, not necessary ones.

4 Example

In this section, a numerical example is provided to demonstrate that the proposed method in this paper is effective.

Example 1

For simplicity, we consider the error complex dynamical network (6) with three nodes and the following matrix parameters:

\({\sigma_{1}} = 2\), \({\sigma_{2}} = 4\), \({\sigma_{3}} = - 1\), \(c = 1\), \({c_{2}} = 2\).

The outer-coupling matrix is assumed to be \(G = {({G_{ij}})_{3 \times3}}\) with

The time-varying delays are chosen as \(\tau_{1}(t) = 0.5 + 0.5\sin t\) and \(\tau_{2}(t) = 2.9 + 0.1\sin t\). Accordingly, we have \(\tau_{1} = 1\), \(\tau_{2} = 3\), \(d_{1} = 0.5\), and \(d_{2} = 0.1\). The nonlinear functions \(f(\cdot)\), \(g(\cdot)\), and \(h(\cdot)\) are taken as \(f_{1}(x) = f_{2}(x) = g_{1}(x) = g_{2}(x) = h_{1}(x) = h_{2}(x) = \frac{1}{2}(\vert x + 1 \vert - \vert x - 1 \vert)\). It can easily be verified that \(f(\cdot)\) and \(g(\cdot)\) satisfy Assumption 2.1 with

Suppose the transition probability matrix is

Solving the LMIs (7) with MATLAB yields a feasible solution. To save space, only part of the results is listed:

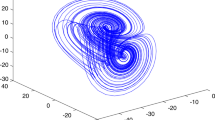

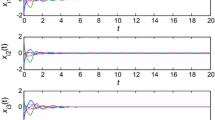
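Since only part of the MATLAB solution is listed, a reader reproducing it may want to confirm a posteriori that a returned solution satisfies the strict inequalities. The sketch below illustrates such a check on the simplest LMI of this kind, the Lyapunov inequality \(A^{T}P + PA < 0\) with \(P > 0\), for a hypothetical Hurwitz matrix A. Here P is computed from the Lyapunov equation \(A^{T}P + PA = -Q\) through the column-major identity \(\operatorname{vec}(A^{T}P + PA) = (I \otimes A^{T} + A^{T} \otimes I)\operatorname{vec}(P)\), and definiteness is then verified from eigenvalues. It is not a solver for the LMIs (7), which require a semidefinite programming tool such as the one used in the paper.

```python
import numpy as np

def solve_lyapunov(A, Q):
    """Solve A^T P + P A = -Q using vec(A^T P + P A) = (I kron A^T + A^T kron I) vec(P)."""
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A.T) + np.kron(A.T, I)
    vec_P = np.linalg.solve(K, -Q.reshape(-1, order='F'))   # column-major vec
    P = vec_P.reshape(n, n, order='F')
    return (P + P.T) / 2                                     # remove round-off asymmetry

def is_negative_definite(S, margin=1e-9):
    """Strict negative definiteness test for a symmetric matrix via its largest eigenvalue."""
    return bool(np.linalg.eigvalsh((S + S.T) / 2).max() < -margin)

A = np.array([[-2.0, 1.0],
              [0.0, -3.0]])       # hypothetical Hurwitz matrix (eigenvalues -2 and -3)
Q = np.eye(2)
P = solve_lyapunov(A, Q)
print(is_negative_definite(-P), is_negative_definite(A.T @ P + P @ A))
```

Checking the largest eigenvalue against a small margin, rather than against zero, guards against accepting a solution that is only feasible up to solver tolerance.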

By Theorem 3.1, with the above parameters, the Markovian jumping complex networks with time-varying delays achieve synchronization through the pinning controller \(u(t)\). The numerical simulations are presented in Figures 1-6. Figures 1-3 show that, without the pinning controller \(u(t)\), the error dynamical system (6) does not converge to zero, that is, the networks are not synchronized. By comparison, Figures 4-6 show that the synchronization errors converge asymptotically to zero under the pinning control \(u(t)\). A possible time sequence of the mode jumps, generated according to the transition probability matrix in (28), is illustrated in Figure 7.
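The delay bounds in Example 1 follow directly from the chosen delay functions. The short sketch below evaluates \(\tau_{1}(t) = 0.5 + 0.5\sin t\), \(\tau_{2}(t) = 2.9 + 0.1\sin t\), and their exact derivatives over one period, and prints the range of each, so that the upper bounds \(\tau_{1}\), \(\tau_{2}\), \(d_{1}\), and \(d_{2}\) of Section 2 can be read off directly.

```python
import numpy as np

t = np.linspace(0.0, 2 * np.pi, 100001)     # one period of sin t
tau1 = 0.5 + 0.5 * np.sin(t)
tau2 = 2.9 + 0.1 * np.sin(t)
dtau1 = 0.5 * np.cos(t)                     # exact derivative of tau1(t)
dtau2 = 0.1 * np.cos(t)                     # exact derivative of tau2(t)

for name, tau, dtau in [("tau1", tau1, dtau1), ("tau2", tau2, dtau2)]:
    print(f"{name}: range [{tau.min():.3f}, {tau.max():.3f}], "
          f"derivative range [{dtau.min():.3f}, {dtau.max():.3f}]")
```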

5 Conclusion

In this paper, we have developed an approach to the synchronization problem for a class of complex dynamical networks with mixed mode-dependent delays. By constructing an appropriate Lyapunov-Krasovskii functional involving triple integral terms and using integral inequalities, we have obtained new synchronization criteria that guarantee the synchronization of complex dynamical networks. The results are expressed in terms of LMIs. Finally, a numerical example has been provided to illustrate the effectiveness of the proposed method.

References

Boccaletti, S, Latora, V, Moreno, Y, Chavez, M, Huang, D: Complex networks: structure and dynamics. Phys. Rep. 424, 175-308 (2006)

Albert, R, Barabasi, A: Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47-97 (2002)

Li, X, Chen, GR: Synchronization and desynchronization of complex dynamical networks: an engineering viewpoint. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 50(11), 1381-1390 (2003)

Liu, Y, Wang, ZD, Liang, JL, Liu, XH: Synchronization and state estimation for discrete-time complex networks with distributed delays. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 38(5), 1314-1325 (2008)

Hoppensteadt, FC, Izhikevich, EM: Pattern recognition via synchronization in phase-locked loop neural networks. IEEE Trans. Neural Netw. 11(3), 734-738 (2000)

Wei, GW, Jia, YQ: Synchronization-based image edge detection. Europhys. Lett. 59(6), 814-819 (2002)

Li, D, Wang, Z, Ma, G: Controlled synchronization for complex dynamical networks with random delayed information exchanges: a non-fragile approach. Neurocomputing 171, 1047-1052 (2016)

Mathiyalagan, K, Anbuvithya, R, Sakthivel, R, Park, JH, Prakash, P: Non-fragile \({H_{\infty}}\) synchronization of memristor-based neural networks using passivity theory. Neural Netw. 74, 85-100 (2016)

Anbuvithya, R, Mathiyalagan, K, Sakthivel, R, Prakash, P: Non-fragile synchronization of memrisitive BAM networks with random feedback gain fluctuations. Commun. Nonlinear Sci. Numer. Simul. 29, 427-440 (2015)

Mathiyalagan, K, Park, JH, Sakthivel, R: Exponential synchronization for fractional-order chaotic systems with mixed uncertainties. Complexity 21, 114-125 (2015)

Park, MJ, Kwon, OM, Ju, HP, Lee, SM, Cha, EJ: Synchronization of discrete-time complex dynamical networks with interval time-varying delays via non-fragile controller with randomly occurring perturbation. J. Franklin Inst. 351, 4850-4871 (2014)

Su, L, Shen, H: Mixed \({H_{\infty}}\)/passive synchronization for complex dynamical networks with sampled-data control. Appl. Math. Comput. 259(15), 931-942 (2015)

Hua, CC, Ge, C, Guan, XP: Synchronization of chaotic Lur’e systems with time delays using sampled-data control. IEEE Trans. Neural Netw. Learn. Syst. 26, 1214-1221 (2015)

Rakkiyappan, R, Sakthivel, N: Pinning sampled-data control for synchronization of complex networks with probabilistic time-varying delays using quadratic convex approach. Neurocomputing 162(25), 26-40 (2015)

Chen, WH, Jiang, ZY, Lu, XM, Luo, SX: \({H_{\infty}}\) synchronization for complex dynamical networks with coupling delays using distributed impulsive control. Nonlinear Anal. Hybrid Syst. 17, 111-127 (2015)

Li, HL, Jiang, YL, Wang, Z, Zhang, L, Teng, Z: Parameter identification and adaptive-impulsive synchronization of uncertain complex networks with nonidentical topological structures. Optik 126(24), 5771-5776 (2015)

Li, Z, Fang, JA, Miao, Q, He, G: Exponential synchronization of impulsive discrete-time complex networks with time-varying delay. Neurocomputing 157, 335-343 (2015)

Wang, JY, Feng, JW, Xu, C, Zhao, Y, Feng, JQ: Pinning synchronization of nonlinearly coupled complex networks with time-varying delays using M-matrix strategies. Neurocomputing 177, 89-97 (2016)

Li, B: Pinning adaptive hybrid synchronization of two general complex dynamical networks with mixed coupling. Appl. Math. Model. 40(4), 2983-2998 (2016)

Liu, XW, Xu, Y: Cluster synchronization in complex networks of nonidentical dynamical systems via pinning control. Neurocomputing 168, 260-268 (2015)

Guo, L, Pan, H, Nian, XH: Adaptive pinning control of cluster synchronization in complex networks with Lurie-type nonlinear dynamics. Neurocomputing 182, 294-303 (2016)

Cai, SM, Zhou, PP, Liu, ZR: Intermittent pinning control for cluster synchronization of delayed heterogeneous dynamical networks. Nonlinear Anal. Hybrid Syst. 18, 134-155 (2015)

Jing, TY, Chen, FQ, Li, QH: Finite-time mixed outer synchronization of complex networks with time-varying delay and unknown parameters. Appl. Math. Model. 39(23-24), 7734-7743 (2015)

Ma, YC, Zheng, YQ: Synchronization of continuous-time Markovian jumping singular complex networks with mixed mode-dependent time delays. Neurocomputing 156, 52-59 (2015)

Shi, KB, Zhong, SM, Zhu, H, Liu, XZ, Zeng, Y: New delay-dependent stability criteria for neutral-type neural networks with mixed random time-varying delays. Neurocomputing 168, 896-907 (2015)

Dai, Y, Cai, YZ, Xu, XM: Synchronization and exponential estimates of complex networks with mixed time-varying coupling delays. Int. J. Autom. Comput. 6(3), 301-307 (2009)

Li, HJ: Delay-distribution-dependent synchronization of T-S fuzzy stochastic complex networks with mixed time delays. In: Chinese Control and Decision Conference, pp. 23-25 (2011)

Song, Q, Cao, JD, Liu, F: Pinning-controlled synchronization of hybrid-coupled complex dynamical networks with mixed time-delays. Int. J. Robust Nonlinear Control 22(6), 690-706 (2012)

Sakthivel, R, Anbuvithya, R, Mathiyalagan, K, Ma, Y, Prakash, P: Reliable anti-synchronization conditions for BAM memristive neural networks with different memductance functions. Appl. Math. Comput. 275, 213-228 (2016)

Hua, CC, Zhang, LL, Guan, XP: Decentralized output feedback adaptive NN tracking control for time-delay stochastic nonlinear systems with prescribed performance. IEEE Trans. Neural Netw. Learn. Syst. 26, 2749-2759 (2015)

Ali, MS, Saravanakumar, R, Zhu, QX: Less conservation delay-dependent control of uncertain neural networks with discrete interval and distributed time-varying delays. Neurocomputing 166, 84-95 (2015)

Hua, CC, Guan, XP: Smooth dynamic output feedback control for multiple time-delay systems with nonlinear uncertainties. Automatica 68, 1-8 (2016)

Yang, XS, Yang, ZC: Synchronization of T-S fuzzy complex dynamical networks with time-varying impulsive delays and stochastic effects. Fuzzy Sets Syst. 235(16), 25-43 (2014)

Saaban, AB, Ibrahim, AB, Shehzad, M, Ahmad, I: Global chaos synchronization of identical and nonidentical chaotic systems using only two nonlinear controllers. Int. J. Math. Comput. Phys. Electr. Comput. Eng. 7(12), 338-344 (2013)

Duan, WY, Cai, CX, Zou, Y, You, J: Synchronization criteria for singular complex dynamical networks with delayed coupling and non-delayed coupling. Control Theory Appl. 30(8), 947-955 (2013)

Wang, Y, Wang, ZD, Liang, JL: Global synchronization stability for delayed complex networks switch randomly occurring nonlinearities and multiple stochastic disturbances. J. Phys. A, Math. Theor. 42(13), 1243-1247 (2009)

Seuret, A, Gouaisbaut, F: Jensen’s and Wirtinger’s inequalities for time-delay systems. IFAC Proc. Ser. 46(3), 343-348 (2013)

Li, B, Shen, H, Song, XN, Zhao, JJ: Robust exponential \({H_{\infty}}\) control for uncertain time-varying delay systems with input saturation: a Markov jump model approach. Appl. Math. Comput. 237, 190-202 (2014)

Acknowledgements

This paper is supported by the National Natural Science Foundation of China (No. 61273004) and the Natural Science Foundation of Hebei province (No. F2014203085). The authors would like to thank the editor and anonymous reviewers for their many helpful comments and suggestions, which have improved the quality of this paper.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

NM carried out the main part of this paper, YM participated in the discussion and revision of the paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ma, Y., Ma, N. Synchronization for complex dynamical networks with mixed mode-dependent time delays. Adv Differ Equ 2016, 242 (2016). https://doi.org/10.1186/s13662-016-0942-z
