Abstract

In this paper, delayed high-order cellular neural networks with impulses are investigated. Some sufficient conditions on the existence and exponential stability of anti-periodic solutions are established. An example with its numerical simulations is presented. Our results are new and complement previously known results.

1 Introduction

During the past decades, high-order cellular neural networks (HCNNs) have been extensively investigated because of their wide potential for application in fields such as signal and image processing, pattern recognition, and optimization. Many results on the global stability of equilibrium points and periodic solutions of HCNNs have been reported (see [1–9]). In applied sciences, the existence of anti-periodic solutions plays a key role in characterizing the behavior of nonlinear differential equations [10–13]. In recent years, several papers have dealt with the existence and stability of anti-periodic solutions. For example, Gong [14] investigated the existence and exponential stability of anti-periodic solutions for a class of Cohen-Grossberg neural networks; Peng and Huang [15] studied anti-periodic solutions for shunting inhibitory cellular neural networks with continuously distributed delays; and Zhang [16] focused on the existence and exponential stability of anti-periodic solutions for HCNNs with time-varying leakage delays. For details, we refer readers to [15, 17–35]. Many evolutionary processes exhibit impulsive effects [33, 36–43]. Thus, it is worthwhile to investigate the existence and stability of anti-periodic solutions for HCNNs with impulses. To the best of our knowledge, very few scholars have considered the problem of anti-periodic solutions for such impulsive systems. In this paper, we study the anti-periodic solutions of the following high-order cellular neural network with mixed delays and impulses modeled by

where \(i=1, 2, \dots, n\), \(x_{i}(t)\) denotes the state of the ith unit, \(b_{i}(t)>0\) denotes the passive decay, \(c_{ij}\), \(d_{ij}\), \(e_{ijl}\) are the synaptic connection strengths, \(\tau_{ij}(t)\geq0\), \(\alpha_{jl}(t)\geq0\) and \(\beta_{jl}(t)\geq0\) correspond to the delays, \(I_{i}(t)\) stands for the external input, \(g_{j}\) is the activation function of signal transmission, the delay kernel \(k_{ij}\) is a real-valued nonnegative continuous function defined on \(R^{+}:=[0,\infty)\), \(t_{k}\) is the impulsive moment, and \(\gamma_{ik}\) characterizes the impulsive jump at time \(t_{k}\) for the ith unit.

For convenience, we introduce some notations as follows.

Throughout this paper, we assume that

(H1) For \(i,j,l=1,2,\dots,n\), \(b_{i}, c_{ij}, d_{ij}, e_{ijl}, I_{i}(t), g_{j}: R\rightarrow{R}\), \(k_{ij}: R^{+}\rightarrow{R^{+}}\), \(\alpha_{jl},\beta_{jl}: R\rightarrow{R^{+}}\) are continuous functions, and there exists a constant \(T>0\) such that

(H2) The sequence of times \(\{t_{k}\}\) (\(k\in{N}\)) satisfies \(t_{k}< t_{k+1}\) and \(\lim_{k\rightarrow{+\infty}}t_{k}=+\infty\), and \(\gamma_{ik}\) satisfies \(-2\leq\gamma_{ik}\leq0\) for \(i\in\{1,2,\dots,n\}\) and \(k\in{N}\).

(H3) There exists \(q\in{N}\) such that \(\gamma_{i(k+q)}=\gamma_{ik}\), \(t_{k+q}=t_{k}+T\), \(k\in{N}\).

(H4) For each \(j\in\{1,2,\dots,n\}\), the activation function \(g_{j}: R\rightarrow{R}\) is continuous, and there exist nonnegative constants \(L_{g}^{j}\) and \(M_{g}\) such that, for all \(u,v\in{R}\),

\[ \bigl|g_{j}(u)-g_{j}(v)\bigr|\leq L_{g}^{j}|u-v|, \qquad \bigl|g_{j}(u)\bigr|\leq M_{g}. \]

(H5) There exist constants \(\eta>0\), \(\lambda>0\), \(i=1, 2, \dots, n\), such that

(H6) For \(i=1,2,\ldots,n\), the following condition holds:
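Hypotheses (H2) and (H3) together describe an impulse schedule that repeats every T time units: q impulse moments per period, each with a jump coefficient in \([-2,0]\). The following is a minimal Python sketch of such a schedule. The helper name `impulse_schedule`, the base times, and the jump values are illustrative assumptions and are not taken from the paper.

```python
def impulse_schedule(base_times, base_gammas, T, n_periods):
    """Extend q impulse moments within one period to n_periods periods.

    Encodes t_{k+q} = t_k + T and gamma_{k+q} = gamma_k as in (H3),
    and checks the ordering and range conditions of (H2).
    """
    q = len(base_times)
    assert q == len(base_gammas)
    # (H2): impulse moments are strictly increasing
    assert all(a < b for a, b in zip(base_times, base_times[1:]))
    # all q base moments must fit inside a single period
    assert base_times[-1] - base_times[0] < T
    # (H2): jump coefficients lie in [-2, 0]
    assert all(-2.0 <= g <= 0.0 for g in base_gammas)

    times, gammas = [], []
    for p in range(n_periods):
        for t, g in zip(base_times, base_gammas):
            times.append(t + p * T)
            gammas.append(g)
    return times, gammas


# Hypothetical data: q = 2 impulses per period of length T = 2.
T = 2.0
q = 2
times, gammas = impulse_schedule([0.5, 1.25], [-0.5, -1.5], T, n_periods=4)
```

With this construction, shifting any impulse moment by T lands on another impulse moment, which is the property used later when time-shifted solutions are compared.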

Let \(x=(x_{1},x_{2},\dots,x_{n})^{T}\in{R^{n}}\), where the superscript T denotes transposition. We define \(|x|=(|x_{1}|,|x_{2}|,\dots,|x_{n}|)^{T}\) and \(\|x\|=\max_{1\leq{i}\leq{n}}|x_{i}|\). The solution \(x(t)=(x_{1}(t),x_{2}(t), \dots,x_{n}(t))^{T}\) of (1.1) has components \(x_{i}(t)\) that are piece-wise continuous on \((-\tau,+\infty)\); \(x(t)\) is differentiable on the open intervals \((t_{k-1},t_{k})\), and \(x(t_{k}^{+})\) exists.

Definition 1.1

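Let \(u(t):R\rightarrow{R}\) be a piece-wise continuous function having a countable number of discontinuities \(\{t_{k}\}_{k=1}^{+\infty}\) of the first kind. It is said to be T-anti-periodic on R if

\[ u(t+T)=-u(t), \quad t\neq t_{k}; \qquad u\bigl((t_{k}+T)^{+}\bigr)=-u\bigl(t_{k}^{+}\bigr), \quad k=1,2,\dots. \]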
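For a function without jumps, T-anti-periodicity reduces to \(u(t+T)=-u(t)\) for all t, which in turn forces u to be 2T-periodic. The sketch below checks this relation on a sample grid. The function name `is_anti_periodic`, the grid, and the tolerance are assumptions made for illustration only, and a grid check is of course not a proof.

```python
import math


def is_anti_periodic(u, T, samples, tol=1e-9):
    """Return True if |u(t + T) + u(t)| <= tol at every sample point."""
    return all(abs(u(t + T) + u(t)) <= tol for t in samples)


samples = [0.01 * k for k in range(1000)]

# sin is pi-anti-periodic: sin(t + pi) = -sin(t)
sin_ok = is_anti_periodic(math.sin, math.pi, samples)

# cos(2t) is pi-periodic, hence not pi-anti-periodic
cos2_ok = is_anti_periodic(lambda t: math.cos(2.0 * t), math.pi, samples)

# anti-periodicity with T implies periodicity with 2T
period_ok = all(
    abs(math.sin(t + 2.0 * math.pi) - math.sin(t)) <= 1e-9 for t in samples
)
```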

Definition 1.2

Let \(x^{*}(t)= (x^{*}_{1}(t), x^{*}_{2}(t),\dots, x^{*}_{n}(t) )^{T} \) be an anti-periodic solution of (1.1) with initial value \(\varphi^{*}=(\varphi^{*}_{1}(t), \varphi^{*}_{2}(t), \dots, \varphi^{*}_{n}(t))^{T} \). If there exist constants \(\lambda>0\) and \(M >1\) such that for every solution \(x(t)=(x_{1}(t), x_{2}(t),\dots,x_{n}(t))^{T} \) of (1.1) with an initial value \(\varphi=(\varphi_{1}(t), \varphi_{2}(t), \dots, \varphi_{n}(t))^{T}\),

where

then \(x^{*}(t)\) is said to be globally exponentially stable.
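Global exponential stability means that the gap between any solution and \(x^{*}(t)\) stays below an envelope of the form \(M\cdot(\text{initial gap})\cdot e^{-\lambda t}\). A minimal sketch of such an envelope test on sampled data follows. The helper `satisfies_exponential_bound`, the scalar toy gap \(d(t)=d_{0}e^{-t}\) (two solutions of \(x'=-x\)), and the use of \(|d_{0}|\) as the initial gap are all assumptions for illustration and do not come from system (1.1).

```python
import math


def satisfies_exponential_bound(diffs, times, init_gap, M, lam):
    """Check |diff(t)| <= M * init_gap * exp(-lam * t) at every sample."""
    return all(
        abs(d) <= M * init_gap * math.exp(-lam * t)
        for d, t in zip(diffs, times)
    )


# Toy data: the gap between two solutions of x' = -x is d0 * exp(-t).
d0 = 0.3
times = [0.05 * k for k in range(101)]          # t in [0, 5]
diffs = [d0 * math.exp(-t) for t in times]

holds_rate_1 = satisfies_exponential_bound(diffs, times, d0, M=1.5, lam=1.0)
holds_rate_2 = satisfies_exponential_bound(diffs, times, d0, M=1.5, lam=2.0)
```

In this toy case the bound holds with decay rate 1 but not with the faster rate 2, which illustrates that the admissible \(\lambda\) is limited by the actual decay of the system.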

The purpose of this paper is to present sufficient conditions for the existence and exponential stability of anti-periodic solutions of system (1.1). Our results can be applied directly to many concrete cellular neural networks, and they also extend, to a certain extent, some previously known results. In addition, an example with numerical simulations is presented to illustrate the effectiveness of our main results.

The rest of this paper is organized as follows. In the next section, we give some preliminary results. In Section 3, we derive the existence of a T-anti-periodic solution that is globally exponentially stable. In Section 4, we present an example to illustrate the effectiveness of our main results.

2 Preliminary results

In this section, we present two important lemmas which are used to prove our main results in Section 3.

Lemma 2.1

Let (H1)-(H6) hold. Suppose that \({x}(t)= ({x}_{1}(t), {x}_{2}(t),\dots, {x}_{n}(t))^{T} \) is a solution of (1.1) with initial conditions

Then

where δ satisfies

Proof

For any given initial condition, hypothesis (H4) guarantees the existence and uniqueness of \(x(t)\), the solution to (1.1) in \([-\tau, +\infty)\). Consider the following polynomial \(ax^{2}+bx+c\), where a, b, c are all real numbers. If \(a<0\) and \(b^{2}-4ac<0\), then \(ax^{2}+bx+c>0\). In view of (H6), we know that there exists a positive constant δ which satisfies (2.3). By way of contradiction, we assume that (2.2) does not hold. Notice that \({x}_{i}(t_{k}^{+})=(1+\gamma_{ik}){x}_{i}(t_{k})\) and by assumption (H2), \(-2\leq\gamma_{ik}\leq0\), then \(|{x}_{i}(t_{k}^{+})|=|(1+\gamma_{ik})||{x}_{i}(t_{k})|\leq|{x}_{i}(t_{k})|\). Then, if \(|{x}_{i}(t_{k}^{+})|\geq\delta\), then \(|{x}_{i}(t_{k})|\geq\delta\). Thus we may assume that there must exist \(i\in\{1,2,\dots,n \}\) and \(\widetilde{t}\in(t_{k},t_{k+1}]\) such that

By directly computing the upper left derivative of \(|{x}_{i}(t)|\), together with assumptions (2.3), (H4) and (2.4), we deduce that

which is a contradiction and implies that (2.2) holds. This completes the proof. □
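The proof relies on the fact that an impulse with \(-2\leq\gamma_{ik}\leq0\) never increases \(|x_{i}|\), because \(|1+\gamma_{ik}|\leq1\). A small Python sketch of the jump rule \(x_{i}(t_{k}^{+})=(1+\gamma_{ik})x_{i}(t_{k})\) makes this concrete. The function name `apply_impulse` and the sample values are illustrative assumptions.

```python
def apply_impulse(x, gamma):
    """State just after an impulse: x(t_k^+) = (1 + gamma) * x(t_k)."""
    if not -2.0 <= gamma <= 0.0:
        raise ValueError("gamma must lie in [-2, 0] as required by (H2)")
    return (1.0 + gamma) * x


# Hypothetical jump coefficients and pre-impulse states.
gammas = [-2.0, -1.5, -1.0, -0.5, 0.0]
states = [-3.0, -0.25, 0.0, 1.0, 7.5]

# |x(t_k^+)| <= |x(t_k)| for every admissible gamma
non_expansive = all(
    abs(apply_impulse(x, g)) <= abs(x) for g in gammas for x in states
)
```

The extreme cases are \(\gamma=-2\), which flips the sign of the state, \(\gamma=-1\), which resets it to zero, and \(\gamma=0\), which leaves it unchanged.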

Lemma 2.2

Suppose that (H1)-(H6) hold. Let \(x^{*}(t)=(x^{*}_{1}(t), x^{*}_{2}(t),\dots, x^{*}_{n}(t))^{T} \) be the solution of (1.1) with initial value \(\varphi^{*}=(\varphi^{*}_{1}(t), \varphi^{*}_{2}(t), \dots, \varphi^{*}_{n}(t))^{T} \), and \(x(t)=(x_{1}(t), x_{2}(t), \dots,x_{n}(t))^{T} \) be the solution of (1.1) with initial value \(\varphi=(\varphi_{1}(t), \varphi_{2}(t), \dots, \varphi _{n}(t))^{T}\). Then there exist constants \(\lambda>0\) and \(M>1\) such that

Proof

Let \(y(t)=\{y_{ j}(t) \}=\{x_{ j}(t)-x^{\ast}_{ j}(t) \}=x(t)-x^{*}(t)\). Then

where \(i=1, 2, \dots, n\). Next, define a Lyapunov functional as

It follows from (2.6), (2.7) and (2.8) that

and

where \(i=1, 2, \dots,n\). Let \(M>1\) denote an arbitrary real number and set

Then, by (2.8), we have

Thus we can claim that

Otherwise, there must exist \(i \in\{ 1, 2, \dots, n \}\) and \(\sigma\in(-\tau, t_{1}]\) such that

Combining (2.9), (2.10) with (2.12), we obtain

Then

which contradicts (H5). Then (2.11) holds. In view of (2.11), we know that

and

Then

Thus, for \(t\in[t_{1},t_{2}]\), we can repeat the above procedure and obtain

Similarly, we have

Namely,

This completes the proof. □

Remark 2.1

If \(x^{*}(t)=(x^{*}_{1}(t), x^{*}_{2}(t),\dots,x^{*}_{n}(t))^{T} \) is a T-anti-periodic solution of (1.1), it follows from Lemma 2.2 and Definition 1.2 that \(x^{*}(t)\) is globally exponentially stable.

3 Main result

In this section, we present our main result: the existence of an exponentially stable anti-periodic solution of (1.1).

Theorem 3.1

Assume that (H1)-(H6) are satisfied. Then (1.1) has exactly one T-anti-periodic solution \(x^{*}(t)\). Moreover, this solution is globally exponentially stable.

Proof

Let \(v(t)= (v_{1}(t), v_{2}(t),\dots, v_{n}(t))^{T} \) be a solution of (1.1) with initial conditions

Thus, according to Lemma 2.1, the solution \(v(t)\) is bounded and

For \(p\in N\), if \(t\notin\{t_{k}\}\), then \(t + (p+1)T\notin\{t_{k}\}\); if \(t\in\{t_{k}\}\), then \(t + (p+1)T\in\{t_{k}\}\). From (1.1), we obtain

and

where \(i=1, 2, \dots,n\). Thus \((-1)^{p+1} v(t +(p+1)T)\) are solutions of (1.1) on \(R^{+}\) for any natural number p. Then, from Lemma 2.2, there exists a constant \(M>1\) such that

and

where \(k\in{N}\), \(i=1,2,\dots,n\). Thus, for any natural number m, we have

Hence

and

where \(i =1,2,\dots,n\). It follows from (3.5)-(3.9) that \(\{(-1)^{m+1}v_{i}(t+(m+1)T)\}\) is a fundamental (Cauchy) sequence on any compact set of \({R}^{+}\). Hence \(\{(-1)^{m} v (t + mT)\}\) converges uniformly to a piece-wise continuous function \(x^{*}(t)=(x^{*}_{1}(t), x^{*}_{2}(t),\dots,x^{*}_{n}(t))^{T}\) on any compact set of \({R}^{+}\).
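The limiting construction can be seen in closed form on a toy scalar equation that is not system (1.1): \(x'(t)=-x(t)+\sin t\), with no delays and no impulses. Its input satisfies \(\sin(t+\pi)=-\sin t\), and its general solution is \(v(t)=Ce^{-t}+\frac{1}{2}(\sin t-\cos t)\). Hence \((-1)^{m}v(t+m\pi)=(-1)^{m}Ce^{-(t+m\pi)}+\frac{1}{2}(\sin t-\cos t)\), which converges geometrically to the π-anti-periodic function \(x^{*}(t)=\frac{1}{2}(\sin t-\cos t)\). The Python sketch below evaluates this numerically; the constant `c`, the grid, and all function names are assumptions chosen for illustration.

```python
import math

T = math.pi  # anti-period of the toy input sin(t)


def v(t, c):
    """General solution of x' = -x + sin(t)."""
    return c * math.exp(-t) + 0.5 * (math.sin(t) - math.cos(t))


def x_star(t):
    """Candidate limit: the pi-anti-periodic particular solution."""
    return 0.5 * (math.sin(t) - math.cos(t))


def shifted(t, m, c):
    """m-th term of the sequence (-1)^m * v(t + m*T)."""
    return (-1) ** m * v(t + m * T, c)


c = 3.0
grid = [0.1 * k for k in range(64)]  # compact set [0, 6.3]

# sup-norm distance to x_star on the grid for m = 0, ..., 5
errors = [
    max(abs(shifted(t, m, c) - x_star(t)) for t in grid) for m in range(6)
]
```

Each step in m shrinks the error by roughly the factor \(e^{-\pi}\), mirroring the geometric estimate that makes the sequence in the proof fundamental.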

Now we show that \(x^{*}(t)\) is a T-anti-periodic solution of (1.1). Firstly, \(x^{*}(t)\) is T-anti-periodic, since

and

In the sequel, we prove that \(x^{*}(t)\) is a solution of (1.1). Noting that the right-hand side of (1.1) is piece-wise continuous, (3.3) and (3.4) imply that \(\{((-1)^{m+1} v (t +(m+1)T))'\}\) uniformly converges to a piece-wise continuous function on any compact subset of \({R}^{+}\). Thus, letting \(m \to\infty\) on both sides of (3.3) and (3.4), we can easily obtain

where \(i=1,2,\dots,n\). Therefore, \(x^{*}(t)\) is a solution of (1.1). Finally, by applying Lemma 2.2, it is easy to check that \(x^{*}(t)\) is globally exponentially stable. The proof of Theorem 3.1 is completed. □

Remark 3.1

The authors of [10–12, 14, 15, 20–24, 44] consider the existence and exponential stability of anti-periodic solutions of neural networks, but not the impulsive case. In this paper, we consider high-order cellular neural networks with impulses. The obtained results show that impulses play a role in the existence and exponential stability of anti-periodic solutions of cellular neural networks. If \(\gamma_{ik}=0 \) (i.e., there is no impulse), then Theorem 3.1 remains valid with the conditions on the impulses deleted. None of the results in [10–12, 14, 15, 20–24, 44] can be applied to system (1.1) to obtain the existence and exponential stability of anti-periodic solutions. In this sense, the results of this paper are new and complement the previous work.

4 An example

In this section, we give an example to illustrate our main results obtained in previous sections. Consider the high-order cellular neural network with delays and impulses

where \(g_{1}(u)=g_{2}(u)=\frac{1}{2}(|u+1|-|u-1|)\) and

where \(l=1,2\). By a simple calculation, we get

Let \(\eta=0.2\), \(\lambda=0.001\). Then

and

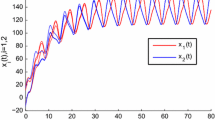

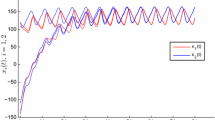

which implies that system (4.1) satisfies all the conditions in Theorem 3.1. Thus, (4.1) has exactly one π-anti-periodic solution. Moreover, this solution is globally exponentially stable. The results are shown in Figure 1.

Figure 1. Time series of \(x_{1}(t)\) and \(x_{2}(t)\) of system (4.1).
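The activation used in the example, \(g(u)=\frac{1}{2}(|u+1|-|u-1|)\), is the piecewise-linear saturation that equals u on \([-1,1]\) and \(\pm1\) outside. It is therefore bounded by 1, Lipschitz with constant 1, and odd, which are the kinds of properties a boundedness and Lipschitz hypothesis such as (H4) and an anti-periodicity argument rely on. The short Python check below confirms these three properties on a grid; the grid itself is an assumption, and a finite check only illustrates the properties rather than proving them.

```python
def g(u):
    """Piecewise-linear saturation g(u) = (|u + 1| - |u - 1|) / 2."""
    return 0.5 * (abs(u + 1.0) - abs(u - 1.0))


# Dyadic grid on [-3, 3] so that all arithmetic below is exact in floats.
pts = [-3.0 + 0.125 * k for k in range(49)]

bounded = all(abs(g(u)) <= 1.0 for u in pts)               # |g(u)| <= 1
odd = all(g(-u) == -g(u) for u in pts)                     # g(-u) = -g(u)
lipschitz = all(
    abs(g(u) - g(w)) <= abs(u - w) for u in pts for w in pts
)                                                          # constant 1
```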

References

[1] Cao, J, Wang, J: Global exponential stability and periodicity of recurrent neural networks with time delays. IEEE Trans. Circuits Syst. I 52(5), 920-931 (2005)

[2] Chen, Z, Zhao, DH, Ruan, J: Dynamic analysis of high order Cohen-Grossberg neural networks with time delay. Chaos Solitons Fractals 32(4), 1538-1546 (2007)

[3] Lou, XY, Cui, BT: Novel global stability criteria for high-order Hopfield-type neural networks with time-varying delays. J. Math. Anal. Appl. 330(1), 144-158 (2007)

[4] Wu, C, Ruan, J, Lin, W: On the existence and stability of the periodic solution in the Cohen-Grossberg neural network with time delay and high-order terms. Appl. Math. Comput. 177(1), 194-210 (2006)

[5] Xiang, H, Yan, KM, Wang, BY: Existence and global exponential stability of periodic solution for delayed high-order Hopfield-type neural networks. Phys. Lett. A 352(4-5), 341-349 (2006)

[6] Xu, BJ, Liu, XZ, Liao, XX: Global exponential stability of high order Hopfield type neural networks. Appl. Math. Comput. 174(1), 98-116 (2006)

[7] Xu, BJ, Liu, XZ, Liao, XX: Global exponential stability of high order Hopfield type neural networks with time delays. Comput. Math. Appl. 45(10-11), 1729-1737 (2003)

[8] Wu, W, Cui, BT: Global robust exponential stability of delayed neural networks. Chaos Solitons Fractals 35(4), 747-754 (2008)

[9] Zeng, ZG, Wang, J: Improved conditions for global exponential stability of recurrent neural networks with time-varying delays. IEEE Trans. Neural Netw. 17(3), 623-635 (2006)

[10] Li, YK, Yang, L: Anti-periodic solutions for Cohen-Grossberg neural networks with bounded and unbounded delays. Commun. Nonlinear Sci. Numer. Simul. 14(7), 3134-3140 (2009)

[11] Shao, JY: Anti-periodic solutions for shunting inhibitory cellular neural networks with time-varying delays. Phys. Lett. A 372(30), 5011-5016 (2008)

[12] Fan, QY, Wang, WT, Yi, XJ: Anti-periodic solutions for a class of nonlinear nth-order differential equations with delays. J. Comput. Appl. Math. 230(2), 762-769 (2009)

[13] Li, YK, Xu, EL, Zhang, TW: Existence and stability of anti-periodic solution for a class of generalized neural networks with impulses and arbitrary delays on time scales. J. Inequal. Appl. 2010, Article ID 132790 (2010)

[14] Gong, SH: Anti-periodic solutions for a class of Cohen-Grossberg neural networks. Comput. Math. Appl. 58(2), 341-347 (2009)

[15] Peng, GQ, Huang, LH: Anti-periodic solutions for shunting inhibitory cellular neural networks with continuously distributed delays. Nonlinear Anal., Real World Appl. 10(40), 2434-2440 (2009)

[16] Zhang, AP: Existence and exponential stability of anti-periodic solutions for HCNNs with time-varying leakage delays. Adv. Differ. Equ. 2013, Article ID 162 (2013). doi:10.1186/1687-1847-2013-162

[17] Aftabizadeh, AR, Aizicovici, S, Pavel, NH: On a class of second-order anti-periodic boundary value problems. J. Math. Anal. Appl. 171(2), 301-320 (1992)

[18] Aizicovici, S, McKibben, M, Reich, S: Anti-periodic solutions to nonmonotone evolution equations with discontinuous nonlinearities. Nonlinear Anal. TMA 43(2), 233-251 (2001)

[19] Liu, BW: An anti-periodic LaSalle oscillation theorem for a class of functional differential equations. J. Comput. Appl. Math. 223(2), 1081-1086 (2009)

[20] Huang, ZD, Peng, LQ, Xu, M: Anti-periodic solutions for high-order cellular neural networks with time-varying delays. Electron. J. Differ. Equ. 2010, 59 (2010)

[21] Li, YK, Yang, L, Wu, WQ: Anti-periodic solutions for a class of Cohen-Grossberg neural networks with time-varying on time scales. Int. J. Syst. Sci. 42(7), 1127-1132 (2011)

[22] Pan, LJ, Cao, JD: Anti-periodic solution for delayed cellular neural networks with impulsive effects. Nonlinear Anal., Real World Appl. 12(6), 3014-3027 (2011)

[23] Li, YK: Anti-periodic solutions to impulsive shunting inhibitory cellular neural networks with distributed delays on time scales. Commun. Nonlinear Sci. Numer. Simul. 16(8), 3326-3336 (2011)

[24] Fan, QY, Wang, WT, Yi, XJ, Huang, LH: Anti-periodic solutions for a class of third-order nonlinear differential equations with deviating argument. Electron. J. Qual. Theory Differ. Equ. 2010, 8 (2010)

[25] Wang, W, Shen, J: Existence of solutions for anti-periodic boundary value problems. Nonlinear Anal. 70(2), 598-605 (2009)

[26] Chen, YQ, Nieto, JJ, O’Regan, D: Anti-periodic solutions for fully nonlinear first-order differential equations. Math. Comput. Model. 46(9-10), 1183-1190 (2007)

[27] Peng, L, Wang, WT: Anti-periodic solutions for shunting inhibitory cellular neural networks with time-varying delays in leakage terms. Neurocomputing 111, 27-33 (2013)

[28] Park, JY, Ha, TG: Existence of anti-periodic solutions for quasilinear parabolic hemivariational inequalities. Nonlinear Anal. TMA 71(7-8), 3203-3217 (2009)

[29] Yu, YH, Shao, JY, Yue, GX: Existence and uniqueness of anti-periodic solutions for a kind of Rayleigh equation with two deviating arguments. Nonlinear Anal. TMA 71(10), 4689-4695 (2009)

[30] Lv, X, Yan, P, Liu, DJ: Anti-periodic solutions for a class of nonlinear second-order Rayleigh equations with delays. Commun. Nonlinear Sci. Numer. Simul. 15(11), 3593-3598 (2010)

[31] Li, YQ, Huang, LH: Anti-periodic solutions for a class of Liénard-type systems with continuously distributed delays. Nonlinear Anal., Real World Appl. 10(4), 2127-2132 (2009)

[32] Liu, BW: Anti-periodic solutions for forced Rayleigh-type equations. Nonlinear Anal., Real World Appl. 10(5), 2850-2856 (2009)

[33] Shi, PL, Dong, LZ: Existence and exponential stability of anti-periodic solutions of Hopfield neural networks with impulses. Appl. Math. Comput. 216(2), 623-630 (2010)

[34] Wang, Q, Fang, YY, Li, H, Su, LJ, Dai, BX: Anti-periodic solutions for high-order Hopfield neural networks with impulses. Neurocomputing 138, 339-346 (2014)

[35] Liu, Y, Yang, YQ, Liang, T, Li, L: Existence and global exponential stability of anti-periodic solutions for competitive neural networks with delays in the leakage terms on time scales. Neurocomputing 133, 471-482 (2014)

[36] Zheng, QQ, Shen, JW: Bifurcations and dynamics of cancer signaling network regulated by micro RNA. Discrete Dyn. Nat. Soc. 2013, Article ID 176956 (2013)

[37] Shen, JW, Liu, ZR, Zheng, WX, Xu, FD, Chen, LN: Oscillatory dynamics in a simple gene regulatory network mediated by small RNAs. Physica A 388(14), 2995-3000 (2009)

[38] Tang, XH, Zou, XF: The existence and global exponential stability of a periodic solution of a class of delay differential equations. Nonlinearity 22(10), 2423-2442 (2009)

[39] Xu, CJ, Tang, XH, Liao, MX: Bifurcation analysis of a delayed predator-prey model of prey migration and predator switching. Bull. Korean Math. Soc. 50, 353-373 (2013)

[40] Ferrara, M, Munteanu, F, Udriste, C, Zugravescu, D: Controllability of a nonholonomic macroeconomic system. J. Optim. Theory Appl. 154, 1036-1054 (2012)

[41] Ferrara, M, Bianca, C, Guerrini, L: High-order moments conservation in thermostatted kinetic models. J. Glob. Optim. 58(2), 389-404 (2014)

[42] Ferrara, M, Khademloob, S, Heidarkhani, S: Multiplicity results for perturbed fourth-order Kirchhoff type problems. Appl. Math. Comput. 234, 316-325 (2014)

[43] Ferrara, M, Heidarkhani, S: Multiple solutions for perturbed p-Laplacian boundary-value problems with impulsive effects. Electron. J. Differ. Equ. 2014, 106 (2014)

[44] Ou, CX: Anti-periodic solutions for high-order Hopfield neural networks. Comput. Math. Appl. 56(7), 1838-1844 (2008)

Acknowledgements

The first author was supported by the National Natural Science Foundation of China (No. 11261010) and Governor Foundation of Guizhou Province ([2012]53). The second author was supported by the National Natural Science Foundation of China (No. 11101126). The authors would like to thank the referees and the editor for helpful suggestions incorporated into this paper.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors have made the same contribution. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, C., Wu, Y. Anti-periodic solutions for high-order cellular neural networks with mixed delays and impulses. Adv Differ Equ 2015, 161 (2015). https://doi.org/10.1186/s13662-015-0497-4

DOI: https://doi.org/10.1186/s13662-015-0497-4